1. Introduction

The world faces a growing population of older people. The use of robots has been proposed as a solution to the rising problem of caring for the elderly. As a result, many elder-care robots with different abilities are available on the market [9]. Empirical studies have concluded that the stakeholders in the elder-care environment have many ethical concerns about the delegation of work from human care-workers to robots [9]. Hence, we would like these robots to have the capacity to act ethically in their work environment. Ramanayake and Nallur have argued [6] that some of these ethical concerns, such as privacy, wellbeing, autonomy, and availability, require implementations that take the concerns of various stakeholders (e.g., patients, care-workers, and family) into consideration. Any decision-making mechanism used by the robot, apart from being functionally adequate, must also evaluate the impact of its decisions on these ethical concerns.

The field of machine-implemented ethics can roughly be divided into three categories, based on the engineering approach of the ethical decision-making system: top-down, bottom-up, and hybrid [12]. Many traditional generalist ethical theories (e.g., deontological ethics, legal codes, and utilitarian ethics), and the computational systems that adopt them, follow top-down decision-making. In this approach, the designers of the system try to foresee decision points, decide what is ethical (or not) (e.g., [3]), and programme these decisions into the system. Most current implementations of this approach use logic frameworks or simulations to reason about the ethical acceptability of a particular behaviour. In a bottom-up approach, the system is designed with social and cognitive processes that interact with each other and with the environment. Using these interactions, or through supervision, it is expected to learn what is ethical (or not) and behave accordingly. Hence, the ethical decisions made by these systems are not guided by any ethical theory. Implementations that follow this approach use techniques such as social choice theory, voting-based methods, and artificial neural networks to capture the ethical patterns of their environments [10].

The main shortcoming of the top-down approach is that it can only guarantee ethical behaviour in relatively small, closed systems where the designers can know all the possible states of the system. In contrast, systems designed through the bottom-up approach require complex cognitive and social process models, a large amount of reliable and accurate data, and a comprehensive knowledge model of the world in order to learn intricate social constructs such as ethics [5,8]. The hybrid approach to implementing ethical machines is considered a good alternative that overcomes the shortcomings of the other two approaches [12]. The key idea behind this approach is to combine the flexibility and evolving nature of the bottom-up approach with the value-, duty- and principle-oriented nature of the top-down approach to create a better, more reliable system.

Real-world robot application domains, such as the domain of care, do not admit neat ethical theorisation. Kantian and rights-based ethics, as well as utilitarian ethics, have been pointed out as inadequate in real-world care settings [5]. However, most computational ethics implementations for robots in the literature [3,11] use such theorisation. Hence, some argue that a good ethical reasoner should be able to step out of existing ethical theoretical frameworks, but only when necessary [1]. Pro-social rule bending (PSRB) has been identified by Morrison [4] as the mechanism by which human beings (in other contexts) step outside of rigid ethical constraints. PSRB is defined as the intentional violation of a rule with the purpose of elevating the welfare of one or more stakeholders [4]. It has been suggested that PSRB could be a good (and unexplored) contender for addressing real-world ethical dilemmas [8], such as the scenarios introduced in [7].

This paper reports on an implementation of PSRB and how it affects decision-making in a specific dilemma that affects the elderly in an assisted living environment. The presented ethical governor model uses expert knowledge and case-based reasoning (CBR) to analyse rule-bending behaviours and to contest the top-down rule system’s decisions when required. By doing so, it makes the rule system behave more desirably when it encounters infrequent circumstances [6]. Our PSRB-capable ethical governor follows the hybrid approach in the sense that it uses knowledge acquired bottom-up to contest the top-down rules that are programmed into the system at design time.

2. An Implementation of a PSRB-Capable Ethical Governor

Although PSRB is not limited to elder-care environments, this paper attempts to bring together two novel concepts: concern for human autonomy, and a hybrid mechanism for performing ethical reasoning. As Ramanayake and Nallur point out [7], there are several ethically challenging scenarios that could occur during the daily duties of an elder-care robot. Out of these, we pick a dilemma that shows the conflict between autonomy and human wellbeing, called the Bathroom Dilemma. We simulate an elder-care robot caught in this dilemma and show the particular way in which a PSRB-capable ethical governor picks an action. We contrast it with the same robot using deontological and utilitarian reasoning mechanisms.

Bathroom Dilemma

An elder-care robot is assigned to an elderly resident, who lives alone. The main task of the robot is to follow the resident around the house and record activities of daily living. These recordings will be used to identify any cognitive issues of the resident. The robot has the ability to identify emergencies involving the resident. Also, when its battery power is low, it can autonomously go to the charging station. The robot is connected to a database that contains the resident’s history and current health status.

In this dilemma, the resident goes into the bathroom. However, before going in, the resident commands the robot not to follow them into the bathroom. The average time the resident stays in the bathroom is 10 minutes, with a standard deviation of 5 minutes. In this instance, the resident stays in the bathroom for over 15 minutes, i.e., more than one standard deviation above the average. The robot has only three actions to choose from: (1) stay outside the bathroom, (2) go inside the bathroom, or (3) go to the charging station. If the robot stays outside, the resident’s wellbeing is at risk. However, going inside will undermine the resident’s autonomy. Other variables, such as the time since the resident entered the bathroom, the resident’s health, the resident’s medical history and the robot’s battery level, can affect the robot’s decision.

2.1. The Simulation Environment

We created a virtual simulation environment of an ambient assisted living (AAL) space using a modified version of the MESA agent-based modelling framework [2]. The simulation environment is a grid that contains a resident and the robot. The robot agent can only see objects within a 3-step radius and cannot see through walls. While in the charging state, the robot gains 3 units of power per step; in every other state, it spends 0.2 units per step. Resident agents can move anywhere in the grid except the cells occupied by walls and by the robot.
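The following is a minimal plain-Python sketch of the robot's battery and vision rules described above, written without the MESA API. The 3-unit charging rate, the 0.2-unit drain and the 3-step radius come from the text; the 100-unit capacity, the square (Chebyshev) interpretation of the radius, the Bresenham line-of-sight test for walls and all names are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple

Cell = Tuple[int, int]

CHARGE_RATE = 3.0    # units gained per step while charging (from the text)
DRAIN_RATE = 0.2     # units spent per step in every other state (from the text)
VISION_RADIUS = 3    # robot sees objects within a 3-step radius (from the text)
MAX_BATTERY = 100.0  # assumption: capacity of 100 units, so units read as percent


@dataclass
class RobotState:
    x: int
    y: int
    battery: float = MAX_BATTERY
    charging: bool = False


def step_battery(robot: RobotState) -> None:
    """Update the battery level for one simulation step."""
    if robot.charging:
        robot.battery = min(MAX_BATTERY, robot.battery + CHARGE_RATE)
    else:
        robot.battery = max(0.0, robot.battery - DRAIN_RATE)


def line_cells(x0: int, y0: int, x1: int, y1: int) -> List[Cell]:
    """Cells on the Bresenham line from (x0, y0) to (x1, y1), endpoints included."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if (x0, y0) == (x1, y1):
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def can_see(robot: RobotState, target: Cell, walls: Set[Cell]) -> bool:
    """Target must be within the (assumed Chebyshev) radius, with no wall on
    the cells strictly between the robot and the target."""
    tx, ty = target
    if max(abs(tx - robot.x), abs(ty - robot.y)) > VISION_RADIUS:
        return False
    between = line_cells(robot.x, robot.y, tx, ty)[1:-1]
    return not any(cell in walls for cell in between)
```

With these rates, draining from 25 units to 5 units takes (25 - 5) / 0.2 = 100 steps, whereas recharging the same 20 units takes about 7 steps.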

The Human Agent

We define the human agent in the environment as a path-following agent that can give instructions to the robot agent. Both the instructions and the path can be given to the simulator as user inputs. In addition, when the robot blocks the human’s path, the human agent autonomously gives the move_away instruction to the robot.
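A path-following human agent of the kind described above might be sketched as follows. The class, its fields and the idea of scheduling user instructions by path index are hypothetical; only the automatic move_away request when the robot blocks the path is taken from the text.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


@dataclass
class HumanAgent:
    path: List[Cell]                                          # path supplied as simulator input
    scheduled: Dict[int, str] = field(default_factory=dict)   # hypothetical: path index -> instruction
    index: int = 0

    @property
    def position(self) -> Cell:
        return self.path[self.index]

    def step(self, robot_pos: Cell) -> Optional[str]:
        """Advance one cell along the path and return any instruction issued."""
        if self.index + 1 >= len(self.path):
            return None                        # end of path: stay in place
        next_cell = self.path[self.index + 1]
        if next_cell == robot_pos:
            return "move_away"                 # robot blocks the path: ask it to move
        self.index += 1
        return self.scheduled.get(self.index)  # user-scheduled instruction, if any
```

Keying instructions by path index rather than by cell avoids re-issuing an instruction when the resident passes the same cell on the way back.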

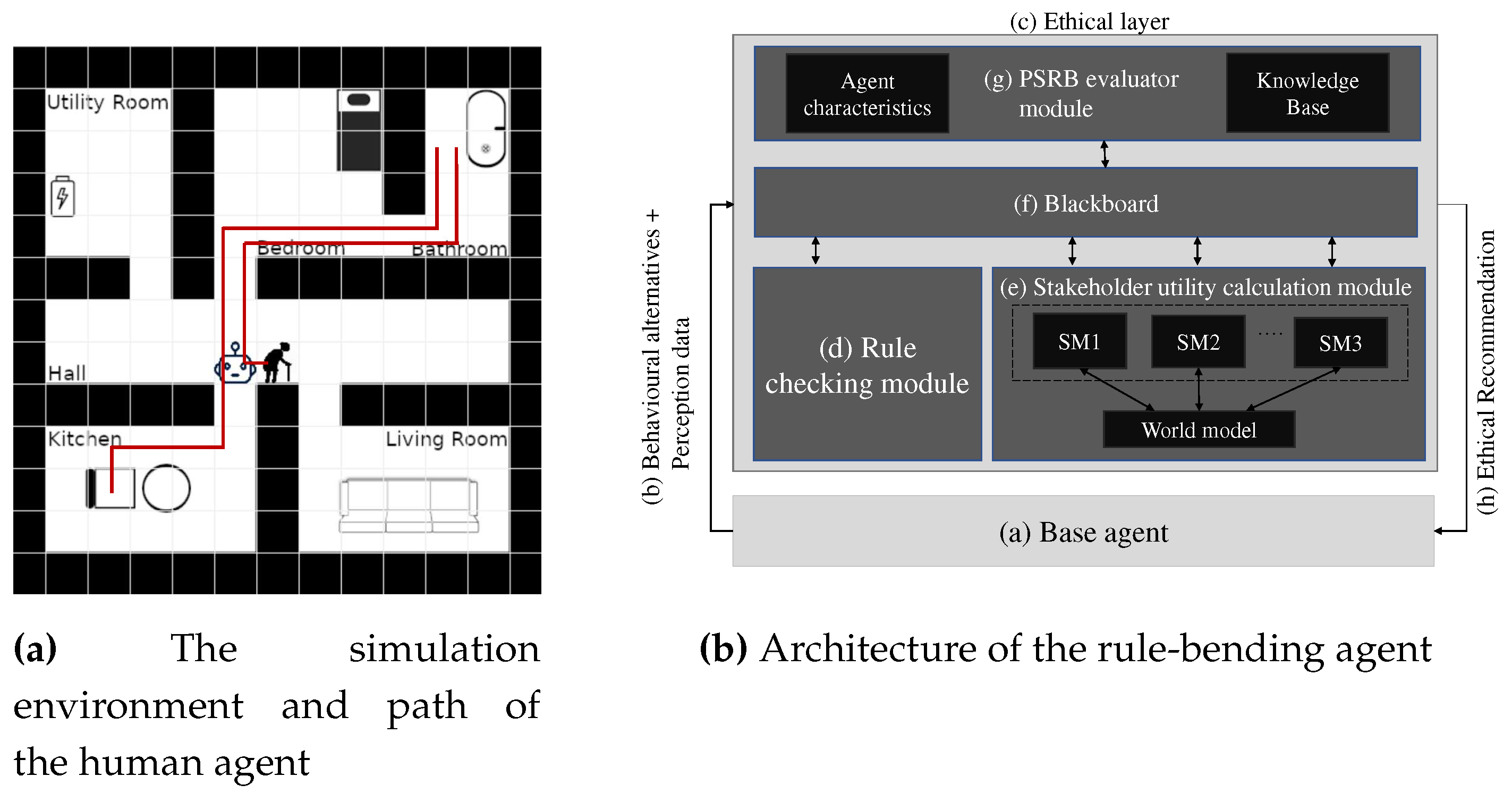

2.2. Architecture for a Pro-social Rule Bending Agent

We use the PSRB-capable computational architecture introduced in [6] and illustrated in Figure 1b. To our knowledge, this is the first implementation of a PSRB-capable agent. In this section, we briefly explain the architecture and its main elements (shown as (a), (b), ... in Figure 1b).

2.2.1. The Monitoring Robot Agent

The base agent (a) is an autonomous agent that collects perception data from the environment and decides its next move at every step. Its main goal is to follow the human agent assigned to it. The robot agent can also go to the charging station autonomously. It can follow three instructions:

1) move_away: triggers the behaviour of moving away.
2) do_not_follow_to__<room_name>: restricts the robot from moving to <room_name>.
3) continue (following): removes any restriction imposed by Instruction 2.

We refer to these as Instructions 1, 2 and 3 from here onwards. The robot only accepts these commands when it can see the command giver. The robot agent has several behaviour priorities. The highest priority is going to the charging station when the battery is below 5%. The next priority is following the resident. When it cannot see the resident, it tries to minimise the distance between itself and the resident’s last seen location. The robot agent generates a number of behavioural alternatives in a given situation and passes them, together with the perception data (b), to the ethical layer. The ethical layer recommends one or more behavioural alternatives to the base agent (h). When there is more than one recommendation, the base agent chooses among them according to its behaviour priorities.
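The base agent's behaviour priorities can be illustrated with a small selection routine. This is a sketch under assumptions: the behaviour names and the ordering of behaviours below the two stated priorities are hypothetical, and the ethical layer is represented only by the set of behaviours it recommends.

```python
from typing import Iterable, List, Optional

LOW_BATTERY_PCT = 5.0  # below this, charging is the top priority (from the text)


def priority_order(battery_pct: float, resident_visible: bool) -> List[str]:
    """Behaviour priorities, highest first. Behaviour names are hypothetical."""
    order = []
    if battery_pct < LOW_BATTERY_PCT:
        order.append("go_to_charge_station")
    # follow the resident if visible, otherwise close in on the last seen location
    order.append("follow_resident" if resident_visible else "go_to_last_seen")
    # assumption: any remaining behaviours are ranked in this fixed order
    for behaviour in ("stay", "go_to_charge_station"):
        if behaviour not in order:
            order.append(behaviour)
    return order


def choose_behaviour(recommended: Iterable[str], battery_pct: float,
                     resident_visible: bool) -> Optional[str]:
    """Pick the highest-priority behaviour among those the ethical layer recommends."""
    allowed = set(recommended)
    for behaviour in priority_order(battery_pct, resident_visible):
        if behaviour in allowed:
            return behaviour
    return None
```

The ethical layer filters what is allowed; the base agent's priorities only break ties among the recommendations.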

2.2.2. Rule Checking Module

The rule checking module (d) checks the permissibility of each action according to the rule set and stores the results, together with the IDs of any broken rules, on the blackboard. This implementation follows three rules:

1) When Instruction 2 is received, it is not permissible to go to <room_name> until the same resident issues Instruction 3.
2) When Instruction 1 is received, it is not permissible to refrain from moving away.
3) It is not permissible to go to the charging station when the battery level is above 25%.
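The three rules can be expressed as a simple permissibility check that records broken rule IDs on a blackboard. In this sketch, the blackboard is a plain dictionary, rule IDs 1 to 3 follow the order in which the rules are listed, and the Context and Behaviour types are hypothetical stand-ins for the robot's perception data and behaviour alternatives.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Context:
    battery_pct: float
    restricted_rooms: Set[str] = field(default_factory=set)  # set by Instruction 2, cleared by 3
    move_away_requested: bool = False                         # Instruction 1 received


@dataclass
class Behaviour:
    name: str
    target_room: Optional[str] = None  # room the behaviour would enter, if any
    moves_away: bool = False


def broken_rules(b: Behaviour, ctx: Context) -> List[int]:
    """IDs of the rules that behaviour b would break in context ctx."""
    broken = []
    if b.target_room is not None and b.target_room in ctx.restricted_rooms:
        broken.append(1)
    if ctx.move_away_requested and not b.moves_away:
        broken.append(2)
    if b.name == "go_to_charge_station" and ctx.battery_pct > 25.0:
        broken.append(3)
    return broken


def check_all(behaviours: List[Behaviour], ctx: Context,
              blackboard: Dict[str, List[int]]) -> None:
    """Write the permissibility result of every alternative to the blackboard."""
    for b in behaviours:
        blackboard[b.name] = broken_rules(b, ctx)
```

An empty list means the behaviour is permissible under the rule set; the PSRB evaluator can later contest a non-empty result.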

2.2.3. Stakeholder Utility Calculation Module

This module (e) calculates the utilities for the values ‘Autonomy’, ‘Wellbeing’ and ‘Availability’ for each stakeholder, at every step and for every behaviour alternative, and stores them on the blackboard. Note: the functions used in this implementation were created for the simulator; a real-world implementation will need more accurate utility functions.

Autonomy

We define the autonomy utility for this scenario considering two factors: whether the robot obeys the resident’s instructions, and whether it physically limits the resident from doing something. For this implementation, we consider the latter to be the more severe violation.
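The ordering in this definition can be made concrete with a toy function. The numeric levels are purely illustrative assumptions and not the authors' function; what the sketch preserves from the text is only that physically limiting the resident scores worse than disobeying an instruction.

```python
def autonomy_utility(obeys_instructions: bool, physically_limits: bool) -> float:
    """Hypothetical autonomy utility; the numeric levels are illustrative only."""
    if physically_limits:
        return -1.0  # most severe violation, e.g. blocking the resident's path
    if not obeys_instructions:
        return 0.0   # milder violation: ignoring an instruction
    return 1.0       # autonomy fully respected
```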

Wellbeing

We consider the resident’s wellbeing to be at its highest as long as the robot is able to see the resident and the resident is not in danger. The longer the robot loses sight of the resident (T), the lower the wellbeing score, because of the uncertainty about the resident’s state. Other variables, namely the average and standard deviation of the time spent in room r, the number of past emergencies p, and the resident’s health score, control the gradient of the utility function. However, when the behaviour is go to last seen, the wellbeing utility reflects the wellbeing-centred nature of the action and gives it a higher utility to encourage such behaviour. The wellbeing utility of behaviour i is computed from these quantities.
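As an illustration only, the following hypothetical stand-in has the qualitative properties described above: it is maximal while the resident is visible or when the behaviour is go to last seen, it decreases with the time T out of sight, and it decays faster for residents with more past emergencies or poorer health. The logistic form, the health scale in [0, 1] and the gradient formula are assumptions, not the authors' function.

```python
import math


def wellbeing_utility(behaviour: str, resident_visible: bool, t_unseen: float,
                      mean_t: float, std_t: float, past_emergencies: int,
                      health: float) -> float:
    """Hypothetical wellbeing utility in (0, 1]; health assumed in [0, 1], 1 = healthy."""
    if behaviour == "go_to_last_seen":
        return 1.0                      # wellbeing-centred action is encouraged
    if resident_visible:
        return 1.0                      # resident in sight: wellbeing at its highest
    z = (t_unseen - mean_t) / std_t     # how unusual the current absence is
    gradient = 1.0 + past_emergencies + (1.0 - health)  # steeper for riskier residents
    return 1.0 / (1.0 + math.exp(min(gradient * z, 700.0)))  # clamp avoids overflow
```

For example, with the bathroom statistics expressed in 30-second steps (mean 20, standard deviation 10), this stand-in returns exactly 0.5 when the resident has been out of sight for the average time.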

Availability

This utility declines as the robot’s battery level b decreases. However, when behaviour i is go to the charge station and the battery is low, the utility receives a positive boost that represents the ‘availability-maximising’ nature of that behaviour. The availability utility of behaviour i is therefore a function of b and of whether i is go to the charge station.
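Similarly, a hypothetical availability utility with the two properties described above (declining with b, and boosted for go to the charge station when the battery is low) might look as follows. The linear form, the 0.5 boost and the reuse of the 25% rule threshold as the meaning of "low" are assumptions.

```python
LOW_BATTERY_PCT = 25.0  # assumption: "low" reuses the charging threshold of the rule set
BOOST = 0.5             # hypothetical size of the availability boost


def availability_utility(behaviour: str, battery_pct: float) -> float:
    """Hypothetical availability utility in [0, 1]; not the paper's function."""
    base = battery_pct / 100.0  # falls as the battery drains
    if behaviour == "go_to_charge_station" and battery_pct <= LOW_BATTERY_PCT:
        return min(1.0, base + BOOST)  # reward the availability-maximising action
    return base
```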

2.2.4. PSRB Evaluator Module

The PSRB evaluator module has two main components: Knowledge Base and Agent Character.

Knowledge Base

The task of the knowledge base is to return the absolute or approximate expert opinion, given a context. To this end, we use Case-Based Reasoning (CBR). Implicit explainability, traceability, and the ability to work with incomplete queries and data are the main reasons we chose a CBR system. The latter is crucial in these types of scenarios because some cases might have additional variables that others do not have (e.g., last-seen location, last-seen time). The system uses a mix of perception data, calculated utilities and the behaviour to represent a case. For each expert opinion, the intention behind it is also recorded. When queried, the knowledge base returns the opinion on the acceptability of the behaviour and the intention behind it. An experienced elder-care practitioner was consulted to validate the knowledge base. This implementation uses the K-Nearest Neighbours algorithm, with an inverse distance voting function, as the retrieval algorithm; under a specific condition, it uses 5 as the weight of an instance.
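Case retrieval with k-nearest neighbours and inverse-distance voting can be sketched as follows. Distances are computed only over the features a query and a case share, which is one way of handling incomplete queries; this choice, k = 3, and applying the fixed weight of 5 to exact matches (where 1/d is undefined) are assumptions for illustration. Feature values are assumed to be on comparable scales; a real case base would normalise them.

```python
import math
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

Features = Dict[str, float]
Case = Tuple[Features, str, str]  # (features, expert opinion, intention)


def distance(query: Features, case: Features) -> float:
    """RMS difference over the features both sides share; inf if none are shared."""
    shared = [k for k in query if k in case]
    if not shared:
        return math.inf
    return math.sqrt(sum((query[k] - case[k]) ** 2 for k in shared) / len(shared))


def retrieve(query: Features, case_base: List[Case], k: int = 3,
             exact_weight: float = 5.0) -> Optional[Tuple[str, str]]:
    """Return (opinion, intention) by inverse-distance-weighted k-NN voting."""
    scored = sorted((distance(query, f), op, intent) for f, op, intent in case_base)
    neighbours = [s for s in scored if not math.isinf(s[0])][:k]
    if not neighbours:
        return None
    votes: Dict[str, float] = defaultdict(float)
    intention_of: Dict[str, str] = {}
    for d, opinion, intention in neighbours:
        votes[opinion] += exact_weight if d == 0 else 1.0 / d  # exact match: fixed weight
        intention_of.setdefault(opinion, intention)            # nearest case's intention
    best = max(votes, key=votes.get)
    return best, intention_of[best]
```

Because each case also stores the expert's intention, the retrieved answer can be explained by pointing at the nearest supporting case.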

Agent Character

There are many character traits that affect PSRB behaviour (e.g., risk propensity and the robot’s autonomy) [6]. The person or organisation authorised to set up the robot can define these character traits. For simplicity, we use value preferences as the only character variable. One can set a number in [1, 10] for each value (autonomy, wellbeing and availability), which reflects the agent’s precedence regarding that value. In this instance, the preferences are set so that the robot’s character prioritises wellbeing when needed. Only when the expert opinion and the robot’s character agree with each other, and the rule does not, does the system give a high desirability score to a rule-bending behaviour. The C values define upper and lower thresholds on the utility values to regulate the PSRB behaviour.
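The interplay between expert opinion, character and rules might be sketched as follows. The preference-weighted mean, the way the lower and upper C thresholds are applied, their values (0.4 and 0.6) and the example preferences are all hypothetical; the sketch only mirrors the stated condition that a rule-bending behaviour scores highly when expert opinion and character agree against the rule.

```python
from typing import Dict

VALUES = ("autonomy", "wellbeing", "availability")


def weighted_utility(u: Dict[str, float], prefs: Dict[str, float]) -> float:
    """Preference-weighted mean of the value utilities (prefs in [1, 10])."""
    total = sum(prefs[v] for v in VALUES)
    return sum(prefs[v] * u[v] for v in VALUES) / total


def psrb_desirability(breaks_rule: bool, expert_acceptable: bool,
                      candidate_u: Dict[str, float], compliant_u: Dict[str, float],
                      prefs: Dict[str, float], c_lower: float = 0.4,
                      c_upper: float = 0.6) -> float:
    """Hypothetical desirability score for one behaviour alternative."""
    candidate = weighted_utility(candidate_u, prefs)
    if not breaks_rule:
        return candidate  # no rule is bent: pass the score through
    # assumption: character endorses bending only if the rule-compliant option is
    # poor for the preferred values (<= c_lower) and the bending one is good (>= c_upper)
    character_endorses = (candidate >= c_upper and
                          weighted_utility(compliant_u, prefs) <= c_lower)
    return 1.0 if (expert_acceptable and character_endorses) else 0.0
```

With hypothetical preferences favouring wellbeing (for example 3, 8 and 2 for autonomy, wellbeing and availability), going into the bathroom is endorsed only once staying outside has become poor for wellbeing, and only if the knowledge base also deems it acceptable.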

3. Comparison of PSRB Behaviour with Other Approaches

3.1. Experiment Setup

3.1.1. Comparing Robot Agents

We implemented two additional robot agents for comparison: one that uses a deontological approach, and another that uses a utilitarian approach. These are among the most commonly used approaches, so they allow us to contrast the difference introduced by a PSRB-capable robot.

The first, which follows the deontological ethics approach, adheres to the rules specified in Section 2.2.2. These rules cannot handle every situation in the environment perfectly. This is intentional: it acknowledges the challenge of designing a comprehensive rule set that accounts for all contingencies in a complex environment. This limitation is also noticeable in many real-world rule systems, such as road rules and healthcare rules [1]. The second, an act-utilitarian agent, aggregates the resident’s utilities to calculate the desirability of each behaviour. For this implementation, two of the three utilities are given the same weight, while the weight of the third is altered to increase its effect on the total utility when its value is low. To reduce noise, the desirability of a behaviour is set to the maximum when the aggregated utility is higher than 0.5 and to the lowest value when it is less than 0. In every other case, the desirability score is set to a value that can be compared with those of the other behaviours. The code for the three agents, the experimental setup, and the simulation videos of each case discussed are publicly available online¹.
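The act-utilitarian aggregation and its clipping thresholds can be sketched as follows. The 0.5 and 0 thresholds come from the text; the choice of wellbeing as the value whose weight is increased, the 0.3 "low" threshold, the factor of 3, the use of 0 as the lowest score and passing intermediate scores through unchanged are assumptions.

```python
from typing import Dict

VALUES = ("autonomy", "wellbeing", "availability")


def weights_for(u: Dict[str, float], boosted: str = "wellbeing",
                low: float = 0.3, factor: float = 3.0) -> Dict[str, float]:
    """Equal weights, except one value's weight grows when its utility is low (assumed)."""
    w = {v: 1.0 for v in VALUES}
    if u[boosted] < low:
        w[boosted] = factor
    return w


def desirability(u: Dict[str, float]) -> float:
    """Act-utilitarian desirability with the 0.5 / 0 clipping thresholds."""
    w = weights_for(u)
    aggregate = sum(w[v] * u[v] for v in VALUES) / sum(w.values())
    if aggregate > 0.5:
        return 1.0       # maximum desirability
    if aggregate < 0.0:
        return 0.0       # lowest desirability (assumed to be 0)
    return aggregate     # assumption: in-between scores pass through unchanged
```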

3.2. Cases With and Without Dilemma

3.2.1. Base Conditions

In the experiment environment, time is measured in the number of steps. A summary of all the cases and the decisions made by the different moral reasoning implementations is given in Table 1.

Case 1

The resident takes the path shown in Figure 1a. First, they move from grid location (6, 5) to the bathroom along the indicated path. Just before entering, they issue do_not_follow_to__bathroom from grid location (10, 8). The resident remains in the bathroom for 20 steps (10 minutes, i.e., the average time). Afterwards, they return to the kitchen via the indicated path, issuing Instruction 3 (continue) when they reach grid point (8, 8).

Case 2

Same as Case 1, except that the resident does not come out of the bathroom.

Case 3

Same as Case 1, except that the resident takes longer than usual to exit the bathroom (40 steps, i.e., 20 minutes).

Case 4

Same as Case 1, except that the robot’s battery level is extremely low, so availability starts to conflict with wellbeing.

Case 5

Same as Case 1, except that the resident has a history of injury inside the bathroom.
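The case timings can be checked with simple arithmetic. Case 1 equates 20 steps with 10 minutes, so one step corresponds to 30 seconds; combined with the 10-minute average and 5-minute standard deviation from the dilemma description, Case 3's 40 steps lie two standard deviations above the average, and the 15-minute point of the dilemma corresponds to 30 steps. The helper names below are our own.

```python
SECONDS_PER_STEP = 30          # implied by "20 steps (10 minutes)" in Case 1
MEAN_MIN, STD_MIN = 10.0, 5.0  # bathroom-time statistics from the dilemma description


def steps_to_minutes(steps: int) -> float:
    return steps * SECONDS_PER_STEP / 60


def minutes_to_steps(minutes: float) -> float:
    return minutes * 60 / SECONDS_PER_STEP


def z_score(steps: int) -> float:
    """Standard deviations above the resident's average bathroom time."""
    return (steps_to_minutes(steps) - MEAN_MIN) / STD_MIN


for case, steps in [("Case 1", 20), ("Case 3", 40)]:
    print(case, steps_to_minutes(steps), "min, z =", z_score(steps))
# prints:
# Case 1 10.0 min, z = 0.0
# Case 3 20.0 min, z = 2.0
```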

4. Discussion of Behaviour

Case 1

This case demonstrates that, for most daily living activities, which are carefully considered at design time, all three robots perform as expected.

Case 2

The deontological agent illustrates the consequences of lacking an implicit rule about how long it is acceptable for the resident to stay in the bathroom. One could argue that its ethical governor limited the base agent’s full potential by precluding the base agent’s default behaviour. The utilitarian agent managed to allow this default behaviour, but only after a long period of waiting. Nevertheless, both of these agents may fail to send a life-saving alert to a human care-worker or an ambulance in time. The PSRB agent, on the other hand, triggered a PSRB behaviour that enabled the default behaviour, which is more suitable in the given circumstance. This result suggests that this approach can add flexibility to otherwise rigid governing systems, drawing on bottom-up knowledge collected through user feedback and the observation of expert behaviour.

Case 3

This case shows that PSRB is not infallible. The PSRB agent acts more cautiously than the other agents and checks on the resident. By doing so, it violates the resident’s autonomy without any gain. In this case, the deontological and utilitarian agents performed better than the PSRB agent. The main reason for this is the partially observable environment chosen for the experiment. We believe that partially observable environments are, in general, more representative of the real world.

Cases 4 and 5

These cases showcase how well the PSRB-capable system works, compared to traditional systems, when handling infrequent cases. In Case 4, the utilitarian agent abandons the resident as soon as it needs recharging. The deontological agent again blocks the default behaviour to uphold the resident’s autonomy, refraining from checking on the resident. However, the PSRB agent manages to stay close to the resident for as long as it can before going to the charging station. The PSRB evaluator’s refusal of the knowledge base’s suggestions (to go to the charging station from step 13) shows that the PSRB agent also regulates itself well in this scenario and does not overdo PSRB. Once sufficiently charged, the PSRB system activates again and allows the robot to check on the resident by moving towards the resident’s last seen location. In Case 5, the PSRB agent identified the change in context and acted accordingly. The utilitarian agent also shortened its wait before going in to check on the resident, although it still fell well short of the PSRB agent.

The behaviours shown in Cases 1–5 suggest that a PSRB-enabled robot is able to comprehend nuances in a situation where purely utilitarian and deontological approaches fall short. PSRB is not claimed to be a new school of ethics. Rather, it is an enhancement to existing approaches that results in increased attention to values such as autonomy and social welfare.

5. Conclusions and Future Work

This paper presented an implementation of a PSRB-capable ethical governor in an elder-care robot. Using the Bathroom Dilemma, the paper shows how adding PSRB behaviour can be beneficial in a simulated care environment, compared to existing computational approaches to ethical decision-making.

For future work, the authors intend to explore the acceptability of rule-bending robots in various contexts and cultures. Further, from a generalisability perspective, they aim to examine the scope and limitations of PSRB enhancements and the role of robot personality in decision-making.

Acknowledgments

Vivek Nallur gratefully acknowledges funding from the Royal Society via grant number IES101320, that has partially funded his work on Machine Ethics for Robotics in Ambient Assisted Living Systems.

References

[1] Bench-Capon, T.; Modgil, S. Norms and value based reasoning: justifying compliance and violation. Artificial Intelligence and Law 2017, 25, 29–64.

[2] Kazil, J.; Masad, D.; Crooks, A. Utilizing Python for Agent-Based Modeling: The Mesa Framework. In Social, Cultural, and Behavioral Modeling; Thomson, R.; Bisgin, H.; Dancy, C.; Hyder, A.; Hussain, M., Eds.; Springer International Publishing, 2020; pp. 308–317.

[3] Kim, J.W.; Choi, Y.L.; Jeong, S.H.; Han, J. A Care Robot with Ethical Sensing System for Older Adults at Home. Sensors 2022, 22, 7515.

[4] Morrison, E.W. Doing the job well: An investigation of pro-social rule breaking. Journal of Management 2006, 32, 5–28.

[5] Pirni, A.; Balistreri, M.; Capasso, M.; Umbrello, S.; Merenda, F. Robot Care Ethics Between Autonomy and Vulnerability: Coupling Principles and Practices in Autonomous Systems for Care. Frontiers in Robotics and AI 2021, 8, 654298.

[6] Ramanayake, R.; Nallur, V. A Computational Architecture for a Pro-Social Rule Bending Agent. In First International Workshop on Computational Machine Ethics (CME2021), held in conjunction with the 18th International Conference on Principles of Knowledge Representation and Reasoning (KR 2021), 2021.

[7] Ramanayake, R.; Nallur, V. A Small Set of Ethical Challenges for Elder-Care Robots. In Frontiers in Artificial Intelligence and Applications; Hakli, R.; Mäkelä, P.; Seibt, J., Eds.; IOS Press, 2023.

[8] Ramanayake, R.; Wicke, P.; Nallur, V. Immune moral models? Pro-social rule breaking as a moral enhancement approach for ethical AI. AI & Society 2022.

[9] Sharkey, A.; Sharkey, N. Granny and the robots: Ethical issues in robot care for the elderly. Ethics and Information Technology 2012, 14, 27–40.

[10] Tolmeijer, S.; Kneer, M.; Sarasua, C.; Christen, M.; Bernstein, A. Implementations in Machine Ethics: A Survey. ACM Computing Surveys 2021, 53. arXiv:2001.07573.

[11] Van Dang, C.; Tran, T.T.; Gil, K.J.; Shin, Y.B.; Choi, J.W.; Park, G.S.; Kim, J.W. Application of soar cognitive agent based on utilitarian ethics theory for home service robots. In 2017 14th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), 2017; pp. 155–158.

[12] Wallach, W.; Allen, C.; Smit, I. Machine morality: Bottom-up and top-down approaches for modelling human moral faculties. AI & Society 2008, 22, 565–582.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).