Introduction

The nascent quantum computing field has a number of theoretical algorithms proposed in recent decades, some with a supreme advantage over conventional computers at their specific tasks.[

1,

2,

3,

4] The Shor algorithm[

3] that can factor large numbers rapidly, breaking current RSA encryption. RSA encryption relies on current computers, even the world’s most powerful, taking far too long, on the timescale of years, to break. However, there exist two extremely powerful applications of quantum computing to physical chemistry, the first of which would entirely transform the field. This application is exactly solving for the ground state energy of the Schrödinger equation for quantum systems.[

5,

6] The second is solving linear sets of equations which arise in chemical kinetics.[

7] Rapid solutions to large linear systems of equations would advance chemical kinetics modeling.[

7,

8,

9,

10]

Quantum computers have been shown, in principle, to achieve accurate solutions to the Schrödinger equation for molecules in far less time than needed for equivalent calculations from current and planned exascale computing. In additional to hardware development of quantum computers, programming implementations are necessary to achieve the promise of rapid and nearly perfect quantum mechanical computations with quantum computers. Once achieved, though, such a technology will utterly change the face of the computational chemistry field, because the equilibrium concentrations and rate of any chemical reaction could be reliably known prior to experiment.[

1]

The quantum computer solves the Schrödinger eigendecomposition problem.[

11,

12] If only the ground state energy (eigenvalue of the Schrödinger equation), and not the wavefunctions (eigenvectors) can be precisely obtained at various ionic positions, a variational quantum algorithm could be employed.[

13,

14] The movement of the ions would be conducted with a classical algorithm, for example conjugate gradient, and the ground state energy would be obtained with a quantum computer.[

15,

16] This separation is allowed by the Born-Oppenheimer approximation.

An example experimental validation of a quantum computing solution to the Schrödinger equation is the overall reaction energy of the water-gas shift reaction (WGS). The overall reaction energy has been experimentally measured,[

17] and a prediction from a quantum computer could be immediately checked. WGS is the leading industrial reaction for producing hydrogen.[

18,

19] Once validated for accuracy, the goal of solving the Schrödinger equation will be to predict the exact reaction mechanism for any catalyst, at the molecular scale. That way, computational screening of catalysts would be far more tractable and rapid. For example, the exact mechanism of WGS is still not known for certain on the least complex single crystal precious metal catalysts. The molecular catalytic reaction mechanism underpins any improvements in catalyst design. Quantum computing would provide the speedup and accuracy to change this situation in the computational catalysis field.

The current leading computational catalysis method for finding molecular reaction mechanisms is density functional theory (DFT). DFT places all the uncertain part of the Schrödinger equation into a function of the electron density function of space.[

20,

21] A function of a function is known as a functional. The electron density field is changed over iterations until it is self-consistent, meaning the Schrödinger equation is satisfied, for that particular functional of electron density.[

22,

23]

The quantum computation of the Schrödinger equation is fundamentally different from its computation with DFT. In a quantum computer, the quantum phase estimation (QPE) circuit[

24] extracts the energy levels which are the eigenvalues of the Schrödinger equation for a molecule, solid-state material or any many-body set consisting of nuclei and electrons. The challenge lies in expressing the Hamiltonian operator (left-hand side of Schrödinger equation) containing the kinetic energy and potential energy operators, in the acceptable format of QPE. The acceptable format is an exponential of a Hermitian matrix representing the Hamiltonian operator.[

25] Current approaches include split-operator techniques[

12] and Taylor series approximations[

11,

26].

Density functional theory

The time-independent Schrödinger equation which governs elementary particles (electrons and nuclei in the context of physical chemistry) is,

is the Hamiltonian operator. An operator takes one function and returns another function. Unlike matrix algebra, in which a multiplication is taken across a matrix row, down a vector, and summed, an operator, denoted by a hat, , takes the wave function , and changes it. is the wavefunction of all the electrons. takes in position and returns wave amplitude. is the energy, and it is a scalar number.

In broad terms, the Hamiltonian operator consists of a kinetic energy operator and a potential energy operator. The kinetic energy operator performs a second-order partial derivative on the wavefunction with respect to space (the operator). The potential energy operator term of the Hamiltonian operator multiplies the potential energy function dependent upon vector variables for each elementary particle by the wavefunction. The potential energies are the coulombic potential energy of each elementary particle due to each of the other elementary particles. The difficulty in computation is that the positions of the elementary particles are variables and not constants. The result is analytical solutions become too large to solve.

Density functional theory (DFT) gets around analytical solutions with a numerical approach. To begin with, an amplitude is initiated at each point in space. This is a (wave)function because it takes in a location and returns an amplitude.[

21] A functional takes the field and changes it.[

23] Since it is a function of a function, it is called a functional. DFT derives its name from functionals of the electron density field. (The electron density is the square of the wavefunction amplitude).

Hohenberg,[

21] Kohn and Sham[

20,

23] separate out the Hamiltonian as,

The first term in equation 2 is the static potential energy of each electron in an interacting inhomogeneous electron gas. The potential energy is static because it is due to unmoving nuclei (the Born-Oppenheimer approximation allows this separation). is the potential function of , the vector indicating position of an electron. is the density of the electron gas at position . The second term in equation 2 is the potential energy due to one position in the electron density field due to another position in the electron density field.

is functional (function of a function of position) of the electron density.

is split into two terms,

is the kinetic energy functional. is the exchange-correlation functional. First, the kinetic energy functional, besides multiplication by constants, takes the second derivative (the momentum operator). There are numerical methods for taking the second derivative at all points in a field. Second, the exchange-correlation functional accounts for two electronic energies that arise. The exchange energy is the stabilization due to electrons being able to exchange among equal energy (also known as degenerate) orbitals. For example, the three orbitals are at the same energy level, and electrons may exchange between those three. The correlation energy correction is phenomena of how the electrons of a system interact with each other. Further explanation of electron correlation energy is outside of the scope of this article. With all the Hamiltonian operation on the electron density field in place, the field can be changed during numerical iterations to find a self-consistent field. The self-consistent field satisfies the Schrödinger equation for the guessed functional form of electron density for the exchange-correlation energy.

Quantum computing will not work with a guess of the functional form of electron density for the exchange-correlation energy, but rather return to accounting each electron and nucleus with their entangled wavefunctions. Within quantum phase estimation (QPE), the analytical form of the wavefunction will likely remain unknown and unmeasurable. However, the ground state energy, or the phase of the Hamiltonian operator, is the most crucial information.

Quantum phase estimation

QPE is the quantum computing algorithm which solves eigendecomposition problems and underpins many of the prominent quantum algorithms.[

27,

28,

29] QPE extracts the eigenvalues and eigenvectors of a unitary matrix,

. Matrices are in bold font. A unitary matrix times its complex conjugate transpose, by definition, is equal to the identity matrix. For example,

Here, is the complex conjugate transpose of . is the identity matrix. By contrast, a Hermitian matrix is equal to its complex conjugate transpose, and the asterisks could be removed from equation 4. A Hermitian matrix is therefore necessarily also unitary. The eigenvalues of a unitary matrix have a norm, or length, of one. The non-complex equivalent of a unitary matrix is an orthonormal matrix. Fortunately, quantum computing gates are square, and the complex conjugate transpose is the inverse, which does not hold true for non-square matrices.

The eigenvectors of a unitary matrix are orthogonal to each other. The significant outcome of this property is that the eigenvalues preserve the overall lengths of the eigenvectors. This property harks back to the probabilities of all the computational bases of qubits, i.e. possible bitstrings measured, summing to one. This property of unitary matrices is known as length preservation. The vector of qubits maintains unit length.

The eigendecomposition equation for

which is executed by QPE is,

In equation 2, the eigenvector is

. The

ket notation may be perceived as a vertical vector, the matrix mechanics equivalent. A matrix multiplication is denoted by

in

bra-ket notation. The imaginary number is denoted

, where

. Equation 5 is set up specifically with the special case of the eigenvalues being of the form

. For any matrix containing any eigenvalues and eigenvectors, further preparation is necessary to arrive at the form of equation 5. These preparations vary by the type of matrix,[

30] are outside the scope of this Article. Nonetheless,

may be thought of as containing the eigenvalues of a matrix which has been transformed to

.

may be also be considered converted to radians (unitless) by the multiplicative constant

in equation 5.

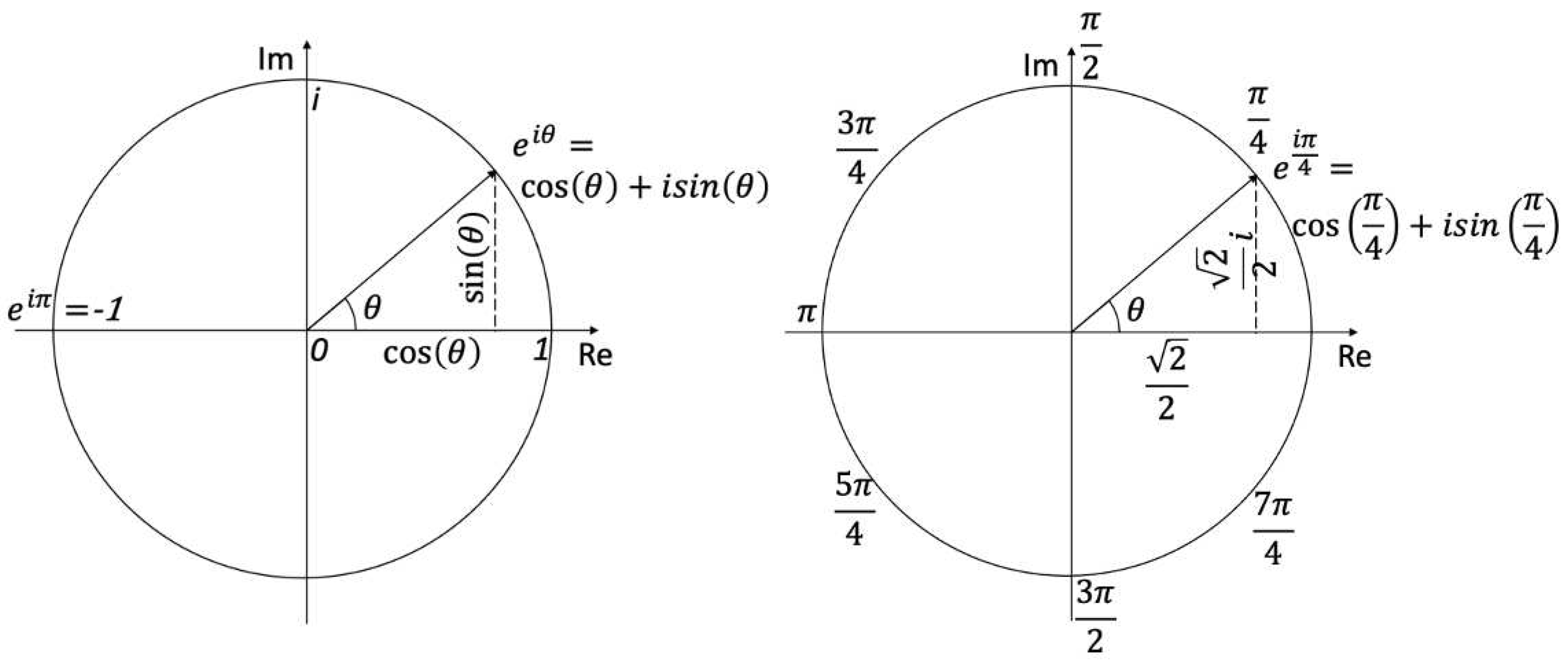

The eigenvalues in equation 5 will always lie on the complex unit circle as visualized in

Figure 1a. On the complex plane,

is always length 1. The length of a complex number is

, where

is the real part and

is the imaginary part. For example,

always contains only real parts when

is a multiple of

(pointing to the left perfectly horizontal). The eigenvalues of equation 5 are unit vectors on the complex plane.

A unitary matrix has advantages in calculation. The dot products of the column vectors of

are zero (inner product) except when dotted with itself, in which case it is 1. This property may be demonstrated with the following unitary matrix and its complex conjugate,

For demonstration purposes,

may be written as two orthonormal vectors:

Then, the property of unitary matrices is checked:

The dot product equaling 1 establishes unit length of the vector. This check is analogous to the norm, in which the algebra is the same. Taking

:

In equation (6), when the norm (length) of a complex number is taken, the complex conjugate (opposite sign imaginary part) is multiplied with the original complex number.

A unitary matrix with known eigenvalues and eigenvectors may be created. For example,

. The eigenvalues are placed in the diagonals of the

matrix.

The eigenvalues and eigenvectors of matrix

are then,

In QPE, the eigenvalues of

are “kicked back” in a Fourier basis, which are extracted by an inverse quantum Fourier transform.[

31] The eigenvalues,

, are kicked back in this way. A quantum Fourier transform (QFT), those eigenvalues are converted back to

’s.

A scalar example of

is considered (1x1 matrix). This is a rotation of

around the complex unit circle, as seen in

Figure 1b. Therefore, the value of

is,

When applied as a gate in a quantum circuit in QPE, the matrix is made into a controlled- matrix. The control is created by adding the identity matrix, , to the upper left block, and the matrix to the lower-right block. The upper-right and lower-right blocks of this matrix are zeroes. The “controlled” term means that a qubit controls whether or not the gate is applied to another qubit. In QPE, the control qubits are the qubits onto which the phase, or eigenvalue, is kicked back. In the simplifying case of a scalar, the matrix is dimension 2x2, with a one in the upper left, and the scalar in the lower-right.

This

matrix is for the

in equation 12 is,

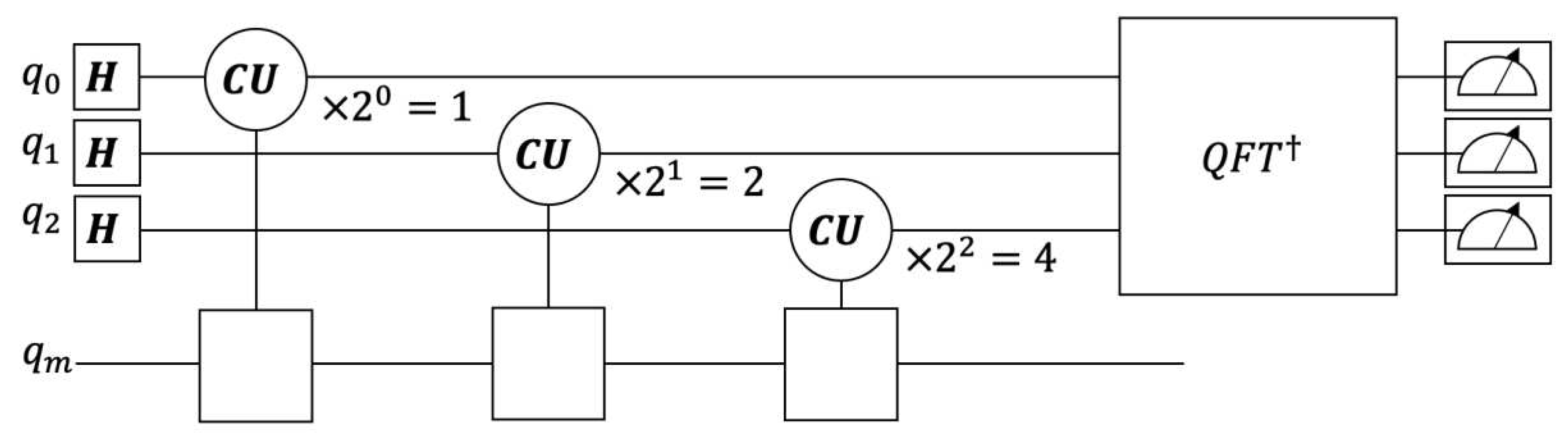

To begin QPE, Hadamard gates,

, are applied to each of the qubits.

The Hadamard gate happens the same as the 2-dimensional discrete Fourier transform gate.[

32] Each qubit of the compute register will receive the

matrix

number of times, where

is the qubit number. The zeroeth qubit will receive the

matrix once, the first qubit will receive the

matrix twice, and the third qubit will receive the

matrix four times. These gates and their number of repetitions are shown in

Figure 2.

Next, an inverse QFT,

, block of gates converts the eigenvalue, in the format of

to the format from which

may be measured as a computational basis (bitstring). The (forward) QFT block applies the discrete Fourier transform matrix. Here is a 2-dimensional QFT matrix,

The result of

radians on the complex unit circle equaling -1 may be observed from

Figure 1.

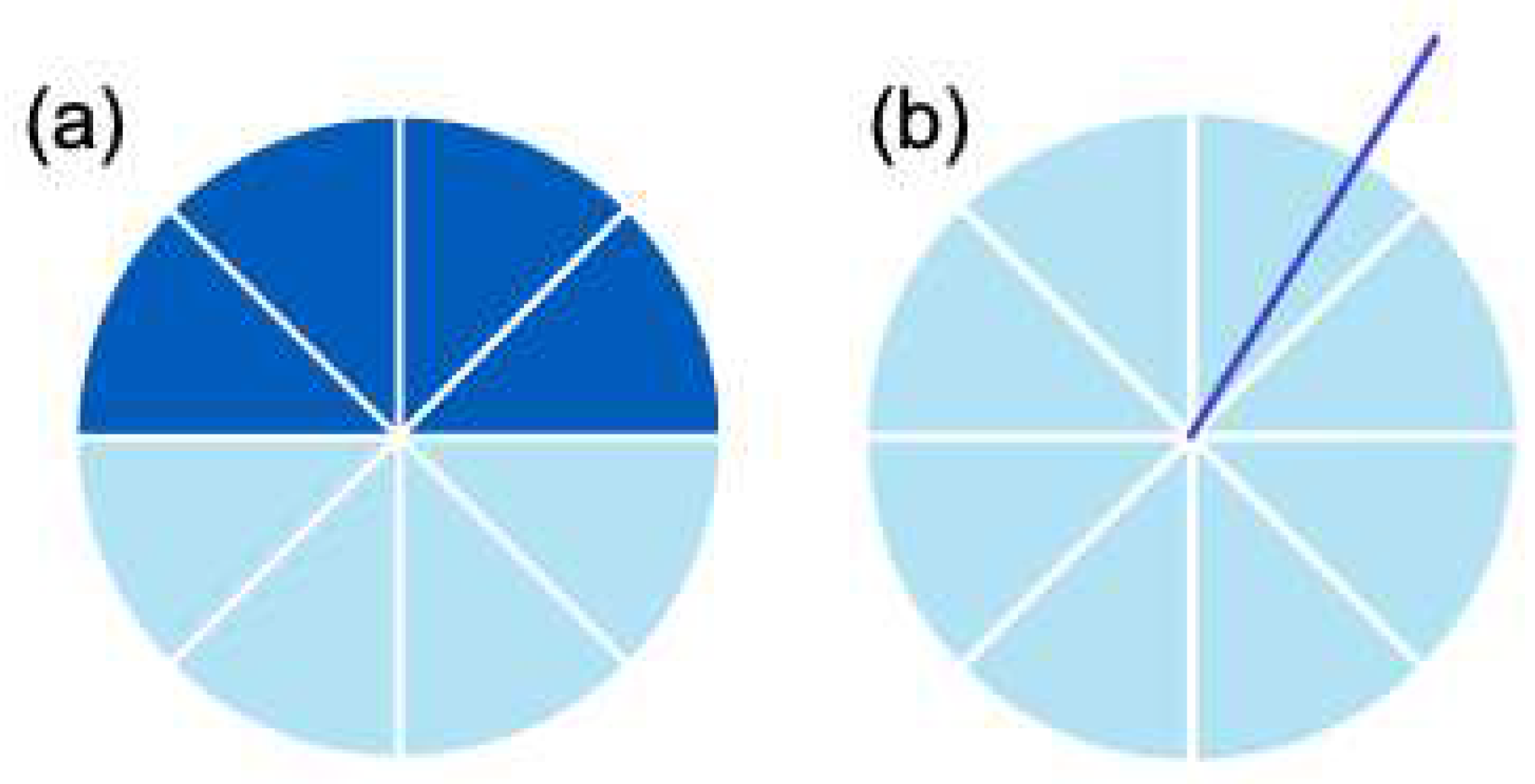

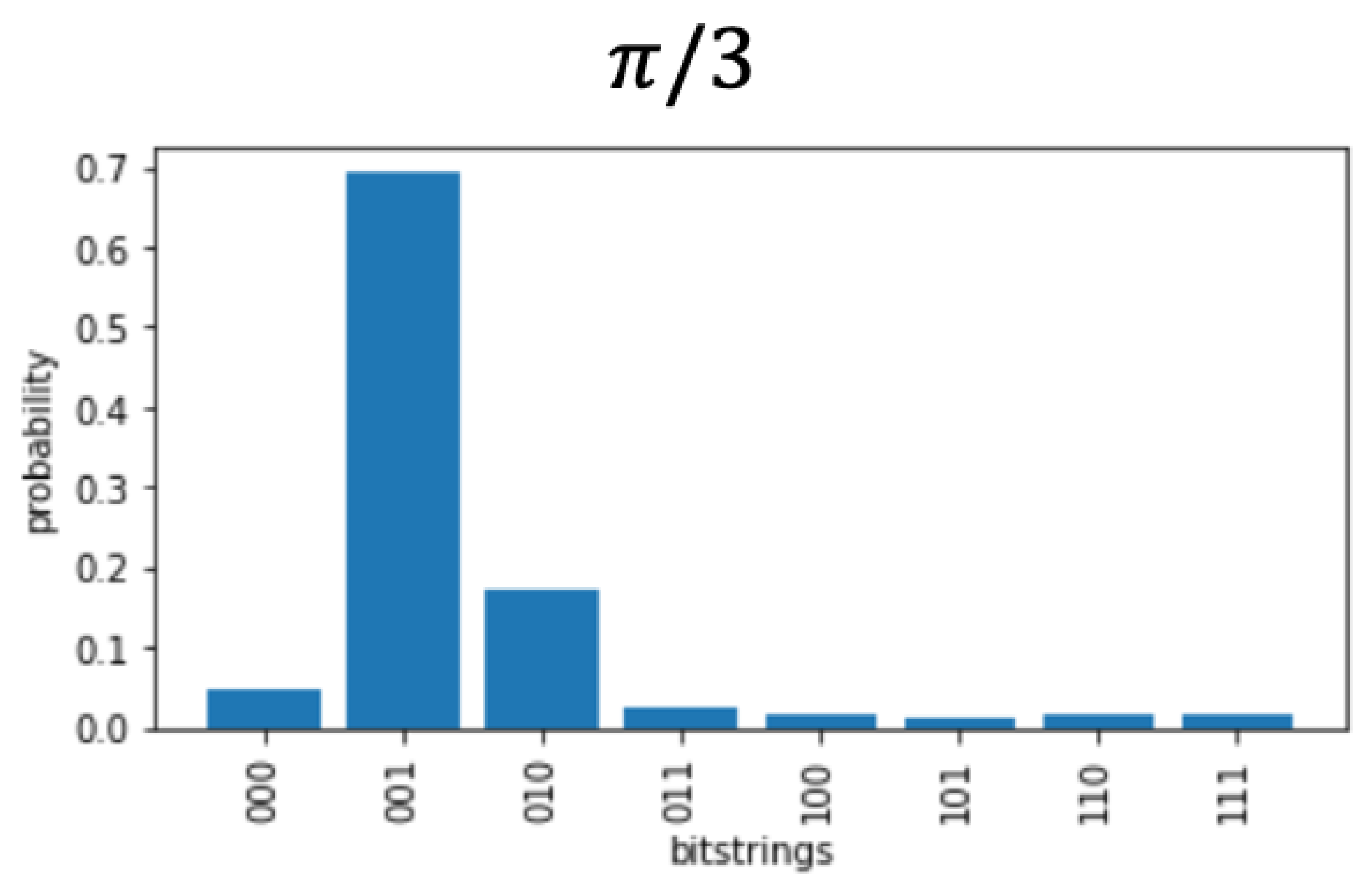

Figure 3a displays

rotation with the

allowed steps due to the maximum precision of 3 qubits.

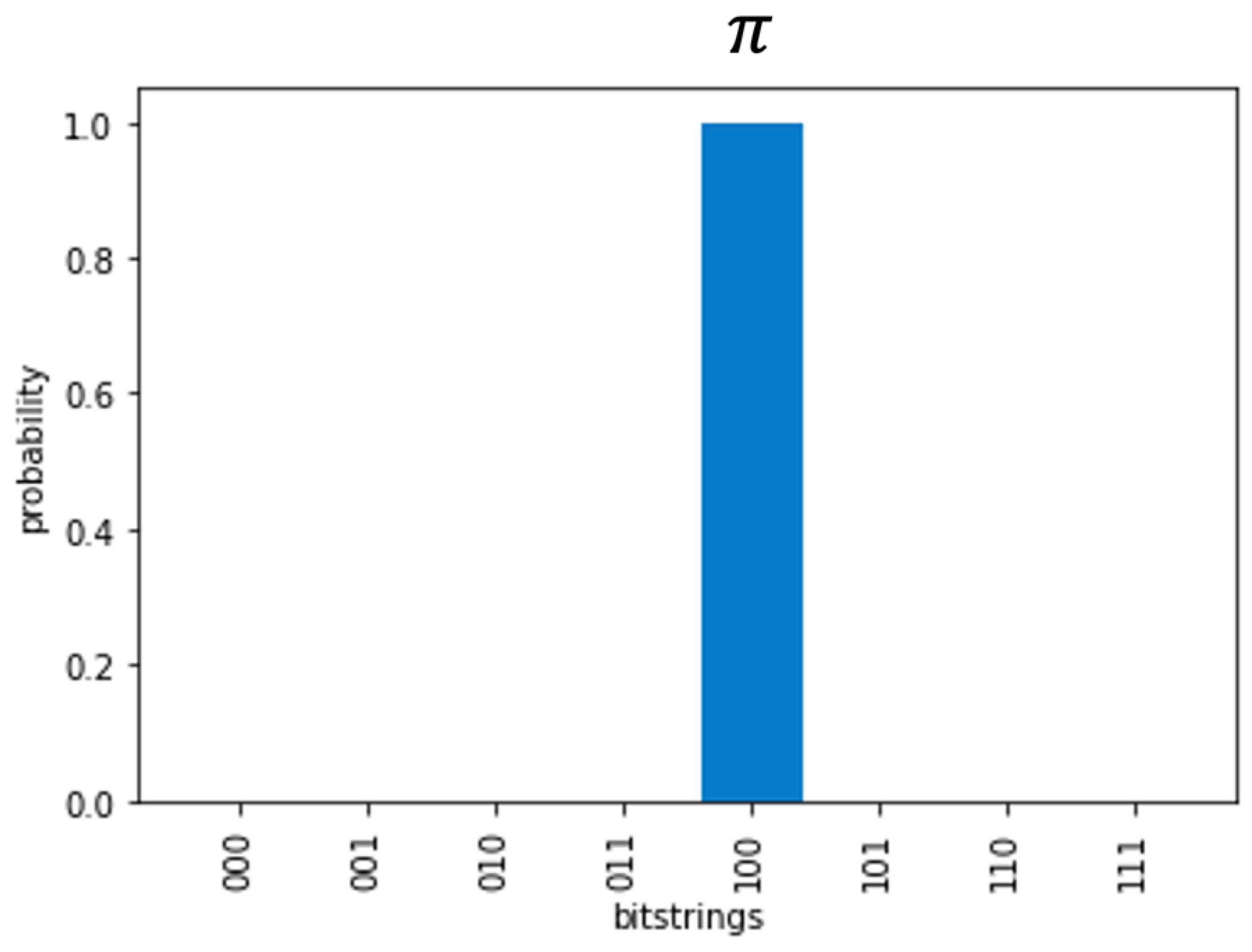

Figure 4 displays the measurement when the precise

rotation is taken.

Figure 4 displays how the probability is distributed among possible measurements when a rotation is not on the increments allowed by the precision due to the number of qubits.

is the QFT matrix.

is the Nth root of unity, and

.

is the dimension.

The

gate for 3 qubits (example here) has dimension 8x8.[

32]

This gate is not directly applied to the three qubits. There are a number of gates applied in sequence.[

7]

Finally, the measurements output computational bases or bitstrings. Each qubit, when measured, collapses to a

or

. Since,

the intervals of

which result in exact computational bases are,

The values of

and their corresponding computational basis are listed in

Table 1. A count in binary numbers is spread over the interval of equation 17. When an eigenvalue is not precisely a multiple of the value in equation 18, the probability is spread among computational bases (

Figure 5). Statistical sampling of the quantum circuit is accomplished via multiple shots of the circuit. The precision is doubled with each extra qubit, as shown in equation 18, although the gate depth also increases, which is a hardware consideration.

This example has disregarded matrices with eigenvalues not on the complex unit circle, i.e. eigenvalues which are not of the format

. In fact, the

matrix is constructed from an original matrix which is necessarily Hermitian.[

32,

33] There is a block matrix form which can make any matrix Hermitian which doubles the matrix dimensions.

is an original Hermitian matrix.

is,

The eigenvalues are then in the complex unit circle format,

, where

was the original eigenvalue before exponentials were taken. There is an expense in taking the matrix exponential. There is a form of the Euler identity which can be applied to exponentials,[

34] but there will be computational cost in terms of taking sines and cosines. There is also an infinite series expansion of the matrix exponential, which may be truncated.[

30] Obtaining the matrix exponential is left out of the scope of this Article.

Particle in a box

Returning to the Schödinger equation (equation 1), the Hamiltonian operator[

35] for a single particle is expanded to yield,[

36]

is the reduced Planck’s constant. Instead of mass , a reduced mass is used to combine the mass of the nucleus and electron into one constant. Reduced mass, , is defined as refers to the mass of the nucleus, and is the mass of the electron. is the wavefunction of a single particle. In the particle in a box example, only the Cartesian dimension is retained.

The problem is visualized as a single subatomic particle sandwiched between two parallel infinite barriers of infinitely high potential, where the subatomic particle cannot be. The constant potential is also set to zero, which causes only the kinetic energy to remain in the Hamiltonian operator.

The analytical solution requires conceiving of a function for

such that the left-hand side of equation 22 yields the original

times some constant, which will be the value of

. The solution for

, which is not immediately derived but can be shown to be correct (known as an

ansatz), is,

and

are constants to be obtained shortly. Necessary boundary conditions for solving the particle in a box problem are that the amplitude of the wavefunction must be equal to zero at the walls. Assume the walls to be at

and

.

Since the interpretation of the wavefunction is that its square provides the probability of the particle being in the position . Therefore, according to equation 24, the particle has zero probability of being at the walls.

First,

is solved for. The position of

is selected to eliminate a term.

In equation 25,

, but

. Therefore,

by necessity of satisfying the equation at the boundary condition of

. The wavefunction is reduced to,

Derivatives of the wavefunction are obtained for further determination of the constants.

In the right-hand side of equation 27b, the original

reappeared. Multiple derivatives of the sine and cosine functions repeat in a known cycle. Therefore,

The second derivative of

with respect to

,

, is substituted into the Schrödinger equation for the particle in a box problem (equation 22).

As a side note,

. Plugging

into the wavefunction leaves only

unknown,

The other boundary condition provides a route.

Equation 31 is only zero (disregarding the unproductive

case), when the sine is some integer times

.

In equation 32a,

is not zero, because the equation would not be balanced in that case. The left-hand side of equation 33b fits into equation 30, yielding,

The probability of the particle being anywhere along the

dimension must total one. In mathematical terms, the integral of the probability must equal one. This is known as normalizing the wavefunction. Normalization is expressed,

The solution to the integral is lengthy. However, it has been previously solved[

37] and the solution is,

The wavefunction now has no unknown constants:

Equation 37 may now be substituted in equation 22,

Since the eigenvalue problem is solved, the constant when

may be placed in the lower right of a 2x2 controlled unitary matrix. The controlled unitary matrix,

, would then be,

When , the quantity in the lower right-corner is exactly 4 times the current value. Since increases by integer multipliers, and since there is a factor before , representing exactly one revolution around the complex unit circle, the eigenvalue measured by QPE will always be precisely the same for all energy levels. I.e., for any correct energy level, QPE will measure out the correct eigenvalue, or phase. However, there is some information loss, because the allowed energies scale by . Any integer multiplier will return the correct-appearing result due to the factor conducting precisely one revolution. Therefore, any would be indistinguishable from the correct in this QPE arrangement. The quantum harmonic oscillator, exhibited below

Harmonic oscillator

The harmonic oscillator has a symmetric potential energy which allows for an analytical solution to its energy levels (eigenvalues) and wavefunctions (eigenvectors). The Schrödinger equation with the Hamiltonian operator expanded for the harmonic oscillator example is,

The wavefunction,

, is not derived here, but the correct guess is,

and

are constants. Equation 41 is in the form of a gaussian function, and the tails of a gaussian function diminish to zero. This fact signifies that the wavefunction may be normalized, as essential criteria. Not fully shown here, the constant

cancels in the Schrödinger equation, via an exponent by becoming

. There remain

polynomial terms and

terms in the analytical solution. The

coefficients are set equal to obtain

. The

coefficients being equal yields,

Equation 42b also satisfies the Schrödinger equation when multiplied by the integer

, though the proof of this is not included in this Article. (The wavefunction also includes

in these other higher-energy solutions.)

is the reduced Planck’s constant, and

is the angular velocity, also assumed constant. Both sides of the eigendecomposition equation may be divided by a scalar,

. When placed on the complex unit circle, a full revolution occurs after 1, because of the multiplicative

factor. Therefore, only the scalar

is necessary. When the measured computational basis is

, or

, the solution may be,

is an integer. For example,

,

on the complex unit circle, is place exactly at

, also

on the complex unit circle. The matrix exponential is taken of

, and the control block is added. The

matrix is then very similar to the previous

matrix of equations 13 and 39:

The result, after matching with

Table 1, will be multiplied by the constant

.

Figure 4 shows the measurements from the code implementation of QPE. Subsequent to publication, all code will be made publicly available at

https://bitbucket.org/ericawalk/ oscillator/src/master/.

Hydrogen atom and larger

The hydrogen atom

does include potential energy that is nonzero and dependent upon position, specifically radial distance from the nucleus. I.e., it is more complex than the quantum harmonic oscillator example which experiences symmetric potential energy allowing for an analytical solution. For one electron in a hydrogen atom, the coulombic potential energy function is,[

35]

is the charge of the nucleus.

is the charge of the electron.

is the permittivity of a vacuum. Permittivity is a measure of how much an electric field can permeate a vacuum.

is the same spatial coordinate, radius.

The energy levels may be analytically solved in a lengthy procedure left out of the scope of this work. The final energy levels of the hydrogen atom are,

In the case of equation 46, the energy levels scale as , and therefore do not repeat at constant integer intervals. Therefore, for QPE, the various ’s for various ’s would have to be entered into the matrix diagonal. The dimension of the matrix inputted into QPE would increase with every new energy level, and many distinct eigenvalues would be measured. The ground-state energy, when , could be placed in a matrix and be measured, although the same may be said for nearly any number which follows certain conditions. The advantage of a quantum computer would come in solving multi-electron potential energy rather than the hydrogen atom.

The challenge exists in expressing the coulombic energies among multiple electrons in the potential energy function in a format for QPE. The positions of the electrons are not known and are left as variables in the multi-electron potential energy function. As put forward by Aspuru-Guzik, et al.,[

12] every entangled qubit state can represent a point in space. Then, the electronic structure may be tracked over a very short time to obtain the ground state energy. Future work will be writing Hamiltonians as numerical matrices via a finite difference method over the space. With a fine enough mesh due to a large number of entangle qubit states, greater and greater accuracy will be achievable. It is very likely that the matrix exponential via truncated Taylor series approach for quantum computing as demonstrated by Berry, et al.[

11,

26] will be employed on numerical Hamiltonian matrices. Another related option is to apply full configuration interaction up to a limit of number of electrons (and nuclei).[

38,

39] A remarkable advantage of quantum computing which has not been available thus far via analytical analysis, is that the wavefunction need not be measured, only the ground state energy.