1. Introduction

In contemporary computing, the majority of tasks require some form of digital signal processing [1]. As the computational capabilities of digital processing systems increase, so does their power consumption, a trend further exacerbated by rapid advances in artificial intelligence. Neuromorphic sampling, or time encoding, is an alternative to traditional digital encoding that transforms an analog signal into a sequence of low-power time-based pulses, often referred to as a spike train. Neuromorphic sampling draws its inspiration from neuroscience and introduces a paradigm shift by significantly reducing power consumption during encoding and transmission [2,3]. Despite these advantages, there is currently no equivalent of digital signal processing operations tailored to neuromorphic sampling. This unexplored territory holds promise for low-power and efficient signal processing.

In this paper, we address the problem of filtering a signal using its neuromorphic measurements, thus extending the principle of digital signal filtering to the case of neuromorphic sampling. In the literature, spike train filtering predominantly refers to convolving the filter function with a sequence of Diracs centered at the spike times [4]. This is not an operation on the analog signal that generated those neuromorphic samples. Moreover, for neuromorphic measurements generated from multidimensional signals, such as those produced by event cameras [5], filtering also refers to performing multidimensional spatial convolution [6,7]. Filtering the analog signal via its neuromorphic measurements was proposed in [8], but the process also involves signal reconstruction. A filtering approach for neuromorphic signals that does not require reconstruction was first studied in [9].

Conventionally, to process the signal underlying a sequence of spike measurements, the signal is first recovered in the analog domain, then filtered, and then sampled neuromorphically again. This approach has a number of drawbacks. First, it does not exploit the power consumption advantage of neuromorphic measurements. Second, it is computationally heavier, due to the complexity of signal reconstruction from neuromorphic samples [10,11]. Third, reconstruction is only possible if the input satisfies restrictive smoothness or sparsity constraints. In contrast, for conventional sampling, digital filtering is independent of the characteristics of the signal that generated the measurements.

In this paper, we derive a direct mapping between the time-encoded inputs and outputs of an analog filter. The proposed mapping forms the basis for a practical algorithm that filters the signal underlying a given set of neuromorphic measurements, without direct access to the signal. We introduce theoretical guarantees and error bounds for the measurements generated with the proposed algorithm. Through numerical simulations, we demonstrate that, compared with the existing conventional method, our method is faster and imposes fewer restrictions on the input signal.

This paper is structured as follows. Section 2 presents a brief review of the time encoding model used in this paper. Section 3 formulates the proposed problem. Section 4 describes the proposed filtering method. Numerical results are presented in Section 5, and Section 6 presents the concluding remarks.

2. Time Encoding

The time encoding machine (TEM) is a conceptualization of neuromorphic sampling that maps an input $u(t)$ into a sequence of samples $\{t_k\}_{k\in\mathbb{Z}}$. The particular TEM considered here is the integrate-and-fire (IF) TEM, which is inspired by neuroscience. Consequently, the sequence $\{t_k\}_{k\in\mathbb{Z}}$ is called a spike train, where a spike refers to the firing of an action potential, the mechanism by which information is transmitted in the mammalian cortex. Previously, the IF model was used for system identification of biological neurons [12,13], to perform machine learning tasks [14,15], and for input reconstruction [11,16,17,18,19]. The IF model adds a bias parameter $b$ to the input $u(t)$, and subsequently integrates the result to generate a strictly increasing function. When this function crosses the threshold $\delta$, the integrator is reset and the IF generates an output spike time $t_{k+1}$. The IF TEM is described by the following equations

$$\int_{t_k}^{t_{k+1}} \left(u(s) + b\right) \mathrm{d}s = \kappa\delta, \quad k \in \mathbb{Z}, \tag{1}$$

where $\kappa > 0$ is the integrator constant. Without loss of generality, it is assumed that $\kappa = 1$. A common assumption is that the input is bounded by $c$, such that $|u(t)| \le c < b$. This bound enables deriving the following density guarantees [2]

$$\frac{\delta}{b + c} \le t_{k+1} - t_k \le \frac{\delta}{b - c}, \quad k \in \mathbb{Z}. \tag{2}$$
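The encoding described above can be simulated directly. The following Python sketch is a minimal illustration, assuming the standard IF formulation in which the integral of $(u(t)+b)/\kappa$ between consecutive spikes equals a threshold $\delta$. The symbols $\kappa$ and $\delta$, the uniform grid step `dt`, the trapezoidal rule and the reset-by-subtraction are choices made here, not details taken from the text, and the function name `if_tem` is hypothetical. The usage lines compare the observed inter-spike intervals with the density bounds $\delta/(b+c)$ and $\delta/(b-c)$ for $\kappa = 1$.

```python
import math

def if_tem(u, b, delta, t_end, kappa=1.0, dt=1e-4):
    """Hypothetical IF TEM simulator returning spike times in (0, t_end].

    The integrator accumulates (u(t) + b) / kappa and fires when it reaches
    delta; the threshold is then subtracted (reset). dt must be much smaller
    than the shortest inter-spike interval kappa * delta / (b + c).
    """
    spikes = []
    z = 0.0  # integrator state, reset at t = 0
    for n in range(int(round(t_end / dt))):
        t0, t1 = n * dt, (n + 1) * dt
        # Trapezoidal increment of the integral over [t0, t1].
        z += 0.5 * dt * (u(t0) + u(t1) + 2.0 * b) / kappa
        if z >= delta:
            # Locate the threshold crossing inside the step by linear interpolation.
            slope = (u(t1) + b) / kappa
            spikes.append(t1 - (z - delta) / slope)
            z -= delta
    return spikes

# Usage: a bounded input with c = 0.3 < b = 1.
b, c, delta = 1.0, 0.3, 0.05
spikes = if_tem(lambda t: c * math.sin(2 * math.pi * t), b, delta, t_end=2.0)
gaps = [t1 - t0 for t0, t1 in zip(spikes, spikes[1:])]
print(f"{len(spikes)} spikes; gaps in [{min(gaps):.4f}, {max(gaps):.4f}]")
print(f"density bounds: [{delta / (b + c):.4f}, {delta / (b - c):.4f}]")
```

The grid step should be much smaller than the shortest inter-spike interval; otherwise two threshold crossings could fall within a single step and one of them would be missed.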

In the general case, the signal $u$ is not recoverable from $\{t_k\}_{k\in\mathbb{Z}}$. To ensure it can be reconstructed, restrictive assumptions must be imposed. A common assumption is that $u$ belongs to $PW_\Omega$, the space of functions bandlimited to $\Omega$ that are also square integrable, i.e., $u \in L^2(\mathbb{R})$. If this assumption is satisfied, then $u$ can be recovered from $\{t_k\}_{k\in\mathbb{Z}}$ if

$$\frac{\delta}{b - c} < \frac{\pi}{\Omega}. \tag{3}$$

Further generalizations were introduced for the case where $u$ is a function in a shift-invariant space [11,17] or in a space with finite rate of innovation [18,19]. However, if $u$ does not belong to one of the classes above, or if it is bandlimited but does not satisfy (3), then the conventional theory does not allow any processing of the signal $u$ via its samples $\{t_k\}_{k\in\mathbb{Z}}$. The same is not true for conventional digital signals, which can be processed even when they are not sampled at the Nyquist rate. We will show that some types of processing, such as filtering, remain possible even when (3) does not hold.
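Assuming that (3) takes the standard form of [2] with $\kappa = 1$, namely $\delta/(b-c) < \pi/\Omega$, the condition simply requires the largest inter-spike interval allowed by the density bound to be shorter than the Nyquist period $\pi/\Omega$. The hypothetical helpers below check this for given neuron parameters and a bandwidth $\Omega$ in rad/s, and return the largest bandwidth for which the condition can hold.

```python
import math

def max_gap(b, c, delta, kappa=1.0):
    """Upper density bound kappa * delta / (b - c) on t_{k+1} - t_k."""
    if not 0.0 <= c < b:
        raise ValueError("the IF TEM requires |u(t)| <= c < b")
    return kappa * delta / (b - c)

def bandlimited_recovery_ok(b, c, delta, omega, kappa=1.0):
    """Sufficient condition for recovering u in PW_omega (omega in rad/s)."""
    return max_gap(b, c, delta, kappa) < math.pi / omega

def max_bandwidth(b, c, delta, kappa=1.0):
    """Supremum of the bandwidths omega that satisfy the condition above."""
    return math.pi / max_gap(b, c, delta, kappa)

# Usage: b = 1, c = 0.5, delta = 0.05 gives a maximum gap of 0.1 s.
print(max_bandwidth(1.0, 0.5, 0.05))                             # about 31.4 rad/s
print(bandlimited_recovery_ok(1.0, 0.5, 0.05, 2 * math.pi * 4))  # True (4 Hz)
print(bandlimited_recovery_ok(1.0, 0.5, 0.05, 2 * math.pi * 6))  # False (6 Hz)
```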

3. Problem Statement

Here we formulate the proposed signal filtering problem as follows. Assume the neuron input is continuous, i.e., $u \in C(\mathbb{R})$. To satisfy the neuron encoding requirement in Section 2, we assume the input is bounded, such that $|u(t)| \le c < b$. Furthermore, we assume that the input is absolutely integrable, $u \in L^1(\mathbb{R})$, and square integrable, $u \in L^2(\mathbb{R})$. Following the same idea as in digital filtering, we do not impose any general conditions on the bandwidth, smoothness, or sparsity of the analog signal in order to compute the filter output.

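The filter is assumed linear, with impulse response $h$ that is continuous, $h \in C(\mathbb{R})$, and absolutely integrable, $h \in L^1(\mathbb{R})$. The output of the filter then satisfies

$$|y(t)| = \left| \int_{\mathbb{R}} h(s)\, u(t - s)\, \mathrm{d}s \right| \le \|h\|_1\, c \le c, \tag{4}$$

where the last inequality assumes that $\|h\|_1 \le 1$, which is introduced to ensure that $|y(t)| \le c < b$, which in turn allows $y$ to be sampled by the same neuron. According to the properties of the convolution operator, we also have that $y \in L^2(\mathbb{R})$, where $L^2(\mathbb{R})$ denotes the space of square integrable functions.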
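The bound $|y(t)| \le \|h\|_1 c$ can be sanity-checked numerically. The sketch below is only an illustration under assumptions chosen for this purpose: a causal exponential impulse response $h(t) = a e^{-at}$, $t \ge 0$ (so $\|h\|_1 = 1$), a random piecewise-constant input bounded by $c$, and a left Riemann sum in place of the convolution integral. The helper `riemann_conv` is hypothetical.

```python
import math
import random

def riemann_conv(h, u, t, s_max=3.0, ds=1e-3):
    """Left Riemann sum for y(t) = integral over [0, s_max] of h(s) u(t - s) ds."""
    n = int(round(s_max / ds))
    return ds * sum(h(k * ds) * u(t - k * ds) for k in range(n))

a, c = 5.0, 0.4
h = lambda s: a * math.exp(-a * s)  # causal low-pass with ||h||_1 = 1

random.seed(0)
levels = [random.uniform(-c, c) for _ in range(1000)]

def u(t):
    """Piecewise-constant random input, |u(t)| <= c, zero outside [0, 10)."""
    k = math.floor(100 * t)
    return levels[k] if 0 <= k < len(levels) else 0.0

# Discrete counterpart of ||h||_1 (h >= 0, so convolving with 1 gives it).
# The bound holds exactly for the sums: |sum h_k u_k ds| <= sum |h_k| ds * max |u_k|.
h_l1 = riemann_conv(h, lambda t: 1.0, 0.0)
y_max = max(abs(riemann_conv(h, u, 0.1 * n)) for n in range(100))
print(f"max |y| = {y_max:.4f} <= ||h||_1 * c = {h_l1 * c:.4f}")
```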

Let

and

be the neuromorphic samples of signals

u and

y, respectively, computed using an IF neuron with parameters

. The proposed problem is to compute

knowing

, sampling parameters

and filter

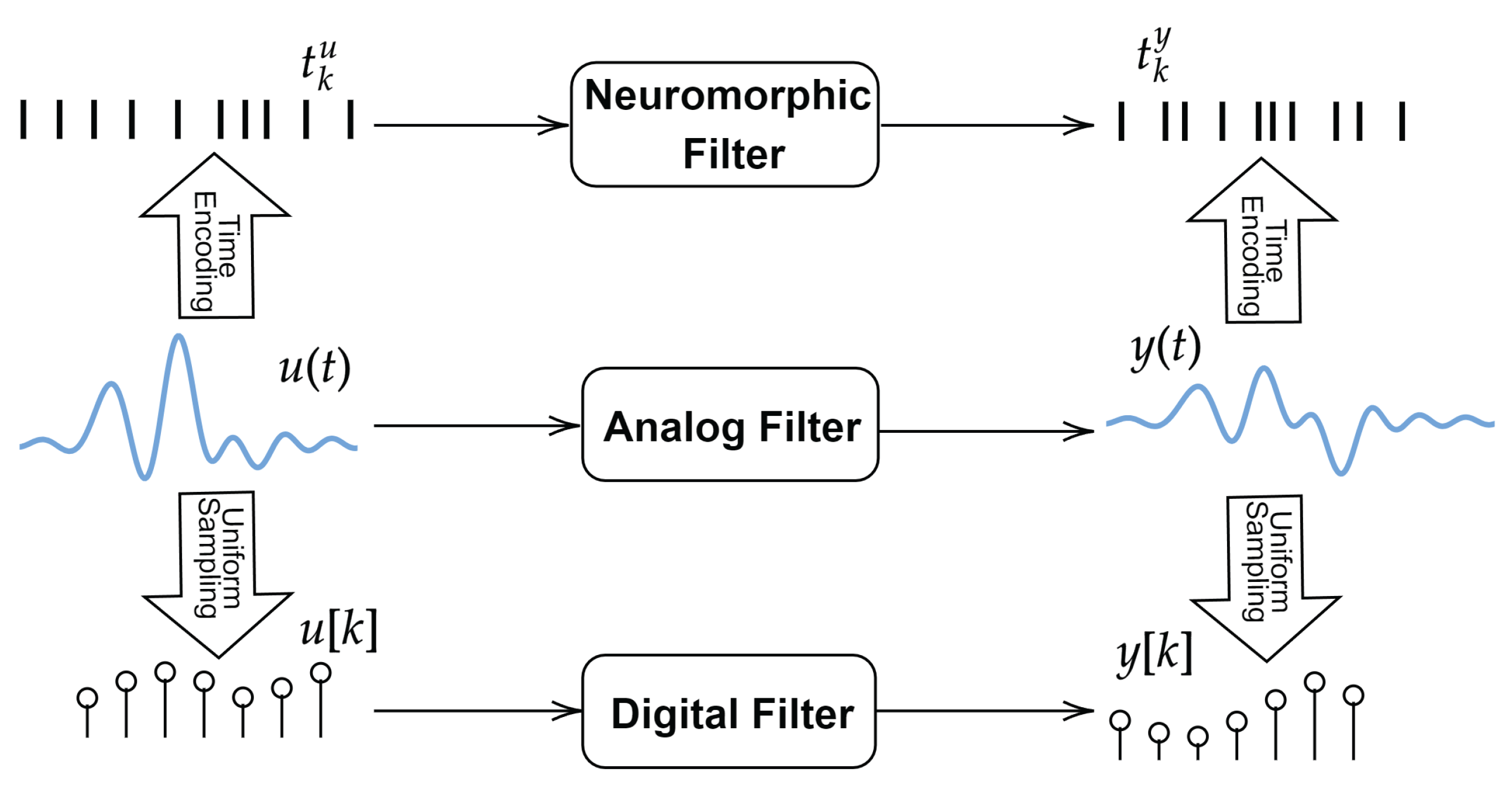

. This problem, illustrated in

Figure 1, is inspired from digital signal processing, where a digital filter is applied directly to the samples of a signal. The conventional way to address this problem would be to recover

from

, apply filter

in the analog domain, and subsequently sample the output

with the same IF model to get

. We will refer to this as the

indirect method for filtering. The first step of recovery, however, is not possible unless we impose some further restrictive conditions on

such as being bandlimited [

20], shift-invariant [

11,

17], or having a finite rate of innovation [

16,

18]. Therefore, the proposed problem is not solvable it its full generality using conventional approaches.

However, if we replace neuromorphic sampling with conventional uniform sampling, this problem reduces to the widely used operation of digital filtering, which does not require any special conditions on the analog signal that generated the samples. Therefore, an equivalent of this solution for the case of neuromorphic sampling is highly desirable.

4. The Proposed Neuromorphic Direct Filtering Method

In this section we describe the proposed direct filtering method. To compute

from

we need to create an analytical link between the integrals of the underlying analog signals $u$ and $y$, due to the integral operator in (6). To this end, we define the following auxiliary functions

We note that $U$ satisfies

. Using Young’s convolution inequality, we get that

Using these functions, we derive the equivalent of the t-transform equations (1) as

Assuming we know

, the target spike train

satisfies

, where

The following result shows that

can be uniquely computed using

.

Lemma 1 (Exact Output Samples Computation)

Function has a unique fixed point . Furthermore, let be computed recursively such that is arbitrary and

Then and .

Proof. Assume, by contradiction, that

such that

. It follows that

On the other hand, we know that

due to (4), and thus

is a strictly increasing function of

, which ensures that

has a unique solution. Using (6), we get that

, which invalidates our initial assumption and proves uniqueness.

From (6), it follows that

The following holds

and thus

Let

and

. It follows that

Similarly, by choosing

and

we get

and the process continues recursively, which completes the proof via

. □
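The recursion in Lemma 1 can be illustrated with a short fixed-point solver. This sketch assumes $\kappa = 1$ and that the running integral of the filter output, written `Y` here, is available as a Python function; each output spike time $\tau_{k+1}$ then solves $\tau_{k+1} = \tau_k + (\delta - Y(\tau_{k+1}) + Y(\tau_k))/b$. Since $|y(t)| \le c < b$, the right-hand side is a contraction with rate at most $c/b$, so plain iteration converges; this is a standard argument and not necessarily the one used in the proof above. The name `next_spike` is hypothetical.

```python
import math

def next_spike(Y, tau_k, b, delta, tol=1e-12, max_iter=500):
    """Hypothetical helper: output spike tau_{k+1} from tau_k, given Y.

    Y is the running integral of the filter output y. With kappa = 1 the
    IF t-transform reads Y(tau_{k+1}) - Y(tau_k) + b (tau_{k+1} - tau_k) = delta,
    which is solved here as the fixed point of
        f(t) = tau_k + (delta - (Y(t) - Y(tau_k))) / b.
    """
    Y_k = Y(tau_k)
    t = tau_k + delta / b  # initial guess: spike time for a zero input
    for _ in range(max_iter):
        t_new = tau_k + (delta - (Y(t) - Y_k)) / b
        if abs(t_new - t) < tol:
            return t_new
        t = t_new
    raise RuntimeError("fixed-point iteration did not converge")

# Usage: y(t) = 0.3 sin(2 pi t), so Y(t) = 0.3 (1 - cos(2 pi t)) / (2 pi).
b, delta = 1.0, 0.05
Y = lambda t: 0.3 * (1.0 - math.cos(2 * math.pi * t)) / (2 * math.pi)
taus = [0.0]
while taus[-1] < 1.0:
    taus.append(next_spike(Y, taus[-1], b, delta))
print(len(taus), [round(t, 4) for t in taus[:3]])
```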

Therefore

can be computed by solving the fixed-point equation

. This equation requires knowing

, which satisfies

where the last equality uses the variable change

. In reality, however,

is unknown, since it could only be precisely computed using

. Given that we only know

, and do not impose any smoothness or sparsity conditions on

, we do not have access to

, but only to its samples

via (6). In the following, we show how

,

, and subsequently

can be estimated using a piecewise constant approximation of

at points

. Let

be defined by

where

where

is the piecewise constant interpolant to $U$ at points

, such that

for

. The next proposition derives some properties of

.

Proposition 1.

Function is continuous and satisfies

where .

Proof. Using (12), we get

which proves (13). It follows that

is a linear combination of continuous functions, thus it is itself continuous. □
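Because only samples of $U$ at the input spike times are available, replacing $U$ by its piecewise constant interpolant makes the filtered integral (written $\tilde Y$ here) computable from spikes alone. The following sketch illustrates this under stated assumptions: $\kappa = 1$, $U(t) = \int_{t_0}^{t} u(s)\,\mathrm{d}s$ (so $U(t_0) = 0$), the interpolant is taken as zero outside $[t_0, t_N)$, and the filter is the causal exponential $h(t) = a e^{-at}$ with closed-form step response $H$. With these choices each interval contributes $U(t_k)\,[H(t - t_k) - H(t - t_{k+1})]$, a continuous function, which mirrors the linear-combination argument of Proposition 1. All function names are hypothetical.

```python
import math

def integral_at_spikes(spikes, b, delta, U0=0.0):
    """U(t_k) for U(t) = integral of u from t_0 to t, via the t-transform
    (kappa = 1): U(t_{k+1}) - U(t_k) = delta - b (t_{k+1} - t_k)."""
    U = [U0]
    for t0, t1 in zip(spikes, spikes[1:]):
        U.append(U[-1] + delta - b * (t1 - t0))
    return U

def exp_step_response(a):
    """H(t) = integral of h from -inf to t, for h(t) = a exp(-a t), t >= 0."""
    def H(t):
        return 1.0 - math.exp(-a * t) if t > 0.0 else 0.0
    return H

def y_tilde(t, spikes, U, H):
    """Ytilde(t) = integral of h(t - s) Utilde(s) ds, with Utilde(s) = U(t_k)
    on [t_k, t_{k+1}) and, as an assumption, zero outside [t_0, t_N).

    Interval k contributes U(t_k) * (H(t - t_k) - H(t - t_{k+1})), so
    Ytilde is a finite linear combination of continuous functions.
    """
    return sum(U[k] * (H(t - spikes[k]) - H(t - spikes[k + 1]))
               for k in range(len(spikes) - 1))

# Usage: constant input u = 0.5 switched on at t_0 = 0, b = 1, delta = 0.05.
b, delta, u0 = 1.0, 0.05, 0.5
spikes = [k * delta / (b + u0) for k in range(61)]  # exact IF spike times
U = integral_at_spikes(spikes, b, delta)
H = exp_step_response(5.0)
print([round(y_tilde(t, spikes, U, H), 4) for t in (0.5, 1.0, 1.5)])
```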

Proposition 1 shows that, unlike

and

, function

and, consequently, also

are fully known from the IF parameters and input samples

. The remaining challenge is to show that the fixed point equation

can be solved and to provide an error bound for estimating

. This challenge is addressed rigorously in the next theorem. Moreover, the result allows recursively computing a sequence of estimates

that converges to a vicinity of

.

Theorem 1 (Estimating Output Samples from Input Samples) Let be a signal satisfying . Furthermore, let be the impulse response of a filter satisfying , and let be the filter output in response to input . Signals and , sampled with an IF neuron with parameters , generate values and , respectively. Then the following hold true

- (a) such that where satisfies (11).

- (b) Let be a sequence defined recursively as , where . Then

- (c) For defined above, such that .

Proof.

(a) Function

satisfies

where

From (9), the following holds

Using that

,

Unlike in the case of Lemma 1, in this case, applying

recursively does not guarantee exact computation of

. However, we observe that by picking

, we get identical intervals for

t and

:

This observation is useful because it enables the application of Brouwer’s fixed-point theorem, which states that for any continuous function

, where

is a nonempty compact convex set, there is a point

such that

. Given that

is continuous due to Proposition 1, it follows that

is also continuous. By applying Brouwer’s fixed-point theorem for

and

, it follows that

has a fixed point in

. We recall that

, which, using (2), leads to

It follows that

, which yields the required result.

(b) We approach this proof using mathematical induction. We select

in (18). Using (20), it follows that

We note that

is always true for

, which yields

This demonstrates that (15) is true for

. To finalise the induction, we assume (15) to be true, and show that it is true for

as follows.

Finally, as before, we use that

guarantees

. We also use that

is bounded by (15), which, when substituted in (23), leads to the desired result via (20).

(c) Equation (15) can be expanded into

The required result follows from

via (20). □

Theorem 1 shows one can construct a sequence

that approximates

with an error

. We note that this error depends only on the neuron parameters, and can thus be made arbitrarily small by an appropriate choice of the IF model. In practice, we use a finite sequence of input measurements

to approximate the output samples

that satisfy

. The proposed recovery steps are summarized in Algorithm 1.

Algorithm 1.

Step 1. Set and . While ,

Step 1a. Calculate

where

Step 1b. .

Step 1c. Compute for , where .

Step 1d. .

Step 1e. ;

Step 2. Set .
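For an end-to-end picture, the following Python sketch chains the steps of the direct method: samples of $U$ from the t-transform, convolution of the piecewise constant interpolant with the filter, and one fixed-point iteration per output spike. It is a hypothetical reading under explicit assumptions ($\kappa = 1$, $U(t_0) = 0$, a causal filter with a known step response $H$, and the first output spike taken equal to $t_0$), not a transcription of Algorithm 1. For a constant input switched on at $t = 0$, the exact output spikes are available in closed form and serve as a reference.

```python
import math

def direct_filter(spikes_in, b, delta, H, tau0=None, tol=1e-12, max_iter=500):
    """Hypothetical sketch of spike-domain filtering without reconstruction.

    spikes_in : input spike times t_0 < ... < t_N (IF TEM, kappa = 1)
    H         : step response of a causal filter, H(t) = integral of h up to t
    tau0      : first output spike time (assumed known; defaults to t_0)
    Returns the estimated output spike times that fall before t_N.
    """
    # Samples U(t_k) of U(t) = integral of u from t_0 to t (t-transform).
    U = [0.0]
    for t0, t1 in zip(spikes_in, spikes_in[1:]):
        U.append(U[-1] + delta - b * (t1 - t0))

    # Ytilde = h * Utilde, with Utilde piecewise constant between spikes.
    def Y_tilde(t):
        return sum(U[k] * (H(t - spikes_in[k]) - H(t - spikes_in[k + 1]))
                   for k in range(len(spikes_in) - 1))

    # Fixed-point iteration for each successive output spike.
    taus = [spikes_in[0] if tau0 is None else tau0]
    while True:
        tau_k = taus[-1]
        Y_k = Y_tilde(tau_k)
        t = tau_k + delta / b
        for _ in range(max_iter):
            t_new = tau_k + (delta - (Y_tilde(t) - Y_k)) / b
            if abs(t_new - t) < tol:
                break
            t = t_new
        if t_new >= spikes_in[-1]:  # beyond t_N, Utilde carries no information
            return taus
        taus.append(t_new)

# Usage: u = 0.5 switched on at t = 0, b = 1, delta = 0.05, h(t) = a exp(-a t).
b, delta, u0, a = 1.0, 0.05, 0.5, 5.0
spikes_in = [k * delta / (b + u0) for k in range(61)]  # exact input spikes on [0, 2]
H = lambda t: 1.0 - math.exp(-a * t) if t > 0.0 else 0.0
taus_hat = direct_filter(spikes_in, b, delta, H)

# Reference: exact output spikes from Y(t) = u0 (t - (1 - exp(-a t)) / a).
Y = lambda t: u0 * (t - (1.0 - math.exp(-a * t)) / a)
taus = [0.0]
while True:
    tau_k = taus[-1]
    t = tau_k + delta / b
    for _ in range(200):  # contraction with rate <= u0 / b = 0.5
        t = tau_k + (delta - (Y(t) - Y(tau_k))) / b
    if t >= spikes_in[-1]:
        break
    taus.append(t)
err = max(abs(x - y) for x, y in zip(taus_hat, taus))
print(f"{len(taus_hat)} output spikes, max timing error {err:.4f} s")
```

In this toy example the timing error cannot exceed $u_0 \Delta t / b$, where $\Delta t = \delta/(b + u_0)$ is the input inter-spike interval: the errors of $\tilde Y$ at consecutive output spikes cancel in pairs and telescope instead of accumulating. This is a property of the example, not a restatement of the bound in Theorem 1.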

We note that, in practice, convergence was achieved in Algorithm 1 for iterations in all examples we evaluated. In the next section, we evaluate the proposed algorithm numerically.

5. Numerical Study

To evaluate the performance of the proposed method, we define the following approximation errors.

Output inter-spike time error between the output of the time-encoded filter and the time-encoded output of the conventional filter:

where

is the output spike train prediction with Algorithm 1 (

) and the conventional method, involving the reconstruction of

(

).

Output error between the decoded output of the time-encoded filter and the output of the conventional digital filter:

We compute the errors as percentage of the true quantities

and

, respectively, by defining the following normalized error metrics

Furthermore, we denote by

and

the computation times of the output spike train with the proposed and indirect methods, respectively. In our numerical examples, we discretised the time using a simulation time step of

. The parameters used, the errors and the computing times are summarised in Table 1.

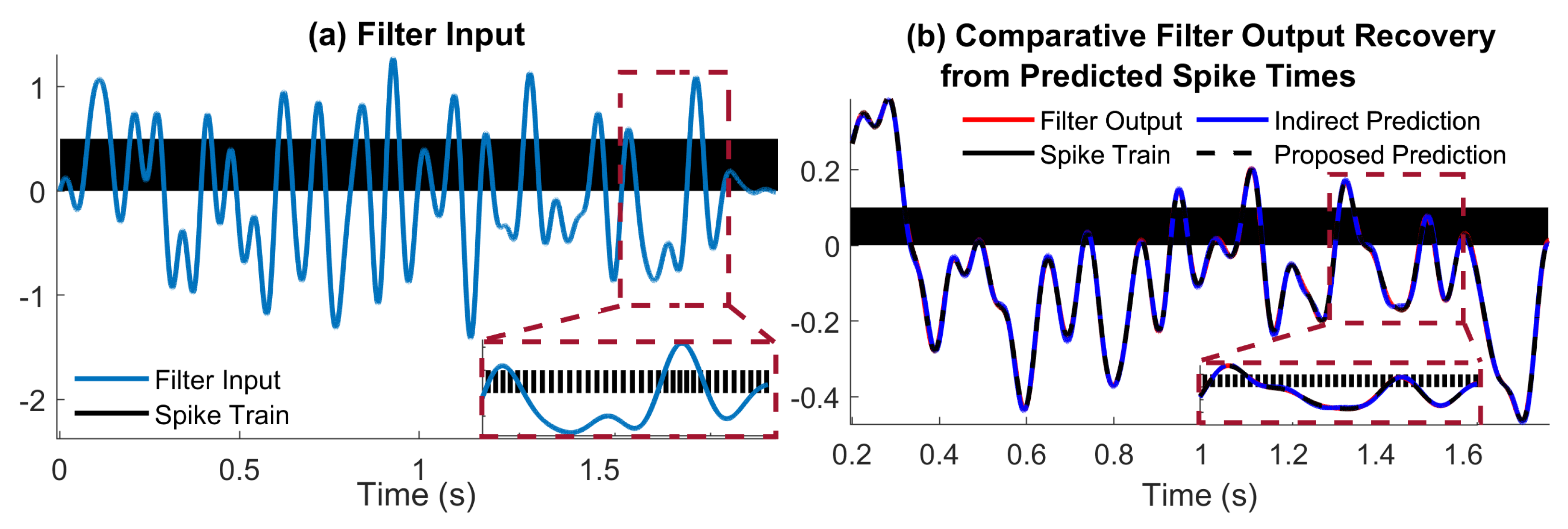

5.1. Low-Pass Filtering of a Bandlimited Signal

The selected filter has the following transfer function

where

. Furthermore, the input is generated as

where

and

are random coefficients drawn from the uniform distribution on

, and

. We selected

in order to avoid boundary errors for input recovery. The input and output of system

are sampled with an integrate-and-fire neuron with parameters

. Signals

and corresponding spike times are depicted in Figure 2a and Figure 2b, respectively.

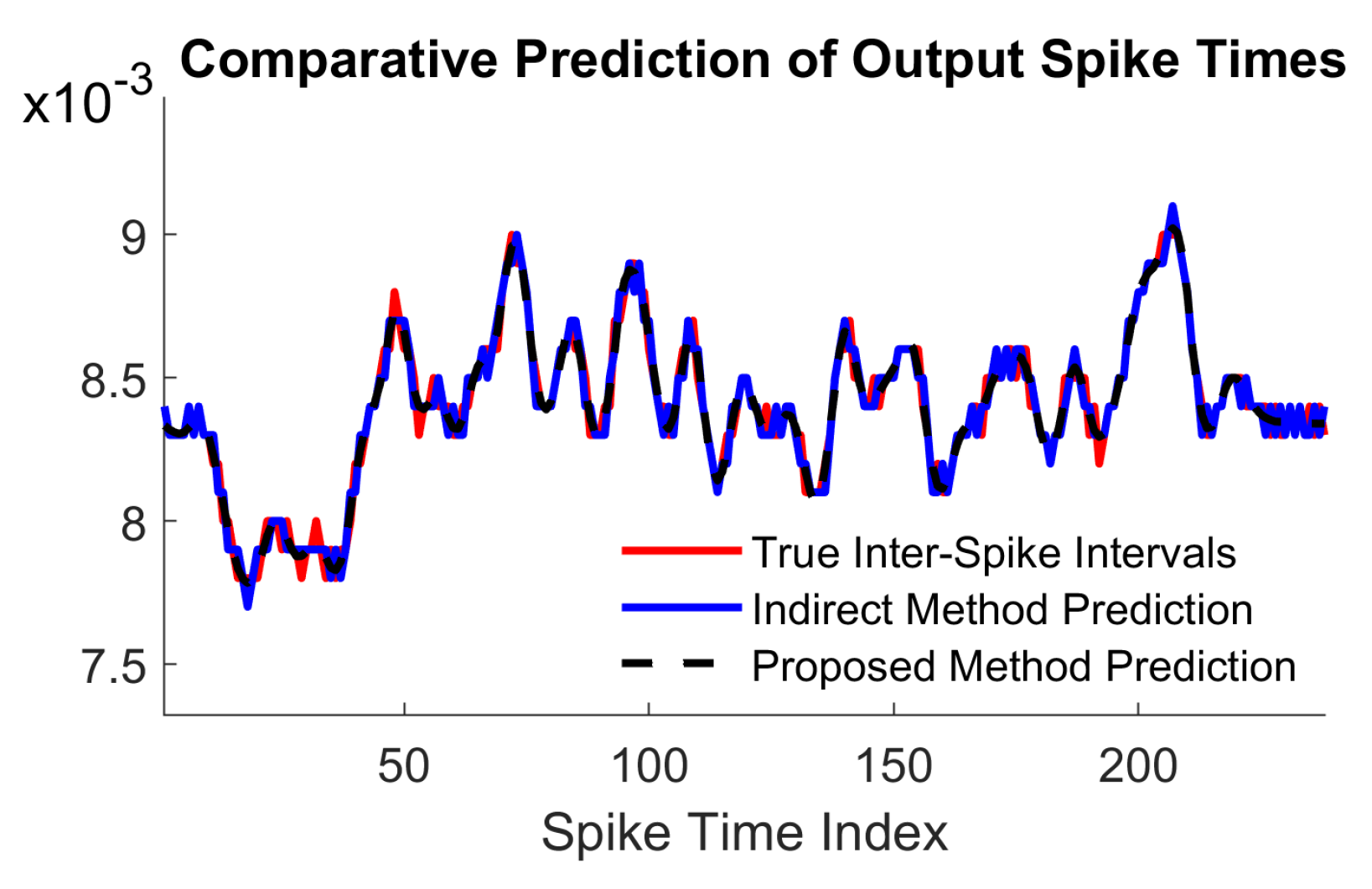

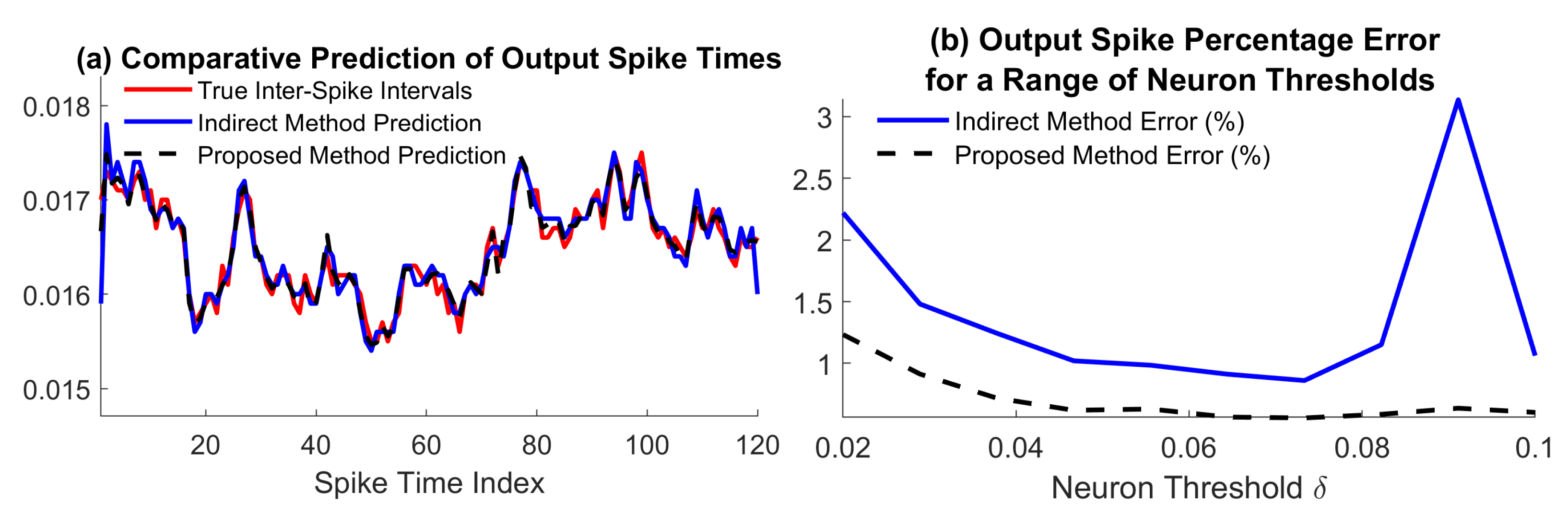

We estimated spike times

in two different ways: via the indirect method presented in Section 3, and via the proposed direct method in Section 4. The results are depicted in Figure 3, and the recovery errors are

for the proposed method and

for the indirect method. Furthermore, the filter output errors are

and

. However, the computing times are

for the proposed method and

for the indirect method. We note that a major part of the error induced by the conventional method is due to boundary errors, which are a known artefact of reconstruction [10,21].

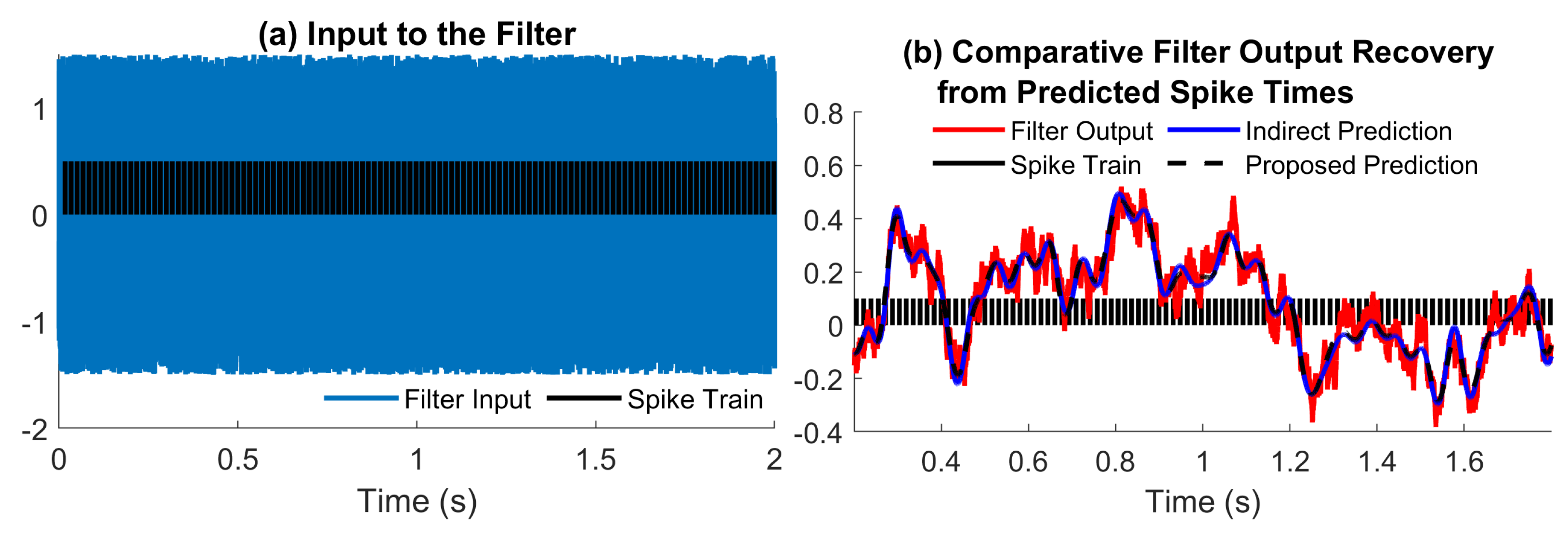

5.2. Low-Pass Filtering of Uniform White Noise

To show that the proposed method has no limitations in terms of input bandwidth, as indicated by the bounds in Theorem 1, here we choose

to be drawn from the random uniform distribution on

. Given that in this case the filter

significantly reduces the noise amplitude, we amplify the output by a factor of 10 using the new filter

, where

. The neuron parameters are

. The input and output signals, as well as the output recovered with both methods, are shown in Figure 4a,b. The proposed prediction method does not need any knowledge of the input bandwidth. For comparison, we reconstructed the input with the conventional indirect method, where the recovery bandwidth is

. The value of

is well above the cutoff frequency of the filter

, which would normally lead to an accurate recovery of the filter output. However, the random input

cannot be recovered for any

.

The output spike trains predicted with both methods are shown in Figure 5a. The corresponding errors are

and

, also summarised in Table 1. The computing times are

for the proposed method and

for the indirect method.

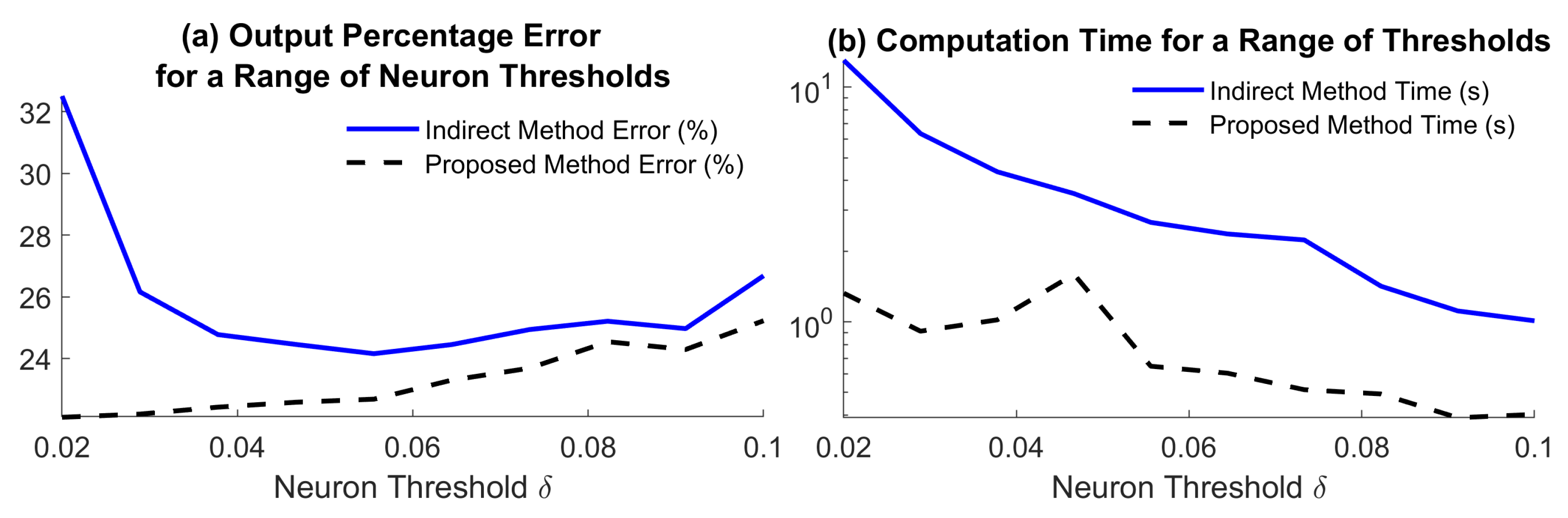

5.3. The Effect of Spike Density on Performance

During our experiments, we noticed that the neuron threshold

plays an important role in determining the computation time of both prediction routines. To illustrate this, we repeated the experiment in Section 5.2 with the same settings, except that

was assigned, in turn, 10 values uniformly spaced in the interval

. As before, we measured the error of the predicted spike train, the error of the reconstructed filter output, and the computation time for each method, illustrated in Figure 5b, Figure 6a and Figure 6b, respectively.
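The influence of the threshold can be anticipated from the density bound (2): each spike consumes a fixed amount $\kappa\delta$ of the integral of $u(t)+b$, so the number of spikes in a window of length $T$ scales roughly as $1/\delta$, and with it the amount of data both methods must process. The sketch below, with the hypothetical helper `spike_count_range` and illustrative parameter values rather than those of the experiment, tabulates this range; the actual computing times also depend on how each method is implemented.

```python
def spike_count_range(T, b, c, delta, kappa=1.0):
    """Approximate range of the number of IF spikes in a window of length T.

    Each spike consumes kappa * delta of the integral of u(t) + b, which lies
    between (b - c) T and (b + c) T over the window, so the count is roughly
    between T (b - c) / (kappa delta) and T (b + c) / (kappa delta),
    up to one spike at each edge of the window.
    """
    return T * (b - c) / (kappa * delta), T * (b + c) / (kappa * delta)

# Usage with illustrative values (not the thresholds used in the experiments).
for delta in (0.01, 0.02, 0.05, 0.1):
    lo, hi = spike_count_range(T=2.0, b=1.0, c=0.5, delta=delta)
    print(f"delta = {delta:5.2f}: about {lo:.0f} to {hi:.0f} spikes")
```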

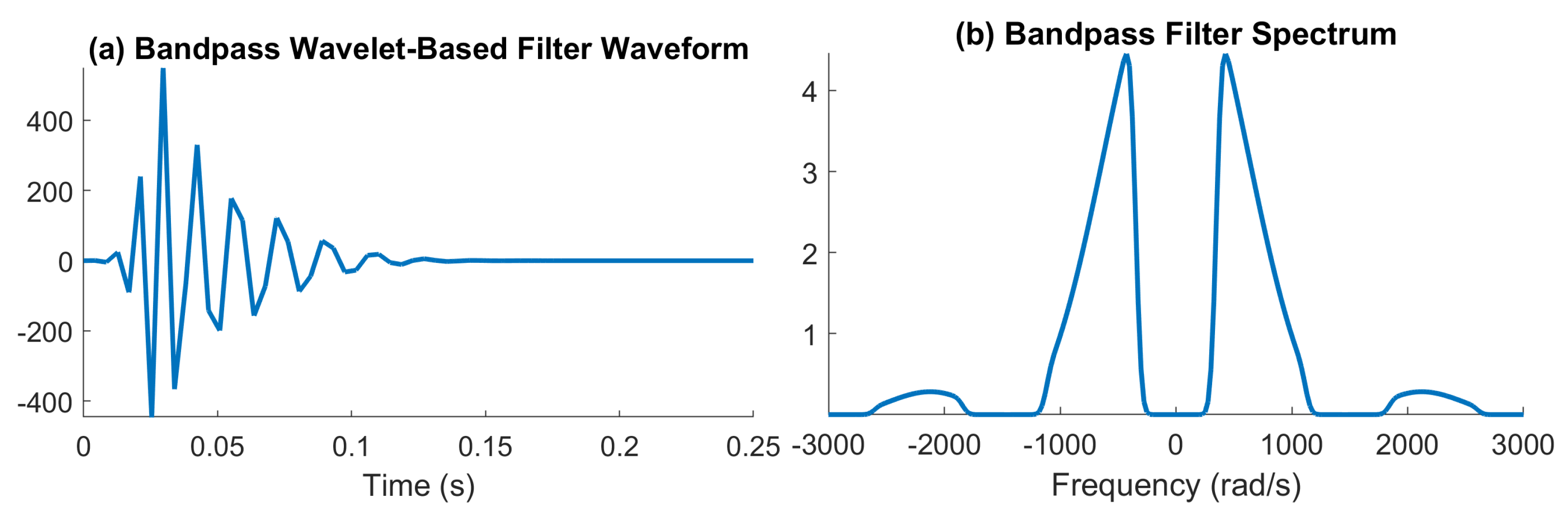

5.4. Output Spikes Prediction for Neurons Sampling Below Nyquist Rate

In the examples presented so far, the filter output is sampled at a rate that allows its reconstruction. In this example, we use a uniform white noise input bounded in

. The input is filtered using a bandpass filter computed from the Daubechies orthogonal wavelet 30, depicted in Figure 7. We note that the effective bandwidth is around

. When sampled with an IF with parameters

, the filter output samples satisfy

, which violates the condition for perfect recovery in (3). Therefore, in this example, we only evaluate the error using

, as

involves the reconstruction of the filter output from IF measurements.

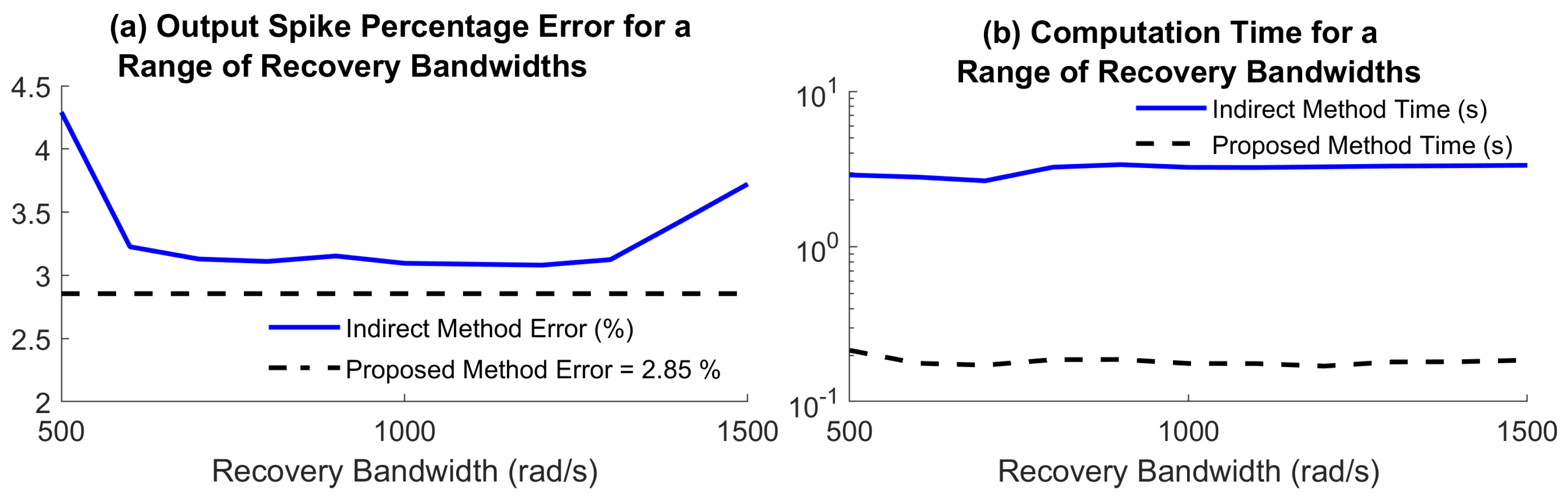

We note that, as in Section 5.2 and Section 5.3, here the input is not bandlimited, and its recovery cannot be guaranteed by (3). In this case, the choice of the bandwidth parameter

used for recovery is not determined by any existing theoretical result. We ran the conventional method with the recovery bandwidth set, in turn, to 11 uniformly spaced values in the interval

. The comparative results are depicted in Figure 8a,b. They show that the error introduced by the conventional method

changes quite significantly with

. An error around

higher than that of the proposed method is obtained for

, but the difference becomes significantly larger for

outside this interval. Meanwhile, the computation time of the proposed method is more than one order of magnitude lower than that of the conventional method (see Figure 8b) for all values of

.

6. Conclusions

In this work, we introduced a new method to filter an analog signal via its neuromorphic measurements. Unlike existing approaches, the method does not require imposing smoothness-type assumptions, such as a limited bandwidth, on the analog input and filter output. We introduced recovery guarantees, showing that it is possible to approximate the output spike train with arbitrary accuracy for an appropriate choice of the sampling model. We compared the proposed method numerically against the conventional solution to this problem, which involves reconstruction of the analog signal. The results show that the accuracy of the proposed method is comparable to that of the conventional approach, while its computing time was lower in all examples, by a factor ranging from 2 to 3 up to more than one order of magnitude.

Conceptually, the proposed method has the advantage that it does not depend on the characteristics of the analog signal, and therefore the input does not need to satisfy any reconstruction guarantees. As demonstrated numerically, the method works well for random inputs, as well as when the input and output of the filter are sampled below the Nyquist rate. Moreover, because it bypasses input reconstruction, the proposed method is not affected by known artefacts of recovery methods, such as boundary errors. This work has the potential to lead to the development of neuromorphic filters, which would facilitate a faster transition towards a power-efficient computing infrastructure.

Author Contributions

All the authors have contributed substantially to the paper. D.F. conceptualized the methodology, performed numerical studies and prepared the draft paper. D.C. contributed in the writing phase through review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC is funded by the Imperial College London Open Access Fund.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| IF | Integrate and fire |
| TEM | Time encoding machine |

References

- Rabiner, L.R.; Gold, B. Theory and application of digital signal processing; Prentice-Hall: Englewood Cliffs, NJ, USA, 1975.

- Lazar, A.A.; Tóth, L.T. Perfect recovery and sensitivity analysis of time encoded bandlimited signals. IEEE Trans. Circuits Syst. I 2004, 51, 2060–2073.

- Roza, E. Analog-to-digital conversion via duty-cycle modulation. IEEE Trans. Circuits Syst. II 1997, 44, 907–914.

- Abdul-Kreem, L.I.; Neumann, H. Estimating visual motion using an event-based artificial retina. In Computer Vision, Imaging and Computer Graphics Theory and Applications: 10th International Joint Conference, VISIGRAPP 2015, Berlin, Germany, March 11–14, 2015, Revised Selected Papers 10; Springer, 2016; pp. 396–415.

- Gallego, G.; Delbrück, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.J.; Conradt, J.; Daniilidis, K.; et al. Event-based vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 154–180.

- Kowalczyk, M.; Kryjak, T. Interpolation-Based Event Visual Data Filtering Algorithms. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023; pp. 4055–4063.

- Scheerlinck, C.; Barnes, N.; Mahony, R. Asynchronous spatial image convolutions for event cameras. IEEE Robot. Autom. Lett. 2019, 4, 816–822.

- Lazar, A.A. A simple model of spike processing. Neurocomputing 2006, 69, 1081–1085.

- Florescu, D.; Coca, D. Implementation of linear filters in the spike domain. In Proceedings of the 2015 European Control Conference (ECC); IEEE, 2015; pp. 2298–2302.

- Lazar, A.A. Time encoding with an integrate-and-fire neuron with a refractory period. Neurocomputing 2004, 58, 53–58.

- Florescu, D.; Coca, D. A novel reconstruction framework for time-encoded signals with integrate-and-fire neurons. Neural Comput. 2015, 27, 1872–1898.

- Paninski, L.; Pillow, J.W.; Simoncelli, E.P. Maximum likelihood estimation of a stochastic integrate-and-fire neural encoding model. Neural Comput. 2004, 16, 2533–2561.

- Florescu, D.; Coca, D. Identification of linear and nonlinear sensory processing circuits from spiking neuron data. Neural Comput. 2018, 30, 670–707.

- Maass, W.; Natschläger, T.; Markram, H. Real-time computing without stable states: A new framework for neural computation based on perturbations. Neural Comput. 2002, 14, 2531–2560.

- Florescu, D.; Coca, D. Learning with precise spike times: A new decoding algorithm for liquid state machines. Neural Comput. 2019, 31, 1825–1852.

- Florescu, D. A Generalized Approach for Recovering Time Encoded Signals with Finite Rate of Innovation. arXiv 2023, arXiv:2309.10223.

- Gontier, D.; Vetterli, M. Sampling based on timing: Time encoding machines on shift-invariant subspaces. Appl. Comput. Harmon. Anal. 2014, 36, 63–78.

- Alexandru, R.; Dragotti, P.L. Reconstructing classes of non-bandlimited signals from time encoded information. IEEE Trans. Signal Process. 2019, 68, 747–763.

- Hilton, M.; Dragotti, P.L. Sparse Asynchronous Samples from Networks of TEMs for Reconstruction of Classes of Non-Bandlimited Signals. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2023.

- Lazar, A.A.; Pnevmatikakis, E.A. Faithful Representation of Stimuli with a Population of Integrate-and-Fire Neurons. Neural Comput. 2008, 20, 2715–2744.

- Lazar, A.A.; Simonyi, E.K.; Tóth, L.T. An overcomplete stitching algorithm for time decoding machines. IEEE Trans. Circuits Syst. I 2008, 55, 2619–2630.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).