1. Introduction

Experimental science still has many open questions in the fields of language learning and multilingualism. In today's global and multicultural environment, the ability to communicate in different languages has become essential. For this reason, one research question has gained increasing importance in recent decades: what is the best way to learn a foreign language (FL)? Settings such as immersion and study-abroad programs emerge as favorable options for language acquisition [1,2,3,4,5,6,7]; however, these alternatives are not always accessible to those seeking to acquire a new language. In addition, language learning is not a concern only for younger generations. Adults, too, increasingly face the challenge of knowing languages other than their mother tongue. In this context, research is essential to provide strategies that guide and facilitate learning within speakers' usual linguistic contexts. This growing need creates a direct framework for practical application, one in which research can have an immediate impact on the experience of new FL learners.

Early techniques for acquiring FL vocabulary employed a first language (L1)-FL word association strategy aimed at establishing connections between newly acquired FL words and their corresponding lexical translations in the native language [8,9,10,11]. To illustrate, a native speaker of Spanish would learn that the English translation of "fresa" (L1) is "strawberry" (FL). Going a step further in the word association strategy, the keyword method [12,13] relies on a mnemonic based on selecting a L1 word that phonetically resembles a portion of a FL word (the keyword). In this approach, learners first associate the spoken FL word with the keyword and then connect the keyword to the L1 translation of the target FL word. For instance, an English speaker learning the Spanish word "cordero" ("lamb") might use the phonetically similar English word "cord" as the keyword and link it to the meaning "lamb". Previous research has confirmed that these methods are effective during the early phases of FL learning, an effect attributed to the establishment of lexical associations between L1 and FL [14,15]. Nevertheless, when proficient bilinguals express themselves in a FL, the optimal processing route is direct access to FL words from their associated concepts. Reliance on cross-linguistic lexical connections (L1-FL) and retrieval of L1 words become superfluous when bilinguals communicate in the FL [16]. In addition, when learning programs based on the reinforcement of semantic connections are compared with lexically based learning procedures at the earliest stages of FL acquisition, advantages have been found for conceptually mediated strategies [8,9,15,16,17,18,19].

In this context, understanding how fluent bilinguals process a FL can serve as a foundation for identifying learning methods that replicate this processing pattern and thus constitute effective learning strategies. Earlier studies confirmed that building connections between FL words and concepts benefits from training protocols involving semantic processing [9,17,20,21,22,23,24]. These conceptually mediated strategies usually consist of protocols involving multimedia learning. The term "multimedia" encompasses the incorporation of five distinct types of stimuli within the learning protocol, which can be presented alone or in combination: text, audio, image, animation, and captions/subtitles [25,26,27]. Today, the principles of Cognitive Load Theory [28] have largely been superseded. This theory holds that working memory and cognitive channels have a finite capacity and can become overloaded when presented with redundant information [28]. It is now known that when new information is presented through a combination of multimedia modalities, learners have the opportunity to form multiple mental representations of the target knowledge and to organize and integrate them into long-term memory [29,30,31], ultimately improving learning outcomes. Notably, presenting FL words alongside images depicting their meanings (picture association method) outperforms presenting FL words with their L1 translations (word association method) when behavioral measures are collected in single-word tasks [8,9,10,15,18,32] and in tasks with words embedded in sentence contexts [10]. Moreover, electrophysiological measures are also sensitive to the benefit of the picture association method, even after a single brief learning session [15]. Likewise, imagining the meanings of the words to be learned in a FL enhances acquisition [33,34]. More relevant to the purpose of this manuscript, the integration of words and gestures has been proposed as a powerful FL learning tool, as these two elements interact to construct an integrated memory representation of the new words' meanings [35,36,37,38,39,40]. Providing theoretical support for these robust findings on the effectiveness of semantically based learning strategies, the Dual Coding Theory [41] suggests that forming mental images while learning may contribute to acquiring new words. According to this theory, the integration of verbal information, visual imagery, and movement increases the likelihood of remembering new words compared to relying solely on verbal glosses.

In this paper, we aim to provide a comprehensive review of the topic, beginning with a general overview of the influence of movements on learning. We then examine, in progressively greater depth, how gestures specifically affect the acquisition of FL vocabulary. Finally, the review details the contributions of studies conducted in our lab to understanding the role of gestures in FL vocabulary acquisition.

Movements and Language

As humans, we can produce various types of gestures depending on the context or situation we encounter. This variability is influenced by the level of awareness invested in the action, as well as by the purpose for which the gestures are produced. For example, the gestures we naturally make while teaching in a classroom or explaining something to colleagues differ greatly from those we make when playing with a child and trying to represent a lion or a snake. In 1992, McNeill [38] proposed a simple gesture taxonomy encompassing many of the movements we can make in a natural communication context. First, representational gestures include iconic gestures, which visually illustrate spoken content through hand movements that refer to tangible entities and/or actions. Metaphorical gestures also fall under this category, conveying abstract concepts by expressing concrete attributes that can be associated with them. The classification introduces two further gesture types: deictic gestures, in which one or more fingers point toward a referent, and beat gestures, which consist of hand movements that follow speech prosody and accentuate emphasis. It is worth noting that iconic gestures can be distinguished from emblematic gestures, which are culturally specific and involve bodily motions conveying word-like messages, such as the "good" sign (closed fist, thumb up).

Across all spoken languages, individuals complement their speech with visual-manual communication [42,43,44]. This form of multimodal interaction, known as co-speech gestures, encompasses spoken language, facial expressions, body movements, and, notably, hand movements. Together, these visual and auditory components constitute an interconnected stream of information that enhances communication [45]. Indeed, the significance of movement in language processing has been substantiated by numerous studies [46,47]. As an interesting example, Glenberg and colleagues [48] observed a relationship between movement performance and language comprehension. In their study, participants transferred 600 beans, one at a time, from a larger container to a narrower one, moving either toward or away from their bodies depending on the container's location. Subsequently, sentences describing movements, both meaningful and meaningless, were presented, and participants judged their plausibility. The results revealed that the time taken to evaluate the sentences depended on whether the direction of the bean movements matched the direction described in the sentence (toward or away from the body). Thus, the execution of actions influenced language comprehension. Notably, Marstaller and Burianová [49] demonstrated that the right auditory cortex and the left posterior superior temporal areas appear to be selectively activated during the multisensory integration of speech sounds and accompanying gestures.

Numerous theoretical frameworks seek to explain the connections between gestures and speech. These frameworks primarily address the underlying representations involved in gesture processing, and they can be differentiated by how they treat the interplay of visuospatial and linguistic information. Some perspectives propose that gesture representations are rooted in visuospatial images (e.g., the sketch model by de Ruiter [50]; the interface model by Kita and Özyürek [51]; the gestures-as-simulated-action (GSA) framework by Hostetter and Alibali [52]). Conversely, other models emphasize the close interrelation between gesture representations and linguistic information (e.g., the interface model by Kita and Özyürek [51]; the growth point theory by McNeill [38,39]). Another distinction among models lies in whether gestures and speech are processed as separate entities or as a unified process. Some models propose that gestures and speech are processed independently (e.g., the lexical gesture process model by Krauss and colleagues [53]), or that they interact when communicative intentions are formed (the sketch model by de Ruiter [50]) or during the conceptualization phase (the interface model by Kita and Özyürek [51]) to facilitate effective communication. By contrast, other models posit that gestures and speech function collaboratively within a single system (the growth point theory by McNeill [38,39]; the GSA framework by Hostetter and Alibali [52]). Relatedly, the gesture-in-learning-and-development (GLD) model (Goldin-Meadow [54,55]) suggests that children process gestures and speech independently, and that these elements become integrated into a unified system in proficient speakers [56,57]. Beyond the mechanics of gesture and speech processing, a pertinent question concerns the role of gestures in communication. Research has established that listeners extract information from gestures [58,59,60,61,62]. This aligns with the fact that gestures frequently emerge during speech planning, leading many models to emphasize their communicative value (e.g., the sketch model by de Ruiter [50]; the interface model by Kita and Özyürek [51]; the growth point theory by McNeill [38,39]; and the GLD framework by Goldin-Meadow [54,55]).

In conclusion, it is reasonable to infer that the gestures we use when trying to convey a concept, as well as the natural use of deictic or beat gestures in our spontaneous speech, play a role in both language production and comprehension, ultimately enhancing communication.

Gestures as a FL Learning Tool

Several studies have underscored the significance of different types of gestures in FL learning (see, e.g., [63,64,65,66,67,68,69,70] for reviews). It is generally accepted that gestures have a positive impact on vocabulary acquisition, which supports their integration into FL instruction in line with a natural language teaching approach (see [71,72,73,74]; however, see [35,75] for evidence of the limited effect of gestures on learning segmental phonology). More relevant to the purpose of this paper, past studies have illustrated the role of iconic gestures in language comprehension [76] (for a comprehensive review, see [77]) as well as in language production (see [78] for a review on gestures in speech).

In cognitive psychology, three main perspectives offer explanations for the beneficial role of iconic gestures in FL vocabulary learning.

The self-involvement explanation posits that gestures promote participants' engagement in the learning task, enhancing attention and favoring FL vocabulary acquisition [79]. This increase in attention is primarily attributed to heightened perceptual and attentional processes during the proprioception of movements associated with gestures or with the use of objects to perform actions [80]. Here, the motor component itself might not be the cause of the improvement [81]; rather, it is the multisensory information conveyed by the gesture that leads to enhanced semantic processing and greater attention [82]. Consequently, according to this perspective, learning new FL words with gestures aids vocabulary acquisition regardless of whether a gesture is commonly produced within a language or conveys the same meaning as the word being learned. Increased attentional processing contributes to word retention [83], highlighting the role of attention in encoding and retrieving information [84]. To illustrate, if the learner needs to acquire the Spanish word "teclear" (English "to type"), the mere fact of performing a movement associated with the new word would facilitate learning, independently of any other intrinsic characteristic of the gesture.

The motor-trace perspective asserts that the physical component of gestures is encoded in memory, leaving a motor trace that assists in acquiring new FL words [85,86]. In this view, physical enactment is crucial because it allows the formation of a motor trace tied to the word's meaning. Recent neuroscientific studies employing techniques such as repetitive transcranial magnetic stimulation support the role of the motor cortex in comprehending written words [87]. Additionally, evidence suggests that familiar gestures might engage procedural memory because they rely on well-defined motor programs [88]. Consequently, the interplay of procedural and declarative memory could enhance vocabulary learning. Hence, well-practiced familiar gestures would facilitate FL learning to a greater extent than unfamiliar gestures (e.g., the gesture of typing on a keyboard vs. sequentially touching the right and left cheeks with the right forefinger). Thus, an individual's degree of familiarity with certain gestures would determine the facilitation effect. However, according to this perspective, the impact of gestures operates independently of their meaning, and well-practiced gestures might benefit learning regardless of whether they match the new word's meaning.

The motor-imagery perspective suggests that gestures are tied to motor images that contribute to a word's meaning [89]. Specifically, executing a gesture while processing a word fosters the formation of a visual image linked to the word's meaning, enriching its semantic content [66,90]. Neurobiological evidence, including functional connectivity analyses, suggests that the hippocampal system is involved in binding visual and linguistic representations of words learned with pictures [91]. According to this view, the facilitation effect of gestures is greater when they match the meaning of the words being learned than when gestures and word meanings do not match. This constitutes the primary point of disagreement with the motor-trace theory. Additionally, this perspective holds that learning words with gestures of incongruent meaning can lead to semantic interference and reduced recall, because it creates a dual-task scenario that ultimately has an adverse impact on learning [92,93].

It is important to note that, in our view, these three perspectives are not mutually exclusive; rather, they highlight different aspects of the effect of gestures on FL learning. A gesture accompanying a word could increase self-involvement (gestures enhancing attention to FL learning), create a motor trace (meaningful movements), and/or evoke a semantic visual image integrated with the word's meaning.

Empirical Evidence Regarding the Role of Gestures in FL Learning

Empirical evidence on the role of movements in FL instruction indicates that vocabulary learning outcomes improve when participants learn FL words alongside gestures that embody the practical use of the objects whose names are being learned [94,95,96]. The significance of movements in FL vocabulary acquisition has been examined in previous learning protocols. For instance, many years ago, Asher [97] pioneered the introduction of movements into the FL learning process. He presented the Total Physical Response strategy as an effective means of acquiring new vocabulary. This strategy involved a guided approach in which students received instructions in the target language (FL). For example, children were taught the Japanese word "tobe" (meaning 'to jump' in English), and each time they heard this word, they physically performed the corresponding action (jumping). The author observed a beneficial effect of integrating gestures into FL word instruction, an effect that has been demonstrated across various educational domains, including online courses, language learning, and technology implementation [98,99] (although see [100] for an alternative view).

Subsequently, the first empirical study focused on the impact of iconic gestures on FL learning was conducted by Quinn-Allen [101]. In this study, English-speaking participants were tasked with acquiring French expressions under two conditions. In the control condition, participants were presented with French sentences (e.g., Il est très collet monté? - He takes himself seriously), which they then had to repeat in French. In the experimental condition, by contrast, learners were presented with the sentences accompanied by a symbolic gesture illustrating the intended meaning (e.g., head up, one hand in front of the neck, the other hand lower as if adjusting a tie), and they were subsequently required to reproduce the gesture. The results revealed enhanced recall for sentences presented with gestures compared to the control condition, in which participants simply repeated the sentences. The efficacy of gestures observed in Quinn-Allen's study aligns with the self-involvement theory [79]. As mentioned above, this theory posits that participants become more engaged in the learning process when they receive and produce gestures than in the control condition. However, the enhanced learning effect could equally be explained by the motor-trace and motor-imagery accounts. The gestures employed in this study, such as the action of adjusting a tie, are conventional gestures, that is, gestures commonly used in interpersonal communication. This aspect might explain the learning enhancement from the motor-trace perspective. Furthermore, the gestures were congruent in meaning with the sentence being learned ("He takes himself seriously"), supporting the explanation proposed by the motor-imagery account. Thus, although this work laid the foundation for studying the effect of gestures in FL acquisition, the causes underlying the improvement associated with gestures during FL learning remained unclear. Further experimental work has addressed this question by including several gesture conditions and manipulating the correspondence between gestures and the meanings of new words [66,90,95,102].

Another benchmark study in the field is the work by Macedonia and collaborators [90]. In this study, a group of German speakers learned new words in Vimmi (an artificial language developed by the authors), which served as the FL. The new nouns were accompanied by either meaningful congruent iconic gestures (e.g., the term "suitcase" paired with a gesture mimicking lifting an imaginary suitcase) or meaningless gestures (e.g., "suitcase" paired with a gesture of touching one's own head). The results revealed superior recall of words associated with iconic gestures compared to those paired with meaningless gestures. These findings suggest that gestures contribute something beyond merely involving the participant in the task: the simple engagement associated with performing a gesture cannot explain the advantage found in the iconic gesture condition. However, in this case, both the motor-trace and motor-imagery theories could potentially explain the enhanced performance for iconic gestures relative to meaningless gestures. Iconic gestures might enhance FL learning because of their semantic richness and because they are used more frequently than meaningless gestures, resulting in stronger motor activation.

Other researchers have employed additional experimental conditions to distinguish between explanations grounded in the motor component of gestures and those derived from the motor-imagery account. Studies with monolingual speakers have explored congruity effects in communication by deliberately mismatching the semantics of words and the meanings conveyed by gestures [94,103,104,105,106] (see [107] for congruity effects in an unfamiliar language context). Kelly and colleagues [65,108] conducted an event-related potential study using a Stroop-like paradigm. Participants were presented with words (e.g., "cut") and gestures that could be either congruent (e.g., a cutting movement) or incongruent (e.g., a drinking movement). The study revealed an attenuated N400 response to words paired with congruent gestures compared to incongruent ones, indicating a semantic integration effect [109]. Additionally, participants responded faster in the congruent condition than in the incongruent one. These results suggest that gestures are integrated into the meaning of new words, producing benefits when gesture and word meaning match and interference when learners perceive a conceptual mismatch. In this case, the gestures used in both the congruent and incongruent conditions were familiar to participants, and the level of engagement was equal. Hence, the results of this study cannot be explained by the self-involvement or motor-trace accounts. Only the motor-imagery theory predicts differences between congruent and incongruent iconic gestures based on the match or mismatch between the meanings of gestures and words.

Taken together, previous studies have confirmed the positive effect of congruent gestures on FL vocabulary learning. However, given the experimental conditions included in past studies, there is a lack of empirical evidence comparing several conditions within a single study and a single participant sample. For example, several studies do not include a meaningless gesture condition in their design. Without such a baseline condition, it is not possible to determine the degree to which the congruent and incongruent conditions produce facilitation or interference effects, respectively (see [110] for a review of a comparable experimental paradigm in Stroop tasks). We addressed this concern in Experiments 1 and 2.

In general, previous studies suggest that congruent iconic gestures produce a learning facilitation effect, with increased recall when movements accompany new words during encoding. However, does this effect occur only when FL learners perform the gestures themselves? Previous research indicates that merely observing movements can activate brain areas associated with motor actions [111,112]. Hence, while executing movements appears to enhance learning, it remains unclear whether a learning approach involving self-generated actions yields an additional benefit beyond the mere observation of movements. This possibility is explored in the following paragraphs.

Effects of “Seeing” and “Acting” Gestures While Learning a FL

In education, the potential advantages of learning through action compared to learning through observation have been discussed for decades [113]. The "learning-by-doing" perspective advocates for active individual engagement in the learning process by executing actions while learning takes place. Learning-by-doing can have a positive influence on the formation of the neural networks underlying knowledge acquisition and the performance of cognitive skills [114]. This beneficial effect has been demonstrated across various educational domains, such as online courses, language acquisition, learning through play, and the use of new technologies [98,99,115]. However, as reported in the following paragraphs, empirical evidence is not fully consistent regarding the advantages of self-generated movements over the mere observation of actions performed by others.

In this context, various studies have examined the differences between conditions in which participants perform the experimental tasks themselves and conditions in which they merely observe the experimenter [116,117]. Previous studies have pointed out that self-generated movements enhance cognitive processing. Outside the linguistic context, Goldin-Meadow and colleagues [114] directly compared the impact of producing gestures versus observing another individual producing them when children performed a mental transformation task. In their study, children performed a mental rotation task, determining whether two shapes presented in different orientations were the same figure. This task was chosen because of the close connection between mental rotation and motor processing: when mentally rotating a target, premotor areas involved in action planning become active [48,118], and participants naturally and spontaneously use gestures when explaining how they solved the task [119]. Goldin-Meadow et al. [114] demonstrated that children achieved better results when instructed to enact the gesture required to solve the transformation task than when simply observing the experimenter's movements.

Moving to the field of language learning, other empirical studies have also highlighted the importance of self-generated movements in acquiring linguistic material. Producing spontaneous gestures seems to be more beneficial than observing non-spontaneous movements [18]. When pictures and gestures are compared, producing gestures associated with new words is more effective than using picture-word associations. This effect has been interpreted as resulting from the inclusion of motor traces in the semantic representation of new words during acquisition [120]. In 2011, James and Swain [121] conducted a study in which children were taught action words associated with tangible toys. Children who manipulated the objects during learning exhibited activation in motor brain areas when hearing the words they had learned. Thus, performing motor actions enhances new word learning, a benefit likely attributable to the formation of a motor trace that is activated during subsequent recall. Along the same lines, and at a higher level of linguistic processing, Engelkamp and colleagues [122] required participants to learn sentences while physically performing the actions described in them, as opposed to a listening-and-memorizing condition. The findings revealed that sentence recall was higher when participants performed actions during the learning phase. Again, this was interpreted as evidence that performing actions promotes the formation of a motor trace that facilitates information retention and recall.

On the other hand, Stefan and colleagues [112] reported motor cortex involvement during the observation of movement (e.g., simple repetitive thumb movements), leading to a specific memory trace in the motor cortex similar to the activation pattern produced by actual motor actions. If activation overlaps between observing and performing movements, is the advantage of generating movements really that clear? In fact, previous research has found inconsistent results, or similar outcomes, when participants produce actions and when they only observe actions performed by others [111]. At the lowest level of linguistic processing, producing versus merely observing hand gestures has a limited impact on learning segmental phonology or phonetic distinctions in a FL [35,75]. At higher linguistic levels, where hand gestures have demonstrated a positive influence on learning, various studies show that self-generated movements and the observation of gestures yield comparable outcomes. For instance, learning from anatomy lectures was similar when the instructor executed movements related to the lecture content and when the students imitated these motor actions [123]. In the specific context of FL acquisition, Baills and collaborators [124] found similar results for the learning of Chinese tones and words when pitch gestures (metaphoric gestures mimicking speech prosody) were used: participants showed similar performance levels when learning through observation (Experiment 1) or production (Experiment 2). In a recent study, undergraduate English speakers were presented with 10 Japanese verbs auditorily while an instructor accompanied them with iconic gestures. Comparable outcomes were observed when participants learned the words solely by observing the instructor's gestures or by mimicking her movements [125]. Occupying a middle position, Glenberg and colleagues ([46], Experiment 3) investigated the impact of movements on sentence reading comprehension in children and found intermediate outcomes. Children were presented with narratives set in a particular scenario (a farm) involving different elements (e.g., a sheep or a tractor). One group manipulated the objects mentioned in the text, while another group imagined doing so. The manipulation condition yielded the best results, while the imagined condition showed a modest improvement compared to a read-only condition.

In summary, self-generated movements during learning appear to yield positive effects in both non-linguistic tasks [114] and FL instruction [18,120,122]. The creation of a richer semantic representation in memory, encompassing both verbal and motor information, facilitates access to previously acquired knowledge. This underlies the advantageous impact of performing movements while learning takes place [121,122]. Nevertheless, other studies suggest that merely observing gestures is sufficient for learning, regardless of whether participants perform the gestures themselves [125]. This conflicting pattern of outcomes may stem from methodological differences between studies, such as participant demographics, including children [114] versus undergraduate students [75], and the nature of the learning tasks, ranging from dialogic tasks [18] to segmental phonology [35]. Notably, many studies supporting the positive effects of self-generated gestures involve semantically rich materials such as words [120] or sentences [122], whereas some studies showing no differences between gesture observation and production focus on non-semantic linguistic levels (e.g., segmental phonology [35,75]) or manipulate the gesture conditions across different experiments [124]. An additional purpose of our experimental series was to address these aspects comprehensively by comparing the performance versus the observation of gestures in different groups while participants learned words accompanied by iconic gestures conveying semantic information.

Gestures in Verb and Noun Learning

Studies on FL vocabulary learning raise many theoretical and practical issues that must be addressed. Regarding gestures, the close relationship between movements and verbs plays a special role in encoding and recall processes. The GSA framework makes specific predictions about the role of gestures in learning different types of words. This theory posits that gestures emerge from simulated action and perception, which underlie mental imagery and language production [52]. Although viewing the size or shape of an object (denoted by nouns) also involves simulated movement, the connection between verbs and actions is more pronounced. Consequently, gestures should exert a greater influence on verb learning than on noun learning. In fact, it has been proposed that the lexical acquisition of nouns and verbs may follow a bipolar approach, with different cognitive mechanisms underlying the acquisition of each word type, at least in children [126].

In a study with preschool children, Hadley and colleagues [127] directly examined the impact of gestures on teaching different word types. Although concrete nouns showed higher learning rates, gestures used during verb instruction acted as a scaffold for the accompanying verbal content. This finding may explain why most research evaluating gesture effects in FL learning has used verbs as instructional material [63, 95, 107]. Many verbs (e.g., those describing actions on manipulable objects) are closely linked to movements [95]. Indeed, prior studies indicate that the semantic representation of verbs inherently includes a gestural or motor component [63, 128, 129, 130].

Gestures aside, nouns have been found to be easier to learn than verbs. Concrete nouns have distinct perceptual attributes that facilitate the acquisition of new words, whereas verbs convey dynamic information from which the motion meaning must be extracted [131]. Numerous studies have shown that children acquire English nouns more easily than verbs in natural contexts [127, 132, 133, 134]. However, this noun advantage seems to be culture-specific: although it appears in English-speaking cultures, it is blurred in East Asian cultures (see [134, 135, 136] for the absence of this advantage in Korean and Mandarin). One possible explanation for these crosslinguistic differences is the special emphasis that English speakers place on nouns when they interact with children acquiring their L1.

To the best of our knowledge, the 2019 study by García-Gámez and Macizo [137] was the first to directly compare nouns and verbs in adults learning FL words with accompanying gestures. This study is reviewed in the current paper.

The Current Series of Studies

With the studies described below, we aimed to shed light on strategies that could help learners acquire FL vocabulary. To this end, the current review explores the potential role of gestures in FL vocabulary learning. Building on the discussion in the Introduction, we first present the experimental conditions used across studies and then the specific factors and predictions addressed in each experiment. Taken together, our research allowed us to make specific contributions to the field and to formulate the further predictions described in the following paragraphs.

Previous research suggests that gestures facilitate the acquisition of FL vocabulary. The available data indicate that gestures contribute to the formation of a motor trace linked to word meanings, involving procedural memory, which in turn supports FL acquisition [88]. However, alternative explanations cannot be dismissed, and these accounts may complement each other in explaining the impact of gestures on FL vocabulary learning. Furthermore, gestures might have both positive and negative effects on learning, as learners potentially face a dual-task situation when learning with gestures.

In our studies, native Spanish (L1) speakers learned foreign (FL) words in Vimmi, an artificial language developed by Macedonia and collaborators [90], over three consecutive learning sessions. The words were presented in different conditions: alone (no gesture condition), accompanied by meaningless, unfamiliar gestures (meaningless gesture condition), or accompanied by iconic gestures whose meaning was either semantically congruent or incongruent with the meaning of the words (congruent and incongruent conditions, respectively). Participants were evaluated after each learning session with a forward (L1-FL) and a backward (FL-L1) translation task.

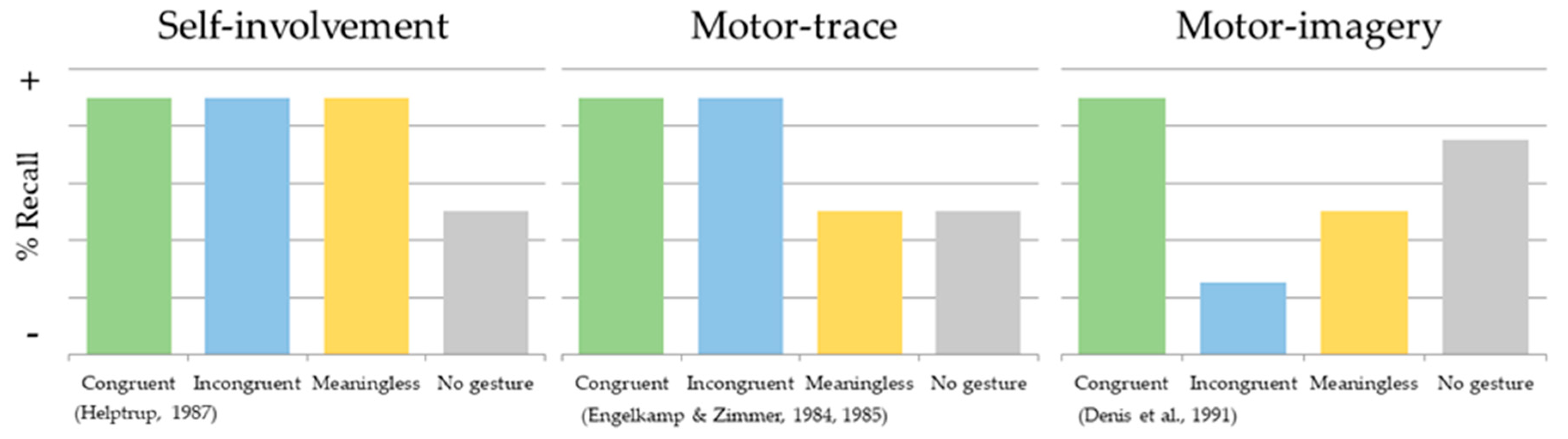

When considering the theoretical perspectives on the role of gestures in FL vocabulary acquisition described above, namely the self-involvement account (Helstrup, 1987), the motor-trace account [85, 86], and the motor-imagery account [89], specific predictions can be formulated for our experimental conditions (see Figure 1 for the predictions of each theory for the learning conditions included in our studies).

If gestures primarily serve to enhance the participant's engagement in the learning tasks, then all conditions involving gestures should lead to improved FL vocabulary acquisition compared to the condition without gestures.

If the motor trace left by gestures aids participants in learning new words, familiar gestures (in the congruent and incongruent conditions) should be associated with better FL vocabulary learning outcomes than less familiar gestures (meaningless condition) and the condition without gestures.

Furthermore, the motor-imagery account suggests that learning with meaningful gestures could either facilitate or interfere with vocabulary acquisition, depending on the match between the meaning of the gesture and the meaning of the FL word being learned. Thus, congruent gestures may enhance vocabulary learning, while incongruent gestures might hinder the acquisition of new words. Conversely, meaningless gestures could become distinctive and contribute to the encoding and recall of FL words [138].

As noted above, these perspectives are not mutually exclusive. For example, learning FL words paired with congruent gestures might involve a trade-off between the benefit of strengthening the connection between semantic information and FL words and the cost of engaging participants in a dual-task situation.

In Experiments 1 and 2, we established a direct comparison between noun and verb learning. The prevailing view is that verbs may be more difficult to acquire than nouns [129, 135, 139]. Additionally, it is commonly accepted that action verbs inherently incorporate a gestural or motor component in their mental representation [128, 130]. Therefore, differences between these two word types may hinge on whether they involve overt bodily movements, as is the case with action verbs. For instance, De Grauwe et al. [63] observed that comprehending FL motion verbs (e.g., "to throw") triggered activation in motor and somatosensory brain regions. Consequently, the impact of gestures on FL vocabulary acquisition might plausibly be more pronounced for verbs than for nouns.

In addition, there is an ongoing debate about the impact of self-generated movement compared with the mere observation of gestures on FL vocabulary acquisition. To address this issue directly, Experiment 3 included two experimental groups. Participants were randomly assigned to either the “see” or the “do” learning group. In the “see” learning group, learners read Spanish-Vimmi (L1-FL) word pairs aloud while observing the gestures shown in a video and mentally picturing themselves replicating them. By contrast, in the “do” learning group, learners said the Spanish-Vimmi (L1-FL) word pairs aloud and physically mimicked the gestures as they were presented on the screen. Only action verbs served as learning material in this experiment.

As previously mentioned, some studies show better learning outcomes when learners actively produce gestures [18, 120, 122], whereas others find no difference between viewing and performing gestures in FL learners [35, 75, 125]. In this work, we controlled for several factors that might explain the discrepancies between previous studies (type of learning material, type of gestures, and word-gesture meaning relation). Moreover, unlike most research in the field [46, 114, 120, 121], we explored the effect of self-generated movements in an adult population. This methodological distinction is crucial because adults generally have more experience in executing actions than children. Furthermore, for adults, the semantic content of gestures and the visual imagery associated with words tend to be rich [140]. Given this, merely observing movements that resemble action verbs might suffice for adults to benefit from gestures in FL learning. Observing gestures could thus strengthen the connections between FL words and the semantic system much as performing them does, as proposed by Sweller et al. [125]. Accordingly, we hypothesized that adult participants might not show any difference between viewing gestures and physically performing them in terms of FL vocabulary acquisition.

Regarding learning outcomes, our general hypothesis was grounded in the principles of the Revised Hierarchical Model (RHM) [16]. The translation tasks allowed us to draw conclusions about lexical and semantic access to the newly learned words. First, we expected an asymmetry, as seen in prior studies, with more efficient performance in backward than in forward translation [16]. This prediction arises from the fact that forward translation requires more semantic processing than backward translation, which makes translating from L1 to FL more challenging than the reverse. In general, we predicted that facilitation in learning conditions that promote semantic processing would be particularly noticeable in forward translation, because this direction is semantically mediated [16, 141, 142]. Regarding potential interactions between translation direction and gesture condition (congruent, incongruent, meaningless, and no gestures), we anticipated that forward translation would be more affected by semantic congruence (congruent and incongruent conditions) than by meaningless gestures.

Finally, we anticipated (a) a positive effect (learning facilitation) when FL words were learned with congruent gestures (congruent condition), and (b) a negative effect (learning interference) associated with gestures unrelated to word meanings (incongruent and meaningless conditions). This pattern of results would align with the postulates of the motor-imagery account [138] and with previous studies that have illustrated both the benefits and drawbacks of using gestures in FL vocabulary acquisition [90, 94, 95]. In addition, regarding the focus of Experiment 3, if merely observing gestures were sufficient to enhance vocabulary acquisition, the outcomes would be similar for both learning groups. Conversely, if actively performing gestures during instruction, as in the “do” learning group, maximized learning, we would expect a higher learning rate in this group than in the “see” learning group.

2. Materials and Methods

Participants

First, regarding the type of population, all of our studies recruited Spanish “monolingual” speakers. All participants were young-adult students from the University of Granada who received course credit for participating. Nowadays, classifying a Spanish person as monolingual is not straightforward: as in many other countries, younger generations are exposed to foreign language learning in regular education. We therefore aimed to recruit participants with the lowest possible proficiency in any FL. To achieve this, we established the following inclusion criteria regarding participants' FL proficiency. Participants needed to confirm that:

They had no daily contact with any language other than Spanish, whether spoken or signed.

Their most recent exposure to a FL had occurred during high school.

They had never received any formal instruction in a FL beyond regular education.

They had never obtained a certification in any FL.

The language proficiency of the participants was relevant to the experimental design of these studies. Previous research has shown that the way new languages are acquired differs depending on how proficient speakers are in other languages [143, 144, 145]. For instance, learning a third language (L3) is generally assumed to be less demanding or costly than learning a second language (L2) [146, 147, 148]. The existing literature suggests that bilingual experience provides individuals with tools that facilitate L3 learning (for a comprehensive review, see [149]).

A total of 25 individuals participated in Experiment 1 (21 women and 4 men; mean age = 21.72 years, SD = 3.17). Experiment 2 involved 32 students (26 women and 6 men; mean age = 20.97 years, SD = 3.21). Finally, 31 participants were recruited for Experiment 3. Sixteen of them (15 women and 1 man; mean age = 21.12 years, SD = 2.53) were randomly assigned to the “do” learning group, and the remaining 15 (13 women and 2 men; mean age = 21.13 years, SD = 2.72) formed the “see” learning group.

All participants provided written informed consent before taking part in the experiment. None of the participants reported a history of language disabilities, and all had normal or corrected-to-normal visual acuity. The data obtained in the studies were treated anonymously by the authors of this paper.

Materials

The same pool of gestures, created specifically by the experimenters, was used across studies. In addition, 40 Spanish nouns and 40 Spanish verbs were selected as L1 words, whose translations participants had to learn in a FL. As the FL, we selected an artificial corpus (Vimmi, developed by Macedonia et al. [90]), described below.

The gestures used in the studies included only hand movements. We used iconic gestures depicting common actions that people perform when interacting with objects (e.g., mimicking writing a letter or brushing hair) [38, 150]. The videos showing the hand gestures were recorded by the first author of all the studies. These gestures were used in the congruent and incongruent gesture conditions. In the meaningless condition, by contrast, gestures consisted of small hand movements without any iconic or metaphoric connection to the meaning of the accompanying word (e.g., forming a fist with one hand and raising the fingers of the other hand). Care was taken to ensure that these meaningless gestures shared characteristics with the meaningful gestures, such as hand configuration, a simple movement trajectory, and spatial location. Ten different movements were selected for this condition, and all participants were exposed to the same set of gestures.

Furthermore, we aimed to ensure that the congruent, incongruent, and meaningless conditions differed in the extent to which the meaning of the word corresponded to the accompanying gesture. To this end, 15 Spanish speakers who did not take part in any of the experiments participated in a pilot study. They were presented with a video displaying a gesture (without sound) at the top of the screen and a word written in Spanish at the bottom of the screen, and were asked to rate how well the meaning of the word matched the gesture on a scale from 1 (strong mismatch) to 9 (strong match).

In Experiment 1, the ratings for nouns differed across the three gesture conditions: gesture-word pairs were rated higher in the congruent condition than in the meaningless and incongruent conditions, and the incongruent and meaningless conditions also differed from each other. In Experiments 2 and 3, the verbs showed the same pattern of significant differences: gesture-word pairs received higher ratings in the congruent condition than in both the meaningless and incongruent conditions, and the incongruent and meaningless conditions also differed. Thus, in all experiments, the three gesture conditions differed in the degree of association between the meaning of the word and the accompanying gesture.

As the FL to be learned, we selected an artificial language called Vimmi [66, 90]. The corpus of Vimmi words was designed to eliminate factors that could bias the learning of particular items, such as the co-occurrence of syllables or any resemblance to words from languages such as Spanish, English, and French. Vimmi words were carefully chosen to be pseudowords in the participants' L1 (Spanish), that is, orthographically and phonologically legal in Spanish but meaningless (see [151] for a discussion of these variables in vocabulary learning). As reported by Bartolotti and Marian [152], divergent linguistic structures across languages can hinder the acquisition of new vocabulary; Vimmi matches the orthotactic probabilities of Romance languages such as Spanish, French, or Italian. When selecting the set of Vimmi words for our studies, we controlled linguistic variables such as the number of graphemes, phonemes, and syllables.

The Spanish words served as L1 items. In Experiment 1, all of them were concrete nouns denoting objects that can be manipulated with the hands (e.g., spoon, comb). In Experiments 2 and 3, the same pool of 40 Spanish verbs was paired with the FL translations. Linguistic variables such as the number of graphemes, phonemes, and syllables, lexical frequency, familiarity, and concreteness were carefully controlled.

Experimental Design

The studies involved four FL vocabulary learning conditions, manipulated within participants as follows. Figure 2 shows an example of the experimental design for each learning condition:

- a) Congruent condition: L1-FL word pairs were presented with gestures reflecting the common use of the objects whose names had to be learned in the FL. For example, "teclado (keyboard in Spanish)-saluzafo (Vimmi translation)" was paired with the gesture of typing with the fingers of both hands as if on a keyboard.

- b) Incongruent condition: L1-FL word pairs were paired with gestures associated with the use of an object different from that denoted by the L1 word. For instance, "teclado-saluzafo" was paired with the gesture of striking something with a hammer.

- c) Meaningless condition: L1-FL word pairs were paired with unfamiliar gestures. For instance, "teclado-saluzafo" was accompanied by a gesture of touching the forehead and then one ear with the right forefinger.

- d) No gesture condition: Participants learned Spanish (L1)–Vimmi (FL) word pairs without gestures. For example, they had to associate "teclado" with "saluzafo".

To create the learning material, the 40 Spanish words were randomly paired with the 40 previously selected Vimmi words, yielding 40 word pairs, each consisting of a Spanish (L1) word and a Vimmi (FL) word. These 40 word pairs were then randomly divided into four sets of 10 pairs, and each set was associated with one of the learning conditions: congruent, incongruent, meaningless, or no gesture.

To balance the gesture conditions across word sets, four lists of materials were created. Hence, a given word pair (e.g., "teclado-saluzafo") was linked with a congruent gesture in list 1, an incongruent gesture in list 2, a meaningless gesture in list 3, and no gesture in list 4. Each participant was randomly assigned to one of these four lists. Consequently, the 40 word pairs were evenly distributed over the four training conditions across lists, ensuring that, across participants, every word pair appeared in all training conditions.
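The random pairing and list construction described above amount to a Latin-square rotation of four word sets over four learning conditions. The Python sketch below illustrates one way to implement it; the function names, the placeholder words, and the use of Python's `random` module are our own assumptions, not the software used in the original studies.

```python
import random

# Learning conditions in the order used to rotate sets across lists.
CONDITIONS = ["congruent", "incongruent", "meaningless", "no_gesture"]


def make_word_pairs(l1_words, fl_words, seed=None):
    """Randomly pair each L1 (Spanish) word with one FL (Vimmi) word."""
    if len(l1_words) != len(fl_words):
        raise ValueError("L1 and FL word lists must have the same length")
    rng = random.Random(seed)
    shuffled = list(fl_words)
    rng.shuffle(shuffled)
    return list(zip(l1_words, shuffled))


def split_into_sets(pairs, n_sets=4, seed=None):
    """Shuffle the pairs and cut them into n_sets equal-sized sets."""
    if len(pairs) % n_sets:
        raise ValueError("pairs must divide evenly into sets")
    rng = random.Random(seed)
    pairs = list(pairs)
    rng.shuffle(pairs)
    size = len(pairs) // n_sets
    return [pairs[i * size:(i + 1) * size] for i in range(n_sets)]


def make_lists(word_sets):
    """Build one material list per condition by Latin-square rotation.

    In list k, set s is assigned CONDITIONS[(s + k) % 4], so every set
    (and therefore every pair) meets every condition exactly once across lists.
    """
    n = len(CONDITIONS)
    if len(word_sets) != n:
        raise ValueError("need exactly one word set per condition")
    lists = []
    for k in range(n):
        assignment = {}
        for s, word_set in enumerate(word_sets):
            for pair in word_set:
                assignment[pair] = CONDITIONS[(s + k) % n]
        lists.append(assignment)
    return lists


if __name__ == "__main__":
    # Placeholder items standing in for the real Spanish and Vimmi words.
    spanish = [f"es{i:02d}" for i in range(40)]
    vimmi = [f"vi{i:02d}" for i in range(40)]
    sets = split_into_sets(make_word_pairs(spanish, vimmi, seed=1), seed=2)
    material_lists = make_lists(sets)
    participant_list = random.Random(7).choice(material_lists)
    print(len(participant_list))  # 40 pairs in the assigned list
```

With this rotation, the first set receives the congruent, incongruent, meaningless, and no gesture conditions in lists 1 to 4, mirroring the "teclado-saluzafo" example; each participant is then given one list at random.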

In this series of studies, the Spanish nouns and verbs were matched across the four sets of word pairs on lexical variables. The sets did not differ significantly in number of graphemes, number of phonemes, number of syllables, lexical frequency, familiarity, or concreteness. Likewise, the Vimmi words in the four sets were matched in number of graphemes, phonemes, and syllables. Furthermore, the similarity between the Spanish and Vimmi words was equated across sets: the number of phonemes shared by the Spanish and Vimmi words was the same across the four sets, both when phoneme position was considered and when it was not.

Procedure

The learning phase involved three training sessions on three consecutive days. In each session, participants first completed the FL training and then the evaluation of the newly learned words, with a 15-minute break between the two phases. E-Prime experimental software was used for stimulus presentation and data acquisition [153]. Participants were informed that the training sessions would be video-recorded to ensure that they followed the experimenter's instructions. The procedure was approved by the Ethical Committee on Human Research at the University of Granada (Spain) as part of the research project (Grant PSI2016-75250-P; number issued by the Ethical Committee: 86/CEIH/2015) awarded to Pedro Macizo, and it was conducted in accordance with the 1964 Declaration of Helsinki and its subsequent amendments.

The design of the learning and evaluation phases was consistent across studies, with certain variations specified in the following sections.

Vocabulary Learning Phase

Each learning session lasted approximately 1 hour. Stimuli were presented in blocks organized by experimental condition, following an approach similar to that used in other studies with several gesture conditions [90]. Participants were presented with a block of 40 Spanish–Vimmi word pairs, containing 10 word pairs for each of the four learning conditions (congruent, incongruent, and meaningless gestures, and no gestures). This block was repeated 12 times, so each participant received 480 trials (40 word pairs × 12 repetitions), with short breaks between blocks. Word pairs were presented in random order within each condition, and the order of learning conditions within a block was counterbalanced. This blocked design was adopted to minimize the cognitive effort of constantly switching between conditions in which participants had to perform gestures and conditions without gestures.
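The session structure (a 40-pair block shown 12 times, with pairs grouped by condition and shuffled within each condition) can be sketched as follows. The text does not say how the condition order was counterbalanced, so rotating it from block to block is an assumption, as are the function and variable names.

```python
import random

CONDITIONS = ["congruent", "incongruent", "meaningless", "no_gesture"]


def build_session(assignment, n_blocks=12, seed=None):
    """Return the ordered trials (block, condition, pair) for one learning session.

    `assignment` maps each (L1, FL) word pair to its learning condition.
    Every block shows all pairs once, grouped by condition; pairs are shuffled
    within each condition, and the condition order is rotated across blocks
    (an assumed counterbalancing scheme).
    """
    rng = random.Random(seed)
    by_condition = {c: [p for p, cond in assignment.items() if cond == c]
                    for c in CONDITIONS}
    trials = []
    for block in range(n_blocks):
        shift = block % len(CONDITIONS)
        order = CONDITIONS[shift:] + CONDITIONS[:shift]
        for condition in order:
            pairs = list(by_condition[condition])
            rng.shuffle(pairs)  # random order within the condition
            trials.extend((block + 1, condition, pair) for pair in pairs)
    return trials


if __name__ == "__main__":
    demo = {(f"es{i:02d}", f"vi{i:02d}"): CONDITIONS[i % 4] for i in range(40)}
    print(len(build_session(demo, seed=0)))  # 480 = 40 pairs x 12 blocks
```

Because 12 blocks are divided evenly by the 4 rotation steps, each condition occupies each serial position within a block exactly three times per session under this scheme.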

The gestures were video-recorded by the experimenter and were congruent, incongruent, or meaningless depending on the accompanying word and, thus, on the learning condition. Each recorded gesture lasted 5 seconds, and the gesture was repeated twice. The video appeared at the top center of the screen. In all experimental conditions, a Spanish–Vimmi (L1-FL) word pair was displayed at the bottom of the screen, either with the experimenter in a static position (no gesture condition) or accompanied by a gesture (remaining conditions) (see Figure 2).

Participants were instructed to read each L1-FL word pair aloud twice. In the gesture conditions, they were also required to produce the accompanying gesture each time they said the word pair aloud, starting the gesture at the same time as the vocalization, so that each gesture and word pair was produced twice. For example, when participants received the word pair “teclado-saluzafo” with the congruent gesture (Figure 2a), they were instructed to say the word pair while performing the corresponding gesture of moving their fingers as if typing on a keyboard. After producing the word pair twice while mimicking the experimenter's movements twice, participants pressed the space bar to proceed to the next trial.

As previously mentioned, we introduced some variations to adapt the procedure to the goal of each study. Experiment 1 used nouns as learning material, whereas verbs were used in Experiments 2 and 3. In addition, the learning procedure described above was the same for all experiments except for the “see” learning group (Experiment 3), whose participants were instructed to say each L1–FL pair aloud twice while mentally simulating the gesture presented with the word pair, without performing it overtly. For instance, when participants encountered the word pair "teclado-saluzafo" paired with the congruent gesture, they were expected to say this word pair aloud while generating a mental image of themselves moving their fingers as if typing on a keyboard. This was done in the three gesture conditions (congruent, incongruent, and meaningless), whereas participants did not perform any movement in the no gesture condition, as in the other experiments and in the “do” learning group. Participants in the “see” group were instructed to mentally recreate the gesture to ensure their engagement with it and to prevent them from focusing solely on learning the Vimmi words. Although it was difficult to verify directly whether participants were mentally visualizing the required gesture, post-experiment observations indicated that they did follow the experimenter's instructions and mentally executed the prescribed gestures.

Evaluation Phase

To evaluate the acquisition of Vimmi words, two tests were used in all studies: translation from Spanish into Vimmi (forward translation, from L1 to FL) and translation from Vimmi into Spanish (backward translation, from FL to L1). These tasks have been used previously in FL learning studies [19, 154].

To prevent potential order or practice effects, the order of the two translation tests was randomized across the three training sessions and across participants. In each translation task, participants received 40 Spanish words (forward translation) or 40 Vimmi words (backward translation). On each trial, a word appeared in the center of the screen until the participant responded. Oral translations were recorded for subsequent accuracy analysis, and response times (RTs) were measured from word onset to the onset of the oral translation. The learning assessment took approximately 10 minutes, depending on individual performance.

3. Results

In this section, the common issues regarding the analysis protocol are described first. Then, the results of Experiments 1 and 2 are presented followed by the outcomes of the two learning groups evaluated in Experiment 3.

While recall percentages (Recall %) served as the primary measure of vocabulary acquisition, we also analyzed RTs in all studies. RTs for correct translations were trimmed following the procedure outlined by Tabachnick and Fidell [155] to remove univariate outliers: raw scores were converted to standard scores (z-scores), and data points deviating by more than 3 standard deviations from the mean were identified as outliers. Outliers were removed iteratively until no observation exceeded the 3 SD threshold. In all analyses, the significance level was set at α = .05. Only correct responses were included in the RT analyses. Data points were also excluded from the RT analyses when (1) participants produced nonverbal sounds that triggered the voice key, (2) participants stuttered or hesitated when producing the word, or (3) participants produced something other than the required word. Some minor errors were tolerated and scored as correct, depending on the length of the target word:

For monosyllabic words, the replacement of a vowel.

For disyllabic words, the replacement of a vowel or a consonant, but not both.

For words with three or more syllables, the inversion of a vowel and a consonant or the replacement of a vowel or a consonant.
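The length-dependent tolerance rules listed above can be expressed as a small scoring function. This is a sketch under stated assumptions: the syllable count of each target is supplied by hand, a "replacement" is read as swapping a vowel for a vowel or a consonant for a consonant, an "inversion" is read as swapping one adjacent vowel-consonant pair, and a hypothetical `lexicon` set of existing words is used to score as errors any responses that produce a real word. The function names and test strings are illustrative only.

```python
VOWELS = set("aeiouáéíóúü")


def is_vowel(ch):
    return ch.lower() in VOWELS


def same_class_substitution(target, response):
    """Return the (old, new) characters if exactly one position differs and
    both characters are vowels or both are consonants; otherwise None."""
    if len(target) != len(response):
        return None
    diffs = [(t, r) for t, r in zip(target, response) if t != r]
    if len(diffs) != 1:
        return None
    old, new = diffs[0]
    return (old, new) if is_vowel(old) == is_vowel(new) else None


def vowel_consonant_inversion(target, response):
    """True if the response swaps one adjacent vowel-consonant pair of the target."""
    if len(target) != len(response):
        return False
    diff = [i for i, (t, r) in enumerate(zip(target, response)) if t != r]
    if len(diff) != 2 or diff[1] != diff[0] + 1:
        return False
    i, j = diff
    swapped = target[i] == response[j] and target[j] == response[i]
    return swapped and is_vowel(target[i]) != is_vowel(target[j])


def scored_correct(target, response, n_syllables, lexicon=frozenset()):
    """Apply the length-dependent tolerance rules to one oral translation."""
    if response == target:
        return True
    if response in lexicon:  # a change that yields an existing word is an error
        return False
    sub = same_class_substitution(target, response)
    if n_syllables == 1:
        return sub is not None and is_vowel(sub[0])  # only a vowel replacement
    if n_syllables == 2:
        return sub is not None  # one vowel or one consonant, not both
    return sub is not None or vowel_consonant_inversion(target, response)
```

For example, `scored_correct("rel", "ril", 1)` is accepted as a vowel replacement, whereas `scored_correct("rel", "rol", 1, lexicon={"rol"})` is rejected because the response is an existing word.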

Low-level (sublexical) errors, such as vowel replacements that did not introduce new semantic content, were considered correct. However, because the FL words belonged to an artificial language (Vimmi), responses in which participants replaced a vowel in a Vimmi word (e.g., 'rel') so as to produce an existing word (e.g., 'rol') were scored as errors (these made up less than 5% of all errors).
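The iterative 3-SD trimming of correct-response RTs described at the start of this section can be sketched with Python's standard `statistics` module. The text does not state the grouping level at which z-scores were computed (e.g., per participant and condition, or over the whole sample), so the function simply trims whatever list of RTs it is given; the name `trim_outliers` is hypothetical.

```python
from statistics import mean, stdev


def trim_outliers(rts, criterion=3.0):
    """Iteratively remove RTs whose |z| exceeds `criterion`.

    z-scores are recomputed on the surviving data after every pass, and the
    loop stops when no observation exceeds the threshold.
    """
    kept = list(rts)
    while len(kept) > 2:
        m, sd = mean(kept), stdev(kept)
        if sd == 0:
            break  # all values identical: nothing can be an outlier
        survivors = [rt for rt in kept if abs(rt - m) / sd <= criterion]
        if len(survivors) == len(kept):
            break
        kept = survivors
    return kept


if __name__ == "__main__":
    rts = [800] * 20 + [900] * 20 + [5000]  # made-up RTs in ms
    print(len(trim_outliers(rts)))  # 40: the 5000 ms response is removed
```

Recomputing the mean and SD after each pass matters because a single extreme value inflates the SD and can mask other outliers on the first pass.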

Results of Experiment 1

Analyses of variance (ANOVAs) were conducted with three within-participant factors: translation test (forward vs. backward translation), training session (first, second, and third session), and learning condition (congruent, incongruent, and meaningless gestures, and no gestures). The order in which participants received the translation tasks (forward-backward or backward-forward) was initially included as a between-participant factor. However, translation order was neither significant nor interacted with other factors, so it was not considered further.
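Before such an ANOVA, trial-level accuracy has to be collapsed into one Recall % per participant and design cell (2 translation directions × 3 sessions × 4 learning conditions = 24 cells). The sketch below assumes a hypothetical trial record format (a dict with `participant`, `direction`, `session`, `condition`, and `correct` keys); the original analysis scripts are not described in the text.

```python
from collections import defaultdict


def recall_percentages(trials):
    """Collapse trial-level accuracy into Recall % per design cell.

    Returns {(participant, direction, session, condition): percent correct}.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for t in trials:
        cell = (t["participant"], t["direction"], t["session"], t["condition"])
        totals[cell] += 1
        hits[cell] += int(t["correct"])
    return {cell: 100.0 * hits[cell] / totals[cell] for cell in totals}


if __name__ == "__main__":
    conditions = ["congruent", "incongruent", "meaningless", "no_gesture"]
    # Made-up data: one participant, 10 items per cell, 7 recalled in each.
    demo = [
        {"participant": "p01", "direction": d, "session": s,
         "condition": c, "correct": item < 7}
        for d in ("forward", "backward")
        for s in (1, 2, 3)
        for c in conditions
        for item in range(10)
    ]
    cells = recall_percentages(demo)
    print(len(cells), cells[("p01", "forward", 1, "congruent")])  # 24 70.0
```

The resulting cells, reshaped into a long-format table, could then be passed to a repeated-measures routine such as statsmodels' `AnovaRM` with the three factors as `within` variables; this is one option, not necessarily the software used in the studies.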

The RT analysis showed that participants learned the new words across the three training sessions: responses were faster in the last session than in the first. The recall analysis revealed a wider range of main effects and interactions. Consistent with the RT results, participants recalled more words at the end of training than in the first evaluation phase.

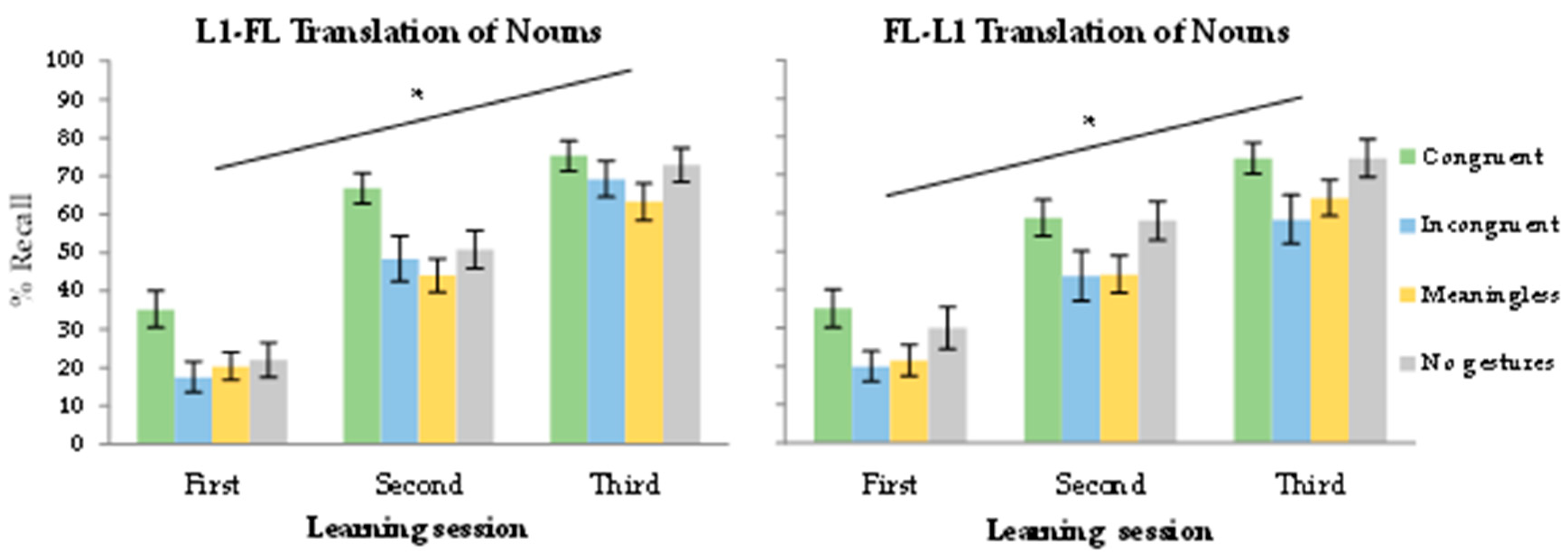

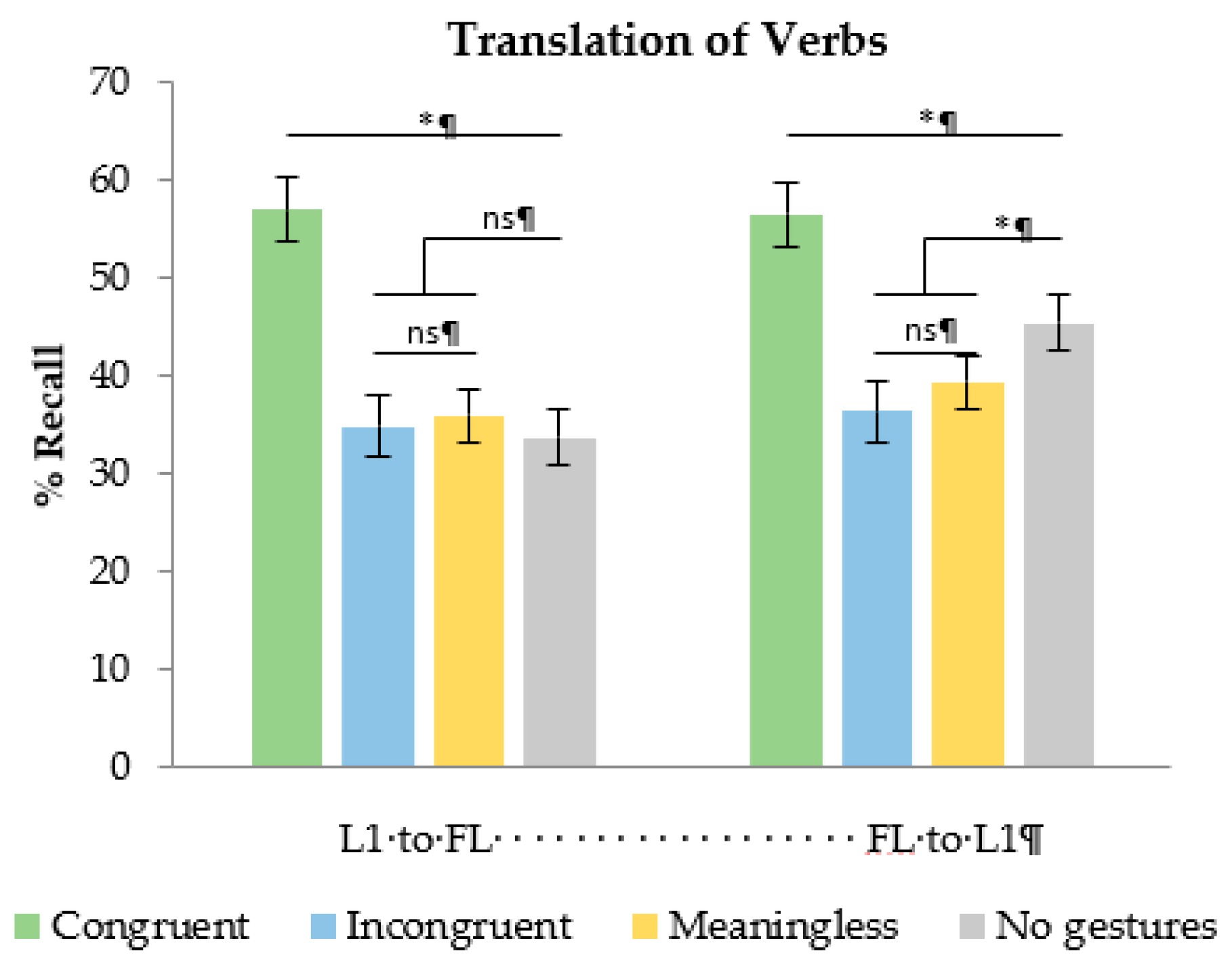

Figure 3 illustrate the interaction between translation direction and learning condition in each learning session. In the forward translation direction (L1-FL), performance improved across sessions. The main effect of learning condition was modulated by the translation test (see

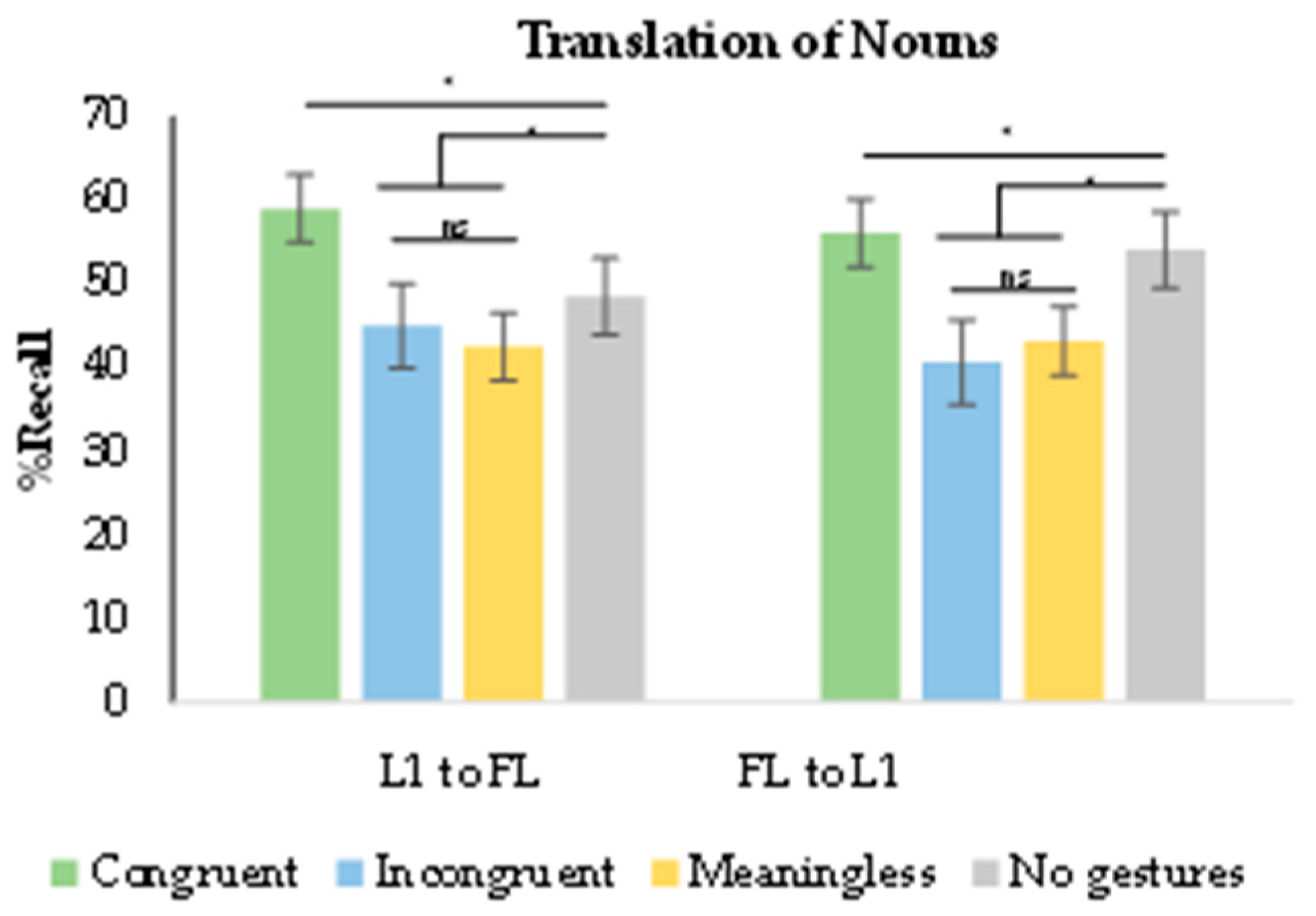

Figure 4). The comparison between learning conditions showed better recall in the congruent gestures condition compared to the meaningless gestures condition. The meaningless, incongruent and no gesture conditions showed similar outcomes. Compared to the no gesture condition, the recall was lower in the meaningless condition. The backward translation direction revealed a similar session effect showing that the more participants trained, the more they learned. Comparing learning conditions, congruent gestures improved performance compared to the meaningless condition. No differences were observed between the meaningless and incongruent conditions. Compared to the no gesture condition, the recall was lower in the incongruent and meaningless conditions.

Experiment 1 examined the learning of FL nouns under different training conditions and yielded two main effects. First, FL words learned in the congruent condition were recalled notably better than those learned in the meaningless condition. Second, in the backward translation task, recall was lower in both the incongruent and meaningless conditions than in the no-gesture condition. This suggests that these two conditions hindered learning. Together, these findings reveal two contrasting effects of gestures on FL learning: facilitation and interference.

Figure 4 shows the % recall of nouns as a function of translation direction and gesture condition (collapsed across learning sessions).

The facilitation effect observed with congruent gestures appears to support the motor-imagery account of the role of gestures in FL vocabulary learning (as proposed by [89]). The semantic meaning shared by gestures and L1 words seemed to enhance the acquisition of FL words. An alternative account, the motor-trace theory [85,86], could also explain the facilitation effect, since congruent gestures were familiar and could activate motor traces associated with the words. However, this account cannot explain the interference effect found with incongruent gestures relative to the no-gesture condition, because incongruent gestures were also common and familiar to the participants. Furthermore, the self-involvement explanation put forward by Helstrup [79] cannot accommodate the observed pattern of results, as there were clear differences among the gesture conditions.

Notably, relative to the no-gesture condition, the interference effect on noun learning was of similar magnitude in the incongruent and meaningless conditions. This suggests that the negative impact of gestures in these two conditions may stem from the dual-task demands placed on participants. Performing a gesture while learning a word increased the difficulty of learning unless the gesture provided consistent information, as in the congruent condition.

Results of Experiment 2

Experiment 2 used the same analysis protocol and set of factors as Experiment 1. The only difference between the two experiments was the category of words participants had to learn: in Experiment 2, participants learned verbs instead of nouns. Previous research comparing noun and verb acquisition has consistently shown that verbs tend to be harder to learn [129,135,139]. Incorporating gestures during learning is one potential way to address this difficulty, since it has been posited that the semantic representation of verbs intrinsically involves a motor component [128,130]. Consequently, in Experiment 2, we evaluated whether gestures could reduce the difficulty of learning verbs relative to nouns.

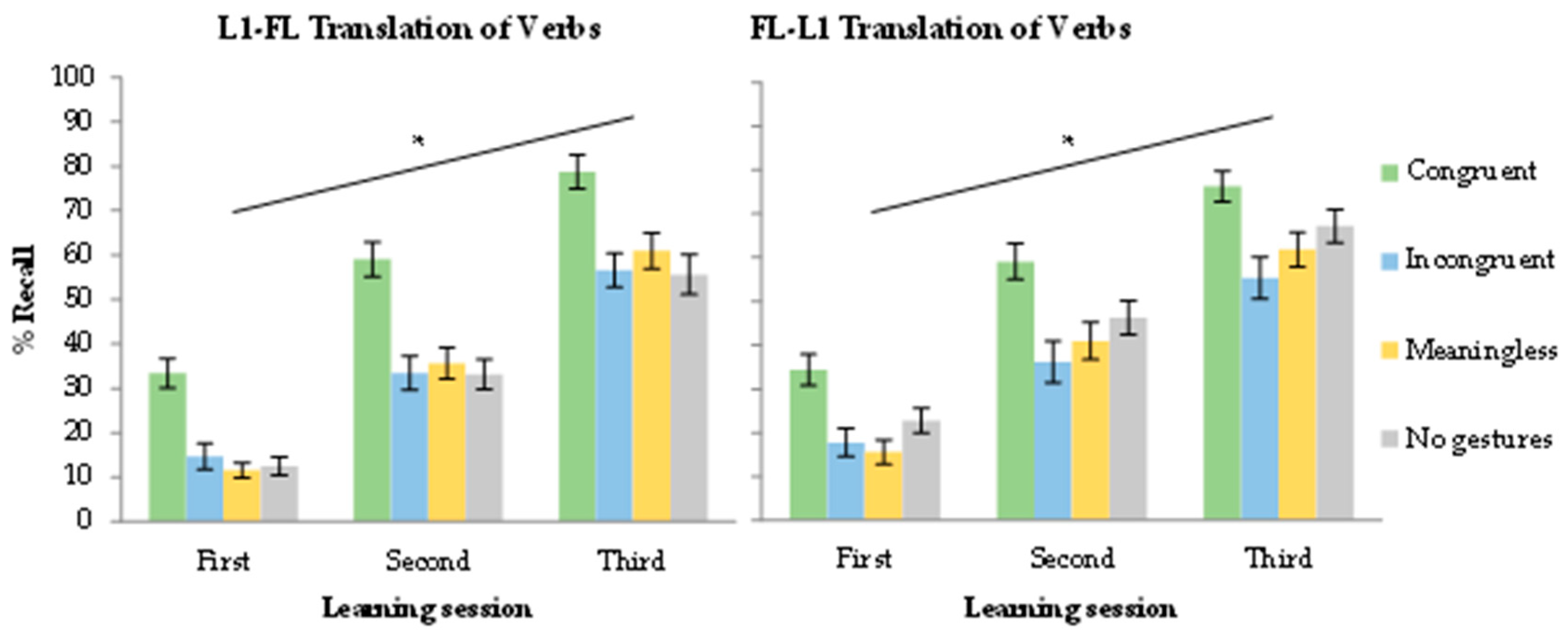

Regarding RTs, learners responded faster in the last training session than on the first learning day. Regarding learning conditions, responses were faster in the congruent condition than in the incongruent, meaningless and no-gesture conditions. No differences were found among the other learning conditions. Recall was better on the last learning day than at the beginning of training (see Figure 5 for % recall across sessions, translation directions and learning conditions). There was also a main effect of translation direction, which interacted with learning condition. This interaction is shown in Figure 6 (collapsed across learning sessions). In the forward translation, the session effect described above persisted. Recall was better in the congruent condition than in the meaningless and no-gesture conditions. No differences were found among the incongruent, meaningless and no-gesture conditions. The backward translation direction again showed an increase in recall across learning sessions. Recall was better in the congruent condition than in the no-gesture and meaningless conditions. No differences were observed between meaningless and incongruent gestures. However, compared to the no-gesture condition, recall was lower in both the incongruent and meaningless conditions.

In Experiment 2, we investigated the role of gestures in the acquisition of FL verbs. The pattern of results closely mirrored that of Experiment 1, which examined the learning of FL nouns.

Once again, integrating gestures into the learning process produced a facilitation effect. Specifically, congruent gestures enhanced the acquisition of FL verbs compared to learning without gestures or with meaningless gestures. However, as in Experiment 1, we also found an interference effect for incongruent and meaningless gestures relative to the no-gesture condition, particularly in the FL to L1 (backward) translation task. These two effects and their underlying mechanisms are examined in more depth in the Discussion.

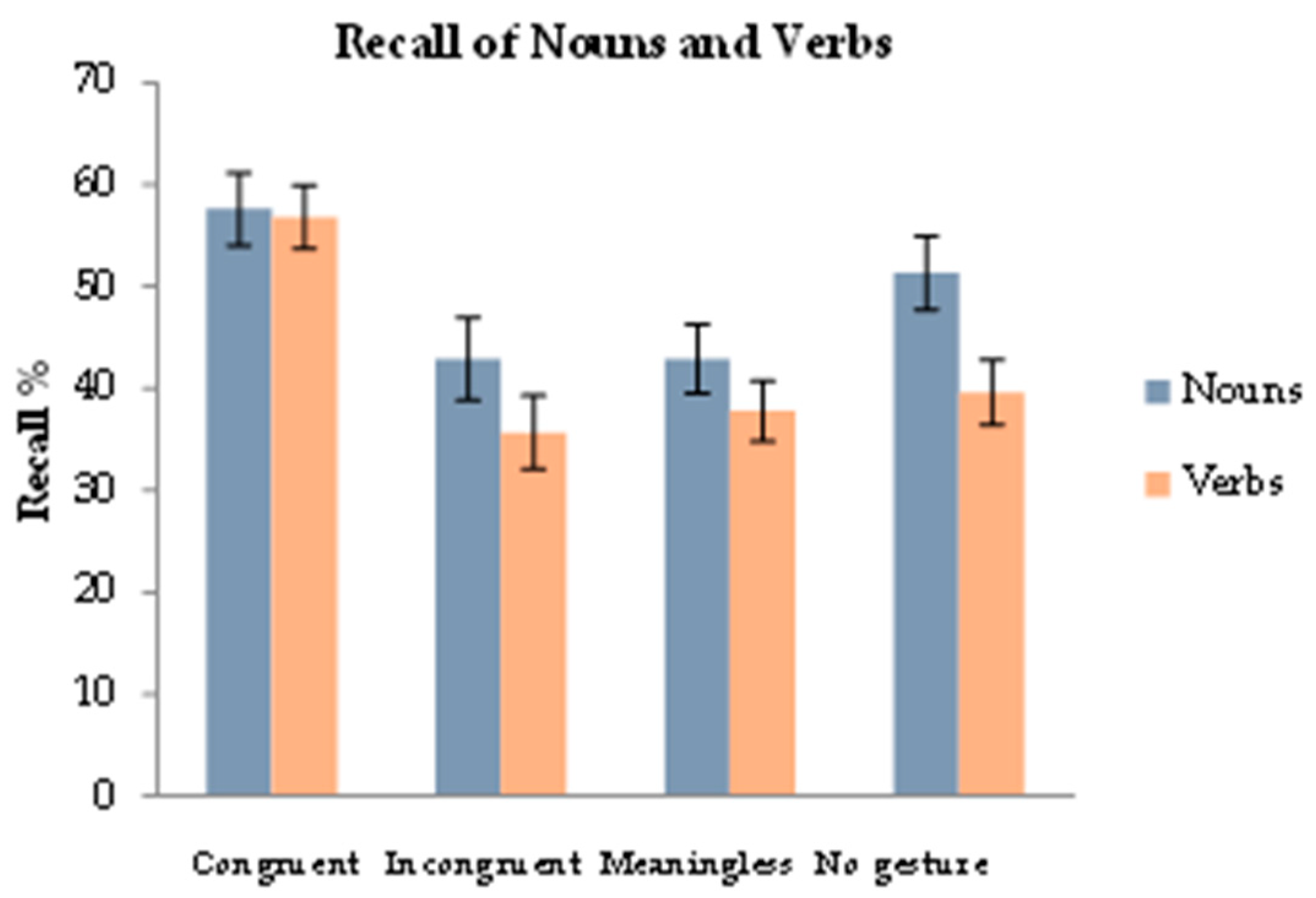

Between-Experiments Comparison

Comparing word types was one of the primary objectives of this experimental series. To do so, we entered word type (nouns, verbs) as a factor in a new analysis together with the factors described above. In the forward translation direction, participants recalled more nouns than verbs, and learning condition interacted with word type. Recall was better for nouns than for verbs in the no-gesture and incongruent conditions, whereas no differences between nouns and verbs were found for congruent and meaningless gestures. In the backward translation, no main effects or interactions were significant.

Figure 7 shows the results found for nouns and verbs recall in each of the learning conditions.

Results of Experiment 3

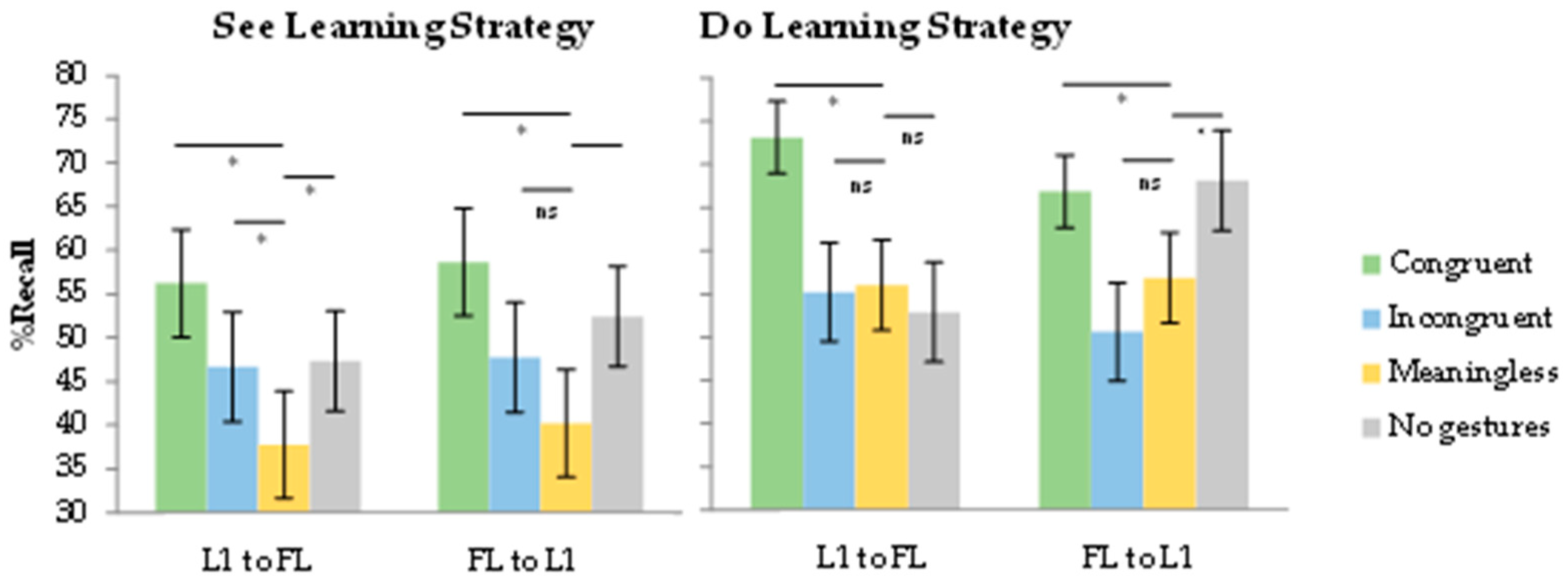

In Experiment 3, we conducted mixed ANOVAs with three within-participant factors: translation direction (forward translation, backward translation), training session (first, second, third), and learning condition (congruent, incongruent, meaningless, no gestures). Learning group ("see", "do") was included as a between-groups factor. Consistent with Experiments 1 and 2, the more participants trained, the faster and more accurate they were.

Regarding RTs, the main effect of translation direction was significant: participants were faster in backward than in forward translation. The main effect of learning condition was also significant. Learners were faster in the congruent condition than in the meaningless and no-gesture conditions. No differences were found between incongruent and meaningless gestures. Finally, the difference between the meaningless and no-gesture conditions was marginal, with slightly slower responses in the meaningless condition. Notably, participants in the "do" group were faster than those in the "see" group across learning sessions.

Recall results showed that participants were more accurate in the congruent and no-gesture conditions than in the meaningless condition. No differences appeared between the incongruent and meaningless conditions. Finally, the congruent condition showed an advantage over the no-gesture condition. Thus, the meaningless condition appeared to be the most detrimental to performance, whereas congruent gestures facilitated learning relative to the no-gesture condition. Recall also differed between learning groups: participants in the "do" group were more accurate than those in the "see" group. Because learning group interacted with learning condition and translation direction, separate analyses were conducted for each learning group.

In the "see" group, congruent gestures produced a facilitation effect relative to meaningless gestures. Participants also recalled more words in the no-gesture condition than in the meaningless condition. The difference between the incongruent and meaningless conditions was marginal, with meaningless gestures being the most detrimental learning condition. The comparison between the congruent and no-gesture conditions also showed a marginal effect.

In the "do" group, learning condition interacted with translation direction, so the effect of learning condition was examined separately for each translation direction. In the forward translation, congruent gestures produced a facilitation effect. No differences were obtained among the incongruent, meaningless and no-gesture conditions. In other words, the interference associated with meaningless gestures in the "see" group was absent in the "do" group. Congruent gestures also showed an advantage over the no-gesture condition. Finally, in the backward translation direction, recall was better with congruent than with meaningless gestures. Incongruent and meaningless gestures also differed, whereas the congruent and no-gesture conditions yielded similar results (see Figure 8).

4. Discussion

It is commonly accepted that movements play a significant role in a variety of cognitive processes. A facilitative effect has been observed for different types of movements, not only in educational settings but also in clinical contexts such as developmental disorders and aphasia treatment [156,157,158]. For instance, pointing movements (deictic gestures) and beat gestures, which reflect prosody and emphasize speech, have been shown to benefit language learning and development [68,69,159]. Iconic gestures, which refer to concrete entities or actions, are particularly noteworthy. Many studies have used these gestures to investigate how they enhance memory consolidation in language production and comprehension [76,78] and in FL vocabulary acquisition [69,120]. The findings from the present series of studies indicate that iconic gestures play a crucial role in FL learning. They enhance semantic processing and link the semantic system with the lexicon. Several pieces of evidence support this conclusion.

Previous studies compared congruent and incongruent conditions, using either speech or gestures, particularly in verb learning [95] and noun learning [90]. Additionally, some works have compared congruent gestures to meaningless gestures presented alongside written words [102] and sentences [76]. Previous research has also compared conditions with and without gestures [66]. Moreover, some studies have considered all three conditions (congruent, incongruent, and no gestures) [94]. Other studies have compared gesture imitation with picture observation [120], and the observation with the production of non-iconic gestures such as pitch gestures and beat gestures [68,124]. Sweller and colleagues [125] directly assessed the impact of self-performed gestures and gesture observation when using iconic gestures as learning materials along with acoustically presented FL words. To our knowledge, our series of studies is the first to address the role of four gesture conditions (congruent, incongruent, meaningless and no gesture) in FL vocabulary learning while comparing noun and verb acquisition. In addition, our work on this issue was the first to evaluate the effects of observing versus producing gestures while taking into account the different semantic relationships that can hold between gestures and words (congruent, incongruent, meaningless). The current experimental design and manipulations hold significant potential for advancing our understanding of the role of gestures in FL vocabulary learning.