1. Introduction

The healthcare sector in the United States has undergone a remarkable transformation over the past few decades. It has expanded significantly and become a driving force behind the nation’s economic growth, employing approximately 14.3 million individuals. With projections indicating the creation of an additional 3.2 million healthcare-related jobs soon [1], the healthcare industry’s significance in the American economy is set to grow further. Beyond its economic impact, healthcare is pivotal in American citizens’ lives, as it is fundamentally dedicated to supporting their health and well-being. In recent years, healthcare competition has greatly increased, especially due to the COVID-19 pandemic [2]. Despite the sector’s overall commendable performance, significant challenges persist.

One of the most pressing issues plaguing the US healthcare system is high employee turnover, particularly among nurses. This turnover not only impacts the healthcare industry’s ability to deliver quality care but also hampers its overall performance. Many nurses leave their current organizations in pursuit of opportunities to enhance their skills and competencies [3]. The related concept of turnover intention measures how much employees think about leaving their current organization, and it significantly affects the organization’s sustainability and reputation [4]. Turnover intention represents a process wherein employees contemplate leaving their current organization for various reasons, reflecting their anticipation of voluntarily departing soon [2]. It underscores an employee’s contemplation and inclination toward seeking alternative employment. In the healthcare industry, nurse turnover intention has emerged as a pervasive problem, transcending organizational size, location, and nature of business [4]. The adverse impact of high turnover intention on healthcare organizations is keenly felt, as it directly affects the quality of service they can provide [5]. Consequently, extensive research efforts have been dedicated to understanding and evaluating nurse turnover, specifically identifying predictive factors for nurse turnover intention [3], [6]. International studies consistently report a significant increase in nurses expressing their intention to leave their jobs [7], [8]. Hence, the ability to predict nurse turnover has become crucial for healthcare organizations. Early access to information regarding nurse turnover status empowers organizations to take preemptive measures and implement interventions to curtail turnover, ultimately ensuring the continued delivery of high-quality healthcare services [9].

In this regard, Artificial Intelligence (AI) offers a unique ability to analyze diverse datasets, from structured human resource records to unstructured sources like social media sentiment and employee feedback [10]. This holistic approach provides valuable insights into the factors contributing to turnover, such as work-related stress, job dissatisfaction, or personal circumstances [11]. Human resource departments can identify early warning signs such as increased absenteeism or declining employee performance [12]. Thus, the healthcare industry can proactively address turnover intention based on predictive factors. These interventions may include tailored training programs, workload adjustments, or personalized support to address employee concerns [13].

One of the main branches of AI is machine learning (ML) algorithms, which can learn and adapt knowledge based on data training and learn from recurring patterns from the dataset. Then, observed data patterns are used to predict an outcome. Various machine learning algorithms are popular to predict the outcomes in the recent healthcare-related study [

14]–[

16], which included but not limited to neural networks, extreme gradient boosting, random forest (RF), decision tree, logistic regression, support vector machine (SVM) [

7], [

9], [

13]. In ML, classification algorithms consider that every class should have an approximately equal number, but, in practice, it may fail due to class imbalances [

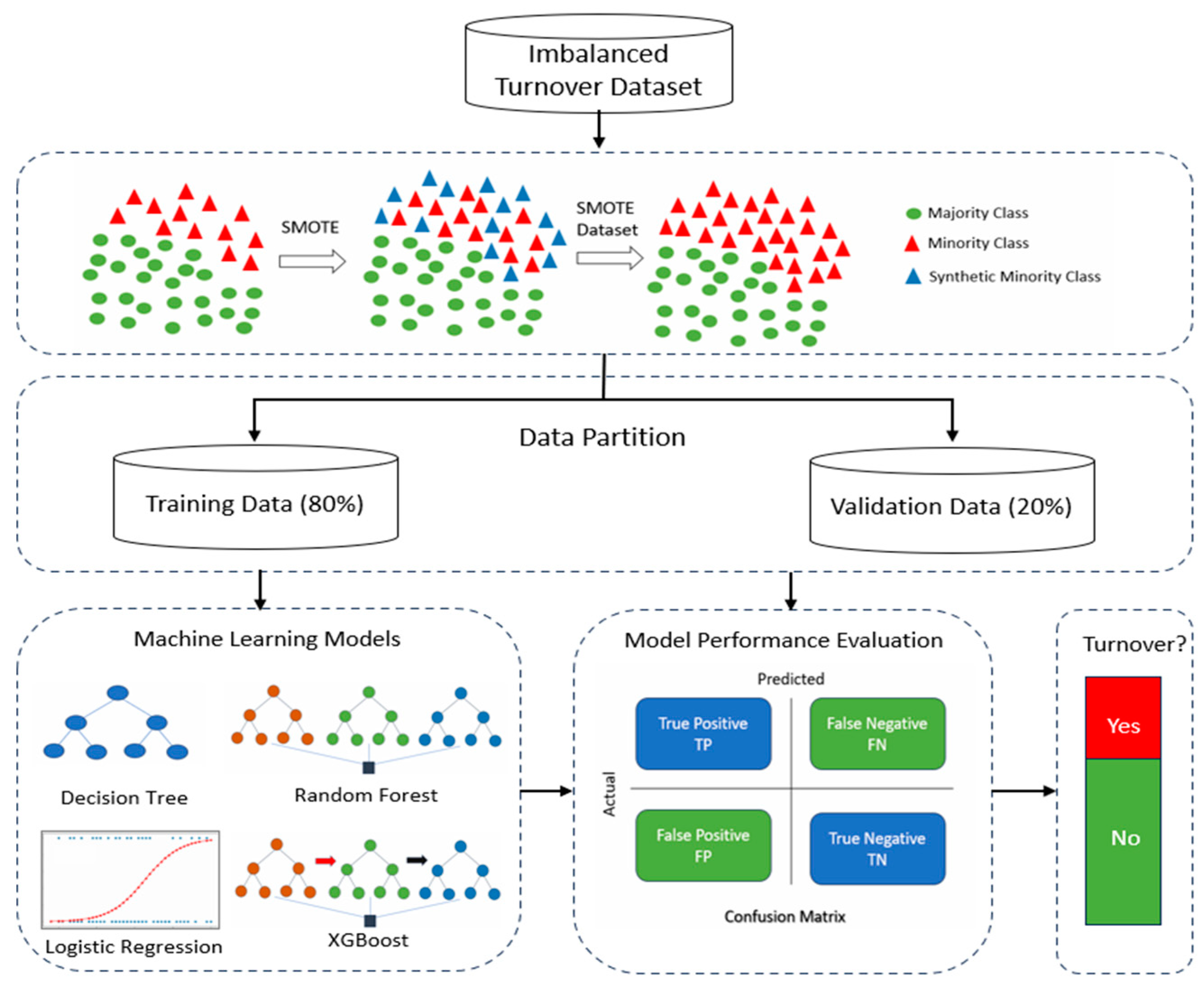

17]. In an imbalanced dataset, we have the class with fewer examples, a so-called minority class, and the class with many examples, a so-called majority class. If an imbalanced dataset is used when performing ML analysis, the imbalanced distribution of the classes may be overlooked. This results in poor performance for the minority class, creating a model bias for the majority class because ML tends to learn more about the majority class during the data partitioning process [

18].
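To make the majority-class bias concrete, the following minimal Python sketch (the study itself used R) evaluates a trivial rule that always predicts "no turnover". The class counts are the ones reported for this sample in Table 2; the function name is hypothetical and serves only as an illustration.

```python
def majority_baseline_metrics(n_minority, n_majority):
    """Return (accuracy, minority_recall) when every case is predicted as majority."""
    total = n_minority + n_majority
    accuracy = n_majority / total      # every majority case counts as correct
    minority_recall = 0 / n_minority   # no minority case is ever detected
    return accuracy, minority_recall


# Class counts reported in Table 2 (turnover: yes = 4,728; no = 39,209).
accuracy, recall = majority_baseline_metrics(n_minority=4728, n_majority=39209)
print(f"accuracy = {accuracy:.3f}, minority recall = {recall:.3f}")
```

Such a rule reaches roughly 89% accuracy while detecting no departing nurse at all, which is why accuracy alone can hide poor minority-class performance.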

The academic significance of our present research lies in the scarcity of open literature studies focused on nurse turnover prediction using machine learning algorithms. While numerous papers have examined the association between various factors and nurse turnover, only a few have delved into the predictive potential of machine learning in this context. Demographic factors such as age, sex, marital status, work experience, and job position have commonly been identified as contributing factors to nurse turnover [19]. Organizational factors, including department, employment status (regular or non-regular), and lower nursing grade, have also been found to predict turnover [20]. Furthermore, research from South Korea highlights additional critical factors such as marriage, childbirth, and child-rearing as significant contributors to nurse turnover [7], [20]–[22]. However, it is essential to note that the most recent study conducted by Bae (2023) employed the 2018 National Sample Survey of Registered Nurses (NSSRN) dataset and utilized multivariable logistic regression for analysis. One notable challenge encountered in the study was dealing with imbalanced data in the context of turnover classification. This challenge serves as a key motivation for our research.

Previous literature reviews demonstrated that existing approaches have effectively predicted nurse turnover across various datasets. However, diverse machine learning algorithms have been employed without considering class imbalance issues to enhance various performance metrics, including accuracy, precision, and recall. In this study, our primary objective is to compare machine learning techniques alongside the Synthetic Minority Over-sampling Technique (SMOTE) to determine the most effective method for predicting nurse turnover. To our knowledge, this is the first endeavor to comprehensively analyze all dataset features within the NSSRN context.
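The core idea of SMOTE is to create synthetic minority cases by interpolating between a minority sample and one of its nearest minority neighbours. The sketch below is a simplified, standard-library Python illustration of that idea, not the implementation used in this study (which was run in R). The function name, the two numeric features, and the sample values are all hypothetical, and plain SMOTE as sketched here applies to numeric features only.

```python
import math
import random


def smote_sketch(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points by interpolating between a minority
    sample and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest neighbours of `base` among the other minority samples
        others = [p for p in minority if p is not base]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append(
            tuple(b + gap * (n - b) for b, n in zip(base, neighbour))
        )
    return synthetic


# Hypothetical minority-class cases: (age, weekly working hours).
minority_cases = [(55.0, 34.0), (60.0, 30.0), (48.0, 40.0), (52.0, 36.0)]
new_cases = smote_sketch(minority_cases, n_new=3)
print(new_cases)
```

Because each synthetic point lies on a segment between two real minority cases, oversampling should be applied to the training data only, so that the validation data still reflect real observations.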

This study aimed to develop and evaluate a predictive model for nurse turnover in the USA using machine learning. The remainder of this paper is organized as follows.

Section 2 presents the methodology, including data preprocessing, the ML algorithms, and the SMOTE method.

Section 3 presents the experimental results of the study and compares them with existing methods.

Section 4 presents the study’s conclusions and directions for future research.

3. Results

3.1. Experiment Setup

All data processing, sampling, and machine learning analyses were conducted using the R statistical software, a freely available open-source tool.

3.2. Characteristics of the Participants

The characteristics of the 43,937 nurses are summarized in Table 2. A total of 4,728 nurses (11%) left their primary nursing positions. Among the turnover group, those holding NP and RN qualifications accounted for 45.96% and 44.67%, respectively. Most nurses expressed satisfaction with their primary nursing positions, with 9.77% reporting dissatisfaction and 90.23% reporting satisfaction. On average, the age of the nurses was 55 ± 11 years, individual income averaged $70,856 ± 41,404, and they worked an average of 34 ± 14.4 hours per week. In terms of race and sex, 86.51% of nurses in the turnover group were White and 91.10% were female. Furthermore, 75.04% of those who left their positions were married, and 93.97% of nurses reported no prior military service. Regarding household income, 21.49% of nurses earned less than $75,000, 43.46% earned between $75,001 and $150,000, and 35.05% earned more than $150,001. When it came to their educational backgrounds, more than half (57%) held advanced degrees such as MSN and PhD/DNP/DN. Most nurses (82.38%) did not have dependents under the age of 6, 94.08% were employed by organizations, and 79.61% worked full-time. In terms of employment setting, 43.53% of nurses worked in hospitals, 34.01% in clinical/ambulatory settings, and 22.46% in inpatient/other types of settings. Finally, 78.79% of nurses reported that they could practice to the extent of their knowledge, education, and training.

Table 2.

Distribution of the characteristics of the 18 extracted variables in the NSSRN database.


| Characteristic | Turnover: Yes (N=4728), 11% | | Turnover: No (N=39209), 89% | |
|---|---|---|---|---|
| Categorical Variables | Count | Percentage | Count | Percentage |
| Certificate | | | | |
| Other | 443 | 9.37% | 2748 | 7.01% |
| NP | 2173 | 45.96% | 19382 | 49.43% |
| RN | 2112 | 44.67% | 17079 | 43.56% |
| Region | | | | |
| Midwest | 1059 | 22.40% | 8950 | 22.83% |
| North | 893 | 18.89% | 7227 | 18.43% |
| South | 1574 | 33.29% | 13084 | 33.37% |
| West | 1202 | 25.42% | 9948 | 25.37% |
| Job_Satisfaction | | | | |
| Dissatisfied | 462 | 9.77% | 3867 | 9.86% |
| Satisfied | 4266 | 90.23% | 35342 | 90.14% |
| Race | | | | |
| Other Race | 638 | 13.49% | 5686 | 14.50% |
| White | 4090 | 86.51% | 33523 | 85.50% |
| Sex | | | | |
| Female | 4307 | 91.10% | 35847 | 91.43% |
| Male | 421 | 8.90% | 3362 | 8.57% |
| Marital Status | | | | |
| Married | 3548 | 75.04% | 29490 | 75.21% |
| Single | 1180 | 24.96% | 9719 | 24.79% |
| Veteran | | | | |
| Never Served | 4443 | 93.97% | 36919 | 94.16% |
| Served | 285 | 6.03% | 2290 | 5.84% |
| Household_Income | | | | |
| Less than $75,000 | 1016 | 21.49% | 8418 | 21.47% |
| $75,001 TO $150,000 | 2055 | 43.46% | 17369 | 44.30% |
| More than $150,001 | 1657 | 35.05% | 13422 | 34.23% |
| Degree | | | | |
| ADN | 773 | 16.35% | 5891 | 15.02% |
| BSN | 956 | 20.22% | 9395 | 23.96% |
| MSN | 2404 | 50.85% | 20308 | 51.79% |
| PHD/DNP/DN | 595 | 12.58% | 3615 | 9.22% |
| Dependant < 6 years | | | | |
| No | 3895 | 82.38% | 32248 | 82.25% |
| Yes | 833 | 17.62% | 6961 | 17.75% |
| EHR_EMR Usability | | | | |
| No | 488 | 10.32% | 4595 | 11.72% |
| Yes | 4240 | 89.68% | 34614 | 88.28% |
| Employment_Type | | | | |
| Employed by Organization | 4448 | 94.08% | 36540 | 93.19% |
| Other | 280 | 5.92% | 2669 | 6.81% |
| Job_Type | | | | |
| Full Time | 3764 | 79.61% | 30964 | 78.97% |
| Part Time | 964 | 20.39% | 8245 | 21.03% |
| Employment_Setting | | | | |
| Clinical/Ambulatory | 1608 | 34.01% | 13110 | 33.44% |
| Hospital | 2058 | 43.53% | 17551 | 44.76% |
| Inpatient/Other | 1062 | 22.46% | 8548 | 21.80% |
| Practice | | | | |
| No | 1003 | 21.21% | 8512 | 21.71% |
| Yes | 3725 | 78.79% | 30697 | 78.29% |
| Numerical Variables | Mean | Std.dev | Mean | Std.dev |
| Age | 55 | 11 | 48 | 12 |
| Individual Income | 70,285 | 41404 | 85,444 | 37157 |
| Working Hour (per week) | 34 | 14.4 | 39 | 11.2 |
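The percentages in Table 2 are column percentages, that is, each count divided by the total of its turnover group. As a small worked check in Python (the study itself used R), the sketch below recomputes the Certificate rows for the turnover group from the reported counts; the helper name is hypothetical.

```python
def column_percentages(counts):
    """Convert category counts into percentages of the column total."""
    total = sum(counts.values())
    return {level: round(100 * n / total, 2) for level, n in counts.items()}


# Certificate counts for the turnover group, as reported in Table 2.
certificate_turnover_yes = {"Other": 443, "NP": 2173, "RN": 2112}
certificate_pct = column_percentages(certificate_turnover_yes)
print(certificate_pct)  # {'Other': 9.37, 'NP': 45.96, 'RN': 44.67}
```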

3.3. Machine Learning Analysis Results

In this study, we conducted a comprehensive analysis of supervised machine learning classifiers after implementing the Synthetic Minority Over-sampling Technique (SMOTE) on our dataset. Our primary goal was to evaluate the predictive accuracy and performance of four distinct machine learning algorithms, namely SMOTE-enhanced Logistic Regression (SMOTE_LR), SMOTE-enhanced Random Forest (SMOTE_RF), SMOTE-enhanced Decision Trees (SMOTE_DT), and SMOTE-enhanced XGBoost (SMOTE_XGB), in the context of predicting nurse turnover.

Table 3 displays the outcomes of the logistic regression (LR) model, presenting odds ratios (ORs), 95% confidence intervals (CIs), and p-values assessed at the 5% significance level, which shed light on the influence of each variable on nurse turnover. Notably, we treated NP as the reference category. Individuals falling under the category of Other (comprising NA and NM) have 1.592 times the odds of turnover compared with those in the NP group, assuming all other variables remain constant (CI: 1.42-1.78). Nurses residing in the South and West regions show a decreased likelihood of turnover (OR=1.037, CI: 0.95-1.14). Additionally, nurses who make use of Electronic Health Records (EHR) or Electronic Medical Records (EMR) technology exhibit a reduced likelihood of turnover (OR=0.567, CI: 0.52-0.62).

When considering Employed by Organization as the reference category, other types of employment (such as travel nurses and the self-employed) are associated with a substantial increase in the odds of turnover (OR=2.525, CI: 2.29-2.78). Among different job types, part-time nurses have 1.446 times the odds of turnover compared to their full-time counterparts under constant conditions. Furthermore, nurses working in inpatient or other settings exhibit a moderately increased likelihood of turnover (OR=1.248, CI: 1.15-1.35). Notably, individuals working standard work hours are less likely to experience turnover (OR=0.732, CI: 0.69-0.78). Having fewer opportunities for job practice is associated with an increased likelihood of turnover. Male nurses, single individuals, and veterans are more likely to experience turnover. Concerning race, White individuals are less likely to leave (OR=0.538, CI: 0.50-0.58). A household income of more than $150,001 significantly increases turnover, as indicated by the model (p<0.05). On the other hand, individuals with a BSN (OR=0.726, CI: 0.66-0.80) and MSN (OR=0.730, CI: 0.80) are less likely to leave. Having dependents under 6 years old is linked to a moderately increased likelihood of turnover (OR = 1.357, CI: 1.25-1.47). Lastly, higher age and nurse income decreased nurse turnover.
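For readers less familiar with logistic regression output, an odds ratio and its 95% confidence interval are obtained by exponentiating the fitted coefficient and its Wald interval, OR = exp(β) and CI = exp(β ± 1.96·SE). The Python sketch below illustrates this under stated assumptions: the coefficient is chosen so that the OR equals the reported 1.592, while the standard error is a hypothetical value, not one taken from Table 3.

```python
import math


def odds_ratio_with_ci(beta, se, z=1.96):
    """Return (OR, lower, upper) from a logit coefficient and its standard error."""
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)


beta = math.log(1.592)  # coefficient implied by the reported OR of 1.592
se = 0.058              # hypothetical standard error, for illustration only
odds_ratio, lower, upper = odds_ratio_with_ci(beta, se)
print(f"OR = {odds_ratio:.3f}, 95% CI: {lower:.2f}-{upper:.2f}")
```

An interval that excludes 1 corresponds to an effect that is significant at the 5% level, whereas an interval that contains 1 does not.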

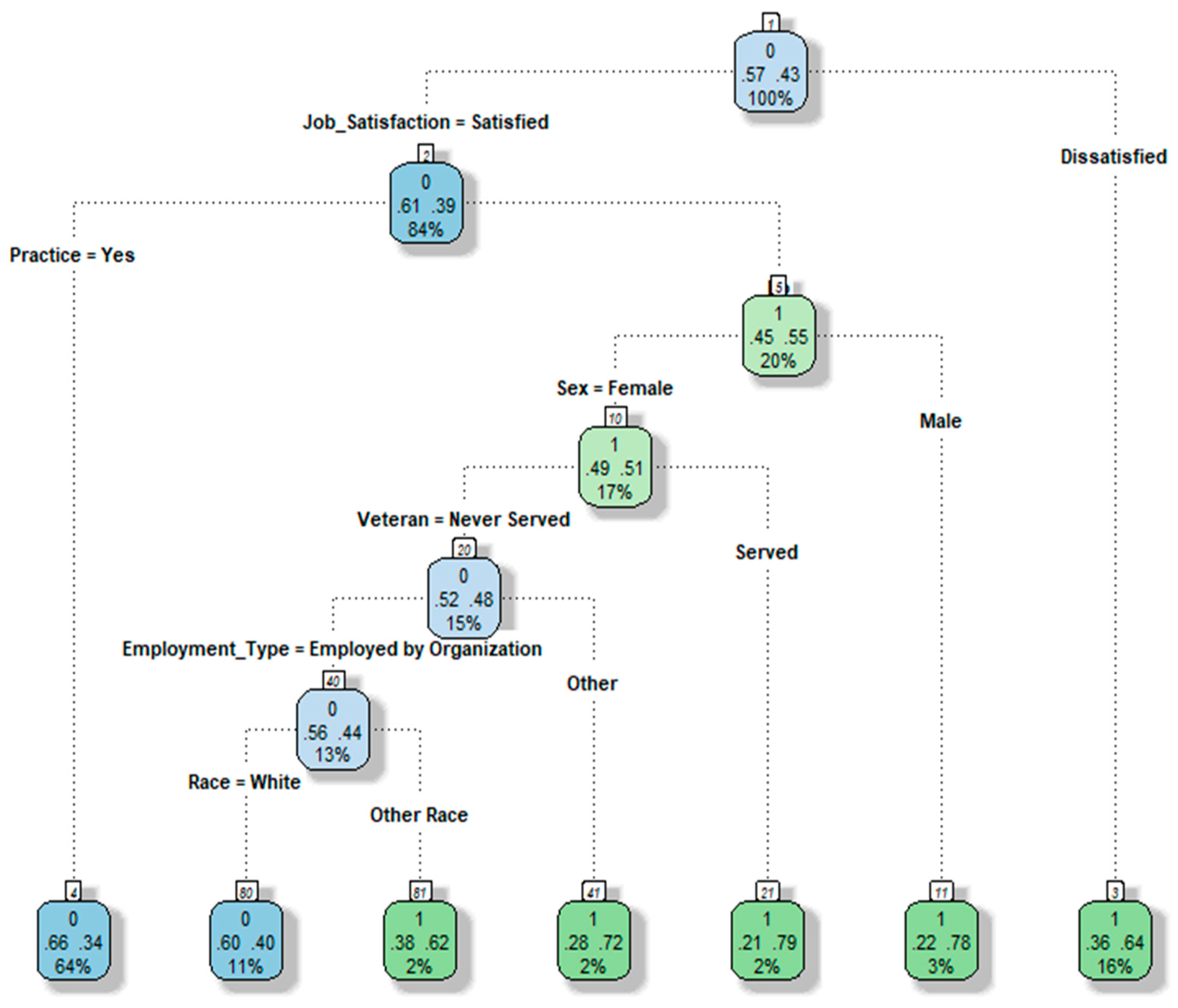

Figure 3 depicts the default Decision Tree analysis results for nurse turnover. At the root node (node 1), we find all the records from the training dataset, comprising 43% “Yes” and 57% “No” outcomes in our target variable (Turnover). The “0” within the top node’s box signifies that the majority class at this node is nurses who did not leave their jobs.

The first split occurs on Job Satisfaction. At node 2, the 84% of nurses who report job satisfaction have a 39% turnover probability. In contrast, nurses who express dissatisfaction with their jobs (16%) move to the terminal node (3) with a 64% probability of turnover.

Nurses who are satisfied with their jobs but cannot practice have a 55% chance of turnover (node 5). Notably, male nurses who were unable to practice in their jobs exhibited a higher turnover probability of 78%. Furthermore, nurses serving in the military, working as travel nurses, or in other roles, along with those of non-white ethnicity, show a notably high probability of turnover. The terminal nodes represent the final Decision Tree for nurse turnover. Among the seven terminal nodes, two are associated with the classification “Did not Turnover,” while four lead to the “Turnover” classification. The Decision Tree analysis identifies the most influential variables for turnover as Job Satisfaction, followed by Job Practice, Gender, Veteran status, Employee Type, and Race.
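The node percentages reported for the first split can be checked against the root node: the root turnover probability is the share-weighted average of the two child probabilities. A minimal Python sketch of this arithmetic follows, using only the figures quoted above; the function name is hypothetical.

```python
def pooled_probability(branches):
    """branches: list of (share_of_cases, turnover_probability) pairs."""
    return sum(share * prob for share, prob in branches)


# Satisfied: 84% of cases, 39% turnover; dissatisfied: 16% of cases, 64% turnover.
first_split = [(0.84, 0.39), (0.16, 0.64)]
root_probability = pooled_probability(first_split)
print(f"{root_probability:.2f}")  # 0.43
```

The result agrees with the 43% “Yes” share reported at the root node.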

3.4. Feature Importance of ML Models

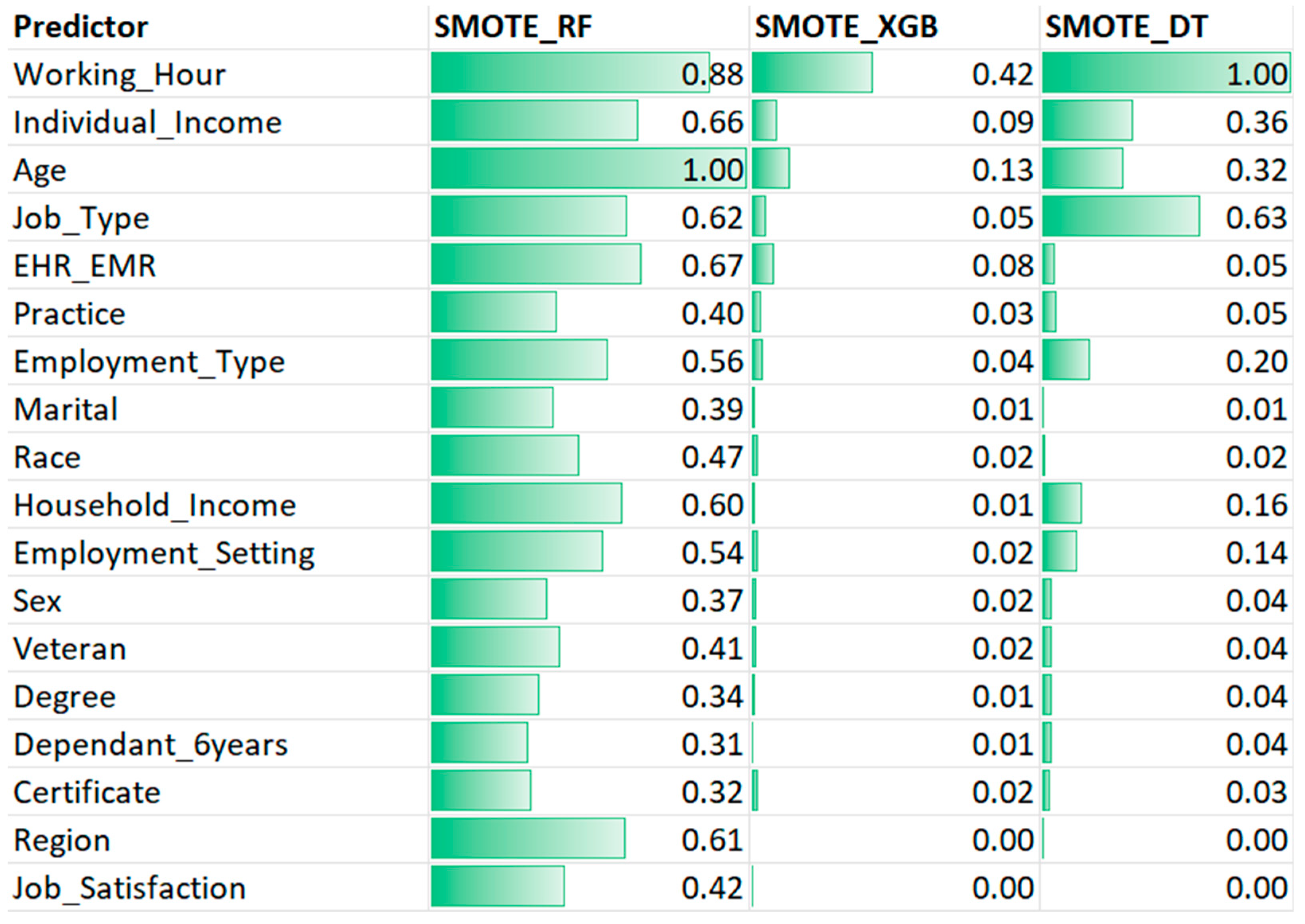

SMOTE_RF, SMOTE_XGB, and SMOTE_DT each provide importance rankings for the variables relevant to predicting turnover, expressed here as the mean decrease in accuracy. Figure 4 displays the mean decrease in accuracy and the ranking of the 18 variables under the three SMOTE-based ML models.

From SMOTE_RF, the top five important variables for predicting turnover were AGE (1), WORKING_HOUR (0.88), EHR_EMR (0.67), INDIVIDUAL_INCOME (0.66), and JOB_TYPE (0.62). In the SMOTE_XGB results, WORKING_HOUR (0.42), AGE (0.13), INDIVIDUAL_INCOME (0.09), EHR_EMR (0.08), and JOB_TYPE (0.05) were identified as important features. SMOTE_DT revealed that WORKING_HOUR (1), JOB_TYPE (0.63), INDIVIDUAL_INCOME (0.36), AGE (0.32), and EMPLOYMENT_TYPE (0.02) were the most important features. Blytt et al. (2022) showed an association between working hours and turnover intention. Nurses with higher working hours tend to seek jobs with preferable working time arrangements. Age was an important factor in turnover intention. Previous research found that new graduate nurses, who are usually young, have a higher turnover than experienced nurses because they tend to quit their jobs to seek career advancement [21].

Conversely, in SMOTE_RF, DEPENDANT_6YEARS (0.31), CERTIFICATE (0.32), DEGREE (0.34), SEX (0.37), and MARITAL (0.39) exhibited the lowest mean decrease scores, indicating that they are the least important variables for predicting nurse turnover. In SMOTE_XGB, JOB_SATISFACTION (0), HOUSEHOLD_INCOME (0.01), MARITAL (0.01), DEGREE (0.01), and DEPENDANT_6YEARS (0.01) had the lowest mean decrease scores, making them the least important variables for prediction. SMOTE_DT identified REGION (0), JOB_SATISFACTION (0), MARITAL (0.01), RACE (0.02), and CERTIFICATE (0.03) as the least important variables. SMOTE_LR was excluded from this analysis because it provides variable importance for the entire set of predictive variables, preventing us from comparing variable rankings and their correlations. However, we compare SMOTE_LR with the other models in terms of performance indices.

In terms of correlation analysis, strong positive correlations were observed between SMOTE_DT and SMOTE_XGB (0.86). Moderate-strong correlations were found between SMOTE_RF and SMOTE_XGB (0.68) and between SMOTE_SGB and SMOTE_RF (0.68). Notably, the top five predictors identified in SMOTE_RF, SMOTE_XGB, and SMOTE_DT were also significant in the SMOTE_LR model (see Table 4).
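One common way to quantify agreement between two importance rankings is the Spearman rank correlation. The text does not state which correlation coefficient was used, so the Python sketch below is an assumption made for illustration. It uses only the five top-ranked scores reported above for SMOTE_RF and SMOTE_XGB, so its result is not expected to reproduce the reported 0.68, which is based on all 18 variables.

```python
def ranks(scores):
    """Rank features from most (1) to least important; assumes no ties."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {name: position for position, name in enumerate(ordered, start=1)}


def spearman(scores_a, scores_b):
    """Spearman rank correlation for two score dicts over the same features."""
    rank_a, rank_b = ranks(scores_a), ranks(scores_b)
    n = len(rank_a)
    d_squared = sum((rank_a[name] - rank_b[name]) ** 2 for name in rank_a)
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Top-five importance scores as reported in the text for two models.
rf = {"AGE": 1.00, "WORKING_HOUR": 0.88, "EHR_EMR": 0.67,
      "INDIVIDUAL_INCOME": 0.66, "JOB_TYPE": 0.62}
xgb = {"WORKING_HOUR": 0.42, "AGE": 0.13, "INDIVIDUAL_INCOME": 0.09,
       "EHR_EMR": 0.08, "JOB_TYPE": 0.05}
rho = spearman(rf, xgb)
print(round(rho, 2))  # 0.8 on this five-feature subset
```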

3.5. ML Model Performance of Nurse Turnover Prediction

This study evaluated the performance of the SMOTE-based machine learning models using a confusion matrix. Table 5 presents a summary of the classification model indices, including True Positives (TP), True Negatives (TN), False Positives (FP), and False Negatives (FN). The validation dataset consisted of 20% of the total data, with a sample size of 5,295 individuals. In terms of True Positives (TP), SMOTE_RF demonstrated the highest TP rate at 51.3%, correctly predicting the departure of 2,714 out of 5,295 individual nurses from their primary jobs. SMOTE_XGB followed closely with a TP rate of 51.0%, accurately predicting 2,701 departures. Specifically, SMOTE_RF correctly identified 2,623 instances of nurses leaving their primary jobs, indicating that 51.3% of the cases predicted as job departures corresponded to actual departures.

On the other hand, examining the False Negatives (FN), SMOTE_RF exhibited the lowest FN rate at 5.8%, predicting 312 out of 5,295 cases as non-departures when the nurses did in fact leave their primary jobs. In other words, SMOTE_RF incorrectly classified these instances as negative when they should have been positive, so the model failed to identify only 312 cases that were part of the positive class. Conversely, SMOTE_LR had the highest False Positive (FP) rate at 19.6%, incorrectly predicting departure for 1,039 out of 5,295 nurses who did not leave their primary jobs. The model, however, missed 2,450 instances that were actually part of the positive class. In terms of the proportion of correct predictions (TP+TN) in the confusion matrix, SMOTE_RF accurately classified 83.6% of the cases, SMOTE_XGB achieved 82.7% accuracy, while SMOTE_DT and SMOTE_LR achieved 78.3% and 69.5% accuracy, respectively.

Table 6 provides an evaluation of the machine learning methods used in this study, using a set of commonly employed metrics for assessing machine learning algorithms. We computed the classification metrics Accuracy, Recall (Sensitivity), Precision, and F1-Score to compare the performance of our models. Accuracy is the number of correct classifications as a percentage of all classifications made by the model. Precision is the proportion of cases predicted as positive that are actually positive, while recall is the proportion of actual positive cases that the model correctly identifies. The F1-Score combines precision and recall using their harmonic mean. When considering accuracy, SMOTE_RF and SMOTE_XGB emerge as the optimal models, achieving similar Accuracy scores of 83.93% and 83.59%, respectively. Conversely, SMOTE_LR (75.90%) and SMOTE_DT (78.30%) exhibited the lowest predictive accuracy. Examining precision, SMOTE_XGB stands out as the best-performing model with a precision score of 89.25%. However, when evaluating the F1-Score, SMOTE_RF emerges as the optimal model at 94.02%, particularly when we consider false negatives (FN) and false positives (FP) to be of greater concern.
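The four metrics follow directly from the confusion-matrix cells. The Python sketch below shows the standard formulas; the counts are hypothetical and chosen only to keep the arithmetic easy to follow, and they are not values from Table 5 or Table 6.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)   # share of predicted positives that are correct
    recall = tp / (tp + fn)      # share of actual positives that are found
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts for illustration only.
metrics = classification_metrics(tp=50, fp=10, fn=5, tn=35)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```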

On the other hand, considering the area under the curve (AUC), the model with the highest AUC score is SMOTE_RF, with an AUC of 82.67%. It’s worth noting that AUC is not influenced by the threshold used in the ML classification or the distribution of the dataset. Thus, it provides a comprehensive measure of the classification power of the ML model. Consequently, SMOTE_RF is the preferred choice as the optimal model for predicting nurse turnover. It’s interesting to note that our results are similar to the findings of Kim et al. (2023). In their study, RF was identified as the best predictive model.
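The threshold independence of AUC can be seen from its rank-based definition: AUC equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The Python sketch below computes it this way on hypothetical labels and scores; no classification threshold appears anywhere in the calculation.

```python
def auc_from_scores(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(positives) * len(negatives))


# Hypothetical labels (1 = turnover) and predicted probabilities.
labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1]
auc = auc_from_scores(labels, scores)

# Any order-preserving rescaling of the scores leaves the AUC unchanged.
auc_rescaled = auc_from_scores(labels, [s ** 2 for s in scores])
print(round(auc, 4), round(auc_rescaled, 4))
```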

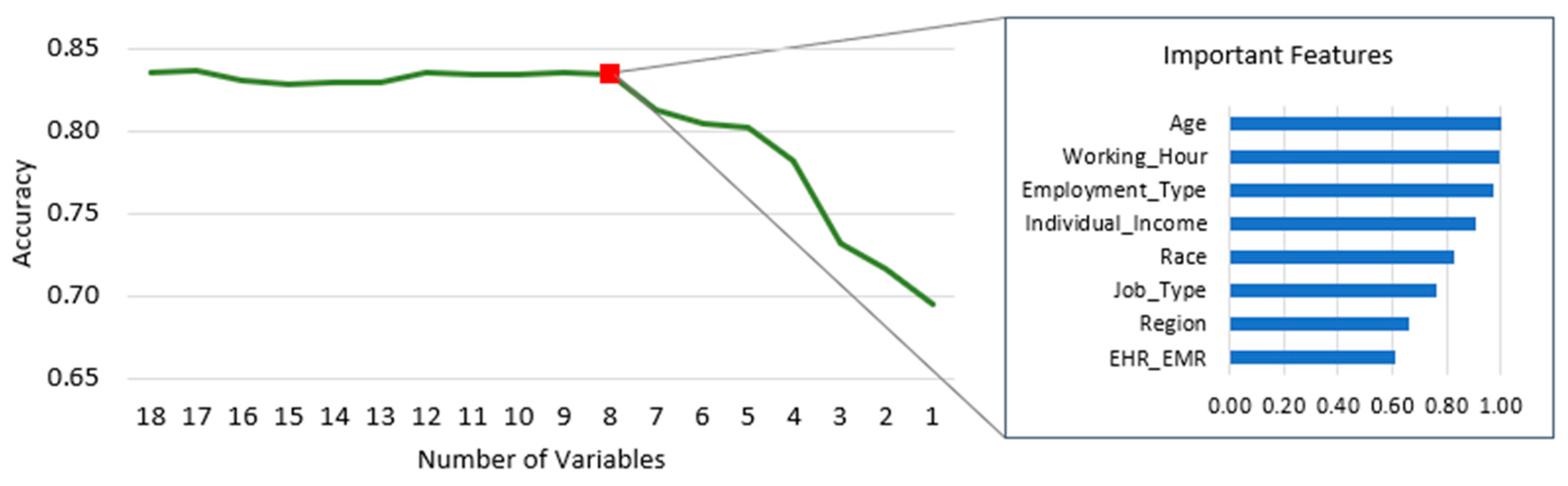

3.6. Optimized Random Forest Analysis Result

In this section, we employed an optimized RF analysis to determine the optimal number of features based on their importance. We utilized 18 independent variables and one dependent variable for our model. The process involved running the model 18 times and progressively eliminating lower-scoring features. Our analysis revealed that the accuracy began to decline when only the top eight features in Figure 5 were retained. Consequently, we selected these eight features as the key predictors for the nurse turnover prediction problem. Age, Working Hours, Employment Type, Individual Income, Race, Job Type, Region, and EHR_EMR were the most important features in the recursive RF analysis. This dimensionality reduction enhances interpretability, especially for handling unbalanced characteristics, as demonstrated by [29]. Reducing the dataset’s dimensionality serves a valuable purpose: it equips the human resources department with a more accurate tool for predicting nurse turnover. Rather than concentrating on many predictive variables, the human resources department can achieve more effective interventions in reducing the turnover rate by focusing on a smaller set of variables. Thus, the experimental findings offer valuable insights into reducing nurse turnover intention. In Table 7, we can see that SMOTE_RF again performs better on Accuracy, Recall, Precision, F1-score, and AUC than SMOTE_DT, SMOTE_XGB, and SMOTE_LR, which implies better predictive ability.
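The elimination procedure described in this section can be sketched as a simple backward-elimination loop. The Python code below is an illustrative outline under stated assumptions, not the R code used in the study: `evaluate` stands in for fitting a model and returning its accuracy and feature importances, and the toy weights, feature names, and tolerance are hypothetical.

```python
def backward_elimination(features, evaluate):
    """Repeatedly drop the least important feature and record accuracy.

    `evaluate(features)` is a caller-supplied function (hypothetical here)
    returning (accuracy, {feature: importance}) for a model fit on `features`.
    """
    remaining = list(features)
    history = []
    while remaining:
        accuracy, importance = evaluate(remaining)
        history.append((len(remaining), accuracy, list(remaining)))
        weakest = min(remaining, key=lambda f: importance[f])
        remaining.remove(weakest)
    return history


def smallest_adequate_subset(history, tolerance=0.005):
    """Smallest feature set whose accuracy is within `tolerance` of the best."""
    best = max(accuracy for _, accuracy, _ in history)
    adequate = [step for step in history if step[1] >= best - tolerance]
    return min(adequate, key=lambda step: step[0])


# Toy stand-in for model fitting: accuracy grows with the weights retained.
toy_weights = {"A": 0.20, "B": 0.10, "C": 0.05, "D": 0.0, "E": 0.0}


def toy_evaluate(features):
    accuracy = 0.5 + sum(toy_weights[f] for f in features)
    return accuracy, {f: toy_weights[f] for f in features}


history = backward_elimination(list(toy_weights), toy_evaluate)
size, accuracy, selected = smallest_adequate_subset(history)
print(size, selected)
```

In this toy setting, accuracy stays flat while uninformative features are removed and drops once an informative one is eliminated, which mirrors the pattern used above to settle on eight features.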

4. Conclusion and Future Work

The utilization of machine learning algorithms for processing raw employee turnover data represents a promising avenue for enhancing the capacity of human resource teams to address nurse turnover effectively. Through a comprehensive analysis of the key contributing factors to nurse turnover, it is possible to implement proactive measures aimed at its mitigation, facilitated by integrating machine learning algorithms.

The present study introduces an effective and efficient machine learning approach designed to predict nurse turnover utilizing the 2018 National Sample Survey of Registered Nurses (NSSRN) dataset. The machine learning techniques proposed encompass Logistic Regression (LR), Random Forest (RF), Decision Trees (DT), and Extreme Gradient Boosting (XGB). To address the class imbalance present in the NSSRN dataset, we apply the Synthetic Minority Over-sampling Technique (SMOTE). To our knowledge, none of the previous studies treated the data imbalance problem of the NSSRN dataset when performing predictive analysis of nurse turnover. Our study demonstrates that addressing the issue of imbalanced datasets through SMOTE effectively mitigates dataset imbalance in human resources data, offering predictive insights that can empower healthcare managers and supervisors to take informed actions regarding factors influencing turnover intentions, thereby formulating intervention policies to retain their workforce.

SMOTE_RF produced variable importance scores, which quantify the relative contribution of the different predictive factors. In this variable importance analysis, Age, Working hours, EHR/EMR usability, individual income, and household income were among the top five priorities in predicting turnover. We also used the SMOTE_DT and SMOTE_XGB approaches to compute variable importance scores, and a high correlation was observed among the different models. Lastly, we used the SMOTE_LR approach to predict turnover for comparison with our other proposed models. The five predictive factors found in SMOTE_RF were also significant in the SMOTE_LR model. In summary, factors that reduce the likelihood of turnover include being in the NP category, residing in the South and West regions, using Electronic Health Records (EHR) or Electronic Medical Records (EMR) technology, working standard hours, having high job satisfaction, ample job practice, being of white ethnicity, holding a BSN or MSN degree, and being young with a lower individual income.

This study’s results may interest healthcare managers or supervisors involved in staff management planning who wish to minimize the nurse turnover rate. The key considerations for practitioners include factors such as age, working hours, technology usability (EHR or EMR adoption), full-time versus part-time employment, geographic region, and job satisfaction. The literature consistently identifies these variables as influencers of turnover intentions. For instance, prior research by Cho et al. [21] noted a negative correlation between turnover intention and job dissatisfaction, while Blytt et al. [6] observed similar findings regarding overtime.

Our study found that the age variable emerged as the most significant factor in our SMOTE_RF analysis, with a notably high turnover probability observed among younger nurses. This observation is in alignment with the findings of several previous studies [6], [8], [21], [30], all of which have highlighted age as a major determinant influencing nurse turnover. The inclination for younger nurses to exhibit higher turnover rates can be attributed to various factors. New graduate nurses and those in the early stages of their careers often depart their current positions in pursuit of better career prospects or improved employment benefits, such as higher income or more favorable job conditions [38]. Understanding that age plays a pivotal role in nurse turnover allows us to consider it a potentially controllable factor within the healthcare sector. Proactive measures should be implemented by supervisors and managers to address this issue and mitigate the turnover intention among younger nurses. These measures may include offering comprehensive job training, providing ample opportunities for on-the-job practice, and carefully assigning patients to new nurses who require additional time to acclimate to their new work environment. By taking such actions, healthcare institutions can better retain their younger nursing staff and ensure the continued delivery of high-quality patient care. This proactive approach acknowledges the significance of the age variable in nurse turnover and leverages it as a strategic point of intervention.

The second most crucial variable in our study is the “Working Hours,” specifically the impact of overtime on nurse turnover. Our findings underscore the substantial influence of overtime on the turnover rates among nurses, emphasizing the importance of addressing this issue. This insight can serve as compelling evidence to inform the development of optimal work scheduling practices and guidelines for nurse work scheduling aimed at minimizing nurse turnover, as advocated by Bae [8]. Overtime hours must be closely regulated to prevent nurse burnout, ensuring they can maintain their well-being and consistently deliver high-quality patient care. A key aspect of this regulation is continuously monitoring work hours and overtime. This monitoring should be a fundamental part of maintaining the quality of work within healthcare institutions [31]. It is particularly crucial during shift changes when uncertainties in hospital operations can result in unexpected overtime. Robust policies need to be established during shift changes to address this challenge effectively, and supervisors or managers should actively advocate for implementing such policy changes. These measures are vital in maintaining a healthy work-life balance for nurses and ultimately contribute to reducing turnover rates, thereby enhancing the overall quality of healthcare services.

Our findings also underscore the strong association between nurses’ use of EHR or EMR technology and turnover intentions [35]. In the United States, gray literature has reported higher job satisfaction among nurses using EHR systems. Nevertheless, issues such as poor EHR usability, the lack of standards, limited functionality, and the need for workarounds can detrimentally impact nurse productivity, patient care, and outcomes, as reported by Bjarnadottir et al. [36]. Adequate information and support are crucial to minimize potential harm caused by suboptimal EHR systems, as such improvements can enhance patient-nurse interactions and job performance, reduce medical errors, and alleviate nurse burnout and stress. Continuous support, financial incentives, and adherence to best practices should be integral components of the strategy to ensure the successful implementation of EHR or EMR systems in healthcare settings.

Finally, the nature of a nurse’s employment, whether full-time or part-time, significantly influences nurse turnover rates. Part-time nurses tend to exhibit a higher likelihood of turnover. This phenomenon can be explained by the practice of assigning part-time nurses to fill in for their full-time counterparts. Consequently, part-time nurses may find themselves less familiar with the routines, daily operations, and processes of the hospital wards or units, leading to apprehension about their work in the hospital setting. To address this issue and mitigate the fear of work among part-time nurses, implementing a buddy system could be an effective strategy [34]. This system would pair part-time nurses with more experienced counterparts, providing them with the necessary support and guidance. Such a support system can go a long way in helping part-time nurses acclimate to their work environment and foster a sense of confidence and belonging within the hospital. Regardless of working environment, factors such as salary, region, and job satisfaction can also be considered when seeking to reduce nurse turnover.

Our machine learning analysis has underscored the enhanced predictive power of SMOTE_RF when the number of variables is streamlined. This finding highlights the importance of prioritizing essential features and avoiding unnecessary information when addressing nurse turnover through interventions led by human resource teams, supervisors, or managers. Notably, SMOTE_RF consistently outperformed alternative methods across all performance metrics considered in this study.

While our study yielded favorable results, there are still several limitations. The analysis primarily focused on the working environment and individual characteristics, largely due to constraints imposed by the NSSRN dataset, which offered limited survey data results. Factors like leadership style, communication with management, individual health status, and collaboration with colleagues, which could significantly impact nurse turnover, were not incorporated into the model [3], [11]. Future research should include these additional variables to ensure a more comprehensive analysis. Furthermore, researchers should explore alternative class imbalance methods beyond those employed in our study, as some of these approaches may offer more advanced and effective ways to examine nurse turnover. Researchers must also apply more sophisticated sampling techniques to address imbalances in predictive variables, a limitation present in our current study. By addressing these limitations and adopting more comprehensive methodologies, we can further enhance our understanding of nurse turnover dynamics and contribute to developing more effective intervention strategies.