Submitted: 03 November 2023

Posted: 06 November 2023


Abstract

Keywords:

1. Introduction

1.1. Related Work

1.2. Outline

2. Background

2.1. Mathematical model

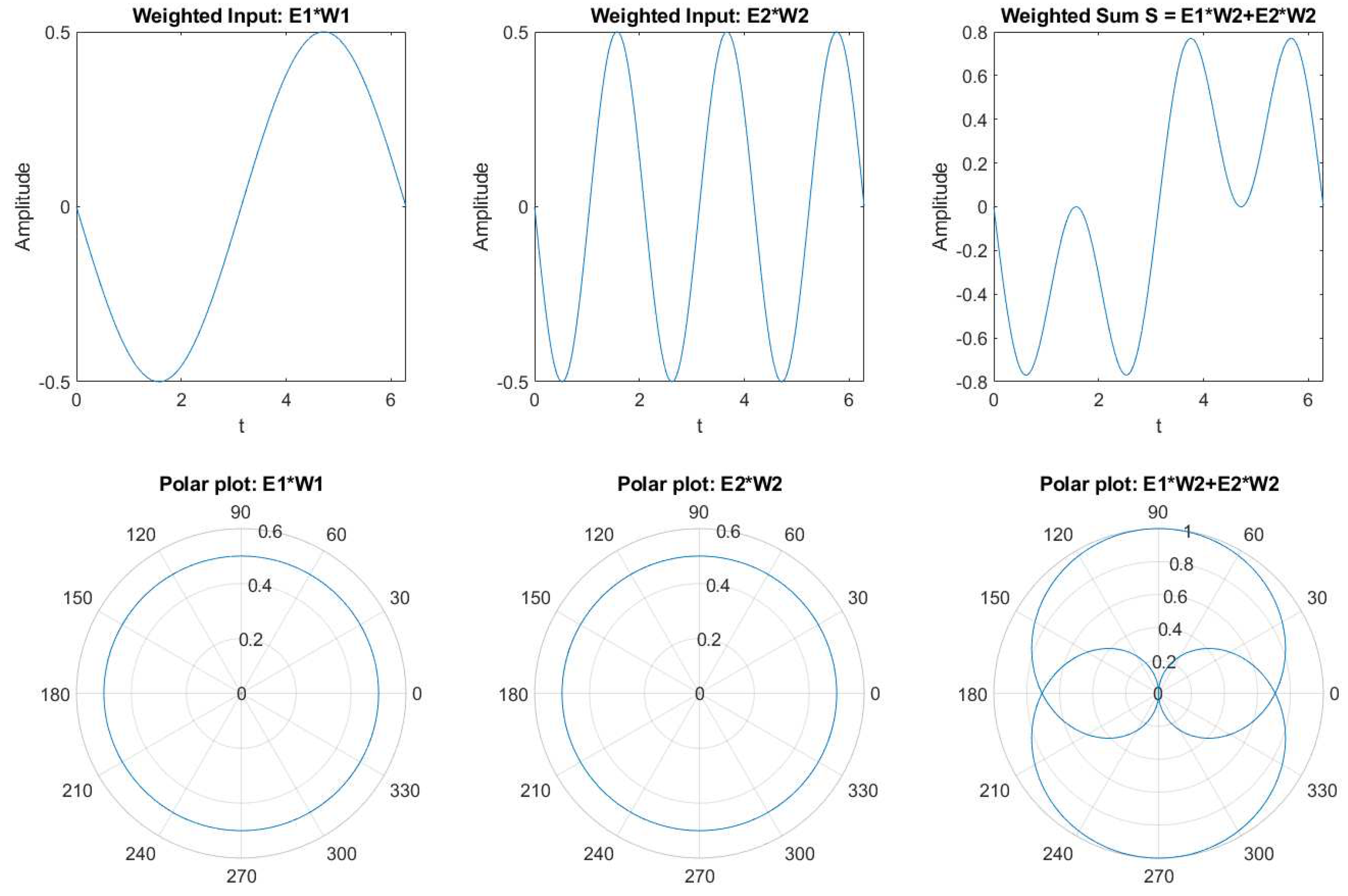

2.2. MATLAB Simulation Report

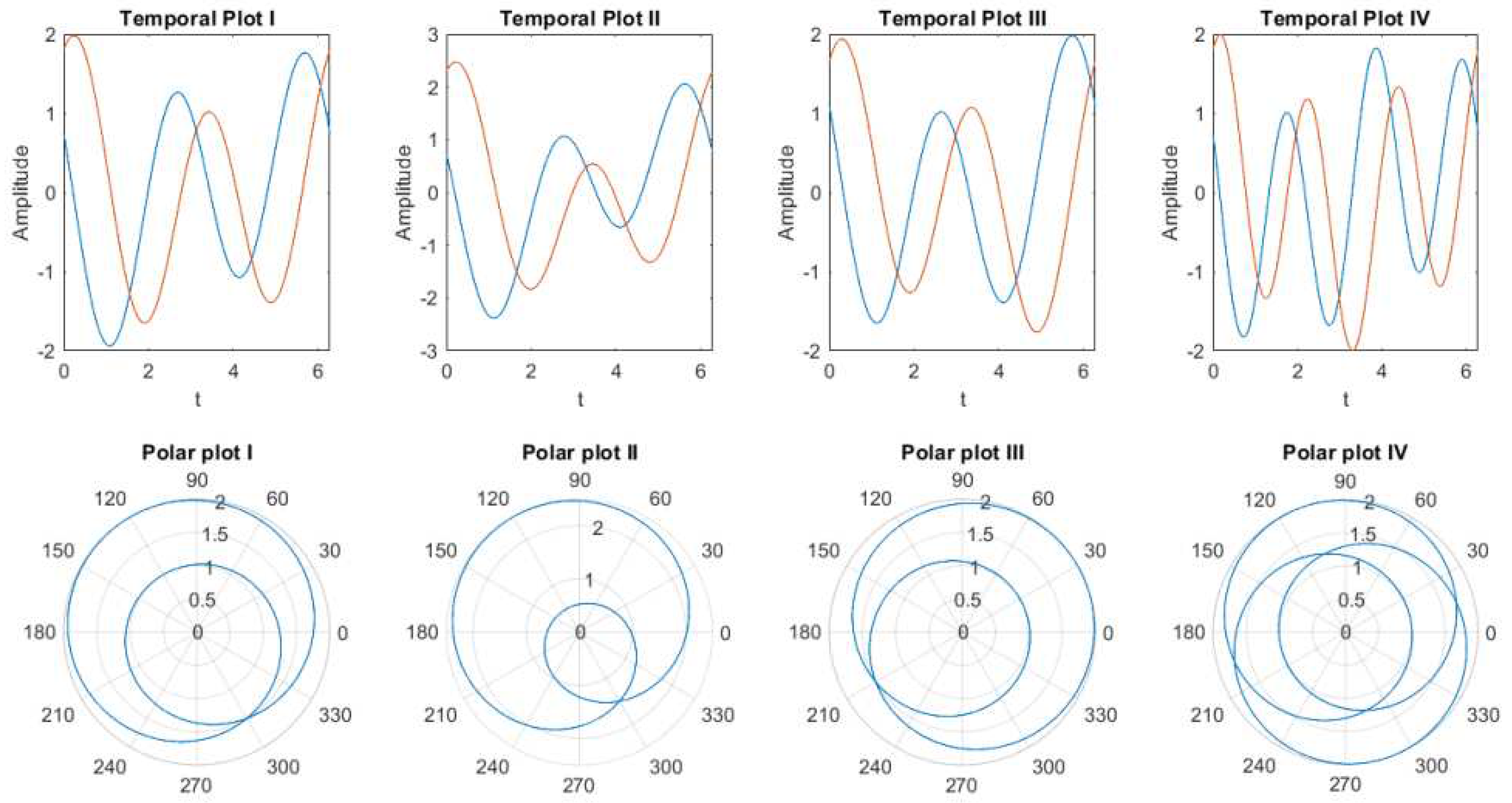

- Example 1 in Figure 2 (temporal plot I and polar plot I) shows the output of the weighted sum of two neurons where are used.

- In Example 2 (temporal plot II and polar plot II), all parameters of the weighted input of the two neurons remain the same except is taken. This causes a change in amplitude.

- In Example 3, is changed to . This changes the phase and orientation of the weighted sum.

- In Example 4, is set to 3; this dramatically changes the pattern of the weighted sum, as shown in polar plot IV.
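The qualitative behaviour in the examples above can be explored with a small numerical sketch. It assumes (this is not confirmed by the text) that each neuron emits a complex exponential $A e^{i(\omega t + \varphi)}$ and that the weighted sum is $\sum_k w_k A_k e^{i(\omega_k t + \varphi_k)}$; the names `neuron_output`, `weighted_sum`, and `trace` are hypothetical. The real part of each sample corresponds to a temporal plot and the (magnitude, angle) pair to a polar plot. This is an illustration, not the authors' MATLAB code.

```python
import cmath
import math

def neuron_output(amplitude, omega, phase, t):
    """Hypothetical complex exponential neuron: A * exp(i * (omega * t + phase))."""
    return amplitude * cmath.exp(1j * (omega * t + phase))

def weighted_sum(neurons, weights, t):
    """Weighted sum at time t; each neuron is an (amplitude, omega, phase) tuple."""
    return sum(w * neuron_output(a, om, ph, t)
               for w, (a, om, ph) in zip(weights, neurons))

def trace(neurons, weights, t_end=2 * math.pi, steps=200):
    """Sample the weighted sum over [0, t_end).

    Returns (t, real part, magnitude, angle) tuples: the real part feeds a
    temporal plot, magnitude and angle feed a polar plot.
    """
    samples = []
    for k in range(steps):
        t = t_end * k / steps
        y = weighted_sum(neurons, weights, t)
        samples.append((t, y.real, abs(y), cmath.phase(y)))
    return samples

# Two identical neurons, then one neuron with a different angular frequency.
base = trace([(1.0, 1.0, 0.0), (1.0, 1.0, 0.0)], [0.5, 0.5])
mixed = trace([(1.0, 1.0, 0.0), (1.0, 3.0, 0.0)], [0.5, 0.5])
```

Under these assumptions, scaling both amplitudes scales the magnitude, equal phase offsets only rotate the trace, and giving one neuron a different `omega` (for instance 3) turns the circular polar trace into a non-circular pattern, which is one way a change like the one in Example 4 could arise.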

3. Methodology

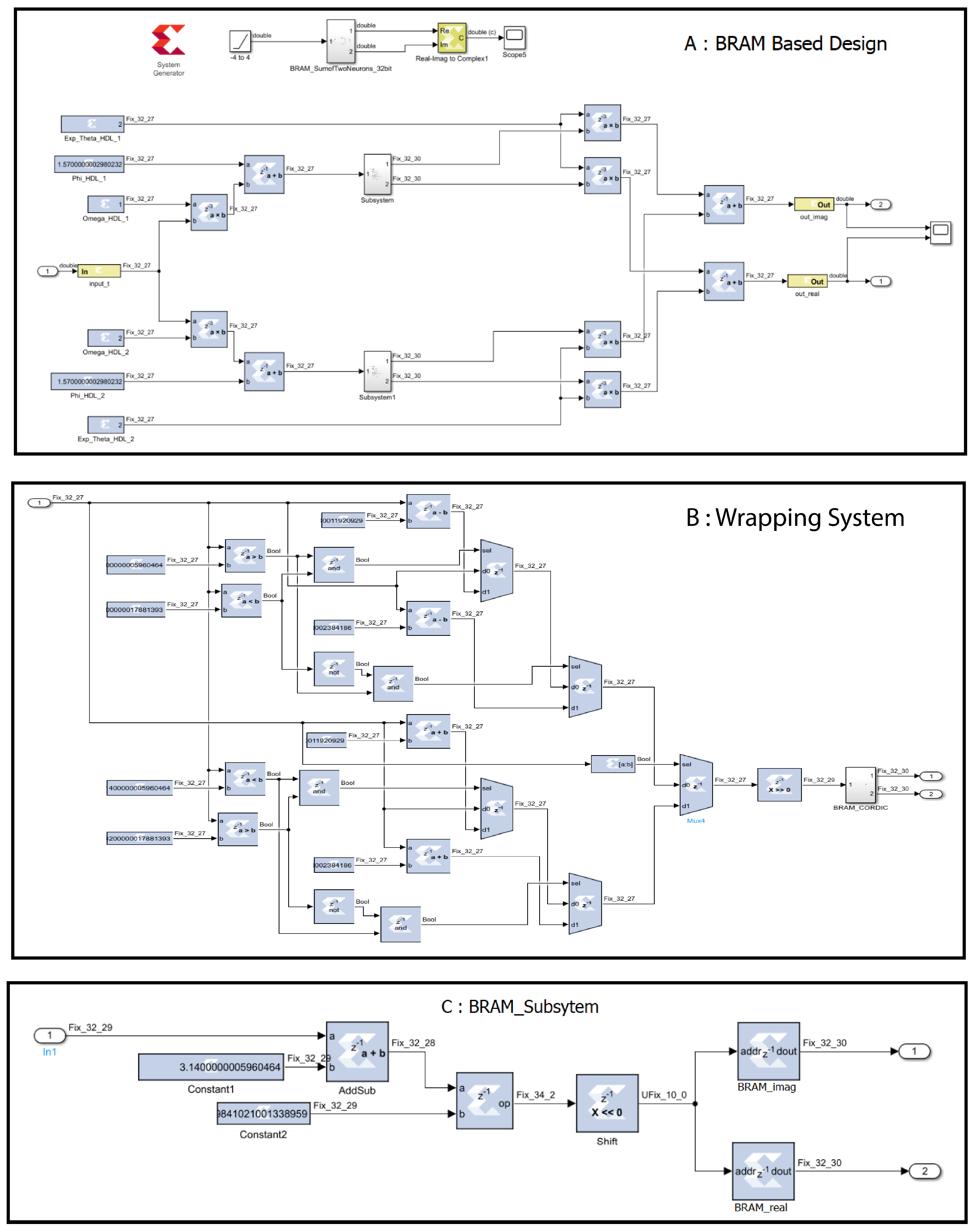

3.1. Model design in Simulink and Vivado

3.1.1. BRAM-based design (using SysGen in MATLAB Simulink)

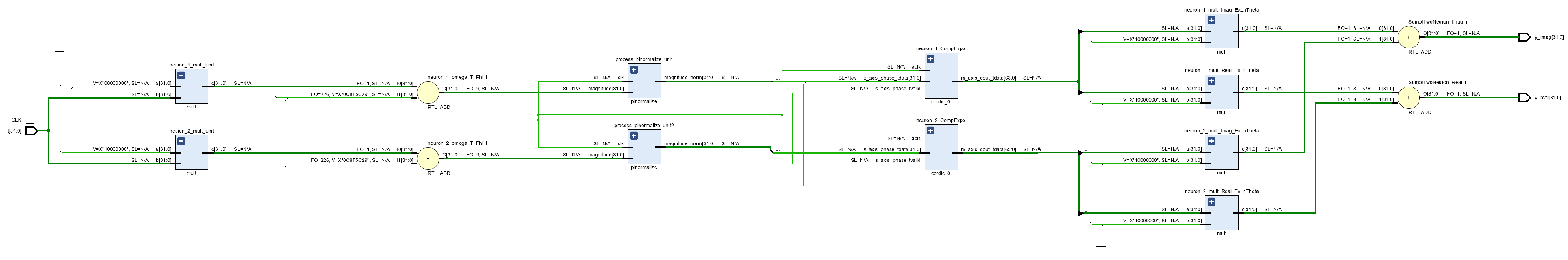
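A BRAM-based design trades arithmetic for memory: values are precomputed, stored in block RAM, and fetched by address at run time. As a minimal sketch of that idea, assuming the stored contents are cosine and sine samples of $e^{i\theta}$ over one period (the actual table contents, depth, and word width used in the paper are assumptions here), the following builds such a table and reads the nearest entry. `build_exp_lut`, `lut_exp`, `addr_bits`, and `data_bits` are hypothetical names.

```python
import math

def build_exp_lut(addr_bits, data_bits):
    """Hypothetical ROM contents: (cos, sin) of 2**addr_bits evenly spaced phases,
    each quantized to a signed integer code with data_bits bits (one sign bit)."""
    size = 1 << addr_bits
    scale = (1 << (data_bits - 1)) - 1  # largest positive code, e.g. 127 for 8 bits
    table = []
    for k in range(size):
        theta = 2 * math.pi * k / size
        table.append((round(math.cos(theta) * scale), round(math.sin(theta) * scale)))
    return table

def lut_exp(table, data_bits, theta):
    """Approximate exp(i*theta) with a single table read at the nearest phase address."""
    size = len(table)
    addr = round(theta / (2 * math.pi) * size) % size  # phase wraps modulo 2*pi
    scale = (1 << (data_bits - 1)) - 1
    c, s = table[addr]
    return complex(c / scale, s / scale)

# Hypothetical configuration: 1024 entries of 16-bit cos/sin words.
rom = build_exp_lut(10, 16)
z = lut_exp(rom, 16, math.pi / 3)
```

In this scheme the address width sets the phase resolution and the data width sets the amplitude resolution; on an FPGA, table depth times word width determines how many BRAM blocks are consumed.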

3.1.2. BRAM-based design in Vivado

3.1.3. CORDIC-based design (using SysGen in MATLAB Simulink)

3.1.4. CORDIC-based design in Vivado
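CORDIC computes the cosine and sine of an angle using only shifts, additions, and a small table of arctangent constants, which is why it maps well onto FPGA logic. The sketch below is a textbook rotation-mode CORDIC written in floating-point Python for readability, assuming the CORDIC-based designs evaluate $e^{i\theta} = \cos\theta + i\sin\theta$ this way. A hardware version (for example, one built on the Xilinx CORDIC IP) would use fixed-point registers, and each multiplication by $2^{-i}$ would become an arithmetic shift. `cordic_cos_sin` and `iterations` are hypothetical names.

```python
import math

def cordic_cos_sin(theta, iterations=16):
    """Rotation-mode CORDIC: returns an approximation of (cos(theta), sin(theta))."""
    # Elementary rotation angles atan(2^-i) and the aggregate gain correction K.
    angles = [math.atan(2.0 ** -i) for i in range(iterations)]
    gain = 1.0
    for i in range(iterations):
        gain /= math.sqrt(1.0 + 2.0 ** (-2 * i))

    # Range reduction: CORDIC converges only for |z| up to about 1.74 rad,
    # so fold theta into [-pi/2, pi/2] and remember a sign flip
    # (rotating by pi negates both cos and sin).
    z = math.remainder(theta, 2.0 * math.pi)  # now in [-pi, pi]
    sign = 1.0
    if z > math.pi / 2:
        z -= math.pi
        sign = -1.0
    elif z < -math.pi / 2:
        z += math.pi
        sign = -1.0

    # Start from (K, 0) so that the final vector has unit length.
    x, y = gain, 0.0
    for i in range(iterations):
        d = 1.0 if z >= 0.0 else -1.0  # rotate toward residual angle z = 0
        shift = 2.0 ** -i              # an arithmetic right shift in hardware
        x, y = x - d * y * shift, y + d * x * shift
        z -= d * angles[i]
    return sign * x, sign * y
```

Each additional iteration adds roughly one bit of angular precision, so the iteration count is a trade-off between latency or area and accuracy.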

3.1.5. The fixed-point implementation
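The 8-, 16-, and 32-bit results depend on how real values are mapped to fixed-point words. A minimal sketch, assuming signed two's-complement words, round-to-nearest, and saturation on overflow, is shown below; the integer/fractional split used by the authors is an assumption left to the parameter `frac_bits`, and `to_fixed`, `from_fixed`, and `quantize` are hypothetical helpers.

```python
def to_fixed(x, total_bits, frac_bits):
    """Map a real value to a signed two's-complement integer code.

    Uses Python's round() (round-half-to-even) and saturates to the
    representable range instead of wrapping on overflow.
    """
    lo = -(1 << (total_bits - 1))      # most negative code, e.g. -128 for 8 bits
    hi = (1 << (total_bits - 1)) - 1   # most positive code, e.g. 127 for 8 bits
    code = round(x * (1 << frac_bits))
    return max(lo, min(hi, code))

def from_fixed(code, frac_bits):
    """Interpret an integer code as a real value with frac_bits fractional bits."""
    return code / (1 << frac_bits)

def quantize(x, total_bits, frac_bits):
    """Round-trip a value through the fixed-point format."""
    return from_fixed(to_fixed(x, total_bits, frac_bits), frac_bits)

# Hypothetical example: the same value at 8, 16, and 32 bits,
# with 2 integer bits (including the sign bit) in each format.
for bits in (8, 16, 32):
    print(bits, quantize(0.3, bits, bits - 2))
```

With `frac_bits = total_bits - 1`, the value 1.0 is not representable and saturates (to 127/128 for 8 bits), which is one reason a format with an integer bit may be preferred for unit-magnitude signals such as cosine and sine.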

4. Results and Discussion

4.1. Simulation Report

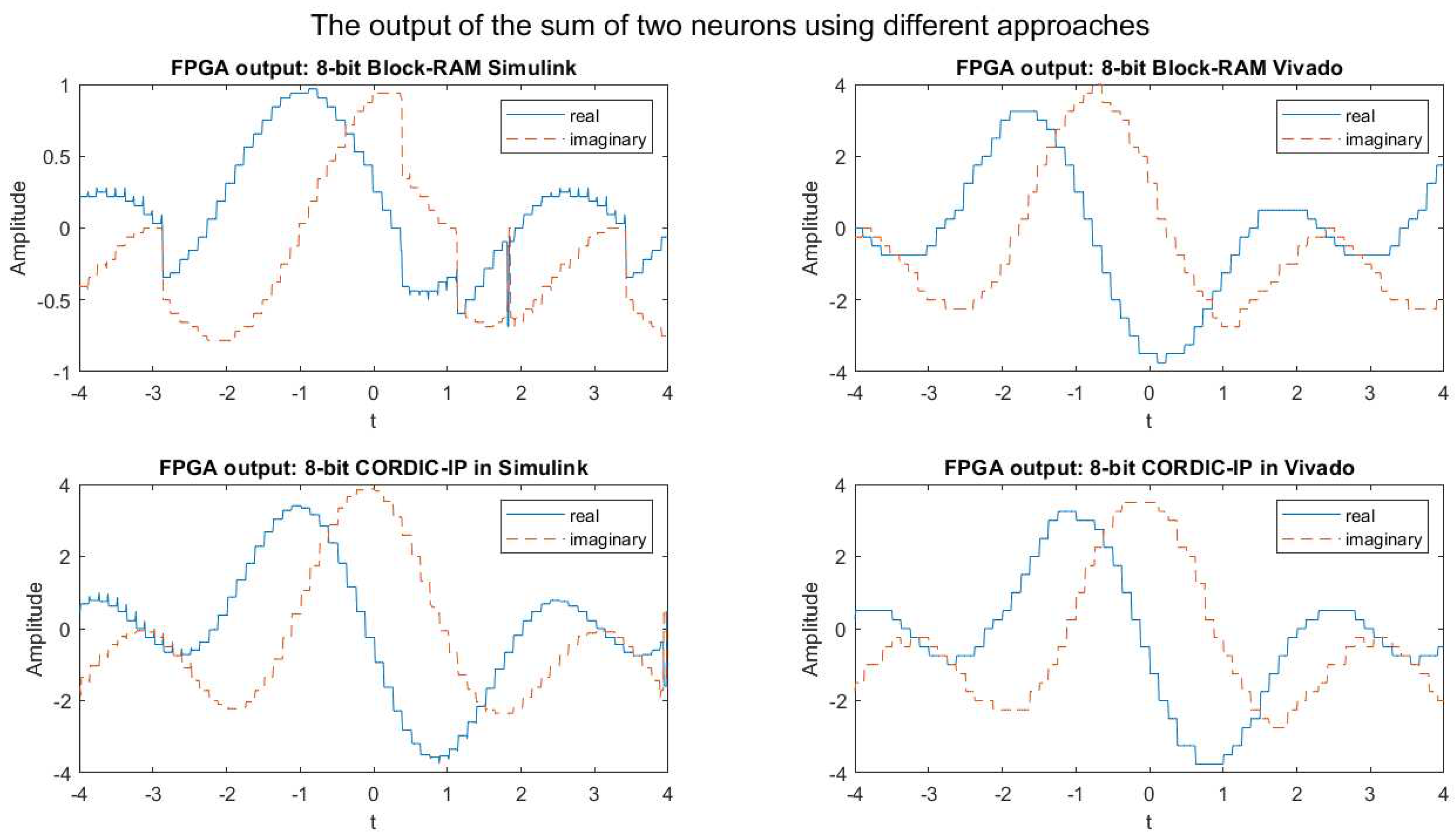

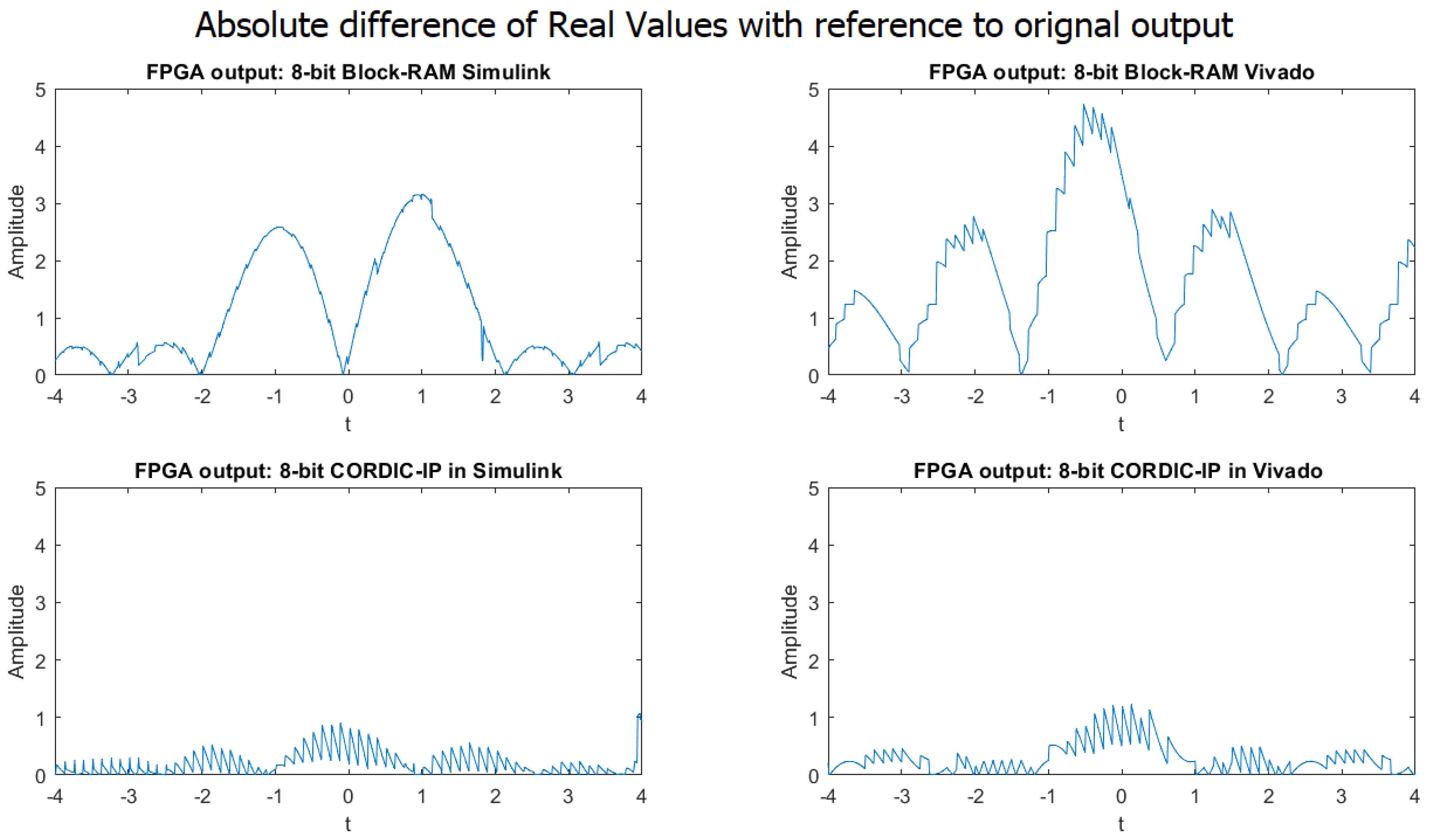

4.1.1. 8-bit implementation result

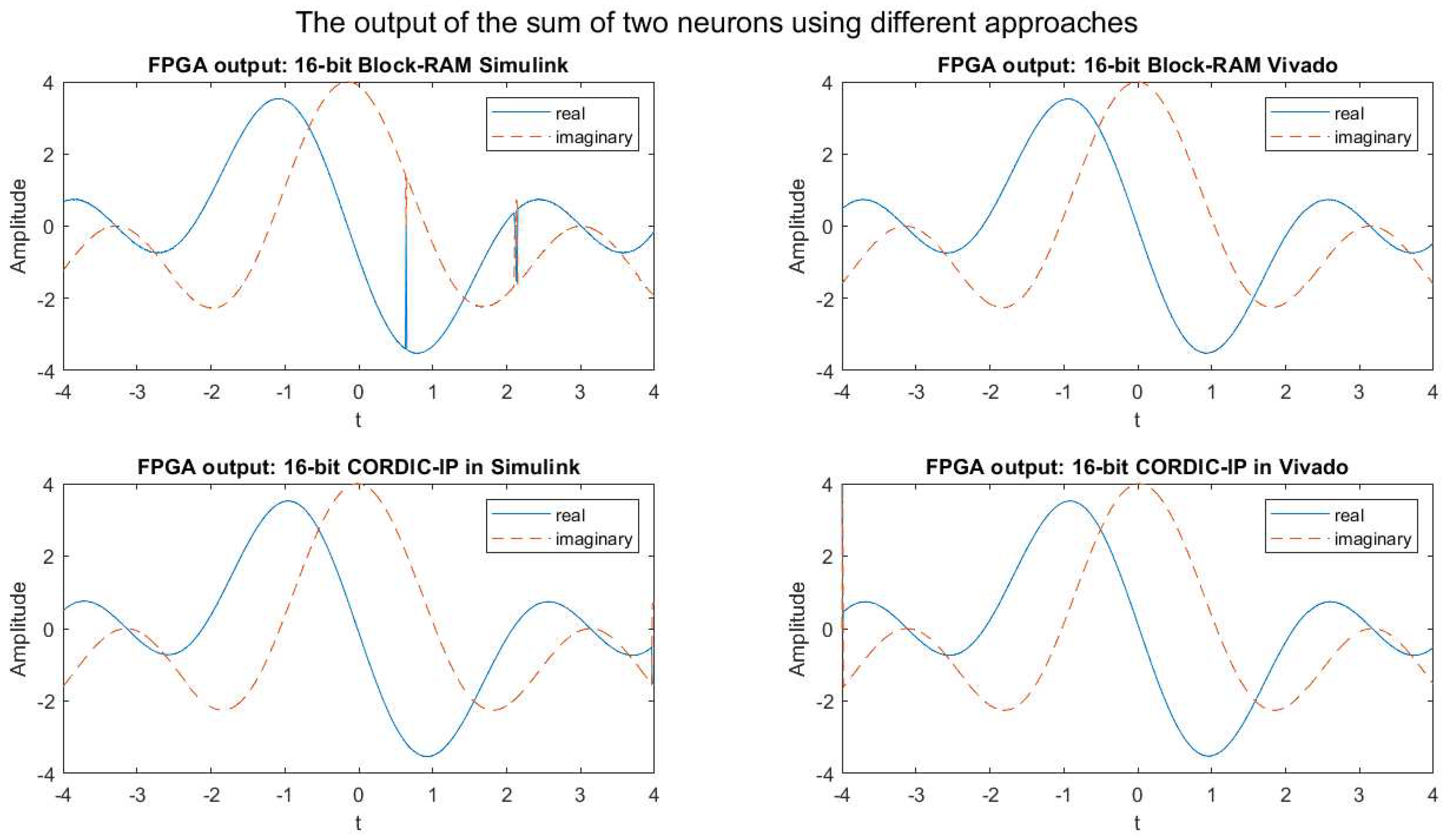

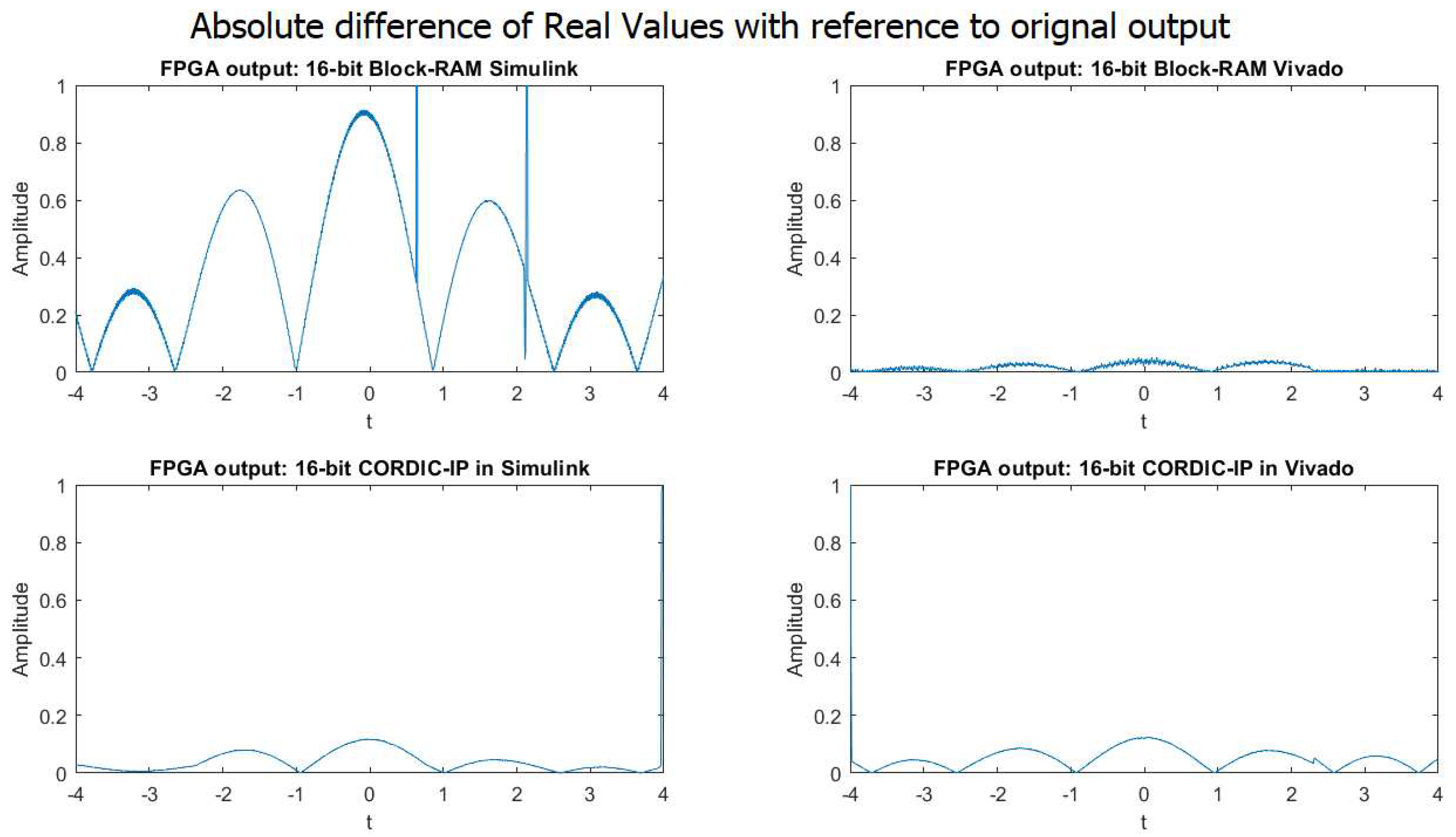

4.1.2. 16-bit implementation result

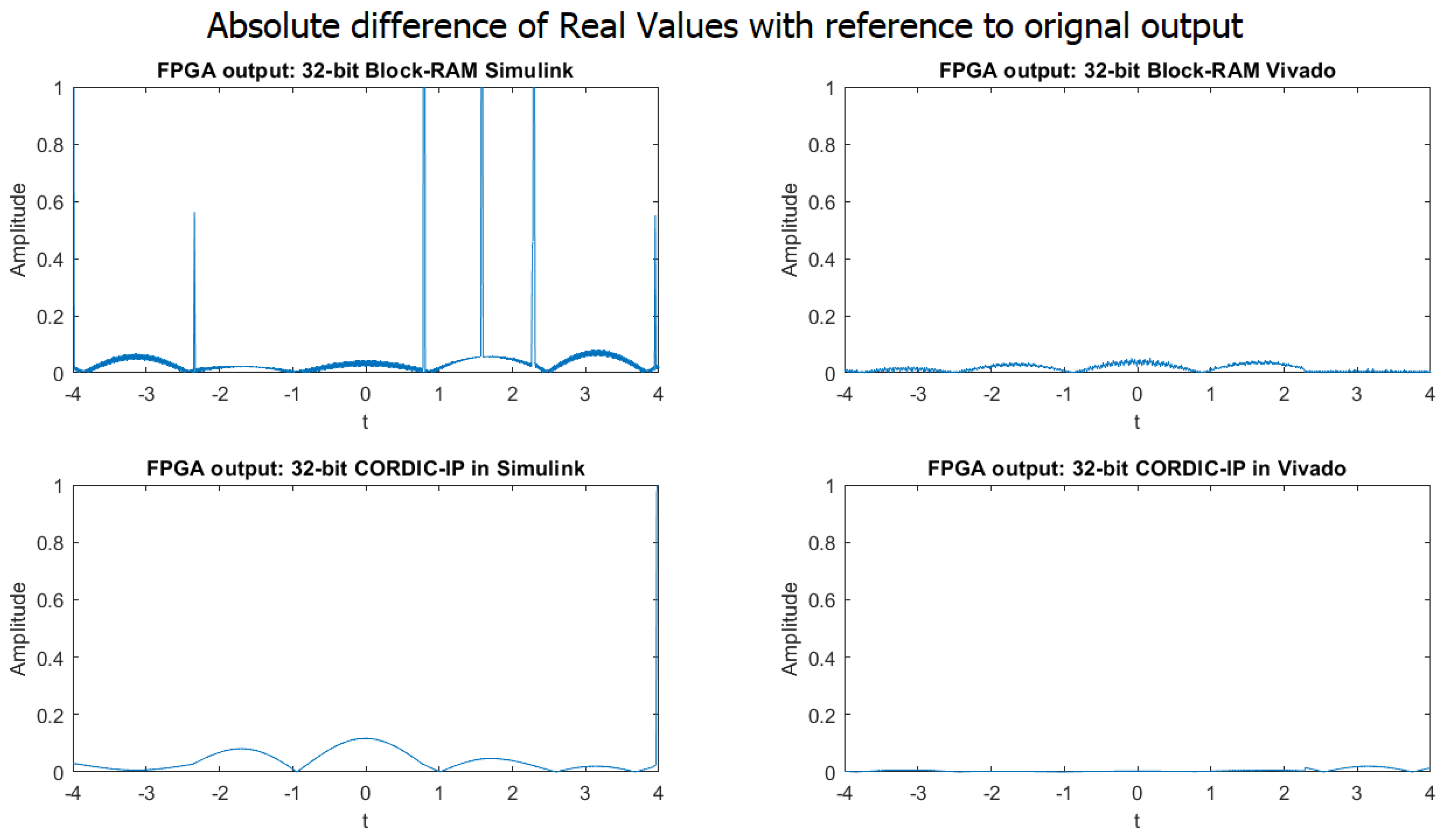

4.1.3. 32-bit implementation result

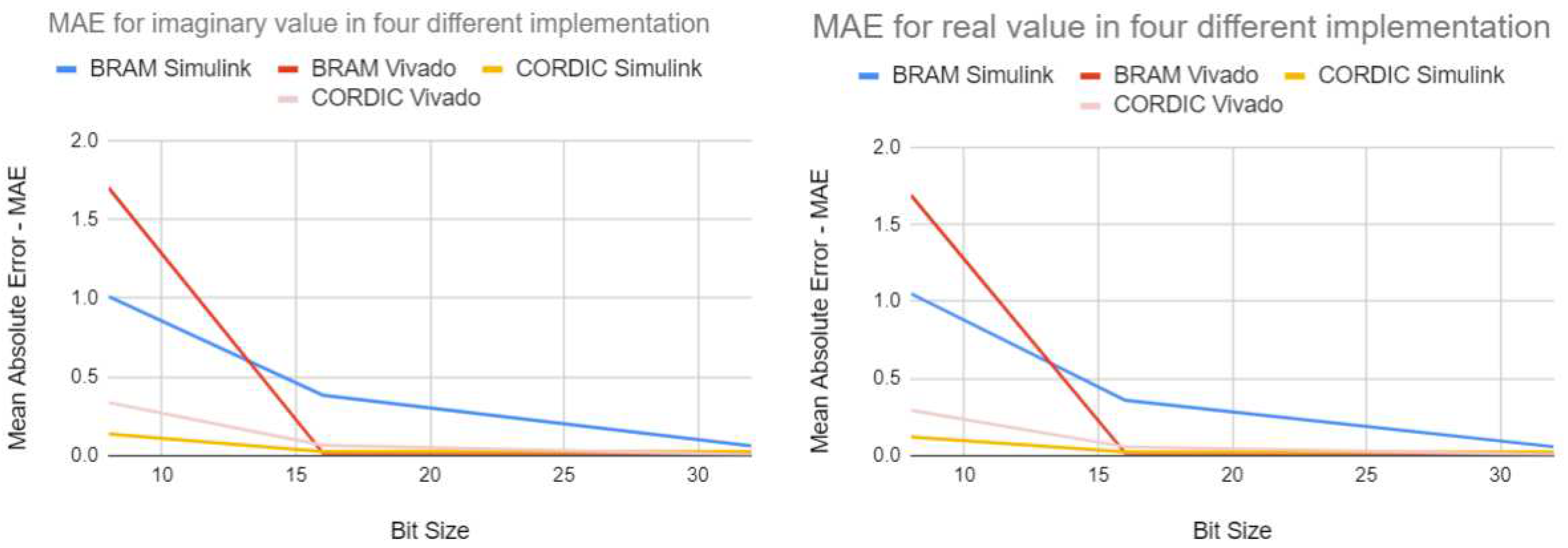

4.1.4. MAE of the four different implementation approaches
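The mean absolute error compares an implementation's outputs $\hat{y}_n$ with a double-precision reference $y_n$: $\mathrm{MAE} = \frac{1}{N}\sum_{n=1}^{N} |y_n - \hat{y}_n|$, where for complex outputs $|\cdot|$ is the modulus. The sketch below shows how such a comparison could be scripted, assuming the reference is the floating-point value of $\cos\theta$ and that each bit width uses `bits - 2` fractional bits (a hypothetical choice). It does not reproduce the paper's figures; `mean_absolute_error`, `round_to_frac_bits`, and `mae_by_width` are hypothetical names.

```python
import math

def mean_absolute_error(reference, approx):
    """MAE between two equal-length sequences; abs() also handles complex values."""
    if not reference or len(reference) != len(approx):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(r - a) for r, a in zip(reference, approx)) / len(reference)

def round_to_frac_bits(x, frac_bits):
    """Round x to a grid of 2**-frac_bits (range and saturation ignored for brevity)."""
    step = 2.0 ** -frac_bits
    return round(x / step) * step

def mae_by_width(widths=(8, 16, 32), samples=1000):
    """Hypothetical experiment: MAE of quantized cos(theta) over one period per width."""
    thetas = [2.0 * math.pi * k / samples for k in range(samples)]
    ref = [math.cos(t) for t in thetas]
    results = {}
    for bits in widths:
        frac = bits - 2  # assumption: 1 sign bit + 1 integer bit
        approx = [round_to_frac_bits(v, frac) for v in ref]
        results[bits] = mean_absolute_error(ref, approx)
    return results
```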

4.1.5. Discussion Summary

4.2. Hardware Implementation Report

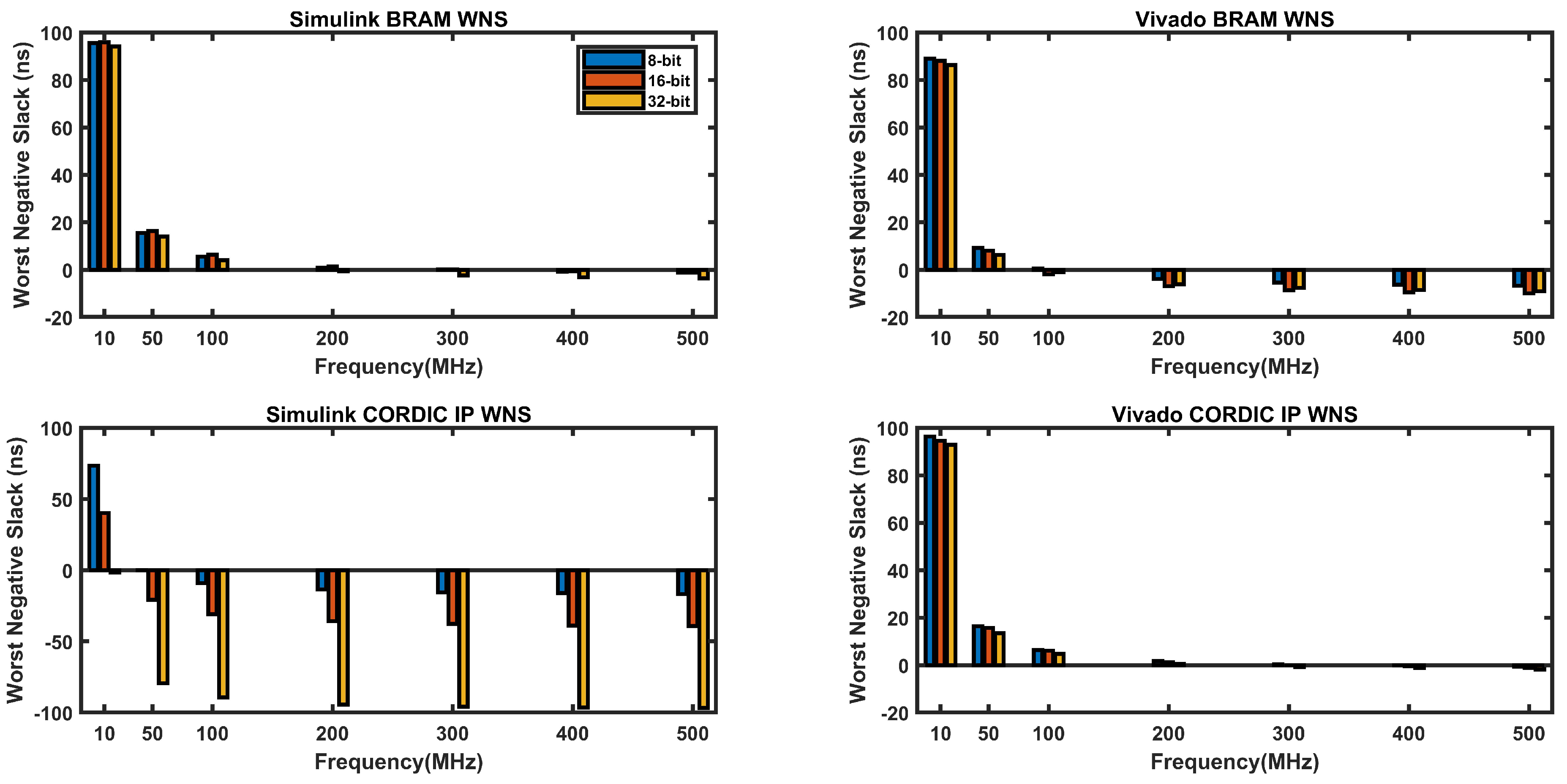

4.2.1. WNS Report

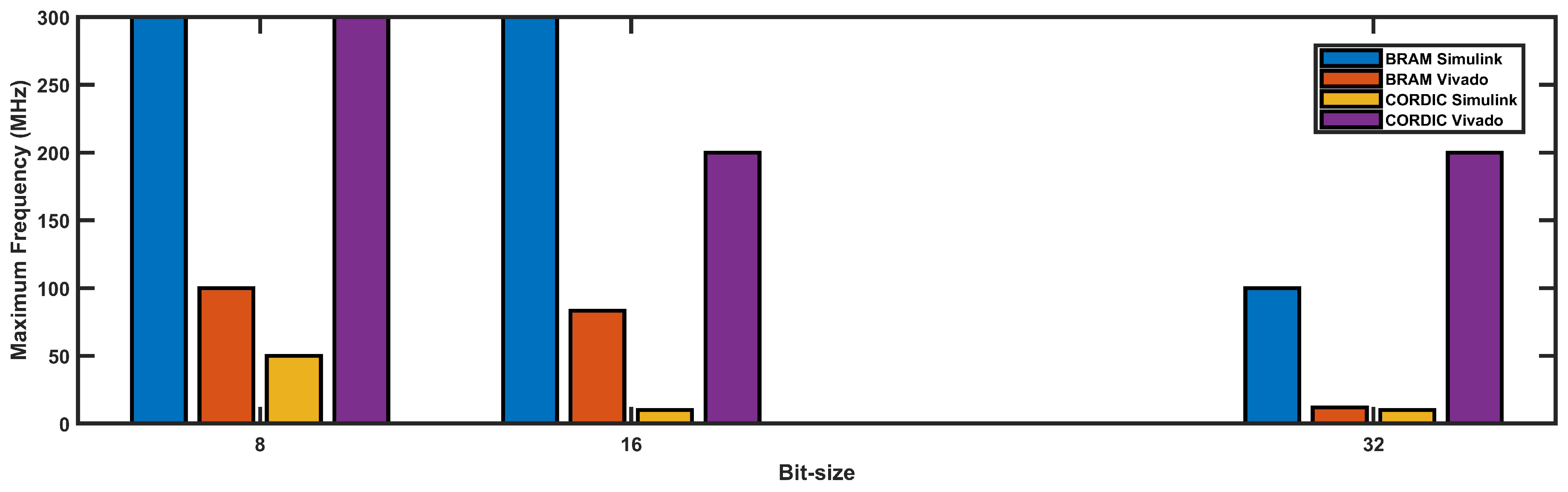

4.2.2. Max Operating Frequency

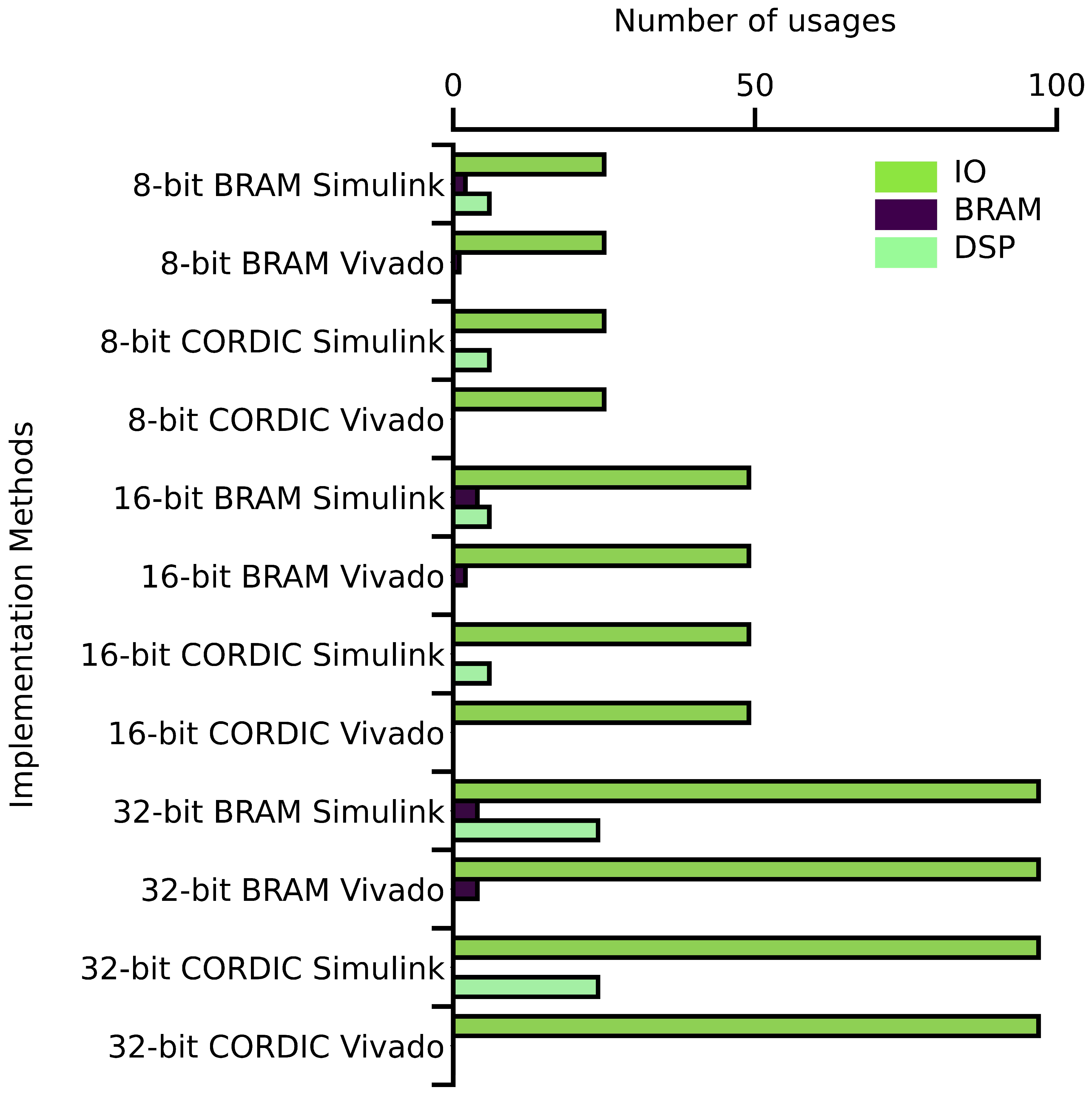
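Worst negative slack (WNS) is the smallest setup slack reported by timing analysis against the target clock period. A common back-of-the-envelope estimate, assuming a single clock domain and a setup-limited critical path, is $f_{\max} \approx 1/(T_{\text{target}} - \mathrm{WNS})$; whether the maximum operating frequencies reported here were derived this way is an assumption. The helper name `estimate_fmax_mhz` is hypothetical.

```python
def estimate_fmax_mhz(target_period_ns, wns_ns):
    """Estimate the achievable clock frequency in MHz from a target period and WNS (both ns).

    Positive WNS means timing is met with margin; negative WNS means it is violated,
    so the achievable period is longer than the target.
    """
    min_period_ns = target_period_ns - wns_ns
    if min_period_ns <= 0:
        raise ValueError("target period minus WNS must be positive")
    return 1000.0 / min_period_ns  # 1 / ns = GHz, times 1000 gives MHz

# Hypothetical numbers: a 10 ns (100 MHz) constraint that reports WNS = +5 ns.
print(estimate_fmax_mhz(10.0, 5.0))
```

For instance, a design constrained at 10 ns that reports a WNS of +5 ns could, by this estimate, run with a period of about 5 ns (roughly 200 MHz); a negative WNS lowers the estimate below the target frequency.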

4.2.3. Resource Usage

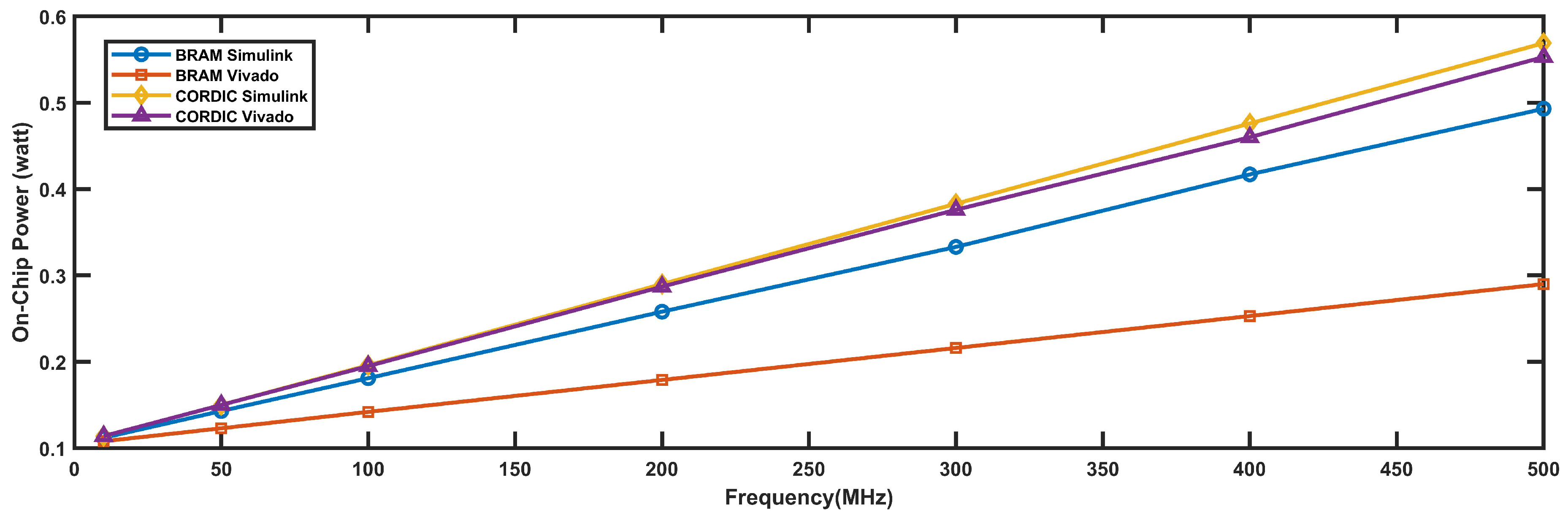

4.2.4. Power Requirement

4.2.5. Discussion Summary

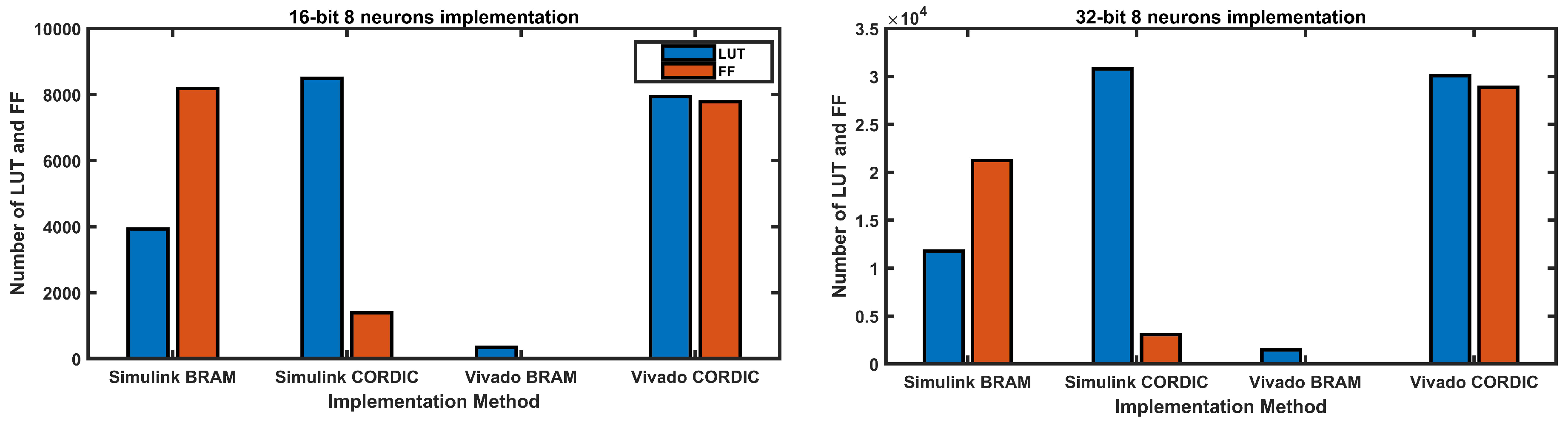

4.3. Multi-Neuron Implementation Report

5. Conclusion and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Sample Availability

Abbreviations

| Abbreviation | Definition |
|---|---|
| FPGA | Field-Programmable Gate Array |
| ASIC | Application-Specific Integrated Circuit |
| DSP | Digital Signal Processor |
| HDL | Hardware Description Language |
| VHDL | VHSIC Hardware Description Language |
| LUT | Lookup Table |
| FF | Flip-Flop |
| SSN | Simultaneous Switching Noise |
| BRAM | Block RAM (Random Access Memory) |
| CORDIC | Coordinate Rotation Digital Computer |
| IO | Input/Output |

References

- Zhang, L. Oscillation Patterns of a Complex Exponential Neural Network. 2022 IEEE/WIC/ACM International Joint Conference on Web Intelligence and Intelligent Agent Technology (WI-IAT). IEEE, 2022, pp. 423–430.

- Capra, M.; Bussolino, B.; Marchisio, A.; Shafique, M.; Masera, G.; Martina, M. An updated survey of efficient hardware architectures for accelerating deep convolutional neural networks. Future Internet 2020, 12, 113.

- Ghimire, D.; Kil, D.; Kim, S.h. A survey on efficient convolutional neural networks and hardware acceleration. Electronics 2022, 11, 945.

- Zhang, J.; Zhang, L. Spiking Neural Network Implementation on FPGA for Multiclass Classification. 2023 IEEE International Systems Conference (SysCon). IEEE, 2023, pp. 1–8.

- Aizenberg, I. Complex-valued neural networks with multi-valued neurons; Vol. 353, Springer, 2011.

- Aizenberg, I.; Herman, J.; Vasko, A. A Convolutional Neural Network with Multi-Valued Neurons: a Modified Learning Algorithm and Analysis of Performance. 2022 IEEE 13th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON). IEEE, 2022, pp. 0585–0591.

- Javanshir, A.; Nguyen, T.T.; Mahmud, M.P.; Kouzani, A.Z. Advancements in Algorithms and Neuromorphic Hardware for Spiking Neural Networks. Neural Computation 2022, 34, 1289–1328.

- Huynh, P.K.; Varshika, M.L.; Paul, A.; Isik, M.; Balaji, A.; Das, A. Implementing spiking neural networks on neuromorphic architectures: A review. arXiv 2022, arXiv:2202.08897.

- Han, J.; Li, Z.; Zheng, W.; Zhang, Y. Hardware implementation of spiking neural networks on FPGA. Tsinghua Science and Technology 2020, 25, 479–486.

- Khodamoradi, A.; Denolf, K.; Kastner, R. S2N2: A FPGA accelerator for streaming spiking neural networks. The 2021 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, 2021, pp. 194–205.

- Fang, H.; Mei, Z.; Shrestha, A.; Zhao, Z.; Li, Y.; Qiu, Q. Encoding, model, and architecture: Systematic optimization for spiking neural network in FPGAs. Proceedings of the 39th International Conference on Computer-Aided Design, 2020, pp. 1–9.

- Gupta, S.; Vyas, A.; Trivedi, G. FPGA implementation of simplified spiking neural network. 2020 27th IEEE International Conference on Electronics, Circuits and Systems (ICECS). IEEE, 2020, pp. 1–4.

- Kakani, V.; Li, X.; Cui, X.; Kim, H.; Kim, B.S.; Kim, H. Implementation of Field-Programmable Gate Array Platform for Object Classification Tasks Using Spike-Based Backpropagated Deep Convolutional Spiking Neural Networks. Micromachines 2023, 14, 1353.

- Guo, W.; Yantır, H.E.; Fouda, M.E.; Eltawil, A.M.; Salama, K.N. Toward the optimal design and FPGA implementation of spiking neural networks. IEEE Transactions on Neural Networks and Learning Systems 2021, 33, 3988–4002.

- Hosseiny, A.; Jaberipur, G. Complex exponential functions: A high-precision hardware realization. Integration 2020, 73, 18–29.

- Rekha, R.; Menon, K.P. FPGA implementation of exponential function using CORDIC IP core for extended input range. 2018 3rd IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT). IEEE, 2018, pp. 597–600.

- Wang, D.; Ercegovac, M.D.; Xiao, Y. Complex function approximation using two-dimensional interpolation. IEEE Transactions on Computers 2013, 63, 2948–2960.

- Malík, P. High throughput floating point exponential function implemented in FPGA. 2015 IEEE Computer Society Annual Symposium on VLSI. IEEE, 2015, pp. 97–100.

- Xilinx. Block RAM, 2020. Accessed on April 12, 2023.

- Xilinx. Introduction to System Generator, 2020.

- Saidani, T.; Dia, D.; Elhamzi, W.; Atri, M.; Tourki, R.; et al. Hardware co-simulation for video processing using Xilinx System Generator. Proceedings of the World Congress on Engineering, 2009, Vol. 1, pp. 3–7.

- Xilinx. Introducing the Vivado IDE, 2020. Accessed on April 14, 2023.

- Susuki, K. Myelin: A Special Membrane for Cell Communication. Nature Education 2010, 3, 59.

- Michel, H.E.; Awwal, A.A.S. Artificial neural networks using complex numbers and phase encoded weights. Applied Optics 2010, 49, B71–B82.

| Resource | Bit Size | BRAM Simulink | BRAM Vivado | CORDIC Simulink | CORDIC Vivado |
|---|---|---|---|---|---|
| LUT usage | 8 | 286 | 41 | 612 | 548 |
| LUT usage | 16 | 965 | 202 | 2160 | 2094 |
| LUT usage | 32 | 2904 | 337 | 7660 | 7473 |
| FF usage | 8 | 514 | 0 | 196 | 516 |
| FF usage | 16 | 2190 | 0 | 412 | 1944 |
| FF usage | 32 | 5336 | 116 | 874 | 7217 |

| 8-Neuron Method | Max. Number of Neurons (16-bit) | Max. Number of Neurons (32-bit) |
|---|---|---|
| Simulink BRAM | 64 | 16 |
| Simulink CORDIC | 32 | 8 |
| Vivado BRAM | 128 | 64 |
| Vivado CORDIC | 32 | 8 |

| 8-Neuron Method | Maximum Frequency, 16-bit (MHz) | Maximum Frequency, 32-bit (MHz) |
|---|---|---|
| Simulink BRAM | 250 | 150 |
| Simulink CORDIC | 19 | 9 |
| Vivado BRAM | 50 | 53 |
| Vivado CORDIC | 200 | 200 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).