Submitted: 06 November 2023

Posted: 07 November 2023


Abstract

Keywords:

1. Introduction

2. Methodologies

2.1. Air conditioning load prediction models based on conventional artificial intelligence algorithms

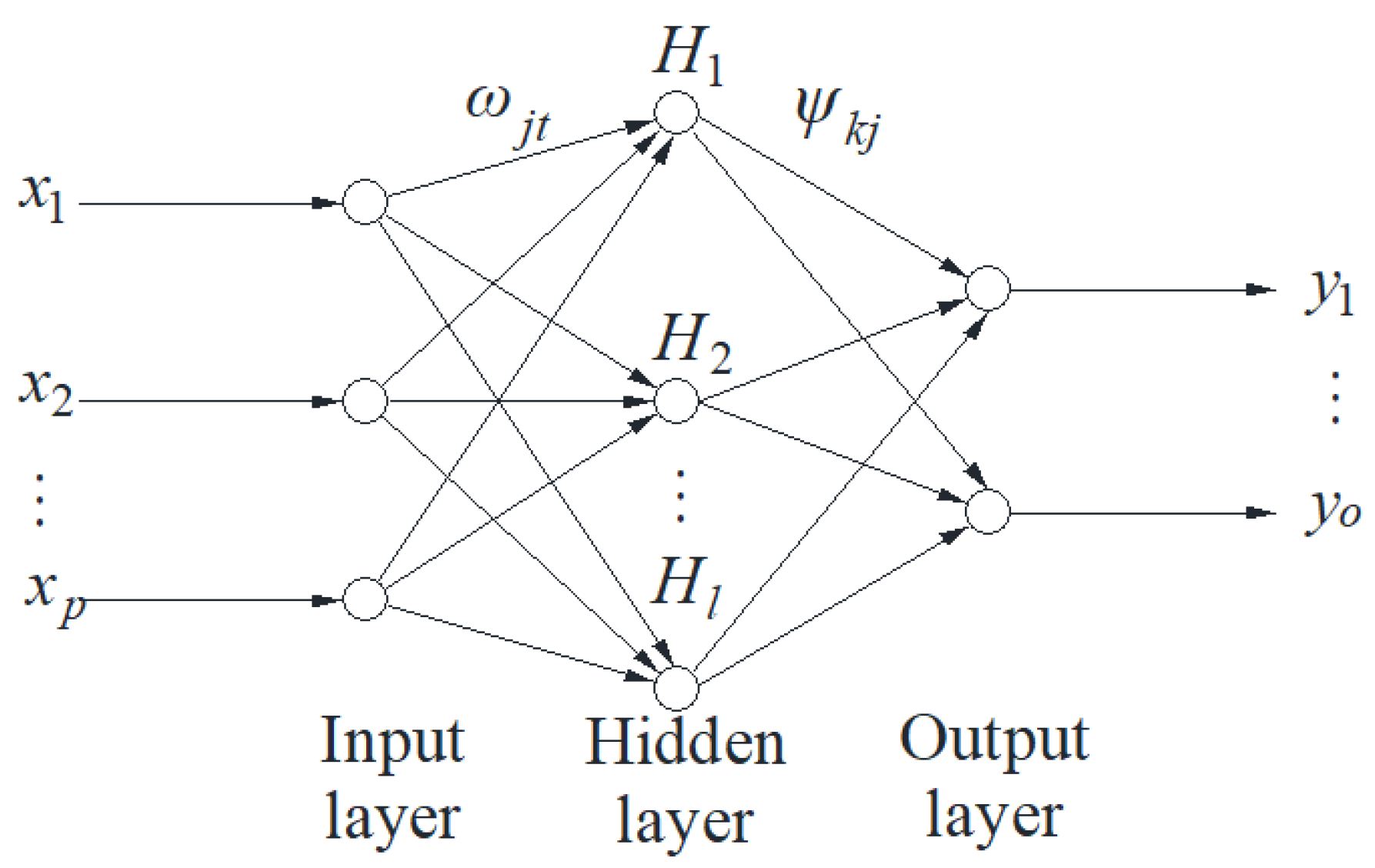

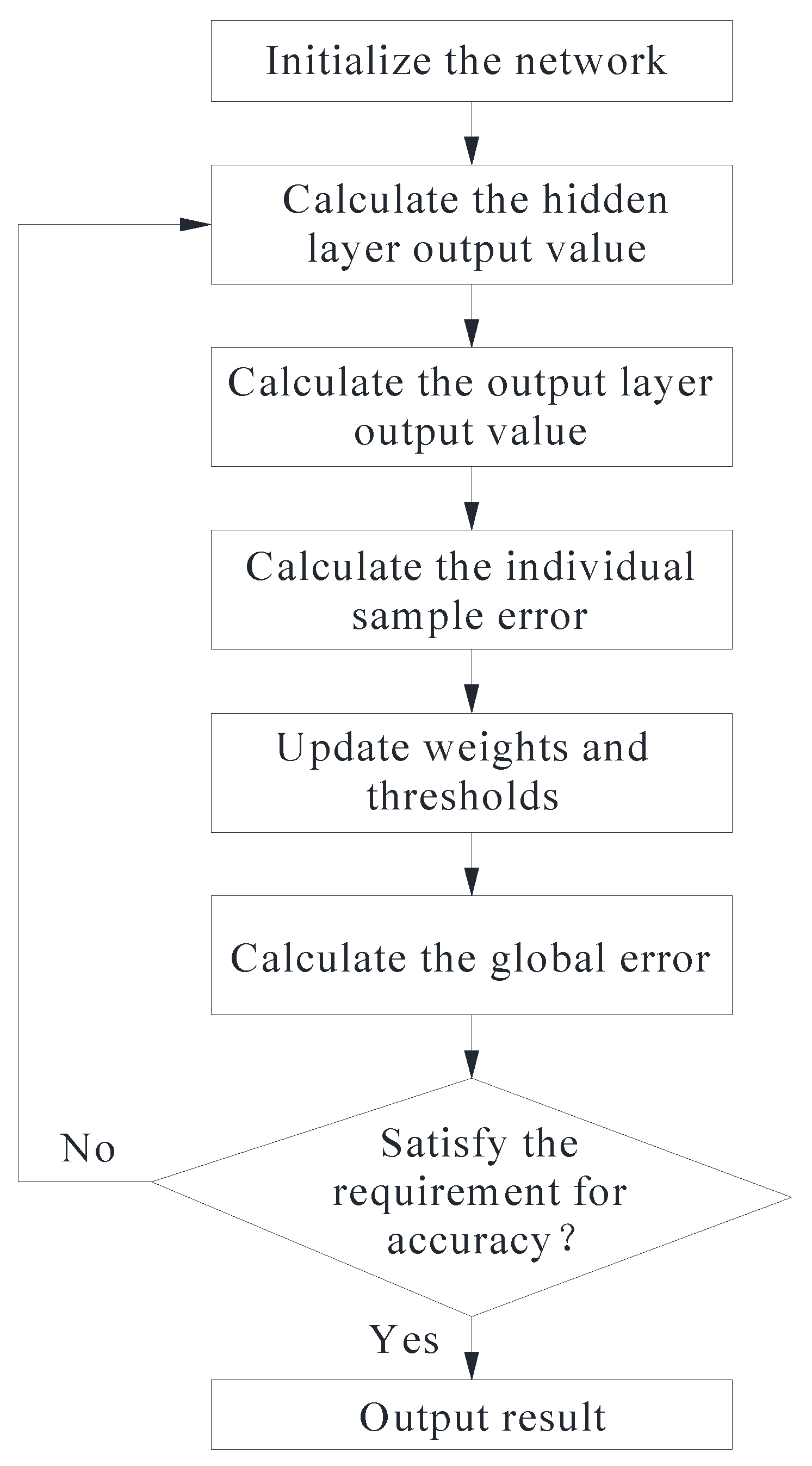

2.1.1. BPNN prediction model

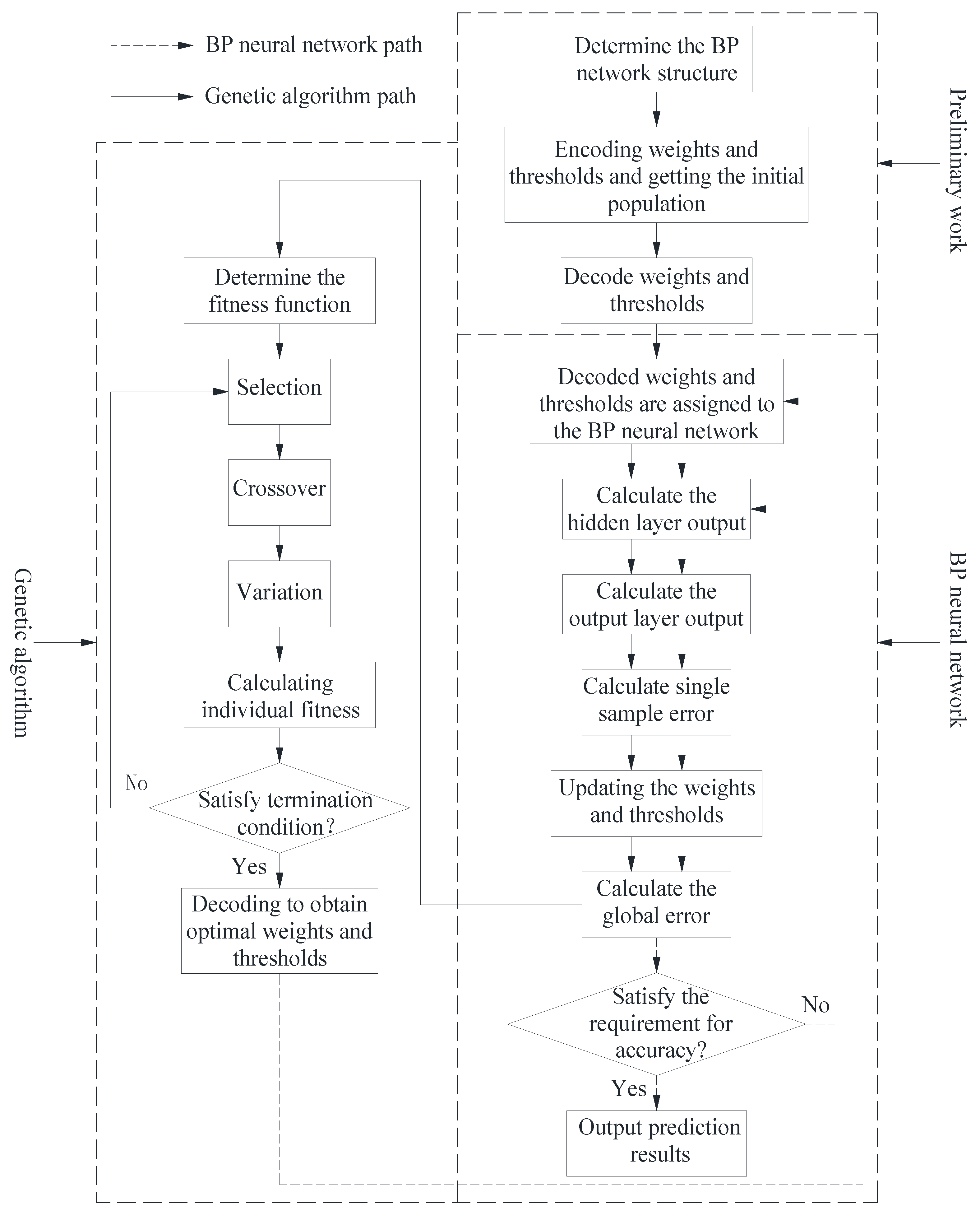
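The nomenclature describes a three-layer BPNN. Hidden-layer inputs S_j pass through the transfer function F(S) and output-layer inputs S_k pass through G(S). The weights are then corrected from the error using the learning rate η and the momentum factor μ.

The following is a minimal sketch of that training loop under several assumptions: a sigmoid F(S), a linear G(S), full-batch updates, and inputs and targets already scaled to [0, 1]. It uses the training settings listed in the BP parameter table (learning rate 0.1, momentum factor 0.9, training goal 0.005, at most 1000 epochs). The function name `train_bpnn` and the hidden-layer size of 8 are hypothetical choices, not the authors' configuration.

```python
import numpy as np

def train_bpnn(X, y, n_hidden=8, eta=0.1, mu=0.9, goal=0.005, max_epochs=1000, seed=0):
    """One-hidden-layer BP network trained by gradient descent with momentum.

    Assumptions for this sketch: sigmoid hidden transfer F(S), linear output
    transfer G(S), full-batch updates, X and y already scaled to [0, 1].
    """
    rng = np.random.default_rng(seed)
    W1 = rng.uniform(-0.5, 0.5, (X.shape[1], n_hidden))  # omega_jt, input -> hidden
    a1 = np.zeros(n_hidden)                             # alpha_j, hidden thresholds
    W2 = rng.uniform(-0.5, 0.5, (n_hidden, 1))          # psi_kj, hidden -> output
    b2 = np.zeros(1)                                    # beta_k, output threshold
    params = [W1, a1, W2, b2]
    steps = [np.zeros_like(p) for p in params]          # previous updates (momentum memory)
    Y = y.reshape(-1, 1)
    m = X.shape[0]
    e_all = np.inf
    for _ in range(max_epochs):
        H = 1.0 / (1.0 + np.exp(-(X @ W1 - a1)))     # H_j = F(S_j)
        O = H @ W2 - b2                              # O_k = G(S_k), linear
        err = Y - O
        e_all = 0.5 * np.mean(err ** 2)              # global error e_all
        if e_all < goal:
            break
        delta_k = err                                # output-layer error signal
        delta_j = (delta_k @ W2.T) * H * (1.0 - H)   # back-propagated hidden error signal
        descent = [X.T @ delta_j / m, -delta_j.mean(axis=0),
                   H.T @ delta_k / m, -delta_k.mean(axis=0)]
        for p, s, g in zip(params, steps, descent):
            s *= mu          # keep a fraction mu of the previous update
            s += eta * g     # add the new gradient-descent step
            p += s           # in-place update of weights and thresholds
    return params, e_all
```

The momentum term reuses part of the previous step, which is what the additional momentum factor μ controls in a standard BP-with-momentum scheme.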

2.1.2. Genetic algorithm-optimized BPNN (GABPNN) prediction model

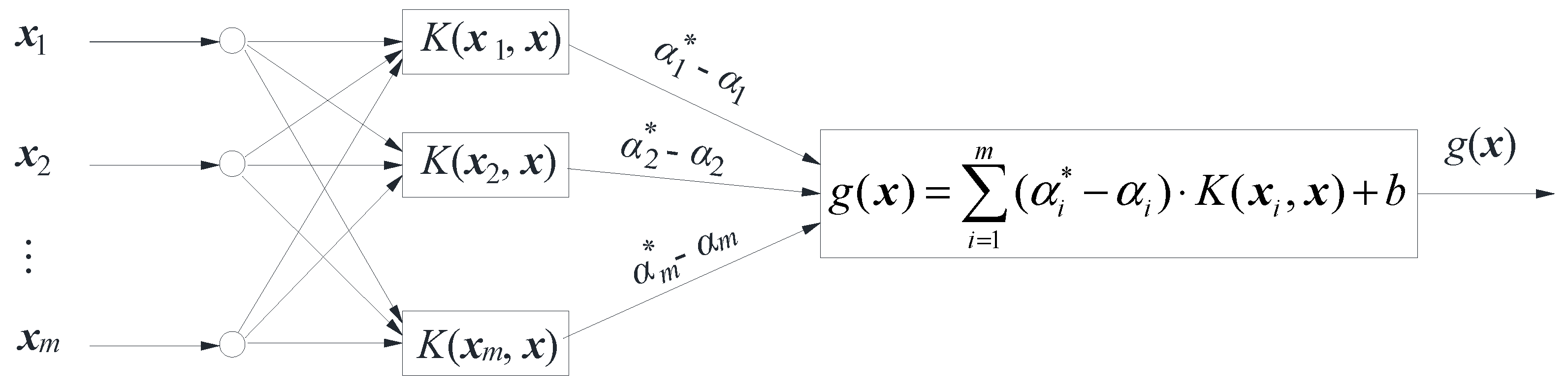
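In a GABPNN, the genetic algorithm searches for the initial weights and thresholds of the BPNN before back-propagation training begins. The nomenclature lists the ingredients:

- a selection probability P_l derived from the fitness F_l;
- crossover recombination of genes a_qt and a_st using a random number b;
- mutation of gene X_qt within [X_min, X_max], which depends on the current generation d and the maximum D.

The sketch below shows one common real-coded form of these operators. The arithmetic crossover, the non-uniform mutation factor r2 * (1 - d/D)^2, and all function names are assumptions. In practice the fitness is often taken as the reciprocal of the BPNN training error, which is also an assumption here. The parameter table lists a 20-bit binary encoding with a generation gap of 0.95, so this real-valued version is a swapped-in simplification, not the authors' encoding.

```python
import random

def roulette_select(fitness, rng):
    """Return index l with probability P_l = F_l / sum(F)."""
    total = sum(fitness)
    pick = rng.uniform(0, total)
    acc = 0.0
    for l, f in enumerate(fitness):
        acc += f
        if acc >= pick:
            return l
    return len(fitness) - 1

def crossover(a_q, a_s, t, rng):
    """Arithmetic recombination of gene t between chromosomes q and s (assumed form)."""
    b = rng.random()
    a_q, a_s = list(a_q), list(a_s)
    # both right-hand sides use the original genes, so the pair sum is preserved
    a_q[t], a_s[t] = a_q[t] * (1 - b) + a_s[t] * b, a_s[t] * (1 - b) + a_q[t] * b
    return a_q, a_s

def mutate(x_qt, x_min, x_max, d, D, rng):
    """Non-uniform mutation (assumed form): step shrinks as generation d approaches D."""
    r, r2 = rng.random(), rng.random()
    f = r2 * (1 - d / D) ** 2
    if r >= 0.5:
        return x_qt + (x_max - x_qt) * f   # move toward the upper bound
    return x_qt - (x_qt - x_min) * f       # move toward the lower bound
```

Because f lies in [0, 1], a mutated gene never leaves [X_min, X_max], and mutation vanishes at d = D.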

2.1.3. SVR prediction model
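The SVR symbols in the nomenclature correspond to the standard dual form g(x) = sum_i (α_i - α_i*) K(x_i, x) + bias. Those symbols are:

- the kernel K(x_i, x_r);
- the Lagrange multipliers α_i and α_i*;
- the penalty factor C;
- the tolerance ε;
- the ε-insensitive loss L_ε(g(x), y).

The sketch below evaluates that loss and the prediction for given multipliers. It assumes a Gaussian (RBF) kernel whose width is the adjustment factor σ, and `bias` is a hypothetical name for the intercept. It does not solve the quadratic program that produces α_i and α_i*. In practice that step is delegated to a solver such as LIBSVM or scikit-learn's `sklearn.svm.SVR` (parameters `C`, `epsilon`, `gamma`).

```python
import math

def eps_insensitive_loss(g_x, y, eps):
    """L_eps(g(x), y): zero inside the eps tube, linear outside it."""
    return max(0.0, abs(g_x - y) - eps)

def rbf_kernel(x_i, x_r, sigma):
    """Gaussian kernel K(x_i, x_r); sigma is assumed to be the kernel width."""
    sq = sum((u - v) ** 2 for u, v in zip(x_i, x_r))
    return math.exp(-sq / (2 * sigma ** 2))

def svr_predict(x, support, alpha, alpha_star, bias, sigma):
    """Dual-form SVR output g(x) = sum_i (alpha_i - alpha_i*) K(x_i, x) + bias."""
    return sum((a - a_s) * rbf_kernel(x_i, x, sigma)
               for x_i, a, a_s in zip(support, alpha, alpha_star)) + bias
```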

2.1.4. ELM prediction model
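An ELM fixes its input weights and hidden thresholds at random values and solves only for the output weights. Per the nomenclature, those output weights come from the Moore-Penrose generalized inverse of the hidden-layer output matrix: B = Z+ T.

Below is a minimal NumPy sketch. It assumes a sigmoid hidden layer with 20 neurons and uniform random initialization in [-1, 1]; both are hypothetical choices, as are the function names.

```python
import numpy as np

def _hidden(A, W, thresholds):
    """Hidden-layer output matrix Z (sigmoid activation assumed)."""
    return 1.0 / (1.0 + np.exp(-(A @ W + thresholds)))

def elm_fit(A, T, n_hidden=20, seed=0):
    """Random input weights and thresholds stay fixed; only B = Z^+ T is solved."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, (A.shape[1], n_hidden))  # input -> hidden weights, never trained
    thresholds = rng.uniform(-1.0, 1.0, n_hidden)       # hidden thresholds, never trained
    Z = _hidden(A, W, thresholds)
    B = np.linalg.pinv(Z) @ T       # Moore-Penrose generalized inverse (least squares)
    return W, thresholds, B

def elm_predict(A, W, thresholds, B):
    """Predicted outputs Z B for new samples A."""
    return _hidden(A, W, thresholds) @ B
```

Training reduces to a single pseudo-inverse, which is why ELM is typically much faster to fit than an iteratively trained BPNN.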

2.2. Improved air conditioning load prediction model based on similarity

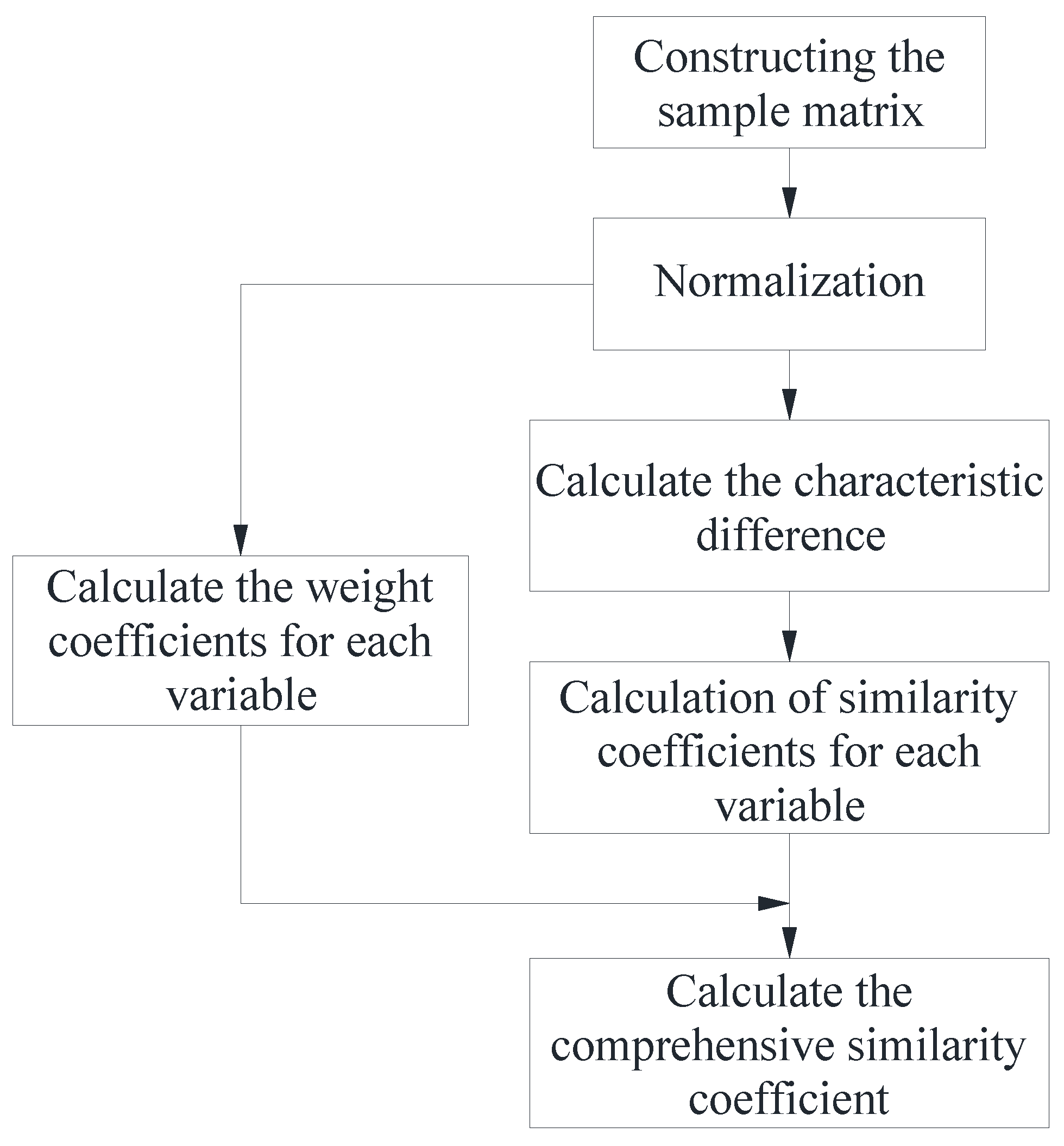

2.2.1. Calculation of comprehensive similarity coefficient

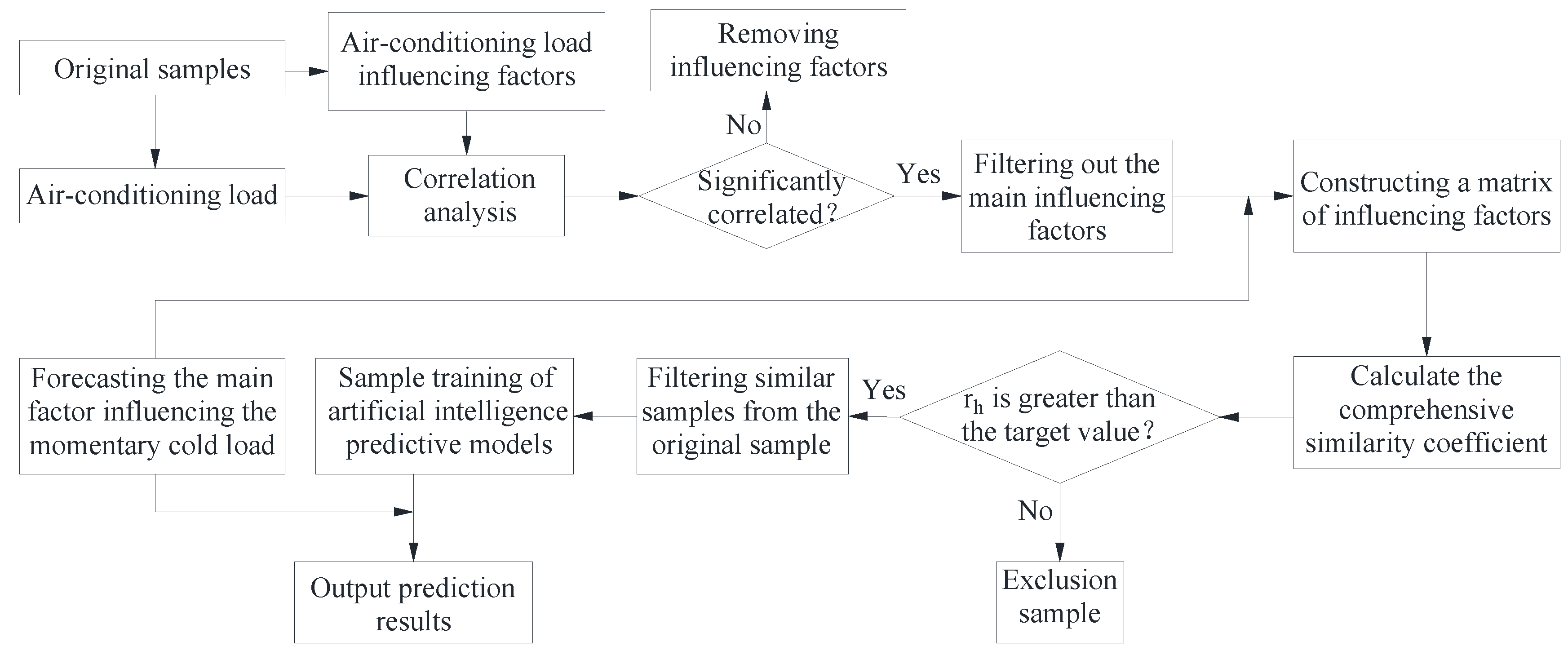
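The nomenclature implies a two-part comprehensive similarity coefficient:

- entropy weights W_t, computed from the probabilities P_h,t and entropies E_t of the dimensionless input variables;
- grey relational coefficients, built from the primary and secondary minimum and maximum differences with resolution factor ρ.

The sketch below combines them as r_h = sum_t W_t * ξ_h(t). Several details are assumptions, not confirmed by the text: the min-max normalization, ρ = 0.5, and computing the weights over the historical samples only. The function names are hypothetical.

```python
import numpy as np

def minmax(X):
    """Column-wise min-max scaling to [0, 1] (assumed dimensionless processing)."""
    lo, hi = X.min(axis=0), X.max(axis=0)
    span = np.where(hi - lo == 0, 1.0, hi - lo)
    return (X - lo) / span

def entropy_weights(Xn):
    """Entropy weights W_t of the columns (input variables) of scaled matrix Xn."""
    m = Xn.shape[0]
    col_sum = Xn.sum(axis=0)
    safe_sum = np.where(col_sum == 0, 1.0, col_sum)
    # P_{h,t}; an all-zero column is treated as uniform, i.e. it carries no information
    P = np.where(col_sum == 0, 1.0 / m, Xn / safe_sum)
    with np.errstate(divide="ignore", invalid="ignore"):
        plogp = np.where(P > 0, P * np.log(P), 0.0)   # convention: 0 * ln 0 = 0
    E = -plogp.sum(axis=0) / np.log(m)                 # entropy E_t in [0, 1]
    d = 1.0 - E                                        # degree of divergence
    return d / d.sum()

def grey_similarity(x0, Xh, W, rho=0.5):
    """Comprehensive similarity r_h = sum_t W_t * xi_h(t) of each row of Xh to x0."""
    delta = np.abs(Xh - x0)                  # |x'_{0,t} - x'_{h,t}|
    d_min = delta.min(axis=1).min()          # secondary (over t), then primary (over h) minimum
    d_max = delta.max(axis=1).max()          # secondary, then primary maximum
    xi = (d_min + rho * d_max) / (delta + rho * d_max)   # grey relational coefficients
    return xi @ W
```

In use, the prediction moment and the historical moments are scaled together so that they share one dimensionless scale. Historical moments whose weather and indoor conditions are closer to the prediction moment then receive larger r_h.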

2.2.2. Air conditioning load prediction process based on similarity improvement
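After r_h is computed for every historical moment, the improved models (M2, M4, M6 and M8) are trained on screened similar samples rather than on the full history. How many samples are kept is not stated here. The hypothetical helper `screen_similar` below therefore supports either a top-N count or a similarity threshold; both options are assumptions.

```python
import numpy as np

def screen_similar(r, X_hist, y_hist, n_keep=None, threshold=None):
    """Return the historical samples ranked by similarity r_h, optionally filtered."""
    order = np.argsort(r)[::-1]                   # most similar first
    if threshold is not None:
        order = order[r[order] >= threshold]      # keep r_h above an assumed threshold
    if n_keep is not None:
        order = order[:n_keep]                    # keep an assumed number of samples
    return X_hist[order], y_hist[order], order
```

The screened subset can then be passed unchanged to any of the base learners (BPNN, GABPNN, SVR or ELM).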

2.3. Uncertainty calculation
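The nomenclature defines a calculated parameter U as a function of measured parameters X_l with uncertainties ε_X,l. This matches the root-sum-square propagation for uncorrelated inputs described in the GUM: ε_U = sqrt(sum_l (∂U/∂X_l * ε_X,l)^2), with relative uncertainty ∆ε_U = ε_U / U.

The sketch below estimates the sensitivities ∂U/∂X_l by central finite differences. That is an implementation choice, not necessarily the authors' method, and the function name is hypothetical. Multiplying ε_U by the coverage factor k gives the expanded uncertainty.

```python
import math

def propagated_uncertainty(U, x, eps, h=1e-6):
    """Root-sum-square propagation: eps_U = sqrt(sum((dU/dX_l * eps_X,l)^2)).

    Returns (eps_U, relative uncertainty eps_U / |U|).
    """
    u0 = U(x)
    total = 0.0
    for l, (x_l, e_l) in enumerate(zip(x, eps)):
        step = h * max(1.0, abs(x_l))
        xp, xm = list(x), list(x)
        xp[l] += step
        xm[l] -= step
        dU = (U(xp) - U(xm)) / (2 * step)   # central-difference sensitivity dU/dX_l
        total += (dU * e_l) ** 2
    eps_U = math.sqrt(total)
    return eps_U, eps_U / abs(u0)
```

For a product U = X_1 * X_2 with 1% relative uncertainty on each factor, this gives a relative uncertainty of sqrt(2) * 1%, or about 1.41%.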

3. Results and discussion

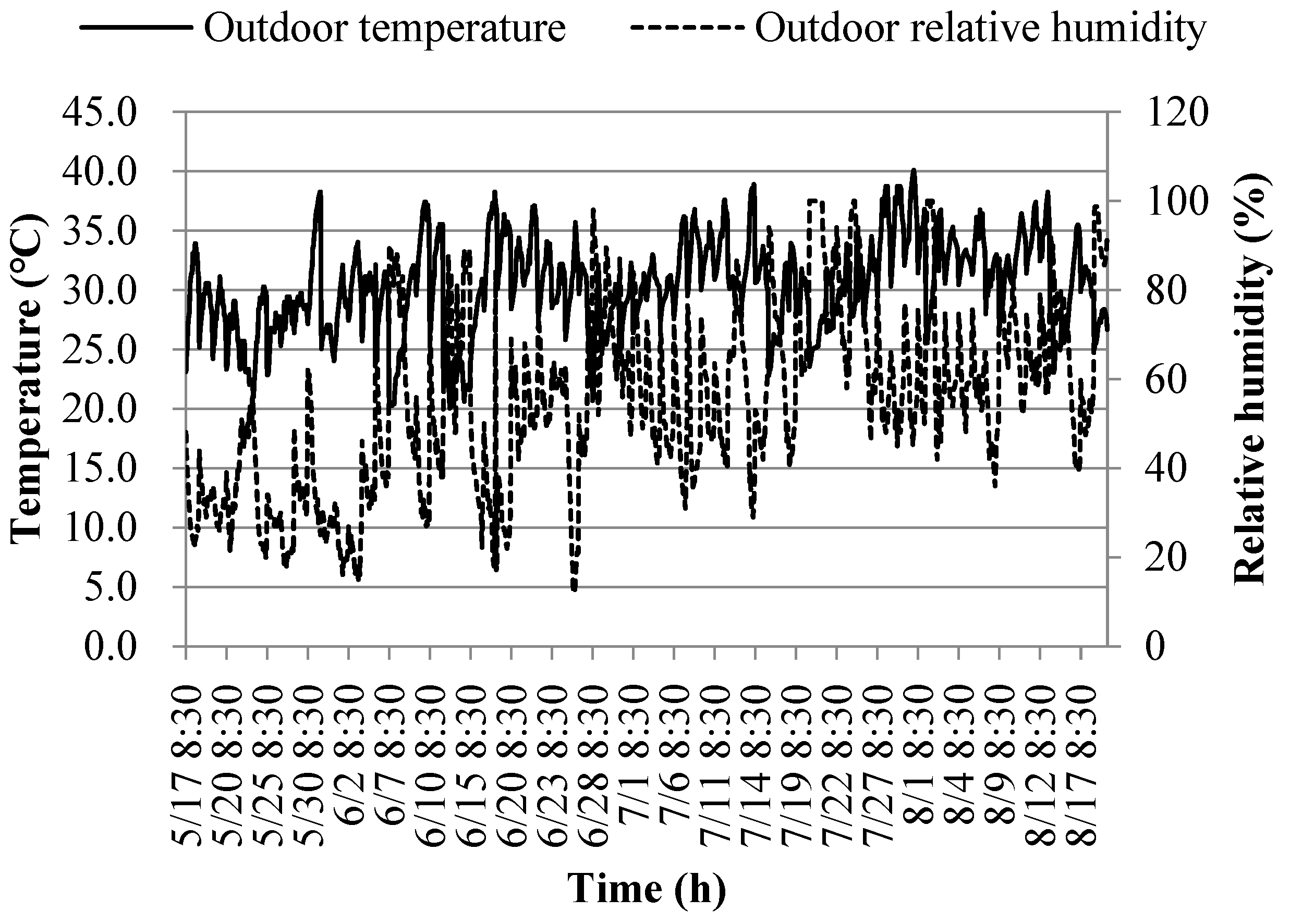

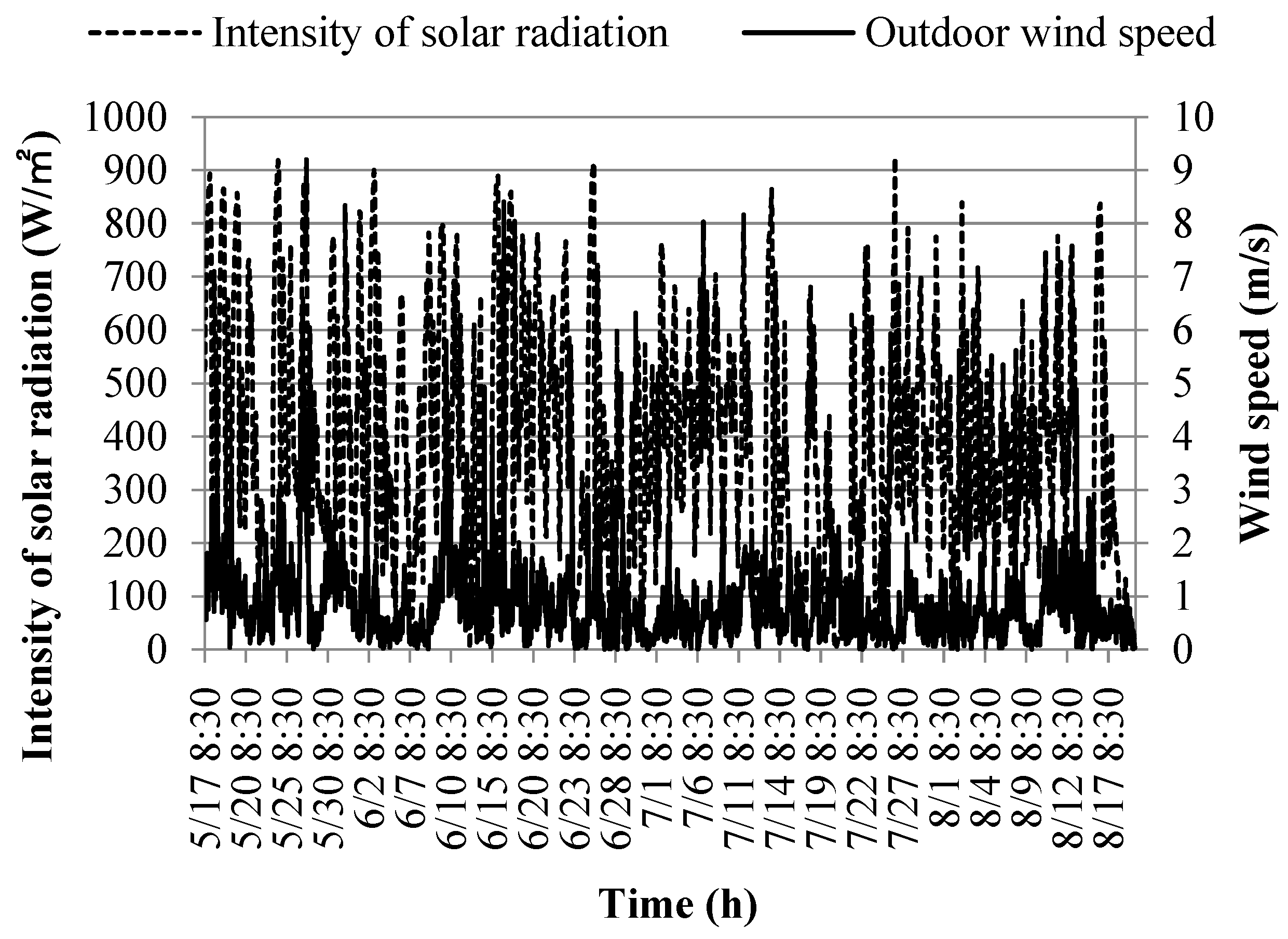

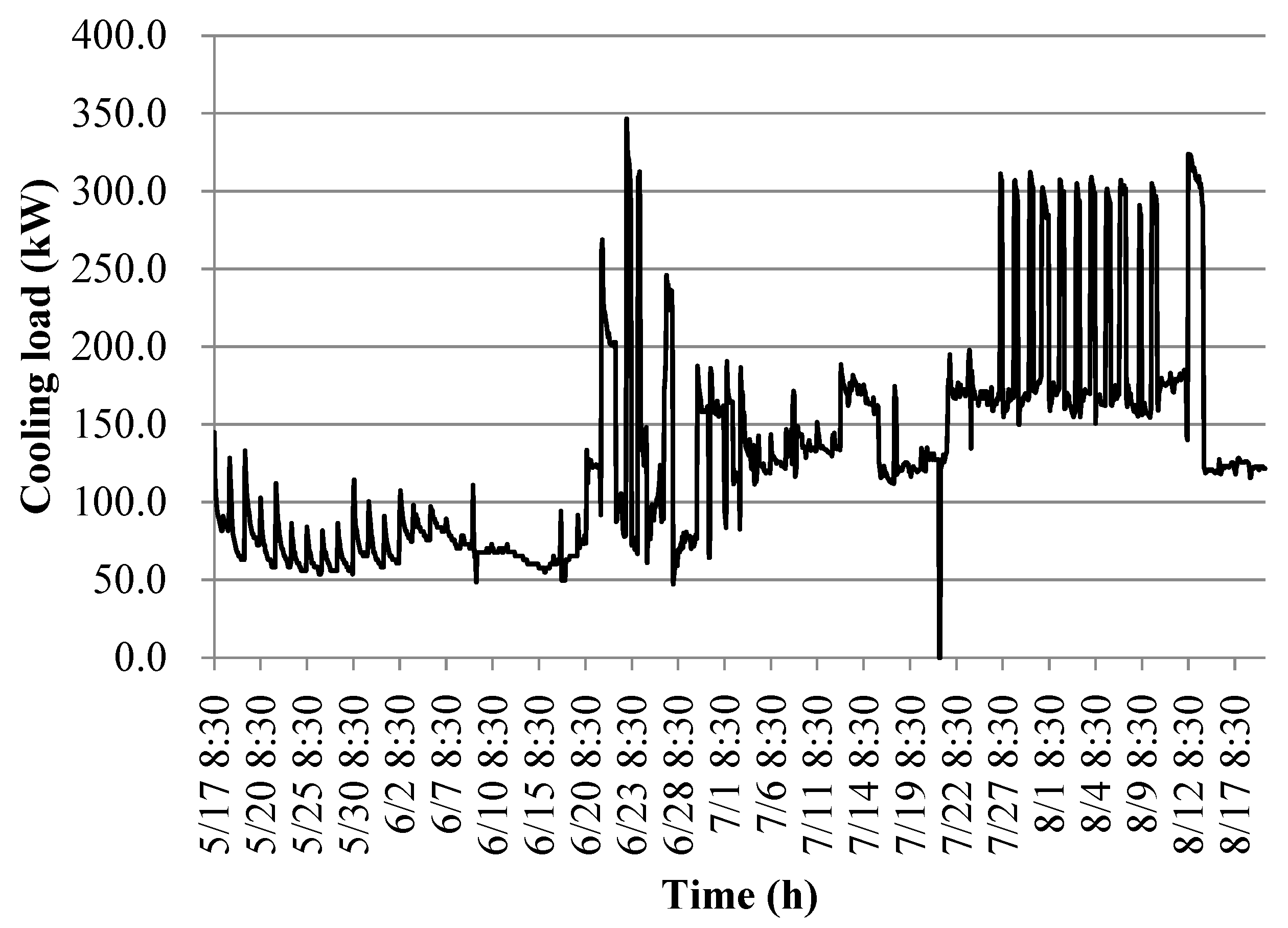

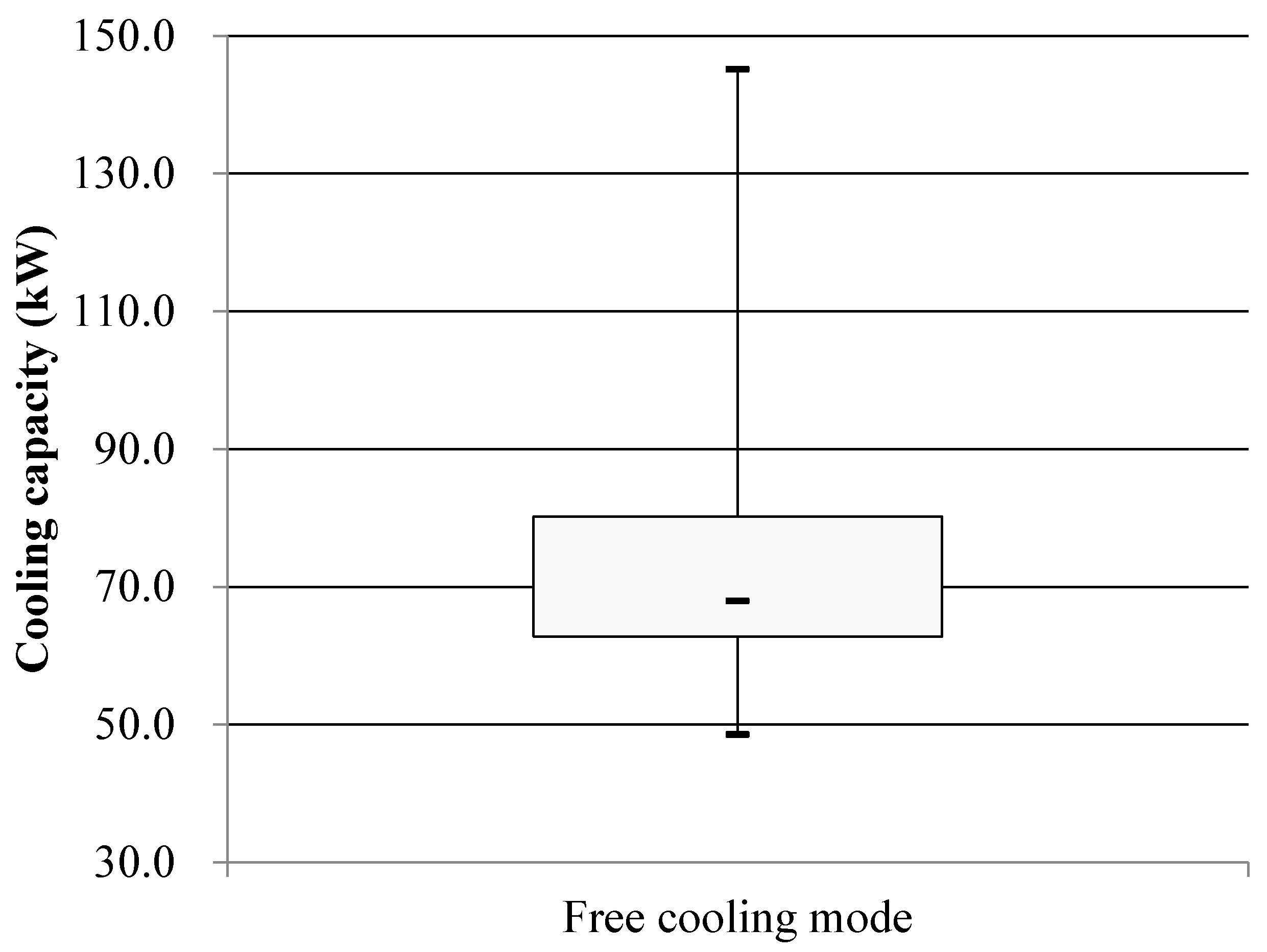

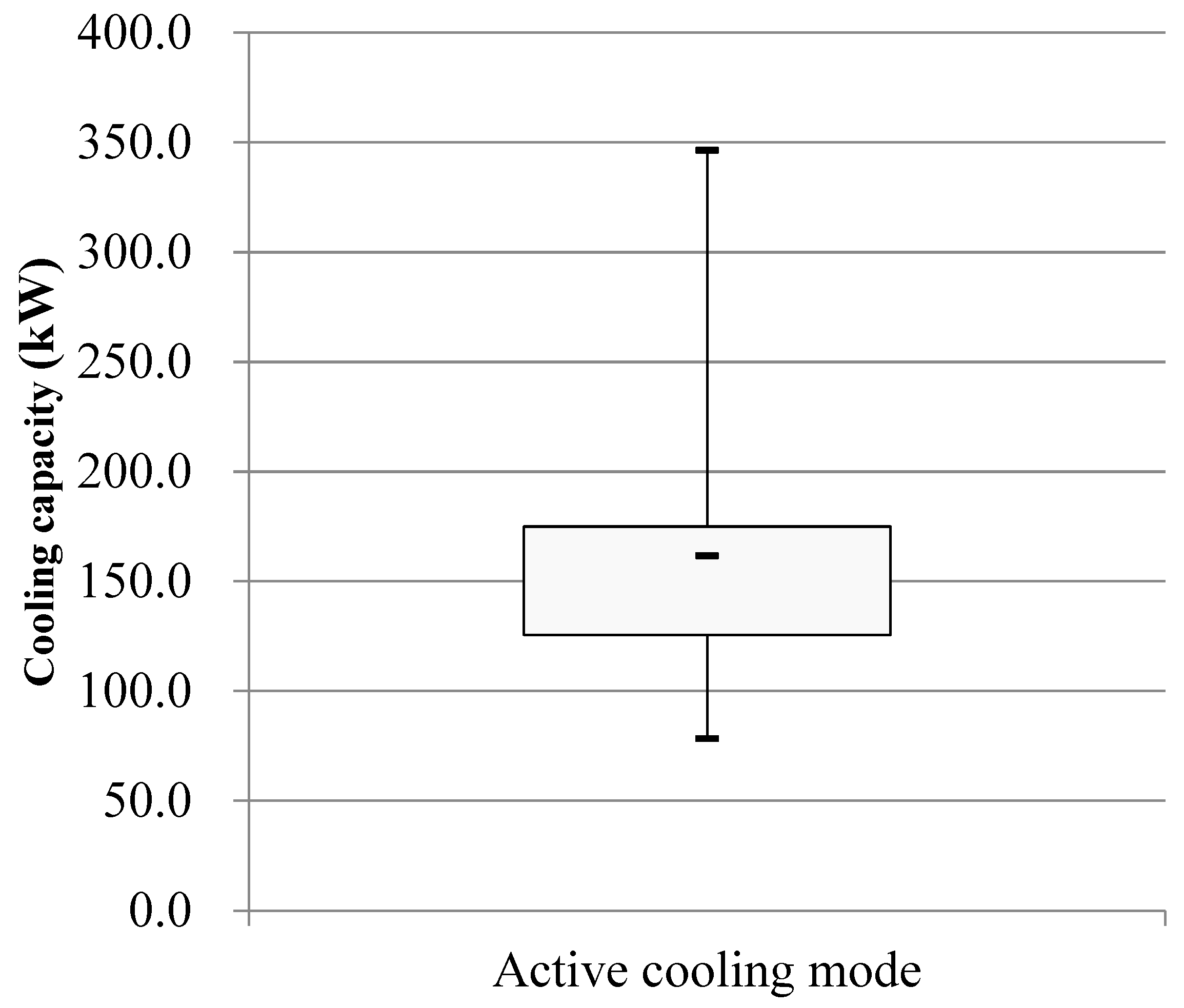

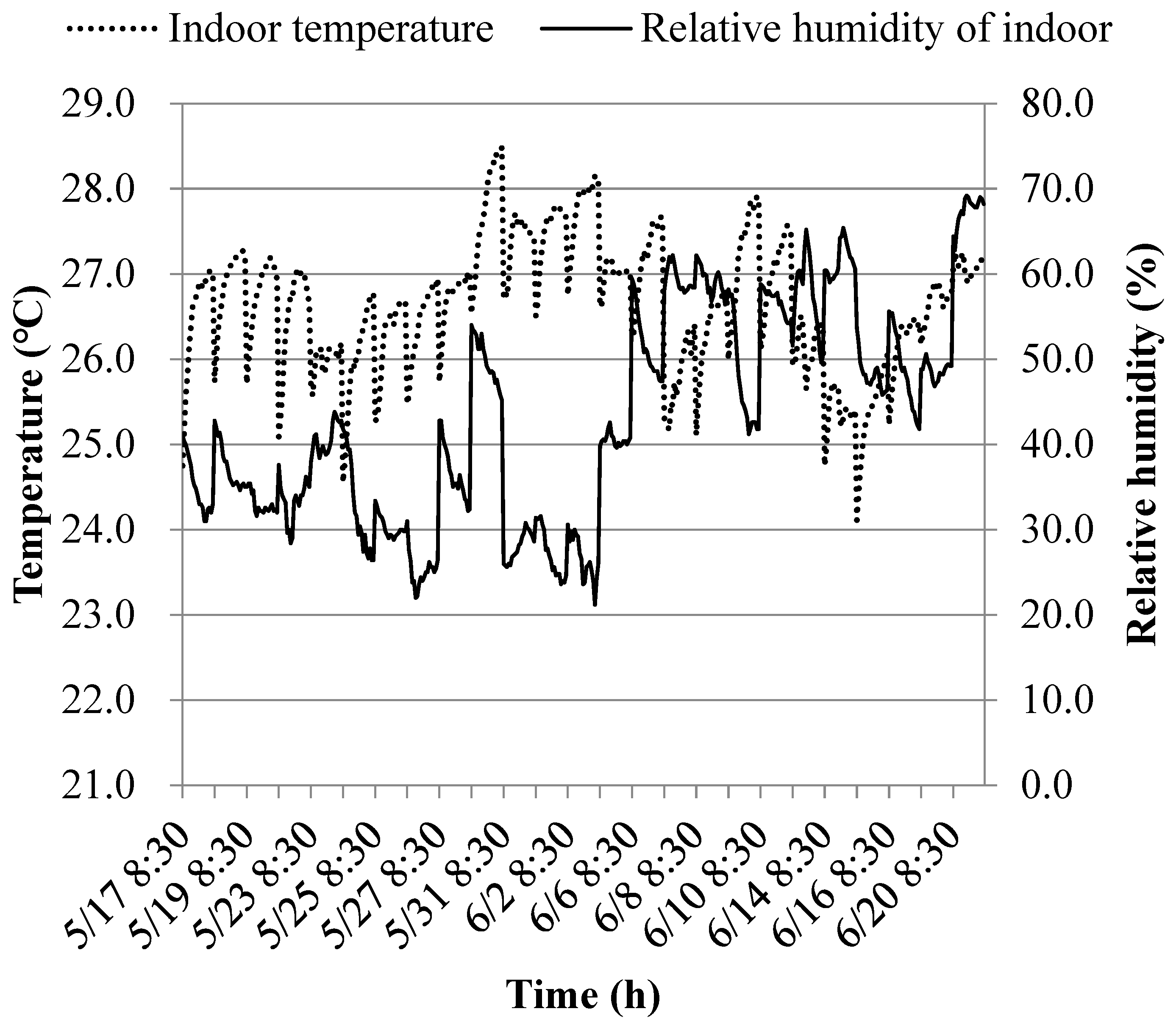

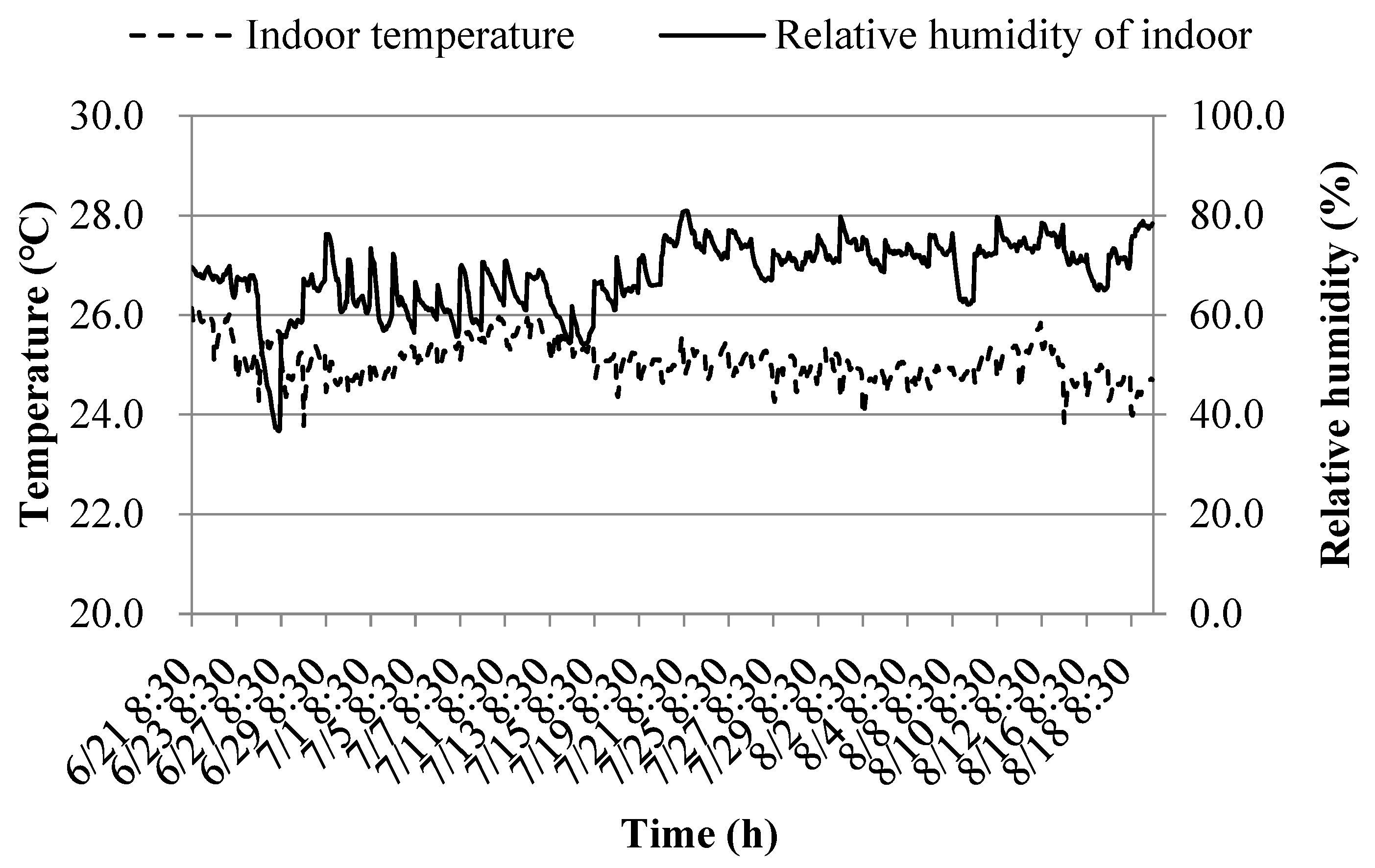

3.1. Building cooling load testing case study

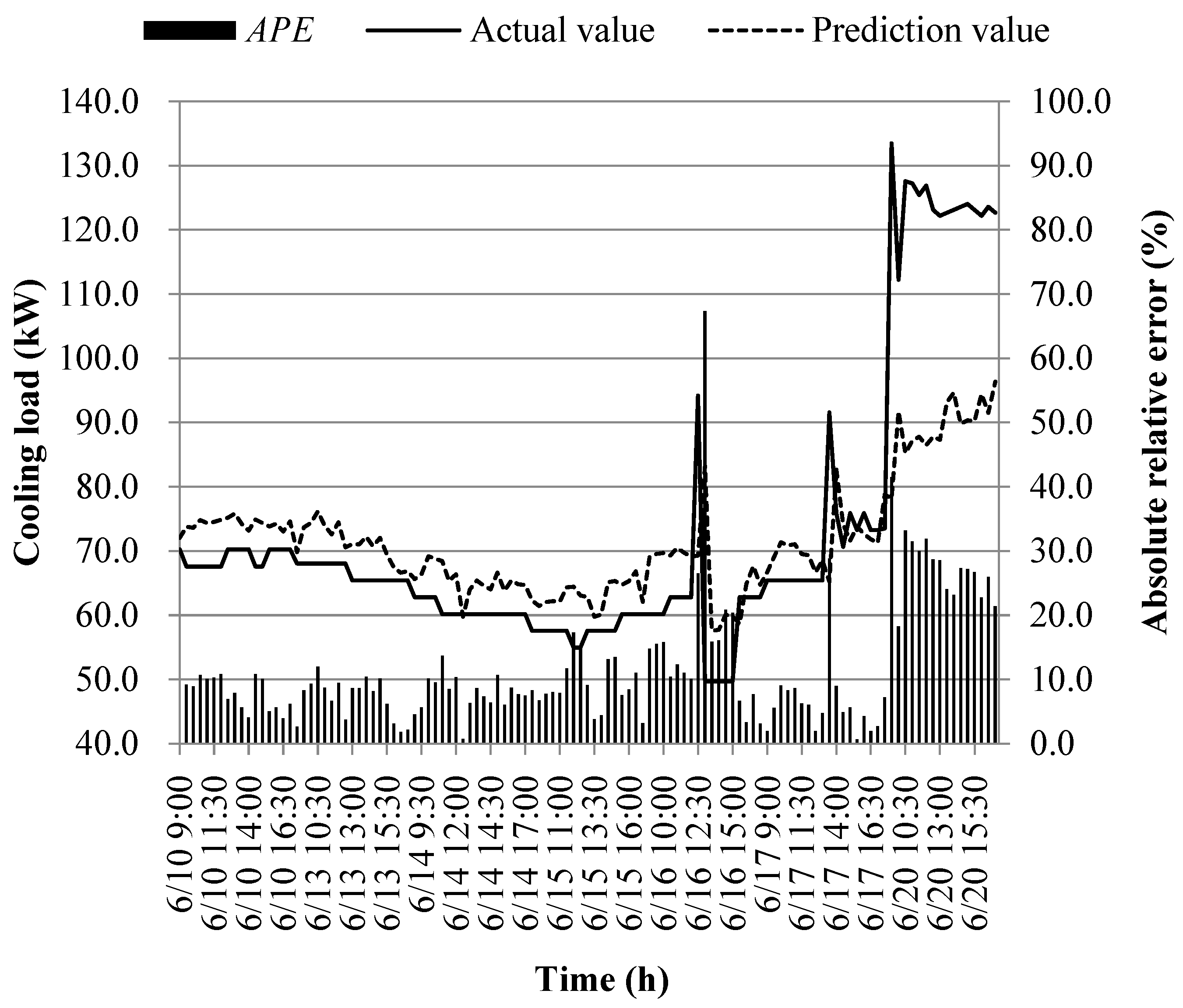

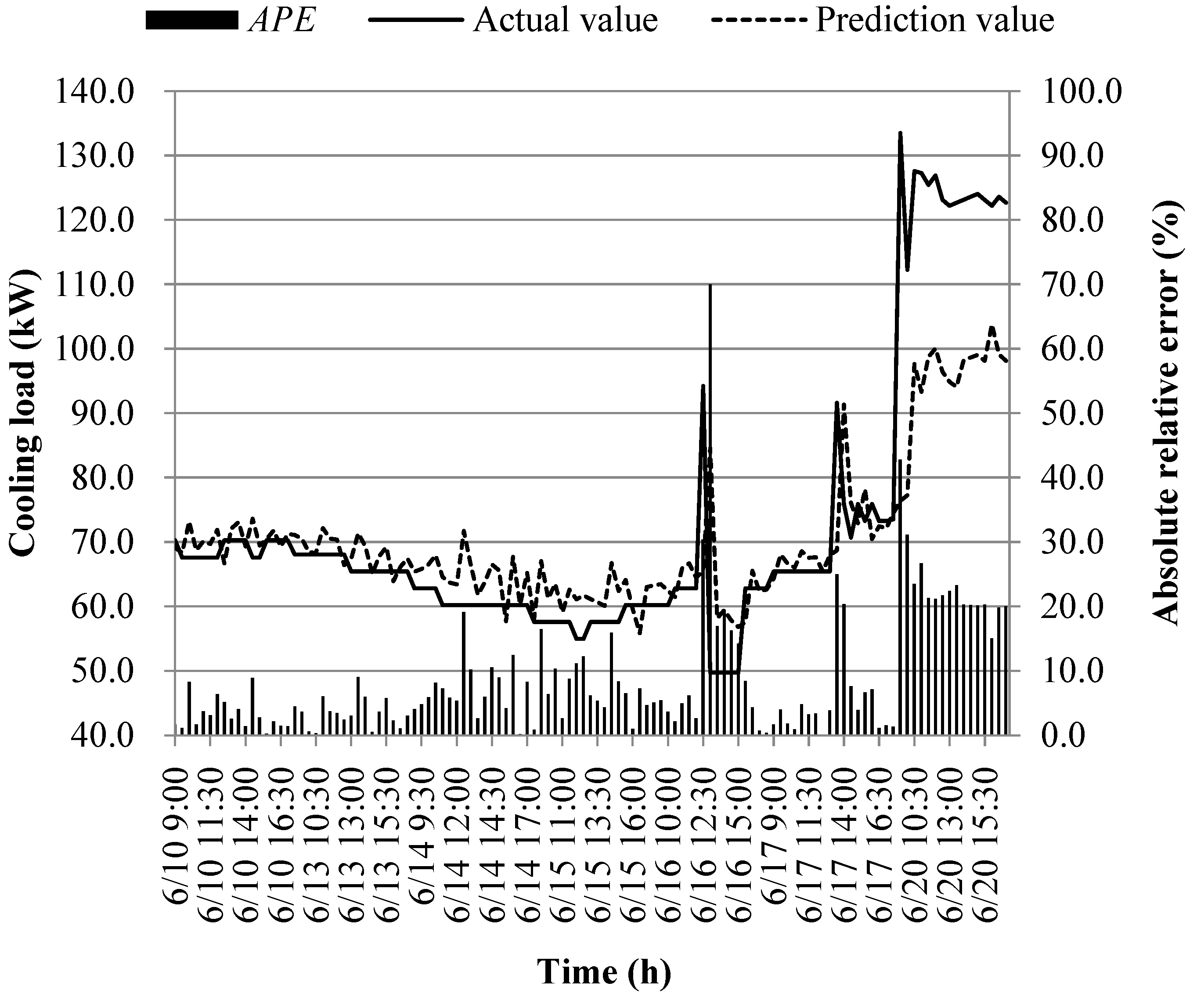

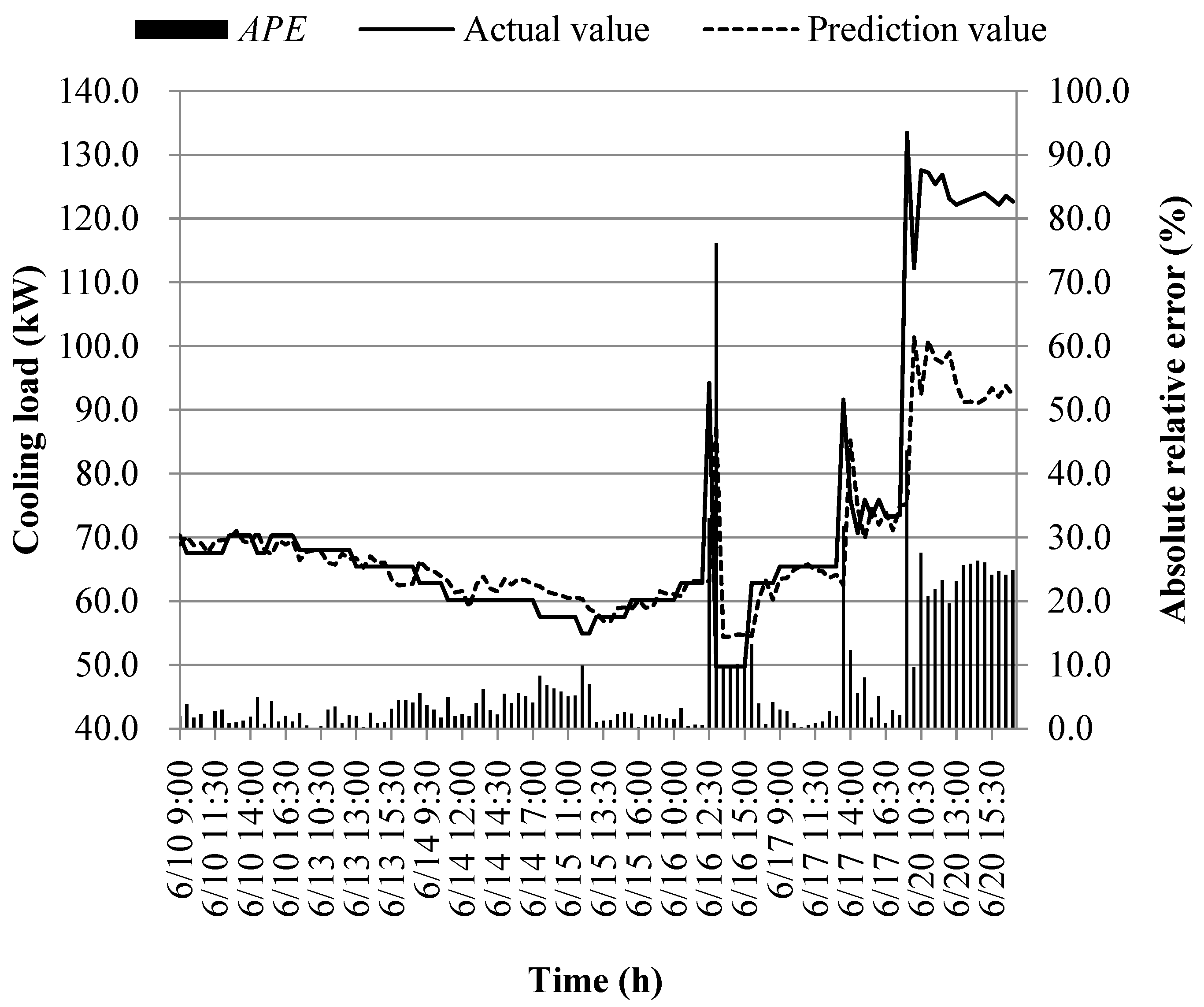

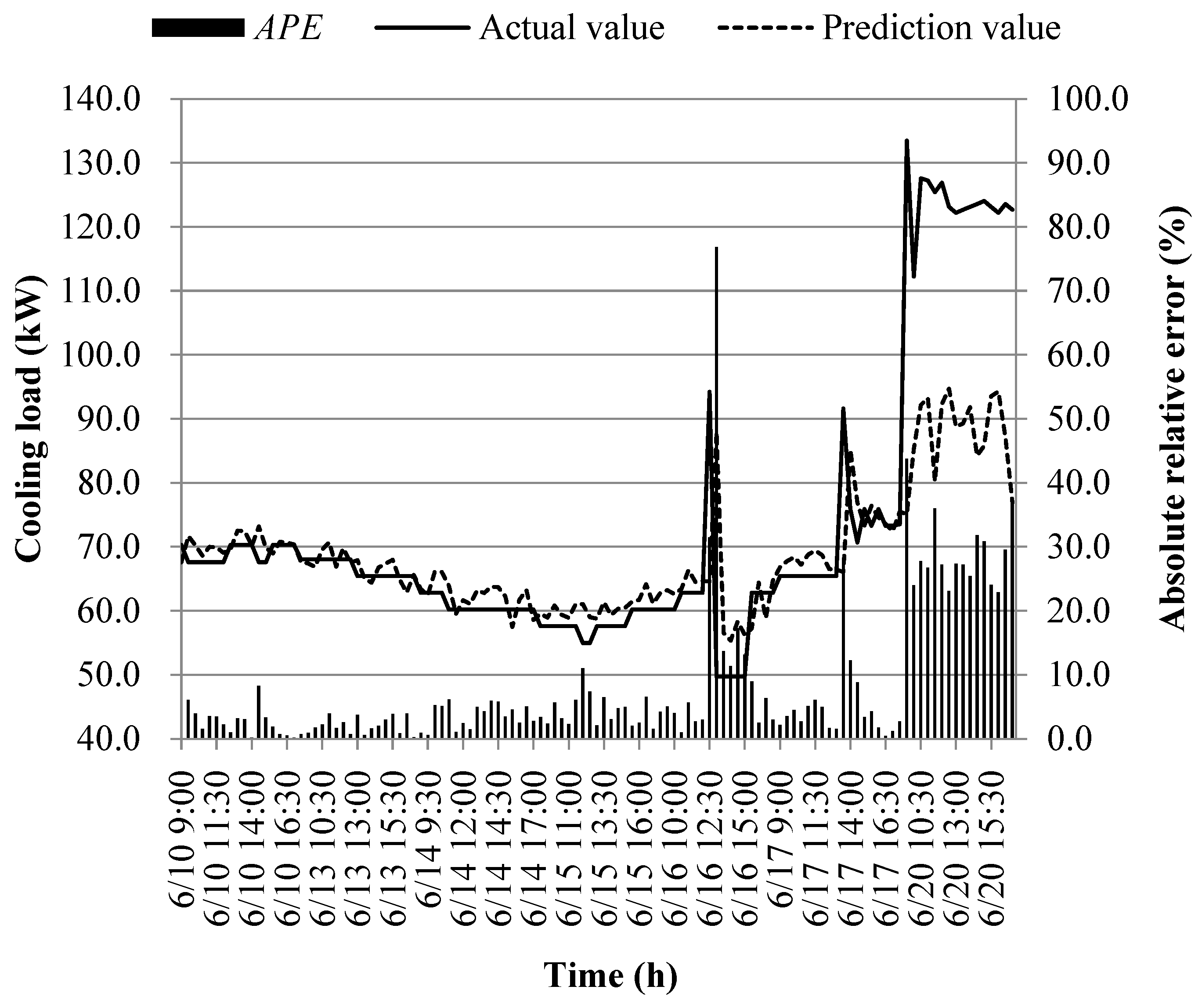

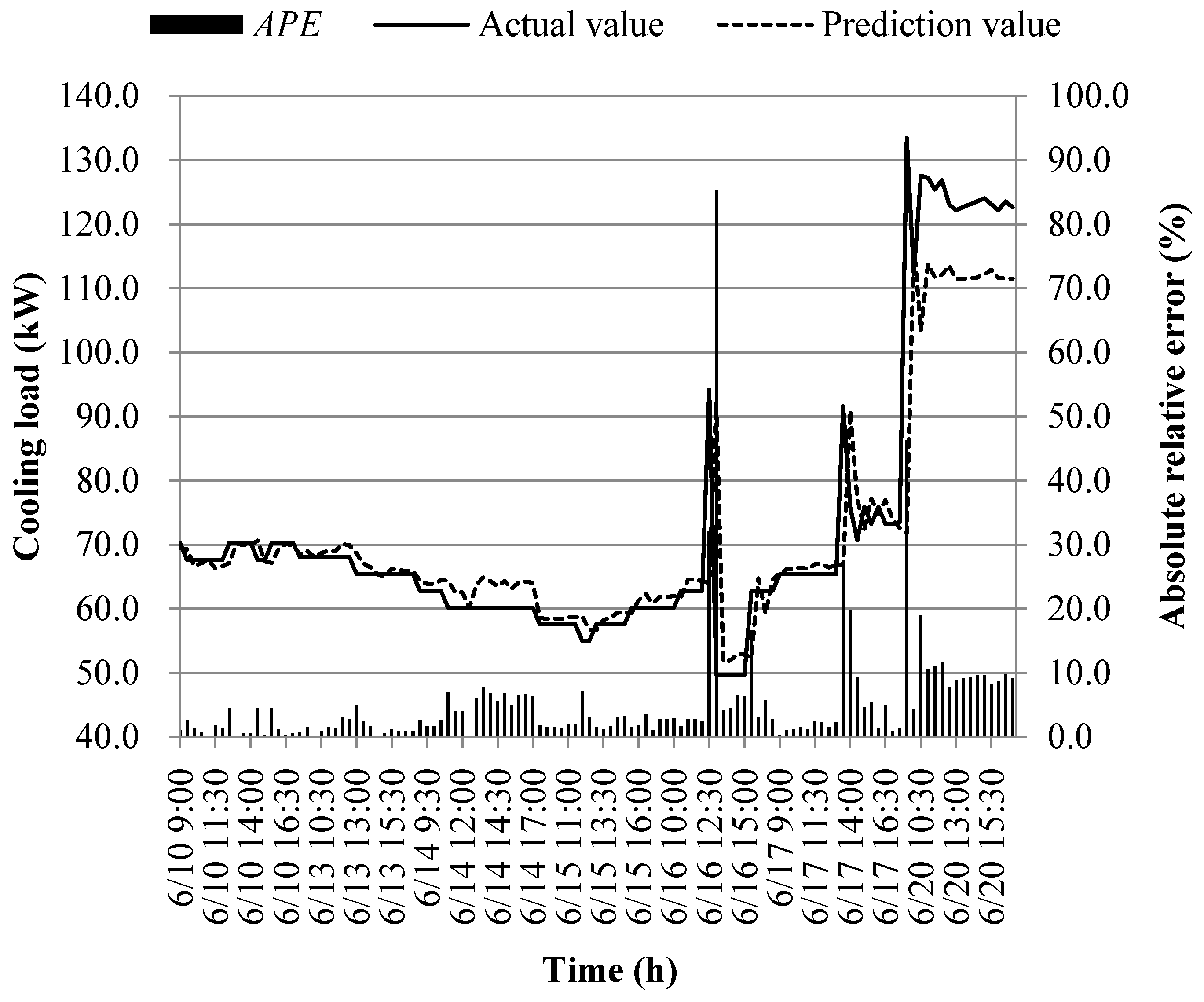

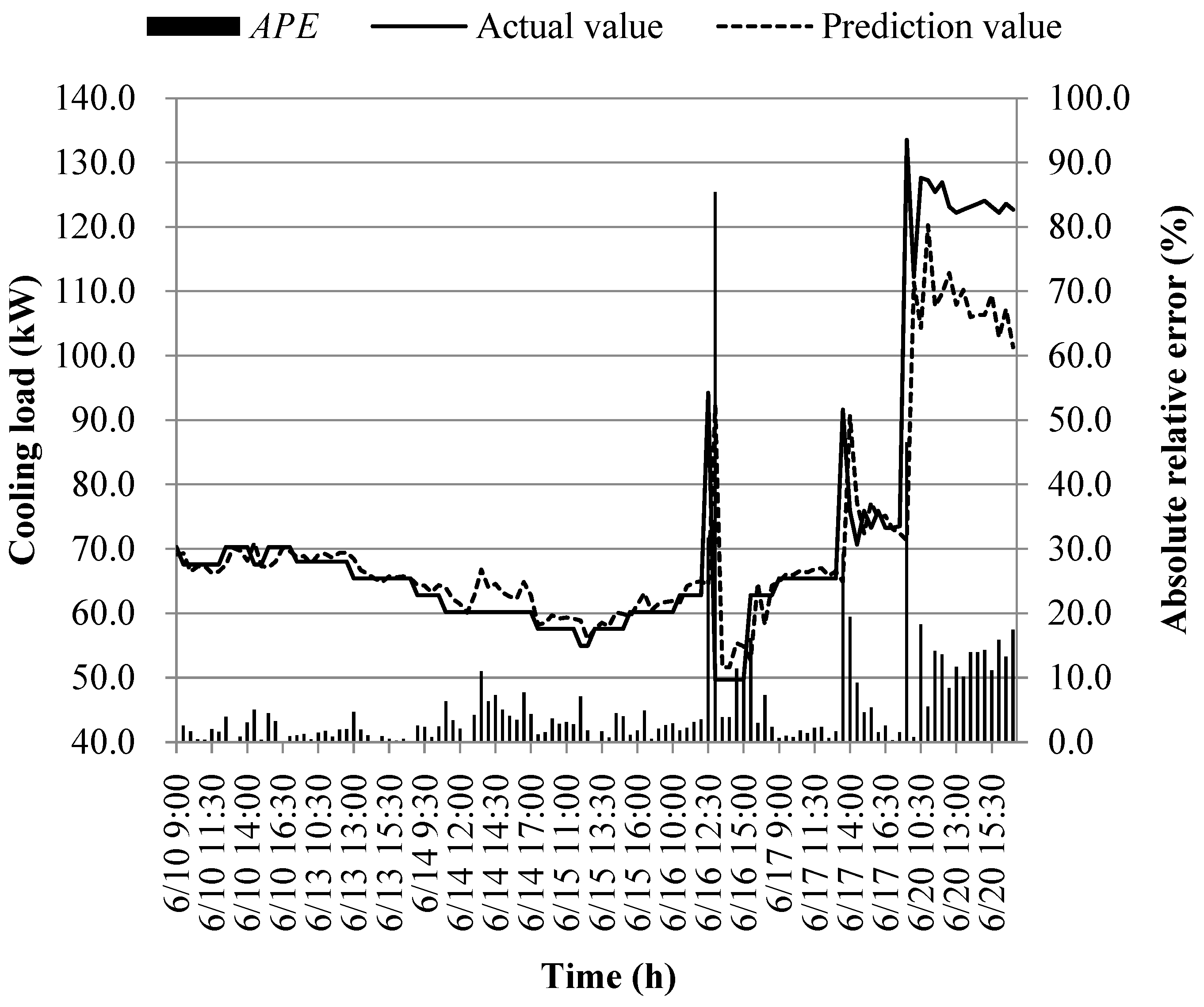

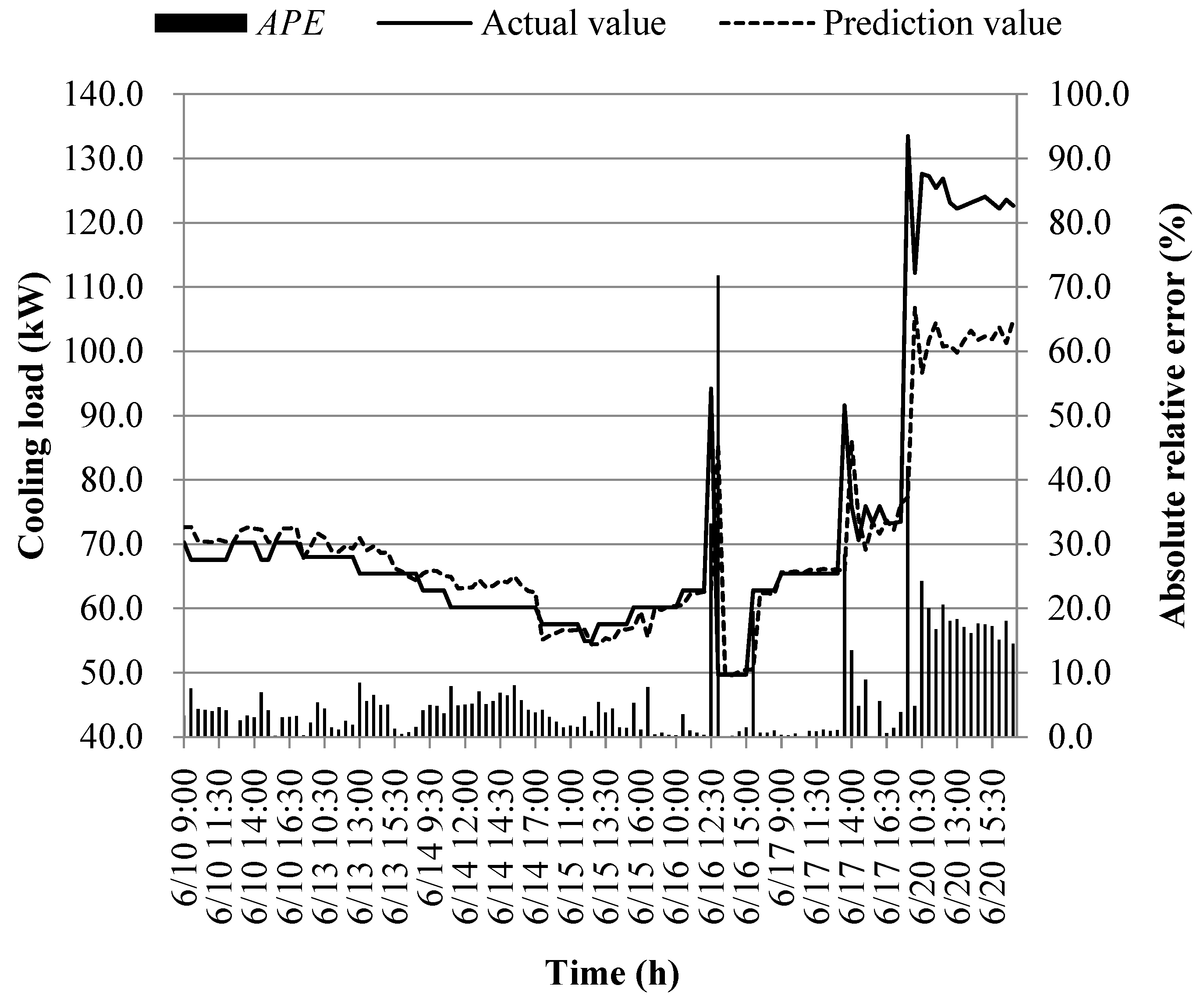

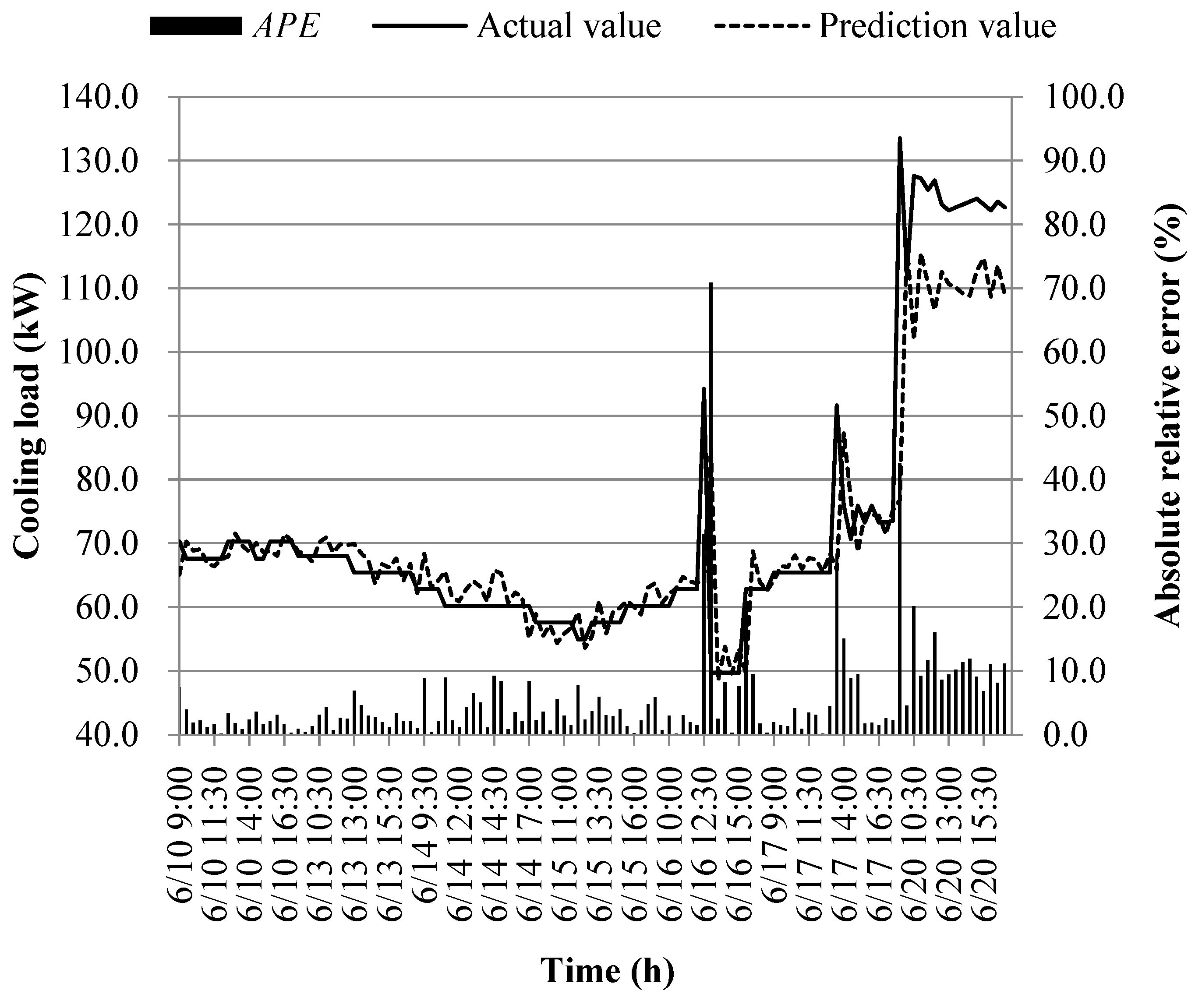

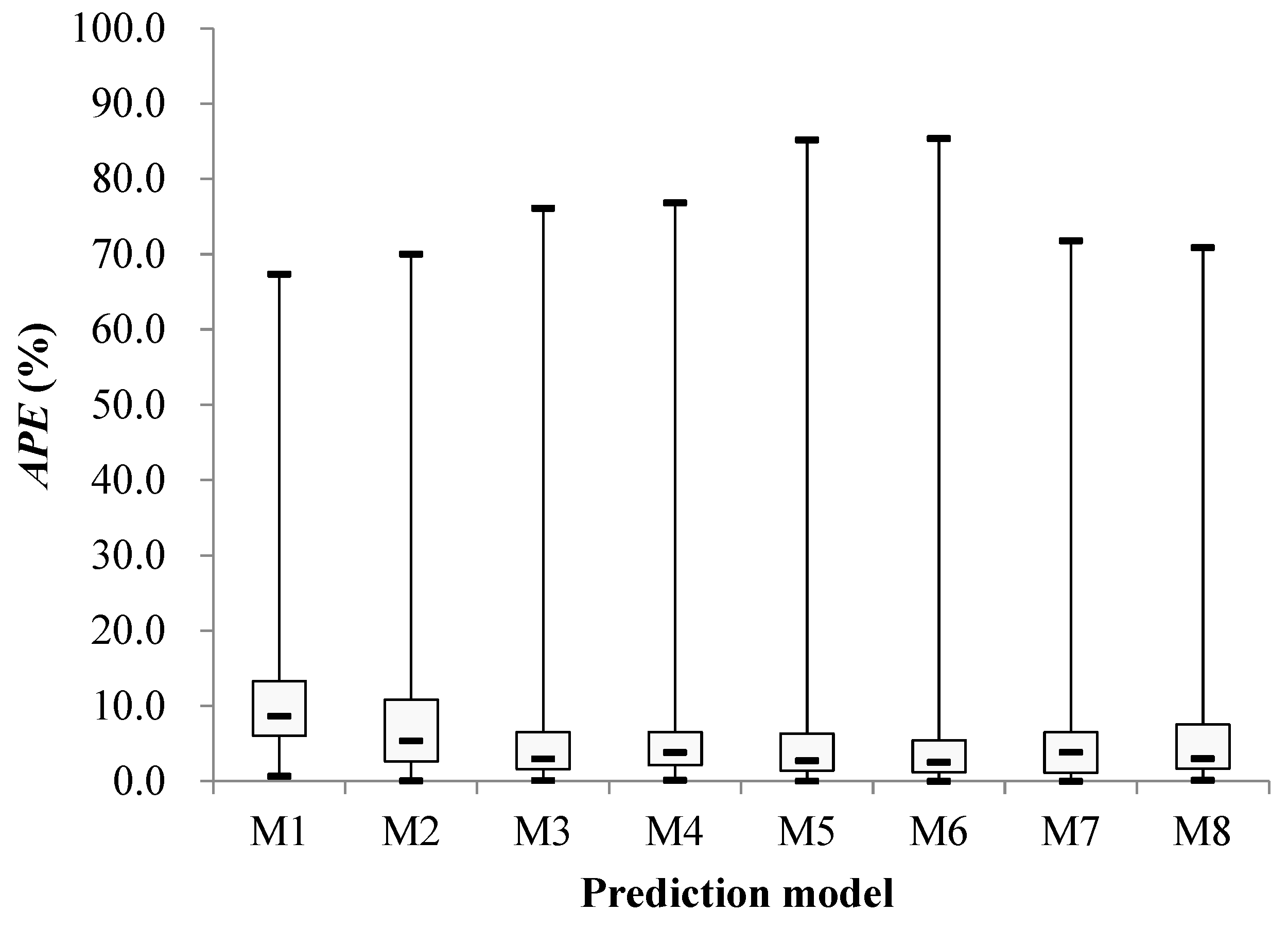

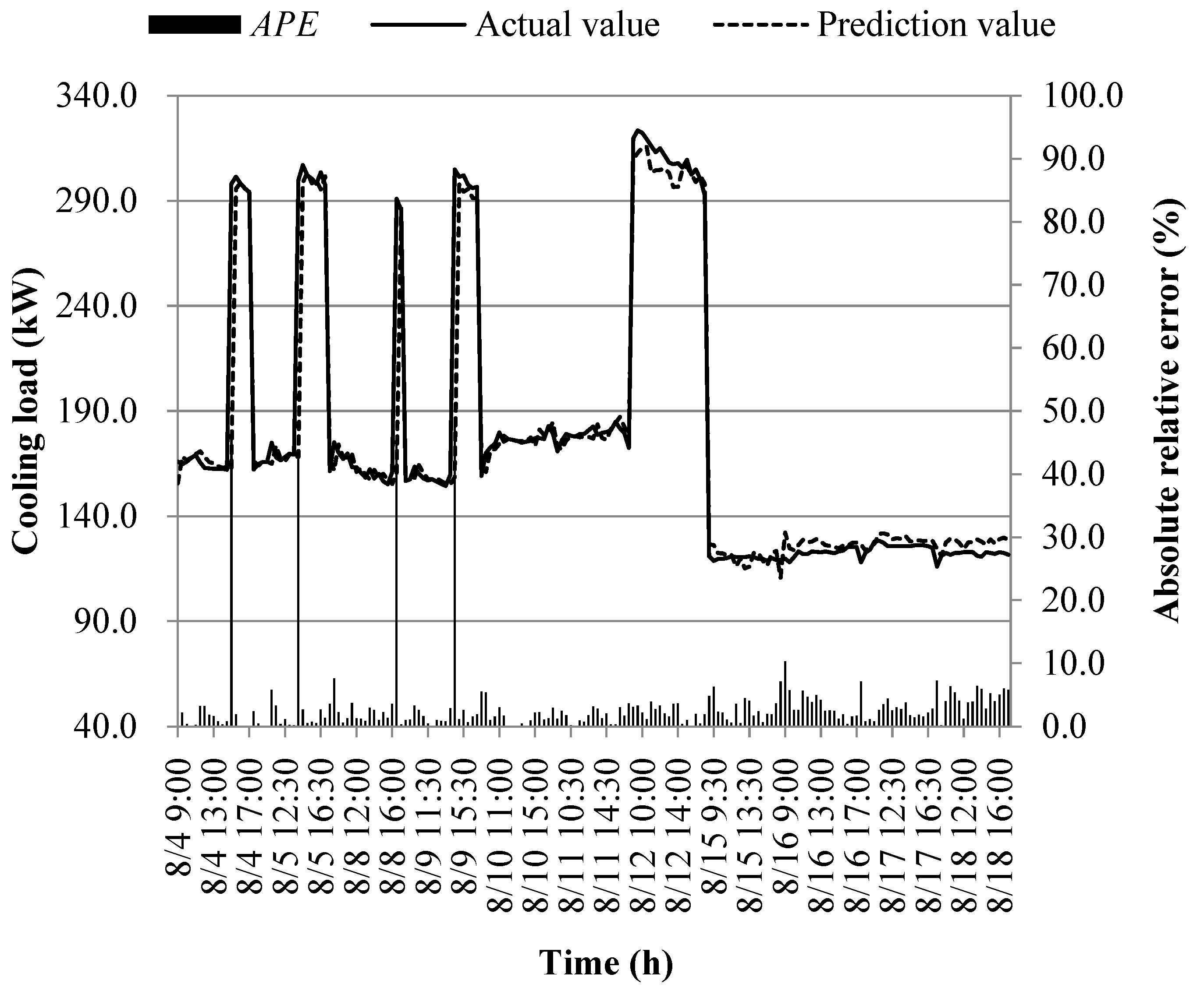

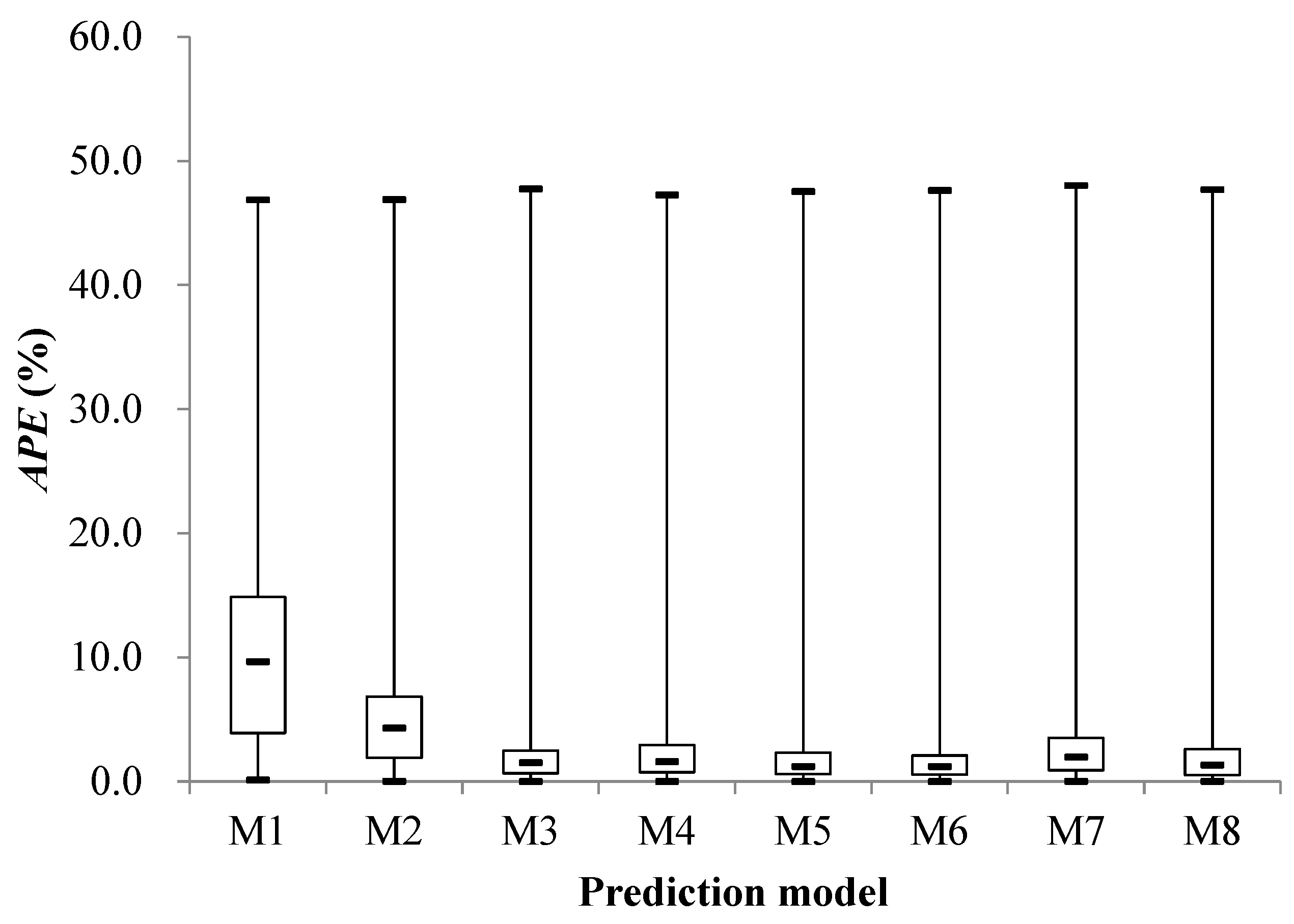
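The measured cooling load Q_c follows from the heat meter's flow rate and the return and supply water temperatures T_re and T_su, together with ρ_w and c_p,w. The minimal sketch below makes these assumptions:

- volumetric flow is given in m³/h, matching the heat meter's range unit;
- ρ_w = 1000 kg/m³ and c_p,w = 4.18 kJ/(kg·℃) are nominal values;
- `flow_m3h` is a hypothetical parameter name, because the flow symbol is not defined in the nomenclature.

```python
def cooling_load_kw(flow_m3h, t_return, t_supply, rho_w=1000.0, cp_w=4.18):
    """Q_c in kW from volumetric flow (m^3/h) and chilled-water temperatures (deg C).

    rho_w (kg/m^3) and cp_w (kJ/(kg*K)) are nominal values assumed for this sketch.
    """
    mass_flow_kg_s = rho_w * flow_m3h / 3600.0              # m^3/h -> kg/s
    return mass_flow_kg_s * cp_w * (t_return - t_supply)    # kJ/s = kW
```

For example, 20 m³/h with a 5 ℃ return-supply difference gives about 116.1 kW under these nominal properties.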

3.2. Early stage cooling load prediction for cooling supply

- Model M1: 6.0% to 13.3%

- Model M2: 2.6% to 10.8%

- Model M3: 1.6% to 6.5%

- Model M4: 2.1% to 6.5%

- Model M5: 1.4% to 6.3%

- Model M6: 1.2% to 5.4%

- Model M7: 1.1% to 6.5%

- Model M8: 1.7% to 7.5%

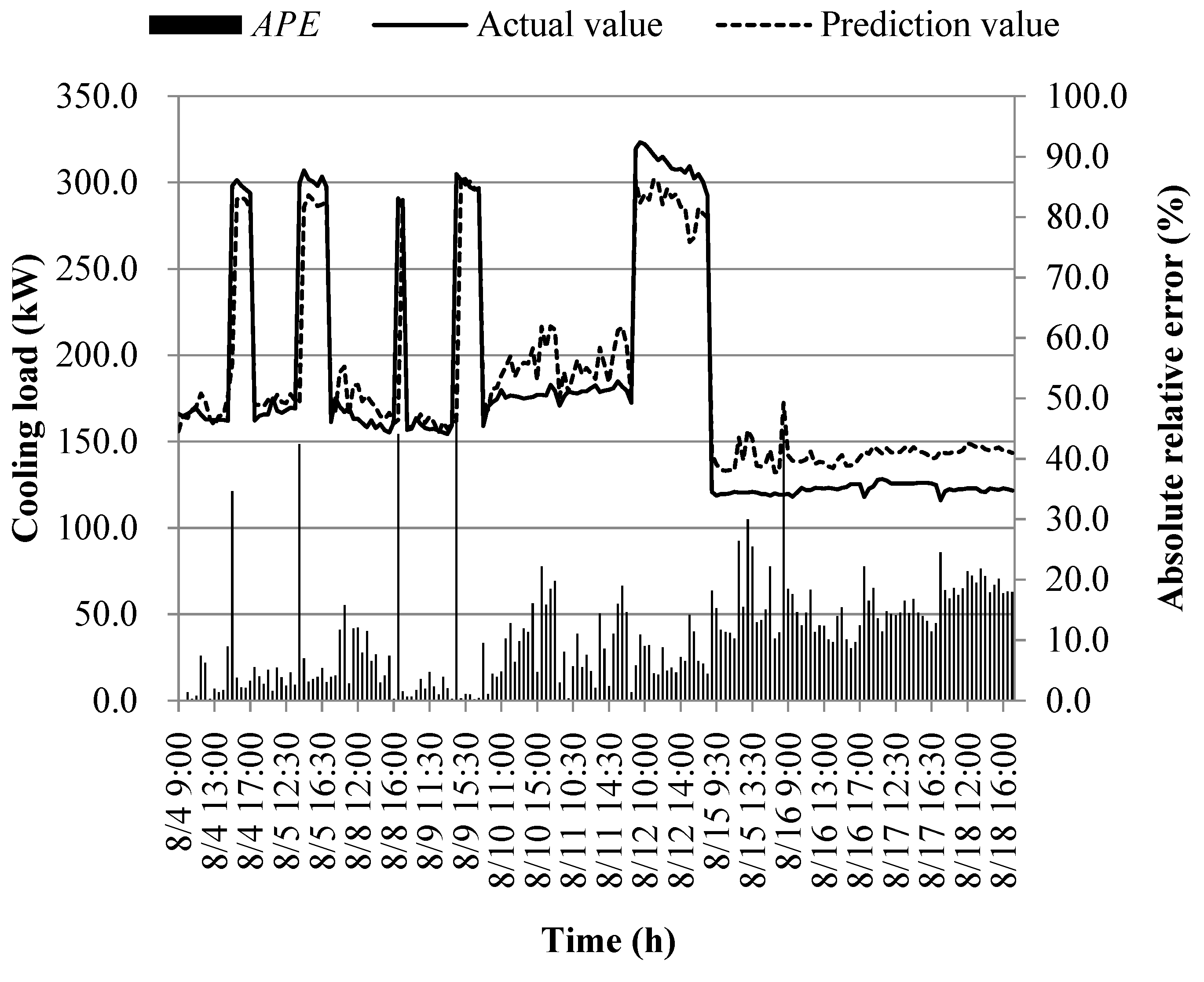

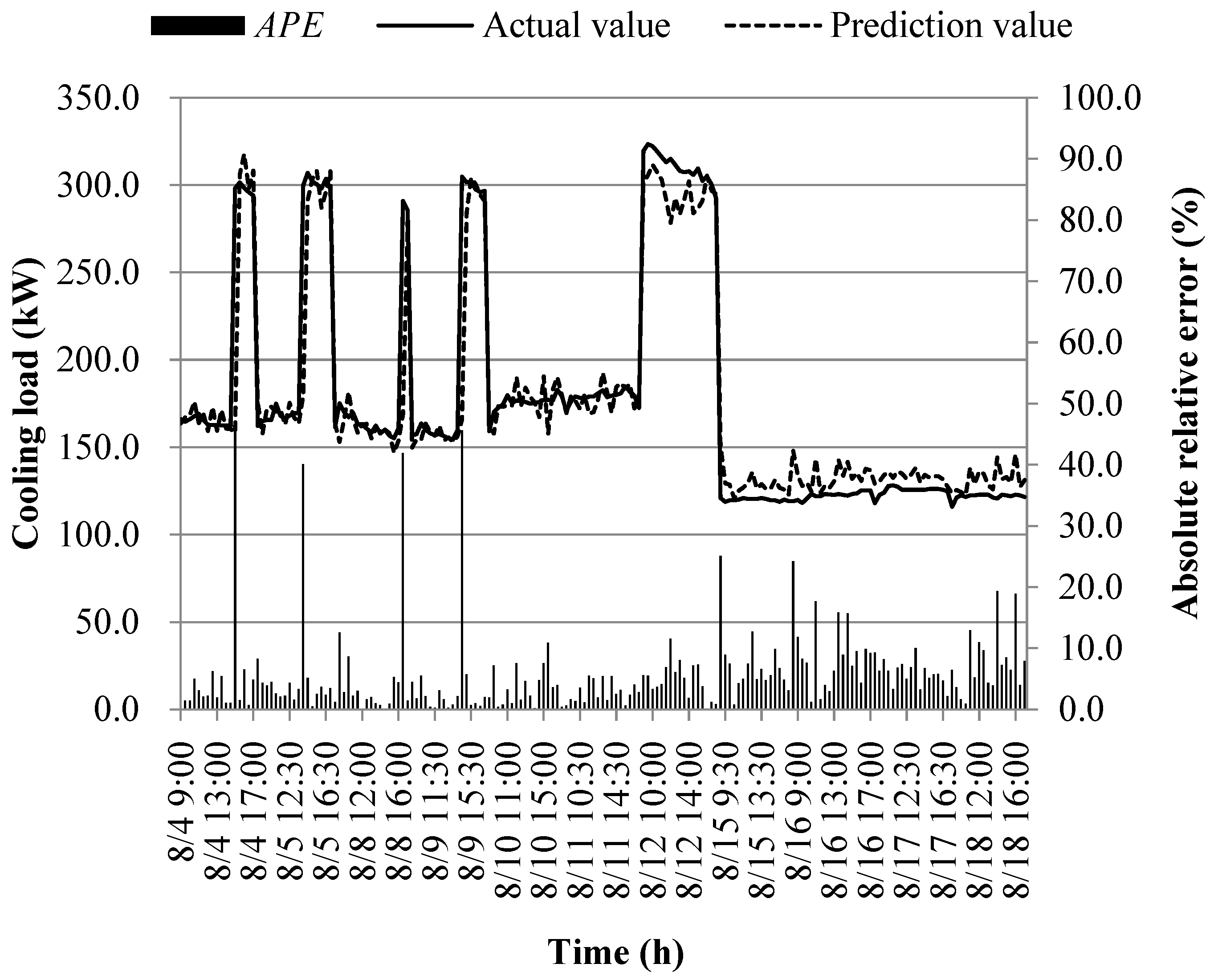

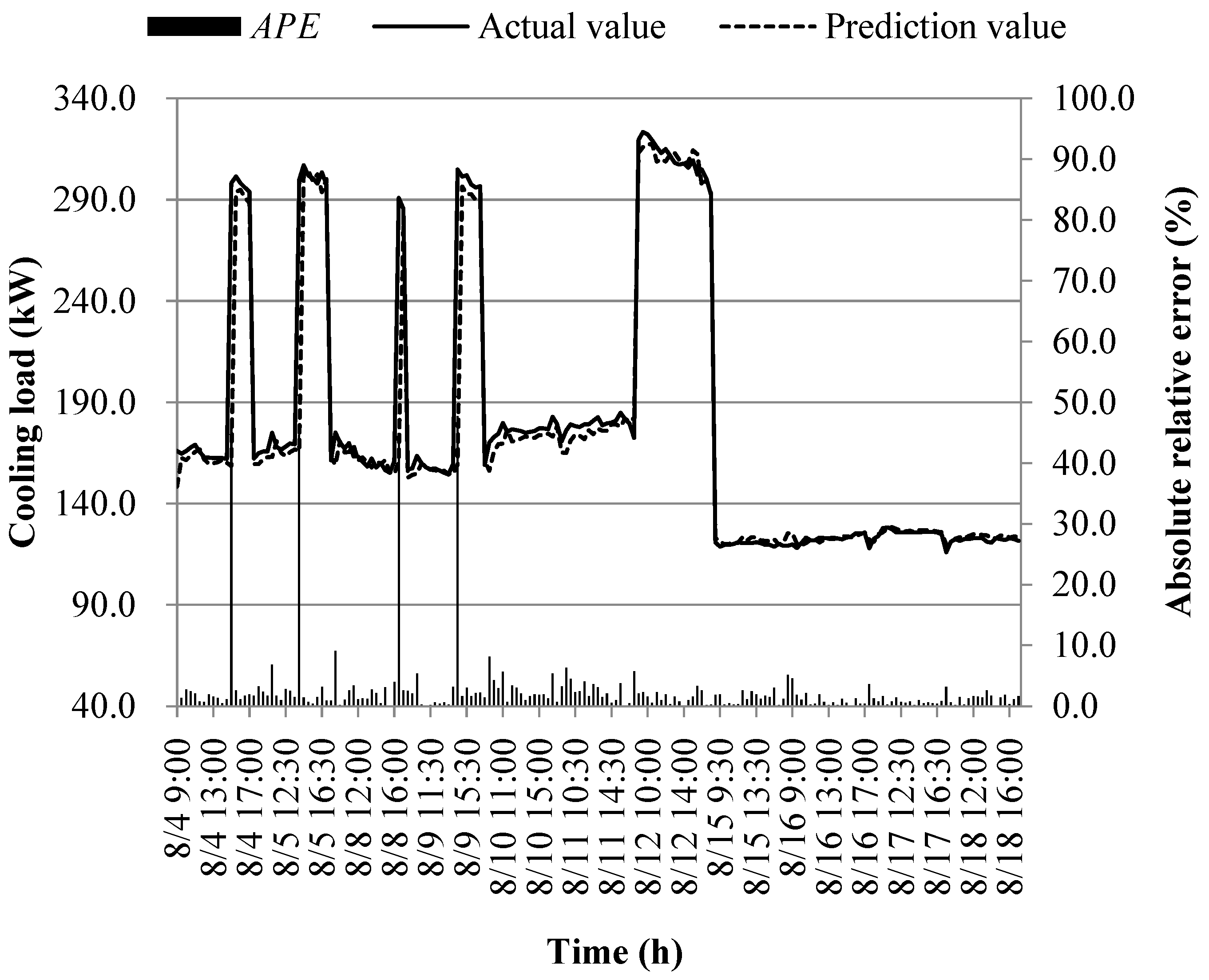

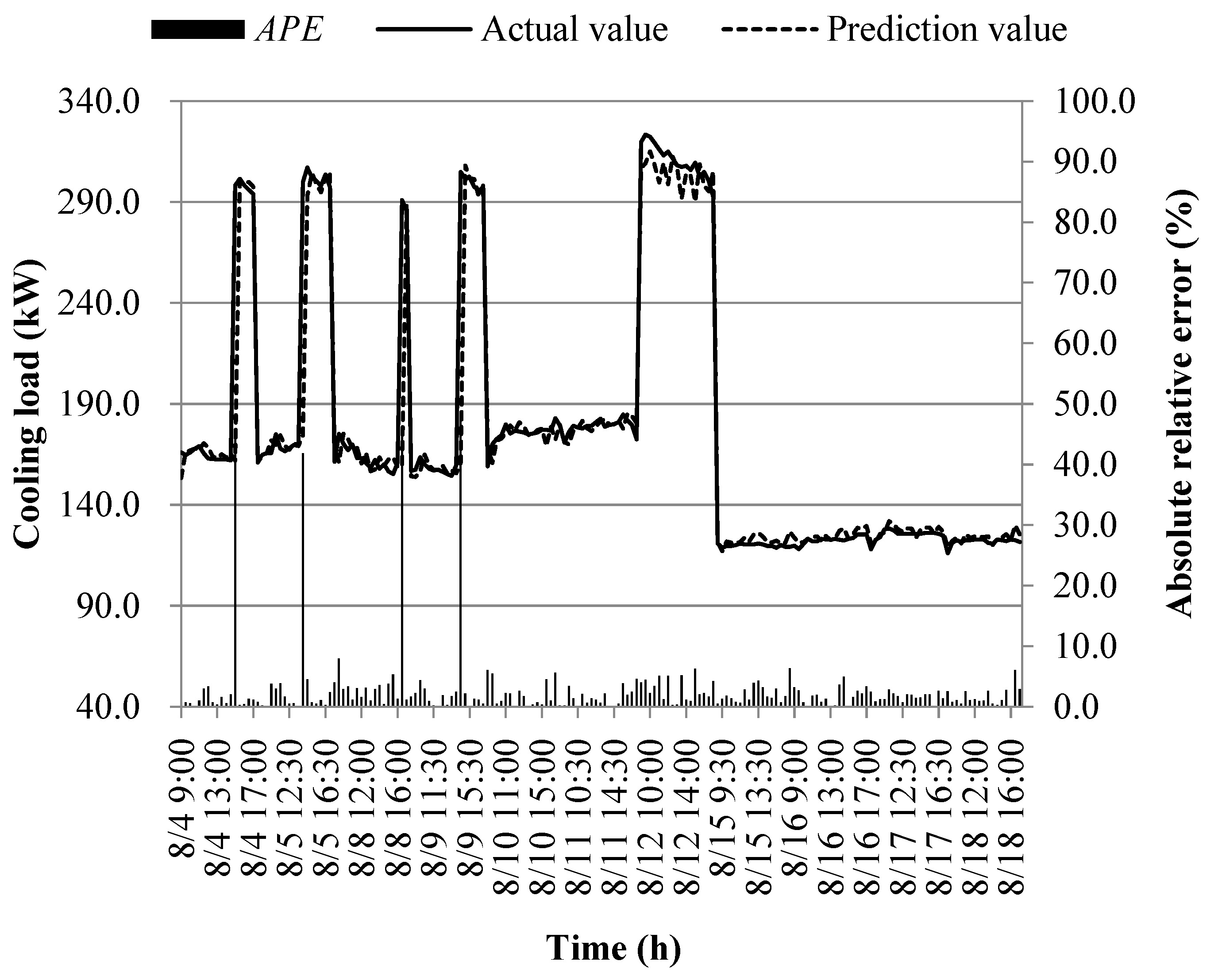

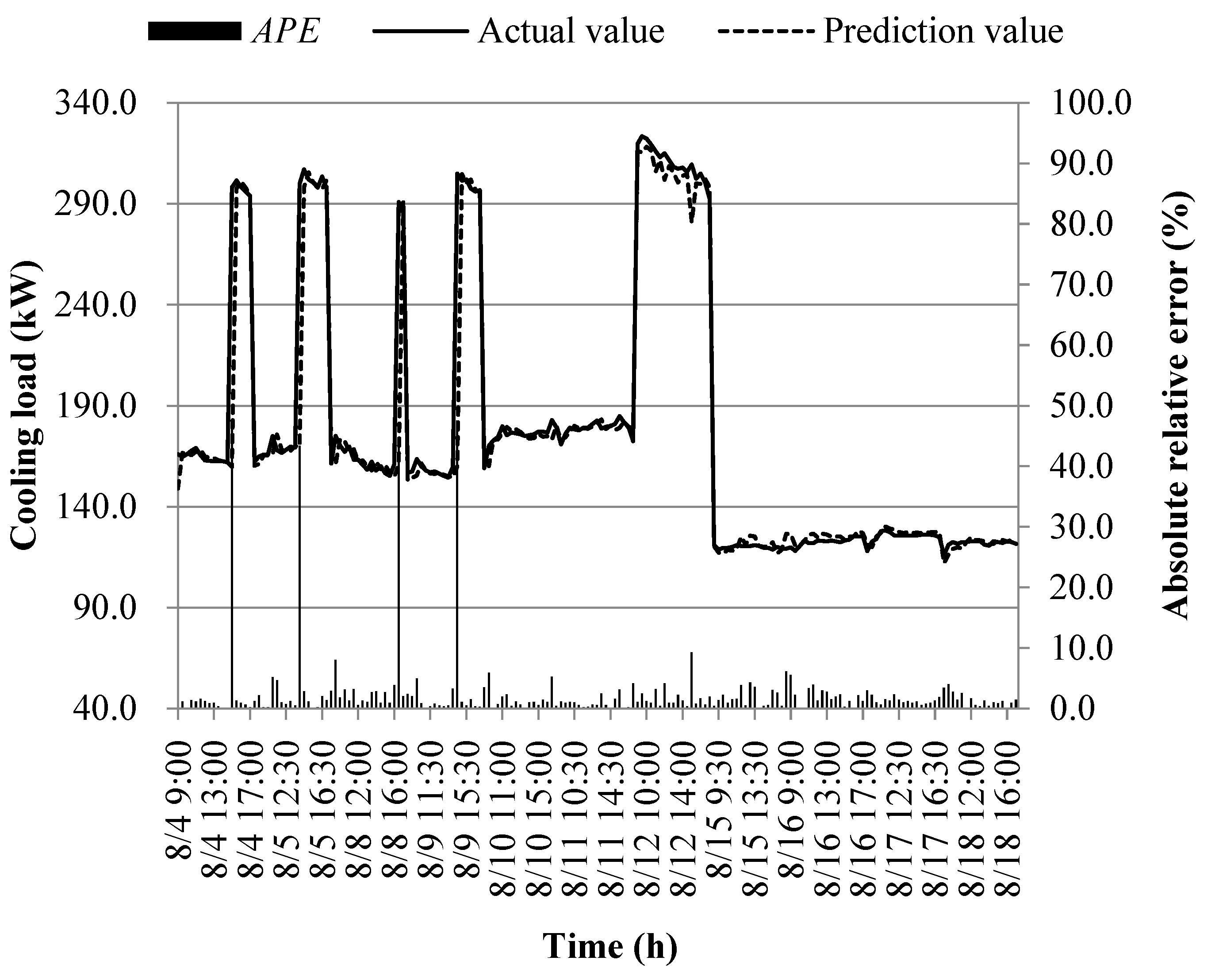

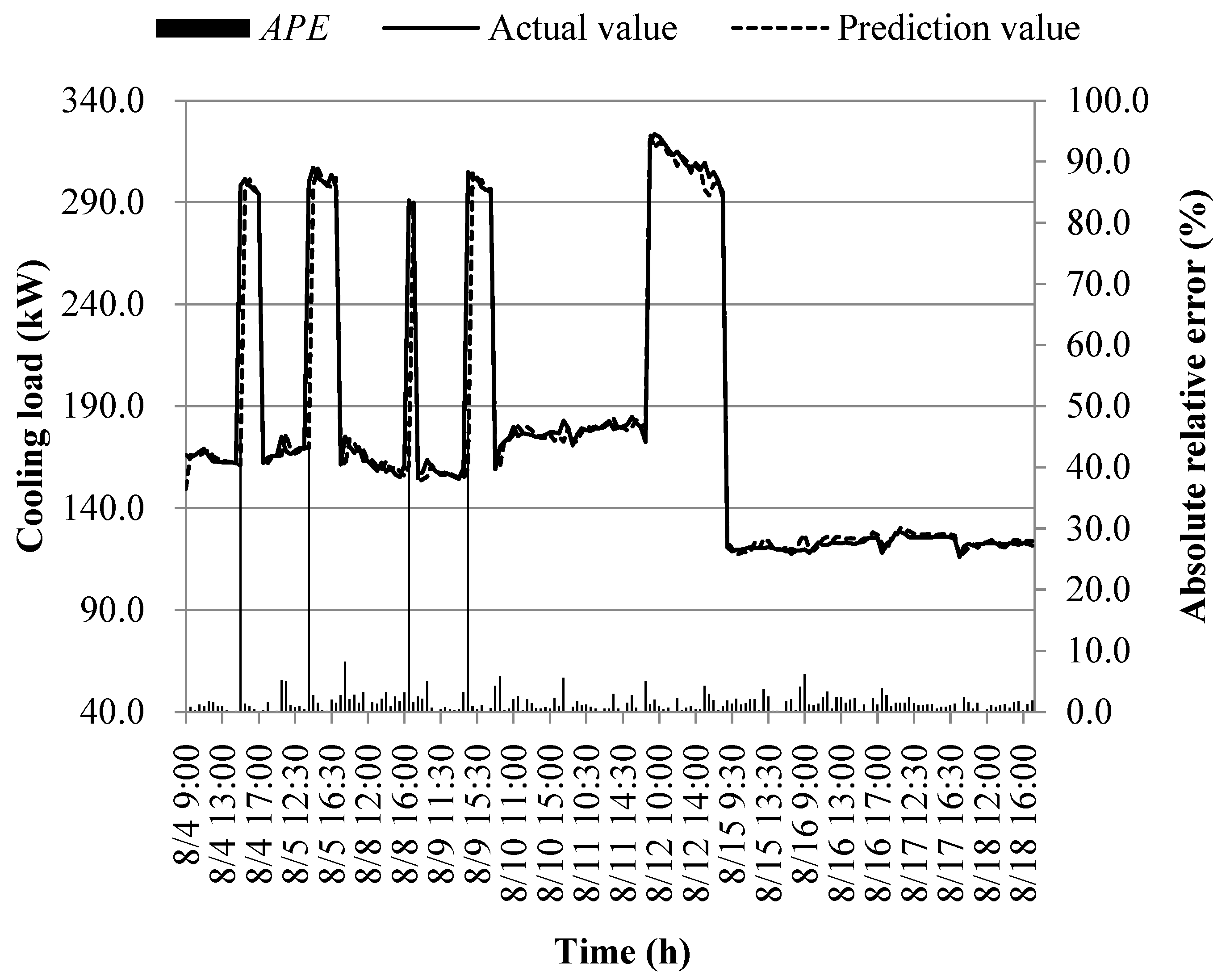

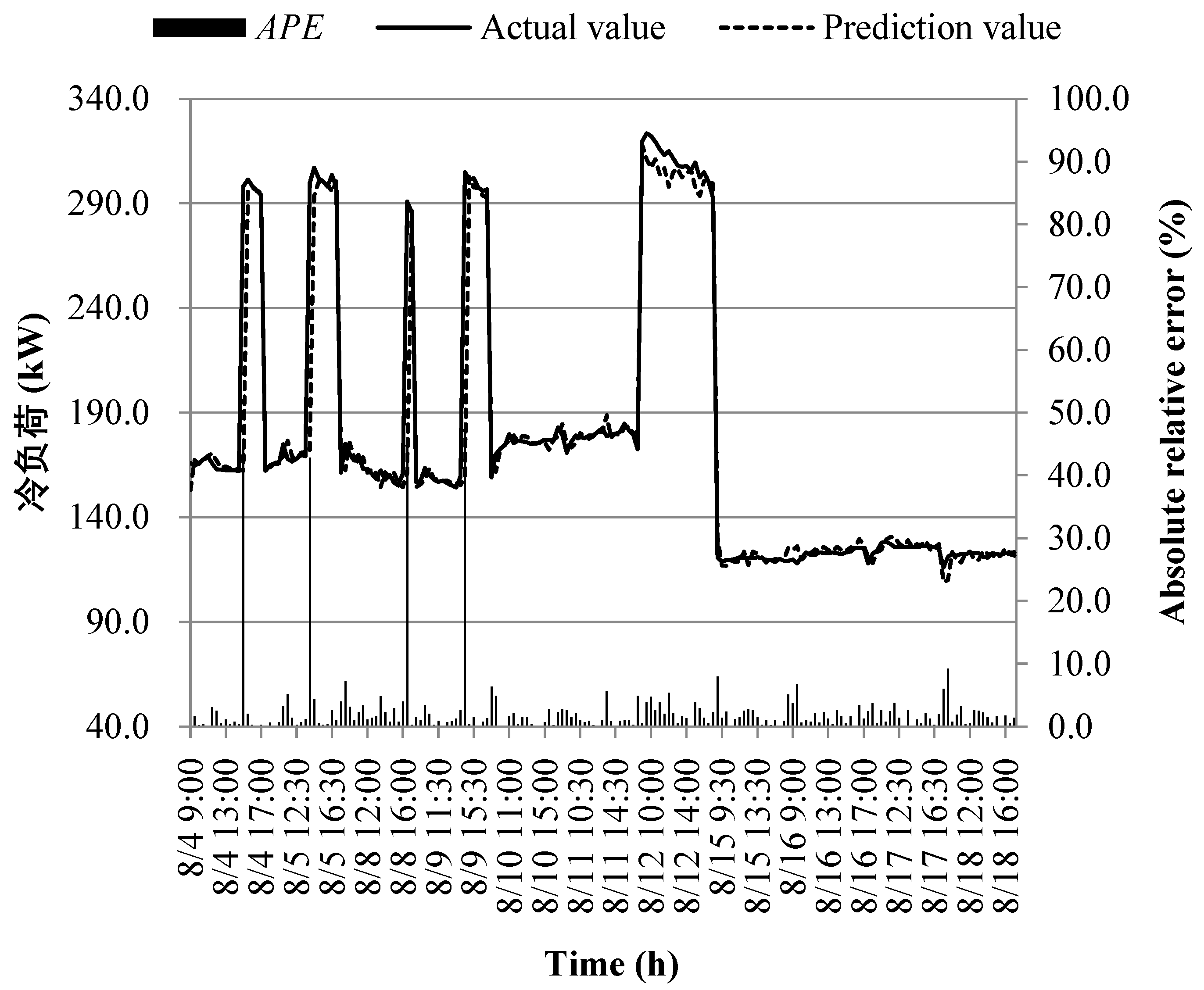

3.3. Middle stage cooling load prediction for cooling supply

4. Conclusions and future work

Conflicts of Interest

| Nomenclature | |
|---|---|
| aqt, ast | Genes of the new chromosomes produced by crossover recombination |
| A | Feature matrix of the input samples |
| A′ | Feature matrix of the input samples after normalization |
| b | Random number |
| B | Weight matrix between the hidden-layer and output-layer neurons |
| c | Random number |
| cp,w | Specific heat capacity of chilled water (kJ/(kg·℃)) |
| C | Penalty factor |
| d | Current iteration number |
| D | Maximum number of generations |
| eall | Global error of the BPNN |
| ek | Error for a single training sample |
| Ek | Desired output value of the BPNN |
| Et | Entropy of the tth meteorological parameter |
| F | Individual fitness value |
| Fl | Fitness value of individual l |
| F(S) | Transfer function of hidden-layer neurons |
| g(x) | SVR model output value |
| G(S) | Transfer function of output-layer neurons |
| Hj | Output value of hidden-layer neuron j |
| Io | Outdoor solar radiation intensity (W/m²) |
| k | Coverage factor |
| K(xi,xr) | Kernel function |
| l | Number of neurons in the hidden layer |
| Lε(g(x),y) | ε-insensitive linear loss function |
| m | Total number of training samples |
| n | Population size |
| Oi | Output vector of the output layer of the neural network |
| Ok | Output value of output-layer neurons |
| p | Number of neurons in the input layer |
| Ph,t | Probability of occurrence of the input variable |
| Pl | Probability that individual l is selected |
| Qc | Cooling load of the heat pump system (kW) |
| r | Random number |
| rh | Comprehensive similarity coefficient |
| RHi | Indoor relative humidity (%) |
| RHo | Outdoor relative humidity (%) |
| Sj | Input signals of hidden-layer neurons |
| Sk | Input signals of output-layer neurons |
| T | Matrix of output-layer neuron outputs for all training samples |
| Ti | Indoor temperature (℃) |
| To | Outdoor temperature (℃) |
| Tre | Return water temperature of the heat pump system (℃) |
| Tsu | Supply water temperature of the heat pump system (℃) |
| U | Calculated parameter, a function of a series of measured parameters |
| v | Linear regression coefficient |
| V | Weight vector |
| Vo | Outdoor wind speed (m/s) |
| Wt | Weight of the tth input variable |
| xh | Feature vector of the input sample |
| xh,p | Feature value of the input variable |
| x'h,t | Feature value of the input variable after dimensionless processing |
| xt | Input variable |
| Xl | Measured parameters |
| Xmax | Upper bound of gene Xqt |
| Xmin | Lower bound of gene Xqt |
| X'qt | Mutated gene |
| Z | Matrix of hidden-layer neuron outputs for all training samples |
| Z+ | Moore-Penrose generalized inverse of the ELM hidden-layer output matrix Z |

| Abbreviations | |
|---|---|
| APE | Absolute Percentage Error |
| BP | Back Propagation |
| BPNN | Back Propagation Neural Network |
| ELM | Extreme Learning Machine |
| GABPNN | Genetic Algorithm-Back Propagation Neural Network |
| MLR | Multivariable Linear Regression |
| | Primary minimum difference |
| | Secondary minimum difference |
| | Maximum of the feature values of the tth input variable at the predicted and historical moments |
| | Primary maximum difference |
| | Secondary maximum difference |
| OECD | Organization for Economic Co-operation and Development |
| SVM | Support Vector Machine |

| Greek symbols | |
|---|---|
| αi | Lagrange multiplier |
| αi* | Lagrange multiplier |
| αj | Threshold of hidden-layer neurons |
| βk | Threshold of output-layer neurons |
| δj | Partial derivative of the error function with respect to the connection weights between input-layer and hidden-layer neurons |
| δk | Partial derivative of the error function with respect to the connection weights between hidden-layer and output-layer neurons |
| ε | Error tolerance of the linear regression function |
| εX,l | Uncertainty of the measured parameter |
| ∆εU | Relative uncertainty of the calculated parameter |
| ∆εX,l | Relative uncertainty of the measured parameter |
| ξi | Slack variable |
| ξi* | Slack variable |
| ρ | Resolution factor |
| ρw | Density of chilled water (kg/m³) |
| σ | Adjustment factor |
| η | Learning rate of the neural network |
| μ | Momentum factor |
| ψkj | Connection weights between hidden-layer and output-layer neurons |
| ψj | Weight vector between hidden-layer and output-layer neurons |
| ωj | Weight vector between input-layer and hidden-layer neurons |
| ωjt | Connection weights between input-layer and hidden-layer neurons |

| Subscripts | |
|---|---|
| h | Time-series (sample) index |
| j | Index of hidden-layer neurons |
| k | Index of output-layer neurons |
| q | Index of chromosomes |


| Instrument name | Model | Test range | Precision |
|---|---|---|---|
| Heat meter | Engelmann SENSOSTAR 2BU | Temperature: 1~150℃; flow rate: 0~120 m³/h | ±0.35℃ / ±2% |
| HOBO data logger | U10-003 | Temperature: -20~70℃; relative humidity: 25~95% | ±0.4℃ / ±3.5% |
| Temperature and humidity sensor (small weather station) | S-THB-M002 | Temperature: -40~75℃; relative humidity: 0~100% | ±0.21℃ / ±2.5% |
| Solar radiation sensor (small weather station) | S-LIB-M003 | 0~1280 W/m² | ±10 W/m² |
| Wind speed sensor (small weather station) | S-WSB-M003 | 0~76 m/s | ±4% |

| Parameter name | Unit | Relative uncertainty (%) |
|---|---|---|
| Return water temperature of free cooling mode | ℃ | ±1.06 |
| Supply water temperature of free cooling mode | ℃ | ±1.25 |
| Cooling capacity of free cooling mode | kW | ±9.77 |
| Flow rate of free cooling mode | m³/h | ±1.77 |
| Indoor temperature | ℃ | ±0.90 |
| Indoor relative humidity | % | ±3.26 |
| Outdoor temperature | ℃ | ±0.40 |
| Outdoor relative humidity | % | ±2.65 |
| Solar radiation intensity | W/m² | ±1.49 |
| Outdoor wind speed | m/s | ±2.31 |
| Supply water temperature of heat pump unit A | ℃ | ±2.43 |
| Return water temperature of heat pump unit A | ℃ | ±1.67 |
| Cooling capacity of heat pump unit A | kW | ±7.56 |
| Cooling energy efficiency of heat pump unit A | - | ±7.53 |
| Supply water temperature of heat pump unit B | ℃ | ±1.88 |
| Return water temperature of heat pump unit B | ℃ | ±1.44 |
| Cooling capacity of heat pump unit B | kW | ±8.69 |

| Parameter | Value |
|---|---|
| Population size | 50 |
| Maximum number of generations | 400 |
| Number of binary digits per variable | 20 |
| Generation gap | 0.95 |
| Crossover probability | 0.7 |
| Mutation probability | 0.01 |

| Parameter | Value |
|---|---|
| Maximum number of training epochs | 1000 |
| Momentum factor | 0.9 |
| Learning rate | 0.1 |
| Training goal | 0.005 |

| Indicators | Building cooling load and its influencing factors | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | Q | To | RHo | Io | Vo | Ti | RHi | Q(t-1) |
| Average value | 72.766 | 29.013 | 39.697 | 476.889 | 1.060 | 26.675 | 43.249 | 73.980 |
| Standard deviation | 15.523 | 4.123 | 18.196 | 238.526 | 0.871 | 0.755 | 12.712 | 16.471 |
| Test statistic | 0.147 | 0.041 | 0.156 | 0.061 | 0.124 | 0.051 | 0.098 | 0.146 |
| Asymptotic significance | 0.000 | 0.089 | 0.000 | 0.001 | 0.000 | 0.011 | 0.000 | 0.000 |

| Indicator | Factors affecting building cooling loads | | | | | | |
|---|---|---|---|---|---|---|---|
| | To | RHo | Io | Vo | Ti | RHi | Q(t-1) |
| Correlation coefficient | 0.119* | 0.171** | 0.144** | -0.129** | 0.221** | 0.180** | 0.956** |
| Significance | 0.014 | 0.000 | 0.003 | 0.008 | 0.000 | 0.000 | 0.000 |

| Name | Main input variables | Detailed description |
|---|---|---|
| M1 | RHo; Io; Vo; Ti; RHi; Q(t-1) | BPNN |
| M2 | RHo; Io; Vo; Ti; RHi; Q(t-1) | Similar sample screening + BPNN |
| M3 | RHo; Io; Vo; Ti; RHi; Q(t-1) | GABPNN |
| M4 | RHo; Io; Vo; Ti; RHi; Q(t-1) | Similar sample screening + GABPNN |
| M5 | RHo; Io; Vo; Ti; RHi; Q(t-1) | SVR |
| M6 | RHo; Io; Vo; Ti; RHi; Q(t-1) | Similar sample screening + SVR |
| M7 | RHo; Io; Vo; Ti; RHi; Q(t-1) | ELM neural network |
| M8 | RHo; Io; Vo; Ti; RHi; Q(t-1) | Similar sample screening + ELM neural network |

| Model | Training MAPE (%) | Training R² | Training RMSE (kW) | Prediction MAPE (%) | Prediction R² | Prediction RMSE (kW) |
|---|---|---|---|---|---|---|
| M1 | 3.7 | 0.892 | 4.092 | 11.5 | 0.535 | 14.611 |
| M2 | 3.5 | 0.895 | 3.937 | 8.8 | 0.658 | 12.536 |
| M3 | 2.6 | 0.917 | 3.593 | 7.2 | 0.632 | 12.992 |
| M4 | 2.9 | 0.915 | 3.531 | 8.3 | 0.528 | 14.717 |
| M5 | 2.2 | 0.908 | 3.777 | 5.4 | 0.811 | 9.319 |
| M6 | 2.5 | 0.903 | 3.763 | 5.7 | 0.782 | 10.007 |
| M7 | 2.8 | 0.903 | 3.889 | 6.4 | 0.742 | 10.881 |
| M8 | 3.0 | 0.897 | 3.885 | 5.7 | 0.820 | 9.101 |
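The tables report MAPE, R² and RMSE for the training and prediction periods. Below is a minimal sketch of these metrics in plain Python. R² is taken here as the coefficient of determination, 1 - SS_res/SS_tot. This is an assumption, since the text does not state whether R² is that quantity or a squared correlation coefficient.

```python
import math

def mape(y, y_hat):
    """Mean absolute percentage error (%); y must not contain zeros."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(y, y_hat)) / len(y)

def rmse(y, y_hat):
    """Root mean square error, in the units of y (kW here)."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(y, y_hat)) / len(y))

def r2(y, y_hat):
    """Coefficient of determination 1 - SS_res / SS_tot (assumed definition)."""
    mean = sum(y) / len(y)
    ss_res = sum((a - p) ** 2 for a, p in zip(y, y_hat))
    ss_tot = sum((a - mean) ** 2 for a in y)
    return 1.0 - ss_res / ss_tot
```

For measured loads [100, 200] kW and predictions [110, 190] kW, these give MAPE = 7.5%, RMSE = 10 kW and R² = 0.96.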

| Indicators | Building cooling load and its influencing factors | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | Q | To | RHo | Io | Vo | Ti | RHi | Q(t-1) |
| Average value | 169.105 | 31.644 | 62.727 | 360.958 | 0.690 | 25.078 | 68.716 | 168.599 |
| Standard deviation | 53.664 | 3.354 | 15.966 | 202.608 | 0.543 | 0.380 | 5.948 | 53.055 |
| Test statistic | 0.245 | 0.025 | 0.080 | 0.046 | 0.135 | 0.067 | 0.105 | 0.243 |
| Asymptotic significance | 0.000 | 0.200 | 0.000 | 0.003 | 0.000 | 0.000 | 0.000 | 0.000 |

| Indicator | Factors affecting building cooling loads | | | | | | |
|---|---|---|---|---|---|---|---|
| | To | RHo | Io | Vo | Ti | RHi | Q(t-1) |
| Correlation coefficient | 0.427** | -0.008 | 0.189** | 0.137** | 0.185** | 0.250** | 0.966** |
| Significance | 0.000 | 0.841 | 0.000 | 0.001 | 0.000 | 0.000 | 0.000 |

| Name | Main input variables | Detailed description |
|---|---|---|
| M1 | To; Io; Vo; Ti; RHi; Q(t-1) | BPNN |
| M2 | To; Io; Vo; Ti; RHi; Q(t-1) | Similar sample screening + BPNN |
| M3 | To; Io; Vo; Ti; RHi; Q(t-1) | GABPNN |
| M4 | To; Io; Vo; Ti; RHi; Q(t-1) | Similar sample screening + GABPNN |
| M5 | To; Io; Vo; Ti; RHi; Q(t-1) | SVR |
| M6 | To; Io; Vo; Ti; RHi; Q(t-1) | Similar sample screening + SVR |
| M7 | To; Io; Vo; Ti; RHi; Q(t-1) | ELM neural network |
| M8 | To; Io; Vo; Ti; RHi; Q(t-1) | Similar sample screening + ELM neural network |

| Model | Training MAPE (%) | Training R² | Training RMSE (kW) | Prediction MAPE (%) | Prediction R² | Prediction RMSE (kW) |
|---|---|---|---|---|---|---|
| M1 | 7.6 | 0.889 | 15.641 | 10.5 | 0.850 | 25.580 |
| M2 | 4.8 | 0.911 | 11.819 | 5.7 | 0.893 | 21.588 |
| M3 | 2.7 | 0.963 | 9.020 | 2.8 | 0.903 | 20.574 |
| M4 | 2.8 | 0.962 | 8.964 | 2.9 | 0.905 | 20.289 |
| M5 | 2.2 | 0.967 | 8.508 | 2.6 | 0.905 | 20.370 |
| M6 | 2.4 | 0.964 | 8.708 | 2.5 | 0.906 | 20.208 |
| M7 | 2.7 | 0.964 | 8.961 | 3.3 | 0.904 | 20.467 |
| M8 | 2.6 | 0.948 | 8.647 | 2.7 | 0.906 | 20.247 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).