In this section, an autonomous driving function is selected for testing, simulation scenarios are generated with the functional test scenario generation method introduced above, and simulation tests are run in simulation software to demonstrate the effectiveness of the scenarios produced by this method.

5.1. Functional Test Specific Scenario Generation

Advanced Driving Assistance System (ADAS) is an intelligent system that uses a variety of on-board sensors to collect information on the environment, road network, road facilities and road participants while the vehicle is in motion, and then warns of impending danger through system calculation and analysis. ADAS has been widely applied at the L1 and L2 levels of autonomous driving.

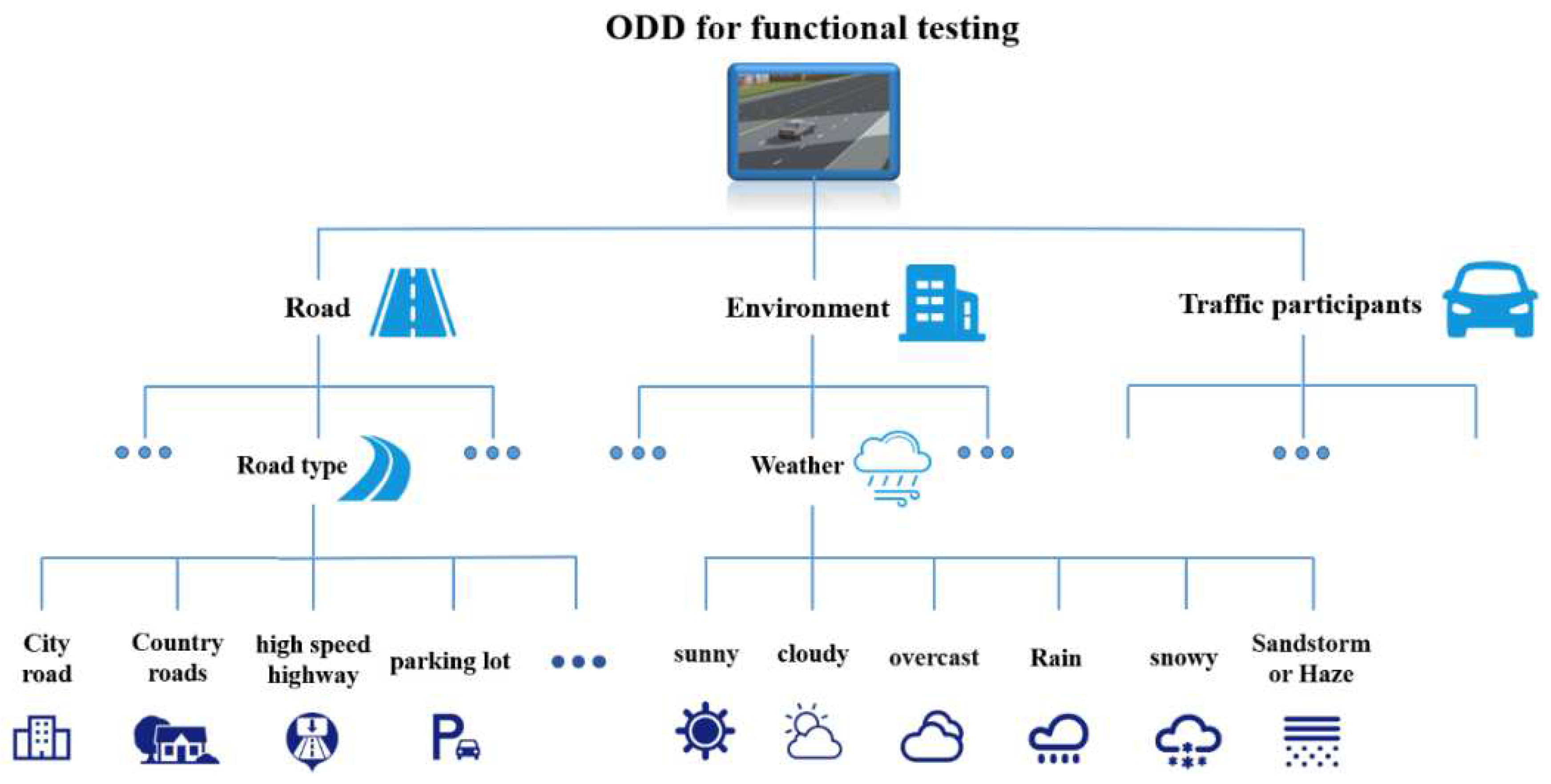

Autonomous Emergency Braking (AEB) is one of the most widely used ADAS functions on L2 autonomous vehicles. In this paper, the AEB function is taken as an example, and specific scenarios are generated for its simulation testing. First, it is necessary to construct an ODD that complies with the test regulations for the AEB function.

In 2014, Euro-NCAP released a test standard for AEB systems, which specifies two test methods and evaluation criteria for automatic emergency braking: automatic emergency braking for car-to-car (C2C) scenarios, and automatic emergency braking between vehicles and vulnerable road users (VRU) such as pedestrians and cyclists [32]. Among them, the evaluation criteria of C2C AEB testing mainly focus on the scenario where the test vehicle rear-ends the target vehicle, i.e., the vehicle in front. There are three scenarios, Car-to-Car Rear Stationary (CCRs), Car-to-Car Rear Moving (CCRm) and Car-to-Car Rear Braking (CCRb), depending on the movement state of the vehicle in front.

According to relevant statistics [33], the car-to-car rear-end scenario is one of the most dominant scenarios in current traffic accidents, so this paper selects the car-to-car automatic emergency braking scenario of the AEB function for design.

The AEB function test ODD is shown in

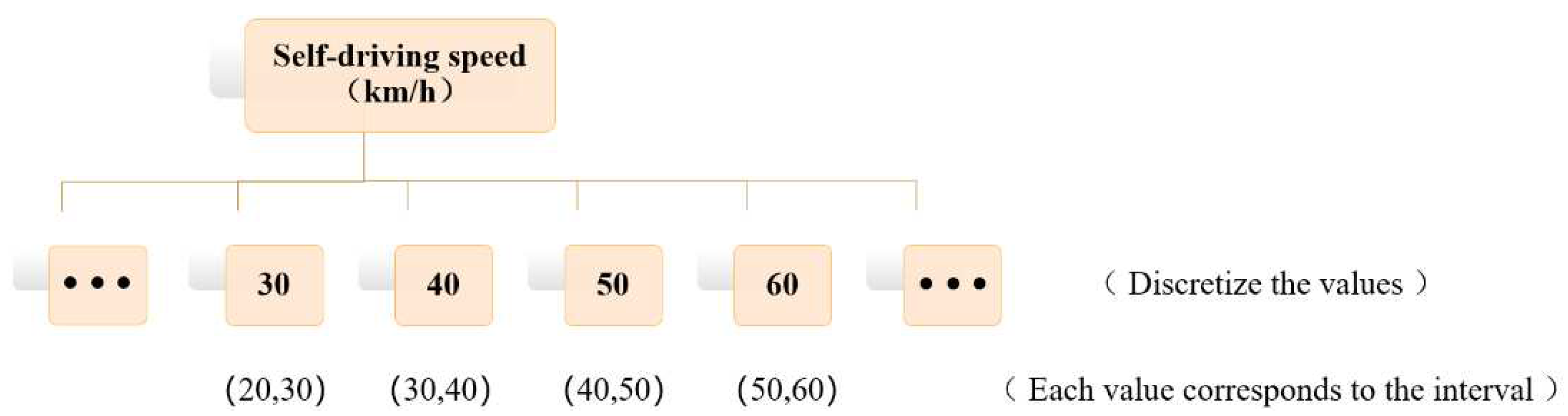

Table 3. The sub-element values under the traffic participant element are discretized for continuous values and each value represents an interval, for example, the self- driving speed value of 40 represents a value interval of (30km/h,40km/h).

The weight values of the ODD elements are obtained with the calculation method for scene element weight values in Section 2, as shown in Table 4.

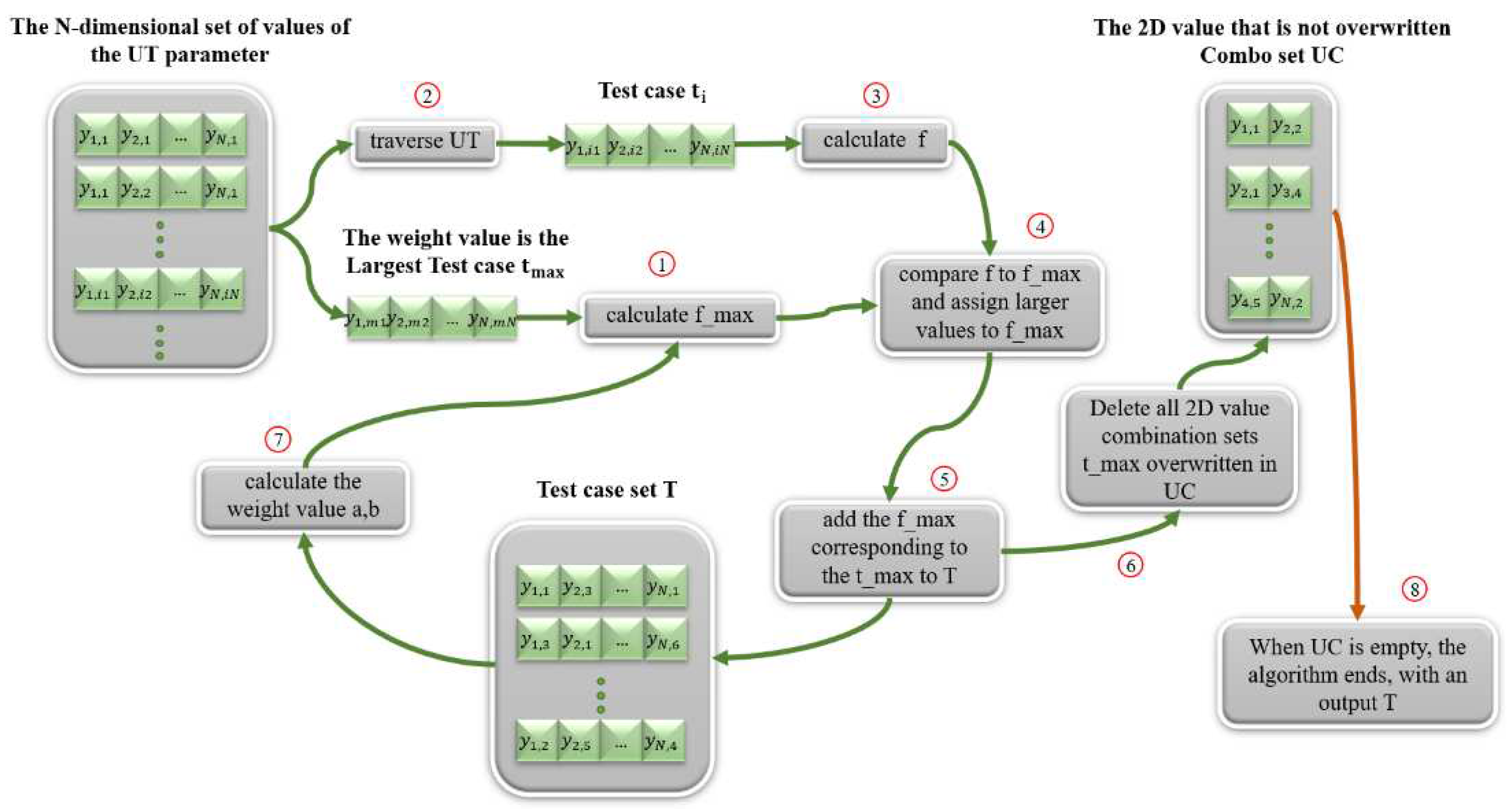

The AEB function ODD element values and their weight values are used as the input parameters of the improved combination testing algorithm, and the ES(a,b) algorithm is used to generate test case sets with coverage dimensions n=2, n=3, n=4 and n=5. To show the generation effect of the ES(a,b) algorithm, this paper selects currently available combination testing tools or open-source combination testing code, such as PICT, AllPairs and AETG [34], and uses the same AEB function ODD elements as input parameters to generate test case sets. The number of test cases in the test case sets generated by the different algorithms is shown in Table 5.

From Table 5, it can be seen that the ES(a,b) algorithm effectively reduces the number of generated test cases at both lower and higher coverage dimensions, so the optimization effect of the ES(a,b) algorithm on test case generation is verified.
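
For readers less familiar with combination testing, the following is a minimal sketch of what covering dimension n=2 means: every pair of values from two different parameters appears in at least one test case. It uses a plain greedy selection and is not the ES(a,b) algorithm, nor the strategy used by PICT, AllPairs or AETG; the parameter names and values in `odd` are hypothetical placeholders, not the contents of Table 3, and element weights are ignored.

```python
from itertools import combinations, product

def pairs_of(case, names):
    """Return all (parameter, value) pairs that one test case covers."""
    return {((a, case[a]), (b, case[b])) for a, b in combinations(names, 2)}

def greedy_pairwise(params):
    """Greedily pick test cases until every value pair is covered (n=2)."""
    names = sorted(params)
    # Every pair of values from two different parameters must be covered once.
    uncovered = set()
    for a, b in combinations(names, 2):
        for va, vb in product(params[a], params[b]):
            uncovered.add(((a, va), (b, vb)))
    # Candidate pool: the full Cartesian product (only viable for small inputs).
    candidates = [dict(zip(names, values))
                  for values in product(*(params[n] for n in names))]
    suite = []
    while uncovered:
        # Pick the candidate that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda c: len(pairs_of(c, names) & uncovered))
        suite.append(best)
        uncovered -= pairs_of(best, names)
    return suite

# Hypothetical ODD elements, for illustration only.
odd = {
    "weather": ["clear", "rain", "haze"],
    "road": ["straight", "curve"],
    "ego_speed_kmh": [40, 60, 120],
}
suite = greedy_pairwise(odd)
print(len(suite), "test cases instead of", 3 * 2 * 3)
```

The size of the resulting set is the quantity compared across algorithms in Table 5; a smaller set with the same coverage dimension means fewer simulations to run.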

For each test case in the test case sets of different coverage dimensions obtained by the ES(a,b) algorithm, the specific scenario generation method samples the value intervals of the four sub-elements under the traffic participant element to obtain specific scenarios.

Taking one test case in the two-dimensional combined coverage test case set generated by the ES(a,b) algorithm as an example, the four sub-elements under the traffic participant element of this test case take the values shown in Table 6.

After applying the Monte Carlo method and a clustering algorithm, the post-clustering element values obtained are shown in Table 7.
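
The sampling and clustering step can be sketched as follows, under several assumptions: samples are drawn uniformly inside each interval, the clustering algorithm is plain k-means (the clustering method is not restated here), and the four intervals are hypothetical stand-ins for the sub-element intervals of Table 6. The cluster centres play the role of the post-clustering values.

```python
import random

def sample_scenarios(intervals, n, rng):
    """Monte Carlo step: draw n points uniformly from the (low, high) intervals."""
    return [[rng.uniform(lo, hi) for lo, hi in intervals] for _ in range(n)]

def kmeans(points, k, rng, iterations=20):
    """Plain k-means (Lloyd's algorithm); returns the k cluster centres."""
    centres = rng.sample(points, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            # Assign each sample to its nearest centre (squared Euclidean distance).
            nearest = min(
                range(k),
                key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centres[i])),
            )
            clusters[nearest].append(p)
        # Move each centre to the mean of its cluster; keep it if the cluster is empty.
        centres = [
            [sum(col) / len(c) for col in zip(*c)] if c else centres[i]
            for i, c in enumerate(clusters)
        ]
    return centres

# Hypothetical intervals: ego speed (km/h), target speed (km/h),
# initial gap (m), lateral offset (m). Not taken from Table 6.
intervals = [(40.0, 60.0), (0.0, 20.0), (60.0, 80.0), (0.0, 1.0)]
rng = random.Random(0)
samples = sample_scenarios(intervals, 200, rng)
centres = kmeans(samples, 2, rng)
for centre in centres:
    print([round(x, 2) for x in centre])
```

Because the sub-elements have different units and ranges, a practical implementation would probably normalize each dimension before clustering; that step is omitted here for brevity.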

5.2. Automatic Driving MIL Test Based on AEB Function

As described above, autonomous driving simulation tests are divided into four types according to the test method: MIL, SIL, HIL and VIL; the MIL test method is chosen in this paper.

The automated driving simulation test relies on simulation software, and automated driving virtual simulation software is three-dimensional graphics processing software developed on the basis of virtual reality technology [35]. The automated driving simulation test platform built on the simulation software needs to be able to faithfully reproduce standard regulatory test scenes and to transform road data collected from real vehicles into simulation scenes. At the same time, the platform should support large-batch, parallel simulation of vehicles in the platform cloud, so that the simulation test can realize closed-loop testing of the full-stack algorithm of automated driving perception, planning, decision making and control.

This paper uses PreScan, a simulation software for the development and validation of driver assistance systems and safety systems, whose main functions include scenario building, sensor modeling, control algorithm development and simulation running [36]. Compared with VTD, CarSim, Carla and other simulation software, the advantages of PreScan are a simple scenario modeling process and complete sensor and vehicle dynamics models. PreScan is also equipped with an interface for joint simulation with MATLAB/Simulink, through which control and decision-making algorithms built in MATLAB/Simulink can be imported, and the vehicle dynamics model and demo algorithms that come with PreScan can also be modified in MATLAB/Simulink [37].

In this paper, the specific scenarios in Table 7 are selected for simulation testing, and the values of their ODD elements are shown in Table 8.

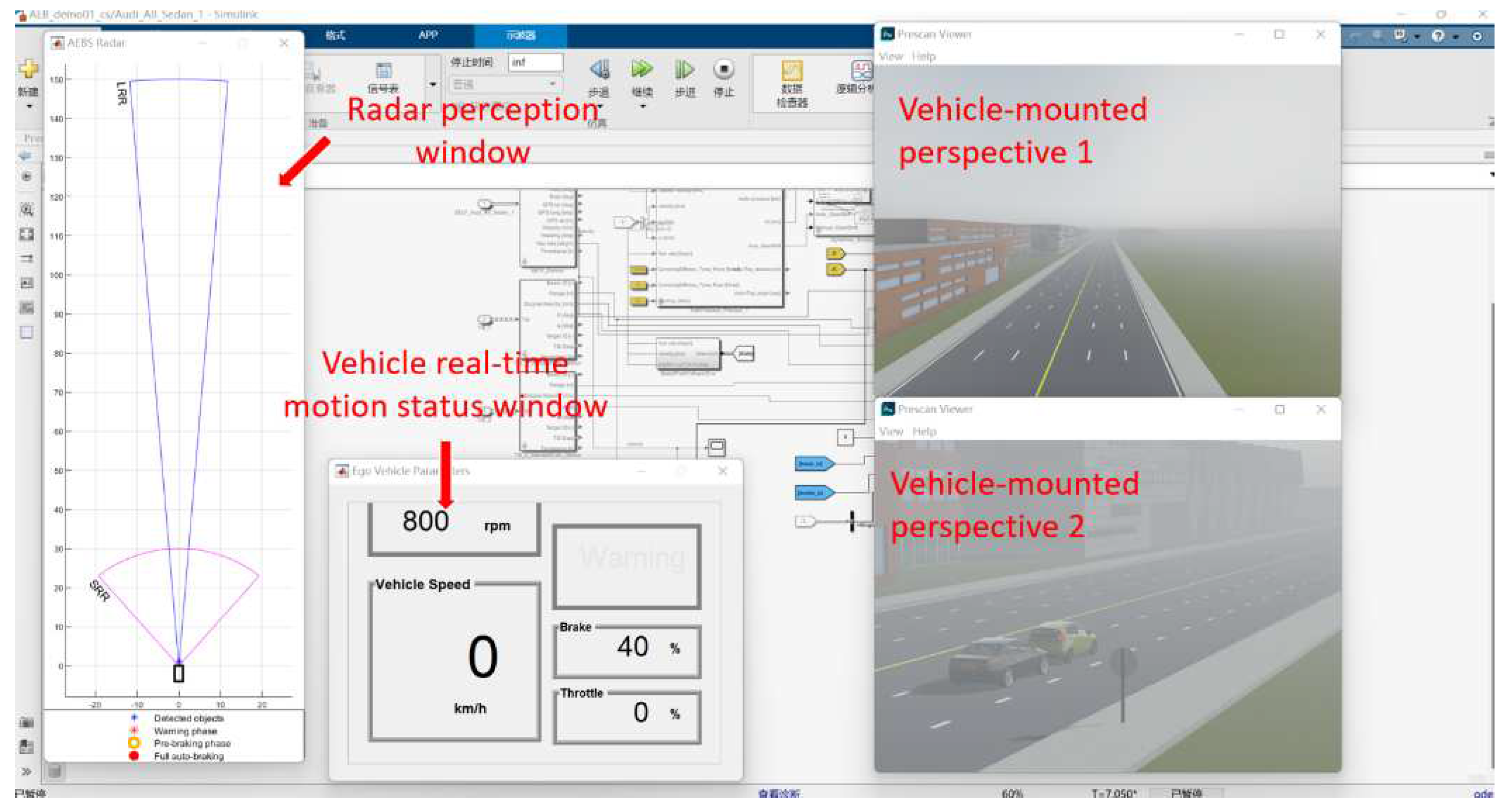

The simulation scenario generated by PreScan is shown in Figure 4.

A long-range millimeter-wave radar is added to the ego vehicle in PreScan, with the FoV set to pyramidal, single beam, a scanning frequency of 25 Hz, a detection distance of 150 m, a lateral FoV opening angle of 9 degrees, and a maximum of 32 detected targets; the remaining parameters keep their default values.

A short-range millimeter-wave radar is also added, with the FoV set to pyramidal, single beam, a scanning frequency of 25 Hz, a detection distance of 30 m, a lateral FoV opening angle of 90 degrees, and a maximum of 32 detected targets; the remaining parameters keep their default values.
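
To make the effect of these two parameter sets concrete, the sketch below checks whether a point target lies inside a radar's range and lateral field of view. It is a simplified 2D geometric model, not PreScan's sensor model or API: the class `RadarConfig`, its method `sees`, and the assumption that the opening angle is the full angle centred on the sensor axis are all illustrative.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class RadarConfig:
    """Hypothetical container for the radar parameters listed above."""
    max_range_m: float
    lateral_fov_deg: float   # full opening angle, assumed symmetric about the axis
    scan_hz: float = 25.0
    max_targets: int = 32

    def sees(self, x_m, y_m):
        """True if a point at (x forward, y left) is within range and lateral FoV."""
        distance = math.hypot(x_m, y_m)
        bearing_deg = math.degrees(math.atan2(y_m, x_m))
        in_range = 0.0 < distance <= self.max_range_m
        in_fov = abs(bearing_deg) <= self.lateral_fov_deg / 2.0
        return in_range and in_fov

LONG_RANGE = RadarConfig(max_range_m=150.0, lateral_fov_deg=9.0)
SHORT_RANGE = RadarConfig(max_range_m=30.0, lateral_fov_deg=90.0)

# A target straight ahead at 74.43 m is only visible to the long-range radar.
print(LONG_RANGE.sees(74.43, 0.0), SHORT_RANGE.sees(74.43, 0.0))
# A close target offset 5 m to the side is only visible to the short-range radar.
print(LONG_RANGE.sees(20.0, 5.0), SHORT_RANGE.sees(20.0, 5.0))
```

Under this model the two radars are complementary: the narrow long-range beam covers distant targets in the ego lane, while the wide short-range beam covers nearby targets at larger lateral offsets.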

The experiment is then built (compiled); after compilation, the corresponding .slx file is opened in Simulink and the inputs and outputs of each module are connected. In this paper, PreScan's built-in AEB control algorithm is used, which takes the time to collision (TTC) as the judgment criterion. When TTC < 2.6 s, the control algorithm gives an alarm signal; when TTC < 1.6 s, it gives a half-braking signal of about 40%; when TTC < 0.6 s, it gives a full-braking signal of about 100%.
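
The three TTC thresholds can be written as a small decision function. This is only a sketch of the threshold logic described above, not the Simulink block shipped with PreScan; the function names are hypothetical, TTC is assumed to be the gap divided by the closing speed, and the alarm is assumed to stay active while braking.

```python
def time_to_collision(gap_m, closing_speed_mps):
    """TTC = gap / closing speed; infinite when the gap is not shrinking."""
    if closing_speed_mps <= 0:
        return float("inf")
    return gap_m / closing_speed_mps

def aeb_command(ttc_s):
    """Map TTC to (alarm, brake fraction) using the thresholds from the text."""
    if ttc_s < 0.6:
        return True, 1.0   # full braking, about 100%
    if ttc_s < 1.6:
        return True, 0.4   # half braking, about 40%
    if ttc_s < 2.6:
        return True, 0.0   # alarm only
    return False, 0.0      # no intervention

# Example: a 20 m gap closing at 10 m/s gives TTC = 2.0 s, so alarm only.
print(aeb_command(time_to_collision(20.0, 10.0)))
```

Because the thresholds are fixed in time rather than distance, the gap at which each stage triggers grows with the closing speed.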

The added sensor model, dynamics model, and AEB control algorithm are the same for both scenarios. After clicking Run Simulation, the two added vehicle-view windows, the real-time motion status window of the vehicle, and the window showing the detection results of the millimeter-wave radar are displayed, as shown in Figure 5.

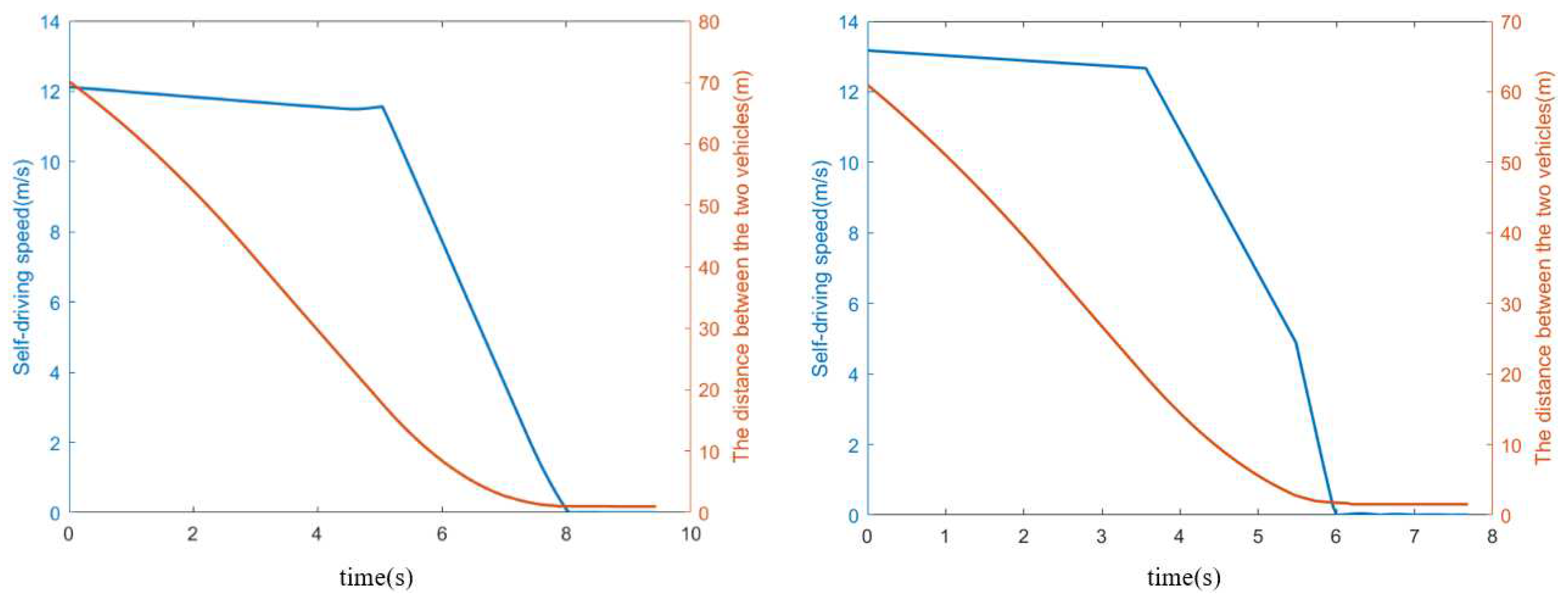

At the end of the simulation run, curves of the ego vehicle speed and of the relative distance between the ego vehicle and the target vehicle are obtained, as shown in Figure 6. The horizontal axis represents the simulation running time, the red curve represents the relative distance between the two vehicles, and the blue curve represents the speed of the ego vehicle.

As can be seen from Figure 6, at the beginning of the simulation in Scenario 1 the two cars are 74.43 m apart, the ego vehicle detects the target car and travels straight at a constant speed of 43.63 km/h (12.12 m/s); when the two cars are about 18 m apart, the AEB system applies full braking and the ego vehicle brakes to a stop; after stopping, the two cars are about 1 m apart, and the braking time of the ego vehicle is 2.7 s.

In Scenario 2, at the beginning of the simulation the two cars are 65.21 m apart, the ego vehicle detects the target car and travels straight at a constant speed of 47.40 km/h (13.17 m/s); when the two cars are about 19 m apart, the AEB system applies full braking and the ego vehicle brakes to a stop; after stopping, the two cars are about 1.5 m apart, and the braking time of the ego vehicle is 2.2 s.

The simulation results show that, under the bad weather conditions of sand and haze, the AEB control algorithm and the millimeter-wave radar used in the simulation test performed well when the speed of the ego vehicle was in the range (40 km/h, 60 km/h): the ego vehicle braked to a stop about 1-2 m from the target car and avoided the accident.

To test the AEB function at higher ego vehicle speeds under the same sand and haze weather conditions, this paper selects another test case from the two-dimensional combined coverage test case set generated by the ES(a,b) algorithm. The specific scenarios generated from this test case are shown in Table 9.

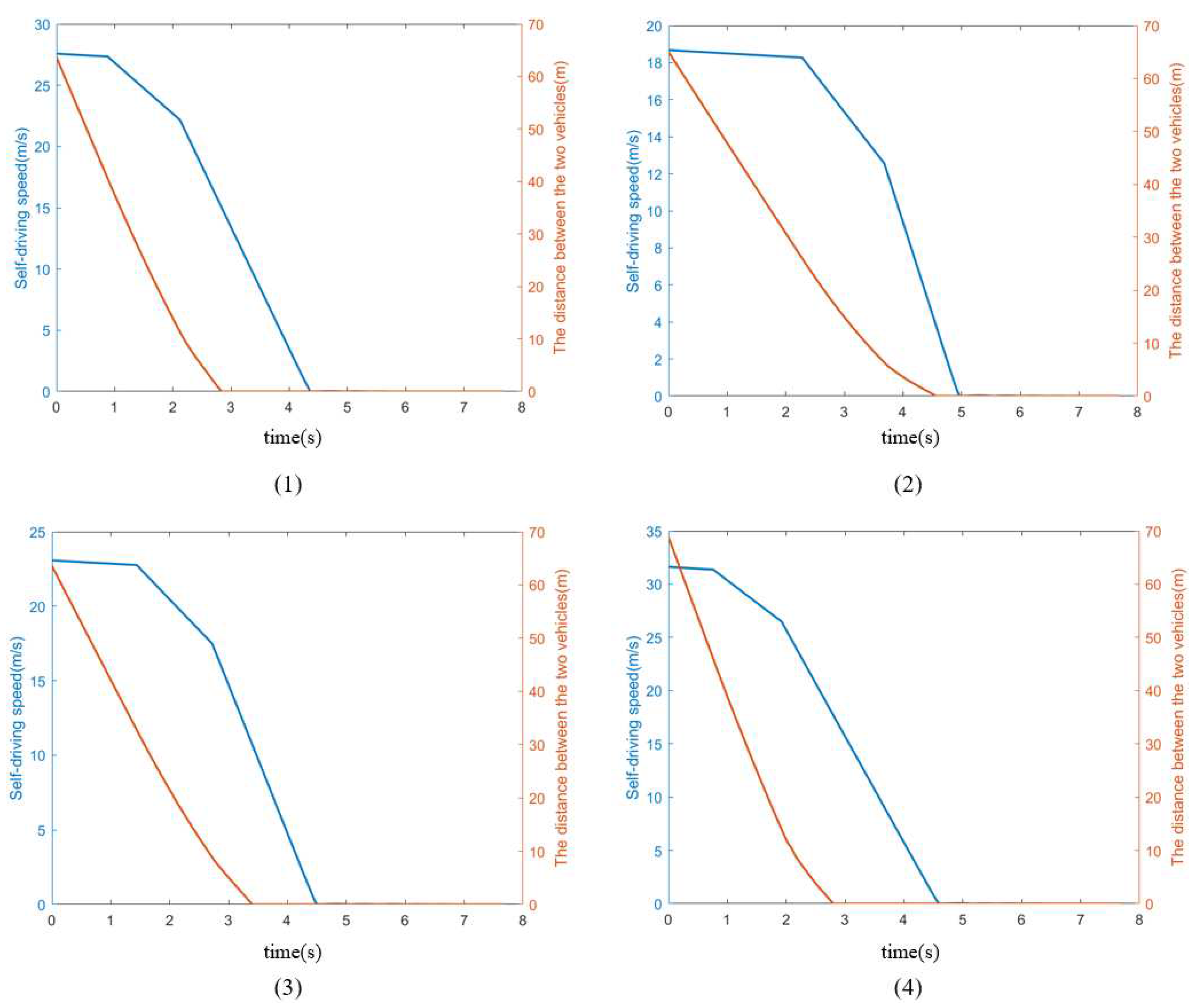

The sensor model, vehicle dynamics model and AEB control algorithm are added in the same way as above. Running the simulation gives the curves of the ego vehicle speed, the relative distance between the ego vehicle and the target vehicle, and the ego vehicle braking force, as shown in Figure 7.

At the beginning of simulation scenario 1, the speed of the ego vehicle is 99.29 km/h (27.58 m/s), and the distance between the ego vehicle and the target car is 67.89 m; when the distance between the two cars is about 40 m, the AEB control algorithm applies about 40% braking force; when the distance is about 10 m, it applies 100% full braking force; when the distance reaches 0 m, the speed of the ego vehicle is still about 16 m/s, so the ego vehicle has not braked to a stop and the two cars collide. Since no collision animation effect is set, the animation shows the target car passing through the ego vehicle, after which the ego vehicle brakes to a stop.

At the beginning of simulation scenario 2, the speed of the ego vehicle is 67.26 km/h (18.68 m/s), and the distance between the ego vehicle and the target car is 69.30 m; when the distance between the two cars is about 38 m, the AEB control algorithm applies about 40% braking force; when the distance is about 10 m, it applies 100% full braking force; when the distance reaches 0 m, the speed of the ego vehicle is still about 15 m/s, so the ego vehicle has not braked to a stop and the two cars collide.

At the beginning of simulation scenario 3, the speed of the ego vehicle is 83.07 km/h (23.08 m/s), and the distance between the ego vehicle and the target car is 67.84 m; when the distance between the two cars is about 32 m, the AEB control algorithm applies about 40% braking force; when the distance is about 9 m, it applies 100% full braking force; when the distance reaches 0 m, the speed of the ego vehicle is still about 11 m/s, so the ego vehicle has not braked to a stop and the two cars collide.

At the beginning of simulation scenario 4, the speed of the ego vehicle is 113.87 km/h (31.63 m/s), and the distance between the ego vehicle and the target car is 73.13 m; when the distance between the two cars is about 42 m, the AEB control algorithm applies about 40% braking force; when the distance is about 13 m, it applies 100% full braking force; when the distance reaches 0 m, the speed of the ego vehicle is still about 18 m/s, so the ego vehicle has not braked to a stop and the two cars collide.

The simulation results show that, under the bad weather conditions of sand and haze, the AEB control algorithm and the millimeter-wave radar used in the simulation test performed poorly when the speed of the ego vehicle was in the range (60 km/h, 120 km/h). The AEB control algorithm did not apply full braking directly, but applied half braking first and then full braking; as a result, the ego vehicle had not braked to a stop when the distance between the two cars reached 0 m, and the two cars collided.
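
The influence of staged braking can be illustrated with constant-deceleration kinematics, v² = v₀² − 2ad. The sketch below is a rough illustration only and is not intended to reproduce the curves in Figure 7: it assumes a stationary target, a hypothetical full-braking deceleration of 9 m/s², a half-braking deceleration equal to 40% of that value, and no actuator delay. Only the initial speed and the approximate trigger distances are taken from simulation scenario 1.

```python
import math

def speed_after(v0_mps, stages):
    """Speed left after braking through (deceleration m/s^2, distance m) stages."""
    v_squared = v0_mps ** 2
    for decel, distance in stages:
        # Constant deceleration over a distance: v^2 = v0^2 - 2*a*d, floored at 0.
        v_squared = max(0.0, v_squared - 2.0 * decel * distance)
    return math.sqrt(v_squared)

FULL_DECEL = 9.0               # hypothetical full-braking deceleration, m/s^2
HALF_DECEL = 0.4 * FULL_DECEL  # assumed to scale with the 40% braking signal

v0 = 27.58  # ego speed in simulation scenario 1, m/s
# Staged braking: 40% from about 40 m to 10 m, then 100% over the last 10 m.
staged = speed_after(v0, [(HALF_DECEL, 30.0), (FULL_DECEL, 10.0)])
# Hypothetical alternative: 100% braking over the whole 40 m.
direct = speed_after(v0, [(FULL_DECEL, 40.0)])
print(round(staged, 1), round(direct, 1))
```

Under these assumptions, full braking from the first trigger point leaves a much lower residual speed than the staged strategy, which is consistent with the observation that delaying full braking contributes to the collisions at high speed.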

In summary, the validity of the specific scenarios obtained above for MIL testing of AEB functions has been verified.