Submitted:

09 November 2023

Posted:

10 November 2023

Abstract

Keywords:

1. Introduction

2. Materials and Methods

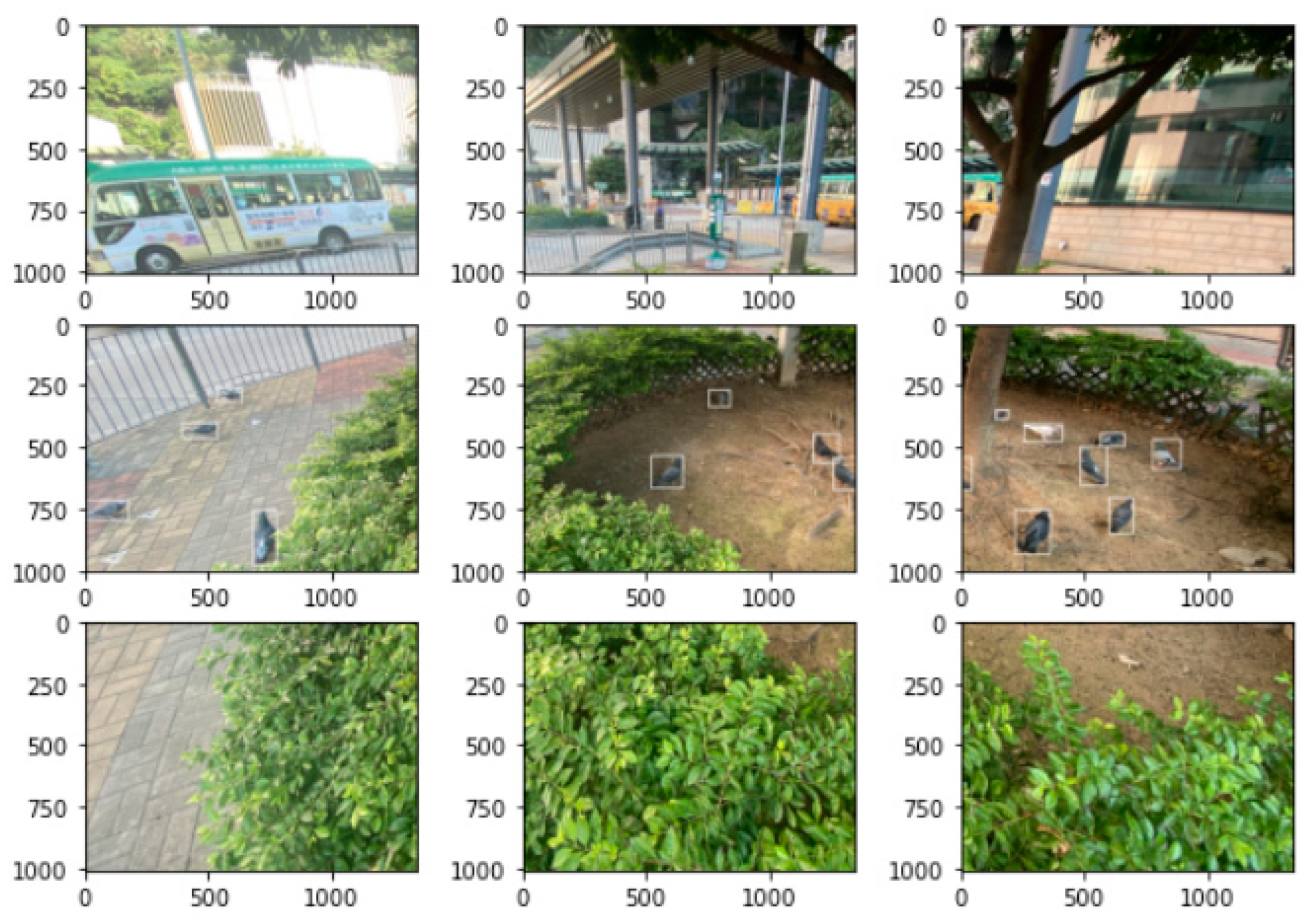

2.1. Image data collection

2.2. Data labelling and augmentation

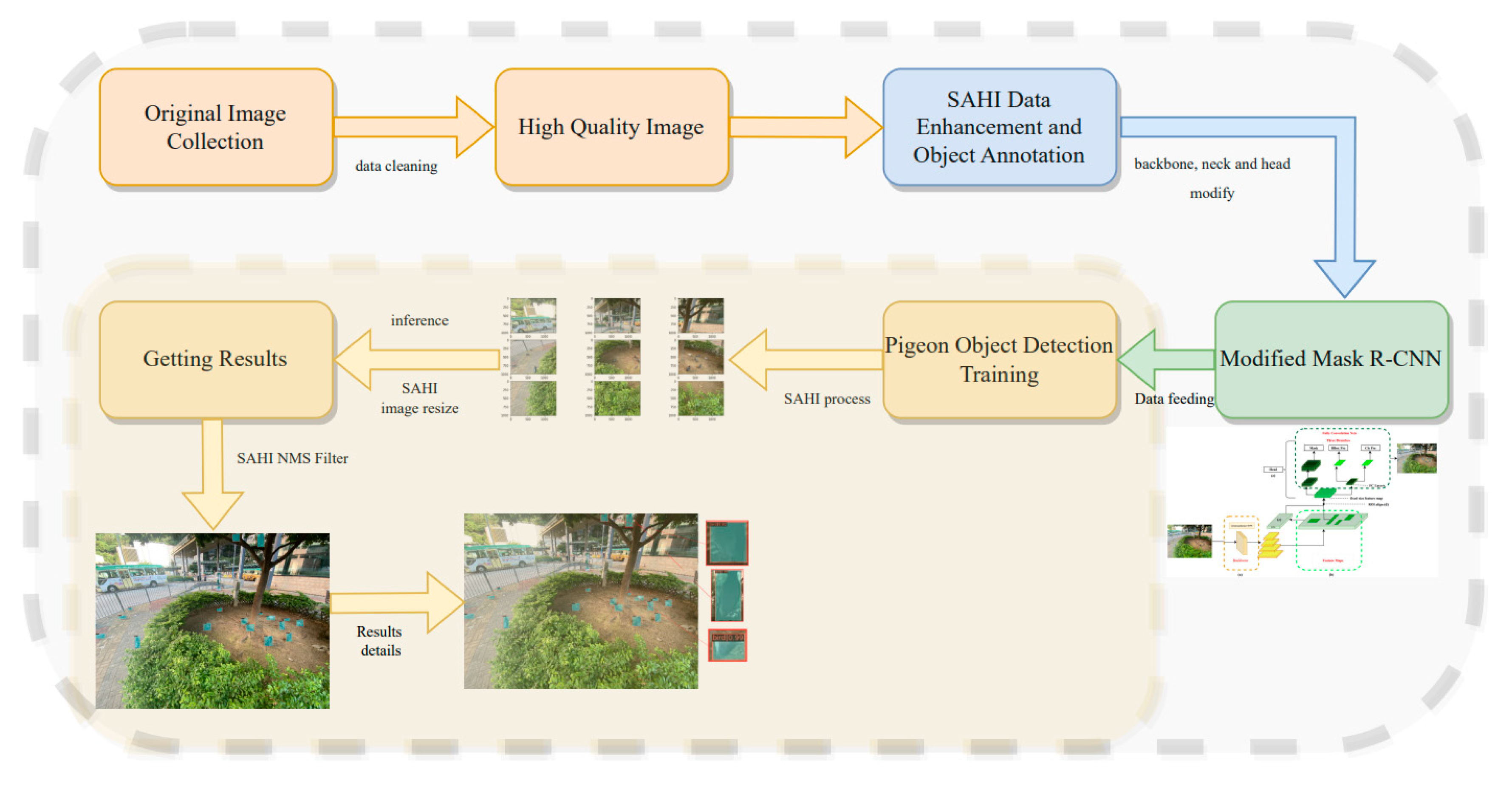

2.3. Workflow overview

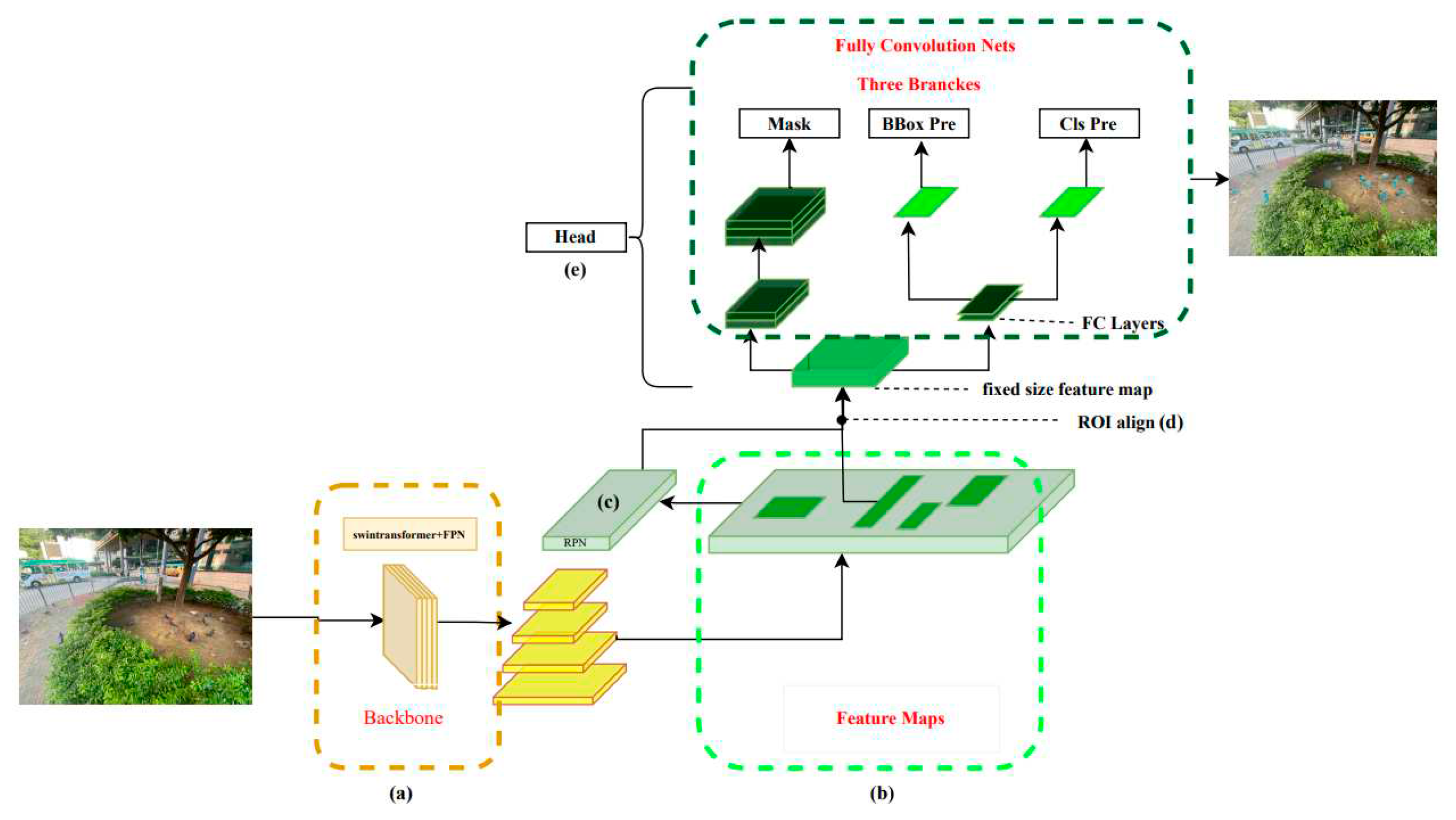

2.4. Swin-Mask R-CNN with SAHI model

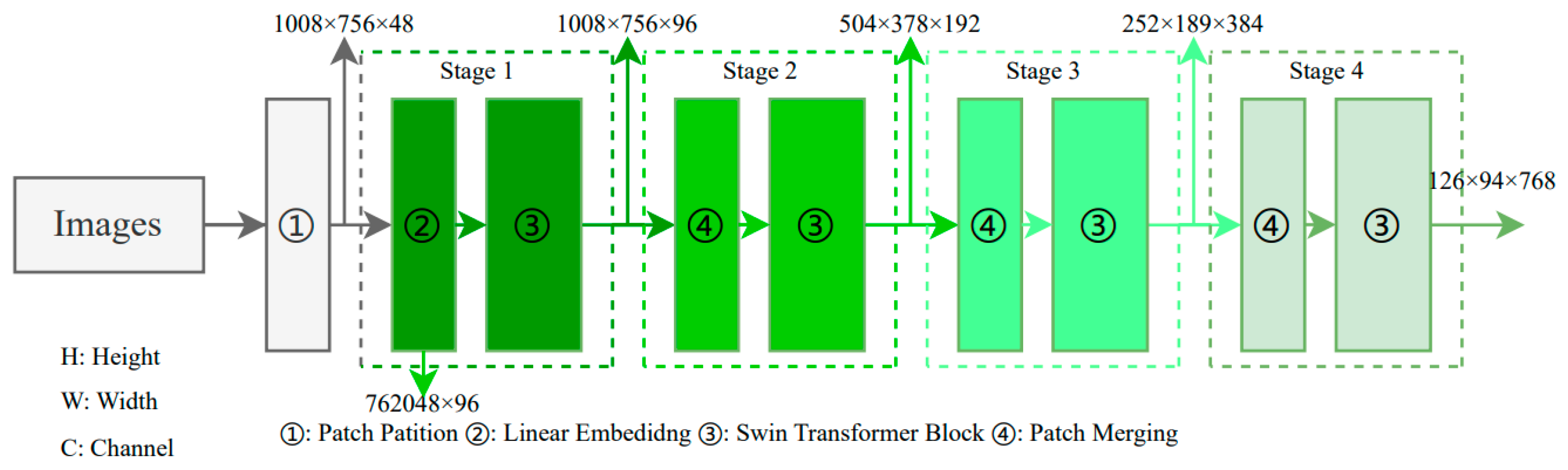

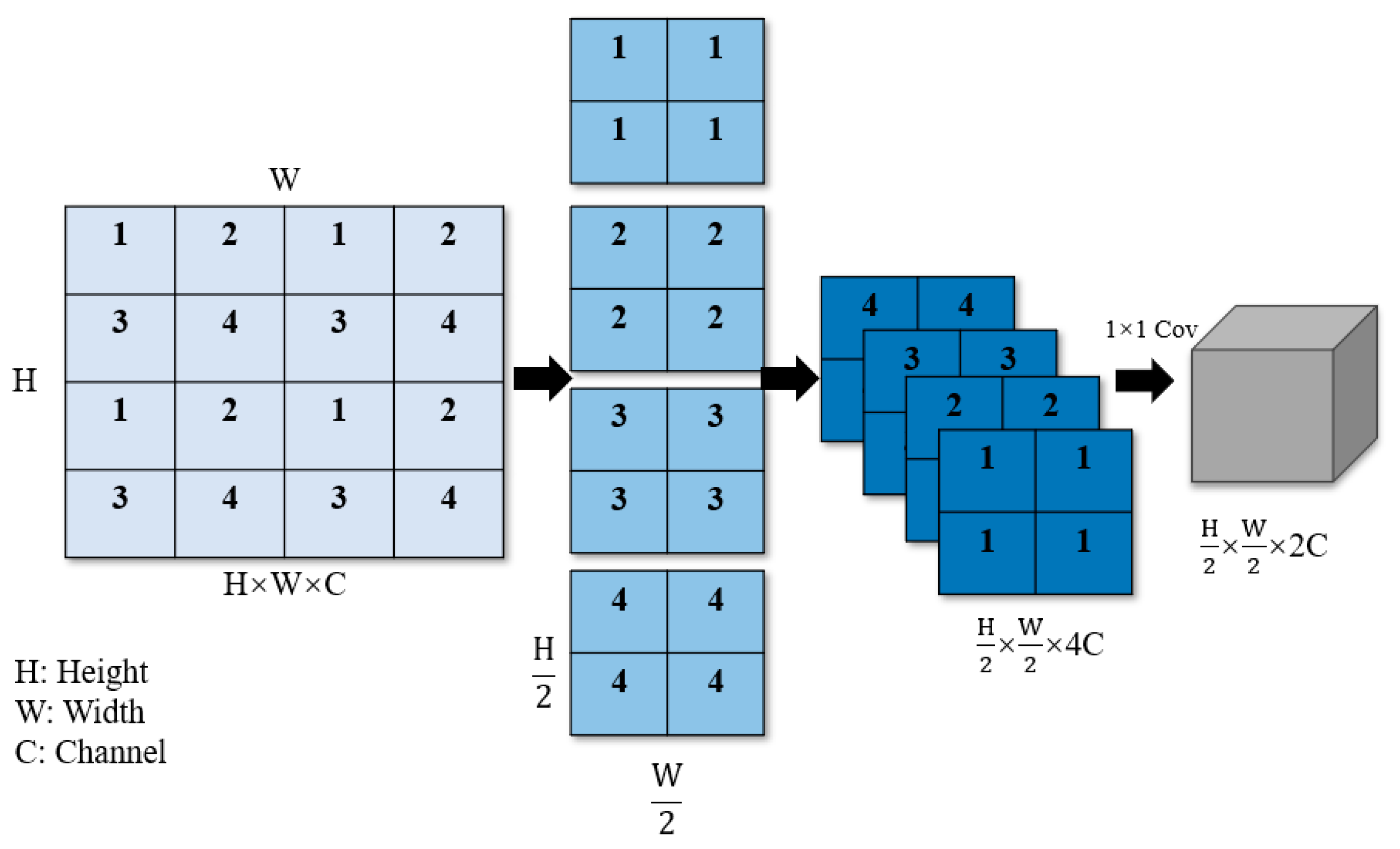

2.5. Swin Transformer backbone

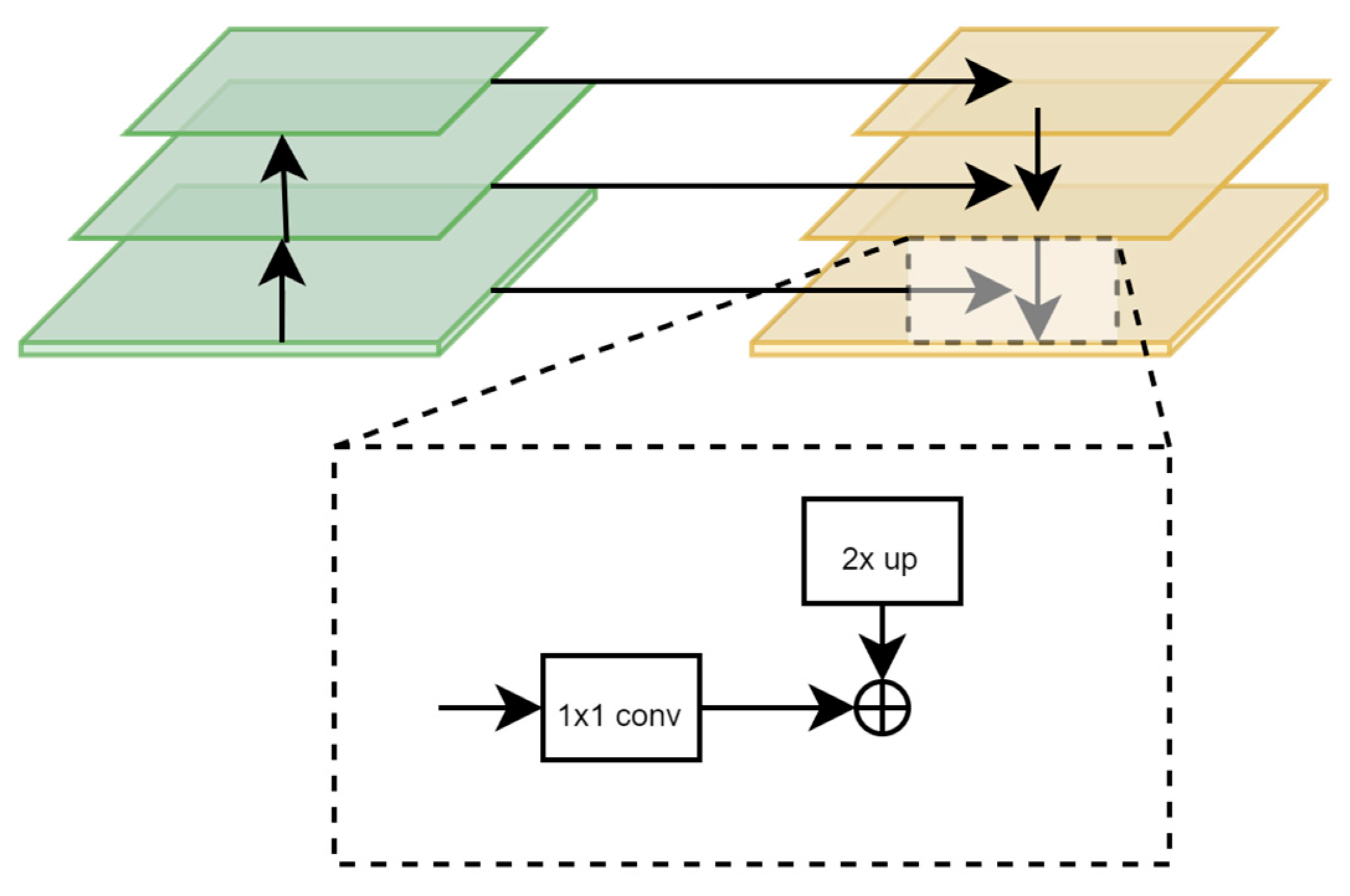

2.6. Feature Pyramid Network (FPN)

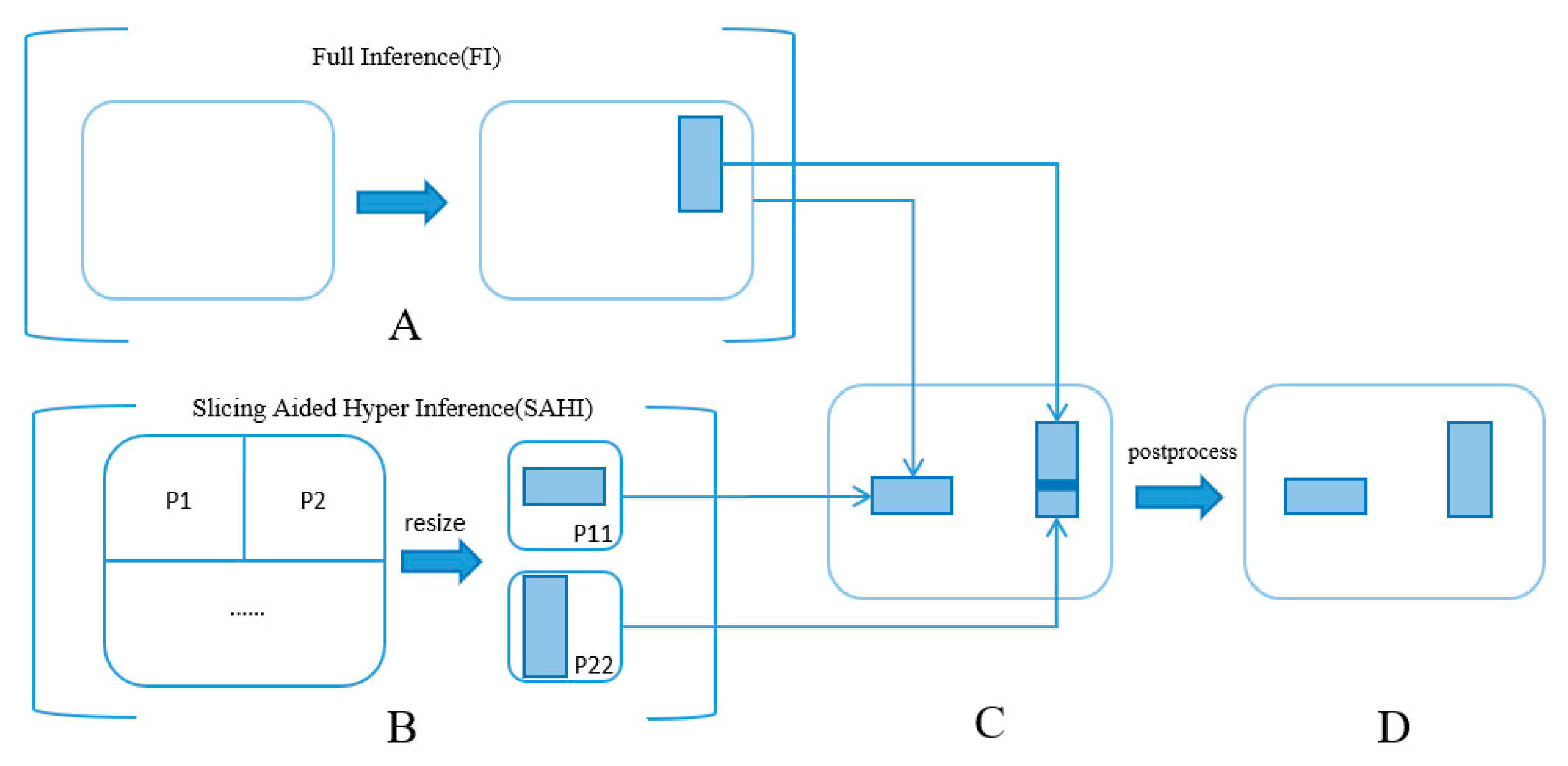

2.7. Slicing Aided Hyper Inference (SAHI) tool

3. Results

3.1. Experimental settings and model evaluation indicators

3.2. mAP comparison of different models

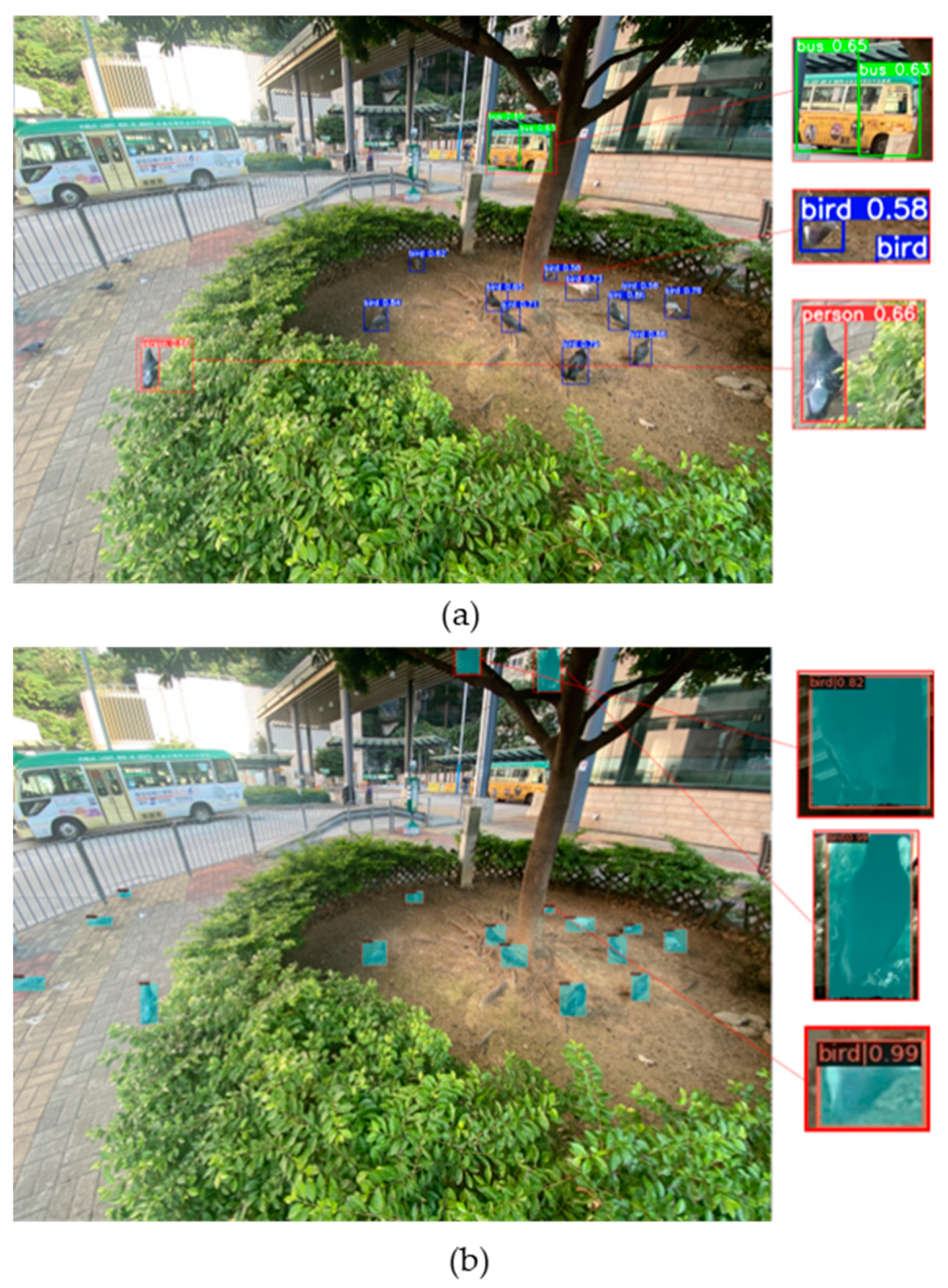

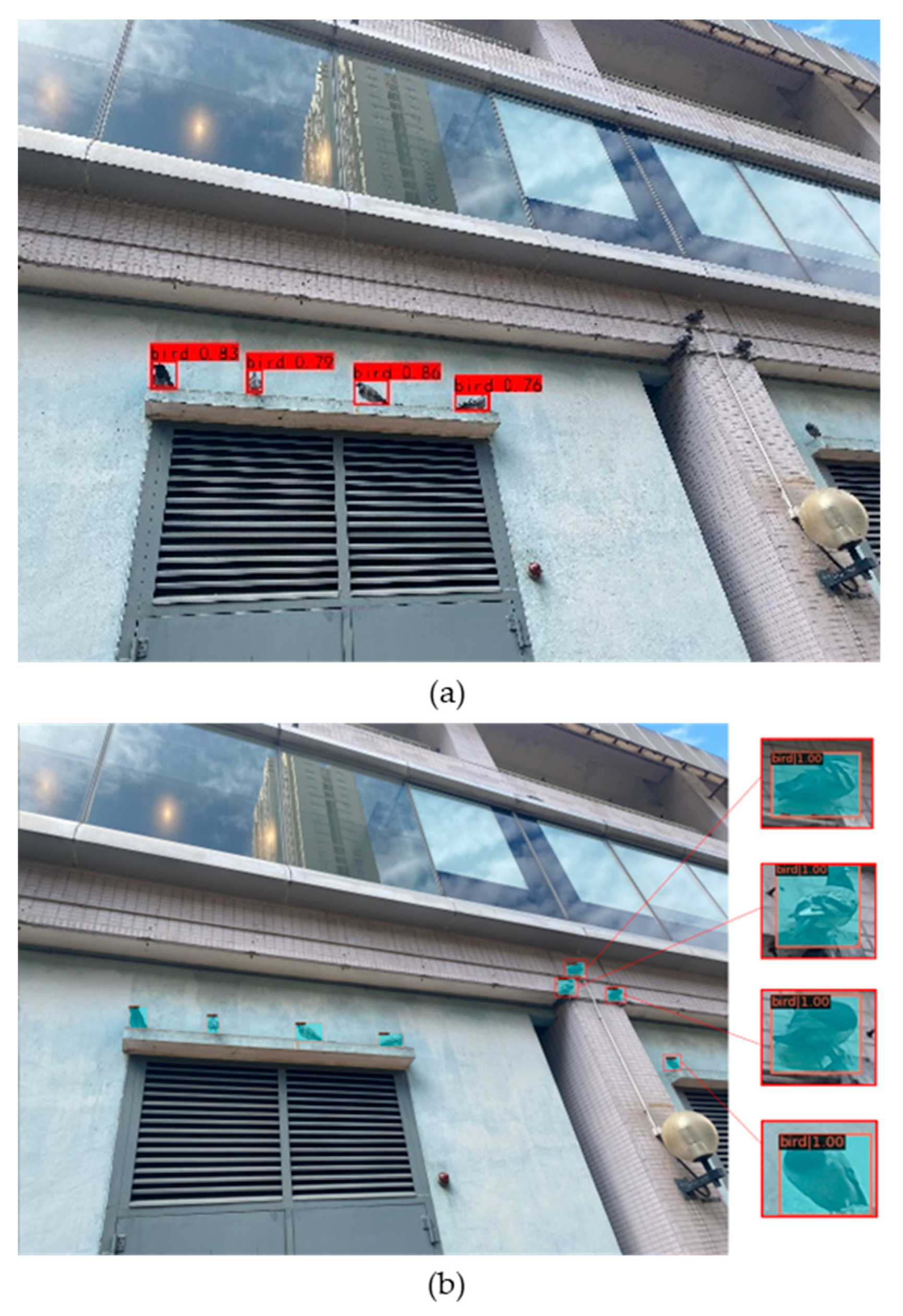

3.3. Results visualization

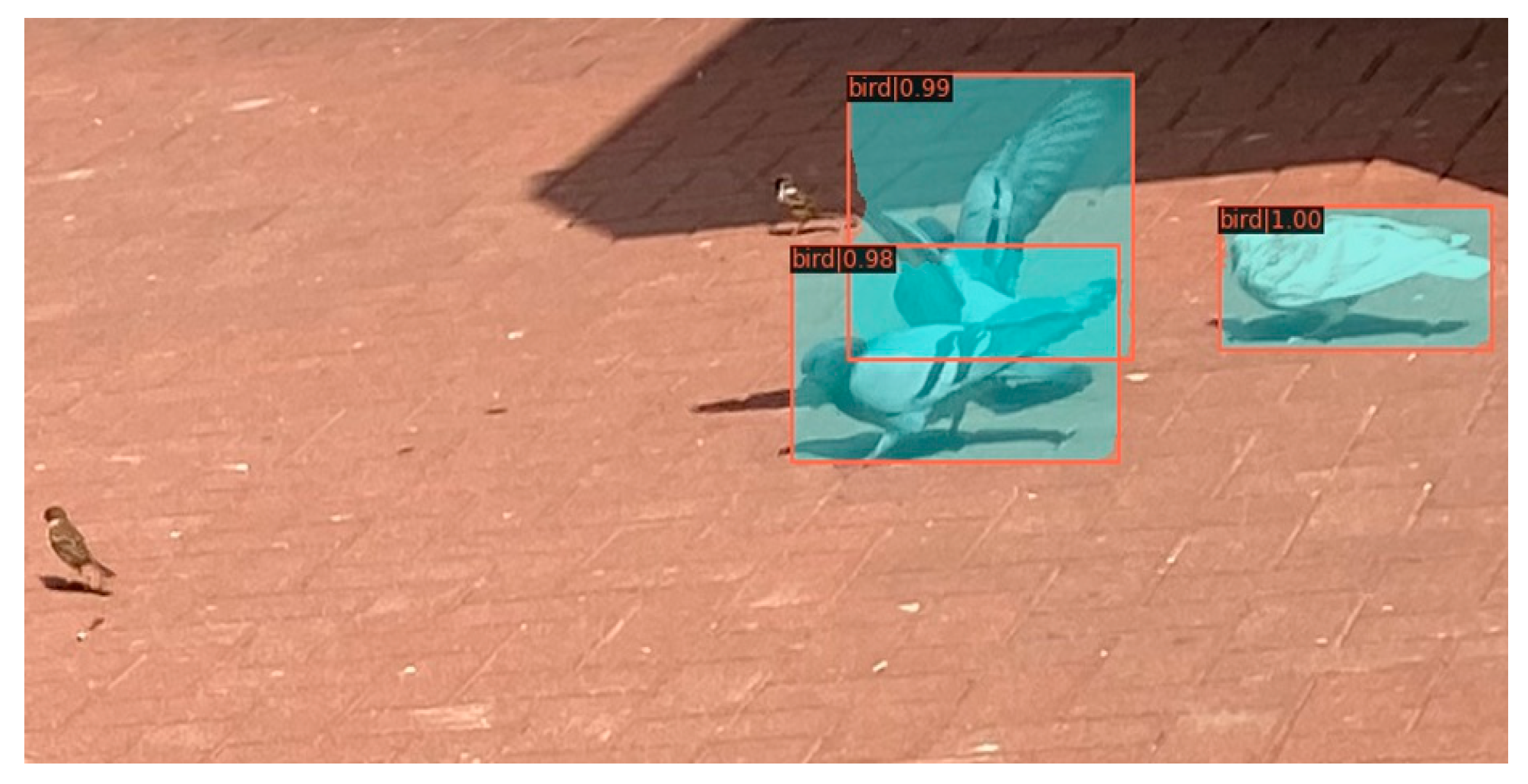

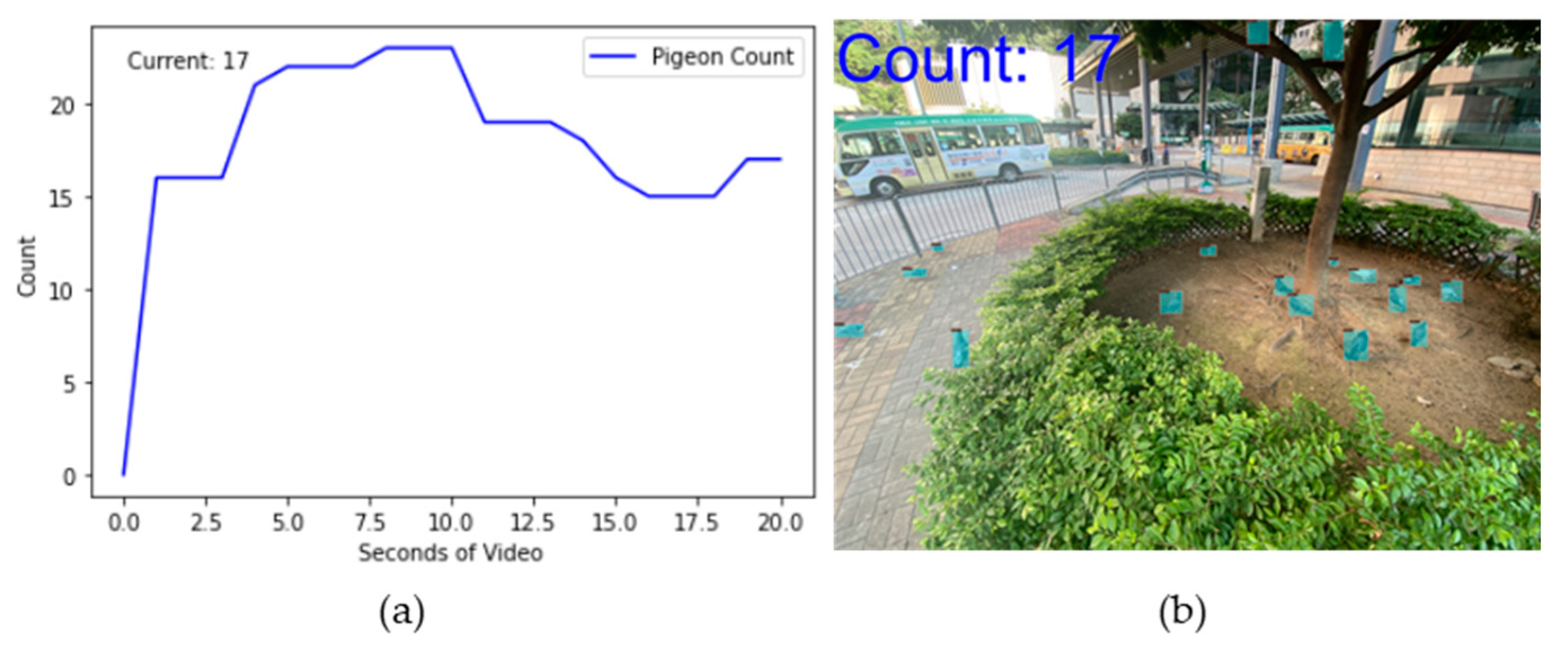

3.4. Pigeon counting demo

4. Discussion

- Deep learning networks have consistently performed well in bird recognition [32,33]. However, current bird detection methods have several limitations. First, two-stage detection approaches that rely on traditional backbone networks [34,35] do not make full use of the network's capacity for bird detection. For instance, CNN-based backbones such as MobileNet, VGGNet, ResNet, and ShuffleNet [7] fail to adequately capture the fine details and contextual information specific to the target bird species. Second, most existing deep learning studies on bird detection do not focus on a single species such as the feral pigeon. Some concentrate on accurately identifying various bird species in aerial scenarios [14,15,24,35], while others explore the classification and detection of different bird species in natural environments, such as wind farms or aquatic habitats [18,34]. A few studies investigate the detection and counting of different bird species in specific settings, such as birds on transmission lines [17]. Moreover, traditional feral pigeon research in urban environments requires extensive resources [36], and the limited work on urban pigeon detection covers only specific areas such as buildings [37]. There is currently no comprehensive study on feral pigeon detection in complex urban settings. To address these challenges, we propose an automatic deep learning method for detecting feral pigeons in urban environments, and we demonstrate its effectiveness through a series of experiments.

- In bird detection, most studies have used one-stage (YOLO) [15,17,18,20] and two-stage (Faster R-CNN and Mask R-CNN) [34,35] object detection models. The original Mask R-CNN has performed well in bird detection [35]. Building on it, we propose an improved algorithm that enhances the main components of the original Mask R-CNN and incorporates the SAHI tool to improve detection performance. Recent studies have shown that the Swin Transformer is effective at capturing fine-grained animal details [38,39]. We therefore replace the backbone of the original Mask R-CNN with the Swin Transformer and add an FPN as the network's neck for multi-scale feature fusion [40]. To evaluate the resulting Swin-Mask R-CNN model, we compare it on our feral pigeon dataset with object detection methods commonly used for bird detection: YOLO [15,17,18,20], Faster R-CNN [34], and Mask R-CNN [35]. Swin-Mask R-CNN reaches the highest mAP, 0.68, which is the best performance among the compared models. Nevertheless, there is still room for improvement in detecting small bird targets (AP50s), a problem that several studies address specifically [14,35]. To further improve accuracy on small feral pigeon targets, we introduce the SAHI tool [29] to assist inference. In this phase, we apply SAHI to all models from the previous experiments and run further experiments on our dataset. The results show that Swin-Mask R-CNN with SAHI achieves the highest mAP, AP50, and AP50s, improving on Swin-Mask R-CNN without SAHI by 6, 6, and 10 percentage points, respectively (mAP 0.68 to 0.74, AP50 0.87 to 0.93, AP50s 0.57 to 0.67).

- Our work substantially improves feral pigeon detection in urban environments, but two limitations remain: we have not yet tested the model's generalization ability, and we have not deployed it for real-time use on portable terminals. We plan to address both in future work. First, although the proposed model detects feral pigeons well on our dataset, validating its generalization requires larger datasets; we intend to collect images of feral pigeons and other bird species from various cities through collaborations with researchers and from public data sources. Second, although we have developed a demo for automatic feral pigeon counting, it has not been widely deployed in real-world scenarios. We aim to deploy the algorithm on cloud and mobile platforms so that researchers can upload photos and videos for automatic analysis. The resulting detection and counting results would allow feral pigeon populations to be estimated in different areas and the impact of feral pigeon overpopulation to be assessed.
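
The slicing-aided inference step discussed above can be illustrated with a minimal pure-Python sketch. It is not the SAHI library's implementation or API: the helper names (`slice_windows`, `sliced_predict`, `toy_detect`), the 400-pixel slice size, the 25% overlap, and the use of greedy NMS to merge duplicates are all assumptions chosen for illustration. The idea is that the image is cut into overlapping windows, a detector runs on each window so that small pigeons occupy a larger share of its input, and the per-window boxes are shifted back to full-image coordinates and de-duplicated.

```python
from typing import Callable, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Det = Tuple[Box, float]                  # (box, confidence score)
Window = Tuple[int, int, int, int]       # (x1, y1, x2, y2) of a slice


def axis_starts(length: int, size: int, step: int) -> List[int]:
    """Slice start offsets along one axis; the last slice is shifted back to stay in bounds."""
    if length <= size:
        return [0]
    starts = list(range(0, length - size, step))
    starts.append(length - size)
    return starts


def slice_windows(width: int, height: int, size: int, overlap: float) -> List[Window]:
    """Overlapping square windows that together cover the whole image."""
    step = max(1, int(size * (1.0 - overlap)))
    return [
        (x, y, min(x + size, width), min(y + size, height))
        for y in axis_starts(height, size, step)
        for x in axis_starts(width, size, step)
    ]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def nms(dets: List[Det], iou_thr: float) -> List[Det]:
    """Greedy non-maximum suppression: keep the highest-scoring box of each overlapping group."""
    kept: List[Det] = []
    for det in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(iou(det[0], k[0]) <= iou_thr for k in kept):
            kept.append(det)
    return kept


def sliced_predict(width: int, height: int,
                   detect: Callable[[Window], List[Det]],
                   size: int = 400, overlap: float = 0.25,
                   iou_thr: float = 0.5) -> List[Det]:
    """Run `detect` on every slice, map boxes to image coordinates, merge duplicates."""
    merged: List[Det] = []
    for win in slice_windows(width, height, size, overlap):
        ox, oy = win[0], win[1]
        for (x1, y1, x2, y2), score in detect(win):  # boxes are slice-local
            merged.append(((x1 + ox, y1 + oy, x2 + ox, y2 + oy), score))
    return nms(merged, iou_thr)


def toy_detect(win: Window) -> List[Det]:
    """Hypothetical stand-in for a real detector: 'finds' one fixed small object."""
    tx1, ty1, tx2, ty2 = 350, 350, 380, 380
    if win[0] <= tx1 and win[1] <= ty1 and tx2 <= win[2] and ty2 <= win[3]:
        return [((tx1 - win[0], ty1 - win[1], tx2 - win[0], ty2 - win[1]), 0.9)]
    return []


detections = sliced_predict(1000, 1000, toy_detect)
```

In this toy setup the small object falls inside several overlapping slices, so the merge step is what prevents it from being counted more than once; in practice `detect` would wrap a trained model such as the Swin-Mask R-CNN network.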

5. Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Giunchi D, Albores-Barajas Y V, Baldaccini N E, et al. Feral pigeons: problems, dynamics and control methods[J]. Integrated pest management and pest control. Current and future tactics, London, InTechOpen, 2012: 215-240.

- Haag-Wackernagel D. Regulation of the street pigeon in Basel[J]. Wildlife Society Bulletin, 1995: 256-260.

- Sandercock B K. Estimation of survival rates for wader populations: a review of mark-recapture methods[J]. Bulletin-Wader Study Group, 2003, 100: 163-174.

- Volpato G H, Lopes E V, Mendonça L B, et al. The use of the point count method for bird survey in the Atlantic forest[J]. Zoologia (curitiba), 2009, 26: 74-78.

- Li P, Martin T E. Nest-site selection and nesting success of cavity-nesting birds in high elevation forest drainages[J]. The Auk, 1991, 108(2): 405-418.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Xiao, Y.; et al. A review of object detection based on deep learning. Multimedia Tools and Applications. 2020, 79, 23729–23791.

- Oliveira, D.A.B.; et al. A review of deep learning algorithms for computer vision systems in livestock. Livestock Science. 2021, 253, 104700.

- Banupriya, N.; et al. Animal detection using deep learning algorithm. J. Crit. Rev. 2020, 7(1), 434–439.

- Huang, E.; et al. Center clustering network improves piglet counting under occlusion. Computers and Electronics in Agriculture. 2021, 189, 106417.

- Li, Z.; et al. A survey of convolutional neural networks: analysis, applications, and prospects. IEEE Transactions on Neural Networks and Learning Systems. 2021.

- Sarwar F, Griffin A, Periasamy P, et al. Detecting and counting sheep with a convolutional neural network[C]//2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS). IEEE, 2018: 1-6.

- Xu B, Wang W, Falzon G, et al. Automated cattle counting using Mask R-CNN in quadcopter vision system[J]. Computers and Electronics in Agriculture, 2020, 171: 105300.

- Chabot, D.; Francis, C.M. Computer-automated bird detection and counts in high-resolution aerial images: a review. Journal of Field Ornithology 2016, 87(4), 343–359.

- Boudaoud L B, Maussang F, Garello R, et al. Marine bird detection based on deep learning using high-resolution aerial images[C], OCEANS 2019-Marseille. IEEE, 2019: 1-7.

- Redmon, J.; Divvala, S.; Girshick, R.; et al. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, 779-788.

- Zou, C.; Liang, Y.Q. Bird detection on transmission lines based on DC-YOLO model. In: Proceedings of the 11th IFIP TC 12 International Conference on Intelligent Information Processing (IIP 2020), Hangzhou, China, July 3-6, 2020; Springer International Publishing, 2020; pp. 222-231.

- Alqaysi, H.; Fedorov, I.; Qureshi, F.Z.; et al. A temporal boosted YOLO-based model for birds detection around wind farms. Journal of Imaging 2021, 7(11), 227.

- Welch, G.; Bishop, G. An introduction to the Kalman filter. 1995, 2.

- Siriani, A.L.R.; Kodaira, V.; Mehdizadeh, S.A.; et al. Detection and tracking of chickens in low-light images using YOLO network and Kalman filter. Neural Computing and Applications 2022, 34(24), 21987–21997.

- Zhang, Y.; et al. A comprehensive review of one-stage networks for object detection. In: Proceedings of the 2021 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC); IEEE, 2021.

- Du, L.; Zhang, R.; Wang, X. Overview of two-stage object detection algorithms. Journal of Physics: Conference Series 2020, 1544(1), 012034.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, 2015, 1440-1448.

- Hong S J, Han Y, Kim S Y, et al. Application of deep-learning methods to bird detection using unmanned aerial vehicle imagery[J]. Sensors 2019, 19(7), 1651.

- Liu, W.; Anguelov, D.; Erhan, D.; et al. SSD: Single Shot Multibox Detector. In: Proceedings of the 14th European Conference on Computer Vision (ECCV 2016), Amsterdam, The Netherlands, October 11-14, 2016; Springer International Publishing, 2016; pp. 21-37.

- He, K.; Gkioxari, G.; Dollár, P.; et al. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, 2017, 2961-2969.

- Liu, Z.; Lin, Y.; Cao, Y.; et al. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, 10012-10022.

- Lin, T.Y.; Dollár, P.; Girshick, R.; et al. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, 2117-2125.

- Akyon, F.C.; Altinuc, S.O.; Temizel, A. Slicing aided hyper inference and fine-tuning for small object detection. In: Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP); IEEE, 2022; pp. 966-970.

- Neubeck, A.; Van Gool, L. Efficient non-maximum suppression. In: Proceedings of the 18th International Conference on Pattern Recognition (ICPR'06); IEEE, 2006; Vol.

- Tzutalin. LabelImg. Git code,2015. https://github.com/tzutalin/labelImg.

- Datar, Prathamesh, Kashish Jain, and Bhavin Dhedhi. Detection of birds in the wild using deep learning methods. 2018 4th International Conference for Convergence in Technology (I2CT). IEEE, 2018.

- Pillai, S. K., Raghuwanshi, M. M., & Borkar, P. (2021). Super resolution Mask RCNN based transfer deep learning approach for identification of bird species. International Journal of Advanced Research in Engineering and Technology 2022, 11(11).

- Xiang, W., et al. Birds detection in natural scenes based on improved faster RCNN. Applied Sciences 2022, 12(12), 6094.

- Kassim, Y. M., et al. Small object bird detection in infrared drone videos using mask R-CNN deep learning. Electronic Imaging, 2020(8), 85-1.

- Giunchi D, Gaggini V, Baldaccini N E. Distance sampling as an effective method for monitoring feral pigeon (Columba livia f. domestica) urban populations[J]. Urban Ecosystems 2007, 10, 397–412.

- Schiano F, Natter D, Zambrano D, et al. Autonomous detection and deterrence of pigeons on buildings by drones[J]. IEEE Access 2021, 10, 1745–1755.

- Agilandeeswari, L.; Meena, S. Swin transformer based contrastive self-supervised learning for animal detection and classification. Multimed. Tools Appl. 2023, 82, 10445–10470.

- Gu, T.; Min, R. A Swin Transformer based Framework for Shape Recognition. In Proceedings of the 2022 14th International Conference on Machine Learning and Computing (ICMLC), Guangzhou, China, 18–21 February 2022; pp. 388–393.

- Dogra, A., Goyal, B., & Agrawal, S. From multi-scale decomposition to non-multi-scale decomposition methods: a comprehensive survey of image fusion techniques and its applications. IEEE Access 2017, 5, 16040–16067.

| Dataset | Train | Validation | Test | All |
|---|---|---|---|---|
| Original dataset | 266 | 67 | 67 | 400 |
| Augmented data | 2400 | 600 | 600 | 3600 |
| Final dataset | 2666 | 667 | 667 | 4000 |

| Configuration | Parameters |
|---|---|
| CPU | 32 vCPU AMD EPYC 7763 64-Core Processor |
| GPU | A100-SXM4-80GB (80GB) |
| Development environment | Python 3.8 |
| Operating system | Ubuntu 18.04 |
| Deep learning framework | PyTorch 1.9.0 |
| CUDA version | CUDA 11.1 |

| Model | Backbone | Model weight size | mAP | AP50 | AP50s |
|---|---|---|---|---|---|
| YOLOv5-s | Darknet53 | 72m | 0.45 | 0.65 | 0.36 |
| YOLOv5-m | Darknet53 | 98m | 0.44 | 0.69 | 0.39 |
| Faster R-CNN | Resnet | 142m | 0.52 | 0.70 | 0.43 |
| Mask R-CNN | Resnet | 229m | 0.51 | 0.75 | 0.40 |
| Modified Mask R-CNN (Swin-Mask-RCNN) | Swin Transformer + FPN | 229m | 0.68 | 0.87 | 0.57 |

| Model | mAP | AP50 | AP50s |
|---|---|---|---|
| YOLOv5-s + SAHI | 0.51 | 0.71 | 0.42 |
| YOLOv5-m + SAHI | 0.56 | 0.74 | 0.46 |
| Faster R-CNN + SAHI | 0.60 | 0.72 | 0.46 |
| Mask R-CNN + SAHI | 0.62 | 0.78 | 0.52 |
| Swin-Mask R-CNN + SAHI (ours) | 0.74 | 0.93 | 0.67 |
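
The gains attributed to SAHI can be recomputed directly from the two comparison tables. The short script below is only a convenience sketch: the dictionary and function names are hypothetical, and the values are copied from the tables without and with SAHI in the order (mAP, AP50, AP50s). Gains are expressed in percentage points, that is, the absolute difference multiplied by 100.

```python
# Scores copied from the comparison tables: (mAP, AP50, AP50s).
baseline = {
    "YOLOv5-s": (0.45, 0.65, 0.36),
    "YOLOv5-m": (0.44, 0.69, 0.39),
    "Faster R-CNN": (0.52, 0.70, 0.43),
    "Mask R-CNN": (0.51, 0.75, 0.40),
    "Swin-Mask R-CNN": (0.68, 0.87, 0.57),
}
with_sahi = {
    "YOLOv5-s": (0.51, 0.71, 0.42),
    "YOLOv5-m": (0.56, 0.74, 0.46),
    "Faster R-CNN": (0.60, 0.72, 0.46),
    "Mask R-CNN": (0.62, 0.78, 0.52),
    "Swin-Mask R-CNN": (0.74, 0.93, 0.67),
}


def gains_in_points(before, after):
    """Absolute gain per metric, in percentage points (rounded to whole points)."""
    return tuple(round((a - b) * 100) for b, a in zip(before, after))


# Per-model gain from adding SAHI at inference time.
gains = {name: gains_in_points(baseline[name], with_sahi[name]) for name in baseline}

# Model with the highest mAP once SAHI is applied.
best_model = max(with_sahi, key=lambda name: with_sahi[name][0])

for name, (d_map, d_ap50, d_ap50s) in gains.items():
    print(f"{name}: mAP +{d_map}, AP50 +{d_ap50}, AP50s +{d_ap50s} points")
```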

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).