Submitted: 11 November 2023

Posted: 14 November 2023

Abstract

Keywords:

1. Introduction

- a. On-demand self-service model

- b. Data Security and Backup

- c. Choice of public/private data

- d. Optimized Resource sharing and allocation

- e. Meeting changing business needs

2. Cloud Migration Methods

2.1. Cloud Migration Scenarios

- a. Partial migration

- b. Overall migration

2.2. Cloud Migration Tools and Services

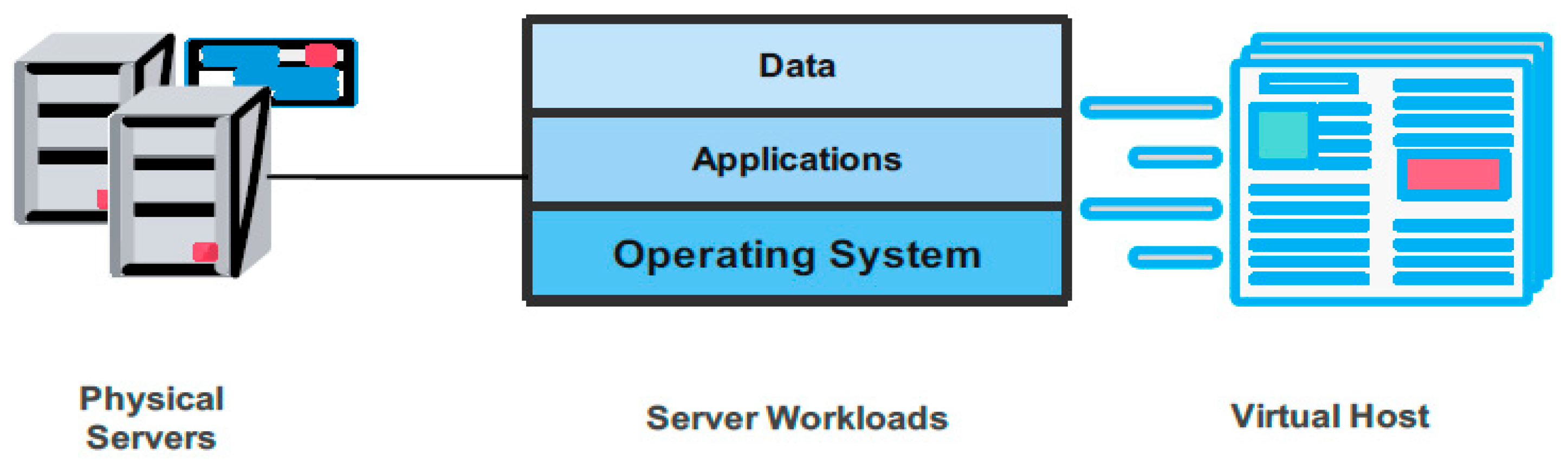

- PlateSpin Migrate

- b.

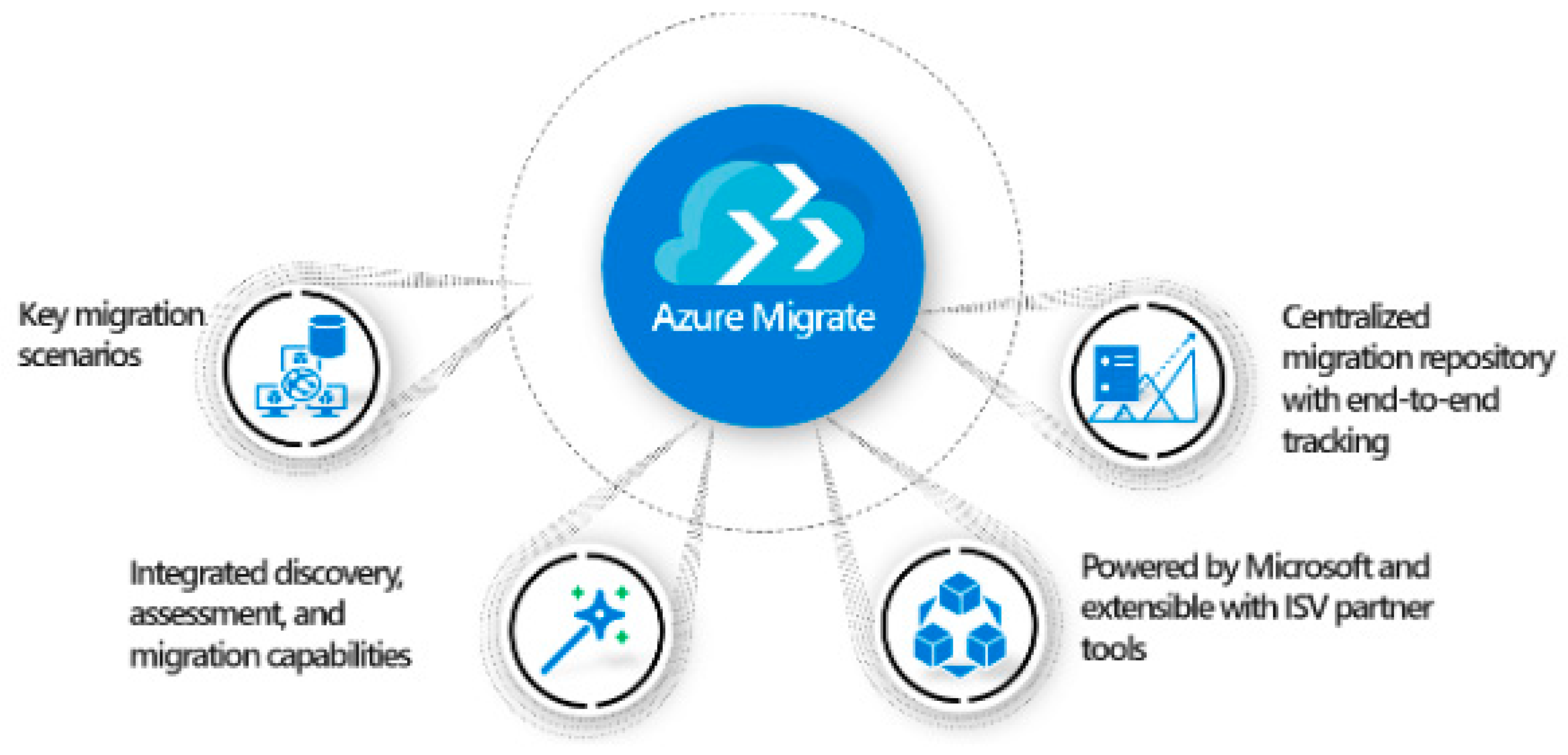

- AWS Migration Services

- c.

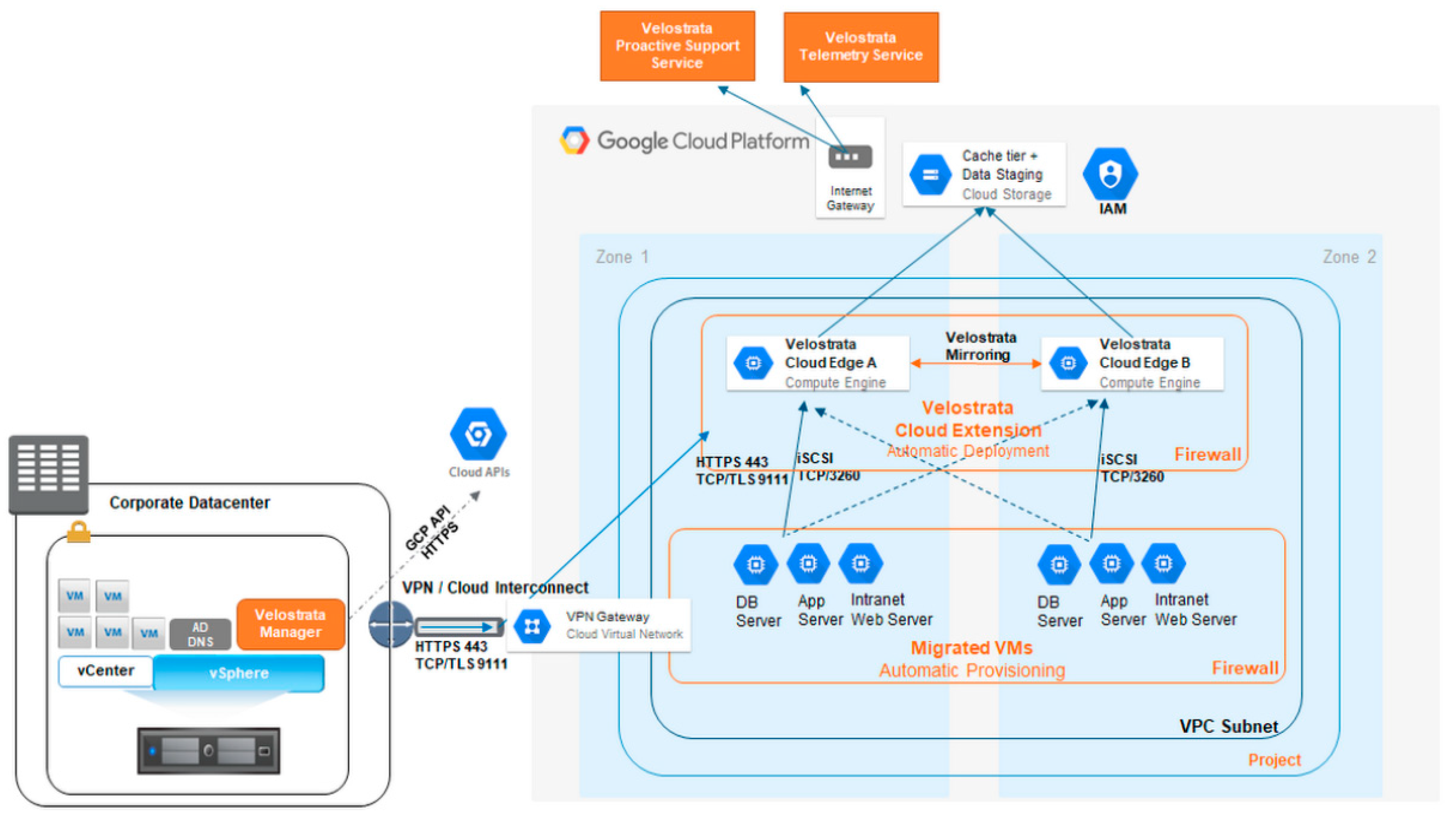

- Google Migration Services /Velostrata

2.3. Cloud Migration Test, Implementation, and Validation

- a. Migration test

- b. Migration implementation and verification

3. Cloud Computing Deployment and Service Models

3.1. Cloud Computing Service Models

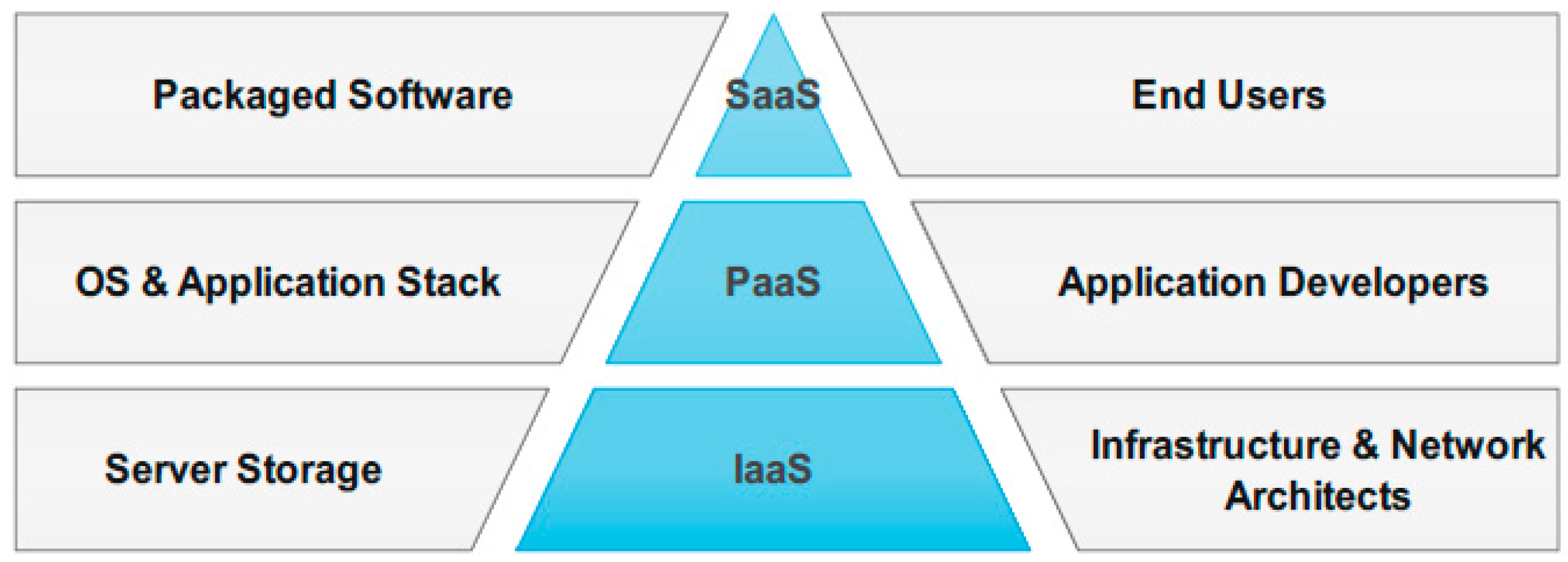

- a. Software as a Service (SaaS)

- b. Platform as a Service (PaaS)

- c. Infrastructure as a Service (IaaS)

- a. Cost Saving

- b. Scalability

- c. Centralised management

- d. Business Continuity

3.2. Cloud Computing Deployment Models

- a. Public Cloud

- b. Private Cloud

  - i. Maintenance & System Logs

  - ii. Geographical access

  - iii. Security & Privacy

- c. Community Cloud

- d. Hybrid Cloud

4. SDN-Enabled Cloud Computing

5. Conclusion

| Parameters | Partial migration | Overall migration |
|---|---|---|
| Protection of original equipment | Existing equipment is used effectively. | The original equipment cannot be used, so the original equipment investment is wasted. |
| Cost of construction | The direct construction cost of the project is relatively high, but the overall cost is relatively low once the expense of occupying cloud platform resources is considered. | The direct construction cost of the project is low, but the overall cost is high once the expense of the cloud platform is considered. |
| Risks to the business | Only some devices depend on the stability and maturity of the cloud, so the impact on services and the risks are relatively small. | The entire system depends heavily on the stability and maturity of the cloud. When a fault occurs, the system must be rolled back in time to keep services running. |
| System architecture | The original system architecture must be split into central-node and sub-node functions, which may affect the performance and stability of the system. Only a few modifications to the system architecture are needed to migrate to the cloud platform. | Implementation requires cutting over some user data and adjusting the system architecture. The project's complexity is high, and the implementation duration is lengthy. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).