1. Introduction

Wildfires have increased in number, frequency, and severity in the United States in recent years, with the western states, particularly California, being severely impacted [1,2,3]. Climate change, anthropogenic activities, and other factors have worsened the frequency and severity of wildfires. Catastrophic wildfire events have short- and long-term impacts on the economy, human health, ecosystems, watersheds, and built environment, highlighting the need for effective wildfire monitoring [4]. Real-time monitoring is crucial for providing timely and accurate information on the location, size, and intensity of wildfires. Such information supports efficient emergency response management and decisions related to firefighting, public safety, and evacuation orders.

Remote sensing technologies, including terrestrial-based, aerial-based, and satellite-based systems, are used to provide information on wildfires, such as their location, rate of spread, and fire radiative power, which can help analyze wildfire behavior and manage its impact [5,6]. Terrestrial-based systems are highly accurate and have a fast response time, but their coverage is limited, and they are vulnerable to blockage [7]. Aerial-based systems provide detailed fire progression mapping, but their deployment during emergencies can be challenging [8,9]. Satellite-based systems, such as the Earth Observation (EO) system, allow for detection of wildfires over a vast area, including remote or inaccessible areas. However, the spatial and/or temporal resolution of these systems is limited and does not provide the level of detail needed for emergency response applications [10]. Low Earth Orbit (LEO) satellites offer high spatial resolution, but they typically capture snapshots of the same area at low temporal resolution (e.g., hours or days) [11]. Landsat-8/9 [12] and Sentinel-2A/2B [13] provide multi-spectral global coverage with a resolution of 10 m to 30 m [14], but their revisit intervals of 8 days for Landsat-8/9 and 5 days for Sentinel-2A/2B are inadequate for monitoring active fires [15]. Other instruments on board LEO satellites, such as the Visible Infrared Imaging Radiometer Suite (VIIRS) [16] on the Suomi National Polar-orbiting Partnership (S-NPP) satellite and the Moderate Resolution Imaging Spectroradiometer (MODIS) [17] on the Terra and Aqua satellites, are commonly used to detect active fire points with twice-daily revisits [18]. VIIRS offers a spatial resolution of 375 m, while MODIS provides a spatial resolution of 1 km [19,20]. On the other hand, Geosynchronous Equatorial Orbit (GEO) satellites provide high temporal resolution but lower spatial resolution (2 km) due to their higher altitude above the Earth [21]. An example of a GEO system is the Geostationary Operational Environmental Satellites R Series (GOES-R), consisting of three geostationary satellites, GOES-16, -17, and -18, which continuously monitor the entire western hemisphere, including North America, South America, the Pacific Ocean, the Atlantic Ocean, and Western Africa. However, due to its low spatial resolution, the GOES-R active fire product has been found to be unreliable, with a false alarm rate of around 60% to 80% for medium and low confidence fire pixels [22]. While it may struggle with some fire detections, GOES-R performs well for many high-impact fires, as demonstrated by studies such as Lindley et al. (2020) [23] for the Kincade Fire.

Artificial Intelligence (AI) has the potential to improve the temporal and spatial accuracy of wildfire monitoring. AI methods, particularly Deep Learning (DL) models, have been effective in solving complex tasks such as image classification [24], object detection [25,26], and semantic segmentation [27]. Building on these successes, recent research has explored the application of DL models on satellite imagery for land-use classification [28] and urban planning [29,30]. Leveraging success in high-level AI tasks such as image segmentation and super-resolution, it might be possible to develop a high-resolution wildfire monitoring system that can improve our ability to detect and respond to wildfire incidents using available data systems.

A few recent studies have employed DL-based approaches for early wildfire detection from streams of remote sensing data. These studies have focused mainly on active fire detection or fire area mapping, without monitoring quantities that characterize fire intensity. Toan et al. (2019) proposed a deep convolutional neural network (CNN) architecture for wildfire detection using GOES satellite hyperspectral images [31]. Their model employs 3D convolutional layers to capture spatial patterns across multiple spectral bands of GOES and a patch normalization layer to locate fires at the pixel level. The study demonstrates the potential of using DL models for early wildfire detection and monitoring. In a related study, Toan et al. (2020) proposed another DL-based approach for the early detection of bushfires using multi-modal remote sensing data [32]. Their approach consisted of a DL model incorporating both CNNs and long short-term memory (LSTM) recurrent neural networks. The model was designed to process multi-modal remote sensing data at different scales, ranging from individual pixels (using CNN) to entire images (using LSTM). The authors reported a high detection accuracy, outperforming several state-of-the-art methods. Zhao et al. (2022) focused on using time-series data from GOES-R for early detection of wildfires [33]. The study proposed a DL model incorporating a gated recurrent unit (GRU) network to process the time-series data from GOES-R. The model was designed to learn the spatiotemporal patterns of wildfire events and to predict their likelihood at different locations. Using a sliding window technique to capture the temporal dynamics of wildfire events, the authors demonstrated that the model could detect wildfire events several hours before they are reported by official sources. McCarthy et al. (2021) proposed an extension of U-Net CNNs to geostationary remote sensing imagery with the goal of improving the spatial resolution of wildfire detections and enabling high-resolution active-fire monitoring [34]. Their study leverages the complementary properties of GEO and LEO sensors as well as static features related to topography and vegetation to inform the analysis of remote sensing imagery with physical knowledge about the fire behavior. However, the study acknowledged a limitation of the proposed algorithm in terms of false positives and emphasized the need for further research to address this issue. Overall, the published literature demonstrates the potential of DL methods for early detection and monitoring of wildfires using remote sensing data from different sources. Recently, Ghali et al. (2023) provided a comprehensive analysis of recent (between 2018 and 2022) DL models used for wildland fire detection, mapping, and damage and spread prediction using satellite data [35]. However, these studies are limited to fire detection without fire boundary and intensity monitoring. To the best of our knowledge, there is no published study that attempts to improve the spatial resolution of GOES for operational wildfire monitoring.

This work aims to address the mentioned gap in remote monitoring of wildfires by presenting a framework that utilizes DL techniques to enhance the spatial resolution of GOES-17 satellite images using VIIRS data as ground truth. In this context, we have performed an ablation study using different loss functions, evaluation metrics, and variations of a DL architecture known as autoencoder. To enable DL models to use contemporaneous data that share similar spectral and projection characteristics, a scalable dataset creation pipeline is developed, which can accommodate the addition of new sites. An automated real-time streaming and visualization dashboard system can utilize the proposed framework to transform relevant GOES data into high spatial resolution images in near-real-time.

The rest of the paper is organized as follows. Section 2 presents an overview of the satellite data streams used in the study and describes the preprocessing steps taken to ensure consistency. Section 3 discusses the proposed approach, including the autoencoder architectures, loss functions, and evaluation metrics. Finally, Section 4 presents the experimental results and comparisons, followed by conclusions in Section 5.

2. Materials and Methods

2.1. Data Source

2.1.1. Geostationary Operational Environmental Satellite (GOES)

Launched by the National Oceanic and Atmospheric Administration (NOAA), GOES-17 has been operational as GOES-West since February 12, 2019. This geostationary satellite is 35,700 km above the Earth, providing a constant watch over the Pacific Ocean and the western United States [36]. The Advanced Baseline Imager (ABI) is the primary instrument of GOES for imaging Earth’s weather, oceans, and environment. ABI views the Earth with 16 spectral bands, including two visible channels (channels 1-2 with approximate center wavelengths of 0.47 and 0.64 µm), four near-infrared channels (channels 3-6 with approximate center wavelengths of 0.865, 1.378, 1.61, and 2.25 µm), and ten mid- and long-wave infrared (IR) channels (channels 7-16 with approximate center wavelengths of 3.900, 6.185, 6.950, 7.340, 8.500, 9.610, 10.350, 11.200, 12.300, and 13.300 µm) [36]. These channels are used by various models and tools to monitor different elements on the Earth’s surface, such as trees and water, or in the atmosphere, such as clouds, moisture, and smoke [36]. Dedicated products are available for cloud formation, atmospheric motion, convection, land surface temperature, ocean dynamics, vegetation health, and flow of water, fire, smoke, volcanic ash plumes, aerosols, air quality, etc. [37].

In this study, channel 7 (shortwave IR) of the Level 1B (L1B) Radiances product (ABI-L1B-Rad) is used as input to the DL model. In scan mode six, the product captures one observation of the Continental U.S. (CONUS) every 5 min at a spatial resolution of 2 km [38]. The L1B data product contains measurements of the radiance values (measured in milliwatts per square meter per steradian per reciprocal centimeter) from the Earth's surface and atmosphere. These radiances are used to identify cloudy and hot regions within the satellite’s field of view [39]. The measured radiance values are converted to brightness temperature (BT) in this study to facilitate data analysis. This conversion is explained in Section 2.2.1.

2.1.2. Visible Infrared Imaging Radiometer Suite (VIIRS)

The Visible Infrared Imaging Radiometer Suite (VIIRS) instrument is installed on two polar-orbiting satellites, namely Suomi National Polar-orbiting Partnership (S-NPP), operational from 7 March, 2012 [40], and NOAA’s Joint Polar Satellite System (JPSS), now called NOAA-20, operational from 7 March, 2018 [41]. These two satellites are 50 min apart, 833 km above the Earth, and revolve around the Earth in a polar orbit [41]. For each site, these satellites make two passes daily, one during the day and one at night [42]. VIIRS features daily imaging capabilities across multiple electromagnetic spectrum bands to collect high-resolution atmospheric imagery, including visible and infrared images, to detect fire, smoke, and particles in the atmosphere [43]. The VIIRS instrument provides 22 spectral bands, including five imagery 375 m resolution bands (I bands), 16 moderate 750 m resolution bands (M bands), and one Day-Night Band (DNB) [44]. The I bands include a visible channel (I1) as well as a near, a shortwave, a mediumwave, and a longwave IR channel (I2-I5) with center wavelengths of 0.640, 0.865, 1.610, 3.740, and 11.450 µm, respectively. The M bands include five visible channels (M1-M5) together with two near IR (M6-M7), four shortwave IR (M8-M11), two mediumwave IR (M12-M13), and three longwave IR (M14-M16) channels with center wavelengths of 0.415, 0.445, 0.490, 0.555, 0.673, 0.746, 0.865, 1.240, 1.378, 1.610, 2.250, 3.700, 4.050, 8.550, 10.763, and 12.013 µm, respectively [44]. The panchromatic DNB is ultra-sensitive in low-light conditions and operates at a central wavelength of 0.7 µm [45].

In this study, the VIIRS (S-NPP) I-band Active Fire Near-Real-Time product (i.e., VNP14IMGTDL_NRT) [46] is used as ground truth to improve the spatial resolution of GOES imagery, because its 375 m spatial resolution is considerably finer than the 2 km spatial resolution of GOES. Furthermore, VIIRS shows good agreement with its predecessors in hotspot detection [47], and it provides an improvement in the detection of relatively small fires as well as the mapping of large fire perimeters [48]. The VIIRS data are available from January 20, 2012 to present [49].

2.2. Data Pre-Processing

DL models require two sets of images: input images (i.e., GOES images herein) and ground truth or reference images (i.e., VIIRS images herein). The prediction of the DL model and ground truth are compared pixel-by-pixel, and the difference between the prediction and ground truth (i.e., loss function value) is used by the model to learn its parameters via backpropagation [50]. Hence, the input and ground truth images should have the same size, projection, time instance, and location. However, the initial formats of GOES and VIIRS data are different. VIIRS fire data, as obtained from NASA’s Fire Information for Resource Management System (FIRMS), are represented in vector form in CSV format [38,51], whereas GOES data are represented in raster form in NetCDF format [52,53]. In the vector form, data features are represented as points or lines, while in the raster form, data features are presented as pixels arranged in a grid. Vector data need to be converted into a pixel-based format for proper display and comparison with raster data [54]. Furthermore, GOES images contain snapshots of both fire and its surrounding background information, whereas VIIRS data contain only the location and radiance value of globally detected fire hotspots. Therefore, data pre-processing is required to make the initial formats and projections of GOES and VIIRS data consistent.

The pre-processing pipeline aims to create a consistent dataset of images from multiple wildfire sites, with standardized dimensions, projections, and formats. Each processed GOES image in the dataset corresponds to a processed VIIRS image representing the same region and time instance of a wildfire event. To facilitate this process, a comprehensive list of wildfire events in the western U.S. between 2019 and 2021 is compiled from multiple sources [55,56] (see Appendix A). This wildfire property list (WPL) plays a key role in several pre-processing steps, such as defining the region of interest (ROI) for each wildfire site. To obtain the four corners of the ROI, a constant value is added to/subtracted from the central coordinates specified in the WPL. For each wildfire event defined by its ROI and its duration as included in the WPL, the pre-processing pipeline conducts the following four steps.

Step 1: Extracting wildfire event data from VIIRS and identifying timestamps. The pipeline first extracts the records from the VIIRS CSV file to identify detected fire hotspots that fall within the ROI and duration of the wildfire event. The pipeline also identifies unique timestamps from the extracted records.

Step 2: Downloading GOES images for each identified timestamp. To ensure a contemporaneous dataset, the pre-processing pipeline downloads the GOES image whose capture time is nearest to each VIIRS timestamp identified in Step 1. GOES has a temporal resolution of 5 minutes, meaning that there will always be a GOES image within 2.5 minutes of the VIIRS capture time, except in cases where the GOES file is corrupted [57]. In case of corrupted GOES data, Steps 3 and 4 are skipped and the pipeline proceeds to the next timestamp.

Step 3: Creating processed GOES images. The GOES images obtained in Step 2 have a different projection from the corresponding VIIRS data. In this step, the GOES images are cropped to match the site's ROI and reprojected into a standard coordinate reference system (CRS).

Step 4: Creating processed VIIRS images. The VIIRS records obtained in Step 1 are grouped by timestamp and rasterized, interpolated, and saved into GeoTIFF images using the same projection as the one used to reproject GOES images in Step 3.
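The timestamp matching in Step 2 can be illustrated with a minimal sketch. It assumes that the start times of the available GOES scans have already been listed, and that the public bucket follows a `product/year/day-of-year/hour/` key layout; the function names `hourly_prefix` and `closest_scan`, the product key `ABI-L1b-RadC`, and the example scan times are illustrative assumptions, not part of the pipeline described above.

```python
from datetime import datetime, timedelta

# Half of the 5-minute GOES cadence quoted in the text.
MAX_OFFSET = timedelta(minutes=2.5)


def hourly_prefix(t, bucket="noaa-goes17", product="ABI-L1b-RadC"):
    """Build the bucket prefix for one hour of scans (assumed key layout)."""
    day_of_year = t.timetuple().tm_yday
    return f"{bucket}/{product}/{t.year}/{day_of_year:03d}/{t.hour:02d}/"


def closest_scan(viirs_time, scan_times, max_offset=MAX_OFFSET):
    """Return the scan start time nearest to viirs_time, or None if too far."""
    if not scan_times:
        return None
    best = min(scan_times, key=lambda s: abs(s - viirs_time))
    return best if abs(best - viirs_time) <= max_offset else None


# Hypothetical VIIRS overpass time and GOES scan start times.
viirs_time = datetime(2020, 9, 9, 21, 3, 40)
scans = [
    datetime(2020, 9, 9, 20, 56, 17),
    datetime(2020, 9, 9, 21, 1, 17),
    datetime(2020, 9, 9, 21, 6, 17),
]
print(hourly_prefix(viirs_time))
print(closest_scan(viirs_time, scans))
```

Returning `None` when no scan lies within the tolerance mirrors the behavior described for corrupted or missing files, where the remaining steps are skipped for that timestamp.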

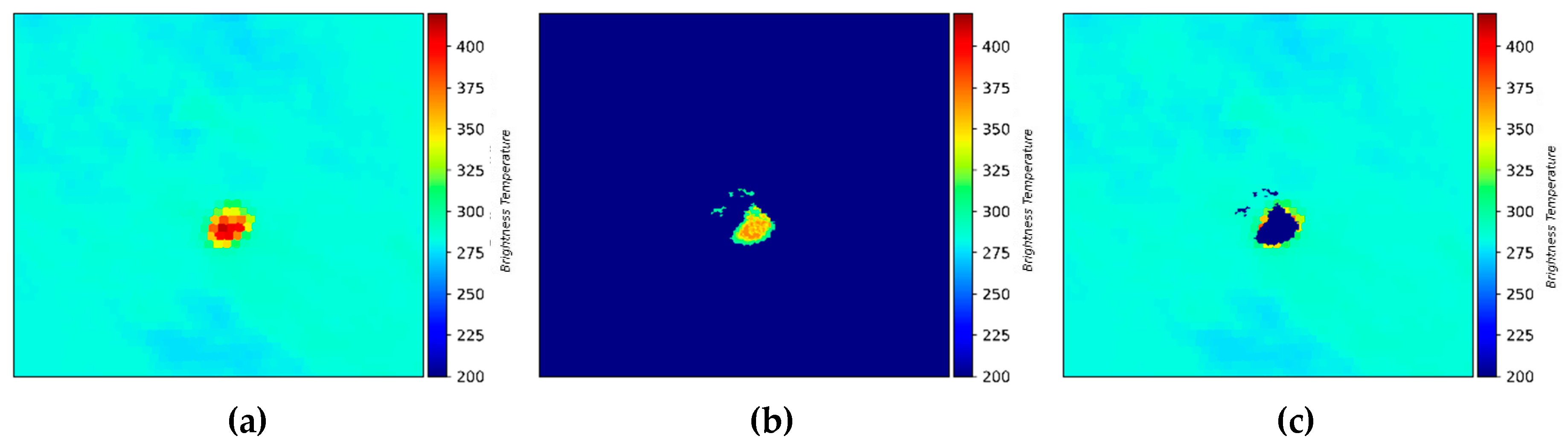

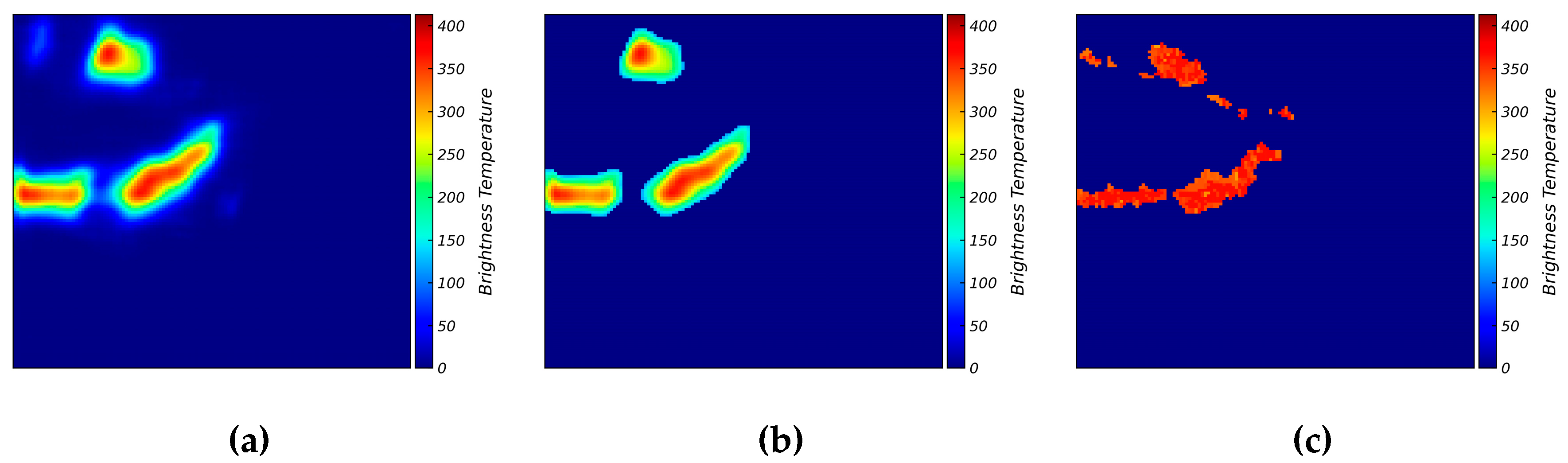

The above steps are explained in more detail in the following subsections. Once these steps are completed, the GOES and VIIRS images will have the same image size and projection. An example of the processed GOES and VIIRS images is shown in Figure 1a and Figure 1b, respectively. In Figure 1c, where the VIIRS image is overlaid on the GOES image, the VIIRS fire region almost completely covers the GOES fire region, which verifies the data pre-processing pipeline. Note that the dark color in Figure 1c does not signify low temperatures but rather outlines the contours of fires detected in the VIIRS image, distinguishing them from the underlying GOES image. It should also be noted that in Figure 1a, the GOES image includes both fire pixels and background information, while in Figure 1b, the output VIIRS image only contains fire pixels without any background information.

2.2.1. GOES Pre-Processing

The NOAA Comprehensive Large Array-data Stewardship System (CLASS) repository [3] is the official site for accessing GOES products. ABI fire products are also available publicly in Amazon Web Services (AWS) S3 Buckets [4]. The Python s3fs [5] library, which exposes an AWS S3 bucket through a filesystem-like interface, is utilized in this study to access the AWS bucket “noaa-goes17”. As previously mentioned, the downloaded GOES images cover the western U.S. and Pacific Ocean and need to be cropped to the specific wildfire site's ROI. Additionally, the unique projection system of GOES, known as the "GOES Imager Projection" [58], must be transformed to a standard CRS to make it comparable with VIIRS. In this study, the WGS84 system (latitude/longitude) is used as the standard CRS. The projection transformation is achieved using the Satpy Python library [59], which is specifically designed for reading, manipulating, and writing data from earth-observing remote sensing instruments. Specifically, the Satpy scene function [60] is utilized to create a GOES scene from the downloaded GOES file, which allows the transformation of the GOES CRS to the WGS84 system [61]. The Satpy area definition [62] is then applied to crop the GOES scene to match the ROI. Furthermore, Satpy enables the conversion of radiance values, measured in milliwatts per square meter per steradian per reciprocal centimeter, to brightness temperature (BT) values, expressed in Kelvin (K), using Planck’s law [63]. This transformation is conducted to have the same physical variable as VIIRS data to facilitate training. Additionally, to ensure the accuracy of these converted values, a comparison is conducted with the Level 2 GOES Cloud and Moisture Imagery (CMI) product. This validation process confirms the reliability and appropriateness of the radiance-to-BT conversion for our analysis. Note that Level 1 data is utilized throughout due to its real-time availability, as Level 2 data is not real-time and may not be suitable for time-sensitive applications.
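The study relies on Satpy for the radiance-to-BT conversion; the sketch below only illustrates the underlying arithmetic. It assumes the band-corrected inverse Planck form commonly used with ABI calibration, in which four per-band coefficients (here named `fk1`, `fk2`, `bc1`, `bc2`) are read from the L1B file. The numeric coefficient values are illustrative placeholders for a 3.9 µm channel and should not be taken as the calibration used in this work.

```python
import math


def radiance_to_bt(radiance, fk1, fk2, bc1, bc2):
    """Invert the Planck function and apply the band correction (result in K)."""
    return (fk2 / math.log(fk1 / radiance + 1.0) - bc1) / bc2


def bt_to_radiance(bt, fk1, fk2, bc1, bc2):
    """Forward relation, used here only to check the inversion."""
    return fk1 / (math.exp(fk2 / (bc1 + bc2 * bt)) - 1.0)


# Placeholder coefficients (assumption); read the real ones from the file.
COEFFS = dict(fk1=2.02263e5, fk2=3.69819e3, bc1=0.43361, bc2=0.99939)

radiance = bt_to_radiance(300.0, **COEFFS)
print(radiance_to_bt(radiance, **COEFFS))  # recovers roughly 300 K
```

Because the mapping is monotonic, hotter pixels always have larger radiances, so thresholds defined in one variable carry over to the other.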

2.2.2. VIIRS Pre-Processing

Annual summaries of VIIRS-detected fire hotspots in CSV format are accessible by country through the Fire Information for Resource Management System (FIRMS) [52], which is a part of NASA’s Land Atmosphere Near-Real-time Capability for Earth Observing System (LANCE) [53]. In this study, annual summaries from 2019 to 2021 are utilized since corresponding GOES-17 files are only available from 2019, the year in which GOES-17 became operational. The CSV files contain the I-4 channel brightness temperature (3.55-3.93 µm) of the fire pixel measured in K, referred to as b-temperature I-4, along with other measurements and information such as the acquisition date, acquisition time, and latitude and longitude fields. Notably, the annual summary files are exclusively available for S-NPP.

The VIIRS-detected fire hotspot vector data are available in CSV format, with the longitude and latitude coordinates of each fire hotspot defining its location at the center of a 375 m by 375 m pixel. To ensure its compatibility with GOES data for the DL model, the vector data is converted into a pixel-based format through rasterization [

64]. This process involves mapping the vector data to pixels, resulting in an image that can be displayed [

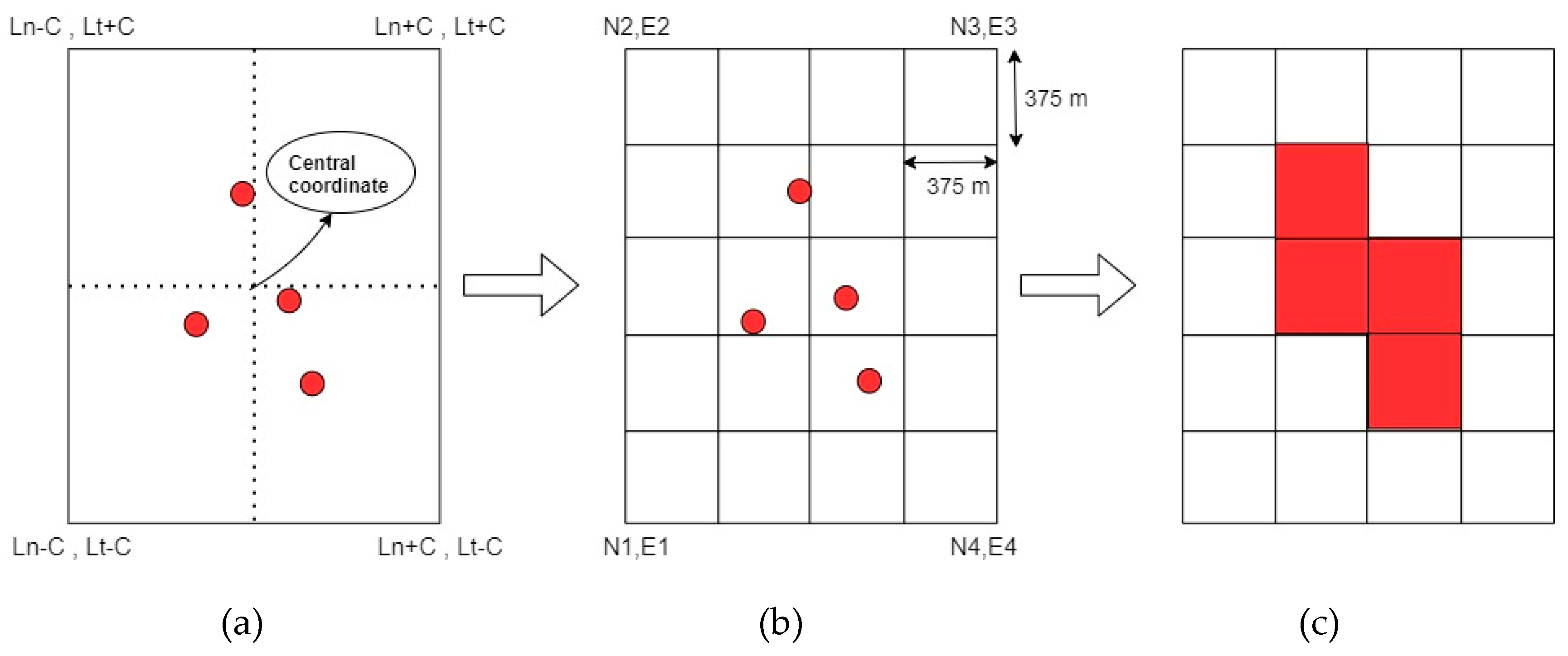

65]. This process has three main steps: (1) defining the ROI, (2) defining the grid system over the ROI, and (3) mapping the fire data to the pixels. This process is illustrated schematically in

Figure 2.

The first step involves defining the ROI for the wildfire site. To accomplish this, a constant value C = 0.6 degrees is added to/subtracted from the center coordinates of the fire site as defined in the WPL to obtain the four corners of the ROI. Figure 2a shows the defined ROI around the center of the fire hotspots, which are represented by the red dots. The second step involves overlaying a grid system over the ROI as illustrated in Figure 2b. Each cell in the grid system corresponds to a single pixel in the output image, and a specific cell size of 375 m by 375 m is selected to match the resolution of the VIIRS hotspot detection. This step requires transforming the entire ROI from longitude/latitude space to northing/easting (distance) space, using the transformation function of the PyProj Python library [66]. The final step is to map the fire locations from the CSV file to the corresponding pixels. If the central coordinates of a fire hotspot fall within the cell associated with a pixel, that pixel is activated and assigned a value based on the measured I-4 BT and I-5 BT values from the VIIRS CSV file, according to Eq. 1.

$$\text{Pixel value} = \begin{cases} BT_{I5}, & \text{if } BT_{I4} = 208\ \text{K} \\ BT_{I5}, & \text{if } BT_{I5} > BT_{I4} \\ BT_{I4}, & \text{otherwise} \end{cases} \qquad (1)$$

where $BT_{I4}$ is the channel I-4 BT and $BT_{I5}$ is the channel I-5 BT for each hotspot defined in the VIIRS CSV file. Eq. 1 is employed to overcome VIIRS data artifacts as outlined in [1]. Specifically, the first condition is for instances where the VIIRS pixel is located in the core area of intense wildfire activity, causing pixel saturation. This, in turn, leads to complete folding of the count, resulting in I-4 BT values of 208 K. The second condition in Eq. 1 is designed to deal with “mixed pixels”, where saturated and unsaturated native pixels are mixed during the aggregation process, leading to artificially low I-4 BT values.

Figure 2c illustrates this process by showing the activation of pixels without displaying their actual values for simplicity.
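The three rasterization steps can be sketched as follows. This is a simplified illustration, not the pipeline itself: it assumes the hotspot coordinates have already been transformed to easting/northing in meters, and it encodes one plausible reading of Eq. 1, namely that the I-5 BT replaces the I-4 BT when the I-4 value is folded to 208 K or falls below the I-5 value. The function names and the tuple layout of `hotspots` are hypothetical.

```python
import math

CELL_M = 375.0        # grid cell size from the text
SATURATED_BT = 208.0  # folded I-4 value from the text


def roi_corners(lat, lon, c=0.6):
    """Step 1: bounding box obtained by adding/subtracting C degrees."""
    return {"lat_min": lat - c, "lat_max": lat + c,
            "lon_min": lon - c, "lon_max": lon + c}


def pixel_value(bt_i4, bt_i5):
    """Assumed reading of Eq. 1: fall back to I-5 BT for artifact pixels."""
    if bt_i4 == SATURATED_BT or bt_i4 < bt_i5:
        return bt_i5
    return bt_i4


def rasterize(hotspots, x_min, y_max, n_rows, n_cols, cell=CELL_M):
    """Steps 2-3: activate the grid cell that contains each hotspot center.

    hotspots: iterable of (easting_m, northing_m, bt_i4, bt_i5).
    Row 0 is the northern edge of the ROI; background stays at 0.
    """
    grid = [[0.0] * n_cols for _ in range(n_rows)]
    for x, y, bt_i4, bt_i5 in hotspots:
        col = math.floor((x - x_min) / cell)
        row = math.floor((y_max - y) / cell)
        if 0 <= row < n_rows and 0 <= col < n_cols:
            grid[row][col] = pixel_value(bt_i4, bt_i5)
    return grid
```

Hotspots that fall outside the ROI are silently dropped, which matches the idea that only detections within the ROI contribute to the ground truth image.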

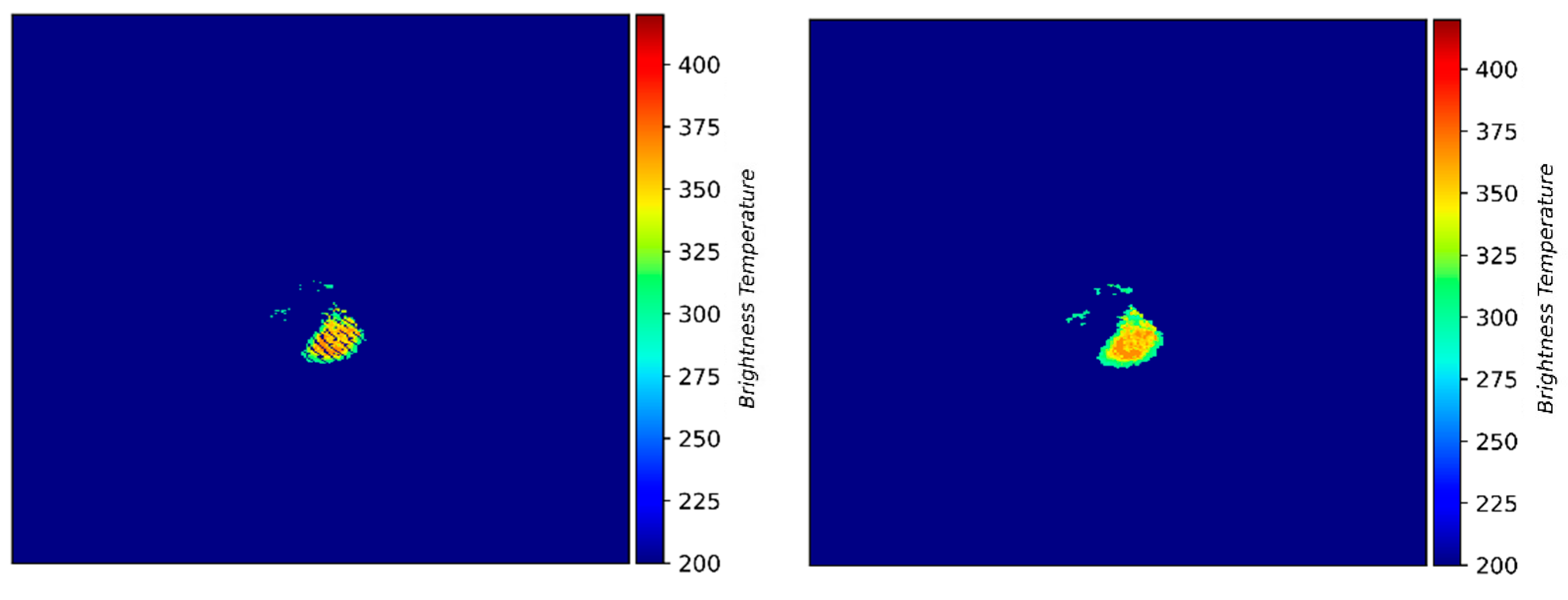

Following the rasterization process, nearest neighbor interpolation [2] is performed for each non-fire pixel that has a neighboring fire pixel. This interpolation is used to eliminate any artificial patches in and around the fire region, as shown in Figure 3a, and to ensure that the output image, as shown in Figure 3b, accurately represents the extent of the fire. Finally, the created raster is saved in GeoTIFF format using the GDAL library for future use [3].

3. Proposed Approach

3.1. Autoencoder

Autoencoders are among the popular DL architectures for image super-resolution [4]. An autoencoder takes a low-resolution image as input, learns to recognize the underlying structure and patterns, and generates a high-resolution image that closely resembles the ground truth [5]. Autoencoders have two main components: (1) the encoder, which extracts important features from the input data, and (2) the decoder, which generates an output based on the learned features. Together, they can effectively distill relevant information from an input to generate the desired output [5].

In this study, an autoencoder is tasked with distilling the relevant portions of the input GOES-17 imagery to generate an output with increased resolution and no background noise (e.g., reflections from clouds and lakes) to mimic VIIRS imagery.

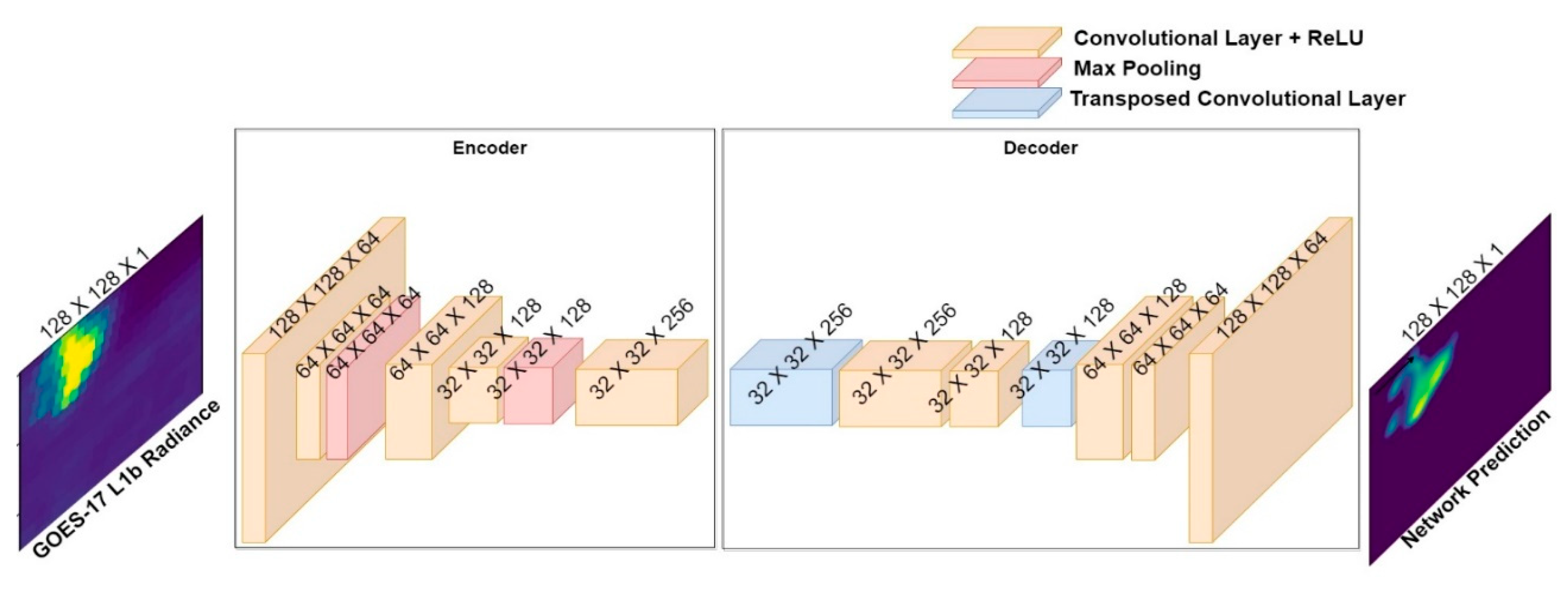

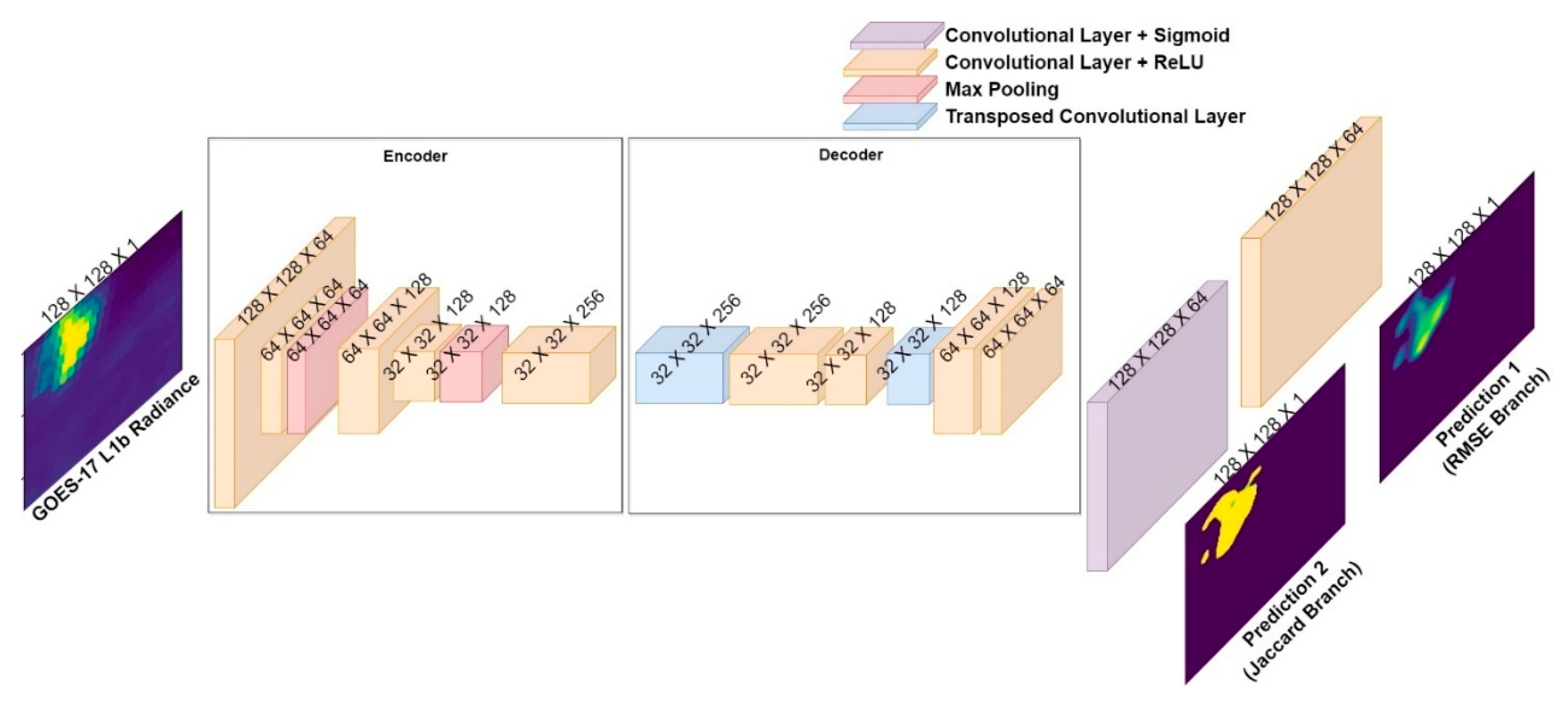

Figure 4 illustrates the autoencoder architecture along with the dimensions for each layer utilized in this study. As can be seen, the model's encoder component is composed of five two-dimensional convolutional layers with a kernel size of three and a padding of one, each followed by a Rectified Linear Unit (ReLU) activation layer [6]. This architecture allows the model to recognize important features in the low-resolution input images while preserving their spatial information. The use of ReLU activation layers after each convolutional layer introduces nonlinearity into the model, which is critical for the network's ability to learn complex patterns present in the input images. Additionally, the second and fourth convolutional layers are followed by max pool layers, which down-sample the feature maps and reduce the spatial dimensions of the data. Meanwhile, the decoder component consists of two blocks, each containing one transposed convolutional layer [7] and two normal convolutional layers with a kernel size of three. The use of transposed convolutional layers in the decoder allows the model to up-sample the feature maps and generate a high-resolution output image that closely resembles the ground truth image. The normal convolutional layers that follow the transposed convolutional layers help to refine the features and details in the output image. Lastly, the decoder ends with a final convolutional layer that produces the final output image. This architecture is chosen in this study after testing several modifications such as altering the activation functions, adding or removing convolutional layers, and increasing the number of blocks. The final architecture is chosen based on its ability to produce high-quality output images that accurately represent the ground truth images while minimizing the computational resources required for training.
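To make the down-sampling and up-sampling path concrete, the following sketch traces the spatial size of a square feature map through the layer sequence described above, using the standard output-size formulas for convolution, pooling, and transposed convolution. Several settings are assumptions because they are not stated in the text: 2×2 max pooling with stride 2, transposed convolutions with kernel 2 and stride 2, and a padding of one in the decoder convolutions. Channel counts are omitted since they are given only in Figure 4.

```python
def conv2d_out(size, kernel=3, stride=1, padding=1):
    """Output size of a convolution; kernel 3 with padding 1 keeps the size."""
    return (size + 2 * padding - kernel) // stride + 1


def maxpool_out(size, kernel=2, stride=2):
    """Output size of max pooling (assumed 2x2, stride 2)."""
    return (size - kernel) // stride + 1


def conv_transpose2d_out(size, kernel=2, stride=2, padding=0):
    """Output size of a transposed convolution (assumed kernel 2, stride 2)."""
    return (size - 1) * stride - 2 * padding + kernel


def trace_autoencoder(size):
    """List (layer name, spatial size) pairs for the described layer order."""
    shapes = [("input", size)]
    for i in range(1, 6):                      # five encoder convolutions
        size = conv2d_out(size)
        shapes.append((f"enc_conv{i}", size))
        if i in (2, 4):                        # pooling after conv 2 and 4
            size = maxpool_out(size)
            shapes.append((f"enc_pool{i}", size))
    for b in (1, 2):                           # two decoder blocks
        size = conv_transpose2d_out(size)
        shapes.append((f"dec{b}_up", size))
        for j in (1, 2):
            size = conv2d_out(size)
            shapes.append((f"dec{b}_conv{j}", size))
    size = conv2d_out(size)                    # final output convolution
    shapes.append(("output", size))
    return shapes


for name, size in trace_autoencoder(128):
    print(f"{name:12s} {size}")
```

Under these assumptions, the two pooling layers reduce the size by a factor of four and the two transposed convolutions restore it, so the output has the same dimensions as the input, consistent with GOES and VIIRS images sharing one grid after pre-processing.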

3.2. Loss Functions and Architectural Tweaking

The objective of this study is to enhance GOES-17 imagery by improving its spatial resolution and removing background information, as well as predicting improved radiance values. To achieve this, an ablation study is conducted by considering variations in the choice of the loss functions and autoencoder architecture, in order to determine the optimal solution. In particular, four different loss functions are considered, namely (1) global root mean square error, (2) global plus local root mean square error, (3) Jaccard loss, and (4) global root mean square error plus Jaccard loss, which are explained in detail in the following sections.

3.2.1. Global Root Mean Square Error (GRMSE)

Root mean square error (RMSE) is a loss function commonly used in image reconstruction and denoising tasks [8], where the goal is to minimize the difference between the predicted and ground truth images. In this study, the autoencoder model is initially trained using the RMSE loss function on the entire input image to predict the BT value for each input image pixel based on the VIIRS ground truth data. This is referred to as the global RMSE (GRMSE) as defined in Eq. 2. The term “global” is used here to distinguish it from the other RMSE-based loss, which will be discussed in the next section.

$$\mathrm{GRMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \qquad (2)$$

where $y_i$ represents the BT value of the $i$th pixel in the VIIRS ground truth image, $\hat{y}_i$ represents the BT value of the $i$th pixel in the predicted image, and $n$ represents the total number of pixels in the VIIRS/predicted image.

3.2.2. Global plus Local RMSE (GLRMSE)

Since the background area is often significantly larger than the fire area (e.g., see Figure 1c), it dominates the RMSE calculation, possibly leading to decreased training performance. For this reason, a local RMSE (LRMSE) is defined in Eq. 3. The LRMSE applies only to the fire area of the ground truth image (i.e., where the pixel’s BT value is non-zero). Specifically,

$$\mathrm{LRMSE} = \sqrt{\frac{\sum_{i=1}^{n} I_i\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n} I_i}} \qquad (3)$$

where $I_i$ is an identifier variable, which is one for all pixels belonging to the fire area and zero for the background. Based on this, the RMSE is calculated only for the fire region of the VIIRS ground truth image. The LRMSE is combined with the global RMSE, resulting in a global plus local RMSE loss function (GLRMSE) as defined in Eq. 4.

$$\mathrm{GLRMSE} = W_G \cdot \mathrm{GRMSE} + W_L \cdot \mathrm{LRMSE} \qquad (4)$$

where $W_G$ is a weight factor for the global RMSE and $W_L$ is a weight factor for the local RMSE. These weights are hyperparameters and are determined through hyperparameter optimization as will be discussed later.
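The three RMSE-based losses can be illustrated with a small dependency-free sketch operating on flattened pixel lists. In practice these would be written with a tensor library so that gradients can flow through them. The sketch assumes that the local term is averaged over the number of fire pixels in the ground truth, and the default weights of 1.0 are placeholders rather than the tuned values.

```python
import math


def grmse(y_true, y_pred):
    """Global RMSE over all pixels."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def lrmse(y_true, y_pred):
    """Local RMSE restricted to ground truth fire pixels (non-zero BT)."""
    fire = [(t, p) for t, p in zip(y_true, y_pred) if t != 0]
    if not fire:          # no fire in the ground truth: no local penalty
        return 0.0
    return math.sqrt(sum((t - p) ** 2 for t, p in fire) / len(fire))


def glrmse(y_true, y_pred, w_g=1.0, w_l=1.0):
    """Weighted sum of the global and local terms."""
    return w_g * grmse(y_true, y_pred) + w_l * lrmse(y_true, y_pred)


truth = [0.0, 0.0, 300.0, 0.0]
pred = [0.0, 0.0, 296.0, 0.0]
print(grmse(truth, pred), lrmse(truth, pred), glrmse(truth, pred, 0.5, 0.5))
```

In this toy case a 4 K error on the single fire pixel yields a global RMSE of 2 K but a local RMSE of 4 K, showing how the background dilutes the global term.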

3.2.3. Jaccard Loss (JL)

For effective wildfire monitoring, it is crucial to not only minimize discrepancies in BT values but also to predict the wildfire perimeters. This is accomplished by binary segmentation, in which a binary value is assigned to each pixel based on its category, partitioning the image into foreground (i.e., fire) and background regions [9]. The Jaccard Loss (JL) function defined in Eq. 5 is a prevalent loss function utilized in the field of image segmentation [74]. It aims to evaluate and improve the similarity between the predicted and ground truth binary masks, which are also referred to as segmentation masks. Specifically, JL is defined as

$$\mathrm{JL} = -\frac{\sum_{i=1}^{n} y_{b,i}\,\hat{y}_{b,i}}{\sum_{i=1}^{n} y_{b,i} + \sum_{i=1}^{n} \hat{y}_{b,i} - \sum_{i=1}^{n} y_{b,i}\,\hat{y}_{b,i}} \qquad (5)$$

where $y_{b,i}$ represents the presence (1) or absence (0) of fire in the VIIRS ground truth image at the $i$th pixel, and $\hat{y}_{b,i}$ represents the probability of fire in the predicted image at the $i$th pixel.

In order to use the JL in the autoencoder training, the VIIRS ground truth image is transformed into a binary image by setting the BT value of all fire pixels to one, while setting the BT value of the background pixels to zero [10]. Additionally, the final activation layer of the autoencoder model is modified from ReLU to Sigmoid [11], essentially generating a probability value for every pixel in the output image. This probability map assigns a value between zero and one to each pixel, indicating the probability of that pixel belonging to the fire region. Once the model is properly trained, the resulting probability map is converted to a binary map (0/1). The resulting binary map can be used to identify the fire region and distinguish it from the surrounding environment.
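A minimal sketch of the Jaccard loss on flattened masks is given below. It is written as the negative of the soft intersection-over-union, in line with the later remark that the IOU metric and the loss differ in sign; `1 - IoU` is a common alternative. The small `eps` term that guards against division by zero is an addition for numerical safety and is not taken from the text.

```python
def binarize(bt_values):
    """Ground truth mask: 1 for fire pixels (non-zero BT), 0 for background."""
    return [1.0 if v != 0 else 0.0 for v in bt_values]


def soft_iou(mask, prob, eps=1e-7):
    """Soft IoU between a binary mask and per-pixel fire probabilities."""
    inter = sum(m * p for m, p in zip(mask, prob))
    union = sum(mask) + sum(prob) - inter
    return (inter + eps) / (union + eps)


def jaccard_loss(mask, prob):
    """Negative soft IoU, so minimizing the loss maximizes the overlap."""
    return -soft_iou(mask, prob)


mask = binarize([0.0, 310.0, 325.0, 0.0])
prob = [0.1, 0.9, 0.6, 0.0]   # hypothetical sigmoid outputs
print(jaccard_loss(mask, prob))
```

Because the predicted values are probabilities rather than hard labels, the expression stays differentiable and can be minimized by gradient descent.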

3.2.4. RMSE plus Jaccard Loss Using Two-Branch Architecture

RMSE and Jaccard losses can be combined to predict the shape and location of the fire as well as its BT values. However, as these loss functions require different activation layers, combining them in a single network necessitates architectural changes. To address this issue, a two-branch architecture is introduced, where the first branch uses ReLU as the last activation layer to predict the BT values, while the second branch uses a Sigmoid activation layer to predict the fire probability map. The resulting architecture combines the GRMSE and JL loss functions, as defined in Eq. 6, to enhance learning and improve predictions.

$$\mathrm{TBL} = W_R \cdot \mathrm{GRMSE} + W_J \cdot \mathrm{JL} \qquad (6)$$

where $W_R$ is the weight for the GRMSE loss, $W_J$ is the weight for the JL loss, and TBL is the two-branch loss, which is the weighted sum of the two losses. These weights are determined through hyperparameter optimization.

The two-branch architecture, illustrated in Figure 5, branches out before the final convolutional layer. The outputs from the two branches are compared with their respective ground truth images to calculate individual losses, which are then combined to train the model. This approach allows the learning process to utilize information from both branches, resulting in a more effective model. As a result, the output from the GRMSE branch, which captures both the predicted location and BT of the fire, is considered the primary output of the model. From this point forward, this architecture will be referred to as the two-branch loss (TBL) model.

3.3. Evaluation

3.3.1. Pre-Processing: Removing Background Noise

Accurate network prediction evaluation requires taking noise into account. Although noise may not have significant physical relevance due to its typically low BT compared to the actual fire, it can still impact the accuracy of the evaluation metrics. To mitigate this issue, the Otsu’s thresholding method has been adopted in this study to effectively remove background noise and improve evaluation accuracy. The Otsu’s thresholding automatically determines the optimal threshold level that separates the foreground (relevant data) from the background (noise) [

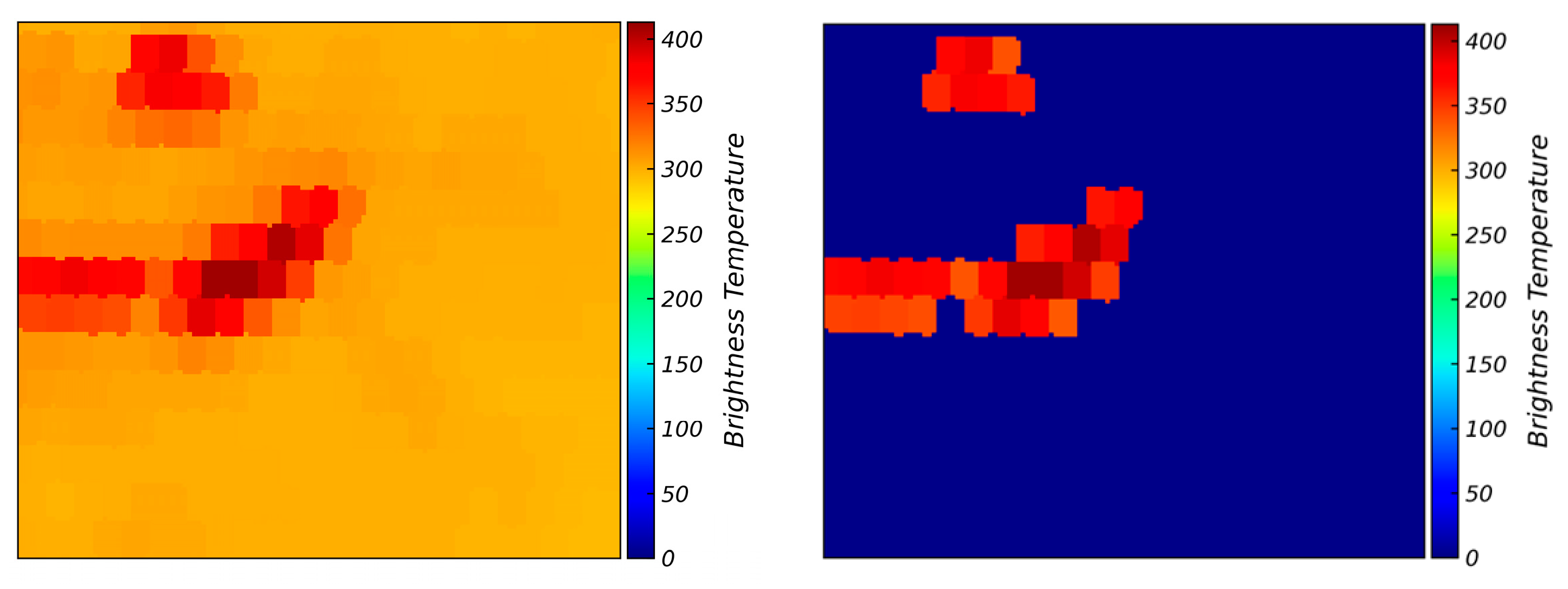

12]. This is accomplished by calculating the variance between two classes of pixels (foreground and background) at different threshold levels and selecting the threshold level that maximizes the variance between these two classes.

Figure 6 shows the process of using Otsu's thresholding to remove background noise from the model’s prediction (Figure 6a) and create a post-thresholding prediction (Figure 6b) that is compared to the ground truth (Figure 6c) to evaluate the performance of the model. The successful removal of background by Otsu's thresholding improves the consistency between the evaluation metrics and visual inspection.
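The thresholding step can be sketched as a histogram search for the threshold that maximizes the between-class variance. This is a minimal pure-Python illustration, not the implementation used in the study: the 256-bin histogram, the function names, and the choice to set background pixels to zero are all assumptions.

```python
def otsu_threshold(values, bins=256):
    """Return the threshold that maximizes between-class variance."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo  # constant image: no meaningful split exists
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1

    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_var, best_bin = -1.0, 0
    w0, sum0 = 0, 0.0
    for i in range(bins - 1):
        w0 += hist[i]           # pixel count in the background class
        sum0 += i * hist[i]     # bin-index sum of the background class
        w1 = total - w0         # pixel count in the foreground class
        if w0 == 0 or w1 == 0:
            continue
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_bin = var_between, i
    return lo + (best_bin + 1) * width  # upper edge of the best bin


def remove_background(values):
    """Zero out every pixel below the Otsu threshold."""
    t = otsu_threshold(values)
    return [v if v >= t else 0.0 for v in values]
```

For a flattened prediction such as `[0.0, 0.1, 0.05, 0.9, 1.0]`, the three low values fall below the threshold and are set to zero, while the two high values are kept.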

3.3.2. Evaluation Metrics

The performance of DL models is evaluated using two metrics: intersection over union (IOU) and intersection's point signal-to-noise ratio (IPSNR), which is a modified version of PSNR. These metrics are explained below.

IOU measures the agreement between the prediction and ground truth by quantifying the degree of overlap of the fire area between ground truth and network prediction as follows.

The terms used in this equation are already defined following Eq. 5. However, Eq. 7 and Eq. 5 differ in sign. To compute the IOU metric, both the post-thresholding prediction and ground truth images are converted into binary masks. This is achieved by setting all fire BT values to one, effectively binarizing the image. This step is conducted to simplify the IOU calculation. By representing the images as binary masks, the IOU can be calculated as the intersection of the two masks divided by their union, providing an accurate measure of the overlap between the predicted fire region and the ground truth.
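The binarization and overlap computation described above can be sketched as follows. The function names are hypothetical, images are represented as nested lists for simplicity, and returning zero when both masks are empty is an assumed convention that the text does not specify.

```python
def binarize(image):
    """Set every fire pixel (non-zero BT) to 1 and everything else to 0."""
    return [[1 if v > 0 else 0 for v in row] for row in image]


def iou(pred, truth):
    """Intersection over union of the fire masks of two 2-D images."""
    p, g = binarize(pred), binarize(truth)
    inter = sum(a & b for rp, rg in zip(p, g) for a, b in zip(rp, rg))
    union = sum(a | b for rp, rg in zip(p, g) for a, b in zip(rp, rg))
    return inter / union if union else 0.0  # assumed convention for empty masks
```

For example, a prediction with fire pixels at two locations and a ground truth with fire pixels at two locations, one of them shared, gives one intersecting pixel out of three in the union, that is, an IOU of 1/3.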

On the other hand, IPSNR quantifies the similarity of BT values in the intersection of the fire areas between the prediction image and its ground truth as shown in Eq. 8. Here, the intersection is defined as the region where both the prediction and VIIRS ground truth have fire BT as shown in Eq. 9.

where maxval is the maximum BT in the VIIRS ground truth and IRMSE is the RMSE computed solely in the intersecting fire area using the identifier variable I, which is one for areas where the predicted fire region intersects with the VIIRS ground truth, and zero otherwise.
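A minimal sketch of this metric is given below. It assumes the standard PSNR form, 20·log10(maxval / RMSE), with the RMSE restricted to the intersection; the exact expressions of Eq. 8 and Eq. 9 may differ in detail. The function name and the handling of the edge cases (no intersection, zero error) are illustrative assumptions.

```python
import math


def ipsnr(pred, truth):
    """PSNR restricted to pixels where both images contain fire (assumed form)."""
    # I = 1 where prediction and ground truth both have non-zero BT
    pairs = [(p, t) for p, t in zip(pred, truth) if p > 0 and t > 0]
    if not pairs:
        return None  # no intersecting fire area, metric undefined
    irmse = math.sqrt(sum((p - t) ** 2 for p, t in pairs) / len(pairs))
    if irmse == 0:
        return math.inf  # perfect agreement inside the intersection
    maxval = max(truth)  # maximum BT in the ground truth
    return 20.0 * math.log10(maxval / irmse)
```

Because background pixels never enter the sum, a prediction that misses the fire entirely cannot obtain a high score simply by matching the background.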

The motivation for using IPSNR instead of PSNR stems from the difficulty of evaluating the model's performance based on the similarity of BT values over the whole image, given that the fire area typically occupies only a small portion of the image. Even if the model's prediction is incorrect, most of the background still appears similar to the ground truth, resulting in high PSNR values that do not necessarily reflect accurate performance. Therefore, to obtain a more reliable evaluation metric, it is necessary to focus on assessing only the predicted fire area that matches the ground truth when calculating PSNR. It should be noted that the underlying principle of IPSNR is different from that of LRMSE. IPSNR evaluates the quality of the correctly predicted fire area (i.e., predicted fire pixels that are also present in the ground truth) by considering the RMSE for the intersection of the prediction and ground truth (as described in Eq. 9), while LRMSE is a loss function that focuses on reducing the RMSE specifically for the fire area (as described in Eq. 3).

3.3.3. Dataset Categorization

To obtain a more accurate and precise evaluation of the model's performance, it is important to account for diversity in the input and ground truth samples. Factors such as fire orientation, location, background noise, fire size, and similarity between input and ground truth can vary significantly, affecting the model's performance. Evaluating the model on a given test set without separately considering these factors might provide an inaccurate assessment, since average performance may be biased towards the majority sample types in the test set. To overcome this limitation, the test set is divided into four categories based on (1) the total coverage of distinguishable foreground in GOES images, and (2) the initial IOU between the distinguishable foreground in the GOES image and the VIIRS ground truth. To achieve this, Otsu’s thresholding is used to eliminate background information from the original GOES image (Figure 7a), resulting in a post-thresholding GOES image (Figure 7b) with distinguishable foreground information that is used to compute coverage and initial IOU. Coverage is determined by calculating the ratio of fire pixels to the total number of pixels in the post-thresholding image. This metric indicates the degree of foreground presence in the GOES image. Meanwhile, the initial IOU is calculated using the binarized post-thresholding GOES image and the binarized VIIRS ground truth. This provides a measure of the degree of foreground area similarity between the two images. It should be noted that the foreground area identified by Otsu’s method in the GOES image may not always accurately indicate the fire region, unlike the prediction image. In some cases, the BT values of the background may be comparable to, or even greater than, those of the actual fire region, making it difficult to identify the fire region accurately. Additionally, the coverage calculation involves a single iteration of Otsu’s thresholding, which is used to determine the true coverage of GOES. On the other hand, calculating the IOU requires multiple iterations of Otsu’s thresholding to accurately assess the fire area. The final IOU result is obtained by selecting the highest IOU value obtained from all iterations, ensuring that only the fire area is considered for evaluation.

Using the calculated coverage and IOU, the test set is categorized into four groups. These groups are (1) low coverage with high IOU (LCHI), (2) low coverage with low IOU (LCLI), (3) high coverage with high IOU (HCHI), and (4) high coverage with low IOU (HCLI). The threshold values for coverage and IOU are determined through visual inspection of the results and are provided in Table 1. By categorizing the test set, we can perform a more meaningful evaluation of the model's performance and identify its strengths and weaknesses under various scenarios.
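The categorization logic can be sketched as below. The function names are hypothetical, the thresholds are passed in as parameters because the Table 1 values are not reproduced here, and treating a value equal to the threshold as "high" is an assumed convention. The numbers in the usage line are placeholders only.

```python
def coverage(post_threshold_image):
    """Ratio of foreground (non-zero) pixels to all pixels in a 2-D image."""
    pixels = [v for row in post_threshold_image for v in row]
    return sum(1 for v in pixels if v > 0) / len(pixels)


def categorize(cov, initial_iou, cov_threshold, iou_threshold):
    """Return one of 'LCHI', 'LCLI', 'HCHI', 'HCLI'."""
    cov_label = "HC" if cov >= cov_threshold else "LC"
    iou_label = "HI" if initial_iou >= iou_threshold else "LI"
    return cov_label + iou_label


# Placeholder thresholds, not the values from Table 1
label = categorize(cov=0.05, initial_iou=0.3, cov_threshold=0.2, iou_threshold=0.1)
```

With these placeholder thresholds, a sample with 5% coverage and an initial IOU of 0.3 falls into the LCHI group.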

3.3.4. Post-Processing: Normalization of Prediction Values

To ensure that the autoencoder's output aligns with the desired physical range of VIIRS brightness temperature values, Min-Max scaling, a commonly used normalization technique, is employed. This process linearly transforms the autoencoder's output to fit within the specific range corresponding to VIIRS data, enhancing both the physical interpretability of the results and their compatibility with existing remote sensing algorithms and models tailored to this range.
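The linear transformation can be sketched as follows. The function name is hypothetical, and the target range used in the usage line is a placeholder rather than a value from this study; whether background pixels are excluded from the scaling is also not specified in the text, so this sketch scales whatever values it is given.

```python
def min_max_scale(values, new_min, new_max):
    """Linearly map values so that min(values) -> new_min and max(values) -> new_max."""
    old_min, old_max = min(values), max(values)
    if old_max == old_min:
        return [new_min for _ in values]  # constant input: no range to stretch
    scale = (new_max - new_min) / (old_max - old_min)
    return [new_min + (v - old_min) * scale for v in values]


# Placeholder target range for illustration only
scaled = min_max_scale([0.0, 0.5, 1.0], new_min=300.0, new_max=400.0)
```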

4. Results

4.1. Training

The western U.S. wildfire events that occurred between 2019 and 2021 (listed in Appendix A) are used to create contemporaneous images of VIIRS and GOES using the preprocessing steps explained in Section 2 for network training and evaluation. The preprocessed images are then partitioned into windows of 128 by 128 pixels, resulting in a dataset of 5,869 samples. These samples are split with a ratio of 4 to 1 into training and test sets, respectively (that is, 80% for training and 20% for testing). The training set is further divided with a 4 to 1 ratio into training and validation sets. After splitting, the training, validation, and test sets include 3,756, 939, and 1,174 samples, respectively. The validation set is used to identify potential overfitting during the training process, while the test set is kept aside for evaluating the performance of the model on novel samples. To improve the diversity of the training data and prevent overfitting, data augmentation techniques are used. In particular, at each epoch, the training samples undergo random horizontal and vertical flips, leading to greater variability in the training data and improved model generalization to novel samples.
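The split sizes and the flip augmentation can be reproduced with a short sketch. The function names are hypothetical, the integer rounding rule (remainder assigned to the smaller subset) is an assumption that happens to match the reported counts, and the 0.5 flip probability is assumed because the text does not state it. The same flip is applied to the GOES input and the VIIRS target so that the pair stays aligned.

```python
import random


def split_sizes(n, major_parts=4, minor_parts=1):
    """Sizes for an integer ratio split; the remainder goes to the minor subset."""
    n_major = n * major_parts // (major_parts + minor_parts)
    return n_major, n - n_major


def random_flip_pair(goes, viirs, rng=random):
    """Apply the same random horizontal and/or vertical flip to both 2-D images."""
    if rng.random() < 0.5:  # horizontal flip: reverse each row
        goes = [row[::-1] for row in goes]
        viirs = [row[::-1] for row in viirs]
    if rng.random() < 0.5:  # vertical flip: reverse the row order
        goes, viirs = goes[::-1], viirs[::-1]
    return goes, viirs


train_val, test = split_sizes(5869)
train, val = split_sizes(train_val)
print(train, val, test)  # 3756 939 1174
```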

To find optimum hyperparameters for the networks defined in Section 3.1 and Section 3.2.4, hyperparameter tuning is done using the Weights and Biases (WAB) tool [13]. For each subset of hyperparameters, the validation loss is used to identify potential overfitting and determine the optimum hyperparameters. The hyperparameter subset that leads to the smallest validation loss and the least overfitting is then chosen as the final hyperparameters. In the case of autoencoder models combining two losses, such as GLRMSE and TBL, the weights for the loss functions are also considered as hyperparameters and are determined through the same hyperparameter tuning process.

After hyperparameter tuning is conducted, all autoencoder models, as outlined in Section 3.2, are trained for 150 epochs with a batch size of 16 using the Adam optimizer with a learning rate of $3 \times 10^{-5}$ [14]. A learning rate decay based on validation loss plateau, with weight decay of 0.1, threshold of $1 \times 10^{-5}$, and patience of 10 epochs, is used during training to help both optimization and generalization performance [15]. For the GLRMSE model, the best results are achieved by setting the weights for the LRMSE and GRMSE to $W_L = 1$ and $W_G = 8$ (see Eq. 4). For the TBL model, the best results are obtained by setting the weights of the GRMSE and Jaccard losses to $W_R = 3$ and $W_J = 1$ (see Eq. 6). The DL model is implemented using the PyTorch [16] (v1.12) Python package and trained on an Nvidia RTX 3090 Graphics Processing Unit (GPU) with 24 GB of Video RAM (VRAM). With this setup, the models are trained in 15 to 20 minutes.
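The plateau-based learning rate decay can be illustrated with a simplified pure-Python sketch. It is not the PyTorch scheduler itself, only a rule modeled on a reduce-on-plateau schedule with a relative improvement threshold. The class name is hypothetical, and the reading of "0.1" as the multiplicative factor applied to the learning rate is an assumption.

```python
class PlateauDecay:
    """Multiply the learning rate by `factor` after `patience` stagnant epochs."""

    def __init__(self, lr=3e-5, factor=0.1, patience=10, threshold=1e-5):
        self.lr = lr
        self.factor = factor        # assumed meaning of the reported 0.1
        self.patience = patience
        self.threshold = threshold  # relative improvement needed to count
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Update the state with the latest validation loss and return the lr."""
        if val_loss < self.best * (1.0 - self.threshold):
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        if self.bad_epochs > self.patience:
            self.lr *= self.factor
            self.bad_epochs = 0
        return self.lr
```

With these settings, a validation loss that stops improving leaves the learning rate at 3×10⁻⁵ for ten further epochs and reduces it tenfold on the eleventh stagnant epoch.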

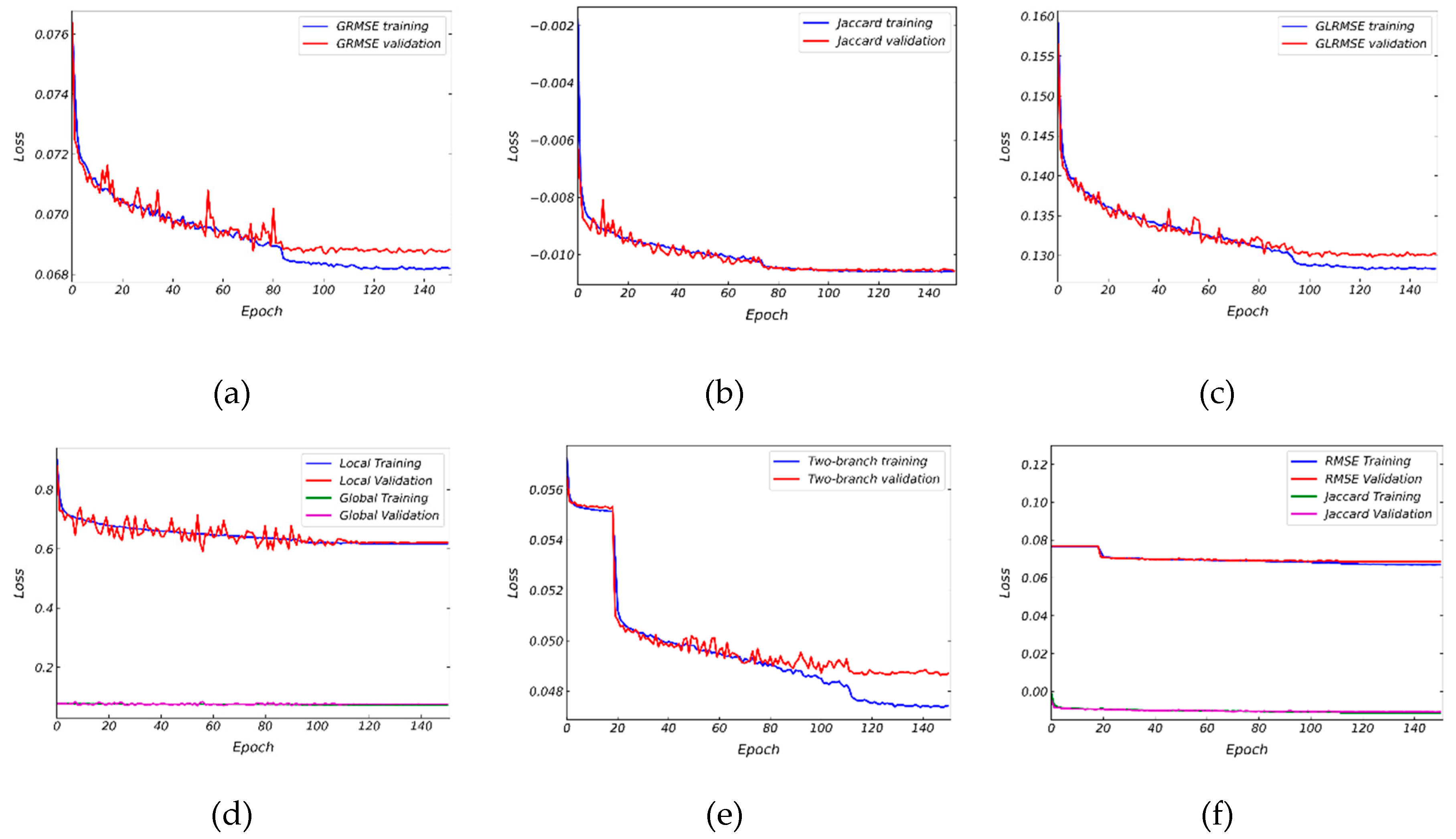

Figure 8 depicts the training loss for all four cases along with the individual losses for the GLRMSE and TBL models. As can be seen, the loss functions are converging to a plateau with small to no overfitting.

4.2. Testing

The performance of the four models is evaluated using IOU and IPSNR. Table 2 presents the results obtained on the entire test set. The TBL model produces the best results in terms of IOU between the prediction and ground truth (VIIRS), while the GLRMSE model yields the best results in terms of IPSNR. This suggests that the TBL model is influenced by both loss functions (i.e., Jaccard loss for the fire shape and GRMSE loss for BT values), resulting in a higher IOU. On the other hand, the GLRMSE model improves the prediction of the BT values in the fire area by adding a local (i.e., fire area of the ground truth) RMSE calculation, resulting in a higher IPSNR compared to GRMSE. However, as discussed earlier in Section 3.3, there is a possibility of evaluation bias towards the majority sample types. Therefore, the evaluation is carried out separately for each of the four categories, namely LCHI, LCLI, HCHI, and HCLI, in the following subsections. For each group, representative results are presented on three distinct samples to visually illustrate the model’s performance. The samples are chosen to present fires that encompass a wide range of temperatures and spatial scales. Average evaluation scores are also presented for each category for completeness.

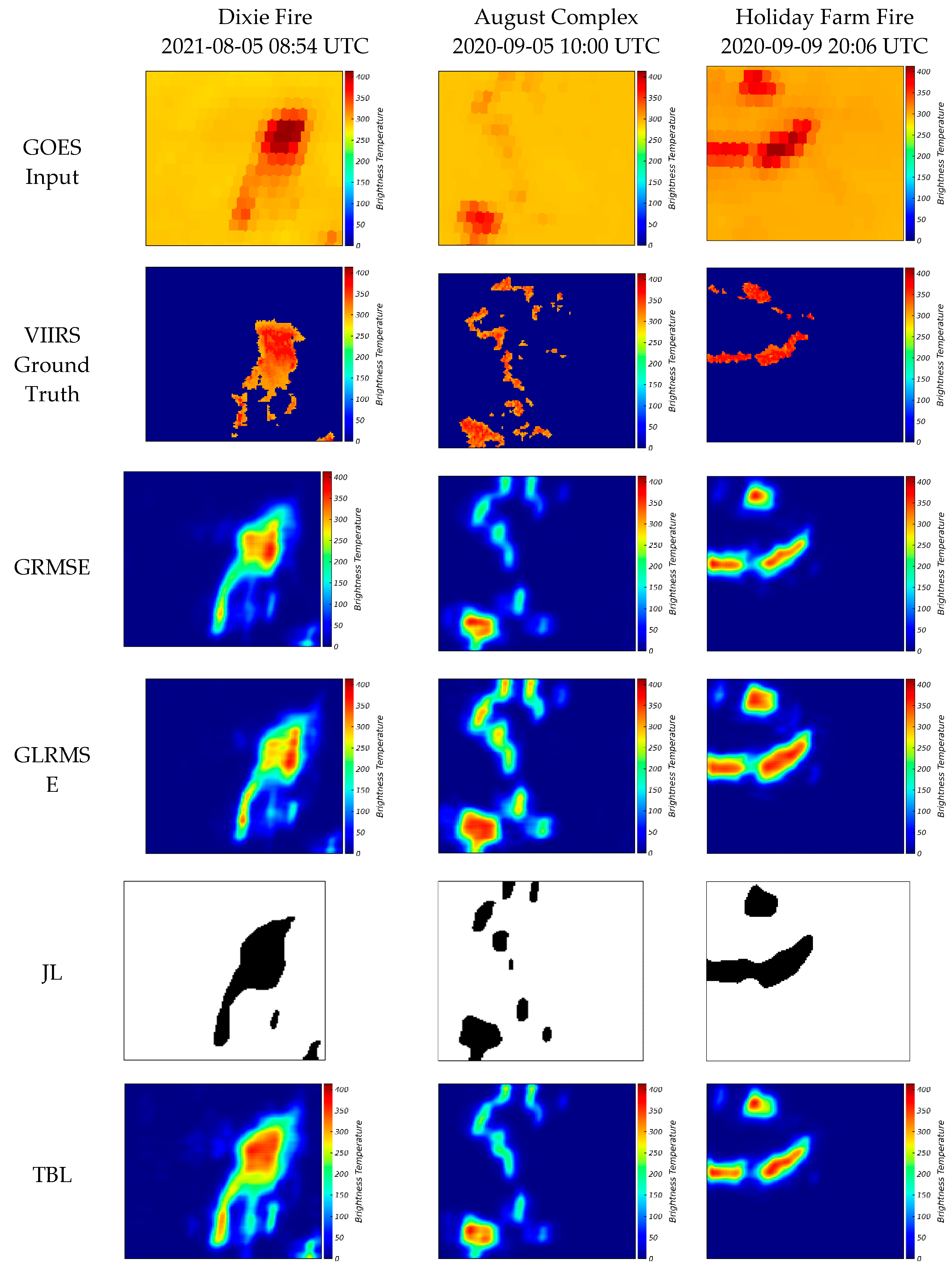

4.2.1. LCHI: Low Coverage with High IOU

Figure 9 shows representative GOES images in this category and the corresponding VIIRS images for three fires, along with the results obtained from the four models, which illustrate both the agreement and the variation among LCHI samples. For the Dixie Fire, due to the small number of distinguishable pixels found after applying Otsu’s thresholding, this sample is classified as having low coverage. Furthermore, the initial IOU between the fire regions in the GOES and VIIRS images is relatively high, indicating a significant overlap in the fire area captured by both satellites. This type of scenario is generally less challenging for the DL models to handle, as there is clear and visible overlap between the fire areas in both images.

The JL model performs slightly worse than the GRMSE and GLRMSE models in accurately predicting the location of fires, with an IOU score of 0.527, compared to 0.531 and 0.528, respectively. This lower performance is counterintuitive, since the JL model focuses solely on the shape of the fire and not on actual BT values. However, the TBL model, using the features of both GRMSE and JL, improves the prediction accuracy compared to other models, achieving an IOU score of 0.531. Although this improvement comes at the cost of a lower IPSNR score, the TBL model provides a compromise between predicting BT values and accurately capturing the shape of wildfires. In terms of predicting BT values, the GLRMSE model outperforms all other models, with an IPSNR of 59.24, followed by the GRMSE model at 58.03 and the TBL model at 57.82. The visual results confirm that the BT values predicted by the GLRMSE model are higher than those of the other models and hence closer to the ground truth. This potentially demonstrates the importance of focusing more on the fire area than on the background to achieve more accurate prediction results.

The August Complex Fire on 2020-09-05 at 10:00 UTC (Figure 9) diverges from both the Dixie Fire and the Holiday Farm Fire, with the TBL model having a low IOU score of 0.315. The GLRMSE model achieved a slightly higher IOU score of 0.319, and the GRMSE model achieved an equal IOU score of 0.315. This is most likely due to the fact that either the GOES fire area in this sample has a relatively low BT value or the VIIRS fire region spans multiple subregions, unlike the previous sample. However, it is worth noting that the GLRMSE model achieved an IPSNR of 59.97, which is still higher than the IPSNRs of both the GRMSE (55.09) and TBL (55.60) models. Figure 9 supports this observation, showing that the GLRMSE model produced higher BT values than the other models, similar to the previous example.

The Holiday Farm Fire on 2020-09-09 at 20:06 UTC (Figure 9) shares similarities with the Dixie Fire sample, with both the GOES and VIIRS fire areas spanning roughly a single region and with good initial overlap. The TBL model exhibits superior performance in terms of IOU, followed by the GRMSE, GLRMSE, and JL models. The GLRMSE model overestimated the fire region here, but it still outperformed the other models in terms of IPSNR, followed by the GRMSE and TBL models.

To summarize the results for this category, Table 3 presents evaluation results on 421 LCHI test samples, revealing a similar pattern to what has been observed in the entire dataset. While there may be some exceptions, such as the August Complex Fire in Figure 9, the pattern observed in the Dixie and Holiday Farm Fires appears to be generally consistent with the bulk statistics of this category.

Overall, it can be concluded that, for most of the samples in this category, the models’ performance follows a similar pattern. That is, the TBL model performs the best among all the models in terms of IOU, indicating better agreement between the predicted and actual fire areas. This can be attributed to the fact that this model is trained based on both fire shape and BT, providing a compromise between fire perimeter and BT prediction performance. Meanwhile, the GLRMSE model has the highest IPSNR, indicating better performance in predicting BT values. This suggests that the GLRMSE model can predict BT values closer to those in VIIRS images, most likely due to the focus of the local term of its loss function on the fire area.

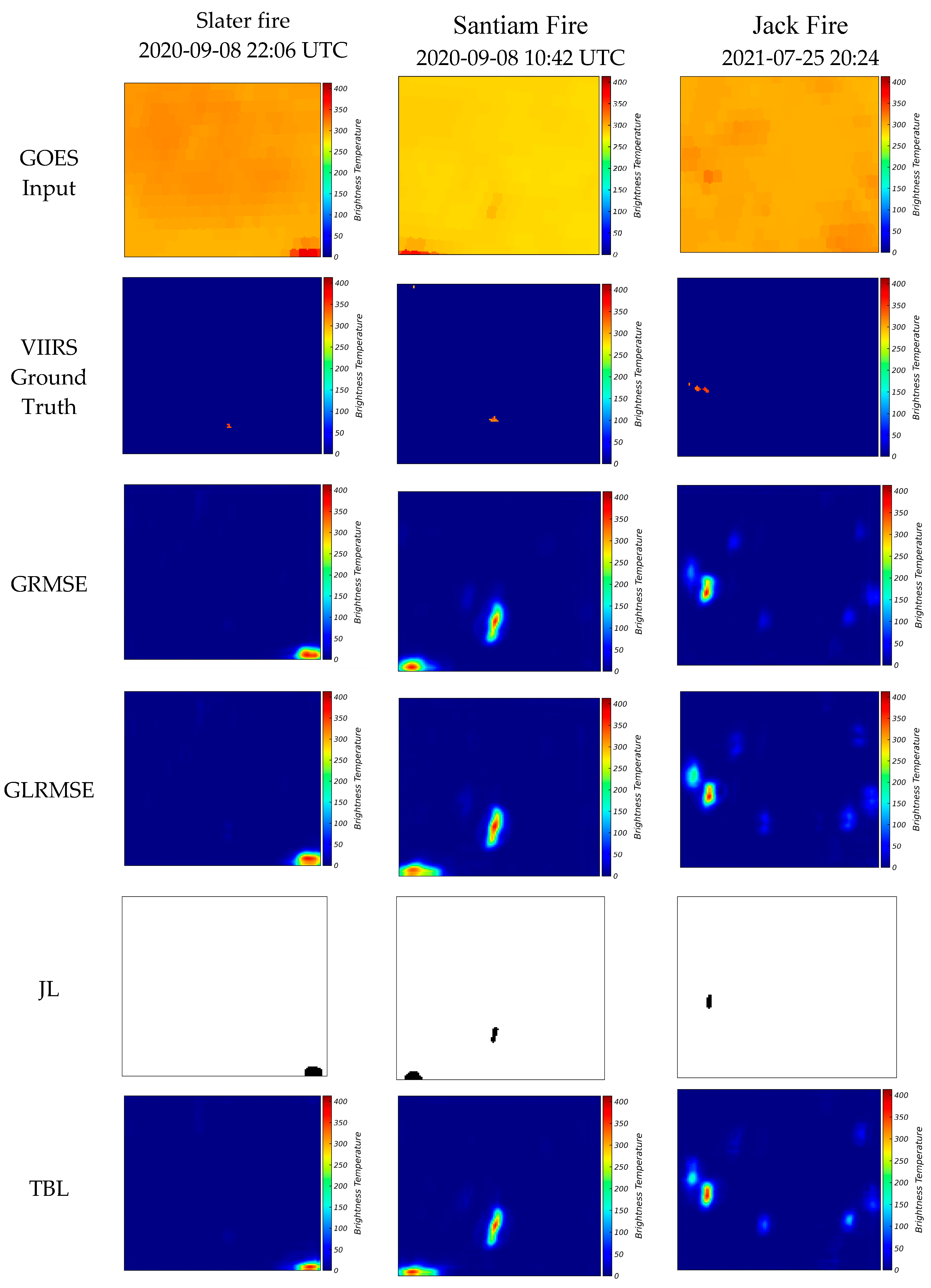

4.2.2. LCLI: Low Coverage with Low IOU

Figure 10 depicts a comparison of representative images from this category taken by GOES and the corresponding VIIRS images for three different fires. The results obtained from the four models illustrate the agreement and variation among LCLI samples. Specifically, for the Slater Fire on 2020-09-08 at 22:06 UTC, all models produced an IOU score of zero due to the small size of the VIIRS fire region and the lack of significant overlap between the GOES and VIIRS images. This is expected, as there is no underlying pattern in these types of samples that the DL model can learn from.

For the Santiam Fire on 2020-09-08 at 10:42 UTC, the VIIRS fire area is relatively small, similar to the sample from the Slater Fire. The GOES image for the Santiam Fire contains visible fire area in two regions, one overlapping with the VIIRS fire region and another with a higher BT value that produces an error of commission and is most likely noise. Even with these errors, all four models performed reasonably well in predicting the fire location. However, the network is unable to remove the noise, which points to the need to improve the ground truth to address regions of noise or false positives. Among the models, the JL model demonstrates a significant improvement over the GRMSE model, with an IOU score of 0.08 compared to 0.04. The TBL model achieves the same IOU score of 0.04 as the GRMSE model. The GLRMSE model shows the best BT value prediction in terms of IPSNR among the considered models.

The Jack Fire on 2021-07-25 at 20:24 UTC (Figure 10) shows a distinguishable fire region in the GOES image with relatively higher coverage than the previous samples of this category. This fire region is also dissimilar in shape and orientation to the VIIRS fire region. Additionally, the VIIRS fire area is scattered into multiple subregions. As a result, accurately predicting the fire location is challenging for all four models. The GRMSE model produced an IOU score of 0.067, which is still better than the TBL, GLRMSE, and JL models' scores of 0.42, 0.03, and 0.02, respectively. These results suggest that the TBL and GLRMSE models may not be effective in cases where the visually distinguishable GOES fire area is scattered and dispersed, causing these models to enhance these regions instead of removing them as noise. Nonetheless, similar to the previous samples, the GLRMSE model has the best BT value prediction.

To summarize the performance of all the models in this category, Table 4 presents evaluation results on 136 LCLI test samples, revealing a pattern similar to what has been observed in the entire dataset. Specifically, the TBL model exhibits better IOU and the GLRMSE model demonstrates superior IPSNR performance compared to the other models. However, for some samples where there is no overlap between GOES and VIIRS fire areas or where VIIRS fire areas are scattered in subregions, the TBL model is not the best performing model. In some cases, the GRMSE model demonstrates better IOU results than the other models.

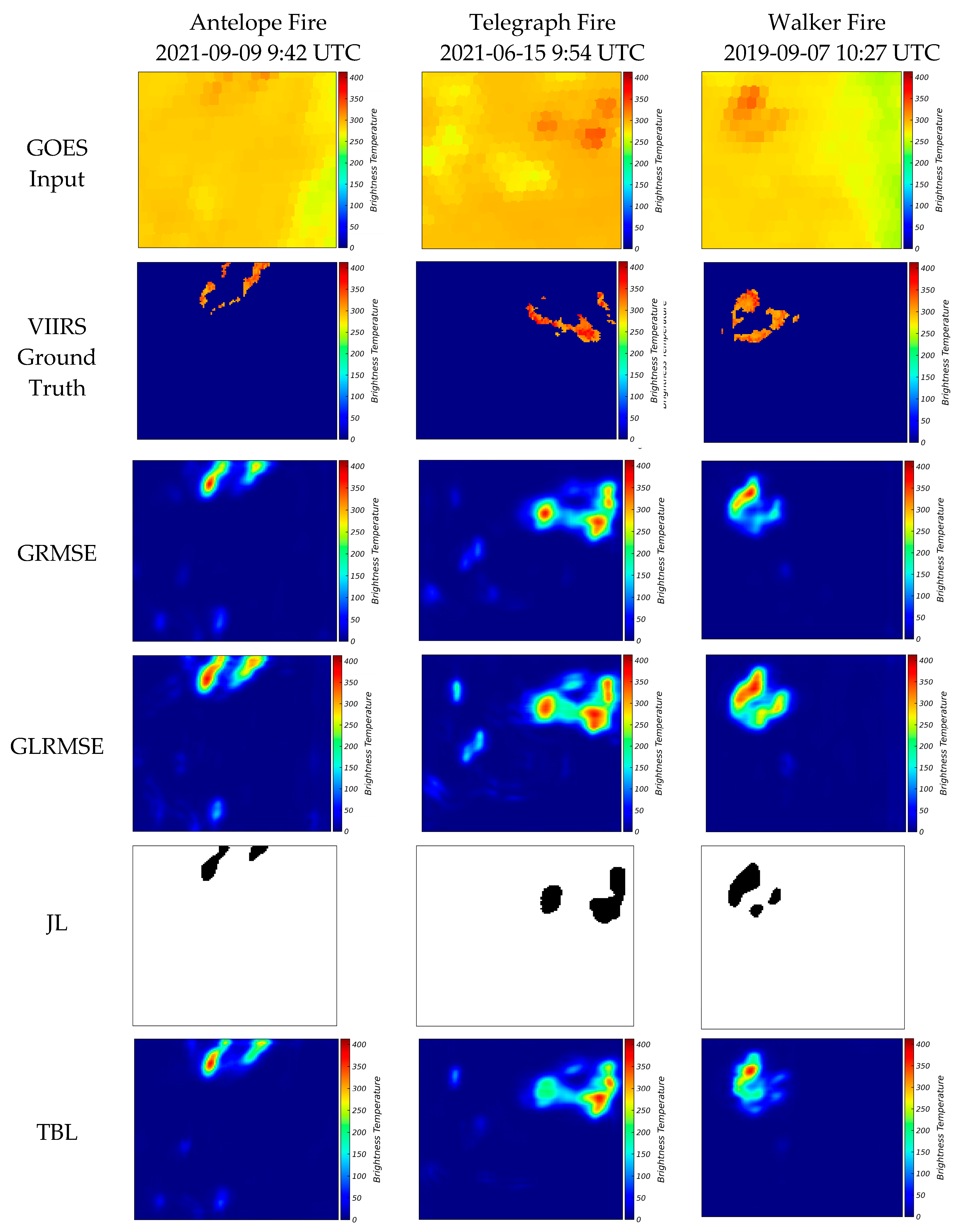

4.2.3. HCHI: High Coverage with High IOU

Figure 11 shows representative GOES images in this category and the corresponding VIIRS images for three fires, along with the results obtained from the four models, which illustrate both the agreement and the variation among HCHI samples. For the Antelope Fire, the GOES image is considered to have high coverage due to the large background area with high BT values, which are not removed by Otsu’s thresholding. However, the actual fire region in the GOES image still has good overlap with that in the VIIRS image. The predictions of all models have removed most of the background and predicted the fire area with reasonable accuracy. Specifically, the TBL model achieved the highest IOU score of 0.407, whereas the GRMSE, GLRMSE, and JL models scored IOUs of 0.340, 0.319, and 0.340, respectively. Although the visual results for the GRMSE and TBL models appear similar, the evaluation results suggest that the TBL model can reduce background noise more effectively, resulting in a higher IOU score. However, as is evident from visual inspection as well as the IPSNR evaluation (60.19), the GLRMSE model predicts the BT values better than the GRMSE (57.01 IPSNR) and TBL (56.48 IPSNR) models.

For the Telegraph Fire on 2021-06-15 at 9:54 UTC (Figure 11), the TBL model scored an IOU of 0.244, which is lower than the scores of 0.271 and 0.281 achieved by the GRMSE and JL models, respectively, suggesting that good performance of the GRMSE and JL models does not guarantee that the TBL model performs as well. However, despite having the lowest IOU of 0.240, the GLRMSE model performs best in terms of IPSNR, similar to previous samples.

For the Walker Fire on 2019-09-07 at 10:27 UTC (Figure 11), the GRMSE, JL, and GLRMSE models achieved IOU scores of 0.327, 0.318, and 0.317, respectively, while the TBL model has an IOU score of 0.247. Hence, we can conclude that the TBL model may not have the highest performance for all samples. Nonetheless, the GLRMSE model still demonstrates the highest IPSNR score, highlighting the strength of this model in predicting BT values.

Table 5 presents evaluation results on 155 HCHI testing samples. The results demonstrate a consistent pattern, similar to the previous categories, in which the GLRMSE model is superior in terms of IPSNR and the TBL model is superior in terms of IOU. However, some samples can be found where the TBL model's IOU is significantly lower than that of the other three models (e.g., the Telegraph and Walker Fires), contradicting the average evaluation (Table 5) for this category. This suggests the need for a more precise categorization.

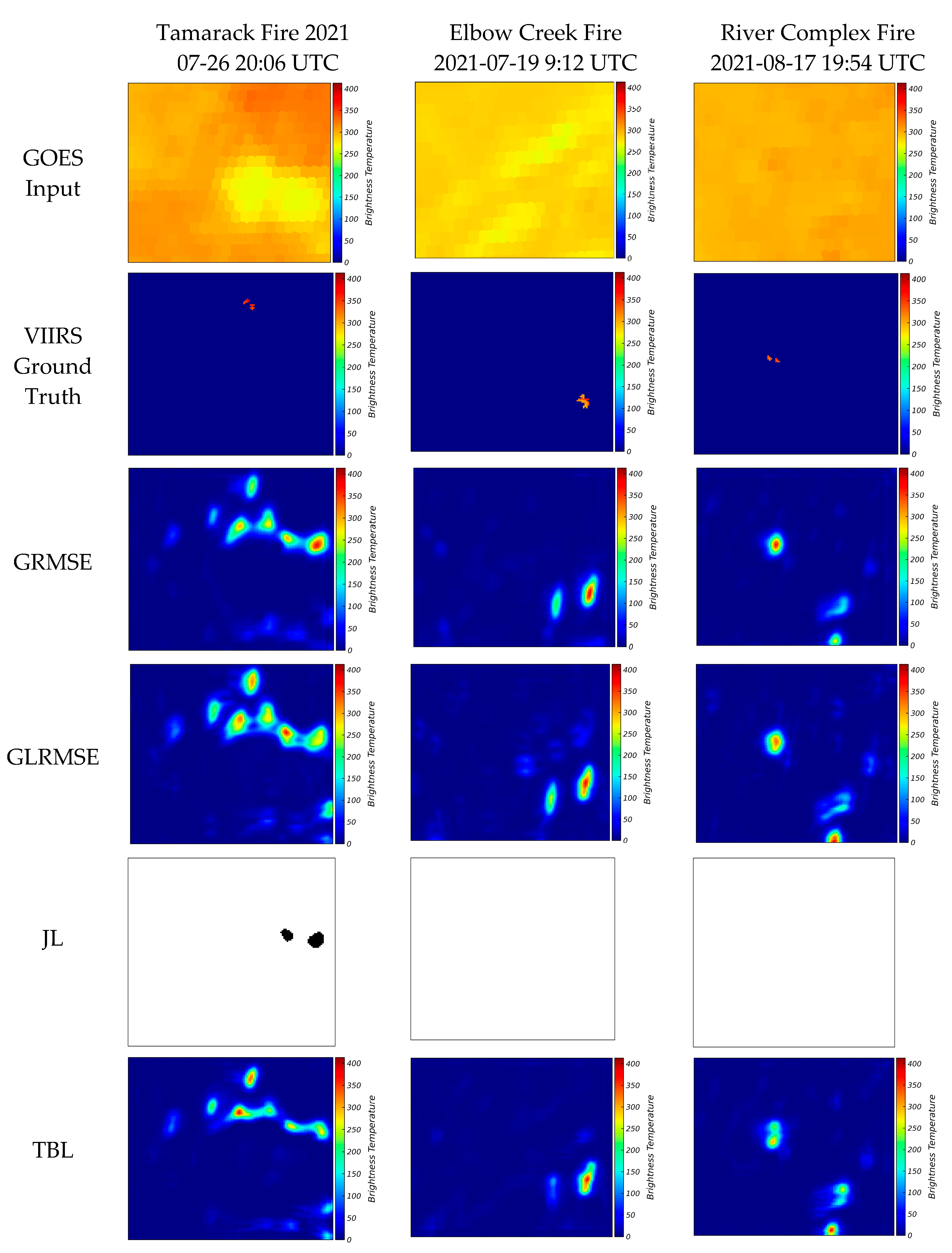

4.2.4. HCLI: High Coverage with Low IOU

Figure 12 presents representative sample images from this category acquired by GOES, as well as the corresponding VIIRS images for three sample fires. The results obtained from the four models reveal the agreement and disparity among HCLI samples. For the Tamarack Fire on 07-26 at 20:06 UTC, the high coverage and absence of overlap between the GOES and VIIRS fire regions resulted in an IOU score of zero for all models, meaning that the predictions do not match the ground truth. This outcome is expected due to the small size of the fire region in the VIIRS images. However, it is worth noting that all models successfully removed most of the background information, except for the areas where the GOES image contained high BT values.

In the example of the Elbow Creek Fire on 2021-07-19 at 9:12 UTC (Figure 12), although the initial overlap between the GOES and VIIRS fire regions is visually hard to detect, the GRMSE, GLRMSE, and TBL models accurately predicted the overall location of the fire in comparison to the ground truth, with IOUs of 0.126, 0.106, and 0.187, respectively. In this case, the JL model has the lowest IOU score of zero. Despite its lower IOU, the GLRMSE model demonstrated the highest IPSNR score, leading to the best-matching predicted BT values.

The River Complex Fire example on 2021-08-17 at 19:54 UTC (Figure 12) demonstrates that, even though the initial overlap between the GOES and VIIRS fire regions is visually hard to detect, the GRMSE, GLRMSE, and TBL models, with an IOU of 0.03, accurately predicted the location of the fire and removed most of the background information, except for the areas where the GOES image contained high BT values.

Table 6 presents evaluation results on 436 HCLI test samples. The results demonstrate a consistent pattern in which the GLRMSE model is superior in terms of IPSNR and the TBL model is best in terms of IOU, closely following the pattern observed in the other categories.

4.3. Blind Testing

To further evaluate the performance of the proposed approach, the best performing model (i.e., the TBL model, based on the results in Section 4.2) is used for blind testing on two wildfire events, namely the 2020 Bear Fire and the 2021 Caldor Fire. The term "blind testing" refers to the fact that the DL model has never been exposed to any data from these sites during training. To conduct the blind testing, GOES images are downloaded at the operational temporal frequency (i.e., 5 min) for the entire duration of the testing. The preprocessing pipeline, as outlined in Section 2.2.1, is applied to these images, which are then fed to the trained DL model as input. The outputs of the DL model, which are enhanced VIIRS-like images, are postprocessed (combined to show the entire ROI) for visualization. The predicted images are validated against high-resolution (i.e., 250 m spatial and 5 min temporal resolution) fire perimeters estimated from NEXRAD reflectivity measurements [17].

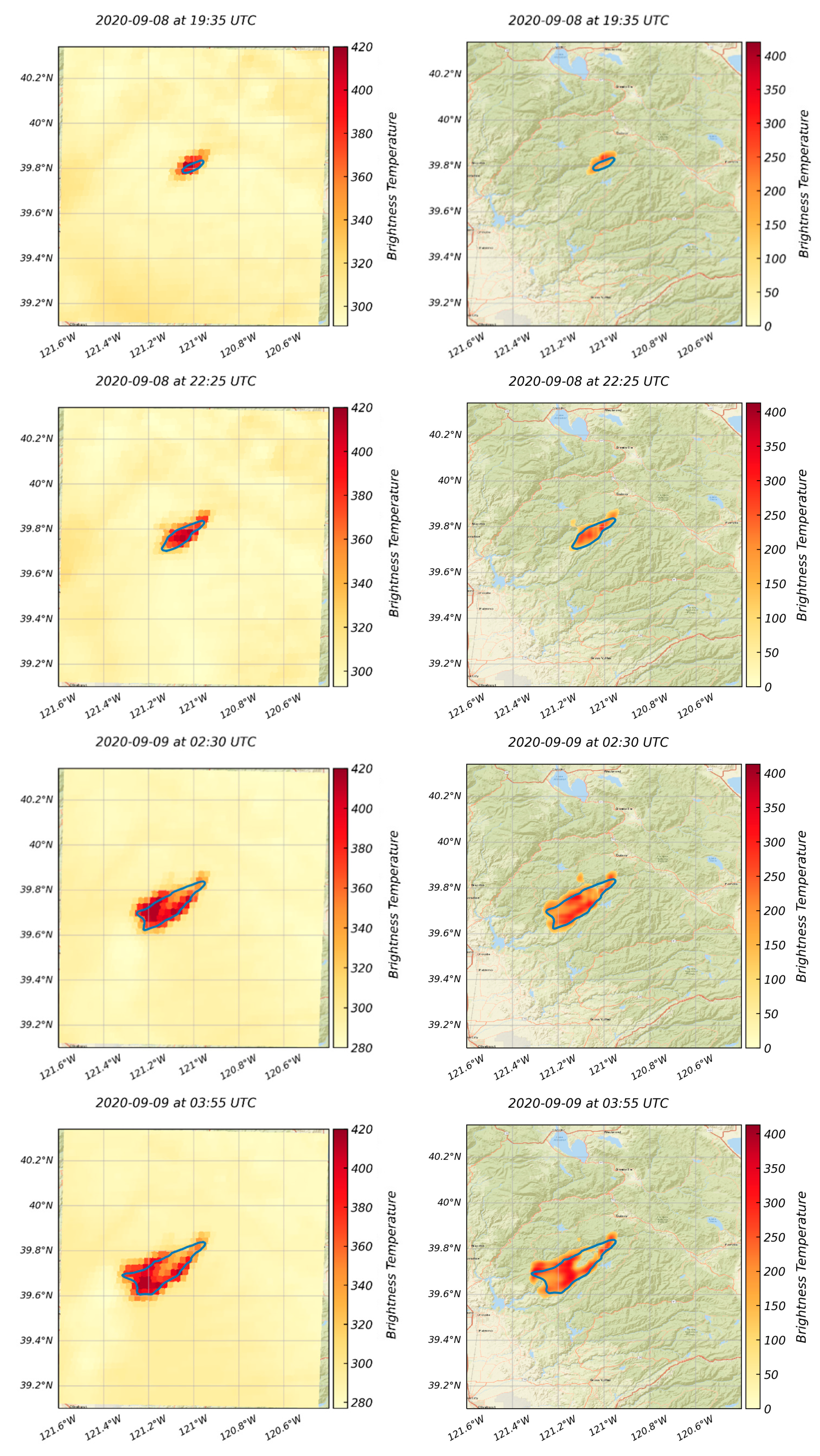

Figure 13 shows four instances during the 2020 Bear Fire from September 8 to 9, 2020, with the blue boundaries representing the radar-estimated fire perimeter and color shading representing the output of the DL model. As of 2020-09-08 19:35 UTC, the model’s results are in good agreement with the radar data, but do not match completely. However, by 2020-09-08 22:25 UTC, the DL model’s predictions are comparatively within the radar perimeters. As the fire area expands, by 2020-09-09 02:30 UTC, it is still confined by the radar perimeters with reasonable accuracy. Even as the fire begins to fade and only remains at the boundaries, by 2020-09-09 03:55 UTC, it is still comparatively inside the radar perimeters. It should be noted that the DL output only shows the active fire regions; fitting a fire perimeter to the DL output is out of the scope of this study and will be addressed in future research.

Similarly,

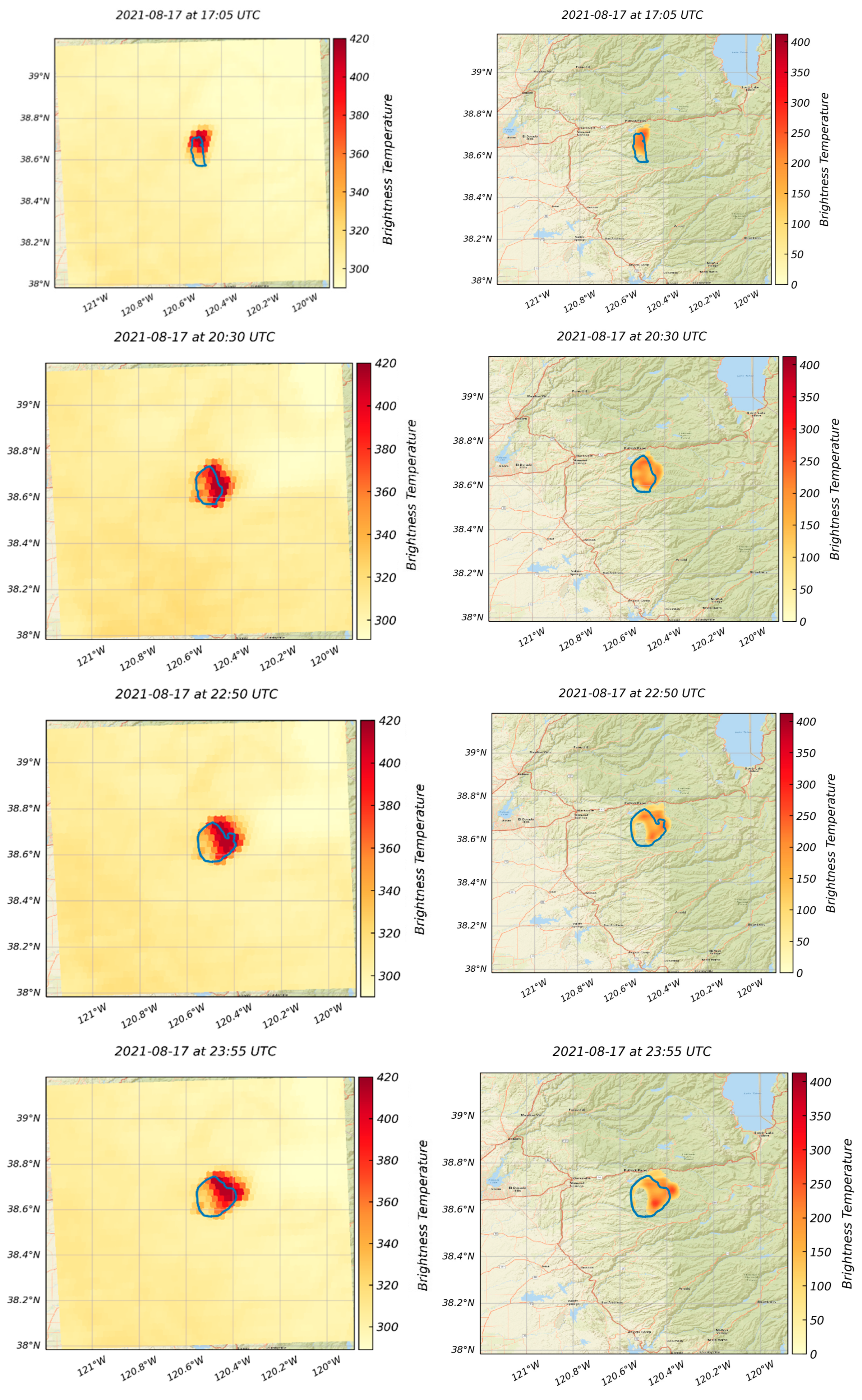

Figure 14 depicts four instances during the 2021 Caldor Fire from August 16 to 17, 2021. Reasonable agreement between the fire pixel and predicted boundaries can be observed in the results, however, not as accurate as in the case of Bear fire. At 2021-08-17 17:05 UTC, the DL prediction is aligned with general location of the radar perimeters, with no visible fire in the bottom half and overprediction in the top half. This can be related to the fact that the network predictions are limited to active fire regions, which are the only part of the fires captured by the satellite. Nonetheless, the overlap between the advancing fire head in the radar perimeters and the predicted active fire regions in this case is less than the Bear Fire case. This can be further improved for operational applications such as online learning, which is out of the scope of this study. Additionally, from 2021-08-17 20:30 UTC to 2021-08-17 23:55 UTC, the DL prediction is consistently confined by the radar parameters, with some fire area outside, but the overall shape is similar to the radar parameter. These findings suggest that the DL model’s predictions closely align with the radar data, indicating that it has the potential to be a valuable tool for monitoring wildfires in near-real-time. It is worth mentioning that both GRMSE and GLRMSE models yielded similar visual results in these cases.

4.4. Opportunities and Limitations

With any network-based learning model, bias is introduced based on the quantity and quality of training data. When assessing various models using the entire dataset, two key observations were made. Firstly, the Two-Branch Loss (TBL) model demonstrated the best overall performance in accurately predicting the location of fire pixels, as confirmed by IOU (Intersection over Union) validation. Secondly, the Global plus Local Root Mean Square Error (GLRMSE) model exhibited notable improvement in predicting Brightness Temperature (BT) values for fire pixels among all models, validated by IPSNR (Intersection’s Point Signal-to-Noise Ratio), which considers only true positive predictions. This can be attributed to the custom regression loss function, which is designed to focus more on the fire area than on the background. Furthermore, the TBL model achieved the second highest performance in BT value prediction among the cases, highlighting that the TBL architecture can provide a compromise between fire shape and BT predictions. However, it is crucial to consider that these results might be influenced by the abundance of certain sample types in the dataset. As a result, evaluations were conducted based on different categories of fire coverage and initial IOU. Upon analyzing multiple samples from each category, it became evident that while the TBL model performed well, it did not consistently produce the best results. The ranking of model performance varied depending on the specific samples evaluated, underscoring the importance of adopting more effective evaluation metrics and sample categorization. Overall, the GLRMSE model consistently demonstrated superior performance in predicting BT values for fire pixels across all cases.

In blind testing, the TBL model predictions aligned well with radar-based fire perimeters. However, anecdotally there appears to be a spatial disconnect between the predicted temperatures and where the active flaming front should be occurring. These effects could be a result of the limited training set used to inform the model, sampling incongruities in GOES-17 data due to effects from the plume as a function of plume height and direction, or the representation of heat signatures from post-front combustion. These are a few factors that need to be accounted for in future applications of this approach. From a fire management and safety perspective, continuous 5-minute updates of fire progression hold significant benefit for personnel, resource, and risk planning. In general, large fire incidents rely on fire simulations such as FSPRO [add ref] and daily National Infrared Operations to build a daily operations picture. Additional filtering and tuning of the model outputs to account for overprediction of fire perimeter placement is expected to improve the fire intelligence needed for management of large active fires. Expanding the training data beyond this proof-of-concept approach should improve model predictions. However, more work will be needed to quantify predicted temperatures, which could potentially be integrated into machine learning approaches to improve coupled atmosphere-fire modeling and to better understand the role of fire energetics in plume dynamics and resulting post-fire effects.

5. Conclusions

This study aimed to design and develop a deep learning (DL) framework with two primary objectives. The first was to enhance the spatial resolution of GOES (Geostationary Operational Environmental Satellite) images, improving the quality and clarity of the captured satellite data. The second was to predict Brightness Temperature (BT) values within the GOES images, aligning them with the ground truth data obtained from VIIRS (Visible Infrared Imaging Radiometer Suite). To these ends, an autoencoder model, an approach widely recognized for its ability to learn and represent intricate patterns within image data, was used with different loss functions and architectures. The model was trained using GOES data as input, with the aim of capturing the underlying spatial information, and VIIRS data as the ground truth, providing a reference for the model's learning process. This training process allowed the DL model to extract valuable features and characteristics from the input GOES images, learning how to enhance their spatial resolution effectively.

The initial phase of this study covered data pre-processing and dataset creation: GOES and VIIRS data were downloaded, made consistent in location, time, and projection, and the VIIRS vector data were converted into raster format. Subsequently, a DL model was designed and trained with the two main goals of enhancing the spatial resolution of GOES images and predicting BT values of active fire regions that closely align with VIIRS ground truth images. To assess the model's performance comprehensively, an ablation study was conducted involving four models with different loss functions and autoencoder architecture variations. This analysis provided insight into the impact of each component on the model's effectiveness. The study also addressed the challenges of evaluating model performance and proposed an evaluation metric that aligns with the physical interpretation of the results, providing a more meaningful assessment. Finally, it was suggested that assessing different scenarios based on coverage and initial IOU is more meaningful than reporting results on the whole dataset without detailed analysis.
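To make the overlap score mentioned above concrete, the following minimal sketch computes intersection over union (IOU) between a predicted fire mask and a VIIRS-derived mask. It is an illustration only, not the evaluation code used in this study: the 300 K threshold, the toy 3 x 3 grids, the function names, and the convention of returning 1.0 when both masks are empty are all assumptions.

```python
def fire_mask(bt_grid, threshold):
    """Binarize a 2-D grid of brightness temperatures (K).

    A cell counts as fire when its value exceeds `threshold`
    (the threshold is an assumed, illustrative parameter).
    """
    return [[value > threshold for value in row] for row in bt_grid]


def iou(mask_a, mask_b):
    """Intersection over union of two equally sized boolean grids."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                intersection += 1
            if a or b:
                union += 1
    # Assumed convention: two empty masks agree perfectly.
    return intersection / union if union else 1.0


# Toy grids (hypothetical values, in kelvin).
predicted = [
    [290, 310, 320],
    [290, 305, 290],
    [290, 290, 290],
]
viirs = [
    [0, 330, 340],
    [0, 0, 350],
    [0, 0, 0],
]

score = iou(fire_mask(predicted, 300), fire_mask(viirs, 300))
print(score)
```

In practice the grids would be array-backed rasters on a common projection; plain lists are used here only to keep the sketch dependency-free.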

The findings of this study have established a strong and promising foundation for advancing the creation of high-resolution GOES images. Moving forward, there are several potential avenues for enhancing the accuracy and applicability of DL models in this domain. Firstly, one aspect to focus on is improving the prediction of actual Brightness Temperature (BT) values. While the current models have shown high performance, further refining the algorithms can lead to even more precise and reliable BT predictions. This will be instrumental in providing more accurate information about the thermal characteristics of different fire regions. Secondly, expanding the training dataset by incorporating other data sources, such as NOAA-20 satellite VIIRS data as well as NEXRAD-estimated fire perimeters, can further improve the model's performance. Integrating data from multiple sources can provide a broader and more diverse set of inputs, enabling the model to capture a more comprehensive range of features and patterns. This, in turn, will improve the model's ability to adapt to different conditions and geographical regions. Thirdly, incorporating a time component into the DL models can open opportunities for forecasting wildfire pattern changes. By considering temporal dynamics, the models can capture how fires evolve and spread over time. Additionally, enriching the DL models with land use, vegetation properties, and terrain data can further enhance wildfire pattern predictions. These additional data layers will enable the models to better understand the complex interactions between fire and environmental factors. Incorporating land use information can help identify vulnerable regions, while vegetation properties and terrain data can provide insights into how fires might spread in different landscapes. 
Overall, pursuing these avenues can make DL models more powerful tools for generating high-resolution GOES images and for advancing our understanding of wildfires and their impact on the environment. These advancements have the potential to improve how we monitor and respond to wildfires, ultimately contributing to better fire management practices and environmental protection.

Author Contributions

Conceptualization, N.P.L. and H.E.; methodology, M.B., K.S., G.B., and H.E.; software, M.B. and K.S.; validation, M.B., K.S., and N.P.L.; formal analysis, M.B., K.S., H.E., and G.B.; writing (original draft preparation), M.B. and K.S.; writing (review and editing), M.B., K.S., H.E., G.B., N.P.L., and E.R.; visualization, M.B.; supervision, H.E. and G.B.; project administration, H.E.; funding acquisition, H.E. and N.P.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported through the National Science Foundation’s Leading Engineering for America’s Prosperity, Health, and Infrastructure (LEAP-HI) program by grant number CMMI1953333. The opinions and perspectives expressed in this study are those of the authors and do not necessarily reflect the views of the sponsor.

Data Availability Statement

Data and materials supporting the results or analyses presented in this work are available upon reasonable request from the corresponding author.

Acknowledgments

The authors would like to acknowledge technical support in model development from Dr. Sharif Amit Kamran at the University of Nevada, Reno.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. List of Wildfire Events Used in This Study

| Site | Central Longitude (°) | Central Latitude (°) | Fire Start Date | Fire End Date |
| --- | --- | --- | --- | --- |
| Kincade | -122.780 | 38.792 | 2019-10-23 | 2019-11-06 |
| Walker | -120.669 | 40.053 | 2019-09-04 | 2019-09-25 |
| Tucker | -121.243 | 41.726 | 2019-07-28 | 2019-08-15 |
| Taboose | -118.345 | 37.034 | 2019-09-04 | 2019-11-21 |
| Maria | -118.997 | 34.302 | 2019-10-31 | 2019-11-05 |
| Redbank | -122.64 | 40.12 | 2019-09-05 | 2019-09-13 |
| Saddle ridge | -118.481 | 34.329 | 2019-10-10 | 2019-10-31 |
| Lone | -121.576 | 39.434 | 2019-09-05 | 2019-09-13 |
| Chuckegg creek fire | -117.42 | 58.38 | 2019-05-15 | 2019-05-22 |
| Eagle bluff fire | -119.5 | 49.42 | 2019-08-05 | 2019-08-10 |
| Richter creek fire | -119.66 | 49.04 | 2019-05-13 | 2019-05-20 |
| LNU lightning complex | -122.237 | 38.593 | 2020-08-18 | 2020-09-30 |
| SCU lightning complex | -121.438 | 37.352 | 2020-08-14 | 2020-10-01 |
| CZU lightning complex | -122.280 | 37.097 | 2020-08-16 | 2020-09-22 |
| August complex | -122.97 | 39.868 | 2020-08-17 | 2020-09-23 |
| North complex fire | -120.12 | 39.69 | 2020-08-14 | 2020-12-03 |
| Glass fire | -122.496 | 38.565 | 2020-09-27 | 2020-10-30 |
| Beachie wildfire | -122.138 | 44.745 | 2020-09-02 | 2020-09-14 |
| Beachie wildfire 2 | -122.239 | 45.102 | 2020-09-02 | 2020-09-14 |
| Holiday farm wildfire | -122.49 | 44.15 | 2020-09-07 | 2020-09-14 |
| Cold spring fire | -119.572 | 48.850 | 2020-09-06 | 2020-09-14 |
| Creek fire | -119.3 | 37.2 | 2020-09-05 | 2020-09-10 |
| Blue ridge fire | -117.68 | 33.88 | 2020-10-26 | 2020-10-30 |
| Silverado fire | -117.66 | 33.74 | 2020-10-26 | 2020-10-27 |
| Chuckegg creek fire | -117.42 | 58.38 | 2019-05-15 | 2019-05-22 |
| Bond fire | -117.67 | 33.74 | 2020-12-02 | 2020-12-07 |
| Washington fire | -119.556 | 48.825 | 2020-08-18 | 2020-08-30 |
| Oregon fire | -121.645 | 44.738 | 2020-08-17 | 2020-08-30 |
| Talbott creek | -117.01 | 49.85 | 2020-08-17 | 2020-08-30 |
| Christie mountain | -119.54 | 49.364 | 2020-08-18 | 2020-09-30 |
| Bush fire | -111.564 | 33.629 | 2020-06-13 | 2020-07-06 |
| Magnum fire | -112.34 | 36.61 | 2020-06-08 | 2020-07-06 |
| Bighorn fire | -111.03 | 32.53 | 2020-06-06 | 2020-07-23 |
| Santiam fire | -122.19 | 44.82 | 2020-08-31 | 2020-09-30 |
| Holiday farm fire | -122.45 | 44.15 | 2020-09-07 | 2020-09-30 |
| Slater fire | -123.38 | 41.77 | 2020-09-07 | 2020-09-30 |
| Eagle bluff fire | -119.5 | 49.42 | 2019-08-05 | 2019-08-10 |
| Alberta fire 1 | -118.069 | 55.137 | 2020-06-18 | 2020-06-30 |
| Doctor creek fire | -116.09788 | 50.0911 | 2020-08-18 | 2020-08-24 |
| Magee fire | -123.22 | 49.88 | 2020-04-15 | 2020-04-16 |
| Pinnacle fire | -110.201 | 32.865 | 2021-06-10 | 2021-07-16 |
| Backbone fire | -111.677 | 34.344 | 2021-06-16 | 2021-07-19 |
| Rafael fire | -112.162 | 34.942 | 2021-06-18 | 2021-07-15 |
| Telegraph fire | -111.092 | 33.209 | 2021-06-04 | 2021-07-03 |
| Dixie | -121 | 40 | 2021-06-15 | 2021-08-15 |
| Monument | -123.33 | 40.752 | 2021-07-30 | 2021-10-25 |
| River complex | -123.018 | 41.143 | 2021-07-30 | 2021-10-25 |
| Antelope | -121.919 | 41.521 | 2021-08-01 | 2021-10-15 |
| Mcfarland | -123.034 | 40.35 | 2021-07-29 | 2021-09-16 |
| Beckwourth complex | -118.811 | 36.567 | 2021-07-03 | 2021-09-22 |
| Windy | -118.631 | 36.047 | 2021-09-09 | 2021-11-15 |
| Mccash | -123.404 | 41.564 | 2021-07-31 | 2021-10-27 |
| Knpcomplex | -118.811 | 36.567 | 2021-09-10 | 2021-12-16 |
| Tamarack | -119.857 | 38.628 | 2021-07-04 | 2021-10-08 |
| French | -118.55 | 35.687 | 2021-08-18 | 2021-10-19 |
| Lava | -122.329 | 41.459 | 2021-06-25 | 2021-09-03 |
| Alisal | -120.131 | 34.517 | 2021-10-11 | 2021-11-16 |
| Salt | -122.336 | 40.849 | 2021-06-30 | 2021-07-19 |
| Tennant | -122.039 | 41.665 | 2021-06-28 | 2021-07-12 |
| Bootleg | -121.421 | 42.616 | 2021-07-06 | 2021-08-14 |
| Cougar peak | -120.613 | 42.277 | 2021-09-07 | 2021-10-21 |
| Devil's Knob Complex | -123.268 | 41.915 | 2021-08-03 | 2021-10-19 |
| Roughpatch complex | -122.676 | 43.511 | 2021-07-29 | 2021-11-29 |
| Middlefork complex | -122.409 | 43.869 | 2021-07-29 | 2021-12-13 |
| Bull complex | -122.009 | 44.879 | 2021-08-02 | 2021-11-19 |
| Jack | -122.686 | 43.322 | 2021-07-05 | 2021-11-29 |
| Elbowcreek | -117.619 | 45.867 | 2021-07-15 | 2021-09-24 |
| Blackbutte | -118.326 | 44.093 | 2021-08-03 | 2021-09-27 |
| Fox complex | -120.599 | 42.21 | 2021-08-13 | 2021-09-01 |
| Joseph canyon | -117.081 | 45.989 | 2021-06-04 | 2021-07-15 |
| Wrentham market | -121.006 | 45.49 | 2021-06-29 | 2021-07-03 |
| S-503 | -121.476 | 45.087 | 2021-06-18 | 2021-08-18 |
| Grandview | -121.4 | 44.466 | 2021-07-11 | 2021-07-25 |
| Lickcreek fire | -117.416 | 46.262 | 2021-07-07 | 2021-08-14 |
| Richter mountain fire | -119.7 | 49.06 | 2019-07-26 | 2019-07-30 |
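For readers who want to reuse this event list programmatically, the sketch below shows one possible way to turn an appendix entry into a typed record with a time window and a search box. It assumes each entry is written as a single pipe-separated row (site, longitude, latitude, start date, end date). The `FireEvent` class, the `parse_row` helper, and the 0.5° half-width of the bounding box are hypothetical choices for illustration, not part of the study's pipeline.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class FireEvent:
    """One wildfire event: name, central coordinates (degrees), date range."""
    site: str
    lon: float
    lat: float
    start: date
    end: date

    @property
    def duration_days(self) -> int:
        """Number of days between the listed start and end dates."""
        return (self.end - self.start).days

    def bounding_box(self, half_width_deg: float = 0.5):
        """(min_lon, min_lat, max_lon, max_lat) around the central point.

        The default half-width is an assumed value, not taken from the paper.
        """
        return (
            self.lon - half_width_deg,
            self.lat - half_width_deg,
            self.lon + half_width_deg,
            self.lat + half_width_deg,
        )


def parse_row(row: str) -> FireEvent:
    """Parse a row such as '| Kincade | -122.780 | 38.792 | 2019-10-23 | 2019-11-06 |'."""
    cells = [cell.strip() for cell in row.strip().strip("|").split("|")]
    site, lon, lat, start, end = cells
    return FireEvent(
        site=site,
        lon=float(lon),
        lat=float(lat),
        start=date.fromisoformat(start),
        end=date.fromisoformat(end),
    )


kincade = parse_row("| Kincade | -122.780 | 38.792 | 2019-10-23 | 2019-11-06 |")
print(kincade.site, kincade.duration_days, kincade.bounding_box())
```

A record of this kind could then drive the selection of GOES and VIIRS scenes by location and date, although the exact spatial extent used per site is not specified here.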

References

- Wildfires and Acres | National Interagency Fire Center. Available online: https://www.nifc.gov/fire-information/statistics/wildfires (accessed on 22 March 2023).

- Taylor, A.H.; Harris, L.B.; Skinner, C.N. Severity Patterns of the 2021 Dixie Fire Exemplify the Need to Increase Low-Severity Fire Treatments in California’s Forests. Environ. Res. Lett. 2022, 17.

- Liao, Y.; Kousky, C. The Fiscal Impacts of Wildfires on California Municipalities. J. Assoc. Environ. Resour. Econ. 2022, 9, 455–493.

- Westerling, A.L.; Hidalgo, H.G.; Cayan, D.R.; Swetnam, T.W. Warming and Earlier Spring Increase Western U.S. Forest Wildfire Activity. Science 2006, 313, 940–943.

- Szpakowski, D.M.; Jensen, J.L.R. A Review of the Applications of Remote Sensing in Fire Ecology. Remote Sens. 2019, 11, 2638.

- Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing. Sensors 2020, 20, 1–26.

- Pradhan, B.; Dini, M.; Suliman, H. Forest Fire Susceptibility and Risk Mapping Using Remote Sensing and Geographical Information Systems (GIS) Identification of Rocks and Their Quartz Content in Gua Musang Gold Field Using Advanced Space-Borne Thermal Emission and Reflection Radiometer (AS.

- Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing. Sensors 2020, 20, 6442.

- Radke, L.F.; Clark, T.L.; Coen, J.L.; Walther, C.A.; Lockwood, R.N.; Riggan, P.J.; Brass, J.A.; Higgins, R.G. The Wildfire Experiment (WIFE): Observations with Airborne Remote Sensors. Can. J. Remote Sens. 2000, 26, 406–417.

- Valero, M.M.; et al. On the Use of Compact Thermal Cameras for Quantitative Wildfire Monitoring. Adv. For. Fire Res. 2018, 1077–1086.

- Loew, A.; Bell, W.; Brocca, L.; Bulgin, C.E.; Burdanowitz, J.; Calbet, X.; Donner, R.V.; Ghent, D.; Gruber, A.; Kaminski, T.; et al. Validation Practices for Satellite-Based Earth Observation Data across Communities. Rev. Geophys. 2017, 55, 779–817.