1. Introduction

European cities are motivated to act towards the achievement of climate-neutral mobility solutions [1]. A policy that could achieve transformative changes requires interdisciplinary and transdisciplinary approaches [2], but cities often face many challenges when it comes to bringing sustainable mobility ideas forward and “fast tracking” these ideas towards deployment [3]. Gaps can be identified in governance schemes, funding and (human) resources, but also in limited knowledge of and experience with data-driven approaches that can support all stages of mobility solution implementation [4].

Capacity building that fills the gaps and enables the acquisition of skills related to the implementation of innovative mobility solutions can empower local/regional authorities to identify, adopt and eventually deliver mobility solutions that are considered innovative for the local/regional context and needs [5].

The definition of capacity building is broad, referring not only to the process of developing and strengthening abilities and skills but also to the process of building relationships and values [6]. The OECD places great emphasis on the “quality of organizations” and the “enabling environment”, which allow the term “capacity development” to go beyond increasing the knowledge and skills of individuals to incentive building and governance transformations of organizations [7]. In the same strategic vein, the United Nations has identified the importance of capacity building and has appointed the UN Environment Programme (UNEP) to act as a missionary for capacity building as a means of achieving sustainable development [6].

Looking closely at the transport field, Glaser, Brommelstroet, & Bertolini [2] identify learning for transportation policy as an integral part of larger processes, such as innovation. Martins, Kalakou & Pimenta [3] see capacity building of local authorities as a “hearts and minds approach” for individual, organizational and institutional capacity that will address principal barriers to achieving more sustainable transport strategies (p.11), and they define capacity building as “the strengthening of the dynamics in an organization that drive its effectiveness in implementing plans” (p.13). But how is capacity building delivered within an analytical framework?

Thapa, Matin and Bajracharya [8] have defined a sequential capacity building flow for the development of the necessary skills and abilities around the use of Earth Observation and geospatial information technologies, which consists of four elements: needs assessment, design, implementation, and monitoring (Figure 1). Starting from a capacity assessment that allows for clear gap definition and learning objective formulation, the capacity building curriculum is designed to address the learning objectives. Following the execution of the capacity building activities, monitoring and evaluation take place to assess the performance of the activities against criteria of quality and relevance and to provide for mitigation actions in case the criteria are not met.

Similarly, the UNDP [9] proposes five steps for the capacity development cycle, beginning with actions that will bring the commitment of people to the process (Figure 1).

Anderston, et al. [10] propose a capacity building approach that moves from a bottom level of knowledge gain (in the form of external knowledge that fills the gaps) to organizational transformation, where organizations are empowered to deliver/adopt changes. In their underlying framework of learning, the learning taxonomy should not stop at classifying the learning objectives but should extend to an upper level of evaluation capacity, namely “the ability to conduct an effective evaluation” [11] (p.1).

Evaluation of capacity building has been highlighted as a common denominator, and Martins, Kalakou and Pimenta agree that evaluating capacity building is crucial in order to: map the program’s progress against its goals, grasp the reactions of the learners and get their feedback, identify gaps and weaknesses in the learning program itself and propose actions to address these weaknesses [3]. The European Commission also clearly states: “Assessing institutions and capacity is thus a central element of preparing and implementing any kind of support” [12] (p.7). Horton answers the question “why should capacity building be evaluated?” by also discussing the purpose of “accountability” (the obligation to produce evaluation reports), but eventually stresses the importance of “improvement” of ongoing or future capacity-building activities [13] (p.9).

Since 2000 there has been extensive discussion of capacity building assessment, and an evaluator can refer to a pool of sources that cover a variety of fields/sectors [12,14,15]. An underlying philosophy of these frameworks is the consideration of organizations as systems that are embedded in a context (environment), receive resources and use the capacity of these resources to deliver products/services. Therefore, any changes related to any of these items (context, resources, capacities) should be considered.

Complementary to the above, a number of guides are also available for setting up and applying monitoring and evaluation frameworks for capacity building [16,17,18,19,20], with substantial work done for the health sector and the measurement of “community capacity” in the public health arena (for an extended review of the latter, the reader can refer to the work of Liberato et al. [21]).

Within the pool of capacity building evaluation frameworks/guides, though, little work is found for the transport sector. The most notable cases are the SUITS capacity building evaluation framework [3] and the Transport Innovation Living Lab approach for capacity needs assessment [5]. The SUITS evaluation framework [3] assessed the perceived drivers and barriers of capacity building development that aims to transform the transport organizations of the cities engaged in the project. The project identifies four capacity elements: inputs (referring to expenditure of people, materials, funds), processes (activities transforming the inputs to outputs), outputs (results produced by the processes) and outcomes (knowledge, skills and behavior). The evaluation framework defined the tools/methods for data collection and the categories and sub-categories of capacity to be assessed (categories: organizational, political, legal, societal; sub-categories: communicational, financial, managerial and technical), each one linked with a list of Key Performance Indicators (KPIs). The framework foresaw a rating conversion methodology to estimate the performance level of each city, based on estimated KPI values.

Assessment of the capacities of cities to plan and implement mobility innovations is done by Teko and Lah within nine Living Labs (LLs). Key characteristics and elements of the LLs are used for the definition of the assessment framework and further developed into indicators: “extent of real-life contextualization” (as LLs “depict a real-life scenario”, capacity needs are defined in a real-life context), “level of participation” (as LLs “allow active user-participation”, also in the assessment of capacity needs), “diversity of stakeholders involved” (as LLs “involve multiple stakeholders”, capacity needs assessment is done by a variety of relevant stakeholders) and “time span of engagement” (short, medium or long term “length of time involved in defining capacity needs”). As a result, capacity needs can be refined to reflect collective decisions, the interest of stakeholders in the assessment process is sustained and capacity building interventions are well tailored to the identified needs [5] (Table 1 on p.4).

Methodological difficulties associated with establishing a direct link between capacity building and impact (how to “map the pathway” from improved individual capacity to community impacts) are relevant to our discussion [15,16]. There is a common understanding, though, about the need to differentiate, along this pathway, between the “inputs”, “outputs” (sometimes bound together with the inputs), “outcomes” and “impacts” of any learning process [3,9,12,15,16,18]:

Inputs measure the efforts placed and are usually linked to the delivery of activities/ services.

Outputs measure the results that the delivered activities/ services should be able to guarantee.

Outcomes measure the effectiveness of the delivered activities/ services (sustained production of benefits).

Impacts measure the changes that are linked to higher-level objectives towards which the delivered activities/ services are expected to contribute.

The current study follows the performance of a Learning and Exchange Programme that was designed and implemented for European cities, as part of an EU-funded project, to help them accelerate the deployment of innovative sustainable mobility solutions. The monitoring and evaluation of the knowledge exchange and transfer was done through a Key Performance Indicator (KPI) based assessment framework, applying a loop along the “input-to-impact” pathway for KPI feeding, analysis and evaluation throughout the 2-year duration of the Learning and Exchange Programme. The KPI assessment allowed the strengths and weaknesses of the Programme to be identified and relevant mitigation measures to be applied when necessary.

2. Materials and Methods

The paper focuses on a capacity building monitoring and assessment process, undertaken by the authors as part of an EU-funded project, which aimed to support local authorities in Europe to quickly implement mobility solutions that are innovative for them. The project followed the capacity building flow of Thapa, Matin and Bajracharya presented above (Figure 1) [8], adapted as per the iterations needed to accommodate changes in the design of the capacity building activities:

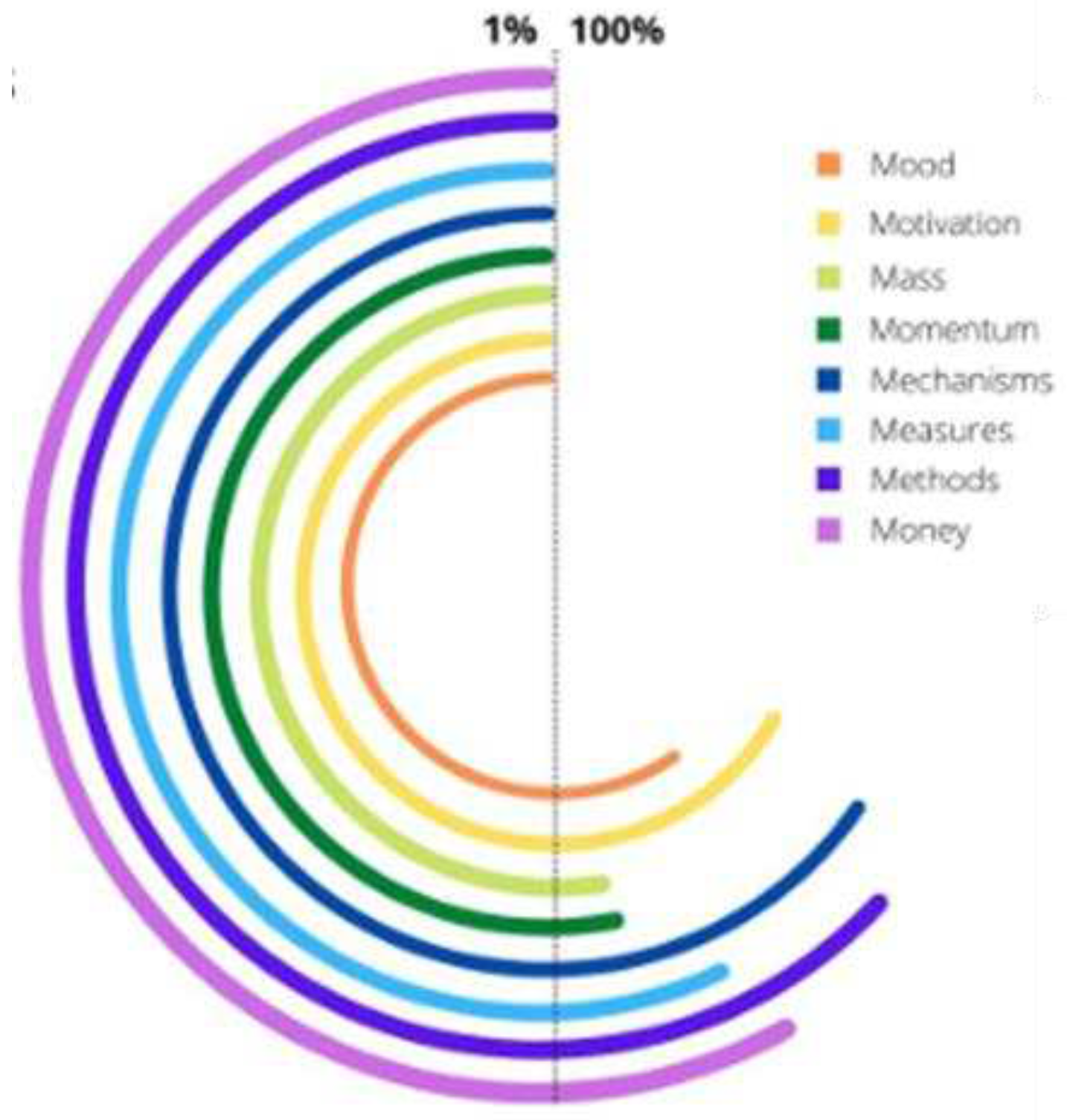

Step 1: a carefully performed needs assessment is of utmost importance for priority definition and activity design of a capacity building programme that reflects the specific individual/organizational learning conditions and priorities [22]. Within this concept, the capacity building programme examined in this paper began with a “diagnostic” phase to address the actual challenges faced by cities. The participating cities defined their understanding of “smart” and “clean” innovations and identified the barriers they need to overcome for rapid implementation. “Innovation profiles” were finally extracted in a “fingerprint” visualization of the cities’ performance across eight success factors, the so-called “8Ms”: mood, motivation, mass, momentum, mechanisms, measures, methods, money (Figure 2) [23].

Step 2: the project designed and applied a tailored Learning and Exchange Programme (hereafter “Programme”), which aimed at fulfilling the learning needs identified and helping cities to overcome the barriers obstructing the deployment of innovative mobility solutions. The Programme included audiences and connections throughout Europe, resources such as databases of solutions, a portal of best practices, and capacity-building and knowledge-sharing events that reveal new opportunities for innovation [24]. The learning exchange between the project and the cities was established through four clusters:

Cluster 1 – Sustainable & Clean Urban Logistics

Cluster 2 – Cycling in the Urban & Functional Urban Area

Cluster 3 – Integrated Multi-modal Mobility Solutions

Cluster 4 – Traffic & Demand Management

At the same time, four cross-cutting themes have been identified, for dealing with key learning components running across all clusters:

Behaviour change

Digitalisation & Data Management

Funding, Financing & Business Models

Governance, Participation, Cooperation and Co-creation

Step 3: The Programme was wrapped around five Learning Sequences (LSs), each with a defined objective to achieve: from cities setting their goals within the project to cities concretizing their actions towards the deployment of innovative mobility solutions and eventually accelerating their innovation. Five intensive learning weeks (the Capacity Building Weeks, CBWs) marked the end of each LS, and interactions between these weeks were facilitated through, e.g., webinars, online workshops and access to asynchronous learning material.

Step 4: a robust monitoring and evaluation framework was developed to capture the innovation performance of the project in a two-fold manner [25]:

Branching Exploration, aiming to understand the innovation profiles of the cities.

Incremental Iterative Refinement, aiming to monitor and refine the mobility solutions. This was done through the development and monitoring, by the authors, of a KPI framework that allows the project to understand the efficiency and the impact of the Programme. The current paper focuses on this part of the project’s evaluation framework.

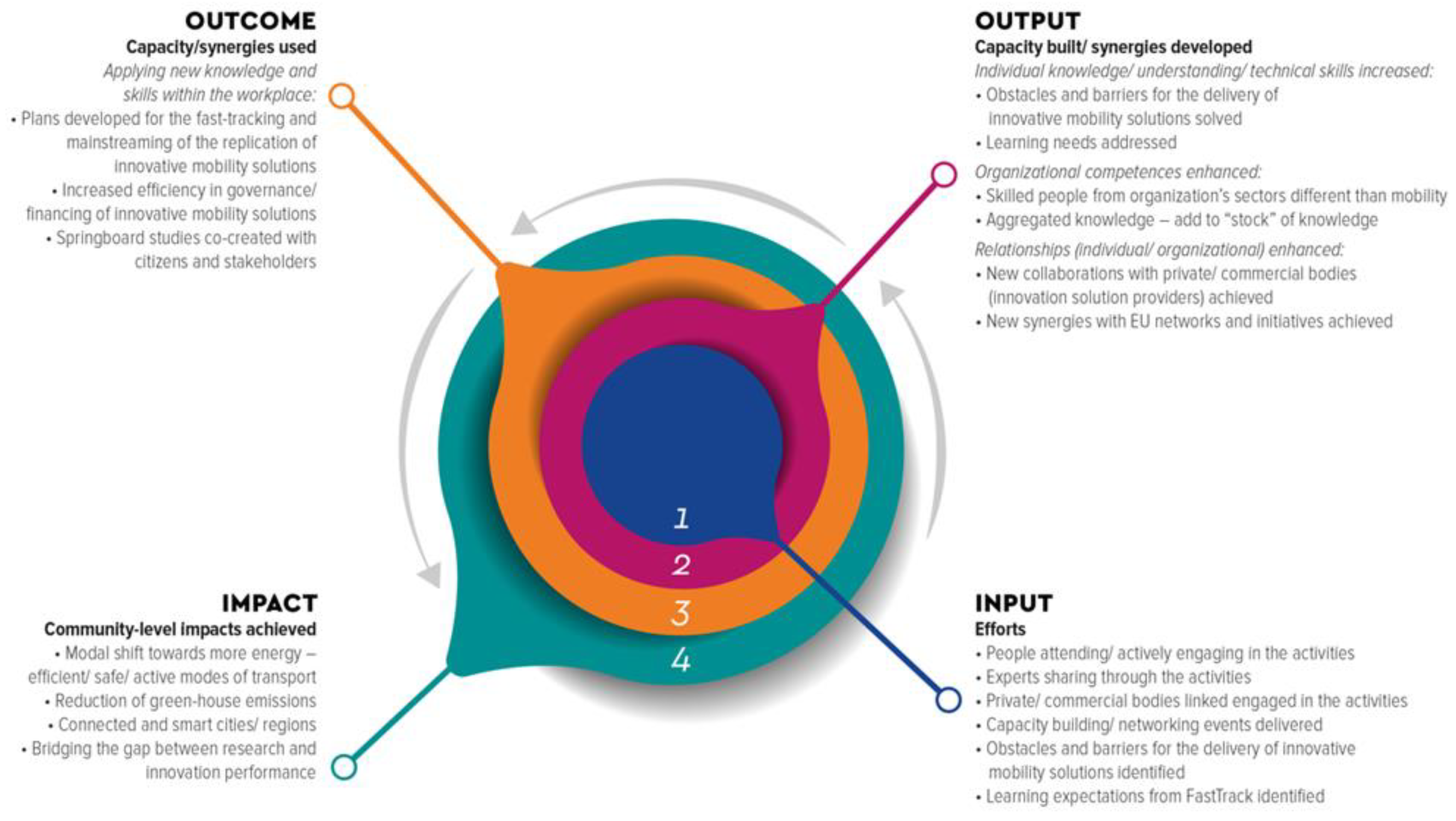

Figure 3 presents a generic impact pathway for the Programme, adapted by the authors [26]. This is based on the “capacity building-to-impact pathway” presented by Hailey, James & Wrigley [20] and the Ripple Model presented by James [19], in which the “capacity building interventions ripples” flow outwards, like a drop of rain, from capacity building outputs to behaviour change amongst beneficiaries.

A predictive approach is used, with Key Performance Indicators (KPIs) set for every level (input, output, outcome, impact) to monitor the progress towards pre-defined objectives of the project [26]:

Capacity input indicators include number and type of engagement/ capacity building/ consulting events, number of attendees, but also measurements of the delivery of the capacity building content, such as number of obstacles and barriers that the city representative identified in the delivery of innovative mobility solutions and number of learning expectations from the Programme.

Capacity output indicators refer to individual capacity built through, for example, challenges solved and learning needs addressed, but also to partnership building, such as new collaborations with private/commercial bodies and new synergies with EU networks and initiatives. If a direct measure of capacity built is not available, proxy indicators (e.g., satisfaction of the trainee) are used.

Outcome indicators refer to the capacity used to reach achievements and changes at organisation level, always within the spectrum of the rapid deployment of innovative mobility solutions, including, for example, increased organizational efficiency in governance/ financing of these solutions, or the delivery of the relevant deployment plans.

Finally, at an impact level, high-level changes in the organization structure are considered, while special focus is placed on community-level benefits, beyond those applying directly to the individuals or the organizations. For the Programme these benefits are attributed to the innovative mobility solutions per se (as these mobility solutions are perceived as enhanced quality services provided by the cities to the communities) and can refer to behavioural mobility changes (modal shift to more safe and sustainable modes of transport) or environmental conditions changes (such as reduction of green-house emissions).

The KPI framework used in the project defined a total of 51 KPIs. Apart from estimating the KPI baseline values (at the outset of the project), a loop for KPI monitoring was established: iterations of data collection and reporting enabled a regular understanding of the performance of the Programme, thus also allowing responsive and/or formative mechanisms to tackle emerging issues and/or better address the learning needs and expectations of each city. The KPI monitoring process systematically considered the feedback from the twenty-three European cities engaged in the project. Target values were also attributed to 17 indicators, and the Programme’s progress towards these values has been referenced.
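As an illustration only, the monitoring loop described above can be sketched in Python. The sketch assumes a hypothetical `KPI` record holding a level on the input-to-impact pathway, a baseline, an optional target and one observation per monitoring round. The class, field and function names, the linear progress measure and the 0.5 threshold are assumptions made for illustration and are not taken from the project's framework.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Level(Enum):
    """Position of a KPI on the input-to-impact pathway."""
    INPUT = "input"
    OUTPUT = "output"
    OUTCOME = "outcome"
    IMPACT = "impact"


@dataclass
class KPI:
    name: str
    level: Level
    baseline: float = 0.0
    target: Optional[float] = None  # only some KPIs carry a target value
    observations: List[float] = field(default_factory=list)  # one per monitoring round

    def record(self, value: float) -> None:
        """Store the value collected in the latest monitoring round."""
        self.observations.append(value)

    def latest(self) -> float:
        """Most recent observed value, or the baseline if nothing was recorded."""
        return self.observations[-1] if self.observations else self.baseline

    def progress(self) -> Optional[float]:
        """Share of the baseline-to-target distance covered (assumed linear measure)."""
        if self.target is None or self.target == self.baseline:
            return None
        return (self.latest() - self.baseline) / (self.target - self.baseline)


def needs_attention(kpis: List[KPI], threshold: float = 0.5) -> List[str]:
    """Names of targeted KPIs whose progress is below an assumed threshold."""
    return [k.name for k in kpis
            if (p := k.progress()) is not None and p < threshold]
```

With purely illustrative values, a KPI with baseline 0 and target 10 that records 4 in a monitoring round has a progress of 0.4 and would be listed by `needs_attention`, which is the kind of signal that could prompt a change in the content or format of subsequent activities.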

Data mapping and storing processes were defined at an early stage of the project. Data collection methods were decided and the relevant tools for gathering data were created. Data collection was done in a consistent format, either through individual data points (online forms/questionnaires) or directly within logbooks (spreadsheets or word-processing documents) created for data collection and storing. Each KPI was matched to its data streams, and mechanisms were set for organizing the data (folder structure and classification). The data collection methods used included interviews, questionnaire surveys and structured observations.

The monitoring of the KPIs was done using the following tools:

Needs Assessment Survey (NAS). As already mentioned in Step 1 of the project’s capacity building flow, this was conducted at the beginning of the project, to collect information on the cities’ innovation profile. Data collected regarding cities’ needs, obstacles and opportunities regarding innovative sustainable solutions were used for calculating the baseline values for some indicators.

Event Forms (EF): The Event Form was introduced as an online questionnaire targeting the event organizers (partners). The form collected both quantitative (e.g., number of participants) and qualitative information (e.g., level of participation) regarding the events (either stand-alone events or events organized within the Capacity Building Weeks - CBWs). The event organizers usually filled in the event forms within 2 weeks after the event.

Innovation Diaries (ID). The ID was introduced as an online questionnaire targeting the cities that were engaged in the project. The ID initially collected information related to challenge definition (barriers that hinder the rapid deployment of innovative mobility solutions), idea formation (getting inspired from city peers) and learning action framing (what exactly cities need to overcome the identified challenges). As the Programme moved forward from problem definition to planning formulation (Deployment Plans), the ID content was adjusted accordingly. Nonetheless, questions related to the city’s progress/ satisfaction from the Programme’s activities, remained as a key content in all IDs. The cities’ representatives filled in the Innovation Diary usually within a month after the end of each CBW.

Registration and participation forms, collecting data on participants and their working profile.

Complementary to the above, the KPI analysis has been facilitated by the content analysis of the following project documents and outputs:

Dissemination Tracker, introduced by the project’s communication and dissemination activities, aiming at monitoring the project’s dissemination efforts, including attendance of the partners and the cities’ representatives at events external to the project.

Deployment Plans, developed and delivered by the cities as a final product of the project, for accelerating the deployment of selected innovative mobility solutions.

Transferability assessment templates, introduced as a short questionnaire during the project’s study visits, for capturing the transferability potential of each study visit case to the cities/ regions engaged in the project.

Other project activities, such as the Exploitation Strategy implementation.

For the communication of the KPI monitoring to the project consortium and the activation of the iteration process of the evaluation framework, four internal periodical Activity Reports were produced, one after the conclusion of each of the Learning Sequences 1, 2, 3 and 4. Each Activity Report monitored only those KPIs that were relevant for the learning period under analysis, as not all KPIs were monitored each time. The performance of the KPIs was reviewed through infographics and linked to the project’s quantitative impact targets when relevant. At the same time, insights from the Event Organizers were provided, to allow for a better understanding of the event process and formats, as well as the “inclusiveness” (engagement of external participants) and interactiveness of the events delivered. At the end of each Activity Report, the main findings from the KPI analysis were presented as promising results and points that need further attention. This allowed the whole partnership to keep track of the progress of the Programme towards the predefined targets and even to plan and make changes in the content/format of the engagement activities when the KPI results indicated such a need.

3. Results

3.1. Data Collected and Relevant Challenges Identified

Table 1 summarizes the data that were collected for the KPI monitoring of the Programme. These are counted as collected forms/ questionnaires, or records or documents/ reports. The correlation with the Learning Sequence in which data were collected is also made.

Issues and points of attention regarding data collection are summarised below. These are mostly related to the Needs Assessment Survey (NAS) and the Innovation Diaries (IDs), as their completion required input from the cities’ representatives. It should be highlighted here that the majority of the cities (19 out of the 23) were engaged in the project on a voluntary basis. Four (4) cities acted as “Ambassador cities” in the project, being involved as partners.

The NAS was launched at the beginning of the project with an initial composition of cities. At the end of the first year of the project, though, some cities could not remain engaged due to changes in their policy priorities. Other cities were recruited, for which the NAS had to be initiated again.

The Innovation Diaries required special guidance for their completion. A dedicated workshop took place before the completion of ID1 (during the 1st Capacity Building Week, CBW#1) to present the concept of the Innovation Diaries and explain the type of information requested from the cities. During the workshop, cities’ representatives were advised to complete one (1) ID for their city each time. In cases where several city representatives were engaged in the project, this required the consolidation of the replies into one document. This was, however, not always coordinated internally (perhaps because this requirement was not communicated between the different city representatives who filled in the ID), and some cities provided two IDs at the same time. In such cases, consolidation of the data was done during data analysis.
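The text does not specify the rules applied when duplicate diaries were consolidated. A minimal Python sketch of one possible approach is given below, assuming that numeric answers (e.g., scores) from the same city and round are averaged and that distinct free-text answers are kept side by side. The function name `consolidate`, the record layout and both merging rules are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def consolidate(submissions):
    """Merge diary submissions from the same city and round into one record.

    Each submission is a dict with the (hypothetical) keys
    "city", "round" and "answers" (question -> answer).
    """
    grouped = defaultdict(list)
    for s in submissions:
        grouped[(s["city"], s["round"])].append(s["answers"])

    merged = {}
    for key, answer_sets in grouped.items():
        record = {}
        questions = {q for answers in answer_sets for q in answers}
        for q in sorted(questions):
            values = [a[q] for a in answer_sets if q in a]
            if all(isinstance(v, (int, float)) for v in values):
                # Assumed rule: average numeric scores across respondents.
                record[q] = mean(values)
            else:
                # Assumed rule: keep distinct text answers in first-seen order.
                record[q] = list(dict.fromkeys(str(v) for v in values))
        merged[key] = record
    return merged
```

Grouping on the (city, round) pair also leaves cities with a single submission unchanged, so the same routine could be applied to every survey round without special cases.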

Four (4) rounds of the Innovation Diary survey took place, with input requested from the cities engaged in the project. Special attention was paid to avoiding “survey fatigue”. For this reason, ID3 was provided in a simpler and shorter format than IDs 1, 2 and 4, even though this did not fully serve the project’s monitoring purposes. Another reason for this was the need to have the last ID (ID4) in its most extended version, so that the cities could reflect upon all aspects of their learning.

A typical difficulty that was also encountered had to do with the engagement of the Local Affiliates (LAs) in ID data collection, as: a) not all LAs replied to the Innovation Diaries and b) it was not always the same cities that replied to each ID.

One minor issue encountered regarding the monitoring of participations in online events had to do with the inability to identify registered participants joining the event when these used only their first name or the name of their organization. A cross-check with the event organizer/ coordinator was then necessary.

3.2. KPI Analysis and Key Findings

In this section, a discussion over key results of groups of KPIs is performed, showcasing the “input-to-impact” pathway of the proposed assessment framework. Indicatively, some KPI (info)graphics are also displayed, although the presentation of all 29 (info)graphics that were produced for the KPI framework application, falls outside the scope of this paper.

3.2.1. Delivery of the Learning and Exchange Programme

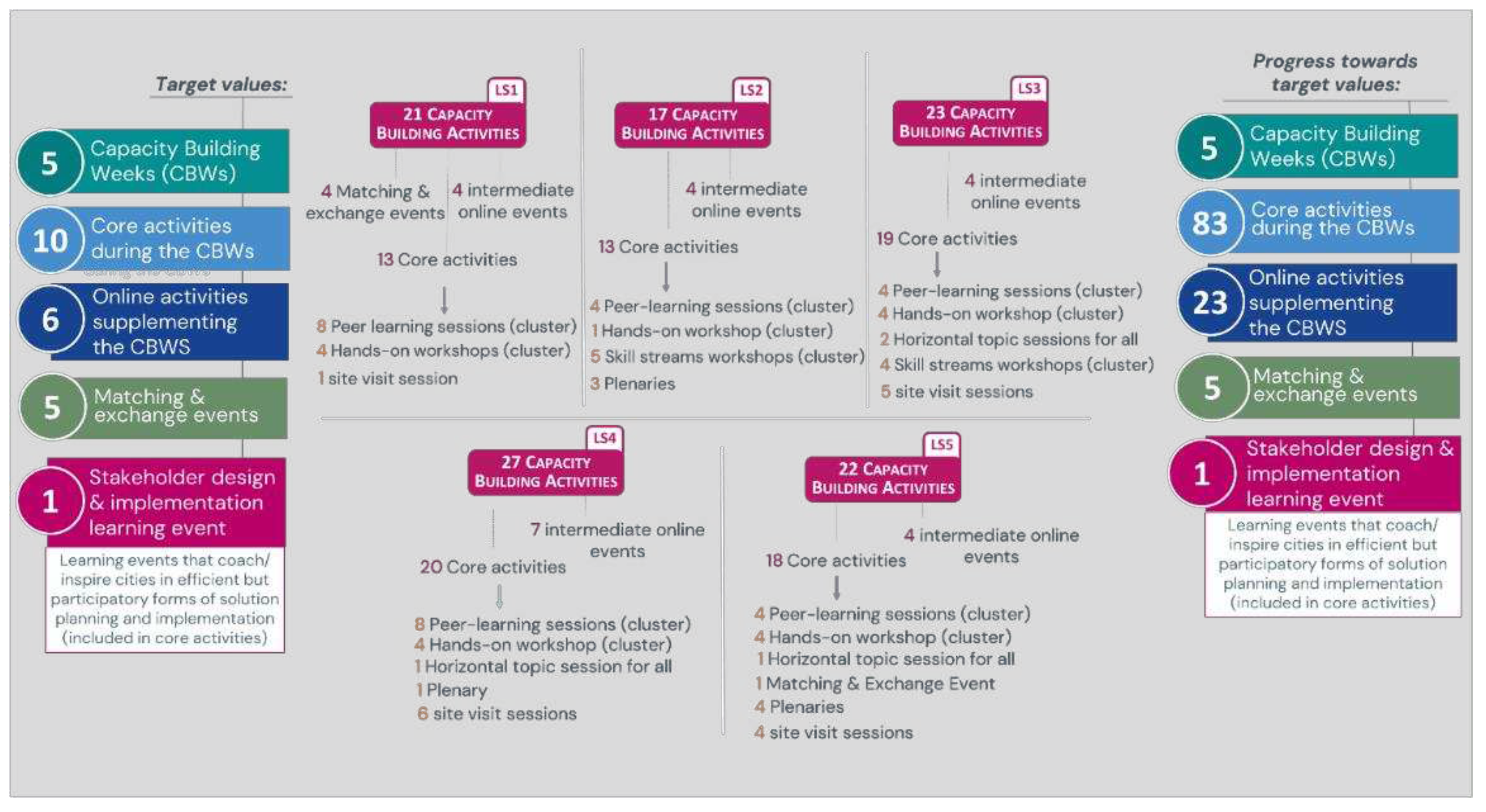

The delivery of the Programme progressed as initially planned, with a total of 106 capacity building sessions implemented during 5 Learning Sequences (Figure 4). The Programme differentiated between core activities (plenaries, study-visits, horizontal topic sessions for all and cluster-based peer-learning sessions and hands-on workshops) and intermediate activities, organized as remote learning sessions around core interests identified by each cluster, horizontal learning needs, coordination activities and specific requests by the cities.

Included in the core activities, 5 matching and exchange events between cities and mobility innovation suppliers took place, as well as 1 learning event that coached cities in participatory forms of planning and implementation. The matching and exchange events added great value to relationship building between cities and providers, which eventually led to 15 concrete contracts that supported the cities towards the development and implementation of their Deployment Plans.

The Programme was flexibly built on a combination of methodologies (webinars, study visits, co-learning workshops, co-creation workshops, peer-review workshops, speed networking) that allowed each LS objective to be reached. Specific care was given to events/sessions that were delivered online (CBWs #1 and #2 were held online due to the ongoing pandemic), in order to properly adapt them to the online format (e.g., shorter duration, enough breaks, use of online co-learning/design tools such as online whiteboards, etc.).

The learning and exchange events enabled an intense discussion around the learning needs of the cities, especially at the beginning of the Programme. In total, 200 learning needs were either expressed by the cities or recorded by the event organizers. Indicatively, during LS1, where 51 learning needs were discussed, 30% of them were covered through the Programme.

City representatives also expressed their satisfaction in more qualitative terms, as the majority of them (63%) indicated that the Programme covered to a high or very high level their needs/questions around the deployment of their innovative mobility solution. The rest (37%) gave an average score for this statement.

3.2.2. Participation and Active Engagement

Twenty-three (23) cities were eventually engaged in the Learning and Exchange Programme and allocated to the cluster of their interest. Special care was taken to achieve a rather balanced composition of cities within the clusters, meaning having both cities with economies that advance sustainable mobility solutions and cities lagging behind in that matter. A re-shuffling and re-definition of the clusters was necessary due to the entrance of new cities in the project until the end of the 1st year, but the Programme allowed for flexibility and eventually the balanced composition of the clusters was not compromised.

Regarding participation of cities in the learning and exchange events, the online events during CBWs #1 and #2 allowed for more people to connect, but interactions between the cities were, by default, more limited and the full benefits of the face-to-face exchange were not reached.

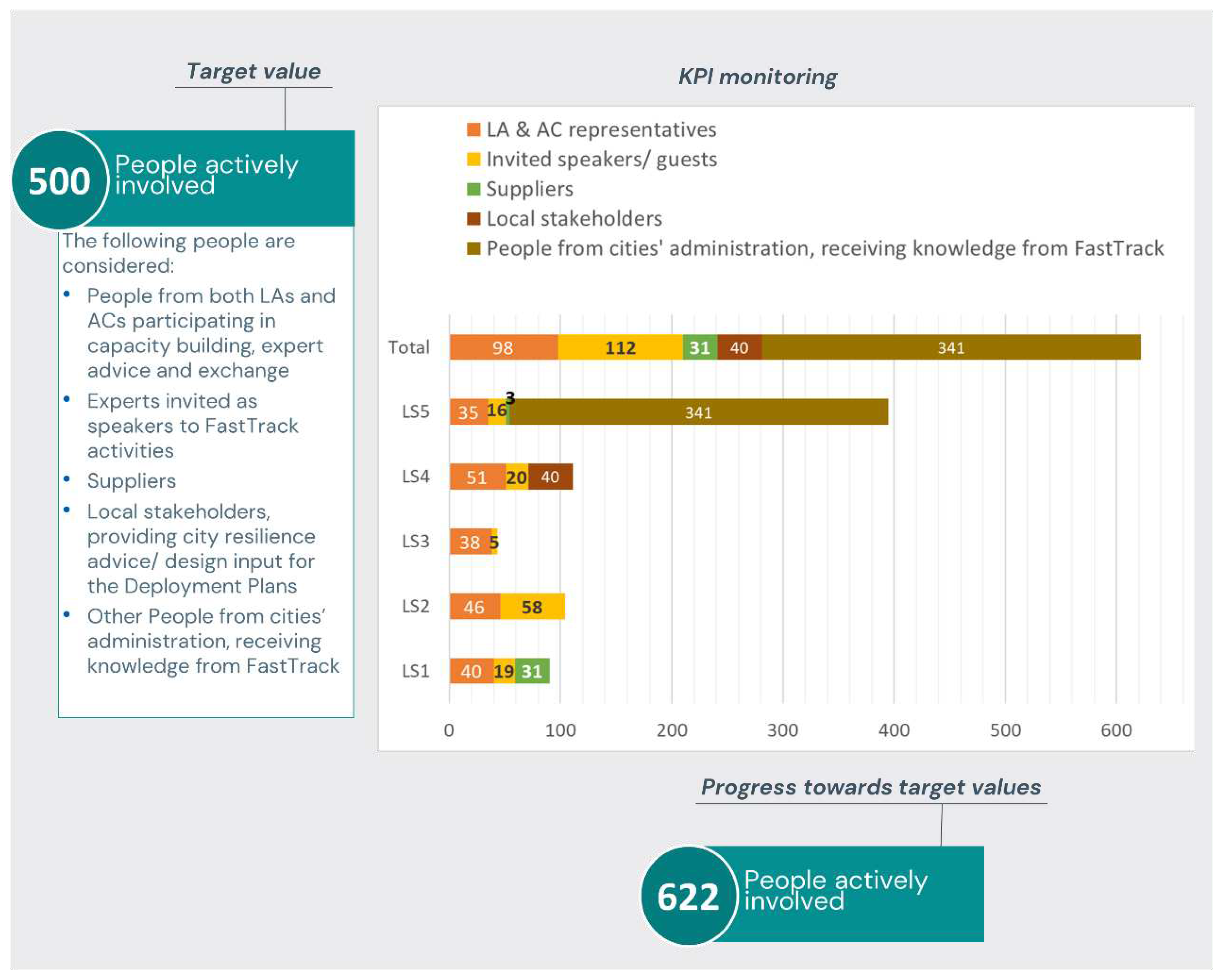

In total, more than 600 people were involved in the Programme, either as city representatives or as invited speakers, mobility solution suppliers and local actors (Figure 5). City representatives were, of course, present in each Learning Sequence, as the main beneficiaries of the Programme. The involvement of the remaining groups followed the objectives of each Learning Sequence: suppliers were mostly represented in LS1, where relevant matchmaking events were organized in the form of “speed dating”. During LS4 the cities prepared their deployment plans, therefore members of the cities’ stakeholder groups that provided input to the plans were involved in this process. Notably, people within the cities’ administrations who were reached by their colleagues and received knowledge from the Programme were counted once, towards the end of the Programme (through ID4), but they were engaged throughout the other Learning Sequences as well.

The Programme strongly encouraged an integrated approach to solving challenges and addressing needs around the planning and implementation of innovative mobility solutions. A transdisciplinary and interdisciplinary approach, involving actors from sectors other than the mobility & logistics sector, was possible, as experts from various fields of expertise were invited to the project and cities received feedback from 42 local actors outside the mobility sector for their Deployment Plans (DPs). Overall, there were 162 people outside the mobility sector who either received knowledge from the Programme or provided their feedback to the DPs.

The Programme provided a stage for people representing various local contexts and working backgrounds, to come together and exchange their knowledge and expertise over common challenges and needs. This was highly appreciated by all participating cities.

3.2.3. Synergies and Networking

The Programme was further complemented by the “Activity Fund”, which offered cities support for preliminary studies, the organisation of further in-depth exchange activities, and access to tailored expert advice from a Pool of Suppliers. The latter was set up for drawing external expertise to the Programme’s community. In total, 48 private/ commercial bodies were connected to the project through the pool of suppliers and 15 contracts were signed between 9 cities and these suppliers, for a direct support to their Deployment Plans.

One of the key characteristics of the Programme was its extroversion: gaining knowledge and experience from other EU projects/initiatives and networks and sharing its insights with a wider EU community. In total, the Programme established links with 37 EU projects and networks and established 4 interactions with the Smart Cities Marketplace (SCM), e.g., through partners’ participation in SCM events/sessions or the invitation of speakers representing SCM initiatives/projects to the CBWs.

In addition, the Programme aimed at inspiring the LAs to act as ambassadors of their innovation to their wider (local) network, and six (6) cities have already undertaken this role.

As a result of the added value of connecting with EU networks/initiatives that was communicated through the Programme’s activities, 1 city has connected with SCM, 1 city has become a CIVITAS member and 2 cities have connected with EIT (European Institute of Innovation & Technology) - Urban Mobility.

Going one step further, 1 city also brought the mobility solution it explored within the project as a pilot case in EIT calls, while 15 new proposals/projects were brought forward by 9 cities for receiving funding at national or EU level. Seven (7) of these new proposals have already received funding.

3.2.4. Progress Towards the Acceleration of Mobility Solutions – Deployment Plans

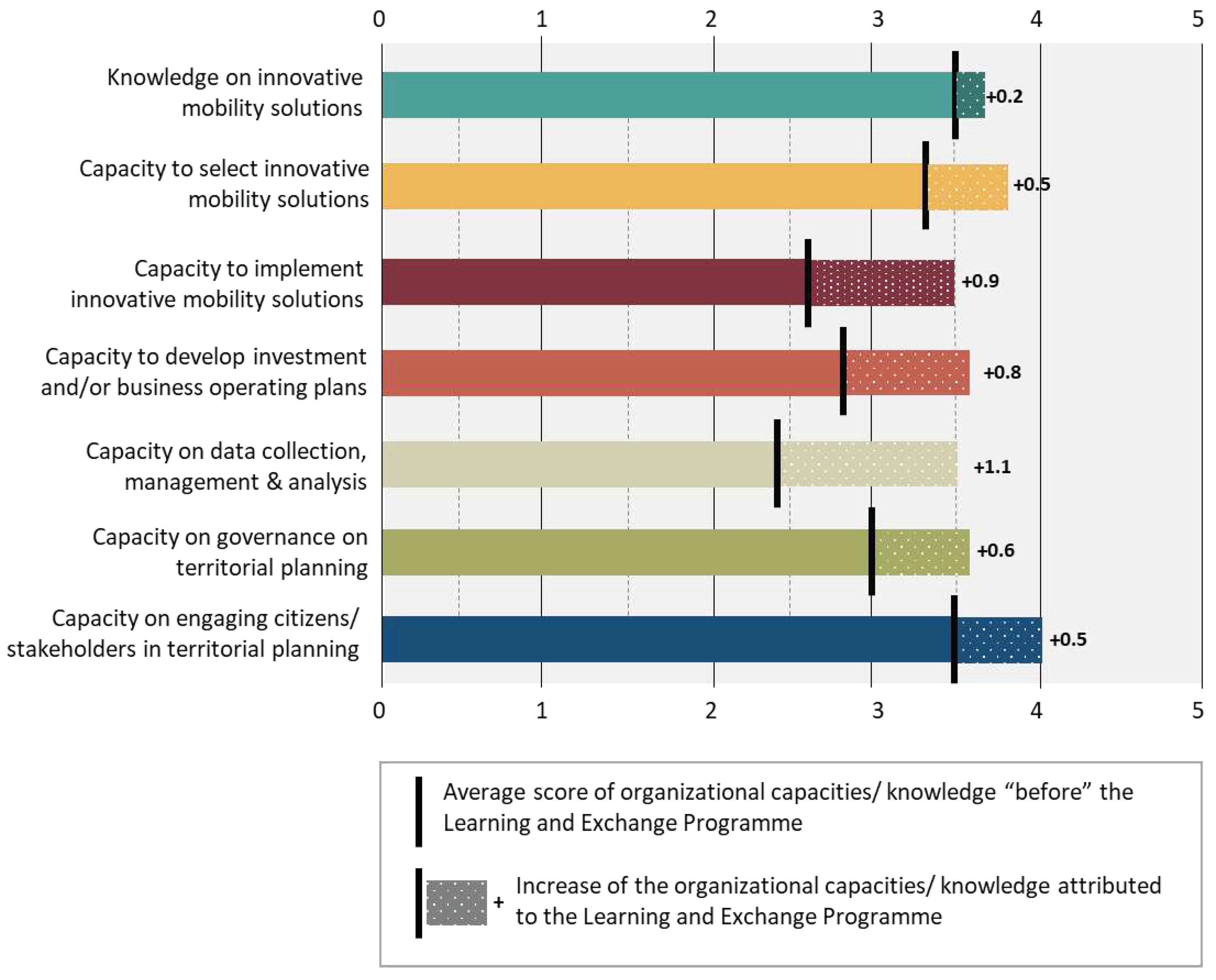

An important discussion on innovative solutions was initiated through the Programme, bringing forward more than 122 mobility solutions as an inspiration. This led to an increase in the knowledge of network members on innovative mobility solutions and to increased capacities in the selection of mobility solutions that address the city’s needs, which was recorded through the final Innovation Diary on a 1-5 Likert scale (Figure 6).

Eventually 23 solutions were identified from the cities/ regions as those explored within the project and 23 Deployment Plans were registered and approved.

The Programme offered a great opportunity for identifying and addressing specific challenges related to the implementation of innovative mobility solutions. Several challenges were discussed and addressed during the course of the Programme and, as it progressed towards the development of the Deployment Plans, 58 barriers were eventually linked to the innovative mobility solutions explored within the project. Most of these barriers (48%) had a local identity, followed by 17% that had both a local and a national identity and 15% that were linked to all levels (local, national and European). The Programme enabled 74% of the barriers that were eventually included in the Deployment Plans to be solved or partially solved.

Eventually, a significant increase in network members’ capacity for overcoming identified barriers and implementing innovative mobility solutions was recorded (Figure 6): through the last Innovation Diary, 10 cities indicated a higher capacity for implementing innovative mobility solutions than the one reported at the beginning of the project (the average score across all cities moved from 2.6 “before” to 3.5 “after”). Ten (10) cities have also indicated a very high or high capacity for finalizing the implementation of their innovative mobility solution after the end of the project.

During the course of the project, 2 cities had already launched the implementation of their mobility solutions and another 5 were under preparation for implementation (i.e., preparing procurement documents).

The process of Deployment Plan (DP) development was highly appreciated by the cities, as it allowed for a structured definition of the challenges related to the implementation of innovative mobility solutions and of the actions that need to be undertaken for it. Accompanied by dedicated workshops on acceleration factors, the DPs also allowed the cities to reflect upon the conditions under which an innovation can be considered “shovel-ready” for implementation.

3.2.5. Cross-cutting Skills Supporting the Deployment Plans

The Skill Streams events of the Programme allowed common data, governance, funding and engagement issues to be discussed and related good practices to be shared.

The discussion on data seemed to be of high importance for many cities, and LS2 had a specific focus on mobility data integration and management. In total, 24 new data sources were discussed during LS1 and LS2. Four (4) cities have already shared open data with their fellow cities during the project and 8 cities were willing to do so after the project’s closure. As a result, 11 network members indicated an increase in knowledge of data gathering, management and analysis (Figure 6) (the average score across all cities moved from 2.4 “before” to 3.5 “after”). Eventually, 9 new data sources and/or new methodologies for data integration were included in the Deployment Plans and 10 cities have already launched their data collection.

Lack of funding was a key common challenge for many cities in implementing innovative mobility solutions. LS3 allowed for a targeted learning approach in relation to funding mechanisms and business models, enabling a significant increase in the knowledge of network members on developing investment and/or business/ operating plans (Figure 6) (the average score across all cities moved from 2.8 “before” to 3.6 “after”).

Regarding governance on territorial planning, 7 project network members reported an increase in their capacity (Figure 6) (the average score across all cities moved from 3.0 “before” to 3.6 “after”), having already observed changes in their city governance model.

Social innovation and the ecosystemic approach to engaging all actors were placed at the focus of the Programme during CBW4. Although many cities indicated that they already had high or very high capacities in citizens’ and stakeholders’ engagement (Figure 6) (“before” average score of all cities = 3.5), an improvement was recorded for 6 cities (“after” average score of all cities = 4.0). As a result of the Programme’s support for a better understanding of who the stakeholders are, how to engage them and what their influence is on the planned mobility solutions, 10 cities are already trying to improve their local engagement activities.
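The “before” and “after” averages quoted in Sections 3.2.4 and 3.2.5 can be placed side by side to compare the size of the reported gains. The following is a minimal Python sketch of that arithmetic; the score pairs are the ones stated in the text, while the dictionary keys are shortened labels chosen here for illustration only. The result is a simple difference of averages and should not be read as a statistical test.

```python
# "Before"/"after" average self-assessment scores (1-5 Likert scale),
# copied from the text. The keys are illustrative shorthand labels.
REPORTED_SCORES = {
    "implementing innovative mobility solutions": (2.6, 3.5),
    "data gathering, management and analysis": (2.4, 3.5),
    "investment and business/operating plans": (2.8, 3.6),
    "governance on territorial planning": (3.0, 3.6),
    "citizen and stakeholder engagement": (3.5, 4.0),
}


def score_gains(scores):
    """Return the after-minus-before gain per skill area, rounded to one decimal."""
    return {
        area: round(after - before, 1)
        for area, (before, after) in scores.items()
    }


gains = score_gains(REPORTED_SCORES)

# List the skill areas from the largest to the smallest reported gain.
for area, gain in sorted(gains.items(), key=lambda item: item[1], reverse=True):
    print(f"{area}: +{gain:.1f}")
```

Under these figures, the largest gain is in data gathering, management and analysis (1.1 points) and the smallest is in citizen and stakeholder engagement (0.5 points), which is consistent with the high “before” score already reported for engagement.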

3.2.6. Achievements of Higher-level Objectives

Higher-level achievements are connected with changes in the organizational structure and with the community benefits offered through the deployment of the project’s innovative mobility solutions per se.

Cities were asked to position themselves in a “global” spectrum of ‘starters’ (cities facing a rapid transition curve and ready to interact and learn from other cities), ‘sharers’ (“capacity conscious” cities, who can share knowledge but also have learning needs) or ‘leaders’ (a relative leader in a specific topic, but still with room to benefit from further advice). In total, 9 cities moved upward in the spectrum: 8 cities moved from ‘starter’ to ‘sharer’ status and 1 city moved from ‘sharer’ to ‘leader’ status.

Cities were also asked to provide quantitative data for the estimation of KPIs related to modal shifts and the reduction of greenhouse gas emissions attributed to the implementation of the mobility solutions per se. Unfortunately, the cities could not provide such data; instead, they indicated in more generic terms the direct connection of their solutions to the objectives and target values of their Sustainable Urban Mobility Plans. It is worth mentioning, though, that all cities had a greenhouse gas emission reduction goal, while the majority of the cities had the goal of a modal shift toward more energy efficient modes (i.e., electric vehicles, bicycles, walking, public transport when the shift is made from private cars).

4. Discussion and Conclusions

The current paper presents a KPI Framework for assessing the performance of a learning and exchange programme dedicated to increasing local authorities’ capacities around the planning and implementation of innovative mobility solutions. Key findings from the application of the Framework in the case of a Learning and Exchange Programme developed as part of an EU-funded project are also provided. This analysis allowed the Programme to be properly monitored and assessed within the requirements of the project. Nonetheless, the results from the Framework’s application should be generalized cautiously due to the content-specific character of the project; therefore, the discussion that follows focuses primarily on the Framework itself and the data requirements supporting it.

The KPI framework was based on a “capacity building-to-impact” pathway, clustering 51 KPIs as input, output, outcome or impact indicators. A loop for KPI monitoring was applied, and iterations of data collection enabled a regular understanding of the performance of the Programme but also allowed responsive and/or formative mechanisms to take place for tackling arising issues.

The KPI framework monitored learning gaps in various skills (technical, administrative, financial, social) and the way these were addressed by the learning and exchange provisions of the Programme. Thus, it depended heavily on the contribution of the cities that were engaged in the Programme, although room for structured observations was also allowed. This dependency further favoured engagement, but it also brought forward challenges in the data collection. These were primarily related to keeping all the cities interested in providing data throughout a rather extended data collection period, as in total 5 rounds of data collection were requested, from mid-2021 to mid-2023.
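To make the structure described above more concrete, the following is a minimal Python sketch of how a KPI tagged with one of the four pathway stages could be tracked across the five data collection rounds, including a check for rounds in which a city has not yet reported. It is an illustration under stated assumptions and not the tooling used in the project: the class names, the method names, the example KPI label and the recorded values are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    """The four stages of the capacity building-to-impact pathway."""
    INPUT = "input"
    OUTPUT = "output"
    OUTCOME = "outcome"
    IMPACT = "impact"


@dataclass
class KPI:
    """One indicator with the values reported per data collection round."""
    name: str                                    # hypothetical label
    stage: Stage
    values: dict = field(default_factory=dict)   # round number -> value

    def record(self, round_no, value):
        """Store the value reported in a given data collection round."""
        self.values[round_no] = value

    def missing_rounds(self, total_rounds=5):
        """Return the rounds (1..total_rounds) with no reported value."""
        return [r for r in range(1, total_rounds + 1) if r not in self.values]


# Hypothetical example: a KPI reported in rounds 1 and 3 only.
kpi = KPI(name="example outcome indicator", stage=Stage.OUTCOME)
kpi.record(1, 2)   # placeholder value, not project data
kpi.record(3, 4)   # placeholder value, not project data
print(kpi.stage.value, kpi.missing_rounds())
```

A check of this kind is one simple way to spot, after each round, which contributions are still outstanding, which is the practical difficulty described above.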

The above is also related to a discussion over the “objectivity” of observations related to the increase of organizational capacities. While individual capacity can be easily evaluated, organizational capacity monitoring implies a twofold procedure: the first part being the actual transfer and adoption of knowledge within the organizational structures and the second being the evaluation in terms of “collective” progress. Actual observations of the knowledge transfer and the organizational transformation would provide an objective perspective but, other than requiring significant resources and a more extended list of KPIs that better map the organizational functions [27], they are difficult to implement as part of (rather short) projects (since it takes time for capacity utilization to become apparent). In such cases, data rely on individuals’ responses regarding knowledge transfer mechanisms (has the individual exchanged knowledge with his/ her fellow-workers in a consistent way?) and on perceptions of the progress of organizational transformation (do the individual’s replies in the evaluation survey consider changes at an individual or a collective level?), which always contain a level of subjectivity.

Another weakness of the KPI framework that should be discussed is the gap between the expectation of adequate metrics for the impact indicators and the maturity of the cities in providing relevant data for their calculation. Reporting on impacts such as carbon-emission reductions or modal shifts to more sustainable or energy efficient modes of transport is rather challenging even when the mobility solutions are already in place, let alone when their implementation needs time to mature (which was the case in our KPI framework application). The complex (modelling) processes that are usually required for either an ex-ante or an ex-post estimation of emissions reduction, modal shifts, etc., conflict with engagement on a voluntary basis, which must respect the limited resources brought forward by the “volunteer”. Time limitations set in projects of rather short duration (i.e., 2 years or even less) also add to this issue. Simister and Smith seem to have a similar opinion: “The duration between capacity building interventions and desired end results can be very long. For example, one Southern capacity building provider interviewed as part of the research are only now seeing the fruits of work carried out fifteen years ago” [16] (p.7). They might have objected, though, on the basis of “maybe you have decided to measure too far”, as the extent to which the monitoring and evaluation of capacity building should go is a critical decision assigned to the evaluator right from the very beginning. To this end, the authors seem to mostly support “illustrations” of changes, rather than measurements of them [16] (p.8-9).

James also points to a dimension that the authors see as an additional challenge for the assessment of our impacts: “as a project moves from input to effects, to impact, the influence of non-project factors becomes increasingly felt thus making it more difficult for the indicators to measure change brought about by the project” [19] (p.10), thus stressing that the expected impacts of capacity building are heavily influenced by changes in the external environment as well.

The authors opine that the proposed KPI framework can provide a useful tool for the evaluation of similarly structured learning programmes, given, of course, content-related adjustments and consideration of the challenges expressed above. This can result in improved reporting and hence in the delivery of more effective learning activities.

Funding

This research was funded by the CIVITAS FastTrack Coordination and Support Action project. The FastTrack project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement no. 101006853.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

A detailed report on the results of the monitoring and evaluation of the Learning and Exchange Programme under investigation can be found on the FastTrack project’s official website (https://fasttrackmobility.eu/). Original data are not publicly available due to GDPR principles applied.

Conflicts of Interest

The authors declare no conflict of interest.

References

- European Commission. The European Green Deal. COM(2019) 640 final, 2019.

- Glaser, M., Brommelstroet, M., & Bertolini, L. Learning to build strategic capacity for transportation policy change: An interdisciplinary exploration. Transportation Research Interdisciplinary Perspectives, 2019, Volume 1.

- Martins, S., Kalakou, S., & Pimenta, I. Deliverable 2.2 Evaluation Framework. CIVITAS SUITS, June 2017. Available online: https://www.suits-project.eu/wp-content/uploads/2018/12/Evaluation-Framework.pdf (accessed on 04 October 2023).

- Hull, A. Implementing Innovatory Transport Measures: What Local Authorities in the UK Say About Their Problems and Requirements. European Journal of Transport and Infrastructure Research, 2009, Volume 9.

- Teko, E., & Lah, O. Capacity Needs Assessment in Transport Innovation Living Labs: The Case of an Innovative E-Mobility Project. Transportation Systems Modeling, 2022, Volume 3.

- UNEP. Capacity Building for Sustainable Development: An overview of UNEP Environmental Capacity Development Activities, UNEP, 2002. Available online: https://wedocs.unep.org/bitstream/handle/20.500.11822/8283/-Capacity%20Building%20for%20Sustainable%20Development%20_%20An%20Overview%20of%20Unep%20Environmental%20Capacity%20Development%20Initiatives-2002119.pdf?sequence=3&%3BisAllowed= (accessed on 9 October 2023).

- OECD. The Challenge of Capacity Development: Working towards Good Practice, OECD Papers, vol. 6/1, 2006.

- Thapa, R. B., Matin, M. A., & Bajracharya, B. Capacity Building Approach and Application: Utilization of Earth Observation Data and Geospatial Information Technology in the Hindu Kush Himalaya. Land Use Dynamics, 2019, Volume 7.

- UNDP. CAPACITY DEVELOPMENT: A UNDP PRIMER. New York: United Nations Development Programme, 2009.

- Anderson, L. W., Krathwohl, D. R., Airasian, P. W., Cruikshank, K. A., Mayer, R. E., Pintrich, P. R., Raths, J. and Wittrock, M. C. A Taxonomy for Learning, Teaching and Assessing. A Revision of Bloom's Taxonomy of Educational Objectives. Addison Wesley Longman, Inc., 2001.

- Milstein, B., & Cotton, D. Defining concepts for the presidential strand on building evaluation capacity. Working paper circulated in advance of the meeting of the American Evaluation Association, 2000, Honolulu, HI.

- European Commission, EuropeAid Co-operation Office. Institutional assessment and capacity development – Why, what and how?, Publications Office, 2007.

- Horton, D. Planning, Implementing, and Evaluating Capacity Development, ISNAR Briefing Paper 50, July 2002. Available online: https://dgroups.org/file2.axd/93710bd7-740f-41fa-9233-396d2c88b61d/ISNAR_BP_50.pdf (accessed on 10 October 2023).

- Ngai, T. K. K., Coff, B., Manzano, E., Seel, K., Elson, P. Evaluation of education and training in water and sanitation technology: case studies in Nepal and Peru. In proceedings of the 37th WEDC International Conference, Hanoi, Vietnam, 2014.

- Gordon, J., & Chadwick, K. Impact assessment of capacity building and training: assessment framework and two case studies. Australian Centre for International Agricultural Research, 2007.

- Simister, N., Smith, R. Monitoring and Evaluating Capacity Building: Is it really that difficult?, Praxis Paper 23, INTRAC, 2010. Available online: https://www.intrac.org/wpcms/wp-content/uploads/2010/01/Praxis-Paper-23-Monitoring-and-Evaluating-Capacity-Building-is-it-really-that-difficult.pdf (accessed 12 September 2023).

- LaFond, A., Brown, L. A Guide to Monitoring and Evaluation of Capacity-Building Interventions in the Health Sector in Developing Countries, MEASURE Evaluation Manual Series, No. 7, March 2003. Available online: https://www.measureevaluation.org/resources/publications/ms-03-07/at_download/document (accessed 12 September 2023).

- Brown, L., LaFond, A., Macintyre, K. Measuring Capacity Building, MEASURE Evaluation, March 2001. Available online: https://pdf.usaid.gov/pdf_docs/PNACM119.pdf (accessed 12 September 2023).

- James, R. Practical Guidelines for the Monitoring and Evaluation of Capacity-building: Experiences from Africa. INTRAC, 2001.

- Hailey, J. M., James, R., & Wrigley, R. Rising to the Challenges: Assessing the Impacts of Organisational Capacity Building. The International NGO Training and Research Centre, February 2005.

- Liberato, S. C., Brimblecombe, J., Ritchie, J., Ferguson, M., Coveney, J. Measuring capacity building in communities: a review of literature, BMC Public Health 2011, 11, doi: http://www.biomedcentral.com/1471-2458/11/850.

- UNEP; DTIE; ETB. Ways to Increase the Effectiveness of Capacity Building for Sustainable Development, Discussion Paper presented at the Concurrent Session 18.1 The Marrakech Action Plan and Follow-up, 2006 IAIA Annual Conference, Stavanger, Norway, 2006. Available online: https://docplayer.net/17564218-Ways-to-increase-the-effectiveness-of-capacity-building-for-sustainable-development.html (accessed 9 October 2023).

- Gabi, Stefan. Deliverable 1.2: Synthesis of Issues Affecting the FastTracking of Innovation, CIVITAS FastTrack project, 2023. Available online: https://fasttrackmobility.eu/fileadmin/user_upload/Resources/Deliverables/FastTrack_2022_06_05_D1.2.pdf (accessed 12 September 2023).

- Trapp, A.-C., & Staalen, P. Deliverable 1.3: Programme of Work for Local Affiliate Engagement. CIVITAS FastTrack, 2021. Available online: https://fasttrackmobility.eu/fileadmin/user_upload/Resources/Deliverables/D3.1_Set-up_Responsive_Support_Structure.pdf (accessed on 4 October 2023).

- Cristea, L. Deliverable 4.1: FastTrack Innovation and Knowledge Strategy. CIVITAS FastTrack, 2021. Available online: https://fasttrackmobility.eu/fileadmin/user_upload/Resources/Deliverables/FastTrack_D4.1_EC_comments_edit.pdf (accessed on 9 October 2023).

- Chatziathanasiou, M., & Morfoulaki, M. Deliverable 4.2: Results of the engagement strategy developed and its strengths and weaknesses. CIVITAS FastTrack project, 2023. Available online: https://fasttrackmobility.eu/fileadmin/user_upload/Resources/Deliverables/FastTrack_D4.2_Results_of_the_engagement_strategy_developed_and_its_impact-_Strengths_and_Weaknesses.pdf (accessed on 9 October 2023).

- UNDP. Measuring Capacities: An Illustrative Catalogue to Benchmarks and Indicators, Capacity Development Group Bureau for Development Policy, New York, September 2005.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).