Submitted: 14 November 2023

Posted: 16 November 2023


Abstract

Keywords:

1. Introduction

- We examined the latest definition of ransomware.

- We surveyed studies of static and dynamic analysis of ransomware and critiqued their shortcomings.

- We listed and discussed the major insights of this survey.

2. Related works

2.1. Behavioral-Centric Detection Approaches

2.2. Static Analysis and Feature Reduction Techniques

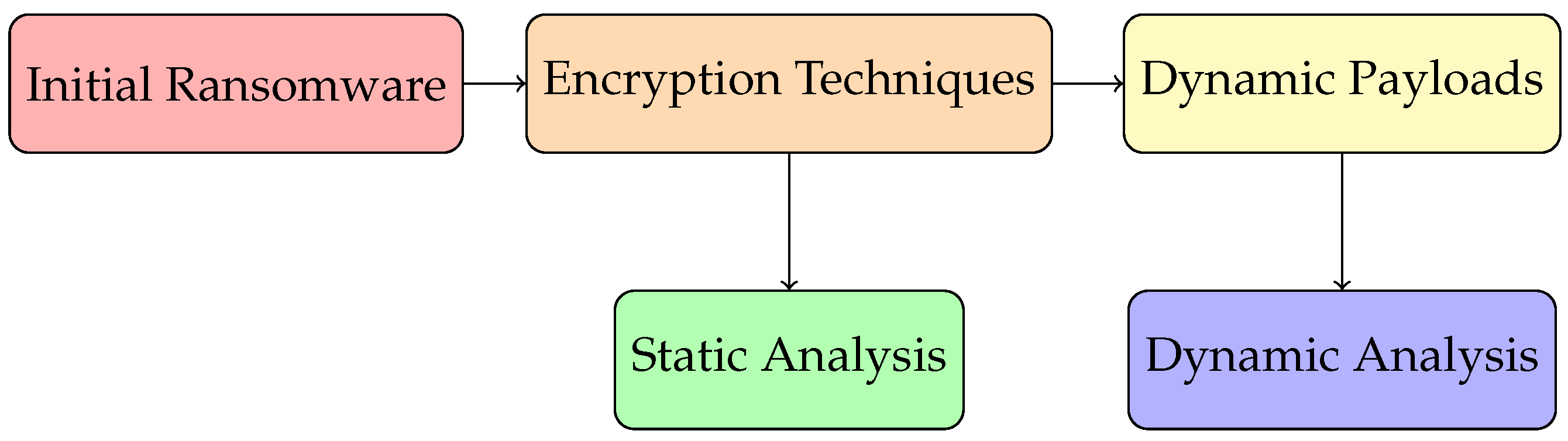

2.3. Dynamic Analysis Utilizing Windows API Calls

3. Methodology

3.1. Environment setup

3.2. Dataset

3.3. Generating Windows API calls

3.3.1. Tracing Windows API Calls

3.3.2. Windows API feature abstraction

3.3.3. Constructing n-grams
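As an illustration of this step, the sketch below builds contiguous n-grams from an ordered sequence of Windows API call names and counts how often each one occurs. It is a minimal example under stated assumptions, not the procedure used in the study: the helper `api_ngrams` and the sample `trace` are hypothetical, and the trace is a made-up sequence of real API names rather than output from the dataset.

```python
from collections import Counter
from typing import List, Tuple


def api_ngrams(calls: List[str], n: int) -> List[Tuple[str, ...]]:
    """Return every contiguous n-gram of an ordered API call sequence.

    Hypothetical helper for illustration; sequences shorter than n yield [].
    """
    if n < 1:
        raise ValueError("n must be >= 1")
    return [tuple(calls[i:i + n]) for i in range(len(calls) - n + 1)]


# Hypothetical trace: an invented ordering of real Windows API names.
trace = ["CreateFileW", "ReadFile", "CryptEncrypt", "WriteFile", "CloseHandle"]

# Count each 3-gram; such counts could serve as a feature vector for one sample.
trigram_counts = Counter(api_ngrams(trace, 3))
```

A trace of length 5 yields three 3-grams. Varying `n` from 2 to 5 gives the feature lengths compared in the tables of this paper.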

3.3.4. API refining process

3.3.5. Removing noise

| n-gram | SVM | kNN | DT | RF |
|---|---|---|---|---|
| 2-gram | 66.7% | 58.7% | 63.7% | 67.7% |
| 3-gram | 98.7% | 88.7% | 86.7% | 78.7% |
| 4-gram | 96.7% | 76.7% | 74.7% | 61.7% |
| 5-gram | 97.7% | 78.7% | 76.7% | 59.7% |

4. Results

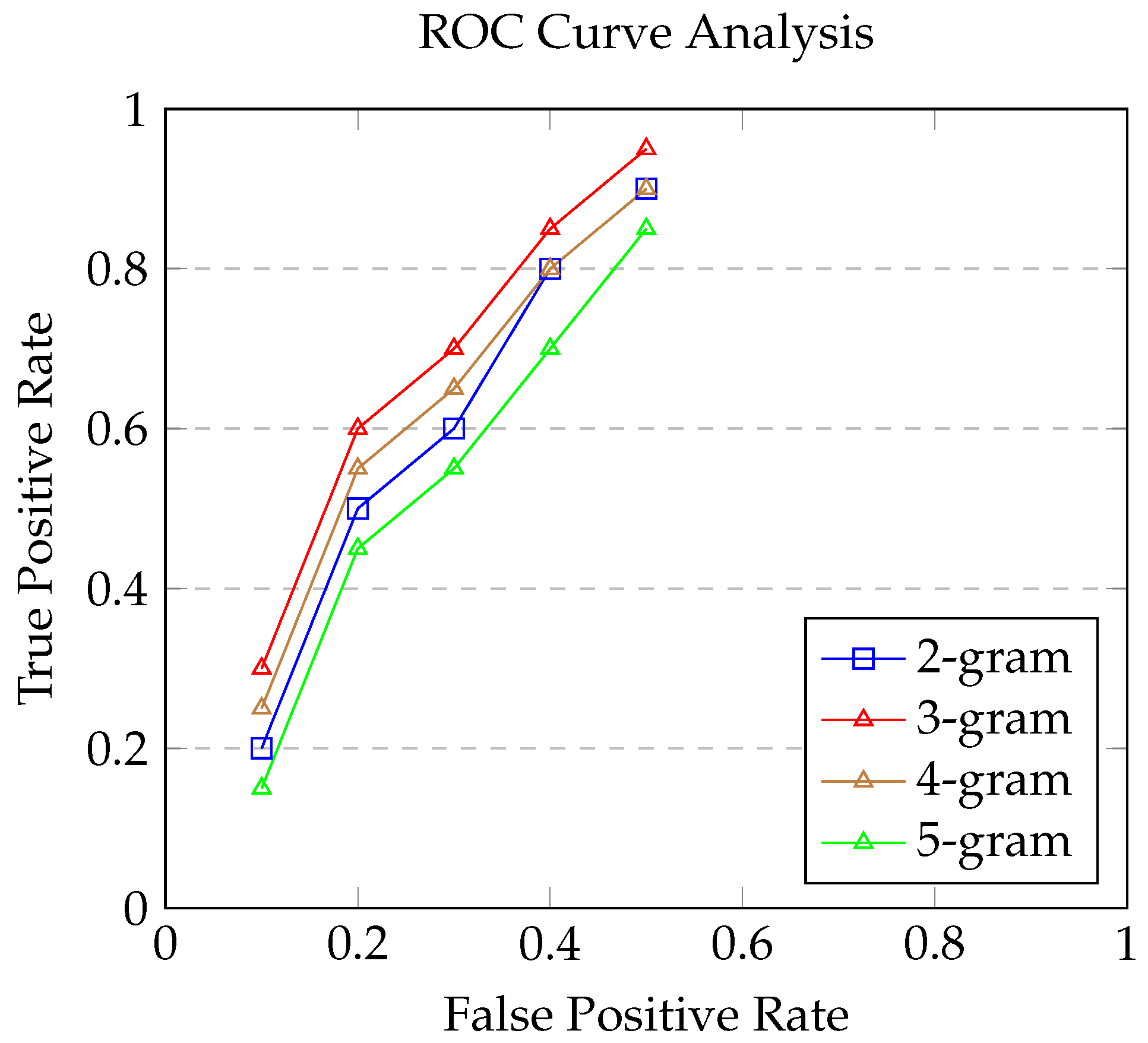

4.1. Classifier Performance Analysis Based on N-Gram Feature Lengths

| n-gram Length | SVM | kNN | DT | RF |
|---|---|---|---|---|
| 2-gram | 66.1% | 58.1% | 63.1% | 67.1% |
| 3-gram | 98.1% | 88.1% | 86.1% | 78.1% |
| 4-gram | 96.1% | 76.1% | 74.1% | 61.1% |
| 5-gram | 97.1% | 78.1% | 76.1% | 59.1% |
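To make the comparison in the table above easier to read, the following sketch transcribes the reported percentages and picks the best-scoring n-gram length for each classifier, along with the best overall combination. The table does not name the metric, so the values are treated simply as scores. The variable names are illustrative assumptions.

```python
# Percentages transcribed from the table above, keyed by classifier.
# The metric is not named in the table, so these are treated as generic scores.
scores = {
    "SVM": {"2-gram": 66.1, "3-gram": 98.1, "4-gram": 96.1, "5-gram": 97.1},
    "kNN": {"2-gram": 58.1, "3-gram": 88.1, "4-gram": 76.1, "5-gram": 78.1},
    "DT": {"2-gram": 63.1, "3-gram": 86.1, "4-gram": 74.1, "5-gram": 76.1},
    "RF": {"2-gram": 67.1, "3-gram": 78.1, "4-gram": 61.1, "5-gram": 59.1},
}

# Best n-gram length for each classifier (argmax over its row of scores).
best_per_classifier = {
    clf: max(by_n, key=by_n.get) for clf, by_n in scores.items()
}

# Best (classifier, n-gram length, score) triple across the whole table.
best_overall = max(
    ((clf, n, s) for clf, by_n in scores.items() for n, s in by_n.items()),
    key=lambda triple: triple[2],
)
```

On these figures every classifier scores highest with 3-grams, and the SVM with 3-grams has the highest value in the table.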

4.2. Enhanced Analysis of Classifier Efficacy Based on Variable N-Gram Feature Sizes

5. Discussions

5.1. Efficacy of N-Gram Based Feature Engineering

5.2. Comparative Analysis of Classifier Performance

5.3. Relevance of Enhanced Feature Selection

5.4. Limitations of the Study

6. Conclusions and Future Research Directions

Conflicts of Interest


| Component | Configuration |
|---|---|
| Operating System | Windows 11 23H2, 64-bit |
| Security Measures | Deactivated (anti-virus, firewall, etc.) |
| Third-Party Applications | Microsoft Office, Acrobat Reader, Google Chrome |
| Virtualization Software | VirtualBox with Host-Only Adapter |
| Network Settings | Configured for C&C communication |
| Python Agent | Installed for Cuckoo communication |
| User Files | Created in standard directories |
| Browsing History | Established for analysis |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).