1. Introduction

The quadratic unconstrained binary optimization is a classic NP-hard problem with an enormous number of real has been used as a unifying approach to many combinatorial optimization problems [

2,

3]. Due to its practicality, as well as theoretical interest, over the years researchers have proposed many theoretical results as well as simple and sophisticated approaches as solution procedures [

4,

5,

6,

7,

8,

9,

10,

11]. However, due to the complexity and practicality of QUBO it is still necessary to provide results suitable for solving large-scale problems. In recent years, researchers have developed theoretical results to reduce algorithmic implementation difficulty of QUBO, [

12,

13,

14,

15,

16,

17]. Our result in this paper also helps to reduce size and difficulty of algorithmic implementation of these problems.

The quadratic unconstrained binary optimization (QUBO) can be formulated as,

In (1),

is the

i,

j-th entry of a given

n by

n symmetric matrix

Q. QUBO is often referred to as the

model [

18]. Since

, and

Q may be written as an upper triangular matrix by doubling each entry of the upper triangle part of the matrix and letting

, then we can write (1) as (2).

Local search strategy (LSS) is one of the most fundamental algorithmic concepts that has been successfully applied to a wide range of hard combinatorial optimization problems. The basic ingredient of almost all sophisticated heuristics is some variation of LSS. One LSS that has been used by many researchers as a stand-alone or as a basic component of more sophisticated algorithms is the

r-flip (also known as

r-Opt) strategy [

12,

19,

20,

21,

22,

23]. In Figure A6, we present a comprehensive review of r-flip strategies applied to QUBO. Let

N={1,2,…,n}. Given a binary solution,

of

, the

r-flip search chooses a subset,

, with

, and builds a new solution,

, where

for all

. If

improves the objective function, it is called an

improving move (or improving subset

S). The

r-flip search starts with a solution

x, chooses an improving subset

S, and flips all elements in

S. The process continues until there is no subset

S with

that improves the objective function. The result is called

a locally optimal solution with respect to the r-flip

move (or

r-Opt).

Often in strategies where variable neighborhood searches, such as

fan-and-filter (F&F) [

24,

25],

variable neighborhood search (VNS) [

26,

27], and

multi-exchange neighborhood search (MENS)[

19,

20,

21,

22,

23] are used, the value of

r dynamically changes as the search progresses. Generally, there are two reasons for a dynamically changing search space strategy.

- a)

The execution of an implementation of an r-flip local search, for larger value of r, can be computationally expensive to execute. This is because the size of the search space is of order n chosen r, and for fixed values of n, it grows quickly in r for value of . Hence, smaller values of r, especially r equal to 1 and 2, have shown considerable success.

- b)

In practice, a r-flip local search process with a small value of r (e.g., r=1) can quickly reach local optimality. Thus, as a way to escape 1-flip local optimality, researchers have tried to dynamically change the value of r as the search progressed. This gives an opportunity to expand the search to a more diverse solution space.

A clever implementation of (a) and (b) in an algorithm can not only save computational time, since the smaller value of r is less computationally expensive, but it can also possibly reach better solutions because the larger values of r provide an opportunity to search more a diverse part of the solution space.

1.1. Previous Works

The development of closed form formulas for

r-flip moves is desirable for developing heuristics for solving very-large-scale problem instances because it can reduce computational time consumed by an implementation of an algorithm. Alidaee, Kochenberger [

12] introduced several theorems showing closed form

r-flip formulas for general Pseudo-Boolean Optimization. Authors in [

13,

14] recently provided closed form formulas for evaluating

r-flip rules in QUBO. In particular, Theorem 6 in [

12] is specific to the

problem. To explain the closed form formula for the

r-flip rule in

, we first introduce a few definitions. Refer to Figure A6 for an exhaustive literature of

r-flip rules applied to QUBO.

Given a solution

, the

derivative of

f(x) with respect to

is defined as:

Fact 1. Given a solution vector

and a solution

obtained by flipping the

ith element of

x, we have:

It is well known that any locally optimal solution to an instance of the QUBO problem with respect to a 1-flip search satisfies,

Furthermore, after changing

x to

x’, the update for

, j=1,…,n, can be calculated as follows:

Note that

may be written as

, which can simplify the implementation process. A simple 1

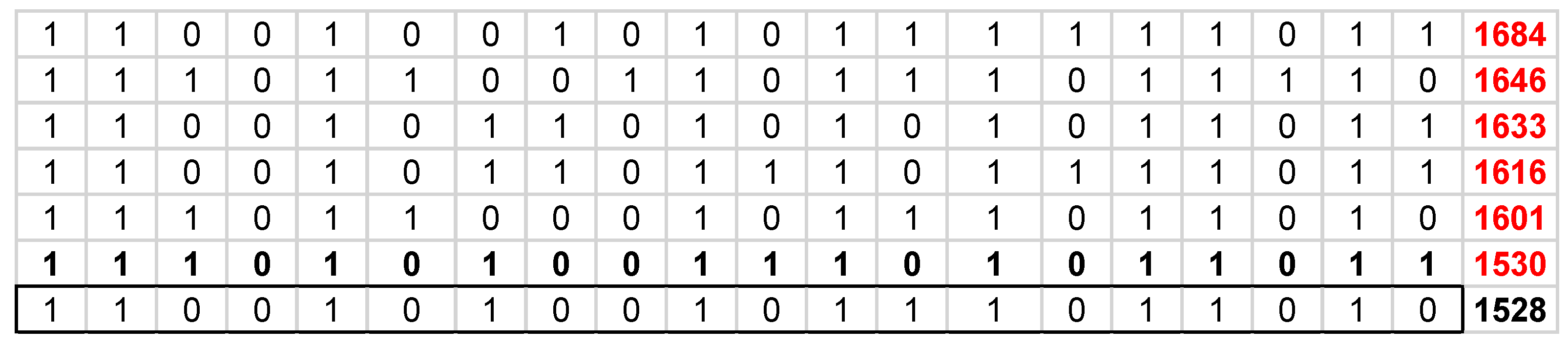

-flip search is provided in

Figure 1. Note that in line 3 we chose a sequence to implement Fact 1. Using such a strategy has experimentally proven to be very effective in several recent studies [

28].

Before we present the algorithms in this study for the r-flip strategy, the notations used are given as follows:

n The number of variables

x A starting feasible solution

x* The best solution found so far by the algorithm

K The largest value of k for r-flip, kr

π(i) The i-th element of x in the order π(1)⋯π(n)

S ={i:xi is tentatively chosen to receive a new value to produce a new solution xi'} restricting consideration to |S| = r

D The set of candidates for an improving move

Tabu_ten The maximum number of iterations for which a variable can remain Tabu

Tabu(i) A vector representing Tabu status of x

Derivative of f(x) with respect to

The vector of derivatives

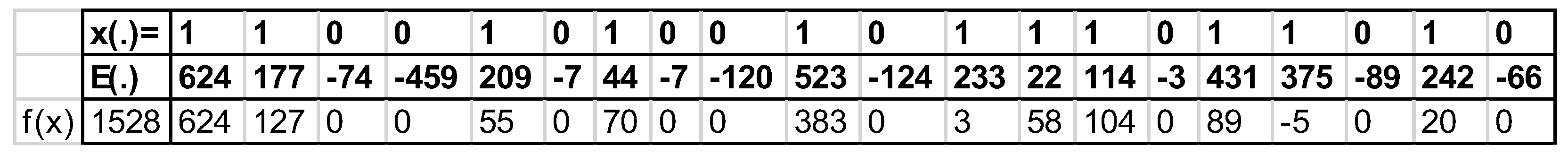

x(.) A vector representing the solution of x

E(.) A vector representing the value of derivative

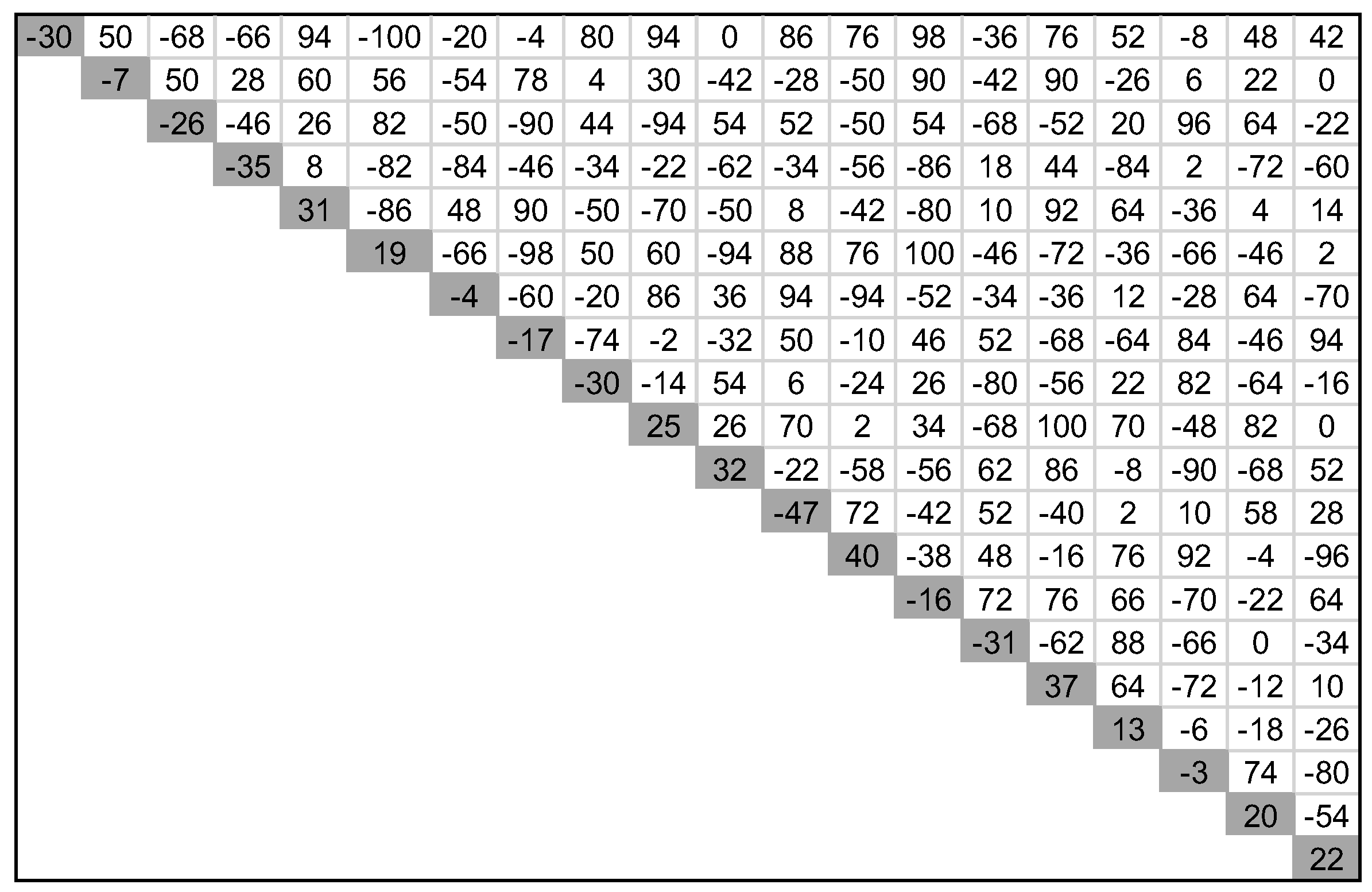

The result of Fact 1 has been extended to the r-flip search, given below.

(Theorem 6, Alidaee, Kochenberger [

12]) Let x be a given solution of QUBO and x’ obtained from x by r-flip move (for a chosen set S) where

, |S|=r, the change in the value of the objective function is:

Furthermore, after changing x to x’ the update for

,j=1,…,n, can be calculated as follows:

As explained in [

12], the evaluation of change in the objective function (7) can be done in

, i.e., evaluating

f(x’) from

f(x). The update in (8) requires

r calculations for each

j in

N\S, and

r-1 calculations for each

j in

S, Thus, overall, update for all

n variables can be performed in

O(nr).

Note that for any two elements

i,j=1,…,n, and

i<j, we can define:

Using (9), a useful way to express Equation (7) is Equation (10).

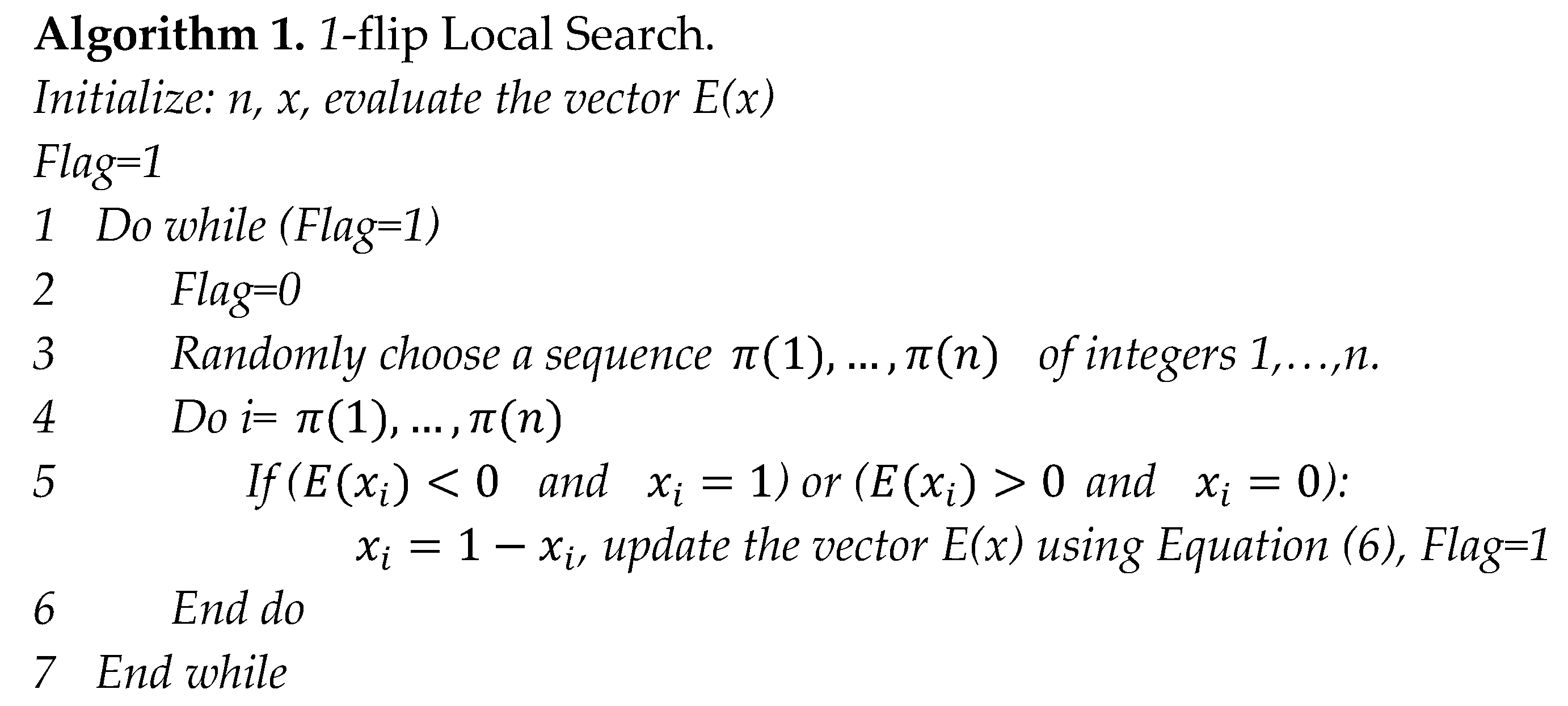

A simple exhaustive

r-flip search is provided in

Figure 2. The complexity of the problem indicates that the use of a larger value of

r in the

r-flip local search can make the implementation of the search process more time consuming. Meanwhile, the larger value of

r can provide an opportunity to search a more diverse area of search space and thus possibly reach better solutions. To overcome such conflicts, researchers often use

r=1 (and occasionally

r=2) as the basic components of their more complex algorithms, such as F&F, VNS, and MENS. Below, in Theorem 1 and Proposition 1, we prove that after reaching the locally optimal solution with respect to a 1-flip search, the implementation of an

r-flip search can significantly be reduced. Further, related results are also provided to allow the efficient implementation of an

r-flip search within an algorithm.

2. New Results on Closed-form Formulas

We first introduce some notations. For

m<n, define

(m, n) to be the number of combinations of

m elements out of

n, and let

, and

. Furthermore, Lemma 1 and Lemma 2, presented below, help to prove the results. Note that, Lemma 1 is direct deduction from previous results [

12].

Lemma 1. Given a locally optimal solution

with respect to a 1-flip search, we have:

Proof. Condition of local optimality in (5) indicates that:

Using this condition, we thus have:

Lemma 2. Let

be any solution of the problem; then, we have:

Proof. For each pair of elements, , the left-hand-side can be or. Since |S|=r, the summation on the left-hand-side is at most equal to M.

Theorem 1: Let

and M be as defined above and let

be a locally optimal solution of

with respect to a 1-flip search. A subset

, with |S|=r, is an improving r-flip move if and only if we have:

Proof: Using (7), a subset

of r elements is an improving r-flip move if and only if we have:

Since

x is a locally optimal solution with respect to a 1-flip search, it follows from Lemma 1 that inequality (14) is equivalent to (15); that completes the proof.

Proposition 1: Let and M be as defined above and let be any locally optimal solution of the problem with respect to a 1-flip search. If a subset , with |S|=r, is an improving r-flip move, then we must have: .

Proof: Since

x is a locally optimal solution with respect to a 1-flip search and

S is an improving

r-flip move, by Theorem 1, we have:

Using Lemma 2; we also have (16); which completes the proof.

The consequence of Theorem 1 is as follows. Given a locally optimal solution x with respect to a 1-flip search, if there is no subset of S with |S|=r that satisfies (13), then x is also locally optimal solution with respect to an r-flip search. Furthermore, if there is no subset S of any size that (13) is satisfied, then x is also locally optimal solution with respect to an r-flip search for all . Similar statements are also true regarding Proposition 1.

The result of Proposition1 is significant in the implementation of an r-flip search. It illustrates that, after having a 1-flip search implemented, if an r-flip search is next served as a locally optimal solution, only those elements with the sum of absolute value of derivatives less than M are eligible for consideration. Furthermore, when deciding about the elements of an r-flip search, we can easily check to see if any element by itself or with a combination of other elements is eligible to be a member of an improving r-flip move S. Example 1 below illustrates this situation.

Example 1. Consider an

problem with

n variables. Let

be a given locally optimal solution with respect to a 1-flip search. Consider

S={i,j,k,l} for a possible 4-flip move. In order to have

S for an improving move, all 15 inequalities, given below in (17), must be satisfied. Of course, if the last inequality in (17) is satisfied, all other inequalities are also satisfied. This means each subset of the

S is also an improving move. This is important in any dynamic neighborhood search strategies

k-flip moves for

in consideration.

Obviously, choosing the appropriate subset S to implement a move is critical. There are many ways to check for an improving subset S. Below, we explain two such strategies. In addition, a numerical example is given in the Appendix.

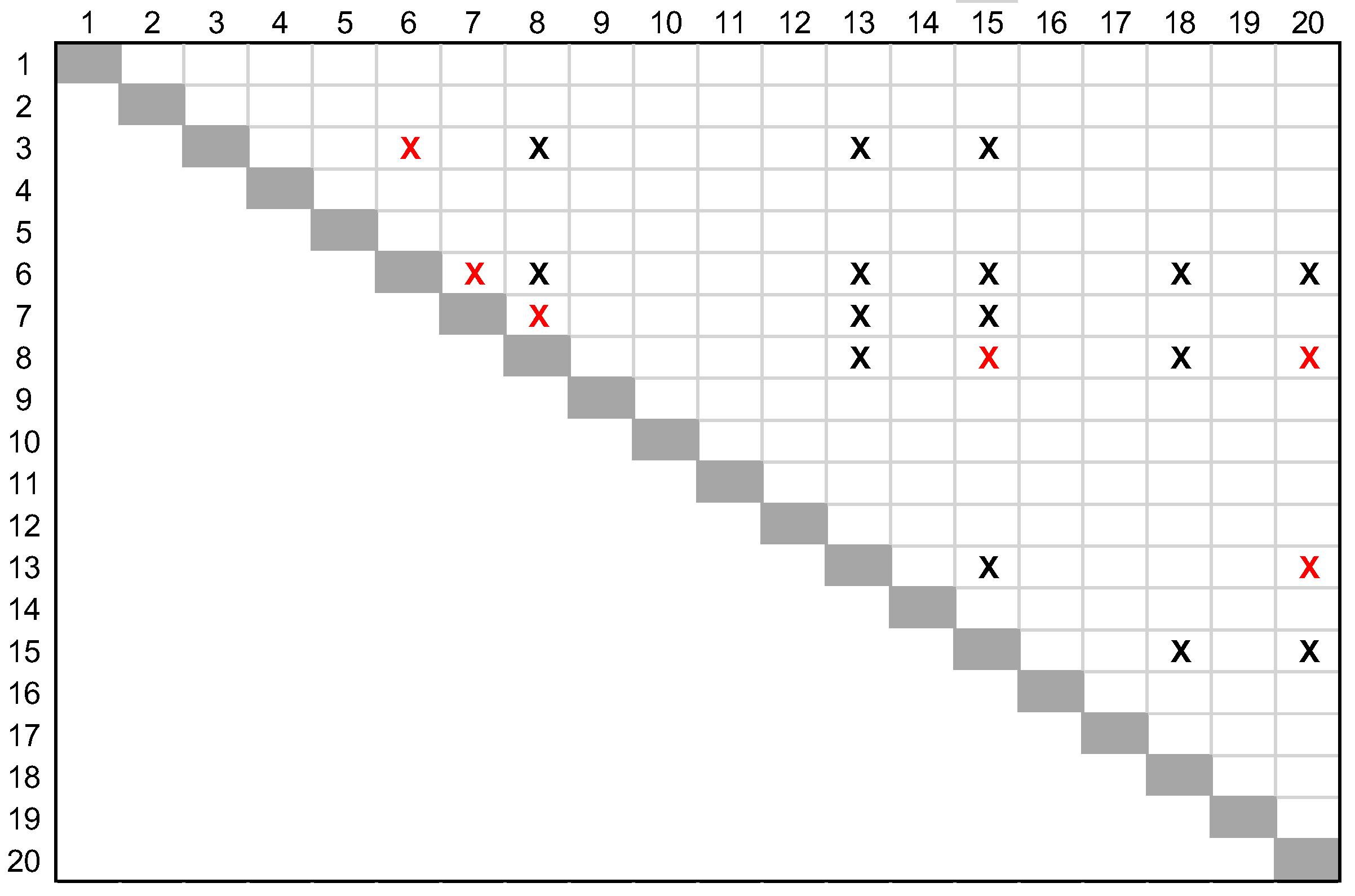

2.1. Strategy 1

We first define a set,

D(n), of candidate for improving moves. Given a locally optimal solution

x with respect to a 1-flip move, let the elements of

x be ordered in ascending absolute value of derivatives, as given in (18).

Here,

means the

i-th element of

x in the order

. Let

K be the largest value of

k=1,2,…,n where the inequality (19) is satisfied. The set

D(n) is now defined by (20).

Lemma 3. Any subset satisfies the necessary condition for an improving move.

Proof. It follows from Proposition 1.

There are some advantages to having elements of x in an ascending order, i.e., inequalities (18):

the smaller the value of is, the more likely that is involved in an improving k-flip move for k<=r (this might be due to the fact that, the right-hand-side value M in (19) for given r is constant. Thus, smaller values of on the left-hand-side might help to satisfy the inequality easier.)

because the elements of

D(n) are in an ascending order of absolute values of derivatives, a straightforward implementable series of alternatives to be considered for improving subsets,

S, may be the elements of the set given in (21). Note that there are a lot more subsets of

D(n) compared to the sets in (21) that are the candidates for consideration in possible

k-flip moves. Here we only gave one possible efficient implementable strategy.

It is important to note that, if Proposition 1 is used in the process of implementing an algorithm, given a locally optimal solution x with respect to a 1-flip search, after an r-flip implementation for a subset |S|=r with r>1, the locally optimal solution with respect to a 1-flip search for the new solution, x’, can be destroyed. Thus, if an r-flip search needed to be continued, a 1-flip search might be necessary on solution x’ before a new r-flip move can continue. However, there are many practical situations where this problem may be avoided for many subsets, especially when the problem is very-large-scale, i.e., the value of n is large, and/or Q is sparse. Proposition 2 is a weaker condition of Proposition 1 that can help to overcome up to some point in the aforementioned problem.

In the proof of Theorem 1 and Proposition 1, we only used a condition of optimality for a 1-flip search satisfied for the members of the subset S. We now define a condition as follows and call it ‘condition of optimality with respect to a 1-flip search for a set S’, or simply ‘condition of optimality for S’.

Given a solution

x, the

condition of optimality for any subset

is satisfied if and only if we have:

Of course, if we have N in (22) instead of S, x is a locally optimal solution as was defined in Fact 1.

For m<n, let (m, n) be the number of combinations of m elements out of n elements, and , and . With these definitions now we state Proposition 2.

Proposition 2 (weak necessary condition): Let , |S|=r, and , and as defined above. Given any solution of , and assume the condition of optimality is satisfied for a subset S. If S is an r-flip improving move, we must have .

Proof: Similar to proof of Proposition 1.

Notice that the values of and in Proposition 2 depend on S; however, these values can be updated efficiently as the search progresses. As explained above, in situations where the problem is very-large-scale and/or Q is sparce, for many variables, the values of derivatives are ‘unaffected’ by the change of values of elements in S. This means a large set of variables still satisfies the condition of optimality, and thus the search can continue without applying a 1-flip search each time before finding a new set S for r-flip implementation.

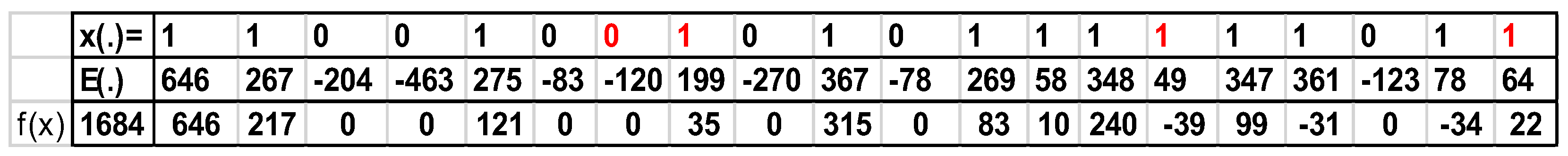

2.2. Strategy 2

Another efficient and easily implementable strategy is when instead of (19), we only use an individual element to create a set of candidates for applying an

r-flip search, set

D(1) as defined below. Corollary 1 is a special case of Proposition 1 that suffices such a strategy.

Corollary 1: Let and M be as defined before, given a solution of , if the 1-flip local search cannot further improve the value of f(x), and with where an r-flip move of elements of S improves f(x), then we must have .

To gain insight into the use of Corollary 1, we did some experimentation to find the size of the set

D(1) for different sizes of instances. The steps of the experiment to find the size of

D(1) are given below. Problems considered are taken from literature [

27], and used by many researchers. We only used the larger-scale problems with 2500 to 6000 variables, a total of 38 instances.

Find_D(): Procedure for finding the size of the set D(1):

Step 1. Randomly initialize a solution to the problem. For each value of r calculate M. Apply the algorithm in

Figure 1 and generate a locally optimal solution x with respect to a 1-flip search. However, in Step 5 of

Figure 1 only consider those derivatives with

Step 2. Find the number of elements in the set D(1) for x.

Step 3. Repeat Step 1 and 2, 200 times for each problem, and find the average number of elements in the set D(1) for the same size problem, density, and r value.

The results of the experiment are shown in

Table 1. From

Table 1, in general we can say that as the density of matrix

Q increases, the size of

D(1) decreases for all problem sizes and values of

r. This is, of course, due to the fact that the larger density of

Q makes the derivative of each element in an

x more related to other elements. As the size of a problem increases, the size of

D(1) also increases.

An interesting observation in our experiment was that, in most cases, the size of D(1) for better locally optimal solutions were smaller than those with the worse locally optimal solutions. This indicates that as the search reaches closer to the globally optimal solutions, the time for an r-flip search decreases when we take advantage of Corollary 1.

2.3. Implementation Details

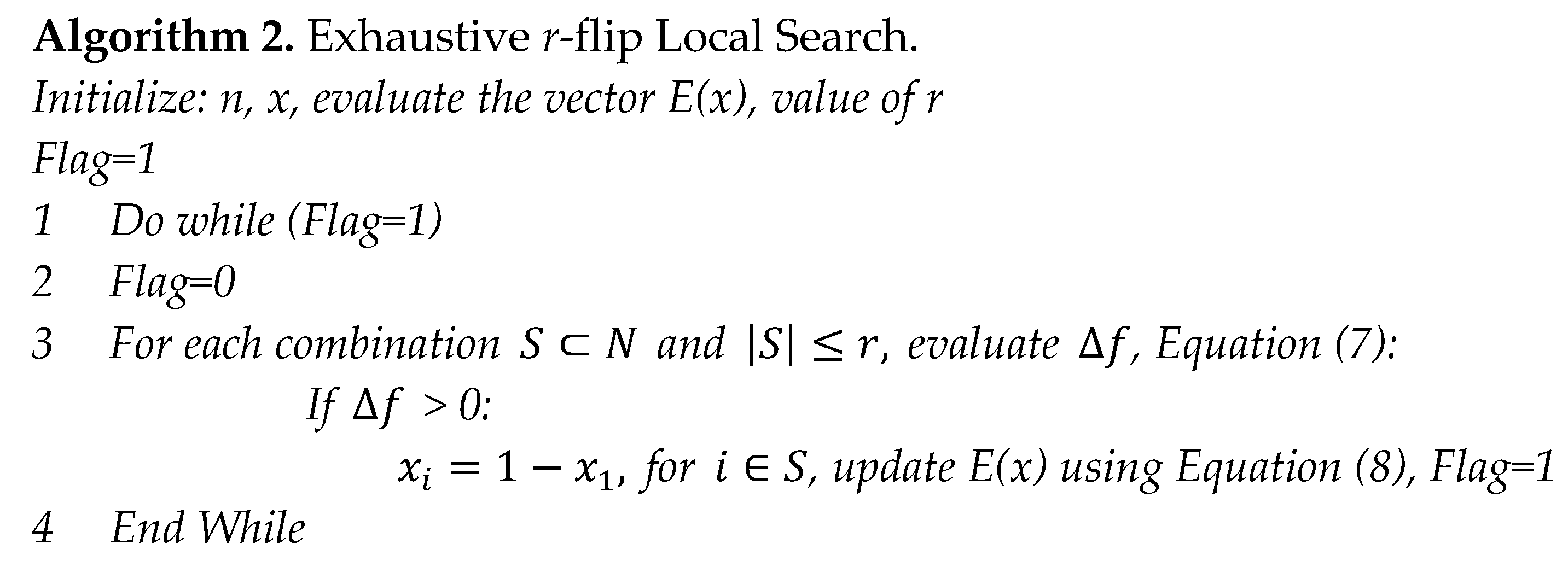

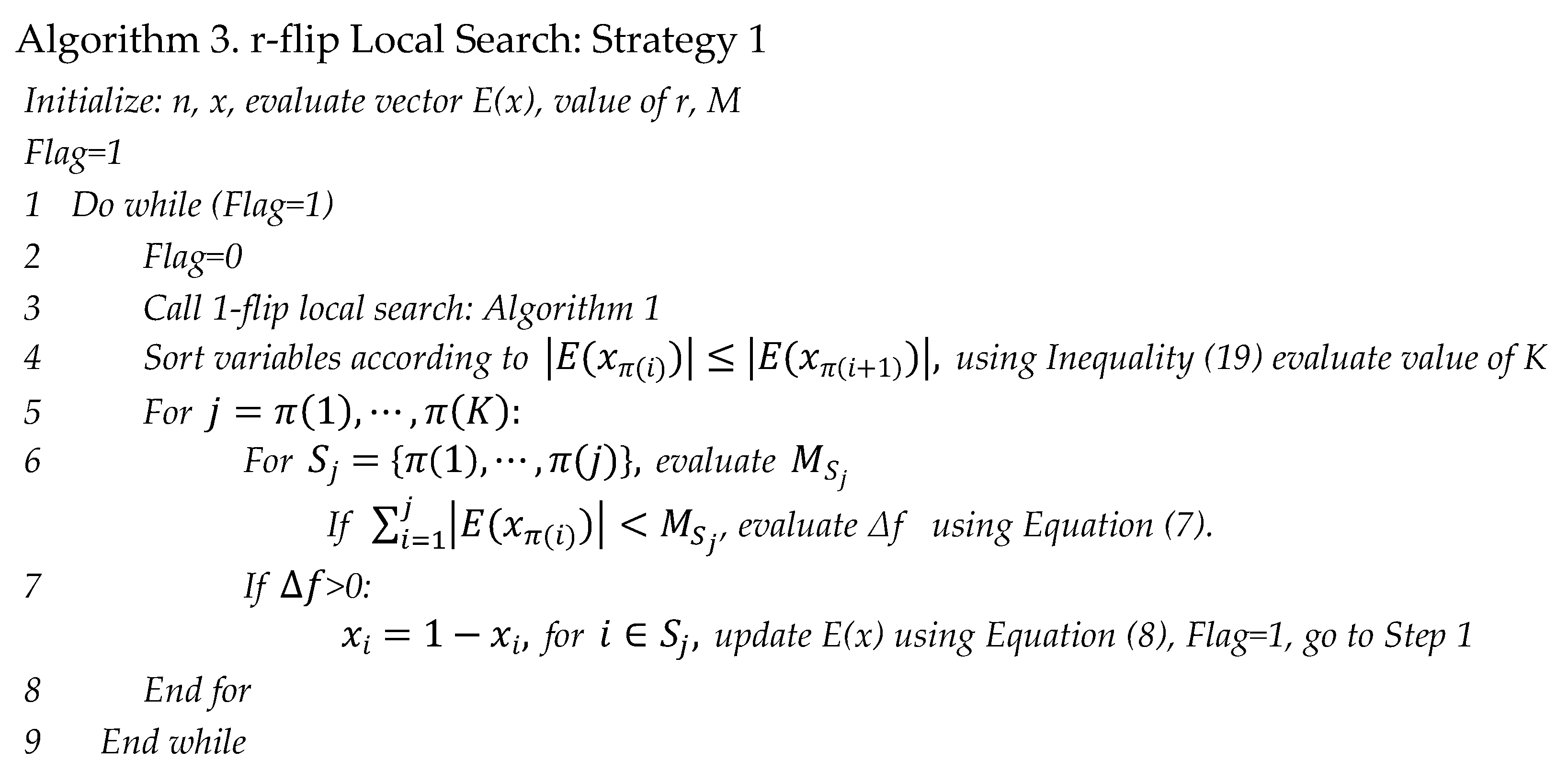

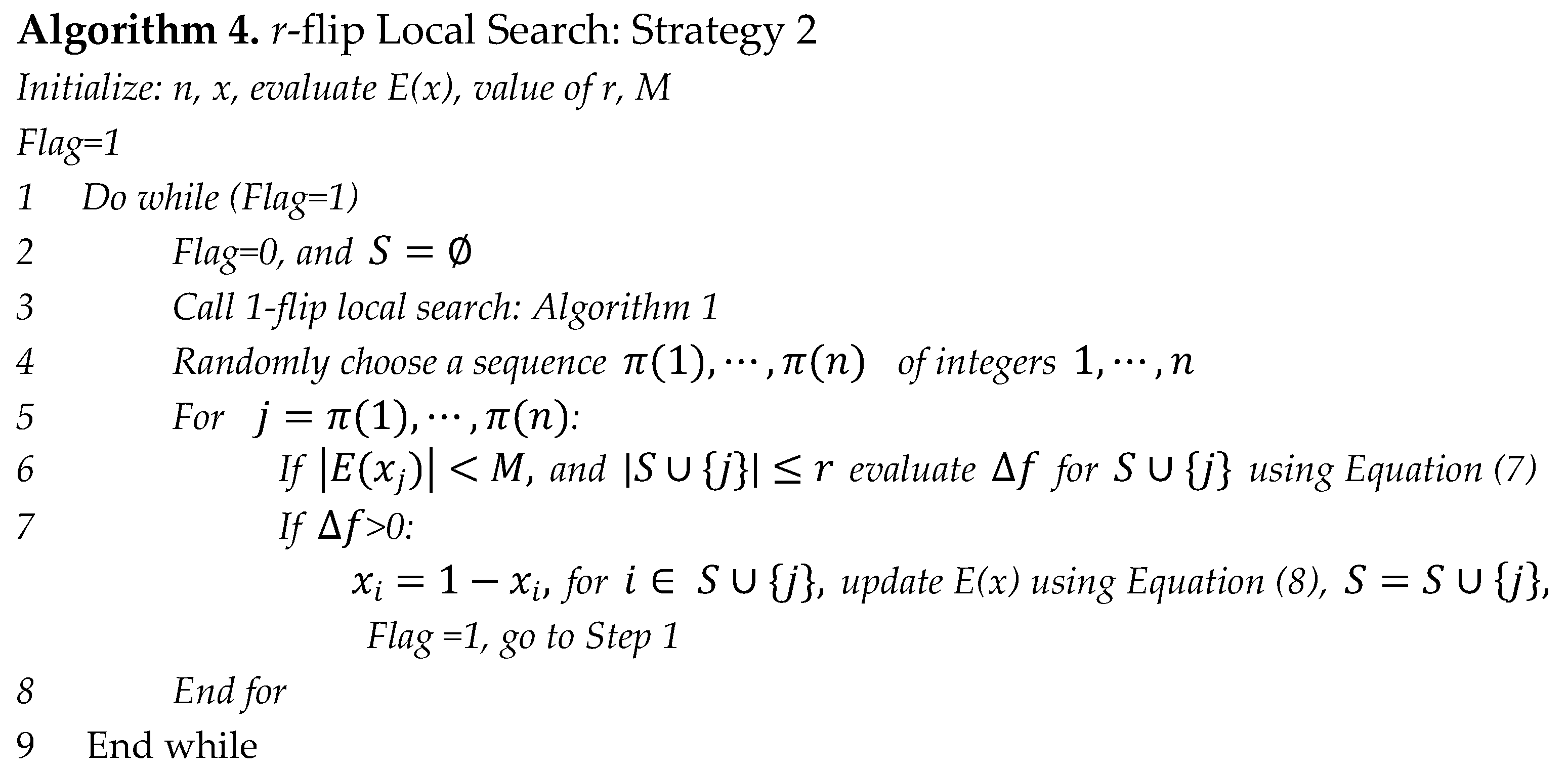

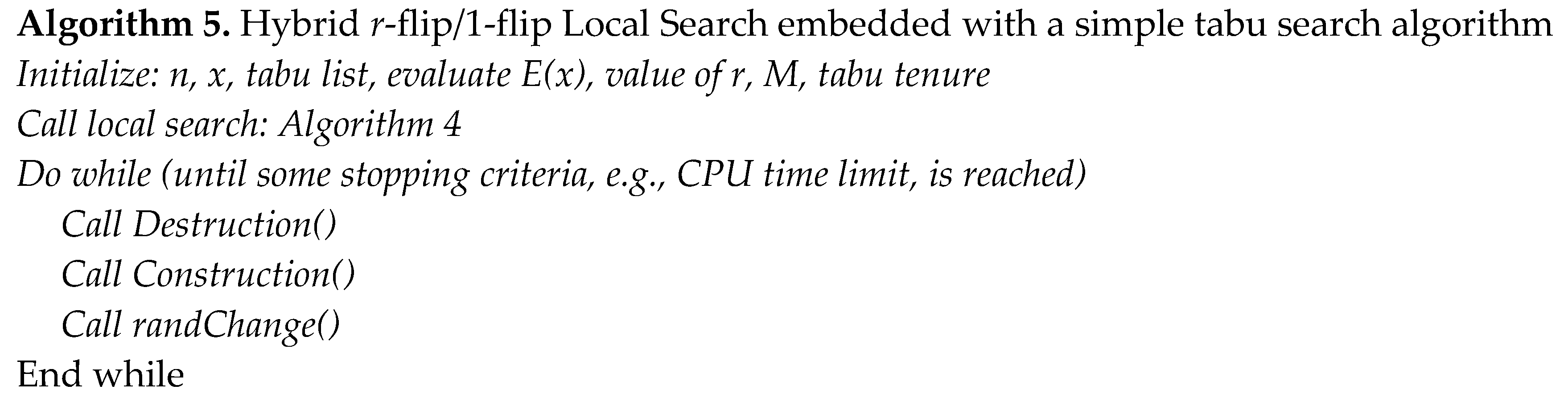

We first implement two strategies in

Section 2.1 and

Section 2.2 via Algorithm 3 for Strategy 1 (see

Figure 3), and Algorithm 4 for Strategy 2 (see

Figure 4), then propose Algorithm 5 for Strategy 2 embedded with a simple tabu search algorithm for the improvement in

Figure 5.

In the Destruction() procedure, there are three steps:

step 3a. Find the variable that is not on the tabu list and lead to the small change to the solution when the variable is flipped.

step 3b. Change its value, place it on the tabu list to update the tabu list, update E(x).

step 3c. Test if there is any variable that is not on the tabu list and can improve the solution. If not, go to Step 3a.

In the Construction() procedure, there are four steps:

step 4a. Test all the variables that are not on the tabu list. If a solution better than the current best solution is found, change its value, place it on the tabu list, update E(x) , update the tabu list, and go to Step 1.

step 4b. Find the index i corresponding to the greatest value of E(xi), change its value of xi, place it on the tabu list to update the tabu list, update E(x).

step 4c. If this is the fifteenth iteration in the Construction() procedure, go to Step 1.

step 4d. Test if there is any variable that is not on the tabu list and can improve the solution. If not, go to Step 3a. If yes, go to Step 4a.

The randChange() procedure is invoked occasionally and randomly to select an x for the Destruction() using a random number generator. There is less than a 2% probability of invoking after the Construction() procedure. To get the 2% probability, a random number generator is used to create an integer between 1 to 1000. If the value of integer is smaller than 20, the randChange() is invoked. The variable chosen in the randChange() will lead to the change of E(x) for Destruction().

Any local search algorithm, e.g., Algorithm 3 or 4, can be used in Step 1 of this simple tabu search heuristic. However, a limited preliminary implementation of Algorithm 3 and 4 within the Algorithm 5 suggested that due to its simplicity of implementation and computational saving time, the Algorithm 4 with slight modification was quite effective, thus we used it in Step 1 of the Algorithm 5. The slight modification was as follows. If the solution found by a 1-flip is worse than the current best-found solution, quit the local search and go to Step 2.

In order to determine whether the hybrid r-flip/1-flip local search algorithms with two strategies (Algorithms 3 and 4) do better than the hybrid r-flip/1-flip local search embedded with a simple tabu search implementation, we compared Algorithms 3 and 4 to Algorithm 5.

The goal of the new strategies is to reach local optimality on large scale instances with less computing time. We report the comparison of three algorithms of a 2-flip on very-large-scale QUBO instances in the next section.

3. Computational results

In this study, we perform substantial computational experiments to evaluate the proposed strategies for problem size, density, and r value. We compare the performance of Algorithms 3, 4, and 5 for r=2 on very-large-scale QUBO instances. We also compare the best algorithm among Algorithms 3, 4, and 5 to one of the best algorithms for , i.e., Palubeckis’s multiple start tabu search. We code the algorithms in C++ programming language.

In [

29], there are five multiple start tabu search algorithms, and MST2 algorithm had the best results reported by the author. We choose MST2 algorithm with the default values for the parameters recommended by the author [

29]. In MST2 algorithm, the number of iterations as the stopping criteria for the first tabu search start subroutine is 25000 * size of problem, then MST2 algorithm reduces the number of iterations to 10000*size of problem as the stopping criteria for the subsequent tabu search starts. Within the tabu search subroutine, if an improved solution is found, then MST2 algorithm invokes a local search immediately. The CPU time limit in MST2 algorithm is checked at the end of the tabu search start subroutine. Thus, the computing time might exceed the CPU time limit for large instances when we choose short CPU time limits.

All algorithms in this study are compiled by GNU C++ compiler v4.8.5 and run on a single core of Intel Xeon Quad-core E5420 Harpertown processors, which have a 2.5 GHz CPU with 8 GB memory. All computing jobs were submitted through the Open PBS Job Management System to ensure both methods using the same CPU for memory usage and CPU time limits on the same instance.

Preliminary results indicated that Algorithms 3, 4, and 5 perform well on instances with the size less than 3,000 and low density. All algorithms found the best-known solution with the CPU time limit of 10 seconds. Thus, we only compare the results of large instances with high density and size from 3,000 to 8,000 by MST2 algorithm and the best algorithm among Algorithms 3, 4, and 5. These benchmark instances with the size from 3,000 to 8,000 have been reported by other researchers [

6,

30]. In addition, we generate some very-large-scale QUBO instances with high density and size of 30,000 using the same parameters from the benchmark instances. We use a CPU time limit of 600 seconds and

r=2 for Algorithms 3, 4, and 5 on the very-large-scale instances in

Table 2. We adopted the following notation for computational results:

OFV The value of the objective function for the best solution found by each algorithm.

BFS Best found solution among algorithms within the CPU time limit.

TB[s] Time to reach the best solution in seconds of each algorithm.

AT[s] Average computing time out of 10 runs to reach OFV.

DT %Deviation of computing time out of 10 runs to reach OFV.

Table 2 shows the results of comparison for Algorithms 3, 4, and 5 on very-large-scale instances out of 10 runs. Algorithm 5 produces a better solution than Algorithms 3 and 4 with

r=2; thus, we use Algorithm 5 with

r=1 and

r=2 to compare to MST2 algorithm. We impose a CPU time limit of 60 seconds and 600 seconds per run with 10 runs per instance on Algorithm 5 and MST2 algorithm. We choose tabu tenure value of 100 for 1-flip and 2-flip. The instance data and solutions files are available on this data repository

1.

In our implementation, we choose the CPU time limit as the stopping criteria and check the CPU time limit before invoking the tabu search in Algorithm 5. Because MST2 algorithm and Algorithm 5 are not single point-based search methods, the choice of the CPU time limit as the stopping criteria seems to be a fair performance comparison method between algorithms.

Table 3 describes the size and density of each instance and the number of times out of 10 runs to reach the OFV as well as solution deviation within the CPU time limit for MST2 algorithm and Algorithm 5 with

r=1 and

r=2. MST2 algorithm produces a stable performance and reaches the same OFV frequently out of 10 runs. Algorithm 5 starts from a random initial solution and can search a more diverse solution space in a short CPU time limit. When the CPU time limit is changed to 600 seconds, MST2 algorithm and Algorithm 5 produce a better solution quality in terms of relative standard deviation[

31]. The relative standard deviation (RSD) in

Table 3 inside the parenthesis is measured by:

,

and

, where

f(x) is the OFV of each run and

is the mean value of OFV out of n=10 runs. For some instances, the relative standard deviation (RSD) is less than 5.0

E-4 even though not all runs found the same OFV. We use 0.000 as the value of RSD when the value is rounded up to three decimal points.

Table 4 reports the computational results of a CPU time limit of 60 seconds, and

Table 5 reports the computational results of a CPU time limit of 600 seconds. In

Table 4,

MST2 algorithm matches 5 out of 27 best solutions within the CPU time limit. The 1-flip strategy in

Algorithm 5 matches 26 out of 27 best solutions while the 2-flip strategy in

Algorithm 5 matches 18 out of 27 best solutions. For

MST2 algorithm, the computing time to find the initial solution exceeded the CPU time limit of 60 seconds for two large instances.

When the CPU time limit is increased to 600 seconds, MST2 algorithm matches 10 out of 27 best solutions. The 1-flip strategy matches 25 out of 27 best solutions, and the 2-flip strategy matches 23 out of 27 best solutions. The 1-flip and 2-flip strategies in Algorithm 5 perform well on the high-density large instances. There is no clear pattern that the 2-flip strategy uses more time than a 1-flip strategy on finding the same OFV. The 1-flip and 2-flip strategies in Algorithm 5 choose the initial solution randomly and independently. The 1-flip strategy has a better performance when the CPU time limits are 60 and 600 seconds.

Table 6 and

Table 7 present the time deviation of each algorithm on reaching the OFV for each instance.

MST2 algorithm has less variation in computing time when it finds the same OFV while the

r-flip strategy in

Algorithm 5 has a wider range of computing time. If the algorithm only finds the OFV once out of 10 runs, the time deviation will be zero.

The r-flip strategy can be embedded in other local search heuristics as an improvement procedure. The clever implementation of the r-flip strategy can reduce the computing time as well as improve the solution quality. We reported the time and solutions out of 10 runs for each instance. The time deviation and solution deviation of 10 runs with the short CPU time limits are computed due to the computing resources available to this study.