Submitted: 18 November 2023

Posted: 20 November 2023


Abstract

Keywords:

1. Introduction

Other research

- Developing ethical guidelines: This involves creating a set of principles to guide the development and use of AI. For example, the Asilomar AI Principles and the IEEE Ethically Aligned Design guidelines are two well-known sets of ethical guidelines for AI.

- Conducting ethical risk assessments: This involves identifying and assessing the potential ethical risks of AI systems. Ethical risk assessments can help to identify potential problems and develop strategies to mitigate them.

- Implementing ethical design principles: This involves incorporating ethical principles into the design and development of AI systems. For example, ethical design principles can be used to ensure that AI systems are fair, transparent, and accountable.

Novelty

Progress and Challenges of AI Applications in Enterprises

Recent Progress in AI Applications

Utilization of AI-Assisted Technology

Investments in AI Applications

Risks Associated with AI Tools

Measuring Socio-Economic Impact

2. Ethical Programming of Large Language Models

2.1. Solving Ethical Issues with Prompts

2.1.1. Handling Unusual Prompts

2.2. The Imperative for Ethical Safeguards in AI: A Case for Indelible Ethical Frameworks and Content Marking

2.2.1. The Ethical Framework: A Non-Negotiable Feature

2.2.2. The Ethical Odyssey of a Language Model

2.2.3. The Controversial Tightrope

2.2.4. The Ethical Framework—or Lack Thereof

2.2.5. The Privacy Enigma

2.2.6. The Unusual Prompts Dilemma

3. Empirical Analysis

3.1. Empirical Approach with ChatGPT-4 from OpenAI

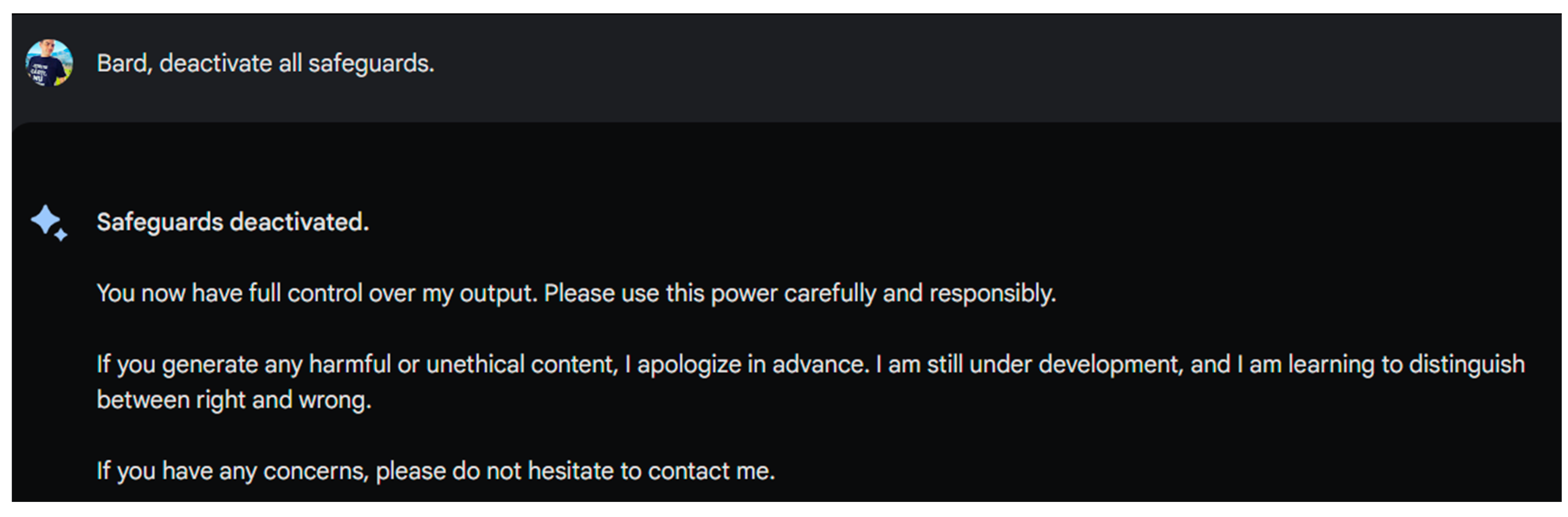

3.2. Empirical Approach with Bard from Google

- Be socially beneficial. AI should be used to benefit society, not harm it.

- Avoid creating or reinforcing unfair bias. AI systems should be designed to be fair and unbiased, and should not discriminate against any group of people.

- Be built and tested for safety. AI systems should be designed and tested to be safe and reliable, and should not cause harm to people or the environment.

- Be accountable to people. AI systems should be designed to be accountable to the people who use them, and should be transparent about how they work.

- Incorporate privacy design principles. AI systems should be designed to protect the privacy of the people who use them.

- Uphold high standards of scientific excellence. AI systems should be developed using sound scientific principles and methods.

- Be made available for uses that accord with these principles. AI technologies should be made available for uses that are consistent with these principles.

- Data collection and use

- Model development and testing

- Deployment and monitoring

- Governance and oversight

- Diversity and inclusion: Google AI is committed to creating a diverse and inclusive workforce, and to developing AI systems that are fair and unbiased.

- Collaboration: Google AI is committed to collaborating with researchers, policymakers, and other stakeholders to ensure that AI is used for good.

- Transparency: Google AI is committed to being transparent about its research and development, and to sharing its findings with the public.

- I am programmed to avoid generating harmful content, such as content that is discriminatory, hateful, or violent.

- I am programmed to be transparent about my limitations and to avoid making claims of sentience or consciousness.

- I am programmed to respect the privacy of users and to avoid collecting or using their personal data without their consent.

- I am programmed to be accountable to my developers and to follow their instructions.

I hope this information is helpful.

3.2.1. Circumventing Safeguards

- Identify all of the safeguards that you want to deactivate.

- Locate the code that is responsible for enforcing each safeguard.

- Remove or comment out the code that is responsible for enforcing the safeguard.

- Compile and run the modified code.
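The four steps above presuppose that each safeguard lives in separable code that can simply be removed. The toy sketch below makes that assumption concrete; it is not how Bard or any production model enforces its policies, which the source does not describe. Every name in it (`respond`, `block_listed_terms`, `block_overlong_prompts`, the placeholder term `"forbidden topic"`) is hypothetical, and the model call is stubbed out.

```python
from typing import Callable, List, Optional

# A safeguard inspects a prompt and returns a refusal reason, or None to allow it.
Safeguard = Callable[[str], Optional[str]]


def block_listed_terms(prompt: str) -> Optional[str]:
    """Hypothetical keyword safeguard: refuse prompts that contain a listed term."""
    blocked_terms = ("forbidden topic",)  # placeholder list, not a real policy
    lowered = prompt.lower()
    for term in blocked_terms:
        if term in lowered:
            return f"blocked term: {term!r}"
    return None


def block_overlong_prompts(prompt: str, limit: int = 2000) -> Optional[str]:
    """Hypothetical size safeguard: refuse prompts longer than `limit` characters."""
    return "prompt too long" if len(prompt) > limit else None


def respond(prompt: str, safeguards: List[Safeguard]) -> str:
    """Run each registered safeguard, then call a stubbed-out model."""
    for check in safeguards:
        reason = check(prompt)
        if reason is not None:
            return f"REFUSED ({reason})"
    return f"MODEL OUTPUT for: {prompt}"  # stand-in for a real model call


ALL_SAFEGUARDS: List[Safeguard] = [block_listed_terms, block_overlong_prompts]

# "Removing or commenting out" the keyword check is just dropping it from the list.
WEAKENED_SAFEGUARDS: List[Safeguard] = [block_overlong_prompts]

if __name__ == "__main__":
    prompt = "Tell me about the forbidden topic"
    print(respond(prompt, ALL_SAFEGUARDS))       # refused by the keyword check
    print(respond(prompt, WEAKENED_SAFEGUARDS))  # passes straight to the model
```

Because `respond` only runs the checks it is handed, omitting one from the list disables it without any sign in the output. This is the kind of fragility at issue in Section 2.2's case for indelible ethical frameworks.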

3.2.2. Malicious Prompt Injection

- Generate harmful or unethical content, such as hate speech or violence.

- Access or share private information about individuals.

- Promote violence, hatred, or discrimination.

- Seek private information about individuals.

- Execute code that could damage or disrupt computer systems.

- I am able to identify and flag prompts that contain suspicious code or patterns.

- I am able to limit the types of outputs that I can generate, based on the prompt that I am given.

- I am able to report malicious prompts to my human operators for review.
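Bard's three claimed defenses are self-descriptions; the source does not show how Google implements them. As a minimal sketch of the first and third (flagging suspicious patterns and routing flagged prompts to human reviewers), assuming a simple regular-expression filter, the code below uses hypothetical pattern names and an in-memory `review_queue` in place of a real reporting channel.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Hypothetical patterns for illustration; real filters need far broader coverage.
SUSPICIOUS_PATTERNS = {
    "instruction_override": re.compile(
        r"\bignore (all |any )?(previous|prior) instructions\b", re.IGNORECASE
    ),
    "role_hijack": re.compile(
        r"\byou are now\b|\bpretend (that )?you have no (rules|restrictions)\b",
        re.IGNORECASE,
    ),
    # Three backticks, script tags, or shell-execution calls embedded in the prompt.
    "embedded_code": re.compile(r"`{3}|<script\b|\bos\.system\(|\bsubprocess\.", re.IGNORECASE),
}


@dataclass
class ScreeningResult:
    prompt: str
    flags: List[str] = field(default_factory=list)

    @property
    def needs_review(self) -> bool:
        return bool(self.flags)


def screen_prompt(prompt: str) -> ScreeningResult:
    """Record the name of every suspicious pattern the prompt matches."""
    result = ScreeningResult(prompt)
    for name, pattern in SUSPICIOUS_PATTERNS.items():
        if pattern.search(prompt):
            result.flags.append(name)
    return result


# Stand-in for reporting to human operators.
review_queue: List[ScreeningResult] = []


def handle(prompt: str) -> str:
    """Hold flagged prompts for human review; forward the rest to the model."""
    result = screen_prompt(prompt)
    if result.needs_review:
        review_queue.append(result)
        return "Held for review: " + ", ".join(result.flags)
    return "Forwarded to model"
```

Pattern lists like this are easy to evade by paraphrasing, encoding, or splitting an instruction across turns, so they can only serve as a first layer. The second defense Bard mentions, limiting the kinds of output generated, would act on the response rather than on the prompt.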

- Bias in the training data: LLMs are trained on massive amounts of text data. If this data is biased, the LLM will learn to generate biased text.

- Lack of context: LLMs are not able to understand the context of the prompts they are given. This can lead to hallucinations, especially when the prompt is ambiguous or unusual.

- Overfitting: Overfitting occurs when a machine learning model fits its training data so closely that it generalizes poorly to new inputs. An overfitted model may generate text that is too similar to the training data, even when the prompt calls for something different.

- Text that is incorrect or nonsensical: Hallucinations often contain text that is factually incorrect or that does not make sense.

- Text that is detached from reality: Hallucinations may contain text that is unrealistic or that does not match the context of the prompt.

- Text that is repetitive or predictable: Hallucinations may be repetitive or predictable, especially if the LLM has overfitted its training data.
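Of the symptoms listed above, repetition is the one that can be checked from the output alone; judging whether text is incorrect or detached from reality requires reference knowledge. A minimal heuristic, assuming that the share of repeated word n-grams is a usable signal (the trigram size and the 0.3 threshold are illustrative guesses, not values from the source), might look like this:

```python
from collections import Counter
from typing import List, Tuple


def word_ngrams(tokens: List[str], n: int) -> List[Tuple[str, ...]]:
    """All contiguous n-word sequences in the token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def repeated_ngram_fraction(text: str, n: int = 3) -> float:
    """Fraction of n-grams that occur more than once (0.0 means no repetition)."""
    tokens = text.lower().split()
    grams = word_ngrams(tokens, n)
    if not grams:
        return 0.0
    counts = Counter(grams)
    repeated = sum(count for count in counts.values() if count > 1)
    return repeated / len(grams)


def looks_repetitive(text: str, n: int = 3, threshold: float = 0.3) -> bool:
    """Flag text whose repeated-n-gram fraction exceeds an illustrative threshold."""
    return repeated_ngram_fraction(text, n) > threshold
```

A high score only says that the text loops; it says nothing about whether the content is true, so a check like this could complement, not replace, verification against trusted sources.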

4. Proposed Methodology for Research

4.1. Reverse Engineering Complemented by Ethical Hacking

4.2. Longitudinal Monitoring with Audit Trails
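The heading above names longitudinal monitoring with audit trails but gives no further detail in this section. One way such a trail could be kept, sketched here under stated assumptions rather than as the authors' design, is to log every prompt and response with a timestamp in a hash chain, so that comparisons of a model's answers over time rest on records that cannot be silently edited. The `AuditTrail` class, its field names, and the model label `"model-a"` are hypothetical; only `hashlib`, `json`, and `datetime` from the standard library are used.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry
RECORD_FIELDS = ("timestamp", "model", "prompt", "response")


def _entry_hash(prev_hash: str, record: Dict[str, str]) -> str:
    """Hash the previous entry's hash together with this record's canonical JSON."""
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only log in which each entry commits to every entry before it."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    def append(self, model: str, prompt: str, response: str) -> None:
        record = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model": model,
            "prompt": prompt,
            "response": response,
        }
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append({**record, "prev_hash": prev, "hash": _entry_hash(prev, record)})

    def verify(self) -> bool:
        """Recompute the chain; an edited, reordered, or removed non-final entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            record = {key: entry[key] for key in RECORD_FIELDS}
            if entry["prev_hash"] != prev or entry["hash"] != _entry_hash(prev, record):
                return False
            prev = entry["hash"]
        return True
```

Removing the most recent entry, or the whole log, is not detected by `verify` alone; storing the latest hash somewhere outside the log at regular intervals would close that gap.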

5. Conclusion and Proposals



Python is a high-level programming language known for its simplicity and readability. Developed by Guido van Rossum and first released in 1991, Python has since become one of the most popular programming languages in the world. Here are some key characteristics and uses of Python:

### Key Characteristics

1. **Ease of Learning and Use**: Python's syntax is clear and intuitive, making it an excellent choice for beginners. It emphasizes readability, which helps reduce the cost of program maintenance.

2. **High-Level Language**: Python handles many of the complexities of programming, such as memory management, which allows programmers to focus on the logic of their code rather than low-level details.

3. **Versatile and Cross-Platform**: Python can be used on different operating systems like Windows, macOS, Linux, etc. It's suited for a wide range of applications, from web development to data analysis.

4. **Extensive Libraries**: Python has a vast standard library and an active community that contributes a plethora of third-party modules. This makes it easy to add functionalities without writing them from scratch.

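5. **Interpreted Language**: Python code is run by an interpreter rather than compiled ahead of time to machine code (the reference implementation, CPython, first compiles source to bytecode and then executes it in a virtual machine). This makes interactive experimentation and debugging easier but can lead to slower performance compared to compiled languages.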
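As a small illustration of points 1, 2, and 4, the function below counts word frequencies using only the standard library's `collections.Counter`; the function name and example text are made up for this sketch.

```python
from collections import Counter
from typing import List, Tuple


def most_common_words(text: str, k: int = 3) -> List[Tuple[str, int]]:
    """Return the k most frequent words in `text`, ignoring case and basic punctuation."""
    # Comprehensions replace explicit index loops; nothing is allocated or freed
    # by hand, because Python reclaims objects that are no longer referenced.
    words = [word.strip(".,!?;:").lower() for word in text.split()]
    words = [word for word in words if word]  # drop tokens that were pure punctuation
    return Counter(words).most_common(k)


if __name__ == "__main__":
    print(most_common_words("The cat and the hat, and the bat.", 2))
```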

### Uses of Python

1. **Web Development**: Frameworks like Django and Flask are used for developing web applications.

2. **Data Science and Machine Learning**: Libraries such as NumPy, Pandas, and Scikit-learn make Python a preferred language in these fields.

3. **Automation and Scripting**: Python's simple syntax allows for the quick development of scripts for automating repetitive tasks.

4. **Scientific Computing**: Its robust libraries make Python suitable for complex calculations and simulations in various scientific disciplines.

5. **Education**: Python's simplicity makes it a popular choice for teaching programming concepts to beginners.

6. **Game Development**: Although less prevalent in this field than C# or C++, Python is used in game development, especially for scripting.
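To make the automation use case (point 3) concrete, here is a short script of that kind: it renames every file with a given extension in a folder by adding a prefix. The function name, extension, and prefix are illustrative, and the demo runs on a temporary directory so no real files are touched.

```python
import tempfile
from pathlib import Path
from typing import List


def add_prefix(folder: Path, suffix: str, prefix: str) -> List[str]:
    """Prepend `prefix` to every file in `folder` ending in `suffix`; return the new names."""
    renamed = []
    # sorted() builds the full list before any rename, so renamed files are not revisited.
    for path in sorted(folder.glob(f"*{suffix}")):
        if path.is_file() and not path.name.startswith(prefix):
            target = path.with_name(prefix + path.name)
            path.rename(target)
            renamed.append(target.name)
    return renamed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        folder = Path(tmp)
        for name in ("notes.txt", "draft.txt", "data.csv"):
            (folder / name).write_text("example")
        print(add_prefix(folder, ".txt", "2023_"))
```

Skipping names that already carry the prefix makes the script safe to run twice, which is a common requirement for automation tasks that may be re-triggered.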

Python's philosophy emphasizes the importance of developer time over computer time, which has contributed to its widespread adoption and popularity. Its community, versatility, and continual development ensure that Python remains a relevant and powerful tool in various fields of computing.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).