1. Introduction

With the widespread adoption of information technology, the use of digital devices such as laptops, smartphones, and tablets has grown significantly. Liquid Crystal Display (LCD) monitors, known for their low power consumption and lack of radiation pollution, are used extensively in these devices. However, the LCD industry still relies predominantly on manual inspection to detect defects in finished products, which wastes time, misses defects, and reduces production efficiency [1,2].

This research focuses on the automatic visual inspection of low-contrast surface micro-defects of LCDs [3,4,5].

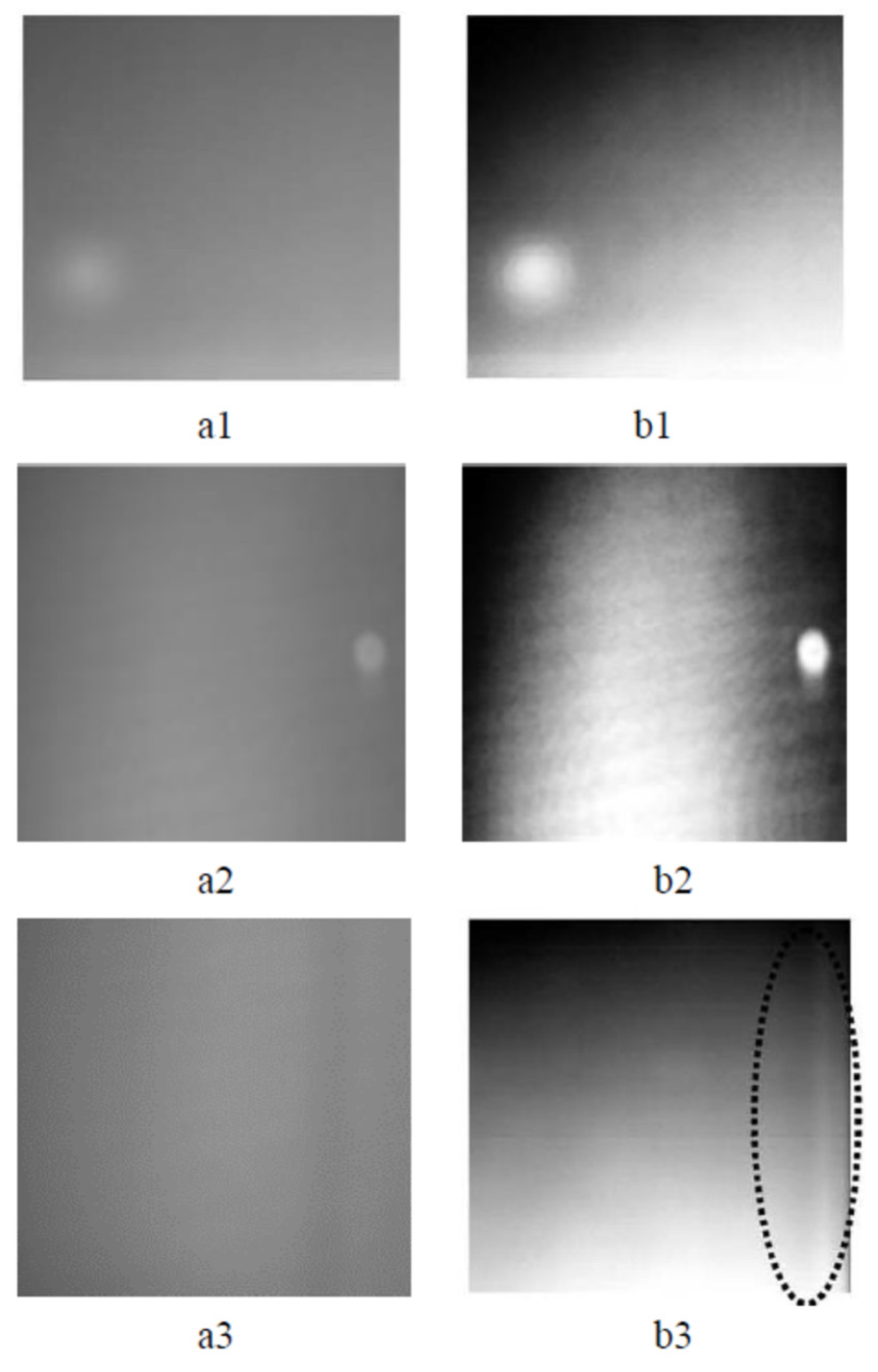

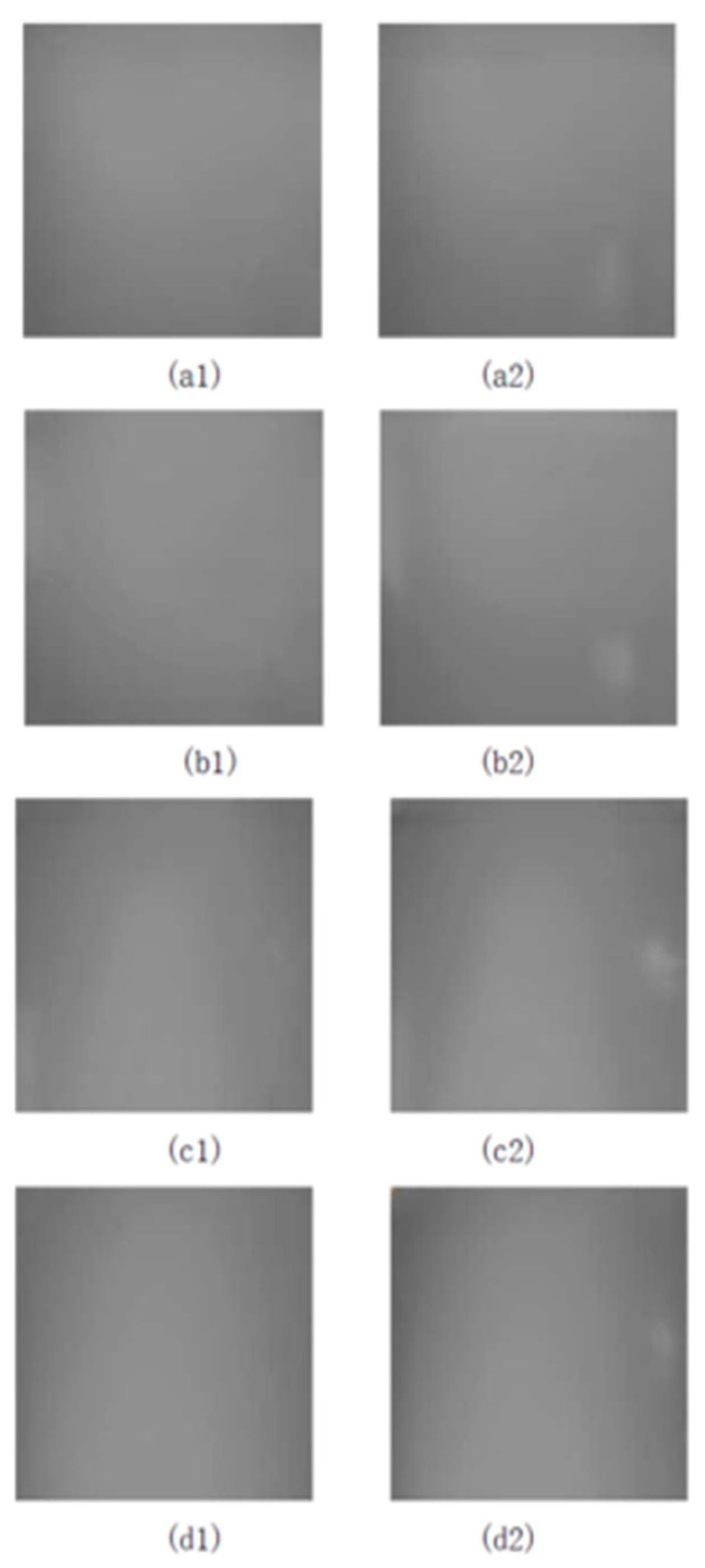

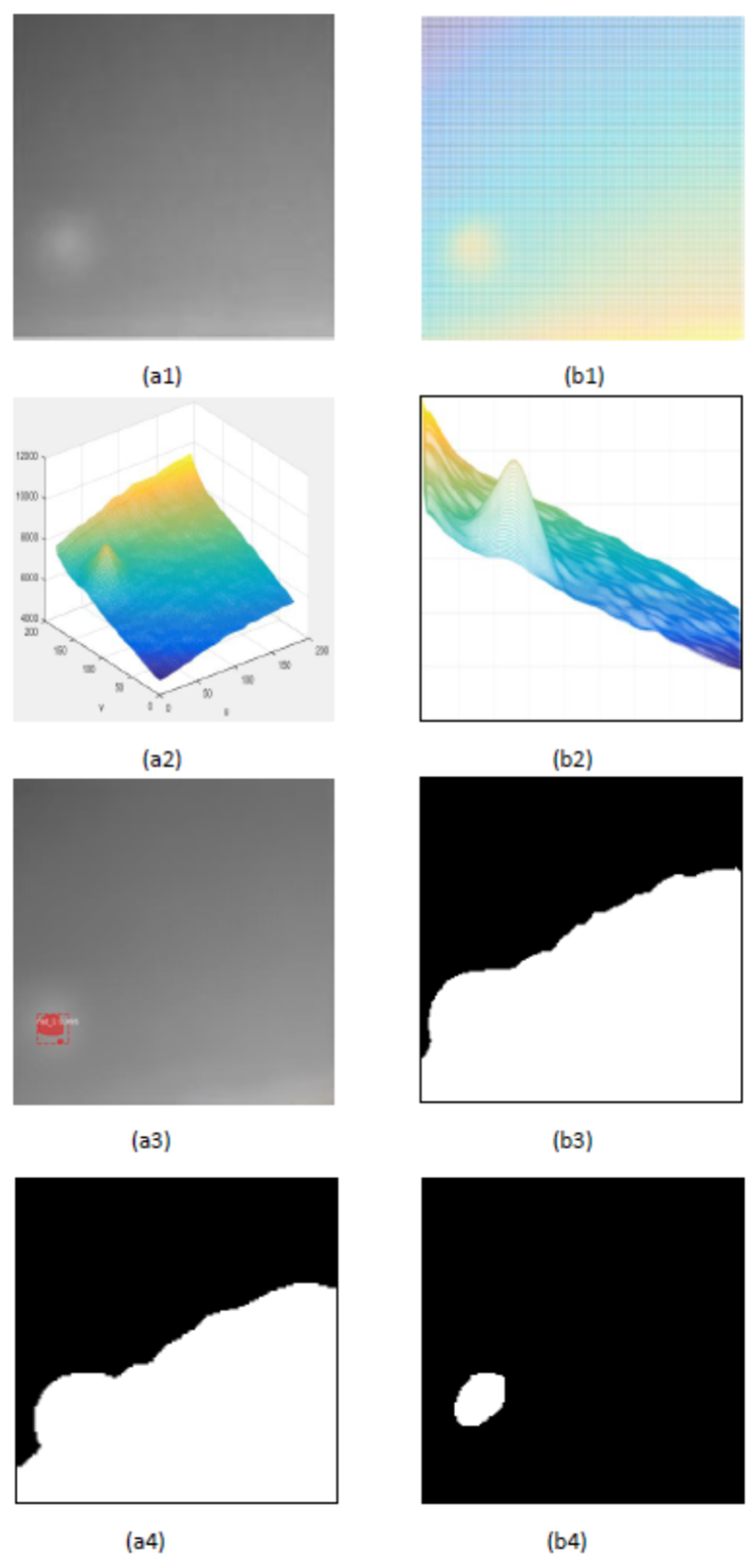

Figure 1 illustrates brightness non-uniformity defects on the low-contrast LCD surface. The left panels (a1), (a2), and (a3) depict the defects, while the right panels (b1), (b2), and (b3) show the enhanced versions of these defects. The areas surrounding the defects have low contrast, which makes these brightness non-uniformity defects difficult to identify.

In recent years, deep learning methods have gained prominence and have gradually been employed for LCD defect detection [6,7,8]. However, current deep learning methods rely on a large number of positive and negative samples for model training. In addition, labeling defective samples (i.e., creating masks) is laborious and time-consuming. Furthermore, samples in production are imbalanced, because it is difficult to gather enough defective samples. Thus, it is important to investigate a new method based on generative adversarial networks to solve these problems.

We propose a method to automatically generate samples and masks simultaneously using deep generative network models, which can accomplish the complex and laborious task of labeling and obtaining image masks while solving the difficult problem of positive and negative sample imbalance.

2. Related work

Common machine vision-based methods for surface defect detection can be categorized into the following classes [9]: statistical methods, feature-based methods, spectral-based methods, subspace-based methods [10,11], and the emerging deep learning-based methods.

Statistical methods need to collect a certain number of qualified samples, and use statistical models to perform calculations to establish a fixed template of qualified samples. During inspection, the sample to be inspected is matched with a fixed template, and the differences between the sample and the template are highlighted and defined as defects.
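
The template idea described above can be illustrated with a short sketch. It assumes a per-pixel mean and standard deviation as the "fixed template" and a k-sigma rule for the comparison; neither choice is specified in the text, and the function names `build_template` and `detect_defects` are hypothetical.

```python
import numpy as np

def build_template(good_images):
    """Per-pixel mean and standard deviation over qualified (defect-free) samples."""
    stack = np.stack([img.astype(np.float64) for img in good_images])
    return stack.mean(axis=0), stack.std(axis=0)

def detect_defects(image, mean, std, k=3.0, min_std=1.0):
    """Flag pixels that deviate from the template by more than k standard deviations.

    min_std keeps the tolerance from collapsing where the samples barely vary
    (an assumption of this sketch).
    """
    tolerance = k * np.maximum(std, min_std)
    return np.abs(image.astype(np.float64) - mean) > tolerance

# Toy data: 20 noisy flat-grey qualified samples and one test sample with a bright spot
rng = np.random.default_rng(0)
good = [128 + rng.normal(0, 2, size=(32, 32)) for _ in range(20)]
mean, std = build_template(good)

test = 128 + rng.normal(0, 2, size=(32, 32))
test[10:14, 10:14] += 40  # synthetic defect
mask = detect_defects(test, mean, std)
```

Because the template is built only from qualified samples, the comparison highlights any pixel that departs from it, which is also why a fixed template struggles when the background itself varies strongly.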

Zhong [12] analyzed a number of defect samples, calculated the grayscale threshold between the defect and the background image, and used this threshold to segment subsequent inspection objects and enhance image contrast. Calculating the probability between the defect and the background edge pixels then enables the detection of impurity defects in flexible integrated circuit packaging substrates. Since the threshold in this method relies on manual calculation, it must be recalculated repeatedly when facing multiple categories of inspection objects or multiple defect types.

Feature-based methods process image pixels to obtain defect information and are relatively simple when the inspected objects have obvious, easily recognizable defect characteristics. When detecting low-contrast surface defects, however, it is usually impossible to calculate an effective threshold because of the high randomness of the defect appearance and the complex background image.

Tu [13] proposed a printed circuit board inspection and sorting method. The PCB images collected by the camera are processed at the sub-pixel level and then registered with the corresponding template based on grayscale information, so as to detect mis-soldering and missing soldering of surface components. PCB samples are automatically positioned and sorted. Since this type of method only requires qualified samples to construct a fixed template, it solves the problems of difficulty in defect feature extraction and sample imbalance in feature detection algorithms, and can effectively detect targets with too many defect types and insufficient defect samples.

However, the defects encountered in LCD manufacturing exhibit localized brightness non-uniformity and smooth brightness variations. Traditional methods are inadequate for detecting the low-contrast surface brightness non-uniformity defects investigated in this study. Consequently, in addition to approaches such as adaptive thresholding [14], sophisticated machine learning algorithms [15,16,17], including deep convolutional neural networks (CNN) [18,19], have been introduced. In recent years, deep learning methods have made great advances in classification, detection [20], and instance segmentation [22,23], and they are increasingly used in LCD defect detection.

Shuang Mei et al. [18] proposed a Mura defect identification method based on feature-level fusion with unsupervised learning. The method relies on a joint feature representation: it fuses hand-crafted features with features obtained by unsupervised learning to obtain useful features. Experimental results show that this method identifies Mura defects in thin film transistor LCD panels using visual inspection equipment, with strong robustness and accuracy.

In the recent Faster R-CNN [20,21] and the instance segmentation method Mask R-CNN [22], a pivotal element is the Region Proposal Network (RPN). The fundamental concept underlying RPN is the dense sampling of the entire input image using a multitude of overlapping bounding boxes of various shapes and sizes. Subsequently, the network is trained to generate multiple object proposals, also referred to as Regions of Interest (RoI). This architectural choice enables RPN to effectively explore features across diverse scales. RPN comprises a convolutional neural network that takes feature images as input and produces output bounding boxes along with associated probabilities for contained objects.
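
The dense sampling with overlapping boxes of various shapes and sizes can be sketched as an anchor generator. This is a minimal illustration only: the stride, scales, and aspect ratios below are assumed values, and `generate_anchors` is a hypothetical helper rather than part of any particular Faster R-CNN implementation.

```python
import math

def generate_anchors(feature_h, feature_w, stride, scales, ratios):
    """Return (x1, y1, x2, y2) anchor boxes centred on every feature-map cell.

    Each scale is the side length of a square box of equal area; each ratio is
    height / width, so every anchor of one scale covers the same area.
    """
    anchors = []
    for row in range(feature_h):
        for col in range(feature_w):
            cx = (col + 0.5) * stride  # cell centre in input-image pixels
            cy = (row + 0.5) * stride
            for scale in scales:
                for ratio in ratios:
                    w = scale / math.sqrt(ratio)
                    h = scale * math.sqrt(ratio)
                    anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors

# 4 x 4 feature map, 2 scales and 3 aspect ratios: 6 anchors per cell
anchors = generate_anchors(4, 4, stride=16, scales=(32, 64), ratios=(0.5, 1.0, 2.0))
```

The network then scores each of these candidate boxes and regresses offsets for them, which is how proposals at several scales are obtained from a single feature map.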

Ramya et al. [24] introduced the utilization of the state-of-the-art Single Shot Multi-box Detector (SSD) network for both classifying and localizing Mura defects, achieving simultaneous defect classification and localization. In comparison, the Mask R-CNN method [25–27] offers higher accuracy compared to the aforementioned deep learning-based object detection methods. Moreover, it possesses the advantage of simultaneous classification, localization, and instance segmentation. Therefore, by improving and applying the Mask R-CNN method to the detection of micro-defects in LCDs, it becomes possible to identify defect categories and segment defect shapes. However, the utilization of the aforementioned deep learning methods faces challenges in collecting a substantial number of defect samples for training and testing, as well as the complex and time-consuming task of annotating defect samples with masks. To address these issues, a deep network model capable of automatically generating samples and masks is proposed as follows.

In practical LCD manufacturing processes, a large quantity of qualified samples can be easily obtained, while gathering many defect samples within a short time is challenging. Data augmentation techniques are used to augment the defect dataset [28,29]. This involves synthesizing defect regions onto normal images through operations such as rotation, cropping, and duplication to generate defect samples.

As an unsupervised network model, Generative Adversarial Networks (GAN) can adaptively generate similar samples through the input unlabeled data set by setting the generator and discriminator. A precisely designed GAN network can be trained with a small number of defect samples and automatically generate a large number of similar samples for subsequent training of the recognition network. In defect detection, the problem of insufficient defect samples can be well solved.

Yi [30] used a variational self-generator to improve the generative adversarial network, successfully expanded the MNIST data set, and verified that there was no significant difference between the generated samples and the original samples. Li [31] built a 3D model of a belt conveyor and fed it into the CycleGAN [32] network to expand the fault samples. The expanded samples were used to fully train the YOLOv5 object detection network, which improved detection accuracy. Liu [33] used CycleGAN to expand the defect samples of LCD screens and construct a balanced sample data set; Mask R-CNN fully trained on this data set achieved improved detection accuracy. These previous studies have amply demonstrated the effectiveness of deep generative networks for expanding sample datasets. However, the visual quality and authenticity of the samples that the original CycleGAN network generates for welding images with complex backgrounds have not yet been verified. Thus, it is proposed to employ a Cycle-Consistent Generative Adversarial Network (CycleGAN) to address the issue of sample imbalance.

After generating a significant number of samples, while training the aforementioned deep learning-based surface defect detection methods such as Mask R-CNN, the time-consuming and labor-intensive process of annotating and acquiring defect image masks persists. Existing annotation tools like LabelMe [34] add to the complexity and effort required for mask annotation. To overcome this challenge, a method based on Generative Adversarial Networks (GANs) [35–37] is proposed to automatically annotate and acquire image masks. Specifically, the generated defect sample images and their corresponding input defect-free images are fed into a CycleGAN model. The defect sample images serve as the target images, while the defect-free input images are treated as the input images during the training process. Through iterative steps, the defect information is progressively accumulated in the defect-free input images until the generation of defect samples is achieved. This iterative process represents the generation of sample masks.

3. Simultaneous Generation of Training Samples and Masks Based on the GAN Model

We propose a technique that leverages a generative adversarial network (GAN) to autonomously generate defect samples. This method requires only a limited quantity of real samples to enhance and expand the LCD sample dataset. Subsequently, the generated dataset is employed to enhance the detection results.

After a sufficient defect sample dataset has been generated, training deep learning methods such as Mask R-CNN still requires labeling and acquiring masks for the defect images, which is very time-consuming and laborious. A new method for automatically acquiring image masks is therefore proposed: each generated defect sample image and its corresponding defect-free input image are fed into a second CycleGAN model, where they serve as the target image and the input image, respectively, during training. During training, defect information is accumulated in the defect-free input image step by step until the defect sample is generated, and this superposition process is the generation process of the sample mask.

3.1. CycleGAN model

The CycleGAN model is an image style transformation technique whose ultimate goal is to translate image style between two domains without paired (one-to-one corresponding) training data. Image style conversion refers to the conversion of a picture from one style to another.

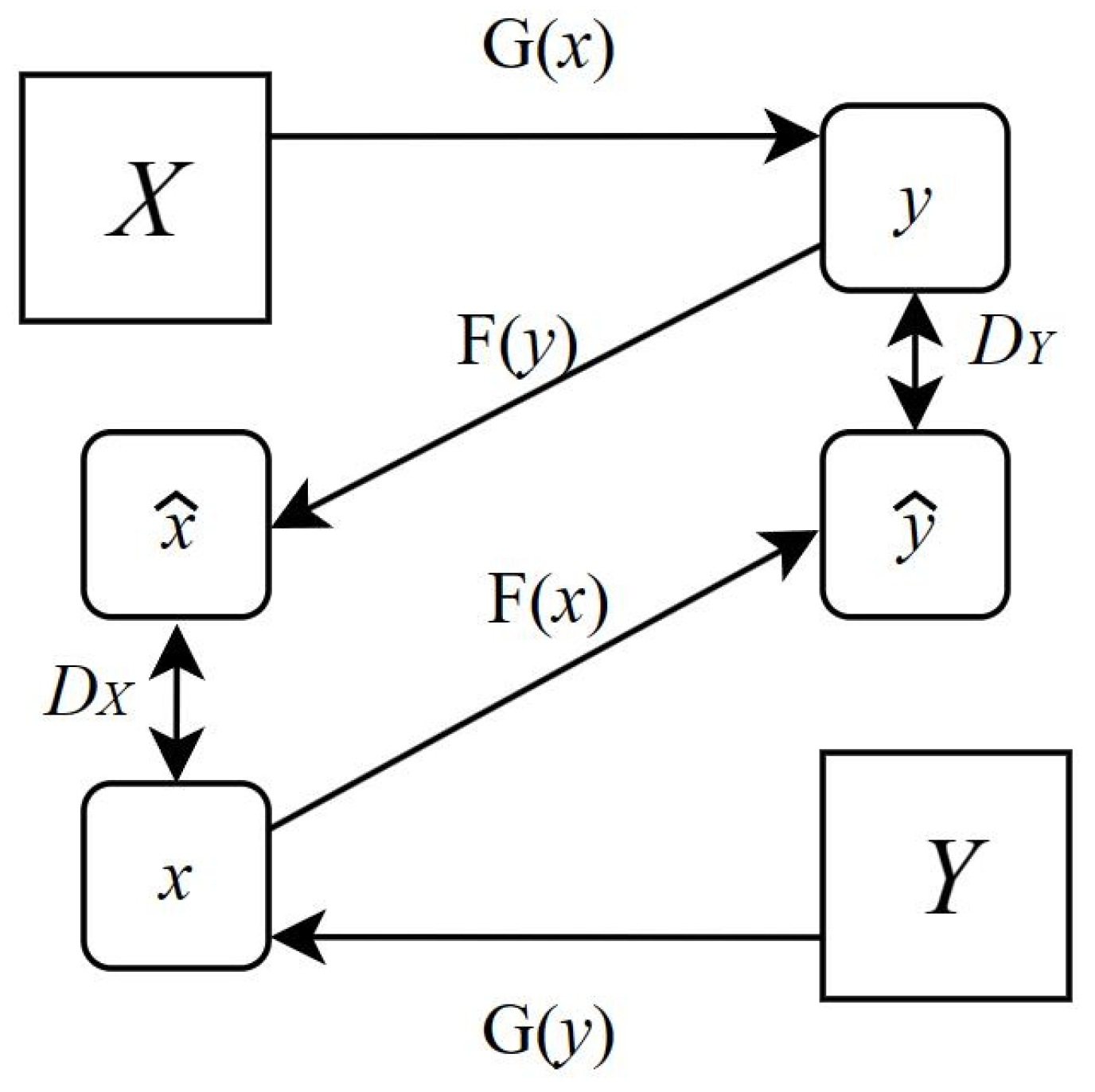

As shown in Figure 2, the CycleGAN model maps from the X domain to the Y domain through the mapping G. $D_Y$ is the discriminator corresponding to this generator; it distinguishes real data from generated data G(x), forming one adversarial generation process. To avoid invalid conversions, the authors of the CycleGAN model propose a cycle consistency loss. A second mapping F maps from the Y domain to the X domain, and its discriminator, denoted $D_X$, distinguishes real data from generated data F(y). The CycleGAN model learns both the G and F mappings while satisfying the cycle consistency requirement F(G(x)) ≈ x: after two opposite mappings, an image returns from the X domain back to the X domain.

3.2. CycleGAN loss function

CycleGAN combines an adversarial loss and a cycle consistency loss to create an output image. The adversarial loss penalizes a generated distribution that does not match the target distribution, while the cycle consistency loss prevents the two learned mappings from contradicting each other. Because the model is trained with unpaired samples, it is well suited to defect detection. The two types of loss are described below.

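- 1. Adversarial loss

The adversarial loss brings the generated data distribution closer to the real data distribution. As in GAN, G implements $X \rightarrow Y$, and G(x) should be as close as possible to samples from Y during training, while the discriminator $D_Y$ judges whether a sample is real or generated. The loss has the same form as in GAN, written here in the notation of the original CycleGAN formulation [32]:

$$\mathcal{L}_{GAN}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p_{data}(y)}[\log D_Y(y)] + \mathbb{E}_{x \sim p_{data}(x)}[\log(1 - D_Y(G(x)))]$$

Similarly, for F, which implements $Y \rightarrow X$:

$$\mathcal{L}_{GAN}(F, D_X, Y, X) = \mathbb{E}_{x \sim p_{data}(x)}[\log D_X(x)] + \mathbb{E}_{y \sim p_{data}(y)}[\log(1 - D_X(F(y)))]$$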

- 2. Cycle consistency loss

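The adversarial loss only ensures that generated samples follow the same distribution as real samples; a one-to-one correspondence between images in the two domains is also required.

We want $F(G(x)) \approx x$, called forward cycle consistency, and $G(F(y)) \approx y$, called backward cycle consistency.

To enforce this consistency as far as possible, the corresponding loss is set as in the original CycleGAN formulation [32]:

$$\mathcal{L}_{cyc}(G, F) = \mathbb{E}_{x \sim p_{data}(x)}[\lVert F(G(x)) - x \rVert_1] + \mathbb{E}_{y \sim p_{data}(y)}[\lVert G(F(y)) - y \rVert_1]$$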

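Generator G tries to translate X to Y, generator F tries to translate Y to X, and the two generators are expected to invert each other, that is, an image mapped through both returns to itself. The full objective, in the notation of the original CycleGAN formulation [32], is the sum of the two adversarial losses and the weighted cycle consistency loss:

$$\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{GAN}(G, D_Y, X, Y) + \mathcal{L}_{GAN}(F, D_X, Y, X) + \lambda \mathcal{L}_{cyc}(G, F)$$

where $\lambda$ is the weight that controls the relative importance of the adversarial loss and the cycle consistency loss.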
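
To make the role of the weight concrete, the following sketch evaluates a CycleGAN-style objective on toy arrays. It assumes the log-form adversarial loss and the L1 cycle consistency loss of the original CycleGAN formulation; the generators and discriminators are stand-in Python callables, and all function names (`adversarial_loss`, `cycle_loss`, `full_objective`) are hypothetical. A real model would use trained networks in a deep learning framework.

```python
import numpy as np

def adversarial_loss(d_real, d_fake, eps=1e-12):
    """GAN log loss E[log D(real)] + E[log(1 - D(fake))]; inputs are probabilities."""
    return np.mean(np.log(d_real + eps)) + np.mean(np.log(1.0 - d_fake + eps))

def cycle_loss(x, x_rec, y, y_rec):
    """L1 cycle consistency: E|F(G(x)) - x| + E|G(F(y)) - y|."""
    return np.mean(np.abs(x_rec - x)) + np.mean(np.abs(y_rec - y))

def full_objective(G, F, D_X, D_Y, x, y, lam):
    """Two adversarial terms plus the cycle term weighted by lam."""
    fake_y, fake_x = G(x), F(y)
    loss_g = adversarial_loss(D_Y(y), D_Y(fake_y))
    loss_f = adversarial_loss(D_X(x), D_X(fake_x))
    return loss_g + loss_f + lam * cycle_loss(x, F(fake_y), y, G(fake_x))

# Toy stand-ins: G and F are exact inverses, the discriminators are undecided (0.5)
x = np.zeros((4, 8, 8))
y = np.ones((4, 8, 8))
G = lambda a: a + 1.0
F = lambda b: b - 1.0
D = lambda batch: np.full(batch.shape[0], 0.5)

total = full_objective(G, F, D, D, x, y, lam=10.0)

# A generator that does not invert G is penalised in proportion to lam
F_bad = lambda b: b - 0.5
total_bad = full_objective(G, F_bad, D, D, x, y, lam=10.0)
```

With exact inverses the cycle term vanishes and only the adversarial terms remain; with `F_bad` the reconstruction error of 0.5 in each direction is multiplied by the weight, which is why a larger weight pushes the model toward preserving the input background.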

The background of the defective image generated by CycleGAN is similar to the real image with the defect. CycleGAN can generate synthetic defective samples by simply entering new defect-free samples.

3.3. The proposed automatic sample and mask generation method

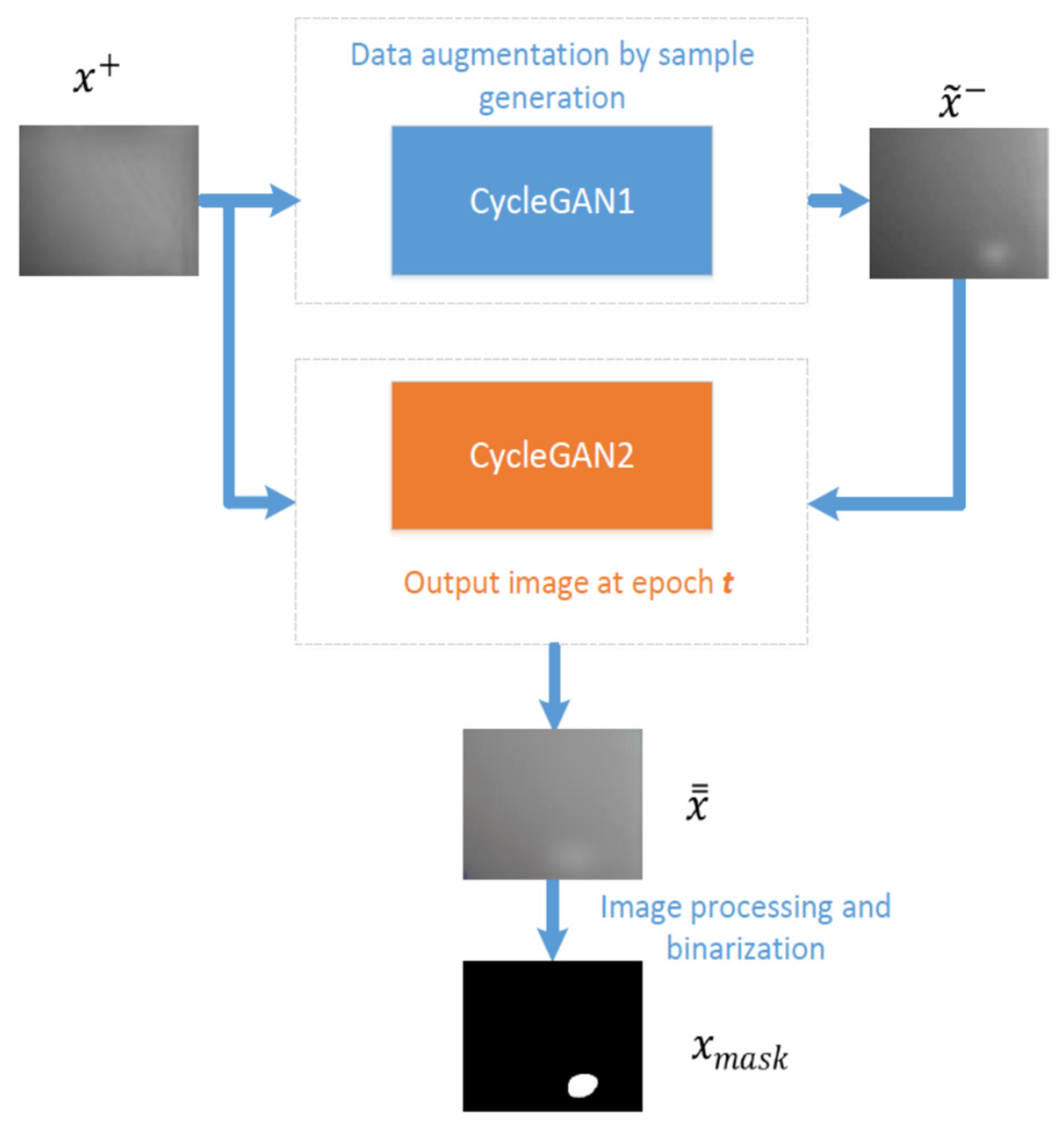

The workflow of the proposed method is depicted in Figure 3. A defect-free LCD sample image is input, and a large number of defective LCD sample images are generated through CycleGAN1.

The defect-free sample and the corresponding generated defective sample are used as the input and output of CycleGAN2 for training. The defect information is gradually superimposed during the training process, and once enough defect information has accumulated, the mask of the image can be obtained through simple image processing and binarization.

In this investigation, a learning rate of 0.0002 is utilized for CycleGAN1. This low value is chosen to ensure that the synthetic defects closely resemble real defects. In CycleGAN2, the cycle consistency weight $\lambda$ takes a large value (e.g., 100) so that the background texture of the defect-free input and that of the output are as close as possible.

To identify defect regions in the image mask generated by CycleGAN2, the differences between the image generated at iteration t and the final image generated at the last iteration T are accumulated over the intermediate iterations, using the outputs generated by CycleGAN2 at epochs t = 3, 4, ..., T-1. The outputs for t = 1, 2 are discarded, since the background texture is not reconstructed well during the first two cycles. Empirical studies have shown that ten iterations (T = 10) are usually sufficient to segment the defect regions in the generated image. Background pixels, which the images share in common, produce small differences, while defective pixels produce large differences. A simple image processing operation and binary thresholding are then applied to segment the defects in the difference image.
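
The accumulation and thresholding steps can be sketched as follows. This is a minimal illustration under stated assumptions: the difference is taken as a pixel-wise absolute difference, the threshold is a fixed global value, and the unspecified "simple image processing operation" is omitted. The names `accumulate_differences` and `binarize` are hypothetical.

```python
import numpy as np

def accumulate_differences(outputs, start=3):
    """Sum |final - output_t| over t = start, ..., T-1.

    outputs[t - 1] is the image generated at iteration t, so outputs[-1] is the
    final image at iteration T. Iterations before `start` are discarded.
    """
    final = outputs[-1].astype(np.float64)
    diff = np.zeros_like(final)
    for out in outputs[start - 1:-1]:
        diff += np.abs(final - out.astype(np.float64))
    return diff

def binarize(diff, threshold):
    """Binary mask: 1 where the accumulated difference exceeds the threshold."""
    return (diff > threshold).astype(np.uint8)

# Toy sequence with T = 10: constant background, defect growing by 5 grey levels per iteration
outputs = []
for t in range(1, 11):
    img = np.full((16, 16), 100.0)
    img[4:8, 4:8] += 5 * t
    outputs.append(img)

diff = accumulate_differences(outputs, start=3)
mask = binarize(diff, threshold=50)
```

In this toy sequence the background never changes, so its accumulated difference is zero, while the defect pixels accumulate a large value and survive the threshold.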

4. Experimental Results and Analysis

The experiment aims to evaluate the performance of a GAN-based sample and mask generation scheme by using LCD images with and without defects. First, the experiment validates the generation of samples to augment the dataset using CycleGAN1 model. Then, the second experiment will generate masks using the CycleGAN2 model, and finally evaluate its performance using Mask R-CNN. The hardware and software configuration for the experiment includes the Nvidia RTX4000 GPU and Python 3.

4.1. Data Set Augmentation using CycleGAN to generate image samples

To address the limited number of images in the original dataset, which is insufficient for effective training, data augmentation is necessary. Initially, common techniques such as rotation and mirroring are applied to the original images for data augmentation, as frequently used in deep learning. However, even with these techniques, the dataset remains limited in size. Therefore, a GAN-based data sample augmentation method is employed.
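
The rotation and mirroring step can be sketched with NumPy. This is an illustrative example assuming 90-degree rotations and horizontal mirroring; the exact transformations used in the experiments are not specified, and `augment` is a hypothetical helper.

```python
import numpy as np

def augment(image):
    """Return the 8 rotation/mirror variants of a 2-D image, original included."""
    variants = []
    for k in range(4):
        rotated = np.rot90(image, k)          # rotate by k * 90 degrees
        variants.append(rotated)
        variants.append(np.fliplr(rotated))   # mirror the rotated image
    return variants

# A small asymmetric test image yields 8 distinct variants
image = np.arange(12).reshape(3, 4)
variants = augment(image)
```

These geometric variants multiply the dataset by at most a factor of eight and contain no new defect shapes, which is consistent with the observation that the dataset remains limited after such augmentation.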

Since the number of existing defective LCD sample images is limited, conducting effective experiments poses a challenge. CycleGAN is utilized to expand the sample dataset by leveraging the capabilities of generative adversarial networks (GANs). CycleGAN can generate additional datasets based on the features extracted from a small amount of existing data, thus compensating for the scarcity of the original dataset.

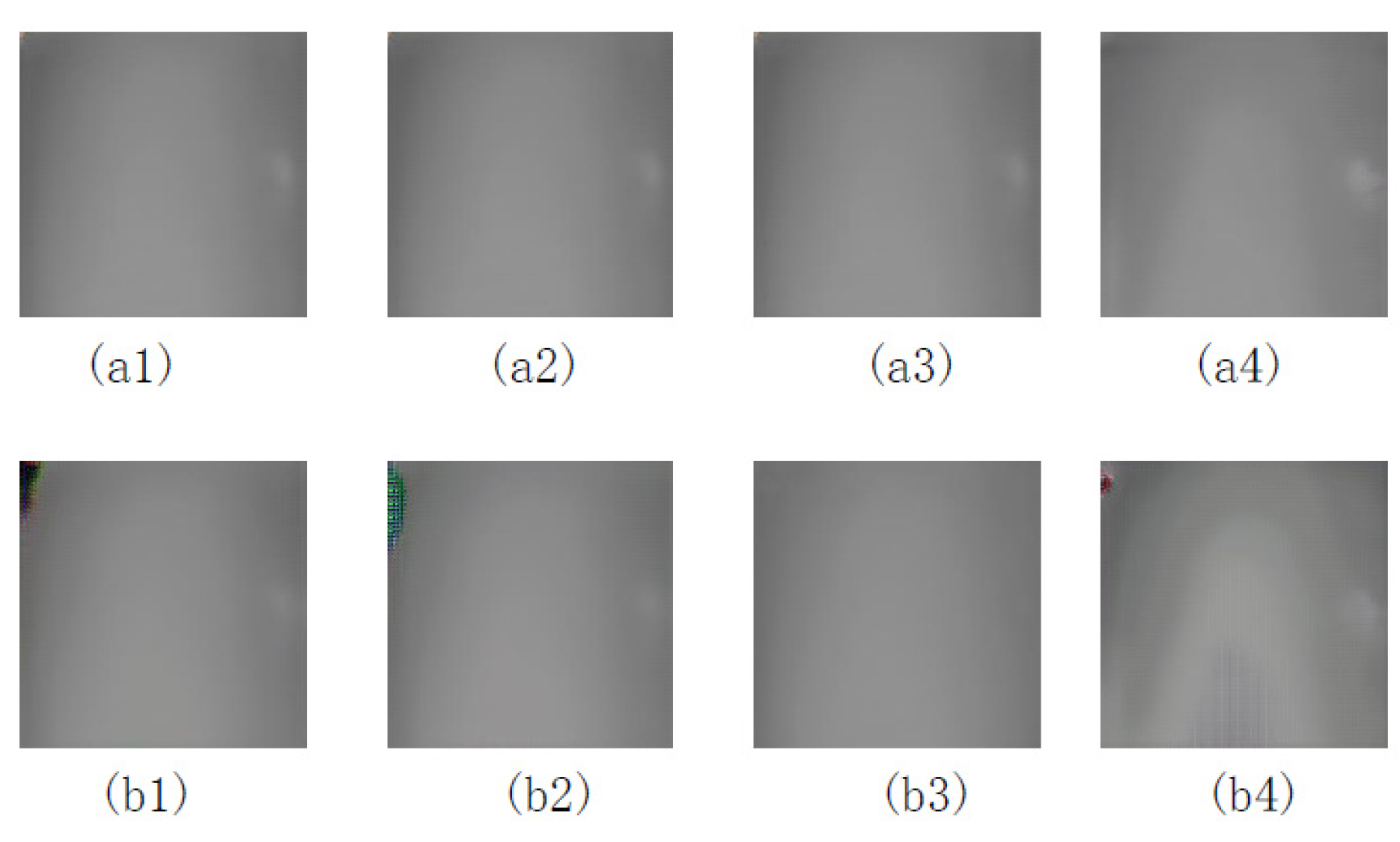

Here,

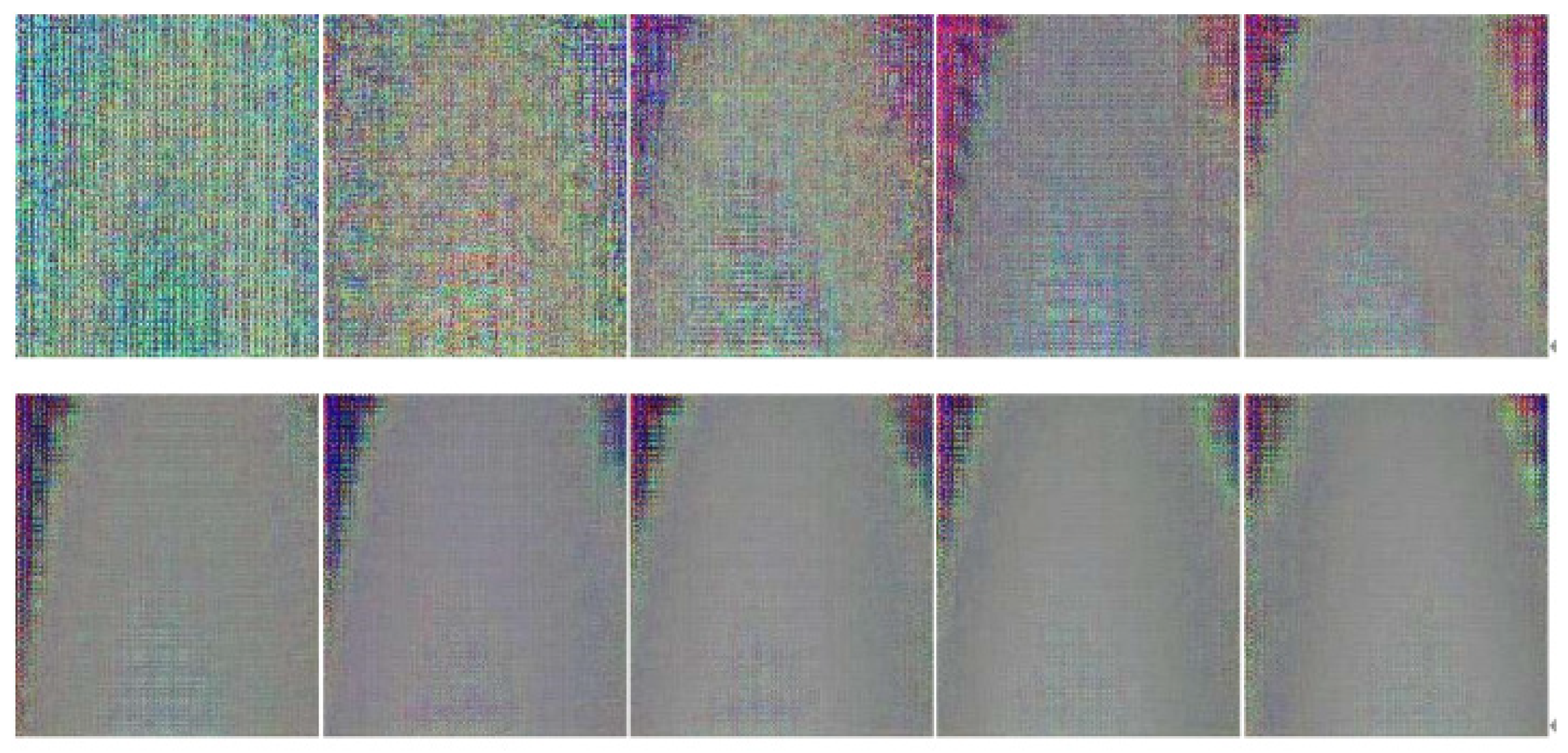

is the weight in the loss function that controls the adversarial loss and the consistency loss. The results shown in

Figure 4 show that smaller

is more inclined to generate images that highlight local defects while the background is similar. An excessively large

value proves ineffective in generating defects, while an excessively small

value hinders the accurate reconstruction of background texture. To strike a balance that aligns with practical requirements and ensures overall performance in both defect synthesis and background preservation, this study employs

=45 for CycleGAN1.

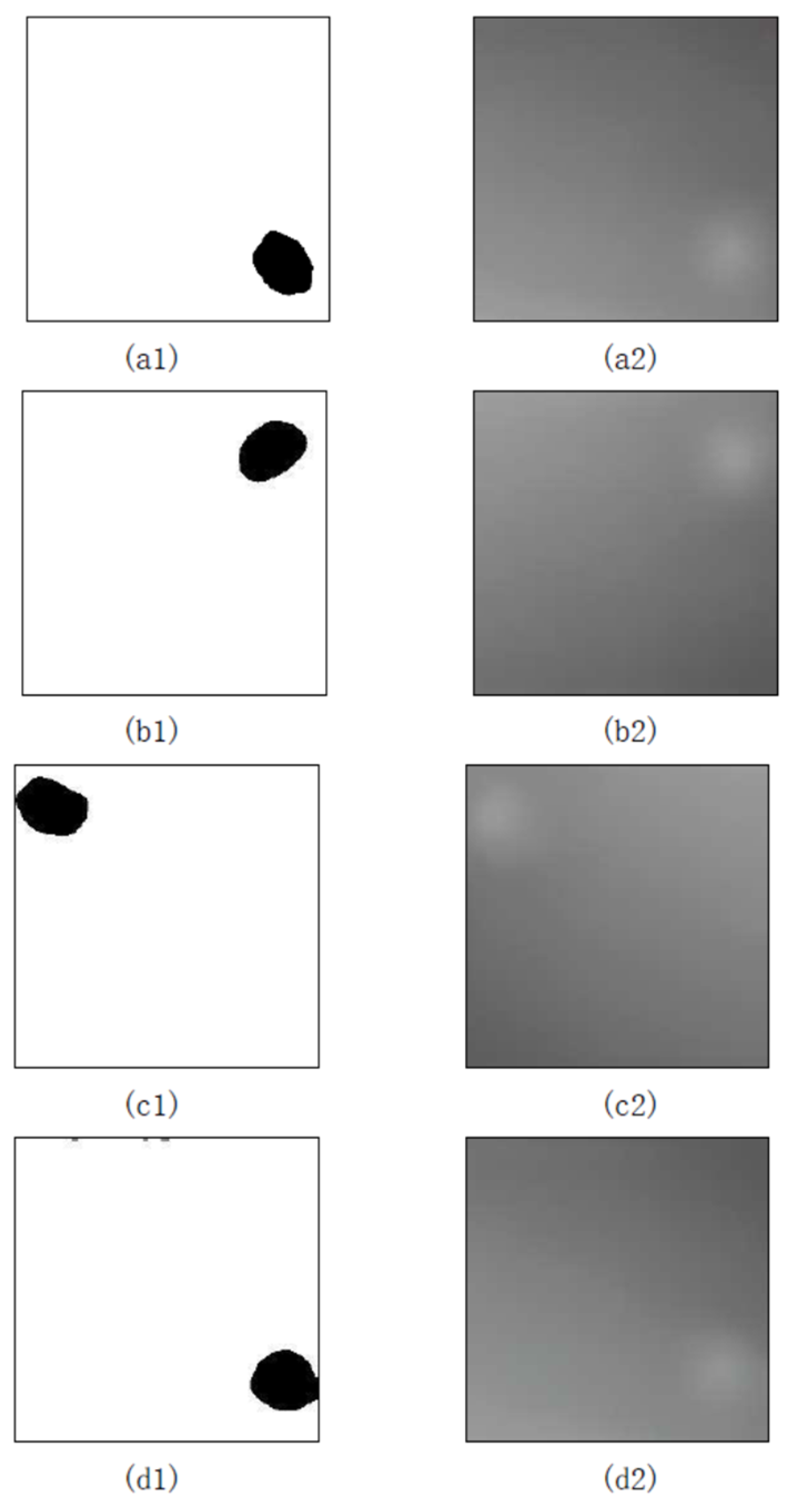

4.2. Image Mask Generation using the CycleGAN2 model

The existing defect-free image and the corresponding defect image generated from it by the CycleGAN1 model form the input pair of the CycleGAN2 model, and CycleGAN2 is trained on each pair of images separately. As shown in Figure 5, a1, b1, c1, and d1 are defect-free sample images, while a2, b2, c2, and d2 are the corresponding defective sample images generated by CycleGAN1. The generated defect sample image and the defect-free image are input into the CycleGAN2 model as the target image and the input image, respectively, of the training process. During training, defect information is gradually accumulated and superimposed on the defect-free input image until the defect sample is generated, and this superposition process is the generation process of the sample mask.

In terms of loss function parameter selection for CycleGAN2, a larger value of the weight $\lambda$ places more emphasis on the consistency loss and therefore tends to preserve the global background texture of the object surface. Figure 6 shows the comparison results for different values of $\lambda$. Our purpose is to make the background of the defect-free input image and that of the generated defect image as similar as possible, so CycleGAN2 needs a larger regularization value.

T is the number of iterations over which process images are generated for a pair of samples while training CycleGAN2, corresponding to Formula (6): the larger the value of T, the closer the process image is to the image generated by the final model. $\lambda$ is the weight in the loss function that balances the adversarial loss and the consistency loss. The experimental results show that a smaller value of $\lambda$ does not reconstruct the background in the early stage, and only defects appear in the later stage; a larger value of $\lambda$ reconstructs the background better and synthesizes defects in the early stage. Therefore, CycleGAN2 uses $\lambda = 100$ in this paper.

In order to highlight defective pixels in the synthetic images in CycleGAN2, we integrate the process image and accumulate the differences in the generated images. Since the background texture is not well reconstructed in the early stage, a floating integral lower bound is chosen to compare the effect of different integral lower bounds on segmenting defect regions in the synthesized image.

4.3. Segmentation results

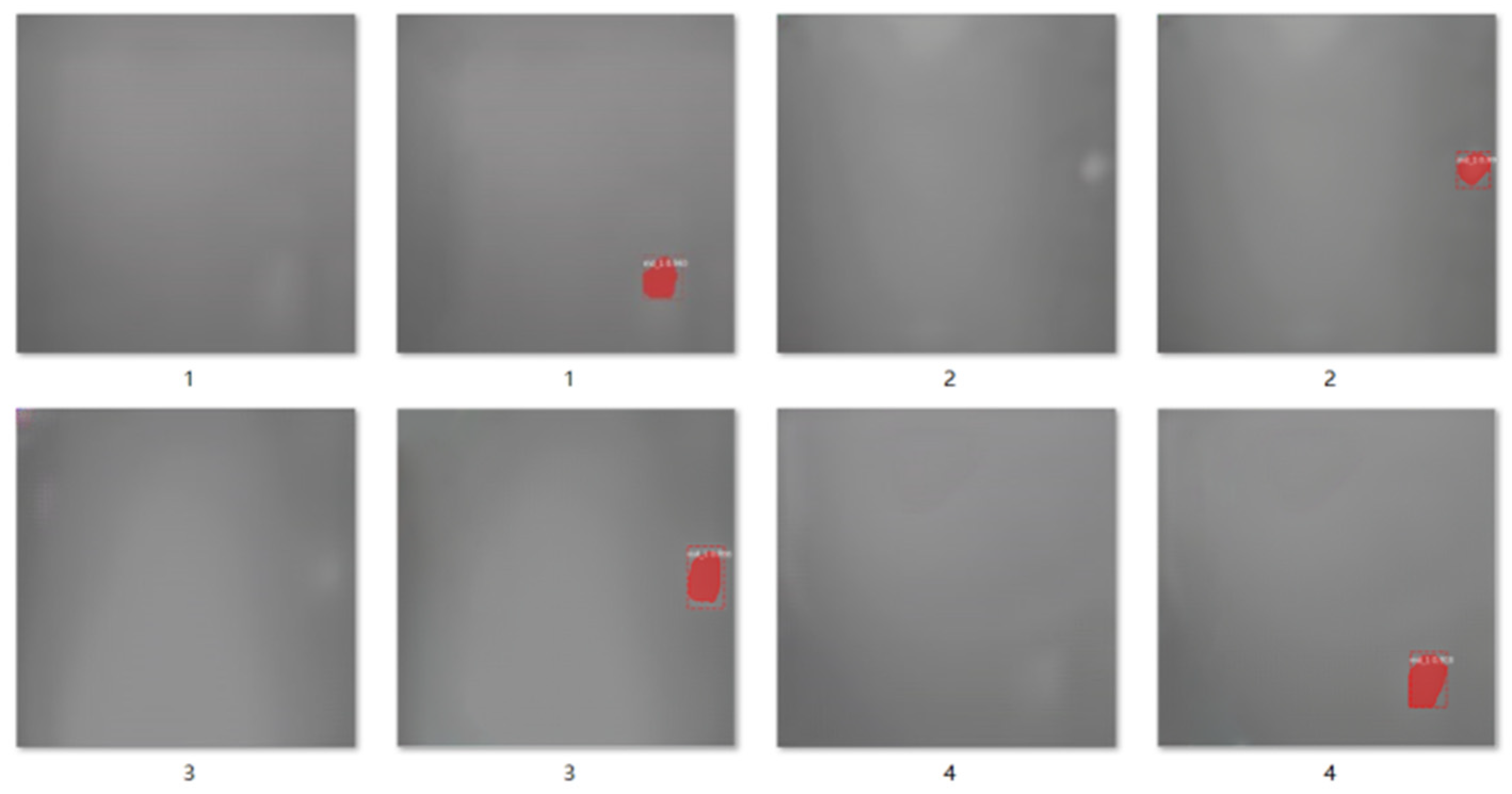

Simple image processing and binarization are carried out on the superimposed process image of the defects to obtain the segmentation results. The experimental results are shown in Figure 7. The segmentation images show that the position information of the defects is relatively clear and can be used to generate a mask.

4.4. Employing the Mask R-CNN Model for Recognition

The Mask R-CNN model is employed for training and testing on the LCD dataset. Initially, the dataset is prepared to include defect sample images obtained previously and their corresponding segmented mask images. Subsequently, the Mask R-CNN model is used for training and testing.

Figure 8 illustrates the results using 30 samples, with recognition rates ranging from 0.730 to 0.999. Notably, the majority of these recognition rates surpass 0.95. We tested two sets of data: the sample set before expansion and the data set expanded with GAN-generated samples. The detailed results are shown in Table I.

Table I. Recognition rate distribution in each group of test results.

| Recognition rate | < 0.9 | < 0.95 | < 0.99 | > 0.99 |
|---|---|---|---|---|
| Group1 | 1 | 0 | 2 | 11 |
| Group2 | 11 | 0 | 11 | 28 |

Table II. Comparison of Recognition Accuracy and Object Detection Outcomes Across Diverse Mask Generation Techniques (The best results achieved are bolded).

| Method | mAPbbox |
|---|---|
| Mask R-CNN with LabelMe (Group 1) | 99.99% |
| Mask R-CNN with LabelMe (Group 2) | 96.8% |
| Mask R-CNN with Our proposed method (Group 1) | 98.8% |
| Mask R-CNN with Our proposed method (Group 2) | 94.63% |

As shown in Table I, the initial Group1 experiments were performed on a set of unexpanded defective image samples. Its recognition rate mainly exceeded 0.99, albeit with one instance falling below 0.9. The average recognition rate across all test samples was 0.988. The model demonstrated excellent performance on real samples, but the limitations imposed by the limited sample size must be recognized.

Group2 experiments were performed on LCD samples from the expanded defect image dataset. The experimental results show that most of the test results are above 0.95, but 11 images fall below 0.9, which is related to the increased size of the sample set. The average recognition rate across all test samples is 0.9463. Overall, detection performance on the generated sample dataset decreases, but a sufficiently large sample set becomes easy to obtain.
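
The distribution in Table I and the average recognition rate can be computed from per-image recognition rates as in the sketch below. It assumes that each rate is counted in the first bin whose upper bound it falls below and that a rate of exactly 0.99 belongs to the last bin; the rates shown are hypothetical values for illustration, not the measurements of this study, and `rate_distribution` is a hypothetical helper.

```python
from statistics import mean

BIN_LABELS = ("< 0.9", "< 0.95", "< 0.99", "> 0.99")

def rate_distribution(rates):
    """Count recognition rates per bin, using the column labels of Table I."""
    counts = dict.fromkeys(BIN_LABELS, 0)
    for rate in rates:
        if rate < 0.9:
            counts["< 0.9"] += 1
        elif rate < 0.95:
            counts["< 0.95"] += 1
        elif rate < 0.99:
            counts["< 0.99"] += 1
        else:
            counts["> 0.99"] += 1  # assumption: exactly 0.99 is counted here
    return counts

# Hypothetical recognition rates, not the paper's data
rates = [0.730, 0.970, 0.985, 0.995, 0.999]
distribution = rate_distribution(rates)
average = mean(rates)
```

A single low-scoring image lowers the average noticeably in a small group, which is one reason the distribution is reported alongside the mean.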

In order to quantitatively evaluate the performance of our proposed unsupervised automatic mask generation method, we compared it with a manual mask labeling method (LabelMe). We input the masks generated by these two methods to Mask R-CNN separately. The final test results are used to compare the impact of the mask generation methods on the algorithm's recognition performance.

4.5. Comparison of segmentation results

Figure 9 illustrates the experimental results that juxtapose our proposed method with the Gabor filter method, wavelet method, and U-Net segmentation method. The experiments reveal that the Gabor and wavelet methods struggle to effectively segment images of defective LCD surfaces, whereas our proposed method excels in successfully detecting these defects. The U-Net segmentation method can successfully segment defect areas, but the segmentation results are not very accurate. In addition, the U-Net method only belongs to semantic segmentation and does not mark specific instance information of pixels. Our proposed method can effectively solve this problem.

5. Conclusions

In the application of deep learning techniques to detect micro defects on LCD surfaces with low contrast, the challenges of imbalanced positive and negative samples, as well as the complex and laborious task of annotating and acquiring image masks, can be addressed by our proposed method of simultaneously auto-generating samples and masks using a deep generative network model. Our proposed deep generative network approach obtains the image samples needed to train the target detection network and automatically generates the corresponding image masks. The experimental findings in the detection of micro defects on low-contrast LCD surfaces substantiate the high detection accuracy achieved with the obtained image samples and image masks, highlighting the applicability of our proposed method to other domains requiring image sample augmentation and annotation.

However, the method may suffer from unsatisfactory generation quality when facing targets with complex backgrounds for automatic generation. We will subsequently explore more powerful methods to handle automatic generation and automatic detection of diverse targets.

Author Contributions

Conceptualization, H.W. and Y.L.; methodology, H.W.; software, Y.L. and Y.X.; validation, Y.L. and Y.X.; formal analysis, H.W.; investigation, Y.L.; resources, H.W.; data curation, Y.X.; writing-original draft preparation, H.W.; writing-review and editing, Y.X. and Y.L.; visualization, H.W.; supervision, H.W.; project administration, H.W.; funding acquisition, H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the China National Key Research and Development project (2017YFE0113200), Anhui Provincial Natural Science Foundation (2108085ME166).

Data Availability Statement

The data that support the findings of this study are available from the corresponding author, H.W., upon reasonable request.

Conflicts of Interest

Authors have no conflict of interest to declare.

References

- Ren Z, Fang F, Yan N, et al. State of the art in defect detection based on machine vision[J]. International Journal of Precision Engineering and Manufacturing-Green Technology, 2022, 9(2): 661-691. [CrossRef]

- Singh S A, Desai K A. Automated surface defect detection framework using machine vision and convolutional neural networks[J]. Journal of Intelligent Manufacturing, 2023, 34(4): 1995-2011. [CrossRef]

- Tao J, Zhu Y, Jiang F, et al. Rolling surface defect inspection for drum-shaped rollers based on deep learning[J]. IEEE Sensors Journal, 2022, 22(9): 8693-8700. [CrossRef]

- Dong H, Song K, He Y, Xu J, Yan Y and Meng Q. PGA-Net: Pyramid feature fusion and global context attention network for automated surface defect detection[J]. IEEE Transactions on Industrial Informatics, 2019, 16(12): 7448-7458. [CrossRef]

- Lee M, Jeon J, Lee H. Explainable AI for domain experts: a post Hoc analysis of deep learning for defect classification of TFT–LCD panels[J]. Journal of Intelligent Manufacturing, 2021: 1-13. [CrossRef]

- Pratt W K, Sawkar S S, O'Reilly K. Automatic blemish detection in liquid crystal flat panel displays[C]//Machine Vision Applications in Industrial Inspection VI. International Society for Optics and Photonics, 1998, 3306: 2-13. [CrossRef]

- Lu H P, Su C T. CNNs Combined with a Conditional GAN for Mura Defect Classification in TFT-LCDs[J]. IEEE Transactions on Semiconductor Manufacturing, 2021, 34(1):25-33. [CrossRef]

- Kim M, Lee M, An M, et al. Effective automatic defect classification process based on CNN with stacking ensemble model for TFT-LCD panel[J]. Journal of Intelligent Manufacturing, 2020, 31(5): 1165-1174. [CrossRef]

- Xie X. A review of recent advances in surface defect detection using texture analysis techniques[J]. ELCVIA: electronic letters on computer vision and image analysis, 2008, 7(3): 1-22.

- Yufeng S, Dali Z, Junhua Z, et al. Analysis of textile defects based on PCA-NLM[J]. Journal of Intelligent & Fuzzy Systems, 2020, 38(2): 1463-1470.

- Ahmad J, Akula A, Mulaveesala R, et al. An independent component analysis based approach for frequency modulated thermal wave imaging for subsurface defect detection in steel sample[J]. Infrared Physics & Technology, 2019, 98: 45-54. [CrossRef]

- ZHONG Z, MA Z. A Novel Defect Detection Algorithm for Flexible Integrated Circuit Package Substrates[J]. IEEE Transactions on Industrial Electronics, 2022,69(2): 2117-2126. [CrossRef]

- Tu Zimei, Wang Sujuan, Shen Zhiwei. Printed circuit board inspection and sorting system based on machine vision [J]. Modern Electronic Technology, 2022, 45(14): 5-9.

- Kim S Y, Song Y C, Jung C D, Park K H. Effective defect detection in thin film transistor liquid crystal display images using adaptive multi-level defect detection and probability density function[J]. Optical review, 2011, 18(2): 191-196. [CrossRef]

- Zhang Y, Zhang Y, Gong J. A LCD Screen Mura Defect Detection Method Based on Machine Vision[C]//2020 Chinese Control And Decision Conference (CCDC). IEEE, 2020: 4618-4623. [CrossRef]

- Chen C S, Weng C M, Tseng C C. An efficient detection algorithm based on anisotropic diffusion for low-contrast defect[J]. The International Journal of Advanced Manufacturing Technology, 2018, 94(12): 4427-4449. [CrossRef]

- Yang H, Song K, Mei S, et al. An accurate mura defect vision inspection method using outlier-prejudging-based image background construction and region-gradient-based level set[J]. IEEE Transactions on Automation Science and Engineering, 2018, 15(4): 1704-1721. [CrossRef]

- Mei S, Yang H, Yin Z. Unsupervised-Learning-Based Feature-Level Fusion Method for Mura Defect Recognition[J]. IEEE Transactions on Semiconductor Manufacturing, 2017, 30(1): 105-113. [CrossRef]

- Yang H, Mei S, Song K, Tao B, Yin Z. Transfer-Learning-Based Online Mura Defect Classification[J]. IEEE Transactions on Semiconductor Manufacturing, 2018, 31(1): 116-123. [CrossRef]

- Ren S, He K, Girshick R, et al. Faster r-cnn: Towards real-time object detection with region proposal networks[J]. IEEE transactions on pattern analysis and machine intelligence, 2016, 39(6): 1137-1149. [CrossRef]

- Fang F, Li L, Zhu H, et al. Combining Faster R-CNN and model-driven clustering for elongated object detection[J]. IEEE Transactions on Image Processing, 2020, 29(1): 2052-2065. [CrossRef]

- Huang Z, Huang L, Gong Y, et al. Mask scoring r-cnn[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019: 6409-6418.

- Bolya D, Zhou C, Xiao F, et al. Yolact: Real-time instance segmentation[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. 2019: 9157-9166.

- Singh R, Kumar G, Sultania G, et al. Deep Learning based Mura Defect Detection[J]. EAI Endorsed Transactions on Cloud Systems, 2019, 5(15): 1-7. [CrossRef]

- He K, Gkioxari G, Dollár P, et al. Mask r-cnn[C]//Proceedings of the IEEE international conference on computer vision. 2017: 2961-2969.

- Chen X, Girshick R, He K, et al. Tensormask: A foundation for dense object segmentation[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. 2019: 2061-2069.

- Cheng B, Misra I, Schwing A G, et al. Masked-attention mask transformer for universal image segmentation[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022: 1290-1299.

- Çelik A, Küçükmanisa A, Sümer A, et al. A real-time defective pixel detection system for LCDs using deep learning based object detectors[J]. Journal of Intelligent Manufacturing, 2020: 1-10. [CrossRef]

- Lin H, Li B, Wang X, et al. Automated defect inspection of LED chip using deep convolutional neural network[J]. Journal of Intelligent Manufacturing, 2019, 30(6): 2525-2534. [CrossRef]

- Yin Yuting, Xiao Qinkun. Image generation based on deep convolutional generative adversarial network [J]. Computer Technology and Development, 2021, 31(4): 86-92.

- Liu Zhaochen, Xie Qing, Wang Chunxin, et al. Partial discharge data enhancement and pattern recognition method based on CycleGAN and deep residual network [J]. High Voltage Electrical Appliances, 2022, 58(11): 106-113.

- Zhu J Y, Park T, Isola P, et al. Unpaired image-to-image translation using cycle-consistent adversarial networks[C]//2017 IEEE International Conference on Computer Vision (ICCV). 2017.

- J. L, H. W, Y. L, et al. Automatic Generation and Detection Method of LCD Samples Based on Deep Learning: 2022 5th World Conference on Mechanical Engineering and Intelligent Manufacturing (WCMEIM)[C], 2022.

- Torralba A, Russell B C, Yuen J. Labelme: Online image annotation and applications[J]. Proceedings of the IEEE, 2010, 98(8): 1467-1484. [CrossRef]

- Schlegl T, Seeböck P, Waldstein S M, et al. Unsupervised anomaly detection with generative adversarial networks to guide marker discovery[C]//International conference on information processing in medical imaging. Springer, Cham, 2017: 146-157. [CrossRef]

- Kwon D, Kim H, Kim J, et al. A survey of deep learning-based network anomaly detection[J]. Cluster Computing, 2019, 22(1): 949-961. [CrossRef]

- Kalantar R, Messiou C, et al. CT-based pelvic T1-weighted MR image synthesis using UNet, UNet++ and cycle-consistent generative adversarial network (Cycle-GAN)[J]. Frontiers in Oncology, 11: 665807. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).