1. Introduction

The field of aspect-level sentiment classification, as cited in seminal works by Bo Pang (2008) and Bing Liu (2012) [

1], has increasingly gained prominence in the sphere of natural language processing [

2,

3,

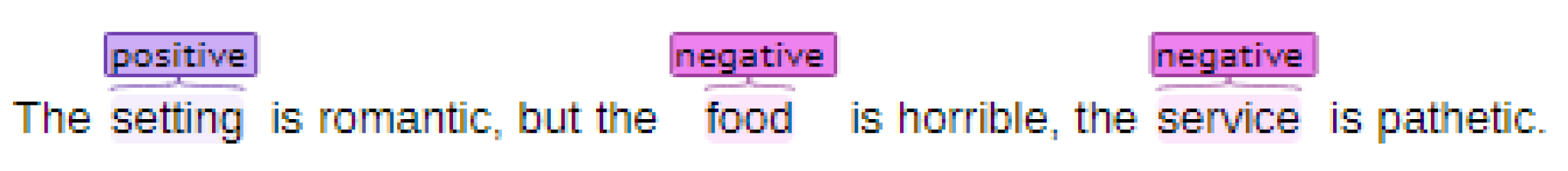

4]. This intricate task goes beyond general sentiment analysis by zeroing in on the sentiment polarities associated with specific aspects within a given context. Consider, for instance given in

Figure 1, a sentence like “The price is reasonable although the service is poor". Here, the sentiments expressed towards distinct aspects, namely “price" and “service", diverge, reflecting positive and negative polarities, respectively. In this context, an aspect could be an entity or a particular attribute of an entity.

Aspect-level sentiment classification is considerably more complex than broader, sentence-level sentiment analysis [24]. The primary challenge lies in identifying and interpreting the segments of a sentence that pertain to each aspect. Earlier methods, such as those of Kiritchenko et al. (2014), relied predominantly on statistical techniques, training classifiers such as Support Vector Machines on a suite of handcrafted features. Such approaches, while effective to an extent, were labor-intensive and inflexible.

Recent years have seen a shift towards neural network models, as in the work of Poria et al. (2016) and Tang et al. (2016) [25,26,27,28]. These models automatically learn informative, low-dimensional representations of both aspects and their contexts, which has markedly improved accuracy in aspect-level sentiment classification without the need for meticulous feature engineering [24,29,30,31]. A cornerstone of this evolution has been the use of attention mechanisms in neural networks, introduced in influential work by Mnih et al. (2014) and Bahdanau et al. (2015) [4,9,32,33,34]. These mechanisms, now widely used in aspect-level sentiment analysis models, identify the sentence components most pertinent to a given aspect. The models of Chen et al. (2017), Ma et al. (2017), and Song et al. (2019) exemplify the successful application of attention-based frameworks to building aspect-specific representations.

Notwithstanding these advances, existing models have limitations. A recurring issue is that they treat each aspect in isolation, overlooking the information carried by sentiment dependencies among multiple aspects. For instance, when a sentence expresses a positive sentiment towards the "setting" aspect and then turns to the "food" aspect with the conjunction "but", the positive sentiment towards "setting" intuitively suggests a negative sentiment towards "food". Such inter-aspect sentiment dependencies are valuable for accurately determining the sentiment towards each aspect in a sentence.

To address this gap, we introduce Sentiment Dependency Graph Convolutional Networks (SDGCN), a method that models these sentiment interconnections in aspect-level sentiment classification. SDGCN builds on graph convolutional networks (GCN), as discussed by Kipf and Welling (2016), which are effective at capturing dependencies in relational data. Each aspect is represented as a node in a graph, and edges encode the sentiment relationships between nodes. Before applying GCN, our model uses a bidirectional attention mechanism with position encoding to produce aspect-specific representations.

Our evaluation on the SemEval 2014 datasets [35] demonstrates that SDGCN outperforms existing state-of-the-art methods. The main contributions of our work are as follows:

To the best of our knowledge, we are the first to consider inter-aspect sentiment dependencies within a single sentence for aspect-level sentiment classification.

We propose a bidirectional attention mechanism, enhanced with position encoding, that captures aspect-specific representations.

SDGCN is a novel framework for multi-aspect sentiment classification that employs GCN to model sentiment dependencies across different aspects within a sentence.

Comprehensive evaluations on the SemEval 2014 datasets show that our model outperforms current leading methods.

In summary, this work presents a new approach to aspect-level sentiment analysis and underlines the importance of modeling how sentiments towards different aspects of a sentence are interconnected.

2. Related Work

This section briefly reviews prior research on aspect-level sentiment classification and on graph convolutional networks.

2.1. Aspect-Level Sentiment Classification

Sentiment analysis, also known as opinion mining, is a core task in Natural Language Processing (NLP), as highlighted in studies by Kim (2014) and Abid et al. (2019). Within this domain, aspect-level sentiment classification is a fine-grained task [13,36,37,38].

Early research in aspect-level sentiment classification centered on extracting features, such as bag-of-words and sentiment lexicons, to train classifiers (Rao and Ravichandran, 2009). These methods ranged from rule-based systems (Ding et al., 2008) to statistical approaches (Jiang et al., 2011), all of which depended heavily on laborious feature engineering. The landscape began to shift with the advent of deep learning: deep neural networks automatically generate dense sentence vectors, removing the need for manual feature crafting (Dong et al., 2014; Hai and Cong, 2015). These low-dimensional vectors encode rich semantic information.

Attention mechanisms, in particular, have improved sentence representations by focusing on the parts of a sentence most relevant to a given aspect (Li et al., 2017; Li and Jiang, 2018; Ma et al., 2019). Wang et al. (2016) introduced ATAE-LSTM, which combines an LSTM with an attention mechanism so that aspect embeddings directly influence the computation of attention weights. Chen et al. (2017) proposed RAM, which employs a multi-attention mechanism on top of a bidirectional LSTM. Ma et al. (2017) and Song et al. (2019) designed models with bidirectional and multi-head attention mechanisms, respectively, to model the relationship between context and aspect more precisely. A common limitation of these attention-based studies, however, is that they handle each aspect in a sentence in isolation, potentially overlooking sentiment dependency information when a sentence contains multiple aspects.

2.2. Graph Convolutional Network

Graph convolutional networks (GCN), first proposed by Bruna et al. (2014), have proven highly effective at processing graph-structured data that is rich in relational information. Considerable research has adapted GCNs to image-related tasks, with notable contributions from Henaff et al. (2015), Defferrard et al. (2016), Qi et al. (2017), and Li et al. (2018). Chen et al. (2019) applied GCN to multi-label image recognition, propagating information among labels to learn interdependent classifiers for each image label. In NLP, GCNs have been applied to semantic role labeling (Marcheggiani and Titov, 2017), machine translation (Bastings et al., 2017), and relation classification (Li et al., 2019). Other studies have used graph neural networks for text classification, treating documents, sentences, or words as nodes and constructing the graph from the relationships between them (Hao et al., 2018; Yue et al., 2018). Together, these studies show that GCNs capture relations between nodes well [39]. Drawing on this body of work, we use GCN to model sentiment dependencies among multiple aspects in a sentence.

3. Methodology

The task of aspect-level sentiment classification is defined as follows. Consider a context $s = \{w_1, w_2, \dots, w_N\}$ containing $N$ words and $K$ aspect terms $\{a_1, a_2, \dots, a_K\}$. Each aspect term $a_i = \{w_1^{a_i}, \dots, w_{M_i}^{a_i}\}$, comprising $M_i$ words, is a subset of the context $s$. The objective is to develop a classifier that accurately predicts the sentiment polarity of each of these aspect terms.

Our proposed SDGCN architecture encompasses several components: input embedding, Bi-LSTM, position encoding, bidirectional attention, GCN, and the output layer. We describe these components in sequence from input to output.

3.1. Input Embedding Layer

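In this layer, individual words are mapped to dense real-valued vectors. We use the pretrained GloVe model [40] to obtain static word embeddings and, alternatively, the pretrained BERT model [41] to obtain contextualized embeddings. Consequently, each word is represented by a vector $e \in \mathbb{R}^{d_{emb}}$, where $d_{emb}$ denotes the embedding dimension. After embedding, the context is represented as $E^{c} \in \mathbb{R}^{N \times d_{emb}}$, and the $i$-th aspect, with $M_i$ words, as $E^{a_i} \in \mathbb{R}^{M_i \times d_{emb}}$.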
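To make the shapes concrete, here is a minimal Python sketch of the embedding step for the Figure 1 sentence. It assumes a tiny hypothetical lookup table standing in for pretrained GloVe vectors (so it illustrates static embeddings only, not BERT), represents each aspect as a token span into the context, and maps out-of-vocabulary words to zero vectors. The helper names `embed` and `embed_sentence` are illustrative, not taken from the paper.

```python
from typing import Dict, List, Tuple

Vector = List[float]


def embed(tokens: List[str], table: Dict[str, Vector], dim: int) -> List[Vector]:
    """Look up each token; unknown tokens map to a zero vector (an assumption of this sketch)."""
    return [list(table.get(tok, [0.0] * dim)) for tok in tokens]


def embed_sentence(
    context: List[str],
    aspect_spans: List[Tuple[int, int]],
    table: Dict[str, Vector],
    dim: int,
) -> Tuple[List[Vector], List[List[Vector]]]:
    """Return the context matrix (N x dim) and one matrix (M_i x dim) per aspect.

    Each aspect is a half-open token span [start, end) into the context,
    since every aspect term is a subset of the context words.
    """
    context_matrix = embed(context, table, dim)
    aspect_matrices = [embed(context[start:end], table, dim) for start, end in aspect_spans]
    return context_matrix, aspect_matrices


# Hypothetical 2-dimensional vectors standing in for pretrained GloVe embeddings.
toy_table = {"price": [1.0, 0.0], "reasonable": [0.5, 0.5], "service": [0.0, 1.0], "poor": [-1.0, -1.0]}
sentence = "the price is reasonable although the service is poor".split()
aspects = [(1, 2), (6, 7)]  # "price" and "service"
E_c, E_a = embed_sentence(sentence, aspects, toy_table, dim=2)
print(len(E_c), [len(m) for m in E_a])  # 9 [1, 1]
```

Because aspects are stored as spans into the context, the aspect matrices are exactly the rows of the context words they cover, which matches the definition of an aspect as a subset of the context.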

3.2. Bidirectional LSTM (Bi-LSTM)

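On top of the embedding layer, a Bi-LSTM captures contextual information for each word. The forward and backward hidden states at position $t$, $\overrightarrow{h}_t \in \mathbb{R}^{d_{hid}}$ and $\overleftarrow{h}_t \in \mathbb{R}^{d_{hid}}$ respectively, where $d_{hid}$ represents the number of hidden units, are concatenated to form the final state:

$$h_t = [\overrightarrow{h}_t; \overleftarrow{h}_t] \in \mathbb{R}^{2d_{hid}}$$

Separate Bi-LSTMs are used to obtain the contextual outputs for the sentence, $H^{c} = [h_1^{c}, \dots, h_N^{c}]$, and for each aspect, $H^{a_i} = [h_1^{a_i}, \dots, h_{M_i}^{a_i}]$, with the aspect Bi-LSTM sharing its parameters across different aspects.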
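The concatenation step can be sketched as follows. To stay short, the sketch substitutes a plain tanh recurrent cell for the LSTM cell (a deliberate simplification, not the model's cell), and all weights are arbitrary. What it shows is the alignment: the backward pass runs over the reversed sequence and its outputs are reversed back, so each position receives one vector of twice the hidden size.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product for list-of-lists matrices."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rnn_pass(xs: List[Vector], w_x: Matrix, w_h: Matrix) -> List[Vector]:
    """Run a tanh RNN cell (a stand-in for an LSTM cell) over xs from left to right."""
    h = [0.0] * len(w_h)
    states = []
    for x in xs:
        h = [math.tanh(a + b) for a, b in zip(matvec(w_x, x), matvec(w_h, h))]
        states.append(h)
    return states


def bidirectional(xs: List[Vector], fw: Tuple[Matrix, Matrix], bw: Tuple[Matrix, Matrix]) -> List[Vector]:
    """Return h_t = [forward_t ; backward_t] for every position t (length 2 * d_hid)."""
    forward = rnn_pass(xs, *fw)
    # The backward cell reads the reversed sequence; re-reversing its outputs aligns
    # backward[t] (which has seen tokens t..N-1) with forward[t] (tokens 0..t).
    backward = rnn_pass(xs[::-1], *bw)[::-1]
    return [f + b for f, b in zip(forward, backward)]


# d_emb = 2, d_hid = 3; the weights are arbitrary illustration values.
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_rec = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 0.0, 0.1]]
fw = ([[0.5, -0.2], [0.1, 0.3], [0.0, 0.4]], w_rec)
bw = ([[0.2, 0.2], [-0.3, 0.1], [0.4, 0.0]], w_rec)
H = bidirectional(xs, fw, bw)
print(len(H), len(H[0]))  # 3 6
```

Re-reversing the backward outputs is the step that is easy to get wrong: without it, the backward half at position t would describe the wrong word.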

3.3. Position Encoding

Because the sentiment of an aspect is more likely to be influenced by nearby context words, we incorporate position encoding. For an aspect $a_i$ among the $K$ aspects, where $i$ is the aspect index, the relative distance $p_t^{i}$ between the $t$-th context word and the $i$-th aspect is computed from $d_t^{i}$, the distance from the context word to the aspect, a predetermined constant $s$, and the context length $N$. Combining each context hidden state $h_t^{c}$ (the Bi-LSTM output for the $t$-th context word) with its position value $p_t^{i}$ yields the position-aware representation $H^{p_i} = [h_1^{p_i}, \dots, h_N^{p_i}]$, which embeds the position information.
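The exact distance formula is not reproduced in this section, so the sketch below assumes one common windowed form rather than the paper's verbatim definition: the distance d_t is 0 inside the aspect and counts tokens to the nearest aspect word outside it, the weight is 1 - d_t / N when d_t <= s and 0 otherwise, and the position-aware state is the context hidden state scaled by that weight. Both the formula and the scaling are assumptions.

```python
from typing import List


def position_weights(n: int, start: int, end: int, s: int) -> List[float]:
    """Assumed position weights p_t for an aspect occupying tokens [start, end).

    d_t is the token distance from word t to the nearest aspect word (0 inside the
    aspect). Within a window of s tokens the weight is 1 - d_t / n; beyond it, 0.
    This exact form is an assumption, not taken from the paper.
    """
    weights = []
    for t in range(n):
        if t < start:
            d = start - t
        elif t >= end:
            d = t - (end - 1)
        else:
            d = 0
        weights.append(1.0 - d / n if d <= s else 0.0)
    return weights


def position_aware(hidden: List[List[float]], weights: List[float]) -> List[List[float]]:
    """Scale each context hidden state h_t^c by p_t (the assumed way of combining them)."""
    return [[p * x for x in h] for h, p in zip(hidden, weights)]


# Figure 1 sentence (9 tokens); the aspect "service" is token 6; window s = 3.
w = position_weights(n=9, start=6, end=7, s=3)
print([round(p, 3) for p in w])  # [0.0, 0.0, 0.0, 0.667, 0.778, 0.889, 1.0, 0.889, 0.778]
```

Under this assumed form, "reasonable" (three tokens before "service") still contributes, while "price" falls outside the window and is zeroed for the "service" aspect.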

3.4. Bidirectional Attention Mechanism

To capture the interaction between context and aspect, our model uses a bidirectional attention mechanism comprising two modules: context-to-aspect attention and aspect-to-context attention. The first module refines the aspect representations based on the context, while the second derives aspect-specific context representations for subsequent GCN processing.

3.4.1. Context to Aspect Attention

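This component assigns weights to the aspect words according to a query vector $q$, obtained by averaging the context hidden outputs $h_t^{c}$:

$$q = \frac{1}{N}\sum_{t=1}^{N} h_t^{c}$$

The attention weight $\alpha_j^{i}$ for each aspect word vector $h_j^{a_i}$ is obtained by normalizing, with a softmax over the $M_i$ aspect words, a score $\gamma_a(h_j^{a_i}, q)$ parameterized by the attention weight matrix $W_a$:

$$\alpha_j^{i} = \frac{\exp\big(\gamma_a(h_j^{a_i}, q)\big)}{\sum_{k=1}^{M_i}\exp\big(\gamma_a(h_k^{a_i}, q)\big)}$$

The new aspect representation is then a weighted combination of the aspect hidden states:

$$\hat{h}^{a_i} = \sum_{j=1}^{M_i} \alpha_j^{i} h_j^{a_i}$$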
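A minimal sketch of context-to-aspect attention follows. The query is the mean of the context hidden states, as described above; the score function is not stated in this section, so the sketch assumes a bilinear score passed through tanh, tanh(h^T W q), similar to the score used in interactive attention networks (IAN). The matrix `w_a` plays the role of the attention weight matrix; all numbers are illustrative.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def mean(vectors: List[Vector]) -> Vector:
    """Element-wise average: the query q over the context hidden states."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def bilinear(h: Vector, w: Matrix, q: Vector) -> float:
    """Compute h^T W q."""
    return sum(h[i] * w[i][j] * q[j] for i in range(len(h)) for j in range(len(q)))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # shift by the max for numerical stability
    exps = [math.exp(x - m) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


def context_to_aspect(context_h: List[Vector], aspect_h: List[Vector], w_a: Matrix) -> Tuple[List[float], Vector]:
    """Return the weights alpha over aspect words and the refined aspect vector."""
    q = mean(context_h)
    # Assumed score: tanh(h_j^T W_a q).
    alpha = softmax([math.tanh(bilinear(h, w_a, q)) for h in aspect_h])
    dim = len(aspect_h[0])
    refined = [sum(a * h[k] for a, h in zip(alpha, aspect_h)) for k in range(dim)]
    return alpha, refined


context_h = [[1.0, 0.0], [0.0, 1.0]]
aspect_h = [[2.0, 0.0], [0.0, 4.0]]
alpha, refined = context_to_aspect(context_h, aspect_h, [[1.0, 0.0], [0.0, 1.0]])
print([round(a, 3) for a in alpha])
```

With an all-zero `w_a`, every score is 0, so the weights are uniform and the refined aspect vector reduces to the plain average of the aspect states; a learned matrix lets the context shift weight towards the more informative aspect words.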

3.4.2. Aspect to Context Attention

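This module captures the aspect-specific context representation and mirrors the context-to-aspect attention. It calculates attention weights $\beta_t^{i}$ over the context words from the new aspect representation $\hat{h}^{a_i}$ and the position-aware representation $H^{p_i} = [h_1^{p_i}, \dots, h_N^{p_i}]$, using a score $\gamma_c$ parameterized by another attention weight matrix $W_c$:

$$\beta_t^{i} = \frac{\exp\big(\gamma_c(h_t^{p_i}, \hat{h}^{a_i})\big)}{\sum_{k=1}^{N}\exp\big(\gamma_c(h_k^{p_i}, \hat{h}^{a_i})\big)}, \qquad x_i = \sum_{t=1}^{N}\beta_t^{i} h_t^{p_i}$$

This step yields an aspect-specific representation $x_i$ for each aspect in the context.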
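Aspect-to-context attention mirrors the previous step with the roles swapped. In this sketch, the refined aspect vector queries the position-aware context states through the same assumed tanh-bilinear score (with a hypothetical matrix `w_c`), and the aspect-specific representation is taken as the attention-weighted sum of those states. Both the score form and the choice of summing position-aware states are assumptions.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # shift by the max for numerical stability
    exps = [math.exp(x - m) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aspect_to_context(pos_h: List[Vector], aspect_vec: Vector, w_c: Matrix) -> Tuple[List[float], Vector]:
    """Attend over position-aware context states using the refined aspect vector.

    Assumed score: tanh(h_t^T W_c a). Returns the weights beta and the
    aspect-specific representation x = sum_t beta_t * h_t.
    """
    proj = [sum(w * a for w, a in zip(row, aspect_vec)) for row in w_c]  # W_c a, computed once
    beta = softmax([math.tanh(sum(h_k * p_k for h_k, p_k in zip(h, proj))) for h in pos_h])
    dim = len(pos_h[0])
    x = [sum(b * h[k] for b, h in zip(beta, pos_h)) for k in range(dim)]
    return beta, x


# The middle state was zeroed by its position weight (outside the window).
pos_h = [[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]]
beta, x = aspect_to_context(pos_h, [1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
print([round(b, 3) for b in beta], [round(v, 3) for v in x])
```

Note that a context state zeroed by the position weights still receives some attention mass, because its score is tanh(0) = 0 rather than minus infinity; it contributes nothing to x, however, since its vector is zero.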

3.5. Graph Convolutional Network (GCN)

GCNs are well suited to data with rich relationships and dependencies. We use a GCN to model the sentiment dependencies between aspects, treating each aspect as a graph node. The final output of each node serves as the classifier representation for the respective aspect. Because the task provides no explicit edges between aspects, we construct these connections ourselves.
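This section does not spell out how the edges are built. Judging only by the variant names used in the results (an adjacent-relation graph for SDGCN-A and a global-relation graph for SDGCN-G) and the undirected graphs mentioned in the conclusion, one plausible construction is sketched below: with aspects indexed in order of appearance, the adjacent graph links each aspect to the aspects immediately before and after it, and the global graph links every pair. Self-loops sit on the diagonal. Treat this as an assumption, not the paper's definition.

```python
from typing import List


def adjacent_graph(k: int) -> List[List[int]]:
    """Assumed SDGCN-A graph: link aspects that are neighbours in sentence order, plus self-loops."""
    return [[1 if abs(i - j) <= 1 else 0 for j in range(k)] for i in range(k)]


def global_graph(k: int) -> List[List[int]]:
    """Assumed SDGCN-G graph: link every pair of aspects (self-loops included)."""
    return [[1] * k for _ in range(k)]


for row in adjacent_graph(4):
    print(row)
```

Both matrices are symmetric, matching undirected graphs; in the adjacent graph, the first and last of four aspects are three hops apart, which is the kind of long-distance relationship the global graph connects directly.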

3.5.1. SDGCN: Sentiment Graph-Based GCN

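In SDGCN, each graph node holds an aspect-specific representation, and a GCN layer transforms the information in a node's neighborhood into a new vector representation. Following Kipf and Welling (2016), every node has a self-loop. The transformation is:

$$x_v^{1} = \mathrm{ReLU}\Big(\sum_{u \in \mathcal{N}(v)} W x_u + b\Big)$$

Here, $\mathcal{N}(v)$ is the neighborhood of node $v$, including $v$ itself through the self-loop; $W$ and $b$ are a learnable weight matrix and bias vector; $x_u$ is the $u$-th aspect-specific representation (see Eq. (9)); and ReLU is the rectified linear unit function. In our experiments, the output dimension of each GCN layer is 600.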

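By stacking multiple SDGCN layers, we extend the neighborhood information available to each node. Each layer takes the output of the previous layer and generates updated node representations:

$$x_v^{l+1} = \mathrm{ReLU}\Big(\sum_{u \in \mathcal{N}(v)} W^{l} x_u^{l} + b^{l}\Big)$$

where $l$ is the layer index, $x_u^{l}$ is the output of layer $l$ for node $u$, and $W^{l}$ and $b^{l}$ are the parameters of layer $l$.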
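The node update and layer stacking can be sketched in plain Python. This is a hedged illustration, assuming an unnormalised sum over each node's neighbourhood read from a 0/1 adjacency matrix with self-loops on the diagonal (Kipf and Welling's formulation additionally normalises by node degree, which is omitted here); the weights and inputs are toy values.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def gcn_layer(adj: Sequence[Sequence[int]], xs: List[Vector], w: Matrix, b: Vector) -> List[Vector]:
    """One layer: x_v' = ReLU(sum over u in N(v) of W x_u, plus b); N(v) is read from adj.

    adj[v][u] == 1 means u is in v's neighbourhood; self-loops are expected on the
    diagonal. No degree normalisation is applied (an assumption of this sketch).
    """
    transformed = [[sum(wk * xk for wk, xk in zip(row, x)) for row in w] for x in xs]
    out = []
    for v in range(len(xs)):
        agg = list(b)
        for u in range(len(xs)):
            if adj[v][u]:
                agg = [a + t for a, t in zip(agg, transformed[u])]
        out.append([max(0.0, a) for a in agg])  # ReLU
    return out


def gcn_stack(adj, xs: List[Vector], layers: List[Tuple[Matrix, Vector]]) -> List[Vector]:
    """Feed each layer's node outputs into the next, widening every node's reach by one hop."""
    for w, b in layers:
        xs = gcn_layer(adj, xs, w, b)
    return xs


# Three aspects on a chain graph (adjacent relations plus self-loops); only aspect 0 carries a signal.
adj = [[1, 1, 0], [1, 1, 1], [0, 1, 1]]
xs = [[1.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
zero_bias = [0.0, 0.0]
print(gcn_layer(adj, xs, identity, zero_bias))          # [[1.0, 0.0], [1.0, 0.0], [0.0, 0.0]]
print(gcn_stack(adj, xs, [(identity, zero_bias)] * 2))  # [[2.0, 0.0], [2.0, 0.0], [1.0, 0.0]]
```

In this chain graph, aspect 2 is two hops from aspect 0, so it only receives aspect 0's signal after the second layer; that is the widening of the neighbourhood that stacking provides.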

3.6. Aspect Classification via SDGCN

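The SDGCN framework ends with an aspect classification layer. The final layer's output for node $i$, $x_i^{L}$, where $L$ is the number of GCN layers, is used as the aspect-specific classifier representation. A fully connected layer then maps $x_i^{L}$ to the $C$-class sentiment space:

$$z_i = W_z x_i^{L} + b_z$$

where $W_z \in \mathbb{R}^{C \times d}$ and $b_z \in \mathbb{R}^{C}$, with $d$ the output dimension of the GCN layers. The probability that the $i$-th aspect belongs to sentiment class $j$ is calculated with the softmax function:

$$\hat{y}_{ij} = \frac{\exp(z_{ij})}{\sum_{k=1}^{C}\exp(z_{ik})}$$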
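A sketch of the output layer: a linear map from one aspect's final node vector to C logits, followed by a softmax. The weights are placeholders, and the ordering of the three polarity labels is an arbitrary choice for illustration.

```python
import math
from typing import List


def classify(x: List[float], w: List[List[float]], b: List[float]) -> List[float]:
    """Return softmax(W x + b): probabilities over the C sentiment classes for one aspect."""
    logits = [sum(wk * xk for wk, xk in zip(row, x)) + bias for row, bias in zip(w, b)]
    m = max(logits)  # shift by the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical final node vector (d = 2) and weights for C = 3 classes.
probs = classify([1.0, 2.0], [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]], [0.0, 0.0, 0.0])
labels = ["positive", "neutral", "negative"]
print(labels[probs.index(max(probs))])  # neutral
```

Subtracting the largest logit before exponentiating leaves the probabilities unchanged but avoids overflow for large logits.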

3.7. Optimization in SDGCN Training

The SDGCN model is trained by minimizing a loss function that combines cross-entropy with an $L_2$-regularization term. The loss for a single sentence is:

$$\mathcal{L} = -\sum_{i=1}^{K}\sum_{j=1}^{C} y_{ij}\log \hat{y}_{ij} + \lambda \sum_{\theta \in \Theta} \theta^{2}$$

where $y_{ij}$ denotes the one-hot label of the $i$-th aspect for the $j$-th class, $\hat{y}_{ij}$ is the predicted probability that the $i$-th aspect belongs to class $j$, $\lambda$ is the $L_2$-regularization coefficient, and $\Theta$ contains the parameters subject to regularization. In addition, dropout is applied during training to mitigate overfitting.
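The per-sentence loss can be sketched as below, assuming the regularization term takes the form lambda times the sum of squared parameters over a flat list of regularized parameters (some implementations use lambda / 2 instead). Dropout is left out because it acts on the forward pass rather than on the loss formula.

```python
import math
from typing import List


def sentence_loss(probs: List[List[float]], labels: List[List[int]], params: List[float], lam: float) -> float:
    """Cross-entropy summed over the K aspects of one sentence, plus lam * sum(theta^2).

    probs[i][j]  : predicted probability that aspect i has class j
    labels[i][j] : one-hot gold label (1 for the true class, otherwise 0)
    params       : flattened parameters subject to regularization
    """
    # Only gold-class terms are non-zero, so zero-label terms are skipped (this also avoids log(0)).
    ce = -sum(math.log(p) for p_row, y_row in zip(probs, labels) for p, y in zip(p_row, y_row) if y == 1)
    l2 = lam * sum(theta * theta for theta in params)
    return ce + l2


# Two aspects, three classes; the parameter values and lam are illustrative.
loss = sentence_loss(
    probs=[[0.5, 0.25, 0.25], [0.1, 0.8, 0.1]],
    labels=[[1, 0, 0], [0, 1, 0]],
    params=[1.0, -2.0],
    lam=0.01,
)
print(round(loss, 4))  # 0.9663
```

Here the cross-entropy part is -(ln 0.5 + ln 0.8), about 0.9163, and the penalty is 0.01 x (1 + 4) = 0.05.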

4. Experimental Analysis

4.1. Datasets and Experimental Configurations

We evaluated SDGCN on the two SemEval 2014 Task 4 datasets (see Table 1), which consist of laptop and restaurant reviews. Each dataset is divided into training and test sets, and each review contains one or more aspects annotated with their sentiment polarities (positive, neutral, or negative). The distribution of the number of aspects per sentence is illustrated in Figure ??; sentences containing multiple aspects are common.

For the input embeddings, we used GloVe and BERT word representations, with dimensionalities of 300 and 768, respectively. The number of LSTM hidden units was set to 300, and the output dimension of the GCN layer to 600. All weight matrices except those of the final fully connected layer were initialized from a uniform distribution; the final layer was initialized from a normal distribution and is subject to regularization. We trained with a dropout rate of 0.5, a batch size of 32, and the Adam optimizer with a learning rate of 0.001. Model performance was evaluated using accuracy and Macro-F1.
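For reference, accuracy and Macro-F1 over the three polarities can be computed as follows. Macro-F1 is the unweighted mean of per-class F1 scores, so every class counts equally regardless of how often it occurs; treating undefined precision or recall as 0 is an assumed convention of this sketch, and the toy labels are hypothetical.

```python
from typing import Sequence


def accuracy(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Fraction of aspects whose predicted polarity matches the gold polarity."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def macro_f1(gold: Sequence[str], pred: Sequence[str], classes: Sequence[str]) -> float:
    """Unweighted mean of per-class F1; undefined precision or recall is treated as 0."""
    scores = []
    for c in classes:
        tp = sum(g == c and p == c for g, p in zip(gold, pred))
        fp = sum(g != c and p == c for g, p in zip(gold, pred))
        fn = sum(g == c and p != c for g, p in zip(gold, pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        scores.append(2 * precision * recall / (precision + recall) if precision + recall else 0.0)
    return sum(scores) / len(scores)


gold = ["positive", "positive", "negative", "neutral"]
pred = ["positive", "negative", "negative", "neutral"]
print(accuracy(gold, pred))  # 0.75
print(round(macro_f1(gold, pred, ["positive", "neutral", "negative"]), 4))  # 0.7778
```

In this toy case the per-class F1 scores are 2/3 (positive), 1 (neutral), and 2/3 (negative), so Macro-F1 is 7/9 while accuracy is 3/4.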

4.2. Comparative Frameworks

We compare SDGCN with the following baselines: TD-LSTM, ATAE-LSTM, MemNet, IAN, RAM, PBAN, TSN, AEN, and AEN-BERT. These approaches predict sentiment polarity from aspect-specific representations, most of them by combining attention mechanisms with LSTM or GRU networks.

4.3. Overall Outcomes

Table 2 reports the experimental results. To allow a fair comparison across different word representations, we divide the models into two groups: those based on GloVe embeddings and those based on BERT. Our SDGCN model achieves the best results in both groups, and its BERT-based variant, SDGCN-BERT, achieves the best results overall.

Among the GloVe-based methods, TD-LSTM lags behind the others. We attribute this to its rudimentary integration of aspect information, which is too coarse for nuanced sentiment analysis. In contrast, ATAE-LSTM, MemNet, and IAN, which use attention mechanisms, improve markedly over TD-LSTM because they explicitly account for the aspects.

RAM, which combines multiple attention mechanisms with a recurrent neural network, captures more detailed aspect-specific representations and outperforms the basic attention-based models. PBAN, which uses position embeddings, performs comparably to RAM: better on the Restaurant dataset but slightly worse on the Laptop dataset. TSN underperforms on both datasets, which we attribute to its oversimplified framework, which models the context and aspects inadequately. AEN is slightly better than TSN but falls short of RAM and PBAN, suggesting that dispensing with recurrent neural networks reduces model complexity at some cost in performance.

Comparing the two SDGCN variants, SDGCN-A and SDGCN-G, yields a key insight. SDGCN-G, based on the global-relation graph, slightly outperforms SDGCN-A, based on the adjacent-relation graph, in both accuracy and Macro-F1. This suggests that adjacent relationships alone may be insufficient to capture the interactions among multiple aspects, especially when long-distance relationships are involved. Furthermore, incorporating position information into SDGCN-A and SDGCN-G yields substantial performance gains, underscoring the importance of position encoding.

The BERT-based models, which use pretrained BERT embeddings, clearly outperform their GloVe-based counterparts. The advantage is particularly evident for SDGCN-BERT: on the Restaurant dataset it gains 1.09% in accuracy and 1.86% in Macro-F1, and on the Laptop dataset it gains 1.42% and 2.03% in the same metrics, respectively. These improvements demonstrate the effectiveness of the proposed SDGCN model for aspect-based sentiment analysis.

4.4. In-Depth Examination of GCN’s Influence on Model Efficacy

This section presents a series of ablation experiments that assess the impact of the Graph Convolutional Network (GCN) module on aspect-based sentiment analysis. The models compared are:

BiAtt+GCN (i.e., SDGCN): our full model, which combines the bidirectional attention mechanism with GCN to model inter-aspect sentiment relations.

BiAtt: BiAtt+GCN without the GCN layer; it predicts the sentiment of each aspect in isolation, without the relational context that GCN provides.

Att+GCN: BiAtt+GCN without the context-to-aspect attention; the GCN component is retained.

Att: a baseline that relies on attention alone, without the GCN module and its relational information.

Table 3 reports the results of these models. Models that include the GCN module (Att+GCN and BiAtt+GCN) consistently outperform their counterparts without it. This gap shows that GCN improves the model's ability to predict sentiment polarities and highlights its role in capturing the sentiment dependencies between aspects in a text. Comparing models that differ in their use of GCN and attention clarifies how each component contributes, and confirms the important role of GCN in aspect-based sentiment analysis.

4.5. Detailed Analysis Through Case Studies

This section presents case studies on examples from the Laptop dataset to illustrate how models with and without the Graph Convolutional Network (GCN) differ. Each example contains multiple aspects and gives an intuitive view of the capabilities of our GCN-enhanced model compared with its counterpart.

Consider the first example: "I love the keyboard and the screen." Its aspects are "keyboard" and "screen". The model without GCN focuses predominantly on the word "love" to determine the sentiment polarities of these aspects. The GCN-equipped model, in contrast, attends not only to "love" but also to the connective "and". This indicates that our GCN model identifies the sentiment link between "keyboard" and "screen" through the connective and predicts the sentiments of both aspects jointly.

In the second example, "Air has higher resolution but the fonts are small.", the aspects "resolution" and "fonts" are connected by the contrasting conjunction "but", implying opposing sentiments. The model without GCN treats the aspects separately, associating "higher" with a positive sentiment for "resolution" and "small" with a negative sentiment for "fonts", while ignoring the interplay between them. The GCN model, by contrast, also attends to the conjunction "but", which lets it capture the inverse sentiment relationship between "resolution" and "fonts" and predict the polarity of each aspect more accurately.

These examples illustrate the advantage of our GCN model: it attends not only to the words that express sentiment towards individual aspects but also to textual elements that signal relationships between aspects. The attention mechanism highlights words that express inter-aspect dependencies, and the GCN module then exploits these dependencies, allowing the model to capture the sentiment dynamics within a sentence and predict aspect-level sentiment more accurately.

5. Conclusion and Future Directions

This study presents SDGCN, a model for aspect-level sentiment analysis. At its core, SDGCN applies Graph Convolutional Networks (GCN) to model the sentiment interconnections among the aspects within a single sentence. SDGCN also integrates a bidirectional attention mechanism enhanced with position encoding, which produces representations that are aspect-specific and informed by the position of each aspect in the text. Through GCN message passing, SDGCN captures sentiment dependencies between aspects, a facet often overlooked in previous research.

Our empirical evaluations on the SemEval 2014 datasets demonstrate SDGCN's superior performance, with the SDGCN-BERT variant achieving the best results among the compared models. Case studies show that SDGCN identifies not only the key words that determine aspect sentiment polarities but also the words that establish sentiment relationships between different aspects. This dual focus allows SDGCN to capture how sentiments towards different aspects interact.

In future work, we will refine the sentiment graph structures that link aspects. The current model uses two types of undirected sentiment graphs, which are effective but could be made more sophisticated. We hypothesize that the contextual cues present in the text could be used to construct richer and more precise graph structures, which could in turn improve sentiment classification and reveal deeper sentiment interrelations between aspects.

References

- Pang, B.; Lee, L. Opinion mining and sentiment analysis. Foundations and Trends® in Information Retrieval 2008, 2, 1–135.

- Nazir, A.; Rao, Y.; Wu, L.; Sun, L. Issues and challenges of aspect-based sentiment analysis: A comprehensive survey. IEEE Transactions on Affective Computing 2020, 13, 845–863.

- Fei, H.; Wu, S.; Li, J.; Li, B.; Li, F.; Qin, L.; Zhang, M.; Zhang, M.; Chua, T.S. LasUIE: Unifying Information Extraction with Latent Adaptive Structure-aware Generative Language Model. Proceedings of the Advances in Neural Information Processing Systems, NeurIPS 2022, 2022, pp. 15460–15475.

- Dong, L.; Wei, F.; Tan, C.; Tang, D.; Zhou, M.; Xu, K. Adaptive recursive neural network for target-dependent twitter sentiment classification. Proceedings of the 52nd annual meeting of the association for computational linguistics (volume 2: Short papers), 2014, Vol. 2, pp. 49–54.

- Fei, H.; Li, J.; Wu, S.; Li, C.; Ji, D.; Li, F. Global Inference with Explicit Syntactic and Discourse Structures for Dialogue-Level Relation Extraction. Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, IJCAI, 2022, pp. 4082–4088.

- Wu, S.; Fei, H.; Ren, Y.; Ji, D.; Li, J. Learn from Syntax: Improving Pair-wise Aspect and Opinion Terms Extraction with Rich Syntactic Knowledge. Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, 2021, pp. 3957–3963.

- Xiang, C.; Zhang, J.; Li, F.; Fei, H.; Ji, D. A semantic and syntactic enhanced neural model for financial sentiment analysis. Information Processing & Management 2022, 59, 102943.

- Fei, H.; Zhang, Y.; Ren, Y.; Ji, D. Latent Emotion Memory for Multi-Label Emotion Classification. Proceedings of the AAAI Conference on Artificial Intelligence, 2020, pp. 7692–7699.

- Tang, D.; Qin, B.; Feng, X.; Liu, T. Effective LSTMs for Target-Dependent Sentiment Classification. Proceedings of COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, 2016, pp. 3298–3307.

- Wu, S.; Fei, H.; Li, F.; Zhang, M.; Liu, Y.; Teng, C.; Ji, D. Mastering the Explicit Opinion-Role Interaction: Syntax-Aided Neural Transition System for Unified Opinion Role Labeling. Proceedings of the Thirty-Sixth AAAI Conference on Artificial Intelligence, 2022, pp. 11513–11521.

- Ma, D.; Li, S.; Zhang, X.; Wang, H. Interactive Attention Networks for Aspect-Level Sentiment Classification. Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, IJCAI-17, 2017, pp. 4068–4074.

- Fei, H.; Zhang, M.; Ji, D. Cross-Lingual Semantic Role Labeling with High-Quality Translated Training Corpus. Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, 2020, pp. 7014–7026.

- Chen, P.; Sun, Z.; Bing, L.; Yang, W. Recurrent attention network on memory for aspect sentiment analysis. Proceedings of the 2017 conference on empirical methods in natural language processing, 2017, pp. 452–461.

- Fei, H.; Zhang, M.; Li, B.; Ji, D. End-to-end Semantic Role Labeling with Neural Transition-based Model. Proceedings of the AAAI Conference on Artificial Intelligence, 2021, pp. 12803–12811.

- Zhang, M.; Qian, T. Convolution over hierarchical syntactic and lexical graphs for aspect level sentiment analysis. Proceedings of the 2020 conference on empirical methods in natural language processing (EMNLP), 2020, pp. 3540–3549.

- Wu, S.; Fei, H.; Ren, Y.; Li, B.; Li, F.; Ji, D. High-Order Pair-Wise Aspect and Opinion Terms Extraction With Edge-Enhanced Syntactic Graph Convolution. IEEE ACM Trans. Audio Speech Lang. Process. 2021, 29, 2396–2406.

- Chen, C.; Teng, Z.; Zhang, Y. Inducing target-specific latent structures for aspect sentiment classification. Proceedings of the 2020 conference on empirical methods in natural language processing (EMNLP), 2020, pp. 5596–5607.

- Fei, H.; Wu, S.; Ren, Y.; Zhang, M. Matching Structure for Dual Learning. Proceedings of the International Conference on Machine Learning, ICML, 2022, pp. 6373–6391.

- Huang, L.; Sun, X.; Li, S.; Zhang, L.; Wang, H. Syntax-aware graph attention network for aspect-level sentiment classification. Proceedings of the 28th international conference on computational linguistics, 2020, pp. 799–810.

- Fei, H.; Li, F.; Li, B.; Ji, D. Encoder-Decoder Based Unified Semantic Role Labeling with Label-Aware Syntax. Proceedings of the AAAI Conference on Artificial Intelligence, 2021, pp. 12794–12802.

- Fei, H.; Ren, Y.; Zhang, Y.; Ji, D. Nonautoregressive Encoder-Decoder Neural Framework for End-to-End Aspect-Based Sentiment Triplet Extraction. IEEE Transactions on Neural Networks and Learning Systems 2023, 34, 5544–5556.

- Hou, X.; Huang, J.; Wang, G.; He, X.; Zhou, B. Selective attention based graph convolutional networks for aspect-level sentiment classification. arXiv preprint arXiv:1910.10857, 2019.

- Fei, H.; Li, J.; Ren, Y.; Zhang, M.; Ji, D. Making Decision like Human: Joint Aspect Category Sentiment Analysis and Rating Prediction with Fine-to-Coarse Reasoning. Proceedings of the ACM Web Conference 2022, WWW, 2022, pp. 3042–3051.

- Jiang, L.; Yu, M.; Zhou, M.; Liu, X.; Zhao, T. Target-dependent twitter sentiment classification. Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies-Volume 1. Association for Computational Linguistics, 2011, pp. 151–160.

- Huang, B.; Carley, K.M. Syntax-aware aspect level sentiment classification with graph attention networks. arXiv preprint arXiv:1909.02606, 2019.

- Zhang, C.; Li, Q.; Song, D. Aspect-based sentiment classification with aspect-specific graph convolutional networks. arXiv preprint arXiv:1909.03477, 2019.

- Sun, K.; Zhang, R.; Mensah, S.; Mao, Y.; Liu, X. Aspect-level sentiment analysis via convolution over dependency tree. Proceedings of the 2019 conference on empirical methods in natural language processing and the 9th international joint conference on natural language processing (EMNLP-IJCNLP), 2019, pp. 5679–5688.

- Wang, K.; Shen, W.; Yang, Y.; Quan, X.; Wang, R. Relational Graph Attention Network for Aspect-based Sentiment Analysis. arXiv preprint arXiv:2004.12362, 2020.

- Wagner, J.; Arora, P.; Cortes, S.; Barman, U.; Bogdanova, D.; Foster, J.; Tounsi, L. Dcu: Aspect-based polarity classification for semeval task 4. Proceedings of the 8th international workshop on semantic evaluation (SemEval 2014), 2014, pp. 223–229.

- Tang, D.; Qin, B.; Liu, T. Aspect level sentiment classification with deep memory network. arXiv preprint arXiv:1605.08900, 2016.

- Wang, Y.; Huang, M.; Zhao, L.; et al. Attention-based LSTM for aspect-level sentiment classification. Proceedings of the 2016 conference on empirical methods in natural language processing, 2016, pp. 606–615.

- Li, J.; Fei, H.; Liu, J.; Wu, S.; Zhang, M.; Teng, C.; Ji, D.; Li, F. Unified Named Entity Recognition as Word-Word Relation Classification. Proceedings of the AAAI Conference on Artificial Intelligence, 2022, pp. 10965–10973.

- Fei, H.; Ren, Y.; Zhang, Y.; Ji, D.; Liang, X. Enriching contextualized language model from knowledge graph for biomedical information extraction. Briefings in Bioinformatics 2021, 22.

- Fei, H.; Ren, Y.; Ji, D. Boundaries and edges rethinking: An end-to-end neural model for overlapping entity relation extraction. Information Processing & Management 2020, 57, 102311.

- Pontiki, M.; Galanis, D.; Pavlopoulos, J.; Papageorgiou, H.; Androutsopoulos, I.; Manandhar, S. SemEval-2014 Task 4: Aspect Based Sentiment Analysis. Proceedings of the 8th International Workshop on Semantic Evaluation (SemEval 2014); Association for Computational Linguistics and Dublin City University: Dublin, Ireland, 2014; pp. 27–35.

- Liu, J.; Zhang, Y. Attention modeling for targeted sentiment. Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 2, Short Papers, 2017, pp. 572–577.

- Li, L.; Liu, Y.; Zhou, A. Hierarchical Attention Based Position-Aware Network for Aspect-Level Sentiment Analysis. Proceedings of the 22nd Conference on Computational Natural Language Learning, 2018, pp. 181–189.

- Li, X.; Bing, L.; Lam, W.; Shi, B. Transformation networks for target-oriented sentiment classification. arXiv preprint arXiv:1805.01086, 2018.

- Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. Proc. ICML, 2013, Vol. 30, p. 3.

- Pennington, J.; Socher, R.; Manning, C. GloVe: Global vectors for word representation. Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), 2014, pp. 1532–1543.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).