Submitted: 28 November 2023

Posted: 29 November 2023


Abstract

Keywords:

MSC: 90C56; 90C26

1. Introduction

- A review of techniques and strategies aimed at reducing the set of selected potentially optimal hyper-rectangles in DIRECT-type algorithms.

- Introduction of a novel grouping strategy that simplifies the identification of hyper-rectangles in the selection procedure of DIRECT-type algorithms.

- The new approach incorporates a specific vertex database to avoid sampling more than two points in descendant subregions.

- The proposed improvements positively impact the performance of the BIRECTv algorithm.

2. Materials and Methods

2.1. The Original BIRECT Algorithm

2.1.1. Selection criteria

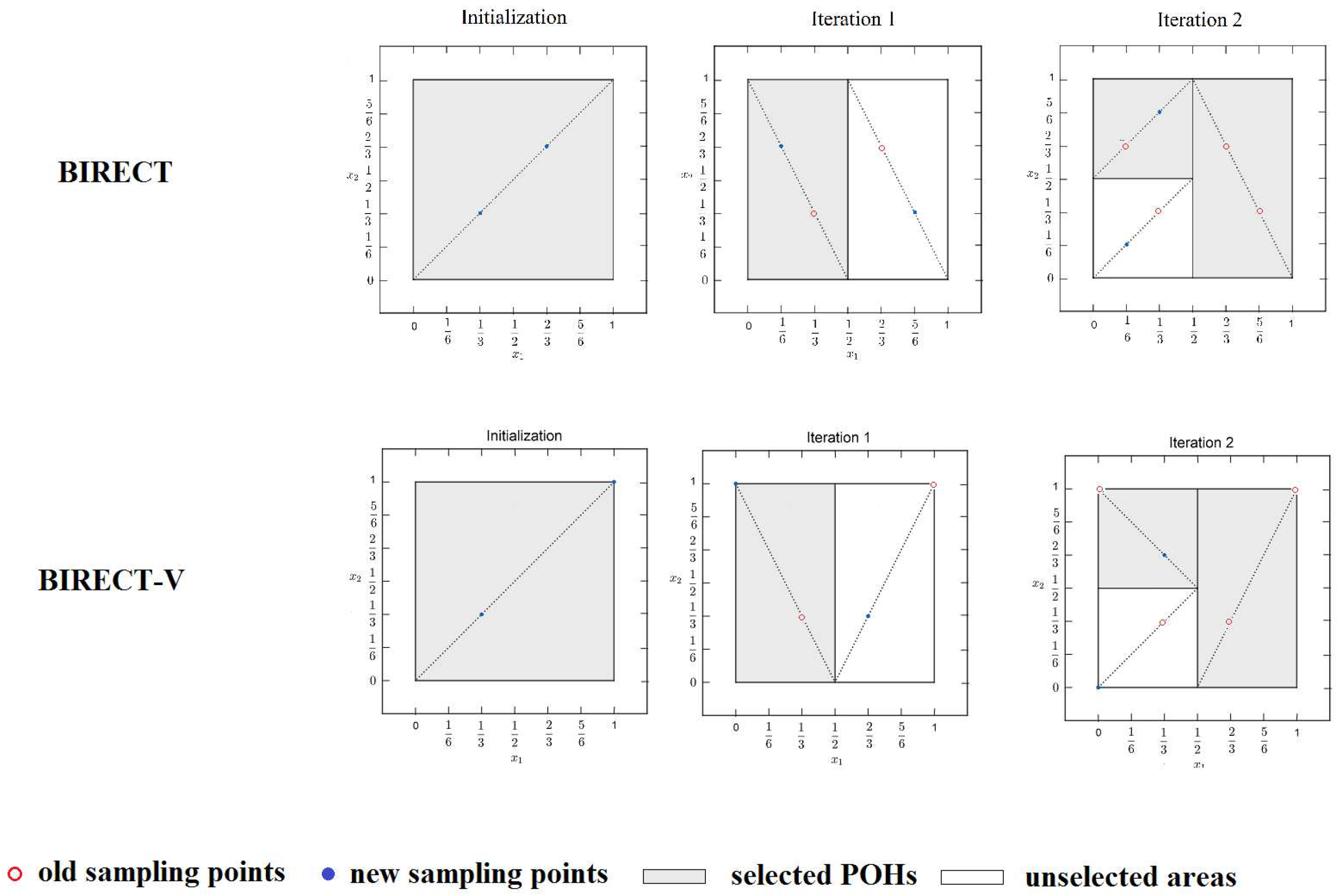

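- At each iteration $k$, starting from the current partition $\mathcal{P}_k = \{\bar{D}^i_k : i \in \mathbb{I}_k\}$, where $\mathbb{I}_k$ is the index set identifying the current partition and each $\bar{D}^i_k = [\mathbf{a}^i, \mathbf{b}^i]$ is a hyper-rectangle, a new partition $\mathcal{P}_{k+1}$ is created by bisecting a set of potentially optimal hyper-rectangles from $\mathcal{P}_k$.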

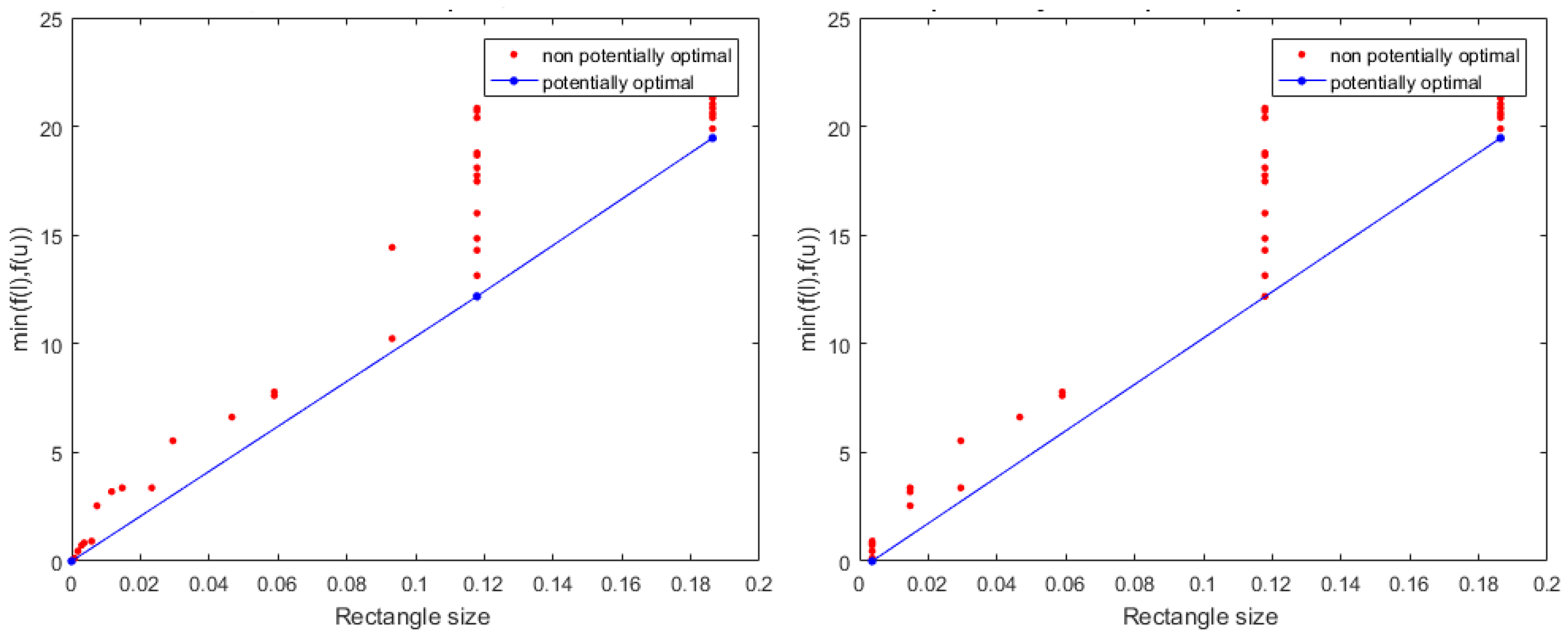

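- The identification of a potentially optimal hyper-rectangle is based on lower-bound estimates of the objective function over each hyper-rectangle, computed for a fixed rate of change $\tilde{L} > 0$ (analogous to a Lipschitz constant).

- Let $\mathbf{u}^i$ and $\mathbf{v}^i$ denote the two points sampled in the hyper-rectangle $\bar{D}^i_k = [\mathbf{a}^i, \mathbf{b}^i]$, let $\varepsilon > 0$ be a positive constant, and let $f_{\min}$ be the current best-known function value. A hyper-rectangle $\bar{D}^h_k$, $h \in \mathbb{I}_k$, is considered potentially optimal if there exists some rate of change $\tilde{L} > 0$ such that

$$\min\{f(\mathbf{u}^h), f(\mathbf{v}^h)\} - \tilde{L}\,\delta^h_k \le \min\{f(\mathbf{u}^i), f(\mathbf{v}^i)\} - \tilde{L}\,\delta^i_k, \quad \forall i \in \mathbb{I}_k,$$

$$\min\{f(\mathbf{u}^h), f(\mathbf{v}^h)\} - \tilde{L}\,\delta^h_k \le f_{\min} - \varepsilon\,|f_{\min}|,$$

where $\delta^i_k$ is the measure (distance, size) of the hyper-rectangle $\bar{D}^i_k$, determined by the length of its main diagonal, $\|\mathbf{b}^i - \mathbf{a}^i\|_2$.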
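The selection criteria above can be checked directly. The Python sketch below is a minimal illustration, not the BIRECT implementation: it assumes each hyper-rectangle is summarised by its measure $\delta^i_k$ and by $\min\{f(\mathbf{u}^i), f(\mathbf{v}^i)\}$, and it decides whether an admissible rate of change $\tilde{L} > 0$ exists by intersecting the lower bounds on $\tilde{L}$ imposed by smaller hyper-rectangles with the upper bounds imposed by larger ones (the usual DIRECT-type argument). The function name `potentially_optimal` and the default `eps=1e-4` are illustrative assumptions.

```python
import math
from typing import List, Sequence


def potentially_optimal(deltas: Sequence[float], fvals: Sequence[float],
                        eps: float = 1e-4) -> List[int]:
    """Indices h for which some rate of change L > 0 satisfies
        fvals[h] - L*deltas[h] <= fvals[i] - L*deltas[i]  for all i, and
        fvals[h] - L*deltas[h] <= f_min - eps*|f_min|.

    deltas[i]: measure of hyper-rectangle i (assumed > 0).
    fvals[i]:  assumed to be min{f(u_i), f(v_i)} for hyper-rectangle i.
    """
    f_min = min(fvals)
    selected = []
    for h, (dh, fh) in enumerate(zip(deltas, fvals)):
        lower, upper = 0.0, math.inf  # admissible range for L
        feasible = True
        for i, (di, fi) in enumerate(zip(deltas, fvals)):
            if i == h:
                continue
            if di == dh:
                # Same measure: h must have the lowest (or tied) value.
                if fi < fh:
                    feasible = False
                    break
            elif di < dh:
                # Smaller hyper-rectangles bound L from below.
                lower = max(lower, (fh - fi) / (dh - di))
            else:
                # Larger hyper-rectangles bound L from above.
                upper = min(upper, (fi - fh) / (di - dh))
        if not feasible or upper <= 0.0 or lower > upper:
            continue
        # The largest admissible L gives the lowest bound for h, so it is the
        # best choice for testing the sufficient-decrease (epsilon) condition.
        if math.isinf(upper) or fh - upper * dh <= f_min - eps * abs(f_min):
            selected.append(h)
    return selected
```

For example, with measures `[0.1, 0.2, 0.2, 0.4]` and values `[1.0, 0.5, 0.8, 2.0]` the sketch selects indices 1 and 3. Measures are compared with exact equality here; with floating-point diagonals, nearly equal measures would be treated as different sizes, which is where a tolerance of the kind discussed in Section 2.3 could be applied.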

2.1.2. Division and sampling criteria

- After the initial partitioning, BIRECT proceeds in subsequent iterations by bisecting the potentially optimal hyper-rectangles and evaluating the objective function at the new sampling points.

- New sampling points are obtained from previously sampled points by shifting them along the branching coordinate, adding or subtracting a distance equal to half of that coordinate's side length. This allows previously sampled points to be reused in descendant subregions.

- An important aspect of the algorithm is how the selected hyper-rectangles are divided. For each potentially optimal hyper-rectangle $[\mathbf{a}^i, \mathbf{b}^i]$, the set of coordinates (edges) of maximal length, $M = \{ j : b^i_j - a^i_j = \max_{1 \le l \le n} (b^i_l - a^i_l) \}$, is computed, and the hyper-rectangle is bisected along the branching coordinate $x_{br}$, where $br = \min M$ is the lowest index $j$ among these longest edges.
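The division and sampling rules above can be sketched as follows. This is a minimal Python illustration under stated assumptions: the two sampled points of a hyper-rectangle are assumed to lie at 1/3 and 2/3 of one of its diagonals (for the initial hyper-rectangle, the main diagonal), so along each coordinate one point sits at one third of the edge and the other at two thirds. The `Box` layout and the names `branching_coordinate` and `bisect_box` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Box:
    """Hyper-rectangle [a, b] with its two sampled points p and q (hypothetical layout)."""
    a: List[float]
    b: List[float]
    p: List[float]
    q: List[float]


def branching_coordinate(a: List[float], b: List[float]) -> int:
    """Lowest index among the coordinates (edges) of maximal length."""
    lengths = [bj - aj for aj, bj in zip(a, b)]
    longest = max(lengths)
    return min(j for j, length in enumerate(lengths) if length == longest)


def bisect_box(box: Box) -> Tuple[int, Box, Box]:
    """Bisect `box` along its branching coordinate. Each child keeps one
    parent point and gets one new point: the other parent point shifted by
    half the side length along the branching coordinate."""
    j = branching_coordinate(box.a, box.b)
    half = (box.b[j] - box.a[j]) / 2.0
    mid = box.a[j] + half

    # The point with the smaller j-th coordinate (at 1/3 of the edge) falls in
    # the lower half; the other one (at 2/3) falls in the upper half.
    low, high = (box.p, box.q) if box.p[j] < box.q[j] else (box.q, box.p)

    # Lower child: keep `low`, add `high` shifted by -half along x_j.
    new_low = list(high)
    new_low[j] -= half
    b_low = list(box.b)
    b_low[j] = mid
    lower = Box(list(box.a), b_low, list(low), new_low)

    # Upper child: keep `high`, add `low` shifted by +half along x_j.
    new_high = list(low)
    new_high[j] += half
    a_high = list(box.a)
    a_high[j] = mid
    upper = Box(a_high, list(box.b), list(high), new_high)

    return j, lower, upper
```

For the unit square with points (1/3, 1/3) and (2/3, 2/3), `bisect_box` splits along $x_1$ (both edges are equally long, so the lowest index wins), keeps (1/3, 1/3) in the lower child and adds (1/6, 2/3), and keeps (2/3, 2/3) in the upper child and adds (5/6, 1/3). In each child the two points again sit at 1/3 and 2/3 of a diagonal, so the rule can be applied recursively with one new function evaluation per child.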

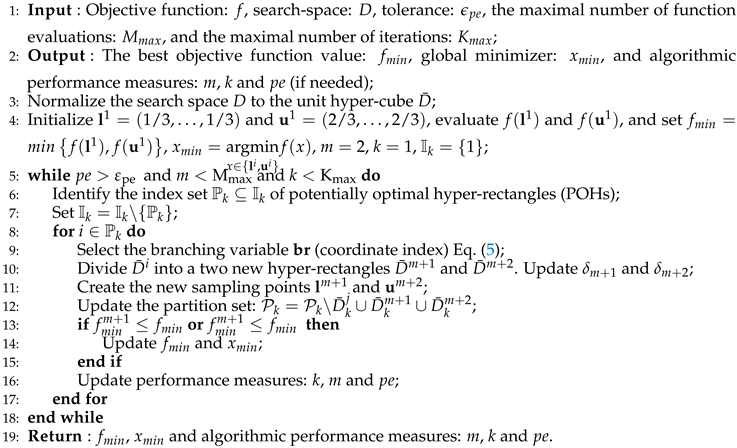

Algorithm 1. Main steps of the BIRECT algorithm

2.2. Description of the BIRECTv Algorithm

2.3. Integrating Scheme for Identification of Potentially Optimal Hyper-rectangles in a DIRECT-based Framework

- A tolerance of $10^{-2}$ (0.01) means that the algorithm considers hyper-rectangles whose norm values $\delta^i_k$ and $\delta^j_k$ are within 0.01 of each other.
  - It allows a relatively large difference between $\delta^i_k$ and $\delta^j_k$, so the algorithm is more lenient in selecting potentially optimal hyper-rectangles.
  - This may result in a larger set of potentially optimal hyper-rectangles, including some whose norm values differ noticeably.

- A tolerance of $10^{-7}$ (0.0000001) means that the algorithm considers hyper-rectangles whose norm values $\delta^i_k$ and $\delta^j_k$ are within $10^{-7}$ of each other.
  - This much smaller tolerance makes the algorithm far stricter in selecting potentially optimal hyper-rectangles.
  - This results in a smaller set of potentially optimal hyper-rectangles, including only those with extremely close norm values.
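The effect of the two tolerances can be illustrated with a small Python sketch. It assumes, for illustration only, that the tolerance is used to admit every hyper-rectangle whose norm value (measure) lies within `tol` of the measure of an already selected one; the helper name `within_tolerance` is hypothetical and this is not claimed to be the exact rule used in BIRECTv.

```python
from typing import List, Sequence


def within_tolerance(deltas: Sequence[float], selected: Sequence[int],
                     tol: float) -> List[int]:
    """Sorted indices of all hyper-rectangles whose measure lies within `tol`
    of the measure of at least one already selected hyper-rectangle."""
    chosen = set(selected)
    for i, d in enumerate(deltas):
        # Compare against every selected measure; a larger tol admits more.
        if any(abs(d - deltas[h]) <= tol for h in selected):
            chosen.add(i)
    return sorted(chosen)
```

With measures `[0.5, 0.505, 0.5000000001, 0.3, 0.305]` and the hyper-rectangle of measure 0.5 already selected, a tolerance of 0.01 also admits those with measures 0.505 and 0.5000000001, whereas $10^{-7}$ admits only the one with 0.5000000001. This mirrors the lenient versus strict behaviour described above: the larger tolerance yields the larger set.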

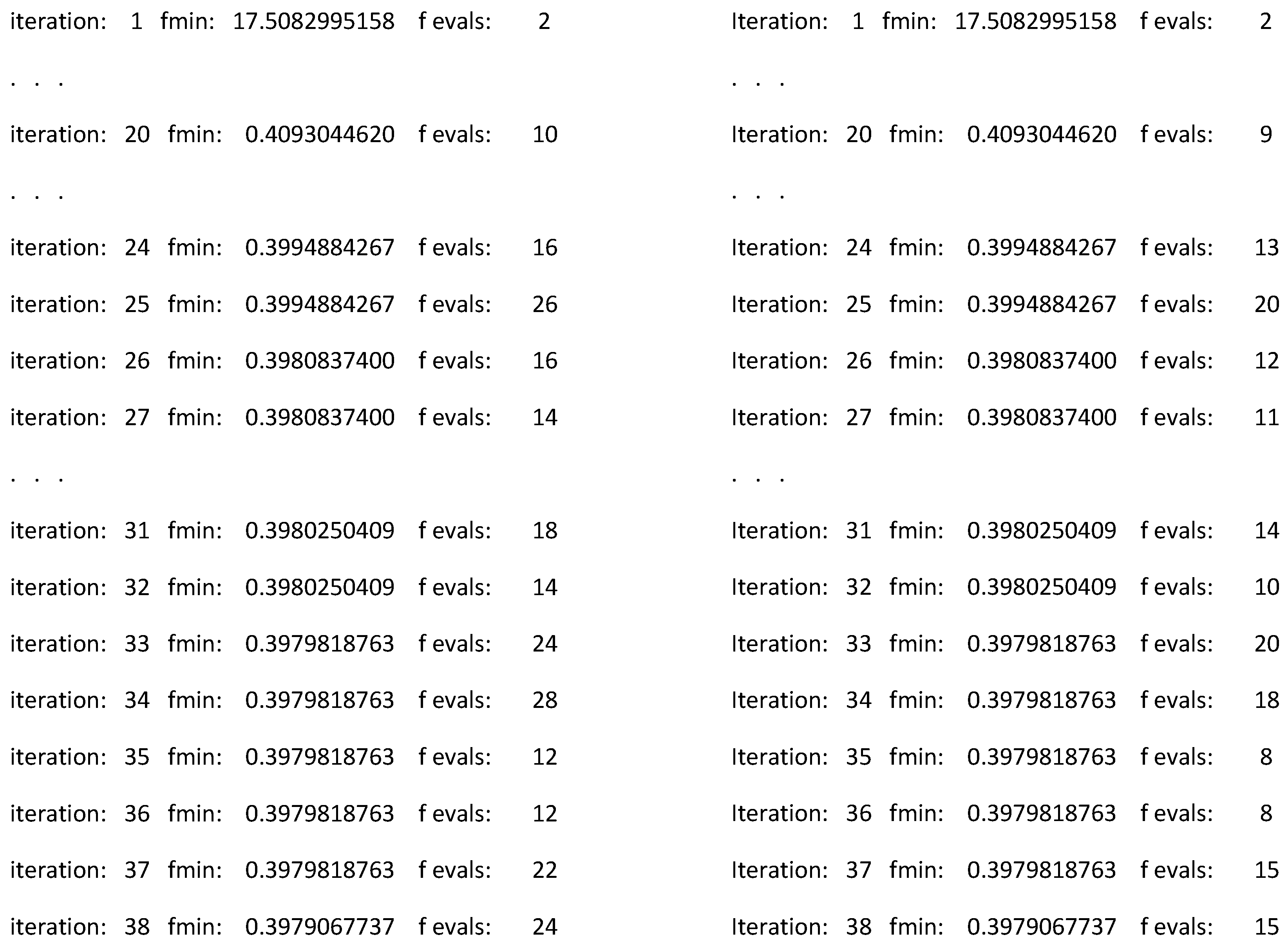

3. Results and Discussion

3.1. Implementation

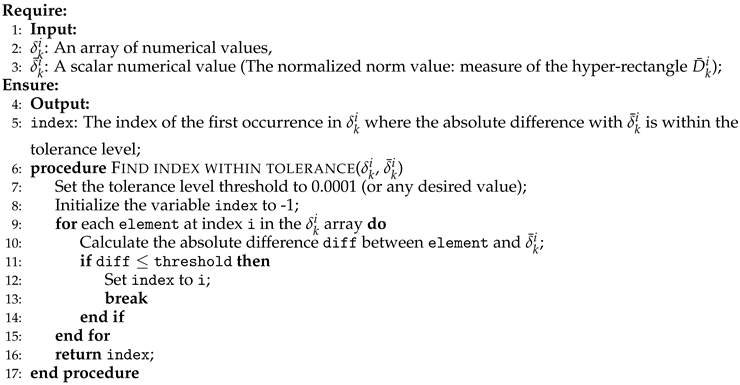

Algorithm 2. Find First Index within Tolerance
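Only the title of Algorithm 2 survives in this version. Assuming its purpose is to locate, in a list of values sorted in ascending order (for instance hyper-rectangle measures), the first entry lying within a given tolerance of a target value, a minimal Python sketch could use binary search from the standard `bisect` module. The function name `find_first_index_within_tolerance` mirrors the algorithm title but is otherwise hypothetical.

```python
from bisect import bisect_left
from typing import Sequence


def find_first_index_within_tolerance(values: Sequence[float], target: float,
                                      tol: float) -> int:
    """Return the first index i with |values[i] - target| <= tol, or -1.

    Assumes `values` is sorted in ascending order.
    """
    # First position whose value is not below the lower edge target - tol.
    i = bisect_left(values, target - tol)
    # Only this position can be the first match; check the upper edge.
    if i < len(values) and values[i] <= target + tol:
        return i
    return -1
```

Because the list is sorted, the first element not smaller than `target - tol` is the only candidate that needs checking, so the search costs O(log n) instead of the O(n) of a plain linear scan; for unsorted input, a linear scan returning the first `i` with `abs(values[i] - target) <= tol` would be needed instead.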

3.2. Discussion

- The improved versions, BIRECTv-l (imp.) and BIRECTv (imp.), appear to be reliable choices for optimization tasks: they consistently outperform the previously published versions, BIRECTv-l and BIRECTv [3], and demonstrate competitive performance in terms of both objective value and computational effort.

- The new algorithms, BIRECT-l (new) and BIRECT (new), show promise and are particularly efficient in terms of the number of function evaluations. However, their objective function values may vary depending on the problem.

- The choice of algorithm should be problem-dependent. Some algorithms may be more suitable for specific problem characteristics, such as unimodal or multimodal objective functions, and global or local optimization.

- Together, these results provide a comprehensive assessment of the algorithms' performance across various aspects, including solution quality and computational efficiency.

4. Conclusions and Future Prospects

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Problem No. | Problem name | Dimension n | Feasible region | No. of local minima | Optimum |
|---|---|---|---|---|---|
| 1-3 | Ackley | 2, 5, 10 | | multimodal | 0.0 |
| 4 | Beale | 2 | | multimodal | 0.0 |
| 5 | Bohachevsky 1 | 2 | | multimodal | 0.0 |
| 6 | Bohachevsky 2 | 2 | | multimodal | 0.0 |
| 7 | Bohachevsky 3 | 2 | | multimodal | 0.0 |
| 8 | Booth | 2 | | unimodal | 0.0 |
| 9 | Branin | 2 | | 3 | |
| 10 | Colville | 4 | | multimodal | 0.0 |
| 11-13 | Dixon & Price | 2, 5, 10 | | unimodal | 0.0 |
| 14 | Easom | 2 | | multimodal | |
| 15 | Goldstein & Price | 2 | | 4 | 3.0 |
| 16 | Griewank | 2 | | multimodal | 0.0 |
| 17 | Hartman | 3 | | 4 | |
| 18 | Hartman | 6 | | 4 | |
| 19 | Hump | 2 | | 6 | |
| 20-22 | Levy | 2, 5, 10 | | multimodal | 0.0 |
| 23 | Matyas | 2 | | unimodal | 0.0 |
| 24 | Michalewicz | 2 | | 2! | |
| 25 | Michalewicz | 5 | | 5! | |
| 26 | Michalewicz | 10 | | 10! | |
| 27 | Perm | 4 | | multimodal | |
| 28-29 | Powell | 4, 8 | | multimodal | |
| 30 | Power Sum | 4 | | multimodal | |
| 31-33 | Rastrigin | 2, 5, 10 | | multimodal | |
| 34-36 | Rosenbrock | 2, 5, 10 | | unimodal | |
| 37-39 | Schwefel | 2, 5, 10 | | unimodal | |
| 40 | Shekel | 4 | | 5 | |
| 41 | Shekel | 4 | | 7 | |
| 42 | Shekel | 4 | | 10 | |
| 43 | Shubert | 2 | | 760 | |
| 44-46 | Sphere | 2, 5, 10 | | multimodal | |
| 47-49 | Sum squares | 2, 5, 10 | | unimodal | |
| 50 | Trid | 6 | | multimodal | |
| 51 | Trid | 10 | | multimodal | |
| 52-54 | Zakharov | 2, 5, 10 | | multimodal | |

| Problem | BIRECT-(new) | BIRECT | DIRECT-l | DIRECT | |||||||

| No. | |||||||||||

| 1 | 202 | 202 | 255 | ||||||||

| 2 | 1268 | 1777 | 8845 | ||||||||

| 3 | 47792 | 80927 | |||||||||

| 4 | 436 | 436 | 655 | ||||||||

| 5 | 468 | 476 | 327 | ||||||||

| 6 | 472 | 478 | 345 | ||||||||

| 7 | 480 | 573 | 693 | ||||||||

| 8 | 194 | 215 | 295 | ||||||||

| 9 | 242 | 242 | 195 | ||||||||

| 10 | 3379 | 6585 | |||||||||

| 11 | 722 | 722 | 513 | ||||||||

| 12 | 54843 | 19661 | |||||||||

| 13 | 164826 | 372619 | |||||||||

| 14 | 16420 | 6851 | 32845 | ||||||||

| 15 | 274 | 274 | 191 | ||||||||

| 16 | 5106 | 8379 | 9215 | ||||||||

| 17 | 352 | 352 | 199 | ||||||||

| 18 | 764 | 764 | 571 | ||||||||

| 19 | 196 | 334 | 321 | ||||||||

| 20 | 152 | 152 | 105 | ||||||||

| 21 | 968 | 1024 | 705 | ||||||||

| 22 | 6402 | 7904 | 5589 | ||||||||

| 23 | 90 | 94 | 107 | ||||||||

| 24 | 126 | 126 | 69 | ||||||||

| 25 | 82562 | 73866 | 26341 | ||||||||

| 26 | |||||||||||

| 27 | |||||||||||

| 28 | 32331 | 14209 | |||||||||

| 29 | 99514 | ||||||||||

| 30 | 10856 | ||||||||||

| 31 | 1727 | 987 | |||||||||

| 32 | 1394 | ||||||||||

| 33 | 40254 | ||||||||||

| 34 | 285 | 1621 | |||||||||

| 35 | 1700 | 2703 | 20025 | ||||||||

| 36 | 10910 | 74071 | 174529 | ||||||||

| 37 | 341 | 255 | |||||||||

| 38 | 7210 | 322039 | 31999 | ||||||||

| 39 | 315960 | ||||||||||

| 40 | 1272 | 1200 | 155 | ||||||||

| 41 | 1204 | 1180 | 145 | ||||||||

| 42 | 1140 | 1140 | 145 | ||||||||

| 43 | 2043 | 2967 | |||||||||

| 44 | 118 | 118 | 209 | ||||||||

| 45 | 602 | 712 | 4653 | ||||||||

| 46 | 8742 | 16974 | 99123 | ||||||||

| 47 | 226 | 244 | 107 | ||||||||

| 48 | 1000 | 1034 | 833 | ||||||||

| 49 | 5538 | 7688 | 8133 | ||||||||

| 50 | 1506 | 8731 | 5693 | ||||||||

| 51 | 32170 | 90375 | |||||||||

| 52 | 338 | 502 | 237 | ||||||||

| 53 | 26088 | 316827 | |||||||||

| 54 | |||||||||||

| Average | |||||||||||

| Median | |||||||||||

References

- Floudas, C.A.: Deterministic Global Optimization: Theory, Methods and Applications. Nonconvex Optimization and Its Applications, vol. 37. Springer, Boston, MA (1999).

- Gablonsky, J.M., Kelley, C.T.: A locally-biased form of the DIRECT algorithm. J. Glob. Optim. 21(1), 27–37 (2001).

- Guessoum, N., Chiter, L.: Diagonal Partitioning Strategy Using Bisection of Rectangles and a Novel Sampling Scheme. MENDEL 29(2), 131–146 (2023).

- Hedar, A.: Test functions for unconstrained global optimization. http://www-optima.amp.i.kyoto-u.ac.jp/member/student/hedar/Hedar_files/TestGO.htm (2005). Accessed 23 August 2006.

- Horst, R., Pardalos, P.M., Thoai, N.V.: Introduction to Global Optimization. Nonconvex Optimization and Its Applications. Kluwer Academic Publishers (1995).

- Horst, R., Tuy, H.: Global Optimization: Deterministic Approaches. Springer, Berlin (1996).

- Jones, D.R., Perttunen, C.D., Stuckman, B.E.: Lipschitzian optimization without the Lipschitz constant. J. Optim. Theory Appl. 79(1), 157–181 (1993).

- Jones, D.R.: The DIRECT global optimization algorithm. In: Floudas, C.A., Pardalos, P.M. (eds.) The Encyclopedia of Optimization, pp. 431–440. Kluwer Academic Publishers, Dordrecht (2001).

- Jones, D.R., Martins, J.R.R.A.: The DIRECT algorithm: 25 years later. J. Glob. Optim. 79, 521–566 (2021).

- Ma, K., Rios, L.M., Bhosekar, A., Sahinidis, N.V., Rajagopalan, S.: Branch-and-Model: a derivative-free global optimization algorithm. Computational Optimization and Applications (2023).

- Kvasov, D.E., Sergeyev, Y.D.: Lipschitz gradients for global optimization in a one-point-based partitioning scheme. Journal of Computational and Applied Mathematics 236(16), 4042–4054 (2012).

- Liberti, L., Kucherenko, S.: Comparison of deterministic and stochastic approaches to global optimization. International Transactions in Operational Research 12(3), 263–285 (2005). https://onlinelibrary.wiley.com/doi/pdf/10.1111/j.1475-3995.2005.00503.x

- Liu, H., Xu, S., Wang, X., Wu, J., Song, Y.: A global optimization algorithm for simulation-based problems via the extended DIRECT scheme. Eng. Optim. 47(11), 1441–1458 (2015).

- Liu, Q., Zeng, J., Yang, G.: MrDIRECT: a multilevel robust DIRECT algorithm for global optimization problems. Journal of Global Optimization 62(2), 205–227 (2015).

- Liuzzi, G., Lucidi, S., Piccialli, V.: Exploiting derivative-free local searches in DIRECT-type algorithms for global optimization. Computational Optimization and Applications, 1–27 (2014).

- Paulavičius, R., Žilinskas, J., Grothey, A.: Parallel branch and bound for global optimization with combination of Lipschitz bounds. Optimization Methods and Software 26(3), 487–498 (2011).

- Paulavičius, R., Žilinskas, J.: Simplicial Global Optimization. SpringerBriefs in Optimization. Springer, New York, NY (2014).

- Paulavičius, R., Sergeyev, Y.D., Kvasov, D.E., Žilinskas, J.: Globally-biased DISIMPL algorithm for expensive global optimization. J. Glob. Optim. 59, 545–567 (2014).

- Paulavičius, R., Žilinskas, J.: Simplicial Lipschitz optimization without the Lipschitz constant. J. Glob. Optim. 59, 23–40 (2014).

- Paulavičius, R., Chiter, L., Žilinskas, J.: Global optimization based on bisection of rectangles, function values at diagonals, and a set of Lipschitz constants. J. Glob. Optim. 71(1), 5–20 (2018).

- Paulavičius, R., Sergeyev, Y.D.: Globally-biased BIRECT algorithm with local accelerators for expensive global optimization. Expert Systems with Applications (November 2019).

- Sergeyev, Y.D.: An efficient strategy for adaptive partition of N-dimensional intervals in the framework of diagonal algorithms. Journal of Optimization Theory and Applications 107(1), 145–168 (2000).

- Sergeyev, Y.D.: Efficient partition of n-dimensional intervals in the framework of one-point-based algorithms. Journal of Optimization Theory and Applications 124(2), 503–510 (2005).

- Sergeyev, Y.D., Kvasov, D.E.: Global search based on diagonal partitions and a set of Lipschitz constants. SIAM Journal on Optimization 16(3), 910–937 (2006).

- Sergeyev, Y.D., Kvasov, D.E.: Diagonal Global Optimization Methods. FizMatLit, Moscow (2008). In Russian.

- Sergeyev, Y.D., Kvasov, D.E.: On deterministic diagonal methods for solving global optimization problems with Lipschitz gradients. In: Optimization, Control, and Applications in the Information Age, vol. 130, pp. 315–334. Springer International Publishing, Switzerland (2015).

- Sergeyev, Y.D., Kvasov, D.E.: Lipschitz global optimization. In: Cochran, J.J., Cox, L.A., Keskinocak, P., Kharoufeh, J.P., Smith, J.C. (eds.) Wiley Encyclopedia of Operations Research and Management Science (in 8 Volumes), vol. 4, pp. 2812–2828. John Wiley and Sons, New York, NY, USA (2011).

- Sergeyev, Y.D., Kvasov, D.E.: Deterministic Global Optimization: An Introduction to the Diagonal Approach. SpringerBriefs in Optimization. Springer, Berlin, Germany (2017).

- Stripinis, L., Paulavičius, R., Žilinskas, J.: Improved scheme for selection of potentially optimal hyperrectangles in DIRECT. Optim. Lett. 12(7), 1699–1712 (2018).

- Stripinis, L., Paulavičius, R.: DIRECTGOLib - DIRECT Global Optimization test problems Library, v1.1. Zenodo (2022).

- Stripinis, L., Kůdela, J., Paulavičius, R.: DIRECTGOLib - DIRECT Global Optimization test problems Library, pre-release v2.0 (2023). https://github.com/blockchain-group/DIRECTGOLib

- Stripinis, L., Paulavičius, R.: Novel Algorithm for Linearly Constrained Derivative Free Global Optimization of Lipschitz Functions. Mathematics 11(13), 2920 (2023).

- Stripinis, L., Paulavičius, R.: GENDIRECT: a GENeralized DIRECT-type algorithmic framework for derivative-free global optimization.

- Stripinis, L., Paulavičius, R.: DIRECTGO: A new DIRECT-type MATLAB toolbox for derivative free global optimization. GitHub (2022). https://github.com/blockchain-group/DIRECTGO

- Stripinis, L., Paulavičius, R.: DIRECTGO: A new DIRECT-type MATLAB toolbox for derivative free global optimization. arXiv (2022). https://arxiv.org/abs/2107.0220

- Stripinis, L., Paulavičius, R.: Lipschitz-inspired HALRECT Algorithm for Derivative-free Global Optimization.

- Stripinis, L., Paulavičius, R.: An extensive numerical benchmark study of deterministic vs. stochastic derivative-free global optimization algorithms.

- Stripinis, L., Paulavičius, R.: An empirical study of various candidate selection and partitioning techniques in the DIRECT framework. J. Glob. Optim., 1–31 (2022).

- Stripinis, L.: Improvement, development and implementation of derivative-free global optimization algorithms. Doctoral dissertation, Vilnius University (2001).

- Tsvetkov, E.A., Krymov, R.A.: Pure Random Search with Virtual Extension of Feasible Region. J. Optim. Theory Appl. 195, 575–595 (2022).

- Tuy, H.: Convex Analysis and Global Optimization. Springer Science & Business Media (2013).

- Zhigljavsky, A., Žilinskas, A.: Stochastic Global Optimization. Springer, New York (2008).

| Problem | BIRECTv-l (imp.) | BIRECTv (imp.) | BIRECTv-l [3] | BIRECTv [3] | BIRECT-l (new) | BIRECT (new) | ||||||||||||

| No. | f.eval. | f.eval. | f.eval. | f.eval. | f.eval. | f.eval. | ||||||||||||

| 1 | 153 | 156 | 192 | 134 | 158 | |||||||||||||

| 2 | 387 | 1135 | 422 | 1578 | 1062 | |||||||||||||

| 3 | 1000 | 47311 | 1000 | 72804 | 41654 | |||||||||||||

| 4 | 474 | 742 | 638 | 1034 | ||||||||||||||

| 5 | 209 | 254 | 284 | 496 | 496 | |||||||||||||

| 6 | 211 | 252 | 284 | 682 | 682 | |||||||||||||

| 7 | 209 | 248 | 282 | 852 | 849 | |||||||||||||

| 8 | 249 | 300 | 334 | 330 | 330 | |||||||||||||

| 9 | 480 | 370 | 652 | 490 | ||||||||||||||

| 10 | 1614 | 1337 | 2318 | 1868 | ||||||||||||||

| 11 | 263 | 431 | 346 | 578 | ||||||||||||||

| 12 | 2087 | 2652 | 2912 | 6103 | 6125 | |||||||||||||

| 13 | 28871 | 19418 | 38460 | 44114 | ||||||||||||||

| 14 | 138 | 716 | 180 | 1082 | 558 | |||||||||||||

| 15 | 28 | 28 | 274 | 274 | ||||||||||||||

| 16 | 3440 | 4700 | 5192 | 5756 | ||||||||||||||

| 17 | 169 | 200 | 208 | 352 | 352 | |||||||||||||

| 18 | 542 | 542 | 764 | 764 | ||||||||||||||

| 19 | 254 | 202 | 334 | 190 | 196 | |||||||||||||

| 20 | 103 | 116 | 136 | 154 | ||||||||||||||

| 21 | 388 | 459 | 454 | 558 | 354 | |||||||||||||

| 22 | 1133 | 6246 | 1182 | 7440 | 2302 | |||||||||||||

| 23 | 119 | 163 | 148 | 208 | ||||||||||||||

| 24 | 142 | 231 | 184 | 314 | 136 | |||||||||||||

| 25 | 5654 | 8484 | 7526 | 49160 | 47196 | |||||||||||||

| 26 | ||||||||||||||||||

| 27 | 65536 | 48724 | ||||||||||||||||

| 28 | 1837 | 2518 | 1624 | 1814 | 2108 | |||||||||||||

| 29 | 2867 | 3058 | 3400 | 20672 | 21260 | |||||||||||||

| 30 | 204 | 40788 | 4932 | 5623 | ||||||||||||||

| 31 | 523 | 809 | 688 | 820 | 178 | |||||||||||||

| 32 | 6511 | 8512 | 10978 | 66462 | 82546 | |||||||||||||

| 33 | 1439 | 1454 | 1240 | 15544 | ||||||||||||||

| 34 | 540 | 544 | 700 | 716 | ||||||||||||||

| 35 | 1950 | 2231 | 2528 | 3058 | 1692 | |||||||||||||

| 36 | 17176 | 27256 | 18922 | 31756 | 10766 | |||||||||||||

| 37 | 384 | 413 | 486 | 564 | 268 | |||||||||||||

| 38 | 17061 | 10362 | 25904 | 16754 | 3780 | |||||||||||||

| 39 | 55701 | 84784 | 2248 | 265002 | ||||||||||||||

| 40 | 4002 | 3665 | 6146 | 5604 | 1254 | |||||||||||||

| 41 | 1536 | 1655 | 2256 | 2456 | 1186 | |||||||||||||

| 42 | 1740 | 2238 | 2476 | 3332 | 1138 | |||||||||||||

| 43 | 432 | 570 | 226 | 766 | 642 | |||||||||||||

| 44 | 143 | 112 | 190 | 106 | 118 | |||||||||||||

| 45 | 364 | 987 | 392 | 1400 | 602 | |||||||||||||

| 46 | 1043 | 19418 | 1054 | 27566 | 8742 | |||||||||||||

| 47 | 348 | 328 | 494 | 460 | 226 | |||||||||||||

| 48 | 1141 | 1102 | 1484 | 1006 | 1134 | |||||||||||||

| 49 | 5331 | 2452 | 6066 | |||||||||||||||

| 50 | 1414 | 1312 | 1662 | 1322 | 1462 | |||||||||||||

| 51 | 2965 | 10470 | 3114 | 11880 | 3122 | |||||||||||||

| 52 | 122 | 125 | 156 | 162 | 118 | |||||||||||||

| 53 | 2805 | 2948 | 3710 | 3958 | 1858 | |||||||||||||

| 54 | ||||||||||||||||||

| Average | ||||||||||||||||||

| Median | ||||||||||||||||||

| Problem | BIRECT-(new) | BIRECT [20,21] | BIRECT-l-(new) | BIRECT-l [21] | |||||||

| No. | |||||||||||

| 1 | 202 | 202 | 176 | 176 | |||||||

| 2 | 1268 | 454 | 454 | ||||||||

| 3 | 47792 | 874 | 874 | ||||||||

| 4 | 436 | 436 | 436 | 436 | |||||||

| 5 | 476 | 468 | 468 | ||||||||

| 6 | 478 | 472 | 472 | ||||||||

| 7 | 480 | 474 | 474 | ||||||||

| 8 | 194 | 188 | 188 | ||||||||

| 9 | 242 | 242 | 242 | 242 | |||||||

| 10 | 794 | 794 | 794 | 794 | |||||||

| 11 | 722 | 722 | 722 | 722 | |||||||

| 12 | 4060 | 4060 | 4060 | 4060 | |||||||

| 13 | 164826 | 1628682 | |||||||||

| 14 | 16420 | 480 | |||||||||

| 15 | 274 | 274 | 274 | 274 | |||||||

| 16 | 5106 | 5106 | |||||||||

| 17 | 352 | 352 | 352 | 352 | |||||||

| 18 | 764 | 764 | 764 | 764 | |||||||

| 19 | 334 | 190 | 190 | ||||||||

| 20 | 152 | 152 | 152 | 152 | |||||||

| 21 | 1024 | 660 | |||||||||

| 22 | 7904 | 1698 | 1698 | ||||||||

| 23 | 94 | 90 | 90 | ||||||||

| 24 | 126 | 126 | 126 | 126 | |||||||

| 25 | 82562 | 101942 | |||||||||

| 26 | |||||||||||

| 27 | |||||||||||

| 28 | 2114 | 2114 | 1832 | ||||||||

| 29 | 99514 | 92884 | |||||||||

| 30 | 10856 | 4994 | |||||||||

| 31 | 180 | 180 | 156 | ||||||||

| 32 | 1394 | 474 | |||||||||

| 33 | 40254 | 1250 | 1250 | ||||||||

| 34 | 242 | 242 | 242 | 242 | |||||||

| 35 | 1700 | 1496 | |||||||||

| 36 | 10910 | 4620 | |||||||||

| 37 | 236 | 236 | 214 | ||||||||

| 38 | 7210 | 1422 | |||||||||

| 39 | 315960 | 58058 | |||||||||

| 40 | 1272 | 1286 | |||||||||

| 41 | 1204 | 1224 | 1224 | ||||||||

| 42 | 1140 | 1140 | 1162 | ||||||||

| 43 | 1780 | 1780 | 2114 | 2114 | |||||||

| 44 | 118 | 118 | 108 | ||||||||

| 45 | 712 | 294 | |||||||||

| 46 | 16974 | 784 | 784 | ||||||||

| 47 | 244 | 226 | 226 | ||||||||

| 48 | 1034 | 836 | 836 | ||||||||

| 49 | 7688 | 3366 | 3366 | ||||||||

| 50 | 1506 | 1138 | |||||||||

| 51 | 32170 | 24716 | |||||||||

| 52 | 502 | 338 | 338 | ||||||||

| 53 | 26088 | 27364 | |||||||||

| 54 | |||||||||||

| Average | |||||||||||

| Median | |||||||||||

| Problem | BIRECT-l | |||||||

| No./ | ||||||||

| 1 | 168 | 182 | 178 | 174 | 176 | |||

| 2 | 530 | 484 | 454 | 454 | ||||

| 3 | 842 | 852 | 874 | 872 | 874 | |||

| 4 | 424 | 434 | 436 | 436 | 436 | |||

| 5 | 424 | 456 | 468 | 468 | 468 | |||

| 6 | 432 | 462 | 472 | 472 | 472 | |||

| 7 | 942 | |||||||

| 8 | 188 | 188 | 188 | 188 | 188 | |||

| 9 | 256 | |||||||

| 10 | 790 | 794 | 790 | 794 | 794 | |||

| 11 | 732 | 722 | 722 | 722 | ||||

| 12 | 5352 | 4060 | 4060 | 4060 | ||||

| 13 | 186694 | 152402 | 156448 | 158880 | ||||

| 14 | ||||||||

| 15 | 272 | 274 | 274 | 274 | 274 | |||

| 16 | 3236 | 3452 | 4148 | 4982 | ||||

| 17 | 354 | 352 | 352 | 352 | 352 | |||

| 18 | 764 | 764 | 764 | 764 | 764 | |||

| 19 | 190 | 190 | 190 | 190 | 190 | |||

| 20 | 152 | 152 | 152 | 152 | 152 | |||

| 21 | 644 | 656 | 656 | 656 | 656 | |||

| 22 | 1698 | 1698 | 1698 | 1698 | 1698 | |||

| 23 | 90 | 90 | 90 | 90 | 90 | |||

| 24 | 126 | 126 | 126 | 126 | 126 | |||

| 25 | 49488 | 90504 | 101900 | 101900 | 101900 | |||

| 26 | ||||||||

| 27 | ||||||||

| 28 | 2440 | 2102 | 1820 | 1820 | 1820 | |||

| 29 | 87502 | 90162 | 92028 | 91954 | ||||

| 30 | 5024 | 5014 | 5002 | 4994 | ||||

| 31 | 152 | 154 | 156 | 156 | 156 | |||

| 32 | 436 | 474 | 474 | 474 | 474 | |||

| 33 | 1166 | 1240 | 1250 | 1250 | 1250 | |||

| 34 | 242 | 242 | 242 | 242 | 242 | |||

| 35 | 1470 | 1498 | 1496 | 1496 | 1496 | |||

| 36 | 4510 | 4612 | 4620 | 4620 | 4620 | |||

| 37 | 204 | 214 | 214 | 214 | 214 | |||

| 38 | 1148 | 1280 | 1400 | 1434 | 1434 | |||

| 39 | 49516 | 57452 | 57994 | 58000 | 58000 | |||

| 40 | 810 | 1254 | 1248 | 1248 | 1248 | |||

| 41 | 818 | 1186 | 1224 | 1224 | 1224 | |||

| 42 | 766 | 1138 | 1162 | 1162 | 1162 | |||

| 43 | 1880 | 2044 | 2086 | 2114 | 2114 | |||

| 44 | 106 | 106 | 106 | 106 | 106 | |||

| 45 | 294 | 294 | 294 | 294 | 294 | |||

| 46 | 786 | |||||||

| 47 | 226 | 226 | 226 | 226 | 226 | |||

| 48 | 826 | 836 | 836 | 836 | 836 | |||

| 49 | 3162 | 3332 | 3366 | 3366 | 3366 | |||

| 50 | 1152 | 992 | 992 | 992 | 992 | |||

| 51 | 28268 | 24704 | 24704 | 24704 | ||||

| 52 | 320 | 338 | 338 | 338 | 338 | |||

| 53 | 27720 | 27286 | 27230 | 27230 | ||||

| 54 | ||||||||

| Average | ||||||||

| Median |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).