1. Introduction

Mapping the particle size of riverbed materials is an important part of characterizing river habitat. Riverbed materials fulfill multiple environmental functions, serving as feeding grounds, spawning grounds, and shelters for aquatic invertebrates and fish [1,2,3]. Their particle size reflects the tractive force during floods [4,5]. In addition, in flood simulations the roughness of the riverbed is determined from the particle size of the bed material, so the estimated particle size affects the reproducibility of the simulation [6]. Furthermore, when vegetation adapted to the grain size characteristics becomes established, it regulates flow regimes and induces new sediment transport characteristics and river morphology [7,8]. The distribution of riverbed material particle size is therefore essential information for both flood control and the environmental management of river channels.

Conventional field methods for riverbed material investigation, such as the grid-by-number and volumetric methods [9,10], require a great deal of labor and time for sieving or direct measurement of particle size. Conducting such surveys over a wide area at high density would therefore be extremely costly and is not practical. In addition, spatial representativeness is an issue, because each sample is taken within a quadrat of less than 1 m² while a site extends over several square kilometers.

In recent years, unmanned aerial vehicles (UAVs), which are used for a variety of purposes, have made it possible to photograph wide areas comprehensively. If particle size can be measured by automatically extracting individual particles from a large number of captured images, the particle size distribution over vast river areas can be mapped. Evaluation of particle size distribution by image analysis is not limited to aerial images taken from UAVs, and there are several applications in fields other than riverbed material surveys. Yokota et al. [11] used stereo image analysis to evaluate the size of rock fragments (muck) produced by blasting during tunnel excavation; they developed a technique for calculating cumulative particle size distribution curves with high accuracy and demonstrated its effectiveness. Igathinathane et al. [12] scanned airborne wood dust particles with a document scanner and performed dimensional measurements and size distribution analysis using ImageJ (machine vision plug-in). The results showed that dimensions larger than 4 μm can be measured with an accuracy of 98.9% in about 8 seconds per image, making the method an effective equivalent to sieving.

In river management, image recognition has been applied to identify information on river channels and other features. For riverbed materials, Detert et al. [13,14] developed the software BASEGRAIN, which automatically identifies each particle in an image of a riverbed, measures its major and minor axes, and calculates the particle size distribution. Harada et al. [15] verified the accuracy of BASEGRAIN against results obtained with the volumetric method, showing that the two particle size distributions agree well, and mapped the particle size of riverbed materials over a wide area. However, although BASEGRAIN is effective for obtaining the particle size distribution of the materials in a single image, riverbed images are taken outdoors, unlike the image analyses introduced above in which the shooting conditions are controlled to some extent, so manual adjustments are required depending on the brightness and shadows of each image. For example, when shadows on the surface of a single stone cause it to be counted as multiple particles, the resulting particle size distribution may differ significantly unless the analysis conditions are adjusted and the particle population is corrected [16]. Such tuning increases the effort and time required. On the other hand, in actual river management, or when providing the distribution of riverbed roughness for a two-dimensional shallow water flow simulation, it is often sufficient to map a representative grain size such as D50. Therefore, if the purpose is limited to mapping, there is no need to extract individual particles from the images; it is sufficient to divide each image into a large number of grids and determine the representative particle size for each grid.

A similar analysis method is land cover classification using remote sensing data and machine learning, in which pixel values of satellite and aerial photographs are compared with ground truth data [17,18,19,20]. This method is effective when a wide area can be photographed under the same conditions, but it cannot classify properly if pixel values change because of differences in photographing time or weather. Image recognition using deep learning, on the other hand, can capture higher-level image features rather than simple pixel values, so it can be expected to classify robustly despite differences in brightness and other conditions [21,22].

Since deep learning was first applied to semantic segmentation [23], which classifies every region of an entire image, it has been used in various fields, including manufacturing and resource management. The accuracy of semantic segmentation has been evaluated on benchmark datasets, including datasets of cityscapes and roadscapes, and in recent years new state-of-the-art models for these datasets have been announced every year. Research on applying deep learning to satellite images and aerial photographs is progressing [24], and the detection of features such as buildings [25,26,27], roads [28,29,30], and land cover [31,32,33] has been reported. Most applications of semantic segmentation to rivers concern river channel detection and river width measurement using satellite images [34,35,36]. Examples related to river management include the detection of estuary sandbars [37], water level monitoring during floods [38,39,40,41], binary water segmentation to aid autonomous navigation in fluvial scenes [42], and fine-grained segmentation of river ice [43]. There is less research on semantic segmentation of river scenes than of terrestrial areas, because the benchmark datasets for rivers are smaller than those for terrestrial data. All of the cases mentioned above are based on the analysis of two-dimensional images, such as satellite images, UAV aerial images, and surveillance videos.

In recent years, not only two-dimensional image information but also three-dimensional point cloud data obtained from structure-from-motion (SfM) analysis [44,45] and terrestrial laser scanning (TLS) [46] have been analyzed to investigate riverbed morphology, roughness, and surface sedimentation.

However, the density of point clouds obtained by SfM and TLS is not homogeneous; it depends on the characteristics of the ground surface pattern and the distance from the instrument. If the main purpose is mapping that requires high precision, such as of roughness or surface sedimentology, careful attention must be paid to data acquisition and selection. Furthermore, SfM can be used to analyze underwater riverbed materials in rivers with high transparency and shallow water depth, but TLS cannot measure them because the laser is absorbed at the water surface.

Onaka et al. used a convolutional neural network (CNN) to determine the presence or absence of clam habitat [47] and to classify sediment materials in tidal flat areas [48]. These targets are stagnant water areas with no current and no diffuse reflection from waves on the water surface. In river channels, by contrast, as Hedger et al. [49] pointed out, automated extraction of information on river habitats from remote sensing imagery is difficult because of the large number of confounding factors. There have been attempts to apply artificial intelligence techniques to map surface cover types [50], hydromorphological features [51], mesohabitats [52], salmon redds [53], and so on, but water surface waves can cause misclassification [54]. The authors previously attempted to automatically classify the particle size of riverbed material in terrestrial areas under normal flow conditions, using UAV aerial images and image recognition based on artificial intelligence (AI) [55]. The popular pre-trained CNN GoogLeNet was retrained for this new task by transfer learning using 70 riverbed images, and the overall accuracy of the image classification reached 95.4%.

In this study, we applied the same method to shallow-water images taken from UAVs over river channels when the water was highly transparent and attempted to classify them. The representative particle size, which serves as the reference for the training and test data, was determined from bed material samples taken underwater from quadrats surrounded by fences to prevent the material from being washed away during collection. Furthermore, the network trained by transfer learning was used to map particle sizes over the river channel. Trends in particle size were compared with detailed longitudinal topographic changes of the river channel measured by LiDAR-UAV, and the relationship between particle size and flow regime was discussed.

2. Materials and Methods

2.1. Study Area

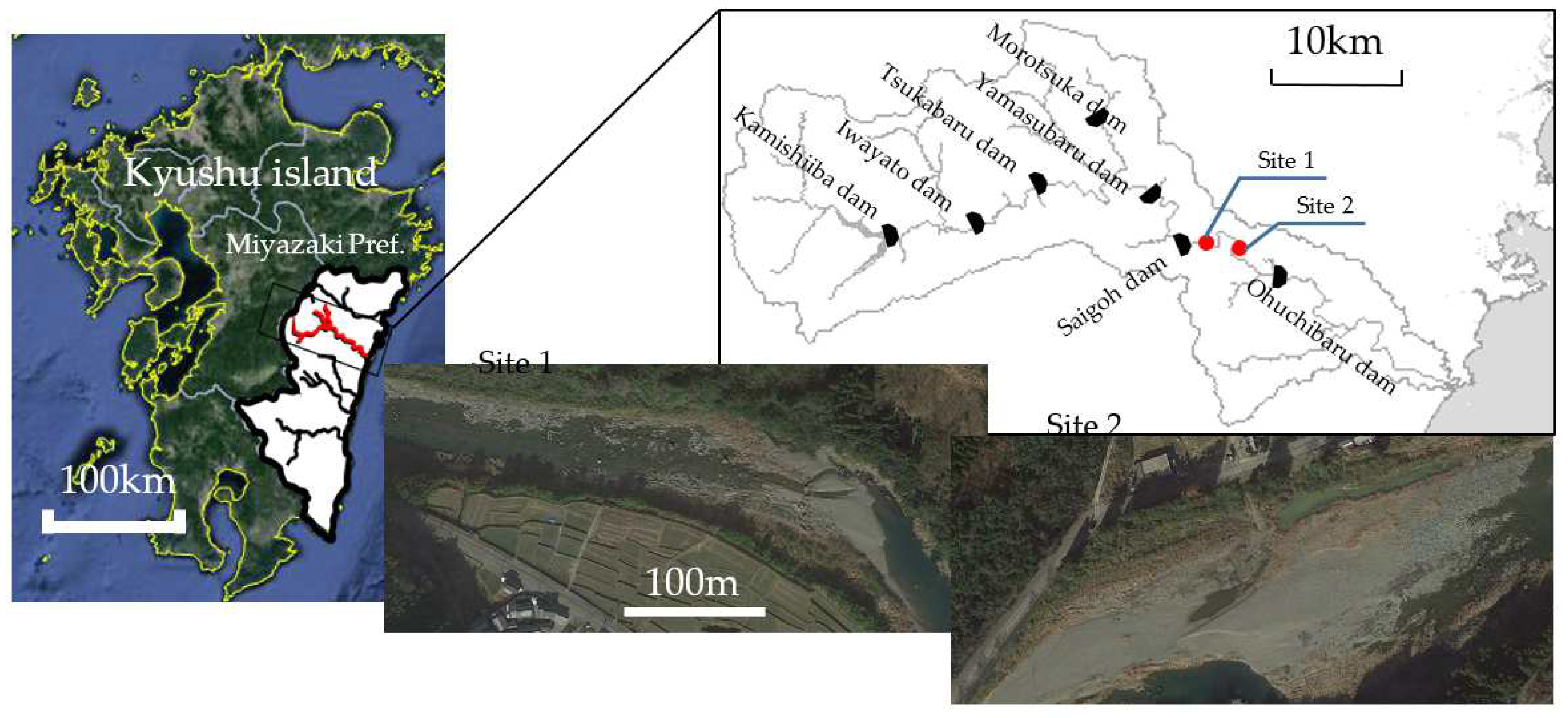

The study area, the same as in our previous study [55], is the Mimikawa River in Miyazaki Prefecture, Japan, which has a length of 94.8 km and a watershed area of 884.1 km². It has 7 dams, built between the 1920s and the 1960s, whose sole purpose is power generation, not flood control (Figure 1). Sediment supplied from upstream areas contributes greatly to the conservation of the river channel and coastal ecosystems [58,59], but sedimentation in the reservoirs interrupts the natural transport of solid material along the channel [56,57]. In addition, the southern part of Kyushu, Japan, where the Mimikawa River basin is located, was severely flooded by a typhoon in 2005, and these old dams, which lacked sufficient discharge capacity, became obstacles during the floods.

As a solution to these two disparate issues, the renovation of the dams, including lowering of the dam bodies to enhance sediment sluicing, was planned [60,61]. Three successive dams on the lower Mimikawa River were remodeled and given the capability to pass sediment during floods. Retrofitting of Saigo Dam, the second dam from the downstream end of the Mimikawa River, was completed in 2017, after which the revised operation began. Remodeling an operating dam requires advanced, world-leading techniques, and sediment in Japanese rivers is actively monitored [62]. In the revised operation, the water level during floods is lowered to enhance the scouring and transport of sediment settled on the upstream side of the dam body. As a result of these operations, sandbars with diverse grain sizes have formed in recent years, especially in the reach downstream of Saigo Dam.

In our previous study [55], the CNN-based image classification of sediment fraction categories was applied only to the terrestrial area above the water surface on the day of photographing. It did not include the area under the water surface, where the color is affected by light absorption in the water and by wetting of the material surface, and where visibility may be affected by waves or reflections on the water surface. This study extends the target of image recognition to the shallow underwater area. Fortunately, the water was very transparent during our survey, and when there were no waves, the bed was visible at depths of about 1 m.

2.2. Aerial Photography and sieving for validation

The UAV used for aerial photography was a Phantom 4 Pro manufactured by DJI. Aerial photographs were taken at 108 locations (76 in terrestrial areas and 32 in shallow water) in parallel with the sieving and classification work described below. Pictures were taken from a flight altitude of 10 m, as in our previous study, which showed that the resolution of pictures taken at that altitude is sufficient to distinguish the smallest particle size class to be classified from the next class (Table 1).

At the same 108 points, the particle size distribution of the bed material was measured by sieving and weighing. The sieve mesh sizes were 75, 53, 37.5, 26.5, 19, 9.5, 4.75, and 2 mm (JIS Z 8801-1976), with intervals chosen for the logarithmic axis of the cumulative particle size curve. Riverbed material was sampled from a 0.5 m × 0.5 m quadrat to a depth of about 10 cm. Sieving and weighing were carried out in the field. In the shallow water area, the riverbed material was sampled after setting up fences around the target area to prevent washout by the river current. Because the collected materials were wet, their wet weights were measured on site after sieving, and some of them were sealed and brought back to the laboratory, where they were dried to determine the moisture content. The dry weight was then obtained by subtracting the mass corresponding to the moisture content from the wet weight measured on site.
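The representative particle size (D50) used as the reference for each quadrat is read from the cumulative curve produced by this sieving. The sketch below shows one way to compute it from sieve masses. It assumes that the moisture content w is expressed on a dry-mass basis, so that dry = wet / (1 + w), and that D50 is interpolated linearly in log(size) between the two bracketing sieves; both are common conventions that the text does not specify. The function names and the masses in the usage lines are hypothetical.

```python
import math

# Sieve openings (mm) listed in the text, from coarsest to finest.
SIEVES_MM = [75.0, 53.0, 37.5, 26.5, 19.0, 9.5, 4.75, 2.0]


def dry_masses(wet_retained, moisture_content):
    """Convert wet masses to dry masses, assuming w = water mass / dry mass."""
    return [m / (1.0 + moisture_content) for m in wet_retained]


def percent_passing(retained, pan):
    """Cumulative percent (by mass) finer than each sieve opening."""
    total = sum(retained) + pan
    passing = []
    cum_retained = 0.0
    for m in retained:
        cum_retained += m
        passing.append(100.0 * (total - cum_retained) / total)
    return passing


def d_percentile(sizes, passing, p=50.0):
    """Interpolate D_p linearly in log10(size) between the bracketing sieves."""
    for i in range(len(sizes) - 1):
        d_hi, p_hi = sizes[i], passing[i]
        d_lo, p_lo = sizes[i + 1], passing[i + 1]
        if p_lo <= p <= p_hi:
            if p_hi == p_lo:
                return d_lo
            t = (p - p_lo) / (p_hi - p_lo)
            log_d = math.log10(d_lo) + t * (math.log10(d_hi) - math.log10(d_lo))
            return 10.0 ** log_d
    raise ValueError("D_p lies outside the range covered by the sieves")


# Hypothetical dry masses (kg) retained on each sieve, plus the pan.
retained = [0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.5, 0.3]
passing = percent_passing(retained, pan=0.2)
d50 = d_percentile(SIEVES_MM, passing, 50.0)
print(f"D50 = {d50:.1f} mm")
```

Log interpolation is used here because the sieve series is roughly geometric; linear interpolation in size would bias D50 toward the coarser sieve.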

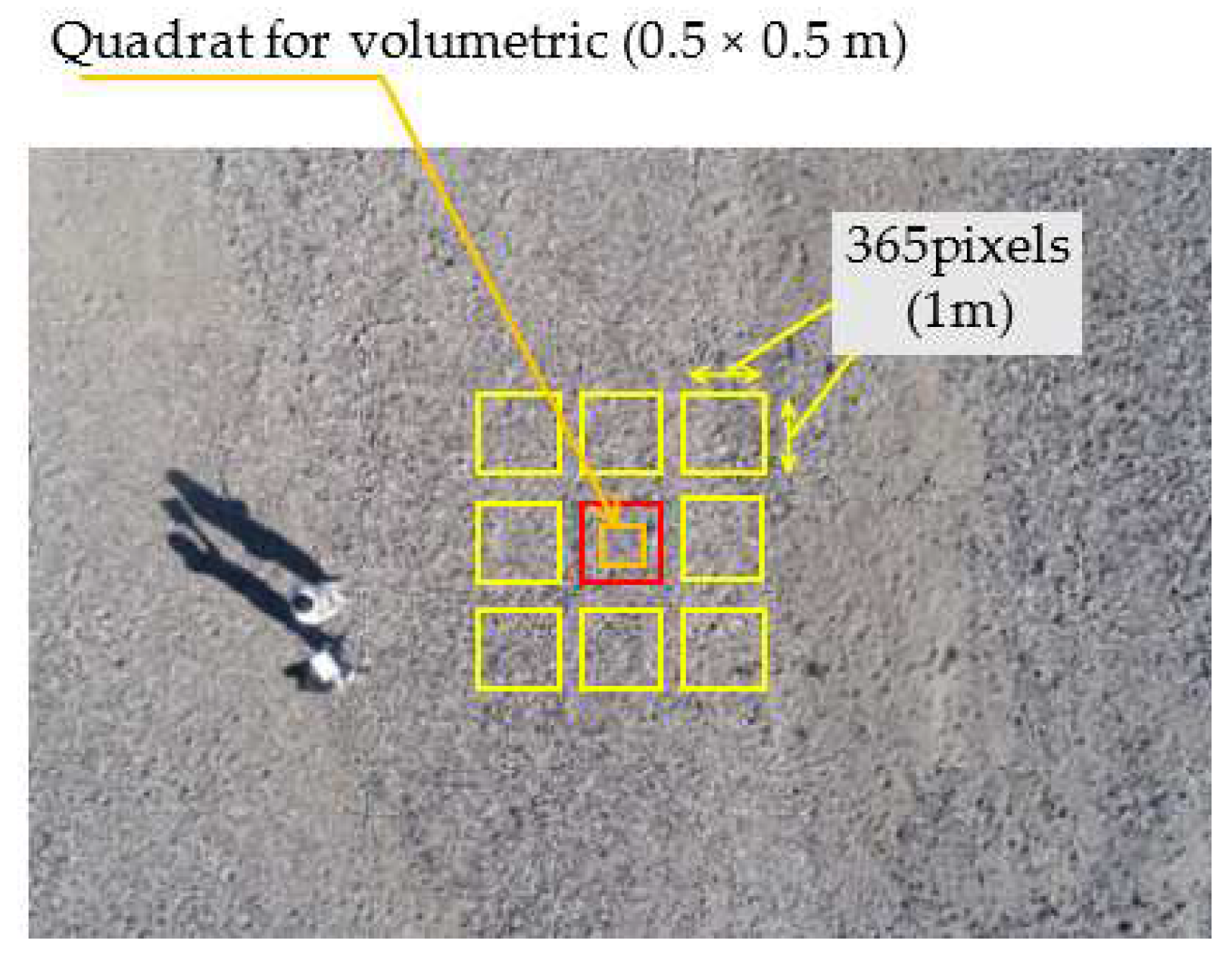

Although the above sampling by the volumetric method was performed in a 0.5 m × 0.5 m square, in this study the image area used for classification was set to a 1.0 m × 1.0 m square centered on the 0.5 m × 0.5 m square, because stones about 20 cm in size were visible at some points and an image covering only 50 cm × 50 cm might not be large enough. The camera specifications were as follows: image size, 5472 × 3648 pixels; lens focal length, 8.8 mm; and sensor size, 13.2 mm × 8.8 mm. When photographing at an altitude of 10 m with this camera, the image resolution was about 2.74 mm/pixel. Therefore, 365 × 365 pixels (equivalent to a 1.0 m × 1.0 m square) were cropped from the original image.
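The 2.74 mm/pixel resolution and the 365-pixel crop follow from the camera specifications above. A minimal sketch, assuming a nadir-pointing pinhole camera over flat ground (function names are illustrative):

```python
def ground_sampling_distance_mm(altitude_m, sensor_width_mm, focal_length_mm, image_width_px):
    """Ground size of one pixel (mm) for a nadir image under a pinhole camera model."""
    return altitude_m * 1000.0 * sensor_width_mm / (focal_length_mm * image_width_px)


def crop_size_px(side_m, gsd_mm):
    """Number of pixels spanning a square of the given side length on the ground."""
    return round(side_m * 1000.0 / gsd_mm)


gsd = ground_sampling_distance_mm(10.0, 13.2, 8.8, 5472)
print(f"GSD = {gsd:.2f} mm/pixel")                  # → GSD = 2.74 mm/pixel
print("1 m crop =", crop_size_px(1.0, gsd), "px")   # → 1 m crop = 365 px
```

Because the sensor aspect ratio (13.2 : 8.8) matches the pixel aspect ratio (5472 : 3648), the same GSD applies along both image axes.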

Only 108 particle size distributions were obtained by the volumetric method. This number was insufficient to provide both the training data for calibrating the CNN parameters and the test data for evaluating the accuracy of the CNN tuned in this study. We therefore increased the number of images available for training and testing by analyzing the surrounding areas with BASEGRAIN.

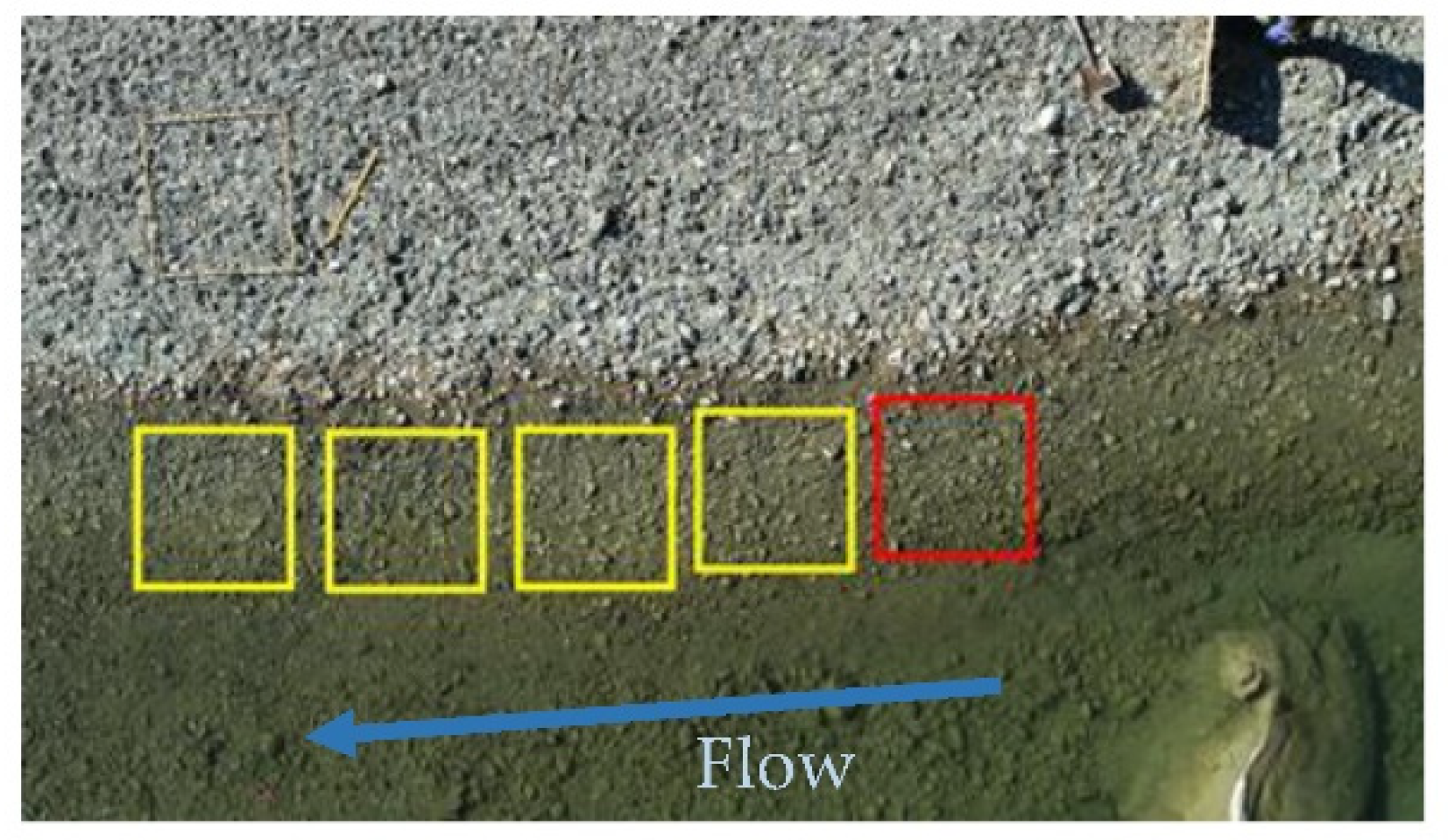

For materials in the terrestrial area, images of the adjacent grids in 8 directions around the sieving quadrat were clipped, as in the previous study. If BASEGRAIN determined that the particle size distributions of the sieving quadrat image and the surrounding 8 images were approximately the same, the surrounding 8 images were assigned the same particle size class as the sieving quadrat. For materials under shallow water, on the other hand, the images around the quadrat area were clipped as shown in Figure 2, because the particle size class persisted in the flow direction but changed drastically in the width or depth direction.
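For the terrestrial case, the clipping of the 8 neighbouring grids can be sketched as a 3 × 3 block of crop boxes around the quadrat. This is a hypothetical illustration: it represents "approximately the same distribution" by equality of the BASEGRAIN-derived class and checks each neighbour individually, which is one reading of the rule; the shallow-water clipping along the flow direction is not covered.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels
Offset = Tuple[int, int]         # (column offset, row offset) in crop units


def neighbour_boxes(cx: int, cy: int, size: int) -> Dict[Offset, Box]:
    """Square crop boxes for the quadrat, offset (0, 0), and its 8 neighbours."""
    half = size // 2
    boxes: Dict[Offset, Box] = {}
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            left = cx - half + dx * size
            top = cy - half + dy * size
            boxes[(dx, dy)] = (left, top, left + size, top + size)
    return boxes


def matching_neighbours(classes: Dict[Offset, int]) -> List[Offset]:
    """Offsets of neighbours whose BASEGRAIN-derived class equals the quadrat's class."""
    centre = classes[(0, 0)]
    return [off for off, c in classes.items() if off != (0, 0) and c == centre]


# Hypothetical quadrat centre at pixel (2736, 1824), using the 365-pixel crop size.
boxes = neighbour_boxes(2736, 1824, 365)
```

Neighbours returned by `matching_neighbours` would inherit the sieved quadrat's class as additional training or test images.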

When determining the representative particle size from the BASEGRAIN results, we took into account, as in previous studies, that BASEGRAIN has difficulty distinguishing fine particles: when the number of particles recognized by BASEGRAIN was very small, the image was assigned to the finest particle class. Furthermore, based on the correlation between the results of the volumetric method and BASEGRAIN, the BASEGRAIN threshold between Class 1 (medium) and Class 2 (small) was set to 28.2 mm, corresponding to the threshold of 24.5 mm for the volumetric method [55].
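The two labelling rules above can be written as a small decision function. In this sketch only the 28.2 mm threshold comes from the text; the minimum particle count is a hypothetical placeholder (no value is given), and treating a D50 exactly at the threshold as Class 1 is likewise an assumption.

```python
from typing import Optional

# Threshold on the BASEGRAIN D50 reported in the text (mm).
BASEGRAIN_THRESHOLD_MM = 28.2
# Hypothetical minimum particle count; the text only says "very small".
MIN_PARTICLES = 20


def size_class(n_particles: int, d50_mm: Optional[float]) -> int:
    """Assign a particle size class (1 = medium, 2 = small, 3 = finest) to one image."""
    if n_particles < MIN_PARTICLES or d50_mm is None:
        # Too few grains detected: BASEGRAIN cannot resolve fine material.
        return 3
    return 1 if d50_mm >= BASEGRAIN_THRESHOLD_MM else 2
```

The check on the particle count comes first because a D50 computed from only a handful of detected grains is not meaningful.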

Figure 3. Image preprocessing and the procedure for increasing the number of images for the terrestrial area (red: volumetric method and BASEGRAIN; yellow: BASEGRAIN only).


Figure 4. Image preprocessing and the procedure for increasing the number of images in shallow water (red: volumetric method and BASEGRAIN; yellow: BASEGRAIN only).


2.3. Training and Test of CNN

The image recognition code was built with MATLAB®, and the CNNs were incorporated into the code as modules. A CNN consists of input and output layers as well as several hidden layers. The hidden part of a CNN combines convolutional layers and pooling layers, which extract visual features, with a fully connected classifier, a perceptron that processes the features obtained in the previous layers [63].
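To make the layer roles concrete, the following NumPy sketch runs one forward pass through a toy network with the same ingredients: convolution, ReLU, max pooling, and a fully connected softmax classifier. It is an illustration only, with random weights; it is not GoogLeNet, which stacks many inception modules, and it is not the authors' MATLAB code.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Single-channel 'valid' 2-D convolution (cross-correlation, as in CNN libraries)."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.empty((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(x, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h, w = x.shape[0] // size * size, x.shape[1] // size * size
    x = x[:h, :w]
    return x.reshape(h // size, size, w // size, size).max(axis=(1, 3))


def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()


def tiny_cnn_forward(image, kernels, fc_weights, fc_bias):
    """conv -> ReLU -> max-pool per kernel, then a fully connected softmax classifier."""
    maps = [max_pool(np.maximum(conv2d_valid(image, k), 0.0)) for k in kernels]
    features = np.concatenate([m.ravel() for m in maps])
    return softmax(fc_weights @ features + fc_bias)


rng = np.random.default_rng(0)
image = rng.random((8, 8))                        # stand-in for a tiny grayscale patch
kernels = [rng.standard_normal((3, 3)) for _ in range(2)]
n_features = 2 * 3 * 3                            # 2 maps: 6x6 after conv, 3x3 after pooling
probs = tiny_cnn_forward(image, kernels, rng.standard_normal((3, n_features)), np.zeros(3))
```

The output `probs` is a probability for each of three hypothetical classes; in a trained network the kernels and fully connected weights are learned rather than random.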

In our previous study [55], GoogLeNet (2014) [64] showed the best performance among the major networks pre-trained on ImageNet [65] in differentiating river sediment fractions in images of terrestrial areas, so the results below were obtained using GoogLeNet as the CNN. Pre-trained networks can be retrained to perform a new task using transfer learning [66]. Fine-tuning a network with transfer learning is usually much faster and easier than training a network from scratch with randomly initialized weights, because the features learned before transfer learning can be quickly transferred to the new task using a smaller number of training images.
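The core idea of transfer learning can be sketched by keeping a pre-trained feature extractor frozen and training only a new fully connected + softmax layer sized for the new classes. This is a simplified stand-in: full fine-tuning, as in the MATLAB workflow used here, may also update earlier layers, and the two-dimensional synthetic "features" below are hypothetical substitutes for the outputs of a frozen backbone such as GoogLeNet.

```python
import numpy as np


def train_softmax_head(features, labels, n_classes, lr=0.1, epochs=500, seed=0):
    """Fit a new fully connected + softmax layer on frozen features by gradient descent.

    features: (n_samples, n_features) outputs of a frozen, pre-trained backbone.
    labels:   (n_samples,) integer class indices in [0, n_classes).
    """
    rng = np.random.default_rng(seed)
    n, d = features.shape
    W = 0.01 * rng.standard_normal((d, n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[labels]
    for _ in range(epochs):
        logits = features @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n                   # gradient of mean cross-entropy w.r.t. logits
        W -= lr * features.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b


def predict(features, W, b):
    return np.argmax(features @ W + b, axis=1)


# Synthetic, well-separated "backbone features" for three hypothetical classes.
rng = np.random.default_rng(1)
centres = np.array([[3.0, 0.0], [0.0, 3.0], [-3.0, -3.0]])
X = np.vstack([c + 0.5 * rng.standard_normal((30, 2)) for c in centres])
y = np.repeat(np.arange(3), 30)
W, b = train_softmax_head(X, y, n_classes=3)
accuracy = np.mean(predict(X, W, b) == y)
```

Only `W` and `b` are learned, which is why a small labelled set can suffice when the frozen features already separate the classes.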

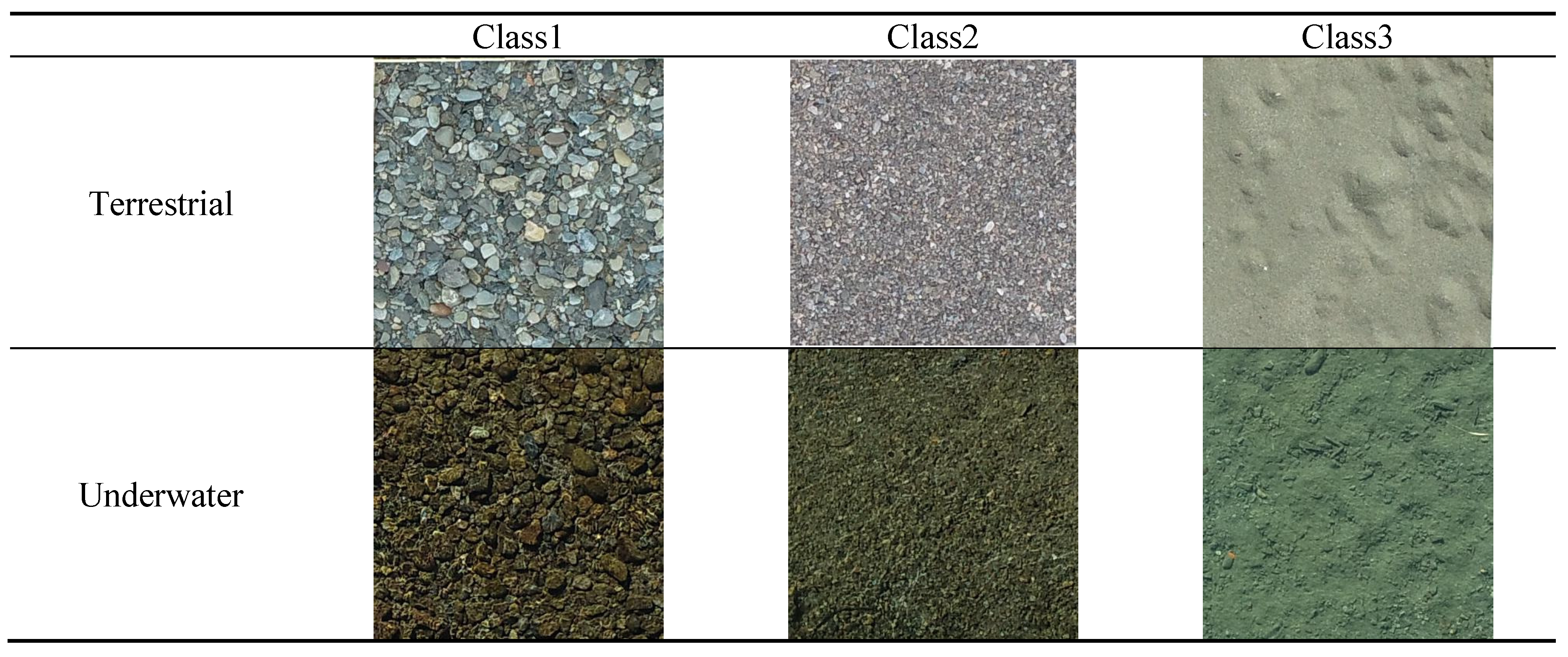

In the previous study, we set 3 particle size classes (Table 1) and classified images of materials in terrestrial areas based on these criteria. In this study, materials in water are also classified, so we varied the number of classes and searched for class definitions that can be classified with higher accuracy. For example, if images of bed materials with the same particle size are divided into two conditions, terrestrial or underwater, six classes are defined by combining the three particle size classes listed above with the two conditions.
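The six-class scheme in the example is simply the Cartesian product of size classes and conditions. A small sketch (label strings are illustrative), with a helper that recovers the size class when the two conditions are later merged:

```python
from itertools import product

SIZE_CLASSES = ["Class 1", "Class 2", "Class 3"]
CONDITIONS = ["terrestrial", "underwater"]

# Every (size class, condition) pair becomes its own label.
labels = [f"{s} ({c})" for s, c in product(SIZE_CLASSES, CONDITIONS)]


def size_part(label: str) -> str:
    """Drop the condition, e.g. 'Class 2 (underwater)' -> 'Class 2'."""
    return label.split(" (")[0]
```

Each additional condition multiplies the number of labels, which thins out the training images available per class.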

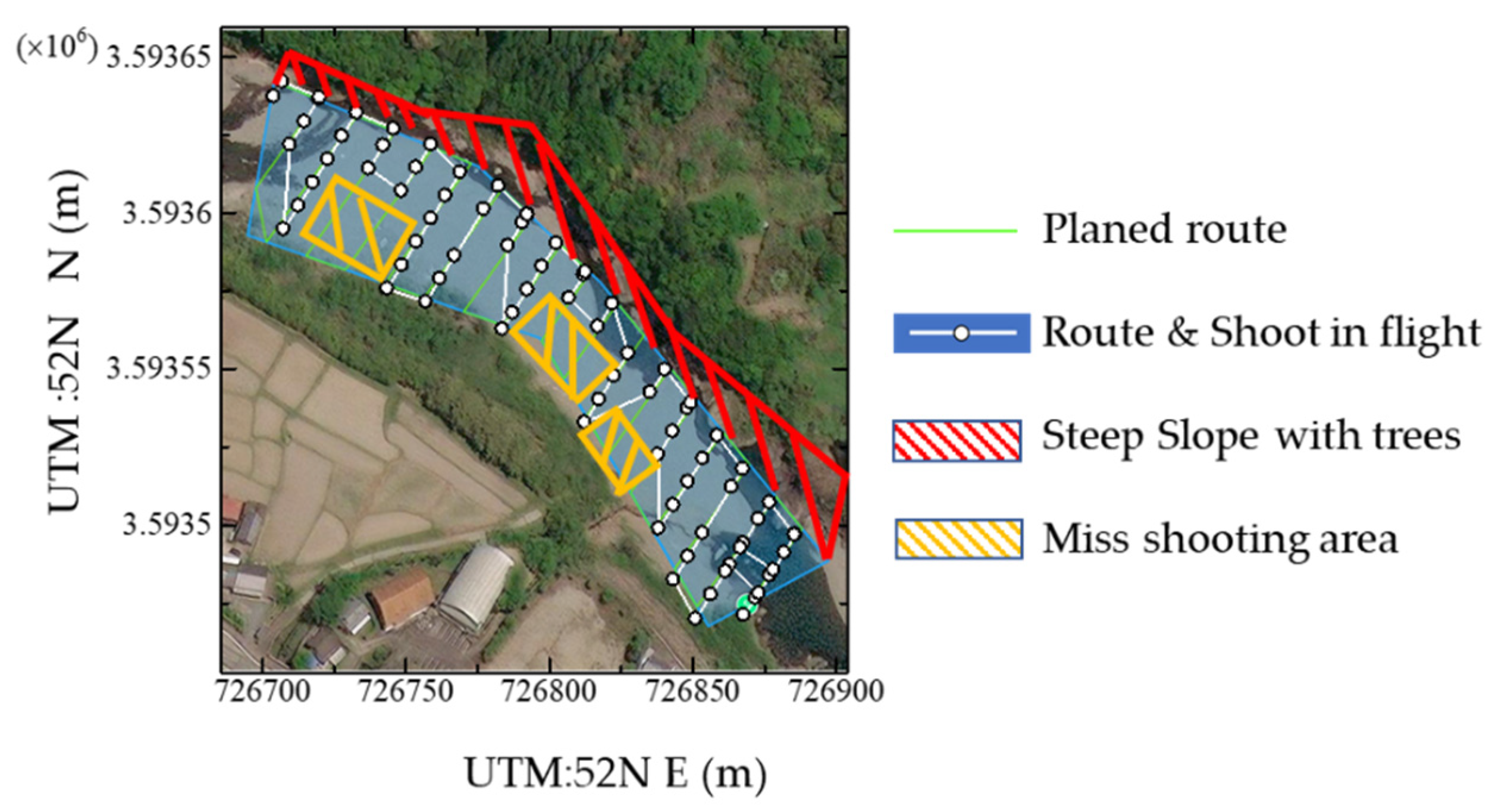

2.4. Projection of the classification results and microtopographic survey

The UAV was navigated automatically to photograph the two study sites continuously; each image was divided into small meshes, and as the final step of this study, the classification results for each mesh were projected onto a map. However, the study sites were in a narrow canyon, and the UAV flight altitude was kept relatively low to obtain sufficient photographic resolution. As a result, during some flight periods the signals received from GPS satellites were insufficient, making it impossible to capture images along the planned automatic navigation route. In the second observation (11th November 2023), we anticipated this problem, so after completing the automatic navigation, we identified the missing areas and flew the UAV manually over them to take supplementary photographs. In the first observation (20th September 2023), we did not notice the problem on site, so some photographs are missing.

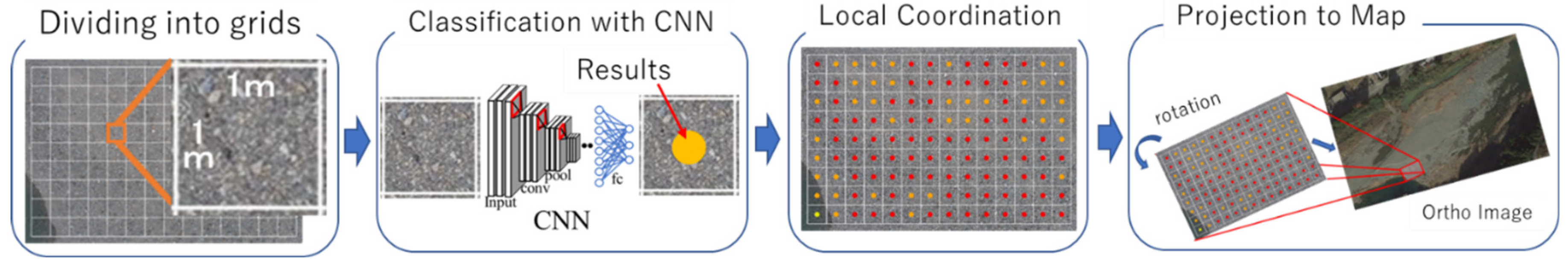

When taken from an altitude of 10 m, each image covers a ground area of approximately 9 m × 14 m. This area was divided into 1 m meshes, and the surface condition of each mesh was classified using the CNN. The position of each mesh was expressed in relative coordinates with the image center as the origin, and these relative coordinates were transformed into map coordinates using the UAV coordinates and the camera yaw angle extracted from the XMP metadata. The CNN classification results were then projected onto the map.
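The meshing and projection steps can be sketched as a 2-D rotation plus translation. This sketch assumes a nadir camera whose image "up" direction points along the yaw heading, yaw measured clockwise from north (as for a compass heading), and a UAV position already converted to projected coordinates in metres; the function names and example coordinates are hypothetical.

```python
import math
from typing import List, Tuple


def mesh_centres(width_m: float, height_m: float, cell_m: float = 1.0) -> List[Tuple[float, float]]:
    """Centres of whole cells tiling the footprint, relative to the image centre (x right, y up)."""
    nx, ny = int(width_m // cell_m), int(height_m // cell_m)
    x0 = -nx * cell_m / 2 + cell_m / 2
    y0 = -ny * cell_m / 2 + cell_m / 2
    return [(x0 + i * cell_m, y0 + j * cell_m) for j in range(ny) for i in range(nx)]


def to_map(dx: float, dy: float, easting: float, northing: float, yaw_deg: float) -> Tuple[float, float]:
    """Rotate image-relative offsets by the camera yaw and add the UAV position."""
    yaw = math.radians(yaw_deg)  # assumed clockwise from north
    east = easting + dx * math.cos(yaw) + dy * math.sin(yaw)
    north = northing - dx * math.sin(yaw) + dy * math.cos(yaw)
    return east, north


# Hypothetical UAV position (projected metres) and yaw read from the image metadata.
cells = [to_map(dx, dy, 500000.0, 3600000.0, 30.0) for dx, dy in mesh_centres(14.0, 9.0)]
```

With yaw = 0 the image axes coincide with east and north; with yaw = 90° the image "up" direction points east.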

In addition, to discuss the relationship between the grain size map and the microtopography, the microtopography of the study sites was surveyed using a LiDAR-UAV (Matrice 300 RTK + Zenmuse L1, manufactured by DJI).

4. Discussion

4.1. Reduction of the error factors by the diversity of training data

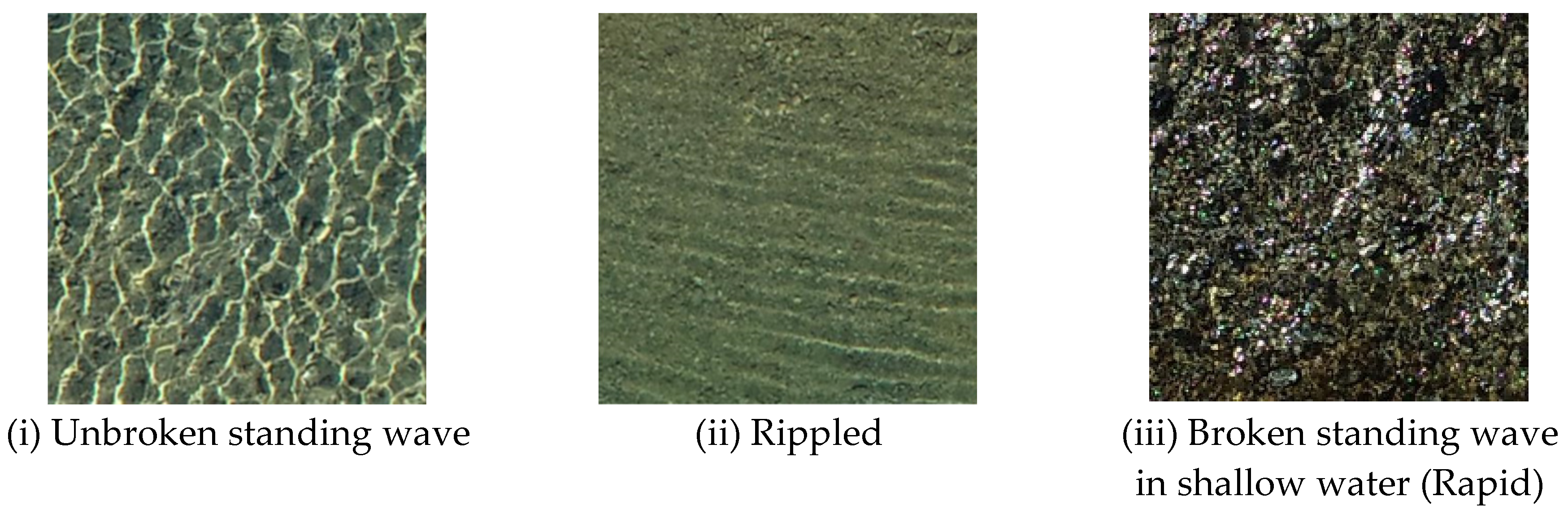

Hedger et al. [54] proposed using a CNN to classify water surface waves into smooth, rippled, and standing waves, and combining them with water surface slope information to delineate hydromorphological units (HMUs) for each mesohabitat type. Their results suggested that a CNN can recognize and classify water surface waves in images. This implies that, in the classification of riverbed material particle size targeted in this research, water surface waves overlaid on the image are also subject to feature extraction and are therefore a source of error in determining the bed material particle size. There are two possible ways to reduce the error in bed material classification caused by surface waves. The first is to train and classify using 20 classes in total (the 5 particle size classes used in this study so far × 4 types of water surface waves). However, because the total number of images available for training in this research is limited, increasing the number of classes reduces the amount of training data per class. In addition, as seen in Table 3, classifying highly similar images into two classes reduces the accuracy. The other approach is to classify particle size into the 5 classes mentioned above based solely on particle size information, training with images of different water surface wave conditions placed in the same class. This approach is expected to make the CNN ignore waves on the water surface and focus on the bed condition for classification.

In this study, the number of classifications is limited to the five classes mentioned above, and we evaluate how the classification accuracy changes depending on whether or not the training data includes various types of water surface waves. If the cases where river bed material cannot be photographed due to blisters or white water were excluded from the Surface Flow Type (SFT) shown by Hedger [

54] and Newson et al. [

67], (i) Unbroken standing wave, (ii) Rippled, (iii) Broken standing wave in shallow water (Rapid) (

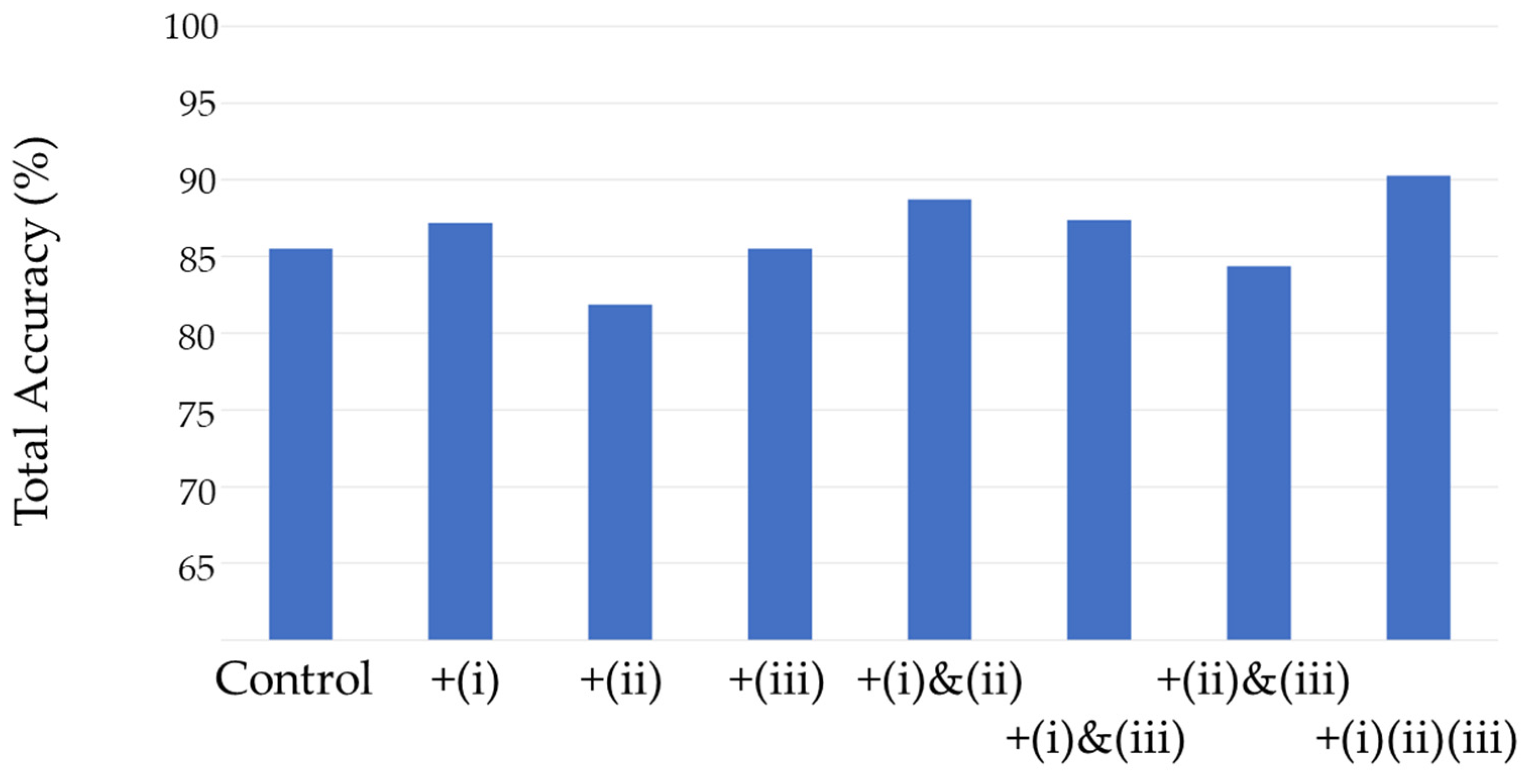

Figure 6) and smooth water surface remain. Here, the total accuracy of the test results for the network trained only with the images of “smooth” without waves on the water surface, is the Control for the following comparison. Then, the networks trained by sequentially adding images that contain waves (i) to (iii) were prepared. The same test data set as 3.2 was used for both verifications and compared in terms of total accuracy.
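The comparison amounts to training one network per subset of wave types on top of the smooth-water Control. A sketch of how those training configurations could be enumerated and used to filter an image list; the `sft` tag on each image record is a hypothetical field, not part of the original workflow.

```python
from itertools import combinations
from typing import Dict, List, Tuple

WAVE_TYPES = ("unbroken standing wave", "rippled", "broken standing wave (rapid)")


def training_configurations() -> List[Tuple[str, ...]]:
    """Control (smooth only) plus every combination of the three wave types."""
    configs = []
    for k in range(len(WAVE_TYPES) + 1):
        for combo in combinations(WAVE_TYPES, k):
            configs.append(("smooth",) + combo)
    return configs


def select_training(images: List[Dict[str, str]], config: Tuple[str, ...]) -> List[Dict[str, str]]:
    """Keep only images whose (hypothetical) 'sft' tag is in the configuration."""
    allowed = set(config)
    return [img for img in images if img["sft"] in allowed]


configs = training_configurations()
```

This yields 8 configurations: the Control, three with one wave type added, three with two, and one with all three, each evaluated on the same fixed test set.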

Figure 7 compares the total accuracy for all five classes. When one of the three types of water surface waves (i) to (iii) was added to the training data (the three cases on the left adjacent to the Control in Figure 7), a significant improvement in accuracy was seen when (i) unbroken standing waves were added. When the network was trained without images containing unbroken standing waves, wave patterns with wavelengths similar to the size of the riverbed material were not recognized as surface waves, and misclassifications occurred. Therefore, including (i) in the training data tends strongly to improve the accuracy. Similarly, for training data including two of the three SFTs, whether (i) is included has a large influence. On the other hand, including (iii) broken standing waves in shallow water (rapid) contributes little to improving the accuracy. Broken standing waves in shallow water occur under rapid flow, where fine Class 3 material does not settle on the bed. In fact, among the pictures taken in this research, there was no image of fine Class 3 bed material with wave type (iii). Therefore, learning wave type (iii) does not help discriminate fine material. In addition, visual inspection of images of broken standing waves in shallow water over the comparatively coarse bed materials of Classes 1 and 2 shows that the wavelength of the waves is similar to the particle diameter. Therefore, whether images of Classes 1 and 2 with wave type (iii) are included has little effect on the accuracy.

Finally, when the training data included all three SFTs (i) to (iii), the total accuracy reached 90.3%. This total accuracy was higher than the results shown in Table 5 because several more images of SFT (i) were added to the training data, allowing Class 2 under underwater conditions to be discriminated more accurately. As mentioned above, many factors affect the brightness and pattern of images taken under natural conditions, and they need to be taken into account. By including images taken under various conditions in the training data, their influence can be reduced.

4.2. Mapping of the wide-ranging area

A spatial distribution map of particle size can be created by photographing a wide area with the UAV's automatic navigation system and applying the proposed image classification. However, when the UAV was flown at a low altitude to obtain sufficient photographic resolution for determining particle size in a canyon with steep slopes on both sides, GPS reception was insufficient, and images were missing from parts of the route when the UAV was operated automatically. In this study, we manually photographed the missing areas and placed the classification results on the map based on the position and angle at which each photograph was taken.

Figure 8 shows an example of the shooting locations when the UAV flew by automatic navigation at the study site. To prevent the UAV from crashing, the automated flight plan was created to avoid getting too close to cliffs with dense vegetation. The area missed because of the loss of GPS signals is shown with orange hatching. Immediately after taking pictures by automatic navigation, we extracted the coordinates of each image, identified the areas where no images had been obtained for this reason, and then flew over them and took pictures manually.

Each picture, taken either automatically or manually, was divided into small grids (1 m × 1 m), as shown in Figure 9, for classification with the network. The classification result for each grid was given local coordinates on the picture, with the picture center as the origin. The results were projected onto a map by converting these local coordinates into map coordinates based on the GPS position and yaw angle of each photo extracted from its XMP metadata.

The CNN classification described above was intended for validation, so the classes considered only particle size and terrestrial or underwater condition. In practical mapping, however, the channel surface is not covered only with sediment; at our study site, there are vegetated parts and deep pools where the bed material is not visible. The classes “Grass” and “Deep Pool” were therefore added to the original 5 classes applied in the previous chapter. Among the resulting 7 classes, misclassification between the terrestrial and underwater variants of the same particle size class causes no practical problem. The test results were therefore evaluated with a confusion matrix in which the terrestrial and underwater variants of the same particle size class were combined into one class (Table 5). Seventeen images of Class 3 (fine gravel and coarse sand) were misclassified as Class 2. This reduced the accuracy, but the scores were generally acceptable. This network was then applied to mapping the wide area of our study site.
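Combining the terrestrial and underwater variants of a class means summing the corresponding rows and columns of the confusion matrix, after which the overall accuracy is the trace divided by the total count. A sketch, assuming rows are true classes and columns are predictions; the small matrix in the usage lines is hypothetical and not the data of Table 5.

```python
import numpy as np


def merge_confusion(cm, groups):
    """Sum rows and columns of a confusion matrix over groups of original class indices."""
    cm = np.asarray(cm)
    merged = np.zeros((len(groups), len(groups)), dtype=cm.dtype)
    for a, rows in enumerate(groups):
        for b, cols in enumerate(groups):
            merged[a, b] = cm[np.ix_(rows, cols)].sum()
    return merged


def overall_accuracy(cm):
    """Fraction of samples on the diagonal (correctly classified)."""
    cm = np.asarray(cm)
    return np.trace(cm) / cm.sum()


# Hypothetical 3-class matrix: indices 0 and 1 are the terrestrial and underwater
# variants of one size class, index 2 is another class.
cm = [[5, 2, 0],
      [1, 6, 0],
      [0, 0, 4]]
merged = merge_confusion(cm, [[0, 1], [2]])
```

For the 7-class mapping network, the groups would pair the terrestrial and underwater variants of each size class and leave “Grass” and “Deep Pool” as singletons, giving the 5 merged classes. Merging can only raise the overall accuracy, since confusions within a group move onto the diagonal.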

To discuss the relationship between the distribution of bed material and hydraulic features, topographic point clouds were obtained with a LiDAR unit (Zenmuse L1; DJI Japan, Japan) mounted on a UAV (Matrice 300 RTK; DJI Japan, Japan). The LiDAR-UAV can acquire point clouds from an altitude of 100 m with an accuracy of 3 cm, but this accuracy is insufficient to distinguish individual bed material particles at the study site. The LiDAR scanning mode was the repetitive scanning pattern, and the return mode was triple, to acquire the topography under the trees. The flight route was set at an altitude of about 80 m, with one survey line along the middle of the river channel. Because of the availability of the apparatus, the topographic survey was conducted only on 11th November 2023, whereas the sediment particle size was monitored on 4th September and 11th November 2023, before and after Typhoon No. 14 on 20th September 2023.

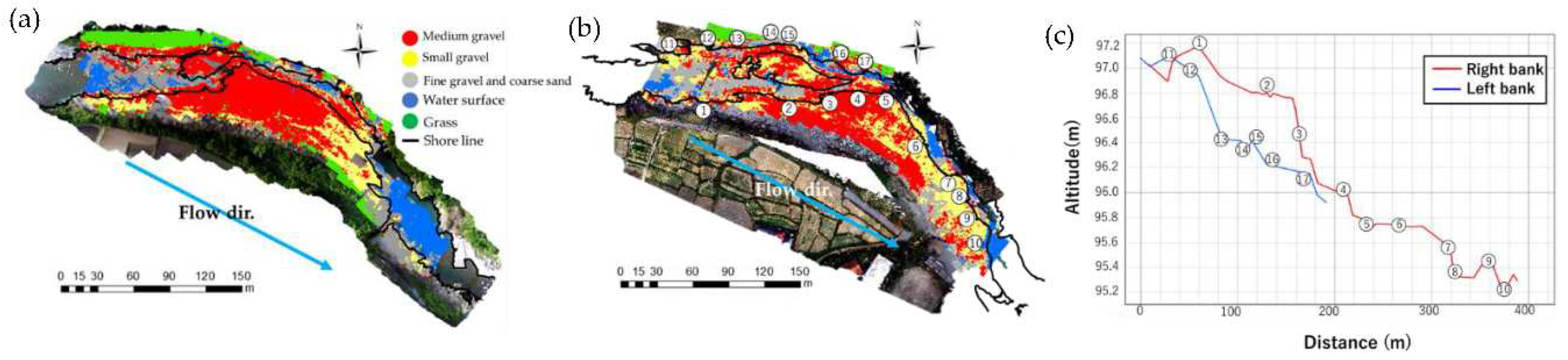

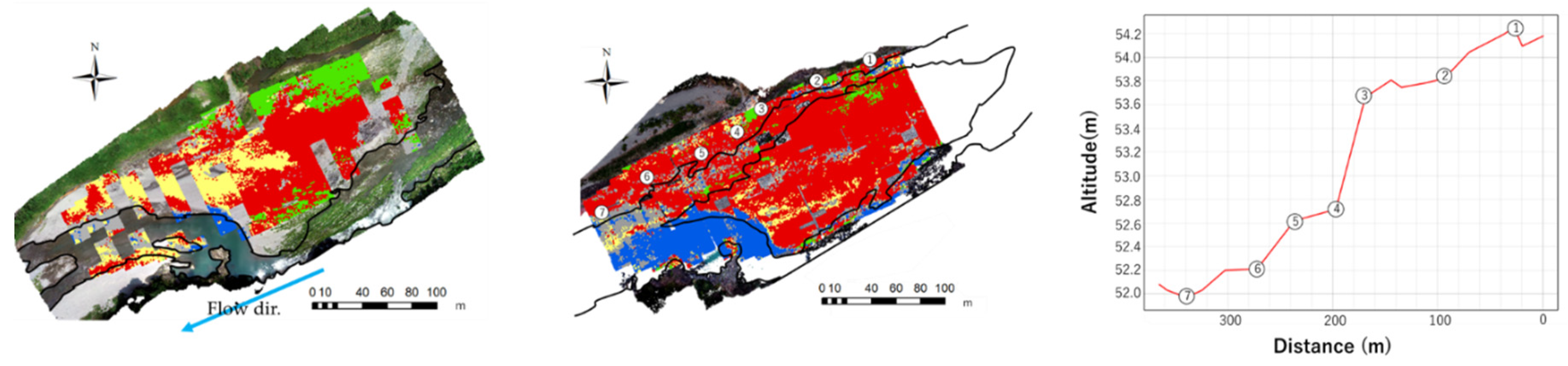

Figures 10 and 11 show the spatial distribution maps of riverbed grain size and the longitudinal profiles created from the point cloud data for Site 1 and Site 2, respectively. The spatial distribution maps show particle size classes, water, and plants as points in five colors, and the water edges on both banks on the low-water observation day are shown as black lines. The flow direction is shown by the light blue arrow: in Figure 10, the water flows from the upper left to the lower right, and in Figure 11, from the upper right to the lower left. In the longitudinal profiles, the vertical axis represents the elevation, and the horizontal axis represents the distance along the shoreline from the upstream boundary of the survey area. The numbers on the longitudinal profiles and on the spatial distribution maps indicate the same points.

Upstream of the channel bend at Site 1 (around the center of Figure 10b), fine Class 3 sediment is widely distributed within the low-water channel after the flood. As the flow gradually concentrated toward the left bank during the falling stage of the flood, coarse sediment first accumulated on the right bank, on the inside of the bend. As the water level fell further, relatively large grains were deposited in front of the bend, forming a rapid (between points 3 and 5). The reach upstream of it was dammed up and became a slow-flowing area, where the fine sediment transported during the falling stage was widely deposited on the channel bed. In the longitudinal profile, the right-bank channel of the two branched low-water channels has a steep slope between points 3 and 5, and it can be confirmed that relatively coarse sediment has been deposited in that section. In contrast, the grain size of the sediment along the left-bank channel is uniform. In the longitudinal profile, the left-bank side shows an almost uniform slope without pools or falls, and the channel width is also almost uniform, which is consistent with the absence of fine sediment deposition. The topography before the flood was not obtained. However, the accumulation of coarse material forming a rapid at the bend and the fine sediment deposited upstream of it are also seen in the upper half of the distribution map before the flood.

Comparing the distribution maps before and after the typhoon, the deep pool near the downstream end of the observation area has shrunk, and the fine-grained sediment on the right-bank side of the deep pool has disappeared and been replaced by Class 2 sediment. Before the typhoon, the area near the downstream end was dammed up by sediment accumulated outside the photographed area, and fine-grained sediment was deposited in the slow flow of the deep pool. It is presumed that the flood washed away the rapid outside the area, removing this accumulation, so that Class 2 sediment was deposited instead. The longitudinal profile after the typhoon shows an almost uniform slope in the downstream part of the photographed area.

At Site 2, which is located somewhat farther from the dam, Class 2 and Class 3 sediments are distributed above the low-water channel on the downstream right bank in both the pre- and post-typhoon distribution maps. The cause differs from that of the fine-grained sediment deposited in the slow-flowing pool dammed behind the small rapids at Site 1. The longitudinal profile after the typhoon shows that the slope between sections 4 and 7 is generally gentle, but coarse (Class 1) and fine (Class 3) sediments alternate along it. The channel at Site 2 is wider than at Site 1 and other upstream areas, which lowers the flow velocity and promotes sediment accumulation.
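The discussion above reads bed slope between numbered sections off the Lidar longitudinal profile. As an illustration only, the sketch below computes the slope between consecutive sections as elevation drop divided by horizontal distance and flags reaches steeper than a chosen threshold. The function names, the threshold, and the profile values are hypothetical and are not taken from the survey data.

```python
from typing import List, Sequence, Tuple


def section_slopes(distance_m: Sequence[float], elevation_m: Sequence[float]) -> List[float]:
    """Bed slope (elevation drop / horizontal distance) between consecutive sections.

    distance_m: downstream distance of each section along the channel (m), increasing.
    elevation_m: bed elevation at each section (m), e.g. read from a Lidar profile.
    A positive value means the bed drops in the downstream direction.
    """
    if len(distance_m) != len(elevation_m):
        raise ValueError("distance_m and elevation_m must have the same length")
    slopes = []
    for i in range(len(distance_m) - 1):
        run = distance_m[i + 1] - distance_m[i]
        if run <= 0:
            raise ValueError("distances must increase in the downstream direction")
        slopes.append((elevation_m[i] - elevation_m[i + 1]) / run)
    return slopes


def steep_reaches(slopes: Sequence[float], threshold: float) -> List[Tuple[int, int]]:
    """Return (upstream, downstream) section numbers, 1-based, whose slope exceeds threshold."""
    return [(i + 1, i + 2) for i, s in enumerate(slopes) if s > threshold]


if __name__ == "__main__":
    # Hypothetical profile for sections 1-6 at 20 m spacing (invented values, not survey data)
    dist = [0, 20, 40, 60, 80, 100]
    elev = [10.0, 9.9, 9.8, 9.2, 8.6, 8.5]
    s = section_slopes(dist, elev)
    print([round(v, 3) for v in s])  # [0.005, 0.005, 0.03, 0.03, 0.005]
    print(steep_reaches(s, 0.02))    # [(3, 4), (4, 5)]
```

With these invented values, the steep reach spans sections 3 to 5, mirroring the kind of rapid described at Site 1; in practice the threshold would have to be chosen relative to the reach-average slope.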

A comparison of the distribution maps before and after the flood shows that, before the flood, Class 2 sediment was deposited downstream and no branch channel existed on the right bank during low-water periods. After the flood, by contrast, a branch channel appeared on the right bank, and its bed consists of alternating Class 1 and Class 3 sediments. In particular, the extent of Class 1 (coarse) sediment expanded. A possible explanation is that, before 2023, sediment sluicing had been carried out only at Saigo Dam, whereas in 2023 Yamasubaru Dam also began sediment sluicing after its retrofitting was completed. Larger particles may therefore have been transported from the upstream dam.

5. Conclusions

In this study, we extended the particle size classification method using a CNN with UAV aerial images, which in our previous study targeted only terrestrial bed materials, to the classification of underwater bed materials in shallow water where the bottom is visible. If the distinction between terrestrial and underwater samples were ignored entirely, classification accuracy could be expected to drop significantly, even without testing it. Accuracy decreased further when the number of classes was doubled, because similar images had to be assigned to different classes. When images in different classes are highly similar, increasing the number of classes is counterproductive. Accuracy improved when terrestrial and underwater samples shared the same class, especially for fine grains, whose terrestrial and underwater images are highly similar. On the other hand, when particles are large enough to be identified in the image, their colors differ between terrestrial and underwater conditions, so assigning them to separate classes would likely have yielded higher classification accuracy.
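To make the class-sharing argument concrete, the sketch below collapses a confusion matrix for doubled classes (terrestrial and underwater variants of each size class) into shared particle-size classes and recomputes overall accuracy. The counts are invented for illustration, and the helper names are hypothetical. Note that merging predictions after the fact is not the same as retraining the CNN with shared labels; it only shows why confusions between the terrestrial and underwater variants of the same size class stop counting as errors once those variants share a label.

```python
from typing import Dict, List, Sequence


def merge_confusion(cm: Sequence[Sequence[int]], mapping: Dict[int, int], n_merged: int) -> List[List[int]]:
    """Collapse a confusion matrix (rows = true class, columns = predicted class)
    by mapping each original class index to a merged class index."""
    merged = [[0] * n_merged for _ in range(n_merged)]
    for i, row in enumerate(cm):
        for j, count in enumerate(row):
            merged[mapping[i]][mapping[j]] += count
    return merged


def overall_accuracy(cm: Sequence[Sequence[int]]) -> float:
    """Fraction of samples on the diagonal (correctly classified)."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(len(cm)))
    return correct / total


if __name__ == "__main__":
    # Hypothetical doubled classes: 0 = terrestrial coarse, 1 = terrestrial fine,
    # 2 = underwater coarse, 3 = underwater fine (invented counts, not study results)
    cm = [
        [40, 2, 5, 3],
        [1, 25, 0, 24],
        [6, 0, 42, 2],
        [0, 22, 1, 27],
    ]
    # One shared class per particle size, regardless of terrestrial/underwater
    mapping = {0: 0, 1: 1, 2: 0, 3: 1}
    merged = merge_confusion(cm, mapping, 2)
    print(merged)                    # [[93, 7], [2, 98]]
    print(overall_accuracy(cm))      # 0.67
    print(overall_accuracy(merged))  # 0.955
```

In this made-up matrix, most errors are fine terrestrial images predicted as fine underwater and vice versa, so sharing the fine class removes them; for coarse particles with distinct colors, the same merge would hide information that separate classes could exploit.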

Waves on the water surface were also considered a potential source of error, but because increasing the number of classes had proven counterproductive, we did not add wave-specific classes. Since the purpose of this research is to classify riverbed images by particle size, we obtained sufficient accuracy by defining classes based on particle size alone, without distinguishing between wave types. In particular, accuracy was shown to improve when a variety of SFTs was included in the training data.

This suggests that classifying by the main target attribute (particle size in this study), without being distracted by other environmental factors that may alter the image, provides a reasonable level of accuracy. This finding is not limited to SFTs. Furthermore, if the training data include images taken under conditions as diverse as possible with respect to these other environmental factors, the biases they introduce will be reduced and higher accuracy will be obtained. In this study, photographs were taken in two relatively close areas of the same river, so environmental conditions other than water surface waves were relatively similar. Therefore, to make the method generally applicable to other rivers, images must be taken under various environmental conditions, including different flow rates, current velocities, parent rocks, water colors, particle shapes, and riverbed gradients, and more verified training data should be prepared.

The validity of this method was further supported by the reasonable correspondence between the microtopographic gradient observed by Lidar and the wide-area trend in particle size distribution. UAV images of river channels reflect a combination of many environmental factors, and classifying them while accounting for multiple environmental conditions at once is difficult. However, sufficient accuracy can be obtained by classifying on a single target attribute and supplying training data without separating the other environmental attributes. This applies not only to classification by riverbed particle size but also to creating environmental classification maps through image classification.

Author Contributions

Conceptualization, M.I.; methodology, S.A., T.S., and M.I.; software, S.A., T.S., and M.I.; validation, S.A., T.S., and M.I.; formal analysis, S.A., T.S., and M.I.; investigation, M.I.; resources, M.I.; data curation, S.A., T.S., and M.I.; writing—original draft preparation, M.I.; writing—review and editing, M.I.; visualization, S.A. and T.S.; supervision, M.I.; project administration, M.I.; funding acquisition, M.I. All authors have read and agreed to the published version of the manuscript.