The aim of this section is to derive a default prior

to be used in (

4). To this end, following [

11] we use the shrinkage argument, which is a crucial procedure in the development of matching priors, i.e. priors that ensure, up to the desired order of asymptotics, an agreement between Bayesian and frequentist procedures. Examples of matching priors are (see [

11]) for posterior quantiles, for credible regions and for prediction. Here, we focus on a specific matching prior, that ensures the invariance of the posterior mode in the posterior distribution (

4). As a consequence, the invariance extends to HPDs, as well as the

value, achieved incorporating the reference function within the prior.

The proposed choice of the prior

, that makes the MAP and thus also HPDs and the

value invariant under 1-1 reparameterization, will depend on the log-likelihood

and on its derivatives. In regular parametric estimation problems, both the MLE and the score estimating function exhibit an asymptotically symmetric distribution centered at the true parameter value and at zero, respectively. However, these asymptotic behaviours may poorly reflect exact sampling distributions particularly in cases with small or moderate sample information, sparse data, or complex models. Several proposals have been developed to correct the estimate or the estimating function. Most available methods are aimed at approximate bias adjustment, either of the MLE or of the profile score function, also when nuisance parameters are present (see [

25] for a review of bias reduction for the MLE and [

26] and subsequent literature for bias correction of the profile score). Lack of equivariance impacts the so-called implicit bias reduction methods, which achieve first-order bias correction by modifying the score equation (see [

25,

27]). To avoid this drawback, in this paper we focus on the median modification of the score, or profile score equation, whose solution respects equivariance under monotone reparameterizations ([

28]). Similar to Firth’s implicit method ([

27]), the median modification of the score, or profile score, does not rely on finiteness of the MLE, thereby effectively preventing infinite estimates.

3.1. No nuisance parameters

Let’s explore first the scenario where

is scalar. In order to obtain median bias reduction of the MLE, it is possible to resort to a modified version of the score function of the form

where

is the score function and

a suitable correction term of order

. In particular, the median modified score function assumes for

the expression

The solution

to the equation

not only upholds equivariance under componentwise monotone reparameterizations but also approximates median unbiasedness ([

28]). Note that likelihood inference based on (

10) does not depend explicitely on the MLE. Indeed, the modified score function has been found to overcome infinite estimate problems. Likewise the MLE, also

is asymptotically

, so that the Wald-type statistics only differ in location.

Since Bayes’ theorem is a statement of adittivity on the log scale

constant, we observe that in the Bayesian framework

can be interpreted as the derivative of the logarithm of a prior, that is

. We are thus looking for a matching prior

such that

In the scalar parameter case, it is straightforward to show that the proposed

median matching prior takes the form

where

and

. The posterior based on the median matching prior is thus

A first-order approximation for the

value, when testing

, is simply given by

which differs in location with respect to the classical first-order approximation for the

value based on the MLE. A second approximation for the

value, when testing

, can be obtained from the asymptotic distribution of the modified score function (

10), that is

Although the first-order equivalence between (

11) and (

12), note that (

11) is based on an easily understandable comparison between estimated value and hypothetical value, taking estimation error into account, and is widely used in applications, but does not satisfy the principle of parameterization invariance. On the other hand,

is parameterization invariant.

Note that, when using a

predictive matching prior, i.e. a prior ensuring asymptotic equivalence of higher-order frequentist and Bayesian predictive densities (see, e.g., [

11]), the term

in (

10) corresponds to the Firth’s adjustment ([

27])

In view of this, for general regular models, Firth’s estimate coincides with the mode of the posterior distribution obtained using the default predictive matching prior. However, lack of invariance affects this kind of adjustment ([

25]), unless dealing with linear transformations.

Example 1:

One parameter exponential family. For a one parameter exponential family with canonical parameter

, i.e. with density

the median modified score function has the form

where

and

. In this parameterization,

can be seen as the first derivative of the log-posterior

On the other hand, Firth’s modified score takes the form

. The effect of the median modification is to consider the median matching prior

, while

implies a Jeffreys’ prior

. Note that, for a one parameter exponential family with canonical parameter

, both

and

belong to the family of invariant priors discussed in [

29,

30].

Example 2:

Scale model. Consider the scale model , where is a given function. Let . We have , and , with , and . The median matching prior is thus , while the Jeffreys’ prior for a one-parameter scale model is and the prior associated to the Firth’s adjustment is .

Example 3:

Skew-normal distribution. Consider a skew-normal distribution with shape parameter

, with density

, where

is the standard normal density function. The median correction term for the score function associated to the median matching prior is (see [

28,

31])

Numerical integration must be performed to obtain the expected values involved in

.

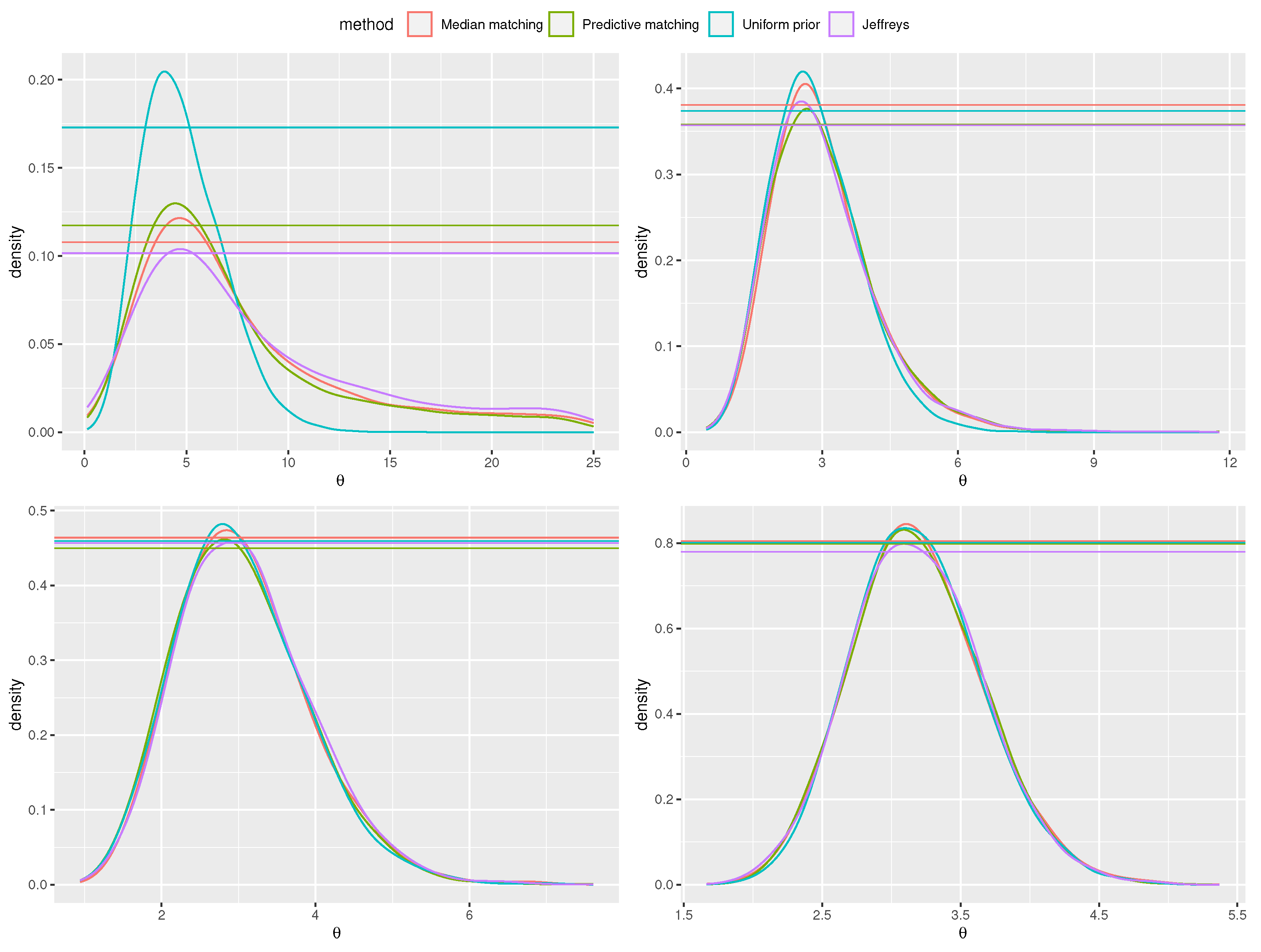

In order to illustrate the proposed prior, we consider draws from the skew-normal model with true parameter

and increasing sample sizes

(

Figure 1). The posterior distributions are obtained with the method by [

32], i.e. drawing

values and accepting the best

. The

values associated to the null (true) hypothesis

and the (false) hypothesis

are reported in

Table 1. For comparison, also the Jeffreys’ prior ([

33]), the predictive matching prior ([

31]) and the flat prior, with uniform reference function, are considered. Progressive agreement among evidence values obtained with the proposed median matching prior and the other priors for larger sample size is shown. Also, as expected, progressively increasing

n, the evidence values indicate agreement with the true hypothesis

and disagreement with

for all the priors used. Anyway, note that the posterior distribution obtained with a flat prior, and a uniform reference function, is proportional to the likelihood function that can be monotone. In view of this, while the MAPs of the posterior based on the default priors are always finite, in some samples the MAP of the posterior with the non-informative prior may be infinite. An example of this effect is illustrated in

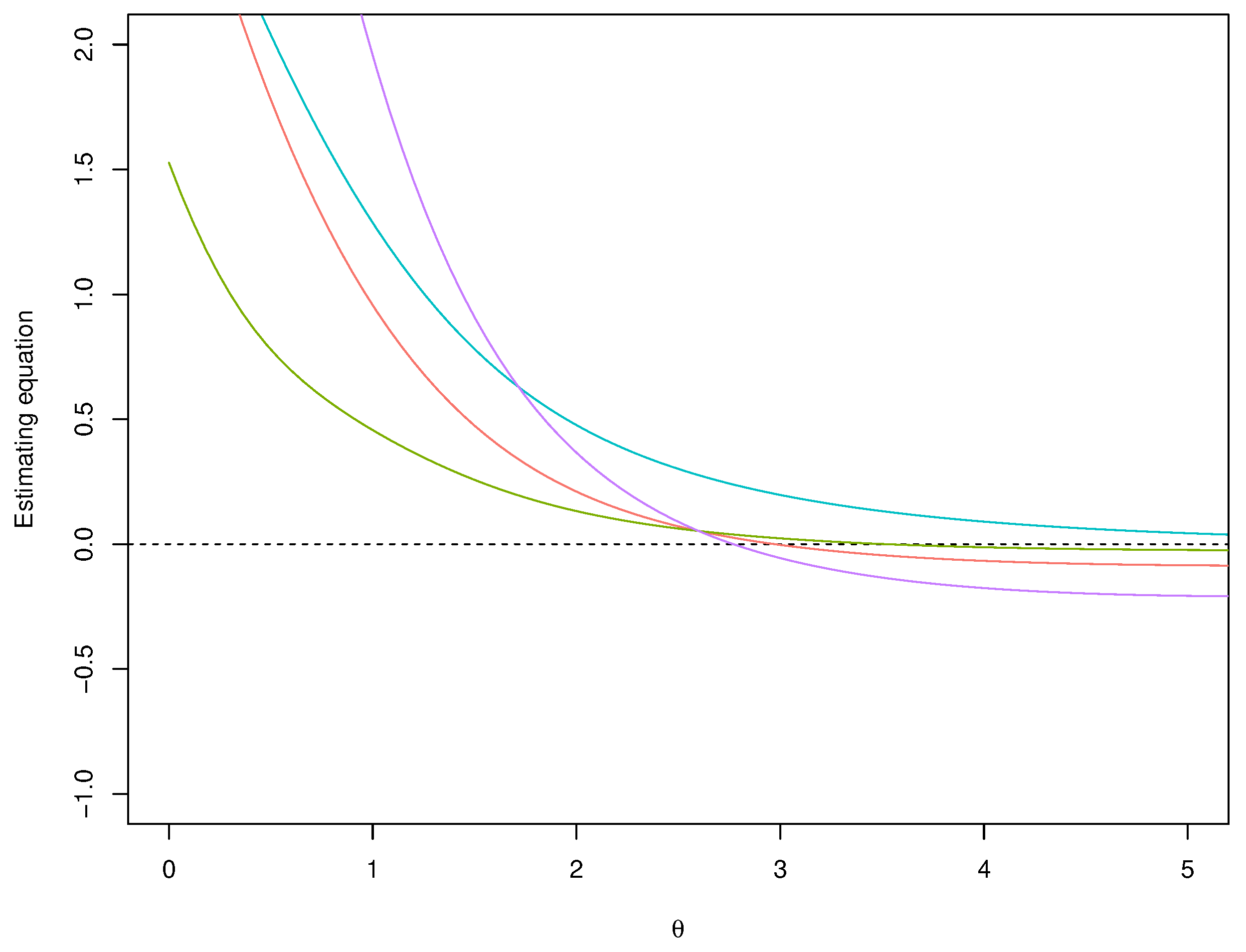

Figure 2.

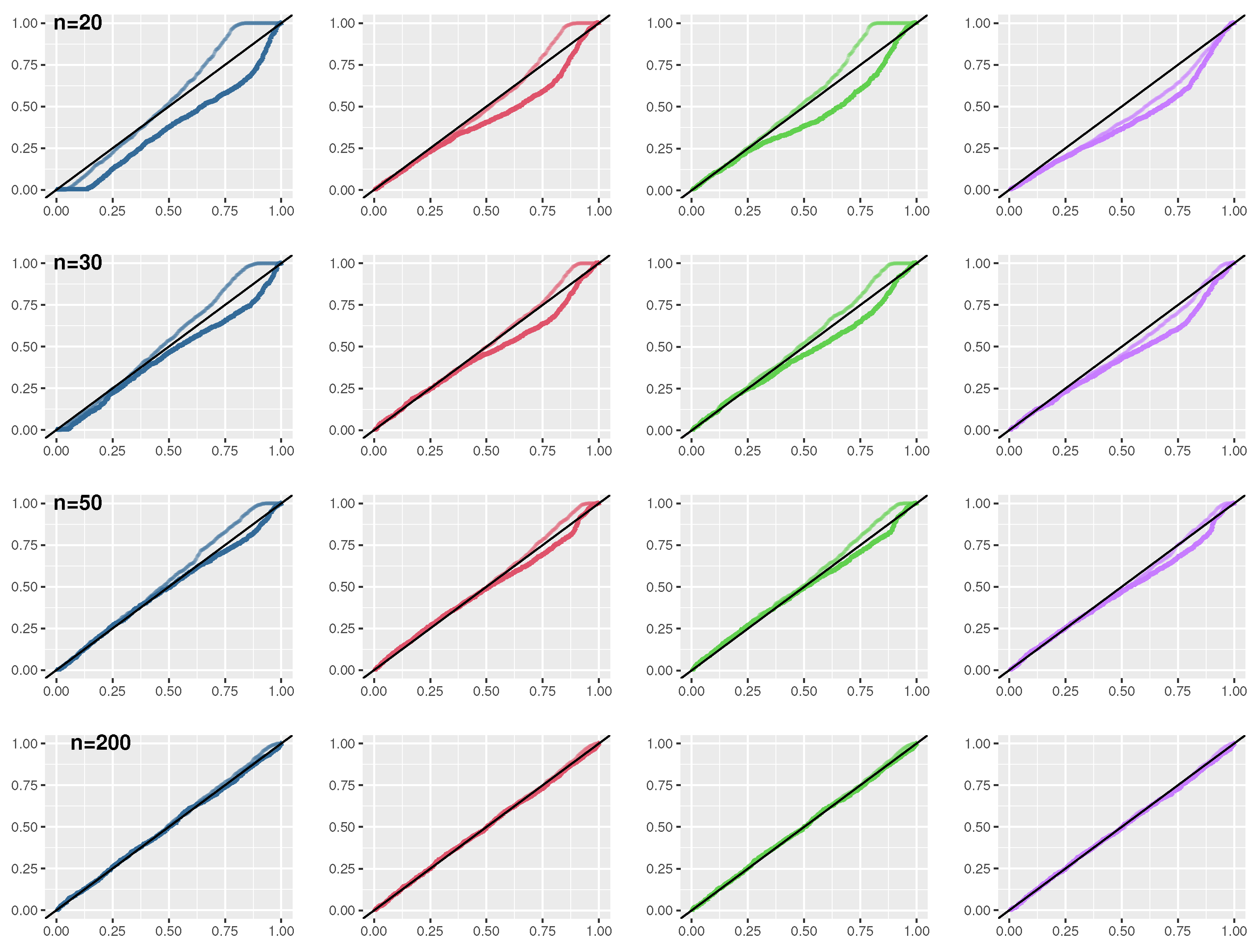

The properties of first-order approximations of the

values have been investigated by a simulation study, with sample sizes

. Results are displayed in

Figure 3. Distributions of the

value from the posterior based on the median matching prior are better, both for small and moderate sample sizes, in terms of convergence to the Uniform distribution. Moreover, score-type

values (

12) are also preferable than Wald-type

values (

11). For the results with the posterior distribution obtained with a flat prior we found

of infinite estimates for the sample sizes considered in the simulation study, and in these cases the

value was considered as 0.

3.2. Presence of nuisance parameters

In the presence of nuisance parameters, in order to obtain median bias reduction of the MLE, it is possible to resort to a modified version of the profile score function of the form

where

a suitable correction term of order

. In particular, for the median modified profile score function, the adjustment

assumes the expression

where

,

and

are the first three cumulants of

(see [

28], Section 2.2, for their expression). For the estimator

, defined as the solution of

, parameterization equivariance holds under interest respecting reparameterizations ([

28]).

Note that, also in the context of nuisance parameters, we are in the situation in which the proposed prior

is known through its first derivative; this is typically the situation with matching priors (see, e.g., [

11]). Since the parameter of interest is scalar, the posterior based on the median matching prior can be written as

A simple analytical way of approximating to first-order the posterior distribution (

14) based on the median matching prior is to resort to a quadratic form of

. In particular, the approximate posterior distribution for

takes the form

where

is a Rao score-type statistic based on (

13) and the symbol "

" means asymptotic proportionality to first-order. In this case, a first-order approximation of the

value, when testing

, is given by

In this case, an higher order approximation via (

3) would be impractical since a closed form prior is not available. As an alternative, simulation-based approaches may be used to derive the implied posterior distribution (

14) based on the median matching prior. The first one relies on Approximate Bayesian Computation (ABC) techniques, using

or the modified profile score function

as summary statistics; see [

34] for the modification of the algorithm of [

32] by using a profile estimating equation. This first method introduces an approximation at the level of the posterior estimation. The second one still relies on (

13) but considers use of Manifold MCMC methods (see ,e.g., [

35]) to conditioning exactly on the profile equation and not up to a tolerance level, as in ABC (see [

36,

37]). The algorithm moves on the constrained space

, where

is the solution of the estimating equation on the original data. For the latter method, we need minimal regularity assumptions on

, which is assumed to be continuous, differentiable and available in closed form expression. Note, for instance, that in the skew-normal example in

Section 3.1 these conditions are not met.

Example 4:

Exponential family. If

is an exponential family of order

d with canonical parameter

, i.e.

, quantities involved in

are simply obtained from derivatives of

([

28]). Note that, in this framework,

is an approximation with error of order

of the score for

in the conditional model given

(see e.g. [

38],Section 10.2). Then, in the continuous case, the MAP

is an approximation of the optimal conditional median unbiased estimator, and

is related to the conditional likelihood for

given by

; see [

39] for a Bayesian interpretation of such pseudo-likelihoods.

Example 5:

Multivariate regression model. Consider a regression model of the form

where it is assumed that

, with

positive definite matrix, and

are unknown parameters. This model is widely used for instance in time series analysis, in which as regression covariates the past of the observables

y are used. We focus on the problem of testing hypothesis on the correlation coefficient

.

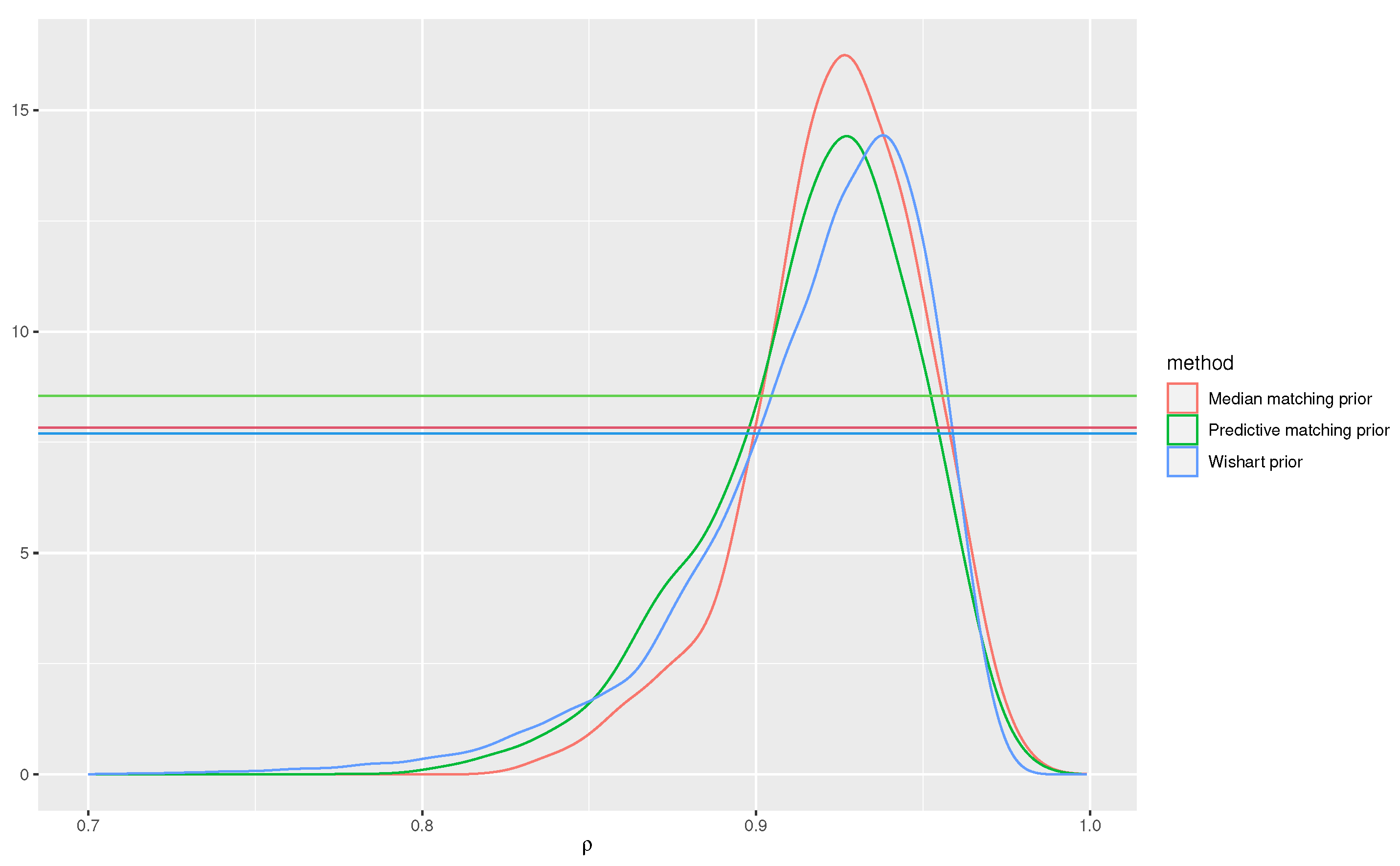

Consider a draw with true parameter

and

. For obtaining the proposed posterior (

14) for

we first compute the MAPs with the median matching prior and also, for comparison, with the predictive matching prior, which are respectively 0.953 and 0.92. Note that the expression of the predictive matching prior for

is given in [

40] and it still corresponds to the Firth’s adjustment to the score function. The expressions of the modified profile estimating functions

and

are obtained from [

41] and are available in closed form expressions. Hence, the Manifold MCMC method can be used to obtain the implied posteriors, whose approximation is comparable to that of any MCMC sampler. In particular we used 20000 iterations.

We compare the posterior distribution based on the proposed median matching prior, with those obtained with the predictive matching prior and with an inverse-Wishart prior for the covariance matrix

with one degree of freedom and identity position, and uniform prior on the regression parameters. The posterior distributions are displayed in

Figure 4. The hypothesis of interest is

, and a smaller

e-value indicating disagreement with the hypothesis should be preferable. The

values are 0.25 with the median matching prior, 0.36 for the predictive matching prior and 0.60 with the inverse-Wishart prior. Note that the

value based on the inverse-Wishart prior involves the constrained maximization and multidimensional integration thus is not directly readable in

Figure 4. Indeed, one crucial difference is that the original

value formulation links the evidence of the null hypothesis to the evidence of a more refined hypothesis, choosing the MAP under the null hypothesis for all the nuisance parameters, while in the alternative (tangential) set all values are used, and integration is performed on the full dimensionality of the space. On the contrary, in the proposed posterior based on the median matching prior, the maximizer of nuisance parameters are taken both in the null and non null sets.

Finally, for the posterior based on the inverse-Wishart prior, we also computed the

value based on high-order tail area approximation (

9) of the marginal surprise posterior, which is equal to 0.27. This procedure still avoids the multidimensional integration but the result is not invariant to changes of parametrization.

Example 6:

Logistic regression model. Let , , be independent realizations of binary random variables with probability , where and is a row vector of covariates. We assume that a generic scalar component of is of interest and we treat the remaining components as nuisance parameters.

As an example, we consider the

endometrial cancer grade dataset analyzed, among others, in [

42]. The aim of the clinical study was to evaluate the relationship between the histology of the endometrium (HG), the binary response variable, of

patients and three risk factors: 1. Neovascularization (NV), that indicates the presence or extent of new blood vessel formation; 2. Pulsatility Index (PI), that measures blood flow resistance in the endometrium; 3. Endometrium Height (EH), that indicates the thickness or height of the endometrium. A logistic model for HG, including an intercept and using all the covariates (NV, PI, EH), has been fitted, but maximum likelihood leads to infinite MLE of the coefficient

related to NV, due to quasi-complete separation. This phenomenon prohibits the use of diffuse priors for

, since the corresponding posterior wouldn’t concentrate. Moreover, the

value with non-informative priors cannot be obtained also for any hypothesis concerning parameters different from

.

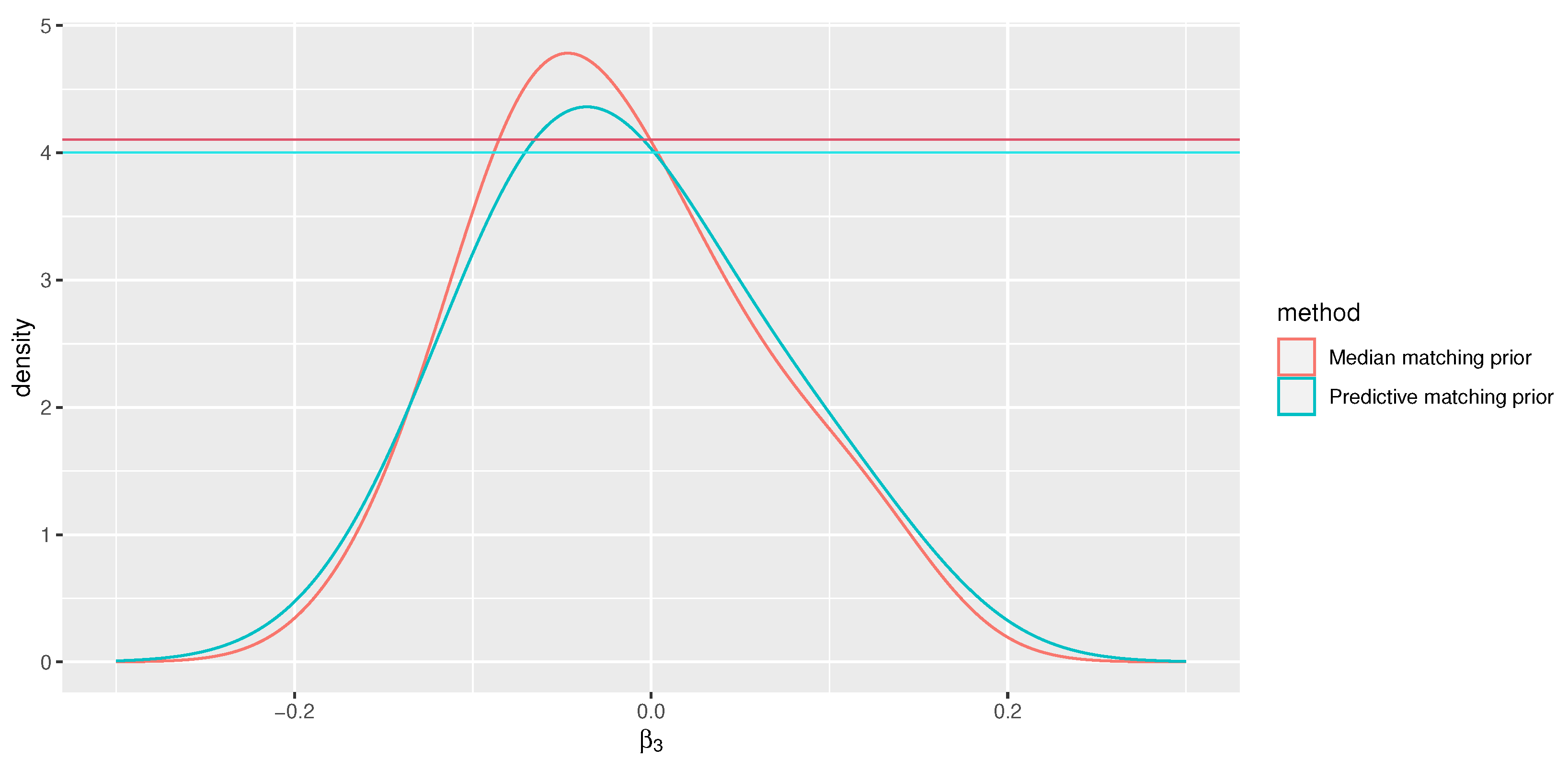

If we consider as the parameter of interest, while the remaining regression coefficients are treated as nuisance parameters, the analysis with the median matching prior allows to obtain a global proper posterior, with MAP equal to 3.86, open to interpretation both in the original scale and in terms of odds ratios. Similarly, the posterior based on the predictive matching prior, which in this model coincides with Jeffreys’ prior is proper, with the MAP set at 2.92. The latter suffers from lack of interpretability on different scales, since a different parametrization in estimation phase would affect the results.

If we consider

as the parameter of interest, related to the risk factor PI, the MAPs are -0.038 when using the median matching prior and -0.035 when using the predictive matching prior. The

values for the hypothesis

are 0.60 and 0.55, respectively (see

Figure 5). Likewise, the interpretation of

e-values remains consistent and independent of parametrization solely in the first case.