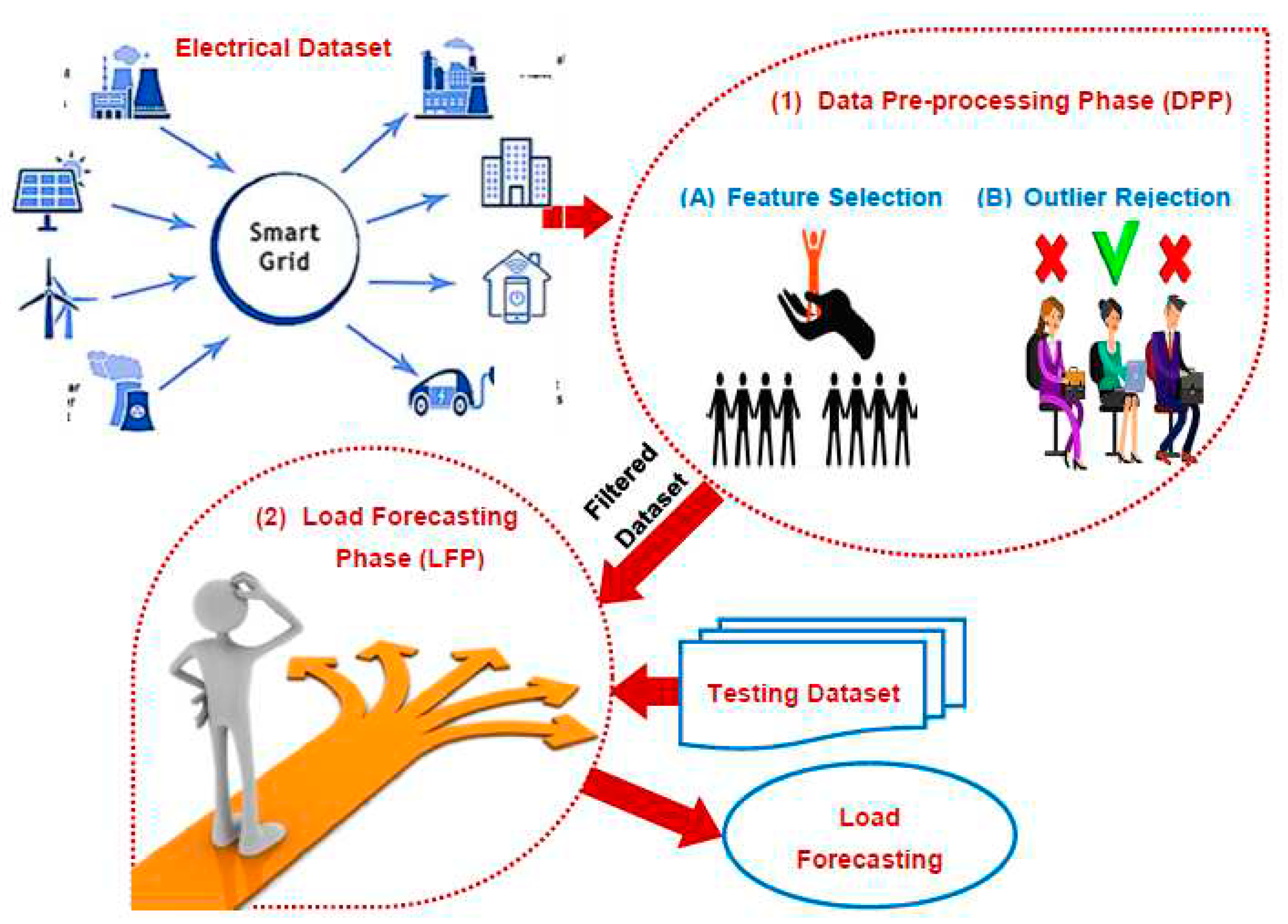

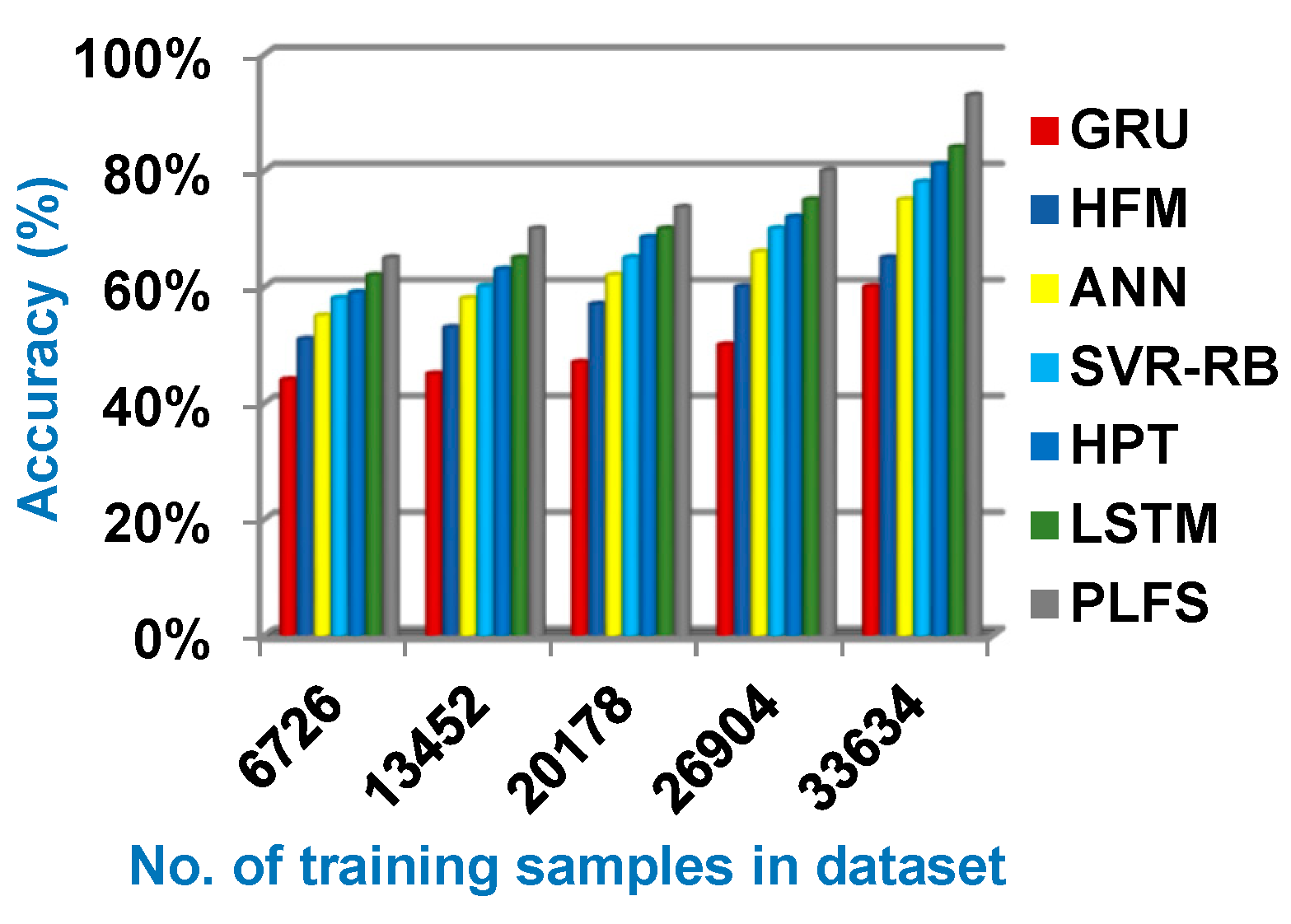

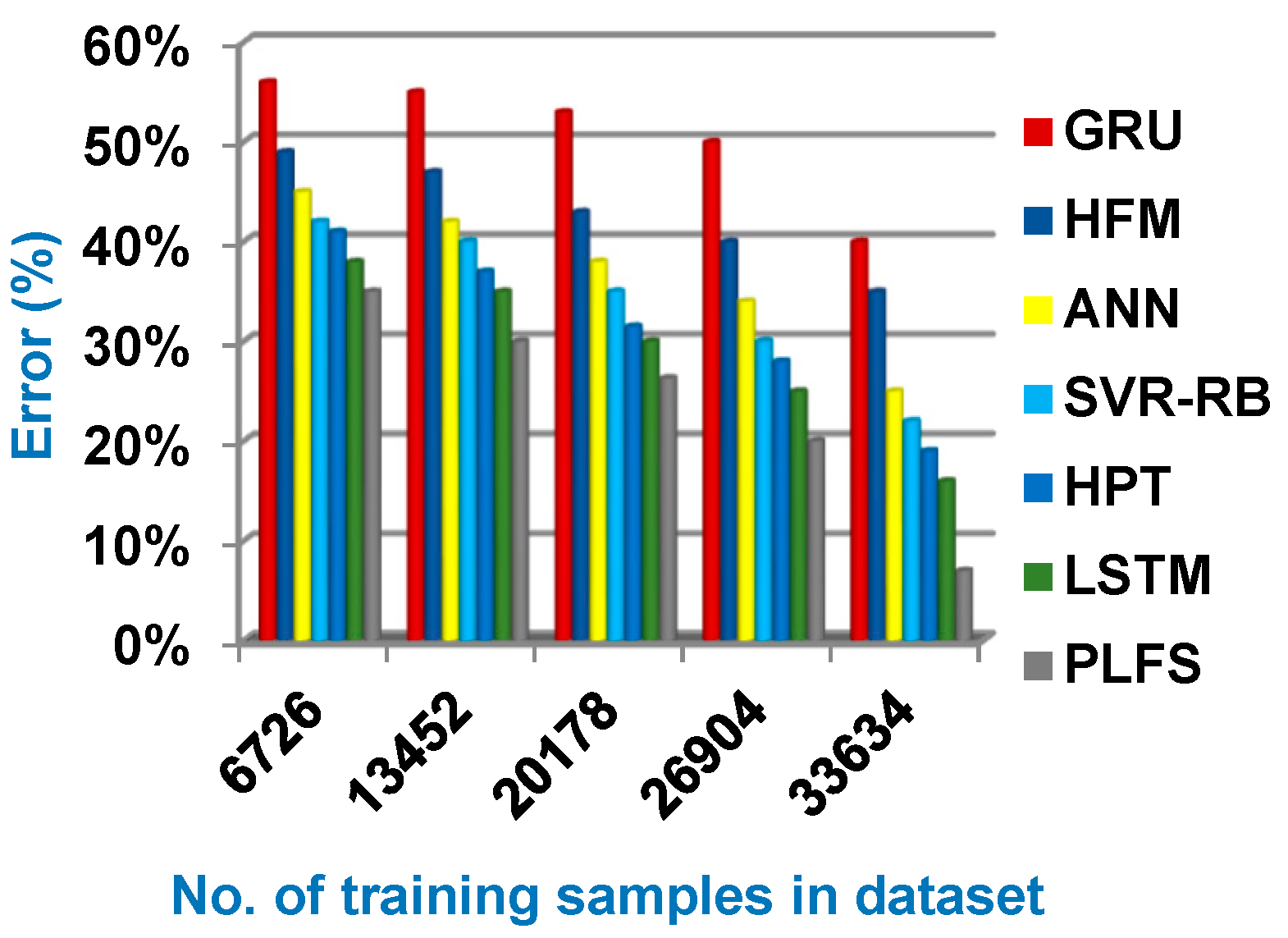

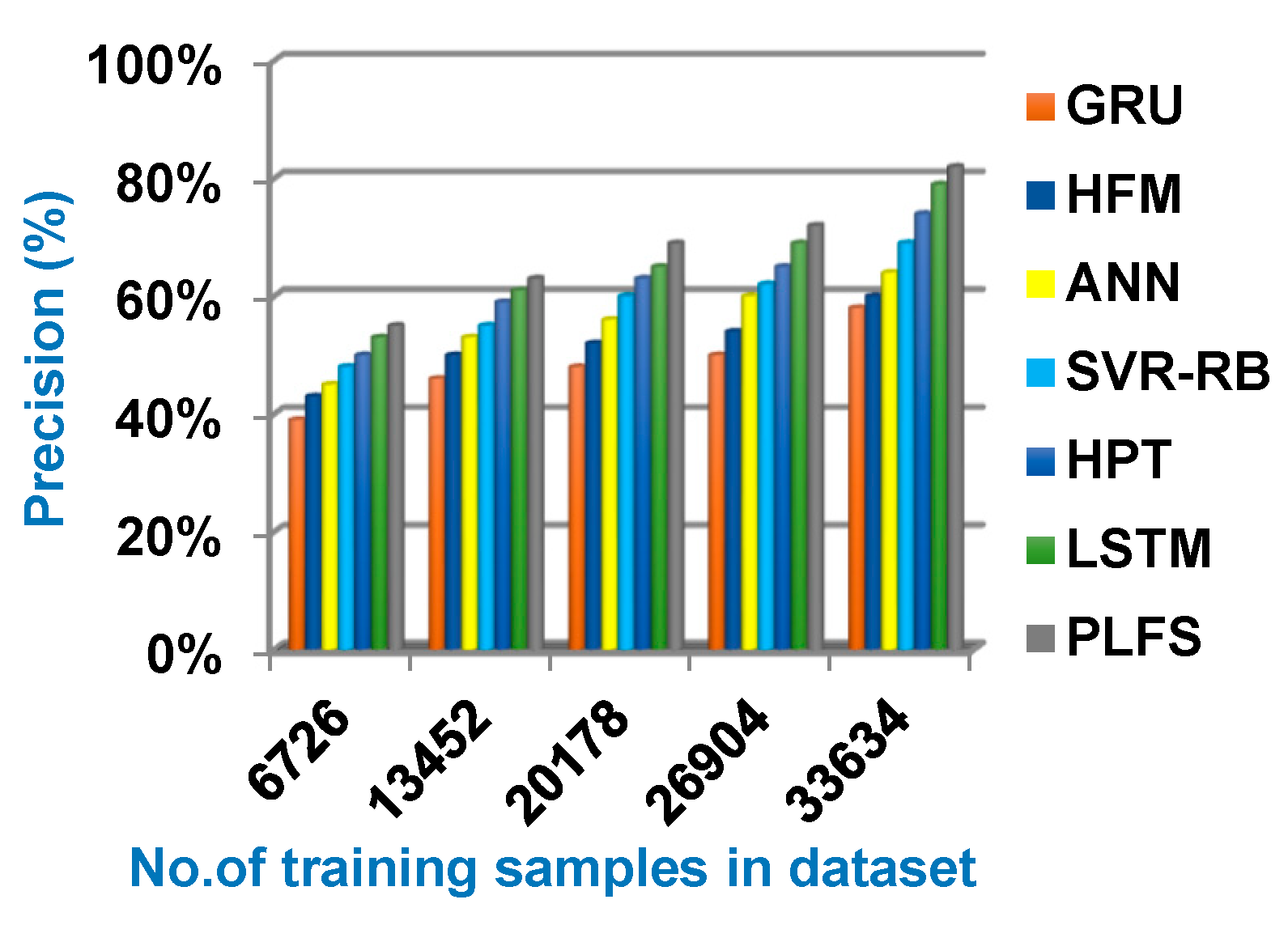

In this section, Perfect Load Forecasting Strategy (PLFS) as a new forecasting strategy is introduced to accurately estimate the amount of electricity required in the future. PLFS consists of two sequential phases called Data Pre-processing Phase (DPP) and Load Forecasting Phase (LFP) as presented in

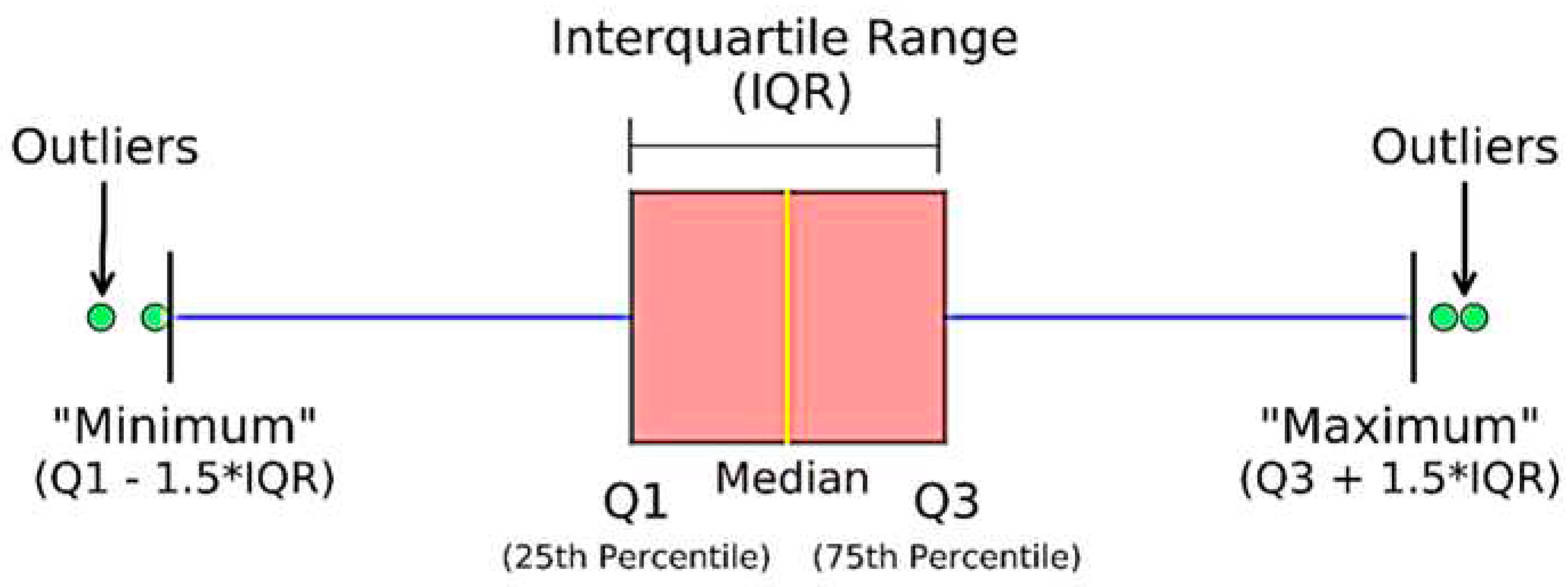

Figure 1. DPP aims to filter dataset from irrelevant features and outliers to contain valid data. Then, LFP aims to provide a perfect load forecasting based on valid dataset passed from DPP. In DPP, two main processes called feature selection and outlier rejection will be applied to filter the electrical dataset before learning the load forecasting model in LFP to provide perfect results. While feature selection removes non informative features, outlier rejection removes invalid items in the dataset to prevent overfitting problem and to enable the forecasting model to provide accurate results. At the end, the proposed load forecasting model in LFP will be used to give a perfect results. In this work, feature selection process will be performed using a new selection method called Advanced Leopard Seal Optimization (ALSO). Then, Interquartile Range (IQR) will be used to detect outliers. Finally, Weighted K-Nearest Neighbor (WKNN) algorithm will be used to provide fast and accurate forecasts.
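The IQR-based outlier rejection step can be illustrated with a minimal sketch. It assumes the common 1.5 × IQR fence rule and the inclusive quartile method; the function names and the sample load values are hypothetical and are not taken from this work.

```python
import statistics

def iqr_bounds(values, k=1.5):
    """Return (lower, upper) fences; k = 1.5 is the conventional choice (an assumption here)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

def reject_outliers(values, k=1.5):
    """Keep only the items that fall inside the IQR fences."""
    lower, upper = iqr_bounds(values, k)
    return [v for v in values if lower <= v <= upper]

# Hypothetical load readings with one obviously invalid item (900.0).
loads = [310.0, 295.0, 305.0, 300.0, 298.0, 302.0, 900.0, 301.0]
clean_loads = reject_outliers(loads)
```

In this toy series only the reading of 900.0 falls outside the fences, so the remaining seven items would be passed on to the forecasting phase.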

3.1. The Advanced Leopard Seal Optimization (ALSO)

In this subsection, a new feature selection method called Advanced Leopard Seal Optimization (ALSO) that combines both filter and wrapper approaches will be discussed in detail. While filter approaches can quickly select a set of features, wrapper approaches can accurately select the best features. Hence, ALSO includes Chi-square as a filter method [

10] and Binary Leopard Seal Optimization (BLSO) as a wrapper method [

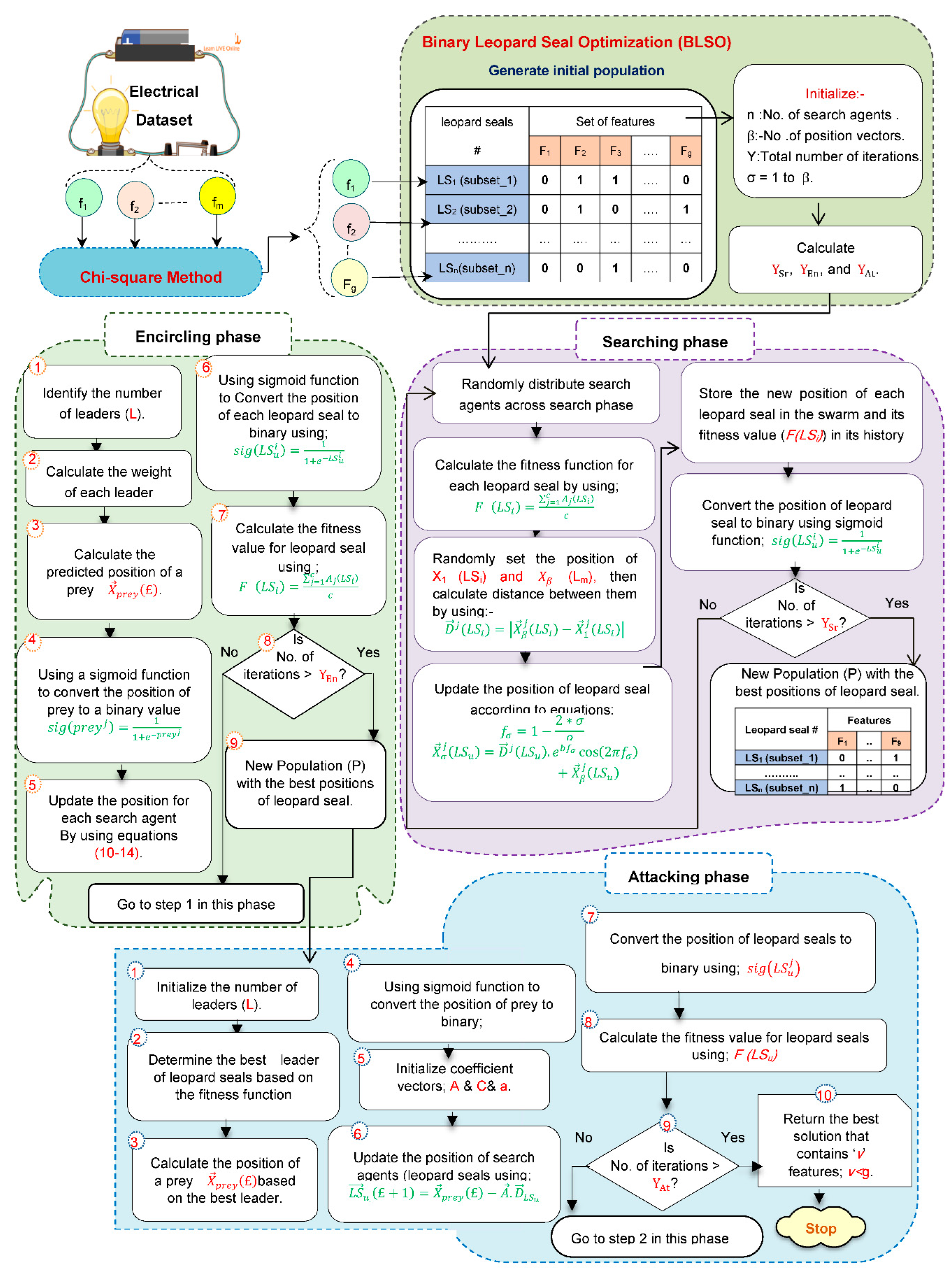

11]. While Chi-square is a fast but inaccurate method, BLSO is an accurate but slow method. Accordingly, ALSO contains both different methods for their cooperation together, so that each of them compensates for the problems of the other to determine the optimal set of features. The implementation of ALSO is represented in many steps as shown in

Figure 2. At first, the collected dataset from smart electrical grids is followed to Chi-square to quickly select a set of informative features. Then, this set of features is followed to BLSO to accurately select the most significant features on electrical load forecasting. BLSO begins with create initial Population (P) including many leopard seals called search agents. It is assumed that P includes ‘n’ Leopard Seals (LS); LS = {LS1, LS2, ……, LSn}. Each LS in P is represented in binary form where zero denotes a useless feature while one denotes a useful feature. After creating search agents in P, these agents will be evaluated using the average accuracy value from ‘c’ classifiers as a fitness function to prove that a set of features selected can enable any classifier to give the best load forecasting. According to ‘c’ classifiers, the fitness function for i

th leopard seal (LSi) can be measured by (1).

$$F(LS_i) = \frac{1}{c}\sum_{j=1}^{c} A_j(LS_i) \qquad (1)$$

Where F(LSi) is the fitness value of the ith leopard seal, c is the number of classifiers used to calculate the fitness value of the features selected in each seal, and Aj(LSi) is the accuracy of the jth classifier based on the features chosen in the ith leopard seal. To illustrate the idea, suppose that there are two seals in P (n=2) and three classifiers (c=3) applied to measure the fitness value of the features selected in every seal, as illustrated in Table 2. In Table 2, the applied classifiers are Support Vector Machine (SVM) [6, 11], K-Nearest Neighbors (KNN) [4, 12], and Naïve Bayes (NB) [4, 5]. According to the accuracy of these classifiers, SVM and NB indicate that the best seal is LS2, while KNN indicates that LS1 is the best seal. Finally, the average accuracy shows that the best seal is LS2. Hence, evaluating seals with a single classifier cannot guarantee a set of features that enables any classifier to give the best results.
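The averaging idea behind the fitness function can be sketched in a few lines. The accuracy values below are hypothetical placeholders chosen to mirror the situation described (they are not the values of Table 2), and `select_features` is an illustrative helper for applying a binary seal as a feature mask.

```python
def select_features(row, mask):
    """Keep the feature values whose bit in the binary seal is 1."""
    return [x for x, bit in zip(row, mask) if bit == 1]

def fitness(accuracies):
    """Average accuracy over the c classifiers, following the description of (1)."""
    return sum(accuracies) / len(accuracies)

# Hypothetical accuracies per seal and classifier (illustrative only).
accuracy = {
    "LS1": {"SVM": 0.80, "KNN": 0.90, "NB": 0.70},
    "LS2": {"SVM": 0.85, "KNN": 0.88, "NB": 0.80},
}

scores = {seal: fitness(list(acc.values())) for seal, acc in accuracy.items()}
best_seal = max(scores, key=scores.get)  # seal with the highest average accuracy
```

With these placeholder numbers, KNN alone would prefer LS1, while the average over the three classifiers prefers LS2, which is the behaviour the fitness function is meant to capture.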

The highest fitness value indicates the best solution (seal), because the essential aim of the selection process is to maximize the average accuracy. It is also necessary to set the number of iterations (Y) and the number of position vectors that each agent takes during its movement in each iteration (β). Accordingly, the numbers of iterations for the three phases, searching, encircling, and attacking, are calculated from Y by (2-4) [2].

Where YSr, YEn, and YAt are the numbers of iterations for the searching, encircling, and attacking phases, respectively. To execute BLSO, the three phases are implemented sequentially based on YSr, YEn, and YAt, as shown in Figure 2. Initially, the steps of the searching phase are executed until the number of iterations (YSr) is reached. While YSr has not been reached, the position vectors of each agent are modified using (5).

Where (5) gives the modified positions of LSu at the jth iteration: the intermediate positions, indexed by {2, 3, 4, …, β-1}, and the last position (the βth position). The distance between the search agent and the prey is computed by (6), b defines the shape of the logarithmic spiral, and the angle scaling factor for each position of the agent is computed by (7).

Where the remaining terms are the modified first position of LSu at the jth iteration and the current position of LSu, and β is the total number of positions. After the positions of the agents are updated, the new positions are converted to binary values using the sigmoid function in (8) [11].

Where (8) yields the binary value of the ith bit of the uth agent for the next iteration, i=1,2,...,f; the sigmoid function is calculated by (9) [10, 11]; and r(0,1) is a random value between 0 and 1.
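The binarization step can be sketched as follows. This is a minimal illustration that assumes the standard logistic sigmoid and a common thresholding rule (a bit becomes 1 when the sigmoid value exceeds a fresh random draw); the exact comparison used in (8) is not shown here, so the direction of the inequality is an assumption, as are the function names.

```python
import math
import random

def sigmoid(x):
    """Standard logistic function with base e."""
    return 1.0 / (1.0 + math.exp(-x))

def binarize(position, rng):
    """Map each continuous position component to a bit.

    Assumed rule: bit = 1 if sigmoid(x) > r, with r drawn uniformly from [0, 1).
    """
    return [1 if sigmoid(x) > rng.random() else 0 for x in position]

rng = random.Random(0)  # seeded only to make the illustration repeatable
bits = binarize([-2.0, 0.0, 3.5, 0.4], rng)
```

Under this rule, large positive components are almost always mapped to 1 (feature kept) and large negative components to 0 (feature dropped), while components near zero are decided roughly at random.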

Where e is the base of the natural logarithm. In P, the new position of each seal is evaluated by (1). While searching for prey, each agent in P keeps its position and fitness values updated. The procedures of the searching phase continue until YSr is reached. Once the searching phase is completed, the best position of each agent in P is assigned based on the maximum evaluation value found during its search for prey. Starting from the best agents in P generated by the searching phase, the steps of the encircling phase are implemented until the number of iterations (YEn) is reached. First, the best leaders are determined based on the evaluation function. Then, the Weighted Leaders Prey Allocation (WLPA) method is used for prey allocation [11]. The leopard seals update their positions depending on the prey’s position using (10-14).

Where (10-14) involve the position of the uth agent at iteration j, the forecasted position of the prey at the jth iteration, and the distance between the uth agent and the prey. Additionally, two random vectors with components in [0,1] are used, the two coefficient vectors are calculated by (12) and (13), and the number of iterations in the encircling phase, YEn, is calculated by (3). A vector that decreases linearly from two to zero over the iterations is computed by (14). In the encircling phase, the new position of a leopard seal lies between its current position and the prey’s position, depending on a coefficient vector that takes random values in [-1,1]. While YEn has not been reached, the leopard seals update their positions based on the prey’s position by (10-14). At the end of the encircling phase, each leopard seal in P assigns its new position by first calculating the distance between itself and the prey using (10). The new position is then calculated using (11) and evaluated using the evaluation (fitness) function to determine the best solutions (leaders). Finally, the sigmoid function in (8) is applied to convert all positions to binary form.
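One way to picture the encircling update is the sketch below. It assumes that (10-14) follow the widely used encircling-prey form found in grey wolf and whale optimization: a distance term, a step toward the prey, two coefficients built from random values in [0,1], and a control value that decreases linearly from two to zero over YEn iterations. This form, the per-component random draws, and all names are assumptions for illustration; the prey position, which WLPA would supply, is simply passed in as an argument.

```python
import random

def encircle_step(agent, prey, j, y_en, rng):
    """One assumed encircling update of an agent toward the prey at iteration j."""
    a = 2.0 - 2.0 * j / y_en          # control value: 2 at j = 0, 0 at j = y_en
    new_position = []
    for x, x_prey in zip(agent, prey):
        r1, r2 = rng.random(), rng.random()   # random values in [0, 1)
        coef_a = 2.0 * a * r1 - a             # lies in [-a, a]
        coef_c = 2.0 * r2                     # lies in [0, 2)
        distance = abs(coef_c * x_prey - x)   # agent-to-prey distance term
        new_position.append(x_prey - coef_a * distance)
    return new_position

rng = random.Random(42)  # seeded only for repeatability
moved = encircle_step([0.2, 0.9], [0.5, 0.5], j=3, y_en=10, rng=rng)
final = encircle_step([0.2, 0.9], [0.5, 0.5], j=10, y_en=10, rng=rng)
```

In this assumed form the step coefficient shrinks with the control value, so the agents are drawn progressively closer to the prey; at the last iteration the coefficient is zero and the agent lands on the prey position. The resulting continuous positions would then be evaluated and converted to binary form as described above.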

The last phase, the attacking phase, receives the final information from the encircling phase. Initially, the best leopard seals (alpha) are determined, and their positions are used to determine the location of the target prey. Then, the positions of the leopard seals are converted to binary using the sigmoid function. While YAt has not been reached, the leopard seals modify their positions and then test them using the fitness function. At the end, the optimal solution is the fittest leopard seal, which contains the best set of features.

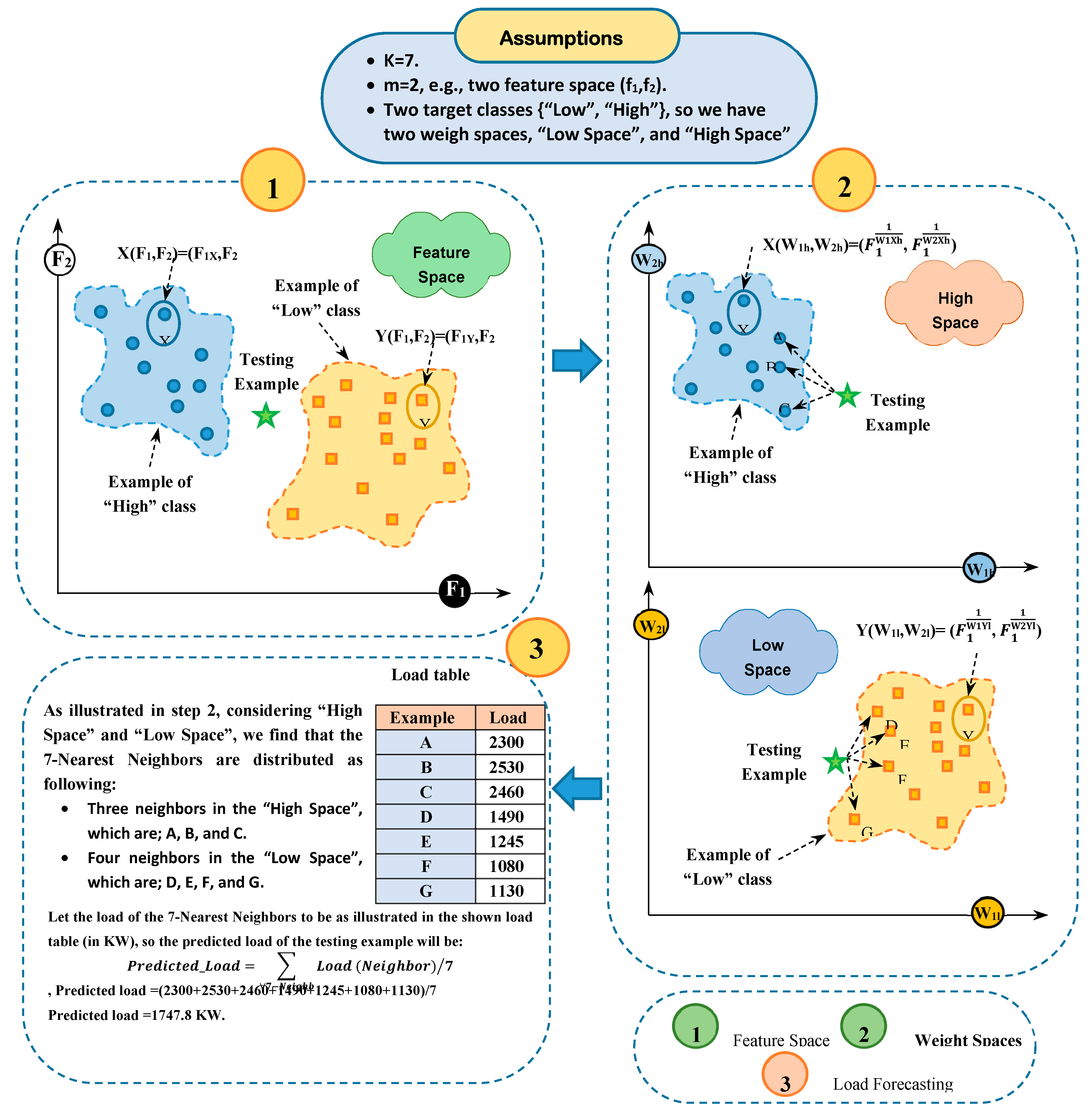

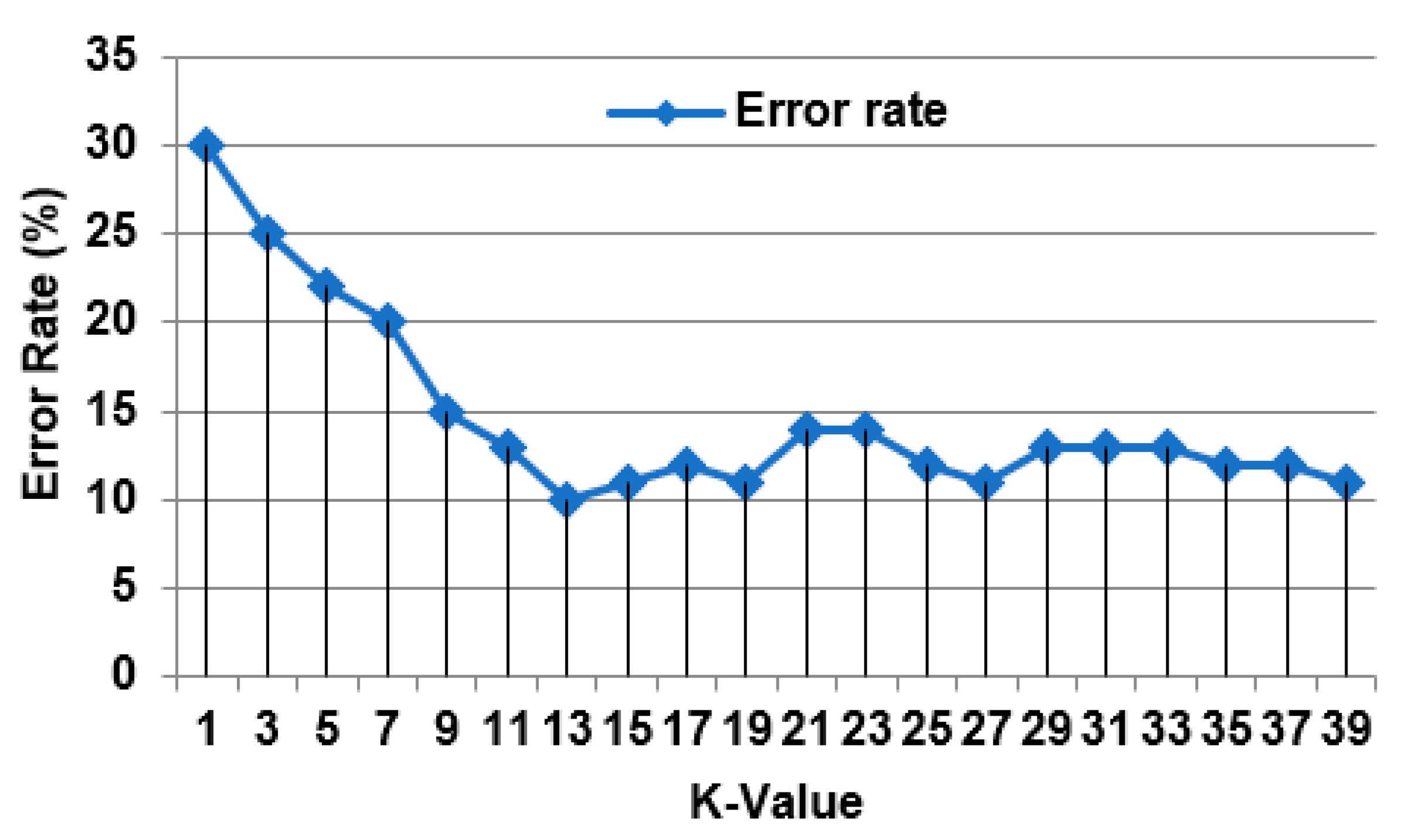

3.3. The Proposed Weighted K-Nearest Neighbor (WKNN) Algorithm

In this section, a new forecasting model called Weighted K-Nearest Neighbor (WKNN) is explained in detail. WKNN consists of two main methods: Naïve Bayes (NB) as a feature weighting method [6] and K-Nearest Neighbor (KNN) as a load forecasting method based on the weighted features [4, 6]. The traditional KNN is a simple and straightforward method that can be easily implemented. However, KNN measures the distance between the features of each testing item and every training item separately, without taking the impact of the features on the class categories into account. Hence, NB is used as a weighting method to measure the impact of the features on the class categories, which converts the feature space into a weight space. KNN is then implemented in the weight space instead of the feature space. In other words, the distance between any testing item and every training item is measured in the weight space using the Euclidean distance [6].

Let a testing item be represented as $E=\{e_1, e_2, \ldots, e_v\}$ and a training item be represented as $Q=\{q_1, q_2, \ldots, q_v\}$, where $e_i$ is the ith feature value of testing item E and $q_i$ is the ith feature value of training item Q; i={1, 2, …, v}. WKNN starts by calculating the Euclidean distance between each testing item in the testing dataset and every training item in the training dataset in the v-dimensional weight space using (15).

$$D_c(E,Q)=\sqrt{\sum_{i=1}^{v}\left(W_c(e_i)-W_c(q_i)\right)^2} \qquad (15)$$

Where $D_c(E,Q)$ is the Euclidean distance between items E and Q at class category c in the weight space, $W_c(e_i)$ is the weight of the ith feature of item E that belongs to class c, and $W_c(q_i)$ is the weight of the ith feature of item Q that belongs to class c; these weights are calculated using (16) and (17).

Where (16) and (17) are expressed, for the ith feature of E and of Q respectively, in terms of the probability that the feature is in items of the class, the probability of occurrence of the class, and the probability of generating the feature given the class. After the distance (D) between each testing item and every training item is calculated using (15), the distances are sorted in ascending order. Then, the k items with the lowest distance values are determined, and the average of their loads is taken as the predicted load value for the testing (new) item using (18).

$$\hat{L}(E)=\frac{1}{k}\sum_{j=1}^{k} L_j \qquad (18)$$

Where $\hat{L}(E)$ is the predicted load of testing item E, $L_j$ is the load of the jth nearest training item, and k is the number of nearest neighbors. Figure 4 presents an illustrative example of implementing the WKNN algorithm.
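The prediction step of WKNN can also be sketched in code. The example assumes that the NB-based feature weights have already been computed for every item, so each item is given directly as a weight vector; the weight values, loads, and function names are hypothetical and serve only to show the distance, sorting, and averaging steps.

```python
import math

def weighted_distance(w_e, w_q):
    """Euclidean distance between two items expressed in the weight space."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(w_e, w_q)))

def wknn_predict(test_weights, train_weights, train_loads, k):
    """Average the loads of the k training items nearest to the testing item."""
    distances = sorted(
        (weighted_distance(test_weights, w_q), load)
        for w_q, load in zip(train_weights, train_loads)
    )
    nearest = distances[:k]  # k smallest distances after ascending sort
    return sum(load for _, load in nearest) / k

# Hypothetical weight vectors (v = 2) and loads for four training items.
train_weights = [[0.10, 0.20], [0.40, 0.40], [0.90, 0.80], [0.15, 0.25]]
train_loads = [100.0, 120.0, 200.0, 110.0]

predicted = wknn_predict([0.12, 0.22], train_weights, train_loads, k=2)
```

Here the two nearest training items are the first and the fourth, so the predicted load is the mean of their loads.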