1. Introduction

Vein detection plays a critical role in the medical field, as numerous surgical procedures rely on accessing the vascular system, necessitating accurate identification and localization of veins within the human body [1,3,4,5,8]. Medical practitioners often find it difficult to precisely locate veins in the human body [3,5]. This issue is particularly prevalent in certain patient populations, including children, individuals with excessive subcutaneous fat, and patients with darker skin tones [1,3,5]. When veins are inadequately visible, medical professionals are compelled to rely on their anatomical knowledge to perform blind sticks during medical procedures. Relying solely on a practitioner’s skills and anatomical knowledge can result in imprecise outcomes [1]. This makes precise vein detection essential in modern medical practice. Failed venipuncture attempts can lead to complications such as vein thrombosis [6], hematoma, or nerve injuries, potentially causing conditions like “causalgia” or complex regional pain syndrome (CRPS) [4,7]. Moreover, accurate vein detection is vital for studying and managing cancer, as it provides valuable insights into the anatomical relationship between arteries and veins in tumours [2,8,9].

Improving vein detection methods can significantly enhance patient care and treatment outcomes. A variety of devices have been developed to aid healthcare workers in locating the subcutaneous veins of patients for delivering intravenous or surgical treatments. These devices utilize different techniques such as trans-illumination, photo-acoustic, ultrasound, and Near-Infrared (NIR) imaging to aid in visualizing non-visible veins of patients. Each technique possesses distinct advantages and drawbacks, but NIR imaging has emerged as particularly suitable for vein localization during intravenous treatments [1,10,11,12]. By employing non-ionizing light rays, NIR imaging can penetrate deep within skin tissues to acquire clear images of the venous structure. However, despite the advancements in NIR imaging, challenges persist in accurately and reliably detecting veins, especially in complex surgical scenarios. To address this gap, Hyper-Spectral Imaging (HSI) offers a promising solution.

HSI captures spectral radiation across the visible to near-infrared electromagnetic spectrum, generating distinct images for each spectral band. It captures hundreds of continuous spectral bands, forming a datacube often referred to as a hypercube. This comprehensive data representation enables the acquisition of detailed information beyond what the human eye can perceive, providing valuable insights for various applications, e.g., agriculture, environmental monitoring, geology and mineral exploration, and medical imaging [13,14]. Widely explored in remote sensing applications, HSI offers a powerful tool for analysing and interpreting complex data from a diverse range of sources. Despite its successes in the medical field, HSI for human vein detection is yet to be investigated. The capability of HSI to capture rich spectral information may provide reliable data for vein detection, enabling precise vein localization during surgeries and other medical procedures.

HSI data can be quite large, which makes it difficult to manage and computationally demanding to process. These factors can degrade vein detection performance and, in turn, overall classification accuracy. Dimensionality reduction techniques are therefore commonly employed to reduce the complexity of the data. The selection of the most appropriate dimensionality reduction technique depends on the specific application’s requirements and the technique’s performance in accurately preserving essential vein detection features while reducing data complexity. For this research, three dimensionality reduction techniques that have previously been successfully used in hyperspectral (HS) data analysis, namely Principal Component Analysis (PCA), Folded Principal Component Analysis (FPCA), and Ward’s Linkage Strategy using Mutual Information (WaLuMI), were chosen for experimentation.

HSI has made significant contributions to the medical field, with a diverse range of applications. One such application involves the calculation of tissue oxygen saturation [15], offering valuable insights into oxygen levels within tissues. It has also been effectively employed to monitor relative spatial changes in retinal oxygen saturation [16], providing detailed observations of oxygen variations in the retinal region. Additionally, this imaging technique has been used to determine the optimum range of illumination for venous imaging systems [1].

This paper contributes to the field by investigating the effectiveness of PCA, FPCA, and WaLuMI in conjunction with the Support Vector Machine (SVM) binary classifier for vein detection using HS images of human hands. An HS image dataset of the left and right hands of 100 subjects was created and labeled to map out the veins and the remaining area of the hand. This dataset covers a wide range of skin tones from diverse ethnicities. The annotated HS image dataset allows evaluation of the performance of each of the dimensionality reduction methods in the context of real-world vein detection tasks. By leveraging these dimensionality reduction techniques, salient features are extracted from the HS data, enabling the vein detection algorithm to identify vein patterns accurately.

The rest of this paper is organized as follows: Section 2 briefly introduces the PCA technique, Section 3 briefly explains the FPCA technique, and Section 4 briefly presents the WaLuMI technique. Section 5 describes the data acquisition setup. Section 6 discusses the vein detection steps and Section 7 provides insights into the experiments conducted and the results obtained. Finally, Section 8 draws the conclusion.

2. PCA for HS Images

Principal Component Analysis (PCA) [17,18,19] is a widely used statistical technique for dimensionality reduction and data exploration in various fields [20]. It enables the analysis of complex datasets by transforming them into a new set of uncorrelated variables called principal components [21]. These components capture the maximum variance in the data, allowing for a simplified representation without significant loss of information. PCA has proven to be particularly valuable in numerous applications, including image processing, pattern recognition, and feature extraction.

In HSI, PCA has been successfully utilized for dimensionality reduction [19,22,23]. PCA’s ability to capture essential spectral variations and effectively reduce the dimensionality of HS data has led to its widespread adoption in this domain. HS images contain rich spectral information captured within a wide range of spectral bands. PCA aims to transform the original high-dimensional HS data into a new set of orthogonal axes called principal components. These components are ordered by the amount of variance they capture, with the first component capturing the highest variance, the second component capturing the second highest variance, and so on.

Mathematically, given an HS data matrix X, where each row corresponds to a pixel and each column corresponds to a spectral band, PCA can be applied for data reduction and feature extraction of HS image data as follows:

Mean-Centering: Subtract the mean of each band from the corresponding column of X to center the data.

Covariance Matrix: Calculate the covariance matrix by $C = \frac{1}{n} X^{T} X$, where X is the mean-centered data matrix and n is the number of samples (pixels).

Eigen Decomposition: Compute the eigenvectors and eigenvalues of the covariance matrix C. The eigenvectors form the principal components, and the eigenvalues represent the amount of variance captured by each component.

Data Projection: Select the top k eigenvectors corresponding to the k highest eigenvalues to form a projection matrix P. Multiply the mean-centered data matrix X by P to obtain the lower-dimensional representation $Y = XP$.
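The four steps above can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions: the function name `pca_reduce`, the random test data, and the choice of `1/n` normalization are not taken from the paper, whose experiments were run in MATLAB.

```python
import numpy as np

def pca_reduce(X, k):
    """Project pixel spectra X (n_pixels x n_bands) onto the top-k principal components."""
    Xc = X - X.mean(axis=0)                 # mean-centre each spectral band
    C = (Xc.T @ Xc) / Xc.shape[0]           # band covariance (1/n is an assumption)
    eigvals, eigvecs = np.linalg.eigh(C)    # C is symmetric; eigenvalues come back ascending
    order = np.argsort(eigvals)[::-1][:k]   # indices of the k largest eigenvalues
    P = eigvecs[:, order]                   # projection matrix (n_bands x k)
    return Xc @ P, eigvals[order]

# Hypothetical data: 500 pixels with 20 bands each
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 20))
Y, variances = pca_reduce(X, k=3)
```

Using `1/(n-1)` instead of `1/n` would rescale the eigenvalues but leave the eigenvectors, and therefore the projection, unchanged.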

3. FPCA for HS Images

Folded Principal Component Analysis (FPCA) [24] is an extension of PCA that takes into account the spatial information inherent in HS images. Unlike traditional PCA, which treats each pixel independently, FPCA considers the correlation between neighboring pixels. It leverages the interplay between spectral and spatial information to enhance dimensionality reduction and feature extraction.

In FPCA, the fundamental idea is to convert each spectral vector into a matrix format, enabling the direct calculation of a partial covariance matrix. This matrix is then accumulated for eigen-decomposition and data projection, effectively incorporating spatial relationships into the analysis.

FPCA can be implemented on HS data with important parameters H (fold size) and W (number of spectral bands in each segment) as follows:

Matrix Transformation: For each pixel’s spectral vector, a matrix is constructed where each row contains a segment of W spectral bands. The entire spectral signature, represented by F bands, is divided into H segments. This transformation allows for capturing spectral-spatial interactions within a local context.

Partial Covariance Matrix: A partial covariance matrix is computed directly from these segmented matrices. This matrix reflects the interactions between different spectral bands within each segment, encapsulating both spectral and spatial information.

Eigen Decomposition and Projection: The accumulated partial covariance matrices are subjected to eigen decomposition. The resulting eigenvectors represent directions of maximum variance within the folded spectral-spatial data. By selecting the top k eigenvectors associated with the largest eigenvalues, a projection matrix is formed.

When $H = 1$, FPCA simplifies to conventional PCA, treating each pixel’s spectral vector individually. As H increases, spatial context is increasingly incorporated. A larger H enables capturing broader spatial interactions but increases computational complexity. FPCA has previously been successfully applied in HSI for efficient dimensionality reduction and feature extraction [23,24,25].
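A minimal NumPy sketch of the folding procedure is given below, assuming the number of bands F is an exact multiple of H so that each spectral vector folds into an H x W matrix. The function name `fpca_reduce`, the test data, and the `1/n` normalization are illustrative assumptions rather than the authors' implementation.

```python
import numpy as np

def fpca_reduce(X, H, k):
    """Folded PCA sketch: X is (n_pixels x F); each spectrum is folded into H rows of W bands."""
    n, F = X.shape
    if F % H != 0:
        raise ValueError("F must be divisible by H for this sketch")
    W = F // H
    Xc = X - X.mean(axis=0)                    # mean-centre each band
    A = Xc.reshape(n, H, W)                    # fold: row h holds the h-th segment of W bands
    C = np.einsum("nhw,nhv->wv", A, A) / n     # accumulate the W x W partial covariances
    eigvals, eigvecs = np.linalg.eigh(C)
    P = eigvecs[:, np.argsort(eigvals)[::-1][:k]]   # top-k eigenvectors (W x k)
    return (A @ P).reshape(n, H * k)           # H*k features per pixel

# Hypothetical data: 200 pixels, 12 bands, folded into H = 3 segments of W = 4 bands
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 12))
Z = fpca_reduce(X, H=3, k=2)
```

With `H=1` the folded matrix has a single row, so the accumulated matrix is the ordinary band covariance and the output matches conventional PCA up to the sign of each component.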

4. WaLuMI for HS Images

Ward’s Linkage Strategy using Mutual Information (WaLuMI) [26] is a technique that combines hierarchical clustering using Ward’s linkage method [27] with mutual information as a similarity measure for HS image analysis. Hierarchical clustering groups pixels based on their similarity, creating a dendrogram that represents the hierarchy of pixel associations. Mutual information is used as the criterion to measure the similarity between pixels. By utilizing mutual information and hierarchical clustering, WaLuMI considers both spectral and spatial information to discard redundant information in HS data, leading to efficient data reduction in HS images. WaLuMI can be implemented on HS data as follows:

Mutual Information Calculation: Compute the mutual information between spectral vectors of pixels. Mutual information measures the amount of information shared between two variables, indicating how much knowing one variable reduces uncertainty about the other. Let I be the input hyperspectral image and X be the vectorized spectral data. Each entry of the mutual information matrix is computed for a pair of vectors $A$ and $B$ taken from X, following the standard definition $MI(A, B) = \sum_{a}\sum_{b} p(a, b)\,\log\frac{p(a, b)}{p(a)\,p(b)}$, where $p(a, b)$ is the joint probability distribution of $A$ and $B$, and $p(a)$ and $p(b)$ are the corresponding marginal distributions.

Ward’s Linkage: Use the mutual information values to perform hierarchical clustering using Ward’s linkage strategy. This strategy merges clusters that minimize the increase in the sum of squared differences within clusters.

Dendrogram Creation: As the algorithm progresses, a dendrogram is formed, representing the hierarchical structure of pixel groupings.
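The mutual information step can be illustrated with a histogram-based estimate. The sketch below covers only that first step; the helper names, the bin count of 16, and the synthetic vectors are assumptions made for illustration and are not taken from the WaLuMI reference implementation.

```python
import numpy as np

def mutual_information(a, b, bins=16):
    """Histogram estimate of the mutual information (in nats) between two 1-D vectors."""
    joint, _, _ = np.histogram2d(a, b, bins=bins)
    p_ab = joint / joint.sum()                 # joint probability table
    p_a = p_ab.sum(axis=1, keepdims=True)      # marginal of a (bins x 1)
    p_b = p_ab.sum(axis=0, keepdims=True)      # marginal of b (1 x bins)
    nz = p_ab > 0                              # skip empty cells to avoid log(0)
    return float(np.sum(p_ab[nz] * np.log(p_ab[nz] / (p_a @ p_b)[nz])))

def mi_matrix(V, bins=16):
    """Pairwise mutual information between the rows of V."""
    m = V.shape[0]
    M = np.zeros((m, m))
    for i in range(m):
        for j in range(i, m):
            M[i, j] = M[j, i] = mutual_information(V[i], V[j], bins)
    return M

# Hypothetical vectors: row 1 is independent of row 0, row 2 is a noisy copy of row 0
rng = np.random.default_rng(0)
base = rng.normal(size=1000)
V = np.vstack([base, rng.normal(size=1000), base + 0.1 * rng.normal(size=1000)])
M = mi_matrix(V)
```

The resulting matrix could then be converted into a dissimilarity and passed to a Ward-linkage routine, for example SciPy's `scipy.cluster.hierarchy.linkage` with `method="ward"`, to build the dendrogram.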

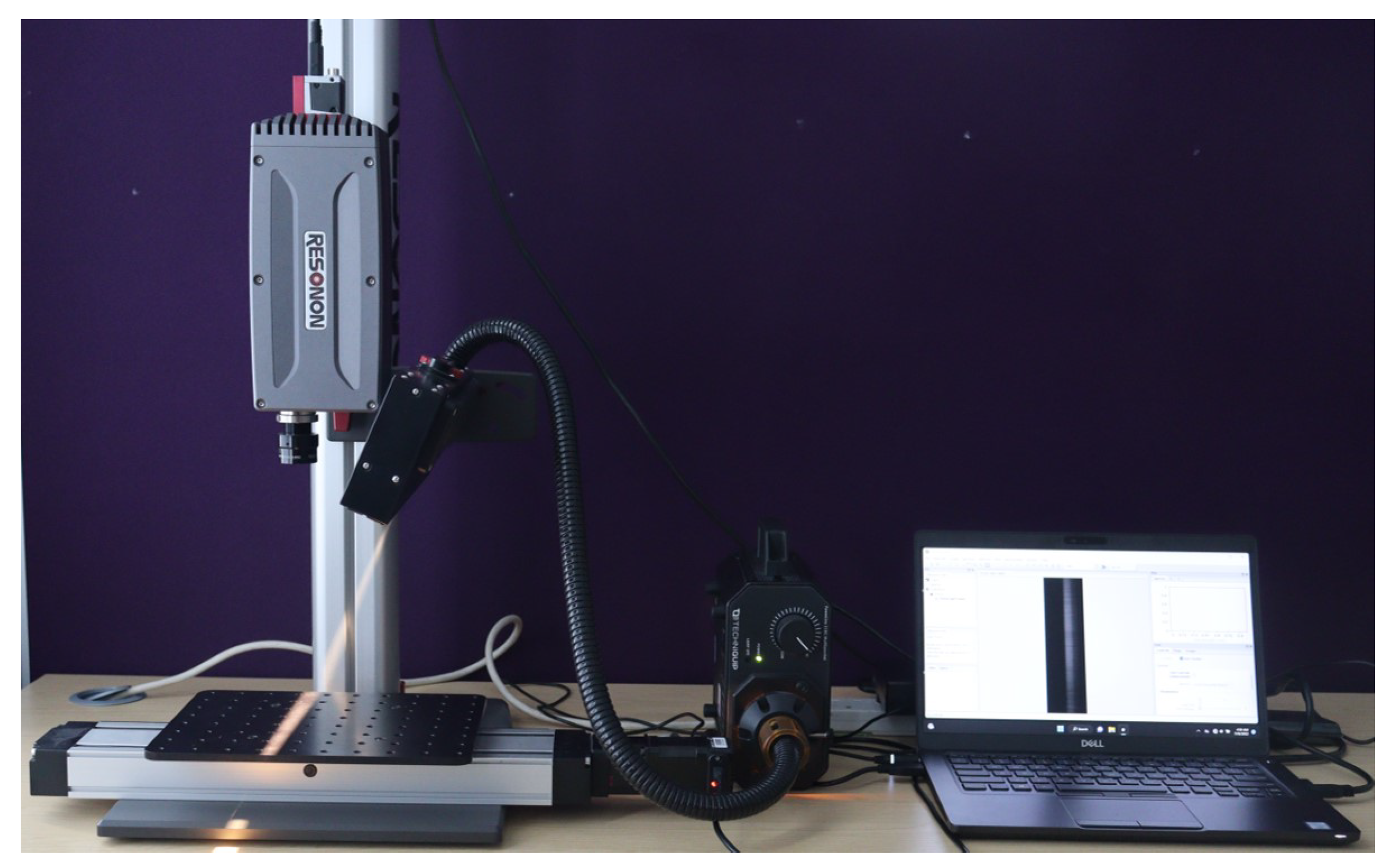

5. Data Acquisition Setup

To capture the Hyper Spectral (HS) image data, the benchtop HSI system manufactured by Resonon Inc. was used. Resonon’s benchtop HSI system comprises a Pika XC2 HSI camera, objective lens, linear translation stage, mounting tower, halogen line light with stabilized power supply, a calibration tile, and a system with Spectronon software pre-loaded. The Pika XC2 camera has a spectral range of 400–1000 nm (nanometers), a spectral resolution of 1.9 nm, 447 spectral channels, 1600 spatial pixels, and a spectral bandwidth of 1.3 nm. Every pixel within the HS image contains a series of reflectance values across various spectral wavelengths, revealing the spectral signature of that particular pixel.

Figure 1 illustrates a schematic depiction of a sample captured HS image.

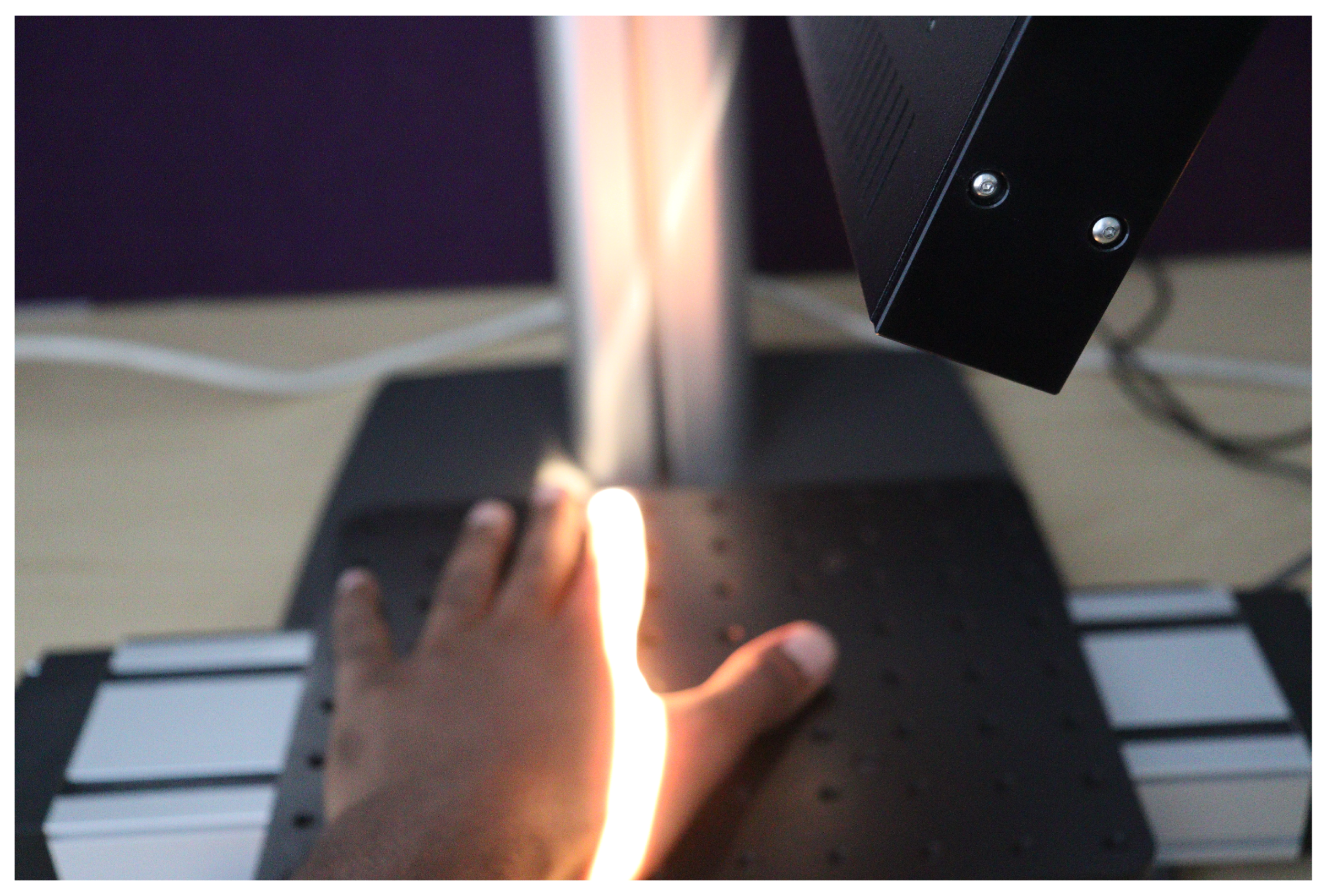

To set up the HS data acquisition system, the camera is mounted on the tower directly above the motorized linear translation stage. The lighting assembly is positioned and secured on the tower to illuminate in the direction of the stage baseplate from above. A halogen line light, as the light source, provides stabilized broad-band illumination on the human hand to be captured. To optimize the setup and improve data acquisition capabilities, the camera and lighting were carefully adjusted along the length of the tower.

Figure 2 shows the HS image data acquisition setup.

To initiate data capturing, the camera underwent calibration to ensure precise measurements. Throughout the data acquisition process, a consistent distance was maintained between the camera lens and the stage baseplate. During data acquisition, the linear translation stage moves, causing the hand to be translated beneath the camera. The HS camera utilizes the push-broom technique for imaging, in which the object is scanned line by line: each exposure records a single spatial line of the scene, with the light from that line dispersed across the sensor so that all spectral bands are captured at once. As the linear translation stage moves along the scanning direction, the camera sequentially captures HS information from different parts of the object. This allows the construction of spectral intensity images for each wavelength, resulting in a comprehensive HS image set.

Figure 3 shows a human hand being captured.

The Spectronon software facilitates the visualization of the captured HS images and enables a comprehensive suite of intuitive hypercube analysis functionalities. Additionally, it offers control over the linear translation stage, allowing precise manipulation of the stage position for enhanced data acquisition. The hypercubes are captured in Band-Interleaved-by-Line (BIL) format, accompanied by the generation of a corresponding HeaDeR File (HDR) for each completed capture. The HDR file contains essential metadata that describes various aspects of the captured data.
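To illustrate the BIL layout, the following sketch reads a raw BIL file into a hypercube with NumPy. It assumes the number of lines, samples, and bands and the data type have already been taken from the accompanying HDR file, and it ignores any header offset or byte-order setting. The function name `read_bil` and the tiny demo cube are hypothetical.

```python
import os
import tempfile
import numpy as np

def read_bil(path, lines, samples, bands, dtype):
    """Read a raw Band-Interleaved-by-Line file into a (lines, samples, bands) array."""
    raw = np.fromfile(path, dtype=dtype)
    cube = raw.reshape(lines, bands, samples)  # BIL: each scan line stores all of its bands in turn
    return cube.transpose(0, 2, 1)             # reorder to (lines, samples, bands)

# Round-trip demo on a tiny synthetic cube (2 lines, 3 samples, 4 bands)
cube = np.arange(2 * 3 * 4, dtype=np.uint16).reshape(2, 3, 4)
with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "demo.bil")
    np.ascontiguousarray(cube.transpose(0, 2, 1)).tofile(path)  # write in BIL order
    loaded = read_bil(path, lines=2, samples=3, bands=4, dtype=np.uint16)
```

After loading, `loaded[r, c, :]` is the spectral signature of the pixel at row `r` and column `c`.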

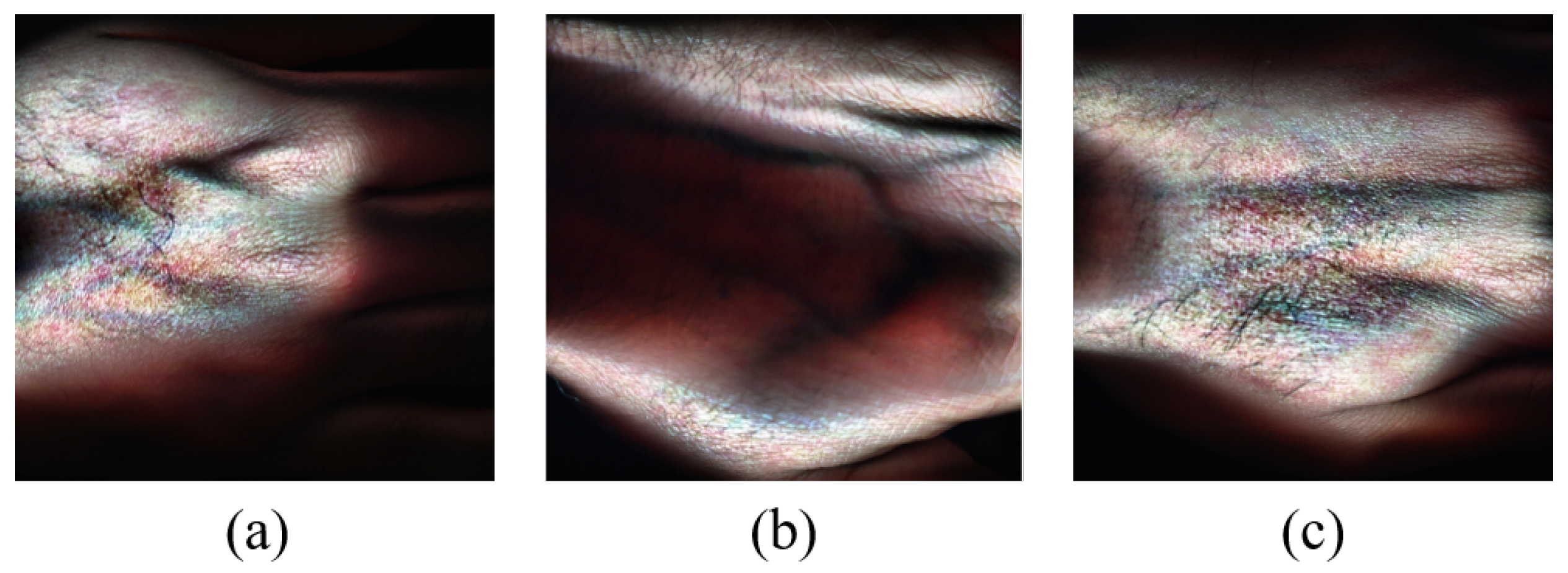

5.1. HS Image Dataset

The capturing process produced an HS image dataset comprising the left- and right-hand images of 100 participants, yielding a collection of 200 images. The volunteer participants are a diverse group of individuals from various regions and countries, including Asia, Africa, America, Britain, and Malaysia. The dataset encompasses individuals spanning different age groups and exhibiting distinct skin tones, representing a broad range of ethnicities for the experiments. To characterize the dataset, skin tone distribution is categorized according to the Fitzpatrick Scale [29]. The Fitzpatrick Scale was developed by dermatologist Thomas B. Fitzpatrick to classify skin color and response to ultraviolet radiation [30]. This scale serves diverse applications, including assessing skin cancer risk, guiding aestheticians in determining optimal laser treatment parameters for procedures like hair removal or scar treatment, and evaluating the potential for premature skin aging due to sun exposure. The Fitzpatrick Scale classifies skin tones into Types I to VI, representing a range from the lightest to darkest. For reference, Type I corresponds to very light or pale skin, while Type VI represents very dark or deeply pigmented skin.

Figure 4 shows the Fitzpatrick Scale. The statistical summaries are presented in Table 1 and Table 2.

Table 1 outlines essential statistics regarding the dataset composition. Notably, the dataset consists of 200 hand images, representing both the left and right hands of the 100 participants. The gender distribution reveals that 76% are male, and 24% are female. Ethnicity distribution shows a diverse representation, with 32% African, 59% Asian, and 9% European participants. Age distribution spans multiple categories, with the largest share falling within the 26-30 age group (28%). Furthermore, the most common skin tones in the dataset are Type III (Medium) and Type IV (Olive), accounting for 21% and 22%, respectively.

Table 2 provides a detailed breakdown of skin tone distribution within each ethnic group, adhering to the Fitzpatrick Scale. Noteworthy findings include the prevalence of Type IV (Olive) and Type V (Brown) skin tones among African participants, constituting 8% and 13% of the group, respectively. In the European group, the majority exhibit Type I (Light) and Type II (White) skin tones, accounting for 6% and 2%, respectively. Asian participants exhibit a more balanced distribution across various skin tones, with Type II (White) and Type III (Medium) being the most prevalent.

Figure 5 shows RGB image representations of some HS images from the dataset generated for visualization purposes using the hypercubes. This was achieved for each of the showcased HS images by selecting three specific channels from their hypercube and mapping them to the red, green, and blue channels of the RGB image. The captured hand images have spatial dimensions of

pixels and a spectral dimension of 462 bands. Some samples of the captured skin tones are shown in

Figure 6.
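The channel-to-RGB mapping described above can be sketched as follows. The band indices used here are placeholders, since the text does not state which three channels were selected, and the per-channel min-max scaling is an assumption made for display purposes.

```python
import numpy as np

def to_rgb(cube, r, g, b):
    """Build a display RGB image from three bands of a (rows, cols, bands) hypercube."""
    rgb = cube[:, :, [r, g, b]].astype(float)
    lo = rgb.min(axis=(0, 1), keepdims=True)
    hi = rgb.max(axis=(0, 1), keepdims=True)
    return (rgb - lo) / np.maximum(hi - lo, 1e-12)   # scale each channel to [0, 1]

# Hypothetical 4 x 5 pixel cube with 10 bands; indices 7, 4, 1 are illustrative only
rng = np.random.default_rng(0)
cube = rng.random((4, 5, 10))
rgb = to_rgb(cube, r=7, g=4, b=1)
```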

5.2. Preprocessing

The experimentations and data processing were conducted using MATLAB, a widely used software tool for scientific computing and data analysis, due to its comprehensive functionality and flexibility. The Region Of Interest (ROI) within the images has dimensions of

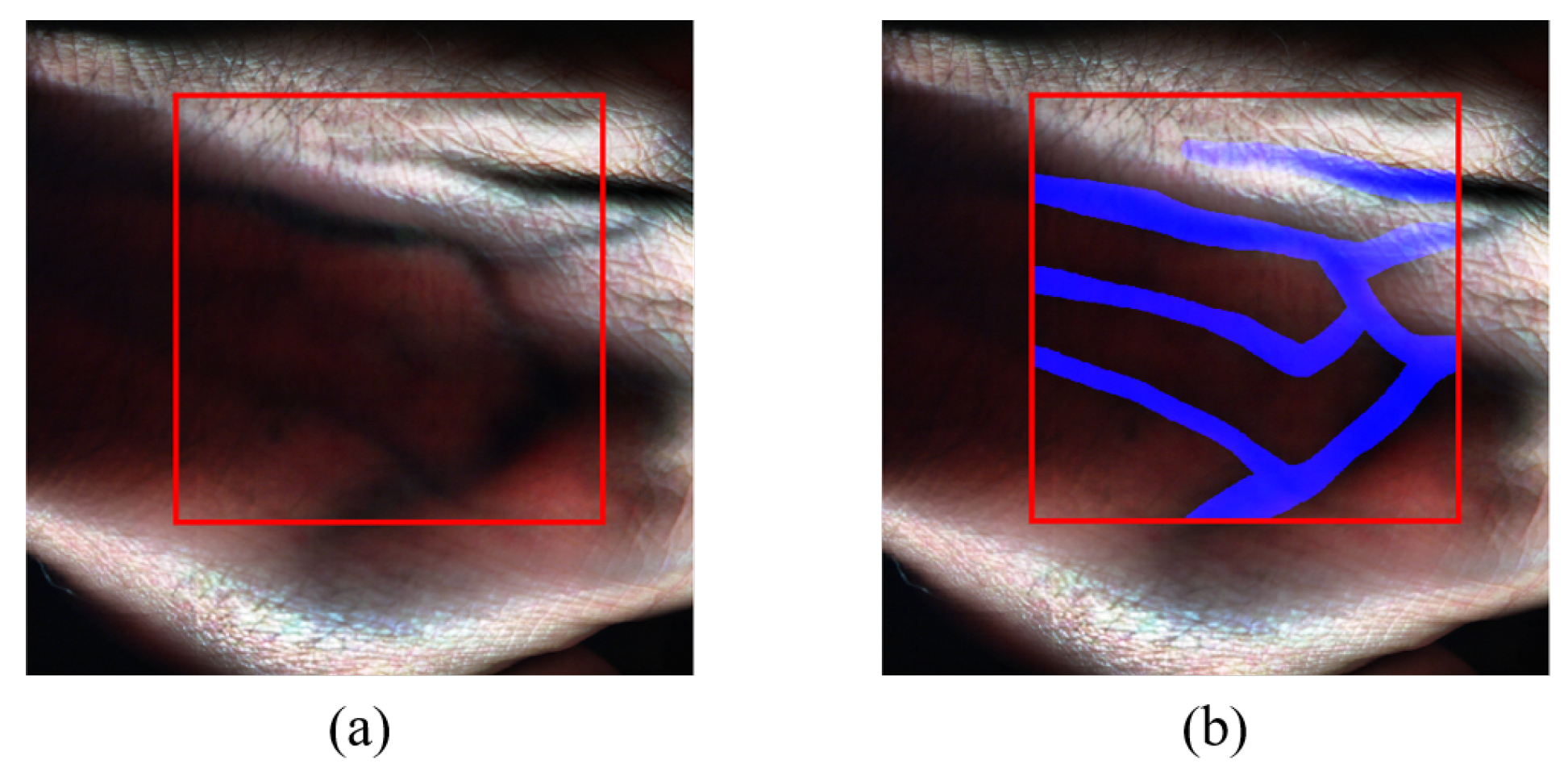

pixels. The ROI was carefully selected to encompass the essential spectral information relevant to the research objectives. The HS images were cropped to the size of the ROI. An estimated RGB image representation for each of the images’ ROI is then generated, which can be used for manual annotation.

Figure 7a showcases the delineated ROI.

5.3. Data Annotation

To facilitate subsequent vein detection analysis, each HS hand image in the dataset was manually annotated to highlight the veins present in the hand. This produced a ground truth image for each captured HS image to serve as reference labels. The annotation was carried out with the guidance of a medical expert and with reference to anatomical data to determine the vein locations in the images. The ground truth is a binary image in which ones represent the vein locations and zeros represent the rest.

Figure 7b depicts the annotated RGB representation of the sample HS image data, with veins highlighted in blue.

6. Vein Detection Methodology

In this section, the methodology applied for the detection of veins in HS images is outlined, encompassing data pre-processing, dimensionality reduction, training and testing set separation, classification, performance assessment metrics, and visual representation of the classification outcome.

6.1. Dataset Preparation

60% of the HS images within the dataset were randomly selected and used for training purposes and the remaining images were used for testing and evaluation. This division allowed for the construction and assessment of the algorithm’s performance on unseen data.

To reduce the computation time during processing while retaining essential information, the ROIs of the images were cropped further, and their ground truth images were cropped correspondingly.

6.2. Dimensionality Reduction

The experiment involved three dimensionality reduction techniques namely PCA, FPCA, and WaLuMI. The selection of these dimensionality reduction techniques for the experimentation was driven by their distinct characteristics and potential benefits in the context of HS data analysis. These techniques have demonstrated effectiveness in related fields and show promise for exploring their applicability in HS studies. Each of these techniques was employed in a separate experiment. Initially, the HS images underwent dimensionality reduction using conventional PCA, after which a comprehensive analysis of the classification results was conducted.

Subsequently, the procedure was iterated by employing FPCA for dimensionality reduction, followed by a replication of the same process utilizing the WaLuMI technique. This systematic approach facilitated a thorough and comparative evaluation of how different dimensionality reduction techniques, namely PCA, FPCA, and WaLuMI, influenced the performance of vein detection.

6.3. Training and Testing Set Separation

The classifier was trained using 60% of the images of the HS image dataset and the rest of the images were used for testing to evaluate the classification performance for each technique. The training images were concatenated vertically to form the training data.
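A minimal sketch of the 60/40 split and the vertical concatenation of training pixels is shown below. The function name, the fixed seed, and the small synthetic cubes are assumptions for illustration only.

```python
import numpy as np

def split_and_stack(cubes, masks, train_fraction=0.6, seed=0):
    """Randomly split images into train/test and stack training pixels row-wise."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(cubes))
    n_train = int(round(train_fraction * len(cubes)))
    train_idx, test_idx = order[:n_train], order[n_train:]
    # Each image contributes rows*cols pixels; every pixel is one row of features
    X_train = np.vstack([cubes[i].reshape(-1, cubes[i].shape[-1]) for i in train_idx])
    y_train = np.concatenate([masks[i].ravel() for i in train_idx])
    return X_train, y_train, train_idx, test_idx

# Hypothetical dataset: 10 images of 4 x 5 pixels with 6 features each, plus binary masks
rng = np.random.default_rng(1)
cubes = [rng.random((4, 5, 6)) for _ in range(10)]
masks = [rng.integers(0, 2, size=(4, 5)) for _ in range(10)]
X_train, y_train, train_idx, test_idx = split_and_stack(cubes, masks)
```

Splitting at the image level, rather than the pixel level, keeps all pixels of a test hand unseen during training.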

6.4. Classification: Support Vector Machine

Support Vector Machine (SVM) [32] is widely used for classifying large data or handling noisy samples [8,38,39]. SVM has recently become a prominent method for HS image classification, gaining significant attention in the field [8,31,33,34]. Its popularity stems from its ability to find optimal decision boundaries that maximize the separation between different classes, even in complex data distributions [31]. By doing so, SVMs can effectively handle high-dimensional data and offer robust classification performance. Its versatility and strong theoretical foundation have made SVM a valuable tool in various fields, including biomedical applications [8,35], pattern recognition [36], and data analysis [33,37].

Due to SVM’s successes in HSI applications, it was chosen to classify the HS data. The input for SVM classification consisted of the training data and its ground truth. Following training of the SVM classifier, it was applied to the testing images to predict the class labels of the test samples. By evaluating the classifier’s performance on unseen data, the effectiveness of the classification approach could be assessed. Throughout the SVM training phase, integration of a linear kernel function aided the classification process, resulting in a significant enhancement of vein detection performance in the experiment.
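For readers who want to see what a linear-kernel SVM optimizes, the sketch below trains one by subgradient descent on the L2-regularized hinge loss. This is a stand-in chosen to keep the example dependency-free; it is not the solver used in the experiments, and the hyperparameters (`lam`, `epochs`, `lr`) and synthetic data are illustrative assumptions.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, epochs=200, lr=0.1):
    """Linear SVM via subgradient descent on the regularized hinge loss; y holds 0/1 labels."""
    t = np.where(y == 1, 1.0, -1.0)            # map labels to -1 / +1
    w = np.zeros(X.shape[1])
    b = 0.0
    n = len(t)
    for _ in range(epochs):
        margins = t * (X @ w + b)
        active = margins < 1                   # samples violating the margin
        grad_w = lam * w - (t[active][:, None] * X[active]).sum(axis=0) / n
        grad_b = -t[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict(X, w, b):
    """Return 1 (vein) where the decision value is positive, else 0 (non-vein)."""
    return (X @ w + b > 0).astype(int)

# Hypothetical two-class data standing in for reduced pixel features
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2, 0.5, (100, 2)), rng.normal(2, 0.5, (100, 2))])
y = np.array([0] * 100 + [1] * 100)
w, b = train_linear_svm(X, y)
accuracy = float(np.mean(predict(X, w, b) == y))
```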

6.5. Performance Assessment Metrics

Following the classification stage, the classification performance was evaluated to assess the effectiveness of each dimensionality reduction technique combined with SVM classification. The evaluation metrics included accuracy, precision, recall, and the confusion matrix. These metrics were computed with respect to the ground truth labels to determine how well each technique discriminates between vein and non-vein pixels in the HS hand images.
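The metrics named above all follow from the four confusion-matrix counts. A short sketch with a made-up label vector is given below; the function name and the example labels are illustrative, and the sketch does not guard against empty classes (zero denominators).

```python
import numpy as np

def binary_metrics(y_true, y_pred):
    """Confusion-matrix counts and derived metrics for vein (1) vs non-vein (0) labels."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_pred).astype(bool)
    tp = int(np.sum(y_true & y_pred))      # vein predicted as vein
    tn = int(np.sum(~y_true & ~y_pred))    # non-vein predicted as non-vein
    fp = int(np.sum(~y_true & y_pred))     # non-vein predicted as vein
    fn = int(np.sum(y_true & ~y_pred))     # vein predicted as non-vein
    return {
        "confusion": [[tn, fp], [fn, tp]],
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "fpr": fp / (fp + tn),
        "fnr": fn / (fn + tp),
    }

# Made-up labels: 3 vein pixels and 5 non-vein pixels
m = binary_metrics([1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 1, 0, 0, 0, 0])
```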

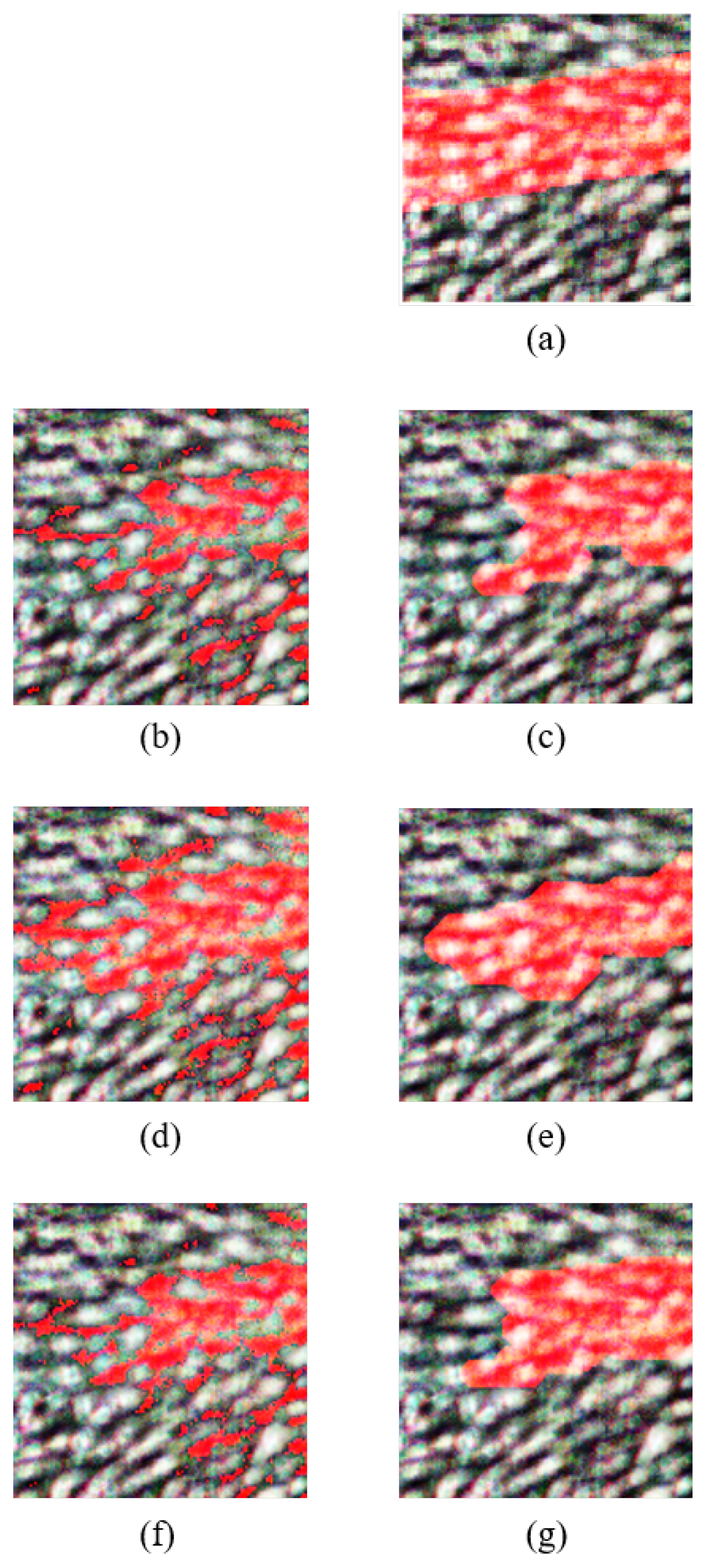

6.6. Visual Representation of Classification Result

For improved clarity and comprehensibility of the classification outcomes using PCA, FPCA, and WaLuMI techniques, a systematic approach was employed. The initial step involved a thorough analysis by varying the number of spectral bands to assess their impact on classification accuracy. This preliminary step was crucial in determining the optimal number of bands that would yield the most accurate results.

Subsequently, with the optimal number of bands identified, a visual representation of the classification result at the optimum was generated. This visual representation enhances the comprehension of classifier performance and effectiveness.

This process can be divided into two key steps:

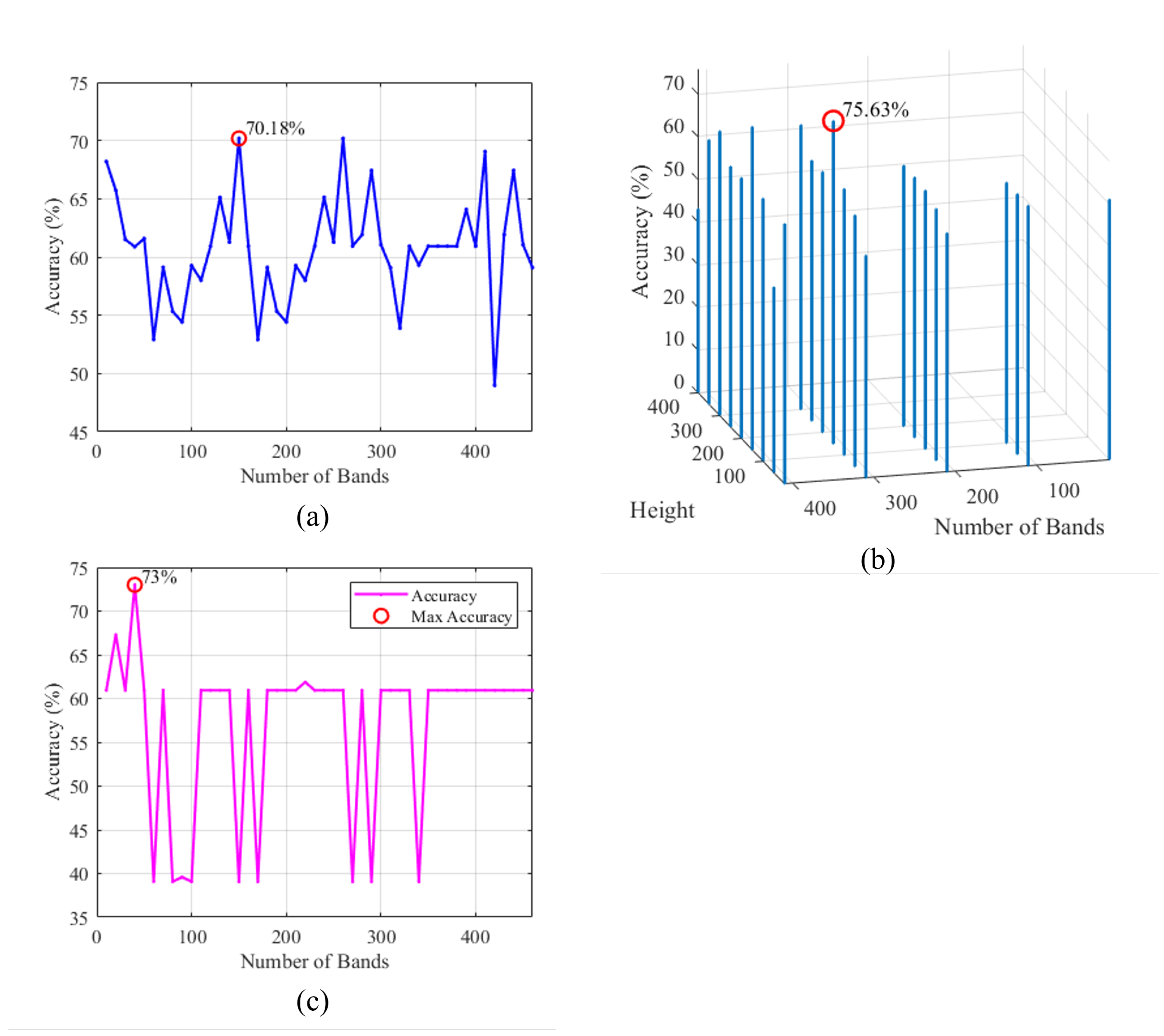

Assessing Optimal Number of Bands: The classification accuracies were plotted against the varying number of bands as shown in Figure 8. This step allowed the identification of the point at which the classifier achieved its highest accuracy. The chosen number of bands at this point was regarded as the optimal configuration for subsequent analysis.

Visual Classification Outcome: With the optimal number of bands established, a visual representation of the classification outcome at the optimum was created. The produced image facilitates visual comparison with the ground truth. This provides insights into the performance of the classifier by illustrating the veins identified in the tested HS image, see Figure 9 (further information about this figure is given in Section 7).

7. Experimental Results and Evaluation

In this section, the results obtained from applying PCA, FPCA, and WaLuMI dimensionality reduction techniques are presented. The objective was to assess the effectiveness of these techniques and their performance in the context of vein detection using HS data.

7.1. Experiments and Results

To determine the optimal operating points of the three dimensionality reduction methods, PCA, FPCA, and WaLuMI, 60% of the HS images of the dataset were randomly selected to train the SVM classifier, and the rest of the images were used to generate experimental results. The experimental procedures for each of the techniques are as follows:

7.1.1. PCA Experiments

The initial set of experiments applied PCA to the HS image data, systematically varying the number of principal components from 10 to 462 in steps of 10 to assess its impact on classification performance. As shown in Figure 8a, the experiments uncovered a complex interplay between the number of principal components and classification accuracy. While higher numbers of components often contributed to improved accuracy, it was observed that this trend did not hold uniformly across all ranges of component values. Instead, there were regions where increasing the number of components resulted in lower accuracy, indicating the presence of peak ranges for component selection. Beyond this range, further increases in components led to diminishing returns and, in some cases, decreased accuracy. From Figure 8a, it can be seen that the PCA-based method achieves its highest performance in terms of accuracy when it uses 150 components.
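The sweep described above amounts to evaluating the classifier at each candidate component count and keeping the count with the highest accuracy. The sketch below shows that selection logic only. The `toy_accuracy` function is a made-up placeholder that peaks at 150 for illustration; it does not reproduce the measured curve in Figure 8a.

```python
def select_optimal_bands(band_counts, evaluate):
    """Evaluate each candidate band count and return the best one with all scores."""
    scores = [evaluate(k) for k in band_counts]
    best = max(range(len(scores)), key=scores.__getitem__)
    return band_counts[best], scores

def toy_accuracy(k):
    # Placeholder for "reduce to k components, train the SVM, measure test accuracy"
    return 0.70 - abs(k - 150) / 2000

band_counts = list(range(10, 463, 10))   # 10, 20, ..., 460
best_k, scores = select_optimal_bands(band_counts, toy_accuracy)
```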

7.1.2. FPCA Experiments

The second set of experiments examined FPCA, which depends on the window parameters (Height (H) and Width (W) of the window). The experiments aimed to understand the influence of both the number of components and the window parameters on the classification accuracy using FPCA.

Figure 8b shows a 3-dimensional plot representing the achieved accuracy versus the window’s height and the number of bands. From

Figure 8b, it is clear that the FPCA-based method achieves its optimum performance in terms of accuracy when it uses 310 bands and a window size of

.

Moreover, it is evident that the choice of the window parameters significantly impacted the results. Smaller window height values often led to improved accuracy, particularly when dealing with a high number of bands.

7.1.3. WaLuMI Experiments

The third set of experiments focused on WaLuMI, specifically investigating the number of components and their influence on classification accuracy.

Figure 8c shows the accuracy versus the reduced number of bands for the WaLuMI-based method. From this figure, it can be observed that the WaLuMI-based method achieves its highest performance in terms of accuracy when it reduces the dimensionality of the HS images to 40 bands.

Concerning the effect of dimensionality reduction, WaLuMI demonstrated competitive accuracy compared to PCA and FPCA. For instance, with 40 components, WaLuMI achieved an accuracy of approximately 73%.

The outcomes of these experiments provide valuable insights into the applicability of PCA, FPCA, and WaLuMI in the context of HS image classification for vein detection. Each of these techniques revealed distinct advantages, with FPCA particularly standing out by achieving the highest classification accuracy in the experiments. The selection of a method and its parameter configuration in the context of this study should be guided by the specific demands of the HS vein detection task. Considerations should encompass factors such as the dataset’s dimensionality and the distinctive spectral attributes of veins under investigation. These findings emphasize the necessity of aligning the choice of dimensionality reduction techniques with the intricacies of the vein detection challenge addressed in this research.

To generate experimental results, the calculated optimal operation parameters for PCA-, FPCA-, and WaLuMI-based methods were used to reduce the dimensionality of the input HS image data, where 60% of the input HS images of the dataset were used for training the SVM classifier and the rest of the images were used to generate the statistics. The obtained results for PCA, FPCA, and WaLuMI are presented in Table 3.

As shown in Table 3, in the evaluation of the three techniques, several key metrics were considered, including the accuracy, precision, recall, false positive rate (FPR), and false negative rate (FNR), which provide crucial insights into their classification performance.

PCA exhibited a relatively low FPR, suggesting that it had a commendable ability to correctly classify non-vein pixels without generating an excessive number of false alarms. However, a notable drawback is observed in its performance in terms of FNR. PCA exhibited a higher FNR, implying that it missed a considerable number of vein pixels during the classification process. The overall accuracy of PCA is 70.18%, indicating that it correctly classified around 70.18% of the vein and non-vein pixels. The precision and recall values for PCA are 76.48% and 33.90%, indicating that most pixels it labels as vein are correct but that it recovers only about a third of the actual vein pixels.

FPCA demonstrates a slightly higher FPR compared to PCA, meaning that it has a relatively higher rate of false positives. This might lead to a slightly increased number of false alarms. However, FPCA excelled in capturing vein pixels, as indicated by its considerably lower FNR. The overall accuracy of FPCA is the highest among the three techniques, with a rate of 75.63%. This implies that FPCA correctly classified approximately 75.63% of the vein and non-vein pixels. The precision and recall values for FPCA are 73.34% and 59.12%, underlining its effectiveness in achieving both high true positives and low false positives.

Furthermore, WaLuMI shows a competitive FPR, striking a balance between classifying non-vein pixels correctly and avoiding false positives. Nonetheless, it has a higher FNR when compared to FPCA, signifying that it also missed some vein pixels during classification. The overall accuracy of WaLuMI is 73.00%, which means it correctly classified approximately 73.00% of the vein and non-vein pixels. The precision and recall values for WaLuMI are 78.03% and 43%, indicating reliable vein predictions but recovery of fewer than half of the actual vein pixels.

These results show that FPCA excelled in achieving the highest overall accuracy. Its strength lies in minimizing false negatives, even though it resulted in a slightly higher rate of false positives. PCA and WaLuMI demonstrated their own strengths and weaknesses, with PCA being effective at avoiding false positives and WaLuMI offering competitive accuracy. These findings highlight the importance of choosing dimensionality reduction techniques that fit the specific needs of the vein detection task, considering the trade-off between false positives and false negatives.
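Because the false negative rate and recall are both computed over the actual vein pixels, they are complementary. As a consistency check on the recall values quoted above (a derived illustration, not additional reported results), the implied FNR for each technique is:

```latex
\begin{aligned}
\mathrm{FNR} &= \frac{FN}{FN + TP} = 1 - \mathrm{Recall},\\
\mathrm{FNR}_{\mathrm{PCA}} &= 1 - 0.3390 = 0.6610,\\
\mathrm{FNR}_{\mathrm{FPCA}} &= 1 - 0.5912 = 0.4088,\\
\mathrm{FNR}_{\mathrm{WaLuMI}} &= 1 - 0.43 = 0.57.
\end{aligned}
```

These values agree with the ordering described in the text: FPCA misses the fewest vein pixels and PCA the most.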

7.2. Morphological Operations

After vein detection with the PCA, FPCA, and WaLuMI techniques, the classified images were post-processed with morphological operations. Dilation, erosion, and opening were used to extract and refine vein structures from the classified image. Dilation expanded vein regions, improving the connectivity and visibility of veins within the images. Erosion removed noise and fine structures, resulting in cleaner and more distinct vein representations. Opening smoothed the detected veins while preserving their essential characteristics. A combination of these operations, chosen separately for each reduction technique, highlighted the vein regions adequately. A sample refined image for each method is shown for visual comparison.
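The three operations can be illustrated on a small binary mask. The sketch below is a NumPy-only illustration, not the implementation used in the experiments; the helper names `erode`, `dilate`, and `opening`, the 3 × 3 structuring element, and the toy mask are all assumptions made for the example.

```python
import numpy as np


def erode(mask, selem):
    """Binary erosion: a pixel survives only if every pixel under selem is set."""
    mask, selem = np.asarray(mask, bool), np.asarray(selem, bool)
    (H, W), (h, w) = mask.shape, selem.shape
    top, left = h // 2, w // 2  # origin of the structuring element
    padded = np.pad(mask, ((top, h - 1 - top), (left, w - 1 - left)))
    out = np.ones((H, W), bool)
    for i, j in zip(*np.nonzero(selem)):
        out &= padded[i:i + H, j:j + W]
    return out


def dilate(mask, selem):
    """Binary dilation: a pixel is set if selem, placed on any set pixel, covers it."""
    mask, selem = np.asarray(mask, bool), np.asarray(selem, bool)
    (H, W), (h, w) = mask.shape, selem.shape
    top, left = h // 2, w // 2
    padded = np.pad(mask, ((h - 1 - top, top), (w - 1 - left, left)))
    out = np.zeros((H, W), bool)
    for i, j in zip(*np.nonzero(selem)):
        out |= padded[h - 1 - i:h - 1 - i + H, w - 1 - j:w - 1 - j + W]
    return out


def opening(mask, selem):
    """Opening = erosion followed by dilation with the same structuring element."""
    return dilate(erode(mask, selem), selem)


# Toy classified image: a 3x3 "vein" block plus one isolated noise pixel.
mask = np.zeros((7, 7), bool)
mask[1:4, 1:4] = True
mask[5, 5] = True
selem = np.ones((3, 3), bool)

opened = opening(mask, selem)
print(opened.astype(int))  # the noise pixel is gone, the block is preserved
```

In this toy case, erosion removes the isolated pixel, and the subsequent dilation restores the block to its original extent, which is the smoothing behaviour attributed to opening above.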

Figure 9 shows the refined images after morphological operations for the PCA, FPCA, and WaLuMI techniques. The operations applied to the classified image produced by each technique are listed in Sections 7.2.1 to 7.2.3.

Figure 9.

Overlay of the predicted vein regions on the RGB rendering of the HS test image and the resulting refined image after morphological operations, where veins are highlighted in blue, for each technique: (a) RGB and ground-truth overlay, (b) PCA classified image, (c) PCA refined image, (d) FPCA classified image, (e) FPCA refined image, (f) WaLuMI classified image, (g) WaLuMI refined image.

7.2.1. PCA Morphological Operations

Morphological Erosion: A disk-shaped structuring element with a radius of 4 pixels is applied iteratively to remove noise and fine spurious structures from the classified image.

Morphological Dilation: An iterative dilation operation with a line-shaped structuring element (length: 5 pixels, angle: 180 degrees) is applied to enhance feature extraction.

7.2.2. FPCA Morphological Operations

Morphological Erosion: Iterative morphological erosion with a disk-shaped structuring element (radius: 4 pixels) is employed to refine feature extraction.

Morphological Dilation: Dilation operations are applied iteratively using a square-shaped structuring element (size: 2 × 2 pixels) to further enhance feature extraction.
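The structuring elements named in Sections 7.2.1 and 7.2.2 can be built as small boolean arrays. The sketch below is one possible NumPy construction; the helper names `disk`, `line`, and `square` are assumptions for illustration, and library implementations may discretise these shapes differently. The number of iterations is not stated in the text and is therefore not modelled here.

```python
import numpy as np


def disk(radius):
    """Euclidean disk: all pixels within `radius` of the centre."""
    y, x = np.ogrid[-radius:radius + 1, -radius:radius + 1]
    return x * x + y * y <= radius * radius


def line(length, angle_deg):
    """Line of `length` pixels at `angle_deg` degrees from the horizontal axis."""
    theta = np.deg2rad(angle_deg)
    half = (length - 1) / 2
    t = np.linspace(-half, half, length)
    cols = np.rint(t * np.cos(theta)).astype(int)
    rows = np.rint(-t * np.sin(theta)).astype(int)  # image rows grow downwards
    rows -= rows.min()
    cols -= cols.min()
    selem = np.zeros((rows.max() + 1, cols.max() + 1), bool)
    selem[rows, cols] = True
    return selem


def square(size):
    """Square structuring element of `size` x `size` pixels."""
    return np.ones((size, size), bool)


print(disk(4).astype(int))       # 9 x 9 disk used for erosion (PCA and FPCA)
print(line(5, 180).astype(int))  # 1 x 5 horizontal line used for dilation (PCA)
print(square(2).astype(int))     # 2 x 2 square used for dilation (FPCA)
```

A line at 180 degrees is the same set of pixels as a line at 0 degrees, so the PCA dilation extends vein regions along the horizontal direction only.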

7.2.3. WaLuMI Morphological Operations

Morphological Erosion: Disk-shaped structuring elements with varying radii are applied iteratively to remove noise from the binary image.

Morphological Dilation: Dilation operations are applied iteratively with structuring elements tailored to address specific characteristics of the data.

Figure 9 presents the classified vein images and their corresponding refined versions obtained through morphological operations for each of the applied dimensionality reduction techniques. This visual representation offers valuable insights into the performance of these techniques regarding vein detection.

The images reveal distinctive characteristics associated with each dimensionality reduction method. Notably, FPCA stands out as the superior performer, as evidenced by the vivid representation of vein structures in its classified image. Vein regions are prominently identified, demonstrating the high accuracy achieved by FPCA. The refined image further enhances the visualization, underscoring the method’s efficacy in isolating veins from the rest of the hand, making it a compelling choice for vein detection in HS images.

In the case of WaLuMI, the classified image exhibits a notable degree of vein detection, though with slightly lower contrast compared to FPCA. Morphological operations enhance the image further, making it a viable choice for vein detection tasks, especially when factors such as computational efficiency are taken into account.

For PCA, the generated classified image exhibits relatively lower vein detection clarity. While PCA provides a reduction in dimensionality, the results indicate that it may not be the optimal choice for vein detection in HS images without further refinement.

The outcomes of this visualization align with the quantitative results, where FPCA demonstrated the highest vein detection accuracy.

Figure 8 illustrates how different dimensionality reduction techniques impact vein detection quality, emphasizing the importance of method selection based on the specific demands of the application. The remarkable visual results achieved with FPCA hold great promise for enhancing vein detection in various clinical contexts, paving the way for advancements in medical diagnostics and imaging.

8. Conclusions

In conclusion, this paper leveraged hyperspectral (HS) images to advance the field of vein detection, addressing the pressing need for improved diagnostic tools in various clinical settings. The curated dataset consisted of left- and right-hand captures from 100 subjects with varying skin tones. To harness the potential of HS data for vein detection, three dimensionality reduction techniques were employed: Principal Component Analysis (PCA), Folded Principal Component Analysis (FPCA), and Ward’s Linkage Strategy using Mutual Information (WaLuMI).

Through rigorous experimentation and evaluation, FPCA emerged as the standout performer, delivering the highest accuracy in vein detection. This result highlights the importance of optimizing dimensionality reduction methods in the pursuit of enhanced medical imaging and diagnostics.

Furthermore, the research extended beyond accurate classification to visualizing vein regions effectively. This was achieved by generating classified images using the optimal bands obtained from the dimensionality reduction techniques. These images were then refined through the application of morphological operations, providing clearer and more interpretable representations of vein structures.

The implications of this work are far-reaching. By demonstrating the potential of HSI in conjunction with tailored dimensionality reduction techniques, a significant contribution has been made to the advancement of medical imaging and diagnostics. The findings of this paper hold great promise, as they could lead to significant advancements in clinical practices and improve patient care in various healthcare settings.

Author Contributions

Conceptualization, H.N. and A.S.A.; methodology, H.N., A.S.A., and I.M.; software, H.N.; validation, H.N., A.S.A., and I.M.; formal analysis, H.N., A.S.A., and I.M.; investigation, H.N.; resources, A.S.A.; data curation, H.N.; writing – original draft preparation, H.N., A.S.A., J.D., and I.M.; writing – review and editing, H.N., A.S.A., J.D., and I.M.; visualization, H.N.; supervision, A.S.A., J.D., and I.M.; project administration, A.S.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The dataset is available for academic research upon request to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| HS | Hyperspectral |
| HSI | Hyperspectral Imaging |
| PCA | Principal Component Analysis |
| FPCA | Folded Principal Component Analysis |
| WaLuMI | Ward’s Linkage Strategy using Mutual Information |
| CRPS | Complex Regional Pain Syndrome |
| NIR | Near-Infrared |
| ROI | Region Of Interest |
| SVM | Support Vector Machine |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).