1. Introduction

A fundamental problem in data classification with supervised machine learning (ML) is the shortage and/or imbalance of training data [1,2]. For example, the training set of the public image database ISIC2020 [3] contains only 584 malignant images among approximately 33,126 images, the rest being benign. Such a large class imbalance leads to overfitting [4], which degrades the prediction accuracy of a classifier because the model becomes biased toward the class with the larger number of samples. In the example above, an ML classifier may produce a large number of False Negatives (Type II errors), which indicates poor performance of the model.

A natural way to tackle the problems of imbalanced classes and data shortage is to fill the gap with additional data. In many practical cases, however, obtaining additional real data is time consuming and expensive. Hence, other sources and approaches of data generation should be explored. One approach is to artificially produce data with the same characteristics as the original data, so that augmenting the training set with the artificial data improves the statistical outcomes of an ML method. For images, one way to produce artificial data is to apply geometric transformations to part of the training images and augment the original training set with the transformed images. Typical geometric image transformations are rotation, translation, flipping [6] and cropping [7]. Other techniques that generate images for training data augmentation are Gaussian blur, image sharpening, smoothing [5] and noise injection [6].
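The transformations listed above can be illustrated with a minimal NumPy sketch. The function name `augment_image`, the noise level and the 2-pixel shift are illustrative assumptions, not settings taken from the cited works, and the image is a random array standing in for real data.

```python
import numpy as np

def augment_image(image, rng, noise_std=0.05):
    """Return simple augmented variants of a 2D grayscale image with values in [0, 1]."""
    flipped = np.fliplr(image)                 # horizontal flip
    rotated = np.rot90(image)                  # rotation by 90 degrees
    shifted = np.roll(image, shift=2, axis=1)  # circular translation by 2 pixels
    # Gaussian noise injection, clipped back to the valid intensity range
    noisy = np.clip(image + rng.normal(0.0, noise_std, image.shape), 0.0, 1.0)
    return {"flip": flipped, "rotate": rotated, "translate": shifted, "noise": noisy}

rng = np.random.default_rng(0)
image = rng.random((4, 6))          # placeholder image (assumption)
variants = augment_image(image, rng)
```

Each variant keeps the subject of the image and only changes its geometry or pixel statistics, which is the property discussed for these classical augmentation methods.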

In recent years, a method was developed to embed a vector field (VF) into an image [8]. This method augments the set of image features with VF features such as singular points and trajectories. Augmenting the set of features in every image of an image database increases the learning capabilities of ML methods and thereby their classification abilities. This claim was validated with a new Sparse Representation Classification method in the Quaternion Wavelet domain [9] and a new convolutional neural network (CNN) [10]. These methods classified several publicly available image databases, the corresponding image databases with embedded VFs with real [9] and complex [10] singular point shapes, and image databases populated with Gaussian noise.

One may observe that the methods listed above produce new data on the basis of existing data. The VF embedding methods [8,10] also augment the set of image features with VF features, but, in the case of image data, none of them changes the main theme of the images, that is, they keep the subjects that belong to the images.

On the other hand, data distillation methods based on neural networks (NNs) may generate new data or images that do not resemble the original sets at all but are still useful for training data augmentation. Another useful way to generate new images from existing ones for training data augmentation is to use an NN directly. In [11], the authors used a GAN architecture to generate synthetic images to resolve the problem of limited data availability for three different classes. For the purpose of augmentation, the authors of [12] developed and implemented a CNN that determines the likelihood that an object category is present inside a box encompassing a given neighborhood. The method then finds suitable locations in images at which to place new objects.

A comprehensive survey of recent data distillation methods, including applications of NNs, is given in [13]. The authors provide a detailed description of multiple data distillation techniques based on the concepts of "meta-model", "gradient", "distribution", "trajectory" and "factorization" matching.

In the present paper we propose the novel idea of applying the principal component analysis (PCA) method to distill vector data from existing training data [15]. The general idea is to use the eigenvectors of the covariance matrix of the training vectors that have the greatest magnitudes as data-augmenting vectors. We conducted a set of experiments with four publicly available databases, to which we applied three classifiers: an NN, a support vector machine (SVM) and Logistic Regression (LR). The experimental results reported in the paper confirm that augmenting the training set with PCA-distilled vectors increases the classification statistics.

The rest of the paper is organized as follows. Section 2 describes, in its first subsection, the new method for data distillation with PCA and, in a later subsection, introduces a statistical method [16] that determines a lower bound on the number of distilled vectors needed to augment the set of training vectors in order to reach a given classification accuracy. Section 3 presents the classifiers implemented to validate the new distillation method. Section 4 describes the original datasets along with the augmented vector datasets. Finally, Sections 5 and 6 present our results and discuss the novelty and the advantages of the new vector distillation method.

4. Validation Datasets and Training of the Models

4.1. Original Datasets

In the present section we describe the four datasets of feature vectors that we used to validate that augmenting the training dataset with PCA-distilled vectors improves the statistical outcomes of an ML classifier.

The first one, which we call the Skin Lesion (SL) Dataset, comprises 162 observations (samples S) of 5D feature vectors extracted by an active contour in [26] from skin lesion images with a ground truth [25]. The SL dataset contains 100 benign samples (skin lesion feature vectors labeled B) and 62 malignant observations (labeled M).

The Diabetes (D) Dataset [27] consists of 768 feature vectors of dimension 8. The vectors are distributed in two sets: a vector is labeled P if it indicates Diabetes and N otherwise, that is, if it indicates NON-Diabetes. The class P consists of 268 vectors, while the class N contains 500 vectors of dimension 8.

The Heart Disease (HD) Dataset [28] consists of 1025 feature vectors of dimension 13. The data samples are distributed in two classes, N and P. The HD dataset contains 499 N samples and 526 P samples.

The Breast Cancer (BC) Dataset [29] contains a total of 569 feature vectors (observations) of dimension 30. Of them, 357 are labeled as benign (B), while the remaining 212 are determined by medical experts to be malignant (M).

A review of the above dataset information shows that all datasets are binary. In three of the cases, the two classes are imbalanced: the benign (negative) class contains almost twice as many feature vectors as the malignant (positive) class.
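The degree of imbalance can be made concrete by computing the ratio of the larger class to the smaller one for each dataset. The short sketch below uses only the counts stated in this section. The benign count of the BC dataset is derived as the stated total minus the stated malignant count, and the helper name `imbalance_ratio` is an illustrative assumption.

```python
# Class sizes as stated in Section 4.1 (order within each pair is irrelevant here).
class_counts = {
    "SL": (100, 62),
    "D": (500, 268),
    "HD": (499, 526),
    "BC": (569 - 212, 212),  # benign derived as total minus malignant
}

def imbalance_ratio(counts):
    """Ratio of the larger class size to the smaller class size."""
    return max(counts) / min(counts)

ratios = {name: round(imbalance_ratio(c), 2) for name, c in class_counts.items()}
print(ratios)  # {'SL': 1.61, 'D': 1.87, 'HD': 1.05, 'BC': 1.68}
```

Under this measure, SL, D and BC are clearly imbalanced, while the two HD classes are nearly equal in size.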

4.2. Augmentation of the Original Training Data

For the validating experiments we use the following split of the original data: 90% for training and 10% for testing. We then distill vectors from the selected training set and augment this set with the distilled vectors. In this way we construct several kinds of augmented datasets. For the purpose of distillation, we apply the method from Section 2.3 to determine the minimum number of training samples (MNTS) to be distilled and added to the original training samples so that the trained classifier reaches a prescribed accuracy. Table 1 presents the experimental results of classifying the 10% testing data with the models introduced above, trained on the augmented data, together with the results of the same models trained on the original data.

Augmented Dataset 1: This dataset is created from the SL dataset using a testing/training split of 10%/90% that keeps the class proportion of the original SL dataset. Therefore, we have 90 B and 56 M vectors for training and 10 B and 6 M vectors for testing. Next, using PCA, we distill 26 vectors from the selected training vectors (90 B and 56 M) such that the proportion is preserved. The number of distilled vectors, 26, is determined by the MNTS approach and consists of 16 B and 10 M vectors. We then add the distilled vectors to the original 90 B and 56 M training samples, train our classifiers on the augmented training set and test the models on the remaining 10% of the B and 10% of the M original vectors.

Augmented Dataset 2: This dataset is also built from the SL dataset. Here we randomly select 90% of the entire original data for training and use the remaining 10% for testing, so the class proportion in the selected training vectors is not necessarily the same as in the entire original SL dataset. In the distilled set of 26 vectors, the class ratio equals the ratio in the randomly selected training vectors.

Augmented Datasets 3: Analogously to the case of the SL dataset, we use 90% randomly selected samples from each class for training and distill, from the training samples, 101, 151 and 201 new vectors for each of the datasets D, HD and BC. Note that in the distilled sets the proportion M/B or P/N is the same as in the original dataset. Then, for each of these datasets, we create augmented training sets of the form Original + 101-PCA-d, Original + 151-PCA-d and Original + 201-PCA-d. Here Original denotes the randomly selected 90% of the original vector samples, which consist of 90% of the M (P) and 90% of the B (N) samples. The PCA-d sets are distilled from the 90% Original vectors with the PCA method described in Section 2. Therefore, for each of the original datasets D, HD and BC we develop three augmented datasets for training. We then train the models and test them on the remaining 10% of the original data.

Augmented Datasets 4: Further, we distilled 51, 77 and 101 samples from the 101, 151 and 201 samples, respectively, that were distilled earlier, using the same PCA method described in Section 2. In the three double-distilled sets of vectors, the proportion M/B or P/N is kept the same as in the initially distilled dataset. With this nested distillation we create three augmented training sets of the form Original + (101 + 51)-PCA-d, Original + (151 + 76)-PCA-d and Original + (201 + 101)-PCA-d for each of the original datasets D, HD and BC. Therefore, we developed nine augmented training datasets and tested the trained models on the remaining 10% of the original data.
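The bookkeeping behind Augmented Dataset 1 can be checked with a small standard-library sketch: a class-preserving split of the 162 SL labels, followed by a proportional allocation of the 26 distilled vectors. The helper names `stratified_split` and `proportional_allocation` and the largest-remainder rounding rule are assumptions made for illustration. The text does not state how the authors rounded the class counts.

```python
import random

def stratified_split(labels, test_per_class, seed=0):
    """Pick a fixed number of test indices per class; the rest is used for training."""
    rng = random.Random(seed)
    test_idx = []
    for cls, n_test in test_per_class.items():
        members = [i for i, y in enumerate(labels) if y == cls]
        test_idx.extend(rng.sample(members, n_test))
    test_set = set(test_idx)
    train_idx = [i for i in range(len(labels)) if i not in test_set]
    return train_idx, sorted(test_idx)

def proportional_allocation(total, class_counts):
    """Split `total` across classes in proportion to class_counts (largest remainder)."""
    n = sum(class_counts.values())
    quotas = {c: total * k / n for c, k in class_counts.items()}
    alloc = {c: int(q) for c, q in quotas.items()}
    leftover = total - sum(alloc.values())
    by_remainder = sorted(quotas, key=lambda c: quotas[c] - alloc[c], reverse=True)
    for c in by_remainder[:leftover]:
        alloc[c] += 1
    return alloc

labels = ["B"] * 100 + ["M"] * 62          # SL dataset: 100 benign, 62 malignant
train_idx, test_idx = stratified_split(labels, {"B": 10, "M": 6})
train_counts = {c: sum(labels[i] == c for i in train_idx) for c in ("B", "M")}
distilled_counts = proportional_allocation(26, train_counts)
print(train_counts, distilled_counts)  # {'B': 90, 'M': 56} {'B': 16, 'M': 10}
```

The result reproduces the 90 B / 56 M training split and the 16 B / 10 M composition of the 26 distilled vectors reported for Augmented Dataset 1.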

4.3. Model Training

Since the SL dataset is very small, we designed a slightly different NN than the one described in Section 3.3. The modified NN is built with the Keras Sequential API and contains two fully connected layers. The first layer comprises 10 neurons with the ReLU activation function, while the second layer consists of one neuron with the Sigmoid activation function. The model is trained with the Adam optimizer for 100 epochs with a batch size of 32, which suits the very small sample set of 100 and 106 samples.

For the classification of the other three datasets we applied the neural network presented in Section 3.3. The model also includes two Dropout layers with a rate of 0.5 to reduce overfitting. The input dimension is set to 8. The model is compiled with the Adam optimizer and uses the binary cross-entropy loss function.

Recall that the advantage claimed in this study is that augmenting the training data with PCA-distilled vectors increases the classification statistics. The next model we use to validate this advantage is the logistic regression model described in Section 3.1. We define this classifier with the Scikit-Learn library as a LogisticRegression object. The model uses a maximum of 1000 iterations to ensure convergence.

The last model we implemented to validate our approach is the Support Vector Machine (SVM). We build this classifier with the Scikit-Learn library and run the SVM with two kernels: linear and polynomial of degree 2. We set the regularization parameter C = 1.

For the NN model, the accuracy score is calculated using the evaluate() method with the testing data. For the logistic regression and SVM models, the accuracy score is calculated using the score() method with the testing data. The confusion matrix is also calculated for each model using the confusion_matrix() function from Scikit-Learn.
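The Scikit-Learn part of this setup can be sketched as follows. The hyperparameters (`max_iter=1000`, the linear and degree-2 polynomial kernels, `C=1`) follow the text above. The data are synthetic stand-ins, and all other settings are library defaults, which is an assumption because the text does not list them.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix
from sklearn.svm import SVC

# Synthetic two-class data standing in for a feature-vector dataset (assumption).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-1.0, 1.0, (60, 8)), rng.normal(1.0, 1.0, (60, 8))])
y = np.array([0] * 60 + [1] * 60)
order = rng.permutation(len(y))
X, y = X[order], y[order]
X_train, y_train, X_test, y_test = X[:108], y[:108], X[108:], y[108:]  # 90%/10%

classifiers = {
    "LR": LogisticRegression(max_iter=1000),
    "SVML": SVC(kernel="linear", C=1.0),
    "SVMP": SVC(kernel="poly", degree=2, C=1.0),
}

results = {}
for name, clf in classifiers.items():
    clf.fit(X_train, y_train)
    accuracy = clf.score(X_test, y_test)                       # mean accuracy on the test data
    cm = confusion_matrix(y_test, clf.predict(X_test), labels=[0, 1])
    results[name] = (accuracy, cm)
```

With `labels=[0, 1]`, the confusion matrix rows are the true classes and the columns the predicted classes, so `cm[1, 1]` counts true positives and `cm[0, 0]` true negatives.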

4.4. Cross-Validation

To fairly validate the classification statistics of the classifiers, we implement the Monte Carlo cross-validation approach. It randomly splits the entire set of samples into training and test samples and repeats the splitting m times. For each split, a sample appears in exactly one of the two sets, training or testing. The mean over the m experiments is calculated for each evaluation statistic. In our experiments, we set m = 10 and show the results in Table 1 to Table 13 for the SL, D, HD and BC datasets. The advantage of Monte Carlo cross-validation is that it decreases the variance of the split-sample error estimate, and that the proportion of the random training/test splits does not depend on the selected number of repetitions m.
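A minimal standard-library sketch of Monte Carlo cross-validation with m = 10 and a 10% test fraction is given below. The function `monte_carlo_cv` and the placeholder evaluation (a majority-class predictor on toy labels) are illustrative assumptions. In the experiments the evaluation step would train and test one of the classifiers instead.

```python
import random
from statistics import mean

def monte_carlo_cv(n_samples, evaluate, m=10, test_fraction=0.1, seed=0):
    """Repeat a random train/test split m times and average the returned score."""
    rng = random.Random(seed)
    n_test = round(n_samples * test_fraction)
    scores = []
    for _ in range(m):
        indices = list(range(n_samples))
        rng.shuffle(indices)                      # a fresh random split each repetition
        test_idx, train_idx = indices[:n_test], indices[n_test:]
        scores.append(evaluate(train_idx, test_idx))
    return mean(scores), scores

# Toy labels with the SL class sizes; the "model" just predicts the majority class.
labels = [0] * 100 + [1] * 62

def majority_class_accuracy(train_idx, test_idx):
    train_labels = [labels[i] for i in train_idx]
    majority = max(set(train_labels), key=train_labels.count)
    return sum(labels[i] == majority for i in test_idx) / len(test_idx)

mean_accuracy, split_scores = monte_carlo_cv(len(labels), majority_class_accuracy)
```

Unlike k-fold cross-validation, the test fraction here is chosen independently of the number of repetitions m, and a sample may appear in the test set of several repetitions.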

4.5. Classification Metrics

Accuracy, sensitivity, and specificity are commonly used metrics to evaluate the effectiveness of a classification model. Below is a description of each metric along with its equation. The notations used are TP = True Positives, TN = True Negatives, FP = False Positives, and FN = False Negatives.

Accuracy: Accuracy measures the overall correctness of the model’s predictions as the ratio of correctly predicted instances to the total number of instances: Accuracy = (TP + TN)/(TP + TN + FP + FN).

Sensitivity (True Positive Rate): Sensitivity, also known as recall or true positive rate, measures the model’s ability to correctly identify positive instances out of all the actual positive instances: Sensitivity = TP/(TP + FN).

Specificity (True Negative Rate): Specificity measures the model’s ability to correctly identify negative instances out of all the actual negative instances: Specificity = TN/(TN + FP).
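The three metrics follow directly from the four confusion-matrix counts, as the short sketch below shows. The counts in the usage line are hypothetical values for a 16-sample test set (6 positive, 10 negative) and are not results from the experiments.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, sensitivity and specificity from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),   # true positive rate
        "specificity": tn / (tn + fp),   # true negative rate
    }

# Hypothetical counts: 5 of 6 positives and 9 of 10 negatives classified correctly.
metrics = classification_metrics(tp=5, tn=9, fp=1, fn=1)
print(metrics["accuracy"], metrics["specificity"])  # 0.875 0.9
```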

5. Experimental Results

To validate that augmenting a training set of vectors with vectors distilled from it by the PCA method increases classification statistics, we conducted experiments applying the NN, SVM and LR classifiers to the four databases of feature vectors: skin lesion SL [25,26]; diabetes D [27]; heart disease HD [28]; and breast cancer BC [29]. For this purpose, we designed different setups for the training datasets, as described in Section 4.2. The classification results are presented in the tables throughout the present section. In the reporting tables, we show two types of accuracy for each experiment: the first percentage is the model’s accuracy for one iteration, while the second is the mean accuracy of the Monte Carlo cross-validation over 10 experiments. The bold percentages in all tables indicate the best results. We present our results in three separate tables, one per classification metric from Section 4.5, for each dataset.

In

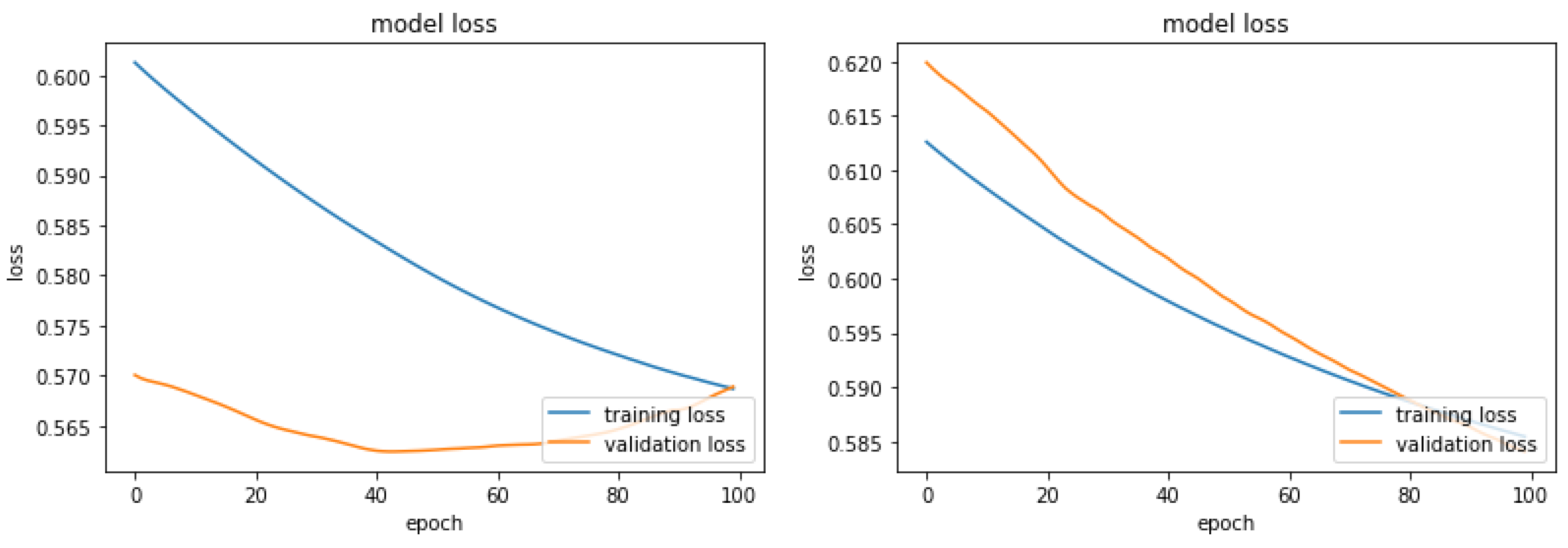

Figure 1 are shown the curves of the loss function for the NN model on the SL data. One may observe that the curves of the loss functions for the training processes with original and Augmented Dataset 1 resemble each other. On the other hand, the curves of the loss functions for the two validation processes are very different. The curve for the validation process when the NN was trained with the original vectors is convex from below. It speaks about possible divergence after the 100 epochs. While the curve for validation when the NN was trained with the Augmented Dataset 1 is steadily decreasing, which suggests a classification improvement if more than 100 epochs are conducted.

Table 1 compares the classification statistics of the different classifiers for the SL dataset and its augmentations described in Section 4.2. The original dataset contains 162 samples in total, which we split 90%/10% for training and testing. Table 1 shows that the mean accuracy of the LR increased significantly, from 71% to 82%, for Augmented Dataset 2. The lack of improvement for the remaining classifiers may be due to the fact that the total sample size is too small. In some instances the sensitivity increased, which indicates that the model’s ability to detect malignant samples improved due to the augmentation. The Specificity of the LR model increased by 5% when Augmented Data 1 is used, which indicates a better ability of the model to detect benign lesions. Also, the Sensitivity of the NN classifier doubled for Augmented Data 1, while the SVML increased the same statistic by 5% for the training Augmented Data 2. Furthermore, the results show that the augmented training data balances the Sensitivity/Specificity ratio, which means that the classifiers are balanced with respect to the recognition of TP and TN samples. In contrast, with the original data the ability of the NN and the LR to recognize benign samples is about twice their ability to recognize malignant samples.

Note that the SL dataset is small, having only 162 samples. We continue the experimental validation with relatively larger databases of feature vectors: diabetes D, which contains 768 feature vectors of 8D with ratio P/N 268/500 [27]; heart disease HD, which contains 1025 feature vectors of 13D with ratio P/N 499/526 [28]; and breast cancer BC, which contains 569 feature vectors of 30D with ratio P/N 212/357 [29].

The results of classifying the D data with the four classifiers are presented in Table 2, Table 3 and Table 4. We apply the NN, the LR and the SVM with linear and degree-2 polynomial kernels, and denote the two SVM classifiers by SVML and SVMP, respectively. The classifiers are trained with the Original training set and with the single-distillation and double-distillation augmented sets, such as Original + 101-PCA-d and Original + (101 + 51)-PCA-d, which are described in Section 4.2. Training with the augmented data improved the classification accuracy compared with training on the original data. For the NN and SVMP the highest increase in the 10-fold mean (the right column in Table 2) came with the single augmentation by 101 distilled vectors. For the LR the highest results come with the double augmentations of (101 + 51) and (151 + 77) distilled vectors, while for the SVML the highest outcome is obtained with (101 + 51).

On the other hand, the Sensitivity and Specificity outcomes (Table 3 and Table 4) show high values for Specificity and, for most classifiers, values roughly half as large for Sensitivity. This indicates that the augmentations did not balance the classification models as they did in the case of the SL dataset in Table 1.

As mentioned above, we also conducted distillation-augmentation experiments with the heart disease HD [28] dataset. Recall that it contains 1025 feature vectors of dimension 13D, of which 499 are positive (P) vectors and 526 are negative (N). The former indicate heart disease, while the latter indicate healthy samples. The four classifiers are trained again with the Original set and with the single-distillation and double-distillation augmented sets, such as Original + 101-PCA-d and Original + (101 + 51)-PCA-d. In these names, the first number is the number of vectors distilled from the Original training vectors, while the second number is the number of vectors distilled from the already distilled vectors. The experimental results, with 90% of the P and 90% of the N randomly selected samples for training, are shown in Table 5, Table 6 and Table 7. The average accuracy increased for all classifiers when they were trained with the augmented set Original + (101 + 51)-PCA-d, which contains a double distillation. The Sensitivity increased only for the SVMP, because for the other classifiers it is already at its maximum with the Original training data. The Specificity increased for all classifiers (significantly for LR, SVML and SVMP) when they were trained with augmented sets that contain single- and double-distilled data. Another important achievement obtained with the HD dataset and the distillation-augmentations used is that all classifiers are balanced with respect to the Sensitivity/Specificity ratio.
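The balancing effect can be quantified from the reported values. The sketch below copies the mean Sensitivity and Specificity of the Original and Original + (101 + 51)-PCA-d rows from Table 6 and Table 7 and computes, for each classifier, the absolute gap between the two statistics and the balanced accuracy (Sensitivity + Specificity)/2. The choice of the gap as a balance measure is an illustrative assumption.

```python
# Mean sensitivity and specificity (in %) copied from Table 6 and Table 7.
sensitivity = {
    "Original": {"NN": 100, "LR": 96, "SVML": 98, "SVMP": 69},
    "Original + (101 + 51)-PCA-d": {"NN": 96, "LR": 94, "SVML": 96, "SVMP": 85},
}
specificity = {
    "Original": {"NN": 96, "LR": 79, "SVML": 71, "SVMP": 64},
    "Original + (101 + 51)-PCA-d": {"NN": 98, "LR": 86, "SVML": 86, "SVMP": 88},
}

summary = {}
for setup in sensitivity:
    for clf, sens in sensitivity[setup].items():
        spec = specificity[setup][clf]
        summary[(setup, clf)] = {
            "gap": abs(sens - spec),                  # smaller means better balanced
            "balanced_accuracy": (sens + spec) / 2,
        }

for key, value in summary.items():
    print(key, value)
```

For every classifier the gap shrinks with the double-distillation augmentation (for example, from 27 to 10 percentage points for SVML), and the balanced accuracy rises for LR, SVML and SVMP.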

With the heart disease HD [28] dataset we conducted a second set of experiments in which the share of randomly selected original vectors for training was decreased to 70%. The testing vectors are then the remaining 30% of the feature vectors. All other steps, such as distillations and augmentations, are the same as in the case of 90% selected original feature vectors for training. The classification results obtained with the four chosen classifiers are shown in Table 8, Table 9 and Table 10.

A study of the results in the above tables shows that selecting 70% of the Original HD feature vectors for training, distillation and augmentation keeps the same trends of increasing classification statistics as in the case of the 90% selection. Moreover, with the 70:30% split the Specificity increased for all classifiers when trained with the Original + 151-PCA-d dataset. In summary, augmenting the original training set with vectors distilled from this set increases the classification statistics and makes the classifiers balanced. The only difference is that the classification statistics for the 70% split are lower than those for the 90% split.

We conducted the final set of experiments with the BC dataset, which contains 569 feature vectors of 30D with ratio P/N 212/357 [29]. For training we randomly selected, ten times, 90% of the positive and 90% of the negative samples. Each time, from every selection of training samples, we distilled 101, 151 and 201 vectors with the same dimension as the original training vectors. From every distilled set we then distilled 51, 77 and 101 vectors, respectively, as a second distillation. The experimental results with the Original and the augmented training sets, with single and double distillation, are reported in Table 11, Table 12 and Table 13. Recall that in the names of the augmented sets the first number denotes the vectors distilled during the first distillation, while the second number denotes the vectors from the second distillation.

A study of the results shows a significant increase in the classification accuracy of the NN for the augmented set Original + 101-PCA-d and of the SVML classifier for the augmented set Original + 151-PCA-d. As for the LR and SVMP, their highest results with the latter augmented set are the same as the accuracy of classification with the Original training dataset. The reason is that the accuracies with the Original dataset are already high and leave no room for further increase. The same observation and conclusion hold for the Specificity of the NN and the Sensitivities of the LR and SVMP classifiers. These two statistics exhibit a significant increase for the remaining classifiers when trained with augmented datasets. Moreover, the Sensitivity/Specificity ratio is well balanced, leading to the highest Balanced Accuracy = (Sensitivity + Specificity)/2.

6. Discussion

The present paper develops a method for vector data augmentation through distillation. The method is based on principal component analysis (PCA) [14,15]. The difference between classical PCA and the extension we developed for vector data distillation is that the former maps the set of original vectors to a set of vectors with smaller dimension and the same cardinality, whereas the latter maps the original set of vectors to a set of vectors with the same dimension as the original set but with a smaller cardinality.
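The contrast in output shapes can be illustrated with a small NumPy sketch. The first function is classical PCA. The second is only an assumed construction that reduces cardinality by using the leading eigenvectors of the sample-by-sample Gram matrix. It is not claimed to reproduce the authors' Eqs. 7 and 8, which may differ in centering, scaling and the choice of matrix. The function names and the random data are likewise hypothetical.

```python
import numpy as np

def pca_reduce_dimension(X, k):
    """Classical PCA: n vectors of dimension d -> n vectors of dimension k."""
    Xc = X - X.mean(axis=0)
    eigvals, eigvecs = np.linalg.eigh(np.cov(Xc, rowvar=False))  # d x d covariance
    top = eigvecs[:, np.argsort(eigvals)[::-1][:k]]              # d x k leading eigenvectors
    return Xc @ top                                              # n x k

def pca_reduce_cardinality(X, k):
    """Assumed variant: n vectors of dimension d -> k vectors of dimension d."""
    eigvals, eigvecs = np.linalg.eigh(X @ X.T)                   # n x n Gram matrix
    top = eigvecs[:, np.argsort(eigvals)[::-1][:k]]              # n x k leading eigenvectors
    return top.T @ X                                             # k x d

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 5))            # 20 placeholder vectors of dimension 5
reduced = pca_reduce_dimension(X, 2)
distilled = pca_reduce_cardinality(X, 4)
print(reduced.shape, distilled.shape)   # (20, 2) (4, 5)
```

In the second case each output vector is a linear combination of the original vectors, so it lives in the same feature space and can be appended to the training set.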

The extended PCA for distillation is unproductive if the matrix in Eq. 7 is 1-orthogonal, a property that is defined through a condition involving the identity matrix. If this matrix is 1-orthogonal, Eqs. 7 and 8 map the original training dataset into itself and no distilled vectors are generated. To remedy the problem, we select only the strongest vectors, rather than all m, as shown above Eq. 7.

The novelty of the present study is the development and use of the extended PCA for the distillation of vectors from a given set of vectors, such that the distilled vectors have the same dimension as the original vectors.

The advantage of the proposed novelty is that by adding the PCA-distilled vectors to the original vectors from which they were distilled, we obtain a new training set. A classifier trained on this new set yields a model with higher statistics than the model trained only on the original dataset.

We validated the advantage by applying four classifiers (NN, LR, SVML, SVMP) to four different datasets of vectors (SL, D, HD, BC). Every experimental result is produced by Monte Carlo 10-fold cross-validation, in which we randomly select, 10 times, 10% of the samples for testing while the remaining 90% are used for training, and then take the average. For every selection the augmenting vectors are distilled from the set of selected training vectors. We also conducted a second (nested) distillation from every set of vectors generated during the first distillation. The experimental results are shown in Table 1 to Table 13, where we compare the outcomes after training with the original vectors only and the outcomes after training with the augmented datasets. The results indicate that the latter outcomes are higher and better balanced than the former.

Our future work will focus on enlarging classes that have small cardinalities. In certain datasets there exists a big difference between the numbers of samples in the different classes. Hence, we will conduct distillations from the samples of the class with the smallest cardinality and augment this class to increase its cardinality. For this purpose, we will investigate the number of consecutive distillations that provide meaningful and useful sample vectors.

Figure 1.

(a) Training and Validation Loss Curves of the Original Skin Lesion Data (b) Training and Validation Loss Curves of the Augmented Data 1.

Table 1.

Comparison of Accuracy, Sensitivity, and Specificity (Skin Lesion Data).

| Original skin lesion data | NN | LR | SVML |
|---|---|---|---|
| Accuracy | 88%, 79% | 88%, 71% | 88%, 88% |
| Sensitivity | 50% | 33% | 86% |
| Specificity | 100% | 81% | 90% |

| Augmented Data 1 | NN | LR | SVM |
|---|---|---|---|
| Accuracy | 94%, 75% | 76%, 76% | 76%, 76% |
| Sensitivity | 100% | 70% | 70% |
| Specificity | 86% | 86% | 86% |

| Augmented Data 2 | NN | LR | SVM |
|---|---|---|---|
| Accuracy | 82%, 78% | 82%, 82% | 82%, 82% |
| Sensitivity | 91% | 91% | 91% |
| Specificity | 60% | 60% | 60% |

Table 2.

Comparison of Classification Accuracy of Four Classifiers Trained on 90% of the Original D Data and Various Augmentations, Tested on 10% of the Original D Data. The left column shows the highest result from the 10-fold experiments, while the right one shows their average.

| Training Data | NN | LR | SVML | SVMP |
|---|---|---|---|---|
| Original | 76%, 75% | 73%, 70% | 70%, 70% | 70%, 70% |
| Original + 101-PCA-d | 84%, 82% | 77%, 77% | 74%, 74% | 82%, 82% |
| Original + (101 + 51)-PCA-d | 84%, 79% | 78%, 78% | 78%, 78% | 78%, 78% |
| Original + 151-PCA-d | 78%, 77% | 73%, 73% | 74%, 74% | 80%, 80% |
| Original + (151 + 77)-PCA-d | 82%, 79% | 78%, 78% | 64%, 64% | 67%, 67% |
| Original + 201-PCA-d | 78%, 75% | 67%, 67% | 73%, 73% | 73%, 73% |
| Original + (201 + 101)-PCA-d | 77%, 75% | 70%, 70% | 70%, 70% | 73%, 73% |

Table 3.

Comparison of Classification Sensitivity of Different Classifiers Trained on 90% of the Original D Data and Various Augmentations, Tested on 10% of the Original D Data. The single column shows the average of the 10-fold experiments.

| Training Data | NN | LR | SVML | SVMP |
|---|---|---|---|---|
| Original | 52% | 56% | 68% | 67% |
| Original + 101-PCA-d | 77% | 48% | 30% | 37% |
| Original + (101 + 51)-PCA-d | 81% | 56% | 26% | 56% |
| Original + 151-PCA-d | 52% | 41% | 30% | 56% |
| Original + (151 + 77)-PCA-d | 74% | 52% | 19% | 22% |
| Original + 201-PCA-d | 44% | 22% | 33% | 33% |
| Original + (201 + 101)-PCA-d | 52% | 41% | 41% | 22% |

Table 4.

Comparison of Classification Specificity of Different Classifiers Trained on 90% of the Original D Data and Various Augmentations, Tested on 10% of the Original D Data. The single column shows the average of the 10-fold experiments.

| Training Data | NN | LR | SVML | SVMP |
|---|---|---|---|---|
| Original data | 93% | 83% | 72% | 72% |
| Original + 101-PCA-d | 88% | 92% | 94% | 94% |
| Original + (101 + 51)-PCA-d | 86% | 90% | 88% | 90% |
| Original + 151-PCA-d | 94% | 90% | 98% | 94% |
| Original + (151 + 77)-PCA-d | 86% | 92% | 88% | 92% |
| Original + 201-PCA-d | 96% | 94% | 94% | 94% |
| Original + (201 + 101)-PCA-d | 90% | 86% | 98% | 90% |

Table 5.

Comparison of Classification Accuracy of Different Classifiers Trained on 90% of the Original HD Data and Various Augmentations, Tested on 10% of the Original HD Data. The left column shows the highest result from the 10-fold experiments, while the right one shows their average.

| Training Data | NN | LR | SVML | SVMP |
|---|---|---|---|---|
| Original | 98%, 95% | 83%, 86% | 83%, 83% | 66%, 83% |
| Original + 101-PCA-d | 97%, 96% | 87%, 87% | 88%, 88% | 86%, 86% |
| Original + (101 + 51)-PCA-d | 97%, 96% | 90%, 90% | 91%, 91% | 86%, 91% |
| Original + 151-PCA-d | 97%, 95% | 86%, 86% | 86%, 86% | 83%, 84% |
| Original + (151 + 76)-PCA-d | 98%, 95% | 83%, 83% | 83%, 83% | 88%, 83% |
| Original + 201-PCA-d | 90%, 89% | 86%, 86% | 85%, 85% | 83%, 83% |
| Original + (201 + 101)-PCA-d | 91%, 90% | 83%, 83% | 83%, 83% | 83%, 83% |

Table 6.

Comparison of Classification Sensitivity of Different Classifiers Trained on 90% of the Original HD Data and Various Augmentations, Tested on 10% of the Original HD Data. The single column shows the average of the 10-fold experiments.

| Training Data | NN | LR | SVML | SVMP |
|---|---|---|---|---|
| Original | 100% | 96% | 98% | 69% |
| Original + 101-PCA-d | 100% | 94% | 94% | 94% |
| Original + (101 + 51)-PCA-d | 96% | 94% | 96% | 85% |
| Original + 151-PCA-d | 100% | 85% | 89% | 87% |
| Original + (151 + 76)-PCA-d | 98% | 85% | 83% | 87% |
| Original + 201-PCA-d | 100% | 96% | 96% | 98% |
| Original + (201 + 101)-PCA-d | 91% | 87% | 92% | 89% |

Table 7.

Comparison of Classification Specificity of Different Classifiers Trained on 90% of the Original HD Data and Various Augmentations, Tested on 10% of the Original HD Data. Each value is the average over the 10-fold experiments.

| Training Data | NN | LR | SVML | SVMP |
| --- | --- | --- | --- | --- |
| Original | 96% | 79% | 71% | 64% |
| Original + 101-PCA-d | 94% | 80% | 82% | 78% |
| Original + (101 + 51)-PCA-d | 98% | 86% | 86% | 88% |
| Original + 151-PCA-d | 94% | 88% | 84% | 78% |
| Original + (151 + 77)-PCA-d | 98% | 80% | 84% | 90% |
| Original + 201-PCA-d | 86% | 76% | 74% | 68% |
| Original + (201 + 101)-PCA-d | 92% | 76% | 74% | 78% |
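
As a reading aid for the accuracy, sensitivity, and specificity tables, the following is a minimal sketch of how these three metrics can be computed for each fold and then summarized as the highest and the average value. The function names `fold_metrics` and `summarize`, the label encoding (1 for the positive class), and the toy labels are assumptions made for illustration; they are not taken from the experiments reported here, and the actual evaluation code may differ.

```python
from statistics import mean


def fold_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, sensitivity, specificity) for one fold of binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = (tp + tn) / len(pairs)
    # Sensitivity: fraction of true positives that were detected.
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    # Specificity: fraction of true negatives that were correctly rejected.
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return accuracy, sensitivity, specificity


def summarize(per_fold_values):
    """Return (highest, average) of one metric over all folds."""
    return max(per_fold_values), mean(per_fold_values)


# Hypothetical toy data: two folds of (true labels, predicted labels).
folds = [
    ([1, 1, 0, 0], [1, 0, 0, 0]),
    ([1, 0, 0, 1], [1, 0, 0, 1]),
]
metrics = [fold_metrics(t, p) for t, p in folds]
best_acc, avg_acc = summarize([m[0] for m in metrics])
avg_sens = mean(m[1] for m in metrics)
avg_spec = mean(m[2] for m in metrics)
print(f"accuracy: {best_acc:.0%}, {avg_acc:.1%}")
print(f"sensitivity: {avg_sens:.0%}, specificity: {avg_spec:.0%}")
```

In this sketch the accuracy is reported as a pair (highest, average), matching the two values per cell in the accuracy tables, while sensitivity and specificity are reported as averages only.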

Table 8.

Comparison of Classification Accuracy of Different Classifiers Trained on 70% of the Original HD Data and Various Augmentations, Tested on 30% of the Original HD Data. In each cell, the first value is the highest result from the 10-fold experiments and the second is their average.

| Training Data | NN | LR | SVML | SVMP |
| --- | --- | --- | --- | --- |
| Original | 94%, 92% | 84%, 84% | 85%, 81% | 69%, 81% |
| Original + 101-PCA-d | 89%, 86% | 82%, 82% | 82%, 82% | 82%, 82% |
| Original + (101 + 51)-PCA-d | 92%, 91% | 80%, 80% | 80%, 80% | 86%, 86% |
| Original + 151-PCA-d | 94%, 93% | 85%, 85% | 85%, 85% | 85%, 85% |
| Original + (151 + 76)-PCA-d | 90%, 88% | 84%, 84% | 83%, 83% | 82%, 82% |
| Original + 201-PCA-d | 92%, 91% | 83%, 83% | 83%, 83% | 87%, 87% |
| Original + (201 + 101)-PCA-d | 92%, 90% | 83%, 83% | 84%, 84% | 82%, 82% |

Table 9.

Comparison of Classification Sensitivity of Different Classifiers Trained on 70% of the Original HD Data and Various Augmentations, Tested on 30% of the Original HD Data. Each value is the average over the 10-fold experiments.

| Training Data | NN | LR | SVML | SVMP |
| --- | --- | --- | --- | --- |
| Original | 92% | 93% | 56% | 76% |
| Original + 101-PCA-d | 93% | 89% | 91% | 89% |
| Original + (101 + 51)-PCA-d | 96% | 88% | 90% | 90% |
| Original + 151-PCA-d | 97% | 94% | 95% | 96% |
| Original + (151 + 76)-PCA-d | 91% | 92% | 93% | 93% |
| Original + 201-PCA-d | 93% | 87% | 86% | 91% |
| Original + (201 + 101)-PCA-d | 94% | 92% | 94% | 89% |

Table 10.

Comparison of Classification Specificity of Different Classifiers Trained on 70% of the Original HD Data and Various Augmentations, Tested on 30% of the Original HD Data. Each value is the average over the 10-fold experiments.

| Training Data | NN | LR | SVML | SVMP |
| --- | --- | --- | --- | --- |
| Original | 96% | 82% | 36% | 63% |
| Original + 101-PCA-d | 85% | 74% | 73% | 75% |
| Original + (101 + 51)-PCA-d | 88% | 71% | 81% | 81% |
| Original + 151-PCA-d | 91% | 77% | 73% | 74% |
| Original + (151 + 76)-PCA-d | 88% | 76% | 72% | 71% |
| Original + 201-PCA-d | 90% | 79% | 81% | 83% |
| Original + (201 + 101)-PCA-d | 91% | 73% | 74% | 75% |

Table 11.

Comparison of Classification Accuracy of Different Classifiers Trained on 90% of the Original BC Data and Various Augmentations, Tested on 10% of the Original BC Data. In each cell, the first value is the highest result from the 10-fold experiments and the second is their average.

| Training Data | NN | LR | SVML | SVMP |
| --- | --- | --- | --- | --- |
| Original | 89%, 87% | 100%, 95% | 65%, 65% | 98%, 98% |
| Original + 101-PCA-d | 100%, 97% | 84%, 84% | 88%, 88% | 86%, 86% |
| Original + (101 + 51)-PCA-d | 93%, 90% | 84%, 84% | 86%, 86% | 88%, 88% |
| Original + 151-PCA-d | 90%, 81% | 95%, 95% | 98%, 98% | 98%, 98% |
| Original + (151 + 77)-PCA-d | 95%, 95% | 81%, 81% | 86%, 86% | 90%, 90% |
| Original + 201-PCA-d | 97%, 94% | 95%, 95% | 97%, 97% | 97%, 97% |
| Original + (201 + 101)-PCA-d | 88%, 84% | 93%, 93% | 97%, 97% | 93%, 93% |

Table 12.

Comparison of Classification Sensitivity of Different Classifiers Trained on 90% of the Original BC Data and Various Augmentations, Tested on 10% of the Original BC Data. Each value is the average over the 10-fold experiments.

| Training Data | NN | LR | SVML | SVMP |
| --- | --- | --- | --- | --- |
| Original | 100% | 79% | 47% | 94% |
| Original + 101-PCA-d | 100% | 100% | 100% | 100% |
| Original + (101 + 51)-PCA-d | 100% | 95% | 95% | 95% |
| Original + 151-PCA-d | 100% | 100% | 100% | 100% |
| Original + (151 + 76)-PCA-d | 100% | 100% | 100% | 100% |
| Original + 201-PCA-d | 100% | 95% | 95% | 95% |
| Original + (201 + 101)-PCA-d | 100% | 100% | 100% | 100% |

Table 13.

Comparison of Classification Specificity of Different Classifiers Trained on 90% of the Original BC Data and Various Augmentations, Tested on 10% of the Original BC Data. Each value is the average over the 10-fold experiments.

| Training Data | NN | LR | SVML | SVMP |
| --- | --- | --- | --- | --- |
| Original | 83% | 100% | 73% | 100% |
| Original + 101-PCA-d | 100% | 75% | 81% | 78% |
| Original + (101 + 51)-PCA-d | 89% | 77% | 80% | 83% |
| Original + 151-PCA-d | 83% | 92% | 97% | 97% |
| Original + (151 + 76)-PCA-d | 94% | 69% | 78% | 83% |
| Original + 201-PCA-d | 94% | 94% | 97% | 97% |
| Original + (201 + 101)-PCA-d | 81% | 89% | 94% | 89% |