1. Introduction

The problem with a quantum version of general relativity is that the calculations that would describe interactions of very energetic gravitons — the quantized units of gravity — would have infinitely many infinite terms. You would need to add infinitely many counterterms in a never-ending process. Renormalization would fail. Because of this, a quantum version of Einstein’s general relativity is not a good description of gravity at very high energies. It must be missing some of gravity’s key features and ingredients.

However, we can still have a perfectly good approximate description of gravity at lower energies using the standard quantum techniques that work for the other interactions in nature. The crucial point is that this approximate description of gravity will break down at some energy scale — or equivalently, below some length.

Above this energy scale, or below the associated length scale, we expect to find new degrees of freedom and new symmetries. To capture these features accurately we need a new theoretical framework.

– Sera Cremonini, theoretical physicist at Lehigh University specializing in string theory, quantum gravity, and cosmology, as quoted by Natalie Wolchover [118]

Problems in Extending Field Theory to General Relativity (GR)

There are two main problems in extending quantum field theory (QFT) to general relativity. The first is the problem of renormalizing the loop integrals in quantum general relativity (QGR). In quantum electrodynamics (QED) the divergent loop integrals over virtual particles created a serious problem in the 1940s: the calculations agreed with experiment, provided you simply threw away the infinite parts. This worked, but it did not make much sense. Brilliant work by Feynman, Schwinger, Tomonaga and others showed that the divergent integrals could be systematically contained by adding counter-terms. This approach was eventually shown to extend to all orders, so QED and QFT give well-defined, experimentally confirmed answers to an extraordinary degree of precision, in some cases to within ten parts in a billion.

The problem is that, as Cremonini observed, it has not yet been possible to extend this program to QGR. Success has been achieved with increasingly complex diagrams, but there is no overall program. Cremonini's point, that we expect this approach to break down at some scale of high energies and short times, is in some sense the starting point of this work.

Further, the need to include such counter-terms, in either QED or QGR, is deeply troubling. In general, when we encounter infinities in a scientific theory we suspect a problem with our assumptions or approximations. It was precisely such problems, especially in the analysis of black-body radiation, that led to the development of quantum mechanics in the first place.

The second problem is an apparent asymmetry in the handling of time in quantum mechanics. This is often phrased as the question “is time a parameter or an observable?” In the early days of quantum mechanics, Heisenberg [52] took for granted that time should be treated as an observable, at least in the sense that the Heisenberg uncertainty principle (HUP) should apply to the time/energy relation in the same way as it does to the space/momentum relation. The practice in quantum mechanics since then has been to treat time as more of a parameter than an observable. In the Schrödinger equation time goes strictly forward; wave functions describe probability amplitudes in space but are rooted at a specific instant in time; and path integrals are normally taken over all possible directions in space (left/right, up/down, forwards/back) but do not venture into the past or the future.

This is clearly counter to the spirit of relativity, where time and space are treated on an almost completely symmetric basis.

It may reasonably be argued that this problem is resolved by the Feynman path integral approach to QED, where the expressions are manifestly covariant. So while we may not understand every aspect of the problem, we have the basics right. Furthermore, given the spectacular agreement of QED with experiment, there is not much room for variation. Problem solved, or at least contained.

Time as Observable

In earlier work [3–5] we picked up the discussion with Heisenberg and asked what happens if we force the issue: what if we assume that time should be treated as an observable, that wave functions should extend a bit in time, and that paths should go into the future and the past just as they do in space?

Our defining principle for this work was to insist on full covariance – time and space treated as symmetrically as possible – and then to push as hard as possible until either we found a contradiction or got to a way to falsify the hypothesis.

We found that if we started with the Feynman path integral approach (FPI), the only essential change was to extend the paths to include the time direction; we could leave the rest of the machinery essentially intact. We did need to adjust the mechanics of the path integrals: for instance, the usual methods for making the path integrals converge failed, and an alternative had to be found.

We got the equivalent Schrödinger equation directly from the FPI expression by looking at very short time steps and taking the limit as those steps go to zero. The result turned out to be a variation on an equation discovered by Feynman and Stueckelberg, specialized to our requirements. An estimate of the scale shows that the effects of dispersion in time will start to show at about the attosecond scale and shorter. The essential time scale is given by the time taken by a photon to cross an atomic radius. This explains why these effects have not been seen to date (we are only just starting to probe the attosecond range), and it also implies that in the near future we should be able to detect the effects of dispersion in time, if they are real. And if they are not, even a negative result should tell us something interesting about the nature of time.

Extension to Field Theory

These results were for the single particle case. The problem is that the best experiments to test this take place at high energies/short times. This means we have to include the effects of particle creation/annihilation, which means in turn we have to look at the extension to field theory.

This turned out, as one might expect, to be considerably more difficult. We continued with the FPI approach. We defined a Fock space consisting of the appropriately symmetrized single particle solutions. We extended the essentially non-relativistic solutions above to high energies using a self-consistency argument within this Fock space. We then developed the propagators in the usual way, as time-ordered vacuum expectation values. This resulted in two major changes:

First, the paths now show dispersion in time/energy on the same basis as dispersion in space/momentum. This was, of course, the objective.

Second, the integrals over virtual particles are promoted to integrals over real particles. In the usual Feynman path integrals we work with propagators of the form:

$$\frac{i}{p^2 - m^2 + i\epsilon}$$

The factor of $i\epsilon$ picks out a contour in a contour integral, and the value $p^0 = \sqrt{\vec{p}^{\,2} + m^2}$ is the value of the pole in the integral. In an energy-momentum integral, if three of the four variables are fixed, the fourth is forced to the value at the residue. The effect is that the integrals are formally covariant but, when examined in detail, on-shell. The change from standard quantum mechanics (SQM) to TQM, in which time is treated as an observable, is essentially to include off-shell energies as well as on-shell energies. In most cases the average over the off-shell energies gives the on-shell result. As with the single particle case, we expect differences only at attosecond and shorter times.

Heisenberg Uncertainty Principle in Time

At this point, the tree diagrams are in good shape. We use the same topology as in SQM, but each line now includes dispersion in time: the lines go from being crisp to being fuzzy. In any problem adequately described at the tree level we will see additional dispersion in time. Tests of the Heisenberg uncertainty principle in time show a particularly dramatic difference. If we send a particle through a narrow slit in time (i.e. a very fast camera), in SQM the wave function will be clipped, producing less dispersion in time at the detector. But in TQM the wave function will be diffracted in time, producing significantly greater dispersion in time at the detector. In principle, the effect may be made arbitrarily large.
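The "clipping versus diffraction" contrast can be made concrete with the familiar SQM result for a free Gaussian wave packet in one space dimension, whose width grows as σ(τ) = σ₀ √(1 + (ħτ / 2mσ₀²)²). The sketch below is an analogy only: it assumes units with ħ = m = 1 and arbitrary widths, and treats space rather than time. The claim being illustrated is that in TQM the same mathematics would act in the time direction.

```python
import math


def gaussian_width(sigma0: float, tau: float, hbar: float = 1.0, mass: float = 1.0) -> float:
    """Width of a free 1D Gaussian wave packet after evolving for time tau.

    Standard SQM result: sigma(tau) = sigma0 * sqrt(1 + (hbar * tau / (2 m sigma0^2))^2).
    """
    spread = hbar * tau / (2.0 * mass * sigma0**2)
    return sigma0 * math.sqrt(1.0 + spread**2)


tau = 10.0  # elapsed time in units with hbar = m = 1 (illustrative choice)
for sigma0 in (2.0, 1.0, 0.5, 0.25):
    # Narrower "slits" (smaller sigma0) end up wider downstream: diffraction, not clipping.
    print(f"initial width {sigma0:4.2f} -> width after tau = {tau}: {gaussian_width(sigma0, tau):6.2f}")
```

At late times the width approaches ħτ/(2mσ₀), so halving the initial width roughly doubles the width at the detector.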

The strict requirement of covariance makes the predictions unambiguous, so we have not just a test but falsifiability – if the predicted wider dispersion is not seen, then we may infer that time should not be treated as an observable.

We would probably find ourselves making some other related inferences at that point as well, as we would have to account for the violation of full symmetry between time and space in some other way. So the experimental work would be far from wasted.

In practice, we would probably replace the ultra-fast camera with a probe particle shaped to have a very short width in time: a chirp, not a slow bass note.

Loop Diagrams and Ultra-Violet Divergences

But at this point we might expect much greater trouble with the loop integrals: we have, after all, one extra dimension to integrate over. If the usual QED divergences are barely renormalizable now, they might become hopeless in TQM. We would still get some interesting experiments to run, but the theory itself would be hopelessly inconsistent.

What happened in practice was unexpected. Usually we get the slice-by-slice integrals in path integrals to converge by adding a small convergence factor to each integral. But it is not possible to do this in a covariant way. Instead we took advantage of the fact that the kernels are really distributions and only make sense when applied to a specific wave function. If the wave function is finite, with norm one and well-defined uncertainty in all four dimensions, then the integral for each time slice is also finite: the initial wave function acts as a built-in regulating factor.

Through Morlet wavelet analysis it is possible to represent any finite wave function as a sum over Gaussian test functions (GTFs). We therefore showed convergence for GTFs and immediately got full generality. This does mean that we cannot apply our approach to general plane waves or delta functions: for these, the individual time slices stop being well-defined. And it does make the integrals much harder from a technical point of view; only the simplest cases can be done by hand.

So we are required in any case to apply our loop integrals only to GTFs. And we are required by our defining principle of full covariance to have the loop integral entangled with the initial GTF in time as well as in space. This entanglement forces each integral in turn to be finite. Again the initial GTF acts as a built-in regulating factor.

Two Sides of the Same Quantum Coin

While at first glance it looked as if the addition of dispersion in time would make the ultraviolet divergences harder to manage, in practice, it contains them, in fact making them finite. The two problems—achieving full symmetry between time and space and managing the ultraviolet divergences—appear to be complementary parts of the same problem.

We still have to normalize, and it is still true that no measurement stands alone. But now we are comparing one finite calculation to another, a much less troubling problem.

Extension to QGR

While the attosecond tests have not yet been done, this development immediately suggests we should look at an extension to QGR. The key problem here is to write QGR in a way that lets us apply the QFT results to it. This is done here by building on the existing work in QGR but selecting the “trace-reversed de Donder” gauge as the starting point. Once we have used this gauge to do the extension, we can shift the gauge around freely.

This leaves several further problems.

First, the actual expressions in QGR are extremely complex. The transition from SQM to TQM at least doubles the complexity of each specific calculation. We address this problem by looking only at the simplest possible cases and leaning on existing results as much as possible.

Second, the vertexes are now associated with powers of the energy. In SQM this adds extra powers of the loop momentum (quadratic and worse) to each loop integral, making a hard problem significantly harder. In TQM, however, these polynomial powers are suppressed by the Gaussian factors associated with the GTFs, so they add complexity but are not fatal.

Third, while for QED we are within reach of falsifiability, there is no realistic chance of doing tests of the HUP with individual gravitons. However, if we accept the “emergent gravity” approach to QGR, we can take the metric to be the classical limit of a statistical ensemble of gravitons. In this case we should be able to use the TQM approach to model the properties of the “graviton sea” and then compare this with observations of dark energy and dark matter distributions, to see if they match up.
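The second point above rests on an elementary fact. For Re a > 0, ∫ k^(2n) e^(−a k²) dk = Γ(n + ½) / a^(n + ½) is finite for every n, whereas without the Gaussian the same polynomial integral diverges. The one-dimensional sketch below checks the closed form numerically. The damping constant `a` is a hypothetical stand-in for the GTF widths and is not a value taken from the original.

```python
import math


def gaussian_moment_exact(n: int, a: float) -> float:
    """Closed form of the integral of k**(2n) * exp(-a k**2) over the real line (a > 0)."""
    return math.gamma(n + 0.5) / a ** (n + 0.5)


def gaussian_moment_numeric(n: int, a: float, cutoff: float = 20.0, steps: int = 20_000) -> float:
    """Trapezoidal estimate of the same integral on [-cutoff, cutoff]."""
    h = 2.0 * cutoff / steps
    total = 0.0
    for i in range(steps + 1):
        k = -cutoff + i * h
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * k ** (2 * n) * math.exp(-a * k * k)
    return total * h


a = 1.0  # hypothetical Gaussian damping standing in for the GTF widths
for n in range(5):
    # Each extra power of k^2 (a stand-in for a vertex factor) changes the value, never the finiteness.
    print(n, gaussian_moment_exact(n, a), gaussian_moment_numeric(n, a))
```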

Objective

Our primary objective here is to show that we can apply the previous work on TQM to QGR, thereby getting a renormalizable (actually finite) and well-defined model of QGR, with a high level of symmetry between time and space by construction. Since we are using covariance to extend from three to four dimensions, we can’t guarantee that the predictions are correct. We have the same problem that you have when you extrude a circle to a sphere: are you going to get an exact sphere or something rather more egg-shaped or perhaps even stretched out? But a sphere is the simplest prediction and should represent a reasonable starting point for further theoretical, observational, and experimental work.

We do not guarantee that the approach here matches the existing loop calculations in QGR at all levels. But at least our results are well-defined, so the comparisons should be interesting.

And finally, the ability to extend QM in a relatively clean way to QGR provides additional motivation to look for the attosecond level effects of time dispersion in QED.

Literature

The work here has its starting point in the path integral approach as originated by Stueckelberg and Feynman [35–38,100,101] and as further developed in [39,43,57,65,69,86,91,104,122]. This work is specifically part of the Relativistic Dynamics approach as developed in [31–34,53–56,72].

We are also much indebted to general reviews of the role of time in quantum mechanics: [14,25,78,79,82,92,121].

And we have taken considerable advantage of the extraordinary literature for QED [11,12,20,40,48,49,57,60,63,68,71,73,84,85,90,94,95,99,115,116,119].

We use the path integral formalism here. Texts on QED typically include a chapter on path integrals. There is also a considerable literature on them in their own right, for instance [43,65,66,69,86,91,104,122].

We have also benefited enormously from the literature on GR [1,18,51,74,89,93,102,107,109,111–113,120] and QGR [26,41,50,61,62,75,109,114].

In previous work we have looked at time dispersion in the single particle case [3] (paper A), at the specific problems created in doing time-of-arrival measurements [4] (paper B), and time dispersion in QED [5] (paper C).

Section outline

Time dispersion in quantum mechanics. To avoid excessive and distracting page flipping, we summarize the key points of earlier work. To keep the length down, we refer the interested reader to the original derivations for most of the calculations.

Time dispersion in gravity. We use those methods to extend standard QGR to include dispersion in time. We finish with the Feynman rules for the renormalized model.

Loop diagrams in gravity. We look in detail at the question of loop diagrams and their accompanying ultraviolet divergences. This completes the analysis of the renormalized model.

Discussion.

2. Time Dispersion in Quantum Mechanics

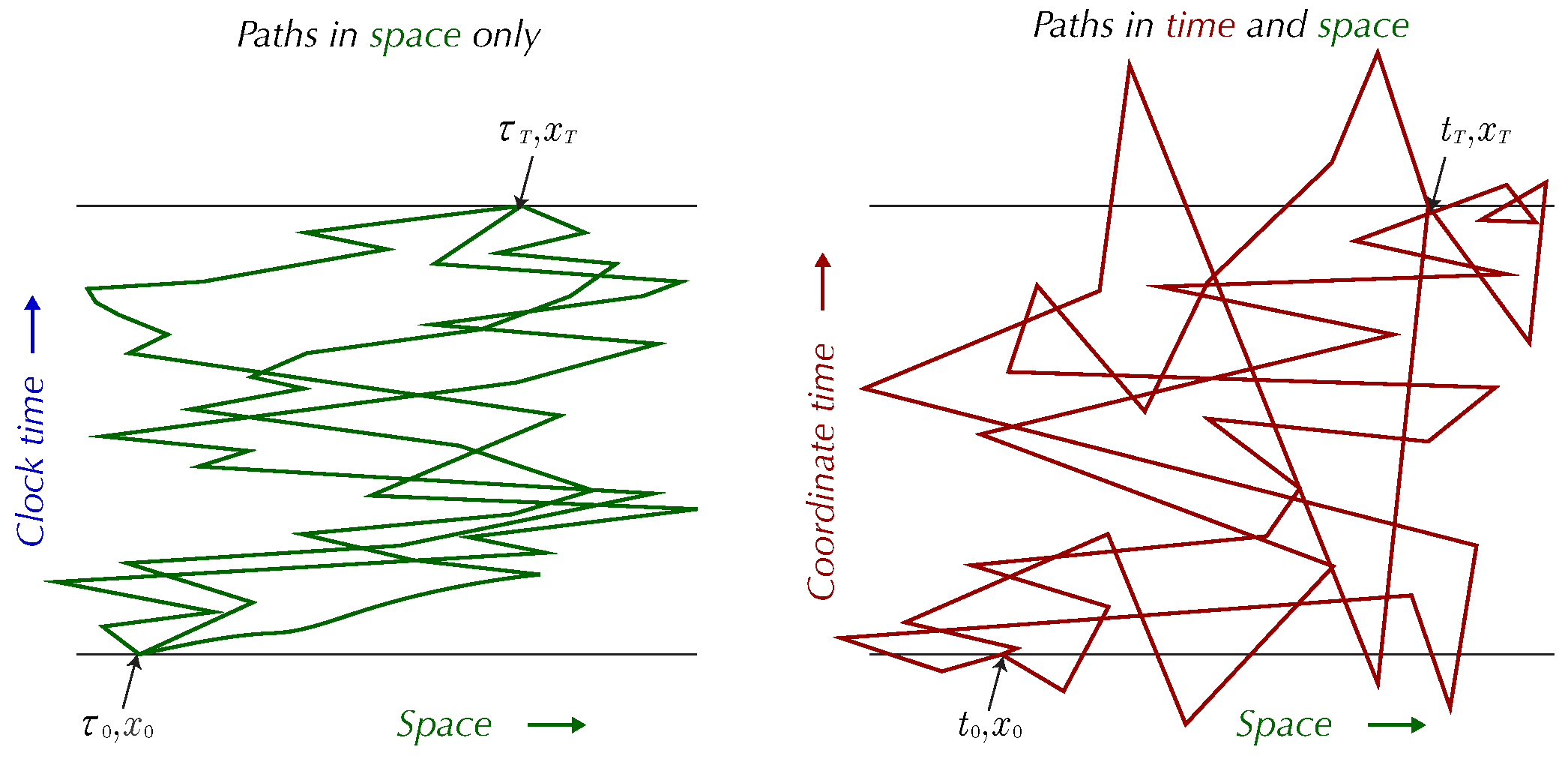

Figure 2.1.

Paths in space; paths in time and space

“Wheeler’s often unconventional vision of nature was grounded in reality through the principle of radical conservatism, which he acquired from Niels Bohr: Be conservative by sticking to well-established physical principles, but probe them by exposing their most radical conclusions.” – K. S. Thorne [108]

2.1. Overview

Our original goal was not just to promote time to an observable but to do so in a way that is falsifiable: this means a simple development path and no free parameters. We were able to achieve this by starting with the FPI ([35–38,100,101], as further developed in [39,43,57,65,69,86,91,104,122]). With the FPI, the main change we had to make to the formalism was to extend the paths from varying in the three space dimensions to varying in time as well. The rest of the machinery could be left largely untouched. Since the extension in time is strongly, almost completely, constrained by the twin requirements of conformity to existing results and covariance, any predictions are going to be falsifiable.

We look at the following areas:

Single particles, primarily the non-relativistic case

Field theory, primarily the spin zero case

The differences in the Feynman diagrams

The relationship of the laboratory time to the time the paths are moving in

Falsifiability

2.2. Single Particles

We start with the single particle: this is much simpler than field theory and, as it happens, most of the development can be done at this level. We assume some familiarity with the FPI. In the single particle case (usually non-relativistic) we sum over all paths from a start time to a finish time, weighting each by the phase factor $e^{iS/\hbar}$, where $S$ is the action.

We have two times at issue here. The first is the traditional laboratory clock time. The second is the time our paths are varying in. Ultimately we will have to define the relationship between the two. But we found it simpler to treat them separately at the start.

The laboratory time is defined by the laboratory clock; we refer to it as the clock time $\tau$. Our paths start at one time by that clock and finish at another. We call the time that the paths are moving in the coordinate time, and refer to it as $t$. The coordinate time's properties are defined by covariance. In SQM we define the paths as $\vec{x}(\tau)$; in TQM we define the paths as $x(\tau) = (t(\tau), \vec{x}(\tau))$. The latter paths can go a bit into the future or the past. We can treat the TQM paths as the direct product of paths in time, $t(\tau)$, and paths in space, $\vec{x}(\tau)$.

Convergence

The usual tricks for getting the slice-by-slice integrals to converge aren't covariant. They are not meant to handle paths that vary in time: if they make the paths going into the future finite, they make the paths going into the past infinite. To get past this problem, we note that the kernels are really distributions; they are only meaningful as applied to a specific wave function:

$$\psi_\tau(x) = \int d^4 x_0 \, K_\tau(x; x_0)\, \psi_0(x_0)$$

Then we note that all physical wave functions are normalizable; they have well-defined means and dispersions. This means we can use Morlet wavelet analysis to write any physically acceptable wave function as a sum over Gaussian wave functions [2]. If we apply the Lagrangian from Goldstein to such Gaussian test functions (GTFs), we see that each integral in the long series of time slices converges, even though the integrals now run over time as well as the three space dimensions. So the solution was to shift to a more physically motivated approach.

As an unexpected but welcome side effect, this turns out to be how we tame the ultraviolet (UV) divergences in both QED and QGR. The successive integrals entangle each step with the previous one, with the result that the initial GTF forces each integral in turn to be finite. We discuss this in more detail below.
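The following one-dimensional toy model sketches why the conventional damping fails and why the GTF works. It assumes that a single time slice of the kernel contributes a pure phase, exp(+i b x²) in a space direction and exp(−i b t²) in the time direction, since time enters with the opposite sign. Under that assumption each slice reduces to ∫ exp(−a x²) dx, which is finite exactly when Re a > 0. The coefficient `b`, the GTF width `sigma` and the damping `eps` are hypothetical values. The conventional substitution b → b + iε damps one direction but blows up the other, whereas the Gaussian factor exp(−x²/4σ²) contributes a positive real part to both.

```python
import cmath
import math


def gaussian_integral_closed_form(a: complex) -> complex:
    """Integral of exp(-a x^2) over the real line, defined only when Re(a) > 0."""
    if a.real <= 0:
        raise ValueError("Re(a) <= 0: the integral is not absolutely convergent")
    return cmath.sqrt(math.pi / a)


def gaussian_integral_numeric(a: complex, cutoff: float = 15.0, steps: int = 30_000) -> complex:
    """Trapezoidal estimate of the same integral on [-cutoff, cutoff]."""
    h = 2.0 * cutoff / steps
    total = 0j
    for i in range(steps + 1):
        x = -cutoff + i * h
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * cmath.exp(-a * x * x)
    return total * h


b = 0.5      # hypothetical phase coefficient of one time slice of the kernel
sigma = 1.0  # hypothetical GTF width
eps = 0.01   # conventional small damping factor

# Conventional damping: b -> b + i*eps tames the space direction (kernel exp(+i b x^2)) ...
a_space_eps = eps - 1j * b
# ... but the time direction enters with the opposite sign (kernel exp(-i b t^2)), so Re(a) flips.
a_time_eps = -(eps - 1j * b)

# GTF regulator: the Gaussian exp(-x^2 / (4 sigma^2)) adds a positive real part in both directions.
a_space_gtf = 1.0 / (4.0 * sigma**2) - 1j * b
a_time_gtf = 1.0 / (4.0 * sigma**2) + 1j * b

print("conventional damping, Re(a) for space and time:", a_space_eps.real, a_time_eps.real)
for label, a in (("space", a_space_gtf), ("time", a_time_gtf)):
    print(label, gaussian_integral_closed_form(a), gaussian_integral_numeric(a))
```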

Classical Limit

One of the attractive features of the FPI is that the relationship between the classical and the quantum approaches is unusually clear. In classical mechanics, the classical path is normally defined by a variational procedure: it is the path that extremizes the action. Effectively we are using a variant of the stationary phase approximation: the stationary point defines the classical path, the first variation vanishes by construction, and the second variations are Gaussian integrals which we can do. Higher orders have to be handled by perturbation or other approaches; see for instance [66]. We can write the integral over the second variations in terms of the classical action as [69,91]:

A similar analysis works in QGR, where it is part of the background field method [22,24].

This approach also clarifies the relationship between TQM and SQM: in TQM we include variations in time and space; in SQM we include only variations in space. TQM is to SQM with respect to time as SQM is to classical mechanics (CM) with respect to space.
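The standard one-dimensional SQM form of the second-variation result is the van Vleck prefactor, K ≈ √(−(1/2πiħ) ∂²S_cl/∂x_a∂x_b) · e^(iS_cl/ħ), which is exact for quadratic actions. The sketch below checks that prefactor by finite differences for the free particle and for the harmonic oscillator (valid for 0 < ωT < π, where no Maslov phase arises). It is an SQM toy under assumed units ħ = m = ω = 1, not the TQM expression of the original.

```python
import cmath
import math

HBAR = 1.0  # natural units, assumed for illustration


def free_action(xa: float, xb: float, T: float, m: float = 1.0) -> float:
    """Classical action of a free particle moving from xa to xb in time T."""
    return m * (xb - xa) ** 2 / (2.0 * T)


def oscillator_action(xa: float, xb: float, T: float, m: float = 1.0, w: float = 1.0) -> float:
    """Classical action of a 1D harmonic oscillator path from xa to xb in time T (0 < w T < pi)."""
    return m * w / (2.0 * math.sin(w * T)) * ((xa**2 + xb**2) * math.cos(w * T) - 2.0 * xa * xb)


def van_vleck_prefactor(action, xa: float, xb: float, T: float, h: float = 1e-3) -> complex:
    """sqrt(-(1 / (2 pi i hbar)) * d^2 S / dxa dxb), mixed derivative by central differences."""
    mixed = (action(xa + h, xb + h, T) - action(xa + h, xb - h, T)
             - action(xa - h, xb + h, T) + action(xa - h, xb - h, T)) / (4.0 * h * h)
    return cmath.sqrt(-mixed / (2j * math.pi * HBAR))


T = 1.0
# Known exact prefactors for comparison (m = w = hbar = 1).
free_exact = cmath.sqrt(1.0 / (2j * math.pi * HBAR * T))
oscillator_exact = cmath.sqrt(1.0 / (2j * math.pi * HBAR * math.sin(T)))
print(van_vleck_prefactor(free_action, 0.3, 1.1, T), free_exact)
print(van_vleck_prefactor(oscillator_action, 0.3, 1.1, T), oscillator_exact)
```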

Schrödinger equation

Usually we derive the FPI expression from the Schrödinger equation [44,69,91]. Here we work in reverse, going from the kernel to the Schrödinger equation. We take the time derivative of the kernel via a limiting process:

and use this to compute the associated Schrödinger equation:

We recognize this as the Feynman-Stückelberg equation; see also the Relativistic Dynamics (RD) program [31–34,53–56,72]. Usually in the RD program the evolution parameter $\tau$ is left free; here it is specifically defined as the clock time.

The only change we need to make to extend this equation to the relativistic case is to replace the bare mass $m$ with the relativistic mass $E$. We will therefore see an unusually smooth transition from the non-relativistic (NR) to the relativistic case.

Estimate of Initial Wave Function

Since we must always solve for a specific wave function, we need a way to estimate initial wave functions in particular cases. In paper A we got a crude but reasonable estimate by assuming we start with an initial wave function given as a function of the three-momentum, then getting a maximum entropy estimate of the energy part using Lagrange multipliers. The constraints are:

and with these inputs the maximum entropy estimate of the energy part of the wave function is:

With this approach, if we already have the wave function as a function of the three-momentum, we can get a reasonable estimate of the wave function in energy (and therefore in time). Applied to bound state wave functions, this gives a time scale of about $a_0/c \approx 1.8 \times 10^{-19}$ s, roughly 0.18 attoseconds. This is the Bohr radius divided by $c$, the time it would take a photon to cross an atom; equivalently, $a_0/c = \hbar/(\alpha m_e c^2)$.
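The time scale quoted above is simple arithmetic. The sketch below computes a₀/c directly and also via a₀ = ħ/(α mₑ c), using CODATA 2018 values. The two routes should agree, and both give a little under 0.18 attoseconds.

```python
# CODATA 2018 values, SI units.
HBAR = 1.054571817e-34             # reduced Planck constant, J s
C = 299_792_458.0                  # speed of light, m / s
ELECTRON_MASS = 9.1093837015e-31   # kg
ALPHA = 7.2973525693e-3            # fine-structure constant
BOHR_RADIUS = 5.29177210903e-11    # m

crossing_time = BOHR_RADIUS / C                              # photon crossing one Bohr radius
crossing_time_alt = HBAR / (ALPHA * ELECTRON_MASS * C**2)    # same quantity via a0 = hbar / (alpha m_e c)

print(f"a0 / c = {crossing_time:.4e} s = {crossing_time * 1e18:.3f} as")
print(f"hbar / (alpha m_e c^2) = {crossing_time_alt:.4e} s")
```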

This gives us two essential pieces of information:

It explains why these effects have not been seen by accident.

It implies, however, that they are within reach. The attosecond area has been opening up rapidly; witness the 2023 Nobel Prize in Physics for attosecond work. And Ossiander's group [81] has already obtained results at the sub-attosecond level.

Therefore if we can provide sufficiently targeted predictions, we should be able to confirm/falsify the hypothesis that time should be treated as an observable.

Development in Time

The free equation is formally very similar to the non-relativistic Schrödinger equation. It is as if we had added a fourth space coordinate, albeit one that enters the equations with the opposite sign from its three siblings.

The free solutions have the form:

with

the clock energy defined by:

It is often useful to write:

which takes advantage of the fact that the deviations of the coordinate time from the clock time, and of the coordinate energy from the clock energy, are likely to be small.

Time Scales

There are two different time scales at work here. The time scales for $t$ come from atomic units. They correspond to energies of order electron volts, and therefore to times of order attoseconds and less.

But the time scale for the clock frequency is about a million times slower. Taking bound state wave functions as a basis, the clock frequency corresponds to energies of order millionths of an electron volt, and the associated time scale is of order picoseconds.
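A quick check of the two scales uses the relation t = ħ/E. The sketch assumes that the atomic energy scale is the Hartree energy (about 27 eV) and, for illustration, that the clock energy is exactly a millionth of it. Under those assumptions the two time scales come out at about 24 attoseconds and about 24 picoseconds.

```python
HBAR_EV_S = 6.582119569e-16    # reduced Planck constant in eV s (CODATA 2018)
HARTREE_EV = 27.211386245988   # Hartree energy (atomic unit of energy) in eV (CODATA 2018)


def time_scale_s(energy_ev: float) -> float:
    """Characteristic time hbar / E, in seconds, for an energy given in electron volts."""
    return HBAR_EV_S / energy_ev


coordinate_scale = time_scale_s(HARTREE_EV)      # atomic unit of time
clock_scale = time_scale_s(HARTREE_EV * 1e-6)    # assumed clock energy: a millionth of a Hartree

print(f"coordinate-time scale: {coordinate_scale * 1e18:.1f} as")
print(f"clock-time scale:      {clock_scale * 1e12:.1f} ps")
```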

This provides a quick, if partial, explanation of the much-debated transition from the quantum to the classical realm. At atomic times the clock time does not play a role: the FS/T equation looks like the Klein-Gordon (KG) equation, and the wave function varies as rapidly in time as in space.

But consider the long (to an atomic particle) trip from interaction zone to detector. Now the on-shell parts of the wave function will tend to reinforce and the off-shell parts to cancel, so the wave function that arrives at the detector will look as if it has magically gone on-shell. See for instance Tannor [106] on this sort of effect.

So the free solutions interpolate between the 4D and the 3D views.
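A minimal sketch of the reinforce-versus-cancel mechanism follows. It rests on two simplifying assumptions that are not the original's detection rule. First, each component of the wave function is assumed to carry a phase exp(−i f τ), where f is its clock frequency and f = 0 on shell. Second, the long trip is assumed to amount to averaging over a clock-time window T. Under these assumptions the average is (1 − e^(−ifT))/(ifT), which stays at 1 for on-shell components and falls off roughly as 2/(fT) for off-shell ones.

```python
import cmath


def clock_average(f: float, T: float, steps: int = 10_000) -> complex:
    """Midpoint-rule average of exp(-i f tau) over clock times tau in [0, T]."""
    h = T / steps
    return sum(cmath.exp(-1j * f * (k + 0.5) * h) for k in range(steps)) / steps


def clock_average_exact(f: float, T: float) -> complex:
    """Closed form (1 - exp(-i f T)) / (i f T); equal to 1 for an on-shell component (f = 0)."""
    if f == 0.0:
        return 1.0 + 0j
    return (1.0 - cmath.exp(-1j * f * T)) / (1j * f * T)


for T in (1.0, 10.0, 100.0):
    row = [abs(clock_average_exact(f, T)) for f in (0.0, 0.5, 2.0)]
    print(f"T = {T:6.1f}: |average| for f = 0, 0.5, 2 -> " + ", ".join(f"{x:.3f}" for x in row))
```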

Computation of time of arrival

There is one more piece required to complete an initial analysis of the single particle case: what are the rules for detection? This obviously ties into the measurement problem, which is beyond the scope of this work. However, we provide a reasonable heuristic, pending a full analysis. Picture all of the possible paths from start to finish. The starting wave function is most naturally given as a function of coordinate time as of a specific clock time. The development in time can be analyzed in terms of the free solutions.

We take our detector to be a quantum object. All of the individual paths that our path integrals are summing over must, if they are to be detected, arrive at the detector at some point. If we assume that this point is marked in coordinate time, paths can arrive at it with the same coordinate time but having taken routes with different clock times. The difference in clock time takes on the role of a proper time, and different paths are associated with different proper times. To sum up the amplitude at the detector we write:

2.3. Spin Zero Fields

While most of the key ideas show up in the single particle case, we can't, as noted, get to falsifiability without extending the work to QFT, specifically QED. We worked primarily with the spin zero case as the simplest; from there, the generalization to QED was relatively straightforward.

For this step, it proved simpler to start with the FS/T equation and generalize it to high energies. We started with the single particle solutions, albeit with an extension in coordinate time as well as in space. We used the single particle solutions of the FS/T equation to define a Fock space. And with the free solutions and the creation and annihilation operators for the Fock space, we derived the Feynman propagators as time-ordered vacuum expectation values in the usual way.

SQM propagators

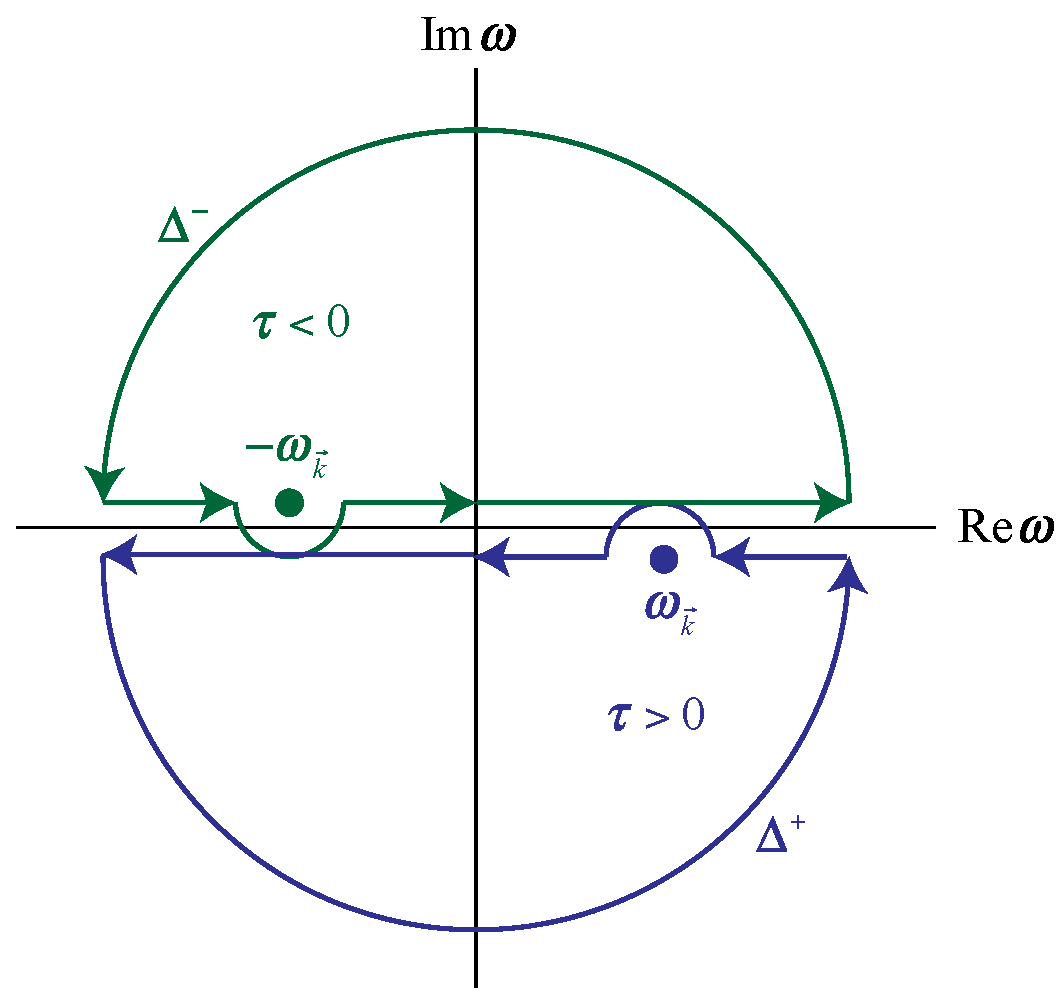

Figure 2.2.

Contour integral for propagator.

To ground the development of the TQM propagators we carefully examined the development of the SQM propagators. Tracking in particular Klauber's line-by-line treatment, we noted there is one place where we start with three-dimensional single particle wave functions but then rewrite the normalization factor as a contour integral over the energy.

This is done in a way that folds in the Feynman boundary conditions.

The specific value of the energy appears as the residue of the pole in the complex plane. This means that while we seem to be doing an integral over all possible values of the energy, the only one which actually affects the final result is the on-shell value:

$$E_{\vec{p}} = \sqrt{\vec{p}^{\,2} + m^2}$$

The effect of this maneuver is to give us a manifestly covariant propagator, as well as a considerable simplification of the QED integrals. But the off-shell energies are not being explored: the virtual particles are in fact virtual. We can think of TQM as SQM with the virtual particles replaced by real ones.

Details

We start with the free SQM wave functions:

These are the basis for the SQM Fock space, which consists of appropriately symmetrized products of these. We define the creation and annihilation operators by their effects on this Fock space:

with the 3D commutators being:

$$\left[a_{\vec{k}}, a^\dagger_{\vec{k}'}\right] = \delta_{\vec{k}\vec{k}'}$$

Note there is no mention of harmonic oscillators here; the creation and annihilation operators are defined entirely by their effects on Fock space.
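To make "defined entirely by their effects on Fock space" concrete, the sketch below represents states as dictionaries from occupation-number tuples to amplitudes, using a hypothetical box with three discrete modes labeled 0, 1, 2. It defines a†ₖ and aₖ only through their action on those states, then checks the commutator [aₖ, a†ₖ′] = δₖₖ′ on sample states. Nothing in the code depends on whether a mode label stands for a three-vector (SQM) or a four-vector (TQM).

```python
import math
from collections import defaultdict

# A state is a dict mapping occupation-number tuples (one entry per mode) to amplitudes.
# Occupation numbers automatically give symmetrized (bosonic) states.


def create(k: int, state: dict) -> dict:
    """a_k^dagger: |..., n_k, ...> -> sqrt(n_k + 1) |..., n_k + 1, ...>."""
    out = defaultdict(float)
    for occ, amp in state.items():
        n = occ[k]
        out[occ[:k] + (n + 1,) + occ[k + 1:]] += math.sqrt(n + 1) * amp
    return dict(out)


def annihilate(k: int, state: dict) -> dict:
    """a_k: |..., n_k, ...> -> sqrt(n_k) |..., n_k - 1, ...>, and zero if n_k = 0."""
    out = defaultdict(float)
    for occ, amp in state.items():
        n = occ[k]
        if n > 0:
            out[occ[:k] + (n - 1,) + occ[k + 1:]] += math.sqrt(n) * amp
    return dict(out)


def commutator(k: int, k_prime: int, state: dict, tol: float = 1e-12) -> dict:
    """[a_k, a_k'^dagger] applied to state, dropping amplitudes below tol."""
    first = annihilate(k, create(k_prime, state))
    second = create(k_prime, annihilate(k, state))
    diff = defaultdict(float, first)
    for occ, amp in second.items():
        diff[occ] -= amp
    return {occ: amp for occ, amp in diff.items() if abs(amp) > tol}


psi = {(2, 0, 1): 1.0}  # hypothetical state: two quanta in mode 0, one in mode 2
print(commutator(0, 0, psi))  # same mode: returns the state itself (up to rounding)
print(commutator(0, 1, psi))  # different modes: returns the empty (zero) state
```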

Now we build up the spin zero field operators as sums over the free single particle solutions in the interaction picture. We have the sums over the positive frequency components on the left and negative frequency components on the right:

We mark SQM parts with a superscript S. The normalization factor corresponds to the convention of normalizing beams to energy/volume (see for instance Feynman [40]). The volume factor $V$ represents box normalization, used when we are summing over a discrete set of wave vectors; to compute the propagator we shift to integrating over a continuous set of wave vectors, taking $\frac{1}{V}\sum_{\vec{k}} \to \int \frac{d^3k}{(2\pi)^3}$.

The propagator is defined in terms of time ordered products of the free wave functions:

We mark the clock time for the $x$ particle with $\tau_2$, or just a 2, and the clock time for the $y$ particle with $\tau_1$, or just a 1. With the time-ordered operators sandwiched between vacuum states, only those terms with an annihilation operator on the left and a creation operator on the right survive. The result, after a fair bit of algebra, is:

These are the Feynman boundary conditions, which, as noted, are excellent for S-matrix calculations.

The next step in the derivation is to replace the positive time branch with a contour integral:

and the negative time branch with a similar contour integral. Combined, they give us the familiar expression for the propagator:

$$\int \frac{d^4 p}{(2\pi)^4}\, \frac{i\, e^{-ip\cdot(x - y)}}{p^2 - m^2 + i\epsilon}$$

So the energy variable $p^0$ is not free: it is slated to be reduced to its on-shell value when the contour integral is actually done.
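The reduction to the on-shell value can be checked numerically. The sketch below fixes the three-momentum, so that ω plays the role of the on-shell energy E_p, and uses units with ħ = c = 1. It evaluates (1/2π)∫ dE e^(−iEt) · i/(E² − ω² + iε) by brute force and compares the result with the residue, e^(−iΩ|t|)/(2Ω) with Ω = √(ω² − iε). The residue tends to e^(−iω|t|)/(2ω) as ε → 0. The values of ω, ε and t are arbitrary, and ε is kept finite so that the numerical integral is well-behaved.

```python
import cmath
import math


def propagator_numeric(t: float, omega: float, eps: float,
                       cutoff: float = 200.0, step: float = 0.005) -> complex:
    """Trapezoidal estimate of (1/2pi) * integral dE exp(-i E t) * i / (E^2 - omega^2 + i eps)."""
    n = int(round(2.0 * cutoff / step))
    total = 0j
    for k in range(n + 1):
        energy = -cutoff + k * step
        weight = 0.5 if k in (0, n) else 1.0
        total += weight * cmath.exp(-1j * energy * t) * 1j / (energy**2 - omega**2 + 1j * eps)
    return total * step / (2.0 * math.pi)


def propagator_residue(t: float, omega: float, eps: float) -> complex:
    """Residue result exp(-i Omega |t|) / (2 Omega), with Omega = sqrt(omega^2 - i eps)."""
    big_omega = cmath.sqrt(omega**2 - 1j * eps)  # pole just below the positive real axis
    return cmath.exp(-1j * big_omega * abs(t)) / (2.0 * big_omega)


omega, eps = 1.0, 0.1  # omega plays the role of the on-shell energy; eps is kept finite
for t in (2.0, -1.5):
    print(t, propagator_numeric(t, omega, eps), propagator_residue(t, omega, eps))
```

Only the pole at the on-shell energy contributes; the full energy integral collapses to it, which is the sense in which the virtual particles stay virtual.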

TQM Propagators

We start with the FS/T equation. To extend it to the relativistic case we promote the bare mass to the relativistic mass:

The promotion of the mass to the relativistic energy is an obvious generalization and may be treated as a reasonable Ansatz, one that works for both massless and massive particles. In paper C we provided a more sophisticated justification, in which we assumed that the foreground particle is surrounded by a quantum vacuum of the same kind of particles. The requirement that both the foreground particle and the background particles obey the KG equation, and that they are in equilibrium, forced the promotion from bare mass to relativistic mass.

Free Wave Functions

With the FS/T equation we have the corresponding free solutions by inspection.

The changes are:

Three-vectors go to four-vectors, e.g. $\vec{x} \to x = (t, \vec{x})$. Obvious.

Energy normalization goes from the on-shell energy to the free energy. The shift from the on-shell energy to the unconstrained energy is an aspect of the shift from time as parameter (constrained) to time as observable (unconstrained).

Box normalization goes to hyper-box normalization, with a box in time as well as in space. We assume periodic boundary conditions. We can think of this as a box in time large enough to contain the entire interaction of interest. Box normalization is a great help with dimension checking; once that is done, it is often simpler to work with continuous normalization, in which case the box factors go over to the corresponding continuous integrals.

As with SQM, we define the Fock space in terms of sums of the appropriately symmetrized free wave functions. The creation and annihilation operators are defined by their effects in Fock space:

with 4D commutators:

$$\left[a_{k}, a^\dagger_{k'}\right] = \delta_{kk'}$$

All other commutators are zero. Again, we make no use of the usual interpretation in terms of harmonic oscillators.

Propagators in TQM can be handled the same way, but with four dimensions in play rather than three:

We again use Feynman boundary conditions, to keep the development in parallel with SQM. But we stop the derivation just before the trick with the contour integral:

Note that the second term in TQM differs from the second term in SQM by the overall sign, by the sign of the clock frequency term, and by taking $2\pi$ to the 4th rather than the 3rd power. We can see that the $w$ integral is real, in the sense that off-shell values will contribute to the result. Effectively we have moved from virtual fluctuations in energy to real ones. This is what changing time from parameter to observable means in the context of propagators.

To understand the relationship of this to the SQM propagator we focus on the left hand term, the retarded part of the propagator. We expect:

With this we have in TQM:

so in TQM the time dependence seen in the SQM picture is carried by the coordinate time/coordinate energy factor instead.

2.4. Feynman Rules

2.4.1. Vertexes

The vertexes in TQM are essentially the same as in SQM. If we look at a vertex, we have:

There is one subtle but critical distinction. In SQM we have at each vertex an associated δ-function in clock energy, along with the usual three δ-functions in momentum. The δ-function in clock energy is the result of an integral over all of clock time, from $-\infty$ to $+\infty$. It goes away if we look at shorter times. Since we almost always use SQM in the limit of infinite clock time, this is acceptable.

If we take the same limit in TQM, we get five δ-functions at each vertex: one for clock energy, one for coordinate energy, and the three momentum δ-functions.

But to explore the effects of TQM we need to look at short times, at time intervals of order attoseconds. At these times it is not meaningful to talk of clock energy and its conservation. We have four δ-functions at each vertex, but the fourth is for coordinate energy, not clock energy.
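How the clock-energy δ-function emerges only in the long-time limit can be seen numerically. A minimal sketch, assuming ħ = 1 and a symmetric window of clock time τ in [-T/2, T/2] (the symbol τ and the window are our illustrative choices): the finite-time integral of exp(iEτ) is 2 sin(ET/2)/E, a peak of height T and width of order 2π/T whose area stays 2π. It approaches 2πδ(E) only as T grows without bound; at short times it is a broad function, not a δ-function.

```python
import numpy as np

def finite_time_delta(E, T):
    """Integral of exp(i*E*tau) over clock time tau in [-T/2, T/2], with hbar = 1.

    Equals 2*sin(E*T/2)/E; np.sinc(x) = sin(pi*x)/(pi*x) handles E = 0, where the value is T.
    """
    return T * np.sinc(E * T / (2.0 * np.pi))

def peak_and_area(T, e_max=2000.0, n=2_000_001):
    """Peak height and area over [-e_max, e_max] of the finite-time delta function."""
    E = np.linspace(-e_max, e_max, n)
    f = finite_time_delta(E, T)
    area = float(np.sum(f) * (E[1] - E[0]))  # Riemann sum; the neglected tail is of order 1/(e_max*T)
    return float(f[n // 2]), area

for T in (1.0, 10.0, 100.0):
    peak, area = peak_and_area(T)
    print(f"T = {T:6.1f}: peak = {peak:7.1f}, area / 2pi = {area / (2 * np.pi):.3f}, "
          f"first zero at E = {2 * np.pi / T:.4f}")
```

As T increases the peak grows and narrows while the area stays fixed, which is the δ-function limit; for short T the clock energy is spread over a range of order 2π/T.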

2.4.2. Topology

Because vertexes in TQM and SQM are otherwise strictly parallel, the topology of specific Feynman diagrams is the same in the two. All signs and symmetries are the same.

We have to replace the initial wave functions with the TQM versions, and for these we prefer GTFs to plane waves in any case.

We replace the SQM propagators with TQM:

We also prefer retarded to Feynman boundary conditions: it is easier to ensure convergence if we start in the past and push forwards.

2.4.3. Consistency with SQM

Despite appearances, the results for TQM are usually SQM plus dispersion in time, so we can generally treat an existing SQM calculation as a carrier for TQM: SQM plus temporal fuzz. In earlier papers we worked through a few examples of doing this.

Experiments that average over times will generally average TQM back down to SQM. We will discuss how to find the differences below.

We note that while tree diagrams will in general be similar, we see a profound difference in the handling of loops. In SQM these are often accompanied by ultraviolet infinities; in TQM they are not just renormalizable but finite. We give a detailed example below, working through how the combination of an initial dispersion in time plus entanglement in time has this effect.

2.4.4. Extension to QED

To extend the spin-zero treatment to photons and fermions we use a recipe:

Find basis/gauge in which each component separately obeys the KG equation. The usual Dirac basis does this for fermions; the Lorenz gauge does this for photons.

Require that each component now obey the FS/T equation.

Promote all energy variables that are restricted to on-shell values to variables that are allowed to be off-shell.

With the conversion to TQM done, rotate to whatever gauge or basis is most suitable for the problem in hand.

This is also the approach we will use for gravitons, using the de Donder gauge.
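The first step of the recipe can be checked with textbook manipulations, shown here for free fields in units with ħ = c = 1 and the particle-physics metric. In Lorenz gauge the vacuum Maxwell equations reduce to a massless KG equation for each component of $A^\nu$, and applying the operator $(i\gamma^\nu\partial_\nu + m)$ to the free Dirac equation $(i\gamma^\mu\partial_\mu - m)\psi = 0$ shows that each component of $\psi$ obeys the massive KG equation:

```latex
\begin{align*}
  \partial_\mu F^{\mu\nu}
    &= \partial_\mu\left(\partial^\mu A^\nu - \partial^\nu A^\mu\right)
     = \Box A^\nu - \partial^\nu\left(\partial_\mu A^\mu\right) = 0,
    \qquad \Box \equiv \partial_\mu \partial^\mu, \\
  \partial_\mu A^\mu = 0
    &\;\Longrightarrow\; \Box A^\nu = 0 \quad (\nu = 0,1,2,3), \\
  (i\gamma^\nu\partial_\nu + m)(i\gamma^\mu\partial_\mu - m)\,\psi
    &= -\left(\tfrac{1}{2}\{\gamma^\mu,\gamma^\nu\}\partial_\mu\partial_\nu + m^2\right)\psi
     = -\left(\Box + m^2\right)\psi = 0 .
\end{align*}
```

The last line uses $\{\gamma^\mu,\gamma^\nu\} = 2\eta^{\mu\nu}$; the cross terms in $m$ cancel.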

2.5. Clock Time and Coordinate Time

We now return to our two times, clock time and coordinate time. We defined the first operationally: it is what clocks measure. We defined the second formally, as a kind of fourth space dimension, like the old and now abandoned $x_4 = ict$ trick (strongly objected to in [74]).

The most obvious problem is that the clock term on the left implies the selection of a specific frame, anathema to manifest covariance. We proposed a resolution to this in paper C:

Weinberg [114] showed we can define a specific pseudo-tensor as the stress-energy of the vacuum. Dirac [26] arrived at a similar conclusion. Baryshev [8] reviews the work in this area.

Use the stress-energy pseudo-tensor to define the rest frame of spacetime. This is then an invariant definition.

Make the rest frame of spacetime the defining reference frame for TQM.

When doing measurements of TQM we correct in the analysis phase for the differences between our laboratory frame and the rest frame of spacetime.

This ensures all laboratories are working to a common reference.

A second objection is that we now have two kinds of time. We argue that we really have one kind of time but two perspectives.

We identify the coordinate time with the time coordinate used in the block universe picture. Every path has fixed start and end points in this picture. The coordinate system may vary, but the spacetime points are fixed. We treat the coordinate time as fundamental.

Now we consider the wave function of the laboratory. We redefine the laboratory time as the expectation of the coordinate time:

The wave function of the laboratory is defined over . It is an emergent property.

This should be understood as no more problematic than defining the space position of the laboratory as:
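To make the analogy concrete, here is a minimal numerical sketch. It assumes a hypothetical laboratory wave function that is a Gaussian over coordinate time t and one space coordinate x; the centres, widths, and grid are illustrative choices. The laboratory time is computed as the expectation ⟨t⟩ in exactly the same way as the laboratory position ⟨x⟩.

```python
import numpy as np

# Hypothetical laboratory wave function on a grid over coordinate time t and one space axis x.
t = np.linspace(-10.0, 10.0, 401)
x = np.linspace(-10.0, 10.0, 401)
TG, XG = np.meshgrid(t, x, indexing="ij")
t0, x0, sigma_t, sigma_x = 1.5, -2.0, 0.8, 1.2  # assumed centres and widths

# |psi|^2 is Gaussian with standard deviations sigma_t and sigma_x.
psi = np.exp(-((TG - t0) ** 2) / (4 * sigma_t**2) - ((XG - x0) ** 2) / (4 * sigma_x**2))
prob = np.abs(psi) ** 2
prob /= prob.sum()  # normalize on the grid

lab_time = float(np.sum(TG * prob))      # <t>: laboratory time as an expectation of coordinate time
lab_position = float(np.sum(XG * prob))  # <x>: laboratory position, defined the same way

print(f"<t> = {lab_time:.4f}, <x> = {lab_position:.4f}")
```

Both quantities are emergent averages over the same wave function; neither requires a separate parameter.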

2.6. Falsifiability

We now have falsification within reach but not quite within grasp. We have a way to compute in TQM anything we can currently compute with Feynman diagrams in SQM. But the expected sub-attosecond time ranges are not easy to access. Sub-attosecond times were reached by Ossiander et al., but that was a highly specialized and sophisticated project. The shortest attosecond-scale pulses are currently down to about 53 as [67], which is still too long.

There are several factors which make detection still more difficult:

Time of arrival is the most obvious measure of dispersion in time. At sub-relativistic speeds, it is dominated by the uncertainty in space: a slow packet will show a large dispersion in time of arrival whether or not dispersion in time exists.

To measure time-of-arrival we have to look at individual packets; beams will average out the start time of individual packets and thereby wash out the effects we are interested in. We can’t just create a beam of a thousand packets; we must generate each packet separately.

The HUP in time/energy looks to be the most dramatic test. But building an experimental apparatus that produces an effective slit of attosecond duration is going to be difficult.
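Rough numbers help show the scale of the difficulty. The sketch below takes the time/energy relation in the form ΔE·Δt ≥ ħ/2 and estimates the time-of-arrival spread produced by spatial spread alone as Δx/v. The function names and the example packet (1 nm wide, moving at 1 km/s) are hypothetical illustrations, not values from the text.

```python
# Order-of-magnitude estimates only; the inputs are illustrative assumptions.
HBAR_EV_S = 6.582119569e-16  # reduced Planck constant in eV*s (CODATA 2018 value, truncated)

def min_energy_spread_ev(delta_t_s):
    """Lower bound hbar / (2 * delta_t) on the energy spread, in eV, for a time window in seconds."""
    return HBAR_EV_S / (2.0 * delta_t_s)

def arrival_time_spread_s(delta_x_m, speed_m_per_s):
    """Time-of-arrival spread, in seconds, from spatial spread alone: delta_t ~ delta_x / v."""
    return delta_x_m / speed_m_per_s

print(f"53 as pulse: dE >= {min_energy_spread_ev(53e-18):.2f} eV")
print(f"1 as slit:   dE >= {min_energy_spread_ev(1e-18):.0f} eV")
# A hypothetical 1 nm packet at 1 km/s spreads its arrival over about a picosecond,
# six orders of magnitude more than an attosecond.
print(f"arrival spread: {arrival_time_spread_s(1e-9, 1e3):.1e} s")
```

A slit of attosecond duration implies an energy spread of hundreds of eV, while ordinary spatial spread in a slow packet swamps attosecond effects in the time of arrival.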

A counterbalancing factor is the extreme generality of the hypothesized effect. Every time-dependent phenomenon will show some effects of dispersion in time. Every quantum effect – entanglement, dispersion, tunneling, and so on – has an “in time” variant. The standard references on foundational experiments in QM [7,46,70] may suggest possibilities. Wilczek’s time crystals [96,117] may provide ways to amplify the effects.

And of course the difficulty of the project is not without attractions of its own.

3. Time Dispersion in Gravity

3.1. Quantization of the Metric

Whether gravity should be quantized, and if so how, is a much-vexed question. We take the position that it should be, but that raises the question: how?

We consider the alternatives. We can quantize at the level of the metric, which is well understood [9,10,5,17,21–24,26–29,45,50,59,62,76,77,80,83,88,98,103,114]. Or we can quantize at the level of a hypothesized underlying spacetime. There are two problems with working with an underlying spacetime:

Which spacetime? There are many: string theory, loop quantum gravity, causal set theory, causal dynamical triangulation, and so on.

Most show their effects at the Planck scale. For the most part, this means there is no real prospect of falsifying any results.

By working at the metric level we are working with a kind of lowest common denominator of most or all of the proposed spacetimes. Gravitons are then quantized ripples on the metric.

where $\eta_{\mu\nu}$ is the Minkowski metric, using particle-physics conventions: $\eta_{\mu\nu} = \mathrm{diag}(1,-1,-1,-1)$.

3.2. Free Gravitons

We now build up the free wave functions and propagator for gravitons. The treatment here is abbreviated from [50,62,109,110].

The matter-free action in general relativity is:

We are ignoring the question of ghosts for the moment. If we vary the action with respect to the metric we get the free Einstein equations of motion. If we take a second variation, we get the propagator. For instance [110]:

To apply TQM we need to use a gauge where each component separately obeys the KG equation; with this we can apply the recipe we used with QED (Section 2.4.4) to get the TQM propagator. We will refer to TQM applied to QGR as TGR (time + General Relativity). From this point forward we will generally use QGR to mean specifically GR quantized according to the rules of SQM, i.e. with time as parameter rather than time as observable.
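Why the de Donder gauge does this job can be seen from the standard linearized vacuum field equations. The sketch below writes the metric as $g_{\mu\nu} = \eta_{\mu\nu} + h_{\mu\nu}$ with no coupling constant (a constant rescaling of $h_{\mu\nu}$ does not change the vacuum equation) and uses the trace-reversed field $\bar h_{\mu\nu}$. Overall sign conventions for the Einstein tensor vary between references but do not affect the conclusion:

```latex
\begin{align*}
  \bar h_{\mu\nu} &\equiv h_{\mu\nu} - \tfrac{1}{2}\eta_{\mu\nu} h,
    \qquad h \equiv \eta^{\mu\nu} h_{\mu\nu}, \qquad \bar h = -h, \\
  G^{(1)}_{\mu\nu} &= -\tfrac{1}{2}\left[\Box \bar h_{\mu\nu}
    - \partial_\mu \partial^\rho \bar h_{\rho\nu}
    - \partial_\nu \partial^\rho \bar h_{\rho\mu}
    + \eta_{\mu\nu}\, \partial^\rho \partial^\sigma \bar h_{\rho\sigma}\right] = 0, \\
  \partial^\mu \bar h_{\mu\nu} = 0
    &\;\Longrightarrow\; \Box \bar h_{\mu\nu} = 0
    \;\Longrightarrow\; \Box h_{\mu\nu}
      = \Box\left(\bar h_{\mu\nu} - \tfrac{1}{2}\eta_{\mu\nu}\bar h\right) = 0 .
\end{align*}
```

With the gauge condition imposed, every one of the ten components obeys the massless KG equation separately, which is what the TQM recipe requires.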

We therefore shift to the de Donder gauge:

We can then shift to the “trace-reversed” form:

This gives the simple form for the matter-free Einstein equation:

The key point here is that we can now apply the recipe we used to go from SQM to TQM in QED, so we write out the corresponding FS/T equation as:

With only two physical degrees of freedom, we can describe a graviton using only two polarization matrices. For example, assume the graviton is traveling in the z direction:

We can take the two polarization matrices as:

These are sometimes called the plus (+) and cross (×) polarizations.
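The standard plus and cross tensors for motion along z can be checked numerically. This is a minimal sketch, assuming index order (t, x, y, z), the metric diag(1, -1, -1, -1), and a 1/sqrt(2) normalization; these conventions are our choices and may differ from the displayed matrices. Both tensors are symmetric, traceless, and transverse to the null four-momentum, and they are orthonormal under full contraction.

```python
import numpy as np

ETA = np.diag([1.0, -1.0, -1.0, -1.0])  # particle-physics metric, index order (t, x, y, z)

def polarizations_z():
    """Assumed plus and cross polarization tensors (lower indices) for a graviton moving along +z."""
    eps_plus = np.zeros((4, 4))
    eps_plus[1, 1], eps_plus[2, 2] = 1.0, -1.0
    eps_cross = np.zeros((4, 4))
    eps_cross[1, 2] = eps_cross[2, 1] = 1.0
    return eps_plus / np.sqrt(2.0), eps_cross / np.sqrt(2.0)

def contract(a, b):
    """Full contraction a_{mu nu} b^{mu nu}; eta is its own inverse, so it raises both indices."""
    return float(np.sum(a * (ETA @ b @ ETA)))

def properties(eps, k_up):
    """Check symmetry, tracelessness, transversality, and the norm of a polarization tensor."""
    return {
        "symmetric": bool(np.allclose(eps, eps.T)),
        "traceless": bool(np.isclose(np.sum(ETA * eps), 0.0)),  # eta^{mu nu} eps_{mu nu}
        "transverse": bool(np.allclose(k_up @ eps, 0.0)),       # k^mu eps_{mu nu}
        "norm": contract(eps, eps),
    }

k_up = np.array([1.0, 0.0, 0.0, 1.0])  # null four-momentum along z (omega = |k| = 1)
eps_p, eps_x = polarizations_z()
print("plus: ", properties(eps_p, k_up))
print("cross:", properties(eps_x, k_up))
print("overlap:", contract(eps_p, eps_x))
```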

We can write the graviton wave function in terms of these two polarizations:

The corresponding TGR free wave function is:

3.2.1. Fock space in TGR

This gives us the Fock space as the sum over these free wave functions:

With creation and annihilation operators:

And wave function operators:

3.2.2. TGR Propagators

The same procedure as with TQM gives us the free graviton propagator. We start with the (clock) time-ordered product:

We use the same approach as for TQM. The result is:

The factor $I$ ensures that the graviton matrix stays symmetric.
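If, as is common in the literature, $I$ denotes the symmetrizer $I_{\mu\nu\alpha\beta} = \frac{1}{2}(\eta_{\mu\alpha}\eta_{\nu\beta} + \eta_{\mu\beta}\eta_{\nu\alpha})$ (an assumption on our part, since the propagator itself is not reproduced here), its effect can be checked numerically. Contracting it with any $h^{\alpha\beta}$ returns the symmetric part of $h$, and applying it twice changes nothing, so it acts as a projector onto symmetric matrices. The names `I_lower` and `apply_I` are hypothetical.

```python
import numpy as np

ETA = np.diag([1.0, -1.0, -1.0, -1.0])  # particle-physics metric

# Assumed form: I_{mu nu alpha beta} = (eta_{mu alpha} eta_{nu beta} + eta_{mu beta} eta_{nu alpha}) / 2
I_lower = 0.5 * (np.einsum("ma,nb->mnab", ETA, ETA) + np.einsum("mb,na->mnab", ETA, ETA))

def apply_I(h_lower):
    """Return I_{mu nu alpha beta} h^{alpha beta} for a 4x4 array h_{alpha beta}."""
    h_upper = ETA @ h_lower @ ETA  # raise both indices (eta is its own inverse)
    return np.einsum("mnab,ab->mn", I_lower, h_upper)

rng = np.random.default_rng(0)
h = rng.normal(size=(4, 4))  # a deliberately non-symmetric test matrix
print(np.allclose(apply_I(h), 0.5 * (h + h.T)))  # symmetric part of h
```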

We therefore have the free TGR wave functions and propagator. We will refer to this as the simple propagator.

Note that during the derivation we required that the individual components of the graviton obey the KG equation. But now that we have established the mapping from QGR to TGR, we are permitted to undo the gauge transformations that achieved this simplicity.

We can therefore shift back to de Donder gauge:

3.3. Action for Gravitons

We have found Jakobsen [62] particularly helpful. He approaches QGR from a QFT perspective, as we do, and gives a detailed account of the propagator, vertexes, and so on. We are primarily interested in self-interactions of the graviton field.

We start with the Einstein-Hilbert (EH) and matter actions. The QGR action is given by:

where $G$ is the gravitational constant.

Ghosts

To enforce the de Donder gauge, or another gauge, using the Faddeev-Popov method [30,94,99], we will need a ghost term:

We are not concerned with the specifics of the ghost fields. They let us keep using the preferred propagator, either the very simple de Donder propagator or the default propagator, and define the vertex structure that goes with it. We will take the vertex structures as given in Jakobsen.

As with QFT, the vertex structure is the same in TGR as in QGR (except for adding coordinate time as the 4th dimension at each point).

Path Integrals

In the path integral approach to QGR, we integrate over a series of paths:

In the single-particle case it is common to start with the classical solution, then write the path integrals as corrections to it. The same approach works in QGR: we start with an existing metric, such as the Schwarzschild metric, then write the path integral in terms of quantum corrections to it. This is referred to as the “background field” approach.

We will take a simpler approach here. We take advantage of the fact that any metric can be made locally Minkowskian, and work locally. We lose generality but gain simplicity, which is appropriate in a first attack on the problem.

Laboratory Time

To develop in parallel with TQM for QED, we need a clock time to serve as reference. For the development here the specific choice of clock time is not critical; we only require a well-defined and consistent choice.

If we are dealing with one of the many “table-top” experiments which have been proposed [6,13,16,19,58,64], we can continue to use the laboratory time. Still, gravity experiments, in the nature of things, tend toward larger scales. In some cases, we may want to take the entire universe as our laboratory. In this case the obvious choice for the clock time is the cosmic time as defined in the cosmological model in use.

3.4. Three Graviton Vertex

To promote the vertexes from QGR to TGR we can use the same procedure as for the spin-zero case earlier (Section 2.4.1).

Unfortunately, the original graviton/graviton interactions are extremely complex. And since QGR is a non-linear gauge theory, we have to include ghost terms in the action, which adds considerably to the complexity. DeWitt [23] notes there are 171 terms in the three-point vertex and 2850 terms in the four-point vertex, although he goes on to reassure us that with appropriate symmetrizations these may be reduced to 11 and 28 respectively.

We confine ourselves to the three-graviton vertex here.

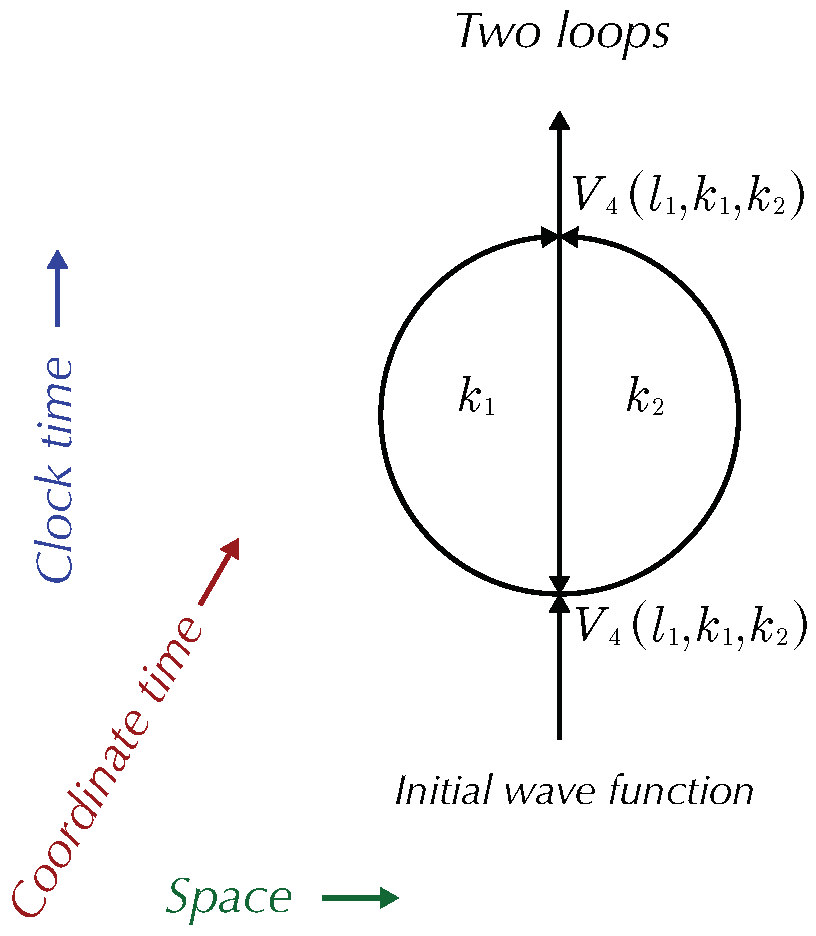

Figure 3.1.

Three graviton vertex.


We are tracking the derivation in Jakobsen. We assume the metric is locally flat and that there is no nearby matter to complicate things. We skip the steps used to reduce the EH Lagrangian to a sum of products of powers of the metric and its first derivatives. We use the QGR form of the action to derive the three-graviton vertex. Once we have the vertex in QGR, we promote it to a three-graviton vertex in TGR using the rule in Section 2.4.1.

For the three-graviton vertex in de Donder gauge, the ghost contribution is zero. Therefore in this specific case we need only the cubic term in the EH action. To get it, we read off the third-order terms in h from:

We can expand the action in powers of h keeping only those with exactly three powers of h. There are sixteen such, four from the determinant and twelve from the remaining terms.

In SQM each vertex is accompanied by a δ-function in three-momentum plus a δ-function in clock energy, reflecting the fact that it is normal in QGR to take the limit of infinite clock time. It is at this point in the derivation that we promote the vertex from QGR to TGR. Using the convention that all momenta are inbound, we have:

The vertex is given (in Jakobsen’s notation) by:

We have one pair of indices for each of the three incoming gravitons. The vertex may be expanded out in terms of “U” functions. The U functions are extremely complicated, but they are not functions of the momenta; they are constant tensors with eight indexes. In a certain sense the U function appears only once here; the second and third terms are symmetrized versions of the first. Each of the three terms gives up six of its eight indexes to the incoming gravitons, leaving the other two to pair with each of the three possible pairs of graviton momenta.

Note that the vertex is completely symmetric in its three inputs, and that two powers of momentum are present. It is these two powers of momentum that are at the heart of the renormalization problem: they are what make the SQM loops for QGR hard to renormalize. In a self-energy loop there are two of these vertexes, so the integrals go as:

where is a cutoff in momentum.
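A standard power-counting sketch makes the growth explicit. It assumes naive counting only (no cancellations between diagrams): each loop contributes $d^4 l$, each three-graviton vertex two powers of momentum, and each internal graviton propagator $1/l^2$. The function names are hypothetical.

```python
def superficial_degree(loops, internal_lines, vertices, dim=4):
    """Naive superficial degree of divergence D of a pure-graviton diagram with three-graviton vertexes.

    Each loop gives d^dim l, each vertex two powers of momentum, each internal propagator 1/l^2.
    D > 0 means the loop integral grows like cutoff**D.
    """
    return dim * loops + 2 * vertices - 2 * internal_lines

def loops_from_topology(internal_lines, vertices):
    """Euler relation for a connected diagram: L = I - V + 1."""
    return internal_lines - vertices + 1

# One-loop self-energy: two vertexes joined by two internal graviton lines.
one_loop = superficial_degree(loops_from_topology(2, 2), 2, 2)  # 4, i.e. ~ cutoff**4
# A two-loop self-energy with four cubic vertexes and five internal lines.
two_loop = superficial_degree(loops_from_topology(5, 4), 5, 4)  # 6
print(one_loop, two_loop)
```

Substituting I = L + V - 1 gives D = 2L + 2 in four dimensions, independent of the number of vertexes, so the divergences worsen with each additional loop.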

3.5. Feynman Rules for Gravitons

Topology

We now have all we need to write the Feynman diagrams for TGR. We assume we start with a set of standard Feynman diagrams for QGR. The topology and symmetries are the same as with QGR. We look here purely at the graviton part. The changes for the propagators and vertexes are as given above. The specifics of the loop integrals will be discussed in the next section.

We have worked here by using the standard QGR procedures to develop the Feynman rules, then using the Feynman rules as the basis for promoting from SQM to TQM (QGR to TGR). We could also have worked, perhaps a bit more traditionally, within the Lagrangian framework and then used the Dyson series to develop the Feynman rules from it. We did that in paper C. However, the approach from the Feynman rules seems more intuitive, and in the interests of brevity we present only one approach.

Initial Graviton Wave Function

The initial graviton wave functions have to be written with four rather than three dimensions, as earlier.

We can estimate the extension in energy for an individual wave function using the method described earlier. The polarization part is unchanged.
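As a generic illustration, and not necessarily the method referred to above, a Gaussian profile in coordinate time whose probability density has width σ_t has a spread in coordinate energy of 1/(2σ_t) in units with ħ = 1, the minimum-uncertainty case. The sketch below checks this with a discrete Fourier transform; the function name `energy_spread` and the grid parameters are our own choices.

```python
import numpy as np

def energy_spread(sigma_t, n=2**14, span=40.0):
    """Standard deviation of |phi(w)|^2 for a Gaussian packet in coordinate time (hbar = 1).

    psi(t) = exp(-t^2 / (4 sigma_t^2)), so |psi|^2 has standard deviation sigma_t.
    The grid covers span * sigma_t in time, wide enough that the packet vanishes at the edges.
    """
    t = (np.arange(n) - n // 2) * (span * sigma_t / n)
    dt = t[1] - t[0]
    psi = np.exp(-t**2 / (4 * sigma_t**2))
    phi = np.fft.fftshift(np.fft.fft(psi))  # an overall phase from the grid offset drops out of |phi|^2
    w = 2 * np.pi * np.fft.fftshift(np.fft.fftfreq(n, d=dt))  # angular frequency (energy, hbar = 1)
    prob = np.abs(phi) ** 2
    prob /= prob.sum()
    mean = np.sum(w * prob)
    return float(np.sqrt(np.sum((w - mean) ** 2 * prob)))

print(energy_spread(0.7), 1 / (2 * 0.7))
```

For example, `energy_spread(0.7)` should return approximately 1/(2 × 0.7) ≈ 0.714; halving the width in coordinate time doubles the spread in coordinate energy.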

The initial QGR wave function:

goes to the TGR wave function:

Final Wave Functions

Feynman diagrams are implicitly optimized for scattering experiments, where the particles come in from $-\infty$ and are detected at $+\infty$.

Since at such long times there is unlikely to be a detectable difference between TGR and QGR, we look at short times. At short times we have to replace the traditional δ-function in clock energy with explicit integrals over clock time:

but expand the remaining three-dimensional overall delta functions into four-dimensional delta functions:

We are using k for the external momenta here, but l for the internal.