Introduction

Artificial intelligence (AI) chatbots are generative chat tools built on large language models (LLMs), such as GPT-3.5, GPT-4, PaLM, and LLaMA. In the past year, AI chatbots (e.g. Bard, Bing Chat, and ChatGPT) have received great interest from the media, public, policy makers, and researchers from various fields (Bowman 2023). Educational researchers have also found generative chat engines an interesting research topic. In particular, higher education institutions (HEIs) have been at the frontier of educational use of AI chatbots since long before the recent public awareness of LLM-based software. This is clearly seen from several recent review articles (Okonkwo and Ade-Ibijola 2021; Dempere et al. 2023; Lo and Hew 2023). A current trend is that major companies and research institutions around the world are investing in the development of LLMs, promoting their rapid evolution. New use cases and applications are invented constantly, and AI chat technology is rapidly becoming ubiquitous (Bowman 2023). In this regard, the educational field must adopt this new technology and develop pedagogically meaningful use cases for it (Lim et al. 2023).

Technically, LLMs are statistical next-word predictors. However, from a human perspective, LLMs seem to have highly intelligent creative abilities. Bowman (2023) reviewed multiple studies where the ability of LLMs to learn and predict seems to produce novel text and representations that are valuable for users. According to Bowman, the reason for this is that LLMs are trained in a representationally rich environment through a massive amount of data using versatile training methods, including interaction with various types of software. They are also capable of conducting searches within databases in response to user information requests (Panda and Chakravarty 2022). This is interesting from an educational perspective. For example, LLMs can pass visual tests that measure reasoning (He et al. 2021), deduce what the author knows about the topic, produce reasonable text suggestions to support writing (Andreas 2022), and generate novel images and guide users on how to draw them (Bubeck et al. 2023). The current challenge is that even though LLMs seem to provide meaningful output in many cases, there are no reliable techniques to control the content generation. There is also a learning curve to consider with, for example, prompt crafting, as a short interaction with LLMs does not seem to produce good results (Bowman 2023). To maximize success with LLMs, users need to learn how to think step-by-step and formulate prompts using an iterative process (Kojima et al. 2022).
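To make the phrase "statistical next-word predictor" concrete, the following minimal sketch counts which word follows which in a tiny text and predicts the most frequent continuation. It is a toy bigram model, not how LLMs are implemented: real LLMs use neural networks trained on vastly larger data. The function names `train_bigram` and `predict_next` and the example corpus are assumptions introduced only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, chosen only for illustration.
corpus = "acids donate protons and bases accept protons and acids donate protons"
model = train_bigram(corpus)
print(predict_next(model, "acids"))  # the word seen most often after "acids"
```

Even this toy version shows why output quality depends on the training data: a word that never appeared in the corpus yields no prediction at all.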

As mentioned, scholars working in higher-education studies have been active in AI chatbot research, providing knowledge on, for example, potential use cases, risks, and students’ perceptions of chatbot usage. For example, Strzelecki (2023) found that the three strongest reasons for higher education students to use ChatGPT in learning were habit of usage, expectations for improving performance, and hedonic motivation. These results were based on a data sample of 543 students’ self-reports, thus providing a trustworthy perspective on motivational factors of early adopters. Cooper (2023) highlights potential risks of LLMs, such as fake citations, reproduction of biases found in training data, need for content moderation, copyright issues, and environmental impacts such as carbon dioxide emissions and high energy consumption. From a positive perspective, Jauhiainen and Guerra (2023) argue that generative AI can revolutionize digital education and offer major possibilities to support sustainable development goals, especially sustainable education (SDG4). This could be achieved, for example, by using AI to provide access to high-quality learning environments and up-to-date information for all. However, the adoption of new technology increases the complexity of the information environment. This sets new requirements for teaching information literacy that must be considered in teacher education (Dahlqvist 2021). In summary, scholars approach the new technology cautiously by identifying potential risks. However, these scholars also see several educational possibilities, such as a tool to create new exercises and to support writing (Crawford, Cowling, and Allen 2023; Sullivan, Kelly, and McLaughlan 2023).

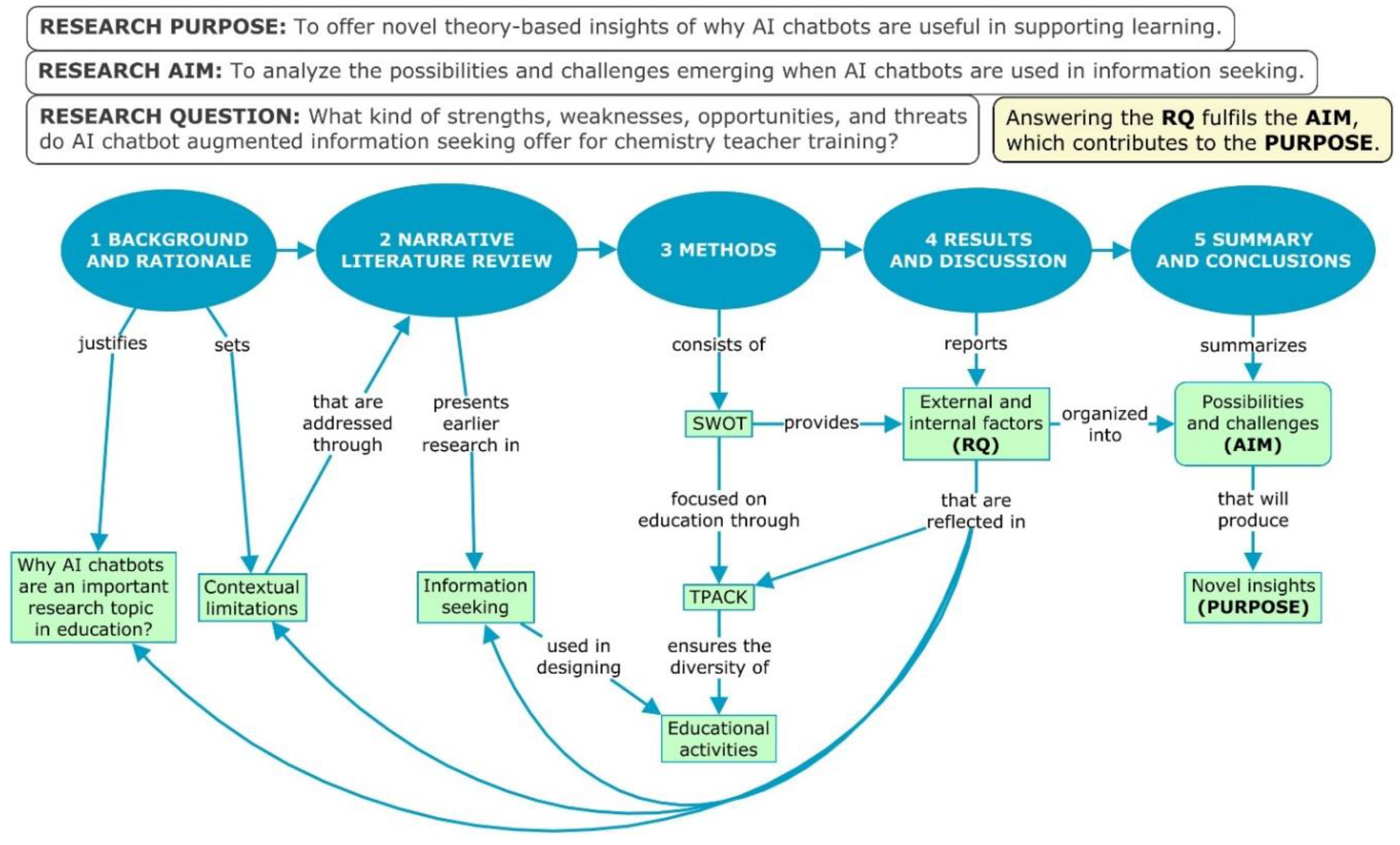

Indeed, the implementation of AI chatbots is a very current topic in educational research. New articles are published constantly. Although it seems that much research has been done, according to many authors (e.g. Dempere et al. 2023; Jauhiainen and Guerra 2023; Strzelecki 2023) there is a need to develop research-based models on how to use these tools to support learning and teaching in practice. We agree with this need and use it as the rationale for this article. The purpose of this article is to offer novel theory-based insights about the usefulness of AI chatbots in supporting learning. However, the challenge with this kind of broad purpose statement is that the educational usage of AI chatbots is a highly multidisciplinary topic, offering endless perspectives to address. Therefore, to make a valuable contribution to the scientific discussion, the article needs a clear focus that positions AI chatbots in an educational context. A well-defined focus requires several contextual limitations, which we describe and justify next.

First, as AI chatbots are technological applications of LLMs, we argue that AI chatbot-related educational research needs to define AI chatbots as a technological invention. This enables defining the educational adoption of AI chatbots as an innovation (Denning 2012), which is a central concept for the diffusion of new educational technology (Rogers 2003). From the research literature, one can already find multiple examples of how to implement AI chatbots in education (see Okonkwo and Ade-Ibijola 2021; Dempere et al. 2023). However, even though there are ready-made solutions available, the field will not immediately adopt them on a broad scale. This is due to the nature of innovations. It takes time before an innovation is communicated throughout the educational community, including teachers, students, and other stakeholders (Rogers 2003). Fortunately, there are ways to expedite the diffusion. Ertmer et al. (2012) suggest that the adoption can be supported by identifying and removing barriers that hinder teachers’ usage of new technology.

Obstacles can be external or internal. External challenges, such as hardware, software, training, and support, are called first-order barriers. Second-order barriers are internal challenges, such as teachers’ beliefs, attitudes, skills, knowledge, confidence, and experienced value for supporting teaching and learning (Ertmer and Hruskocy 1999). First-order barriers are significant and can prevent technology usage completely. However, they are often clearly identifiable and can be removed rapidly (Ertmer et al. 2012). For example, when ChatGPT was released for public use on 30 November 2022, it instantly removed barriers related to software access (OpenAI 2022). Training and support can also be arranged rapidly if needs are identified and there are available resources for training. Therefore, the second-order barriers are the real challenge in supporting the adoption of educational technology. Teachers will not start using new tools without skills and understanding of their possibilities and challenges (Ertmer et al. 2012). This is the reason why we focus on removing the second-order barriers by providing insights into the educational possibilities and challenges of AI chatbot usage.

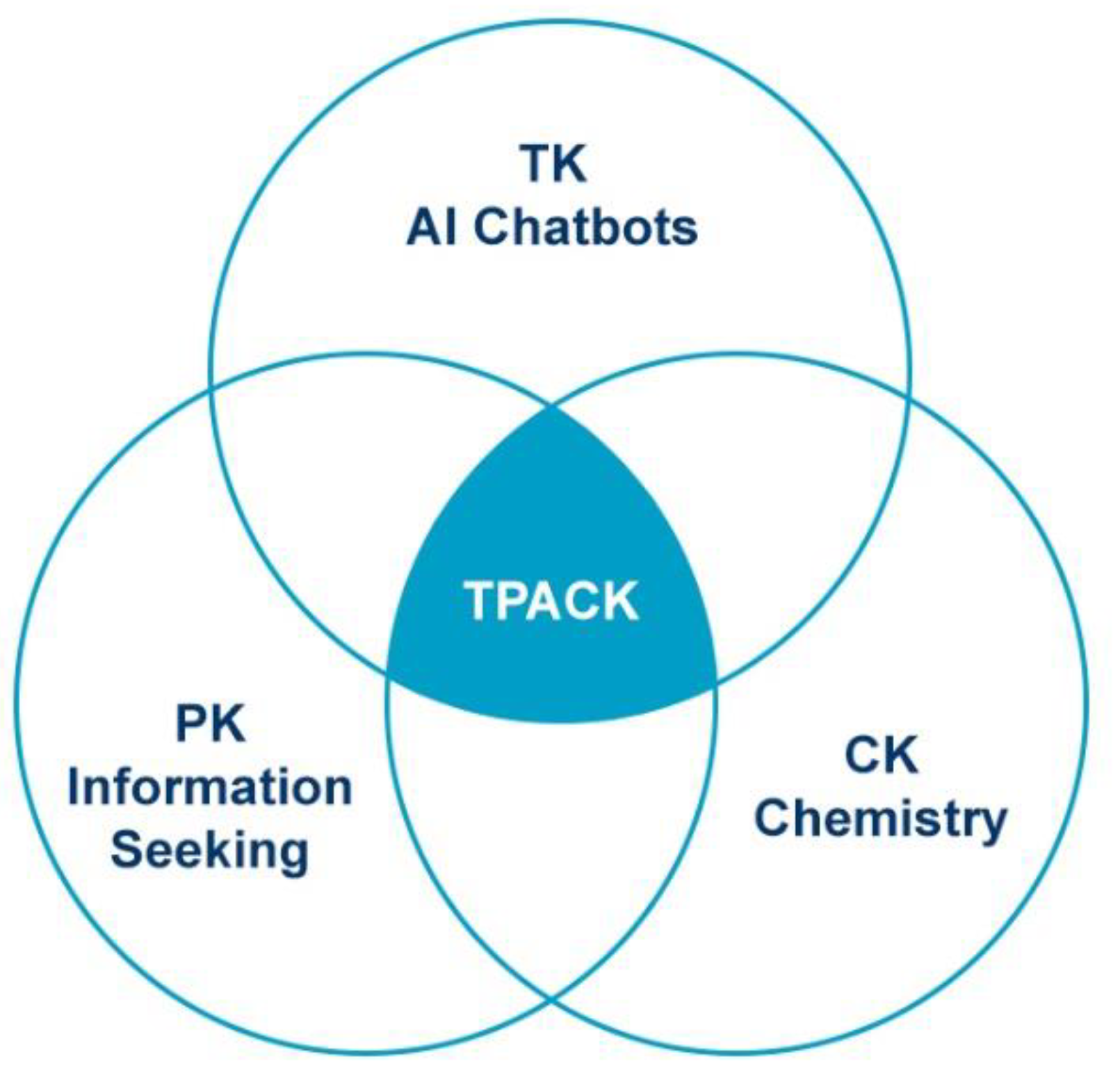

There are several research-based frameworks that support modeling of the knowledge domains required in describing the full innovation environment. In this research, we have selected the technological pedagogical content knowledge (TPACK) framework as the modeling tool (Koehler, Mishra, and Cain 2013). TPACK was selected because it is a widely used framework and there are several successful research cases where it has been used in modeling the knowledge domains that affect adoption of educational technology (Voogt et al. 2013).

First-order barriers are mainly related to technological knowledge (TK) and are easily identified in the case of AI chatbots. However, the removal of second-order barriers requires pedagogical knowledge, which adds complexity to the innovation work.

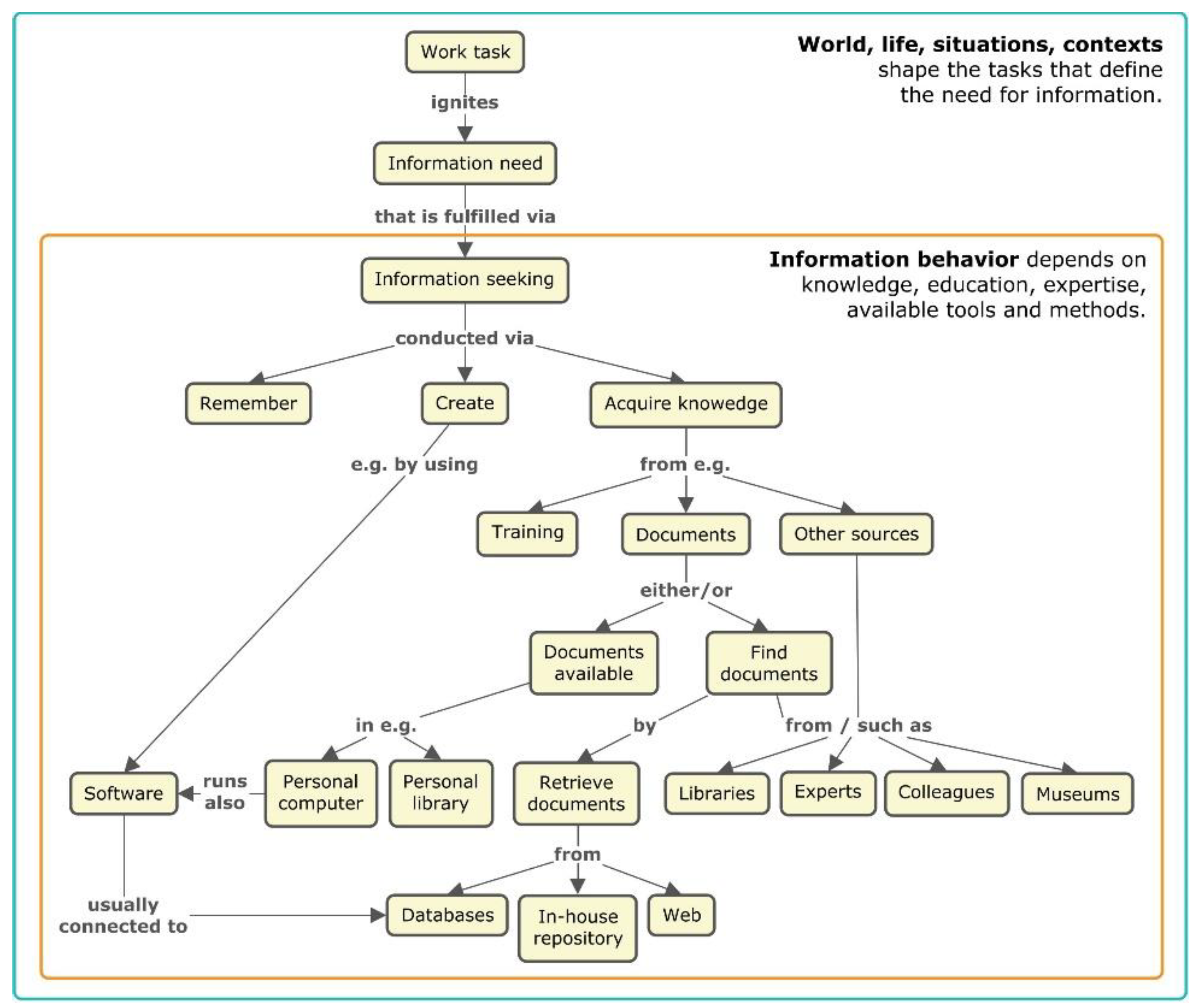

Second, to contribute directly to the educational discussion, the article needs a pedagogical context. For the pedagogical knowledge (PK) component, we have selected information seeking. In this article, we define information seeking as purposive interaction with information (Ingwersen and Järvelin 2005) (see section 2). The context was selected because good information-seeking skills are essential in academic work (Gordon et al. 2018) and present in all learning and problem-solving activities to some extent (Shultz and Li 2016). In addition, the topic is important for the educational sector because teachers need new tools for managing the rapidly expanding information environment. Lack of information skills can lead to inequality between people (Parissi et al. 2023). Without up-to-date information literacy skills, teachers cannot promote lifelong learning skills and support the sustainability and equality of education for all (SDG4) (Dahlqvist 2021).

There is also a gap in research that combines AI chatbots and information seeking in an educational context. Therefore, the information-seeking context also makes this research interesting for the field of information sciences. There is a shortage of task-specific technology-driven information-seeking studies (Järvelin and Ingwersen 2004; Ingwersen and Järvelin 2005), and more research is needed whenever new tools and technology are developed (Zamani et al. 2023).

The third contextual limitation is derived from the content knowledge (CK) component of the TPACK framework (see Figure 1). We have included the content knowledge perspective into the innovation environment by conducting the research in an authentic higher education setting of chemistry teacher education. This enables us to analyze the selected technological and pedagogical contexts through chemical information.

With these three contextual limitations and the selected higher educational setting, we can formulate a clear focus. The aim of this theoretical research is to analyze the possibilities and challenges emerging when AI chatbots (TK) are applied in educational information seeking (PK) of chemical information (CK) in pre-service chemistry teacher education (HEI). The analysis was conducted by first preparing a narrative literature review (Green, Johnson, and Adams 2006) on information seeking (see section 2). The insights of the literature review were used in designing educational chemical information-seeking activities that were analyzed via a SWOT approach (Benzaghta et al. 2021). The educational use cases of AI-assisted information seeking were specifically designed for future chemistry teacher education courses at the University of Helsinki. To ensure pedagogical diversity, we present one example from each core knowledge component of TPACK (see section 3). We analyzed the possibilities and challenges via SWOT, guided by the following research question: what kind of strengths, weaknesses, opportunities, and threats does AI chatbot-assisted information seeking offer for chemistry teacher education? In section 4, we report the analysis results and reflect on them in light of the presented background literature. Lastly, in section 5 we present a summary and research-based conclusions organized using the SWOT model.

Through this research design (see Figure 2), we can produce novel insights and address the stated broader purpose from a unique interdisciplinary perspective that is especially relevant for chemistry teacher education. This research contributes to the identified research gaps by providing research-based knowledge that is useful for all who design educational AI chatbot applications, use cases, and pedagogical models.

SWOT analysis

SWOT is a descriptive method that enables analysis of possibilities and challenges by categorizing features into internal strengths and weaknesses and external opportunities and threats (Gürel and Tat 2017; Benzaghta et al. 2021). Therefore, SWOT offers more analytical accuracy than just focusing on possibilities and challenges, which is why we have chosen this as the analysis approach. Although SWOT was originally developed in the 1960s to support strategic business planning (Puyt, Lie, and Wilderom 2023), due to its practical nature, there are several research examples where SWOT has been applied in analyzing educational technology contexts, such as 360° virtual reality (Kittel et al. 2020; Roche et al. 2021), educational cheminformatics (Pernaa 2022), and sports technology (Verdel et al. 2023).

In addition, because our research context is highly interdisciplinary, combining learning, information seeking, and educational technology, we decided to add an extra layer to SWOT to improve the accuracy even further. To ensure that the analysis is focused strictly on educational possibilities and challenges, we used the TPACK framework to guide the analysis inside SWOT sections (Mishra and Koehler 2006).
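The two-layer categorization described above can be sketched as a small data structure: each finding is placed in a SWOT quadrant by answering two questions (internal or external, helpful or harmful) and is additionally tagged with a TPACK knowledge domain. This is only an illustrative sketch, not the coding scheme used in the analysis. The class `Finding`, the function `group_by_quadrant`, the reading of "internal" as "belonging to the tool itself", and the example entries with their domain tags are all assumptions.

```python
from dataclasses import dataclass

# Two yes/no questions about a finding determine its SWOT quadrant.
QUADRANTS = {
    (True, True): "strength",       # internal and helpful
    (True, False): "weakness",      # internal and harmful
    (False, True): "opportunity",   # external and helpful
    (False, False): "threat",       # external and harmful
}


@dataclass(frozen=True)
class Finding:
    description: str
    internal: bool      # assumed reading: the feature belongs to the tool itself
    helpful: bool       # True if it supports the educational goal
    tpack_domain: str   # e.g. "TK", "PK", "CK", "PCK", "TCK", "TPK"

    @property
    def quadrant(self) -> str:
        return QUADRANTS[(self.internal, self.helpful)]


def group_by_quadrant(findings):
    """Return a dict mapping each SWOT quadrant to its findings."""
    grouped = {name: [] for name in QUADRANTS.values()}
    for finding in findings:
        grouped[finding.quadrant].append(finding)
    return grouped


# Illustrative entries only; wording and domain tags are assumptions.
findings = [
    Finding("Chatbot generates working source code", True, True, "TK"),
    Finding("Free software tier may become restricted", False, False, "TK"),
    Finding("Evaluating output requires basic content knowledge", True, False, "CK"),
]

for quadrant, items in group_by_quadrant(findings).items():
    for item in items:
        print(f"{quadrant:<12}{item.tpack_domain:<5}{item.description}")
```

Keeping the TPACK tag separate from the quadrant means the same set of findings can be read either by SWOT section or by knowledge domain.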

TPACK Framework

TPACK is a widely adopted model that facilitates understanding of different knowledge types needed for successful use of educational technology. The TPACK framework consists of several overlapping knowledge domains, often visualized through a Venn diagram (see Figure 5) (Koehler, Mishra, and Cain 2013). The three already introduced core components are PK (how to learn or write), CK (concepts, theories, and research techniques), and TK (knowledge of devices, software, communication tools). The interaction of the core components forms three hybrid knowledge categories. Pedagogical content knowledge (PCK) is, for example, knowledge of challenges in learning some specific concept. In this research, PCK refers to the interaction of chemical information and information seeking. Technological content knowledge (TCK) enables using technology to support learning of some specific concepts. In this research, the usage of AI chatbots for interacting with chemical information is considered TCK. Technological pedagogical knowledge (TPK) is understanding the possibilities that technology offers for learning. In this research, prompting technological advice is considered TPK (Koehler and Mishra 2005; Mishra and Koehler 2006; Koehler, Mishra, and Cain 2013). Note that TPK is not subject specific and does not overlap directly with CK (see Figure 5).

The TPACK model has been criticized for being rather unclear or impractical (Willermark 2018). One problem is the diversity and inaccuracy of definitions for different knowledge components (Graham 2011). For example, Cox (2008) analyzed TPACK research literature and found 13 definitions for TCK, 10 definitions for TPK, and 89 definitions for TPACK. Such variance makes it difficult for researchers to understand and use the framework systematically to support diffusion of innovations and to measure different TPACK domains in consensus. For example, TK includes all modern (e.g. smartphones, internet, and AI chatbots) and traditional technologies (e.g. pencils and chalkboards) under the same knowledge domain (Graham 2011). Because of this broad categorization, some researchers have developed more accurate definitions. For example, Angeli and Valanides (2009) have conceptualized a knowledge domain called ICT-TPCK to represent the information and communication technological aspects of TPACK. Some criticism has also been directed towards the actual TPACK visualization (see Figure 5). Graham (2011) argues that according to the TPACK model visualization, PK is not needed in TCK (no overlap between PK and TCK). Nevertheless, there are still many TCK definitions that include the aspect of learning. Graham emphasizes that there is a lot of work to be done in defining TPACK and its knowledge components, because “precise definitions are essential to a coherent theory” (Graham 2011, 1955).

We agree with the inaccuracy claims of the TPACK model. Every model has its strengths and limitations. The authors cited have provided constructive criticism that has supported the development of the TPACK concept. Despite the criticism, TPACK has been used successfully in hundreds of research cases in modeling the knowledge components needed to use educational technology. Therefore, we are confident in using TPACK to increase the analysis accuracy of our SWOT analysis.

Activity 1: Write a Summary (PK to TPACK)

In the first activity, chemistry student teachers were assigned to read an article about microcomputer-based laboratories (Aksela 2011) and write a 250-word summary in Finnish. The designed information need was to become orientated with the topic before laboratory work. The information behavior needed to complete this assignment leaned strongly towards creating knowledge (see Figure 2) (Ingwersen and Järvelin 2005).

We supported the work process via the following instructions:

1. Generate a summary from the article via AI-PDF software (for example, pdf2gpt software [https://pdf2gpt.com]).

2. Ask an AI chatbot tool (e.g., ChatGPT, Bard, or Bing Chat) to refine it to 250-word length.

3. Translate the text to Finnish via the same tool.

4. Examine the text, correct language and readability, and add the required infographic or table mentioned in the evaluation criteria.

5. Describe the entire working process (prompts included) below the summary sufficiently precisely such that it can be repeated if desired.

6. Reflect on the possibilities and challenges of the work process in 250 words.
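The requirement to document the working process, prompts included, so that it can be repeated can be supported with a simple log. The following minimal sketch records each prompt together with the tool used and checks the length of a draft against the 250-word target. The names `WorkLog`, `PromptStep`, and `within_limit`, the example prompts, and the 10% length tolerance are assumptions made for illustration and were not part of the assignment.

```python
from dataclasses import dataclass, field


@dataclass
class PromptStep:
    tool: str     # which software received the prompt
    prompt: str   # the exact prompt text, so the step can be repeated


@dataclass
class WorkLog:
    steps: list = field(default_factory=list)

    def record(self, tool, prompt):
        """Append one prompt to the log in the order it was used."""
        self.steps.append(PromptStep(tool, prompt))

    def as_text(self):
        """Render the log as a numbered list to paste below the summary."""
        return "\n".join(
            f"{number}. [{step.tool}] {step.prompt}"
            for number, step in enumerate(self.steps, start=1)
        )


def word_count(text):
    """Count whitespace-separated words."""
    return len(text.split())


def within_limit(text, limit=250, tolerance=0.1):
    """Check the draft length; the 10% tolerance is an assumed margin."""
    return abs(word_count(text) - limit) <= limit * tolerance


log = WorkLog()
log.record("pdf2gpt", "Summarize the article")
log.record("ChatGPT", "Shorten the summary to 250 words")
print(log.as_text())
```

A log of this kind covers only the prompts; the manual corrections made when examining the text still need to be described in prose.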

We categorized this activity under PK, as academic writing is not always CK dependent. Software tools represent the TK domain, making the driving factor of the assignment TPK. However, the context of the activity is microcomputer-based laboratory (CK), which is why it ultimately activates the entire TPACK framework (Mishra and Koehler 2006; Koehler, Mishra, and Cain 2013).

Activity 2: Create a Concept Map (CK to TCK)

The second activity was a concept-mapping exercise. In this exercise, chemistry student teachers chose a chemical concept found in the Finnish curriculum, such as energy or a chemical reaction. The students then made a Novakian concept map including about 20 concepts, 3-4 hierarchy levels, links, and images (see Novak and Cañas 2006).

This exercise activates all three major information-seeking strategies (Ingwersen and Järvelin 2005). Our design conjecture was that students will remember some concepts but not all 20. Therefore, they must acquire conceptual knowledge and create relations between them. In this regard, the information need was to remember basic-level chemistry knowledge and model a larger conceptual system. To acquire chemical information and to create relations between concepts, they were encouraged to use AI chatbots and textbooks. The role of AI chatbots was to strengthen the internal work process by offering a tool for triangulating ideas, supporting the design of conceptual limitations, and verifying memory-based definitions.

This exercise was categorized under CK because it focused on chemistry. However, because the concepts were prepared using software, and the overall aim was to learn how to visualize chemical concept structures, the exercise also has TCK emphasis (Koehler, Mishra, and Cain 2013).

Activity 3: Build a Chemistry Measurement Instrument (TK to TPACK)

In the third exercise, students designed and built a chemistry measurement instrument using a single-board computer platform. In addition, they created a project-based learning module that used the device. This exercise was very challenging for chemistry student teachers because it required programming skills and electronics construction experience in addition to chemistry and pedagogical knowledge. These skills are not included in their study program by default, but are developed through optional studies or hobbies (Ambrož et al. 2023).

The basis of this exercise is TK, due to the electronics and programming demands. However, this exercise also required CK for the chemistry context and PK for the pedagogical planning. Therefore, we argue that it can be used in developing the whole TPACK (Koehler and Mishra 2005). This exercise was the most challenging from the information-seeking perspective. Building a chemistry device with a pedagogical purpose requires remembering, creating, and acquiring knowledge through diverse resources. The task likely activated all information behavior components presented in Figure 3 (Järvelin and Ingwersen 2004; Ingwersen and Järvelin 2005).

Supporting Writing Assignments

As discussed in the introduction, several scholars have studied the possibilities and challenges of AI chatbots for writing. Some are concerned about how chatbots affect the development of writing skills; others focus on their benefits (Sullivan, Kelly, and McLaughlan 2023). Although students already use chatbots widely, their use is hard to detect, which is an internal weakness.

To adopt AI chatbots meaningfully in higher education, the University of Helsinki has taken a constructive approach and is not focused on controlling but rather on teaching how to use new technology. The Faculty of Science offers support to faculty personnel on how to guide students in using AI chatbots in courses (see appendix A). Some use cases may be allowed, others forbidden, but the key is to make course-dependent decisions based on the learning objectives. This is an external opportunity to teach students about the ethical considerations in academic writing (Crawford, Cowling, and Allen 2023). Faculty-level actions are essential in coordinated systematic innovation work. This kind of instructive communication towards faculty personnel especially supports the removal of second-order internal barriers, such as beliefs and attitudes (Ertmer et al. 2012).

Our design conjecture was that the use of AI chatbots offers many internal strengths, such as increasing productivity by expediting preparation of the summary (Sullivan, Kelly, and McLaughlan 2023; Strzelecki 2023; Brandtzaeg and Følstad 2017). From a course-planning perspective, this is an opportunity that allows allocating fewer hours to CK orientation and more time for laboratory work. From a writing perspective, AI chatbots change the focus of skills that will be developed. Previously, the emphasis was on writing text; with chatbots the emphasis is now on analyzing and evaluating text and editing it to ensure fluency. Therefore, chatbot-assisted writing fosters critical thinking and can be used in developing academic writing skills (P dos Santos 2023). However, one must understand that chatbots support different kinds of writing skills. Some might see this as an external threat for academic skills in general. However, we argue that this is either an internal strength or a weakness, depending on the perspective.

The translation abilities of AI chatbots are not limited to English (Adarkwah et al. 2023). This offers an external opportunity to expand the language pool of the selected course literature. For example, there is considerable chemistry education research literature published in German, French, and Spanish that is not commonly used in Finnish academia. In addition, language-processing capabilities enable foreign students with modest English skills to interact with the course literature more seamlessly than before. When chatbots develop further, they could even contribute to writing exercises in a multilingual group assignment. This offers an external opportunity to support the inclusion and equity of education (SDG4) (United Nations n.d.). However, the selected software should be open source and sustainable such that every learner has an equal opportunity to use it. For example, in our activity 1 we recommended pdf2gpt software, which has subsequently announced that the free version will have restrictions in the future and a pro version with a small fee will be published. This is an example of an external threat derived from the TK domain.

Triangulating Basic Level Conceptual Knowledge

The second activity introduced students to concept mapping. A concept map is a meta-level knowledge tool especially useful for making internal conceptual structures visible, which facilitates communication between stakeholders and enables refining information from data to expertise (Wilson 1999; Novak and Cañas 2006; Bawden and Robinson 2012). While designing the activity, we saw the possibilities that AI chatbots offer for learning discussions. Users can have meaningful chemistry-related conversations with chatbots, but as highlighted in the literature, good prompt-crafting skills are essential for successful workflows (P dos Santos 2023). In addition, we realized how important it is to critically analyze the answers that AI chatbots return. For example, some of the Finnish names for concepts and their definitions were nonsensical. This sets requirements for the user’s CK. The user must have at least a basic-level understanding of the topic to evaluate the quality of the output. This is an internal weakness derived from AI chatbot technology. On the other hand, this offers an opportunity to activate higher-order cognitive skills (HOCS), such as analyzing and evaluating (Krathwohl 2002).

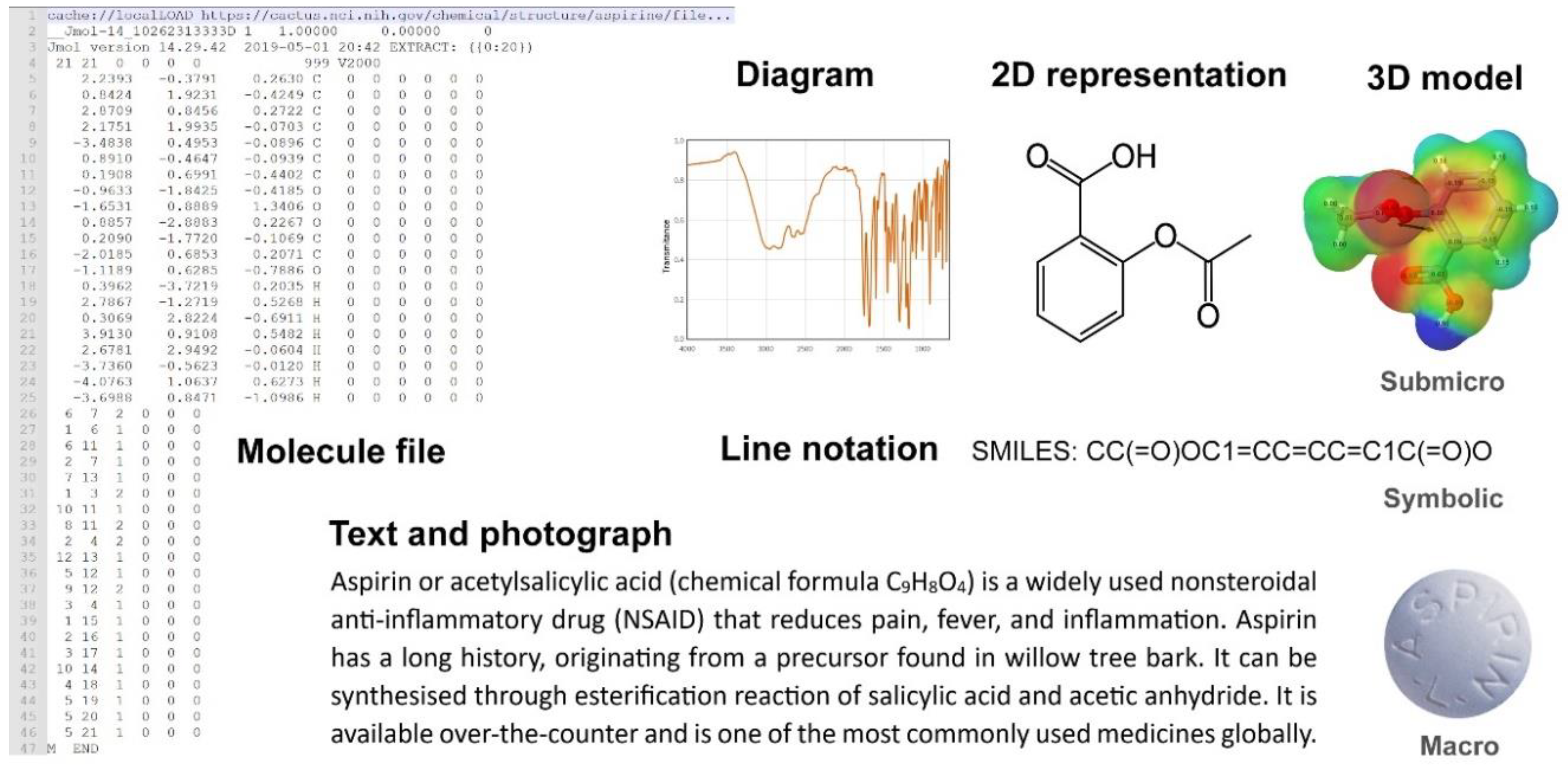

In this research, we mainly used the free version of ChatGPT based on GPT-3.5 in developing the exercises (OpenAI 2022). From a chemical information perspective, the free version of ChatGPT can help understanding at all three levels (Johnstone 1982; Gilbert and Treagust 2009), although outputs are delivered in text format. This is an internal weakness. Users can make a submicroscopic level prompt and the chatbot will describe the dynamic nature of chemistry through a textual description (see Figure 4). However, these outputs can be used in clarifying some specific illustrations and could decrease the potential cognitive overload (Gabel 1999; Reid 2019). In this sense, a triangulation of knowledge through a combination of traditional information resources (such as textbooks) and modern tools (such as chatbots) can be a good workflow before more integrated solutions are developed (Adarkwah et al. 2023).

Scaffolding Usage of Unfamiliar Technical Knowledge

During the development of activity 3, we observed that AI chatbots can produce functional, well-commented source code and circuit planning. This was the case at least with the educational Arduino platform. As our earlier research indicated, we have a major challenge in introducing coding and single-board computer (SBC)-based chemical instrument development to chemistry student teachers because the diverse knowledge requirements cannot be addressed in a single 5 ECTS university course (Ambrož et al. 2023). Through AI chatbots, we can significantly decrease the workload related to programming and electronic device planning. For the output analysis need, the software development platforms offer internal quality tools by default. Users can test and debug whether the written code works as intended. This is an external opportunity to teach software development and an internal strength arising from the ability of AI chatbots to generate working source code. However, prompt crafting is again the central skill that should be taught to students (Kojima et al. 2022).

From the information-seeking perspective, this is the most challenging activity. It includes many unfamiliar knowledge domains that require considerable independent problem solving. Of course, students can ask for help from teachers. Unfortunately, in reality, teachers do not have enough resources to assist every student with every single support request (Ambrož et al. 2023). To develop good problem-solving abilities and persistence, students must learn how to cope by themselves using their personal networks as support. This is also important from the work-life requirements perspective. As a solution, we see that AI chatbots offer an external opportunity for students to build a personal learning environment and expand their zone of proximal development (ZPD) (Fernández et al. 2001; Peña-López 2013). For example, if the learner does not understand some parts of the code, they could make clarifying prompts. However, to develop good information literacy skills, we claim that it is important to learn how to use versatile information resources, such as libraries, document retrieval, and contact with experts (Shultz and Li 2016). This would ensure the development of diverse information behavior (Ingwersen and Järvelin 2005; Dahlqvist 2021).

Summary and Conclusions

The conducted TPACK-guided SWOT analysis revealed several possibilities and challenges both from the internal and external perspectives (see Table 1). According to our evaluation, TPACK as a modeling framework worked as planned and offered more analysis accuracy for categorizing the insights inside the SWOT model (Koehler, Mishra, and Cain 2013). This enables focusing the innovation work on a specific knowledge domain.

First, we agree with Brandtzaeg and Følstad’s (2017) prediction that AI chatbots will create a new paradigm for how people interact with information. AI chatbots represent cutting-edge information technology that will soon be applied throughout society in all kinds of tasks to increase productivity (Bowman 2023; Strzelecki 2023). AI chatbots offer opportunities for information seeking by providing a conversational interface and access to a limitless information resource for all (Liao et al. 2020; Jauhiainen and Guerra 2023). Their translation capabilities and ability to have learning discussions enable building of high-quality personal learning environments and expanded ZPDs that will offer personalized learning experiences for everyone regardless of language or cultural background (Fernández et al. 2001; Peña-López 2013; P dos Santos 2023; Adarkwah et al. 2023). For maximizing ZPD, we predict that in the future there will be more integrated software solutions built to support collaborative information seeking (Avula et al. 2018). The possibilities to support sustainable education are endless. We claim that AI chatbots will be a major change agent towards inclusive and equitable quality lifelong learning for all (SDG4) (United Nations n.d.).

However, it is important to understand that AI chatbots are an invention and their educational adoption will take time (Denning 2012; Rogers 2003). This can be supported through innovation work aiming to remove first- and second-order barriers (Denning 2012; Ertmer et al. 2012). For example, HEIs must offer licenses, support, training, and easily adopted use cases and frameworks for teachers (see Appendix A). For removal of first-order barriers, to minimize the external threats related to sustainability of selected software, we recommend that HEIs favor LLMs and software based on open-source code. In addition, as Ertmer et al. (2012) emphasize, second-order barriers are the true challenge. We agree with this claim, and we highlight the role of teacher education as a solution. Schools may have recommendations for AI usage in teaching, but in many countries, teachers have great autonomy in making pedagogical decisions, such as whether they include AI chatbots in their teaching or not. During their higher education studies, chemistry student teachers build professional identity, including perceptions and beliefs towards the new technology. Negative attitudes can be persistent, and it is more difficult to change them later in working life. This research shows an example of how to support the removal of second-order barriers through educationally meaningful learning activities. The development process of such activities can be improved further by implementing a co-design approach, which enables inclusion of expertise from several different stakeholders in the process (Aksela 2019). This is crucial in a multidisciplinary innovation environment, such as the case of using AI chatbots in seeking chemical information.

Chemistry education and teacher education will benefit from AI chatbots similarly to any other domain. Learners can refine information into knowledge via learning discussions, check facts, and prompt definitions for concepts (Bawden and Robinson 2012; Hatakeyama-Sato et al. 2023). However, one must be aware of the limitations of LLMs and analyze or triangulate the generated information before using it. This is an important information literacy skill related to the usage of AI chatbots that should be included in chemistry education programs. In addition, from the chemical information perspective, AI chatbots are currently limited in processing multimodal representations at three different levels (Wegner et al. 2012; Reid 2019). In the future, AI tools will likely expand their ability to work in a multimodal information environment with visual inputs and outputs. In addition, they will likely be able to guide users to original information sources used in the training data. These kinds of features would help the information seeking of chemists (Gordon et al. 2018).

Based on our theoretical insights, we believe that AI chatbots will change the way people interact with information-processing tasks and what is considered expertise. In the future, everyone will have access to endless information through their high-quality personal learning environment with an embedded AI tutor. Teachers must be trained on new information literacy requirements. For future research directions, we suggest that it would be important to conceptualize what is considered knowledge and expertise in the modern information age. Educational practices and evaluation culture throughout the educational field should then be renewed to support development of a new understanding of learning.

Finally, to achieve a wider change, use of AI chatbots must be included in information literacy skills and integrated at every educational level, from primary to higher education (including lifelong learning). This integration must be done at the curriculum level, which will slowly resonate as a change in school practices. This is especially important for teacher education. Without modern information literacy skills, teachers will not be able to support sustainable education and lifelong learning. Therefore, we challenge teacher-education programs around the world to include AI-assisted chatbot information seeking in their curricula and to show leadership at the frontier of education by changing the future of education, one teacher at a time.

Author Contributions

JP: Conceptualization, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Visualization, Writing – original draft, Writing – review and editing; TI: Writing – review and editing; AT: Writing – review and editing; EM: Writing – review and editing; RP: Writing – review and editing; OH: Writing – review and editing.

Funding

This research received no external funding. Open access funding is provided by the University of Helsinki.

Acknowledgments

We would like to express our gratitude to the students who participated in the “ICT in Chemistry Education” course at the University of Helsinki during the fall of 2023. The authors and students had several insightful conversations on the potential and challenges of AI chatbots from the students’ perspective. In addition, we would like to thank Professor Maija Aksela for providing feedback on the article.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This is a preprint of a manuscript submitted to Frontiers in Education (Pernaa et al. In review).

Appendix A: Use of Large Language Models in this Course

Large language models (LLM) are recently developed, versatile tools. Although LLMs have useful use cases, they can also conflict with learning objectives. Permitted uses are always course-dependent. Permitted and prohibited uses are listed below. Some uses may not be listed because they are not relevant to this course. Common language models can produce false, misleading, or irrelevant information. Because of this, it is the student’s responsibility to ensure the correctness and relevance of the information. It is also worth remembering that specialized tools usually produce better results than language models. Presenting the generated text as your own can be interpreted as plagiarism. More information can be found at https://studies.helsinki.fi/instructions/article/what-cheating-and-plagiarism.

The course can specify that if a language model is used, its use must be reported. In such a case, more detailed instructions are presented in the listing below.

The use of language models in this course is:

(REMOVE UNNECESSARY SECTIONS FROM THE FOLLOWING)

- Fully allowed/forbidden.

- Allowed/forbidden to generate text for e.g., report, thesis, or certificates.

- Allowed/forbidden to finish or rewrite the text.

- Allowed/forbidden to check grammar mistakes.

- Allowed/forbidden as a typesetting aid (e.g. generating LaTeX code when making tables or graphs).

- Allowed/forbidden in searching for information or explaining or summarizing topics.

- Allowed/forbidden in code generation.

This instruction was written by Kjell Lemström, Senior University Lecturer, Director of the bachelor's program in computer science, Department of Computer Science, Faculty of Science, University of Helsinki, Finland.

Notes

1. An in-depth overview of the TPACK framework can be found in section 3.2.

References

- Adarkwah, Michael Agyemang, Chen Ying, Muhammad Yasir Mustafa, and Ronghuai Huang. 2023. “Prediction of Learner Information-Seeking Behavior and Classroom Engagement in the Advent of ChatGPT.” In Smart Learning for A Sustainable Society, edited by Chutiporn Anutariya, Dejian Liu, Kinshuk, Ahmed Tlili, Junfeng Yang, and Maiga Chang, 117–26. Lecture Notes in Educational Technology. Singapore: Springer Nature. [CrossRef]

- Aksela, M. 2019. “Towards Student-Centred Solutions and Pedagogical Innovations in Science Education through Co-Design Approach within Design-Based Research.” LUMAT: International Journal on Math, Science and Technology Education 7 (3). [CrossRef]

- Aksela, Maija Katariina. 2011. “Engaging Students for Meaningful Chemistry Learning through Microcomputer-Based Laboratory (MBL) Inquiry.” Educació Química : EduQ, no. 9: 30–37. [CrossRef]

- Ambrož, Miha, Johannes Pernaa, Outi Haatainen, and Maija Aksela. 2023. “Promoting STEM Education of Future Chemistry Teachers with an Engineering Approach Involving Single-Board Computers.” Applied Sciences 13 (5): 3278. [CrossRef]

- Andreas, Jacob. 2022. “Language Models as Agent Models.” In Findings of the Association for Computational Linguistics: EMNLP 2022, 5769–79. Abu Dhabi, United Arab Emirates: Association for Computational Linguistics. [CrossRef]

- Androutsopoulou, Aggeliki, Nikos Karacapilidis, Euripidis Loukis, and Yannis Charalabidis. 2019. “Transforming the Communication between Citizens and Government through AI-Guided Chatbots.” Government Information Quarterly 36 (2): 358–67. [CrossRef]

- Angeli, Charoula, and Nicos Valanides. 2009. “Epistemological and Methodological Issues for the Conceptualization, Development, and Assessment of ICT-TPCK: Advances in Technological Pedagogical Content Knowledge (TPCK).” Computers & Education 52 (1): 154–68. [CrossRef]

- Avula, Sandeep, Gordon Chadwick, Jaime Arguello, and Robert Capra. 2018. “SearchBots: User Engagement with ChatBots during Collaborative Search.” In Proceedings of the 2018 Conference on Human Information Interaction & Retrieval, 52–61. CHIIR ’18. New York, NY, USA: Association for Computing Machinery. [CrossRef]

- Bawden, David. 2001. “Information and Digital Literacies: A Review of Concepts.” Journal of Documentation 57 (2): 218–59. [CrossRef]

- Bawden, David, and Lyn Robinson. 2012. An Introduction to Information Science. Chicago: Neal-Schuman.

- Benzaghta, Mostafa, Abdulaziz Elwalda, Mousa Mousa, Ismail Erkan, and Mushfiqur Rahman. 2021. “SWOT Analysis Applications: An Integrative Literature Review.” Journal of Global Business Insights 6 (1): 55–73. [CrossRef]

- Bowman, Samuel R. 2023. “Eight Things to Know about Large Language Models.” arXiv. http://arxiv.org/abs/2304.00612.

- Brandtzaeg, Petter Bae, and Asbjørn Følstad. 2017. “Why People Use Chatbots.” In Internet Science, edited by Ioannis Kompatsiaris, Jonathan Cave, Anna Satsiou, Georg Carle, Antonella Passani, Efstratios Kontopoulos, Sotiris Diplaris, and Donald McMillan, 377–92. Lecture Notes in Computer Science. Cham: Springer International Publishing. [CrossRef]

- Bubeck, Sébastien, Varun Chandrasekaran, Ronen Eldan, Johannes Gehrke, Eric Horvitz, Ece Kamar, Peter Lee, et al. 2023. “Sparks of Artificial General Intelligence: Early Experiments with GPT-4.” arXiv. [CrossRef]

- Cooper, Grant. 2023. “Examining Science Education in ChatGPT: An Exploratory Study of Generative Artificial Intelligence.” Journal of Science Education and Technology 32 (3): 444–52. [CrossRef]

- Cox, Suzy. 2008. “A Conceptual Analysis of Technological Pedagogical Content Knowledge.” Doctoral dissertation, Provo, UT: Brigham Young University. http://hdl.lib.byu.edu/1877/etd2552.

- Crawford, Joseph, Michael Cowling, and Kelly-Ann Allen. 2023. “Leadership Is Needed for Ethical ChatGPT: Character, Assessment, and Learning Using Artificial Intelligence (AI).” Journal of University Teaching & Learning Practice 20 (3). [CrossRef]

- Dahlqvist, Claes. 2021. “Information-Seeking Behaviours of Teacher Students: A Systematic Review of Quantitative Methods Literature.” Education for Information 37 (3): 259–85. [CrossRef]

- Dempere, Juan, Kennedy Modugu, Allam Hesham, and Lakshmana Kumar Ramasamy. 2023. “The Impact of ChatGPT on Higher Education.” Frontiers in Education 8. https://www.frontiersin.org/articles/10.3389/feduc.2023.1206936. [CrossRef]

- Denning, Peter. 2012. “Innovating the Future: From Ideas to Adoption.” The Futurist, no. January-February: 40–46.

- Ertmer, Peggy A., and Carole Hruskocy. 1999. “Impacts of a University-Elementary School Partnership Designed to Support Technology Integration.” Educational Technology Research and Development 47 (1): 81–96. [CrossRef]

- Ertmer, Peggy A., Anne T. Ottenbreit-Leftwich, Olgun Sadik, Emine Sendurur, and Polat Sendurur. 2012. “Teacher Beliefs and Technology Integration Practices: A Critical Relationship.” Computers & Education 59 (2): 423–35. [CrossRef]

- Ferk Savec, Vesna. 2017. “The Opportunities and Challenges for ICT in Science Education.” LUMAT: International Journal on Math, Science and Technology Education 5 (1): 12–22. [CrossRef]

- Fernández, Manuel, Rupert Wegerif, Neil Mercer, and Sylvia Rojas-Drummond. 2001. “Re-Conceptualizing ‘Scaffolding’ and the Zone of Proximal Development in the Context of Symmetrical Collaborative Learning.” The Journal of Classroom Interaction 36/37 (2/1): 40–54.

- Flaxbart, David. 2001. “Conversations with Chemists: Information-Seeking Behavior of Chemistry Faculty in the Electronic Age.” Science & Technology Libraries 21 (3–4): 5–26. [CrossRef]

- Gabel, Dorothy. 1999. “Improving Teaching and Learning through Chemistry Education Research: A Look to the Future.” Journal of Chemical Education 76 (4): 548–554. [CrossRef]

- Gilbert, John K., and David F. Treagust. 2009. “Introduction: Macro, Submicro and Symbolic Representations and the Relationship Between Them: Key Models in Chemical Education.” In Multiple Representations in Chemical Education, edited by John K. Gilbert and David Treagust, 1–8. Models and Modeling in Science Education. Dordrecht: Springer Netherlands. [CrossRef]

- Gordon, Ian D., Patricia Meindl, Michael White, and Kathy Szigeti. 2018. “Information Seeking Behaviors, Attitudes, and Choices of Academic Chemists.” Science & Technology Libraries 37 (2): 130–51. [CrossRef]

- Graham, Charles R. 2011. “Theoretical Considerations for Understanding Technological Pedagogical Content Knowledge (TPACK).” Computers & Education 57 (3): 1953–60. [CrossRef]

- Green, Bart N., Claire D. Johnson, and Alan Adams. 2006. “Writing Narrative Literature Reviews for Peer-Reviewed Journals: Secrets of the Trade.” Journal of Chiropractic Medicine 5 (3): 101–17. [CrossRef]

- Guo, Taicheng, Kehan Guo, Bozhao Nan, Zhenwen Liang, Zhichun Guo, Nitesh V. Chawla, Olaf Wiest, and Xiangliang Zhang. 2023. “What Can Large Language Models Do in Chemistry? A Comprehensive Benchmark on Eight Tasks.” arXiv. [CrossRef]

- Gürel, S., and M. Tat. 2017. “SWOT Analysis: A Theoretical Review.” The Journal of International Social Research 10 (51): 994–1006. [CrossRef]

- Hatakeyama-Sato, Kan, Naoki Yamane, Yasuhiko Igarashi, Yuta Nabae, and Teruaki Hayakawa. 2023. “Prompt Engineering of GPT-4 for Chemical Research: What Can/Cannot Be Done?” ChemRxiv. [CrossRef]

- He, Pengcheng, Xiaodong Liu, Jianfeng Gao, and Weizhu Chen. 2021. “DeBERTa: Decoding-Enhanced BERT with Disentangled Attention.” In International Conference on Learning Representations. Vienna, Austria: ICLR. https://openreview.net/forum?id=XPZIaotutsD.

- Ingwersen, Peter, and Kalervo Järvelin, eds. 2005. “Implications of the Cognitive Framework for IS&R.” In The Turn: Integration of Information Seeking and Retrieval in Context, 313–57. The Information Retrieval Series. Dordrecht: Springer Netherlands. [CrossRef]

- Järvelin, Kalervo, and Peter Ingwersen. 2004. “Information Seeking Research Needs Extension towards Tasks and Technology.” Information Research 10 (1): paper 212.

- Jauhiainen, Jussi S., and Agustín Garagorry Guerra. 2023. “Generative AI and ChatGPT in School Children’s Education: Evidence from a School Lesson.” Sustainability 15 (18): 14025. [CrossRef]

- Johnstone, A. H. 1982. “Macro- and Microchemistry.” School Science Review 64: 377–79.

- Johnstone, A. H. 1991. “Why Is Science Difficult to Learn? Things Are Seldom What They Seem.” Journal of Computer Assisted Learning 7 (2): 75–83. [CrossRef]

- Kittel, Aden, Paul Larkin, Ian Cunningham, and Michael Spittle. 2020. “360° Virtual Reality: A SWOT Analysis in Comparison to Virtual Reality.” Frontiers in Psychology 11. https://www.frontiersin.org/articles/10.3389/fpsyg.2020.563474. [CrossRef]

- Koehler, Matthew, and Punya Mishra. 2005. “What Happens When Teachers Design Educational Technology? The Development of Technological Pedagogical Content Knowledge.” Journal of Educational Computing Research 32 (2): 131–52. [CrossRef]

- Koehler, Matthew, Punya Mishra, and William Cain. 2013. “What Is Technological Pedagogical Content Knowledge (TPACK)?” Journal of Education 193 (3): 13–19. [CrossRef]

- Kojima, Takeshi, Shixiang Shane Gu, Machel Reid, Yutaka Matsuo, and Yusuke Iwasawa. 2022. “Large Language Models Are Zero-Shot Reasoners.” https://openreview.net/forum?id=6p3AuaHAFiN.

- Krathwohl, David R. 2002. “A Revision of Bloom’s Taxonomy: An Overview.” Theory Into Practice 41 (4): 212–18. [CrossRef]

- Liao, Q. Vera, Werner Geyer, Michael Muller, and Yasaman Khazaeni. 2020. “Conversational Interfaces for Information Search.” In Understanding and Improving Information Search: A Cognitive Approach, edited by Wai Tat Fu and Herre van Oostendorp, 267–87. Human–Computer Interaction Series. Cham: Springer International Publishing. [CrossRef]

- Lim, Weng Marc, Asanka Gunasekara, Jessica Leigh Pallant, Jason Ian Pallant, and Ekaterina Pechenkina. 2023. “Generative AI and the Future of Education: Ragnarök or Reformation? A Paradoxical Perspective from Management Educators.” The International Journal of Management Education 21 (2): 100790. [CrossRef]

- Lo, Chung Kwan, and Khe Foon Hew. 2023. “A Review of Integrating AI-Based Chatbots into Flipped Learning: New Possibilities and Challenges.” Frontiers in Education 8. https://www.frontiersin.org/articles/10.3389/feduc.2023.1175715. [CrossRef]

- Lommatzsch, A., and Jonas Katins. 2019. “An Information Retrieval-Based Approach for Building Intuitive Chatbots for Large Knowledge Bases.” In Proceedings of the Conference on “Lernen, Wissen, Daten, Analysen,” edited by Robert Jäschke and Matthias Weidlich, 343–52. Berlin: CEUR. https://ceur-ws.org/Vol-2454/paper_60.pdf.

- Mishra, Punya, and Matthew J. Koehler. 2006. “Technological Pedagogical Content Knowledge: A Framework for Teacher Knowledge.” Teachers College Record 108 (6): 1017–54. [CrossRef]

- Mott, Jon, and David Wiley. 2009. “Open for Learning: The CMS and the Open Learning Network.” In Education 15 (2). [CrossRef]

- Novak, Joseph, and Alberto Cañas. 2006. “The Theory Underlying Concept Maps and How to Construct Them.” Technical Report. Institute for Human and Machine Cognition. 2006. http://cmap.ihmc.us/Publications/researchpapers/theorycmaps/TheoryUnderlyingConceptMaps.bck-11-01-06.htm.

- Okonkwo, Chinedu Wilfred, and Abejide Ade-Ibijola. 2021. “Chatbots Applications in Education: A Systematic Review.” Computers and Education: Artificial Intelligence 2 (January): 100033. [CrossRef]

- OpenAI. 2022. “Introducing ChatGPT.” November 30, 2022. https://openai.com/blog/chatgpt.

- P dos Santos, Renato. 2023. “Enhancing Chemistry Learning with ChatGPT and Bing Chat as Agents to Think With: A Comparative Case Study.” SSRN Scholarly Paper. Rochester, NY. [CrossRef]

- Panda, Subhajit, and Rupak Chakravarty. 2022. “Adapting Intelligent Information Services in Libraries: A Case of Smart AI Chatbots.” Library Hi Tech News 39 (1): 12–15. [CrossRef]

- Parissi, Marioleni, Vassilis Komis, Gabriel Dumouchel, Konstantinos Lavidas, and Stamatios Papadakis. 2023. “How Does Students’ Knowledge about Information-Seeking Improve Their Behavior in Solving Information Problems?” Educational Process: International Journal 12 (1): 113–37.

- Peña-López, Ismael. 2013. “Heavy Switchers in Translearning: From Formal Teaching to Ubiquitous Learning.” Edited by John Moravec. On the Horizon 21 (2): 127–37. [CrossRef]

- Pernaa, Johannes. 2022. “Possibilities and Challenges of Using Educational Cheminformatics for STEM Education: A SWOT Analysis of a Molecular Visualization Engineering Project.” Journal of Chemical Education 99 (3): 1190–1200. [CrossRef]

- Pernaa, Johannes, Topias Ikävalko, Aleksi Takala, Emmi Vuorio, Reija Pesonen, and Outi Haatainen. In review. “Artificial Intelligence Chatbots in Chemical Information Seeking: Educational Insights through a SWOT Analysis.” Frontiers in Education.

- Pernaa, Johannes, Aleksi Takala, Veysel Ciftci, José Hernández-Ramos, Lizethly Cáceres-Jensen, and Jorge Rodríguez-Becerra. 2023. “Open-Source Software Development in Cheminformatics: A Qualitative Analysis of Rationales.” Applied Sciences 13 (17): 9516. [CrossRef]

- Puyt, Richard W., Finn Birger Lie, and Celeste P. M. Wilderom. 2023. “The Origins of SWOT Analysis.” Long Range Planning 56 (3): 102304. [CrossRef]

- Radford, Alec, Karthik Narasimhan, Tim Salimans, and Ilya Sutskever. 2018. “Improving Language Understanding by Generative Pre-Training.” OpenAI. https://openai.com/research/language-unsupervised.

- Reid, Norman. 2019. “A Tribute to Professor Alex H Johnstone (1930–2017): His Unique Contribution to Chemistry Education Research.” Chemistry Teacher International 1 (1): 20180016. [CrossRef]

- Roche, Lionel, Aden Kittel, Ian Cunningham, and Cathy Rolland. 2021. “360° Video Integration in Teacher Education: A SWOT Analysis.” Frontiers in Education 6. https://www.frontiersin.org/articles/10.3389/feduc.2021.761176.

- Rogers, Everett M. 2003. Diffusion of Innovations. 5th edition. New York: Free Press.

- Shultz, Ginger V., and Ye Li. 2016. “Student Development of Information Literacy Skills during Problem-Based Organic Chemistry Laboratory Experiments.” Journal of Chemical Education 93 (3): 413–22. [CrossRef]

- Shumanov, Michael, and Lester Johnson. 2021. “Making Conversations with Chatbots More Personalized.” Computers in Human Behavior 117 (April): 106627. [CrossRef]

- Strzelecki, Artur. 2023. “To Use or Not to Use ChatGPT in Higher Education? A Study of Students’ Acceptance and Use of Technology.” Interactive Learning Environments 0 (0): 1–14. [CrossRef]

- Sullivan, Miriam, Andrew Kelly, and Paul McLaughlan. 2023. “ChatGPT in Higher Education: Considerations for Academic Integrity and Student Learning.” Journal of Applied Learning and Teaching 6 (1): 31–40. [CrossRef]

- United Nations. n.d. “Goal 4: Ensure Inclusive and Equitable Quality Education and Promote Lifelong Learning Opportunities for All.” Sustainable Development. Accessed October 25, 2023. https://sdgs.un.org/goals/goal4.

- Verdel, Nina, Klas Hjort, Billy Sperlich, Hans-Christer Holmberg, and Matej Supej. 2023. “Use of Smart Patches by Athletes: A Concise SWOT Analysis.” Frontiers in Physiology 14. https://www.frontiersin.org/articles/10.3389/fphys.2023.1055173. [CrossRef]

- Voogt, J., P. Fisser, N. Pareja Roblin, J. Tondeur, and J. van Braak. 2013. “Technological Pedagogical Content Knowledge – a Review of the Literature.” Journal of Computer Assisted Learning 29 (2): 109–21. [CrossRef]

- Vygotski, Lev Semjonovitš. 1978. Mind in Society. The Development of Higher Psychological Processes. Cambridge, MA: Harvard University Press.

- Wegner, Joerg Kurt, Aaron Sterling, Rajarshi Guha, Andreas Bender, Jean-Loup Faulon, Janna Hastings, Noel O’Boyle, John Overington, Herman Van Vlijmen, and Egon Willighagen. 2012. “Cheminformatics.” Communications of the ACM 55 (11): 65–75. [CrossRef]

- Willermark, Sara. 2018. “Technological Pedagogical and Content Knowledge: A Review of Empirical Studies Published from 2011 to 2016.” Journal of Educational Computing Research 56 (3): 315–43. [CrossRef]

- Wilson, T. D. 2000. “Human Information Behavior.” Informing Science: The International Journal of an Emerging Transdiscipline 3: 049–056. [CrossRef]

- Wilson, T.D. 1999. “Models in Information Behaviour Research.” Journal of Documentation 55 (3): 249–70. [CrossRef]

- Zamani, Hamed, Johanne R. Trippas, Jeff Dalton, and Filip Radlinski. 2023. “Conversational Information Seeking.” Foundations and Trends® in Information Retrieval 17 (3–4): 244–456. [CrossRef]


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).