Submitted: 24 November 2023

Posted: 14 December 2023

Abstract

Keywords:

1. Introduction

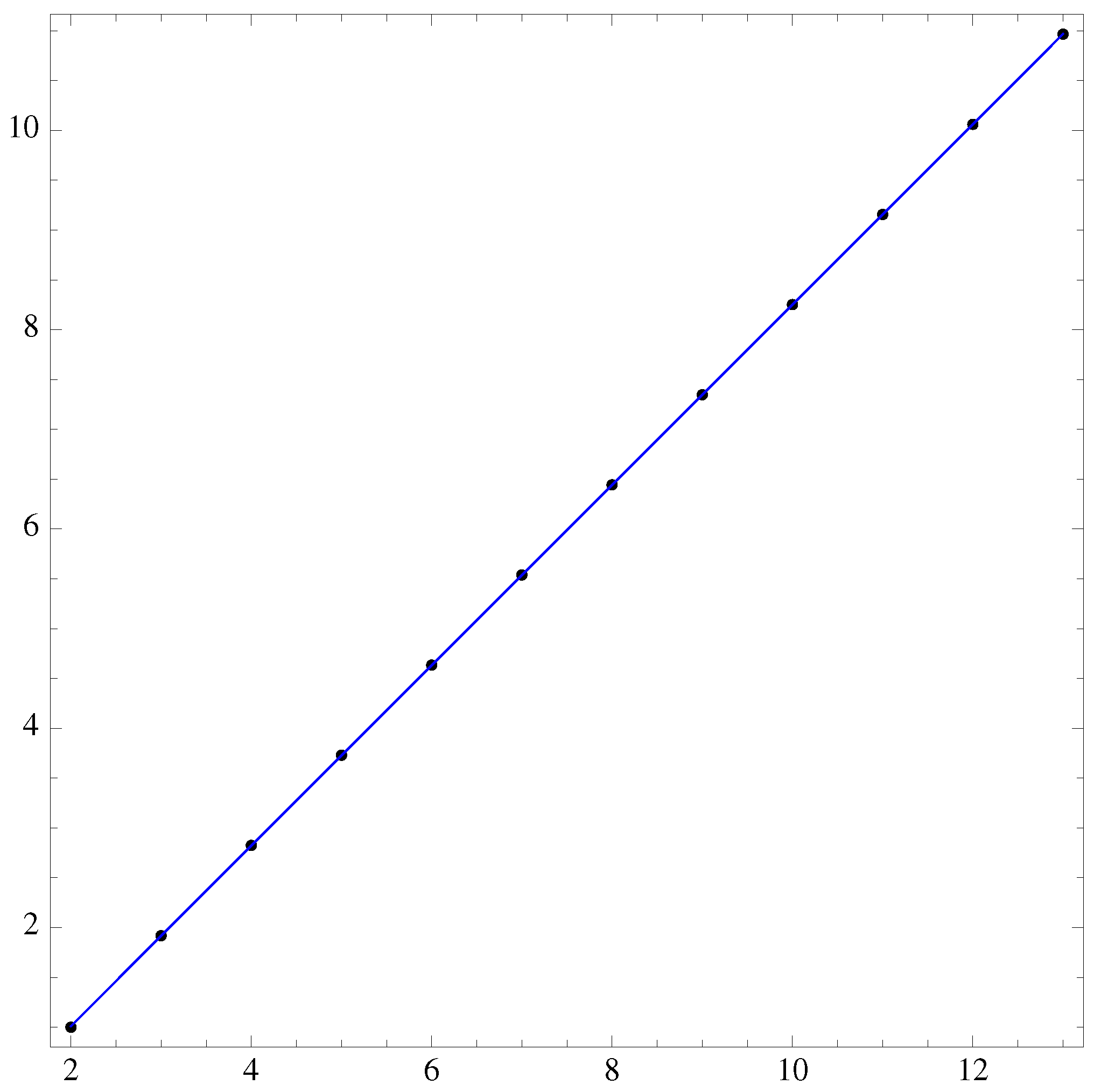

2. Entropy of difference-method

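| | | | | | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | | | | | | | | | | | | | | | |
| 0.982 | 2.01 | 2.01 | 0.991 | | | | | | | | | | | | |
| 0.924 | 3.00 | 5.05 | 3.00 | 3.00 | 5.05 | 3.00 | 0.960 | | | | | | | | |
| 0.756 | 3.86 | 9.10 | 5.92 | 9.23 | 16.0 | 11.0 | 4.03 | 3.86 | 11.1 | 16.2 | 9.10 | 5.78 | 9.22 | 4.03 | 0.768 |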

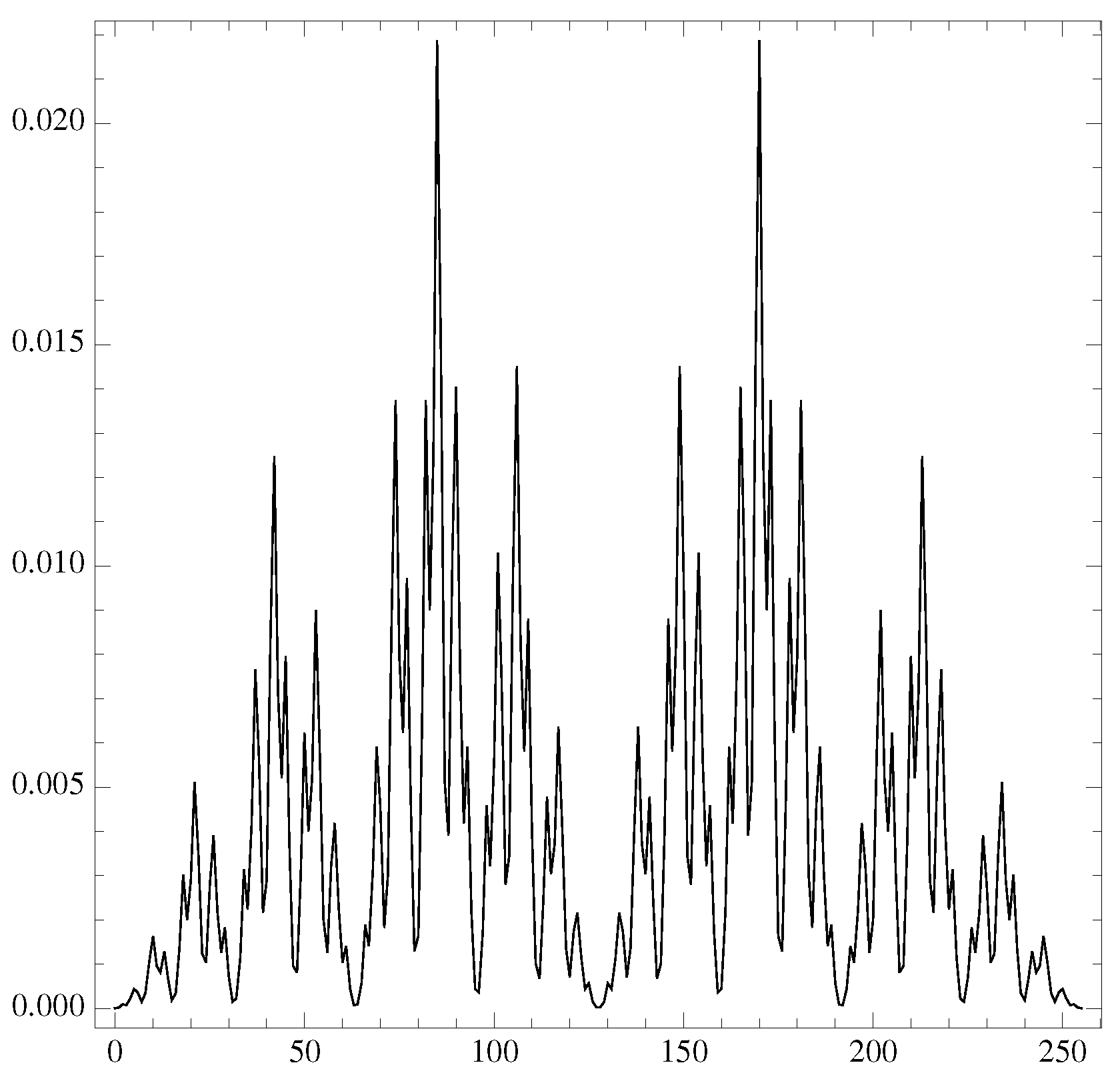

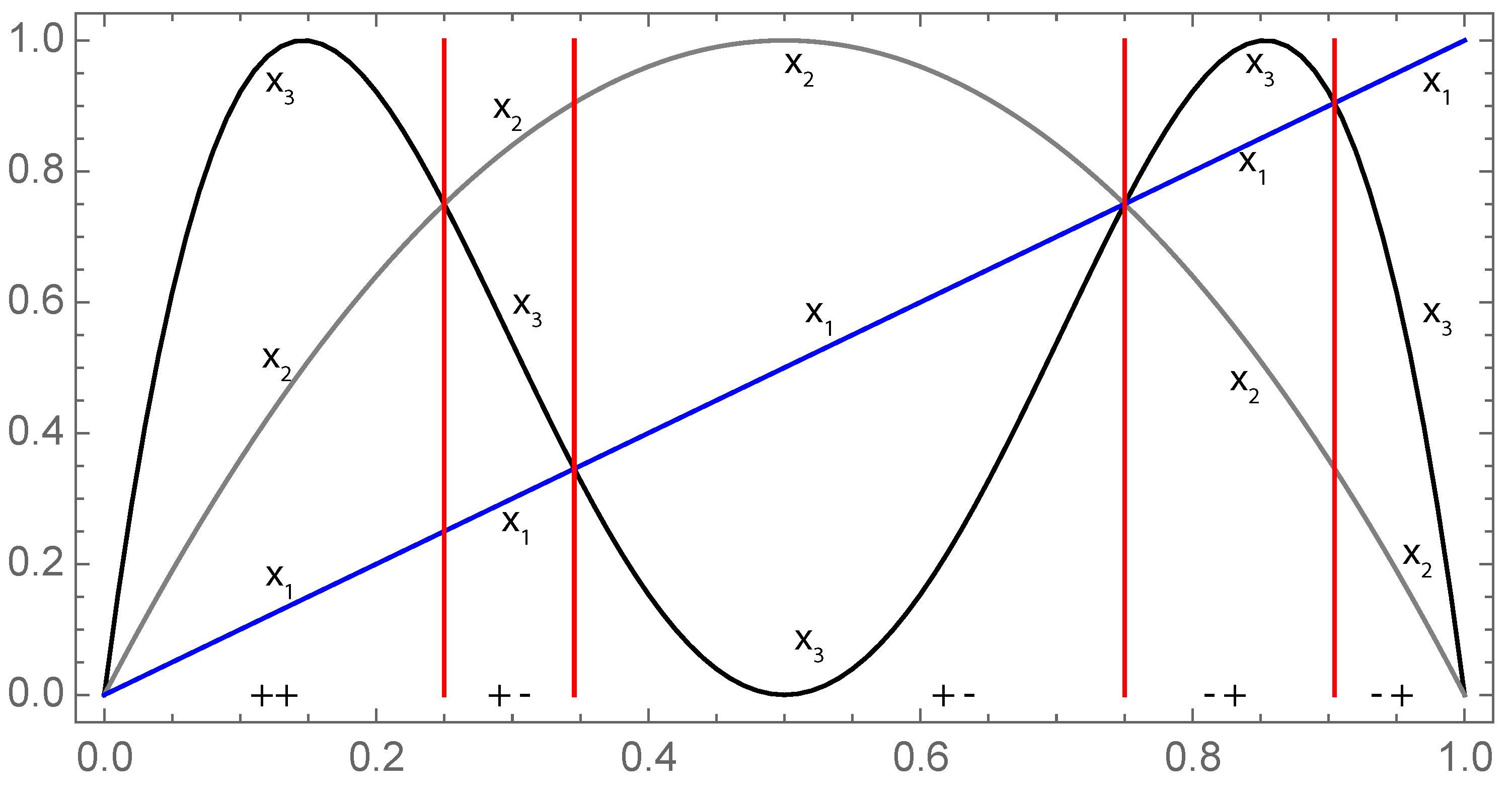

3. Periodic signal

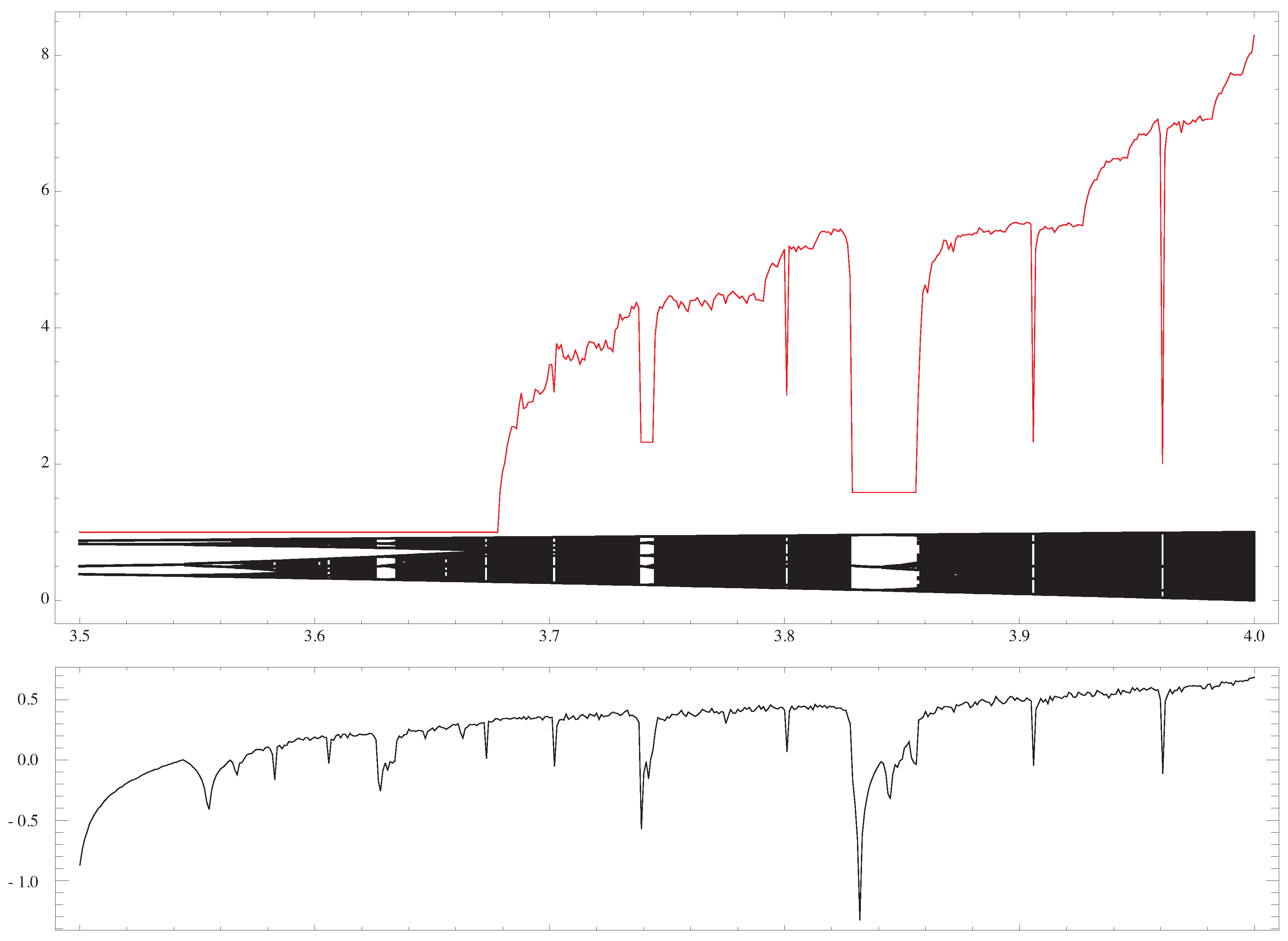

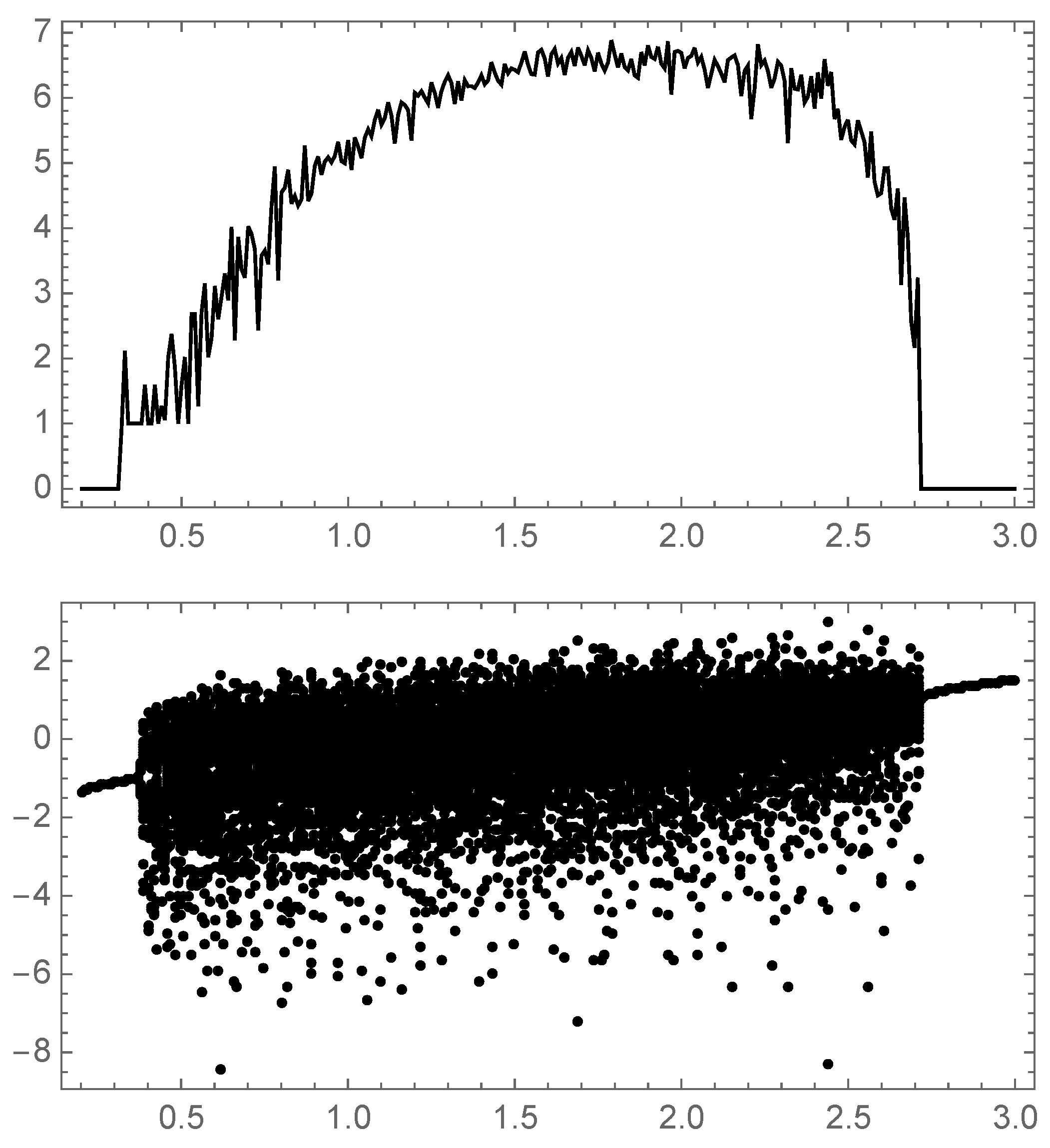

4. Chaotic logistic map example

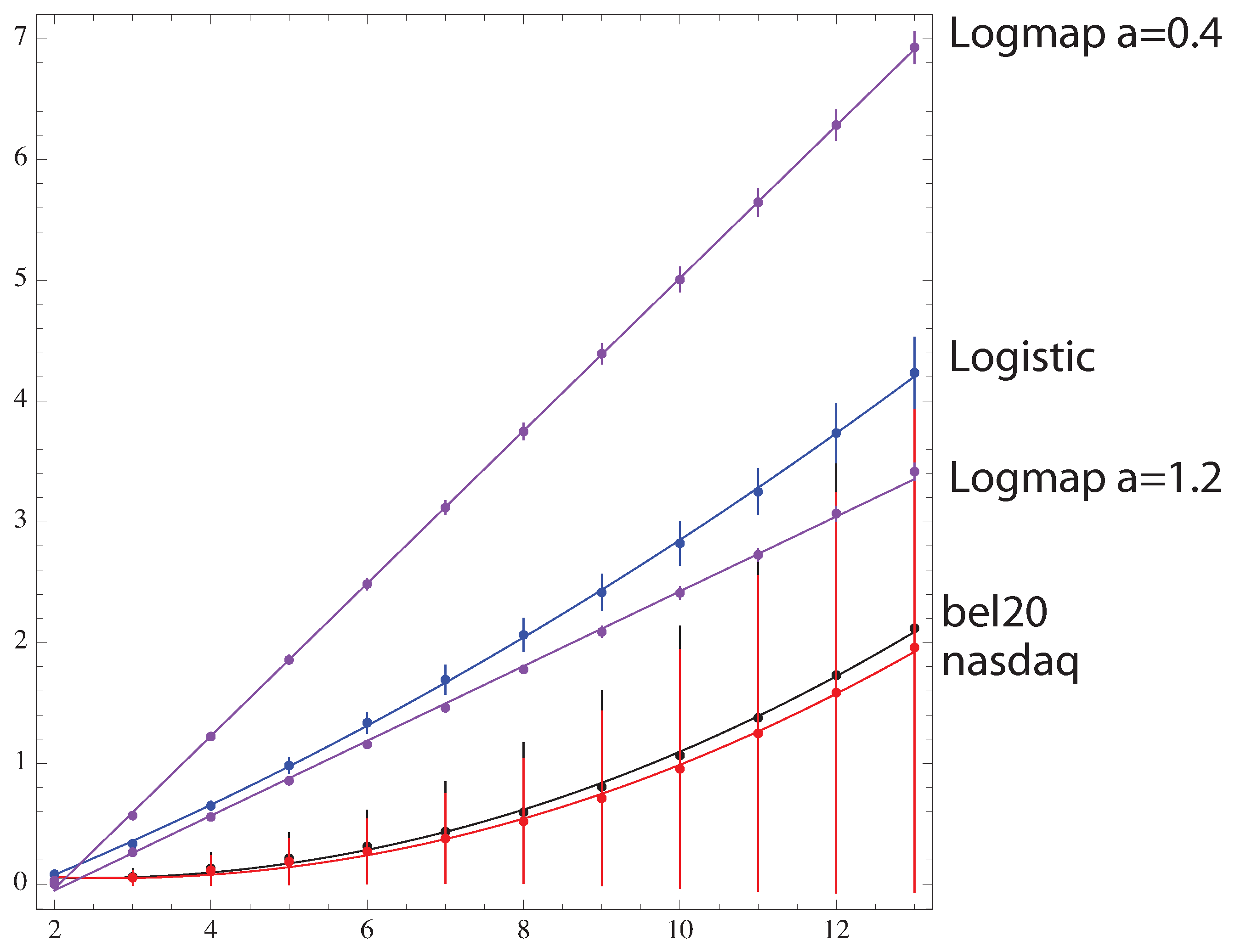

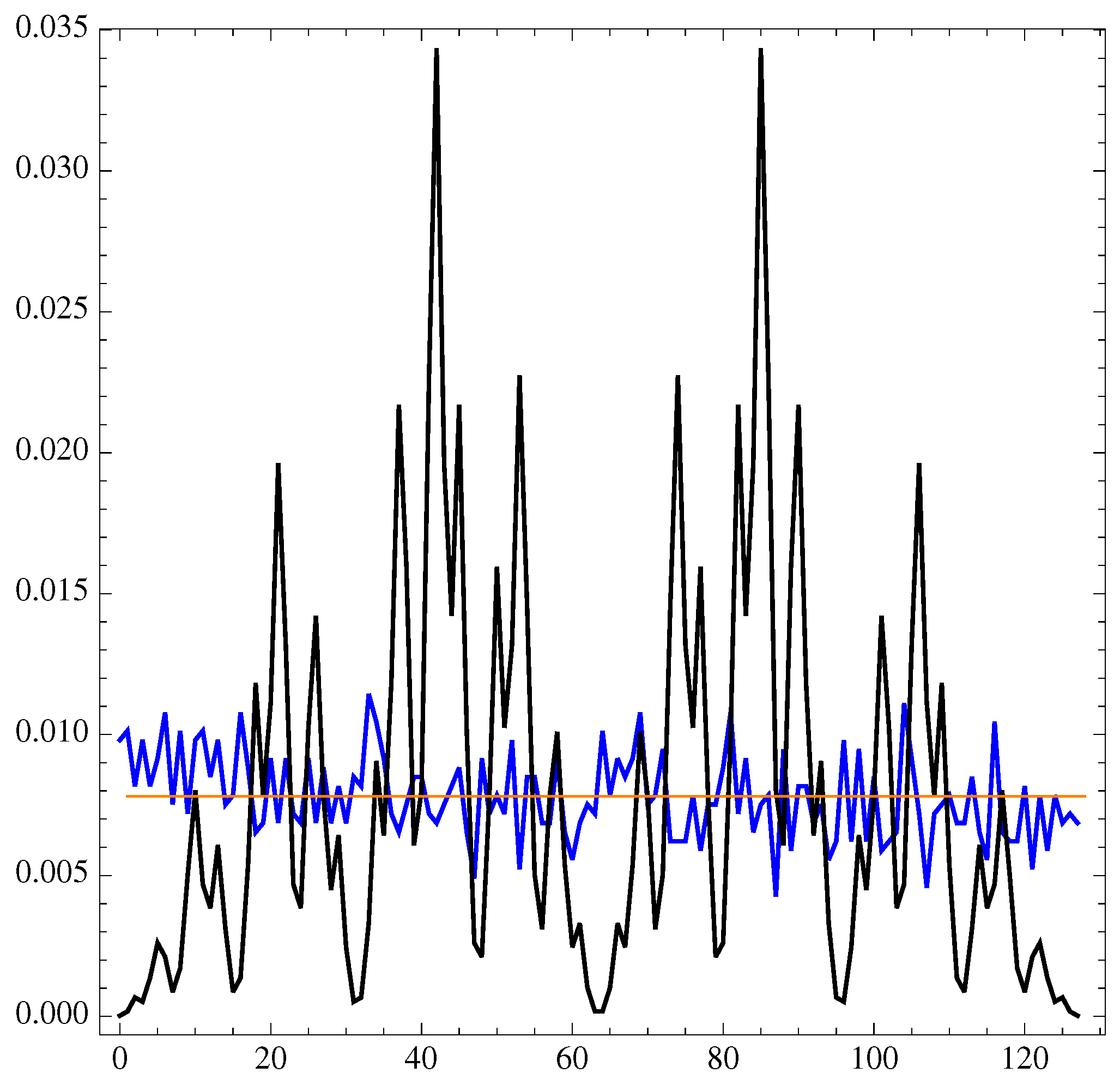

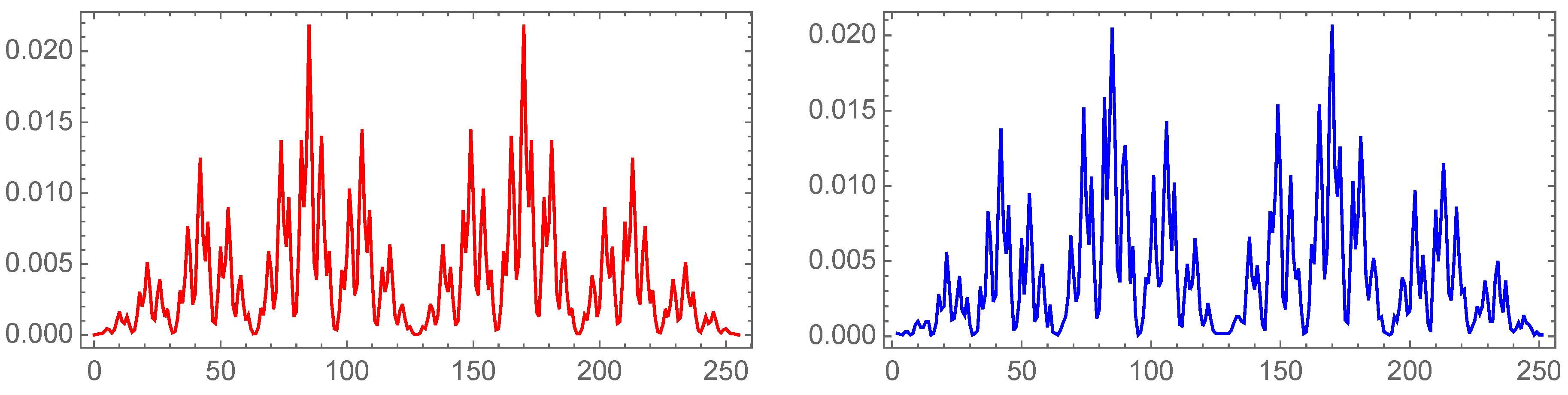

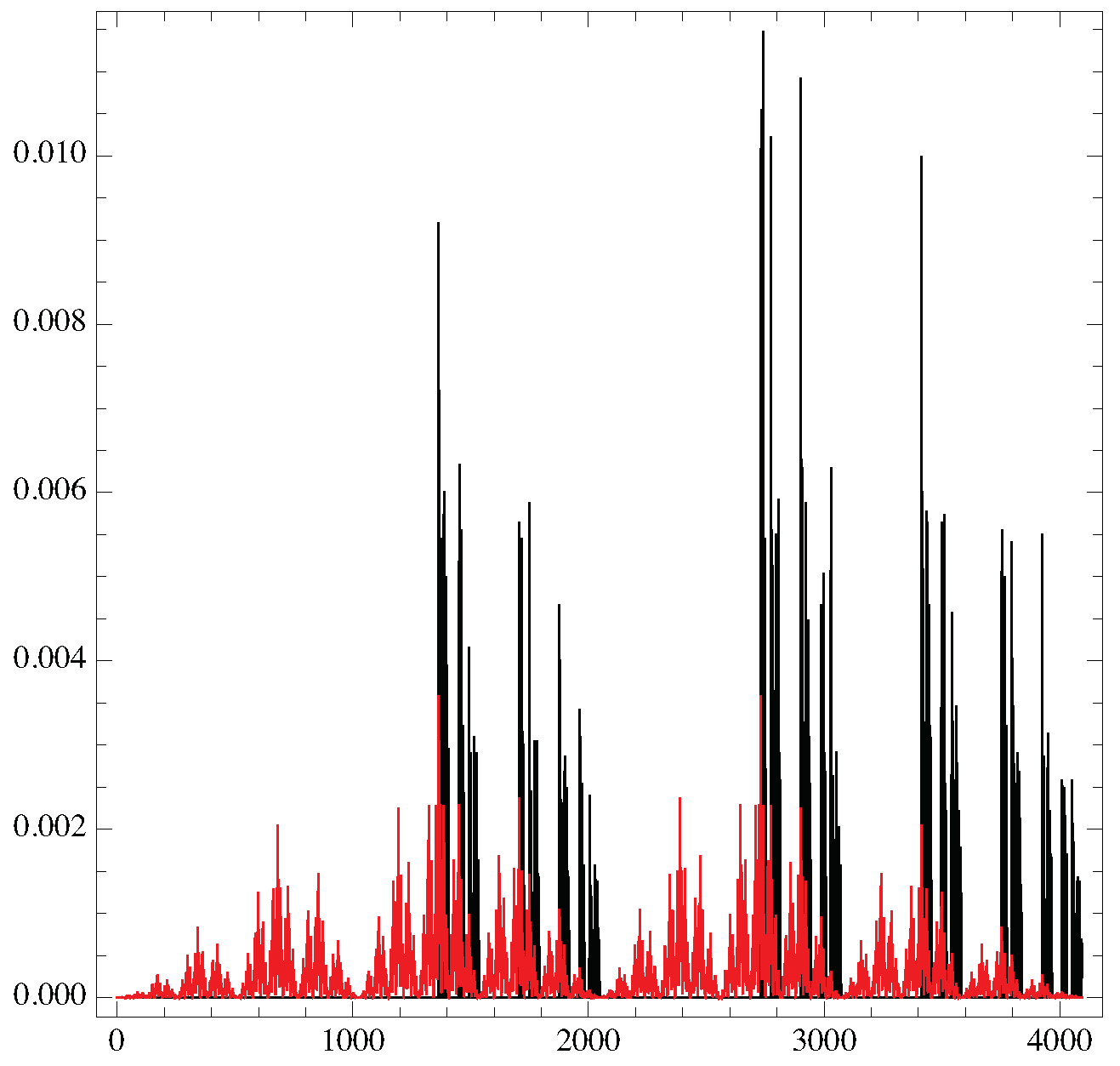

5. Divergences versus m on real data and on maps

6. Conclusions

Conflicts of Interest

Appendix A

References

- C. Bandt and B. Pompe, Phys. Rev. Lett. 88, 174102 (2002).

- C. Bian, C. Qin, Q. D. Y. Ma, and Q. Shen, Phys. Rev. E 85, 021906 (2012).

- L. Zunino, D. G. Pérez, M. T. Martín, M. Garavaglia, A. Plastino, and O. A. Rosso, Phys. Lett. A 372, 4768 (2008).

- X. Li, G. Ouyang, and D. A. Richards, Epilepsy Res. 77, 70 (2007).

- X. Li, S. Cui, and L. J. Voss, Anesthesiology 109, 448 (2008).

- B. Frank, B. Pompe, U. Schneider, and D. Hoyer, Med. Biol. Eng. Comput. 44, 179 (2006).

- E. Olofsen, J. W. Sleigh, and A. Dahan, Br. J. Anaesth. 101, 810 (2008).

- O. A. Rosso, L. Zunino, D. G. Pérez, A. Figliola, H. A. Larrondo, M. Garavaglia, M. T. Martín, and A. Plastino, Phys. Rev. E 76, 061114 (2007).

- S. Kullback and R. A. Leibler, Ann. Math. Statist. 22, 79 (1951).

- É. Roldán and J. M. R. Parrondo, Phys. Rev. E 85, 031129 (2012).

- F. Ginelli, P. Poggi, A. Turchi, H. Chaté, R. Livi, and A. Politi, Phys. Rev. Lett. 99, 130601 (2007).

- J. Theiler and P. E. Rapp, Electroencephalography and Clinical Neurophysiology 98, 213 (1996).

- R. M. May, Nature 261, 459 (1976).

- M. Jakobson, Communications in Mathematical Physics 81, 39 (1981).

- Data are provided by http://www.wessa.net/.

Footnote 1: See Appendix A.

| m | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|
| m! | 6 | 24 | 120 | 720 | 5040 |
| | 13 | 73 | 501 | 4051 | 37633 |
| 2^(m-1) | 4 | 8 | 16 | 32 | 64 |

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 2 | 1 | | | | | | | | | | | | |
| 1 | 3 | 5 | 3 | 3 | 5 | 3 | 1 | | | | | | | | |
| 1 | 4 | 9 | 6 | 9 | 16 | 11 | 4 | 4 | 11 | 16 | 9 | 6 | 9 | 4 | 1 |
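The integer rows of the table above can be reproduced by a short enumeration, under an assumption that the surviving text does not state explicitly: that the three rows correspond to m = 3, 4 and 5, and that each column index is the binary code of an up/down pattern of the m-1 successive differences. The sketch below is only an illustration of that reading. The helper names `pattern_index` and `pattern_counts` are hypothetical, and the bit convention (increase = 1, first difference as most significant bit) is a choice made here. For these three values of m the counts come out the same under the reversed or complemented convention, so the check does not depend on that choice.

```python
from itertools import permutations
from math import factorial


def pattern_index(values):
    """Encode the signs of successive differences as a binary number.

    Assumed convention (not taken from the text): an increase is bit 1,
    a decrease is bit 0, and the first difference is the most significant bit.
    """
    index = 0
    for left, right in zip(values, values[1:]):
        index = (index << 1) | (1 if right > left else 0)
    return index


def pattern_counts(m):
    """Count how many of the m! orderings map to each of the 2**(m-1) patterns."""
    counts = [0] * (2 ** (m - 1))
    for perm in permutations(range(m)):
        counts[pattern_index(perm)] += 1
    return counts


for m in (3, 4, 5):
    counts = pattern_counts(m)
    # Every ordering lands in exactly one pattern, so the counts sum to m!.
    assert sum(counts) == factorial(m)
    print(m, counts)
```

Under this reading, each row has 2^(m-1) entries and sums to m!, which ties the two integer tables together: 4, 8 and 16 patterns sharing 6, 24 and 120 orderings respectively.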

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).