Submitted: 15 December 2023

Posted: 18 December 2023

Abstract

Keywords:

1. Introduction

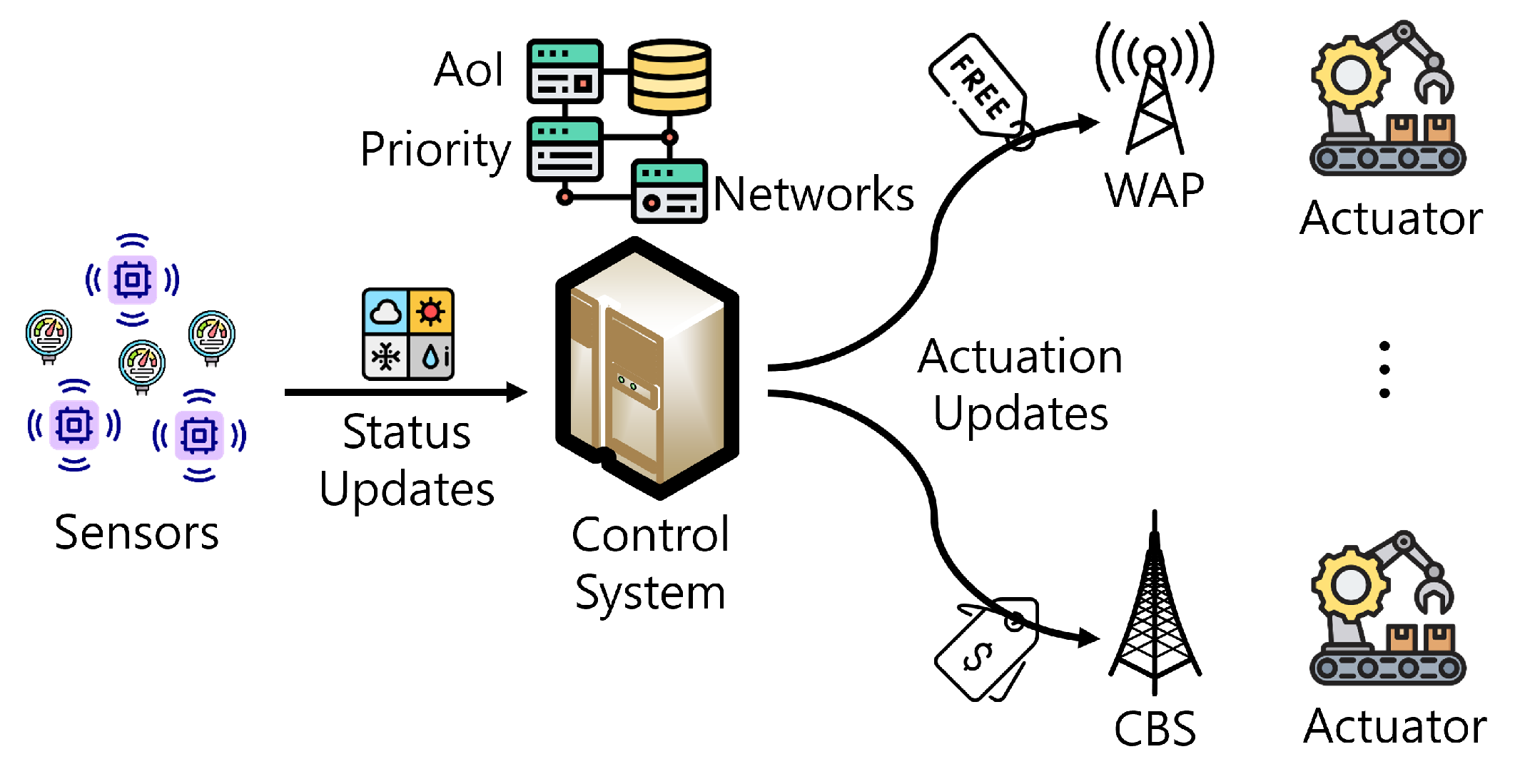

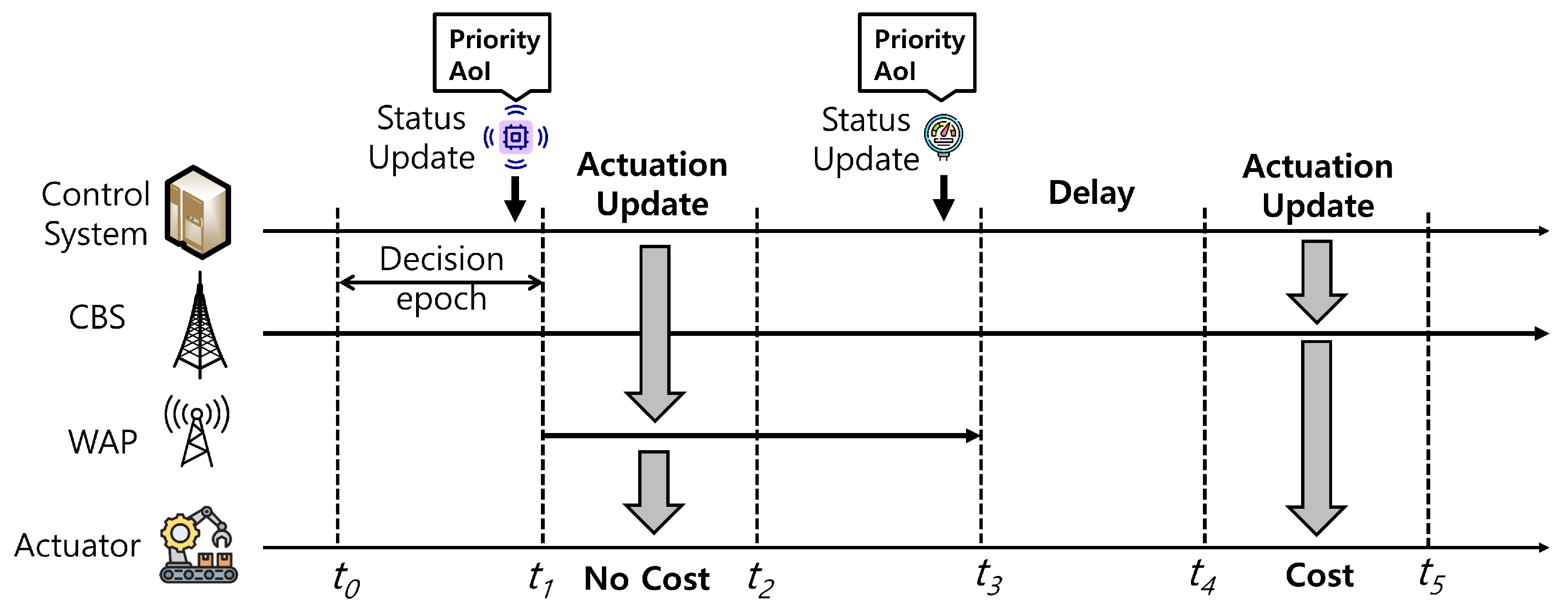

2. System Model

3. Problem Formulation

3.1. State Space

3.2. Action Space

3.3. Transition Probability

3.4. Reward and Cost Functions

4. QL-based Actuation Update Algorithm

Algorithm 1: Steps for update

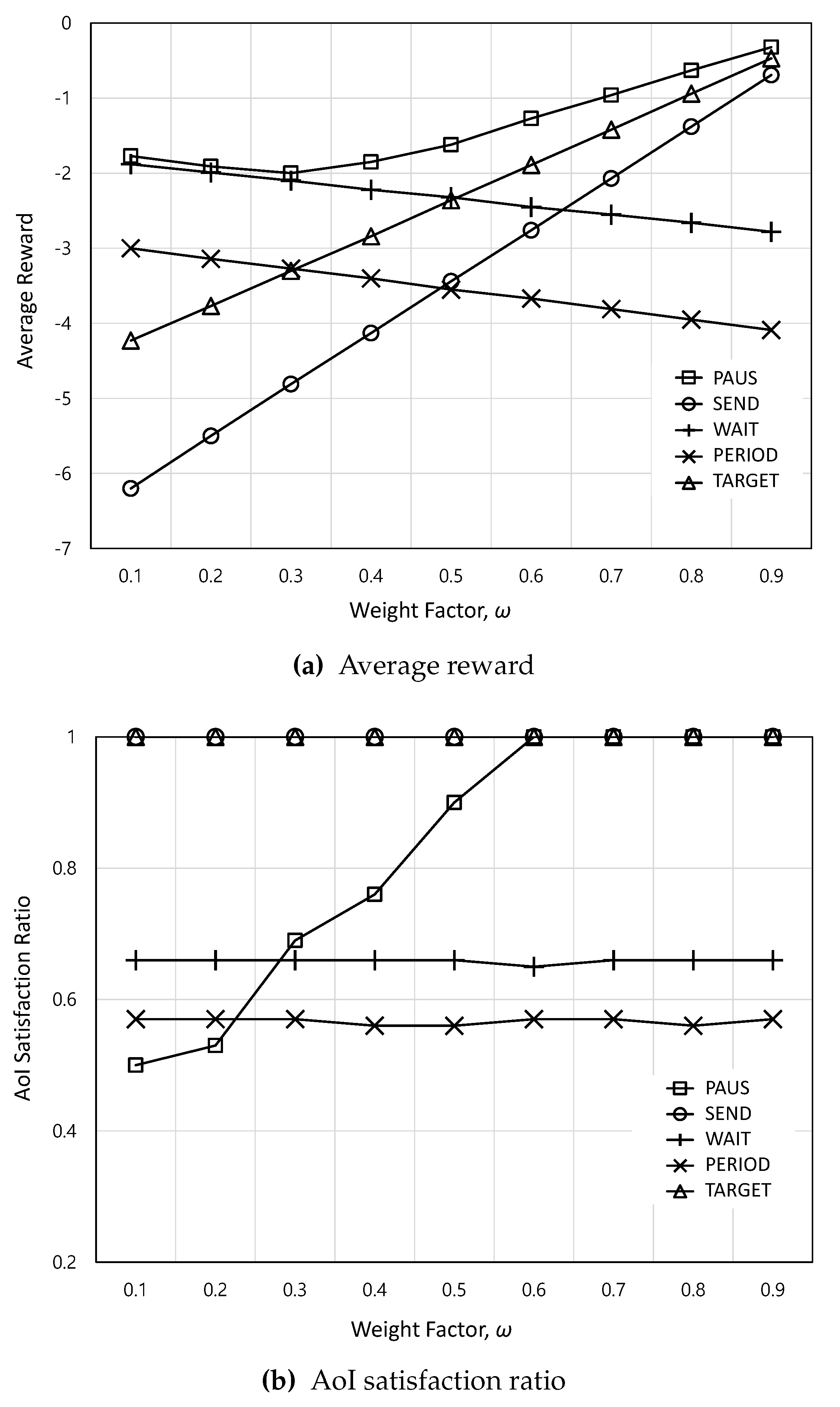

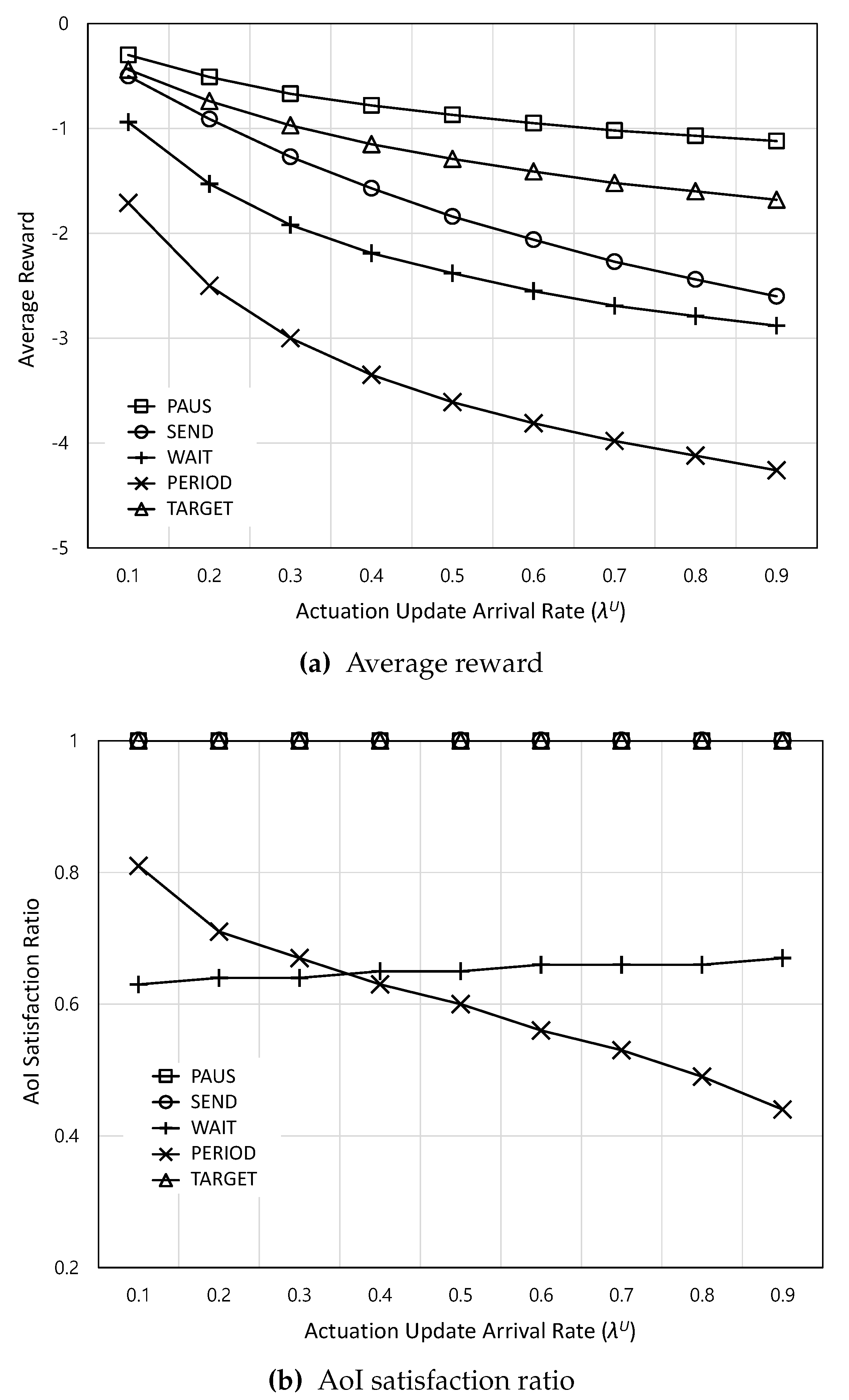

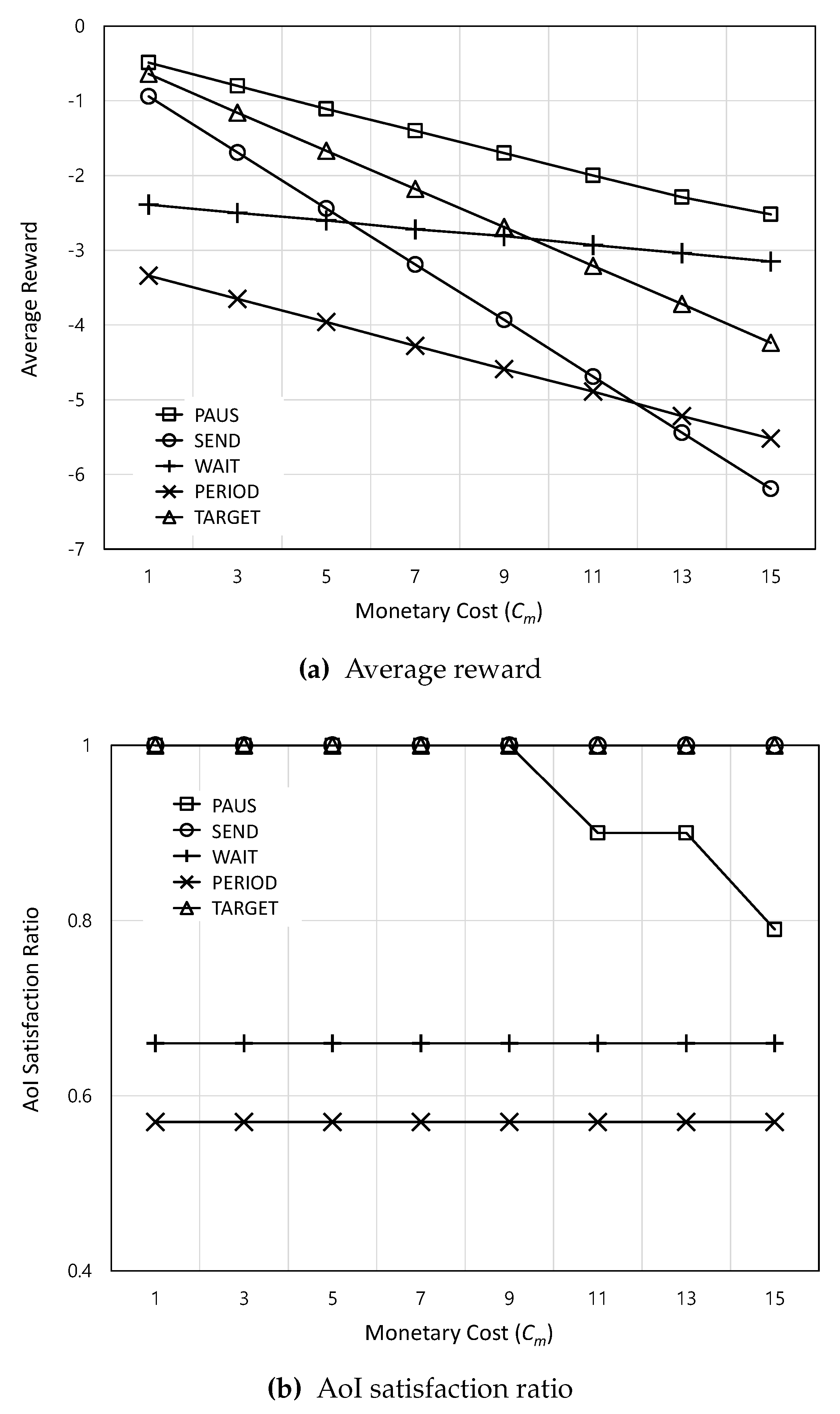

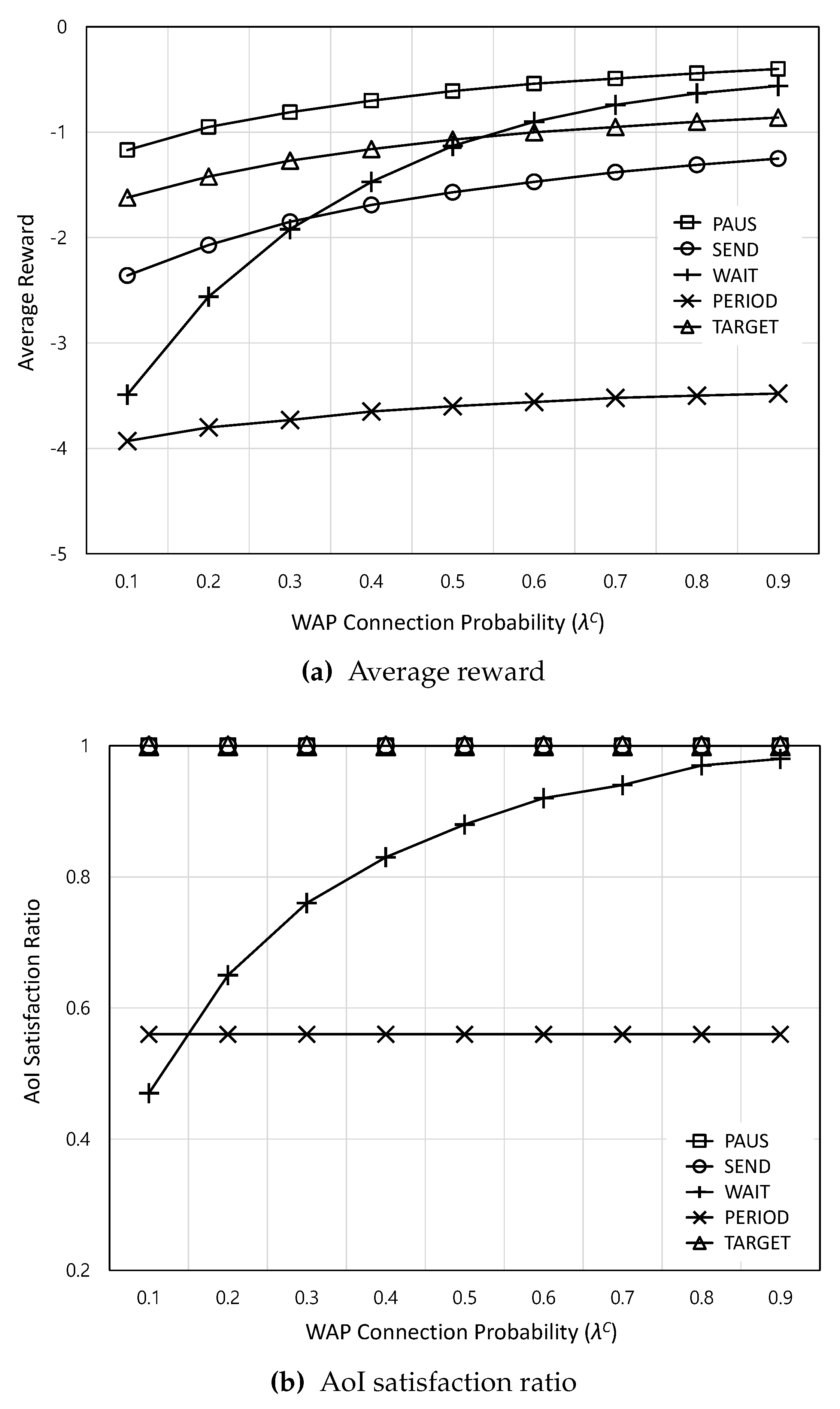

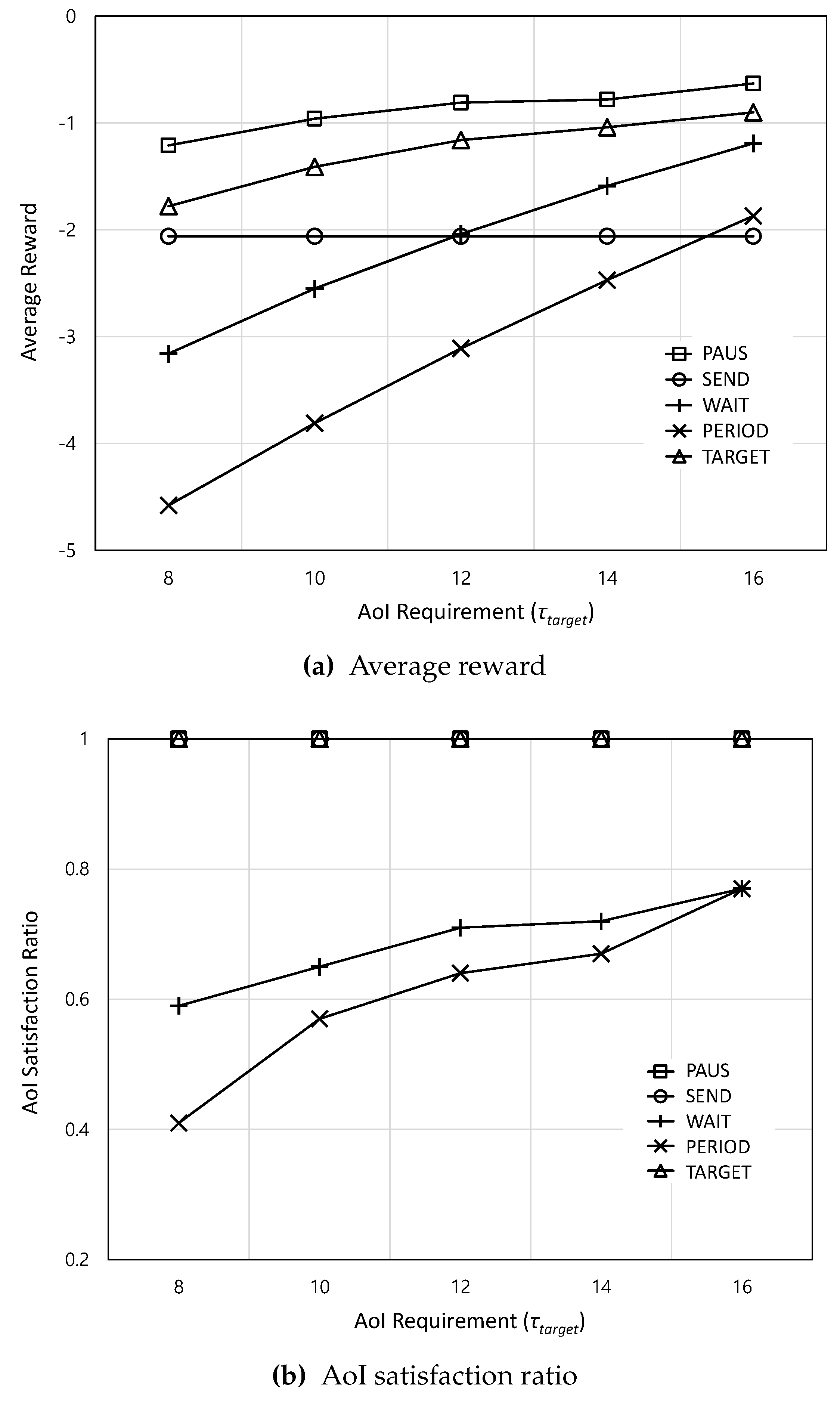

5. Performance Analysis Results

6. Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).