Submitted: 19 December 2023

Posted: 20 December 2023

Abstract

Keywords:

1. Introduction

- We provide a solution for the recovery of time-varying cost weights, essential for analyzing real-world animal or human motion.

- Our method operates online, which makes it suitable for a broad range of real-time computation problems. This contrasts with previous online IOC methods, which mainly focused on constant cost weights for discrete-time system control.

- We introduce a neural network and state observer-based framework for online verification and refinement of estimated cost weights. This innovation addresses the critical need for solution uniqueness and robustness against data noise in IOC applications.

2. Problem Formulation

2.1. System description and problem statement

2.2. Maximum principle in forward optimal control

2.3. Analysis of the IOC problem

- What happens when a different feature function is selected?

- Does the given set in the IOC problem have a unique solution?
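One reason uniqueness cannot be taken for granted can be illustrated with a short derivation. It assumes, as is common in IOC but not stated explicitly here, that the cost is a weighted sum of feature functions; the symbols $w(t)$, $\phi$, $f$, and $c$ are notation introduced for illustration only.

```latex
% Assumed cost structure: weights w(t) acting on a feature function \phi
J_w(x,u) = \int_0^T w(t)^\top \phi\big(x(t),u(t)\big)\,dt,
\qquad \dot{x}(t) = f\big(x(t),u(t)\big).

% Scaling the weights by any constant c > 0 scales the cost of every trajectory equally:
J_{c w}(x,u) = c\,J_w(x,u)
\;\Longrightarrow\;
\arg\min_{u} J_{c w} = \arg\min_{u} J_w .
```

Under this assumption, observed optimal trajectories can determine the weights at most up to a positive scale factor, so some normalization (for example, fixing one weight or the norm of the weight vector) is needed before uniqueness can be discussed.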

3. Adaptive Observer-Based Neural Network Approximation of Time-Varying Cost Weights

3.1. Construction of the observer

4. Neural Network-Based Approximation of Time-Varying Cost Weights

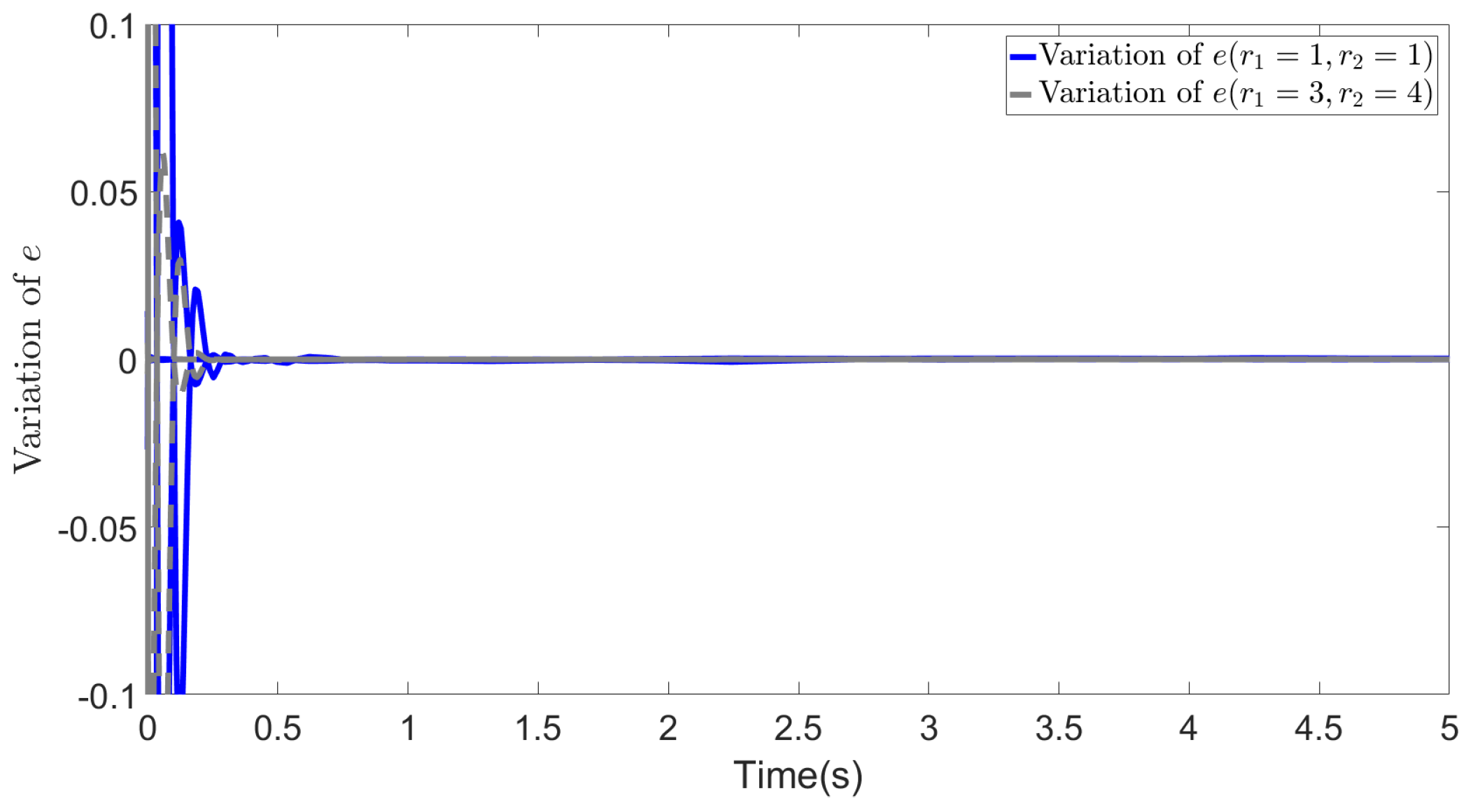

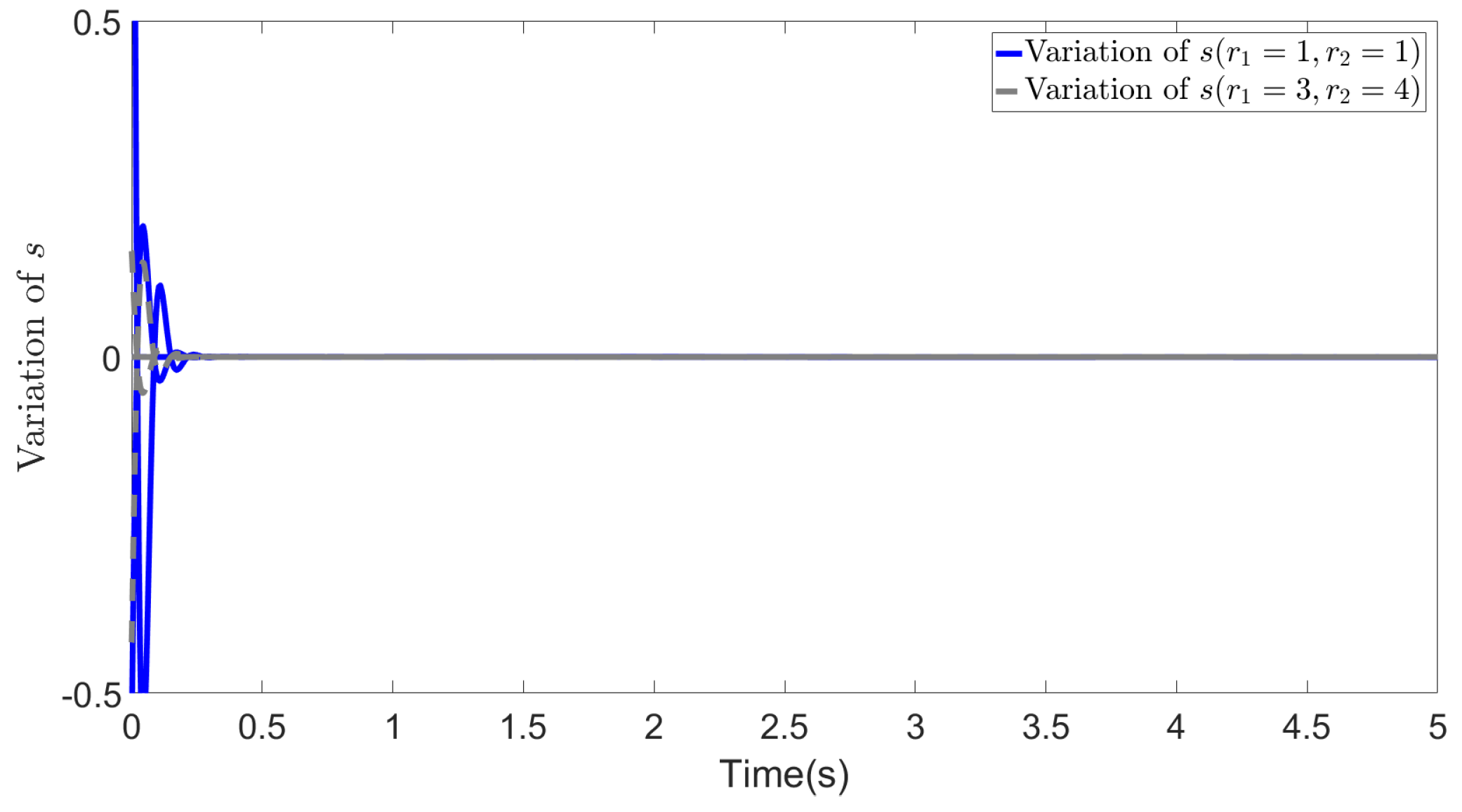

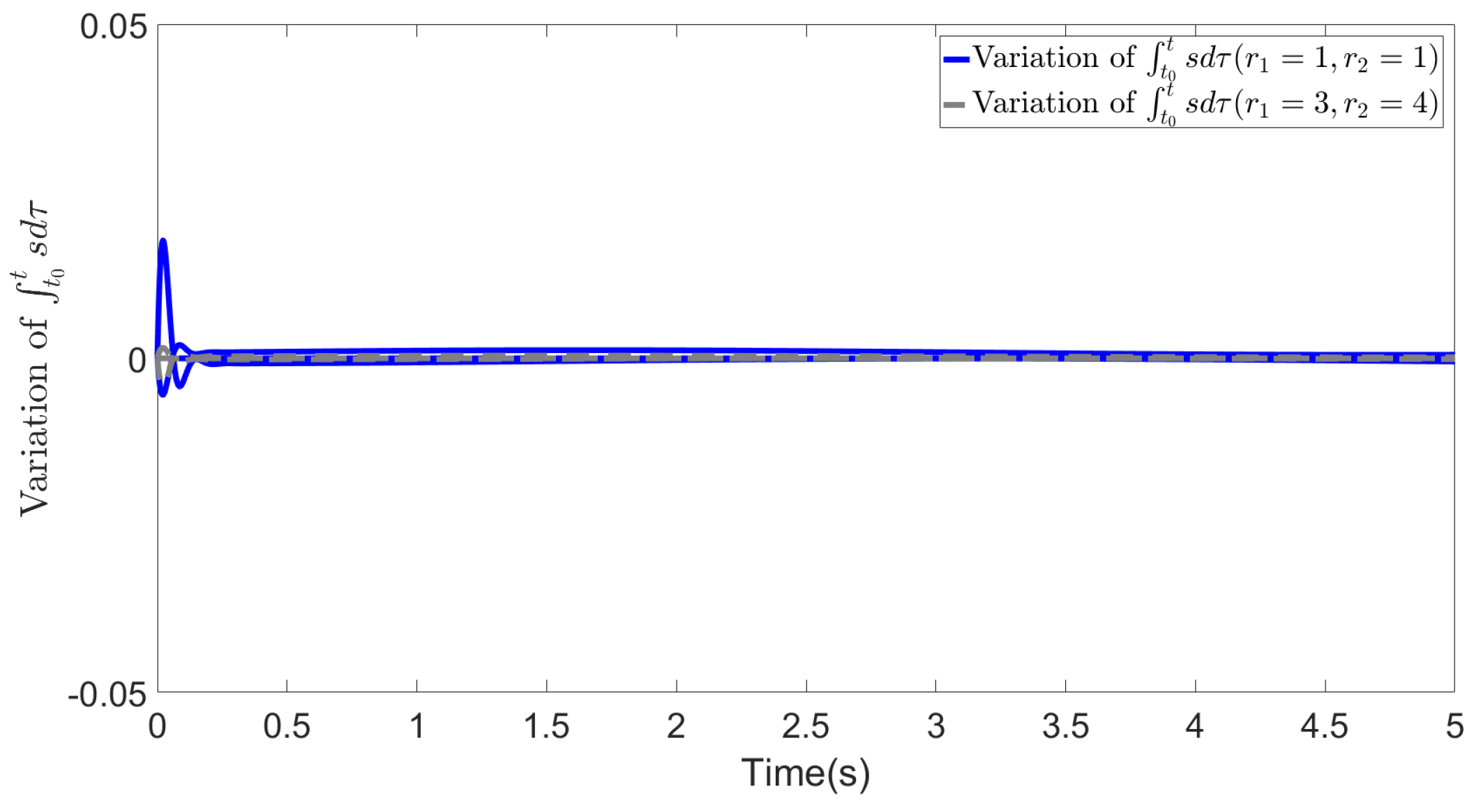

- become UUB after a time point (, and )

- The change in approaches zero

- Matrix C defined below will become a full row rank matrix.
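The full-row-rank condition in the last item can be checked numerically. The following is a minimal sketch, not the paper's procedure: the definition of C is not reproduced here, so `C_good` and `C_bad` are hypothetical matrices, and `matrix_rank` and `has_full_row_rank` are illustrative helper names.

```python
def matrix_rank(rows, tol=1e-9):
    """Rank of a matrix (given as a list of rows) via Gaussian elimination."""
    a = [list(map(float, r)) for r in rows]
    n_rows = len(a)
    n_cols = len(a[0]) if a else 0
    rank = 0
    for col in range(n_cols):
        if rank == n_rows:
            break
        # Partial pivoting: pick the largest entry in this column
        # among the rows that have not been used as pivots yet.
        pivot = max(range(rank, n_rows), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) <= tol:
            continue  # no usable pivot in this column
        a[rank], a[pivot] = a[pivot], a[rank]
        # Eliminate the entries below the pivot.
        for r in range(rank + 1, n_rows):
            factor = a[r][col] / a[rank][col]
            for c in range(col, n_cols):
                a[r][c] -= factor * a[rank][c]
        rank += 1
    return rank


def has_full_row_rank(rows, tol=1e-9):
    """True if the rows are linearly independent (rank equals row count)."""
    return bool(rows) and matrix_rank(rows, tol) == len(rows)


# Hypothetical examples (not taken from the paper).
C_good = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]  # independent rows
C_bad = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0]]   # second row is 2x the first

print(has_full_row_rank(C_good))
print(has_full_row_rank(C_bad))
```

With noisy data, the tolerance `tol` decides when a near-zero pivot counts as zero, so in practice it would need to be chosen relative to the noise level; a singular-value-based rank test is a common alternative.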

4.1. Construction of the neural network

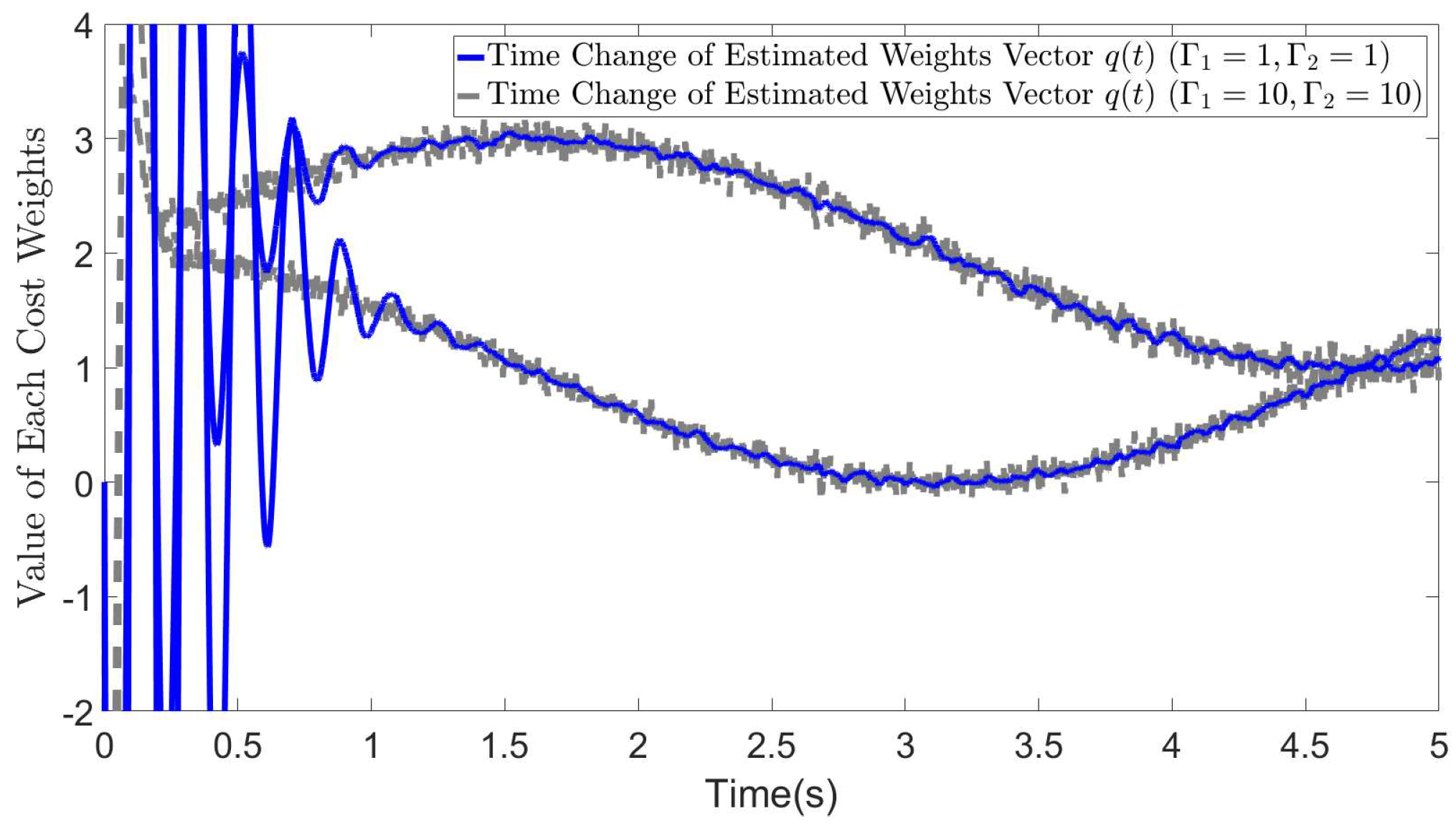

4.2. Tuning law of the neural network for the estimation of

5. Simulations

5.1. Basic simulation conditions

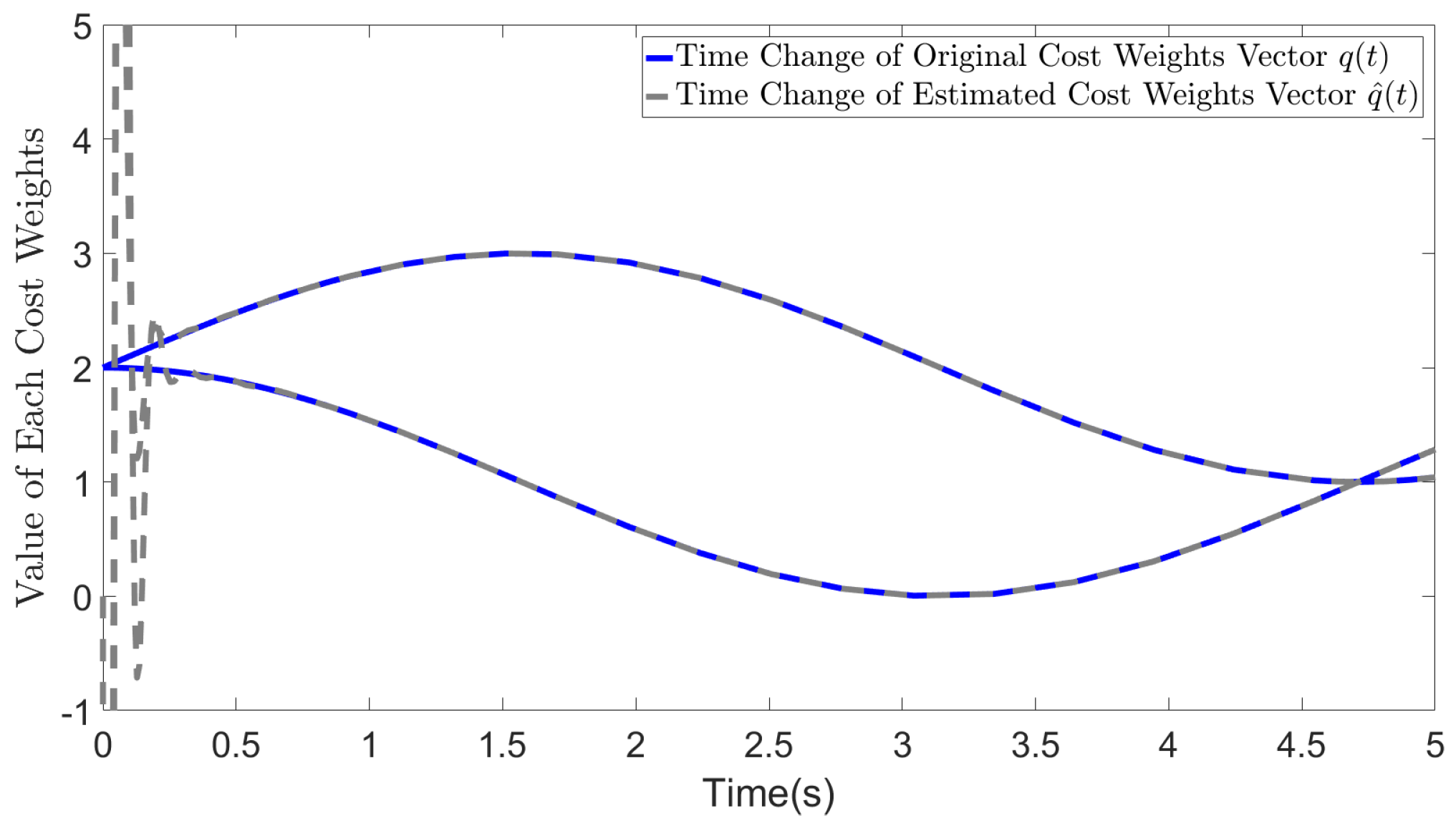

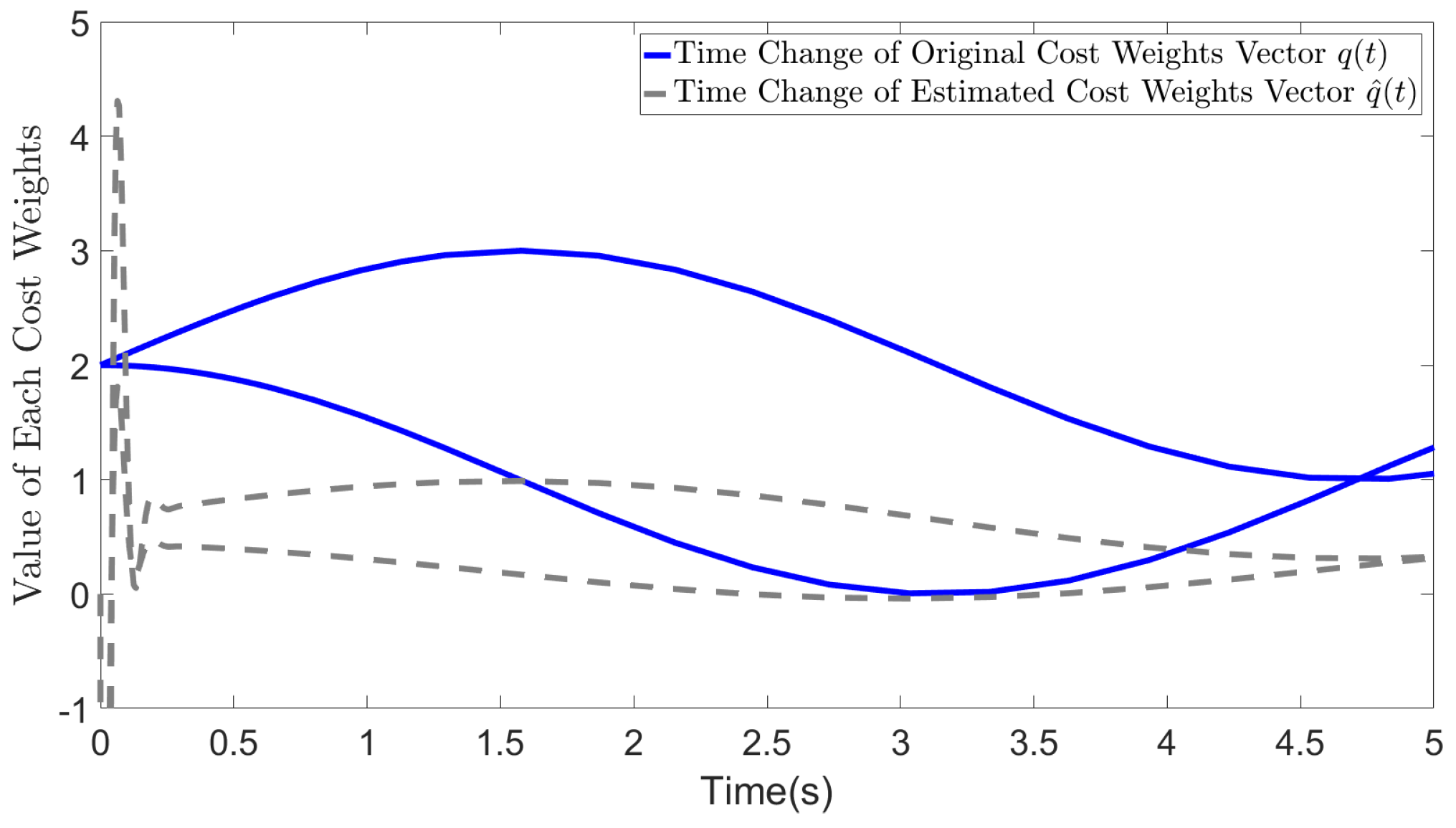

5.2. Results

6. Discussion

6.1. Robustness of the proposed method to noisy data

6.2. Calculation complexity and real-time calculation

6.3. Advantages of using

7. Conclusion

8. Proof of Theorem 1

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).