1. Introduction

Observing hydrological phenomena in the natural environment is not an easy task, especially in hard-to-reach areas. Maintaining a monitoring network is expensive, which is why the number of traditional measurement stations decreases every year. At the same time, there is growing interest in alternative sources of information, such as satellite images or images from surveillance cameras. This has become possible mainly thanks to the rapid development of machine learning systems in recent years, which can automatically extract a great deal of valuable information from such material. Models of this type are increasingly used in hydrology, e.g. to monitor water levels in rivers (Bentivoglio et al. 2021; Muhadi et al. 2021), to monitor floods (Lo et al. 2015; Lopez-Fuentes et al. 2017) or to determine flood zones (Tedesco and Radzikowski 2023). Pally and Samadi (2022) used, among others, R-CNN, YOLOv3 and Fast R-CNN for flood image classification and semantic segmentation. Erfani et al. (2022) developed ATLANTIS, a benchmark for semantic segmentation of waterbody images.

The main directions of hydrological research are directly related to process and time series modeling, but machine learning is increasingly used to obtain and prepare data (Shen et al. 2021).

One of the important factors influencing water flow in a river is the riverbed roughness coefficient. It reflects the frictional resistance acting along the wetted perimeter of the channel and the resistance created by objects directly exposed to the flow. Large objects such as stones, boulders, bushes, trees and logs significantly increase bed roughness. During higher flows, such objects may cause water damming and pose a flood risk (Fisher & Dawson 2003; Djajadi 2009; Nagy 2017). For this reason, a good estimate of roughness has a significant impact on the results of hydrological models. However, in catchments with high flow dynamics, for example mountain catchments, this parameter changes rapidly due to material transported by floods and vegetation developing intensively within the riverbeds. Tracking these changes with traditional methods would require frequent measurements and would be extremely expensive. One alternative for collecting and updating such data is the use of various types of images. Modern computer vision systems make it possible to extract semantic information from such data, for example about the type of land cover. Semantic segmentation algorithms are used for this type of task. In addition to dedicated segmentation systems, such as state-of-the-art panoptic segmentation (Carion et al. 2020), general-purpose segmentation systems are gaining popularity. One of the newest general models with zero-shot transfer capability is the Segment Anything Model (SAM), released by Meta in April 2023 (Kirillov et al. 2023).

Thanks to its general-purpose structure and training data, SAM can be used for many tasks. In medical imaging, SAM can be used to segment different structures and tissues, such as tumors, blood vessels and organs (Mazurowski et al. 2023; He et al. 2023), and this information can assist doctors in diagnosis and treatment planning. So far, most publications exploring SAM for image segmentation have appeared in the field of medicine. In agriculture, SAM can be used to monitor crop health and growth: by segmenting different areas of a field or crop, it can identify areas that require attention, such as those affected by pest infestation or nutrient deficiency (Li et al. 2023a). In the Earth sciences, SAM has been used for supraglacial lake mapping (Luo et al. 2023), but for flood inundation mapping we found only information about its potential use (Li et al. 2023). This is therefore an area of application that remains to be explored.

The goal of SAM is automatic promptable segmentation with minimal human intervention. It is a deep learning model trained on the SA-1B dataset, the largest segmentation dataset to date, with over 1 billion masks across 11 million carefully curated images (Kirillov et al. 2023). The model achieves outstanding zero-shot performance, in numerous cases surpassing previous fully supervised results. Zero-shot transfer refers to SAM's ability to adapt to new tasks and object categories without explicit training on, or prior exposure to, specific examples (Ren et al. 2023). The model predicts object masks only and does not generate labels. SAM was trained to return a segmentation mask for any prompt, i.e. a point, a bounding box, text or any other information indicating what to segment in an image. An alternative is automatic segmentation of the whole image using a grid of points, in which case SAM tries to segment every object in the image. SAM is available as source code in a public repository and as a web application (https://segment-anything.com/demo#), which makes it possible to test its capabilities without coding. Each user can therefore choose the version of the model appropriate to his or her experience with machine learning. One such potential user group is hydrologists, and it is from their perspective that we analyzed the possibilities of using SAM.
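The grid-based automatic mode mentioned above can be illustrated with a short sketch of how a regular grid of point prompts might be laid out over a photo. This is a minimal, plain-Python illustration under our own assumptions, not code from the SAM repository: the function name `point_grid` is hypothetical, and placing each point at the centre of a grid cell, as well as the example of 32 points per side, reflect our understanding of the reference automatic mask generator's defaults and should be checked against the repository.

```python
def point_grid(n_per_side: int, width: int, height: int) -> list[tuple[float, float]]:
    """Return n_per_side**2 (x, y) pixel coordinates spread evenly over an image.

    Hypothetical helper: each point sits at the centre of one cell of an
    n_per_side x n_per_side grid, so no prompt lies exactly on the image border.
    """
    if n_per_side < 1:
        raise ValueError("n_per_side must be at least 1")
    # Normalised cell centres in (0, 1): 1/(2n), 3/(2n), ..., (2n-1)/(2n)
    centres = [(2 * i + 1) / (2 * n_per_side) for i in range(n_per_side)]
    # Scale to pixels; rows (y) vary in the outer loop, columns (x) in the inner one
    return [(cx * width, cy * height) for cy in centres for cx in centres]


if __name__ == "__main__":
    # Hypothetical 1920 x 1080 photo with 32 points per side (assumed default)
    grid = point_grid(32, width=1920, height=1080)
    print(len(grid))  # 1024 point prompts
```

Each point then acts as a separate prompt, which is why this mode can return masks for objects anywhere in the image without any user input.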

The aim of this study is to use the Segment Anything Model in hydrology to determine changes in the roughness of a mountain riverbed based on images taken in different vegetation periods over the years 2010-2023. The second goal is to estimate the extent of water in the streambed based on the same images. The results can be used to quickly determine flood zones during flood events.

2. Materials and Methods

This study focuses on a simple and automatic solution for obtaining data that can be used in hydrological modeling. From a modeling perspective, the type of cover within the riverbed is particularly important. In mountain catchments covered with forest, this type of data is difficult to obtain from orthophotomaps and aerial photos alone. The best solution is field measurements or local monitoring. Unfortunately, manual methods are time-consuming and expensive. Therefore, there is a need for tools and methods that allow automatic or semi-automatic analysis of other data sources, e.g. photographic documentation or local video monitoring.

2.1. Study area

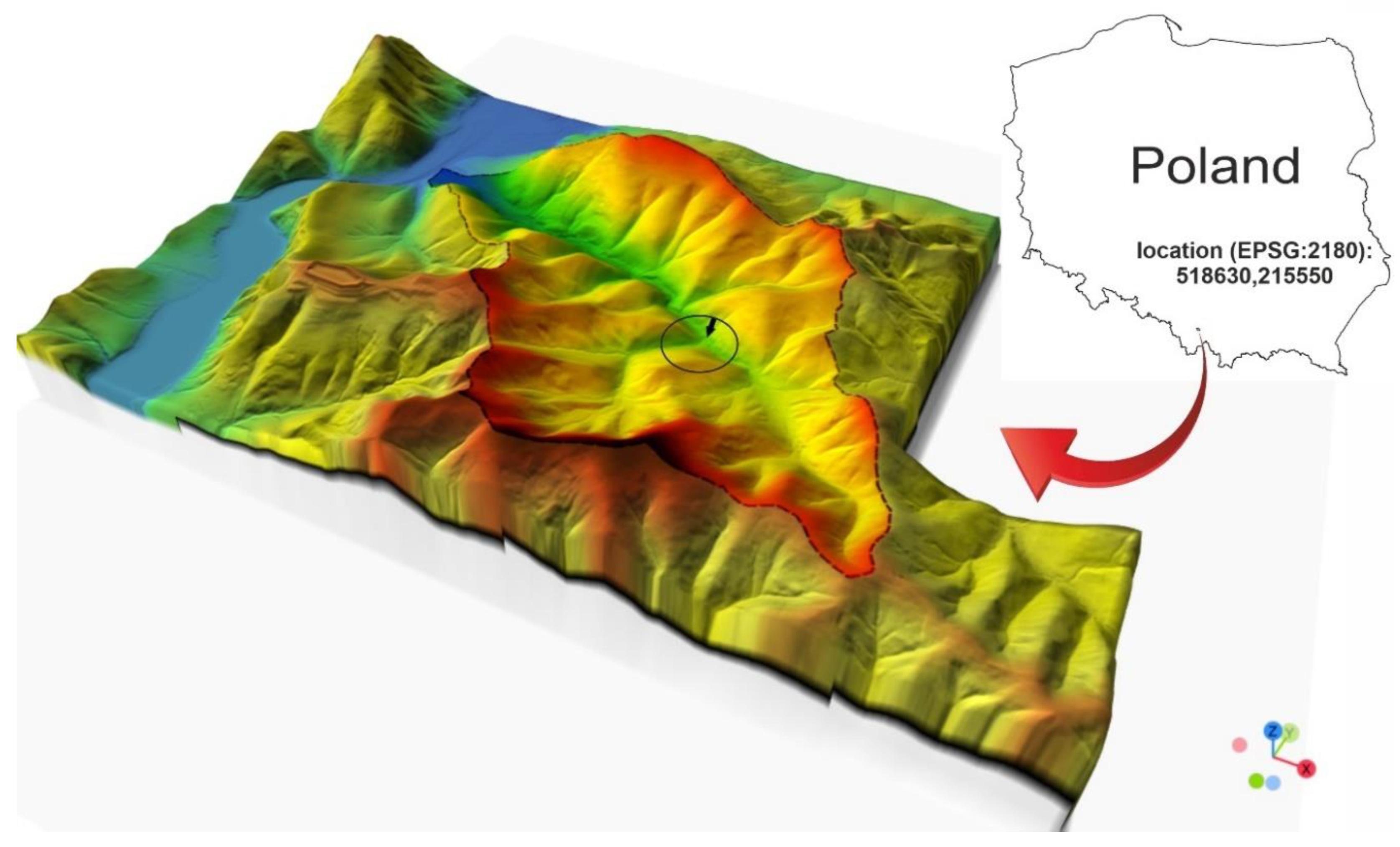

The study area is the catchment of the Wielka Puszcza river (Polish for "Great Forest"), located in southern Poland in the Mały Beskid Mountains. The stream is a right-bank tributary of the Soła River, which in turn is a right-bank tributary of the Vistula, the largest river in the country. The mouth of the Wielka Puszcza stream lies in the backwater of the dam reservoir in the town of Czaniec (Figure 1). The Wielka Puszcza is a mountain catchment characterized by steep slopes, impermeable soils and a dense river network. About 90% of the catchment is forest, 7% agricultural land and 3% built-up area.

Table 1.

Physiographic parameters of the Wielka Puszcza river catchment.

| Parameter | Value |
|---|---|
| Catchment area | 20.05 km² |
| Main watercourse length | 9.44 km |
| Watercourse slope | 43.75 ‰ |
| River source elevation | 710 m a.s.l. |
| Mouth elevation | 297 m a.s.l. |

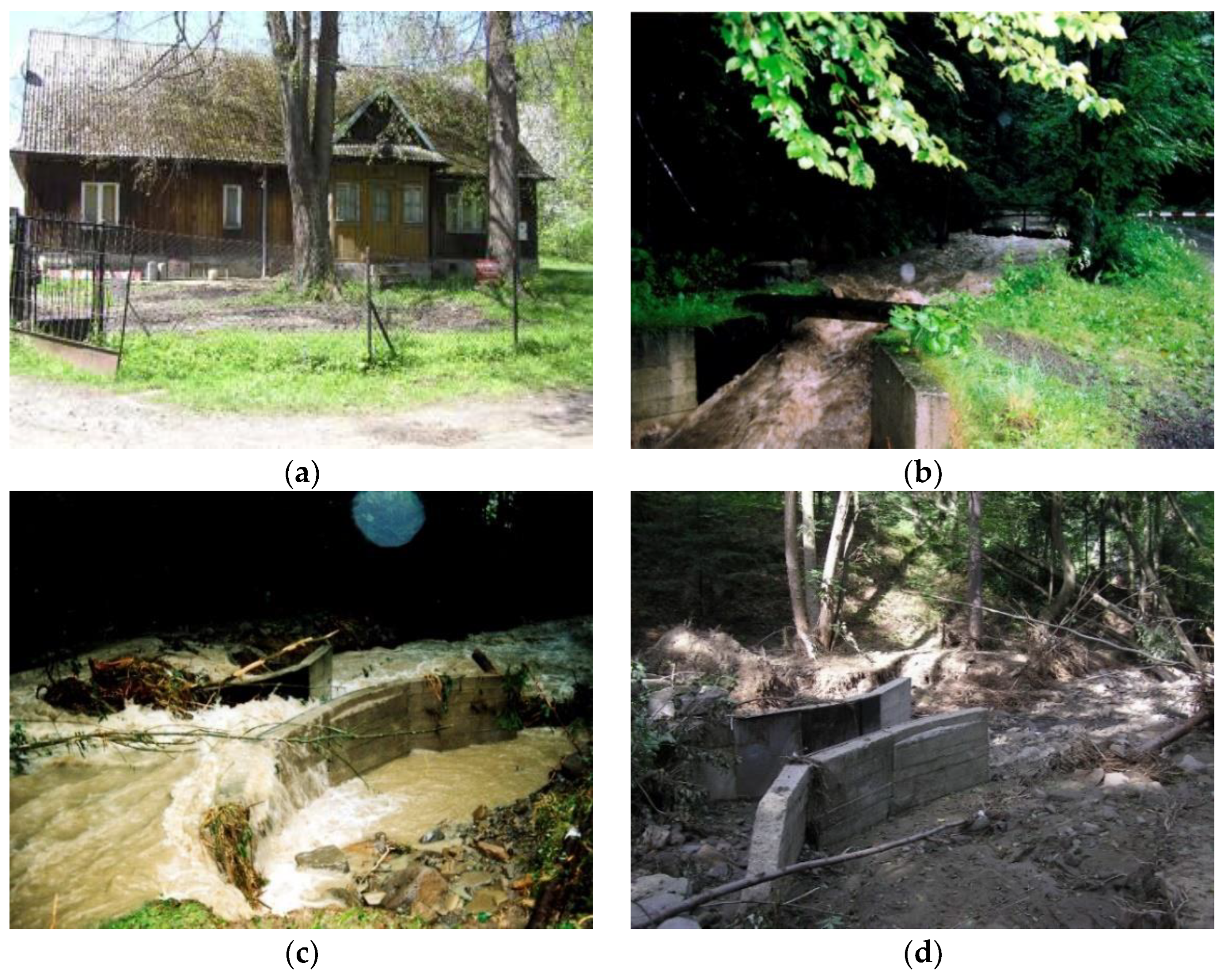

The Wielka Puszcza stream has been a research catchment of the Cracow University of Technology since the 1970s. In the 1990s, meteorological observations were carried out at 7 measurement stations, together with measurements of water levels and flows in one cross-section. In 2005, the measuring station was destroyed by catastrophic rainfall and flooding and was subsequently decommissioned (Figure 2).

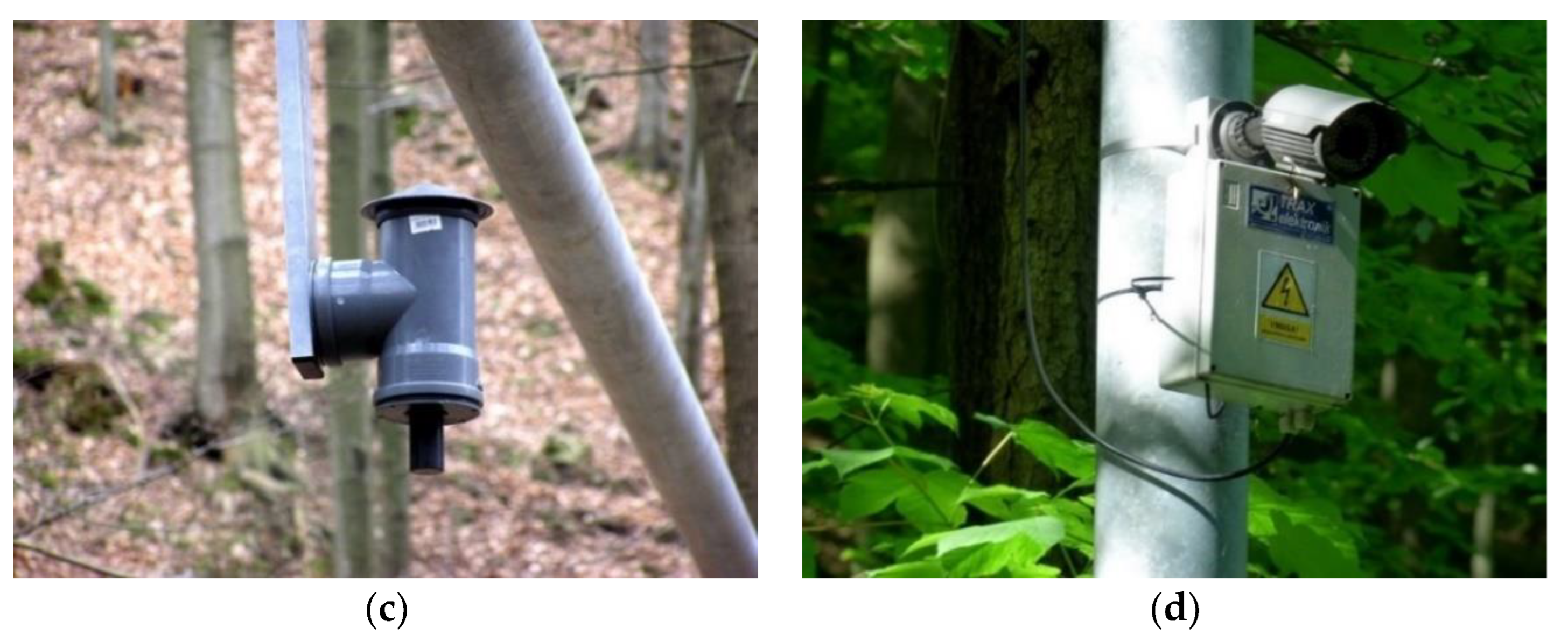

In 2017, hydrological measurements resumed on a limited basis at a new location. Currently, water levels are observed with a radar sensor and monitored visually with images taken by an industrial camera operating in visible light and infrared (day and night). Two Hellmann trough rain gauges are also installed, providing automatic measurement of precipitation depth every 10 minutes (Figure 3). The data are collected in a recorder and sent to a server at the Cracow University of Technology, which makes it possible to observe the water level in the stream and the precipitation online.

2.2. Data

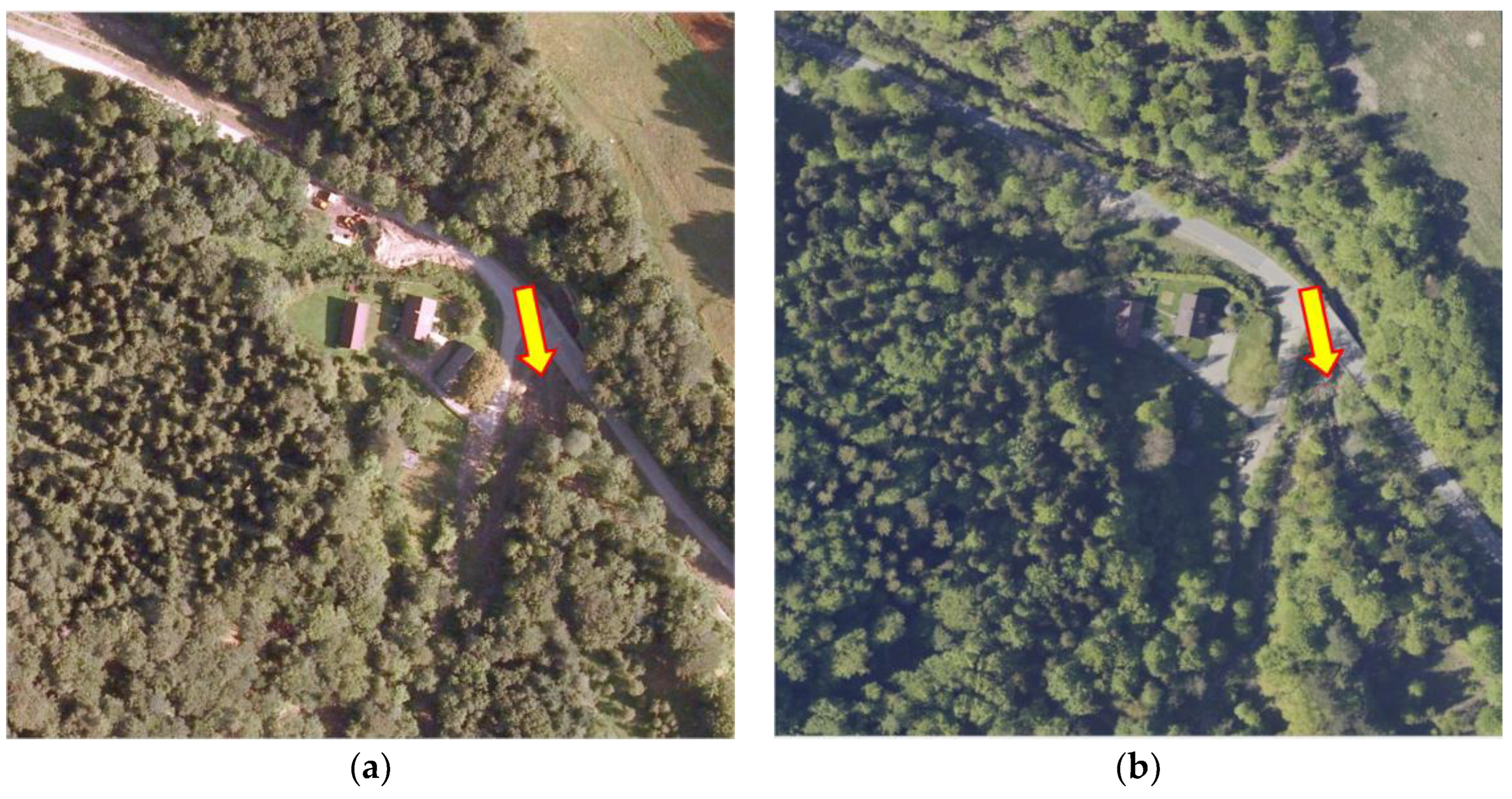

The analyzed area is a mountainous catchment with no continuous measurement system. The only data sources are publicly available orthophotos and satellite images. In the considered period 2010-2023, orthophotos are available only for 2010 and 2023. Unfortunately, due to the nature of the area, orthophotos, even at high resolution, are not suitable for assessing changes in the riverbed (Figure 4). Therefore, other data sources are needed to determine these changes.

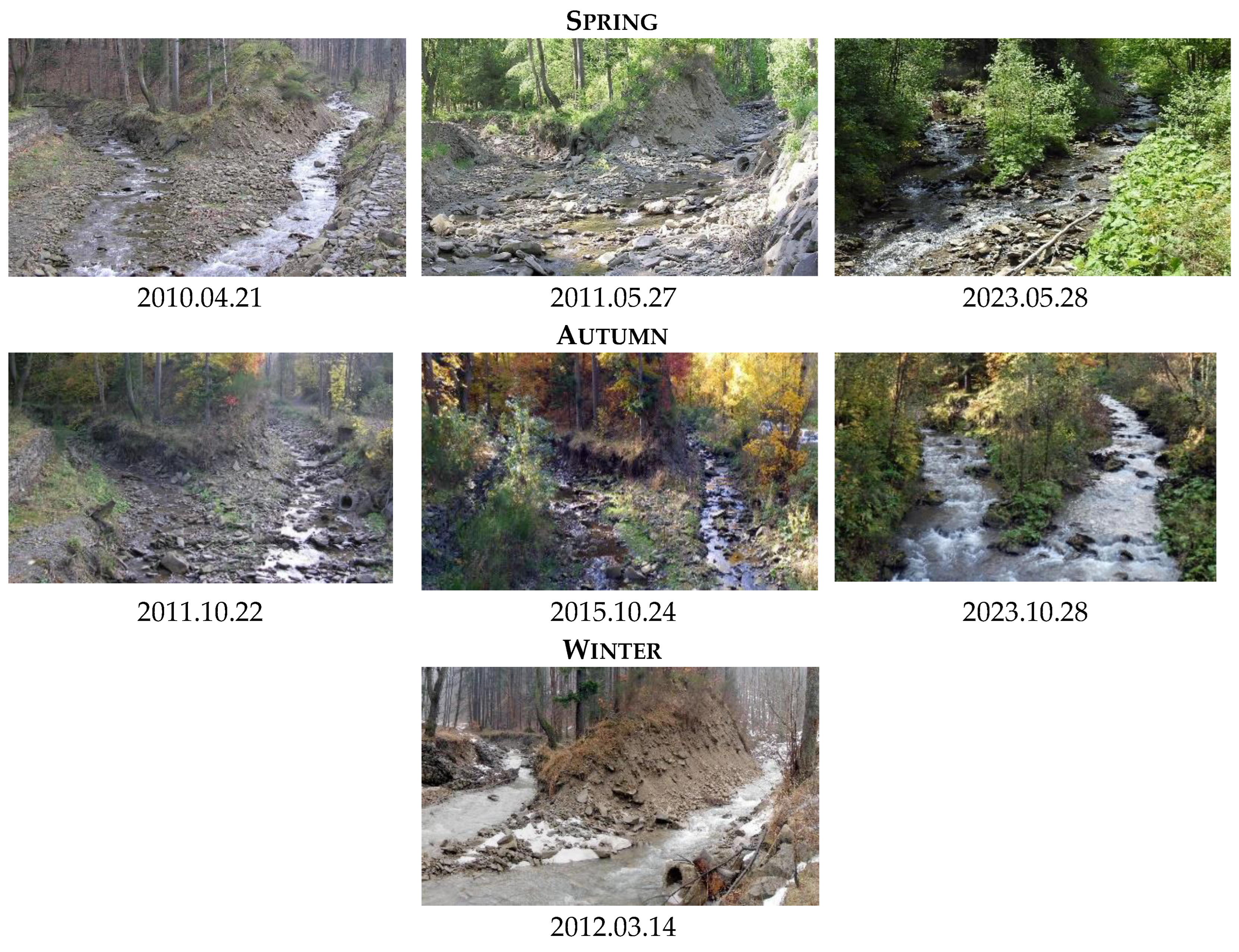

The input data for the Segment Anything Model consist of photographic documentation by Marek Bodziony taken in the catchment at the junction of the Wielka Puszcza and Roztoka streams. The photos come from three seasons: spring (2010, 2011 and 2023), autumn (2011, 2015 and 2023) and winter (2012) (Figure 5). The photographic documentation is available at https://holmes.iigw.pl/~mbodzion/zaklad/wielka_puszcza/.

2.3. Methods

The presented workflow is divided into two parts that use identical data and tools but serve different purposes. The analysis is based on photos showing the valley of a mountain stream at the junction of two watercourses. The SAM automatic segmentation model recognizes objects in the photos but does not assign labels to them. Labeling was therefore performed manually by assigning each segment to one of the categories specified in Table 2. In each case, the segmentation analysis was limited to the area defined as the streambed.

The aim of the first part of the analysis was to estimate the roughness of the riverbed based on the segmentation results. The water category was omitted in this analysis. The aim of the second part was to assess to what extent the presented model can be used to estimate the extent of floods. Only the water category was used in this analysis.

To determine the riverbed roughness index ($n_R$), the relationship between Manning's roughness coefficient ($n$) and the coverage area of each adopted category was assumed to be an area-weighted mean:

$$n_R = \frac{\sum_{i=1}^{k} n_i A_i}{\sum_{i=1}^{k} A_i}$$

where:

- $n_R$ - riverbed roughness index [m$^{-1/3}$ s],

- $n_i$ - Manning's roughness coefficient for category $i$ [m$^{-1/3}$ s],

- $A_i$ - coverage area of category $i$ [px],

- $k$ - number of categories.
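Assuming the riverbed roughness index is the area-weighted mean of the category coefficients (our reading of the relationship described above, since the index carries the same units as $n$), it can be computed from per-category pixel areas as in the sketch below. The function name, category keys and Manning's $n$ values are hypothetical placeholders for illustration only; they are not the Table 2 values used in the study.

```python
def roughness_index(areas_px: dict[str, int], n_by_category: dict[str, float]) -> float:
    """Area-weighted mean Manning's n of the streambed (assumed form of the index).

    areas_px      -- pixel area of each cover category inside the streambed
    n_by_category -- Manning's n [m^-1/3 s] assigned to each category
    """
    total = sum(areas_px.values())
    if total <= 0:
        raise ValueError("the streambed contains no classified pixels")
    missing = set(areas_px) - set(n_by_category)
    if missing:
        raise KeyError(f"no Manning's n given for: {sorted(missing)}")
    # Dividing by the total area keeps the result in the units of n
    return sum(n_by_category[c] * a for c, a in areas_px.items()) / total


if __name__ == "__main__":
    # Hypothetical placeholder coefficients, NOT the Table 2 values
    n_placeholder = {"earth_channel": 0.025, "stones": 0.040,
                     "grass": 0.030, "shrubs_trees": 0.070}
    # Hypothetical pixel areas for one photo
    areas = {"earth_channel": 600, "stones": 300, "grass": 50, "shrubs_trees": 50}
    print(round(roughness_index(areas, n_placeholder), 3))  # 0.032
```

Because pixel areas enter only as shares of the total, the index does not require conversion to physical units. Other composite-roughness formulas exist (see e.g. Djajadi 2009) and could be substituted for the weighted mean if preferred.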

The online version of SAM supports three main segmentation modes: fully automatic, bounding box and point. The first two were tested in this study. In addition, automatic segmentation was performed in the Google Colaboratory (Colab) environment using the Jupyter notebook provided by the SAM authors.

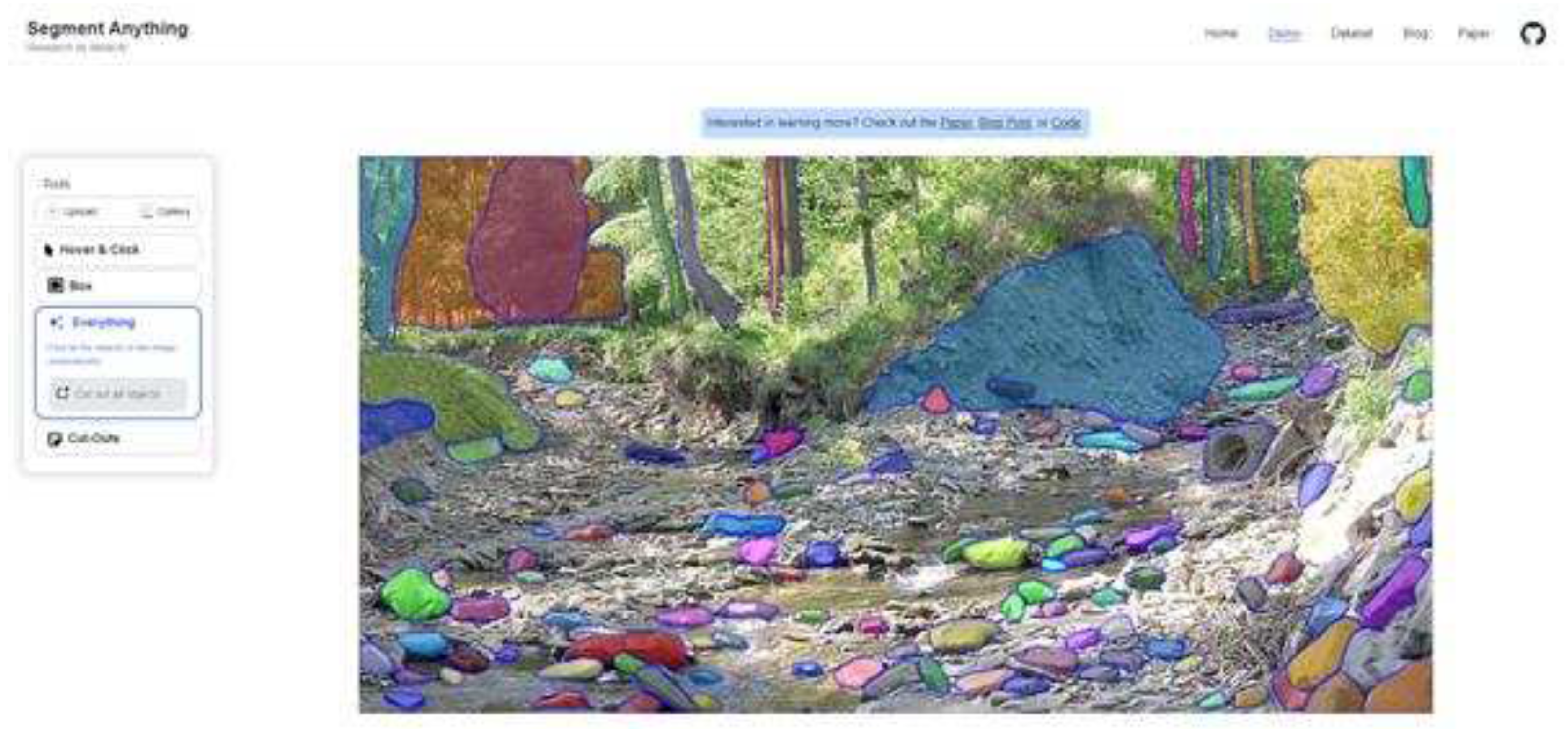

SAM online: The fully automatic segmentation mode generates masks automatically from a regular grid of points. An example of this mode applied to a selected image is shown in Figure 6.

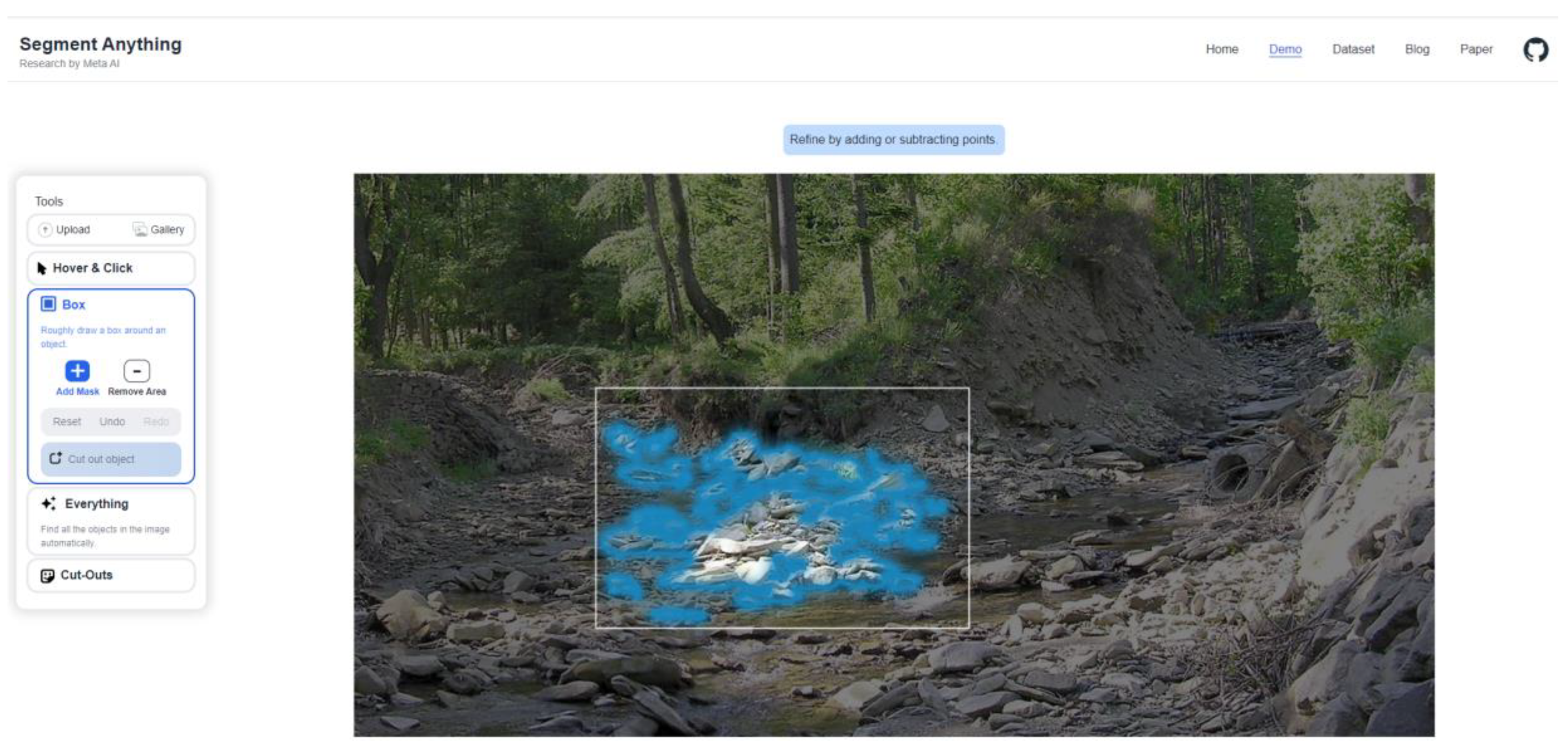

Bounding box online: To segment only a specific part of an image, the best solution is to draw a bounding box around the object of interest, which SAM then segments. An example of this mode applied to a selected image is shown in Figure 7.

Image segmentation with the bounding box method requires manual selection of objects. It is therefore not the best solution for automatically delineating land cover types in a watercourse area: because objects must be indicated each time, the method is time-consuming and unsuited to the needs of the presented analysis. Tests of the segmentation of water-covered areas also showed its low efficiency, so the method was abandoned already at the testing stage.

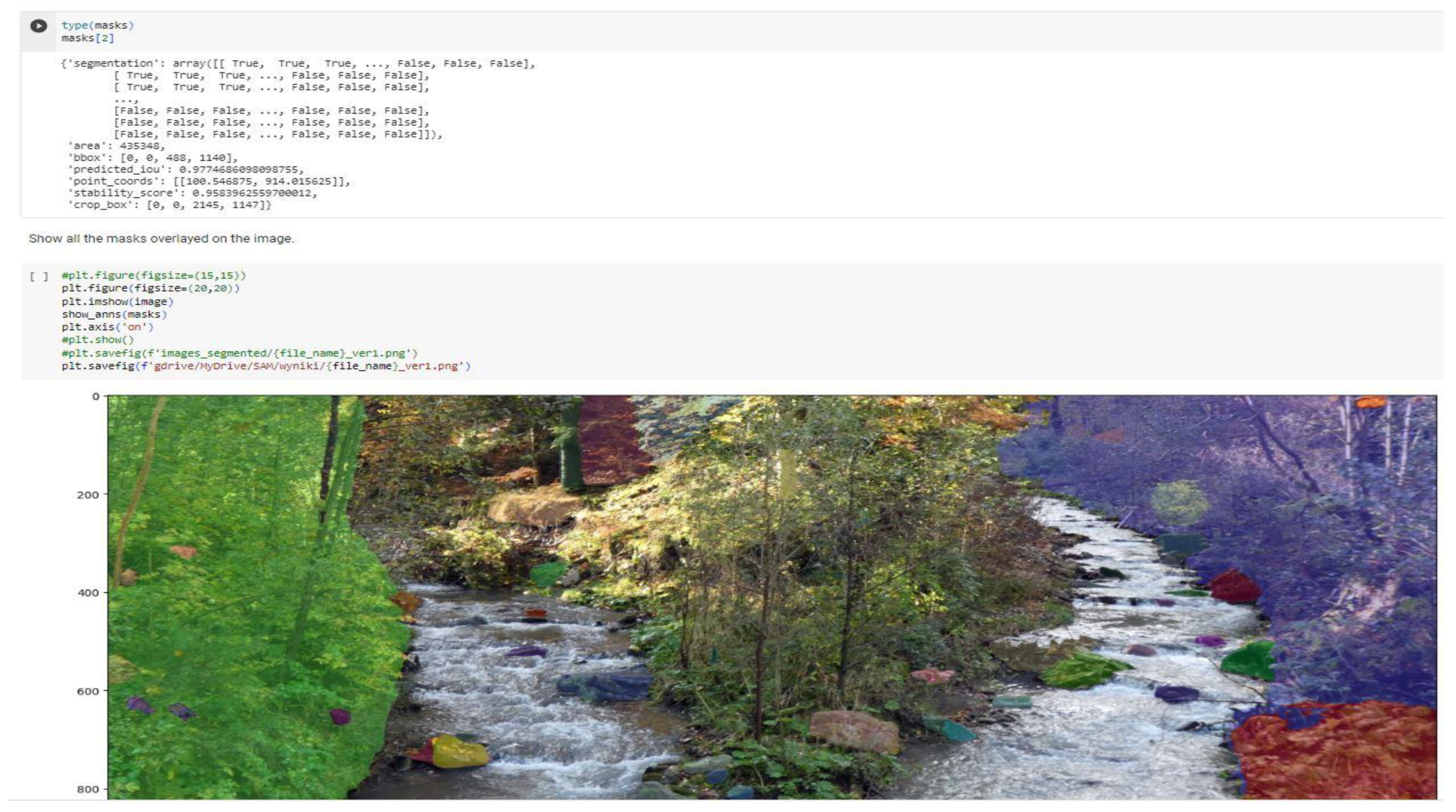

SAM Colab: Google Colaboratory (Colab) is a public service for running Jupyter notebooks directly in the browser without any configuration (Figure 8). It provides free GPU access and facilitates code sharing. Image segmentation was performed using the sample Jupyter notebook provided in the project repository. The model parameters were not modified or tuned for this analysis. The results obtained in this way may therefore be suboptimal, but for the purposes of these tests the user of the tools is assumed to be a hydrologist without in-depth knowledge of machine learning methods and model optimization. Image segmentation produces a segmentation mask, as well as a file containing the identifiers and geometry of the masks.

Four categories, each with a decisive influence on the riverbed roughness parameter, were adopted to identify the type of cover in the riverbed area: earth channel, stones, grass and shrubs/trees. The earth channel category includes areas of small stones and gravel forming a uniform surface. Objects distinguished from the earth channel area by their size and shape were classified as stones. Grass denotes areas covered with low grassy vegetation. Areas of medium and tall vegetation were included in the shrubs/trees category. Image analysis in SAM was based on the segmentation of the adopted categories, whose areas were expressed in image units (pixels), not in physical units. Areas for which SAM did not assign a mask most often corresponded to the earth channel and water categories. When estimating riverbed roughness, they were assigned to the earth channel category.
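The pixel bookkeeping described above can be sketched as follows. This is a minimal illustration, assuming the SAM masks have already been flattened into a single label image with one mask ID per pixel (overlapping masks would first need to be resolved) and that the streambed area is available as a boolean mask. The function and variable names, the `0` code for unmasked pixels and the category strings are hypothetical. Following the procedure above, pixels without a mask are counted as earth channel; treating masks that were never assigned a category the same way is our own assumption.

```python
from collections import Counter

NO_MASK = 0  # hypothetical label value for pixels SAM left without a mask


def category_areas(label_img, streambed, mask_to_category, default="earth_channel"):
    """Count pixels per cover category inside the streambed.

    label_img        -- 2-D list of mask IDs, one per pixel (NO_MASK = no mask)
    streambed        -- 2-D list of booleans, True inside the analysed streambed
    mask_to_category -- manual assignment of mask IDs to category names
    default          -- category for pixels with no mask or an unassigned mask
    """
    if len(label_img) != len(streambed):
        raise ValueError("label image and streambed mask differ in height")
    counts = Counter()
    for label_row, bed_row in zip(label_img, streambed):
        if len(label_row) != len(bed_row):
            raise ValueError("label image and streambed mask differ in width")
        for mask_id, inside in zip(label_row, bed_row):
            if not inside:
                continue  # only the streambed area is analysed
            if mask_id == NO_MASK or mask_id not in mask_to_category:
                counts[default] += 1  # no (assigned) mask: counted as earth channel
            else:
                counts[mask_to_category[mask_id]] += 1
    return dict(counts)


if __name__ == "__main__":
    labels = [[0, 1, 1],
              [2, 2, 0],
              [3, 3, 3]]
    bed = [[True, True, True],
           [True, True, True],
           [False, False, True]]
    # Hypothetical manual assignment of mask IDs to categories
    mapping = {1: "stones", 2: "grass", 3: "shrubs_trees"}
    print(category_areas(labels, bed, mapping))
```

The resulting pixel counts are exactly the per-category areas needed for a roughness index expressed in image units.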

The image segmentation results were assessed using a confusion matrix comparing actual (ground truth) segments with predicted segments (Wang et al. 2020; Reinke et al. 2021). To evaluate SAM performance, Intersection over Union was calculated from the True Positive (TP), False Negative (FN) and False Positive (FP) values of the confusion matrix as IoU = TP / (TP + FP + FN) (Hernández et al. 2022; Li et al. 2023).

Since SAM is not a classic semantic segmentation model, its output consists of masks, which our method assigns to the appropriate categories. Because this assignment is manual and the mask shapes reproduce object outlines very well, false positives do not occur, and IoU reduces to TP / (TP + FN).
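The IoU computation can be illustrated for a single category with the sketch below, which compares a ground-truth mask with a predicted mask pixel by pixel. It is a minimal plain-Python illustration (the function name is ours), using the general form IoU = TP / (TP + FP + FN); in the setup described above FP = 0, but keeping the general form lets the same function handle cases where false positives do occur. Returning 1.0 when a category is absent from both masks is our choice, consistent with how IoU = 1 is interpreted for categories missing from a photo.

```python
def iou(truth, pred):
    """Intersection over Union of two equal-sized binary masks (2-D lists).

    IoU = TP / (TP + FP + FN); returns 1.0 when the category is absent from
    both masks (nothing to find and nothing falsely found).
    """
    if len(truth) != len(pred):
        raise ValueError("masks differ in height")
    tp = fp = fn = 0
    for t_row, p_row in zip(truth, pred):
        if len(t_row) != len(p_row):
            raise ValueError("masks differ in width")
        for t, p in zip(t_row, p_row):
            t, p = bool(t), bool(p)
            if t and p:
                tp += 1  # category pixel correctly found
            elif p:
                fp += 1  # pixel wrongly assigned to the category
            elif t:
                fn += 1  # category pixel that was missed
    denom = tp + fp + fn
    return 1.0 if denom == 0 else tp / denom


if __name__ == "__main__":
    truth = [[True, True, False], [False, True, False]]
    pred = [[True, False, False], [False, True, True]]
    print(iou(truth, pred))  # 0.5 (TP = 2, FP = 1, FN = 1)
```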

3. Results

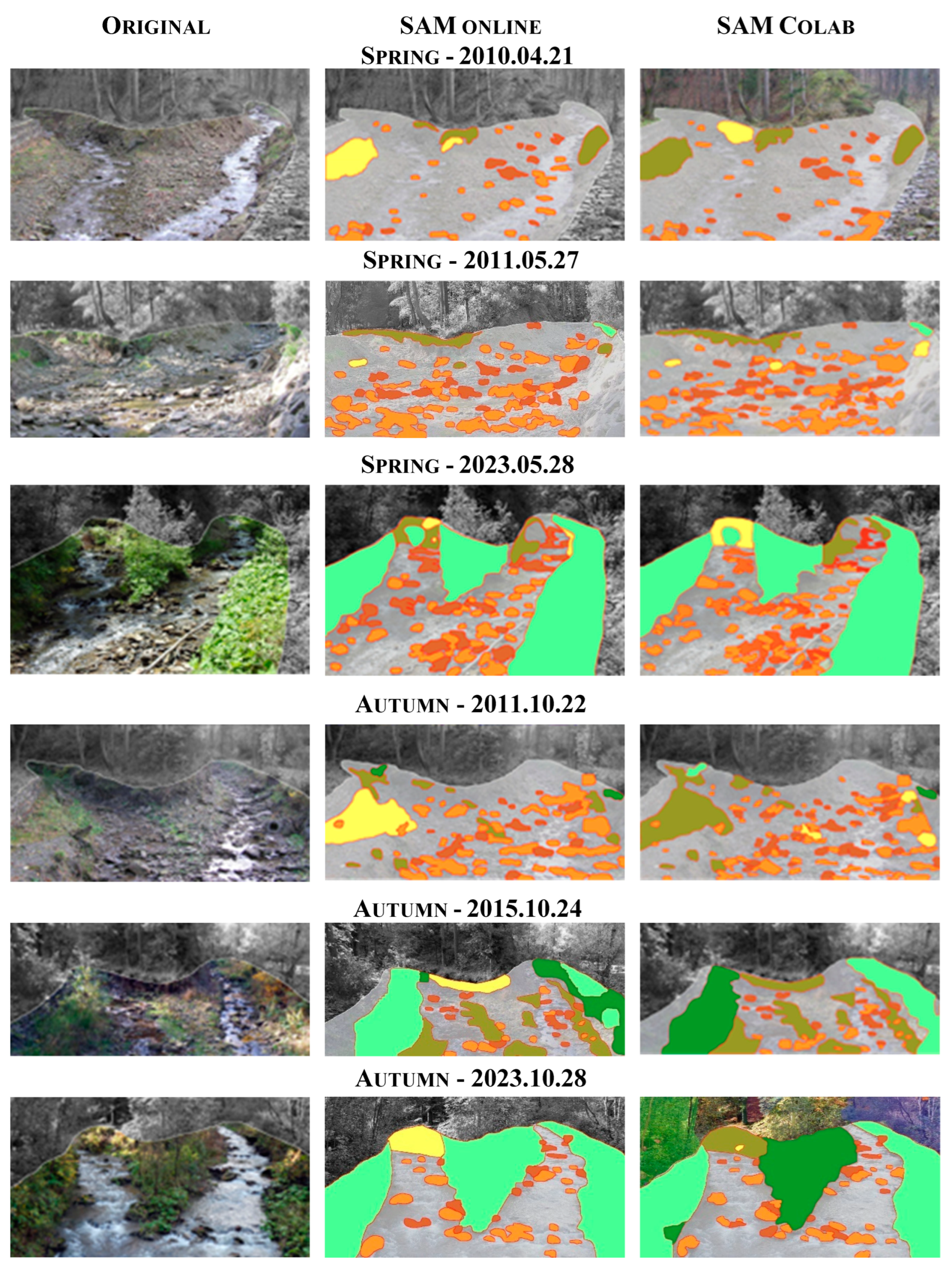

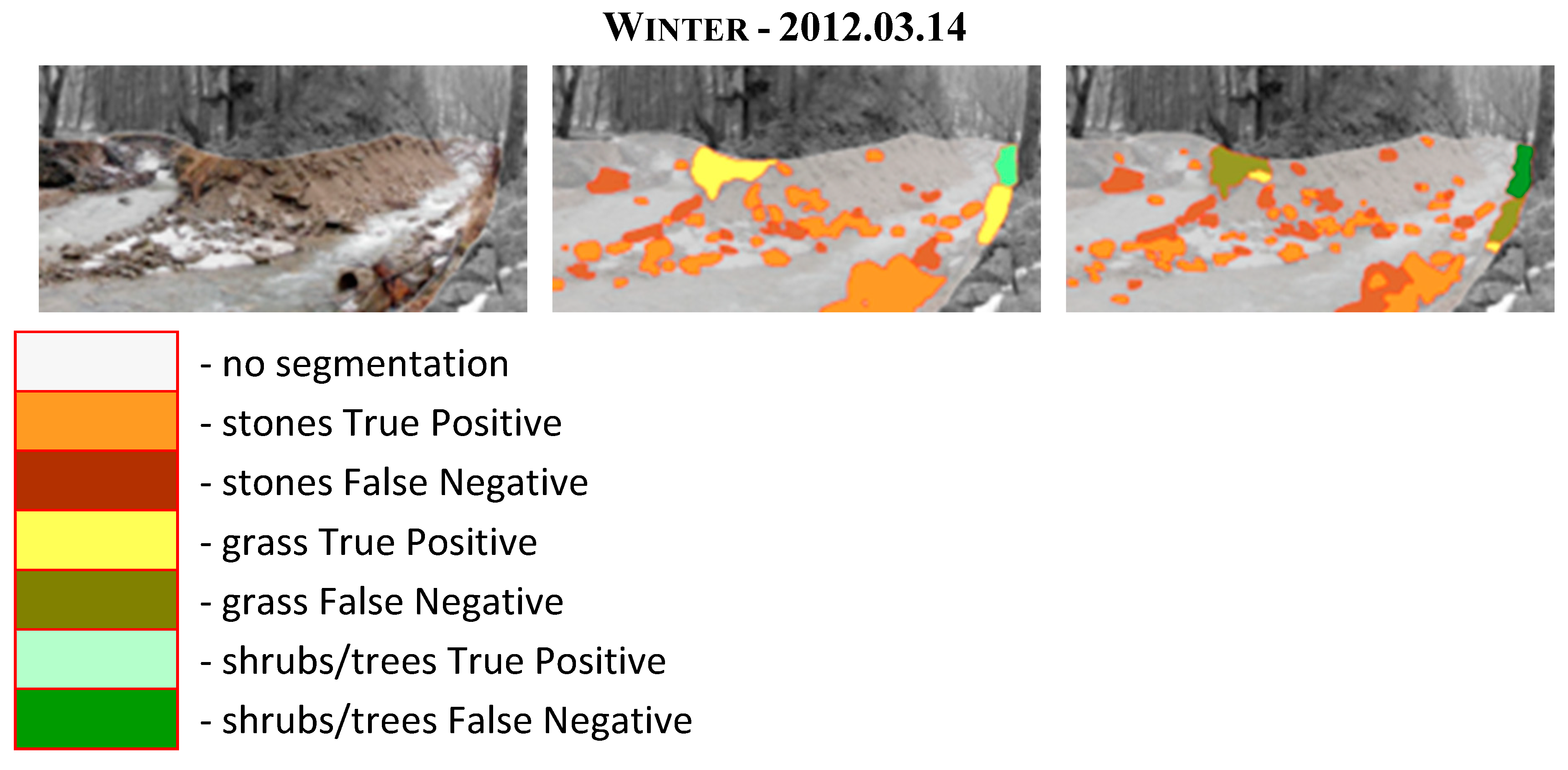

The main result of the presented analysis is the segmentation performance of the two SAM versions on images of a mountain streambed. Table 3 presents two basic descriptive statistics of Intersection over Union (IoU). The mean IoU summarizes overall performance, where 0 is the worst and 1 the best possible result.

The IoU standard deviation is a measure of model stability. With the exception of the water and grass categories, the results are similar for the two SAM modes used.
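To illustrate how the two statistics in Table 3 behave, the sketch below summarizes per-image IoU values for one category. It is an assumption that both statistics are computed over the images for each category, and the paper does not state whether the sample or population standard deviation is used, so both are offered. The values in the example are hypothetical: scores clustered at 0 and 1 give a mean near 0.5 together with a large standard deviation, so a moderate mean alone does not show whether a model segments a category consistently.

```python
import statistics


def iou_summary(values, sample=True):
    """Mean and standard deviation of per-image IoU values for one category.

    sample=True uses the sample (n - 1) standard deviation, False the population one.
    """
    mean = statistics.fmean(values)
    sd = statistics.stdev(values) if sample else statistics.pstdev(values)
    return mean, sd


if __name__ == "__main__":
    # Hypothetical IoU values that are either 0 or 1
    mean, sd = iou_summary([0.0, 1.0, 1.0, 0.0])
    print(f"mean = {mean:.2f}, sd = {sd:.2f}")  # mean = 0.50, sd = 0.58
```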

The best segmentation results for both models were obtained for the shrubs/trees category. However, this is also the category with the greatest variability of results. The most unstable results were obtained when estimating flood extent (water category), where SAM online obtained a mean IoU of 0.49, while SAM Colab practically did not recognize this category, with an IoU close to the minimum. For the stones category, the two models obtained similar results, but in this case both were very stable, with IoU standard deviations in the range of 0.06-0.14. This is understandable, because SAM is better suited to segmenting specific objects than larger areas with a complex visual structure.

In the task of estimating riverbed roughness, SAM online performed well, considering the complexity of the images used. SAM Colab performed slightly worse, especially for the grass category. In the task of estimating flood extent, SAM online performed well, obtaining a mean IoU of 0.49, while SAM Colab practically did not segment this category. It should be noted that only some of the photos had a clearly visible water surface, which made this an extremely difficult task.

4. Discussion

Some regularities can be noticed in the image segmentation results presented in Table 3. The best results in terms of Intersection over Union were obtained for categories that can be classified as physical objects. Here, these are stones, whose mean IoU for both methods is 0.61-0.68 and whose standard deviation is very low (0.06-0.14). Even smaller variances, with slightly worse results, were obtained for the earth channel. For segments covering larger areas (grass, shrubs/trees), significantly better identification is achieved with SAM online. This is especially visible in Figure 9, in the photographs from 2023.10.28 and 2012.03.14, for areas covered with grass and shrubs.

In general, grass constitutes the smallest share of the area and is segmented well by SAM online, but much worse by SAM Colab. This can be seen in the images from 2011.10.22 and 2023.10.28 (Figure 9). Large stones pose the least problem for the segmentation model and are probably the best identified objects in the images. This can be explained by the fact that SAM is designed to segment objects rather than uniform areas. Vegetation, if present in the image, is recognized well, probably because its spectral signature differs significantly from that of the background.

Detailed quantitative segmentation results for SAM online and SAM Colab are presented in Table 4 and Table 5, respectively. A value of IoU = 1 denotes one of two extreme cases: either the category did not appear in the photo and the model correctly detected no area of it, or 100% of its area was identified. This situation applies primarily to shrubs and trees.

Both versions of SAM only occasionally fail to segment a category at all (IoU = 0). For SAM online, this applies to the image from 2011.10.22 for shrubs/trees (Table 4). For SAM Colab, it occurred for grass on 2015.10.24 and for shrubs/trees on 2012.03.14 (Table 5). IoU takes its most extreme values for the vegetation-related categories, which is most visible for SAM online in the shrubs/trees category (Table 4). This may be because there is relatively little vegetation within the streambed, so the results can differ drastically between images.

The worst segmentation results were obtained for grass with SAM Colab, with IoU not exceeding 0.5. This is understandable, because the complex texture of low vegetation can be difficult to identify as an object.

Manning's roughness coefficients were estimated from the image segmentation results and grouped by season. The roughness coefficient is closely related to the vegetation period, as shown in Figure 5 and Table 6. However, the obtained coefficient values suggest a slightly different division than the seasonal division adopted in Table 6.

The end of May (2023.05.28) should be interpreted as a period of intense vegetation (summer, autumn), whereas the vegetation shown in the photo from 2011.10.22 is more consistent with the winter season. However, it is impossible to generalize by introducing a strict division into growing seasons, because the seasons may start or end at different times each year. The analysis of the streambed based on SAM segmentation therefore makes it possible to determine parameters related to hydraulic resistance that better reflect actual conditions. It is also worth noting that the roughness coefficient for the analyzed section of the streambed varies widely (0.027-0.059), which may significantly affect flow conditions.

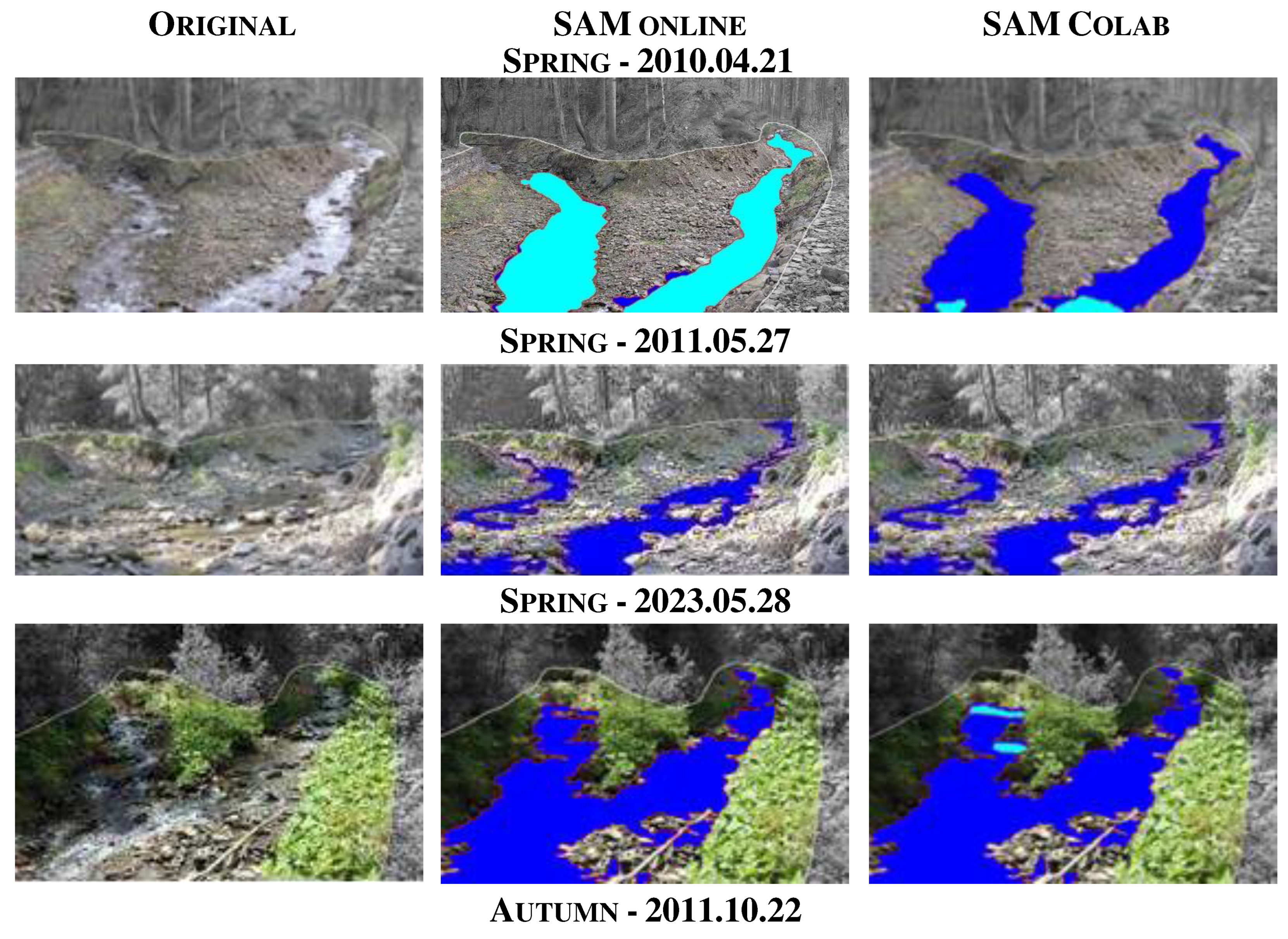

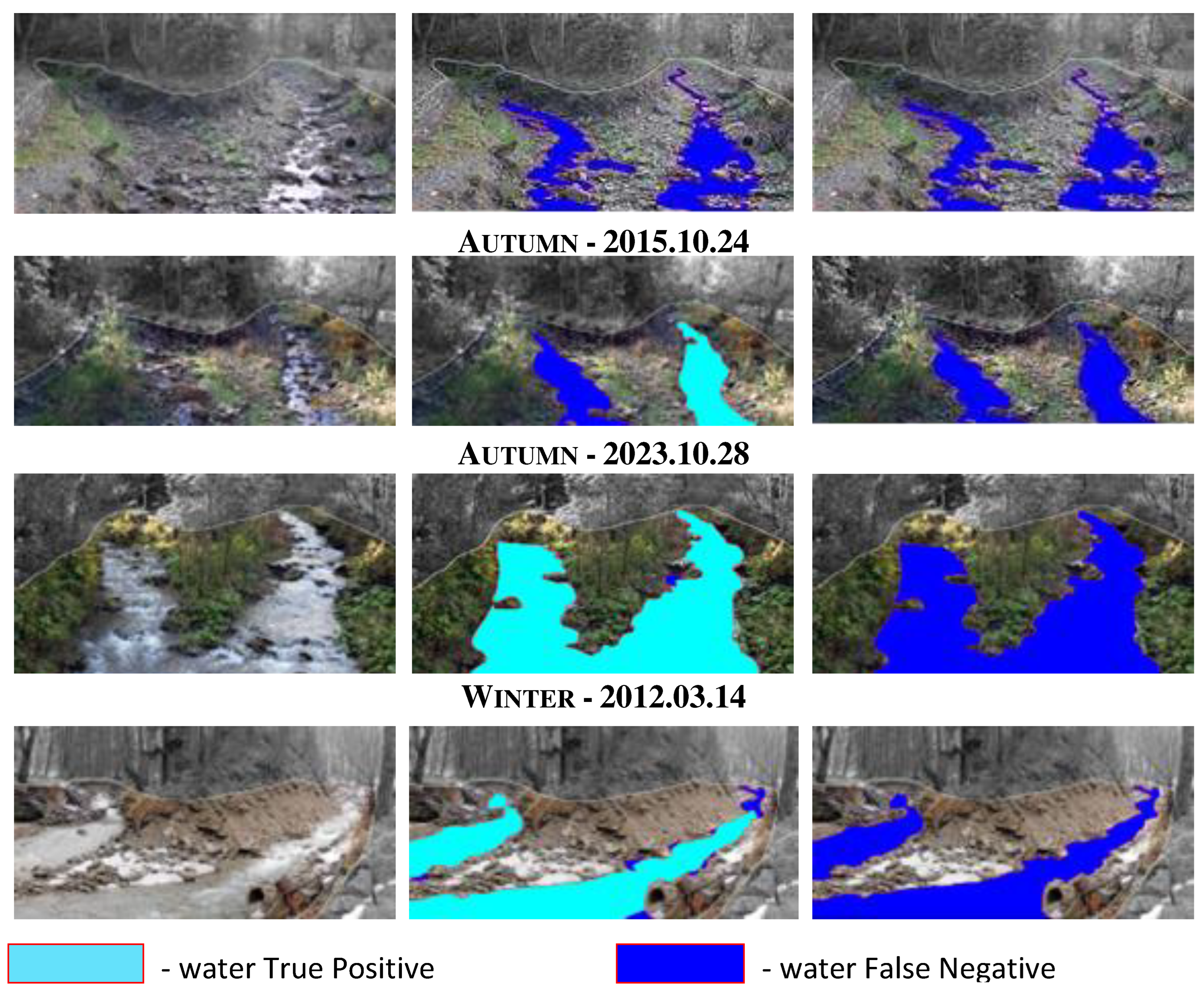

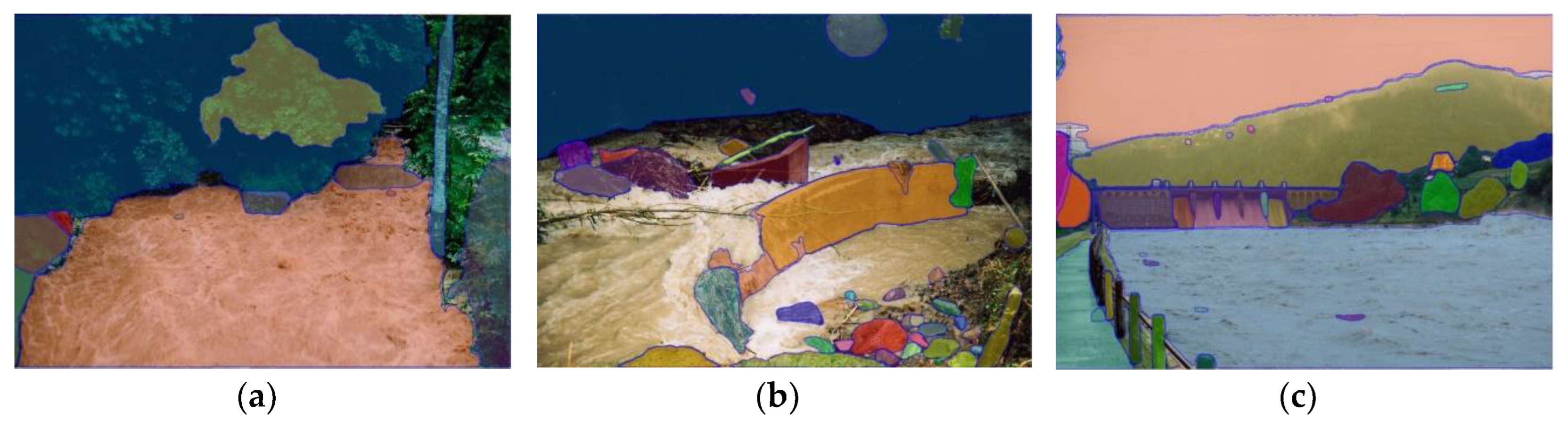

The second aim of the analysis was to assess the effectiveness of SAM segmentation for estimating flood extent. Detailed results are shown for the segmentation of the main stream (Wielka Puszcza, on the left) and its tributary (Roztoka stream, on the right) (Figure 10). In this case, only the water category was considered.

The analyzed fragment of the streambed poses a great challenge to segmentation algorithms. In the image from 2011.05.27 (Figure 10), it is difficult even for humans to recognize fragments with water, because the photo was taken at an extremely low water level. The image from 2015.10.24 is equally difficult to segment: one of the streams was correctly identified, while the other was not identified at all. In images where water is an important element (2010.04.21, 2023.10.28, 2012.03.14), SAM online had no problems segmenting this category well, whereas SAM Colab did not cope with this task. In terms of flood extent, SAM online failed to detect the water surface in only one of the four cases with higher water levels (Table 7). In the remaining cases, the IoU was no less than 0.94, which can be considered an excellent result.

The very large variance of the SAM online results is also significant, as in only one case (2015.10.24) was the IoU not close to 0 or 1. The SAM Colab segmentation results were extremely poor. In many images, water appears only between the stones. An additional difficulty may be the lighting conditions, where tree shadows mix with stones and low vegetation. SAM was created for object recognition, but a structure as complex as a mountain stream carrying little water seems to be too difficult a challenge.

The analyzed area is characterized by very fast, short-lasting floods. For this reason, very few photos are available from the flood period itself. The available photographic documentation from the studied stream cross-section did not contain enough flood images, so three additional photos were analyzed (Figure 11).

For uniformly illuminated water surfaces (Figure 11a, 11c), SAM online easily segments the flood water surface. The remaining image elements are segmented equally well. In the third photo, taken at night during the flood (Figure 11b), all objects are segmented very well except for the turbulent and unevenly lit stream. However, in this case the flood extent can be assumed to correspond to the entire remaining area, so it is easy to identify and process further. The quality of segmentation thus depends not only on the texture of the object but also on its lighting.

5. Conclusions

The Segment Anything Model (SAM) presented in this paper is a new tool with a potentially very wide range of applications, including hydrology. Unlike dedicated models, it is trained on a large and diverse dataset. Two versions of SAM were tested in the task of segmenting images of a mountain streambed in order to estimate the roughness coefficient. In a similar way, SAM was used to estimate flood extent. To the best of our knowledge, this is the first described application of SAM for estimating riverbed roughness and one of the first for estimating flood extent.

The SAM online version provides automatic image segmentation without the ability to manage model parameters, which poses some limitations, but the quality of the results obtained is satisfactory. The mean IoU ranges from 0.55 for grass to 0.82 for shrubs/trees. However, the high standard deviations of the results are worth noting. High variability of SAM results, expressed here by high IoU standard deviations, was also observed by other authors (He et al. 2023). According to Mazurowski et al. (2023), SAM's performance based on single prompts varies greatly depending on the dataset and the task, from IoU = 0.11 for spine MRI to IoU = 0.86 for hip X-ray. In our study, most categories are either segmented well (or very well) or not segmented at all. This is clearly visible in the SAM online results for the shrubs/trees and water categories, where IoU values equal to 0 or close to 1 dominate. As reported by Huang et al. (2023), SAM showed remarkable performance for some specific objects but was unstable, imperfect or even failed completely in other situations. As this study showed, with an appropriate selection of images, the flood extent can be quickly estimated with an IoU of at least 0.94. For a similar task, Tedesco and Radzikowski (2023) reported IoU values of 0.84-0.98 using deep convolutional neural networks (D-CNNs). In all analyzed cases, image quality and uniform lighting are of great importance. SAM, a zero-shot model that does not require training, performs segmentation very well. Most importantly, it is available for free and does not require knowledge of machine learning for practical use.

The SAM Colab version provides opportunities to optimize model parameters, but this requires experience in machine learning. With the default parameters, the segmentation results are rather unsatisfactory. Similarly, He et al. (2023) observed that SAM, when applied directly to medical images without re-training or fine-tuning, is not yet as accurate as algorithms specifically designed for medical image segmentation tasks. To better adapt SAM to dedicated hydrological applications, the model referred to in this article as SAM Colab would need to be trained and tuned.

Author Contributions

Conceptualization, B.B., M.B. and R.S.; methodology, B.B., M.B. and R.S.; software, B.B., M.B. and R.S.; validation, B.B., M.B. and R.S.; formal analysis, B.B., M.B. and R.S.; investigation, B.B., M.B. and R.S.; resources, B.B., M.B. and R.S.; data curation, B.B., M.B. and R.S.; writing—original draft preparation, B.B., M.B. and R.S.; writing—review and editing, B.B., M.B. and R.S.; visualization, B.B., M.B. and R.S.; supervision, B.B., M.B. and R.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bentivoglio R., Isufi E., Jonkman S.N., Taormina R. Deep learning methods for flood mapping: a review of existing applications and future research directions. Hydrology and Earth System Sciences, 2021.

- Carion N., Massa F., Synnaeve G., Usunier N., Kirillov A., Zagoruyko S. End-to-end object detection with transformers. In: European Conference on Computer Vision (pp. 213-229). Springer International Publishing, Cham, 2020.

- Chow V.T. Open-Channel Hydraulics. McGraw-Hill Book Co., 1959.

- Djajadi R. Comparative study of equivalent Manning roughness coefficient for channel with composite roughness. Civil Engineering Dimension, 11(2), 113+, 2009.

- Erfani S.M.H., Wu Z., Wu X., Wang S., Goharian E. ATLANTIS: A benchmark for semantic segmentation of waterbody images. Environmental Modelling & Software, 149, 105333, 2022.

- Fisher K.R., Dawson F. Reducing Uncertainty in River Flood Conveyance: Roughness Review. Project W5A-057 report (204 pp.), DEFRA Flood Management Division & Science Directorate, and Environment Agency, 2003.

- He S., Bao R., Li J., Grant P.E., Ou Y. Accuracy of segment-anything model (SAM) in medical image segmentation tasks. arXiv preprint arXiv:2304.09324, 2023.

- Hernández D., Cecilia J.M., Cano J.C., Calafate C.T. Flood detection using real-time image segmentation from unmanned aerial vehicles on edge-computing platform. Remote Sensing, 14(1), 223, 2022.

- Huang Y., Yang X., Liu L., Zhou H., Chang A., Zhou X., Ni D. Segment anything model for medical images? Medical Image Analysis, 103061, 2023.

- Kirillov A., Mintun E., Ravi N., Mao H., Rolland C., Gustafson L., Xiao T., Whitehead S., Berg A.C., Lo W., Dollár P., Girshick R.B. Segment Anything. arXiv preprint arXiv:2304.02643, 2023.

- Li W., Lee H., Wang S., Hsu C.Y., Arundel S.T. Assessment of a new GeoAI foundation model for flood inundation mapping. In: Proceedings of the 6th ACM SIGSPATIAL International Workshop on AI for Geographic Knowledge Discovery (pp. 102-109), 2023.

- Li Y., Wang D., Yuan C., Li H., Hu J. Enhancing agricultural image segmentation with an agricultural segment anything model adapter. Sensors, 23(18), 7884, 2023a.

- Lo S.-W., Wu J.-H., Lin F.-P., Hsu C.-H. Visual sensing for urban flood monitoring. Sensors, 15(8), 20006-20029, 2015.

- Lopez-Fuentes L., Rossi C., Skinnemoen H. River segmentation for flood monitoring. In: 2017 IEEE International Conference on Big Data (Big Data) (pp. 3746-3749), 2017.

- Luo X., Walther P., Mansour W., Teuscher B., Zollner J.M., Li H., Werner M. Exploring GeoAI methods for supraglacial lake mapping on Greenland ice sheet, 2023.

- Mazurowski M.A., Dong H., Gu H., Yang J., Konz N., Zhang Y. Segment anything model for medical image analysis: an experimental study. Medical Image Analysis, 89, 102918, 2023.

- Muhadi N.A., Abdullah A.F., Bejo S.K., Mahadi M.R., Mijic A. Deep learning semantic segmentation for water level estimation using surveillance camera. Applied Sciences, 11(20), 9691, 2021.

- Nagy B. A creek's hydrological model's generates in HEC-RAS. Student V4 Geoscience Conference and Scientific Meeting GISÁČEK, Technical University of Ostrava, Ostrava, Czech Republic, 2017.

- Pally R.J., Samadi S. Application of image processing and convolutional neural networks for flood image classification and semantic segmentation. Environmental Modelling & Software, 148, 105285, 2022.

- Reinke A., Tizabi M.D., Sudre C.H., Eisenmann M., Rädsch T., Baumgartner M., Maier-Hein L. Common limitations of image processing metrics: a picture story. arXiv preprint arXiv:2104.05642, 2021.

- Ren S., Luzi F., Lahrichi S., Kassaw K., Collins L.M., Bradbury K., Malof J.M. Segment anything, from space? arXiv preprint arXiv:2304.13000, 2023.

- Shen C., Chen X., Laloy E. Broadening the use of machine learning in hydrology. Frontiers in Water, 3, 681023, 2021.

- Tedesco M., Radzikowski J. Assessment of a machine learning algorithm using web images for flood detection and water level estimates. GeoHazards, 4(4), 437-452, 2023.

- Wang Z., Wang E., Zhu Y. Image segmentation evaluation: a survey of methods. Artificial Intelligence Review, 53, 5637-5674, 2020.

Figure 1.

The Wielka Puszcza river catchment.

Figure 2.

The Wielka Puszcza - research station of the Cracow University of Technology in 1993-2005, a) lodge; b) measuring cross-section before the flood; c) measuring cross-section during the flood; d) measuring cross-section after the flood.

Figure 3.

The Wielka Puszcza - Cracow University of Technology's new research station operating since 2017: a) rainfall measurement with Hellmann rain gauges; b) water level monitoring; c) water level sensor; d) camera taking pictures of the water surface.

Figure 4.

Orthophotomaps of the research area: a) 2010; b) 2023.

Figure 5.

Photographs taken in different vegetation periods over the study period 2010-2023.

Figure 6.

Example of SAM application: online demo, automatic mode.

Figure 7.

Example of SAM application: online demo, bounding-box mode.

Figure 8.

Image segmentation in a Jupyter notebook on Google Colaboratory (Colab).

Figure 9.

Image segmentation in a Jupyter notebook on Google Colaboratory (Colab).

Figure 10.

The result of water segmentation in a mountain stream bed.

Figure 11.

SAM online segmentation of flood water extent: a) and b) 1997 flood on the Roztoka stream; c) 1997 flood on the Soła River.

Table 2.

Object categories within the riverbed used in this study, with the corresponding Manning's roughness coefficients (after Chow 1959).

| Category | Manning's roughness coefficient n [s/m^(1/3)] |
|---|---|
| stones | 0.040 |
| earth channel | 0.025 |
| grass | 0.030 |
| shrubs/trees | 0.100 |

Table 3.

Intersection over Union (IoU) mean and standard deviation per category for the two SAM versions tested (SAM online and SAM Colab).

| Category | SAM online IoU mean | SAM online IoU std. | SAM Colab IoU mean | SAM Colab IoU std. |
|---|---|---|---|---|
| stones | 0.68 | 0.14 | 0.61 | 0.06 |
| earth channel | 0.54 | 0.04 | 0.57 | 0.04 |
| grass | 0.55 | 0.38 | 0.17 | 0.17 |
| shrubs/trees | 0.82 | 0.37 | 0.66 | 0.38 |
| water | 0.49 | 0.48 | 0.01 | 0.02 |
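The IoU values in Tables 3-5 and 7 measure, for one category, the overlap between a predicted mask A and a reference mask B as IoU = |A ∩ B| / |A ∪ B|. A minimal standard-library sketch, assuming both masks are same-shaped 2D grids of 0/1 values; the function name `iou` and the `empty_value` returned when the category is absent from both masks are our own illustrative choices, not taken from the study:

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]  # 2D grid of 0/1 (or False/True) values


def iou(pred: Mask, ref: Mask, empty_value: float = 1.0) -> float:
    """Intersection over Union of two same-shaped binary masks.

    empty_value is returned when the category is absent from both masks
    (union == 0); this convention is an assumption, not taken from the study.
    """
    if len(pred) != len(ref) or any(len(p) != len(r) for p, r in zip(pred, ref)):
        raise ValueError("masks must have the same shape")
    intersection = union = 0
    for pred_row, ref_row in zip(pred, ref):
        for p, r in zip(pred_row, ref_row):
            p, r = bool(p), bool(r)
            intersection += p and r  # pixel labelled in both masks
            union += p or r          # pixel labelled in at least one mask
    return empty_value if union == 0 else intersection / union


predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]
print(iou(predicted, reference))  # 2 shared pixels / 4 pixels in the union -> 0.5
```

For full-size photographs the same computation is usually vectorised (for example with NumPy boolean arrays); the loop form is kept here only so the sketch has no dependencies.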

Table 4.

Intersection over Union (IoU) for SAM online.

| Category | 2010.04.21 | 2011.05.27 | 2011.10.22 | 2012.03.14 | 2015.10.24 | 2023.05.28 | 2023.10.28 |
|---|---|---|---|---|---|---|---|
| stones | 0.70 | 0.77 | 0.83 | 0.76 | 0.45 | 0.52 | 0.69 |
| earth channel | 0.52 | 0.53 | 0.53 | 0.51 | 0.61 | 0.56 | 0.51 |
| grass | 0.61 | 0.09 | 0.71 | 1.00 | 0.18 | 0.23 | 1.00 |
| shrubs/trees | 1.00 | 1.00 | 0.00 | 1.00 | 0.72 | 1.00 | 1.00 |
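The per-category summary statistics can be recomputed from the per-image values. A small sketch, assuming the standard deviation is the sample standard deviation (n − 1 denominator, `statistics.stdev`); this is our assumption about how the summary was formed. With it, the earth channel and grass rows of Table 4 give 0.54/0.04 and 0.55/0.38, the SAM online values listed for these categories in Table 3. The dictionary name `SAM_ONLINE_IOU` is hypothetical:

```python
from statistics import mean, stdev

# Per-image IoU for SAM online, copied from Table 4, in column order
# (2010.04.21, 2011.05.27, 2011.10.22, 2012.03.14, 2015.10.24, 2023.05.28, 2023.10.28).
SAM_ONLINE_IOU = {
    "earth channel": [0.52, 0.53, 0.53, 0.51, 0.61, 0.56, 0.51],
    "grass": [0.61, 0.09, 0.71, 1.00, 0.18, 0.23, 1.00],
}


def summarize(values: list[float]) -> tuple[float, float]:
    """Return (mean, sample standard deviation), both rounded to two decimals."""
    return round(mean(values), 2), round(stdev(values), 2)


for category, values in SAM_ONLINE_IOU.items():
    m, s = summarize(values)
    print(f"{category}: IoU mean {m:.2f}, IoU std. {s:.2f}")
```

Replacing `stdev` with `pstdev` (population standard deviation) gives a different value for the grass row, so the choice of estimator matters when such summaries are compared.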

Table 5.

Intersection over Union (IoU) for SAM Colab.

| Category | 2010.04.21 | 2011.05.27 | 2011.10.22 | 2012.03.14 | 2015.10.24 | 2023.05.28 | 2023.10.28 |
|---|---|---|---|---|---|---|---|
| stones | 0.61 | 0.67 | 0.64 | 0.60 | 0.56 | 0.53 | 0.70 |
| earth channel | 0.53 | 0.54 | 0.56 | 0.54 | 0.65 | 0.56 | 0.60 |
| grass | 0.17 | 0.27 | 0.11 | 0.12 | 0.00 | 0.49 | 0.05 |
| shrubs/trees | 1.00 | 1.00 | 0.53 | 0.00 | 0.42 | 1.00 | 0.64 |

Table 6.

Streambed roughness coefficients (Manning's n) grouped by season.

| Season | Date | SAM online | SAM Colab |
|---|---|---|---|
| Spring | 2010.04.21 | 0.027 | 0.027 |
| Spring | 2011.05.27 | 0.029 | 0.029 |
| Spring | 2023.05.28 | 0.059 | 0.058 |
| Autumn | 2015.10.24 | 0.050 | 0.050 |
| Autumn | 2023.10.28 | 0.059 | 0.059 |
| Autumn | 2011.10.22 | 0.029 | 0.029 |
| Winter | 2012.03.14 | 0.029 | 0.029 |
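Table 6 reports one coefficient per image, whereas Table 2 assigns one n to each segmented category. One simple way to combine them, assumed here for illustration and not confirmed as the procedure used in this study, is an area-weighted arithmetic mean, n_c = Σ A_i n_i / Σ A_i, where A_i is the area segmented as category i. Perimeter-based composite rules such as the Horton-Einstein formula are common alternatives in open-channel hydraulics. The names `MANNING_N` and `composite_manning_n` and the pixel counts below are hypothetical:

```python
# Manning's n per category, as listed in Table 2 (after Chow 1959).
MANNING_N = {
    "stones": 0.040,
    "earth channel": 0.025,
    "grass": 0.030,
    "shrubs/trees": 0.100,
}


def composite_manning_n(areas: dict[str, float]) -> float:
    """Area-weighted mean Manning's n (assumed combination rule).

    `areas` maps category -> segmented area (pixel counts or fractions);
    the values are normalised by their total, so they need not sum to 1.
    """
    total = sum(areas.values())
    if total <= 0:
        raise ValueError("total segmented area must be positive")
    unknown = set(areas) - set(MANNING_N)
    if unknown:
        raise KeyError(f"no roughness value for: {sorted(unknown)}")
    return sum(MANNING_N[c] * a for c, a in areas.items()) / total


# Hypothetical pixel counts for one image (illustrative, not measured values).
example = {"stones": 300, "earth channel": 500, "shrubs/trees": 200}
print(round(composite_manning_n(example), 4))
```

Because the weights are normalised, pixel counts and area fractions give the same result; in this toy example a 20% share of shrubs/trees lifts n_c well above the earth-channel value.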

Table 7.

Intersection over Union (IoU) for the water category; (*) water level above low-flow level.

| Model | 2010.04.21* | 2011.05.27 | 2011.10.22 | 2012.03.14* | 2015.10.24 | 2023.05.28* | 2023.10.28* |
|---|---|---|---|---|---|---|---|
| SAM online | 0.97 | 0.00 | 0.00 | 0.94 | 0.53 | 0.00 | 0.96 |
| SAM Colab | 0.06 | 0.00 | 0.00 | 0.00 | 0.00 | 0.02 | 0.00 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).