Submitted: 22 December 2023

Posted: 26 December 2023


Abstract

Keywords:

1. Introduction

2. From the phenomenology of time consciousness to co-construction and shared goals

2.1. Overview of Husserlian phenomenology of inner time consciousness

2.2. Shared protentions

3. An overview of active inference

4. Active inference and time consciousness

4.1. Mapping Husserlian phenomenology to active inference models

| Parameter | Description | Phenomenological Mapping |
|---|---|---|
| Observations | Capture the sensory information received by the agent | Represents the hyletic data, setting perceptual boundaries but not directly perceived |
| Hidden states | Capture the causes for the sensory information – the latent or worldly states | Corresponds to perceptual experiences, inferred from sensory input |
| Likelihood matrix | Captures the mapping of observations to (sensory) states | Associated with sedimented knowledge, representing background understanding and expectations |
| Transition matrix | Captures the mapping for how states are likely to evolve | Linked to sedimented knowledge, shaping perceptual encounters |
| Preference matrix | Captures the preferred observations for the agent, which drive their actions | Similar to Husserl’s notions of fulfillment or frustration, representing expected results or preferences |
| Initial distribution | Captures the priors over the hidden states | Represents prior beliefs, shaped by previous experiences and current expectations |
| Habit matrix | Captures the prior expectations for initial actions | Connected to Husserlian notions of horizon and trail set, symbolizing prior expectations |
| Policy matrix | Captures the potential policies that guide the agent’s actions, driving the evolution of the B matrix | Symbolizes the possible course of action, influenced by background information and values |
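To make the roles of the parameters in the table above more concrete, the following is a minimal sketch of a discrete-state generative model in Python with NumPy. It is an illustration under stated assumptions, not the authors' implementation: the letter names `A`, `C`, `D` and `E` follow a common active inference convention (the table itself names only the B matrix), and the two-state, two-observation, two-action setup, all numerical values, and the helper functions `infer_state`, `expected_free_energy` and `policy_posterior` are hypothetical.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax."""
    e = np.exp(x - np.max(x))
    return e / e.sum()

# Hypothetical toy model: 2 hidden states, 2 observations, 2 actions.
A = np.array([[0.9, 0.2],   # likelihood P(o | s); columns index hidden states
              [0.1, 0.8]])
B = np.stack([np.eye(2),                        # action 0: stay
              np.array([[0., 1.], [1., 0.]])],  # action 1: switch state
             axis=-1)       # transitions, indexed B[s_next, s_prev, action]
C = np.array([2.0, 0.0])    # unnormalized log-preferences over observations
D = np.array([0.5, 0.5])    # prior over initial hidden states
E = np.array([0.5, 0.5])    # habit prior over policies
policies = [0, 1]           # one-step policies, one per action

def infer_state(o, prior):
    """Posterior over hidden states after observing o (exact Bayes rule)."""
    post = A[o] * prior
    return post / post.sum()

def expected_free_energy(qs, action):
    """One-step expected free energy, written as risk plus ambiguity."""
    qs_next = B[:, :, action] @ qs           # predicted next hidden state
    qo = A @ qs_next                         # predicted observation
    log_pref = np.log(softmax(C))            # preferred outcome distribution
    risk = np.sum(qo * (np.log(qo + 1e-16) - log_pref))
    col_entropy = -np.sum(A * np.log(A + 1e-16), axis=0)
    ambiguity = col_entropy @ qs_next
    return risk + ambiguity

def policy_posterior(qs):
    """Posterior over policies: softmax of habit prior minus expected free energy."""
    G = np.array([expected_free_energy(qs, a) for a in policies])
    return softmax(np.log(E) - G)

qs = infer_state(o=1, prior=D)   # belief after receiving observation 1
q_pi = policy_posterior(qs)      # belief about which policy to pursue
print(qs, q_pi)
```

In this toy setting the agent receives the less preferred observation, infers that it is probably in the second hidden state, and assigns more probability to the policy that switches state, since that policy is expected to bring about the preferred observation.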

4.2. An active inference approach to shared protentions

5. Category-theoretic description of shared protentions in Active Inference ensembles

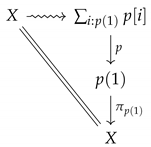

5.1. ‘Polynomial’ generative models

5.2. A sheaf-theoretic approach to multi-agent systems

5.2.1. A note on toposes

6. Closing Remarks


*Supported by VERSES.

1. This replacement may be seen to generalize polynomial functions if we note that a number such as 3 may be seen to stand for a set of the same cardinality.

2. Strictly speaking, a section of the bundle .

3. To see one direction of the equivalence, observe that, given a bundle , we can obtain a sheaf by defining to be the pullback of along the inclusion .

4. It must be locally Cartesian closed, which it will be if it is a topos.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).