Submitted:

25 December 2023

Posted:

26 December 2023

You are already at the latest version

Abstract

Keywords:

1. Introduction

- Sentiment;

- Target;

- Causal relationship, etc.

2. Analysing Medical Service Reviews as a Natural Language Text Classification Task

- Poor understanding and knowledge of healthcare on the part of the service consumers, which casts doubt on the accuracy of their assessments of the physician and medical services provided [16,17]. Patients often use indirect indicators unrelated to the quality of medical services as arguments (for example, their interpersonal experience with the physician [18,19]).

- Lack of clear criteria by which to assess a physician / medical service [18].

- In the capture phase, relevant social media content will be extracted from various sources. Data collection can be done by an individual or third-party providers [61].

- At the second phase, relevant data will be selected for predictive modelling of sentiment analysis.

- At the third phase, important key findings of the analysis will be visualised [62].

- Bayesian classifier;

- decision tree;

- k-nearest neighbour algorithm (k-NN);

- support vector machine (SVM);

- artificial neural network based on multilayer perceptron;

- Rocchio algorithm.

3. Classification Models for Text Reviews of the Quality of Medical Services in Social Media

- text sentiment: positive or negative;

- target: a review of a medical facility or an individual physician.

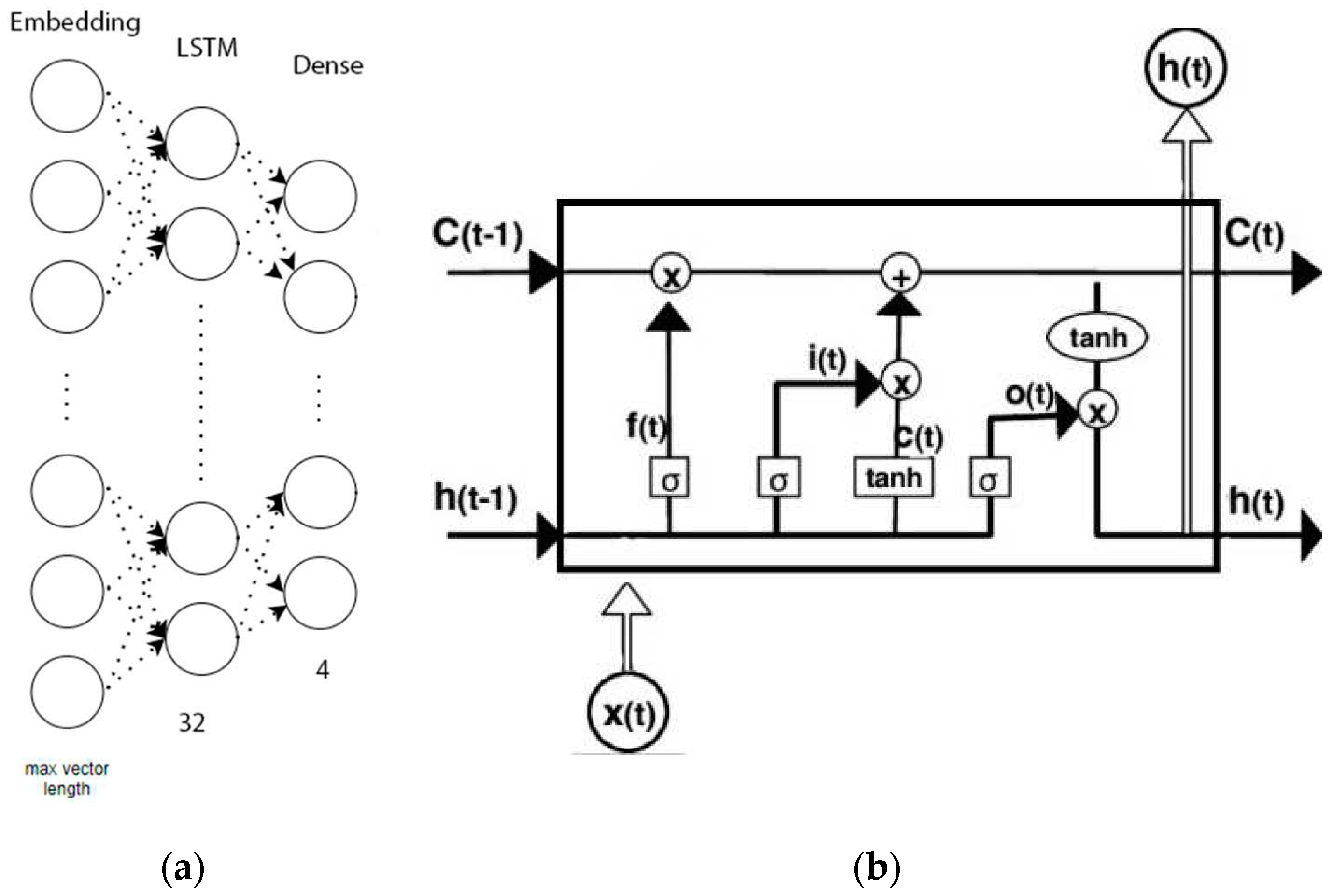

3.1. LSTM Network

- Embedding, the neural network input layer consisting of neurons:where Size(D) — dictionary size in text data;

- LSTM Layer — recurrent layer of the neural network; includes 32 blocks.

- Dense Layer — output layer consisting of four neurons. Each neuron is responsible for an output class. The activation function is "Softmax".

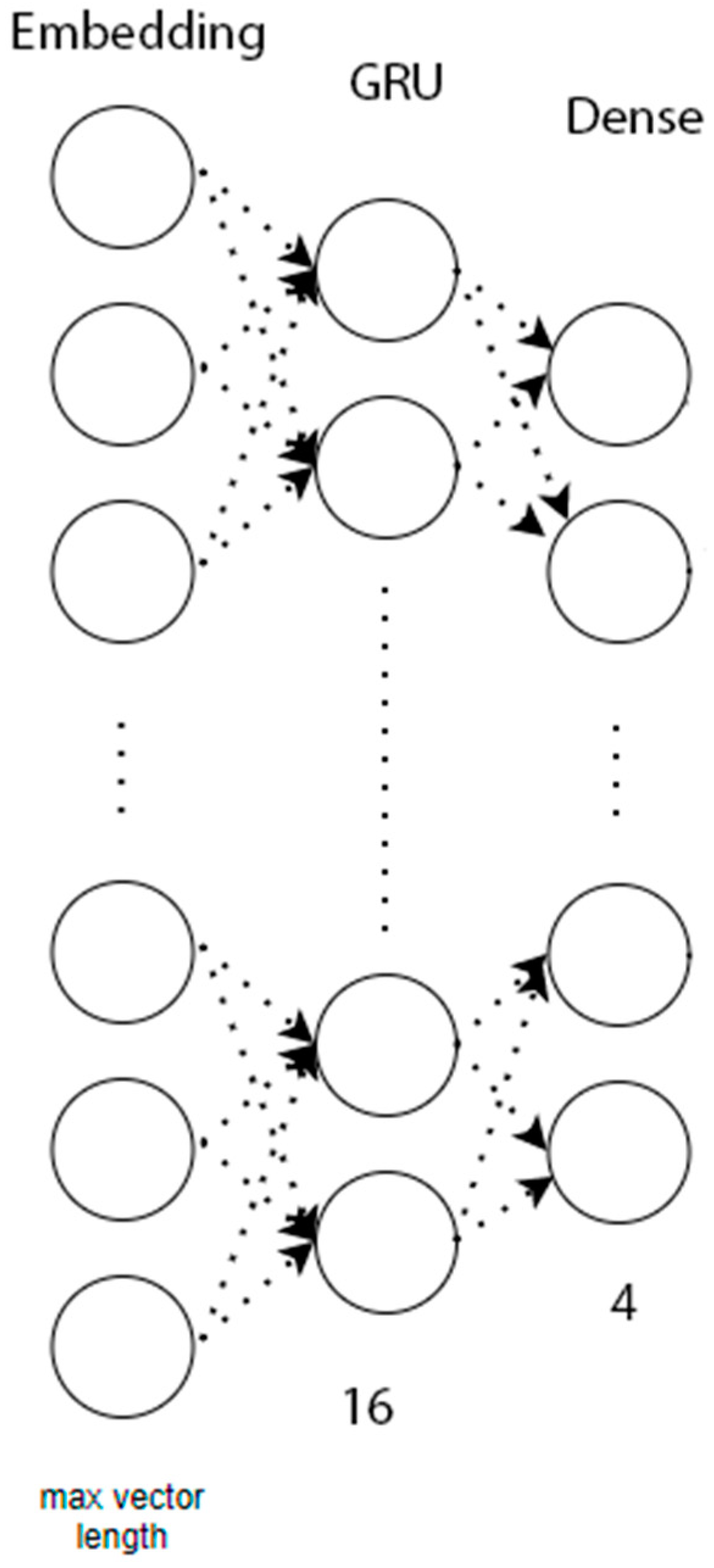

3.2. A Recurrent Neural Network

- Embedding — input layer of the neural network.

- GRU — recurrent layer of the neural network; includes 16 blocks.

- Dense — output layer consisting of four neurons. The activation function is "Softmax".

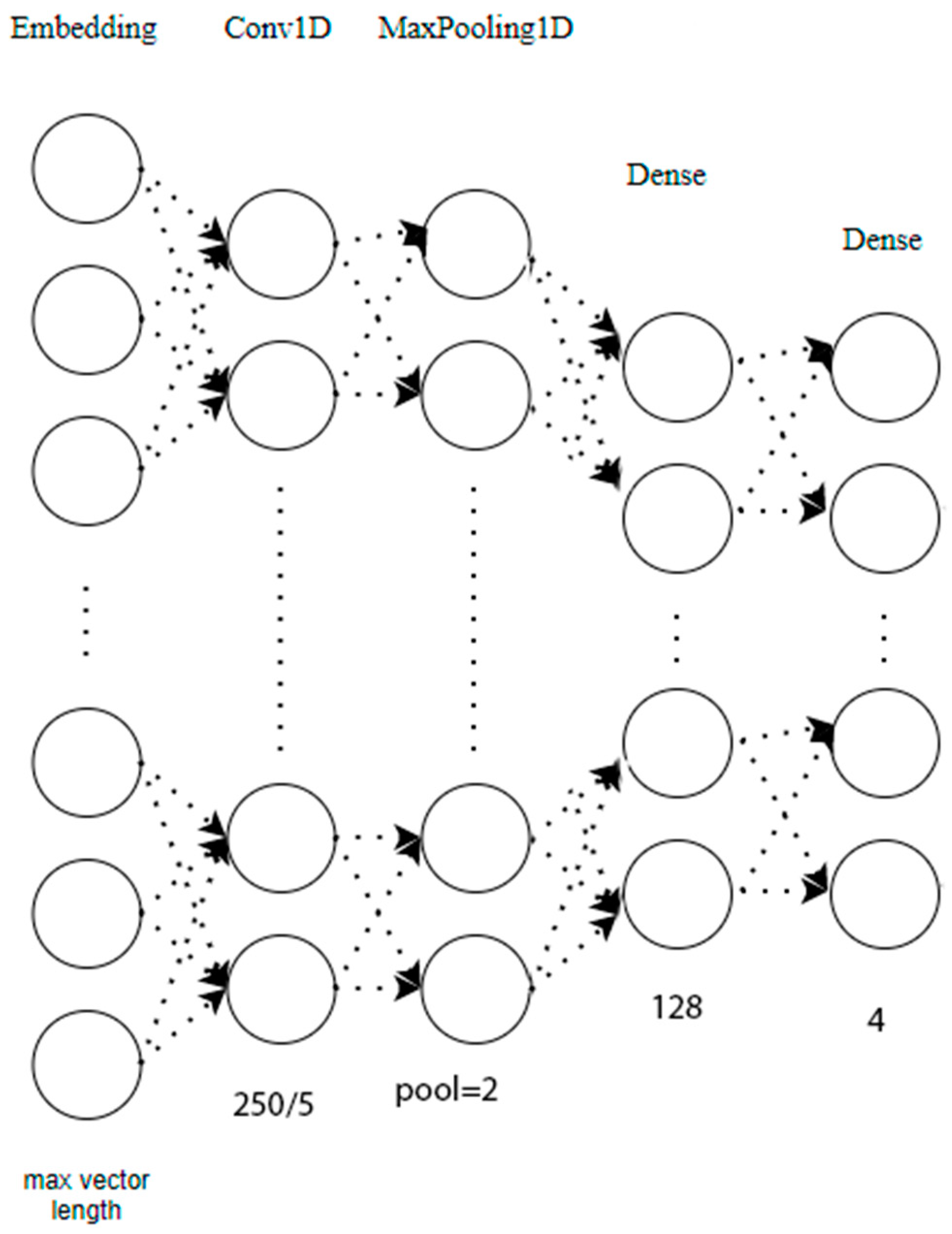

3.3. A Convolutional Neural Network

- Embedding — input layer of the neural network.

- Conv1D — convolutional layer required for deep learning. This layer improves the accuracy of text message classification by 5-7%. The activation function is "ReLU".

- MaxPooling1D — layer which performs dimensionality reduction of generated feature maps. The maximum pooling is equal to 2.

- Dense — first output layer consisting of 128 neurons. The activation function is "ReLU".

- Dense — final output layer consisting of four neurons. The activation function is "Softmax".

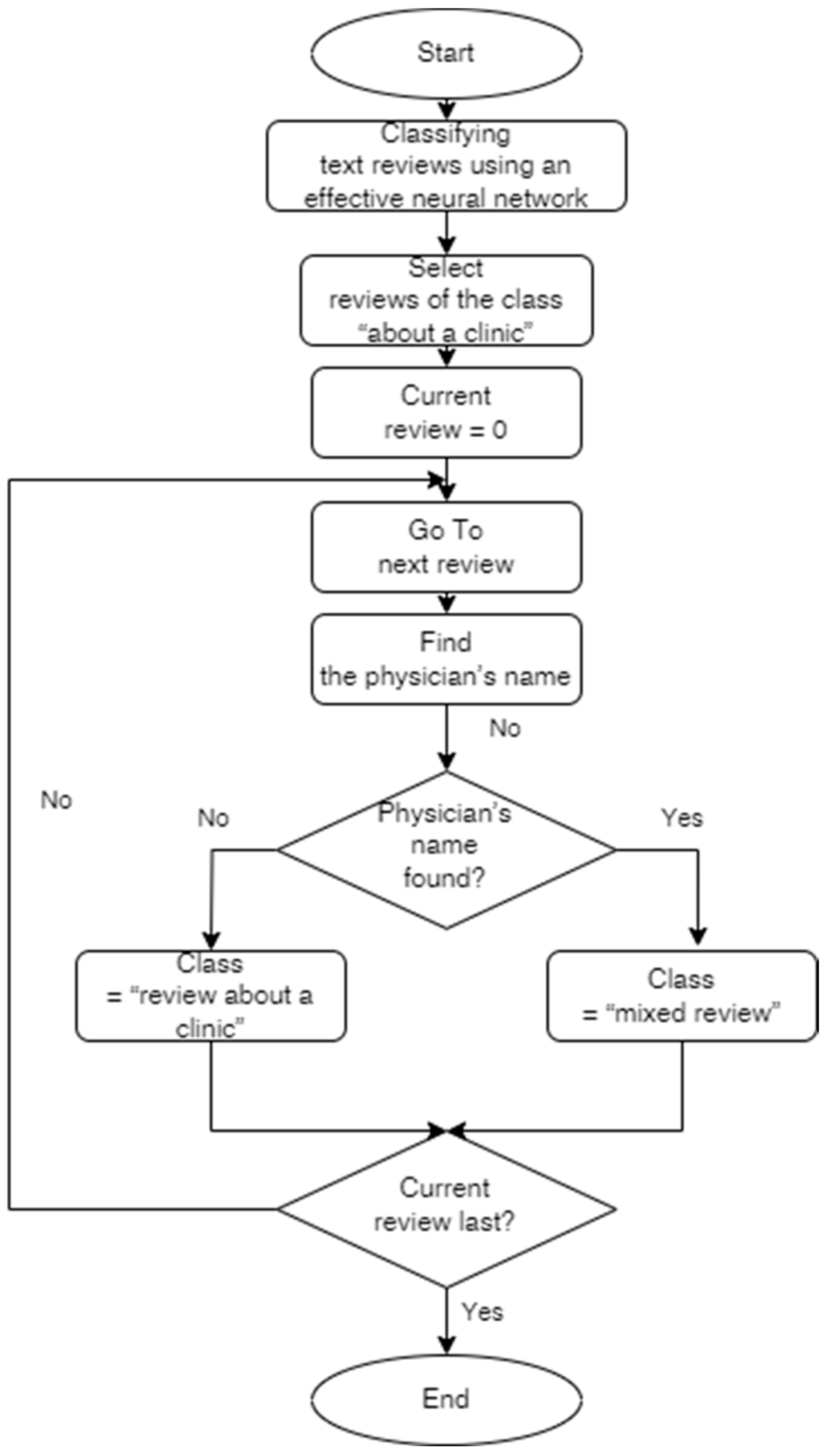

3.4. Using Linguistic Algorithms

4. Software Implementation of a Text Classification System

- Tensorflow, an open-source machine learning software library developed by Google for solving neural network construction and training problems.

- Keras, a deep-learning library that is a high-level API written in Python and capable of running on top of TensorFlow.

- Numpy, a Python library for working with multidimensional arrays.

- Pandas, a Python library that provides special data structures and operations for manipulating numerical tables and time series.

5. Experimental Results of Text Review Classification

5.1. Using Dataset

- city — city where the review was posted;

- text — feedback text;

- author_name — name of the feedback author;

- date — feedback date;

- day — feedback day;

- month — feedback month;

- year — feedback year;

- doctor_or_clinic — a binary variable (the review is of a physician OR a clinic);

- spec — medical specialty (for feedback on physicians);

- gender — feedback author’s gender;

- id — feedback identification number.

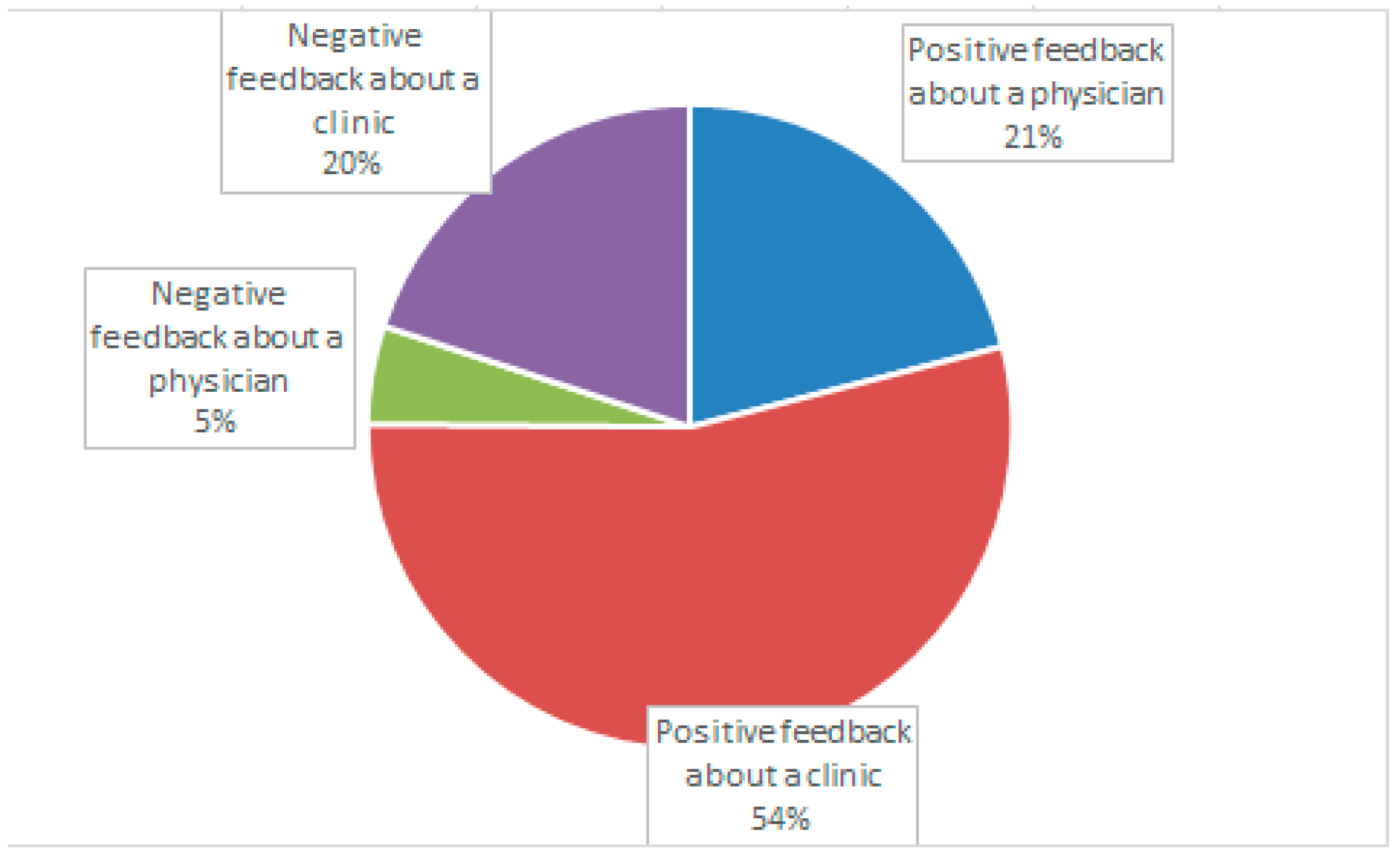

5.2. Experimental Results on Classifying Text Reviews by Sentiment

- Positive review of a physician;

- Positive review of a clinic;

- Negative review of a physician;

- Negative review of a clinic.

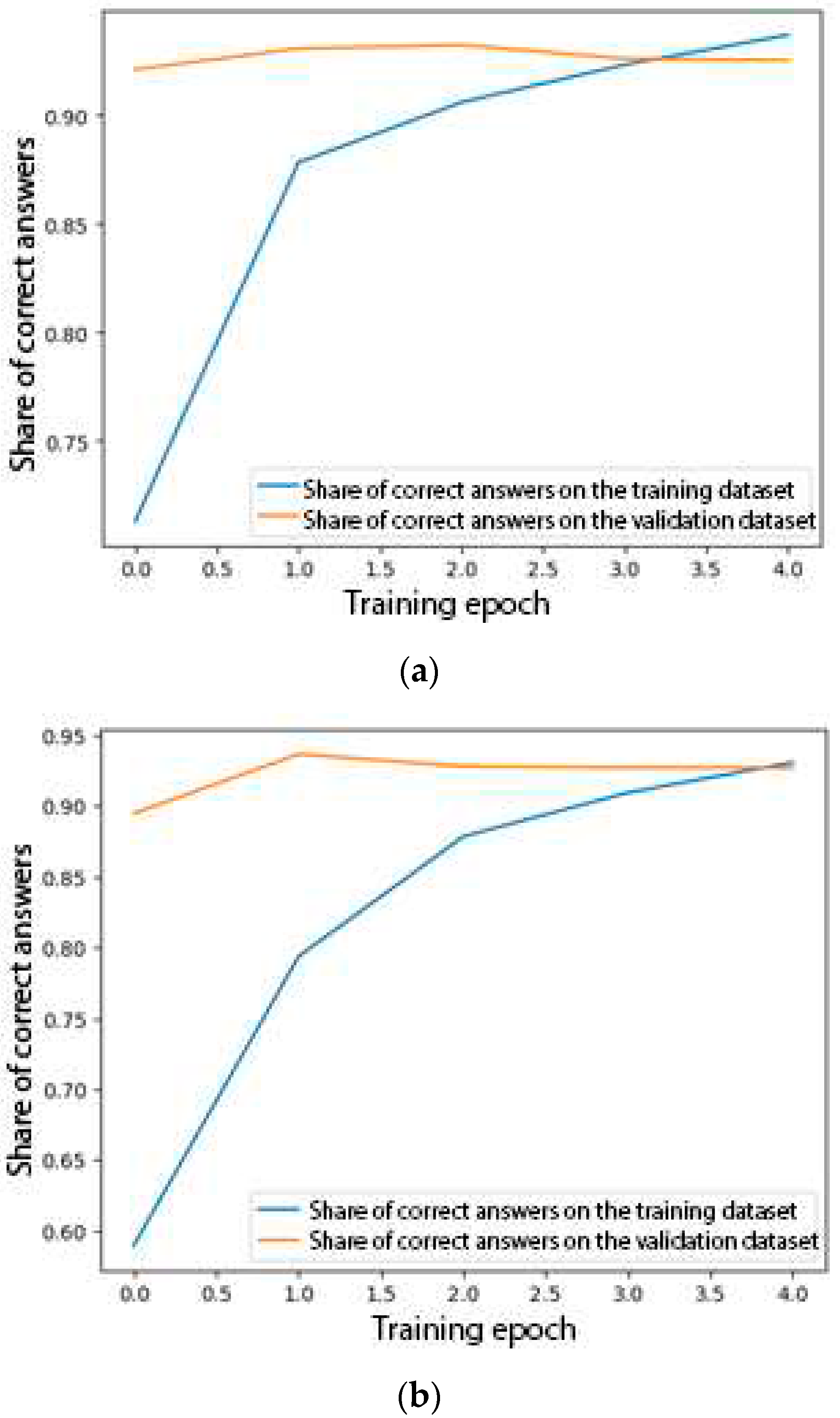

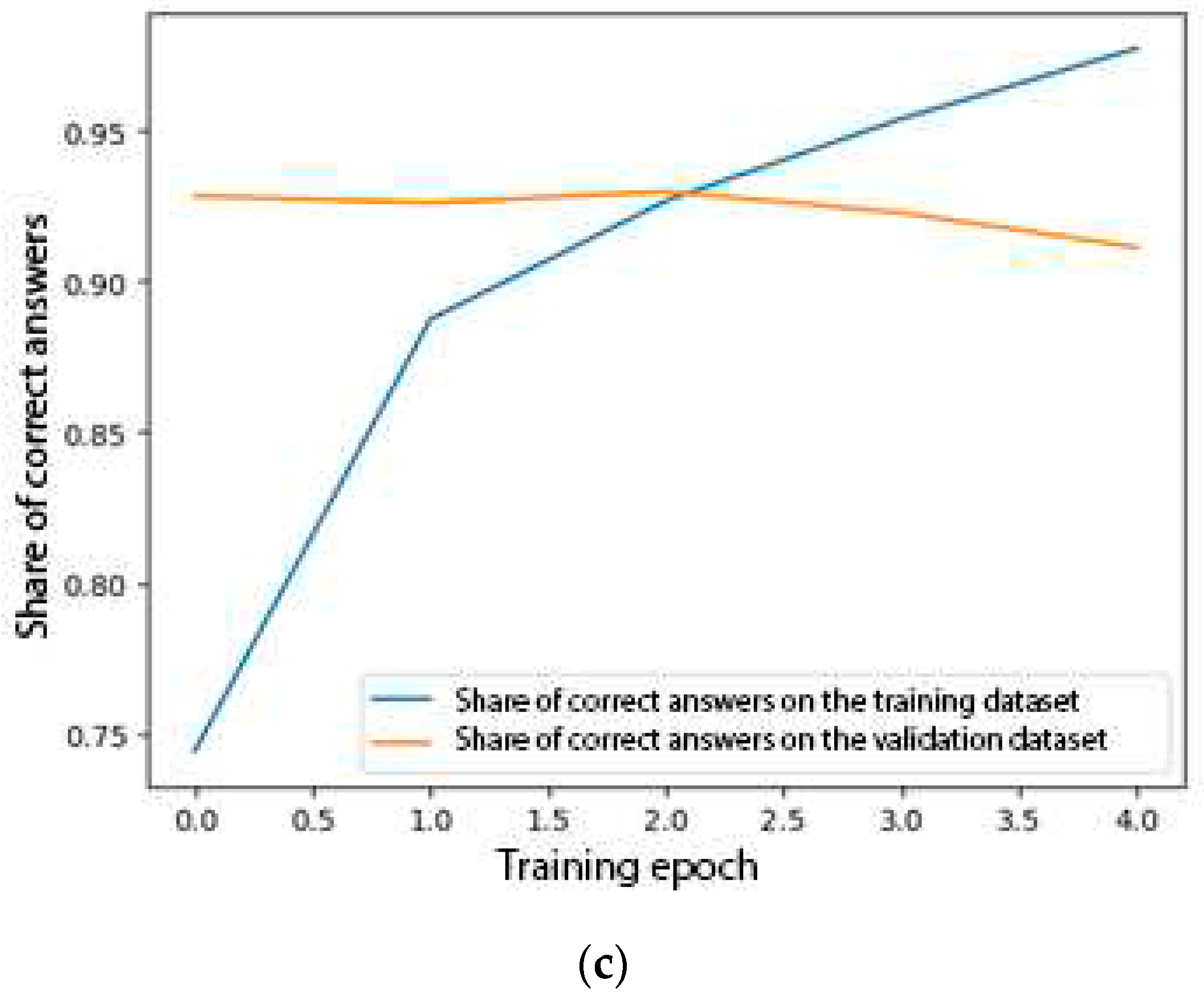

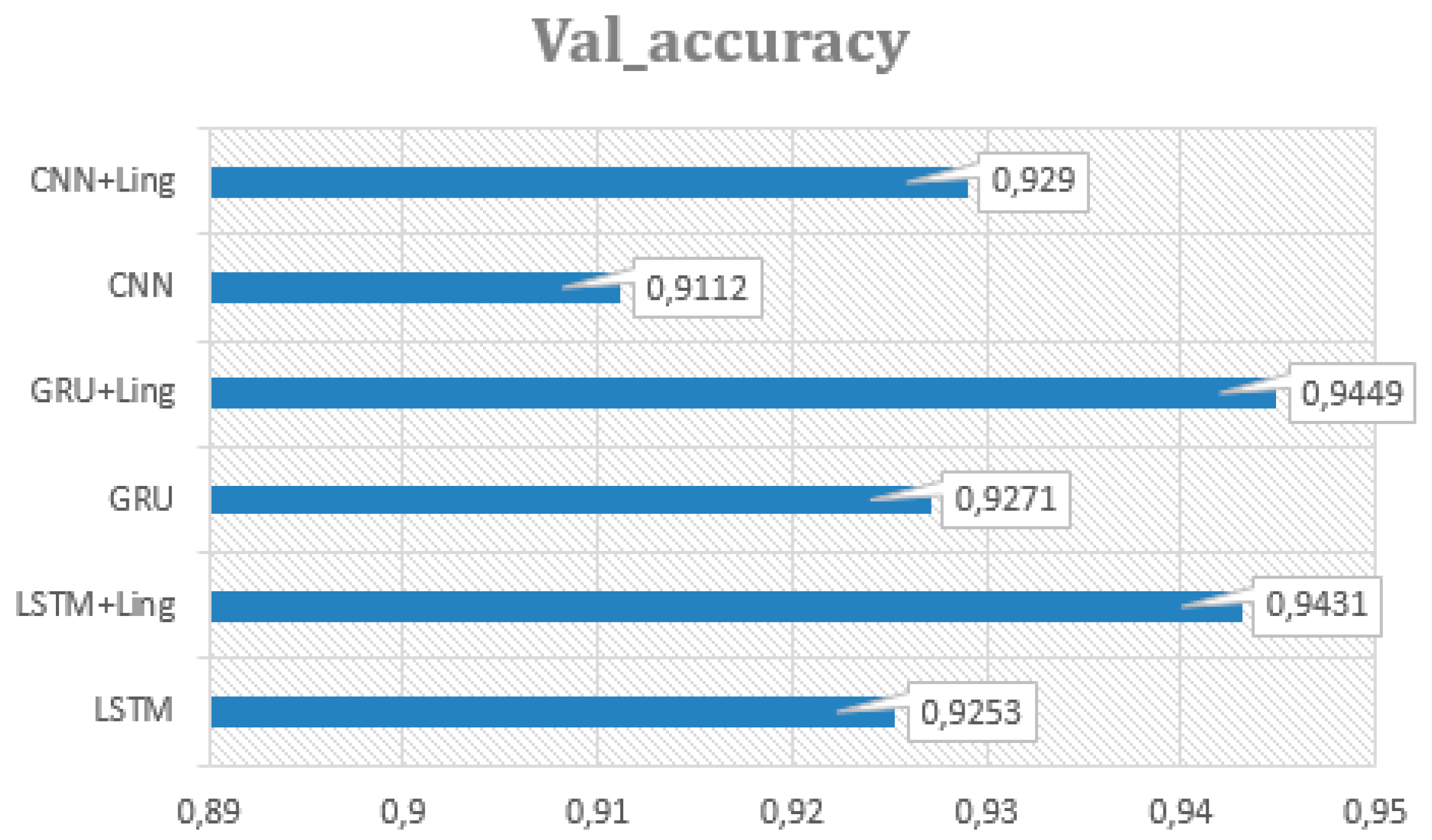

5.3. A Text Feedback Classification Experiment Using Various Machine Learning Models

- Accuracy — training accuracy;

- Val_accuracy — validation accuracy;

- Loss — training loss;

- Val_loss — validation loss.

- Some reviews were of both a clinic and a physician without mentioning the latter’s name. This prevented the named entity recognition tool from assigning the reviews to the mixed class. This problem could be solved by parsing the sentences further with identifying a semantically significant object unspecified by a full name.

- Some reviews expressed contrasting opinions about the clinic, related to different aspects of its operation. The opinions often differed on the organisational support versus the level of medical services provided by the clinic.

6. Conclusions

- The neural network classifiers achieve high accuracy in classifying the Russian-language reviews from social media by sentiment (positive or negative) and target (clinic or physician) using various architectures of the LSTM, CNN, or GRU networks, with the GRU-based architecture being the best (val_accuracy=0.9271).

- The named entity recognition method improves the classification performance for each of the neural network classifiers when applied to the segmented text reviews.

- To further improve the classification accuracy, semantic segmentation of the reviews by target and sentiment is required, as well as a separate analysis of the resulting fragments.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Litvin, S. W., Goldsmith, R. E., & Pan, B. (2008). Electronic word-of-mouth in hospitality and tourism management. Tourism management, 29(3), 458-468. [CrossRef]

- Ismagilova, E., Dwivedi, Y. K., Slade, E., & Williams, M. D. (2017). Electronic word of mouth (eWOM) in the marketing context: A state of the art analysis and future directions.

- Cantallops, A. S., & Salvi, F. (2014). New consumer behavior: A review of research on eWOM and hotels. International Journal of Hospitality Management, 36, 41-51. [CrossRef]

- Mulgund, P., Sharman, R., Anand, P., Shekhar, S., & Karadi, P. (2020). Data quality issues with physician-rating websites: systematic review. Journal of Medical Internet Research, 22(9), e15916. [CrossRef]

- Ghimire, B., Shanaev, S., & Lin, Z. (2022). Effects of official versus online review ratings. Annals of Tourism Research, 92, 103247. [CrossRef]

- Xu, Y., & Xu, X. (2023). Rating deviation and manipulated reviews on the Internet—A multi-method study. Information & Management, 103829. [CrossRef]

- Hu, N., Bose, I., Koh, N. S., & Liu, L. (2012). Manipulation of online reviews: An analysis of ratings, readability, and sentiments. Decision support systems, 52(3), 674-684. [CrossRef]

- Luca, M., & Zervas, G. (2016). Fake it till you make it: Reputation, competition, and Yelp review fraud. Management Science, 62(12), 3412-3427. [CrossRef]

- Namatherdhala, B., Mazher, N., & Sriram, G. K. (2022). Artificial Intelligence in Product Management: Systematic review. International Research Journal of Modernization in Engineering Technology and Science, 4(7).

- Jabeur, S. B., Ballouk, H., Arfi, W. B., & Sahut, J. M. (2023). Artificial intelligence applications in fake review detection: Bibliometric analysis and future avenues for research. Journal of Business Research, 158, 113631. [CrossRef]

- Emmert, M., & McLennan, S. (2021). One decade of online patient feedback: longitudinal analysis of data from a German physician rating website. Journal of Medical Internet Research, 23(7), e24229. [CrossRef]

- Kleefstra, S. M., Zandbelt, L. C., Borghans, I., de Haes, H. J., & Kool, R. B. (2016). Investigating the potential contribution of patient rating sites to hospital supervision: exploratory results from an interview study in the Netherlands. Journal of Medical Internet Research, 18(7), e201. [CrossRef]

- Bardach, N. S., Asteria-Peñaloza, R., Boscardin, W. J., & Dudley, R. A. (2013). The relationship between commercial website ratings and traditional hospital performance measures in the USA. BMJ quality & safety, 22(3), 194-202. [CrossRef]

- Van de Belt, T. H., Engelen, L. J., Berben, S. A., Teerenstra, S., Samsom, M., & Schoonhoven, L. (2013). Internet and social media for health-related information and communication in health care: preferences of the Dutch general population. Journal of medical Internet research, 15(10), e220. [CrossRef]

- Hao, H., Zhang, K., Wang, W., & Gao, G. (2017). A tale of two countries: International comparison of online doctor reviews between China and the United States. International journal of medical informatics, 99, 37-44. [CrossRef]

- Bidmon, S., Elshiewy, O., Terlutter, R., & Boztug, Y. (2020). What patients value in physicians: analyzing drivers of patient satisfaction using physician-rating website data. Journal of medical Internet research, 22(2), e13830. [CrossRef]

- Ellimoottil, C., Leichtle, S. W., Wright, C. J., Fakhro, A., Arrington, A. K., Chirichella, T. J., & Ward, W. H. (2013). Online physician reviews: the good, the bad and the ugly. Bulletin of the American College of Surgeons, 98(9), 34-39.

- Bidmon, S., Terlutter, R., & Röttl, J. (2014). What explains usage of mobile physician-rating apps? Results from a web-based questionnaire. Journal of medical Internet research, 16(6), e3122. [CrossRef]

- 19. Lieber, R. (2012). The web is awash in reviews, but not for doctors. Here’s why. New York Times, (March 9).

- Daskivich, T. J., Houman, J., Fuller, G., Black, J. T., Kim, H. L., & Spiegel, B. (2018). Online physician ratings fail to predict actual performance on measures of quality, value, and peer review. Journal of the American Medical Informatics Association, 25(4), 401-407. [CrossRef] [PubMed]

- Gray, B. M., Vandergrift, J. L., Gao, G. G., McCullough, J. S., & Lipner, R. S. (2015). Website ratings of physicians and their quality of care. JAMA internal medicine, 175(2), 291-293. [CrossRef]

- Skrzypecki, J., & Przybek, J. (2018, May). Physician review portals do not favor highly cited US ophthalmologists. In Seminars in Ophthalmology (Vol. 33, No. 4, pp. 547-551). Taylor & Francis. [CrossRef]

- Widmer, R. J., Maurer, M. J., Nayar, V. R., Aase, L. A., Wald, J. T., Kotsenas, A. L., ... & Pruthi, S. (2018, April). Online physician reviews do not reflect patient satisfaction survey responses. In Mayo Clinic Proceedings (Vol. 93, No. 4, pp. 453-457). Elsevier. [CrossRef]

- Saifee, D. H., Bardhan, I., & Zheng, Z. (2017). Do Online Reviews of Physicians Reflect Healthcare Outcomes?. In Smart Health: International Conference, ICSH 2017, Hong Kong, China, June 26-27, 2017, Proceedings (pp. 161-168). Springer International Publishing.

- Trehan, S. K., Nguyen, J. T., Marx, R., Cross, M. B., Pan, T. J., Daluiski, A., & Lyman, S. (2018). Online patient ratings are not correlated with total knee replacement surgeon–specific outcomes. HSS Journal, 14(2), 177-180. [CrossRef]

- Doyle, C., Lennox, L., & Bell, D. (2013). A systematic review of evidence on the links between patient experience and clinical safety and effectiveness. BMJ open, 3(1), e001570. [CrossRef] [PubMed]

- Okike, K., Uhr, N. R., Shin, S. Y., Xie, K. C., Kim, C. Y., Funahashi, T. T., & Kanter, M. H. (2019). A comparison of online physician ratings and internal patient-submitted ratings from a large healthcare system. Journal of General Internal Medicine, 34, 2575-2579. [CrossRef]

- Rotman, L. E., Alford, E. N., Shank, C. D., Dalgo, C., & Stetler, W. R. (2019). Is there an association between physician review websites and press ganey survey results in a neurosurgical outpatient clinic?. World Neurosurgery, 132, 891-899. [CrossRef]

- Lantzy, S., & Anderson, D. (2020). Can consumers use online reviews to avoid unsuitable doctors? Evidence from RateMDs. com and the Federation of State Medical Boards. Decision Sciences, 51(4), 962-984.

- Gilbert, K., Hawkins, C. M., Hughes, D. R., Patel, K., Gogia, N., Sekhar, A., & Duszak Jr, R. (2015). Physician rating websites: do radiologists have an online presence?. Journal of the American College of Radiology, 12(8), 867-871.

- Okike, K., Peter-Bibb, T. K., Xie, K. C., & Okike, O. N. (2016). Association between physician online rating and quality of care. Journal of medical Internet research, 18(12), e324. [CrossRef]

- Imbergamo, C., Brzezinski, A., Patankar, A., Weintraub, M., Mazzaferro, N., & Kayiaros, S. (2021). Negative online ratings of joint replacement surgeons: An analysis of 6,402 r. [CrossRef]

- Mostaghimi, A., Crotty, B. H., & Landon, B. E. (2010). The availability and nature of physician information on the internet. Journal of general internal medicine, 25, 1152-1156. [CrossRef]

- Lagu, T., Hannon, N. S., Rothberg, M. B., & Lindenauer, P. K. (2010). Patients’ evaluations of health care providers in the era of social networking: an analysis of physician-rating websites. Journal of general internal medicine, 25, 942-946. [CrossRef]

- López, A., Detz, A., Ratanawongsa, N., & Sarkar, U. (2012). What patients say about their doctors online: a qualitative content analysis. Journal of general internal medicine, 27, 685-692. [CrossRef]

- Shah, A. M., Yan, X., Qayyum, A., Naqvi, R. A., & Shah, S. J. (2021). Mining topic and sentiment dynamics in physician rating websites during the early wave of the COVID-19 pandemic: Machine learning approach. International Journal of Medical Informatics, 149, 104434. [CrossRef]

- Bidmon, S., Elshiewy, O., Terlutter, R., & Boztug, Y. (2020). What patients value in physicians: analyzing drivers of patient satisfaction using physician-rating website data. Journal of medical Internet research, 22(2), e13830.

- Shah, A. M., Yan, X., Tariq, S., & Ali, M. (2021). What patients like or dislike in physicians: Analyzing drivers of patient satisfaction and dissatisfaction using a digital topic modeling approach. Information Processing & Management, 58(3), 102516. [CrossRef]

- Lagu, T., Metayer, K., Moran, M., Ortiz, L., Priya, A., Goff, S. L., & Lindenauer, P. K. (2017). Website characteristics and physician reviews on commercial physician-rating websites. Jama, 317(7), 766-768. [CrossRef]

- Chen, Y., & Xie, J. (2008). Online consumer review: Word-of-mouth as a new element of marketing communication mix. Management science, 54(3), 477-491. [CrossRef]

- Pavlou, P. A., & Dimoka, A. (2006). The nature and role of feedback text comments in online marketplaces: Implications for trust building, price premiums, and seller differentiation. Information systems research, 17(4), 392-414. [CrossRef]

- Terlutter, R., Bidmon, S., & Röttl, J. (2014). Who uses physician-rating websites? Differences in sociodemographic variables, psychographic variables, and health status of users and nonusers of physician-rating websites. Journal of medical Internet research, 16(3), e97. [CrossRef]

- Emmert, M., & Meier, F. (2013). An analysis of online evaluations on a physician rating website: evidence from a German public reporting instrument. Journal of medical Internet research, 15(8), e2655. [CrossRef]

- Nwachukwu, B. U., Adjei, J., Trehan, S. K., Chang, B., Amoo-Achampong, K., Nguyen, J. T., ... & Ranawat, A. S. (2016). Rating a sports medicine surgeon's “quality” in the modern era: an analysis of popular physician online rating websites. HSS Journal®, 12(3), 272-277.

- Obele, C. C., Duszak Jr, R., Hawkins, C. M., & Rosenkrantz, A. B. (2017). What patients think about their interventional radiologists: assessment using a leading physician ratings website. Journal of the American College of Radiology, 14(5), 609-614.

- Emmert, M., Meier, F., Pisch, F., & Sander, U. (2013). Physician choice making and characteristics associated with using physician-rating websites: cross-sectional study. Journal of medical Internet research, 15(8), e2702. [CrossRef]

- Gao, G. G., McCullough, J. S., Agarwal, R., & Jha, A. K. (2012). A changing landscape of physician quality reporting: analysis of patients’ online ratings of their physicians over a 5-year period. Journal of medical Internet research, 14(1), e38. [CrossRef]

- Rahim, A. I. A., Ibrahim, M. I., Musa, K. I., Chua, S. L., & Yaacob, N. M. (2021, October). Patient satisfaction and hospital quality of care evaluation in malaysia using servqual and facebook. In Healthcare (Vol. 9, No. 10, p. 1369). MDPI. [CrossRef]

- Galizzi, M. M., Miraldo, M., Stavropoulou, C., Desai, M., Jayatunga, W., Joshi, M., & Parikh, S. (2012). Who is more likely to use doctor-rating websites, and why? A cross-sectional study in London. BMJ open, 2(6), e001493. [CrossRef]

- Hanauer, D. A., Zheng, K., Singer, D. C., Gebremariam, A., & Davis, M. M. (2014). Public awareness, perception, and use of online physician rating sites. Jama, 311(7), 734-735. [CrossRef]

- McLennan, S., Strech, D., Meyer, A., & Kahrass, H. (2017). Public awareness and use of German physician ratings websites: Cross-sectional survey of four North German cities. Journal of medical Internet research, 19(11), e387. [CrossRef]

- Lin, Y., Hong, Y. A., Henson, B. S., Stevenson, R. D., Hong, S., Lyu, T., & Liang, C. (2020). Assessing patient experience and healthcare quality of dental care using patient online reviews in the United States: mixed methods study. Journal of Medical Internet Research, 22(7), e18652. [CrossRef]

- Emmert, M., Meier, F., Heider, A. K., Dürr, C., & Sander, U. (2014). What do patients say about their physicians? An analysis of 3000 narrative comments posted on a German physician rating website. Health policy, 118(1), 66-73. [CrossRef]

- Greaves, F., Ramirez-Cano, D., Millett, C., Darzi, A., & Donaldson, L. (2013). Harnessing the cloud of patient experience: using social media to detect poor quality healthcare. BMJ quality & safety, 22(3), 251-255. [CrossRef]

- Hao, H., & Zhang, K. (2016). The voice of Chinese health consumers: a text mining approach to web-based physician reviews. Journal of medical Internet research, 18(5), e108. [CrossRef]

- Shah, A. M., Yan, X., Shah, S. A. A., & Mamirkulova, G. (2020). Mining patient opinion to evaluate the service quality in healthcare: a deep-learning approach. Journal of Ambient Intelligence and Humanized Computing, 11, 2925-2942. [CrossRef]

- Wallace, B. C., Paul, M. J., Sarkar, U., Trikalinos, T. A., & Dredze, M. (2014). A large-scale quantitative analysis of latent factors and sentiment in online doctor reviews. Journal of the American Medical Informatics Association, 21(6), 1098-1103. [CrossRef]

- Ranard, B. L., Werner, R. M., Antanavicius, T., Schwartz, H. A., Smith, R. J., Meisel, Z. F., ... & Merchant, R. M. (2016). Yelp reviews of hospital care can supplement and inform traditional surveys of the patient experience of care. Health Affairs, 35(4), 697-705. [CrossRef]

- Hao, H. (2015). The development of online doctor reviews in China: an analysis of the largest online doctor review website in China. Journal of medical Internet research, 17(6), e134. [CrossRef]

- Jiang, S., & Street, R. L. (2017). Pathway linking internet health information seeking to better health: a moderated mediation study. Health Communication, 32(8), 1024-1031. [CrossRef]

- Hotho, A. Nürnberger and G. Paaß, “A Brief Survey of Text Mining”, LDV Forum - GLDV Journal for Computational Linguistics and Language Technology, vol. 20, pp.19-62, 2005.

- V. Păvăloaia, E. Teodor, D. Fotache and M. Danileț, M. “Opinion Mining on Social Media Data: Sentiment Analysis of User Preferences”, Sustainability, 11, 4459, 2019. [CrossRef]

- D. Bespalov, B. Bing, Q. Yanjun and A. Shokoufandeh, “Sentiment classification based on supervised latent n-gram analysis”, Proceedings of the 20th ACM international conference on Information and knowledge management (CIKM ’11). Association for Computing Machinery, New York, NY, USA, 375–382, 2011.

- Irfan, R., King, C.K., Grages, D., Ewen, S., Khan, S.U., Madani, S.A., Kolodziej, J., Wang, L., Chen, D., Rayes, A., Tziritas, N., Xu, C.Z., Zomaya, A.Y., Alzahrani, A.S. & Li, H., A Survey on Text Mining in 196 Phat Jotikabukkana, et al. Social Networks, Cambridge Journal, The Knowledge Engineering Review, 30(2), pp. 157-170, 2015.

- Patel, P. & Mistry, K., A Review: Text Classification on Social Media Data, IOSR Journal of Computer Engineering, 17(1), pp. 80-84, 2015.

- Lee, K., Palsetia, D., Narayanan, R., Patwary, Md.M.A., Agrawal, A. & Choudhary, A.S, Twitter Trending Topic Classification, in Proceeding of the 2011 IEEE 11 th International Conference on Data Mining Workshops, ICDW’11, pp. 251-258, 2011.

- Kateb, F. & Kalita, J., Classifying Short Text in Social Media: Twitter as Case Study, International Journal of Computer Applications, 111(9), pp. 1-12, 2015. [CrossRef]

- Chirawichitichai, N., Sanguansat, P. & Meesad, P., A Comparative Study on Feature Weight in Thai Document Categorization Framework, 10th International Conference on Innovative Internet Community Services (I2CS), IICS, pp. 257-266, 2010.

- Theeramunkong, T. & Lertnattee, V., Multi-Dimension Text Classification, SIIT, Thammasat University, 2005.http://www.aclweb.org /anthology/C02-1155 (25 October 2023).

- Viriyayudhakorn, K., Kunifuji, S. & Ogawa, M., A Comparison of Four Association Engines in Divergent Thinking Support Systems on Wikipedia, Knowledge, Information, and Creativity Support Systems, KICSS2010, Springer, pp. 226-237, 2011.

- Sornlertlamvanich, V., Pacharawongsakda, E. & Charoenporn, T., Understanding Social Movement by Tracking the Keyword in Social Media, in MAPLEX2015, Yamagata, Japan, February 2015.

- 72. Konstantinov A., Moshkin V., Yarushkina N. Approach to the Use of Language Models BERT and Word2vec in Sentiment Analysis of Social Network Texts. In: Dolinina O. et al. (eds) Recent Research in Control Engineering and Decision Making. ICIT 2020. Studies in Systems, Decision and Control, vol 337. Springer, Cham. pp 462-473. [CrossRef]

- Kalabikhina, I., Zubova, E., Loukachevitch, N., Kolotusha, A., Kazbekova, Z., Banin, E., Klimenko, G., Identifying Reproductive Behavior Arguments in Social Media Content Users’ Opinions through Natural Language Processing Techniques, Population and Economics, 7(2), pp. 40-59, 2023. [CrossRef]

| # | Feedback Text | Feedback Data | Sentiment Class | Target Class |

|---|---|---|---|---|

| 1 | "The doctor was really rude, she had no manners with the patients, she didn’t care about your poor health, all she wanted was to get home early. I never want to see that doctor again. She’s rubbish, I wouldn’t recommend her to anyone." | Ekaterina, April 13, 2023, Moscow | Negative | physician |

| 2 | “I had to get an MRI scan of my abdomen. They kept me waiting. They gave me the scan results straight away; I’ll show them to my doctor. It was easy for me to get to the clinic. Their manners were not very good. I won’t be going back there.” | Kamil, April 17, 2023, Moscow | Negative | clinic |

| 3 | "All those good reviews are written by their staff marketers, they try to stop the bad ones, they don’t let any real complaints get through. The clinic is very pricey, they just want to make money, no one cares about your health there." | Anonymous, April 10, 2023, Moscow | Negative | clinic |

| 4 | "What they do in this clinic is rip you off because they make you do checkups and tests that you don’t need. I found out when I was going through all this stuff, and then I wondered why I had to do it all." | Arina, March 2, 2023, Moscow | Negative | clinic |

| 5 | "Rubbish doctor. My problem is really bad skin dryness and rashes because of that. ######## just said, “you just moisturise it” and that was it. She didn’t tell me how to moisturise my skin or what to use for moisturiser. I had to push her asking for advice on care and what to do next. She didn’t give me anything except some cream, and that only after I asked her". | Anonymous, May 11, 2023, Moscow, Russia | Negative | physician |

| 6 | "My husband had a bad tooth under the crown, the dentist said he had to redo his whole jaw and put all new crowns again, like he had to sort everything out to fit the new crowns after the tooth was fixed. In the end we trusted the dentist and redid my husband’s whole jaw. The bridge didn’t last a month, it kept coming out. In the end we had to do it all over again with another dentist at another clinic. He was awful, he only rips you off. I don’t recommend this dentist to anyone.” | Tatyana, April 13, 2023, Moscow | Negative | physician |

| 7 | " In 2020, I was going to a doctor at the clinic #######.ru for 3 months for the pain in my left breast. He gave me some cream and told me to go on a diet, but I was getting worse. I went to see another doctor; it turned out it was breast cancer. Nearly killed me…" | Maya, March 27, 2023, Moscow | Negative | physician |

| 8 | "####### nearly left my child with one leg. A healthy 10-month-old child had to have two surgeries after what this “doctor” had given him. It’s over now, but the “nice” memory of this woman will stay with me forever." | Elizaveta, March 16, 2023, Moscow | Negative | physician |

| LSTM | GRU | CNN | SVM | BERT | |

|---|---|---|---|---|---|

| Accuracy | 0.9369 | 0.9309 | 0.9772 | 0.8441 | 0.8942 |

| Val_accuracy | 0.9253 | 0.9271 | 0.9112 | 0.8289 | 0.8711 |

| Loss | 0.1859 | 0.2039 | 0.0785 | 0.3769 | 0.1729 |

| Val_loss | 0.2248 | 0.2253 | 0.3101 | 0.3867 | 0.2266 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).