1. Introduction

Numerical iterative techniques are applied to find the roots of an equation when an analytic solution is not available [1, 2, 3, 4]. Hybrid algorithms are a recent development in iterative root finding: they combine two or more classical techniques to solve this fundamental problem, which arises in diverse fields. Several hybrid algorithms have recently been designed as efficient solutions. Here we present a new hybrid algorithm that takes a more intuitive and heuristic approach to hybridization.

Most optimization problems lead to solving non-linear equations in order to compute the optimal value of a parameter, that is, the root of the equation. We design and implement a new algorithm, Hybrid4, that is a more efficient hybrid of the BTsection and False Position methods. The implementation results validate that the new algorithm surpasses the existing hybrid algorithms built on the standalone Bisection and Trisection algorithms in conjunction with the False Position and Newton-Raphson algorithms.

First, why do we need another root-finding algorithm? Even though the classical methods have been developed and used for decades, enhancements are progressively made to improve their performance [5]. Several factors determine the efficiency of an algorithm: accuracy, CPU time, and generality, that is, whether it works as expected on most functions and domain intervals. Accuracy may be measured in terms of the number of correct decimal digits. For iterative methods, efficiency could mean the number of iterations needed to arrive at a solution, coupled with CPU time and the computational complexity of each iteration.

Finding the roots of an equation is a fundamental problem in diverse fields in the physical and social sciences, including Computer Science, Engineering (Biological, Civil, Electrical, Mechanical), and the Social Sciences (Psychology, Economics, Business, Stock Analysis). These fields look for optimal solutions to recurring non-linear problems. Problems such as minimization, target shooting, orbital motion, planetary motion, and financial market stock prediction analysis lend themselves to finding roots of non-linear functional equations [6, 7]. There is a thorough study by Sapna and Mohan in the financial sector, away from mathematics [8].

Thus, a root-finding algorithm solves an equation defined by a function that may be linear, non-linear, or transcendental. Some root-finding algorithms do not guarantee that they will find a root. If such an algorithm does not find a root, that does not mean that a root does not exist. No single classical method outperforms the others on all functions [9]. A method may outperform other methods on one input and produce inferior results on another. Each method has its own strengths and weaknesses.

The classical root-finding algorithms for an equation f(x) = 0 are the Bisection, False Position, Newton-Raphson, and Secant methods. Every textbook on numerical techniques has details of these methods [1, 2, 3]. Alongside the classical methods, enhancements are progressively being made to improve their performance [10, 11, 12].

Recent papers are making headway in the search for better-performing methods. In response, better algorithms that are hybrids of the classical Bisection and Trisection methods with the False Position and Newton-Raphson methods have been developed, namely Hybrid1 [10], Hybrid2 [11], and Hybrid3 [12]. Inspired by these three algorithms, we have crafted a new algorithm, Hybrid4, that is a blend of the BTsection and Regula Falsi algorithms. This blended algorithm compares favorably with Hybrid1, Hybrid2, and Hybrid3 with respect to computational efficiency, solution accuracy (smaller error), and the iteration count required to terminate within the specified error tolerance. Hybrid4 optimizes these algorithms further: it first blends the Bisection and Trisection algorithms into BTsection, and then blends the result with the False Position method. This takes hybridization one step further by eliminating some of the computing time and increasing the efficiency of the algorithm.

The paper is organized as follows. Section 2 is a brief description of the classical methods (Bisection, Regula Falsi, Newton-Raphson, Secant) and their strengths and pitfalls; it also covers the Trisection algorithm and the new BTsection algorithm. Section 3 describes the hybrid algorithms and the new algorithm, Hybrid4, that blends the BTsection and False Position algorithms. Section 4 presents experimental results on the performance of Hybrid4 and validates it by comparison with the previous hybrid algorithms. Section 5 is the conclusion.

2. Related Background

The classical Bisection, False Position, Newton-Raphson, and Secant methods are found in detail in any textbook and are restated in most articles [1, 2, 3]. All of them are iteration-based. The Bisection method (BIS) computes a new approximation at the midpoint of the interval. The False Position method (FP) obtains a new approximation from the secant line joining the points on the graph of the function at the interval endpoints. The Newton-Raphson method (NR) derives a new approximation from the tangent line to the function at the current iterate. Each algorithm iterates to compute successively better approximations.
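The three update rules described above can be sketched as single-step functions. This is only an illustrative sketch; the function names and signatures are assumptions made here and do not come from the cited textbooks.

```python
from typing import Callable

Func = Callable[[float], float]


def bisection_step(a: float, b: float) -> float:
    """Midpoint of the bracketing interval [a, b]."""
    return (a + b) / 2.0


def false_position_step(f: Func, a: float, b: float) -> float:
    """x-intercept of the secant line through (a, f(a)) and (b, f(b)).

    Assumes f(a) != f(b), which holds when f(a) and f(b) have opposite signs.
    """
    fa, fb = f(a), f(b)
    return a - fa * (b - a) / (fb - fa)


def newton_step(f: Func, df: Func, x: float) -> float:
    """x-intercept of the tangent line to f at x; assumes df(x) != 0."""
    return x - f(x) / df(x)
```

Each function produces one new approximation; a full method repeats its step and updates the interval or the iterate until the stopping criterion is met.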

We will discuss the recent Trisection and BTsection algorithms. Where the Bisection method divides an interval into two equal parts, the Trisection algorithm subdivides the interval into three equal parts to get a better estimate at the next iteration. BTsection is a new algorithm that subdivides the interval adaptively into three parts: it first bisects the interval, then adaptively trisects the promising half to get a better approximation; see the algorithm in Section 3 [14].

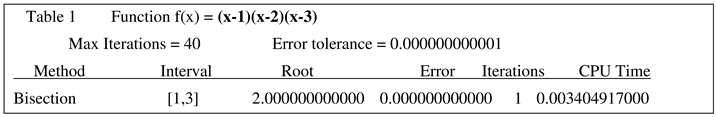

The Bisection method rests on the following theorem: "If f is continuous on a closed interval [a, b] and f has opposite signs at the endpoints, i.e. f(a)f(b) < 0, then there is a root in the open interval (a, b)." The standard implementation expects f(a)f(b) < 0 to begin with. It therefore fails to proceed on problems where f(a)f(b) = 0, such as x^2 - x - 2 = 0 on the interval [2, 4], [0, 2], [-2, -1], or [-3, -1]. A robust bisection algorithm first confirms that f(a)f(b) < 0 before proceeding to iterate. For example, for f(x) = (x-1)*(x-2)*(x-3) on the interval [1, 3], the original version of the algorithm will not start; it succeeds on [a, b] only when neither a nor b is 1, 2, or 3.

In order to succeed on any interval, including these special cases, we reformulate the theorem to use the closed interval throughout: "If f is continuous on a closed interval [a, b] and f does not have the same sign at the endpoints, i.e. f(a)f(b) <= 0, then there is a root in the closed interval [a, b]." The Bisection algorithm is thus generalized from "f(a)f(b) < 0" to "f(a)f(b) <= 0" so that the interval boundary is included. For example, the equation x^2 - x - 2 = 0 may show up in some applications with an interval like [2, 4] or [0, 2]. This may look simple, since f(a)f(b) = 0 means that a or b must be a root, but we still need to determine which of a and b it is.
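One way to honor the closed-interval condition f(a)f(b) <= 0 is to test the endpoints before iterating. The sketch below is an assumption about how such a check could be written, not the authors' implementation; the name `bisection_closed` and the defaults `eps` and `imax` are hypothetical. Because it reports an endpoint root before bisecting, it may return a different root than a variant that tests the midpoint first.

```python
from typing import Callable, Tuple


def bisection_closed(f: Callable[[float], float], a: float, b: float,
                     eps: float = 1e-12, imax: int = 40) -> Tuple[float, int]:
    """Bisection that accepts f(a)*f(b) <= 0; returns (root, iterations)."""
    fa, fb = f(a), f(b)
    # Endpoint roots answer the question "which of a and b is the root?"
    if fa == 0.0:
        return a, 0
    if fb == 0.0:
        return b, 0
    if fa * fb > 0:
        raise ValueError("f(a) and f(b) must not have the same sign")
    r = a
    for k in range(1, imax + 1):
        r = (a + b) / 2.0
        fr = f(r)
        if abs(fr) < eps:          # residual test |f(r)| < eps
            return r, k
        if fa * fr < 0:            # root lies in [a, r]
            b = r
        else:                      # root lies in [r, b]
            a, fa = r, fr
    return r, imax
```

For x^2 - x - 2 on [2, 4] this sketch returns the endpoint 2 without iterating, whereas a strict f(a)f(b) < 0 check would refuse to start.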

Approximate solutions by iterative methods assume that we have some idea of where the root may be. The initial guess should be as close to the root as possible. That is, we provide a start point or an initial bracketing interval, and the algorithm iterates in search of an approximation to the actual root within some acceptable tolerance.

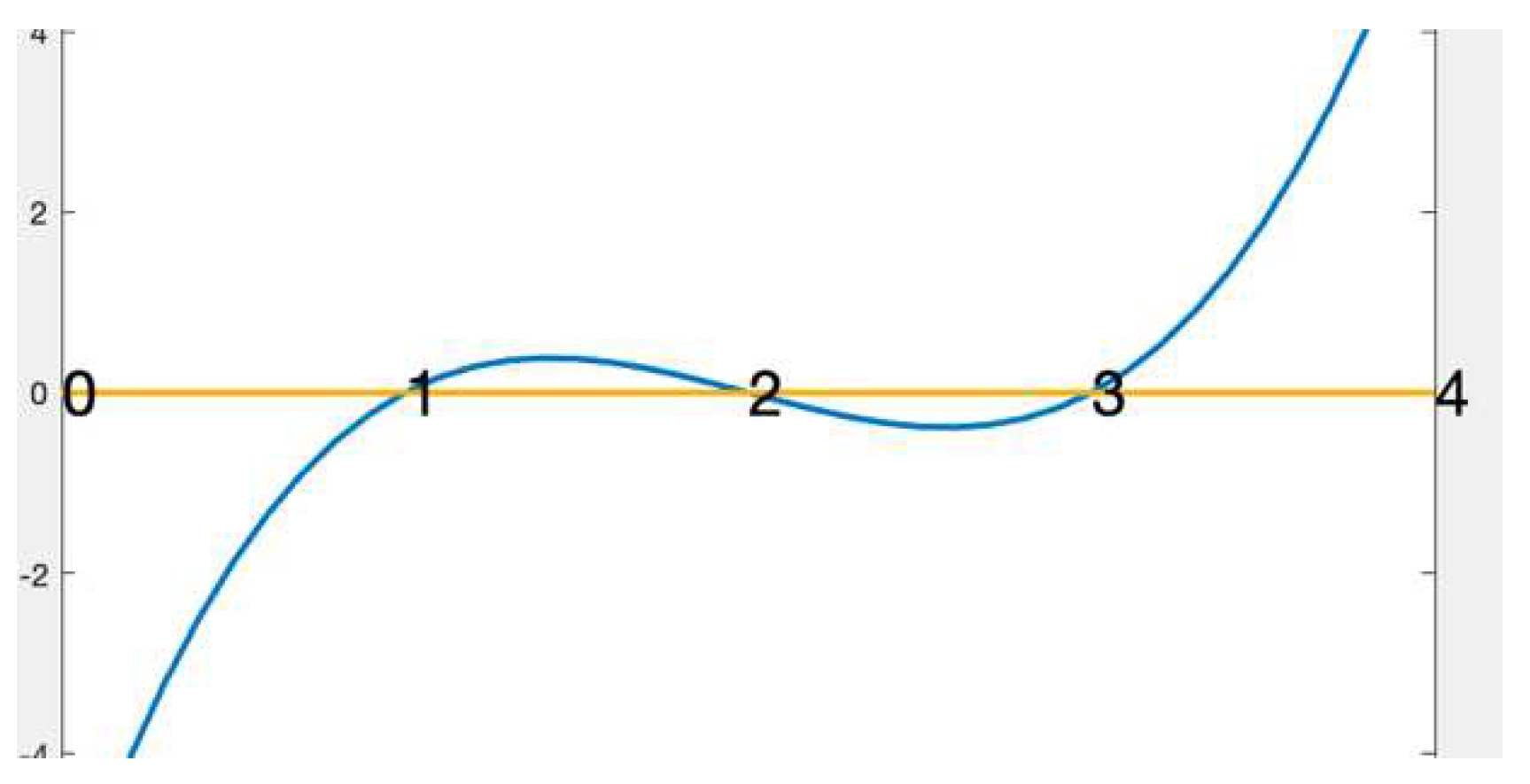

Figure 1. The modified version of Bisection on [1, 3] succeeds in determining the root x = 2 of (x-1)(x-2)(x-3) = 0 in one iteration.

Since the Bisection, Regula Falsi, and Newton Raphson methods are readily available in the literature, their standalone derivations are skipped in this background section.

To enhance the performance of the Bisection method, Bader et al. designed a Trisection method that supersedes Bisection in finding an approximate root. The Trisection method reduces the number of iterations, the computation time, and the approximation error, at a small cost in the number of function evaluations. It inspired us to combine the Bisection and Trisection algorithms into a BTsection algorithm. BTsection incurs no additional compute cost and is on par with Trisection in CPU time and in the error of the approximate root, but it requires fewer iterations; see the examples in this section. These algorithms are blended with the False Position and Newton-Raphson methods to construct hybrid algorithms. The effectiveness of root approximation is measured by the number of iterations and by the accuracy of the approximate root when the algorithm terminates. The heuristic metrics for measuring the error, the number of iterations, and the stopping criteria are elaborated first.

Heuristics for comparing algorithms

2.1. Metric for Approximation Error Measurement

There are various ways to measure the error in the approximate root of an equation f(x) = 0 at successive iterations in order to continue to a more accurate approximation of the actual root. At iteration n, to determine $r_n$ for which $f(r_n) \approx 0$, we proceed as follows.

The error in the iterated root approximation can be the absolute error $|r_n - r_{n-1}|$ or the relative error $|r_n - r_{n-1}| / |r_n|$.

Since a root can be zero, in order to avoid division by small numbers, it is preferable to use the absolute error $|r_n - r_{n-1}|$ for the convergence test. Another reason is that if $r_n = 2^{-n}$, then $|r_n - r_{n-1}| / |r_n|$ is always 1 and can never be less than 1, so the relative-error tolerance test can never be satisfied. Since the function value is expected to be zero at the root, an alternative and more appealing error test uses $f(r_n)$ instead of $r_n$. There are three versions of this criterion:

$|f(r_n) - f(r_{n-1})|$, or $|f(r_n) - f(r_{n-1})| / |f(r_n)|$, or $|f(r_n)|$.

Since $f(r_n)$ is close to zero near the root, in order to avoid division by small numbers we discard $|f(r_n) - f(r_{n-1})| / |f(r_n)|$. Further, since $|f(r_n) - f(r_{n-1})|$ can be close to zero without $|f(r_n)|$ being close to zero, we also discard $|f(r_n) - f(r_{n-1})|$ in favor of $|f(r_n)|$ alone, the trueValue error. The sequence $f(r_n) = (n-1)/n$ is such an example. We therefore avoid the first two criteria and use the last one, $|f(r_n)|$.

Now we are left with two options, $|r_n - r_{n-1}|$ and $|f(r_n)|$, to consider for error analysis. Again, $r_n$ and $r_{n-1}$ can be close to each other without $f(r_n)$ being close to zero; for example, $r_n = 1 + 1/n$ with $f(r_n) = r_n$. Between the two options, we find that $|f(r_n)|$ is the only reliable metric for analyzing the approximation error. Hence we use $|f(r_n)|$ as the criterion for comparison with the error tolerance, uniformly for all the methods.
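The counterexamples in this subsection can be checked numerically. The short sketch below is only illustrative; the helper names `step_error`, `relative_step_error`, and `residual` are assumptions introduced here for the step-based and function-value-based error measures.

```python
def step_error(r_n: float, r_prev: float) -> float:
    """Absolute change between successive iterates."""
    return abs(r_n - r_prev)


def relative_step_error(r_n: float, r_prev: float) -> float:
    """Change between successive iterates relative to the current iterate."""
    return abs(r_n - r_prev) / abs(r_n)


def residual(f_rn: float) -> float:
    """Function-value error |f(r_n)|, the metric adopted in the text."""
    return abs(f_rn)


# Case 1: r_n = 2^-n converges to 0, yet the relative step error stays at 1.
rel_errors = [relative_step_error(2.0 ** -n, 2.0 ** -(n - 1)) for n in range(1, 20)]

# Case 2: f(r_n) = (n-1)/n; successive differences shrink while |f(r_n)| tends to 1.
g = lambda n: (n - 1) / n
diff_of_values = abs(g(1000) - g(999))

# Case 3: r_n = 1 + 1/n with f(r_n) = r_n; the step shrinks but the residual does not.
r = lambda n: 1.0 + 1.0 / n
small_step, large_residual = step_error(r(1000), r(999)), residual(r(1000))
```

In all three cases only the residual test reflects that the iterates are not approaching a zero of the function.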

2.2. Metric for Iterations Stopping Criteria, Halting Condition

The stopping criterion plays a major role in simulations. The iteration termination (stopping) criterion for the False Position method is different from that for the Bisection method. The trade-off is between the accuracy of the outcome and the efficiency of the computation. In order to obtain n-significant-digit accuracy, let $\epsilon_s$ be the stopping error and let $\epsilon_a$ be the approximation error at any iteration. If $\epsilon_a < \epsilon_s$, the algorithm stops iterating. With $\epsilon_s = 5/10^{n-1}$, we have n-significant-digit accuracy in the outcome [1]. The Bisection algorithm is trivial [2]; the Trisection and BTsection algorithms are described in Section 3.

Here we describe two enhancements to the Bisection algorithm: the Trisection algorithm and the BTsection algorithm (Section 3). Each performs five comparison tests and references four function values per iteration, so the BTsection algorithm uses the same amount of computational resources as the Trisection algorithm.

However, the numbers of iterations $b_n$ (Bisection), $t_n$ (Trisection), and $bt_n$ (BTsection) required on [a, b] with stopping tolerance tol are

$b_n = \log_2\{(b-a)/tol\}$, $t_n = 0.63 \log_2\{(b-a)/tol\}$, $bt_n = 0.39 \log_2\{(b-a)/tol\}$.

The Trisection algorithm takes 37% fewer iterations than the Bisection algorithm to converge within the desired tolerance.

The BTsection algorithm needs 0.39/0.63, or about 62%, of the Trisection iterations, that is, about 38% fewer iterations than the Trisection algorithm (the 24-point difference between 0.63 and 0.39 is measured against the Bisection count). In addition, as observed below, the computation per iteration is the same in the Trisection and BTsection algorithms: five comparison tests and references to four function values.
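The coefficients 0.63 and 0.39 can be reproduced under the assumption that each iteration shrinks the bracketing interval by a fixed factor: 2 for Bisection, 3 for Trisection, and 6 for BTsection (a halving followed by keeping one third of the half). The factor 6 is an interpretation made here because 1/log2(6) is approximately 0.39; the function name is hypothetical.

```python
import math
from typing import Dict


def iteration_estimates(a: float, b: float, tol: float) -> Dict[str, float]:
    """Estimated iteration counts to shrink [a, b] below tol.

    Assumes a fixed shrink factor per iteration:
    2 (Bisection), 3 (Trisection), 6 (BTsection, assumed best case).
    """
    base = math.log2((b - a) / tol)          # Bisection: log2((b - a) / tol)
    return {
        "bisection": base,
        "trisection": base / math.log2(3),   # about 0.63 * base
        "btsection": base / math.log2(6),    # about 0.39 * base
    }


est = iteration_estimates(1.0, 4.0, 1e-12)
ratio_tri = est["trisection"] / est["bisection"]    # close to 0.63
ratio_bt = est["btsection"] / est["bisection"]      # close to 0.39
```

These are estimates for interval-width convergence only; the iteration counts observed with a residual-based stopping test can differ.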

2.3. Criteria for Performance

This is an experimental science. The methods are numerical and iterative, not analytic. A method may perform well in one case and fail miserably in another; see the examples below. No one method outperforms all other methods all the time on all intervals of definition [9, 15]. We show experimentally that the proposed algorithm performs better than the existing related algorithms on the cases tested.

There is no easy way to declare that an algorithm is superior to other existing algorithms. Several factors must be taken into consideration when distinguishing between competing algorithms for a root-finding problem.

A sound approach is to show how the proposed algorithm differs from the other algorithms. To support the new idea, it is also desirable that the new solution be applicable across a broad range of functions and intervals rather than a restricted domain. A single customized example gives neither a quantitative nor a qualitative view. We need to check the performance and applicability of the proposed approach in a broader scope. To make this concrete, consider the following example.

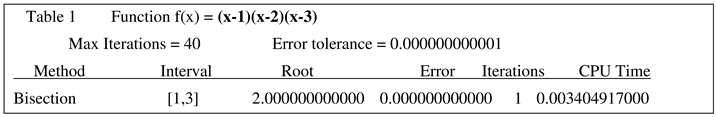

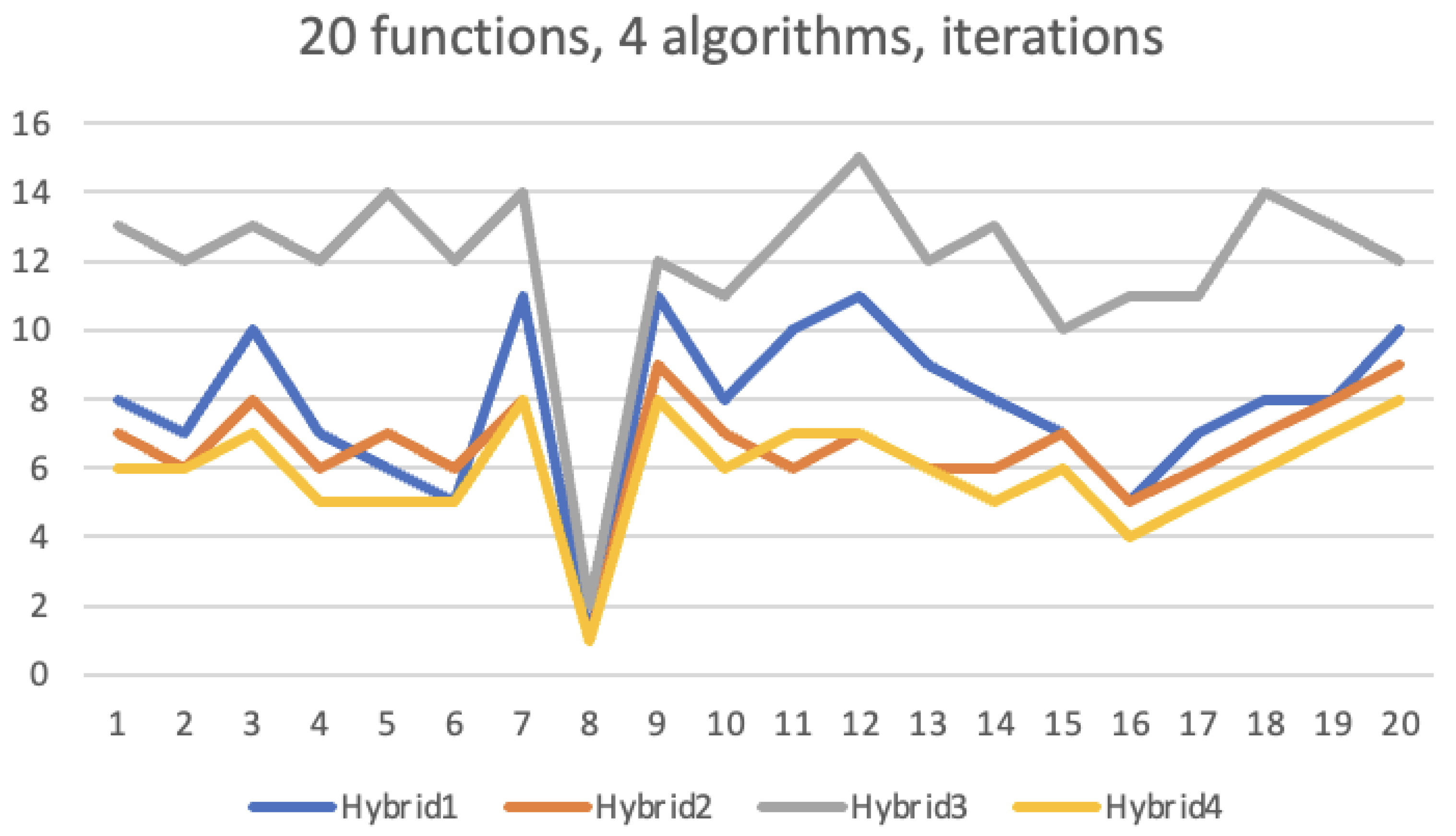

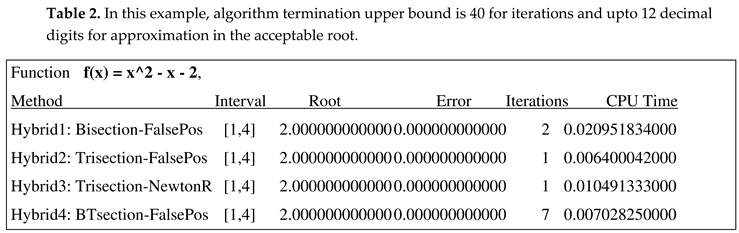

For example, the following problem shows the Trisection algorithm outperforming the other algorithms; see Table 2 [Bader], [12]. It gets the correct root in one iteration, whereas the other cited algorithms take more iterations and more CPU time. One could infer from this example that the Trisection-based algorithms 2 and 3 work well and are superior to the other algorithms. Of course, this holds when the input test function is x^2 - x - 2 and the interval is [1, 4].

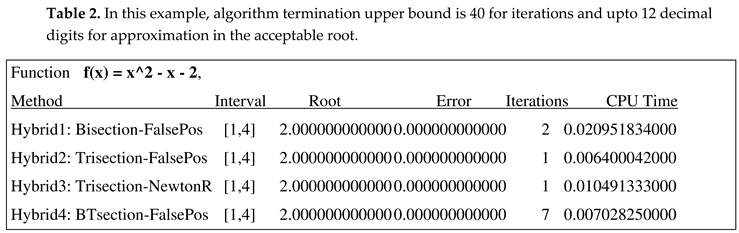

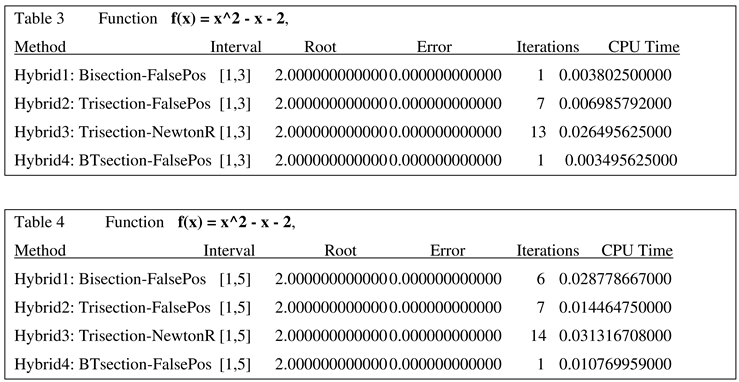

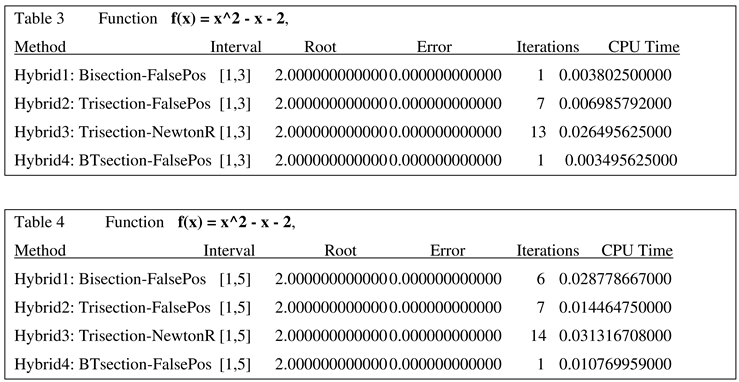

Now consider the same function and the same four test algorithms, with the initial input interval [1, 4] changed to [1, 3] or [1, 5]. Tables 3 and 4 show that the inference drawn from Table 2 no longer holds; the tables are turned. Here algorithm 4 solves the same problem in one iteration and uses the least CPU time. Again, it would be unfair to declare from this one example that algorithm 4 outperforms algorithms 1, 2, and 3. Table 4 gives a further clue: even though algorithm 4 does not terminate in one iteration, it does terminate before the other algorithms.

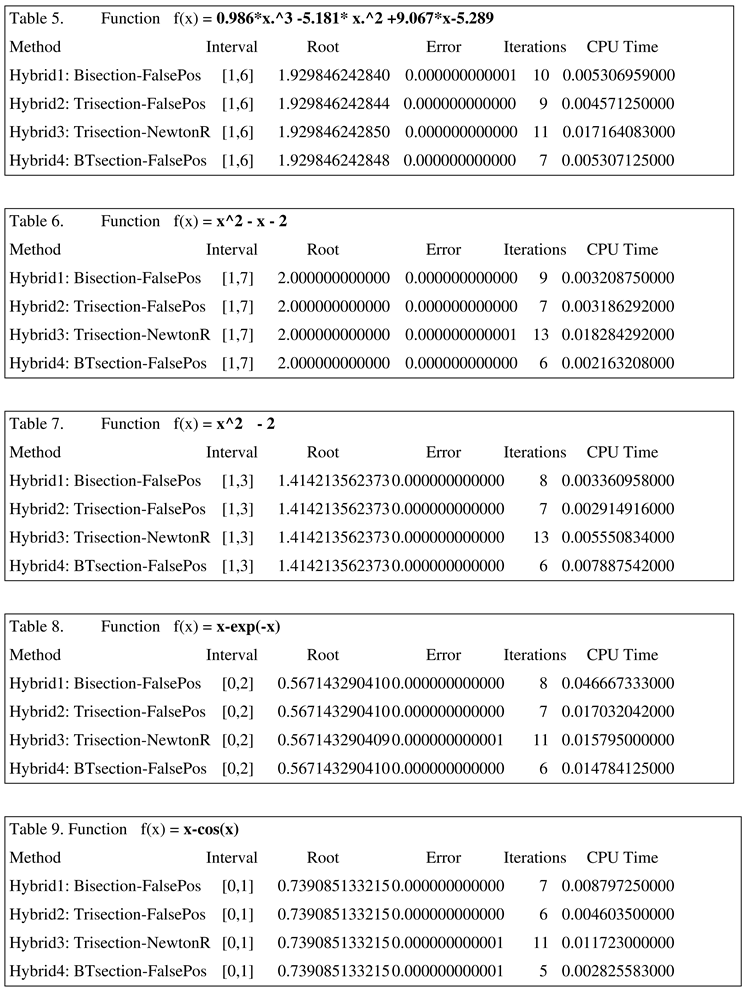

We have seen that in one case algorithms 2 and 3, and in the other two cases algorithms 1 and 4, come out ahead because they solve the problem in one iteration and use the least CPU time. The dilemma is to determine which algorithm will work satisfactorily on the majority of cases, if not all. We have to consider not only this function but other functions as well, and not one interval but other related intervals as well. Consider next the examples in Tables 5 to 9, where none of the four algorithms finds the approximation in one iteration. These examples use different functions and different initial intervals, [1, 6], [1, 7], [1, 8], and even [0, 1]. In all of them, algorithm 4 has the lowest number of iterations and the lowest CPU time. We have tested a number of other examples as well and observed the same behavior, which supports the heuristic that algorithm 4 may be more efficient than the others. In all the ensuing examples in this paper, the halting upper bound is 40 iterations, and the acceptable root is approximated to 12 decimal digits.

2.4. Algorithms

2.4.1. Bisection, Regula Falsi, Newton-Raphson algorithm

These algorithms are ubiquitous [1], [2], [3]. We use the Regula Falsi method for the analysis of hybrid algorithms. There are multiple reasons for leaving Newton-Raphson out of this analysis: (1) it requires the function to be differentiable, (2) it depends heavily on the start point, (3) its iterated approximations are not bracketed, and (4) it fails if the start point is not close to the root. Although the False Position method is the preferred partner in hybrid algorithms, it does not fit well in the category of Bisection, Trisection, and BTsection, so we first compare these three sectioning algorithms.

2.4.2. Trisection Algorithm [11].

Bader extended the Bisection algorithm to Trisection in order to create a better algorithm to hybridize with the False Position algorithm. The algorithm is as follows.

Input: function f(x), initial interval [a, b], absolute error eps, and maximum number of iterations n.

Output: approximate root r, bracketing interval [a, b], and number of iterations k.

    for k = 1 to n
        p := (2*a + b)/3;  q := (a + 2*b)/3;
        if |f(p)| < |f(q)|
            r := p
        else
            r := q
        end if;
        if |f(r)| < eps
            return r, a, b, k;
        else if f(a)*f(p) < 0
            b := p;
        else if f(p)*f(q) < 0
            a := p;
            b := q;
        else
            a := q;
        end if;
    end for
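The Trisection listing translates almost line for line into Python. The version below is a minimal sketch of that listing; the default values of `eps` and `imax` are assumptions chosen to match the 12-digit, 40-iteration limits mentioned in the text.

```python
from typing import Callable, Tuple


def trisection(f: Callable[[float], float], a: float, b: float,
               eps: float = 1e-12, imax: int = 40) -> Tuple[float, float, float, int]:
    """Trisection root finder; returns (r, a, b, k).

    Assumes f is continuous on [a, b] with f(a)*f(b) < 0.
    """
    r = a
    for k in range(1, imax + 1):
        p = (2 * a + b) / 3.0          # first trisection point
        q = (a + 2 * b) / 3.0          # second trisection point
        fp, fq = f(p), f(q)
        r = p if abs(fp) < abs(fq) else q
        if abs(f(r)) < eps:            # residual test |f(r)| < eps
            return r, a, b, k
        if f(a) * fp < 0:              # root in [a, p]
            b = p
        elif fp * fq < 0:              # root in [p, q]
            a, b = p, q
        else:                          # root in [q, b]
            a = q
    return r, a, b, imax
```

On x^2 - x - 2 with the interval [1, 4], the first trisection point is exactly 2, so the sketch stops in one iteration, which matches the behavior the text attributes to Trisection on that input.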

2.4.3. BTsection Algorithm

In this paper, we adapt both the Bisection and Trisection algorithms to craft a BTsection algorithm that hybridizes, more heuristically, with the False Position method [14]. The BTsection algorithm incurs no more computational cost than the Trisection algorithm but in general requires fewer iterations to reach an approximate solution. The algorithm is as follows.

Input: function f(x), initial interval [a, b], absolute error eps, and maximum number of iterations imax.

Output: approximate root r, bracketing interval [a, b], and number of iterations i.

    for i = 1:imax
        % bisection step
        r = (a+b)/2;
        if (f(a)*f(r)) < 0
            b = r; r = (a+2*b)/3;
        elseif (f(r)*f(b)) < 0
            a = r; r = (2*a+b)/3;
        else
            return i, r, a, b
        endif
        % trisection step
        if (f(a)*f(r)) < 0
            b = r;
        elseif (f(r)*f(b)) < 0
            a = r;
        else
            return i, r, a, b
        endif
        if |f(r)| < eps
            return i, r, a, b;
        endif
    endfor
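A direct Python transcription of the BTsection listing is sketched below. It follows the listing's control flow as written; the defaults for `eps` and `imax` and the fallback return after the loop are assumptions added so that the function always returns a value.

```python
from typing import Callable, Tuple


def btsection(f: Callable[[float], float], a: float, b: float,
              eps: float = 1e-12, imax: int = 40) -> Tuple[int, float, float, float]:
    """BTsection root finder; returns (i, r, a, b).

    Assumes f is continuous on [a, b] with f(a)*f(b) < 0.
    """
    r = a
    for i in range(1, imax + 1):
        # Bisection step: keep the half that brackets the root, then pick
        # the trisection point of that half nearest to the old midpoint.
        r = (a + b) / 2.0
        if f(a) * f(r) < 0:
            b = r
            r = (a + 2 * b) / 3.0
        elif f(r) * f(b) < 0:
            a = r
            r = (2 * a + b) / 3.0
        else:
            return i, r, a, b          # f(r) == 0: the midpoint is a root
        # Trisection step: shrink the half further around the root.
        if f(a) * f(r) < 0:
            b = r
        elif f(r) * f(b) < 0:
            a = r
        else:
            return i, r, a, b          # f(r) == 0: r is a root
        if abs(f(r)) < eps:
            return i, r, a, b
    return imax, r, a, b
```

On x^2 - x - 2 this sketch stops in one iteration on [1, 3] (the midpoint is the root) and also on [1, 4] (the trisection point of the left half is the root).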

The Trisection algorithm and the new BTsection algorithm are equivalent in per-iteration cost. The following tables show that the BTsection algorithm requires fewer iterations to converge and that it competes successfully with the other algorithms with respect to the number of loop iterations, CPU time, and accuracy of the approximate root. The benchmark functions appear in recent papers in the literature. See Tables 10–15 for a comparison of the performance of the sectioning algorithms. The functions and intervals of definition are generic and not tailored to any particular algorithm. In all the ensuing examples in this paper, the halting upper bound is 40 iterations, and the acceptable root is approximated to 12 decimal digits.

3. Hybrid Algorithms

We now present the recent hybrid algorithms and the newly proposed hybrid algorithm. The existing hybrid algorithms have one thing in common: at each iteration, they compute the bracketing interval for each of the two hybridized algorithms and then compute the interval common to both for use at the next iteration. This incurs an extra computation step. The new algorithm needs no such computation, because the common interval is readily available.

First we describe the original hybrid algorithm, Hybrid1, which is based on the classical Bisection and False Position algorithms. Since the classical algorithms can be found in any textbook, they are not described here.

In the Hybrid1 algorithm, at each iteration the more promising of the Bisection and False Position approximate roots is selected, and the common interval is computed for the next iteration. This curtails unnecessary iterations in either method. Hybrid1 was succeeded by more efficient algorithms that use the Trisection method in place of Bisection: Hybrid2 and Hybrid3 [12]. These algorithms led us to look for further heuristics and to design a new blended algorithm, Hybrid4, which is more efficient than the previous three hybrid algorithms. All four algorithms are described here for reference. For readability, prefixed variables use the symbols b for Bisection, p for False Position, t for Trisection, and bt for BTsection. Variables without a prefix are the algorithm's local working variables.

Hybrid1: Bisection and False Position Algorithm [10]

Input: f, [a, b], maxIterations

Output: root r, number of iterations k, error of approximation, bracketing interval [ak+1, bk+1]

    // initialize
    k = 0; a1 = a; b1 = b
    // initialize bounded interval for bisection and false position
    pak+1 = bak+1 = a1;  pbk+1 = bbk+1 = b1
    repeat
        pak+1 = bak+1 = ak;  pbk+1 = bbk+1 = bk
        compute the mid point the error

Hybrid2: Trisection and False Position Algorithm [11]

This function implements a blend of the trisection and false position methods.

Input: the function f; the interval [a, b], where f(a)f(b) < 0 and the root lies in [a, b]; the absolute error eps.

Output: the root x, the value f(x), the number of iterations n, and the interval [a, b] in which the root lies.

    n := 0; a1 := a; a2 := a; b1 := b; b2 := b
    while true do
        n := n + 1
        xT1 := (b + 2*a)/3
        xT2 := (2*b + a)/3
        xF := a - (f(a)*(b - a))/(f(b) - f(a))
        x := xT1
        if |f(xT2)| < |f(x)|
            x := xT2
        end if
        if |f(xF)| < |f(x)|
            x := xF
        end if
        if |f(x)| <= eps
            return x, f(x), n, a, b
        end if
        if f(a)*f(xT1) < 0
            b1 := xT1
        else if f(xT1)*f(xT2) < 0
            a1 := xT1
            b1 := xT2
        else
            a1 := xT2
        end if
        if f(a)*f(xF) < 0
            b2 := xF
        else
            a2 := xF
        end if
        a := max(a1, a2); b := min(b1, b2)
    end while
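The Hybrid2 listing can be sketched in Python as follows. This is a reading of the pseudocode, not the reference implementation from [11]; the iteration cap `imax` is an assumption added here so that the loop always terminates.

```python
from typing import Callable, Tuple


def hybrid2(f: Callable[[float], float], a: float, b: float,
            eps: float = 1e-12, imax: int = 40
            ) -> Tuple[float, float, int, float, float]:
    """Trisection + False Position hybrid; returns (x, f(x), n, a, b).

    Assumes f is continuous on [a, b] with f(a)*f(b) < 0.
    """
    a1 = a2 = a
    b1 = b2 = b
    x = a
    for n in range(1, imax + 1):
        fa, fb = f(a), f(b)
        xT1 = (b + 2 * a) / 3.0                 # trisection points
        xT2 = (2 * b + a) / 3.0
        xF = a - fa * (b - a) / (fb - fa)       # false position point
        # Candidate with the smallest residual (first one wins on ties).
        x = min((xT1, xT2, xF), key=lambda t: abs(f(t)))
        if abs(f(x)) <= eps:
            return x, f(x), n, a, b
        # Bracketing interval [a1, b1] from the trisection step.
        if fa * f(xT1) < 0:
            b1 = xT1
        elif f(xT1) * f(xT2) < 0:
            a1, b1 = xT1, xT2
        else:
            a1 = xT2
        # Bracketing interval [a2, b2] from the false position step.
        if fa * f(xF) < 0:
            b2 = xF
        else:
            a2 = xF
        # Common interval: intersection of the two brackets.
        a, b = max(a1, a2), min(b1, b2)
    return x, f(x), imax, a, b
```

The last line of the loop is the intersection step that the text identifies as the extra work shared by the earlier hybrids.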

Hybrid3: Trisection and Newton-Raphson Algorithm

This algorithm follows the same lines as Hybrid2, with two differences: (1) instead of the false position method it uses the Newton-Raphson algorithm, which requires the function to be differentiable; (2) the hybridization step improves the iteration count and accuracy, in that the common interval in each iteration is computed by analyzing five function values, which are then mapped back to parameter values for the optimal interval.

The algorithm is as follows.

Input: function f(x), initial interval [a, b], absolute error eps, and maximum number of iterations n.

Output: root r and number of iterations k.

    df(x) := f'(x);
    for k = 1:n
        p := (2*a + b)/3;
        q := (a + 2*b)/3;
        if |f(p)| < |f(q)|
            then r := p - f(p)/df(p);
            else r := q - f(q)/df(q);
        end if;
        if |f(r)| < eps
            then return r, k;
        else
            find fv := {f(a), f(b), f(r), f(p), f(q)};
            a := xa, where f(xa) is the largest negative value in fv;
            b := xb, where f(xb) is the smallest positive value in fv;
        end if;
    end for
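A possible Python reading of the Hybrid3 listing is sketched below. Several details are assumptions made here rather than statements from [12]: the derivative `df` is passed in explicitly, the new endpoints are reordered so that a <= b even when f is decreasing, and `df` is assumed to be nonzero at the trisection points.

```python
from typing import Callable, Tuple


def hybrid3(f: Callable[[float], float], df: Callable[[float], float],
            a: float, b: float, eps: float = 1e-12,
            imax: int = 40) -> Tuple[float, int]:
    """Trisection + Newton-Raphson hybrid; returns (r, k).

    Assumes f is differentiable with f(a)*f(b) < 0 on [a, b].
    """
    r = a
    for k in range(1, imax + 1):
        p = (2 * a + b) / 3.0
        q = (a + 2 * b) / 3.0
        # Newton step from the trisection point with the smaller residual.
        s = p if abs(f(p)) < abs(f(q)) else q
        r = s - f(s) / df(s)
        if abs(f(r)) < eps:
            return r, k
        # Among the five points, pick the one with the largest negative
        # value and the one with the smallest positive value of f.
        pts = [a, b, r, p, q]
        neg = [x for x in pts if f(x) < 0]
        pos = [x for x in pts if f(x) > 0]
        xa = max(neg, key=f)
        xb = min(pos, key=f)
        a, b = min(xa, xb), max(xa, xb)   # assumption: keep a <= b
    return r, imax
```

Unlike the interval intersection in Hybrid1 and Hybrid2, this selection step inspects function values, which is the extra analysis the text attributes to Hybrid3.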

Recall that these three algorithms share two steps that coordinate the two hybridized methods. At each iteration they determine (1) the more promising of the two approximate roots and (2) the common interval bracketing the approximate root. In Hybrid1 and Hybrid2, the second step reduces to intersecting two intervals so that the common interval contains the approximate root; no function evaluation is involved in the search for the common interval. Hybrid3 searches among five function values to determine the two that define the common interval, and from these two function values it recovers the parameter values that form the common bracketing interval.

New Hybrid Algorithm

The Hybrid4 algorithm provides a more proficient approach to optimization: (1) the BTsection algorithm is used instead of bisection or trisection; (2) it eliminates the computation of the common interval required by the foregoing hybrid algorithms; and (3) no work is needed to determine the better of the two roots. This makes the root approximation more efficient and the common interval readily available. It is based on the common-sense Occam's razor principle [13] (Figure 2). Occam's razor is a heuristic, not a proof: when faced with competing choices, we use the simplest of what we have. It will be shown that Occam's razor works quite well in this case.

Hybrid4.

This algorithm is a blend of the BTsection and False Position methods that finds the iterated approximations with minimal effort.

Input: a0, b0, r0, eps, imax, f

Output: k, ak, bk, rk

    for k = 1:imax
        BTsection iteration step determines
            btak, btbk, btrk from btak-1, btbk-1, btrk-1
        relabel
            btak, btbk, btrk to ak, bk, rk
        False Position iteration step
            input is ak, bk, rk instead of the old pak-1, pbk-1, prk-1
            it determines pak, pbk, prk from ak, bk, rk
            this makes [pak, pbk] the common interval [ak, bk] without any computation;
            using ak, bk, rk instead of the old pak-1, pbk-1, prk-1 makes this step
            more effective for the next approximation
        if |f(prk)| < eps
            return k, pak, pbk, prk
        end
        relabel pak, pbk, prk to qak, qbk, qrk
    endFor
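The Hybrid4 outline can be made concrete as follows. This is one possible reading of the outline, not the authors' code: it chains one BTsection step with one False Position step on the interval that BTsection produces, and it returns early when BTsection lands exactly on a root. The function name, the defaults, and the early-return behavior are assumptions.

```python
from typing import Callable, Tuple


def hybrid4(f: Callable[[float], float], a: float, b: float,
            eps: float = 1e-12, imax: int = 40
            ) -> Tuple[int, float, float, float]:
    """BTsection + False Position hybrid; returns (k, a, b, r).

    Assumes f is continuous on [a, b] with f(a)*f(b) < 0.
    """
    r = a
    for k in range(1, imax + 1):
        # BTsection step, part 1: bisect and move r to a trisection point.
        r = (a + b) / 2.0
        if f(a) * f(r) < 0:
            b = r
            r = (a + 2 * b) / 3.0
        elif f(r) * f(b) < 0:
            a = r
            r = (2 * a + b) / 3.0
        else:
            return k, a, b, r           # midpoint is an exact root
        # BTsection step, part 2: shrink the bracket around the root.
        if f(a) * f(r) < 0:
            b = r
        elif f(r) * f(b) < 0:
            a = r
        else:
            return k, a, b, r           # trisection point is an exact root
        # False Position step on the BTsection interval; its bracket is
        # used directly, so no interval intersection is computed.
        fa, fb = f(a), f(b)
        r = a - fa * (b - a) / (fb - fa)
        fr = f(r)
        if abs(fr) < eps:
            return k, a, b, r
        if fa * fr < 0:
            b = r
        else:
            a = r
    return imax, a, b, r
```

Because the False Position step starts from the interval that BTsection has already narrowed, its output bracket is contained in the BTsection bracket, which is why no separate common-interval computation appears in this sketch.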

In summary, the iteration steps of the foregoing algorithms are as follows.

Hybrid1

    [bak, bbk, brk] = Bisection(ak-1, bk-1, rk-1, f)
    [pak, pbk, prk] = FalsePosition(ak-1, bk-1, rk-1, f)

The results of the hybridization step are: rk is the better of brk and prk; [ak, bk] is the interval common to [bak, bbk] and [pak, pbk]; rk belongs to [ak, bk].

Hybrid2

    [tak, tbk, trk] = Trisection(ak-1, bk-1, rk-1, f)
    [pak, pbk, prk] = FalsePosition(ak-1, bk-1, rk-1, f)

The outcomes of hybridization are: rk is the better of trk and prk; [ak, bk] is the interval common to [tak, tbk] and [pak, pbk]; rk belongs to [ak, bk].

Hybrid3

    [tak, tbk, trk] = Trisection(ak-1, bk-1, rk-1, f)
    [nak, nbk, nrk] = NewtonRaphson(ak-1, bk-1, rk-1, f)

The upshot of hybridization is: rk is the better of trk and nrk; [ak, bk] is derived from [tak, tbk], [nak, nbk], and nrk by analyzing {f(tak), f(tbk), f(nak), f(nbk), f(nrk)} and then selecting the endpoints from {tak, tbk, nak, nbk, nrk}; rk belongs to [ak, bk].

Hybrid4

    [a, b, r] = BTsection(ak-1, bk-1, rk-1, f)
    [pak, pbk, prk] = FalsePosition(a, b, r, f)

The conclusion of hybridization is that no extra calculation is needed: [ak, bk] is the same interval as [pak, pbk], and rk is the same as prk, the desired root. In our experiments, this algorithm needs the fewest iterations and gives the most accurate approximate root among the four hybrids.