Submitted: 28 December 2023

Posted: 29 December 2023


Abstract

Keywords:

1. Introduction

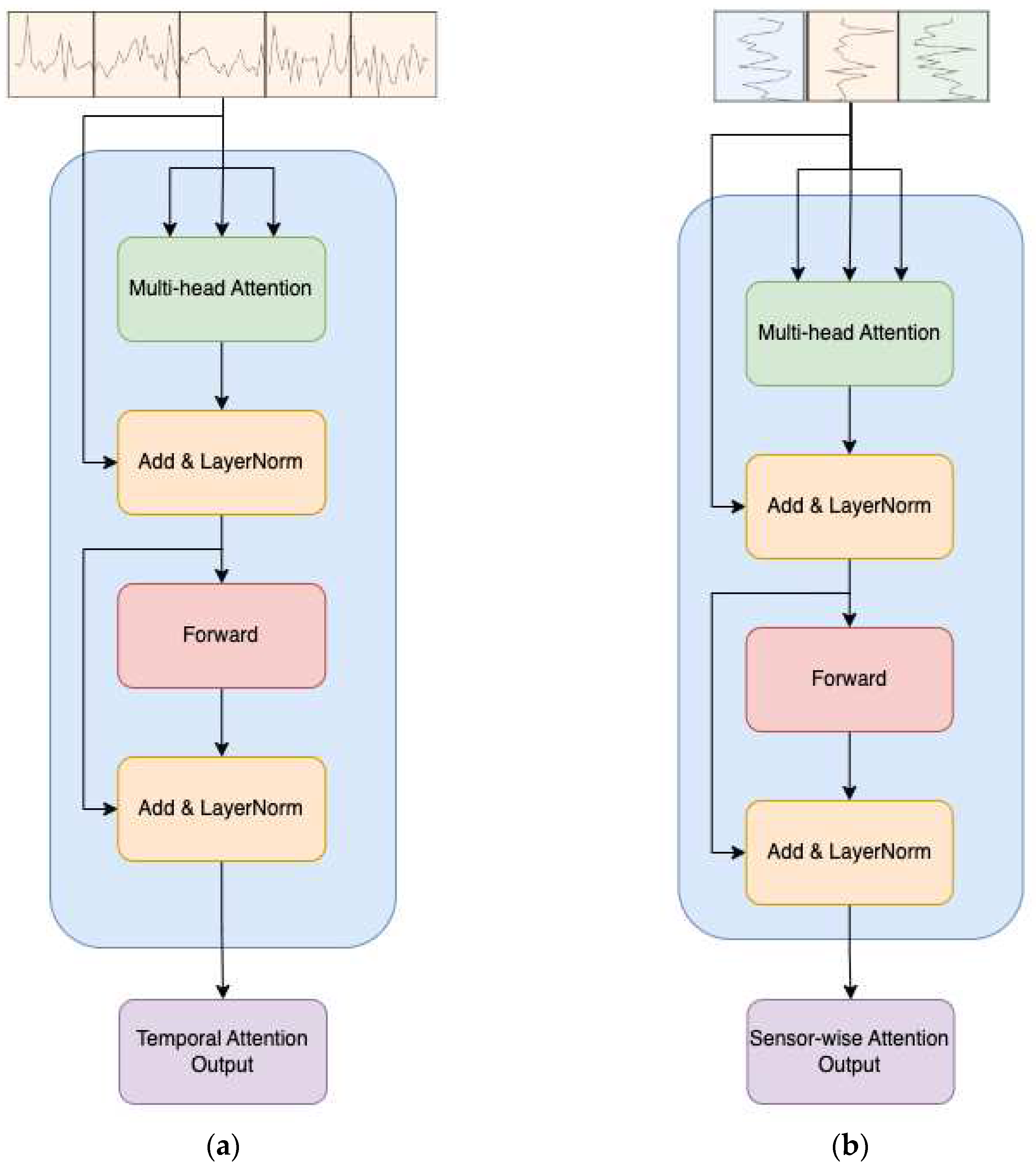

- We incorporate a two-stage attention mechanism capable of capturing both temporal attention and sensor-wise variable attention, representing the first successful application of such a mechanism to turbofan engine remaining useful life (RUL) prediction.

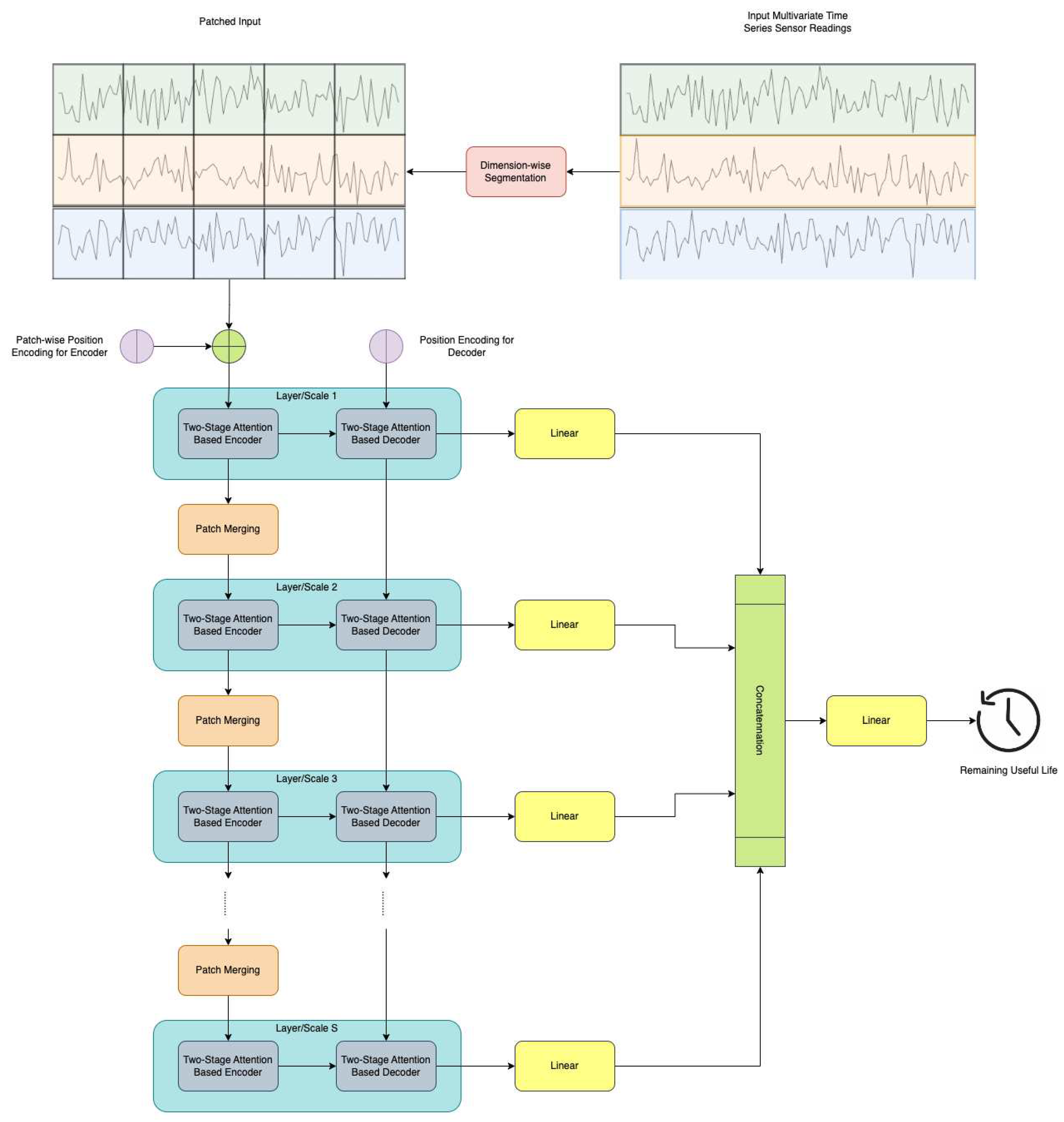

- We propose a hierarchical encoder-decoder framework to capture temporal information across multiple time scales. While multiscale modeling has shown superior performance in numerous computer vision and time series forecasting tasks [43,46], our work is the first successful application of multiscale prediction to RUL estimation.

- Through experiments on the four C-MAPSS turbofan engine datasets, we demonstrate that our model outperforms existing state-of-the-art methods on six of the eight dataset-metric combinations (RMSE and Score on FD001-FD004) in our comparison.

2. Methodology

- Dimension-wise segmentation and embedding (section 2.1): Each sensor's univariate time series is segmented into disjoint patches of a fixed length. Each patch is embedded using an affine transformation combined with a positional embedding [33].

- Encoder (section 2.2): Adapting the traditional Transformer encoder [33], we integrate a two-stage attention mechanism that captures both temporal and sensor-wise dependencies.

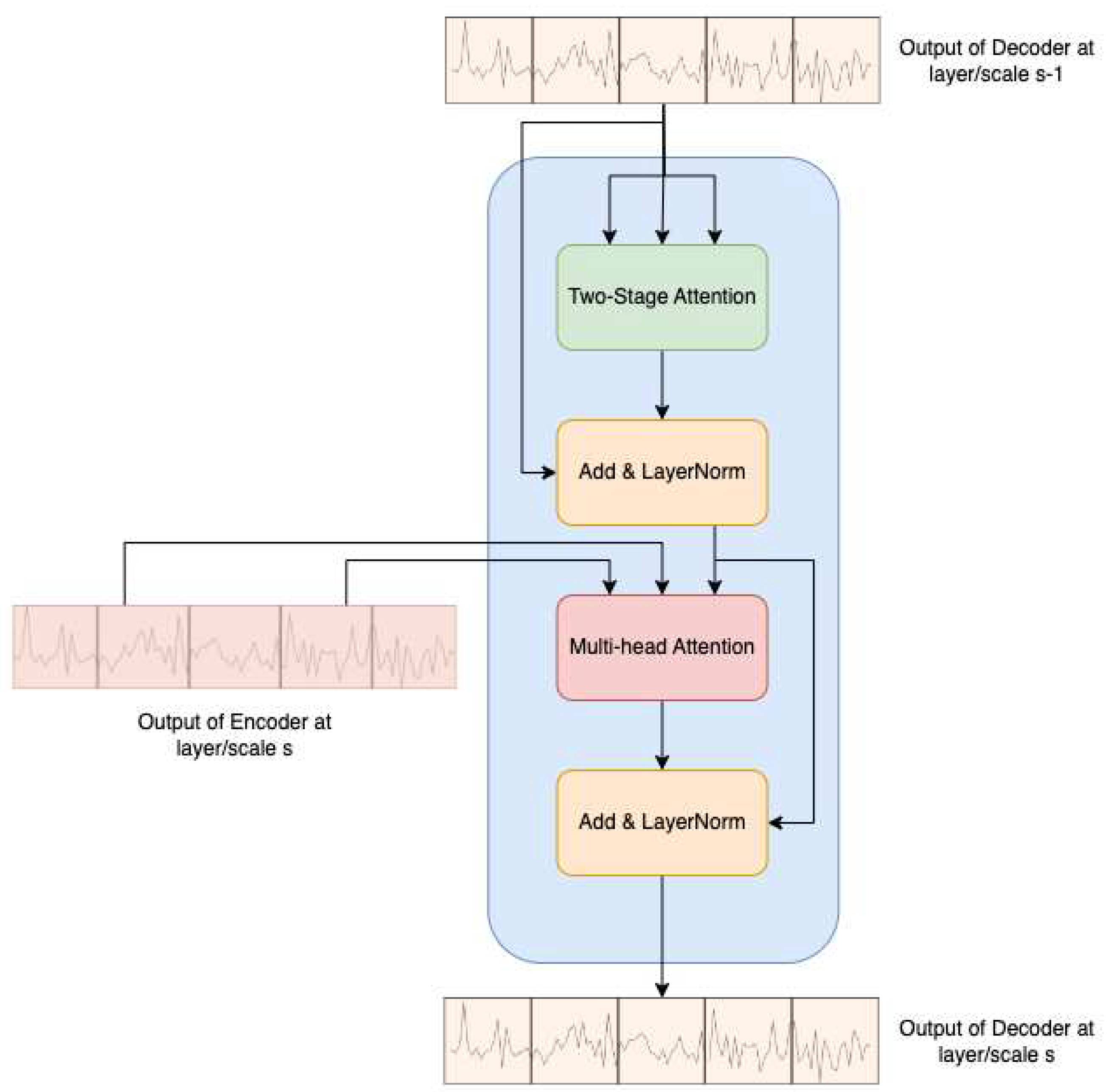

- Decoder (section 2.4): Adapting the conventional Transformer decoder [33], we likewise integrate a two-stage attention mechanism that captures both temporal and sensor-wise dependencies.

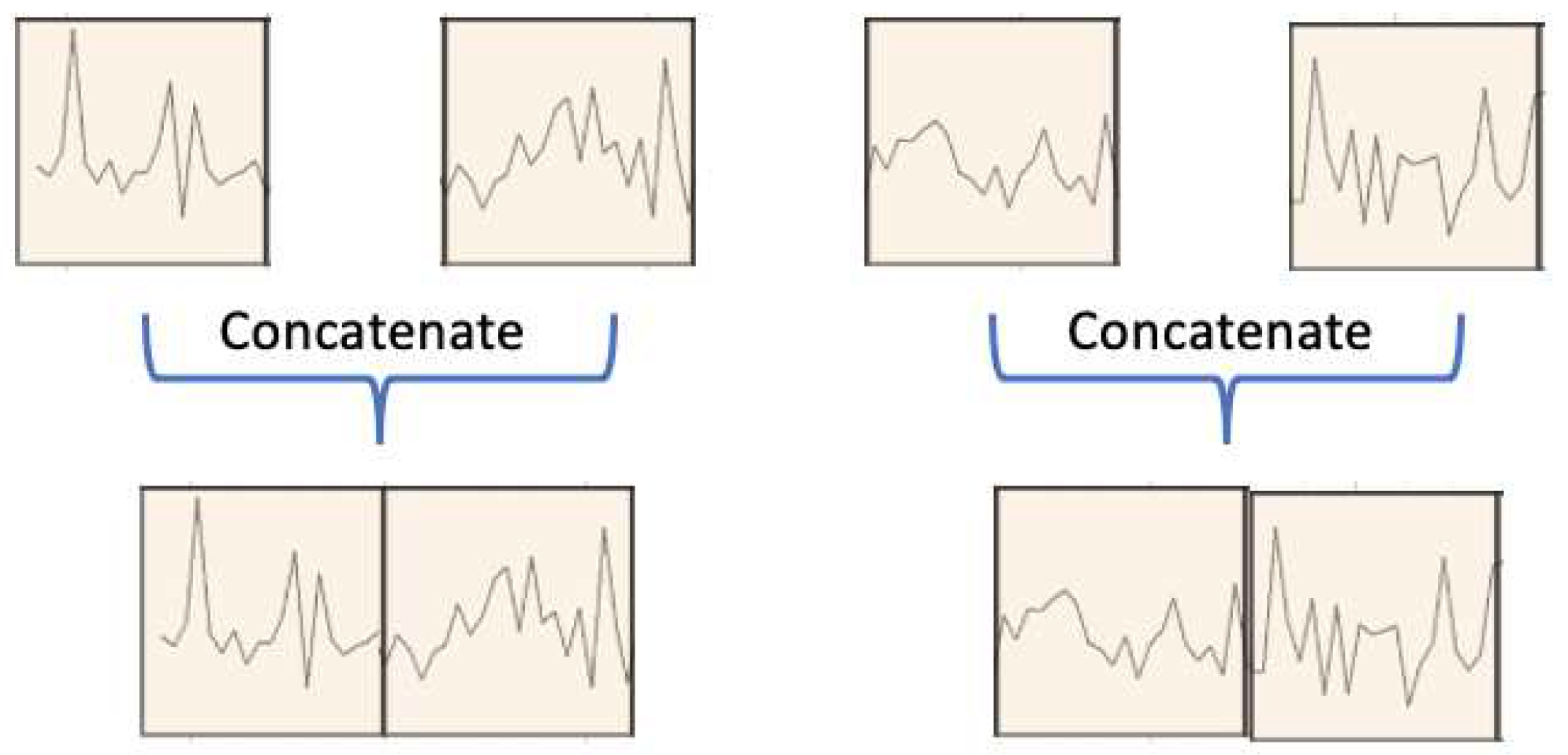

- Patch merging (section 2.3): Neighboring patches of each sensor are merged in the temporal domain to form a coarser patch segmentation, enabling the model to capture temporal information at multiple scales.

- Prediction layer (section 2.5): The final RUL prediction is produced by a multi-layer perceptron (MLP) applied to the concatenated information from all time scales.

2.1. Dimension-Wise Segmentation and Embedding
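
The segmentation and embedding step summarized in the methodology overview can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code:

- The patch length `seg_len = 8` is hypothetical.
- The 14 input sensors are an assumed sensor selection.
- The window length 32 and embedding width 128 come from the FD001 column of the tuned-hyperparameter table.
- The positional term is the sinusoidal encoding of [33], indexed by patch position and shared across sensors (also an assumption).

```python
import numpy as np

def sinusoidal_positions(n_pos, d_model):
    """Sinusoidal positional encoding of [33]: sin on even, cos on odd dimensions."""
    pos = np.arange(n_pos)[:, None]
    two_i = np.arange(0, d_model, 2)[None, :]
    angles = pos / np.power(10000.0, two_i / d_model)
    pe = np.zeros((n_pos, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

def segment_and_embed(x, seg_len, W, b):
    """x: (T, D) window of T cycles and D sensors.
    Returns (D, T // seg_len, d_model): one embedding per (sensor, patch)."""
    T, D = x.shape
    if T % seg_len != 0:
        raise ValueError("window length must be a multiple of seg_len")
    n_seg = T // seg_len
    # Split each sensor's univariate series into disjoint, consecutive patches.
    patches = x.T.reshape(D, n_seg, seg_len)
    # Affine map shared by all patches, plus a positional term shared by all sensors.
    return patches @ W + b + sinusoidal_positions(n_seg, W.shape[1])

rng = np.random.default_rng(0)
T, D, seg_len, d_model = 32, 14, 8, 128  # seg_len and D are hypothetical
x = rng.standard_normal((T, D))
W = rng.standard_normal((seg_len, d_model)) / np.sqrt(seg_len)  # placeholder for learned weights
b = np.zeros(d_model)
h = segment_and_embed(x, seg_len, W, b)
print(h.shape)  # (14, 4, 128)
```

Each of the 14 sensors yields 32 / 8 = 4 patch embeddings. Downstream attention layers operate on this (sensor, patch, feature) grid.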

2.2. Two-Stage Attention Based Encoder
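
The two-stage attention described for the encoder can be illustrated with the simplified NumPy sketch below. It works in two stages:

- Stage one applies self-attention along the patch (time) axis, separately for each sensor.
- Stage two applies self-attention along the sensor axis, separately for each patch position.

The sketch makes several assumptions:

- A single attention head is used, matching the FD001 setting in the tuned-hyperparameter table.
- Each stage uses a residual connection followed by a parameter-free layer normalization.
- Feed-forward sublayers are omitted.

The function names and the randomly initialized weights are hypothetical.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(h, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over axis -2 of h (..., n, d)."""
    q, k, v = h @ Wq, h @ Wk, h @ Wv
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v

def layer_norm(h, eps=1e-5):
    """Parameter-free layer normalization over the feature axis."""
    mu = h.mean(axis=-1, keepdims=True)
    var = h.var(axis=-1, keepdims=True)
    return (h - mu) / np.sqrt(var + eps)

def two_stage_attention(h, temporal_w, sensor_w):
    """h: (D, n_seg, d_model). Returns an array of the same shape."""
    # Stage 1, temporal: within each sensor, every patch attends to that sensor's patches.
    h = layer_norm(h + self_attention(h, *temporal_w))
    # Stage 2, sensor-wise: at each patch position, every sensor attends to all sensors.
    hs = np.swapaxes(h, 0, 1)  # (n_seg, D, d_model)
    hs = layer_norm(hs + self_attention(hs, *sensor_w))
    return np.swapaxes(hs, 0, 1)  # back to (D, n_seg, d_model)

rng = np.random.default_rng(0)
D, n_seg, d_model = 14, 4, 64

def make_weights():
    """Random query, key, and value projections (placeholders for learned weights)."""
    return tuple(rng.standard_normal((d_model, d_model)) / np.sqrt(d_model) for _ in range(3))

h = rng.standard_normal((D, n_seg, d_model))
out = two_stage_attention(h, make_weights(), make_weights())
print(out.shape)  # (14, 4, 64)
```

The sensor-wise stage has no information about sensor ordering. Permuting the input sensors therefore just permutes the output, which gives a quick check that stage two attends along the intended axis.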

2.3. Patch Merging
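
Patch merging between scales can be sketched as follows. The sketch assumes that each group of `win` neighboring patches of a sensor is concatenated feature-wise and linearly projected back to the embedding width. This is an assumed design, not necessarily the authors' exact layer. Several further simplifications apply:

- The merge window `win = 2` is an assumption.
- A single projection `W_merge` is reused for brevity.
- The attention layers that would run at each scale are left out.

Three scales with 4, 2, and 1 patches per sensor mirror the three layers/scales tuned for FD001.

```python
import numpy as np

def merge_patches(h, W_merge, win=2):
    """Merge `win` neighboring patches of every sensor into one coarser patch.

    h: (D, n_seg, d_model), W_merge: (win * d_model, d_model).
    Returns (D, n_seg // win, d_model).
    """
    D, n_seg, d_model = h.shape
    if n_seg % win != 0:
        raise ValueError("n_seg must be divisible by win in this sketch")
    # Row-major reshape concatenates patches j*win, ..., j*win + win - 1 feature-wise.
    grouped = h.reshape(D, n_seg // win, win * d_model)
    # Linear projection back to d_model so every scale has the same width.
    return grouped @ W_merge

rng = np.random.default_rng(0)
D, n_seg, d_model = 14, 4, 64
# One projection reused at both merges for brevity; a real model would learn one per layer.
W_merge = rng.standard_normal((2 * d_model, d_model)) / np.sqrt(2 * d_model)
scale1 = rng.standard_normal((D, n_seg, d_model))  # finest scale: 4 patches per sensor
scale2 = merge_patches(scale1, W_merge)            # 2 patches per sensor
scale3 = merge_patches(scale2, W_merge)            # 1 patch per sensor
print(scale1.shape, scale2.shape, scale3.shape)  # (14, 4, 64) (14, 2, 64) (14, 1, 64)
```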

2.4. Two-Stage Attention Based Decoder

2.5. Prediction Layer
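
The prediction layer can be sketched as follows. The sketch assumes the per-scale outputs are flattened, concatenated into one feature vector, and passed through a two-layer MLP with a ReLU hidden layer of hypothetical width 32. The example uses illustrative sizes: three scales with 4, 2, and 1 patches per sensor, 14 sensors, and width 64. The weights are random placeholders.

```python
import numpy as np

def predict_rul(scale_outputs, W1, b1, w2, b2):
    """Concatenate the outputs of every scale and map them to one RUL value.

    scale_outputs: list of arrays, scale s of shape (D, n_seg_s, d_model).
    W1: (n_features, n_hidden), b1: (n_hidden,), w2: (n_hidden,), b2: float.
    """
    z = np.concatenate([o.ravel() for o in scale_outputs])  # multiscale feature vector
    a = np.maximum(0.0, z @ W1 + b1)                         # ReLU hidden layer
    return float(a @ w2 + b2)                                # scalar RUL estimate

rng = np.random.default_rng(0)
D, d_model, n_hidden = 14, 64, 32
outputs = [rng.standard_normal((D, n, d_model)) for n in (4, 2, 1)]
n_features = sum(o.size for o in outputs)  # 14 * (4 + 2 + 1) * 64 = 6272
W1 = rng.standard_normal((n_features, n_hidden)) / np.sqrt(n_features)
b1 = np.zeros(n_hidden)
w2 = rng.standard_normal(n_hidden) / np.sqrt(n_hidden)
print(predict_rul(outputs, W1, b1, w2, b2=0.0))
```

Flattening keeps every (sensor, patch) feature from every scale. This is the most literal reading of "concatenating information across different time scales"; pooling within each scale would be a lighter-weight alternative.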

3. Experimental Results and Analysis

3.1. Data and Preprocessing
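
A common way to prepare C-MAPSS training data is sketched below under stated assumptions. Each engine's run-to-failure record is cut into overlapping windows whose length is the tuned "Time Series Length" hyperparameter. Each window is labeled with the RUL at its last cycle. The following are assumptions:

- The cap `max_rul = 125`, which gives a piecewise-linear target of a kind used in many C-MAPSS studies.
- The toy two-sensor engine and the function names.

Feature normalization is omitted.

```python
def piecewise_rul(n_cycles, max_rul=125):
    """RUL label for every cycle of one run-to-failure engine.

    The true RUL at cycle t (0-based) is n_cycles - 1 - t; it is capped at
    max_rul (assumed value) to form a piecewise-linear target.
    """
    return [min(n_cycles - 1 - t, max_rul) for t in range(n_cycles)]

def sliding_windows(series, labels, window):
    """Cut one engine's series (a list of per-cycle sensor vectors) into overlapping
    windows of `window` cycles, each labeled with the RUL at its last cycle."""
    xs, ys = [], []
    for end in range(window, len(series) + 1):
        xs.append(series[end - window:end])
        ys.append(labels[end - 1])
    return xs, ys

# Toy engine: 40 cycles, 2 sensors; window length 32 as tuned for FD001.
series = [[0.1 * t, 1.0 - 0.01 * t] for t in range(40)]
labels = piecewise_rul(len(series))
xs, ys = sliding_windows(series, labels, window=32)
print(len(xs), ys[0], ys[-1])  # 9 8 0
```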

3.2. Hyperparameter Tuning

3.3. Evaluation Metric

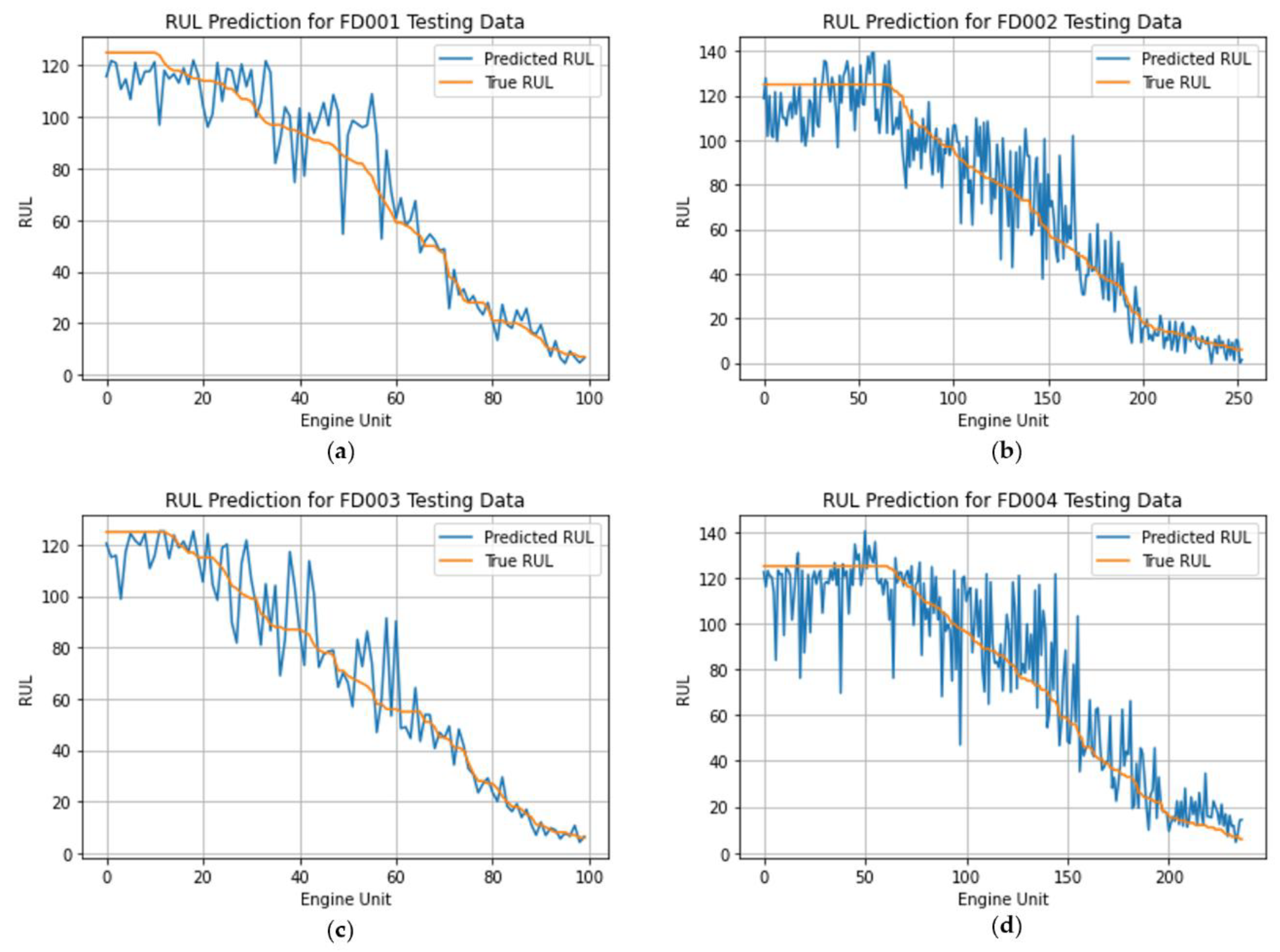
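
The results table reports RMSE and Score. The sketch below assumes that Score is the asymmetric scoring function introduced with the C-MAPSS simulation data [48]. With error `d = predicted RUL - true RUL`, each engine contributes a penalty:

- `exp(-d/13) - 1` for early predictions (`d < 0`).
- `exp(d/10) - 1` for late predictions (`d >= 0`).

Late predictions, which are riskier for maintenance, are therefore penalized more. The function name `phm08_score` is hypothetical.

```python
import math

def rmse(pred, true):
    """Root-mean-square error between predicted and true RUL values."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))

def phm08_score(pred, true):
    """Asymmetric scoring function with d = predicted - true RUL.
    Early predictions (d < 0) cost exp(-d/13) - 1, late ones exp(d/10) - 1."""
    total = 0.0
    for p, t in zip(pred, true):
        d = p - t
        total += (math.exp(-d / 13) - 1) if d < 0 else (math.exp(d / 10) - 1)
    return total

pred, true = [10, 20], [12, 18]  # one early and one late prediction, each off by 2 cycles
print(rmse(pred, true))          # 2.0
print(phm08_score(pred, true))   # the late error contributes more than the early one
```

Unlike RMSE, the Score is a sum rather than a mean. It therefore grows with the number of test engines, which is one reason Score values for FD002 and FD004 are much larger than for FD001 and FD003.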

3.4. RUL Prediction

3.5. Ablation Study

- STAR: The proposed model with a two-stage attention mechanism and hierarchical encoder-decoder.

- STAR-Temporal: The proposed model with temporal attention only and a hierarchical encoder-decoder.

- STAR-SingleScale: The proposed model with the two-stage attention mechanism and hierarchical encoder-decoder, but without the patch merging step between layers/scales depicted in Figure 1.

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Peng, Y.; Dong, M.; Zuo, M.J. Current Status of Machine Prognostics in Condition-Based Maintenance: A Review. Int. J. Adv. Manuf. Technol. 2010, 50, 297–313.

2. Fan, Z.; Chang, K.; Ji, R.; Chen, G. Data Fusion for Optimal Condition-Based Aircraft Fleet Maintenance with Predictive Analytics. J. Adv. Inf. Fusion 2023, in press.

3. Si, X.-S.; Wang, W.; Hu, C.-H.; Zhou, D.-H.; Pecht, M.G. Remaining Useful Life Estimation Based on a Nonlinear Diffusion Degradation Process. IEEE Trans. Reliab. 2012, 61, 50–67.

4. Bolander, N.; Qiu, H.; Eklund, N.; Hindle, E.; Rosenfeld, T. Physics-Based Remaining Useful Life Prediction for Aircraft Engine Bearing Prognosis. Annu. Conf. PHM Soc. 2009, 1.

5. Roemer, M.J.; Kacprzynski, G.J. Advanced Diagnostics and Prognostics for Gas Turbine Engine Risk Assessment. In Proceedings of the 2000 IEEE Aerospace Conference (Cat. No.00TH8484); March 2000; Vol. 6; pp. 345–353.

6. Luo, J.; Namburu, M.; Pattipati, K.; Qiao, L.; Kawamoto, M.; Chigusa, S. Model-Based Prognostic Techniques [Maintenance Applications]. In Proceedings of AUTOTESTCON 2003, IEEE Systems Readiness Technology Conference; September 2003; pp. 330–340.

7. Ray, A.; Tangirala, S. Stochastic Modeling of Fatigue Crack Dynamics for On-Line Failure Prognostics. IEEE Trans. Control Syst. Technol. 1996, 4, 443–451.

8. Li, Y.; Billington, S.; Zhang, C.; Kurfess, T.; Danyluk, S.; Liang, S. Adaptive Prognostics for Rolling Element Bearing Condition. Mech. Syst. Signal Process. 1999, 13, 103–113.

9. Kacprzynski, G.J.; Sarlashkar, A.; Roemer, M.J.; Hess, A.; Hardman, B. Predicting Remaining Life by Fusing the Physics of Failure Modeling with Diagnostics. JOM 2004, 56, 29–35.

10. Oppenheimer, C.H.; Loparo, K.A. Physically Based Diagnosis and Prognosis of Cracked Rotor Shafts. In Proceedings of the Component and Systems Diagnostics, Prognostics, and Health Management II; SPIE, 16 July 2002; Vol. 4733; pp. 122–132.

11. Giantomassi, A.; Ferracuti, F.; Benini, A.; Ippoliti, G.; Longhi, S.; Petrucci, A. Hidden Markov Model for Health Estimation and Prognosis of Turbofan Engines. American Society of Mechanical Engineers Digital Collection, 12 June 2012; pp. 681–689.

12. Lin, J.; Liao, G.; Chen, M.; Yin, H. Two-Phase Degradation Modeling and Remaining Useful Life Prediction Using Nonlinear Wiener Process. Comput. Ind. Eng. 2021, 160, 107533.

13. Yu, W.; Tu, W.; Kim, I.Y.; Mechefske, C. A Nonlinear-Drift-Driven Wiener Process Model for Remaining Useful Life Estimation Considering Three Sources of Variability. Reliab. Eng. Syst. Saf. 2021, 212, 107631.

14. Feng, D.; Xiao, M.; Liu, Y.; Song, H.; Yang, Z.; Zhang, L. A Kernel Principal Component Analysis–Based Degradation Model and Remaining Useful Life Estimation for the Turbofan Engine. Adv. Mech. Eng. 2016, 8, 1687814016650169.

15. Lv, Y.; Zheng, P.; Yuan, J.; Cao, X. A Predictive Maintenance Strategy for Multi-Component Systems Based on Components’ Remaining Useful Life Prediction. Mathematics 2023, 11, 3884.

16. Zhang, Y.; Guo, G.; Yang, F.; Zheng, Y.; Zhai, F. Prediction of Tool Remaining Useful Life Based on NHPP-WPHM. Mathematics 2023, 11, 1837.

17. Greitzer, F.L.; Li, W.; Laskey, K.B.; Lee, J.; Purl, J. Experimental Investigation of Technical and Human Factors Related to Phishing Susceptibility. ACM Trans. Soc. Comput. 2021, 4, 8:1–8:48.

18. Li, W.; Lee, J.; Purl, J.; Greitzer, F.; Yousefi, B.; Laskey, K. Experimental Investigation of Demographic Factors Related to Phishing Susceptibility; 2020; ISBN 978-0-9981331-3-3.

19. Li, W.; Finsa, M.M.; Laskey, K.B.; Houser, P.; Douglas-Bate, R. Groundwater Level Prediction with Machine Learning to Support Sustainable Irrigation in Water Scarcity Regions. Water 2023, 15, 3473.

20. Liu, W.; Zou, P.; Jiang, D.; Quan, X.; Dai, H. Computing River Discharge Using Water Surface Elevation Based on Deep Learning Networks. Water 2023, 15, 3759.

21. Fan, Z.; Chang, K.; Raz, A.K.; Harvey, A.; Chen, G. Sensor Tasking for Space Situation Awareness: Combining Reinforcement Learning and Causality. In Proceedings of the 2023 IEEE Aerospace Conference; March 2023; pp. 1–9.

22. Zhou, W. Condition State-Based Decision Making in Evolving Systems: Applications in Asset Management and Delivery. Ph.D. Thesis, George Mason University, Virginia, USA, 2023.

23. Peng, C.; Chen, Y.; Chen, Q.; Tang, Z.; Li, L.; Gui, W. A Remaining Useful Life Prognosis of Turbofan Engine Using Temporal and Spatial Feature Fusion. Sensors 2021, 21, 418.

24. Remadna, I.; Terrissa, S.L.; Zemouri, R.; Ayad, S.; Zerhouni, N. Leveraging the Power of the Combination of CNN and Bi-Directional LSTM Networks for Aircraft Engine RUL Estimation. In Proceedings of the 2020 Prognostics and Health Management Conference (PHM-Besançon); May 2020; pp. 116–121.

25. Hong, C.W.; Lee, C.; Lee, K.; Ko, M.-S.; Kim, D.E.; Hur, K. Remaining Useful Life Prognosis for Turbofan Engine Using Explainable Deep Neural Networks with Dimensionality Reduction. Sensors 2020, 20, 6626.

26. Lundberg, S.M.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates, Inc., 2017; Vol. 30.

27. Rosa, T.G. da; Melani, A.H. de A.; Pereira, F.H.; Kashiwagi, F.N.; Souza, G.F.M. de; Salles, G.M.D.O. Semi-Supervised Framework with Autoencoder-Based Neural Networks for Fault Prognosis. Sensors 2022, 22, 9738.

28. Ji, S.; Han, X.; Hou, Y.; Song, Y.; Du, Q. Remaining Useful Life Prediction of Airplane Engine Based on PCA–BLSTM. Sensors 2020, 20, 4537.

29. Peng, C.; Wu, J.; Wang, Q.; Gui, W.; Tang, Z. Remaining Useful Life Prediction Using Dual-Channel LSTM with Time Feature and Its Difference. Entropy 2022, 24, 1818.

30. Zhao, C.; Huang, X.; Li, Y.; Yousaf Iqbal, M. A Double-Channel Hybrid Deep Neural Network Based on CNN and BiLSTM for Remaining Useful Life Prediction. Sensors 2020, 20, 7109.

31. Wang, X.; Huang, T.; Zhu, K.; Zhao, X. LSTM-Based Broad Learning System for Remaining Useful Life Prediction. Mathematics 2022, 10, 2066.

32. Yu, W.; Kim, I.Y.; Mechefske, C. Remaining Useful Life Estimation Using a Bidirectional Recurrent Neural Network Based Autoencoder Scheme. Mech. Syst. Signal Process. 2019, 129, 764–780.

33. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates, Inc., 2017; Vol. 30.

34. Mo, Y.; Wu, Q.; Li, X.; Huang, B. Remaining Useful Life Estimation via Transformer Encoder Enhanced by a Gated Convolutional Unit. J. Intell. Manuf. 2021, 32, 1997–2006.

35. Ren, L.; Wang, H.; Huang, G. DLformer: A Dynamic Length Transformer-Based Network for Efficient Feature Representation in Remaining Useful Life Prediction. IEEE Trans. Neural Netw. Learn. Syst. 2023, 1–11.

36. Zhang, Y.; Su, C.; Wu, J.; Liu, H.; Xie, M. Trend-Augmented and Temporal-Featured Transformer Network with Multi-Sensor Signals for Remaining Useful Life Prediction. Reliab. Eng. Syst. Saf. 2024, 241, 109662.

37. Li, Y.; Chen, Y.; Shao, H.; Zhang, H. A Novel Dual Attention Mechanism Combined with Knowledge for Remaining Useful Life Prediction Based on Gated Recurrent Units. Reliab. Eng. Syst. Saf. 2023, 239, 109514.

38. Peng, H.; Jiang, B.; Mao, Z.; Liu, S. Local Enhancing Transformer With Temporal Convolutional Attention Mechanism for Bearings Remaining Useful Life Prediction. IEEE Trans. Instrum. Meas. 2023, 72, 1–12.

39. Xiang, F.; Zhang, Y.; Zhang, S.; Wang, Z.; Qiu, L.; Choi, J.-H. Bayesian Gated-Transformer Model for Risk-Aware Prediction of Aero-Engine Remaining Useful Life. Expert Syst. Appl. 2024, 238, 121859.

40. Fan, Z.; Li, W.; Chang, K.-C. A Bidirectional Long Short-Term Memory Autoencoder Transformer for Remaining Useful Life Estimation. Mathematics 2023, 11, 4972.

41. Xue, W.; Zhou, T.; Wen, Q.; Gao, J.; Ding, B.; Jin, R. Make Transformer Great Again for Time Series Forecasting: Channel Aligned Robust Dual Transformer. 2023.

42. Nie, Y.; Nguyen, N.H.; Sinthong, P.; Kalagnanam, J. A Time Series Is Worth 64 Words: Long-Term Forecasting with Transformers. 2023.

43. Zhang, Y.; Yan, J. Crossformer: Transformer Utilizing Cross-Dimension Dependency for Multivariate Time Series Forecasting. 29 September 2022.

44. Zhang, Z.; Song, W.; Li, Q. Dual-Aspect Self-Attention Based on Transformer for Remaining Useful Life Prediction. IEEE Trans. Instrum. Meas. 2022, 71, 1–11.

45. Chadha, G.S.; Shah, S.R.B.; Schwung, A.; Ding, S.X. Shared Temporal Attention Transformer for Remaining Useful Lifetime Estimation. 2022, 10.

46. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. 2021; pp. 10012–10022.

47. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. 2019.

48. Saxena, A.; Goebel, K.; Simon, D.; Eklund, N. Damage Propagation Modeling for Aircraft Engine Run-to-Failure Simulation. In Proceedings of the 2008 International Conference on Prognostics and Health Management; October 2008; pp. 1–9.

49. Zheng, S.; Ristovski, K.; Farahat, A.; Gupta, C. Long Short-Term Memory Network for Remaining Useful Life Estimation. In Proceedings of the 2017 IEEE International Conference on Prognostics and Health Management (ICPHM); June 2017; pp. 88–95.

50. Wu, Q.; Ding, K.; Huang, B. Approach for Fault Prognosis Using Recurrent Neural Network. J. Intell. Manuf. 2020, 31, 1621–1633.

51. Sateesh Babu, G.; Zhao, P.; Li, X.-L. Deep Convolutional Neural Network Based Regression Approach for Estimation of Remaining Useful Life. In Proceedings of the Database Systems for Advanced Applications; Navathe, S.B., Wu, W., Shekhar, S., Du, X., Wang, X.S., Xiong, H., Eds.; Springer International Publishing: Cham, 2016; pp. 214–228.

52. Wang, J.; Wen, G.; Yang, S.; Liu, Y. Remaining Useful Life Estimation in Prognostics Using Deep Bidirectional LSTM Neural Network. In Proceedings of the 2018 Prognostics and System Health Management Conference (PHM-Chongqing); October 2018; pp. 1037–1042.

53. Li, J.; Li, X.; He, D. A Directed Acyclic Graph Network Combined With CNN and LSTM for Remaining Useful Life Prediction. IEEE Access 2019, 7, 75464–75475.

54. Kong, Z.; Cui, Y.; Xia, Z.; Lv, H. Convolution and Long Short-Term Memory Hybrid Deep Neural Networks for Remaining Useful Life Prognostics. Appl. Sci. 2019, 9, 4156.

55. Mo, H.; Lucca, F.; Malacarne, J.; Iacca, G. Multi-Head CNN-LSTM with Prediction Error Analysis for Remaining Useful Life Prediction. In Proceedings of the 2020 27th Conference of Open Innovations Association (FRUCT); September 2020; pp. 164–171.

56. Liu, Y.; Zhang, X.; Guo, W.; Bian, H.; He, Y.; Liu, Z. Prediction of Remaining Useful Life of Turbofan Engine Based on Optimized Model. In Proceedings of the 2021 IEEE 20th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom); October 2021; pp. 1473–1477.

| Dataset | FD001 | FD002 | FD003 | FD004 |
|---|---|---|---|---|
| No. of Training Engines | 100 | 260 | 100 | 249 |
| No. of Testing Engines | 100 | 259 | 100 | 248 |
| No. of Operating Conditions | 1 | 6 | 1 | 6 |
| No. of Fault Modes | 1 | 1 | 2 | 2 |

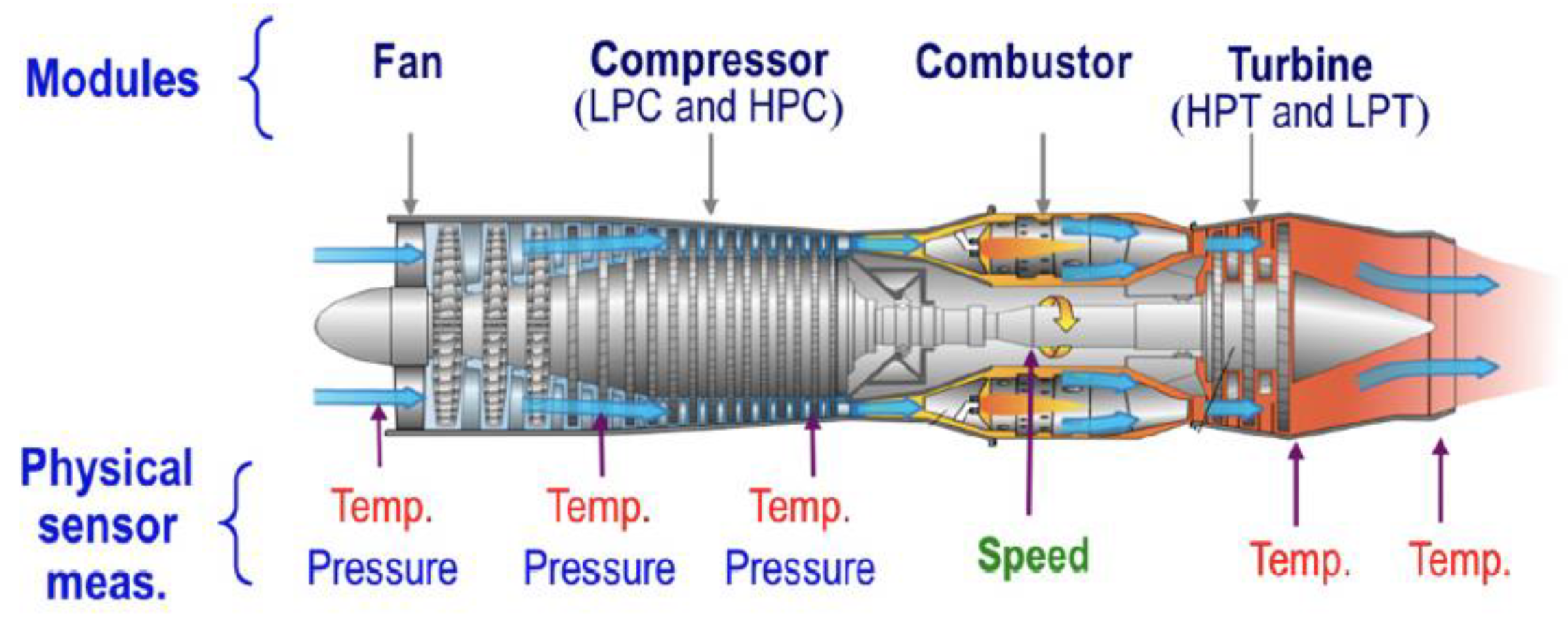

| Symbol | Description | Units |
|---|---|---|
| T2 | Total temperature at fan inlet | °R |
| T24 | Total temperature at LPC outlet | °R |
| T30 | Total temperature at HPC outlet | °R |
| T50 | Total temperature at LPT outlet | °R |
| P2 | Pressure at fan inlet | psia |
| P15 | Total pressure in bypass-duct | psia |
| P30 | Total pressure at HPC outlet | psia |
| Nf | Physical fan speed | rpm |
| Nc | Physical core speed | rpm |
| epr | Engine pressure ratio (P50/P2) | - |
| Ps30 | Static pressure at HPC outlet | psia |
| Phi | Ratio of fuel flow to Ps30 | pps/psi |
| NRf | Corrected fan speed | rpm |
| NRc | Corrected core speed | rpm |
| BPR | Bypass ratio | - |
| farB | Burner fuel-air ratio | - |
| htBleed | Bleed enthalpy | - |
| Nf-dmd | Demanded fan speed | rpm |
| PCNfR-dmd | Demanded corrected fan speed | rpm |
| W31 | HPT coolant bleed | lbm/s |
| W32 | LPT coolant bleed | lbm/s |

| Hyperparameter | Range |
|---|---|
| Learning Rate | [0.0001, 0.01] |
| Batch Size | 16, 32, 64 |
| Optimizer | Adam, SGD, RMSProp |
| Time Series Length | 32, 48, 64 |
| Number of Layers/Scales | 1, 2, 3, 4 |
| Dimension of Embedding Space | 128, 256, 512, 1024 |
| Number of Heads for Multi-Head Self-Attention (MSA) | 1, 2, 4, 6 |
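
The search space in the range table above can be explored with a simple random search, sketched below. The document does not state which search strategy or selection criterion was used. The following are therefore all hypothetical:

- Uniform sampling of each discrete choice.
- A log-uniform draw for the learning rate.
- Selection by lowest validation RMSE.
- The dictionary keys and the `evaluate` callback.

The toy objective only stands in for actually training and validating a model.

```python
import random

# Search space copied from the hyperparameter range table; key names are hypothetical.
SEARCH_SPACE = {
    "batch_size": [16, 32, 64],
    "optimizer": ["Adam", "SGD", "RMSProp"],
    "time_series_length": [32, 48, 64],
    "num_layers_scales": [1, 2, 3, 4],
    "embedding_dim": [128, 256, 512, 1024],
    "num_heads": [1, 2, 4, 6],
}

def sample_config(rng):
    """Draw one configuration uniformly from the discrete choices."""
    cfg = {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}
    # Learning rate drawn log-uniformly from [0.0001, 0.01] (assumed sampling scheme).
    cfg["learning_rate"] = 10 ** rng.uniform(-4, -2)
    return cfg

def random_search(evaluate, n_trials, seed=0):
    """Return the configuration with the lowest validation RMSE.
    `evaluate` is a hypothetical callback that trains a model and returns its RMSE."""
    rng = random.Random(seed)
    best_cfg, best_rmse = None, float("inf")
    for _ in range(n_trials):
        cfg = sample_config(rng)
        score = evaluate(cfg)
        if score < best_rmse:
            best_cfg, best_rmse = cfg, score
    return best_cfg, best_rmse

# Toy stand-in for training: prefers learning rates near 0.0002 (illustration only).
best, val = random_search(lambda cfg: abs(cfg["learning_rate"] - 2e-4), n_trials=50)
print(best["learning_rate"], val)
```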

| Hyperparameter | FD001 | FD002 | FD003 | FD004 |
|---|---|---|---|---|
| Learning Rate | 0.0002 | 0.0002 | 0.0002 | 0.0002 |
| Batch Size | 32 | 64 | 32 | 64 |
| Optimizer | Adam | Adam | Adam | Adam |
| Time Series Length | 32 | 64 | 48 | 64 |
| Number of Layers/Scales | 3 | 4 | 1 | 4 |
| Dimension of Embedding Space | 128 | 64 | 128 | 256 |
| Number of Heads for Multi-Head Self-Attention (MSA) | 1 | 4 | 1 | 4 |

| Method | FD001 RMSE | FD001 Score | FD002 RMSE | FD002 Score | FD003 RMSE | FD003 Score | FD004 RMSE | FD004 Score |
|---|---|---|---|---|---|---|---|---|
| MLP (2016) | 37.56 | - | 80.03 | - | 37.39 | - | 77.37 | - |
| SVR (2016) | 20.96 | - | 42.00 | - | 21.05 | - | 45.35 | - |
| CNN (2016) | 18.45 | - | 30.29 | - | 19.82 | - | 29.16 | - |
| LSTM (2017) | 16.14 | 338 | 24.49 | 4450 | 16.18 | 852 | 28.17 | 5550 |
| BiLSTM (2018) | 13.65 | 295 | 23.18 | 4130 | 13.74 | 317 | 24.86 | 5430 |
| DAG (2019) | 11.96 | 229 | 20.34 | 2730 | 12.46 | 535 | 22.43 | 3370 |
| CNN + LSTM (2019) | 16.16 | 303 | 20.44 | 3440 | 17.12 | 1420 | 23.25 | 4630 |
| Multi-head CNN + LSTM (2020) | 12.19 | 259 | 19.93 | 4350 | 12.85 | 343 | 22.89 | 4340 |
| GCT (2021) | 11.27 | - | 22.81 | - | 11.42 | - | 24.86 | - |
| BiLSTM Attention (2021) | 13.78 | 255 | 15.94 | 1280 | 14.36 | 438 | 16.96 | 1650 |
| B-LSTM (2022) | 12.45 | 279 | 15.36 | 4250 | 13.37 | 356 | 16.24 | 5220 |
| DAST (2022) | 11.43 | 203 | 15.25 | 924 | 11.32 | 154 | 18.23 | 1490 |
| DLformer (2023) | - | - | 15.93 | 1283 | - | - | 15.86 | 1601 |
| BiLSTM-DAE-Transformer (2023) | 10.98 | 186 | 16.12 | 2937 | 11.14 | 252 | 18.15 | 3840 |
| Proposed Method | 10.61 | 169 | 13.47 | 784 | 10.71 | 202 | 15.87 | 1449 |

| Model | FD001 RMSE | FD002 RMSE | FD003 RMSE | FD004 RMSE |
|---|---|---|---|---|
| STAR | 10.61 | 13.47 | 10.71 | 15.87 |
| STAR-Temporal | 11.62 | 16.67 | 12.01 | 18.44 |
| STAR-SingleScale | 12.33 | 16.11 | 12.49 | 17.71 |
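
The ablation results can be summarized as relative RMSE increases over the full STAR model. The snippet below performs this arithmetic on the values in the ablation table; the variable and helper names are illustrative.

```python
# RMSE values copied from the ablation table (FD001-FD004).
ablation_rmse = {
    "STAR":             [10.61, 13.47, 10.71, 15.87],
    "STAR-Temporal":    [11.62, 16.67, 12.01, 18.44],
    "STAR-SingleScale": [12.33, 16.11, 12.49, 17.71],
}
datasets = ["FD001", "FD002", "FD003", "FD004"]

def relative_increase(variant):
    """Percentage RMSE increase of an ablated variant over the full STAR model."""
    return [100 * (v - s) / s for v, s in zip(ablation_rmse[variant], ablation_rmse["STAR"])]

for variant in ("STAR-Temporal", "STAR-SingleScale"):
    pct = relative_increase(variant)
    print(variant, ", ".join(f"{d}: +{p:.1f}%" for d, p in zip(datasets, pct)))
# STAR-Temporal FD001: +9.5%, FD002: +23.8%, FD003: +12.1%, FD004: +16.2%
# STAR-SingleScale FD001: +16.2%, FD002: +19.6%, FD003: +16.6%, FD004: +11.6%
```

The two ablations hurt most on different datasets:

- Removing sensor-wise attention costs more on FD002 and FD004, the datasets with six operating conditions.
- Removing patch merging costs more on FD001 and FD003, the datasets with a single operating condition.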

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).