Submitted: 28 December 2023

Posted: 29 December 2023

Abstract

Keywords:

1. Introduction

2. Method

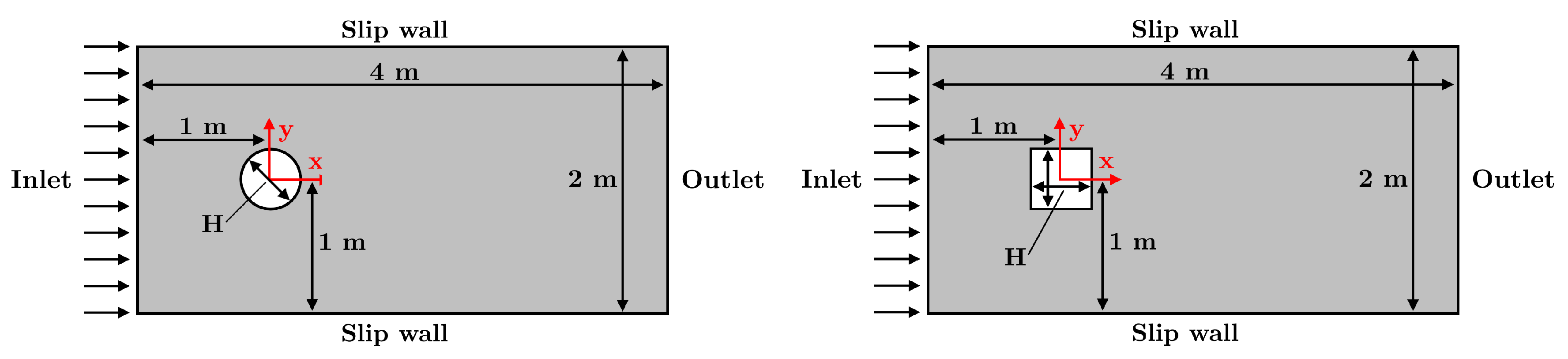

2.1. Geometry

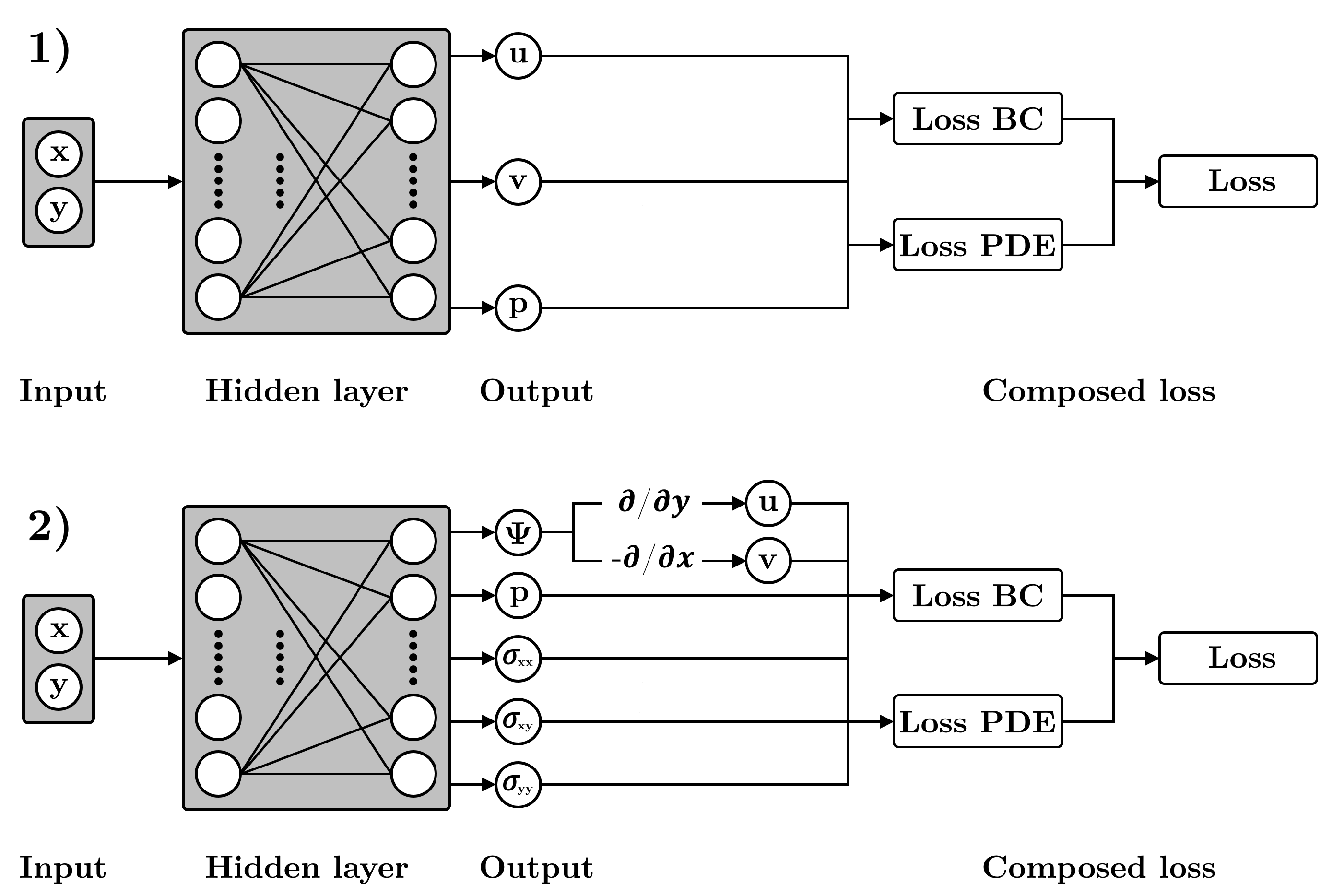

2.2. Governing equations and PINN method

2.3. Reference data

2.4. Study design

3. Results

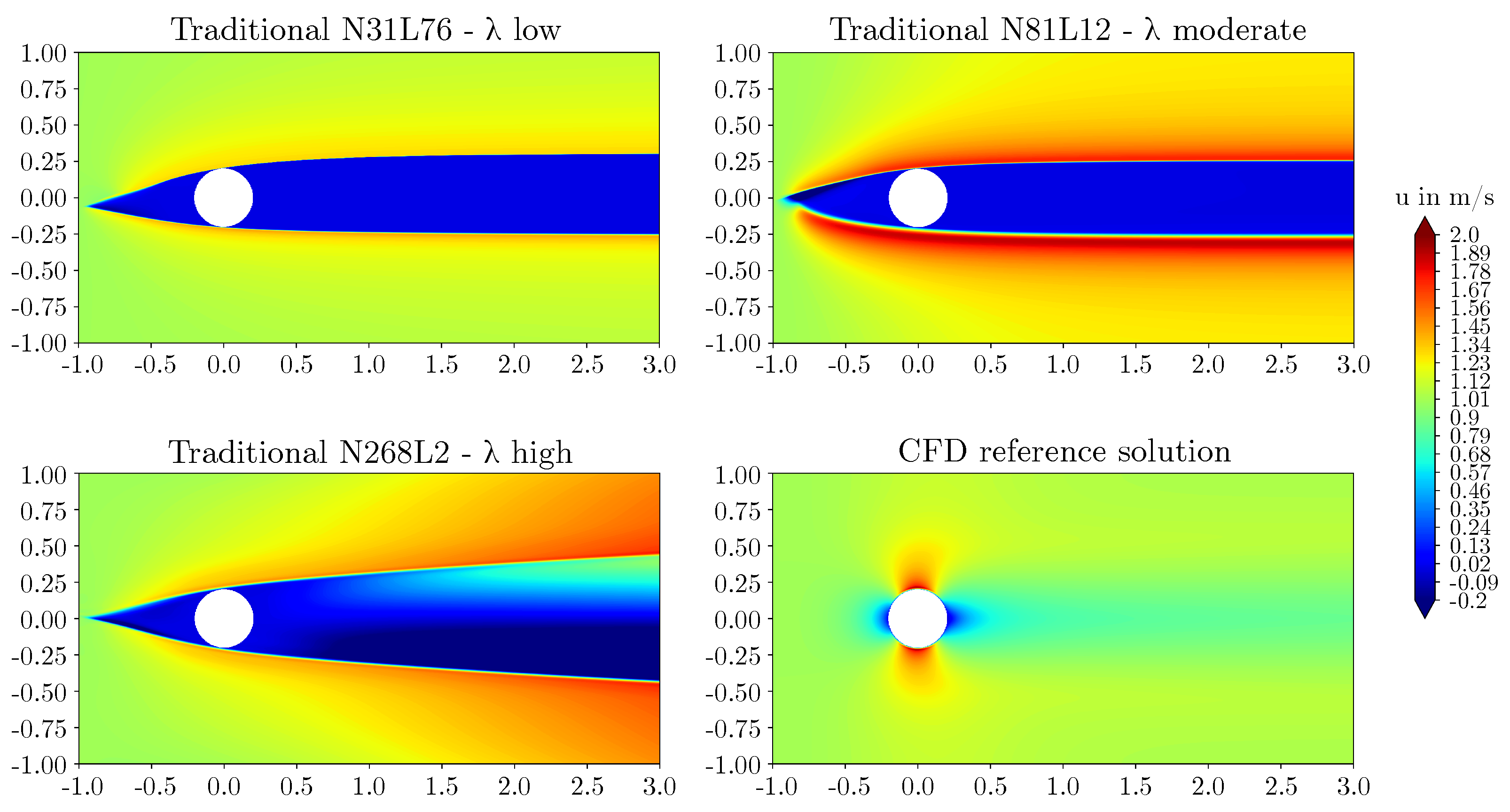

3.1. Performance of the traditional method

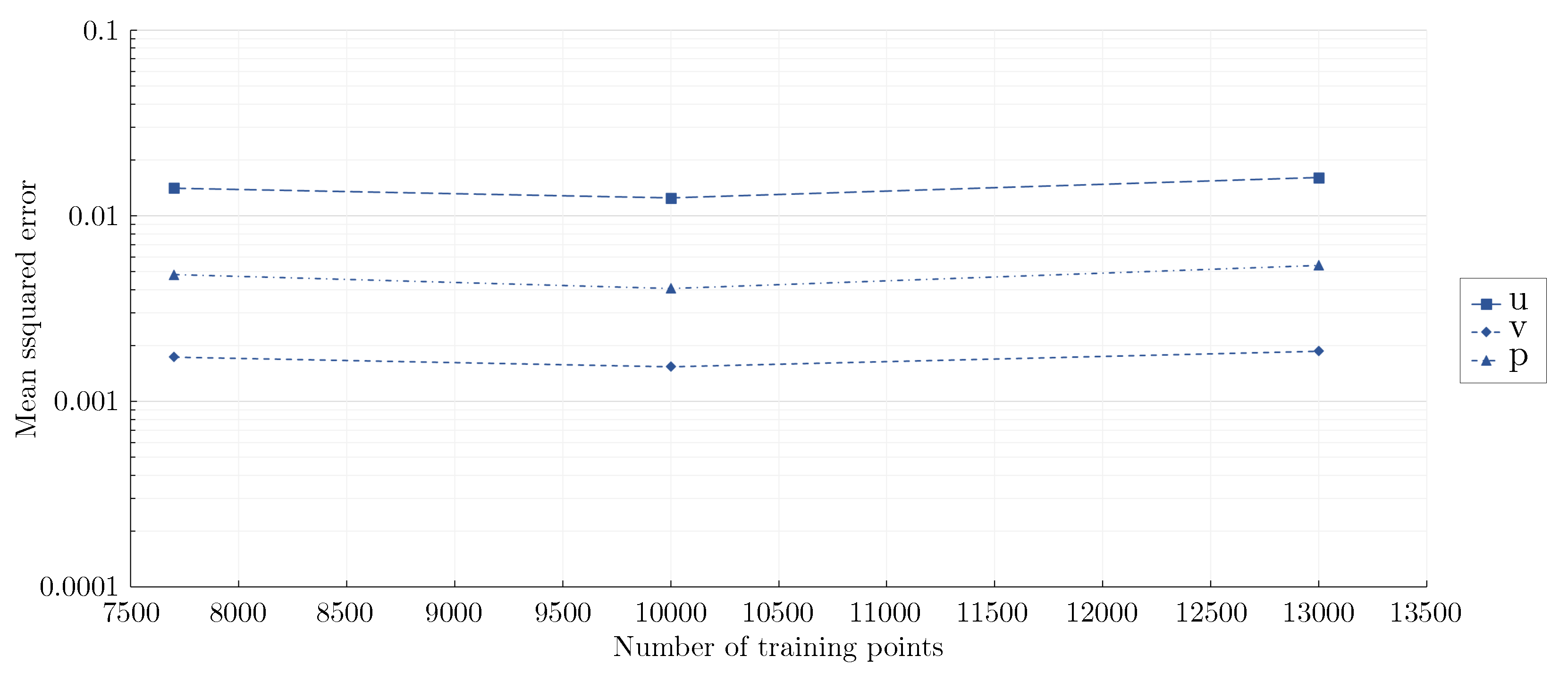

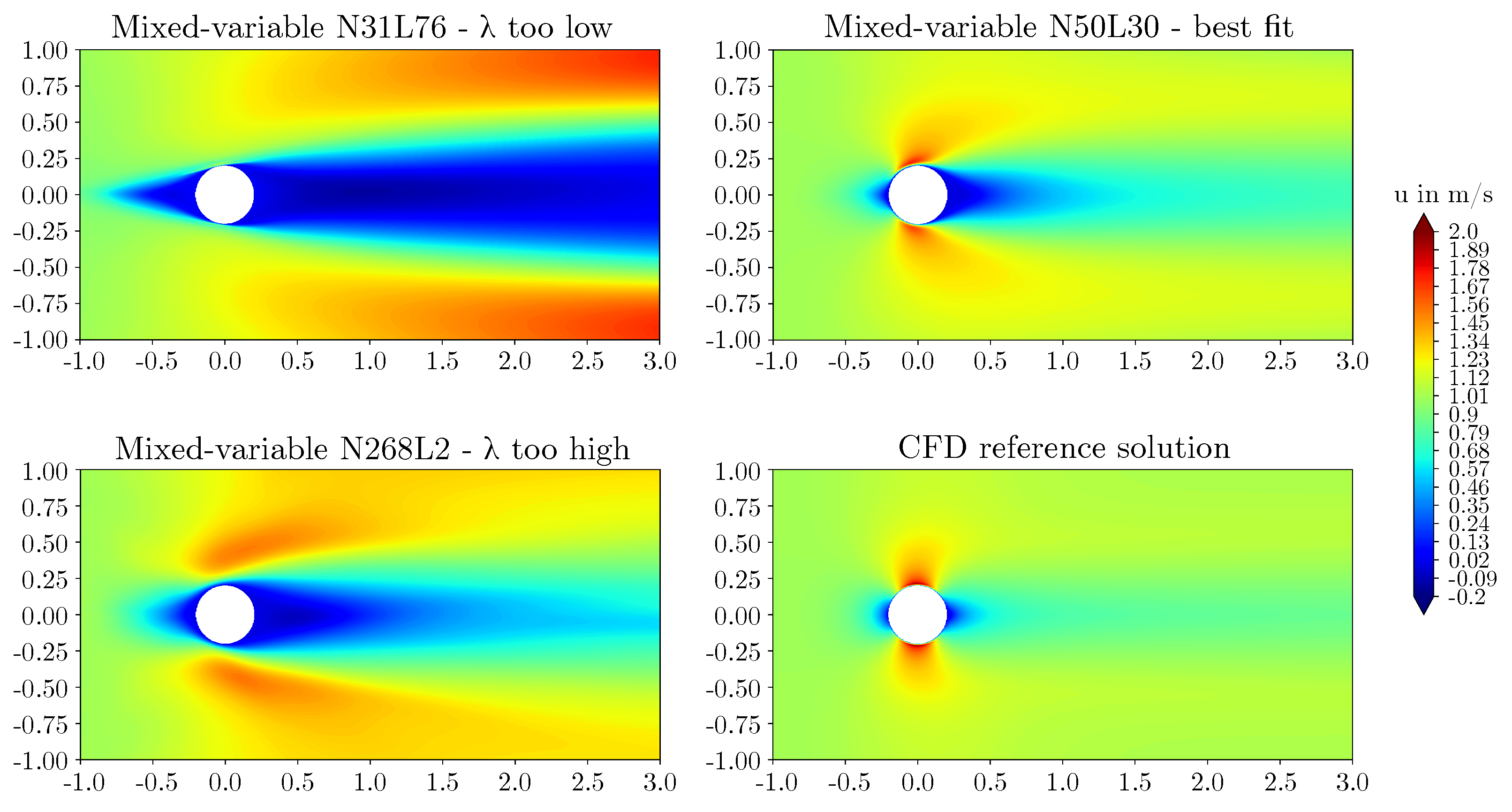

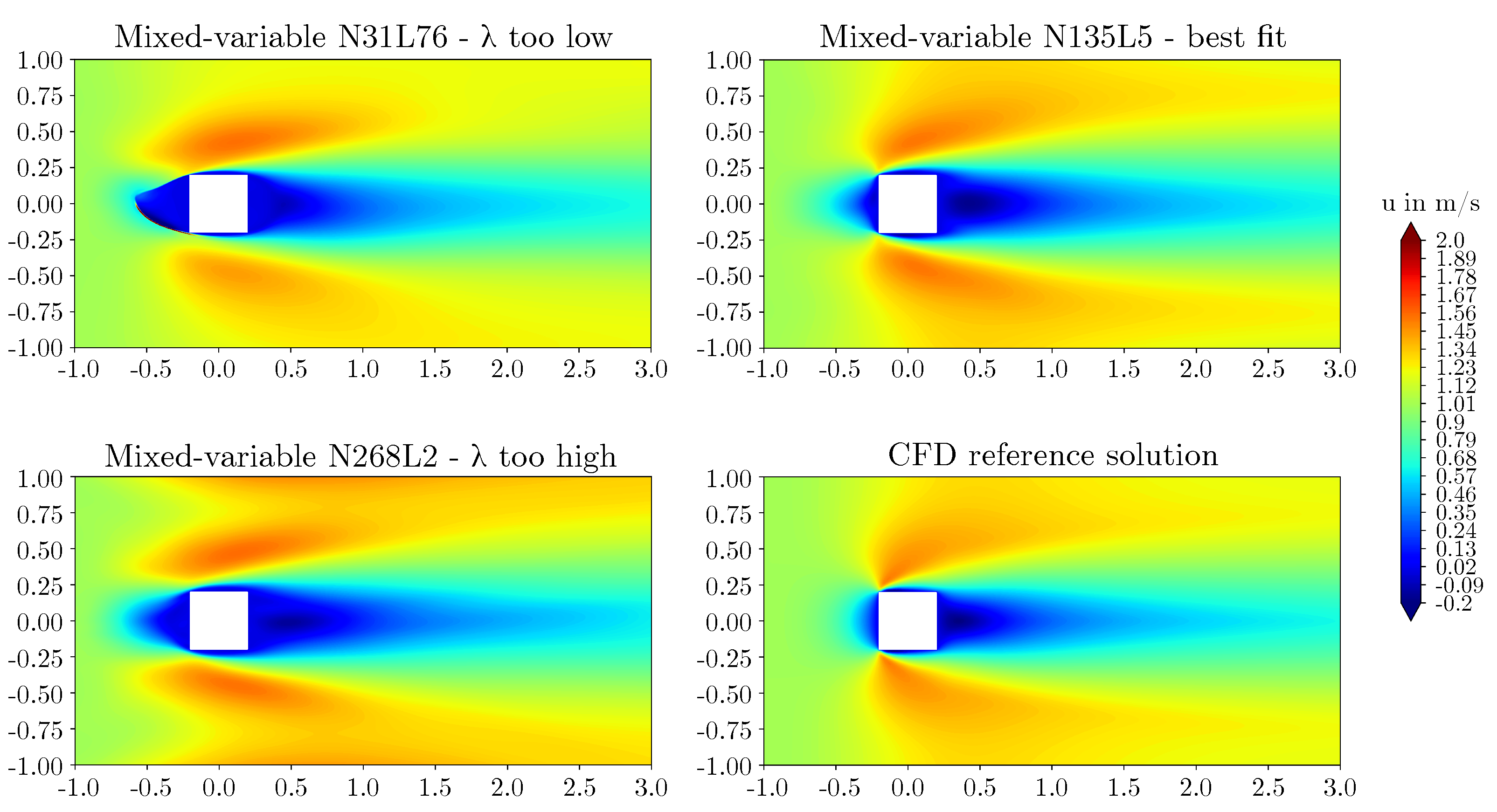

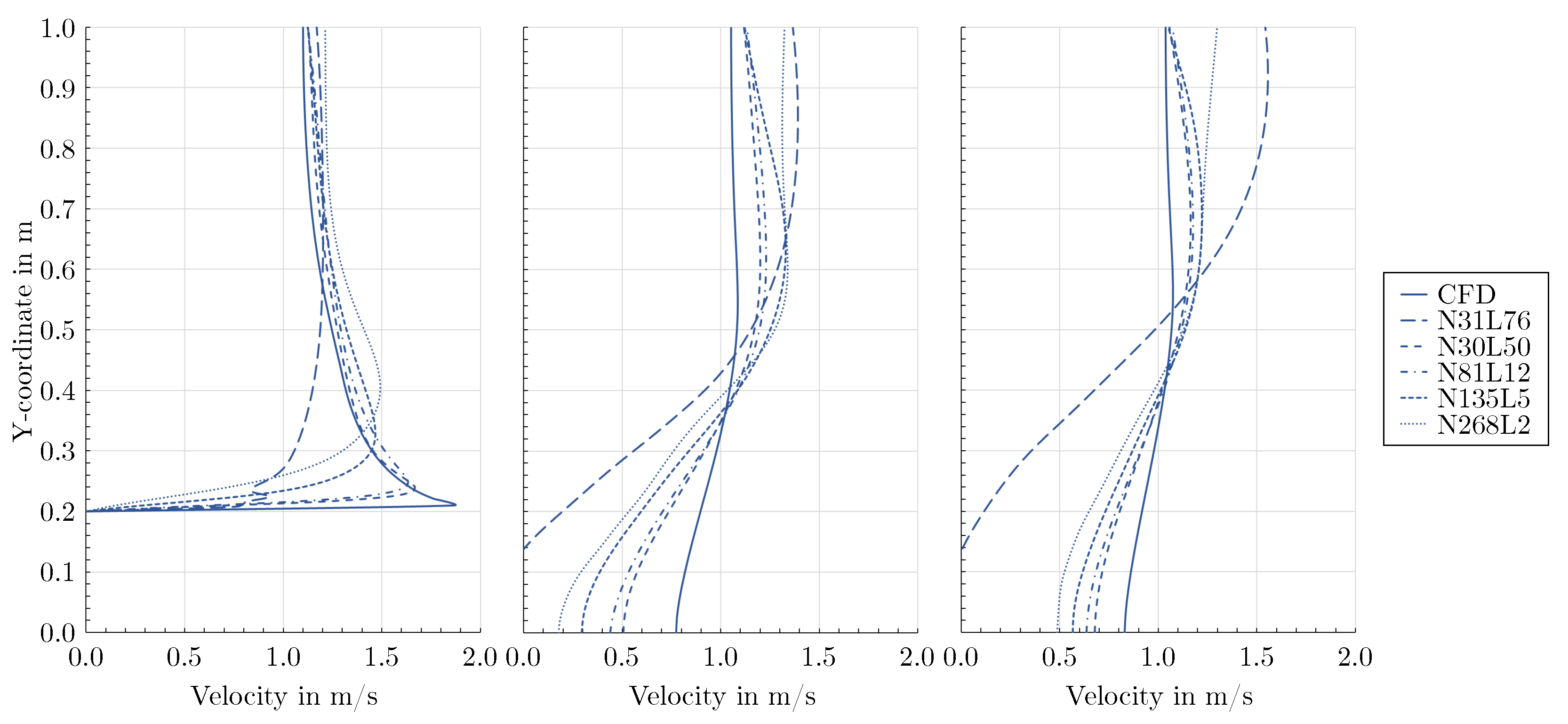

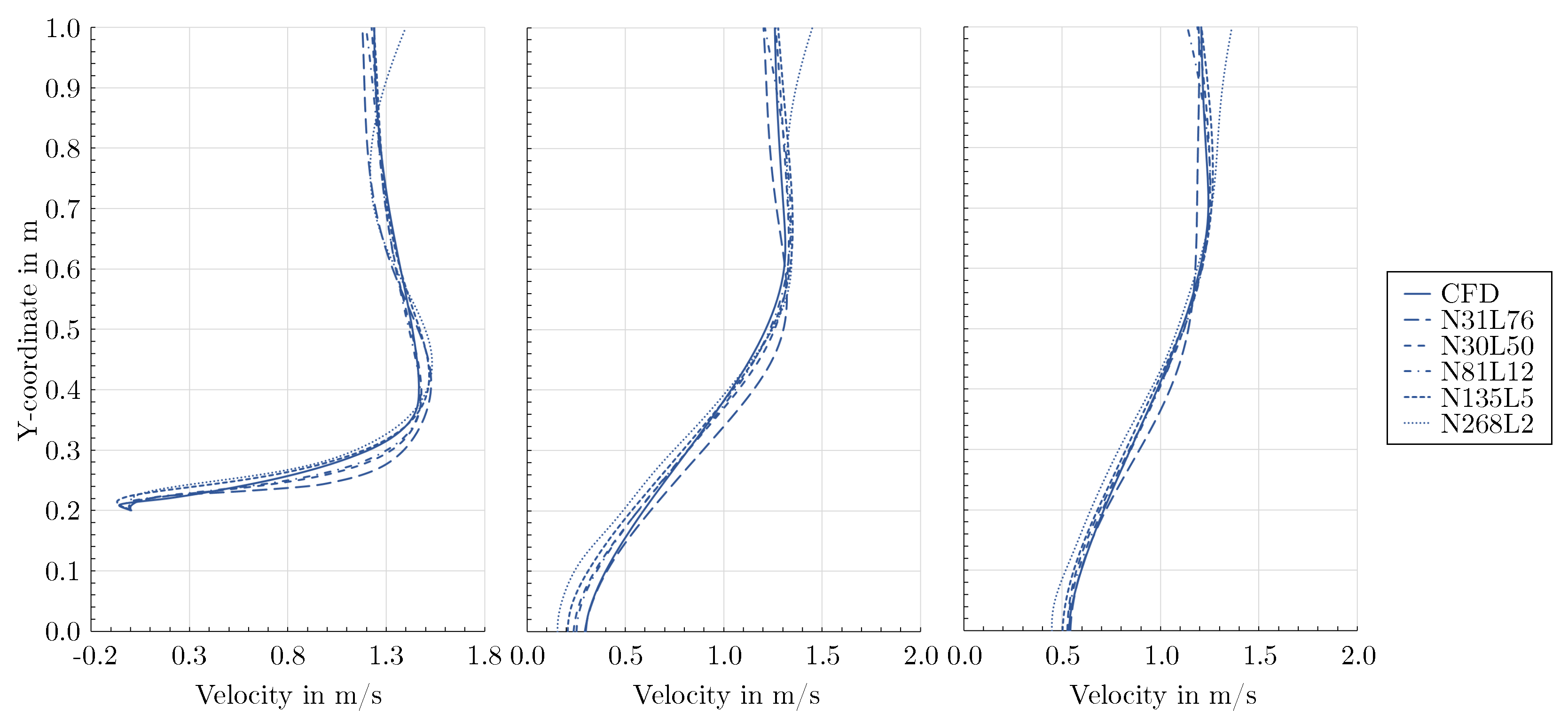

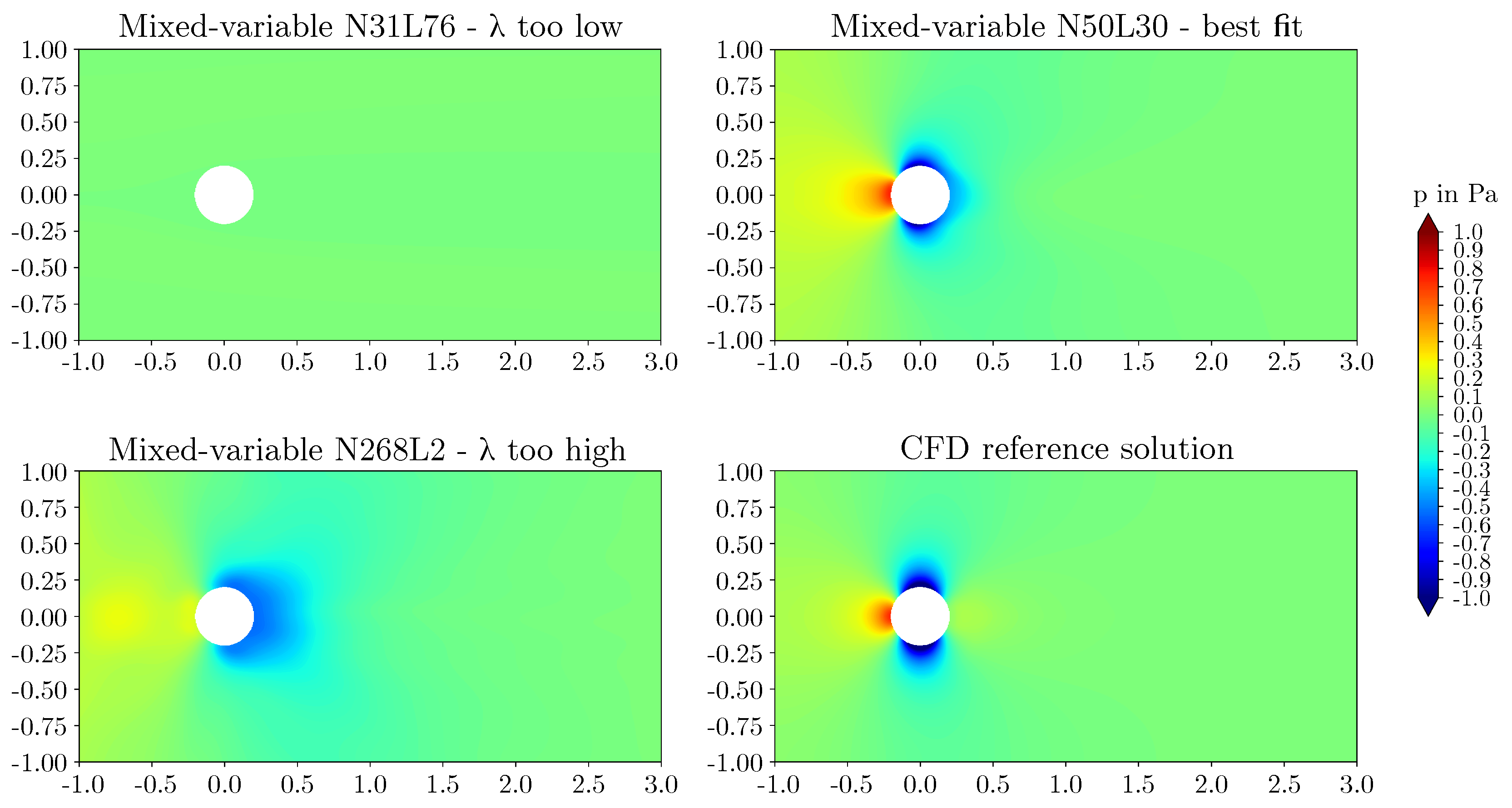

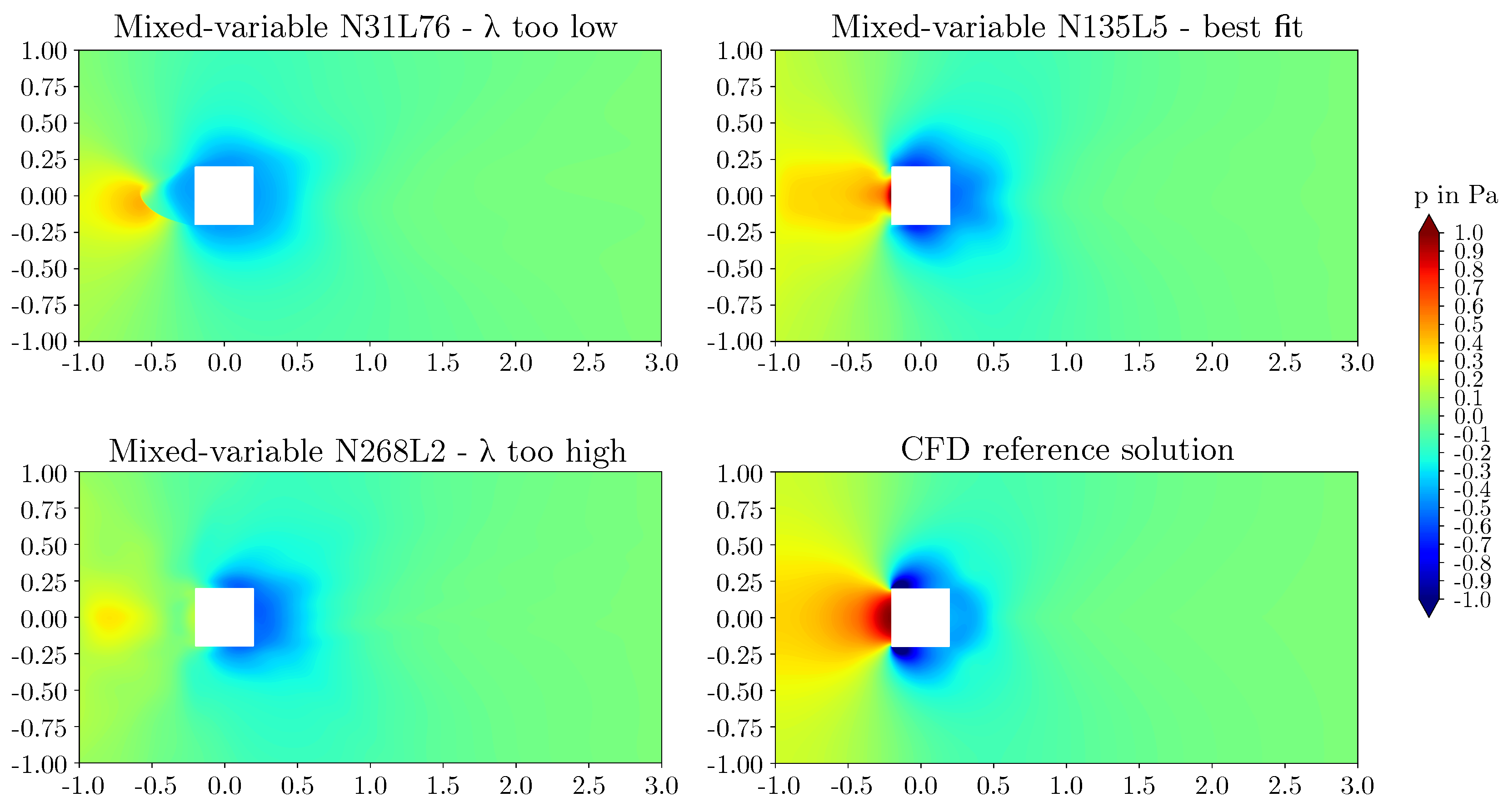

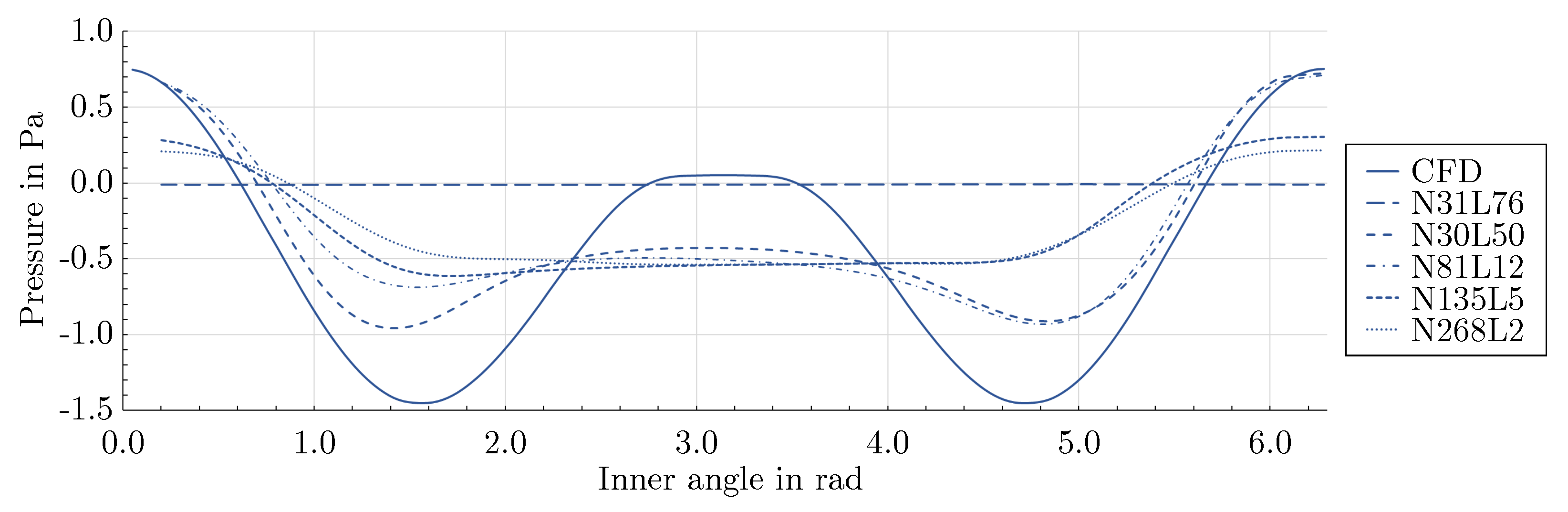

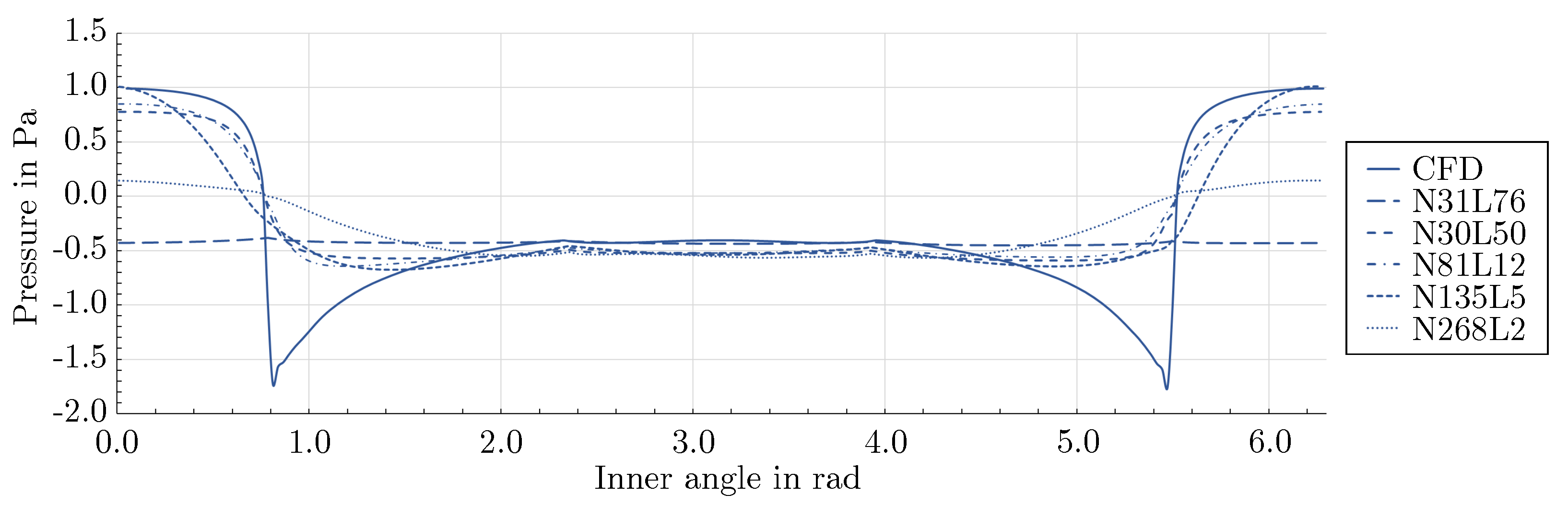

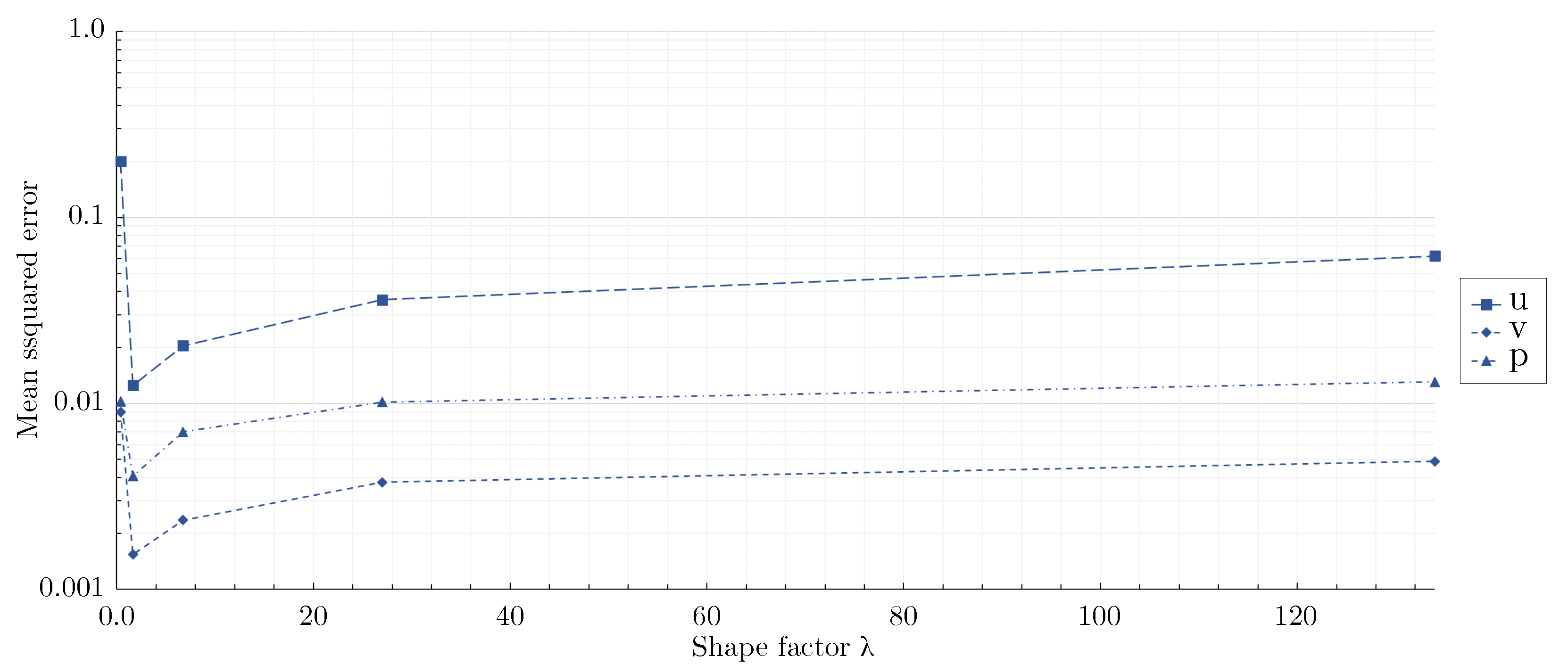

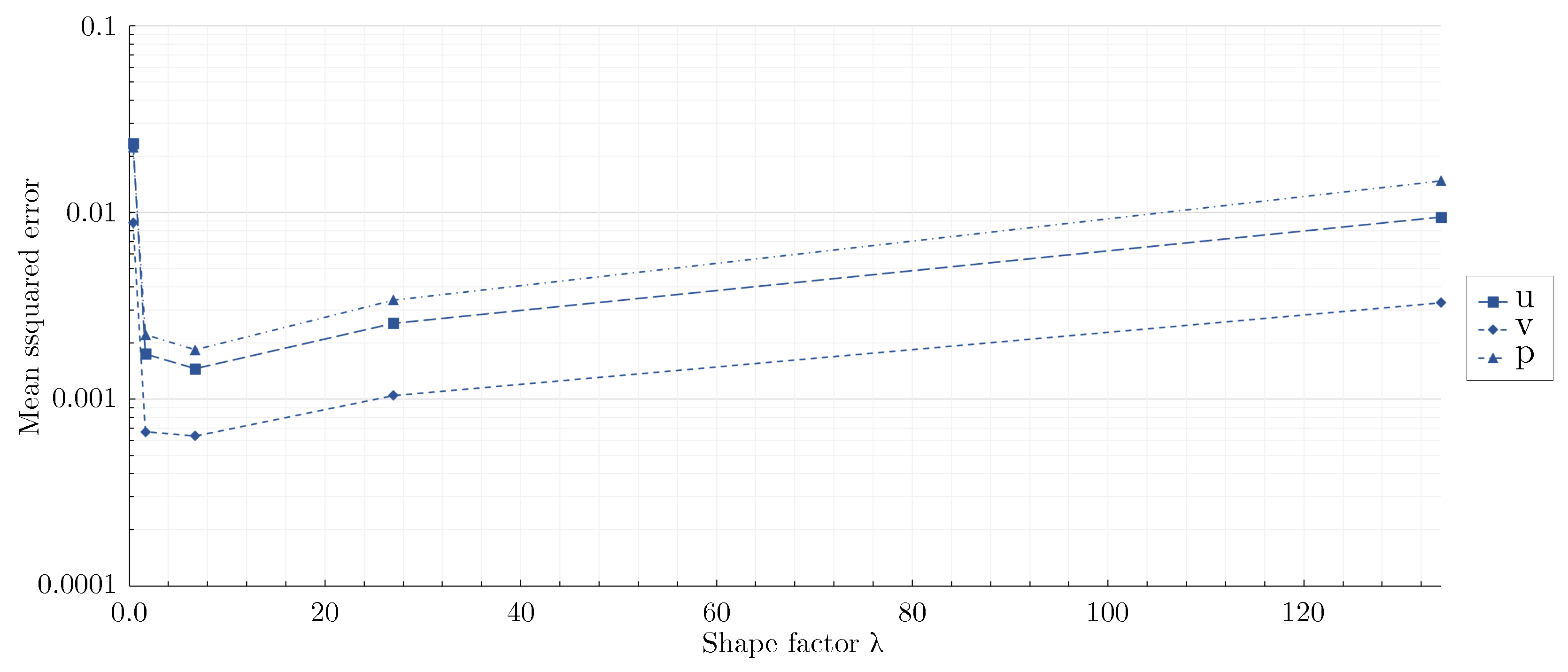

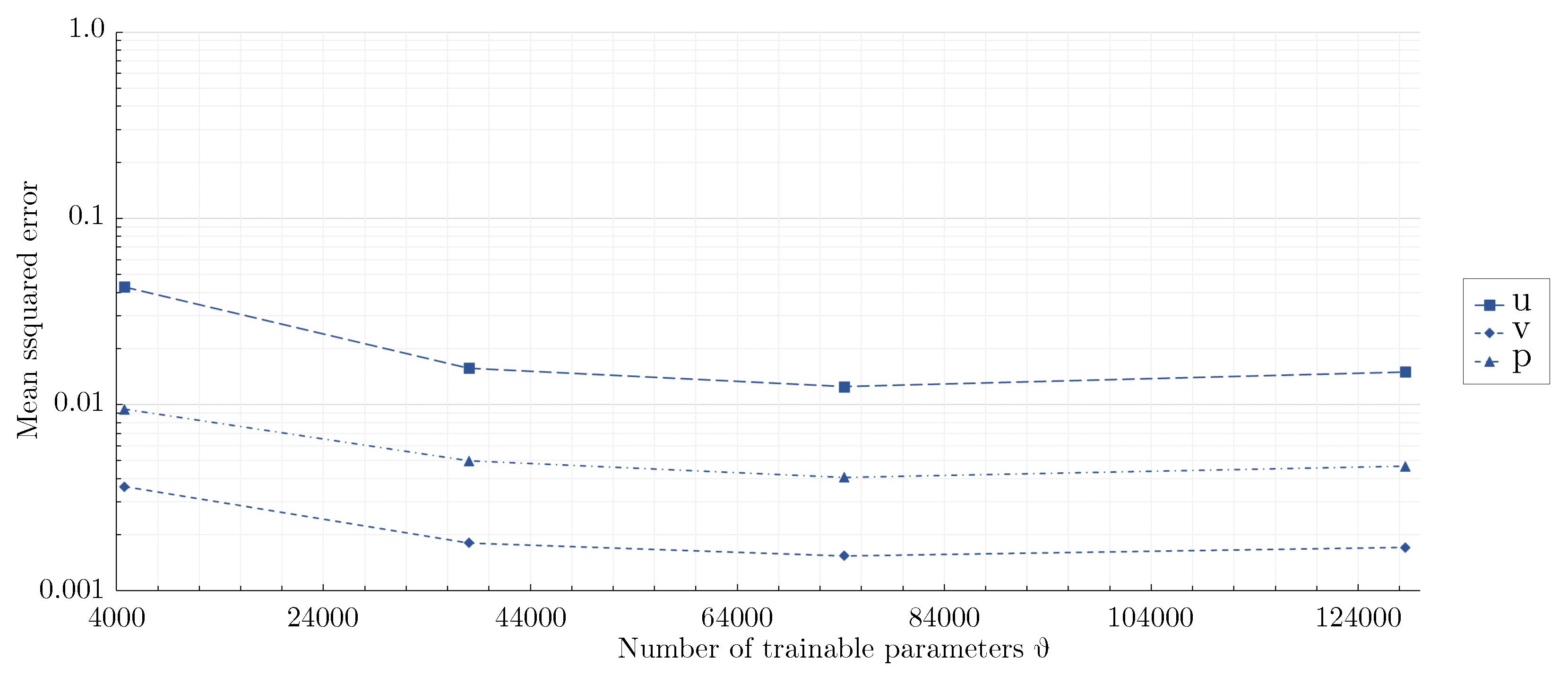

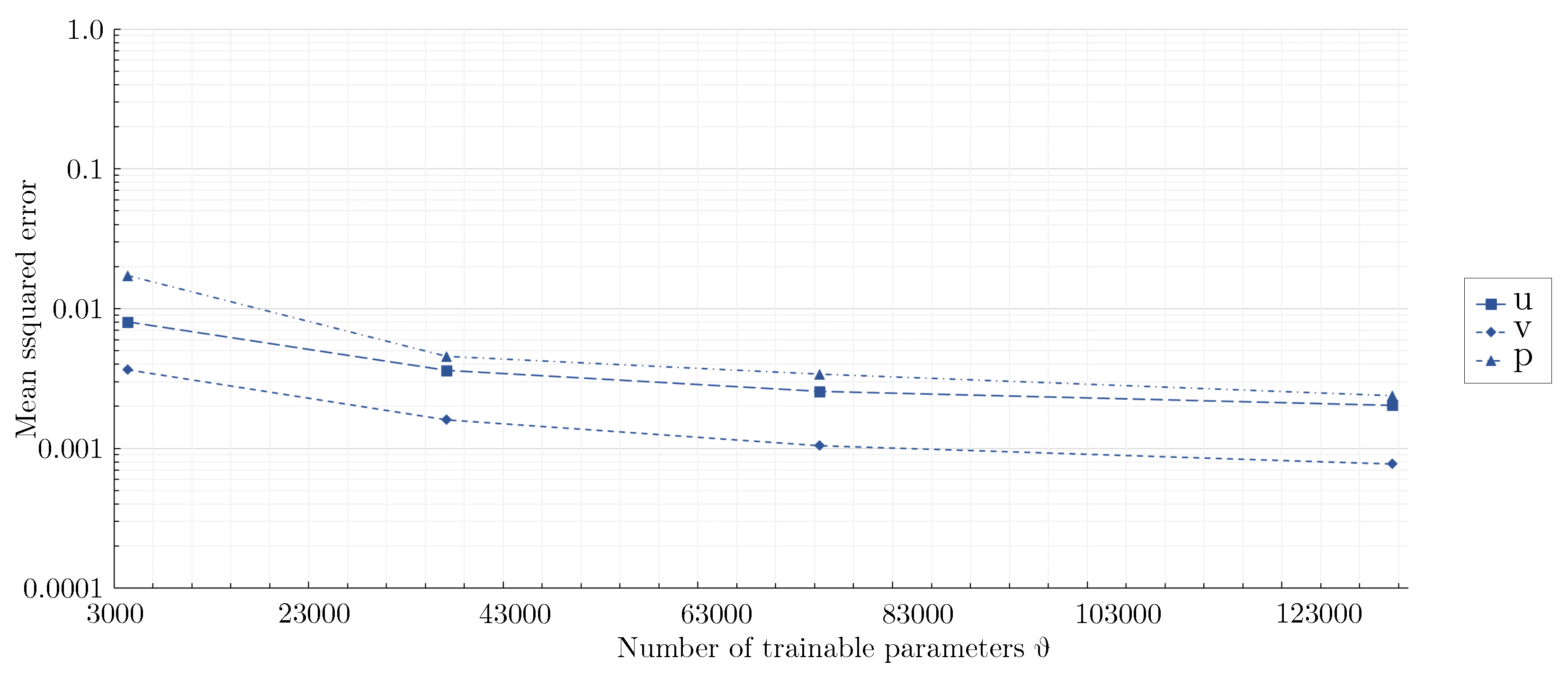

3.2. Effect of network architecture using the mixed-variable method

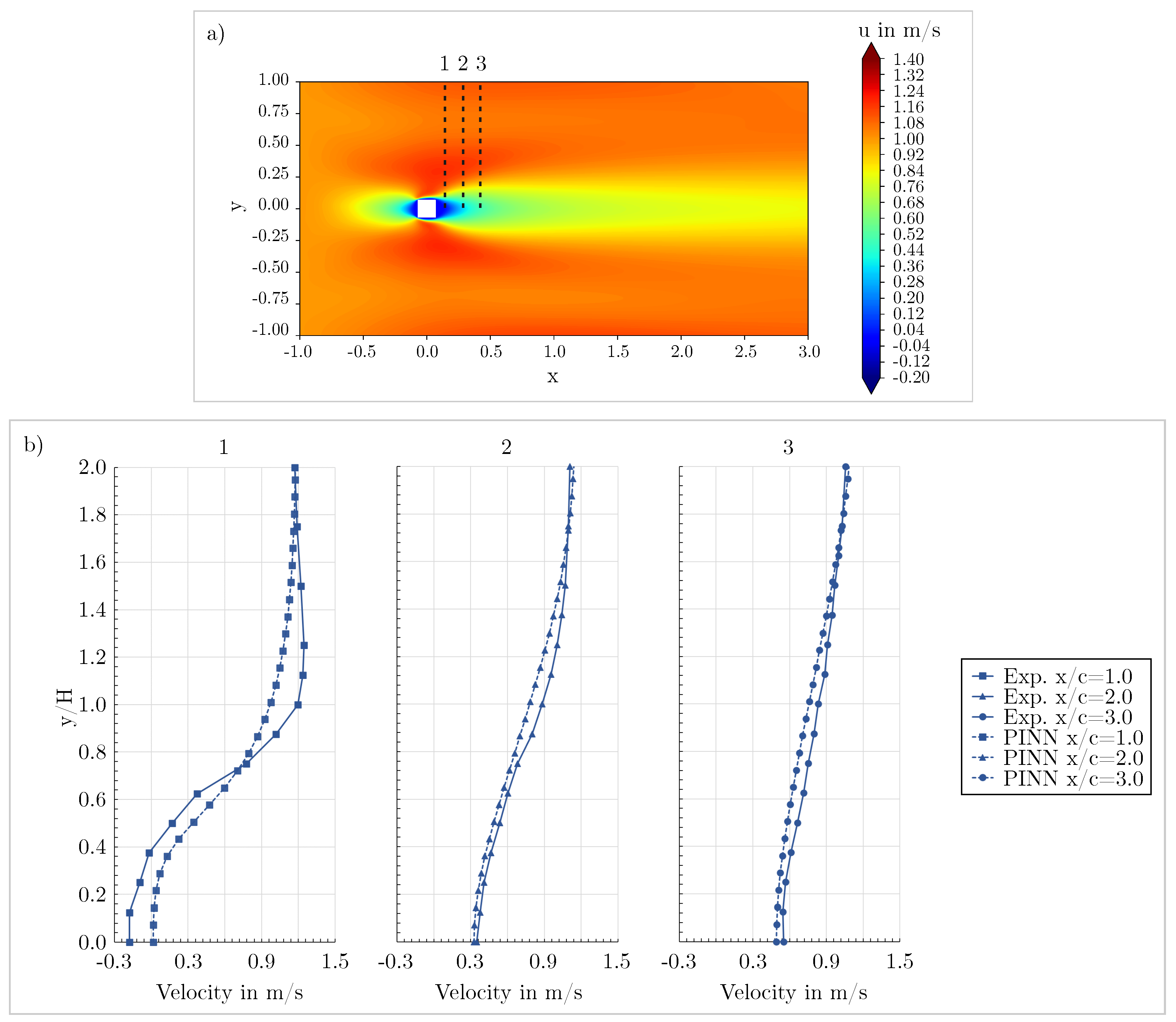

3.3. Validation of the mixed-variable method against measurements

4. Discussion

5. Summary and Conclusions

- For the elevated Reynolds number flow considered here, the mixed-variable approach of Rao et al. [19] proved superior. The traditional PINN method failed to capture the flow field accurately, regardless of the network architecture.

- For the flow around the large-scale circular cylinder, the deep architecture with a shape factor of outperformed the other architectures. The steep gradients of the boundary layers were predicted more accurately and the prolonged wake was reduced. For the flow around the large-scale square cylinder, the wide network with a shape factor of captured the reference solution best. The model with a shape factor of worked well for both geometries.

- For the geometries investigated, the suitable mixed-variable network architectures had shape factors that differed by one order of magnitude. Depending on the case, the shape factor of a PINN may therefore need to be varied substantially to find the best-fitting model. Extremely high or low shape factors, however, proved unsuitable.

- Despite inevitable deviations from the reference flow fields, the physics-informed mixed-variable method, applied with a proper network architecture, predicted stagnation points, high-gradient boundary layers, flow separation, recirculation areas, and wakes at an elevated Reynolds number without requiring training data. In contrast, regular neural networks cannot predict plausible flow fields without extensive training data inside the domain.

- The mixing length model proved to be a reliable and stable model for physics-informed deep learning when no simulated or measured data were considered.

- Further work is needed on physics-informed deep learning of the RANS equations. Future work should consider other turbulence models and methods to further increase the accuracy of predicted high Reynolds number flows.
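To illustrate the mixing length model named in the conclusions, the following minimal sketch evaluates a zero-equation eddy viscosity from mean velocity gradients. The formulation used here, `nu_t = l_m**2 * sqrt(2 * S_ij * S_ij)` with a capped mixing length, as well as the constants `KAPPA` and `CAP` and all function names, are assumptions made for this example; the exact variant used in the study is not restated in this section. In a PINN, the gradients would come from automatic differentiation of the network outputs rather than being passed in by hand.

```python
import math

KAPPA = 0.41  # von Karman constant (assumed value)
CAP = 0.09    # cap on the mixing length relative to the largest wall distance (assumed)


def mixing_length(wall_distance, max_distance, kappa=KAPPA, cap=CAP):
    """Prandtl mixing length: linear near the wall, capped far from it."""
    return min(kappa * wall_distance, cap * max_distance)


def eddy_viscosity(u_x, u_y, v_x, v_y, wall_distance, max_distance):
    """Eddy viscosity nu_t = l_m^2 * sqrt(2 S_ij S_ij) for a 2D mean flow.

    u_x, u_y, v_x, v_y are the mean velocity gradients du/dx, du/dy, dv/dx, dv/dy.
    """
    l_m = mixing_length(wall_distance, max_distance)
    # 2 S_ij S_ij written out for two dimensions
    strain = math.sqrt(2.0 * u_x**2 + 2.0 * v_y**2 + (u_y + v_x) ** 2)
    return l_m**2 * strain


# Pure shear close to a wall: nu_t reduces to (kappa * d)^2 * |du/dy|
nu_t_near_wall = eddy_viscosity(0.0, 10.0, 0.0, 0.0, wall_distance=0.01, max_distance=0.2)
```

For pure shear the expression collapses to the classical Prandtl form, which makes the sketch easy to check by hand before it is embedded in a RANS residual.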

Author Contributions

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Pinkus, A. Approximation theory of the MLP model in neural networks. Acta Numerica 1999, 8, 143–195.
2. Thuerey, N.; Weißenow, K.; Prantl, L.; Hu, X. Deep Learning Methods for Reynolds-Averaged Navier–Stokes Simulations of Airfoil Flows. AIAA Journal 2020, 58, 25–36.
3. Lagaris, I.E.; Likas, A.; Fotiadis, D.I. Artificial neural networks for solving ordinary and partial differential equations. IEEE Transactions on Neural Networks 1998, 9, 987–1000.
4. Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics Informed Deep Learning (Part I): Data-driven Solutions of Nonlinear Partial Differential Equations, 2017. Preprint at http://arxiv.org/pdf/1711.10561v1.
5. Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics Informed Deep Learning (Part II): Data-driven Discovery of Nonlinear Partial Differential Equations, 2017. Preprint at http://arxiv.org/pdf/1711.10566v1.
6. Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics 2019, 378, 686–707.
7. Raissi, M.; Yazdani, A.; Karniadakis, G.E. Hidden fluid mechanics: Learning velocity and pressure fields from flow visualizations. Science 2020, 367, 1026–1030.
8. Sun, L.; Gao, H.; Pan, S.; Wang, J.X. Surrogate modeling for fluid flows based on physics-constrained deep learning without simulation data. Computer Methods in Applied Mechanics and Engineering 2020, 361, 112732.
9. Jin, X.; Cai, S.; Li, H.; Karniadakis, G.E. NSFnets (Navier-Stokes Flow nets): Physics-informed neural networks for the incompressible Navier-Stokes equations. Journal of Computational Physics 2021, 426, 109951.
10. Laubscher, R.; Rousseau, P. Application of a mixed variable physics-informed neural network to solve the incompressible steady-state and transient mass, momentum, and energy conservation equations for flow over in-line heated tubes. Applied Soft Computing 2022, 114, 108050.
11. Ma, H.; Zhang, Y.; Thuerey, N.; Hu, X.; Haidn, O.J. Physics-driven Learning of the Steady Navier-Stokes Equations using Deep Convolutional Neural Networks, 2021. Preprint at http://arxiv.org/pdf/2106.09301v1.
12. Harmening, J.H.; Pioch, F.; Fuhrig, L.; Peitzmann, F.-J.; Schramm, D.; el Moctar, O. Data-Assisted Training of a Physics-Informed Neural Network to Predict the Reynolds-Averaged Turbulent Flow Field around a Stalled Airfoil under Variable Angles of Attack, 2023. Preprint at https://www.preprints.org/manuscript/202304.1244/v1.
13. Hennigh, O.; Narasimhan, S.; Nabian, M.A.; Subramaniam, A.; Tangsali, K.; Fang, Z.; Rietmann, M.; Byeon, W.; Choudhry, S. NVIDIA SimNet™: An AI-Accelerated Multi-Physics Simulation Framework. In Computational Science – ICCS 2021; Paszynski, M., Kranzlmüller, D., Krzhizhanovskaya, V.V., Dongarra, J.J., Sloot, P.M., Eds.; Springer International Publishing: Cham, 2021; Vol. 12746, Lecture Notes in Computer Science; pp. 447–461.
14. Pioch, F.; Harmening, J.H.; Müller, A.M.; Peitzmann, F.J.; Schramm, D.; el Moctar, O. Turbulence Modeling for Physics-Informed Neural Networks: Comparison of Different RANS Models for the Backward-Facing Step Flow. Fluids 2023, 8, 43.
15. Ghosh, S.; Chakraborty, A.; Brikis, G.O.; Dey, B. RANS-PINN based Simulation Surrogates for Predicting Turbulent Flows, 2023. Preprint at https://arxiv.org/pdf/2306.06034.pdf.
16. Patel, Y.; Mons, V.; Marquet, O.; Rigas, G. Turbulence model augmented physics informed neural networks for mean flow reconstruction, 2023. Preprint at http://arxiv.org/pdf/2306.01065v1.
17. Eivazi, H.; Tahani, M.; Schlatter, P.; Vinuesa, R. Physics-informed neural networks for solving Reynolds-averaged Navier–Stokes equations. Physics of Fluids 2022, 34, 075117.
18. Xu, H.; Zhang, W.; Wang, Y. Explore missing flow dynamics by physics-informed deep learning: The parameterized governing systems. Physics of Fluids 2021, 33, 095116.
19. Rao, C.; Sun, H.; Liu, Y. Physics-informed deep learning for incompressible laminar flows. Theoretical and Applied Mechanics Letters 2020, 10, 207–212.
20. Lyn, D.A.; Einav, S.; Rodi, W.; Park, J.H. A laser-Doppler velocimetry study of ensemble-averaged characteristics of the turbulent near wake of a square cylinder. Journal of Fluid Mechanics 1995, 304, 285–319.
21. Ang, E.H.W.; Wang, G.; Ng, B.F. Physics-Informed Neural Networks for Low Reynolds Number Flows over Cylinder. Energies 2023, 16.
22. Lu, L.; Meng, X.; Mao, Z.; Karniadakis, G.E. DeepXDE: A deep learning library for solving differential equations. SIAM Review 2021, 63, 208–228.
23. European Research Community on Flow, Turbulence and Combustion. Classic Collection Database: Case043 (Vortex Shedding Past Square Cylinder). http://cfd.mace.manchester.ac.uk/ercoftac/doku.php?id=cases:case043. [Online; accessed 07.12.2023].
24. Huang, W.; Zhang, X.; Zhou, W.; Liu, Y. Learning time-averaged turbulent flow field of jet in crossflow from limited observations using physics-informed neural networks. Physics of Fluids 2023, 35.
25. Kag, V.; Seshasayanan, K.; Gopinath, V. Physics-informed data based neural networks for two-dimensional turbulence. Physics of Fluids 2022, 34.
26. Xiao, M.J.; Yu, T.C.; Zhang, Y.S.; Yong, H. Physics-informed neural networks for the Reynolds-Averaged Navier–Stokes modeling of Rayleigh–Taylor turbulent mixing. Computers & Fluids 2023, 266, 106025.
27. Wang, S.; Sankaran, S.; Wang, H.; Perdikaris, P. An Expert’s Guide to Training Physics-informed Neural Networks, 2023. Preprint at https://arxiv.org/pdf/2308.08468.pdf.
28. Wilcox, D.C. Reassessment of the scale-determining equation for advanced turbulence models. AIAA Journal 1988, 26, 1299–1310.
29. Krishnapriyan, A.; Gholami, A.; Zhe, S.; Kirby, R.; Mahoney, M.W. Characterizing possible failure modes in physics-informed neural networks. In Advances in Neural Information Processing Systems; Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P.S., Wortman Vaughan, J., Eds.; Curran Associates, Inc., 2021; Vol. 34, pp. 26548–26560.
30. Xiang, Z.; Peng, W.; Liu, X.; Yao, W. Self-adaptive loss balanced Physics-informed neural networks. Neurocomputing 2022, 496, 11–34.
31. Li, S.; Feng, X. Dynamic Weight Strategy of Physics-Informed Neural Networks for the 2D Navier-Stokes Equations. Entropy 2022, 24.
32. Wang, H.; Liu, Y.; Wang, S. Dense velocity reconstruction from particle image velocimetry/particle tracking velocimetry using a physics-informed neural network. Physics of Fluids 2022, 34, 017116.
33. Kim, Y.; Choi, Y.; Widemann, D.; Zohdi, T. A fast and accurate physics-informed neural network reduced order model with shallow masked autoencoder. Journal of Computational Physics 2022, 451, 110841.
34. Zhu, Y.; Zabaras, N.; Koutsourelakis, P.S.; Perdikaris, P. Physics-Constrained Deep Learning for High-dimensional Surrogate Modeling and Uncertainty Quantification without Labeled Data. Journal of Computational Physics 2019, 394, 56–81.
35. Wang, R.; Kashinath, K.; Mustafa, M.; Albert, A.; Yu, R. Towards Physics-informed Deep Learning for Turbulent Flow Prediction. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining; Gupta, R., Liu, Y., Shah, M., Rajan, S., Tang, J., Prakash, B.A., Eds.; ACM: New York, NY, USA, 2020; pp. 1457–1466.
36. Wandel, N.; Weinmann, M.; Klein, R. Teaching the Incompressible Navier-Stokes Equations to Fast Neural Surrogate Models in 3D. Physics of Fluids 2021, 33, 047117.
37. Dwivedi, V.; Parashar, N.; Srinivasan, B. Distributed physics informed neural network for data-efficient solution to partial differential equations, 2019. Preprint at http://arxiv.org/pdf/1907.08967v1.

| Label | Cylinder shape | Cylinder size H | Blockage ratio | Reference data | Reynolds number | Objective |
|---|---|---|---|---|---|---|
| G1 | Circular | 0.40 m | 20% | CFD | | Network study |
| G2 | Square | 0.40 m | 20% | CFD | | Network study |
| G3 | Square | 0.14 m | 7% | Experiments | | Validation |

| Label | Neurons per layer | Layers | Shape factor | Trainable parameters | Geometry | Method |
|---|---|---|---|---|---|---|
| N31L75 | 31 | 76 | | 74586 | G1 | Traditional |
| N81L12 | 81 | 12 | | 73548 | G1 | Traditional |
| N268L2 | 268 | 2 | | 73700 | G1 | Traditional |

| Label | Neurons per layer | Layers | Shape factor | Trainable parameters | Geometry | Method |
|---|---|---|---|---|---|---|
| N31L75 | 31 | 76 | | 74648 | G1 and G2 | Mixed-variable |
| N50L30 | 50 | 30 | | 74350 | G1 and G2 | Mixed-variable |
| N81L12 | 81 | 12 | | 73710 | G1 and G2 | Mixed-variable |
| N135L5 | 135 | 5 | | 74520 | G1 and G2 | Mixed-variable |
| N268L2 | 268 | 2 | | 74236 | G1 and G2 | Mixed-variable |

| Label | Neurons per layer | Layers | Shape factor | Trainable parameters | Geometry | Method |
|---|---|---|---|---|---|---|
| N20L12 | 20 | 12 | | 4780 | G1 | Mixed-variable |
| N40L24 | 40 | 24 | | 38040 | G1 | Mixed-variable |
| N50L30 | 50 | 30 | | 74350 | G1 | Mixed-variable |
| N60L36 | 60 | 36 | | 128580 | G1 | Mixed-variable |
| N54L2 | 54 | 2 | | 3402 | G2 | Mixed-variable |
| N108L4 | 108 | 4 | | 36180 | G2 | Mixed-variable |
| N135L5 | 135 | 5 | | 74520 | G2 | Mixed-variable |
| N162L6 | 162 | 6 | | 133326 | G2 | Mixed-variable |

| Label | Neurons per layer | Layers | Shape factor | Trainable parameters | Geometry | Method |
|---|---|---|---|---|---|---|
| N50L30 | 50 | 30 | | 74350 | G3 | Mixed-variable |
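The trainable-parameter counts in the architecture tables can be cross-checked with a short calculation. The sketch below assumes a fully connected network with two inputs, bias terms on every hidden layer, and no bias on the output layer, with three outputs for the traditional method and five for the mixed-variable method. These assumptions are inferred from the tabulated counts and are not stated in this section; the function name `trainable_parameters` is hypothetical.

```python
def trainable_parameters(neurons, layers, n_inputs=2, n_outputs=5):
    """Count weights and biases of a fully connected network.

    Assumptions (inferred from the tables, not stated in the text):
    - every hidden layer has `neurons` units with a bias each,
    - the output layer has weights only (no bias).
    """
    first = (n_inputs + 1) * neurons                  # inputs -> first hidden layer
    hidden = (layers - 1) * (neurons + 1) * neurons   # hidden -> hidden connections
    last = neurons * n_outputs                        # last hidden layer -> outputs
    return first + hidden + last


# (neurons per layer, layers, assumed outputs) -> tabulated parameter count
table_rows = {
    (31, 76, 3): 74586,    # N31L75, traditional
    (81, 12, 3): 73548,    # N81L12, traditional
    (268, 2, 3): 73700,    # N268L2, traditional
    (31, 76, 5): 74648,    # N31L75, mixed-variable
    (50, 30, 5): 74350,    # N50L30, mixed-variable
    (135, 5, 5): 74520,    # N135L5, mixed-variable
    (162, 6, 5): 133326,   # N162L6, mixed-variable
}

mismatches = [
    key for key, expected in table_rows.items()
    if trainable_parameters(key[0], key[1], n_outputs=key[2]) != expected
]
print("rows that do not match:", mismatches)
```

Under these assumptions the formula matches the rows listed above, which suggests that the compared architectures were sized to hold the parameter count roughly constant while the width and depth were varied.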

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).