1. Introduction

1.1. Overview

Due to the GPS’ constant need for high accuracy and fidelity in global localization, issues arise when GPS access is limited in some environments. This could happen due to inadequate GPS accuracy or unavailability in indoor or urban environments such as tunnels [

1,

2]. Some sensors complement GPS-denied systems such as LIDARs, camera systems (mono or stereo), inertial measurement sensors, velocity sensors, altimeters, compasses, and more; however, most of the solutions are expensive or prone to more significant errors over time with no way to calibrate [

3,

4]. Ultra-wideband sensors are ideal for implementing a local GPS because they provide centimeter range accuracy, high range reliability, and low latency [

5,

6,

7]. Despite GPS being affordable and reliable, several issues can make its use suboptimal. Issues include GPS being unavailable in indoor environments and only having five meters of precision, which is not ideal for a higher level of accuracy. This can be most readily noted in a lawn mower example, where 5 meters can be a substantial error relative to the robot’s workspace area. Additional issues in a lawn-mower-type application are the potential of trees obstructing the GPS signal and the GPS’s low update rate of 1 Hz, which is not sufficient to track fast vehicles. Thus, systems such as UWB, which can provide centimeter accuracy at higher rates, are more effective solutions. An RTK solution could be implemented to use centimeter-level accuracy for GPS; however, these are not cost-effective for such situations.

1.1.1. Ultra-Wideband Sensors

The use of Ultra-wideband (UWB) in localization is a popular technology due to its accurate positioning capabilities, immunity against multipath fading, and resilience against active and passive interference’s [

5,

8]. UWB sensors utilize a large frequency spectrum, from 3.1 to 10.6 GHz, and bandwidths of 500+ MHz to implement localization techniques. This means that UWB radar sensors can, compared to traditional optical or less powerful radar sensors, maintain their accuracy in more challenging terrain, such as indoor situations where multipath and interference errors are more prevalent. Thus, they retain the ability to sense and communicate in obstacle-heavy terrain and allow for more robust localization systems [

9]. There are three node types in UWB sensors, the transmitters, which only transmit their operations; the anchor nodes, which are usually static with a set and verified location to help the other nodes in localizing; and mobile nodes, which contain a combination of transmitting antennas and receiving antennas.

1.2. Related Works

UWB localization problems were approached through different configurations of anchors and tags, combining stationary and mobile tags. Two main groups of UWB localization approaches were found in the current literature. The first approach, followed by [

10,

11,

12,

13,

14,

15,

16,

17], used a combination of initial location estimates using the UWB ranging measurements and a regression method followed by the use of a type of Kalman filter to further improve the estimate over time. The second approach followed a Mote Carlo-based simulation similar to particle filters [

18].

To simplify communication issues and improve network robustness, decentralized sensors were most commonly utilized to perform the computation [

16]. The decentralized initial location estimates follow a linear regression problem to solve triangulation or trilateration to get a robust position estimate [

12,

17]. However, the issue was that these systems assumed that the robot was constantly able to achieve communication with a minimum number of nodes before adequately operating. The robot was also assumed to be stationary during this period.

Current literature has explored more noise-robust extensions of Non-Linear Least Squares for triangulation. Systems using robust statistical techniques can mitigate the impact of outliers in measurements [

19,

20,

21]. Some employ reformations of the reweighed least squares (IRLS) methods [

22], while others transform the LSQ problem using a Majorization-Minimization (MM) approach [

23]. While these methods usually look at static environments, some mobile beacon-based location methods track the anchor’s messages and localizes themselves based on the message history [

24,

25]; however, even in these cases, the tracked nodes are static.

Multiple tags and anchors were placed on the robot to improve the understanding of its position and orientation estimates. This helped constrain its position and provided more reliability in its measurements [

15,

17]. An issue with previous work was that the systems localized each other in a relative sense; the nodes were localized relative to a base node. This was, however, not ideal for long-distance or multi-mesh scenarios as it did not ensure that each node was constrained to the same coordinate frame.

Once the position estimate was calculated, the system transitioned to position refinement techniques to improve further the time-varying sensor noise provided to the robot. The extended Kalman filter (EKF) and the unscented Kalman filter (UKF) have been used extensively in literature [

15,

16]. The UKF is sensitive to the inherent time invariant multipath effect and non-line-of-sight (NLOS) noise. To reduce the inherent error, different types of filter approaches have been used [

6,

10,

14,

26]. The noise increase was relatively significant for indoor scenarios because so many obstacles could block the direct line of sight of the UWB sensors. As such, using UWB sensors only for localization was not ideal. The noise inherent in the system was reduced, as demonstrated in this research, with the fusion of the wheel encoders and inertial measurement units (IMUs) to smooth out the position estimate.

In contrast to the aforementioned methods, the second method for position estimation found in literature followed a Monte Carlo approach [

18]. The algorithms followed a repeated random sampling process to arrive at a computationally convergent solution or solutions [

27,

28]. This sampling technique does provide possible issues with multiple equilibrium points and can be more computationally expensive than other options, depending on the number of sampling points. Efforts to enhance wireless network localization techniques have delved into integrating machine learning within the localization engine [

29]. Semi-supervised particle swarms have been augmented to improve the data point selection; however, currently, these systems still assume that the anchor nodes are static [

28]. The following section introduces this research problem formulation.

1.3. Contribution

This article focused on the localization of moving Unmanned Ground Vehicles (UGV) using stationary and moving UGV and Unmanned Aerial Vehicles (UAV). These vehicles had UWB sensors attached, enabling peer-to-peer ranging communication and allowing the robots to improve their respective localization using a cooperative network. The network helped provide a broad reach and create a local GPS network for the nearby robots.

The main contributions from the research are highlighted below:

Developed an initial localization framework where all agents could be non-stationary, and the UWB tags are offset from the center of the robot.

Designed a global/relative localization system based on the UKF for ground-based robots that could leverage ground and aerial range measurement data.

Created a pipeline for a mobile Ad-Hoc localization system.

The rest of the article was organized as follows. The environment and problem are described in the “Method” section, where the agents of the environment, the assumptions of the environment, and the detailed derivations of the proposed system are laid out. The “Simulation results” section contains the simulation results and interpretation. Finally, the work summary is presented in the “Conclusion and future work” section.

2. Method

The Ad-Hoc mesh network definition and implementation with the explanation of the information propagation throughout the network are explained. The following sections contributed to the initial position transform through a non-linear least squares regression, starting in

Section 2.3 and ending in

Section 2.4, followed by the position refinement using an unscented Kalman filter, starting in

Section 2.5 and ending in

Section 2.5.4.

2.1. Ad-Hoc Network

The proposed Ad-Hoc Mesh network followed a mobile decentralized, absolute localization Ad-Hoc system. The nodes in the network would consist of both stationary and mobile nodes. Due to the network’s mobile nature, a decentralized network was more robust to the constantly changing topography of the network graph. The network was fine-grained (range-based) thanks to the incorporated sensors in this research, the UWB sensors, measuring the range using ToF (Time of Flight). The system was also required to converge to an absolute localization system; this means that the robot should be able to transition from an initial relative localization system, and once it had reached the required threshold for transitioning, convert the relative coordinate system to become a global localization problem.

The algorithm follows a similar but simplified version of the Iterative Multilateration AHLoS (Ad-Hoc Localization System) system [

30]. The AHLoS system is a method to implement the localization of a mesh network in a flood-like fashion. The algorithm started with a graph that combined localized and unlocalized nodes; when an unknown node lies in the neighborhood of three or more anchors, the neighboring anchors’ positions and distances were used to estimate its position. Once the position of an unknown node was estimated, the node became an anchor and thus continued the cycle until all the nodes were localized.

This article modified the AHLoS system to account for mobile nodes coming out and into the range of other localized nodes. The current and historical range measurements were only collected from the localized anchors and their associated position at every time step. The range measurements were then paired with the node’s odometry measurement at the corresponding time. Linear extrapolation was employed if the odometry measurements were too far apart.

The localized nodes communicate to the target robot the range measurement and the localized node’s current global position. Given the distributed nature of the mobile Ad-Hoc network, each robot would maintain a history of measurement data from each robot. With nodes frequently entering and exiting the robot’s view and with histories stored locally, the system retains all information and remains unaffected by network topography changes. Given that the range measurement uses ToF for its UWB range estimation, no synchronization between nodes is required, thus making communication more straightforward. As for transmission scheduling, the system would adopt a carrier sense multiple access with collision avoidance (CSMA/CA) protocol to communicate with the other devices [

31]. The device first listens to the UWB channel to check for transmitting nodes. If another node is detected, the device waits a random interval for that node to finish before checking again and then sending its data. This technique is known to be unreliable due to the hidden node problem; however, given UWB’s low transmission rate, the UWB range packets being of small size, and the expected sparsity of the robot at a specific time (no more than ten robots at a time) the risk for package collision is low. The CSMA/CA would be able to handle such traffic with acceptable system delay [

32]. If, after sending, packets are not returned within a time frame T, the ranging handshake would be sent again.

Following the data collection, several data processing checks were employed before following through with the initial localization step:

If the current robot was stationary, then a minimum of three unique anchors were required

If the current robot was non-stationary and the anchors were stationary, then a minimum of two anchors were required

If the current robot was non-stationary and the anchors were non-stationary, then a minimum of one anchor was required

The robots were determined to be mobile by looking at the reported robot’s positions and ensuring the distances between points were above a certain tolerance, giving enough information for the non-linear least square algorithm to work.

To mitigate issues with sensor noise, a minimum number of data points was enforced to start the trilateration. Once the quota was fulfilled, the non-linear least square algorithm was performed as described in

Section 2.4, the robot was defined as localized, and the refining process using the UKF was started. However, if the node failed to localize within a specific time frame, the robot relied solely on odometry for the localization process and “positioned itself within its relative positioning system.” Suppose the node was at any time able to satisfy all the conditions to localize due to it coming in range with other localized nodes. In that case, the robot switched from using the relative positioning system to localizing itself in the new global reference frame and continued using the UKF to refine the results. At this point, the robot switched from an unlocalized node to a localized node. By constantly localizing and changing status once localized, the robots eventually converged to a network where all the nodes followed the same reference frame.

2.2. Parameter Definitions

A detailed definition of all parameters used in the system will be presented in this subsection. For convention, the vectors

and

,

represented the global position, relative position, and heading of the node, respectively. The heading was defined where 0 radians points in the positive x-axis of

, and a counterclockwise motion represented an increase in the heading.

represented the inter distance between two points in an agreed reference frame and can be formulated as,

The relative Euclidean distance between two nodes was thus denoted as

The environment consisted of a team of UAV, UGV, and static UWB anchors labeled 1,2,...N. The calculated global transformation matrices of the UGV were defined as the transformation from the center of the robot’s relative origin point. The starting position of the odometry was assumed to be

with 0 heading, linear and angular velocity, to the robots’ global position (

). The transformation thus translated and rotated the static odometry frame of the robot. To solve the problem, each

was able to access the odometry data, denoted as

with angular velocity

, linear velocity

, heading

also be represented as

,

represented the yaw rate. The robot had distance measurements to its neighbor

, i.e.,

. It was assumed that the robot was moving on a flat 2D plane. As such,

remained constant. The global position

was then derived from

by:

Each denoted the set of its neighbors as where . The neighbor transmitted its range measurements, and UWB anchor position, . The position measurement data was located in the robot’s global reference frame, i.e., . Each UWB anchor was annotated as with a unique identification k where , similarly each UWB tag was denoted as with a unique identification w where .

2.3. Initial Localization

An initial position estimate was made on the target node. The proposed algorithm solved this problem by structuring the estimate as a non-linear least square (NLS) problem. To simplify the construction of the NLS, the odometry and neighboring range data of a single robot node were used to calculate . The position estimate was later refined using the UKF.

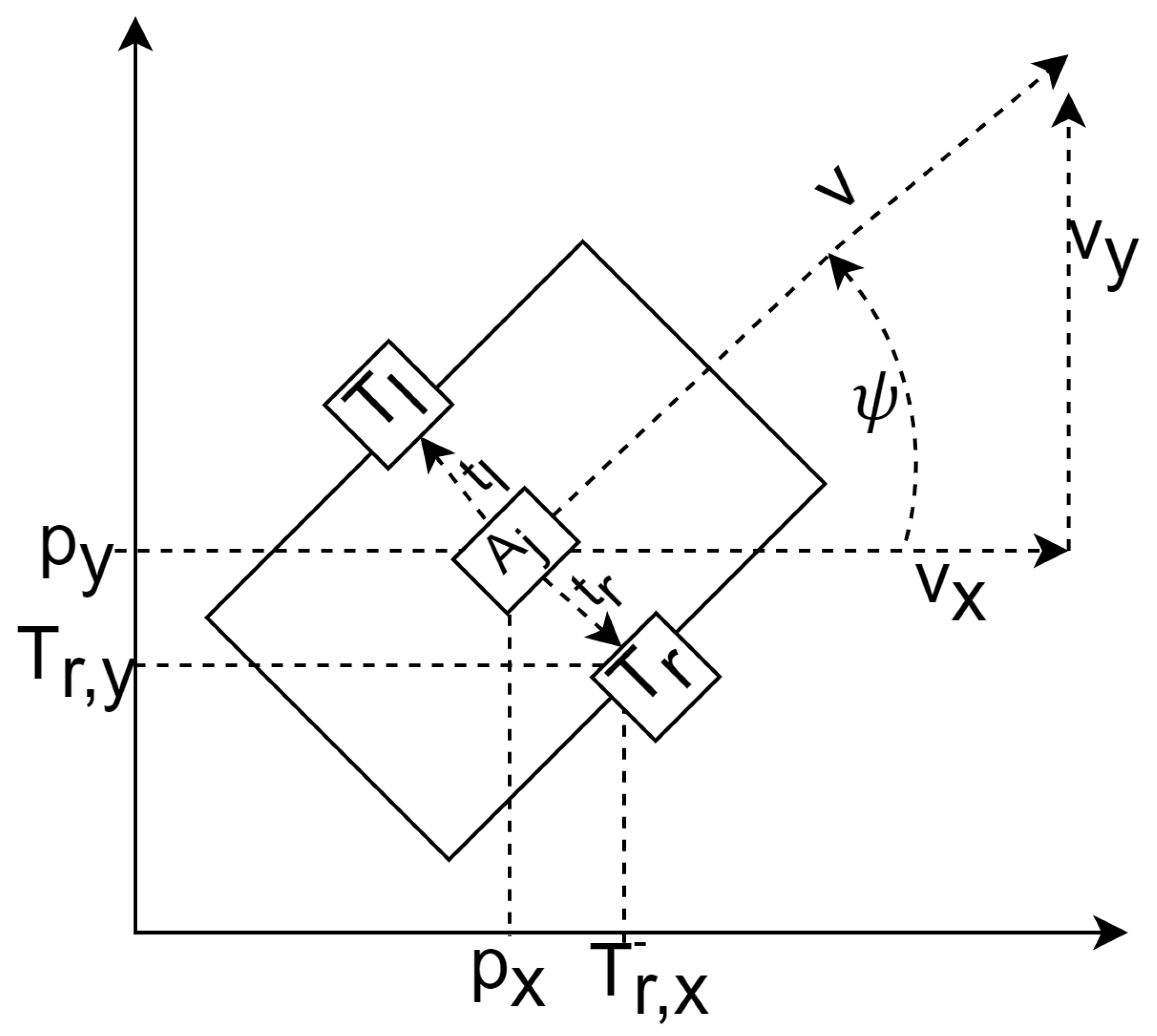

The geometry of the robot is defined and elaborated on below. There were two tags on the robot, which were separated by a fixed distance d. In the global reference frame, the tags were defined as . In the static inertial reference frame of the robot, the position of the tags was represented as where the left tag was at , and the right tag was at . In addition, the odometry position was measured from the robot’s defined origin, , which was the midpoint of the right and left tags. and therefore . The range recorded by the UWB tags was represented as , where was a UWB anchor located on a UGV neighbor.

The robot’s heading was considered when solving the non-linear least squares problem by compensating the target distance by rotating the tag pose. The relative tag position defined as , was represented as the vector rotated about the center of the robot () by the heading global heading .

2.4. Non-Linear Least Squares

The non-linear least squares optimization method seeked to minimize the following:

where

was the residual given by:

The range measurement

was defined as a function of

. In addition,

represented the current heading measured from the odometry and

represented the odometry position

:

The minimum value of

S could be found when the gradient was 0. As a result, Jacobian was used to calculate the partial derivative of all the

n variables needed.

Due to the gradient equations not having a closed solution, the variables

were solved iteratively with Newton’s iteration method.

The Jacobian is a matrix of partial derivatives as a function of constants, the independent variable, and the parameter. It was constructed as such:

A single row of the Jacobian (

J ) was derived from the

function below. In this matrix

represent

and

respectively.

Using Newton’s iteration algorithm for minimizing the error, the Jacobian in equation (14) and the residual functions

were calculated at each step. The residual function was redefined as

and the vectors

and

were introduced as:

Thus, the Newton iteration became:

where

was the new estimated parameter values and

was the last approximation. The initial estimate needed for the Newton approximation (

) was provided in the simulation. The parameter

can be initialized in one of two wats: it can either be set to an initial state of 0, or it can be provided a coarse estimate of the robot’s geographic area. The algorithm terminated when

, where

was a defined termination tolerance criterion.

This was the approach to using the non-linear least square optimization, and many other optimizations could be implemented to solve this problem. The scipy.optimize.least_squares function was used in this work to implement the non-linear least square calculations.

2.4.1. Monte Carlo Simulation

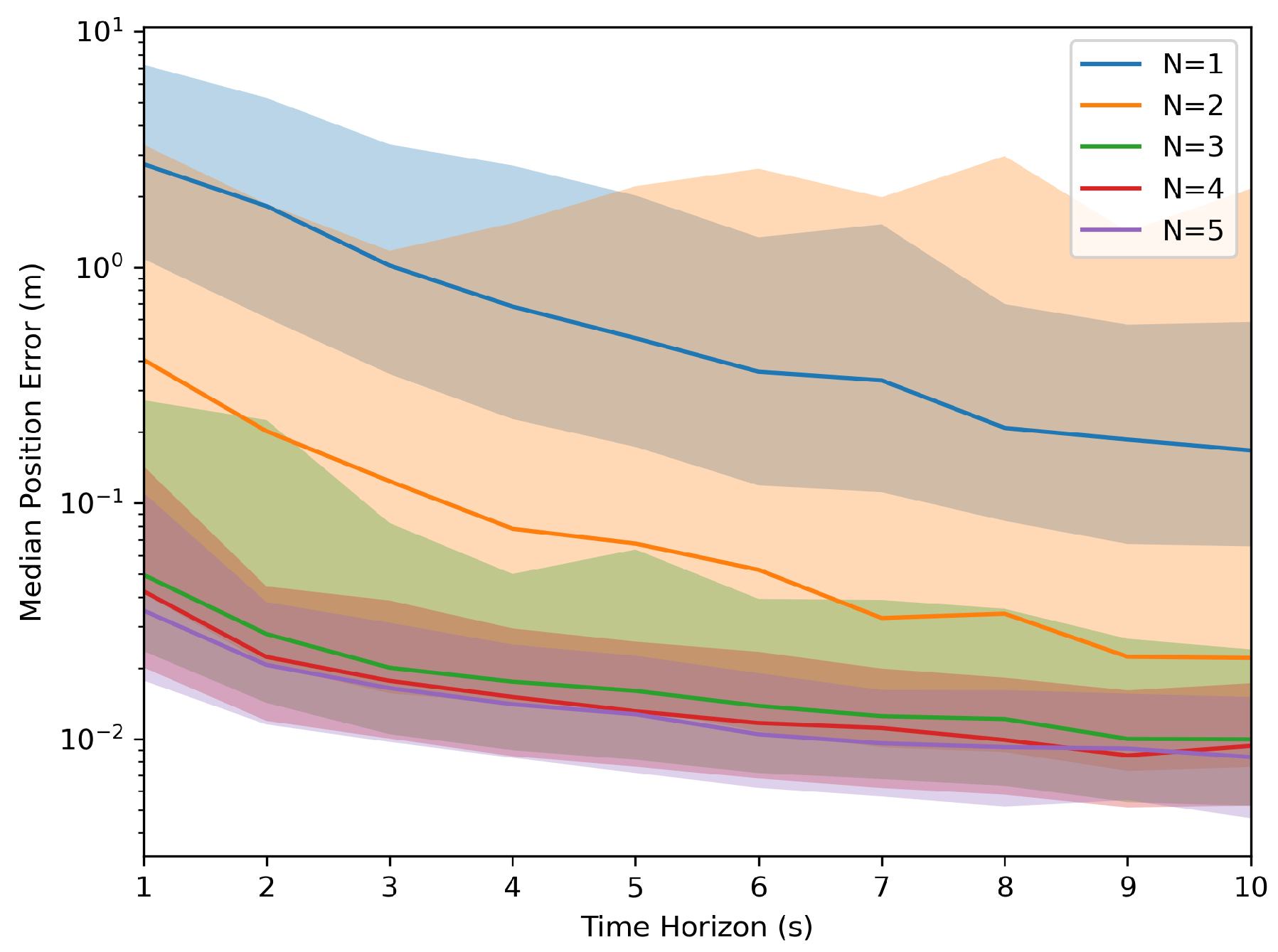

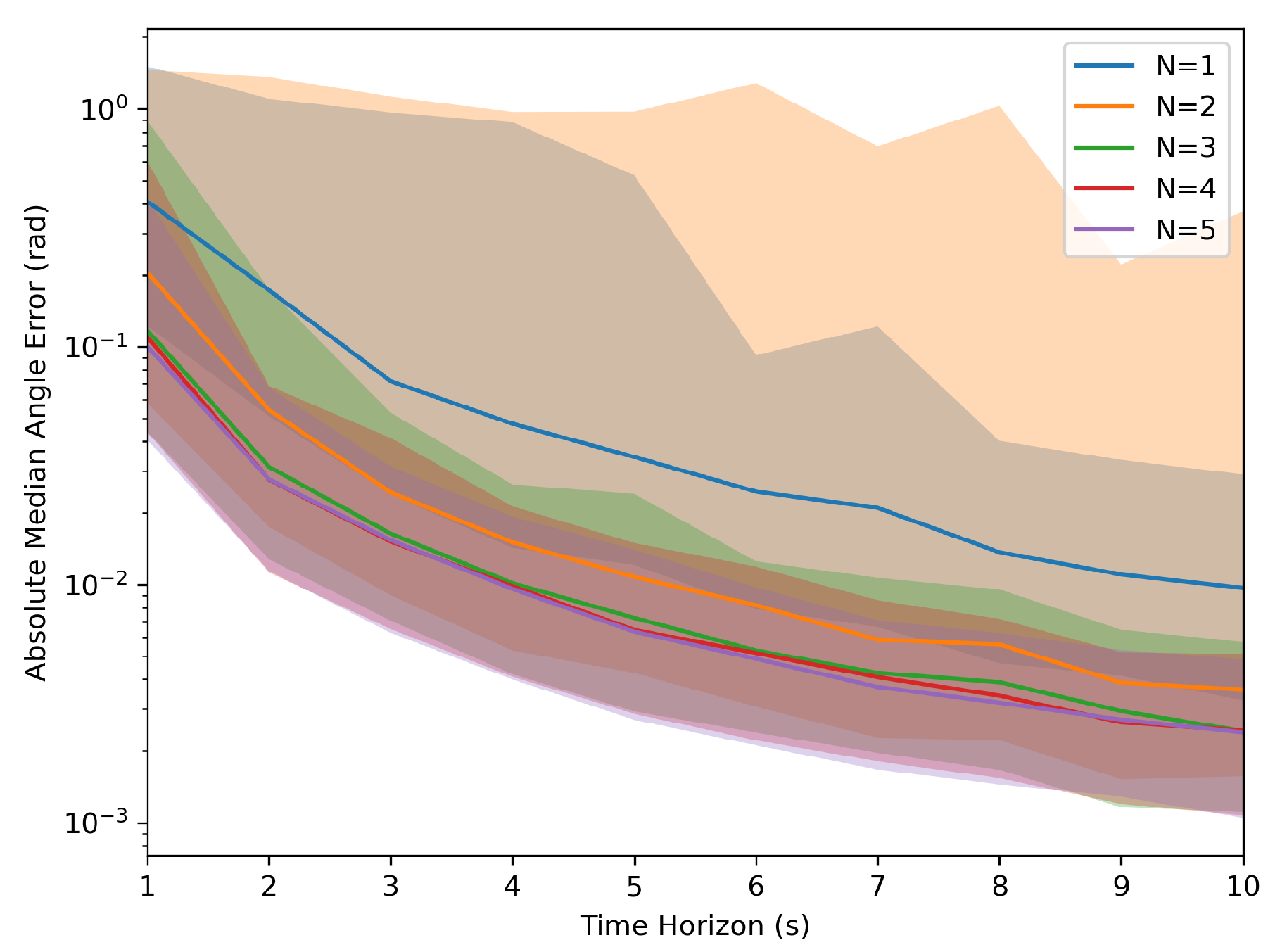

A Monte Carlo simulation was developed to demonstrate the convergence characteristics of the initial localization process. This simulation involved the manipulation of two critical variables: the number of robots traversing the environment,’N,’ and the time horizon for the odometry history, ’T.’ In the simulation, ’N’ random robots were created, each adhering to a differential drive motion model. At each time step (0.1s), these simulated robots moved with a random linear velocity selected from the range of [-vmax/2, vmax], favoring forward motion, and an angular velocity sampled from [-wmax, wmax], both vmax and wmax were set to 2. Simultaneously, one robot was randomly selected to transmit its range measurements at each time step.

The parameter ’T’ governed the duration before the localization process was conducted using the collected data. Random noise was introduced to the acquired odometry data, affecting both anchor poses and the target robot’s measurements. The noise levels were standardized at 0.5 cm for the x and y coordinates of the odometry anchor poses, 2 cm for the range measurements, and 1/1000 radians for angular measurements. The target robot was randomly placed within a 10x10m world with a randomly assigned orientation as demonstrated in

Figure 1. Additionally, the Non-Linear Least Squares’ initial guesses were set to zero.

Each parameter combination simulated 1000 samples to collect the aggregated information. These simulations generated values of ’T’ from 1s to 10s and an ’N’ ranging from 1 to 5 robots. The results, as depicted in

Figure 2 and

Figure 3, indicate that as the time horizon ’T’ increases, the Euclidean error relative to the target transformation matrix diminishes significantly. Furthermore, a higher number of robots present led to less variation in the error relative to the target across runs, and the system was less likely to reach an incorrect solution.

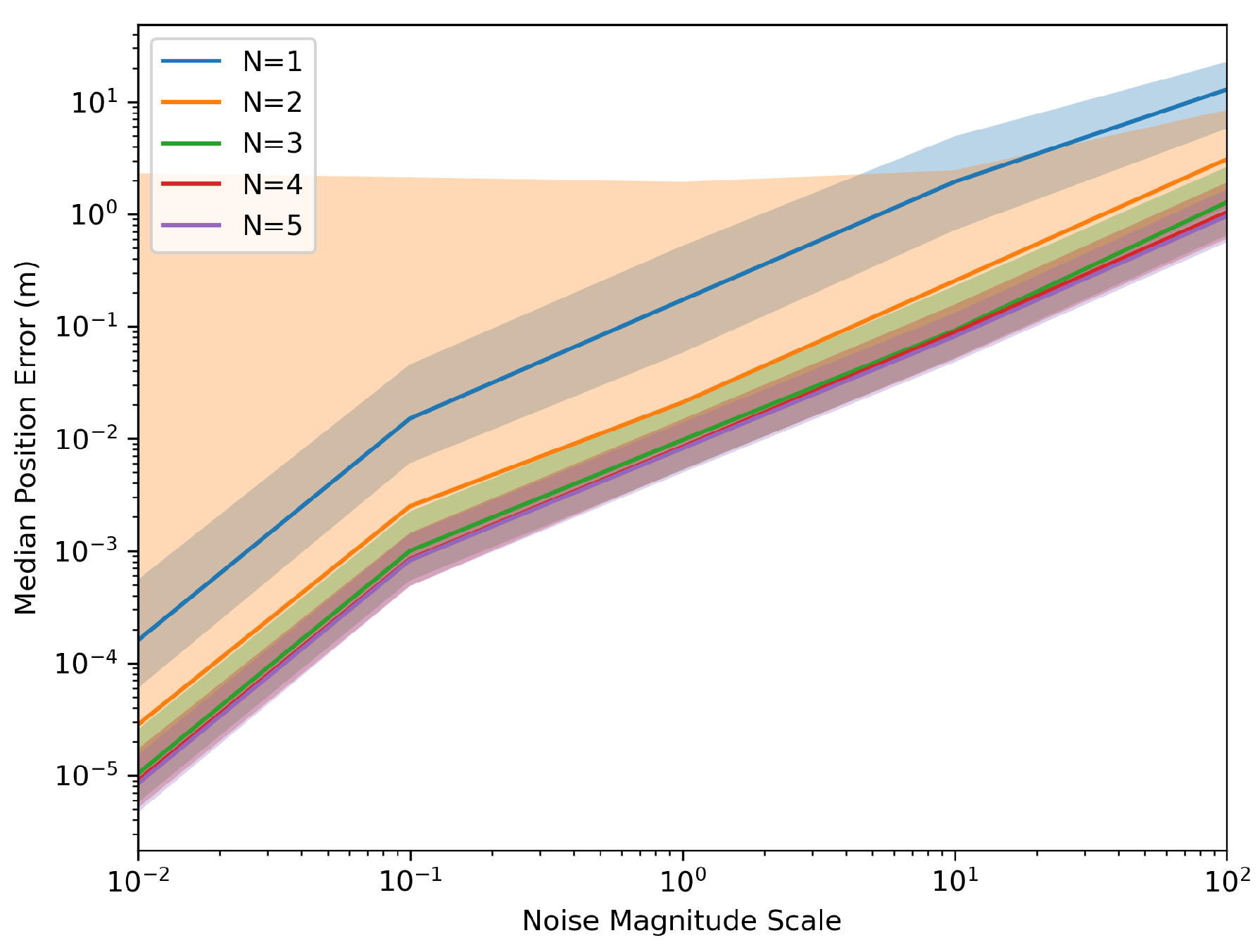

To demonstrate the robustness of the system relative to noise levels. The Monte Carlo simulations were generated again; however, this time with assuming a constant ’T’ of 10 second and a varying scale of the noise levels listed above at different magnitudes.

2.5. UKF Position Refinement

In the following sections, the robot has already been localized and given an initial position estimation. Further refinement of the results by reducing the sensor noise was achieved using a UKF [

33,

34]. Two types of data were used in the UKF. The first came from the ranging measurements at time step

k,

, between the tag

on the target node and a localized neighbor anchor

. The second piece of data came from the robot’s onboard odometry. The following sections go through the UKF model for the CTRV motion model.

2.5.1. Motion model

The UKF motion model that this article followed was the constant turn rate and velocity magnitude model (CTRV). This non-linear motion model assumed that the node could move straight but turned following a bicycle turn model with constant turn rate and linear velocity [

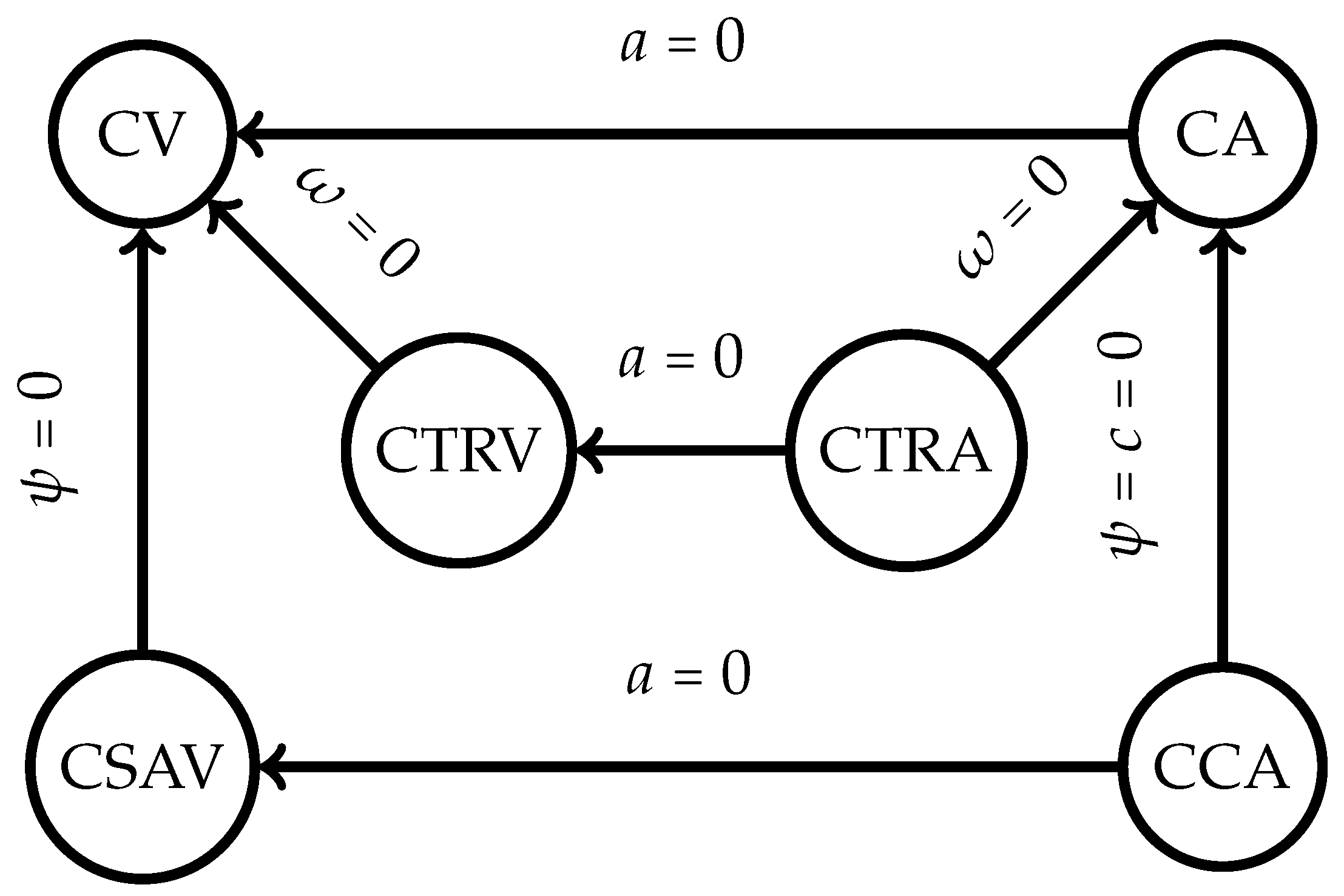

35]. Other types of motion models were available, as illustrated in

Figure 6. The constant velocity (CV) motion model was the simplest one available, a linear motion model where the linear acceleration was defined to be 0. The constant turn rate and acceleration (CTRA) is an expanded version of the CTRV motion model where the acceleration is accounted for and determined. Similarly to the CTRA motion model, the constant curvature and acceleration (CCA) model replaces the yaw rate of the model with the curvature instead. The constant steering angle and velocity (CSAV) model returns to having the acceleration constant and replacing the assumes constant steering angle instead. Each model has its own set of advantages and disadvantages; however, the CTRV motion model was chosen thanks to the balance in computation speed and accuracy in comparison with each different model [

35]. The CTRV was also selected because the odometry sensor output contains the same state variables. This research problem formulation assumes the nodes of interest are ground robots; however, a general motion model could be used. As long as the robot’s dynamics can be derived, the system’s motion model can be used with this implementation. For drones, the motion model is linear. This eliminates the need for an unscented Kalman filter model, allowing the use of a linear Kalman filter instead.

The state vector of the CTRV model was defined to be (

Figure 7):

where

was the node’s speed,

was the yaw angle which described the orientation according to

Figure 7, and

represented the yaw rate. The change of rate in the state was expressed as:

The current step in the process was denoted by

k, and the subsequent step by

. Consequently, the time difference was expressed as

. The process model, which predicted the state at

, could be decomposed into the deterministic and stochastic parts. To derive the deterministic part:

However, problems arose when

as this would cause a division by 0. A modified version of the motion model was thus defined for when

:

Next, the stochastic component was designated as the noise vector, encompassing the linear and yaw acceleration noises in a CTRV model. At time step

k, the noise

was characterized as follows:

where

was the linear acceleration noise defined as a normal distribution,

and

was the yaw acceleration noise defined as a normal distribution,

. It was assumed that the linear and angular acceleration would remain relatively constant during small time intervals, resulting in approximately linear motion between two timesteps (this assumption was valid unless the yaw rate was excessively high). As a result, the noise function was expressed as follows:

The full motion model was characterized as follows:

2.5.2. State Prediction

The first step to the unscented Kalman filter was the sigma point generation. Using sigma points is the main difference between the EKF and the UKF. The EKF linearizes the system through a Taylor-series expansion around the mean of the relevant Gaussian random variable (RV) [

34]. Using multiple points to sample the state distribution improved linearization of the non-linear space [

34]. When greater accuracy was required, a UKF was recommended compared to an EKF. Due to the addition and the linear scaling of the number of sigma points required based on the dimensionality of the state vector, UKFs are known to be more computationally expensive. Following a Gaussian distribution, the sigma points were generated from the last updated state and covariance matrix.

First, the augmented state and covariance matrix were formulated, with the normal state vector denoted by:

Having a dimension of

, the covariance matrix

took the form of an

matrix. Meanwhile, the noise vector was characterized as:

with dimension

, the augmented state matrix was represented as follows:

The augmented state dimension, determined as

, yielded a total of eight dimensions for the CTRV model, specifically

. Subsequently, the process noise covariance matrix was constructed, incorporating the expected acceleration and yaw rate Gaussian distribution into the matrix

Q, resulting in the following representation:

The augmented covariance matrix was thus represented as:

The augmented covariance matrix became a square matrix with size

. By convention, it was recommended to have at least

sigma points; in the case of the illustrated model, this would be

. The sigma points were then calculated as a list

where

was the scaling factor defined as

, where

dictated how far away from the mean the sigma points would be positioned [

36]. The parameter

defined the spread of the sigma points around

and was set to a small positive value between 0 and 1. By default, it was set to 1; however, this could be tuned if necessary. When taking

for the sigma points, there were multiple ways to take the square root of a matrix; however, Cholesky decomposition was recommended due to its computation speed. The output of this list should be in the format

.

Once the sigma points were calculated and transformed into their respective predicted state, each was transformed using the process model and then saved to another list now of format . The processed state sigma points were in the format

, the sigma points of the augmented , the process model function

Append to

In this step, the predicted state mean vector and the predicted covariance matrix were calculated by aggregating the sigma points using a weighted average of the points. There were multiple ways to initialize the weights for this part; however, this paper sticks to a standard Gaussian distribution.

where

and

were the same from the sigma point calculation 2.5.2.

was an extra parameter used to incorporate any prior knowledge of the distribution of the state. In most cases, this paper’s

was left to be 0. Once the weights were calculated, the predicted mean and covariance were calculated respectively:

2.5.3. Measurement Prediction

The measurement stage processes the sigma points into predicted measurement outputs. To accomplish this, a measurement function

was developed for each of the sensor types, where

was the output of the process model in the prediction step 1. The measurement vector was

and

the inherent measurement noise.

Due to this paper tackling the fusion of two sensor types, the odometry data and the UWB range data, two different

functions needed to be defined. For the odometry data, the state vector was defined as:

The measurement transformation function for the UWB ranging sensors took a similar format to the non-linear least square

derivation (

Section 2.4) where the predicted UWB range measurement was derived given the estimated sigma point state (

), the known anchor point position (

) and the tag position relative to the node’s reference frame (

).

The set of measurement sigma points was denoted as . If the measurement used odometry, the output shape should be , and if it was UWB range data, the output should be .

Once the measurement sigma points were collected, the weighted predicted measurement means (

), and the predicted measurement covariance (

) were calculated, similar to how the mean and the covariance from the predicted state sigma points were calculated. The weights were defined in

Section 1.

The measurement noise covariance was also defined

where the vector

was the measurement noise for the sensor’s state. For the odometry sensor,

R would be defined as:

While the

R matrix for the UWB range sensor would be defined as:

Using the defined

R matrices, the predicted measurement covariance could be defined.

2.5.4. State Update

The actual state vector could be updated after defining the predicted state and measurement. First, the cross-correlation between the state points in state space and the measurement space (

) had to be computed:

From there, the Kalman gain was calculated:

The current state and covariance matrix was then updated respectively, where

was the currently retrieved measurement data:

3. Simulation Results

ROS Melodic using Gazebo 9 was used to simulate the described environment and used a set of 3 objects/robots to model the world. The first object was a simple static UWB beacon represented by a solid cylinder meant to represent a generic UWB anchor such as a tripod-mounted Decawave DWM1000 Module, this object would be given an agreed-upon location in global coordinates such as GPS, and as such, the estimated position would be static and with high precision. Decawave claims they can support up to 6.8 Mbps data rates with frequencies of 3.5GHz-6.5GHz, a centimeter-level accuracy, and maintain a connection for up to a range of 290 meters.

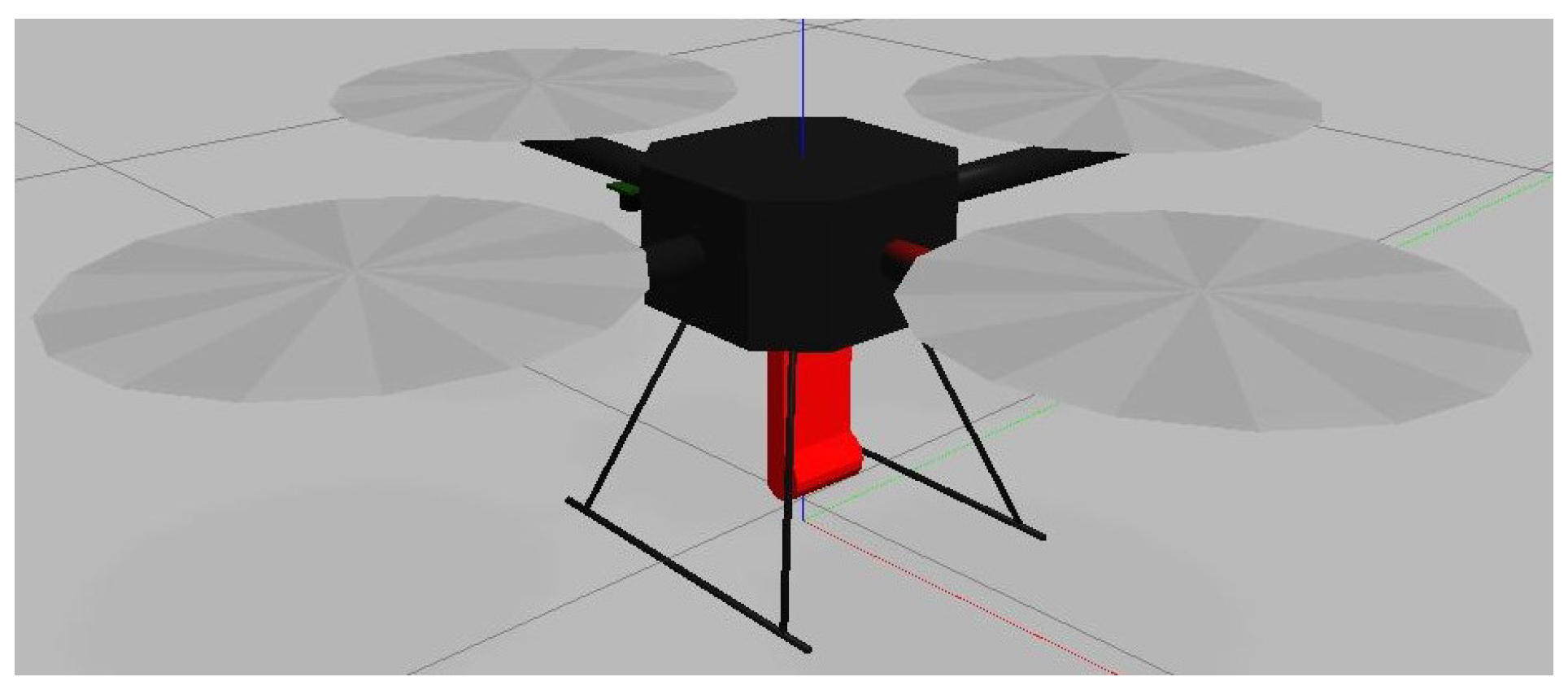

The second object was a Hector quadrotor, in

Figure 8, with a UWB anchor mounted on the bottom of the drone [

37]. This drone was meant to represent a moving UWB anchor. The drone would also be assumed to have been localized using GPS or cameras to represent a high-precision localization estimate. Instead of GPS, drones could use feature positioning based on public geographical information to remove the need to utilize GPS and generate high-accuracy localization [

38,

39]. In addition, as previously demonstrated in the Monte Carlo simulations in

Section 2.3 in

Figure 4 and

Figure 5, even when noise is present in the system if the time horizon is sufficiently large, the system will be able to derive it’s initial position parameters relatively accurately. Thus, even if the "localized nodes" contain noise information, the system will be able to provide an initial estimate of the position.

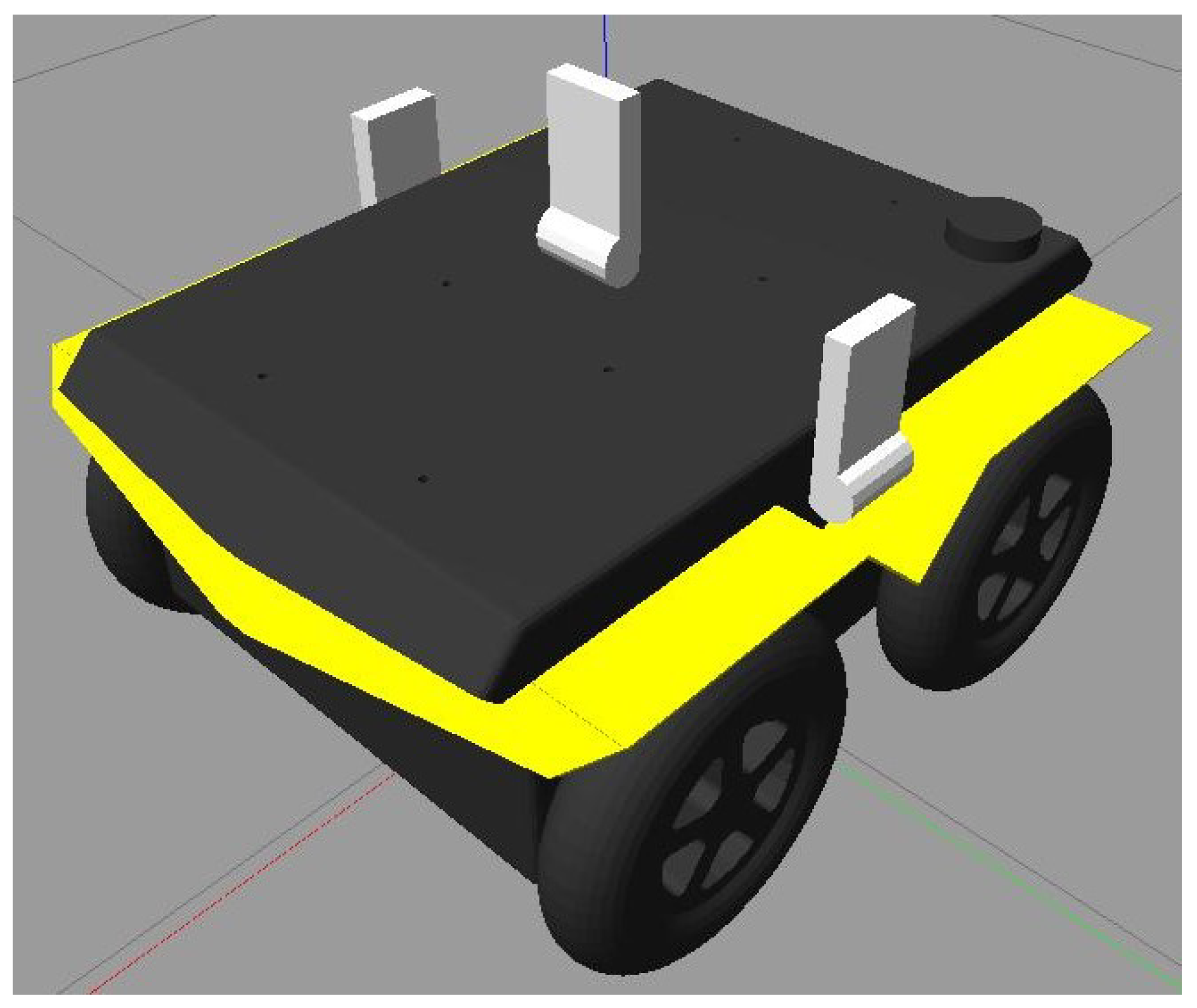

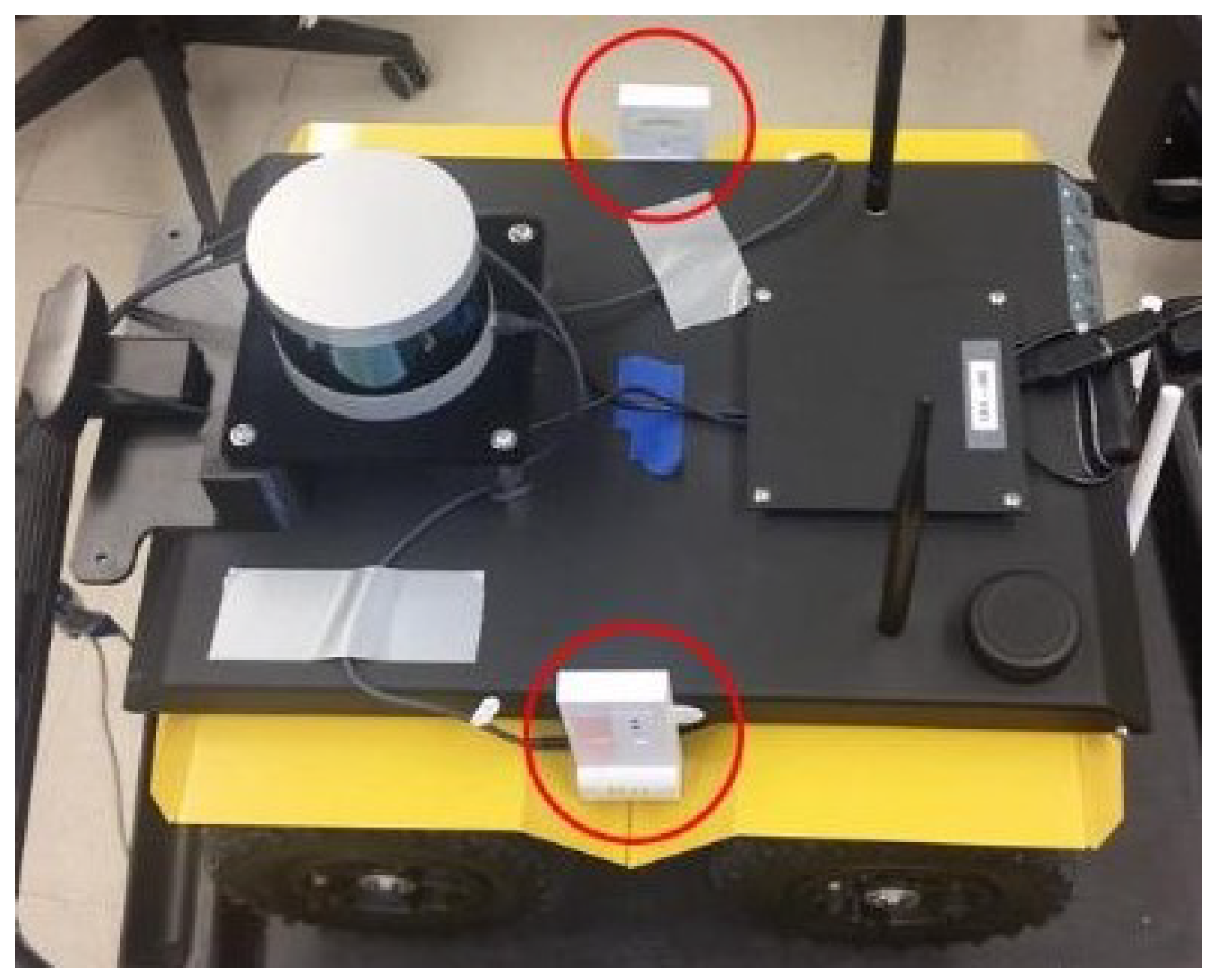

The final object was the implementation of the Clearpath Jackals robots depicted in

Figure 9 and

Figure 10 with two UWB tags on the top of its hood, equally spaced from the center axis of the robot with an extra centrally located UWB anchor. The tags allowed the robot to position itself, while the anchors allowed information propagation to help other robots localize themselves. In this study, it was assumed that the initial position and orientation of the Jackals were not provided. However, an initial estimate could be provided in the initial localization step to improve and reduce the initial position estimate error and thus improve the accuracy. As such, the localization of the Jackal was the main focus of the presented work.

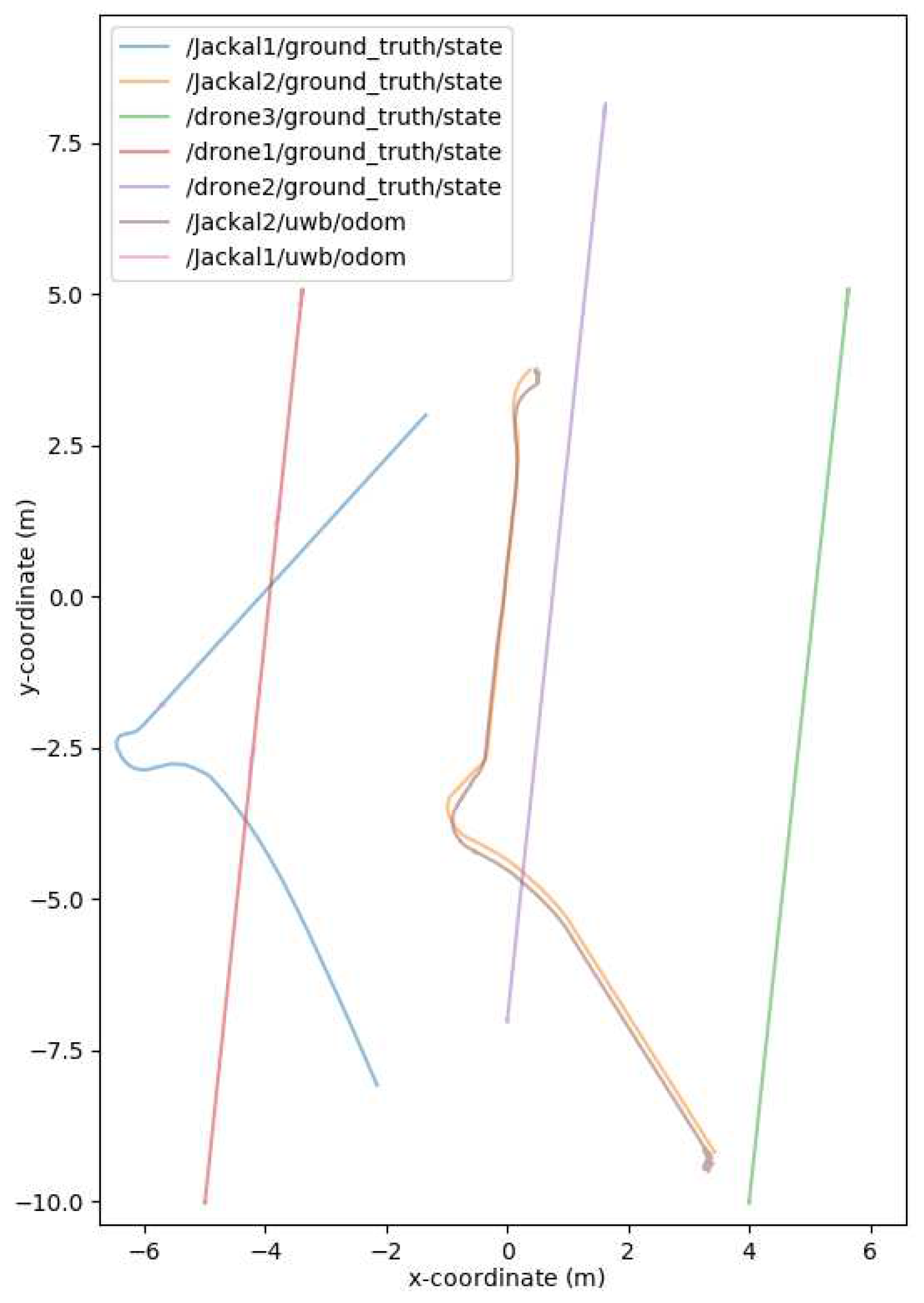

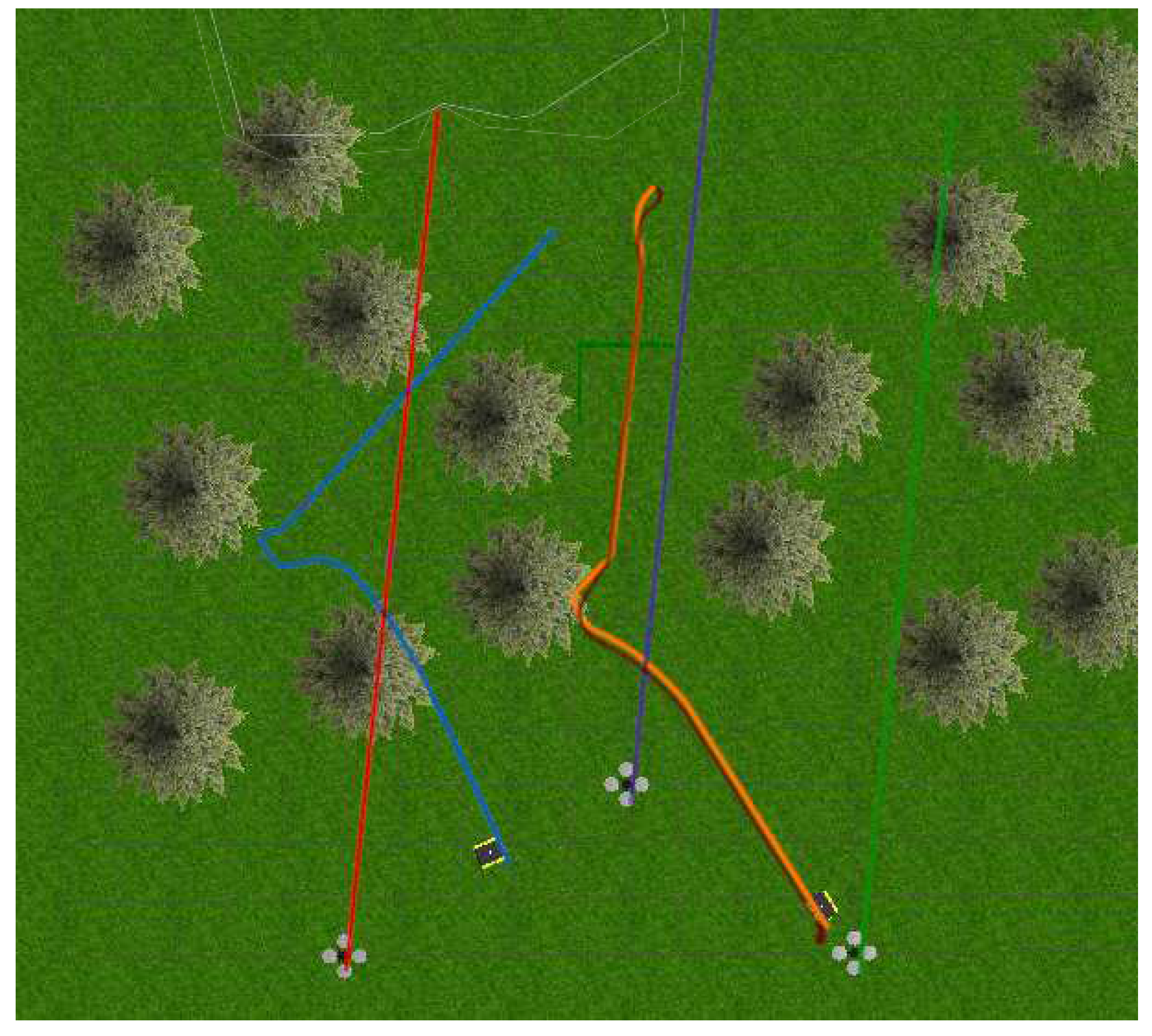

The world consisted of two Jackals and three drones to simulate a mobile surveying endeavor, as presented in

Figure 11 and

Figure 12. The drones represented the mobile anchors as they flew over the trees and had GPS to help localize themselves with acceptable accuracy. The drones also helped the ground Jackals, who did not have GPS. The process proceeded as such: initially, the drones flew over the trees to achieve GPS localization in a global reference frame; once localized, the Jackals developed their initial position estimation based on the Hector drones. Once the Jackals had localized themselves, they performed their abstract task. Once the task was complete, the drones moved to another location to be surveyed, and the Jackals followed suit. When the Jackals moved to the new location, the drones were too far or obstructed to send UWB ranging information, so to continue the localization, the two Jackals sent their inter-robot range measurements as well using their onboard odometry. Once the Jackals approached the new survey site, the UWB range measurements from the Hector drones helped correct any deviation during the offline path. The simulated noise measurements were based on the empirical data collected from the DWM1001-DEV in range increments of 1 meter up to 141 meters. The standard deviations of the measurements were recorded. The measurements also included scenarios when the UWB sensor was occluded by thin objects (

cm) and thick objects (

cm). The distributions can be found in the Gazebo UWB Plugin

https://github.com/AUVSL/UWB-Gazebo-Plugin/blob/master/src/UwbPlugin.cpp.

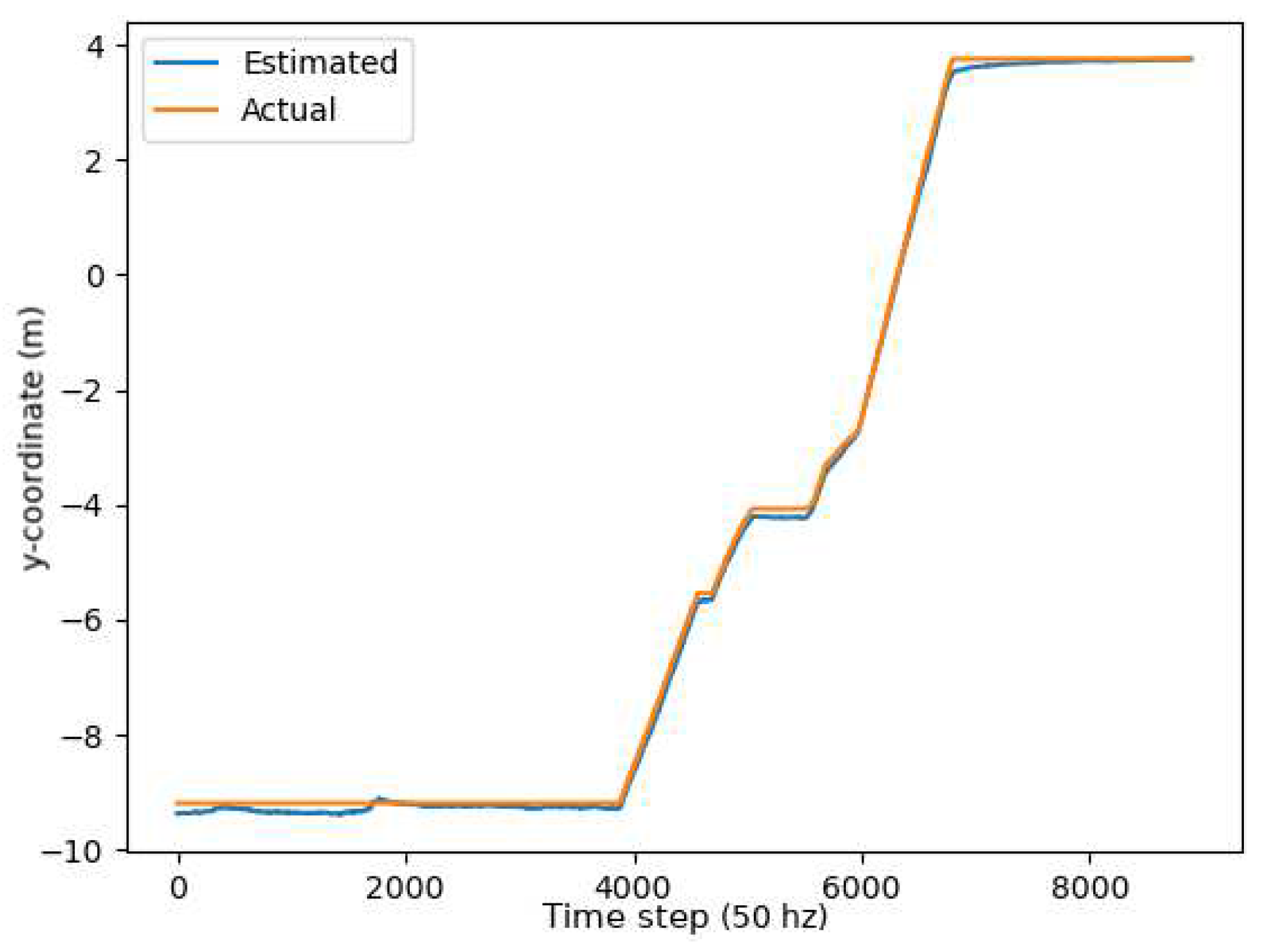

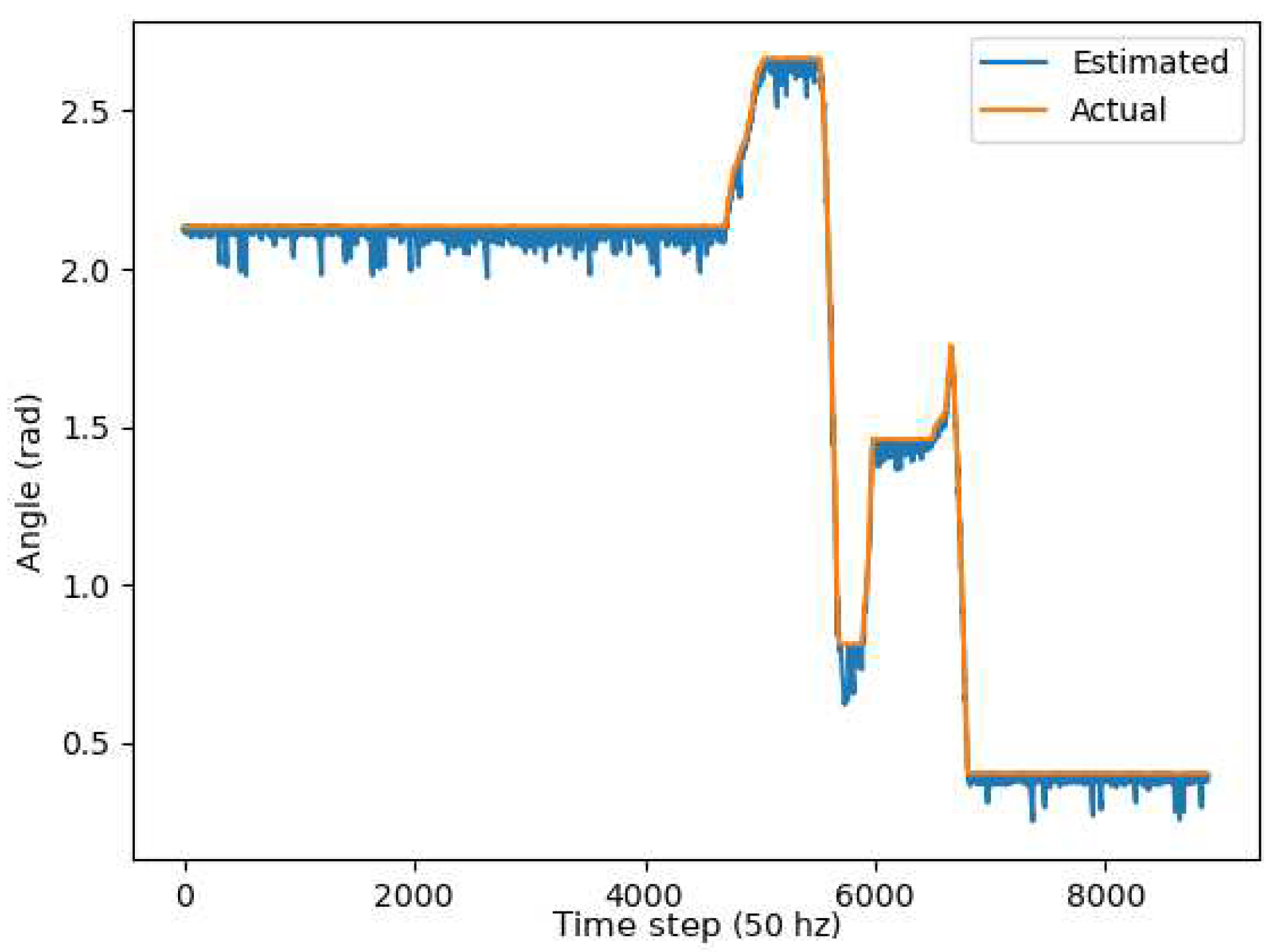

Two metrics were used to determine the accuracy of the unscented Kalman filter: the RMSE (Root Mean Squared error) and Mean Absolute Error (MAE). As demonstrated through the graphs, in

Figure 13,

Figure 14 and

Figure 15, the unscented Kalman filter developed for the Jackals follows the ground truth very closely with minimal RMSE values of no greater than 0.10921 m for the positioning errors; however, it could be noted that the unscented Kalman filter had a more significant difficulty estimating the velocity and the yaw rate, with larger errors of 0.24959

and 0.65856

, though due to the difference in scales of the measurement values, this error was deemed to be acceptable. The MAE measurement values were relatively consistent, hovering around between 0.27

and 0.3

, which informs of a possible issue of systematic bias introduced in the system where the position of the system was consistently offset from the actual target. As noticed through the x,

Figure 13, and y,

Figure 14, position graphs, if noticed closely, the estimated values for the x and y position, when the values seem steady, tend to lag below the ground-truth value. Further tuning of the unscented Kalman filter model’s parameters, such as the sensor noise matrix

R, and the sigma point generation parameters

and

, could increase accuracy in the model. The MAE pose is the average position accuracy, a measure of how close, on average, the position of the robot was to the actual position of the robot using Euclidean distances

. Thus, on average, the robot was about 15 cm away from its target position, as noticed by the RMSE, which was often with errors that had lower magnitudes.

Current literature does not provide a good reference comparison to this system. This is due to systems assuming a static environment or a static leader. However, there is no system where all anchors can move at once and still be localized.

4. Conclusion and Future Work

This article discussed UWB localization using both stationary and mobile nodes. Specifically, it introduced an Ad-Hoc method to implement localization through a modified version of the AHLoS system. In this system, each node starts unlocalized or localized, and the localized nodes serve as reference points to the unlocalized nodes. Once there was enough information, the unlocalized nodes could determine a translation-rotation matrix from the relative position to the global reference frame. This was done using a non-linear least squares function which also accounts for the tag sensor’s offset range measurements. The second phase of the paper transitioned to the UKF-based measurement data refinement stage, where both the globally translated odometry and the offset range measurements were fed into a CTRV motion-based UKF model to improve the long-term accuracy of the positioning system. Simulations were conducted to help provide proof of validity to the algorithms.

The current system assumes a 2D setup for the robot, which would cause inaccuracies in an inclined outdoor situation as ranging estimation for the robot’s z value would not be correctly estimated, as the robot’s 3D dynamics would not be reflected. Another problem is that even though the possibility of getting an inaccurate initial position estimate decreases over time, there are no guarantees that the system will converge with this current system. More robust optimization systems will be explored in the future with explicit guarantees. In addition, the system still requires fine-tuning of specific constants such as the initial P, Q, and R matrics of the UKF, and the time horizon ’T’ and the number of robots N for the initial localization, as suboptimal performance would be achieved if the system is not correctly estimated.

In the future, the UKF will extend the account for the robot’s yaw, pitch, and roll to better account for the diverse terrain and not assume that it was on flat terrain. To further improve the accuracy, additional sensors such as cameras and land-based markers to get a better position estimate and optical flow sensors to improve the velocity calculations will help further improve the UKF results. In addition, the development of the initial position algorithm to account for a wider variety of scenarios and improve the overall accuracy will be explored. Furthermore, a more robust adaptive Kalman filter approach for UWB sensors, to account for multipath and non-line-of-sight (NLOS) measurement error, will be explored to account for a more extensive range of terrain and reduce overall position error.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lu, W.; Rodríguez F., S.A.; Seignez, E.; Reynaud, R. Lane Marking-Based Vehicle Localization Using Low-Cost GPS and Open Source Map. Unmanned Systems 2015, 03, 239–251. [Google Scholar] [CrossRef]

- Zhao, L.; Wang, D.; Huang, B.; Xie, L. Distributed Filtering-Based Autonomous Navigation System of UAV. Unmanned Systems 2014, 03, 17–34. [Google Scholar] [CrossRef]

- Miller, M.; Soloviev, A.; Haag, M.; Veth, M.; Raquet, J.; Klausutis, T.; Touma, J. Navigation in GPS Denied Environments: Feature-Aided Inertial Systems. 2011, 116, 7–8. [Google Scholar]

- Hussein, H.H.; Radwan, M.H.; El-Kader, S.M.A. Proposed Localization Scenario for Autonomous Vehicles in GPS Denied Environment BT - Proceedings of the International Conference on Advanced Intelligent Systems and Informatics 2020, Cham, 2021; pp. 608–617.

- Alarifi, A.; Al-Salman, A.; Alsaleh, M.; Alnafessah, A.; Al-Hadhrami, S.; Al-Ammar, M.A.; Al-Khalifa, H.S. Ultra Wideband Indoor Positioning Technologies: Analysis and Recent Advances. Sensors (Basel, Switzerland) 2016, 16, 707. [Google Scholar] [CrossRef]

- Geng, S.; Ranvier, S.; Zhao, X.; Kivinen, J.; Vainikainen, P. Multipath propagation characterization of ultra-wide band indoor radio channels. In Proceedings of the 2005 IEEE International Conference on Ultra-Wideband; 2005; pp. 11–15. [Google Scholar] [CrossRef]

- Schejbal, V.; Cermak, D.; Nemec, Z.; Bezousek, P.; Fiser, O. Multipath Propagation Effects of UWB Radars. In Proceedings of the 2006 International Conference on Microwaves, Radar Wireless Communications; 2006; pp. 1188–1191. [Google Scholar] [CrossRef]

- Van Herbruggen, B.; Jooris, B.; Rossey, J.; Ridolfi, M.; Macoir, N.; Van den Brande, Q.; Lemey, S.; De Poorter, E. Wi-PoS: A Low-Cost, Open Source Ultra-Wideband (UWB) Hardware Platform with Long Range Sub-GHz Backbone. Sensors (Basel, Switzerland) 2019, 19, 1548. [Google Scholar] [CrossRef]

- Thomä, R.; Hirsch, O.; Sachs, J.; Zetik, R. UWB Sensor Networks for Position Location and Imaging of Objects and Environments. 2007, Vol. 2007, pp. 1–9. [CrossRef]

- García Núnez, E.; Poudereux, P.; Hernández, .; Ureña, J.; Gualda, D. A robust UWB indoor positioning system for highly complex environments; 2015; pp. 3386–3391. [CrossRef]

- Guo, K.; Li, X.; Xie, L. Ultra-Wideband and Odometry-Based Cooperative Relative Localization With Application to Multi-UAV Formation Control. IEEE Transactions on Cybernetics 2020, 50, 2590–2603. [Google Scholar] [CrossRef] [PubMed]

- Guo, K.; Qiu, Z.; Meng, W.; Xie, L.; Teo, R. Ultra-wideband based cooperative relative localization algorithm and experiments for multiple unmanned aerial vehicles in GPS denied environments. International Journal of Micro Air Vehicles 2017, 9, 169–186. [Google Scholar] [CrossRef]

- Lensund, F.; Sjöstedt, M. Local positioning system for mobile robots using ultra wide-band technology. Student thesis, 2018.

- Liu, J.; Pu, J.; Sun, L.; He, Z. An Approach to Robust INS/UWB Integrated Positioning for Autonomous Indoor Mobile Robots. Sensors (Basel, Switzerland) 2019, 19, 950. [Google Scholar] [CrossRef] [PubMed]

- Nguyen, T.; Zaini, A.H.; Wang, C.; Guo, K.; Xie, L. Robust Target-Relative Localization with Ultra-Wideband Ranging and Communication. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA); 2018; pp. 2312–2319. [Google Scholar] [CrossRef]

- Silva, O.D.; Mann, G.K.I.; Gosine, R.G. Development of a relative localization scheme for ground-aerial multi-robot systems. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems; 2012; pp. 870–875. [Google Scholar] [CrossRef]

- Yu, X.; Li, Q.; Queralta, J.P.; Heikkonen, J.; Westerlund, T. Cooperative UWB-Based Localization for Outdoors Positioning and Navigation of UAVs aided by Ground Robots. ArXiv 2021, abs/2104.00302. [Google Scholar]

- Güler, S.; Abdelkader, M.; Shamma, J.S. Peer-to-Peer Relative Localization of Aerial Robots With Ultrawideband Sensors. IEEE Transactions on Control Systems Technology 2020, pp. 1–16. [CrossRef]

- Zaeemzadeh, A.; Joneidi, M.; Shahrasbi, B.; Rahnavard, N. Robust Target Localization Based on Squared Range Iterative Reweighted Least Squares. Proceedings - 14th IEEE International Conference on Mobile Ad Hoc and Sensor Systems, MASS 2017 2017, pp. 380–388. [CrossRef]

- Beck, A.; Stoica, P.; Li, J. Exact and approximate solutions of source localization problems. IEEE Transactions on Signal Processing 2008, 56, 1770–1778. [Google Scholar] [CrossRef]

- Yin, F.; Fritsche, C.; Gustafsson, F.; Zoubir, A. TOA-based robust wireless geolocation and Cramér-Rao lower bound analysis in harsh LOS/NLOS environments. IEEE Transactions on Signal Processing 2013, 61, 2243–2255. [Google Scholar] [CrossRef]

- Chartrand, R.; Yin, W. Iteratively reweighted algorithms for compressive sensing. 2008 IEEE International Conference on Acoustics, Speech and Signal Processing 2008, pp. 3869–3872. [CrossRef]

- Gao, K.; Zhu, J.; Xu, Z. Majorization-Minimization-Based Target Localization Problem from Range Measurements. IEEE Communications Letters 2020, 24, 558–562. [Google Scholar] [CrossRef]

- Kim, E.; Kim, K. Distance estimation with weighted least squares for mobile beacon-based localization in wireless sensor networks. IEEE Signal Processing Letters 2010, 17, 559–562. [Google Scholar] [CrossRef]

- Sichitiu, M. Localization of wireless sensor networks with a mobile beacon. 10 2004, pp. 174–183. [CrossRef]

- Fan, Q.; Sun, B.; Sun, Y.; Wu, Y.; Zhuang, X. Data Fusion for Indoor Mobile Robot Positioning Based on Tightly Coupled INS/UWB. Journal of Navigation 2017, 70, 1079–1097. [Google Scholar] [CrossRef]

- Harrison, R.L. Introduction To Monte Carlo Simulation. AIP conference proceedings 2010, 1204, 17–21. [Google Scholar] [CrossRef]

- Yoo, J.; Kim, W.; Kim, H.J. Distributed estimation using online semi-supervised particle filter for mobile sensor networks. IET Control Theory Applications 2015, 9, 418–427. [Google Scholar] [CrossRef]

- Praveen Kumar, D.; Amgoth, T.; Annavarapu, C.S.R. Machine learning algorithms for wireless sensor networks: A survey. Information Fusion 2019, 49, 1–25. [Google Scholar] [CrossRef]

- Savvides, A.; Han, C.C.; Strivastava, M.B. Dynamic Fine-Grained Localization in Ad-Hoc Networks of Sensors. In Proceedings of the Proceedings of the 7th Annual International Conference on Mobile Computing and Networking, New York, NY, USA, 2001; MobiCom ’01, pp. 166–179. [CrossRef]

- Shenoy, N.; Hamilton, J.; Kwasinski, A.; Xiong, K. An improved IEEE 802.11 CSMA/CA medium access mechanism through the introduction of random short delays. 2015 13th International Symposium on Modeling and Optimization in Mobile, Ad Hoc, and Wireless Networks, WiOpt 2015 2015, pp. 331–338. [CrossRef]

- Lu, K.; Wang, J.; Wu, D.; Fang, Y. Performance of a burst-frame-based CSMA/CA protocol for high data rate ultra-wideband networks: Analysis and enhancement. ACM International Conference Proceeding Series 2006, 191. [CrossRef]

- Julier, S.; Uhlmann, J.; Durrant-Whyte, H. A new approach for filtering nonlinear systems. Proceedings of 1995 American Control Conference - ACC’95 1995, 3, 1628–1632 vol.3. [Google Scholar]

- Merwe, R.; Wan, E. Sigma-Point Kalman Filters for Probabilistic Inference in Dynamic State-Space Models. Proceedings of the Workshop on Advances in Machine Learning 2003.

- Schubert, R.; Richter, E.; Wanielik, G. Comparison and evaluation of advanced motion models for vehicle tracking. In Proceedings of the 2008 11th International Conference on Information Fusion; 2008; pp. 1–6. [Google Scholar]

- Julier, S.; Uhlmann, J.K. A General Method for Approximating Nonlinear Transformations of Probability Distributions. Technical report, 1996.

- Meyer, J.; Sendobry, A.; Kohlbrecher, S.; Klingauf, U.; Von Stryk, O. Comprehensive Simulation of Quadrotor UAVs Using ROS and Gazebo. 2012, Vol. 7628, pp. 400–411. [CrossRef]

- Bianchi, M.; Barfoot, T.D. UAV Localization Using Autoencoded Satellite Images. IEEE Robotics and Automation Letters 2021, 6, 1761–1768. [Google Scholar] [CrossRef]

- Gupta, A.; Fernando, X. Simultaneous Localization and Mapping (SLAM) and Data Fusion in Unmanned Aerial Vehicles: Recent Advances and Challenges. Drones 2022, Vol. 6, Page 85 2022, 6, 85. [Google Scholar] [CrossRef]

- Keiller, J. Localization for teams of autonomous ground vehicles. M.s. thesis, University of Illinois at Urbana-Champaign, Champaign, IL, 2019.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).