Submitted: 10 January 2024

Posted: 10 January 2024

Abstract

Keywords:

0. Introduction

1. Related Works

1.1. FV Identification Method Based on Deep Learning

1.2. Kernel Size in Convolutional Layers

1.3. Attention Mechanism

2. Proposed Method

2.1. Method Flow and Overall Network Structure

2.2. Dual-channel Network Architecture

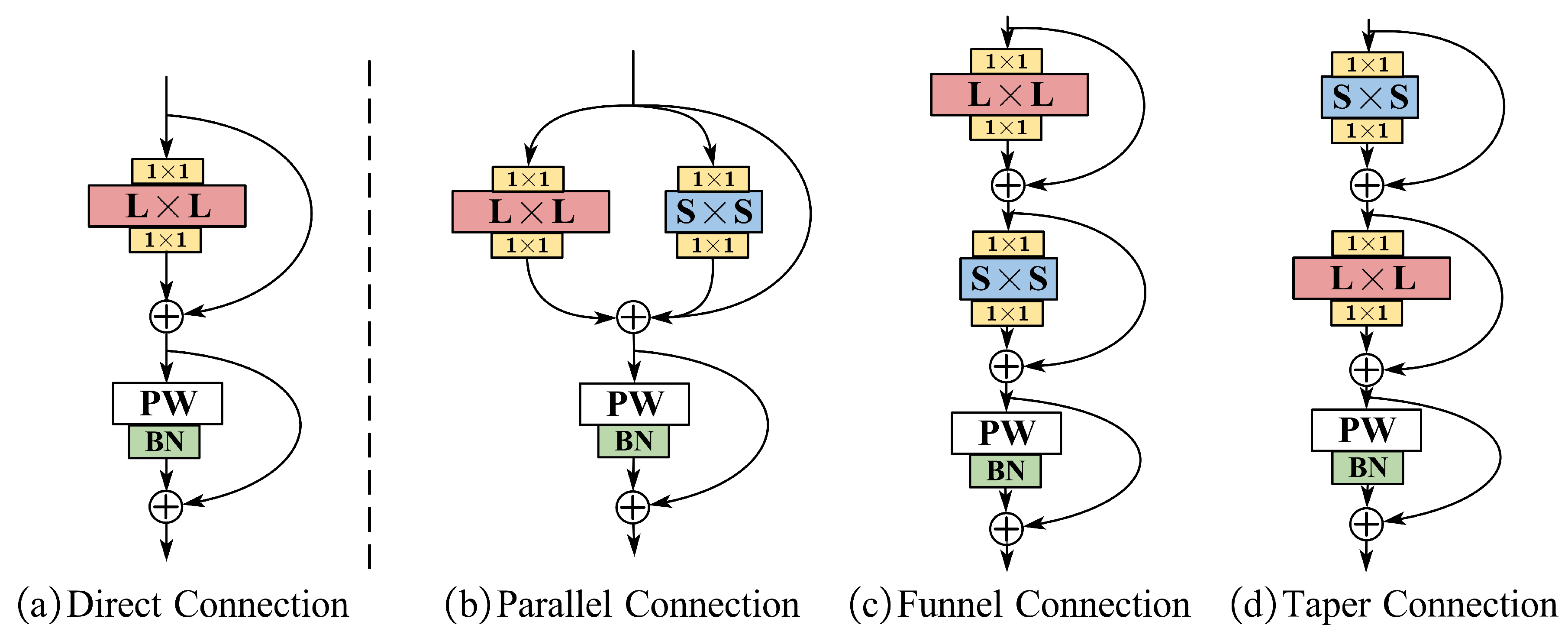

2.3. Design of the LK Block

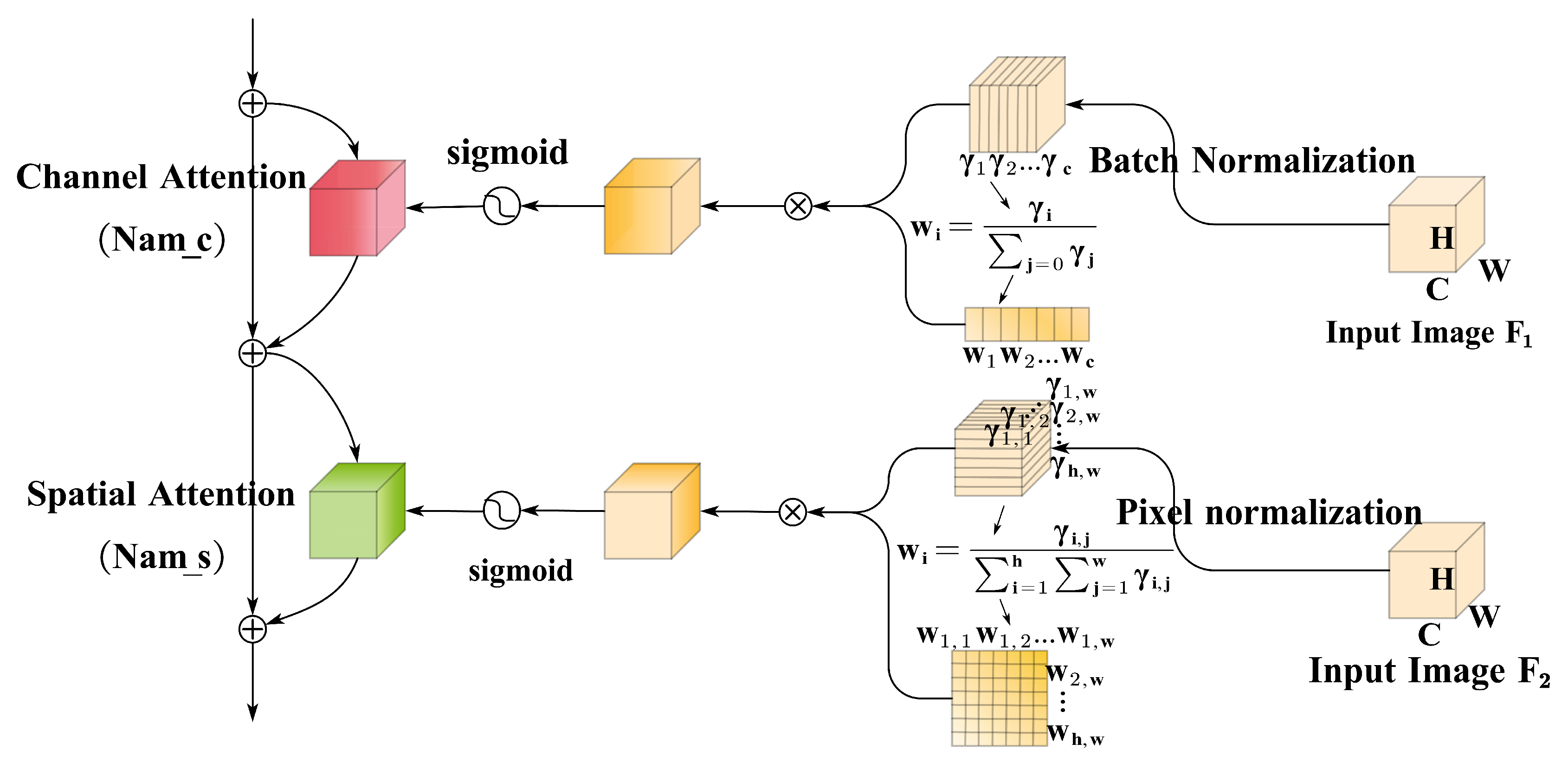

2.4. Attention Module

3. Experiment and Result Analysis

3.1. Dataset Description

3.2. Experimental Settings and Experimental Indicators

3.3. Results Evaluation and Comparison

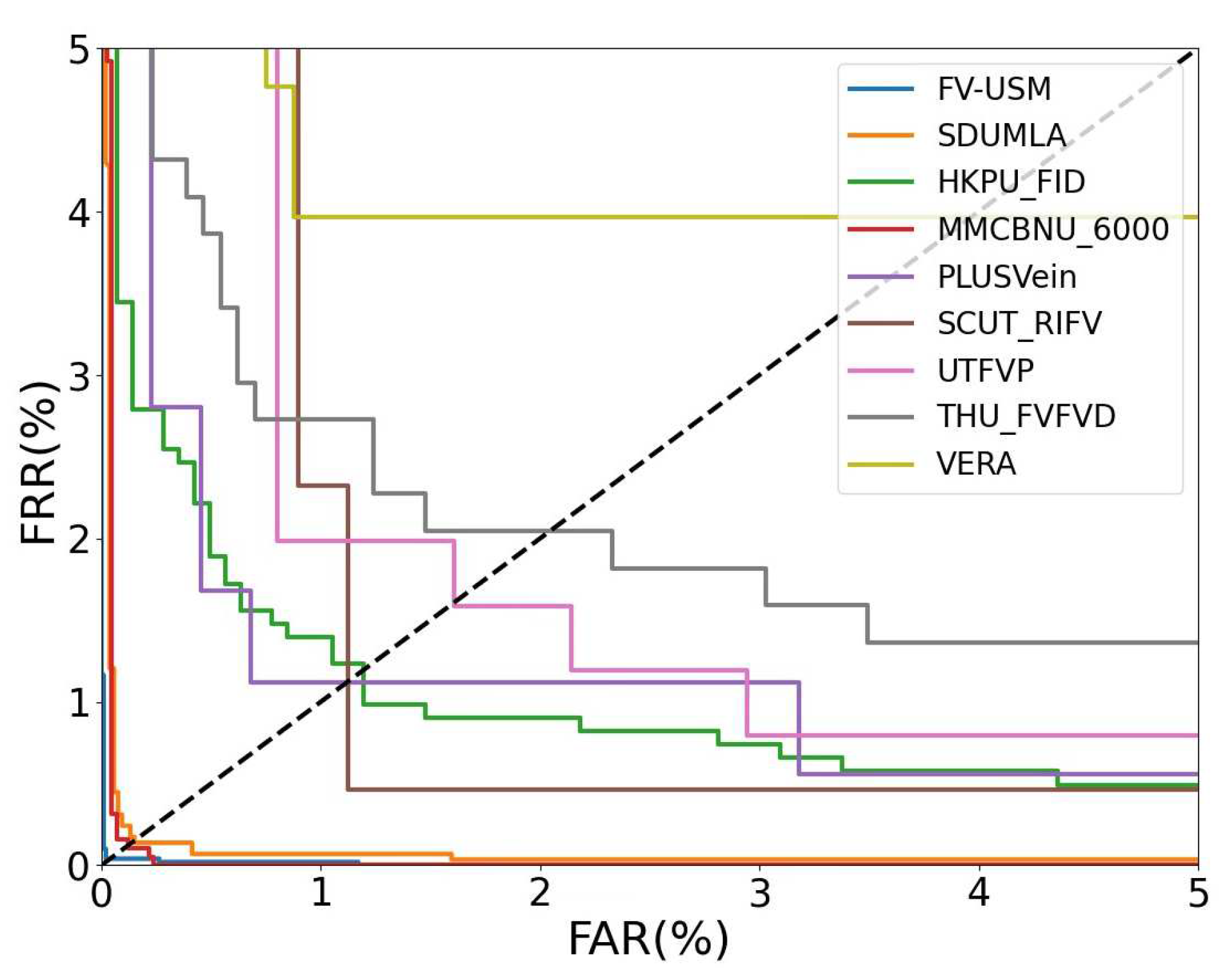

3.3.1. Comparison and Evaluation with Existing FV Models

3.3.2. Ablation Experiments

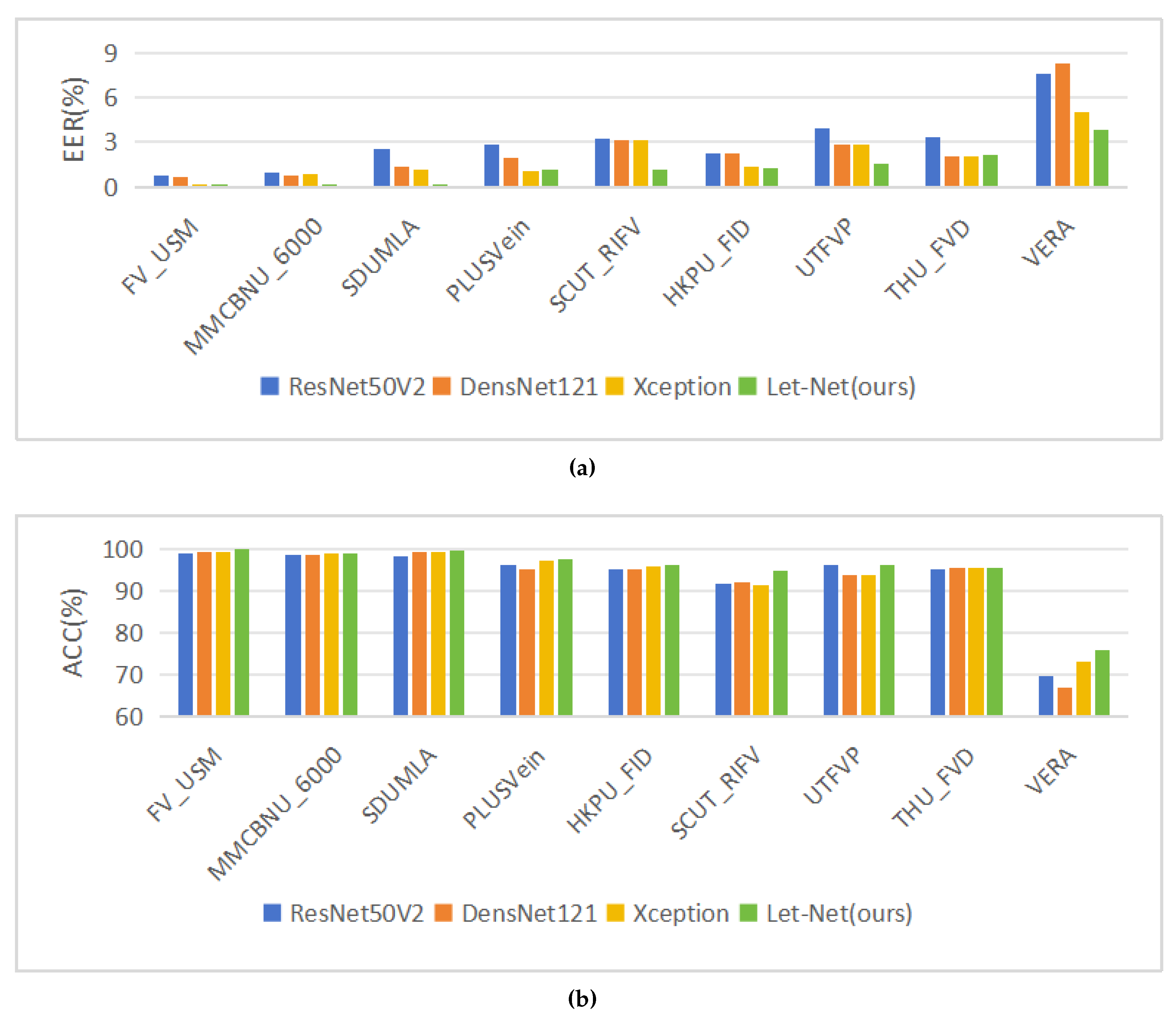

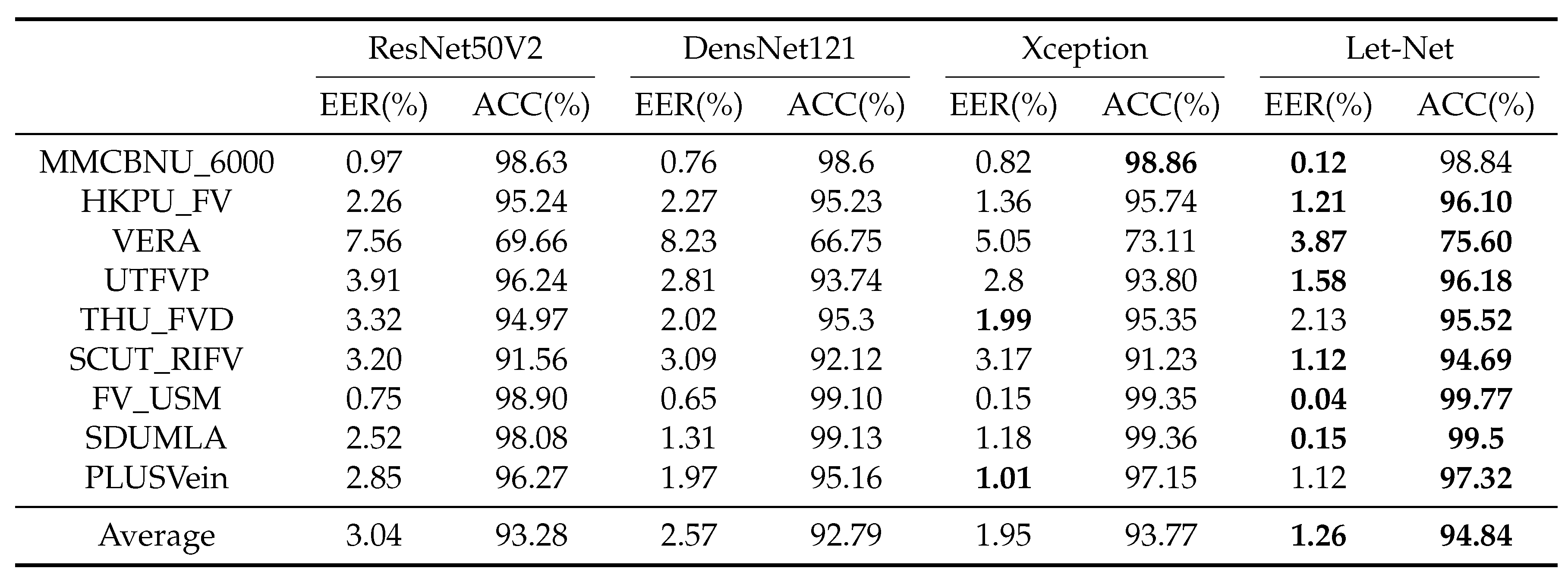

3.3.3. Comparative Experimental Results between Let-Net and Classic Models

3.3.4. Computational Cost

4. Summary and Outlook

References

- Radzi S A, Hani M K, Bakhteri R. Finger-vein biometric identification using convolutional neural network. Turkish Journal of Electrical Engineering and Computer Sciences, 2016, 24(3): 1863-1878.

- Das R, Piciucco E, Maiorana E, Campisi P. Convolutional neural network for finger-vein-based biometric identification. IEEE Transactions on Information Forensics and Security, 2018, 14(2): 360-373.

- Yang W, Luo W, Kang W, Huang Z, Wu Q. Fvras-net: An embedded finger-vein recognition and antispoofing system using a unified cnn. IEEE Transactions on Instrumentation and Measurement, 2020, 69(11): 8690-8701.

- Shaheed K, Mao A, Qureshi I, Kumar M, Hussain S, Ullah I, Zhang X. DS-CNN: A pre-trained Xception model based on depthwise separable convolutional neural network for finger vein recognition. Expert Systems with Applications, 2022, 191: 116288.

- Chen L, Guo T, Li L, et al. A Finger Vein Liveness Detection System Based on Multi-Scale Spatial-Temporal Map and Light-ViT Model. Sensors, 2023, 23(24): 9637.

- Huang J, Luo W, Yang W, Zheng A, Lian F, Kang W. FVT: Finger Vein transformer for authentication. IEEE Transactions on Instrumentation and Measurement, 2022, 71: 1-13.

- Tome P, Marcel S. On the vulnerability of palm vein recognition to spoofing attacks. In Proceedings of the 2015 International Conference on Biometrics (ICB), Phuket, Thailand, 19-22 May 2015; pp.319-325.

- Ton B T, Veldhuis R N J. A high quality finger vascular pattern dataset collected using a custom designed capturing device. In Proceedings of the 2013 International conference on biometrics, Madrid, Spain, 4-7 June 2013; pp.1-5.

- Yang W, Hui C, Chen Z, Xue J, Liao Q. FV-GAN: Finger vein representation using generative adversarial networks. IEEE Transactions on Information Forensics and Security, 2019, 14(9): 2512-2524.

- Liu Z, Lin Y, Cao Y, Hu H, Wei Y, Zhang Z, Lin S, Guo B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), virtually, 11-17 October 2021; pp.10012-10022.

- Luo W, Li Y, Urtasun R, Zemel R. Understanding the effective receptive field in deep convolutional neural networks. Advances in neural information processing systems, 2016, 29.

- Ding X, Zhang X, Han J, Ding G. Scaling up your kernels to 31x31: Revisiting large kernel design in cnns. In proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, Louisiana, 19-23 June 2022; pp.11963-11975.

- Hu H, Zhang Z, Xie Z, Lin S. Local relation networks for image recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October-2 November 2019; pp.3464-3473.

- Peng C, Zhang X, Yu G, Luo G, Sun J. Large kernel matters–improve semantic segmentation by global convolutional network. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), Honolulu, Hawaii, 21-26 July 2017; pp.4353-4361.

- Romero D W, Bruintjes R J, Tomczak J M, Bekkers E J, Hoogendoorn M, van Gemert J C. Flexconv: Continuous kernel convolutions with differentiable kernel sizes. arXiv 2021, arXiv:2110.08059.

- Liu Z, Mao H, Wu C Y, Feichtenhofer C, Darrell T, Xie S. A convnet for the 2020s. In proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), New Orleans, Louisiana, 19-23 June 2022; pp.11976-11986.

- Mnih V, Heess N, Graves A. Recurrent models of visual attention. Advances in neural information processing systems, 2014, 27.

- Woo S, Park J, Lee J Y, Kweon I S. Cbam: Convolutional block attention module. In proceedings of the European conference on computer vision (ECCV), Munich, Germany, 8-14 September 2018; pp.3-19.

- De Silva M, Brown D. Multispectral Plant Disease Detection with Vision Transformer–Convolutional Neural Network Hybrid Approaches. Sensors, 2023, 23(20): 8531.

- Kang W, Lu Y, Li D, Jia W. From noise to feature: Exploiting intensity distribution as a novel soft biometric trait for finger vein recognition. IEEE Transactions on Information Forensics and Security, 2018, 14(4): 858-869.

- Liu Y, Shao Z, Teng Y, Hoffmann N. NAM: Normalization-based attention module. arXiv 2021, arXiv:2111.12419.

- Yin Y, Liu L, Sun X. SDUMLA-HMT: a multimodal biometric database. In proceedings of the Chinese Conference on Biometric Recognition (CCBR), Beijing, China, 3-4 December 2011; pp.260-268.

- Asaari M S M, Suandi S A, Rosdi B A. Fusion of band limited phase only correlation and width centroid contour distance for finger based biometrics. Expert Systems with Applications, 2014, 41(7): 3367-3382.

- Kumar A, Zhou Y. Human identification using finger images. IEEE Transactions on Image Processing, 2011, 21(4): 2228-2244.

- Tang S, Zhou S, Kang W, Wu Q, Deng F. Finger vein verification using a Siamese CNN. IET Biometrics, 2019, 8(5): 306-315.

- Kauba C, Prommegger B, Uhl A. Focussing the beam-a new laser illumination based dataset providing insights to finger-vein recognition. In proceedings of the 2018 IEEE 9th International Conference on Biometrics Theory, Applications and Systems (BTAS), Redondo Beach, CA, USA, 25 October 2018; pp.1-9.

- Lu Y, Xie S J, Yoon S, Wang Z, Park D S. An available database for the research of finger vein recognition. In Proceedings of the 2013 6th International Congress on Image and Signal Processing (CISP), Hangzhou, China, 16-18 December 2013; Volume 1, pp.410-415.

- Yang W, Qin C, Liao Q. A database with ROI extraction for studying fusion of finger vein and finger dorsal texture. In Proceedings of the Biometric Recognition: 9th Chinese Conference (CCBR), Shenyang, China, 7-9 November 2014; pp.266-270.

- Yang L, Yang G, Xi X, Su K, Chen C, Yin Y. Finger vein code: From indexing to matching. IEEE Transactions on Information Forensics and Security, 2018, 14(5): 1210-1223.

- Shen J, Liu N, Xu C, Sun H, Xiao Y, Li D, Zhang Y. Finger vein recognition algorithm based on lightweight deep convolutional neural network. IEEE Transactions on Instrumentation and Measurement, 2021, 71: 1-13.

- Hou B, Yan R. ArcVein-arccosine center loss for finger vein verification. IEEE Transactions on Instrumentation and Measurement, 2021, 70: 1-11.

- Du S, Yang J, Zhang H, Zhang B, Su Z. FVSR-net: an end-to-end finger vein image scattering removal network. Multimedia Tools and Applications, 2021, 80: 10705-10722.

- Liu J, Chen Z, Zhao K, Wang M, Hu Z, Wei X, Zhu Y, Feng Z, Kim H, Jin C. Finger vein recognition using a shallow convolutional neural network. In proceedings of the Biometric recognition: 15th Chinese Conference (CCBR), Shanghai, China, 10–12 September 2021; pp.195-202.

- Huang J, Zheng A, Shakeel M S, Yang W, Kang W. FVFSNet: Frequency-spatial coupling network for finger vein authentication. IEEE Transactions on Information Forensics and Security, 2023, 18: 1322-1334.

| Method (EER, %) | FV_USM | SDUMLA | MMCBNU_6000 | HKPU_FV | THU_FVD | SCUT_RIFV | UTFVP | PLUSVein | VERA |
|---|---|---|---|---|---|---|---|---|---|
| FV_CNN [2] | - | 6.42 | - | 4.67 | - | - | - | - | - |
| Fvras-net [3] | 0.95 | 1.71 | 1.11 | - | - | - | - | - | - |
| FV code [29] | - | - | - | 3.33 | - | - | - | - | - |
| L-CNN [30] | - | 1.13 | - | 0.67 | - | - | - | - | - |
| ArcVein [31] | 0.25 | 1.53 | - | 1.3 | - | - | - | - | - |
| FVSR-Net [32] | - | 5.27 | - | - | - | - | - | - | - |
| S-CNN [33] | - | 2.29 | 0.47 | - | - | - | - | - | - |
| FVT [6] | 0.44 | 1.5 | 0.92 | 2.37 | 3.6 | 1.65 | 1.97 | 2.08 | 4.55 |
| FVFSNet [34] | 0.20 | 1.10 | 0.18 | 0.81 | 2.15 | 0.83 | 2.08 | 1.32 | 6.82 |
| Let-Net(ours) | 0.04 | 0.15 | 0.12 | 1.54 | 2.13 | 1.12 | 1.58 | 1.12 | 3.87 |
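The table above reports equal error rate (EER), the operating point at which the false acceptance rate (FAR) equals the false rejection rate (FRR). The following is a minimal sketch of how such a value can be approximated from matching scores. It is not the authors' evaluation code: the function name `equal_error_rate`, the toy score lists, and the convention that a higher score means a closer match are all assumptions made for illustration.

```python
def equal_error_rate(genuine, impostor):
    """Approximate the EER (in percent) from two lists of similarity scores.

    Assumption: higher score = more similar, and a pair is accepted
    when its score is >= the decision threshold.
    """
    # Use every observed score as a candidate threshold.
    thresholds = sorted(set(genuine) | set(impostor))
    best = None  # (|FAR - FRR|, mean of FAR and FRR)
    for t in thresholds:
        # FAR: fraction of impostor pairs wrongly accepted.
        far = sum(s >= t for s in impostor) / len(impostor)
        # FRR: fraction of genuine pairs wrongly rejected.
        frr = sum(s < t for s in genuine) / len(genuine)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2.0)
    # Report the point where FAR and FRR are closest, as a percentage.
    return 100.0 * best[1]


# Hypothetical toy scores, not taken from any dataset in the table.
genuine_scores = [0.9, 0.8, 0.3, 0.7]
impostor_scores = [0.1, 0.2, 0.75, 0.4]
print(equal_error_rate(genuine_scores, impostor_scores))  # prints 25.0
```

With only a finite set of scores, FAR and FRR rarely cross exactly, so the sketch averages the two rates at the threshold where they are closest; interpolating between neighbouring thresholds is a common alternative.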

| Group | Method | FV_USM EER(%) | SDUMLA EER(%) | Parameters(M) |
|---|---|---|---|---|
| Kernel Size | | 99.57 | 99.1 | 0.72 |
| | | 99.66 | 99.35 | 0.81 |
| | | 99.77 | 99.42 | 0.89 |
| | | 99.68 | 99.34 | 1.08 |
| | | 99.66 | 99.33 | 1.67 |
| Components of Let-Net | No Stem | 98.25 | 97.86 | 0.51 |
| | No LK | 96.65 | 96.27 | 0.78 |
| | No NAM | 95.76 | 95.17 | 0.66 |
| | No Stem&LK | 94.71 | 94.16 | 0.52 |
| | No Stem&NAM | 93.64 | 93.11 | 0.27 |
| | No LK&NAM | 88.12 | 87.76 | 0.55 |
| | Stem&LK&NAM | 99.77 | 99.5 | 0.89 |
| Large Kernel Architecture | Direct Connection | 96.32 | 96.01 | 0.88 |
| | Parallel Connection | 98.46 | 97.26 | 0.89 |
| | Funnel Connection | 98.26 | 97.49 | 0.89 |
| | Taper Connection | 99.77 | 99.5 | 0.89 |

| Model | Params(M) | FLOPs(G) | EER(%)* | ACC(%)* |
|---|---|---|---|---|
| ResNet50V2 | 23.63 | 6.99 | 3.04 | 93.28 |
| DenseNet121 | 7.07 | 5.70 | 2.57 | 92.79 |
| Xception | 2.09 | 16.8 | 1.95 | 93.77 |
| Let-Net(ours) | 0.89 | 0.25 | 1.26 | 94.84 |

| Model | Training(s) | Prediction(s) | Total(s) | Single batch time(ms) |
|---|---|---|---|---|
| VGG16 | 10 | 5 | 15 | 48 |
| VGG19 | 12 | 6 | 18 | 55 |
| ResNet50V2 | 11 | 6 | 17 | 53 |
| InceptionV3 | 16 | 9 | 25 | 78 |
| DenseNet121 | 22 | 11 | 33 | 102 |
| Xception | 19 | 6 | 25 | 77 |
| RepLKNet | 96 | 6 | 120 | 373 |
| Let-Net(ours) | 3 | 3 | 6 | 17 |

| Model | Training(s) | Prediction(s) | Total(s) | Single batch time(ms) |
|---|---|---|---|---|
| VGG16 | 13 | 7 | 20 | 48 |
| VGG19 | 15 | 8 | 23 | 55 |
| ResNet50V2 | 14 | 9 | 23 | 54 |
| InceptionV3 | 20 | 12 | 32 | 77 |
| DenseNet121 | 26 | 15 | 41 | 97 |
| Xception | 25 | 7 | 32 | 78 |
| RepLKNet | 126 | 32 | 158 | 379 |
| Let-Net(ours) | 4 | 3 | 7 | 18 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).