Submitted:

11 January 2024

Posted:

12 January 2024


Abstract

Keywords:

1. Introduction

2. Inverse problem of experimental data analysis

2.1. Formulation of the problem

2.2. Least-squares formulation of the inverse problem

- Using a minimization algorithm such as the conjugate gradient method[21] or Powell’s algorithm,[22] we obtain the first-iteration trial parameter values. Depending on the chosen algorithm, this requires calculating the gradient of the LSQ function, its Hessian, or its values at a few neighboring points around the initial guess.

- Then we repeat the computation to obtain the second, third, fourth, and subsequent iterations of the parameter values, each time trying to decrease the value of the LSQ function (Equation 6).

- We halt this iterative procedure when a predefined convergence criterion is reached. For instance, the criterion can be considered satisfied when the change of the parameter values between consecutive iterations is smaller than a predefined small threshold. We then take the last values obtained in the procedure as our solution and estimate the parameter uncertainties and the correlations between them using an approximate normal distribution computed from the second derivatives of the LSQ function (see Equation 10).
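The iterative procedure above can be sketched in Python. This is a minimal illustration under stated assumptions, not the PP(MC)3Fitting implementation: it uses Gauss-Newton steps instead of the conjugate gradient or Powell methods, a hypothetical single-exponential observable `model(t, p)`, and a chi-square LSQ function with known uncertainties `sigma`. For such an LSQ function, the parameter covariance is approximately $(J^\top W J)^{-1}$, which equals twice the inverse Hessian of the chi-square in the Gauss-Newton approximation.

```python
import numpy as np


def model(t, p):
    """Hypothetical observable: single exponential decay a * exp(-t / tau)."""
    a, tau = p
    return a * np.exp(-t / tau)


def jacobian(t, p):
    """Analytic derivatives of model() with respect to (a, tau)."""
    a, tau = p
    e = np.exp(-t / tau)
    return np.column_stack([e, a * e * t / tau**2])


def lsq_value(t, y, sigma, p):
    """Chi-square LSQ function: sum of squared weighted residuals."""
    r = (y - model(t, p)) / sigma
    return float(r @ r)


def fit_lsq(t, y, sigma, p0, eps=1e-8, max_iter=100):
    """Iterate Gauss-Newton steps until the parameter change drops below eps."""
    p = np.asarray(p0, dtype=float)
    n_iter = 0
    for n_iter in range(1, max_iter + 1):
        r = (y - model(t, p)) / sigma             # weighted residuals
        J = jacobian(t, p) / sigma[:, None]       # weighted Jacobian
        step = np.linalg.solve(J.T @ J, J.T @ r)  # Gauss-Newton update
        p = p + step
        if np.max(np.abs(step)) < eps:            # convergence criterion
            break
    J = jacobian(t, p) / sigma[:, None]
    cov = np.linalg.inv(J.T @ J)                  # approximate covariance
    return p, cov, n_iter


# Usage on synthetic data (true parameters a = 2.0, tau = 50.0).
rng = np.random.default_rng(0)
t = np.linspace(0.0, 300.0, 200)
sigma = np.full_like(t, 0.02)
y = model(t, [2.0, 50.0]) + rng.normal(0.0, 0.02, t.size)
p, cov, n_iter = fit_lsq(t, y, sigma, p0=[1.8, 45.0])
errors = np.sqrt(np.diag(cov))
chi2 = lsq_value(t, y, sigma, p)
```

Gauss-Newton is used here only because it yields the Jacobian needed for the covariance estimate at no extra cost. A derivative-free method such as Powell's would need a separate numerical Hessian.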

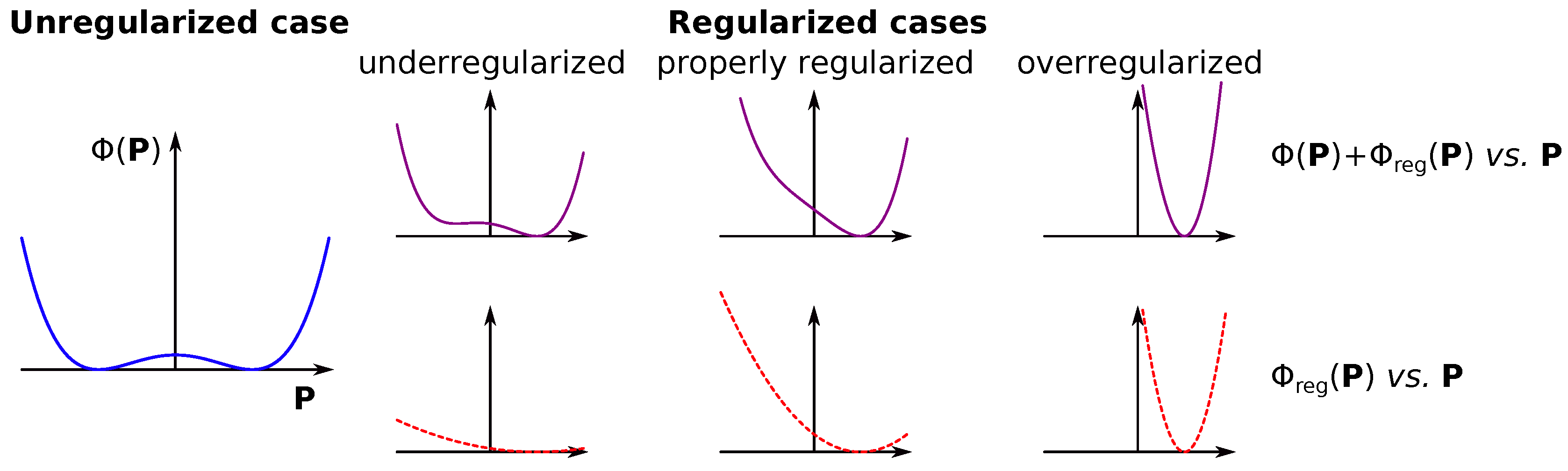

2.3. Regularization of the least-squares inverse problem

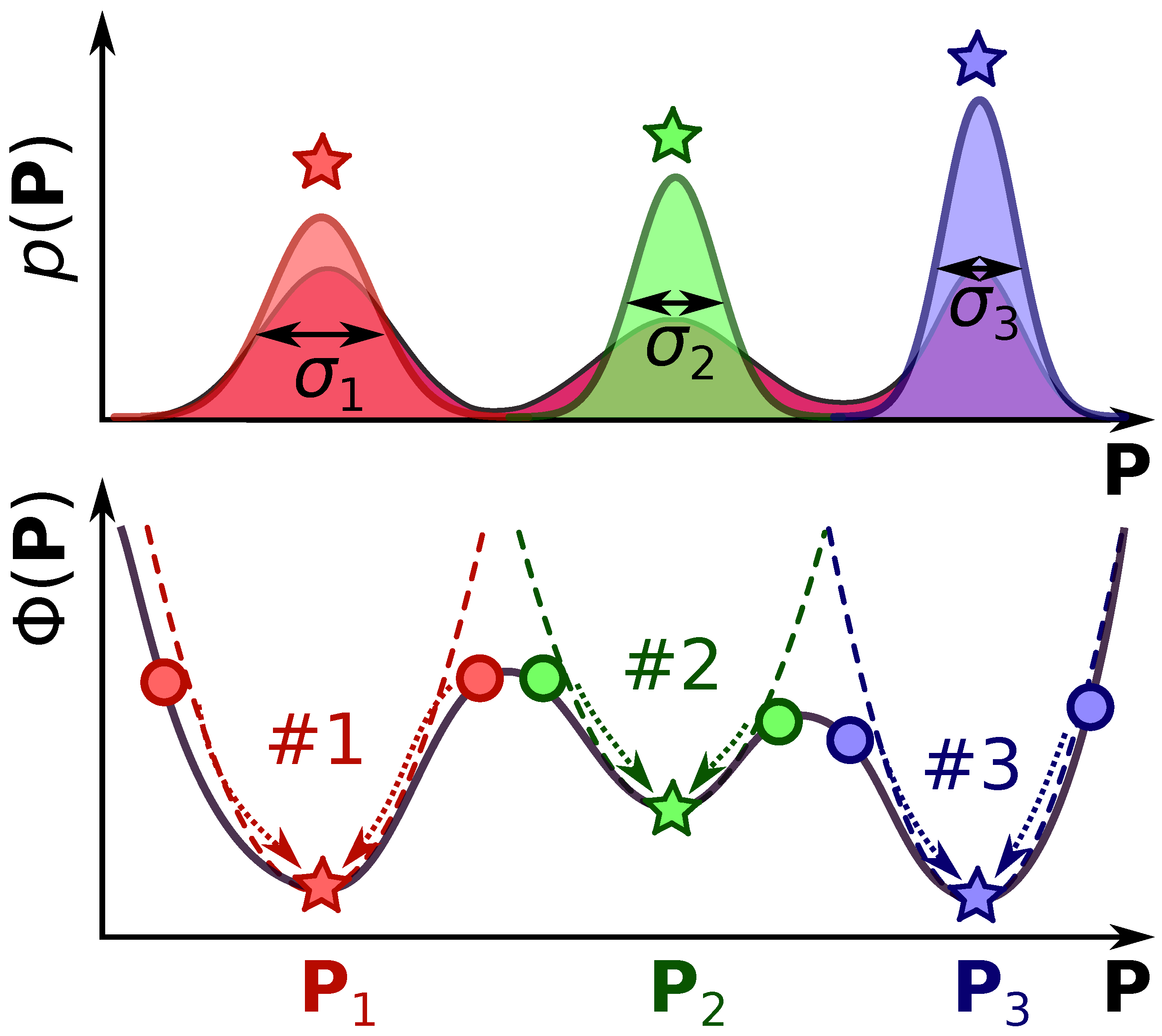

2.4. Monte-Carlo importance sampling of the parameter space

- We start from an initial set of parameters, which we assign to be the current state of the simulation. In general, any set of parameters can serve as the initial guess, but the simulation converges faster if the initial values lie in the region of the desired solution, e.g., the solution of the LSQ fitting problem (Equation 9).

- From the current state, we generate a new trial set of parameters and compute the transition probability. This value describes the chance of changing the current state to the new one (i.e., reassigning the current state to the trial set). The probability should be related to the probabilities given in Equation 5, and we discuss it in detail later in the text (see Equation 21).

- Then we draw a random value from a uniform distribution between 0 and 1 and compare it with the transition probability.

- If the random value is smaller than the transition probability, the trial set becomes the new state of the system, i.e., we reassign the current state to the trial set. We call this an accepted step.

- Otherwise, the transition does not happen: we discard the trial set, and the new state of the system remains the same as the old one. We call this a declined step.

- By repeating steps #2 and #3 a sufficient number of times (N iterations), we generate a trajectory of states, each labeled by the iteration n of the algorithm at which it was obtained. Naturally, some sets of parameters will be repeated multiple times throughout the trajectory, and the trajectory encodes the desired distribution given in Equation 5. In practice, the initial part of the trajectory (e.g., the first 10% of steps) is discarded as an equilibration phase. The ratio of the number of accepted steps in step #3 to the total number of steps (accepted plus rejected) is called the acceptance ratio. A general requirement for a reasonably good simulation is that this ratio should be neither too large nor too small. A simple rule of thumb is that the acceptance ratio should be in the range .

- From the obtained trajectory, we can compute all the required quantities. For instance, the mean value of a parameter is its average over all parameter sets in the trajectory. Similarly, we can compute the standard deviation of a parameter from its mean value (Equation 14) and the covariance between two parameters (Equation 15). From the standard deviations (Equation 14) and covariances (Equation 15), we can also calculate Pearson’s correlation coefficients between parameters (Equation 16). Using this algorithm, we can effectively sample the possible solutions and obtain a more general estimate of the parameter values and their uncertainties.
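The sampling loop and the trajectory statistics described above can be sketched as follows. This is a minimal random-walk Metropolis illustration under stated assumptions. The target density is supplied as a hypothetical `log_prob(p)` function; for a chi-square LSQ function $S$, one common choice is $\log P = -S/2$, which may differ from the paper's Equation 21. Trial sets are drawn from an isotropic Gaussian with a user-chosen `step`, and the first 10% of the trajectory is discarded as equilibration. The statistics use a $1/N$ normalization.

```python
import numpy as np


def metropolis(log_prob, p0, step, n_steps, rng, burn_frac=0.1):
    """Random-walk Metropolis sampling; returns the trajectory and acceptance ratio."""
    p = np.asarray(p0, dtype=float)
    lp = log_prob(p)
    traj = np.empty((n_steps, p.size))
    n_accepted = 0
    for n in range(n_steps):
        trial = p + step * rng.normal(size=p.size)   # new trial parameter set
        lp_trial = log_prob(trial)
        prob = np.exp(min(0.0, lp_trial - lp))       # transition probability
        if rng.uniform() < prob:                     # accepted step
            p, lp = trial, lp_trial
            n_accepted += 1
        traj[n] = p                                  # a declined step repeats p
    n_burn = int(burn_frac * n_steps)                # equilibration phase
    return traj[n_burn:], n_accepted / n_steps


def trajectory_stats(traj):
    """Mean, standard deviation, covariance and Pearson correlation of a trajectory."""
    mean = traj.mean(axis=0)
    dev = traj - mean
    cov = dev.T @ dev / len(traj)
    std = np.sqrt(np.diag(cov))
    corr = cov / np.outer(std, std)
    return mean, std, cov, corr


# Usage on a known 2D Gaussian target with unit variances and correlation 0.8.
target_cov = np.array([[1.0, 0.8], [0.8, 1.0]])
inv_cov = np.linalg.inv(target_cov)
rng = np.random.default_rng(1)
traj, acc = metropolis(lambda p: -0.5 * p @ inv_cov @ p, [0.0, 0.0],
                       step=0.8, n_steps=50000, rng=rng)
mean, std, cov, corr = trajectory_stats(traj)
```

Testing on a target with known moments is a cheap way to check that the sampler and the statistics agree before applying them to an LSQ-derived density. The `step` size is the main knob for tuning the acceptance ratio.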

3. Fitting model of pump-probe spectroscopy

3.1. General considerations

- if both the pump and the probe pulses act on the molecule simultaneously, then (this temporal overlap of the pump and probe pulses is also called ),

- if the probe pulse interacts with the molecule before the pump pulse, then ,

- and if the probe pulse interacts with the molecule after the pump pulse, then .

3.2. Delta-shaped pump-probe model

3.2.1. Assumptions of the model

3.2.2. Step function dynamics

3.2.3. Instant dynamics

3.2.4. Transient pump-probe signatures of metastable species

3.2.5. Coherent oscillations without decay

3.2.6. Coherent oscillations with decay

3.2.7. More complicated dynamics models

-

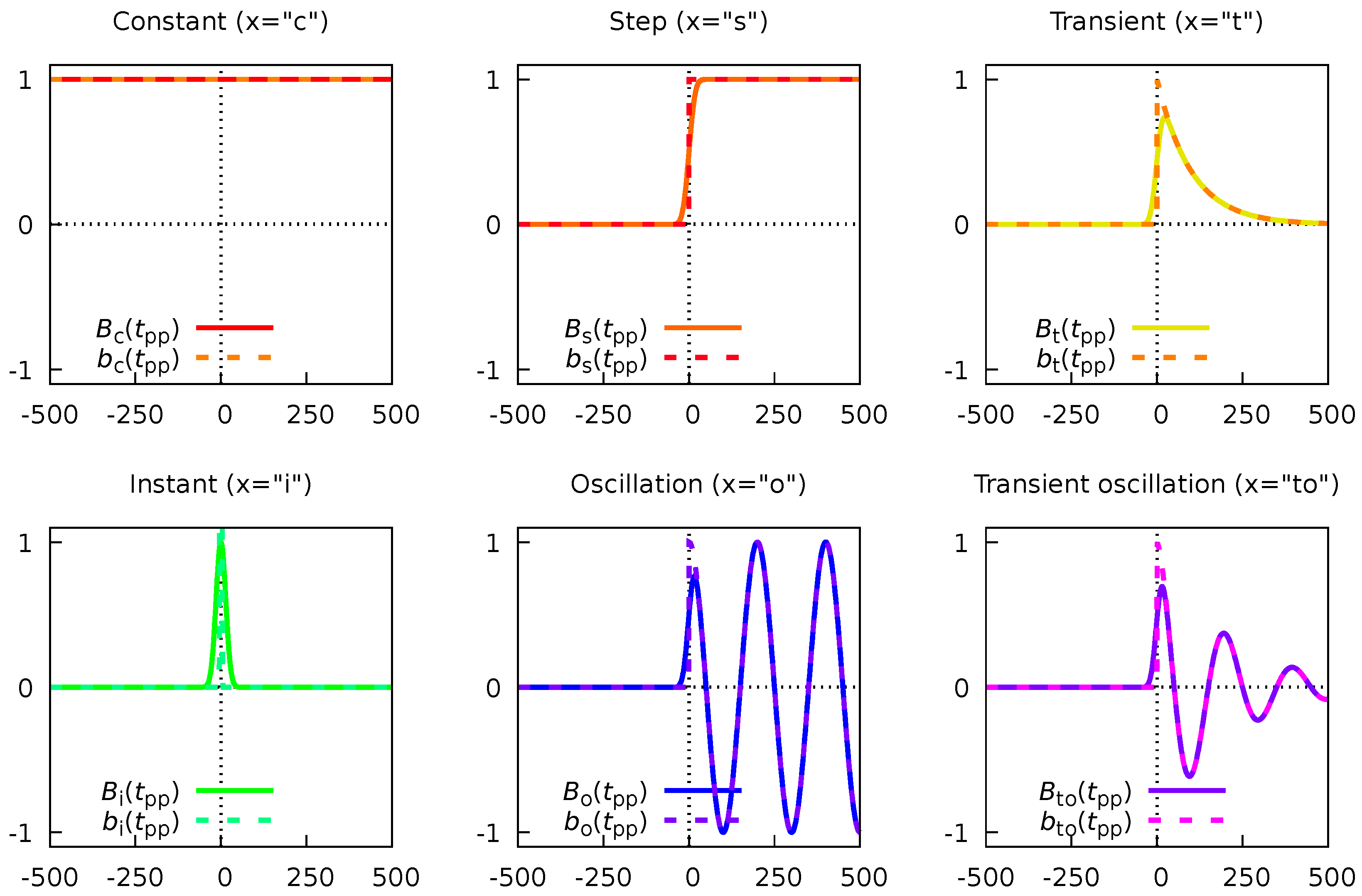

The first type is simply the constant (“c”) defined asThis function (with coefficient in Equation 59) has no parameters and describes the background of the pump-probe experiment.

- The second type is the step, or “switch”, function (“s”). This basis function (with its coefficient in Equation 59) describes the switching of the background between the regime in which the probe pulse precedes the pump pulse and the regime in which it follows it.

- The third type is the transient (“t”) function. This type of basis function (with its coefficients in Equation 59) describes the pump-induced decay dynamics and depends on one parameter, the effective decay time.

- The first additional function describes instant (“i”) dynamics. This type of dynamics corresponds to unresolvably fast relaxation.

- The second additional function, describing non-decaying coherent oscillation (“o”), can be taken from Equation 51. This basis function has two parameters: the oscillation frequency and the initial phase.

- The last additional function, describing a transient coherent oscillation (“to”), can be taken from Equation 57. This basis function has three parameters: the oscillation frequency, the initial phase, and the decay time.
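The delta-shaped basis functions and their linear combination (Equation 59) can be sketched as follows. The functional forms are assumptions reconstructed from the descriptions above, not copied from the paper's equations: a Heaviside step $H(t)$ with $H(0)=1/2$, an exponential decay $H(t)e^{-t/\tau}$, and oscillations $H(t)\cos(\omega t+\varphi)$ with or without the decay factor. The instant (“i”) function is omitted because a delta function cannot be sampled on a delay grid. The names `basis_*` and `observable` are hypothetical.

```python
import numpy as np


def basis_c(t):
    """Constant background."""
    return np.ones_like(t, dtype=float)


def basis_s(t):
    """Step ("switch") between negative and positive delays; H(0) = 1/2."""
    return np.heaviside(t, 0.5)


def basis_t(t, tau):
    """Transient: exponential decay after time zero.

    Delays are clipped at 0 inside exp() so that large negative delays cannot
    overflow; those points are multiplied by H(t) = 0 anyway.
    """
    return np.heaviside(t, 0.5) * np.exp(-np.clip(t, 0.0, None) / tau)


def basis_o(t, omega, phi):
    """Non-decaying coherent oscillation after time zero."""
    return np.heaviside(t, 0.5) * np.cos(omega * t + phi)


def basis_to(t, omega, phi, tau):
    """Decaying (transient) coherent oscillation after time zero."""
    return basis_t(t, tau) * np.cos(omega * t + phi)


def observable(t, coeffs, funcs):
    """Linear combination sum_k coeffs[k] * funcs[k](t)."""
    return sum(c * f(t) for c, f in zip(coeffs, funcs))


t = np.linspace(-100.0, 500.0, 601)  # pump-probe delays, fs (assumed grid)
signal = observable(
    t,
    coeffs=[0.1, 0.5, 1.0],
    funcs=[basis_c, basis_s, lambda x: basis_t(x, tau=80.0)],
)
```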

3.3. Accounting for finite duration of the pulses and experiment jitters

- the real pulses are not delta-shaped but have a finite duration,

- real experimental setups have fluctuations (jitters) of the pump-probe delay, arising from different physical processes.

- pump pulse duration ,

- probe pulse duration ,

- instrument jitter magnitude .

- The first type is the constant (“c”) function.

- The second type is the step (“s”) function, which is expressed through the error function erf.

- The third type is the transient (“t”) function

- The fourth type is the instant (“i”) dynamics function

- The fifth type is the non-decaying coherent oscillation (“o”) function

- And the sixth type is the decaying (transient) coherent oscillation (“to”) function
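For finite pulse durations, the step and transient functions can be sketched by convolving the delta-shaped forms with a unit-area Gaussian. This sketch assumes that `sigma` is the standard deviation of a single Gaussian that lumps together the pump and probe durations and the jitter. How the paper combines these three quantities into a cross-correlation time, and whether it uses FWHM or standard deviation, is not reproduced here. The transient form is the standard exponentially modified Gaussian. Using the scaled complementary error function `scipy.special.erfcx` on one branch avoids the overflow-times-underflow problem at large negative delays. This is one possible way to handle the kind of issue that Appendix D's title refers to, not necessarily the authors' approach.

```python
import numpy as np
from scipy.special import erfc, erfcx


def step_conv(t, sigma):
    """Heaviside step convolved with a unit-area Gaussian of standard deviation sigma."""
    t = np.asarray(t, dtype=float)
    return 0.5 * erfc(-t / (sigma * np.sqrt(2.0)))


def transient_conv(t, tau, sigma):
    """H(t) exp(-t/tau) convolved with a unit-area Gaussian (exponentially modified Gaussian)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    x = (sigma**2 / tau - t) / (sigma * np.sqrt(2.0))
    out = np.empty_like(t)
    pos = x >= 0.0
    # For x >= 0, write erfc(x) = erfcx(x) * exp(-x**2); the exponents then
    # combine analytically into exp(-t**2 / (2 sigma**2)), which cannot overflow.
    out[pos] = 0.5 * np.exp(-t[pos] ** 2 / (2.0 * sigma**2)) * erfcx(x[pos])
    # For x < 0, erfc(x) lies in (1, 2] and the exponent is negative: direct form is safe.
    neg = ~pos
    out[neg] = 0.5 * np.exp(sigma**2 / (2.0 * tau**2) - t[neg] / tau) * erfc(x[neg])
    return out


delays = np.linspace(-1000.0, 1000.0, 2001)     # fs
response = transient_conv(delays, tau=50.0, sigma=0.5)
```

The two branches agree at $x = 0$, so the piecewise evaluation is continuous.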

4. Estimation procedure for the parameters and their uncertainties

4.1. Single dataset case

- The first set consists of the linear coefficients of the basis functions. These parameters represent effective cross-sections and quantum yields for the given dynamics. We represent these parameters as an N-dimensional vector.

- The second set of parameters defines each basis function. There are several types of such parameters.

- The first type represents the temporal overlap of the pump and probe pulses on the molecular sample. This parameter is not always known in advance from the experimental setup (e.g., in experiments combining FELs with conventional lasers[6]), in which case it may need to be fitted. The parameter is then provided to a given basis function by shifting the pump-probe delay accordingly. In most cases, this parameter is shared by all basis functions and datasets. However, some basis functions may have a different value to account for the Wigner time delay in photoionization.[52]

- The second type of parameter is the cross-correlation time. This parameter may differ between basis functions, since different processes can involve the absorption of different numbers of pump or probe photons.

- The third type is the decay time. Different decay processes usually have different decay times.

- The fourth type is the coherent oscillation frequency .

- The fifth and last parameter type is the oscillation phase.

These values are required to fully describe the model of the observable. We denote all of these parameters collectively by a single vector.
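One way to organize the two parameter sets in code is sketched below. The names are hypothetical, and the basis functions are delta-shaped forms assumed for simplicity. Solving the linear coefficients exactly by weighted linear least squares for fixed nonlinear parameters (a variable-projection style step) is an illustrative choice; the text above does not state that PP(MC)3Fitting does this. The shared time-zero parameter enters by shifting the delay axis.

```python
import numpy as np


def design_matrix(t, theta):
    """Columns: constant, step, transient; theta = (t0, tau) are the nonlinear parameters."""
    t0, tau = theta
    x = t - t0                                   # delay shifted by time zero
    h = np.heaviside(x, 0.5)
    decay = h * np.exp(-np.clip(x, 0.0, None) / tau)
    return np.column_stack([np.ones_like(x), h, decay])


def linear_coefficients(t, y, sigma, theta):
    """Weighted linear LSQ for the coefficient vector at fixed nonlinear parameters."""
    A = design_matrix(t, theta) / sigma[:, None]
    coeffs, *_ = np.linalg.lstsq(A, y / sigma, rcond=None)
    return coeffs


t = np.linspace(-200.0, 800.0, 501)             # delays, fs
sigma = np.full_like(t, 0.01)
true_theta = (25.0, 120.0)                      # (time zero, decay time), fs
true_coeffs = np.array([0.2, 0.3, 1.0])
y = design_matrix(t, true_theta) @ true_coeffs  # noise-free synthetic observable
coeffs = linear_coefficients(t, y, sigma, true_theta)
```

Separating the two sets this way keeps the nonlinear search low-dimensional, since the linear coefficients follow from each trial of the nonlinear vector.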

4.2. Multiple dataset case

4.3. Inverse problem regularization

4.4. Inverse problem solution algorithm

- Construct a regularization functional for the parameters. Two types are available:
  - (a) If there are a priori expectations for some of the parameters, they can be included through the penalty function (Equation 90).
  - (b) If some observables contain multiple basis functions of the same type, these functions can be protected from linear dependency using the regularization of Equation 92.

  The total regularization function can be the sum of both contributions, if both regularization cases are applicable; only one of them, if only one case is needed; or zero, if no regularization is required.

- Define an effective function (Equation 89) as the sum of the experimental and regularization functions.

- Find the solution of the LSQ problem by minimizing the effective function, using local or global fitting.

- Start an MC sampling procedure (see Section 2.4) with the corresponding probability to sample the nonlinear and linear parameters.

- In addition to the values and uncertainties, Pearson correlation coefficients (Equation 16), histograms of parameter distributions, and higher distribution moments can also be calculated from the MC trajectory.
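The construction of the effective function in the steps above can be sketched as follows. Only regularization type (a) is illustrated, using an assumed quadratic penalty that pulls selected parameters toward a priori values `p_prior` with widths `p_width`. The actual form of Equation 90, and the linear-dependency penalty of Equation 92, are not reproduced. The function names, the exponential test model, and the choice of SciPy's bounded Powell minimizer are illustrative.

```python
import numpy as np
from scipy.optimize import minimize


def model(t, p):
    """Hypothetical observable used only for this illustration."""
    a, tau = p
    return a * np.exp(-t / tau)


def chi2(p, t, y, sigma):
    """Experimental LSQ function."""
    r = (y - model(t, p)) / sigma
    return float(r @ r)


def prior_penalty(p, p_prior, p_width, mask):
    """Assumed quadratic penalty for parameters with a priori expectations."""
    d = (np.asarray(p)[mask] - p_prior[mask]) / p_width[mask]
    return float(d @ d)


def effective_function(p, t, y, sigma, p_prior, p_width, mask):
    """Sum of the experimental and regularization functions."""
    return chi2(p, t, y, sigma) + prior_penalty(p, p_prior, p_width, mask)


rng = np.random.default_rng(2)
t = np.linspace(0.0, 300.0, 100)
sigma = np.full_like(t, 0.05)
y = model(t, [1.0, 60.0]) + rng.normal(0.0, 0.05, t.size)
p_prior = np.array([0.0, 60.0])
p_width = np.array([1.0, 5.0])
mask = np.array([False, True])   # only tau carries an a priori expectation
res = minimize(effective_function, x0=[0.8, 40.0],
               args=(t, y, sigma, p_prior, p_width, mask),
               method="Powell", bounds=[(0.0, 10.0), (1.0, 1000.0)])
```

Bounds keep the line searches away from non-physical decay times where `exp` would overflow. The minimizer result `res.x` could then serve as the starting state of the MC sampling.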

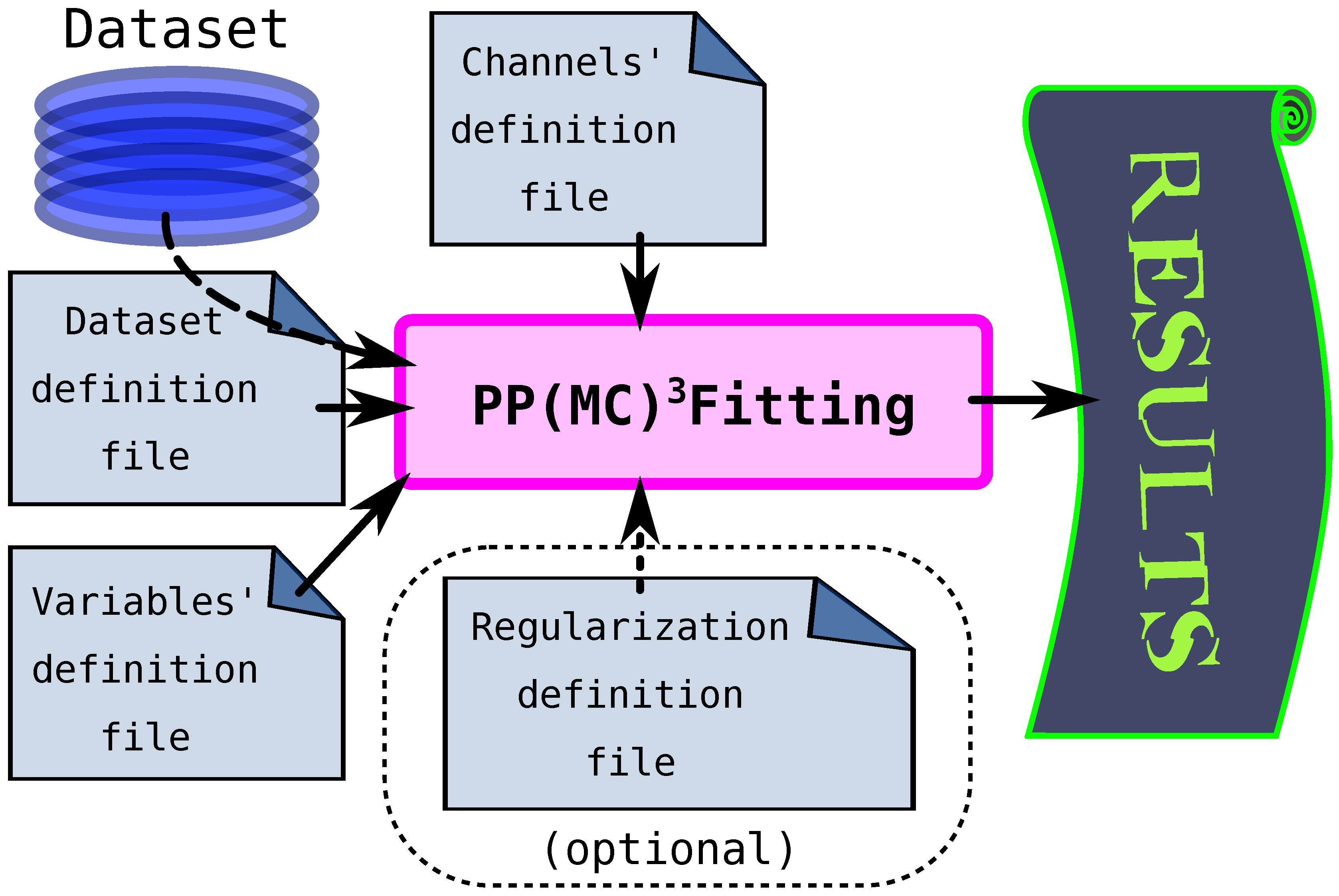

5. PP(MC)3Fitting software

6. Numerical examples

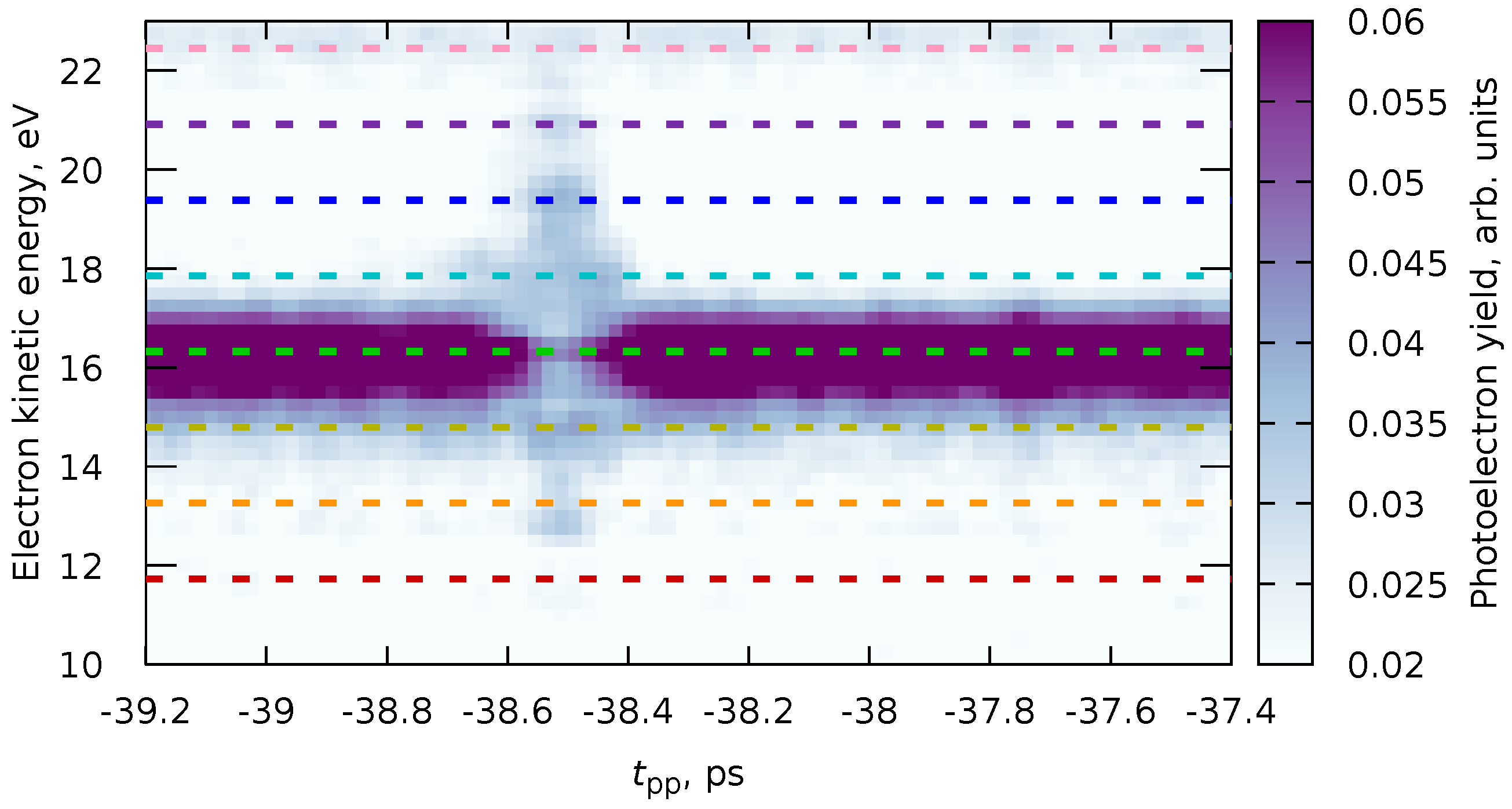

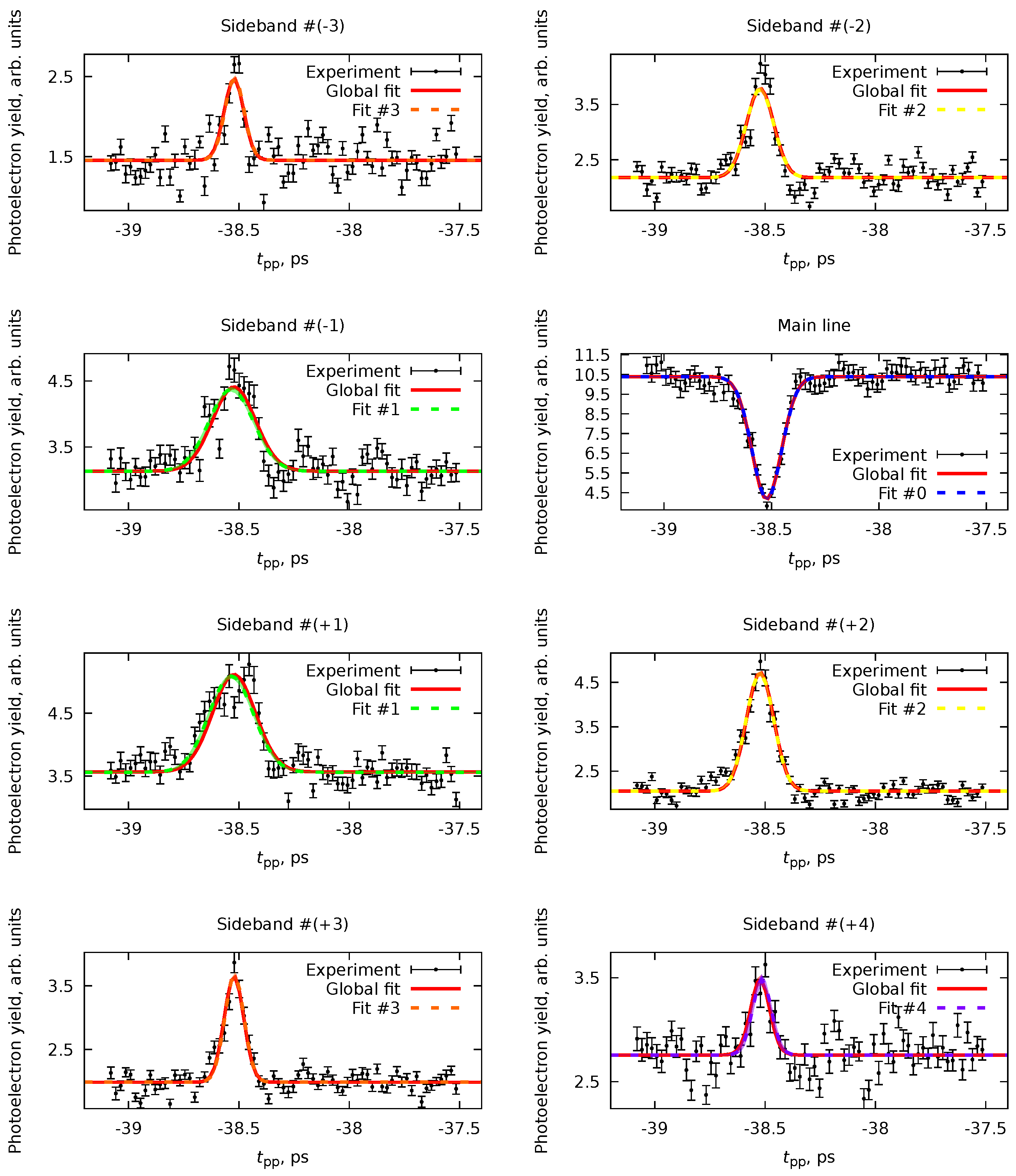

6.1. Multiple datasets with shared parameters

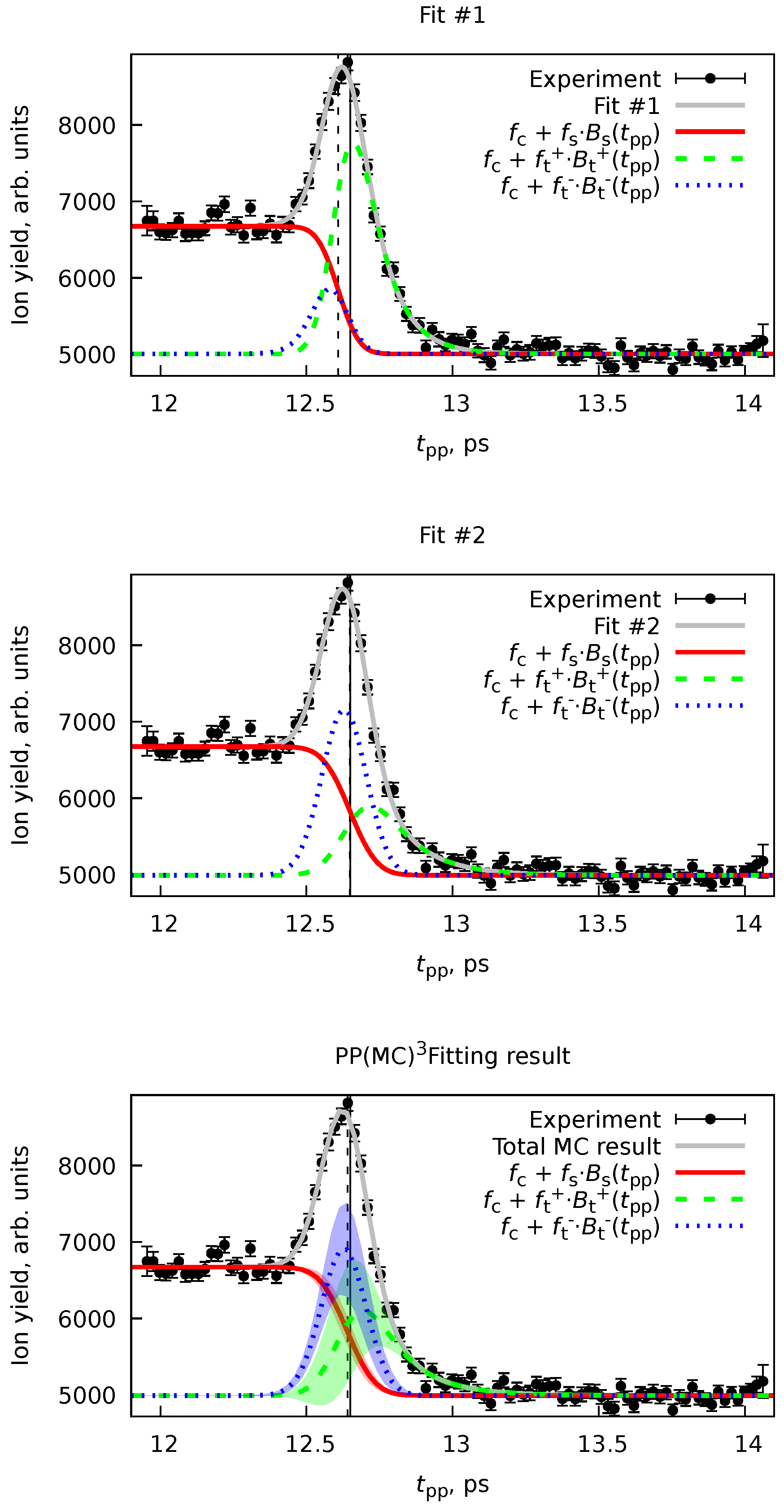

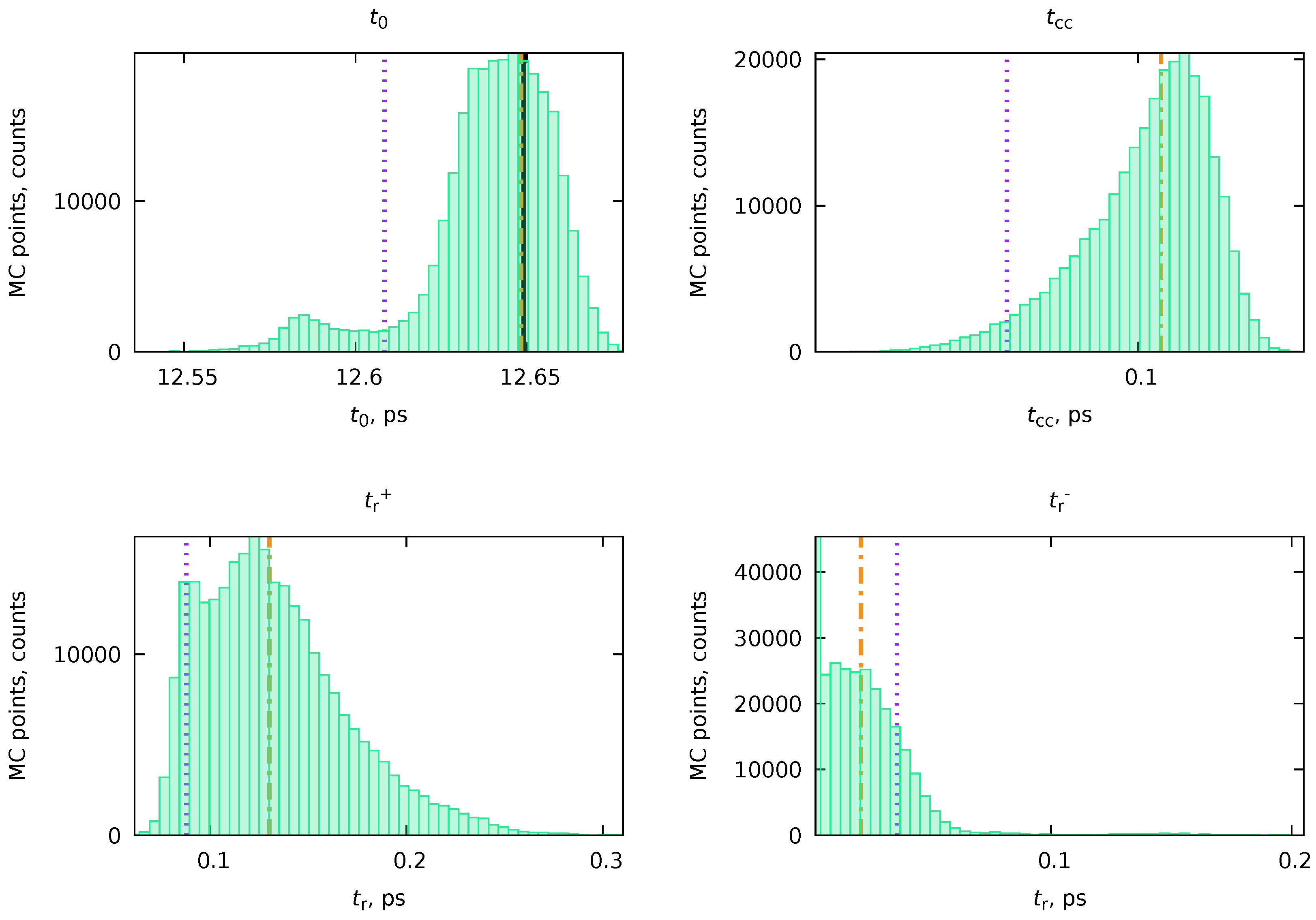

6.2. Forward-backward channel dataset

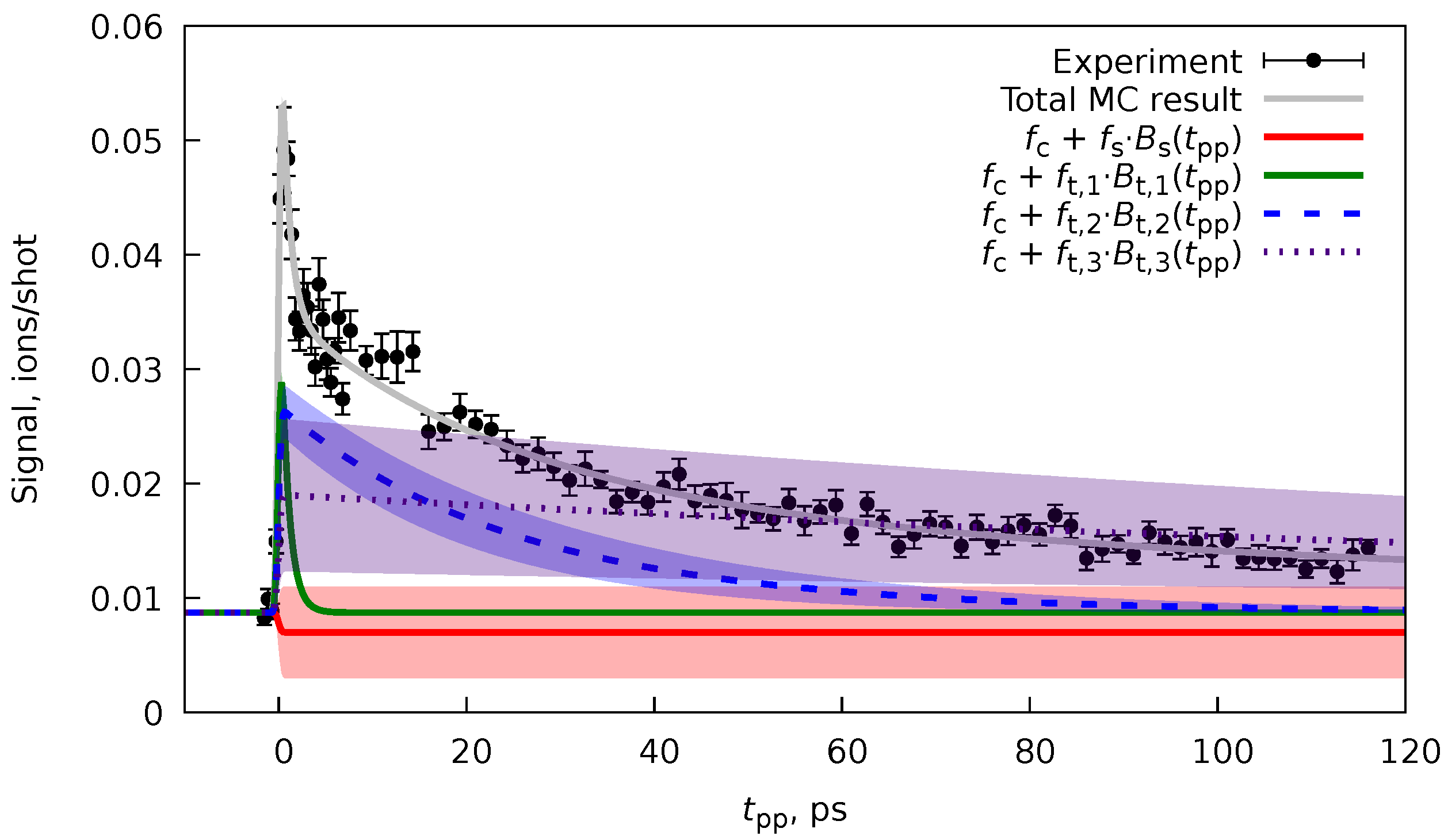

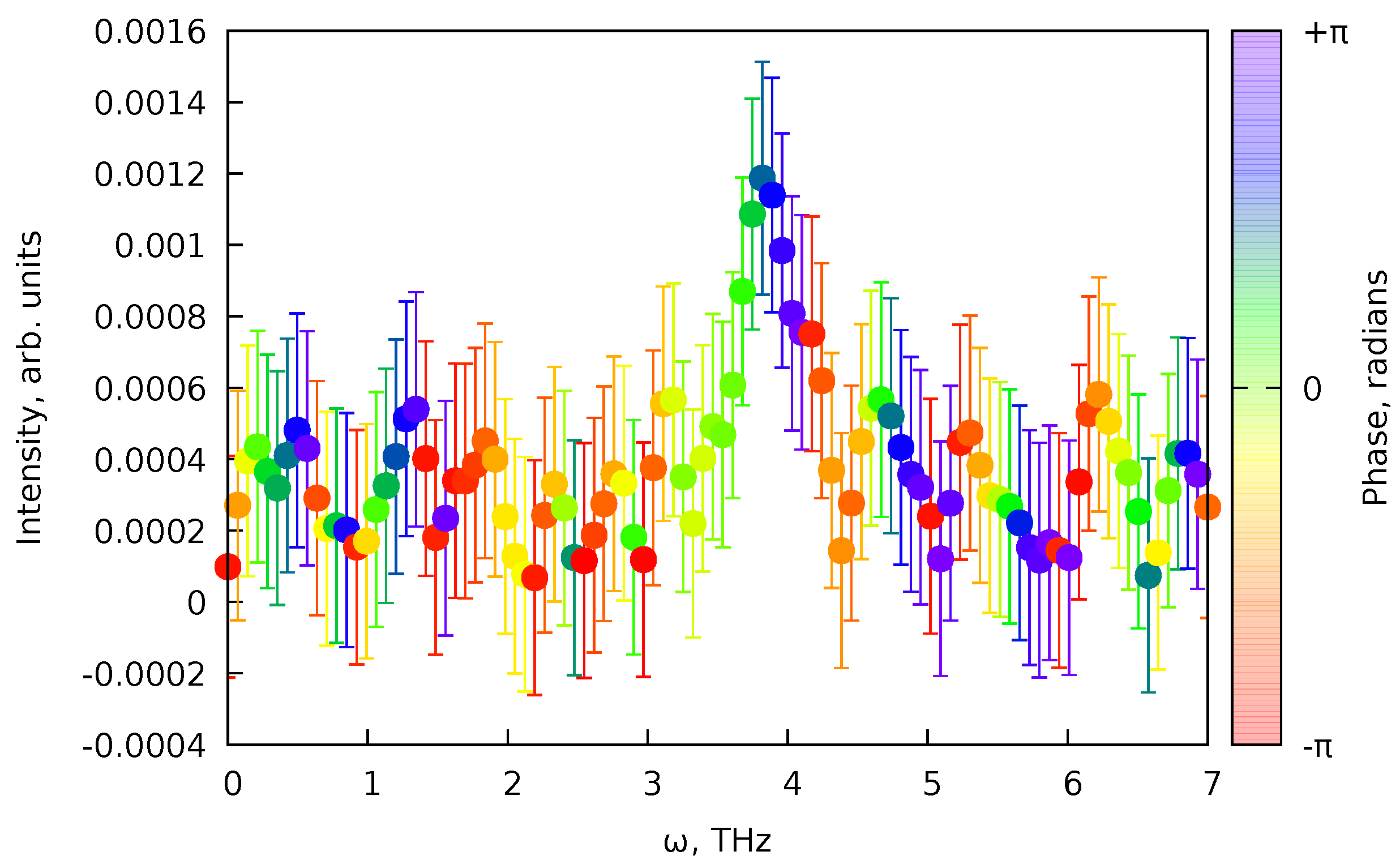

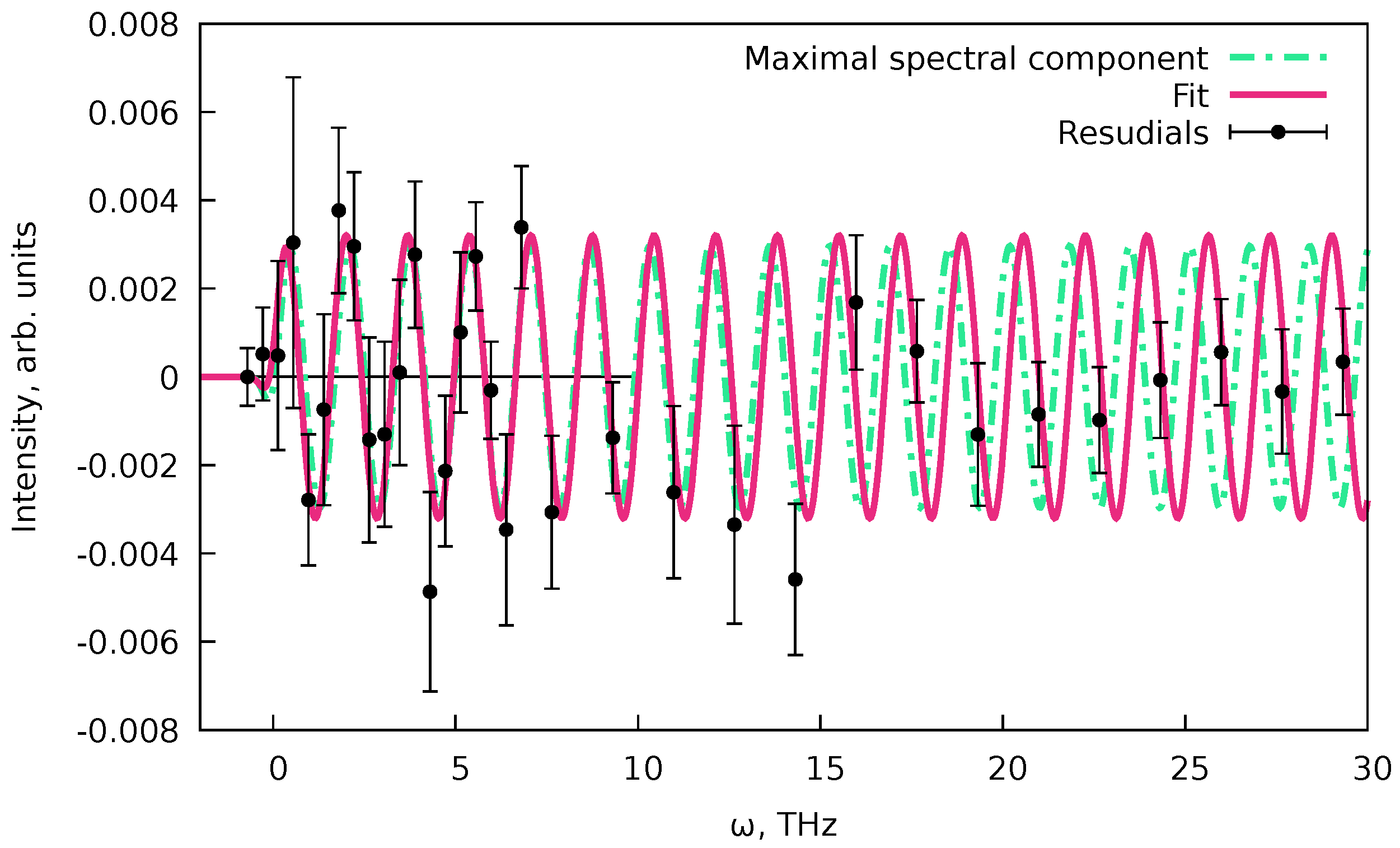

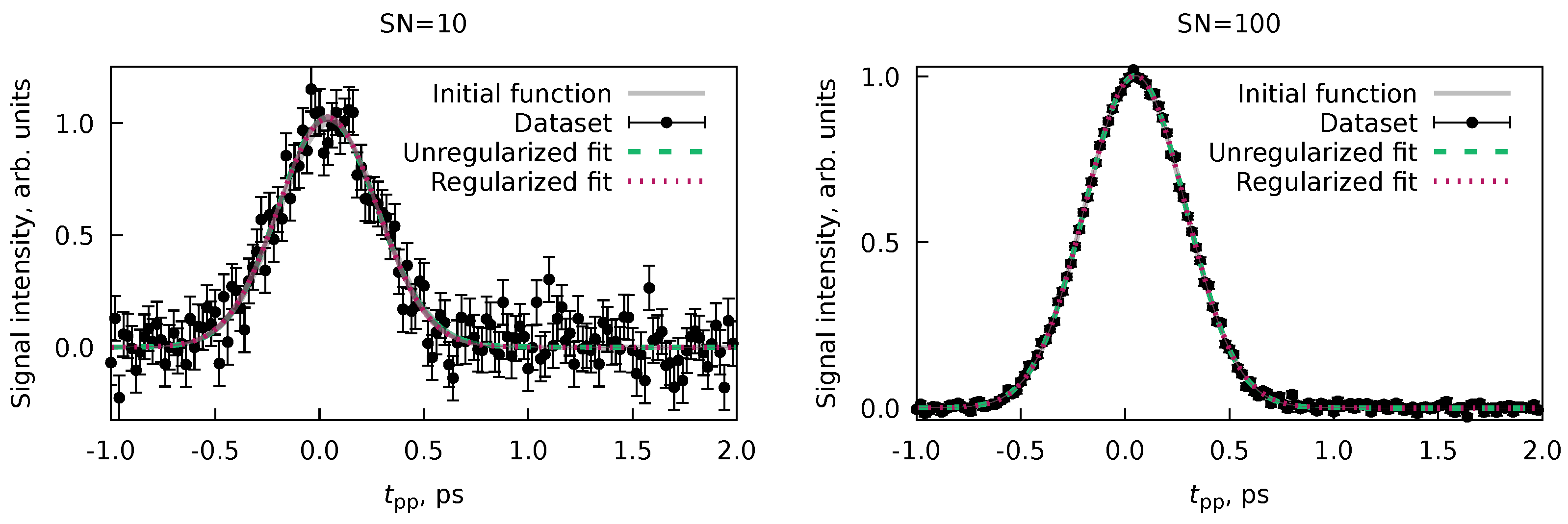

6.3. Treatment of the data with coherent oscillations

- First, fit the non-oscillating part of the dynamics with the algorithm from Section 4.4, as implemented in PP(MC)3Fitting.

- Then, perform the rwLSSA analysis[68] (see Appendix C) on the fit residuals, which PP(MC)3Fitting also outputs. This analysis allows us to check whether the signal contains any systematic oscillations. Note that the oscillations should be present only in or in parts of the pump-probe data (see Equations 82 and 83).

- If the rwLSSA spectrum shows statistically meaningful oscillations in a reasonable frequency range, use the frequencies and phases of the highest-amplitude peaks in the rwLSSA spectrum as initial guesses to fit the residuals of the PP(MC)3Fitting result with Equation 76 and the basis functions of Equations 82 and 83. Since the coherent oscillations should correspond to the incoherent processes, the cross-correlation and decay times from the PP(MC)3Fitting results can be reused.
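The final step can be sketched with `scipy.optimize.curve_fit`. This sketch rests on several assumptions. It uses a delta-shaped decaying oscillation with no convolution with the instrument response, and it keeps the decay time `TAU` fixed at a hypothetical value standing in for the one taken from the incoherent fit. The initial frequency and phase are taken as given; in practice they would come from the rwLSSA peak. The residuals are synthetic and purely illustrative.

```python
import numpy as np
from scipy.optimize import curve_fit

TAU = 300.0  # decay time fixed from the non-oscillating fit (assumed value), fs


def damped_osc(t, amp, omega, phi):
    """Delta-shaped transient coherent oscillation with fixed decay time TAU."""
    tp = np.clip(t, 0.0, None)   # negative delays are zeroed by H(t) anyway
    return np.heaviside(t, 0.5) * amp * np.exp(-tp / TAU) * np.cos(omega * tp + phi)


rng = np.random.default_rng(3)
t = np.linspace(-100.0, 1000.0, 1101)       # delays, fs
true = (0.05, 2 * np.pi / 120.0, 0.4)       # amplitude, angular frequency (rad/fs), phase
resid = damped_osc(t, *true) + rng.normal(0.0, 0.005, t.size)

guess = (0.04, 2 * np.pi / 122.0, 0.3)      # e.g. frequency and phase of the rwLSSA peak
popt, pcov = curve_fit(damped_osc, t, resid, p0=guess,
                       sigma=np.full_like(t, 0.005), absolute_sigma=True)
perr = np.sqrt(np.diag(pcov))
```

Fixing the decay time reduces the fit to three parameters. A good initial frequency matters because the LSQ surface of an oscillatory model has many local minima in frequency.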

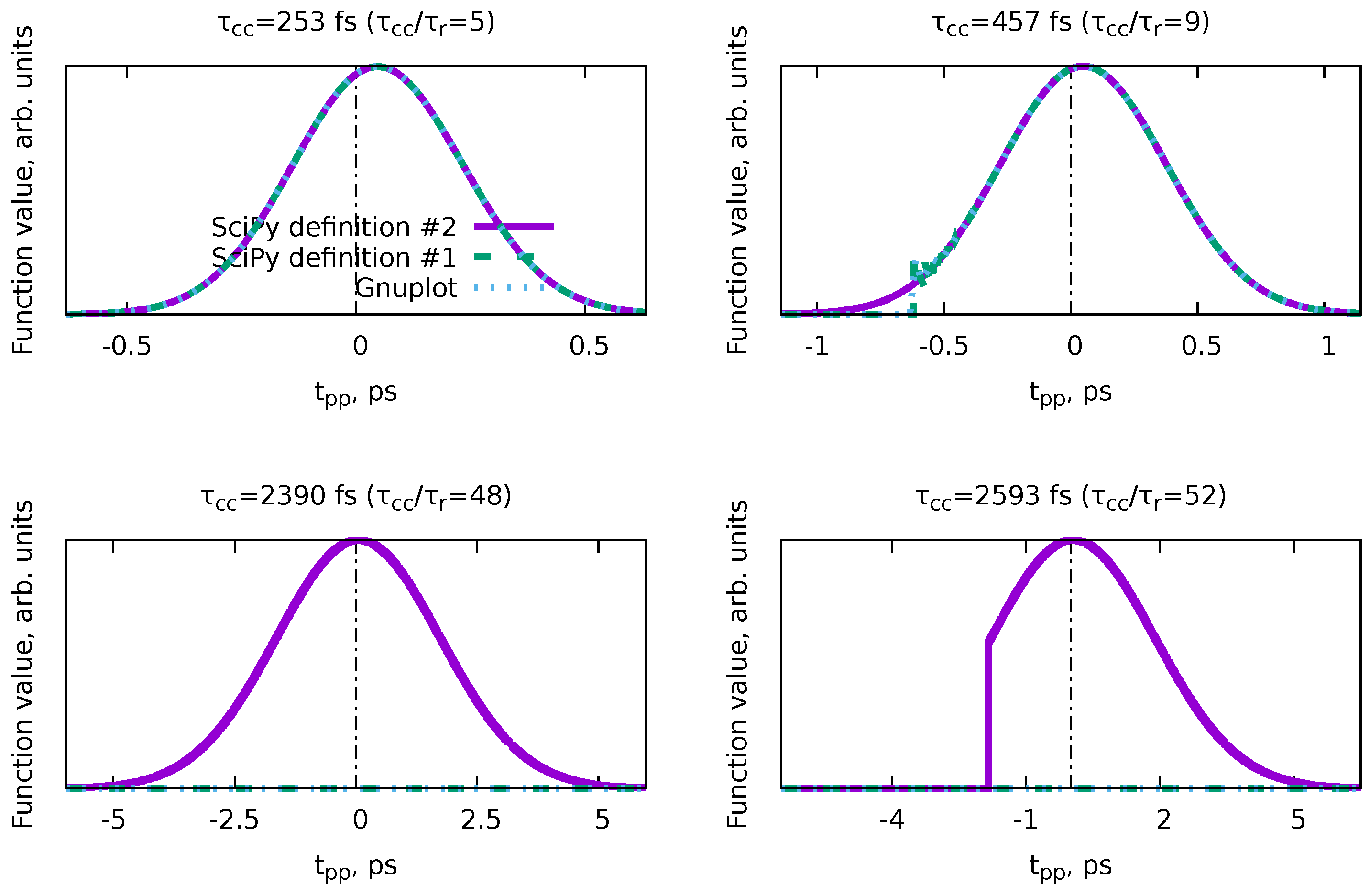

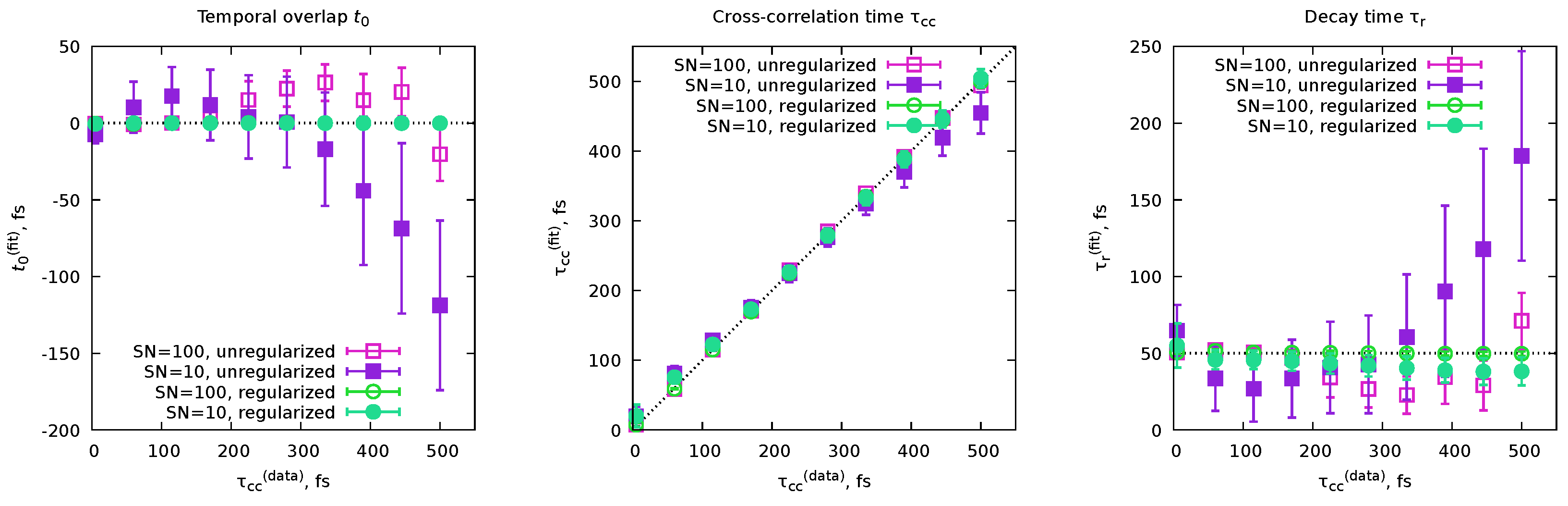

6.4. Cross-correlation time and time resolution

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| FEL | free-electron laser |
| FT | Fourier transform |
| IR | infrared |
| LSQ | least-squares |
| (rw)LSSA | (regularized weighted) least-squares spectral analysis |
| MC | Monte-Carlo |
| PAH | polycyclic aromatic hydrocarbon |
| SN | signal-to-noise ratio |
| XUV | extreme ultraviolet |

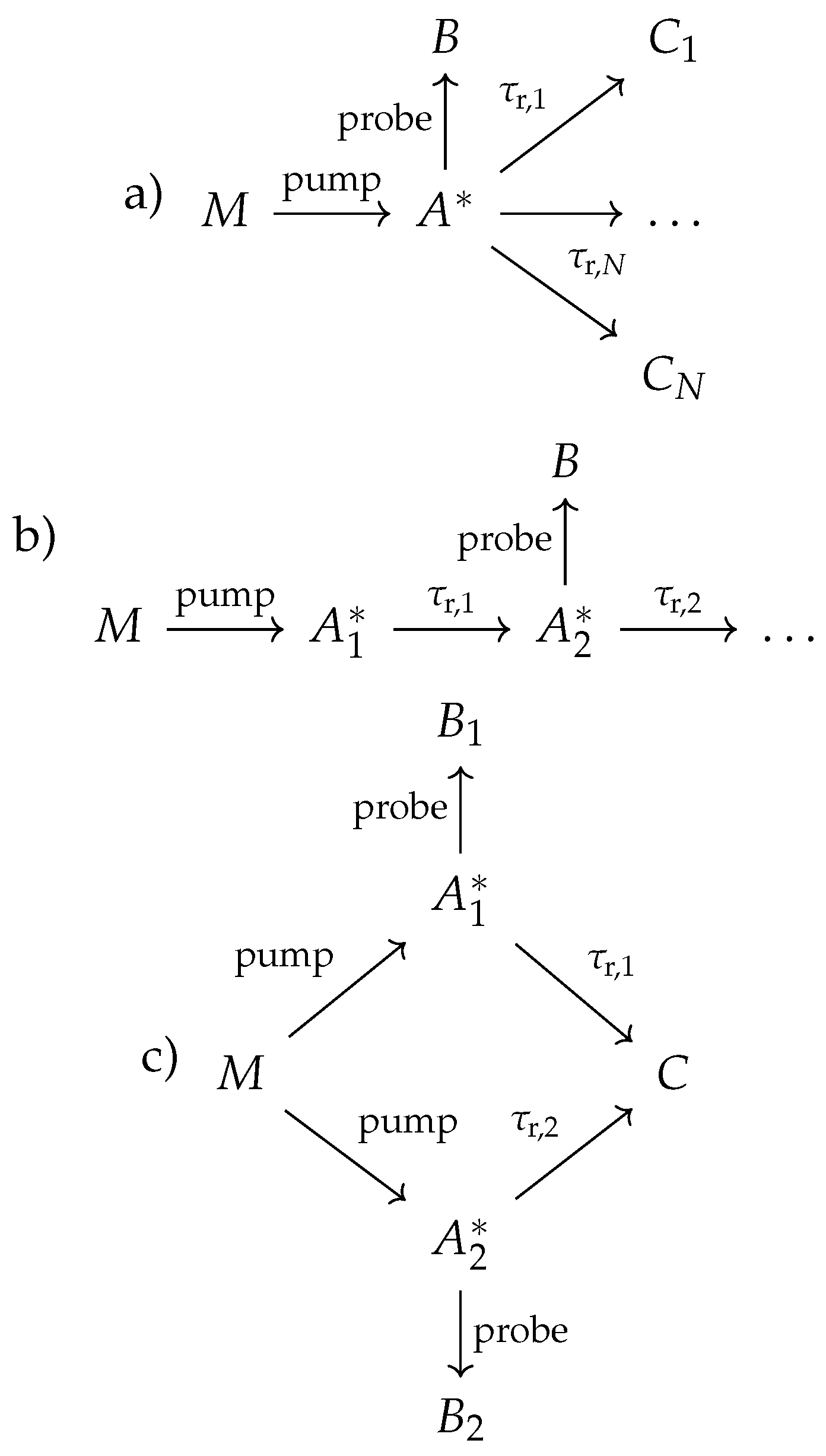

Appendix A Detailed derivations of delta-shaped pump-probe dynamics

Appendix A.1. Solution of the first order kinetics equations

Appendix A.2. Coherent quantum dynamics without decay

Appendix A.3. Relation between quantum and classical regimes

Appendix A.4. Coherent quantum dynamics with decay

Appendix A.5. Reaction scheme with multiple products

Appendix A.6. Reaction scheme with sequential metastable intermediates

Appendix A.7. Reaction scheme with multiple intermediates forming single product

Appendix A.8. General form of the decay dynamics pump-probe equations

Appendix B Effects of the duration of the pulses and experimental setup jitter

Appendix B.1. Sequential convolution with Gaussian-shaped pulses

Appendix B.2. Basis functions for fitting observables with finite duration pump/probe pulses and experimental jitter

Appendix B.2.1. Constant function

Appendix B.2.2. Step function

Appendix B.2.3. Transient function

Appendix B.2.4. Instant increase function

Appendix B.2.5. Non-decaying coherent oscillation function

Appendix B.2.6. Transient coherent oscillation function

Appendix C Regularized weighted least-squares spectral analysis (rwLSSA)

- is the N-dimensional vector of the data points.

- is the M-dimensional vector of spectral representation.

- is the matrix of size with elements .

- is the diagonal matrix of weights.

- is the regularization parameter.

- is the covariance matrix defined as , where is the unit matrix of size .
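The following is a minimal sketch of a regularized weighted least-squares spectral estimate in the spirit of the definitions above. It makes two assumptions. First, the matrix columns are cosines and sines at trial angular frequencies, so the spectral vector has two entries per frequency; the paper's matrix elements, and the sine-only variant of ref. 68, may differ. Second, the solution and covariance-type matrix take the standard Tikhonov-regularized forms $\hat{\mathbf x} = (A^\top W A + \alpha I)^{-1}A^\top W\mathbf y$ and $(A^\top W A + \alpha I)^{-1}$. The function name `rwlssa` is hypothetical.

```python
import numpy as np


def rwlssa(t, y, w, omegas, alpha):
    """Regularized weighted LSQ spectrum with cosine and sine columns per frequency.

    Returns amplitude and phase per trial angular frequency, plus the matrix
    (A^T W A + alpha I)^-1 used here as the covariance-type matrix.
    """
    arg = np.outer(t, omegas)                          # N x M
    A = np.hstack([np.cos(arg), np.sin(arg)])          # N x 2M
    Aw = A * w[:, None]                                # W A for a diagonal W
    normal = A.T @ Aw + alpha * np.eye(A.shape[1])
    cov = np.linalg.inv(normal)
    x = cov @ (Aw.T @ y)                               # (A^T W A + alpha I)^-1 A^T W y
    m = len(omegas)
    a, b = x[:m], x[m:]
    amplitude = np.hypot(a, b)
    phase = np.arctan2(-b, a)   # a cos(wt) + b sin(wt) = R cos(wt + phase)
    return amplitude, phase, cov


rng = np.random.default_rng(4)
t = np.linspace(0.0, 1000.0, 400)                      # delays, fs
y = 0.05 * np.cos(2 * np.pi / 120.0 * t + 0.4) + rng.normal(0.0, 0.005, t.size)
omegas = np.linspace(0.005, 0.15, 59)                  # trial angular frequencies, rad/fs
amp, phase, cov = rwlssa(t, y, np.ones(t.size), omegas, alpha=1.0)
k = int(np.argmax(amp))
omega_guess, phase_guess = omegas[k], phase[k]
```

The regularization term matters most when the frequency grid is finer than the resolution set by the delay range, because neighboring columns then become strongly correlated and the unregularized normal matrix is ill-conditioned.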

Appendix D Issues with the numerical implementation of the $B_\mathrm{t}(t_\mathrm{pp})$ basis function

References

- Zewail, A.H. Femtochemistry: Atomic-Scale Dynamics of the Chemical Bond Using Ultrafast Lasers (Nobel Lecture). Angewandte Chemie International Edition 2000, 39, 2586–2631.

- Young, L.; Ueda, K.; Gühr, M.; Bucksbaum, P.H.; Simon, M.; Mukamel, S.; Rohringer, N.; Prince, K.C.; Masciovecchio, C.; Meyer, M.; Rudenko, A.; Rolles, D.; Bostedt, C.; Fuchs, M.; Reis, D.A.; Santra, R.; Kapteyn, H.; Murnane, M.; Ibrahim, H.; Légaré, F.; Vrakking, M.; Isinger, M.; Kroon, D.; Gisselbrecht, M.; L’Huillier, A.; Wörner, H.J.; Leone, S.R. Roadmap of ultrafast x-ray atomic and molecular physics. Journal of Physics B: Atomic, Molecular and Optical Physics 2018, 51, 032003. [CrossRef]

- Zettergren, H.; Domaracka, A.; Schlathölter, T.; Bolognesi, P.; Díaz-Tendero, S.; Łabuda, M.; Tosic, S.; Maclot, S.; Johnsson, P.; Steber, A.; Tikhonov, D.; Castrovilli, M.C.; Avaldi, L.; Bari, S.; Milosavljević, A.R.; Palacios, A.; Faraji, S.; Piekarski, D.G.; Rousseau, P.; Ascenzi, D.; Romanzin, C.; Erdmann, E.; Alcamí, M.; Kopyra, J.; Limão-Vieira, P.; Kočišek, J.; Fedor, J.; Albertini, S.; Gatchell, M.; Cederquist, H.; Schmidt, H.T.; Gruber, E.; Andersen, L.H.; Heber, O.; Toker, Y.; Hansen, K.; Noble, J.A.; Jouvet, C.; Kjær, C.; Nielsen, S.B.; Carrascosa, E.; Bull, J.; Candian, A.; Petrignani, A. Roadmap on dynamics of molecules and clusters in the gas phase. The European Physical Journal D 2021, 75, 152. [CrossRef]

- Hertel, I.V.; Radloff, W. Ultrafast dynamics in isolated molecules and molecular clusters. Reports on Progress in Physics 2006, 69, 1897. [CrossRef]

- Scutelnic, V.; Tsuru, S.; Pápai, M.; Yang, Z.; Epshtein, M.; Xue, T.; Haugen, E.; Kobayashi, Y.; Krylov, A.I.; Møller, K.B.; Coriani, S.; Leone, S.R. X-ray transient absorption reveals the 1Au (nπ*) state of pyrazine in electronic relaxation. Nature Communications 2021, 12, 5003. [CrossRef]

- Lee, J.W.L.; Tikhonov, D.S.; Chopra, P.; Maclot, S.; Steber, A.L.; Gruet, S.; Allum, F.; Boll, R.; Cheng, X.; Düsterer, S.; Erk, B.; Garg, D.; He, L.; Heathcote, D.; Johny, M.; Kazemi, M.M.; Köckert, H.; Lahl, J.; Lemmens, A.K.; Loru, D.; Mason, R.; Müller, E.; Mullins, T.; Olshin, P.; Passow, C.; Peschel, J.; Ramm, D.; Rompotis, D.; Schirmel, N.; Trippel, S.; Wiese, J.; Ziaee, F.; Bari, S.; Burt, M.; Küpper, J.; Rijs, A.M.; Rolles, D.; Techert, S.; Eng-Johnsson, P.; Brouard, M.; Vallance, C.; Manschwetus, B.; Schnell, M. Time-resolved relaxation and fragmentation of polycyclic aromatic hydrocarbons investigated in the ultrafast XUV-IR regime. Nature Communications 2021, 12, 6107. [CrossRef]

- Garg, D.; Lee, J.W.L.; Tikhonov, D.S.; Chopra, P.; Steber, A.L.; Lemmens, A.K.; Erk, B.; Allum, F.; Boll, R.; Cheng, X.; Düsterer, S.; Gruet, S.; He, L.; Heathcote, D.; Johny, M.; Kazemi, M.M.; Köckert, H.; Lahl, J.; Loru, D.; Maclot, S.; Mason, R.; Müller, E.; Mullins, T.; Olshin, P.; Passow, C.; Peschel, J.; Ramm, D.; Rompotis, D.; Trippel, S.; Wiese, J.; Ziaee, F.; Bari, S.; Burt, M.; Küpper, J.; Rijs, A.M.; Rolles, D.; Techert, S.; Eng-Johnsson, P.; Brouard, M.; Vallance, C.; Manschwetus, B.; Schnell, M. Fragmentation Dynamics of Fluorene Explored Using Ultrafast XUV-Vis Pump-Probe Spectroscopy. Frontiers in Physics 2022, 10. [CrossRef]

- Calegari, F.; Ayuso, D.; Trabattoni, A.; Belshaw, L.; Camillis, S.D.; Anumula, S.; Frassetto, F.; Poletto, L.; Palacios, A.; Decleva, P.; Greenwood, J.B.; Martín, F.; Nisoli, M. Ultrafast electron dynamics in phenylalanine initiated by attosecond pulses. Science 2014, 346, 336–339, [https://www.science.org/doi/pdf/10.1126/science.1254061]. [CrossRef]

- Wolf, T.J.A.; Paul, A.C.; Folkestad, S.D.; Myhre, R.H.; Cryan, J.P.; Berrah, N.; Bucksbaum, P.H.; Coriani, S.; Coslovich, G.; Feifel, R.; Martinez, T.J.; Moeller, S.P.; Mucke, M.; Obaid, R.; Plekan, O.; Squibb, R.J.; Koch, H.; Gühr, M. Transient resonant Auger–Meitner spectra of photoexcited thymine. Faraday Discuss. 2021, 228, 555–570. [CrossRef]

- Onvlee, J.; Trippel, S.; Küpper, J. Ultrafast light-induced dynamics in the microsolvated biomolecular indole chromophore with water. Nature Communications 2022, 13, 7462. [CrossRef]

- Malý, P.; Brixner, T. Fluorescence-Detected Pump–Probe Spectroscopy. Angewandte Chemie International Edition 2021, 60, 18867–18875, [https://onlinelibrary.wiley.com/doi/pdf/10.1002/anie.202102901]. [CrossRef]

- Press, W.H.; Teukolsky, S.A.; Vetterling, W.T.; Flannery, B.P. Numerical Recipes 3rd Edition: The Art of Scientific Computing, 3 ed.; Cambridge University Press: USA, 2007.

- Mosegaard, K.; Tarantola, A. Monte Carlo sampling of solutions to inverse problems. Journal of Geophysical Research: Solid Earth 1995, 100, 12431–12447, [https://agupubs.onlinelibrary.wiley.com/doi/pdf/10.1029/94JB03097]. [CrossRef]

- Bingham, D.; Butler, T.; Estep, D. Inverse Problems for Physics-Based Process Models. Annual Review of Statistics and Its Application 2024, 11. [CrossRef]

- Tikhonov, A.N. Solution of incorrectly formulated problems and the regularization method. Soviet Math. Dokl. 1963, 4, 1035–1038.

- Tikhonov, A.; Leonov, A.; Yagola, A. Nonlinear ill-posed problems; Chapman & Hall: London, 1998.

- Hoerl, A.E.; Kennard, R.W. Ridge Regression: Biased Estimation for Nonorthogonal Problems. Technometrics 1970, 12, 55–67, [https://www.tandfonline.com/doi/pdf/10.1080/00401706.1970.10488634]. [CrossRef]

- Pedersen, S.; Zewail, A.H. Femtosecond real time probing of reactions. XXII. Kinetic description of probe absorption, fluorescence, depletion and mass spectrometry. Molecular Physics 1996, 89, 1455–1502. [CrossRef]

- Hadamard, J. Sur les problèmes aux dérivées partielles et leur signification physique. Princeton University Bulletin 1902, 13, 49–52.

- Tikhonov, D.S.; Vishnevskiy, Y.V.; Rykov, A.N.; Grikina, O.E.; Khaikin, L.S. Semi-experimental equilibrium structure of pyrazinamide from gas-phase electron diffraction. How much experimental is it? Journal of Molecular Structure 2017, 1132, 20–27. Gas electron diffraction and molecular structure. [CrossRef]

- Hestenes, M.R.; Stiefel, E. Methods of conjugate gradients for solving linear systems. Journal of research of the National Bureau of Standards 1952, 49, 409–436.

- Powell, M.J.D. An efficient method for finding the minimum of a function of several variables without calculating derivatives. The Computer Journal 1964, 7, 155–162, [https://academic.oup.com/comjnl/article-pdf/7/2/155/959784/070155.pdf]. [CrossRef]

- Tibshirani, R. Regression Shrinkage and Selection via the Lasso. Journal of the Royal Statistical Society. Series B (Methodological) 1996, 58, 267–288.

- Santosa, F.; Symes, W.W. Linear Inversion of Band-Limited Reflection Seismograms. SIAM Journal on Scientific and Statistical Computing 1986, 7, 1307–1330. [CrossRef]

- Bauer, F.; Lukas, M.A. Comparing parameter choice methods for regularization of ill-posed problems. Mathematics and Computers in Simulation 2011, 81, 1795–1841. [CrossRef]

- Chiu, N.; Ewbank, J.; Askari, M.; Schäfer, L. Molecular orbital constrained gas electron diffraction studies: Part I. Internal rotation in 3-chlorobenzaldehyde. Journal of Molecular Structure 1979, 54, 185–195. [CrossRef]

- Baše, T.; Holub, J.; Fanfrlík, J.; Hnyk, D.; Lane, P.D.; Wann, D.A.; Vishnevskiy, Y.V.; Tikhonov, D.; Reuter, C.G.; Mitzel, N.W. Icosahedral Carbaboranes with Peripheral Hydrogen–Chalcogenide Groups: Structures from Gas Electron Diffraction and Chemical Shielding in Solution. Chemistry – A European Journal 2019, 25, 2313–2321, [https://chemistry-europe.onlinelibrary.wiley.com/doi/pdf/10.1002/chem.201805145]. [CrossRef]

- Vishnevskiy, Y.V.; Tikhonov, D.S.; Reuter, C.G.; Mitzel, N.W.; Hnyk, D.; Holub, J.; Wann, D.A.; Lane, P.D.; Berger, R.J.F.; Hayes, S.A. Influence of Antipodally Coupled Iodine and Carbon Atoms on the Cage Structure of 9,12-I2-closo-1,2-C2B10H10: An Electron Diffraction and Computational Study. Inorganic Chemistry 2015, 54, 11868–11874. PMID: 26625008. [CrossRef]

- Metropolis, N.; Rosenbluth, A.W.; Rosenbluth, M.N.; Teller, A.H.; Teller, E. Equation of State Calculations by Fast Computing Machines. The Journal of Chemical Physics 1953, 21, 1087–1092, [https://pubs.aip.org/aip/jcp/article-pdf/21/6/1087/8115285/1087_1_online.pdf]. [CrossRef]

- Hastings, W.K. Monte Carlo sampling methods using Markov chains and their applications. Biometrika 1970, 57, 97–109, [https://academic.oup.com/biomet/article-pdf/57/1/97/23940249/57-1-97.pdf]. [CrossRef]

- Rosenbluth, M.N. Genesis of the Monte Carlo Algorithm for Statistical Mechanics. AIP Conference Proceedings 2003, 690, 22–30, [https://pubs.aip.org/aip/acp/article-pdf/690/1/22/11551003/22_1_online.pdf]. [CrossRef]

- Snellenburg, J.J.; Laptenok, S.; Seger, R.; Mullen, K.M.; van Stokkum, I.H.M. Glotaran: A Java-Based Graphical User Interface for the R Package TIMP. Journal of Statistical Software 2012, 49, 1–22. [CrossRef]

- Müller, C.; Pascher, T.; Eriksson, A.; Chabera, P.; Uhlig, J. KiMoPack: A python Package for Kinetic Modeling of the Chemical Mechanism. The Journal of Physical Chemistry A 2022, 126, 4087–4099. PMID: 35700393. [CrossRef]

- Arecchi, F.; Bonifacio, R. Theory of optical maser amplifiers. IEEE Journal of Quantum Electronics 1965, 1, 169–178. [CrossRef]

- Lindh, L.; Pascher, T.; Persson, S.; Goriya, Y.; Wärnmark, K.; Uhlig, J.; Chábera, P.; Persson, P.; Yartsev, A. Multifaceted Deactivation Dynamics of Fe(II) N-Heterocyclic Carbene Photosensitizers. The Journal of Physical Chemistry A 2023, 127, 10210–10222, PMID: 38000043. [CrossRef]

- Brückmann, J.; Müller, C.; Friedländer, I.; Mengele, A.K.; Peneva, K.; Dietzek-Ivanšić, B.; Rau, S. Photocatalytic Reduction of Nicotinamide Co-factor by Perylene Sensitized RhIII Complexes. Chemistry – A European Journal 2022, 28, e202201931, [https://chemistry-europe.onlinelibrary.wiley.com/doi/pdf/10.1002/chem.202201931]. [CrossRef]

- Shchatsinin, I.; Laarmann, T.; Zhavoronkov, N.; Schulz, C.P.; Hertel, I.V. Ultrafast energy redistribution in C60 fullerenes: A real time study by two-color femtosecond spectroscopy. The Journal of Chemical Physics 2008, 129, 204308, [https://pubs.aip.org/aip/jcp/article-pdf/doi/10.1063/1.3026734/13421893/204308_1_online.pdf]. [CrossRef]

- van Stokkum, I.H.; Larsen, D.S.; van Grondelle, R. Global and target analysis of time-resolved spectra. Biochimica et Biophysica Acta (BBA) - Bioenergetics 2004, 1657, 82–104. [CrossRef]

- Connors, K. Chemical Kinetics: The Study of Reaction Rates in Solution; VCH, 1990.

- Atkins, P.; de Paula, J. Atkins’ Physical Chemistry; Oxford University Press, 2008.

- Rivas, D.E.; Serkez, S.; Baumann, T.M.; Boll, R.; Czwalinna, M.K.; Dold, S.; de Fanis, A.; Gerasimova, N.; Grychtol, P.; Lautenschlager, B.; Lederer, M.; Jezynksi, T.; Kane, D.; Mazza, T.; Meier, J.; Müller, J.; Pallas, F.; Rompotis, D.; Schmidt, P.; Schulz, S.; Usenko, S.; Venkatesan, S.; Wang, J.; Meyer, M. High-temporal-resolution X-ray spectroscopy with free-electron and optical lasers. Optica 2022, 9, 429–430. [CrossRef]

- Debnath, T.; Mohd Yusof, M.S.B.; Low, P.J.; Loh, Z.H. Ultrafast structural rearrangement dynamics induced by the photodetachment of phenoxide in aqueous solution. Nature Communications 2019, 10, 2944. [CrossRef]

- Atkins, P.; Friedman, R. Molecular Quantum Mechanics; OUP Oxford, 2011.

- Manzano, D. A short introduction to the Lindblad master equation. AIP Advances 2020, 10, 025106, [https://pubs.aip.org/aip/adv/article-pdf/doi/10.1063/1.5115323/12881278/025106_1_online.pdf]. [CrossRef]

- Tikhonov, D.S.; Blech, A.; Leibscher, M.; Greenman, L.; Schnell, M.; Koch, C.P. Pump-probe spectroscopy of chiral vibrational dynamics. Science Advances 2022, 8, eade0311, [https://www.science.org/doi/pdf/10.1126/sciadv.ade0311]. [CrossRef]

- Sun, W.; Tikhonov, D.S.; Singh, H.; Steber, A.L.; Pérez, C.; Schnell, M. Inducing transient enantiomeric excess in a molecular quantum racemic mixture with microwave fields. Nature Communications 2023, 14, 934. [CrossRef]

- Hatano, N. Exceptional points of the Lindblad operator of a two-level system. Molecular Physics 2019, 117, 2121–2127. [CrossRef]

- Schulz, S.; Grguraš, I.; Behrens, C.; Bromberger, H.; Costello, J.T.; Czwalinna, M.K.; Felber, M.; Hoffmann, M.C.; Ilchen, M.; Liu, H.Y.; Mazza, T.; Meyer, M.; Pfeiffer, S.; Prędki, P.; Schefer, S.; Schmidt, C.; Wegner, U.; Schlarb, H.; Cavalieri, A.L. Femtosecond all-optical synchronization of an X-ray free-electron laser. Nature Communications 2015, 6, 5938. [CrossRef]

- Savelyev, E.; Boll, R.; Bomme, C.; Schirmel, N.; Redlin, H.; Erk, B.; Düsterer, S.; Müller, E.; Höppner, H.; Toleikis, S.; Müller, J.; Czwalinna, M.K.; Treusch, R.; Kierspel, T.; Mullins, T.; Trippel, S.; Wiese, J.; Küpper, J.; Brauβe, F.; Krecinic, F.; Rouzée, A.; Rudawski, P.; Johnsson, P.; Amini, K.; Lauer, A.; Burt, M.; Brouard, M.; Christensen, L.; Thøgersen, J.; Stapelfeldt, H.; Berrah, N.; Müller, M.; Ulmer, A.; Techert, S.; Rudenko, A.; Rolles, D. Jitter-correction for IR/UV-XUV pump-probe experiments at the FLASH free-electron laser. New Journal of Physics 2017, 19, 043009. [CrossRef]

- Schirmel, N.; Alisauskas, S.; Hülsenbusch, T.; Manschwetus, B.; Mohr, C.; Winkelmann, L.; Große-Wortmann, U.; Zheng, J.; Lang, T.; Hartl, I. Long-Term Stabilization of Temporal and Spectral Drifts of a Burst-Mode OPCPA System. Conference on Lasers and Electro-Optics. Optica Publishing Group, 2019, p. STu4E.4. [CrossRef]

- Lambropoulos, P. Topics on Multiphoton Processes in Atoms; Academic Press, 1976; Vol. 12, Advances in Atomic and Molecular Physics, pp. 87–164. [CrossRef]

- Kheifets, A.S. Wigner time delay in atomic photoionization. Journal of Physics B: Atomic, Molecular and Optical Physics 2023, 56, 022001. [CrossRef]

- Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; van der Walt, S.J.; Brett, M.; Wilson, J.; Millman, K.J.; Mayorov, N.; Nelson, A.R.J.; Jones, E.; Kern, R.; Larson, E.; Carey, C.J.; Polat, İ.; Feng, Y.; Moore, E.W.; VanderPlas, J.; Laxalde, D.; Perktold, J.; Cimrman, R.; Henriksen, I.; Quintero, E.A.; Harris, C.R.; Archibald, A.M.; Ribeiro, A.H.; Pedregosa, F.; van Mulbregt, P.; SciPy 1.0 Contributors. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nature Methods 2020, 17, 261–272. [CrossRef]

- Chopra, P. Astrochemically Relevant Polycyclic Aromatic Hydrocarbons Investigated using Ultrafast Pump-probe Spectroscopy and Near-edge X-ray Absorption Fine Structure Spectroscopy. PhD thesis, Christian-Albrechts-Universität zu Kiel (Germany), 2022.

- Garg, D. Electronic structure and ultrafast fragmentation dynamics of polycyclic aromatic hydrocarbons. PhD thesis, Universität Hamburg (Germany), 2023.

- Douguet, N.; Grum-Grzhimailo, A.N.; Bartschat, K. Above-threshold ionization in neon produced by combining optical and bichromatic XUV femtosecond laser pulses. Phys. Rev. A 2017, 95, 013407. [CrossRef]

- Strüder, L.; Epp, S.; Rolles, D.; Hartmann, R.; Holl, P.; Lutz, G.; Soltau, H.; Eckart, R.; Reich, C.; Heinzinger, K.; Thamm, C.; Rudenko, A.; Krasniqi, F.; Kühnel, K.U.; Bauer, C.; Schröter, C.D.; Moshammer, R.; Techert, S.; Miessner, D.; Porro, M.; Hälker, O.; Meidinger, N.; Kimmel, N.; Andritschke, R.; Schopper, F.; Weidenspointner, G.; Ziegler, A.; Pietschner, D.; Herrmann, S.; Pietsch, U.; Walenta, A.; Leitenberger, W.; Bostedt, C.; Möller, T.; Rupp, D.; Adolph, M.; Graafsma, H.; Hirsemann, H.; Gärtner, K.; Richter, R.; Foucar, L.; Shoeman, R.L.; Schlichting, I.; Ullrich, J. Large-format, high-speed, X-ray pnCCDs combined with electron and ion imaging spectrometers in a multipurpose chamber for experiments at 4th generation light sources. Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment 2010, 614, 483–496. [CrossRef]

- Erk, B.; Müller, J.P.; Bomme, C.; Boll, R.; Brenner, G.; Chapman, H.N.; Correa, J.; Düsterer, S.; Dziarzhytski, S.; Eisebitt, S.; Graafsma, H.; Grunewald, S.; Gumprecht, L.; Hartmann, R.; Hauser, G.; Keitel, B.; von Korff Schmising, C.; Kuhlmann, M.; Manschwetus, B.; Mercadier, L.; Müller, E.; Passow, C.; Plönjes, E.; Ramm, D.; Rompotis, D.; Rudenko, A.; Rupp, D.; Sauppe, M.; Siewert, F.; Schlosser, D.; Strüder, L.; Swiderski, A.; Techert, S.; Tiedtke, K.; Tilp, T.; Treusch, R.; Schlichting, I.; Ullrich, J.; Moshammer, R.; Möller, T.; Rolles, D. CAMP@FLASH: an end-station for imaging, electron- and ion-spectroscopy, and pump–probe experiments at the FLASH free-electron laser. Journal of Synchrotron Radiation 2018, 25, 1529–1540. [CrossRef]

- Rossbach, J., FLASH: The First Superconducting X-Ray Free-Electron Laser. In Synchrotron Light Sources and Free-Electron Lasers: Accelerator Physics, Instrumentation and Science Applications; Jaeschke, E.; Khan, S.; Schneider, J.R.; Hastings, J.B., Eds.; Springer International Publishing: Cham, 2014; pp. 1–22. [CrossRef]

- Beye, M.; Gühr, M.; Hartl, I.; Plönjes, E.; Schaper, L.; Schreiber, S.; Tiedtke, K.; Treusch, R. FLASH and the FLASH2020+ project—current status and upgrades for the free-electron laser in Hamburg at DESY. The European Physical Journal Plus 2023, 138, 193. [CrossRef]

- Garcia, J.D.; Mack, J.E. Energy Level and Line Tables for One-Electron Atomic Spectra. J. Opt. Soc. Am. 1965, 55, 654–685. [CrossRef]

- Martin, W.C. Improved ⁴He I 1snl ionization energy, energy levels, and Lamb shifts for 1sns and 1snp terms. Phys. Rev. A 1987, 36, 3575–3589. [CrossRef]

- Williams, T.; Kelley, C.; many others. Gnuplot 5.4: an interactive plotting program. http://www.gnuplot.info, 2021.

- Levenberg, K. A method for the solution of certain non-linear problems in least squares. Quarterly of Applied Mathematics 1944, 2, 164–168.

- Marquardt, D.W. An Algorithm for Least-Squares Estimation of Nonlinear Parameters. Journal of the Society for Industrial and Applied Mathematics 1963, 11, 431–441. [CrossRef]

- Akaike, H. A new look at the statistical model identification. IEEE Transactions on Automatic Control 1974, 19, 716–723. [CrossRef]

- Vaníček, P. Approximate spectral analysis by least-squares fit. Astrophysics and Space Science 1969, 4, 387–391. [CrossRef]

- Tikhonov, D.S. Regularized weighted sine least-squares spectral analysis for gas electron diffraction data. The Journal of Chemical Physics 2023, 159, 174101, [https://pubs.aip.org/aip/jcp/article-pdf/doi/10.1063/5.0168417/18246387/174101_1_5.0168417.pdf]. [CrossRef]

- Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; van der Walt, S.J.; Brett, M.; Wilson, J.; Millman, K.J.; Mayorov, N.; Nelson, A.R.J.; Jones, E.; Kern, R.; Larson, E.; Carey, C.J.; Polat, İ.; Feng, Y.; Moore, E.W.; VanderPlas, J.; Laxalde, D.; Perktold, J.; Cimrman, R.; Henriksen, I.; Quintero, E.A.; Harris, C.R.; Archibald, A.M.; Ribeiro, A.H.; Pedregosa, F.; van Mulbregt, P.; SciPy 1.0 Contributors. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nature Methods 2020, 17, 261–272. [CrossRef]

- Zurek, W.H. Decoherence and the Transition from Quantum to Classical. Physics Today 1991, 44, 36–44, [https://pubs.aip.org/physicstoday/article-pdf/44/10/36/8303336/36_1_online.pdf]. [CrossRef]

- Zurek, W.H. Decoherence and the Transition from Quantum to Classical – Revisited. Los Alamos Science 2002, 27.

| Parameter | Value, fs | |||||

| Global | Fit #0 | Fit #1 | Fit #2 | Fit #3 | Fit #4 | |

| ps | ||||||

| — | — | — | — | |||

| — | — | — | — | |||

| — | — | — | — | |||

| — | — | — | — | |||

| — | — | — | — | |||

| Parameter | Fit #1 | Fit #2 | PP(MC)3Fitting | ||

| Ini. | Fin. | Ini. | Fin. | ||

| ps | 0 | 0 | |||

| 97 | 97 | ||||

| 50 | 150 | ||||

| 50 | 150 | ||||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).