1. Introduction

Tobacco is one of the most important economic crops worldwide and is mainly produced in China, the United States, India and Brazil [1,2]. Tobacco plays an important role in China's national economy and occupies a large planting area, and Guizhou is an important province for tobacco planting. Tobacco has a long production cycle, high planting labor intensity and high technical requirements. Tobacco yield is closely associated with the survival rate of tobacco seedlings after transplanting. Obtaining accurate tobacco planting information is therefore important for monitoring seedling growth after transplanting, tobacco fertilization and field management [3]. Currently, tobacco seedling counting mainly relies on manual labor, which is time-consuming and labor-intensive. With the rapid development of lightweight and stable unmanned aerial vehicles (UAVs), UAV remote sensing technology has been widely used in crop plant protection, fertilization and growth monitoring [4,5,6]. Using deep learning on UAV remote sensing data to identify tobacco plants and monitor plant growth can save manpower and material resources and provide accurate information for large-scale growth monitoring, fertilization and transplanting. This is applicable to the management of high-value-added economic crop cultivation [7,8].

Guizhou is located in the central hinterland of the Southwest China Karst Region, one of the three major global karst regions. This region also has the most typical karst landscapes in the world, accounting for 62% of the total national land area. Mountains and hills make up 92.5% of Guizhou Province [9]. Guizhou, as the only province in China without the support of plains, belongs to one of the regions with the most significant karst landscape development in Southwest China. The province has high mountains, deep valleys and a fragmented surface due to topography and tectonics and is affected by cloudy, rainy and foggy weather and environmental differences. Consequently, it is difficult to obtain low- and medium-resolution satellite imagery data. Information on agricultural conditions cannot be acquired rapidly and efficiently, which fails to satisfy the needs of agricultural monitoring [10]. Using a UAV low-altitude remote sensing platform to obtain data has the advantages of low cost, high security, high mobility and customizability and effectively overcomes the inability of satellite remote sensing to obtain high-spatial-resolution images in a timely manner. Thus, real-time, macroscopic and accurate monitoring and assessment of crop growth can be performed through point-surface fusion, so that appropriate production and management measures can be formulated according to local conditions to improve crop quality and yield [11]. With the increasing maturity of UAV technology, UAV multispectral remote sensing has been widely used for crop growth monitoring in agriculture due to its advantages of strong band continuity, large amount of spectral data, centimeter-level resolution and the ability to reach areas of interest in a short period. This facilitates easier and faster Earth observation and monitoring [12,13,14].

Accurately extracting tobacco plant information in karst mountainous areas is challenging. Deep neural network approaches have been used to identify tobacco plants in UAV visible light images. Deep neural networks were proposed in 2006 and became a popular machine learning method [15]. Due to their robustness, deep neural networks have an impressive track record of applications in image analysis and interpretation [16], initially in biomedicine and later in agriculture [17,18]. Traditional methods such as support vector machine [19,20], color space [21], random forest [22], artificial neural network (ANN) [23,24] and hyperpixel space [25] place high demands on observer experience, are labor-intensive and extract information with insufficient accuracy for precision agriculture; deep learning methods can overcome these shortcomings. Chen et al. [26] applied deep neural networks to high-resolution images to identify strawberry yield, with average accuracies of 0.83 and 0.72 at aerial heights of 2 m and 3 m, respectively. Oh et al. [27] used deep learning target detection technology with UAV images for cotton seedling counting and analyzed plant density and precision management. The target detection network showed higher accuracy than traditional methods. Wu et al. [28] used deep learning to extract apple tree canopy information from remote sensing images. Their technique had a precision of 91.1% and a recall of 94.1% for apple tree detection and counting, an overall precision of 97.1% for branch segmentation and an overall precision of more than 92% for canopy parameter estimation. Deep learning methods can thus achieve higher accuracy than traditional methods. As the depth of deep learning models continues to increase, their feature representation ability and segmentation accuracy also increase. Despite these advantages, there are some shortcomings. Deeper models are more complex and require more training samples, higher hardware and software configurations and longer training time.

However, the U-Net model can overcome these shortcomings. Freudenberg et al. [29] used the U-Net neural network to identify palms in satellite images with a resolution of 40 cm and found that the method was reliable even in shaded or urban areas, with the palm identification accuracy ranging from 89% to 92%. Yang et al. [30] used FCN-AlexNet and SegNet models to estimate the rice lodging area in UAV imagery, and the F1-score reached 0.80 and 0.79, respectively. Using flue-cured cigar tobacco plants as the research object, Rao et al. [31] proposed a new deep learning model that learns the morphological features of the center of the tobacco plant from a few key features. They adopted a lightweight encoder and decoder to rapidly identify, locate and count tobacco plants in UAV remote sensing imagery, with an average accuracy of up to 99.6%. Li et al. [32] extracted dragon fruit plants from UAV visible images of different complex habitats based on the U-Net model, with identification accuracies of 85.06%, 98.83% and 99.20% for the initial, supplementary and extended datasets, respectively. Their experimental results show that increasing the type and number of samples can improve the model's accuracy in identifying dragon fruit plants, and they verify the applicability of the U-Net model for identifying features in plateau mountainous areas. Huang et al. [33] proposed an accurate extraction method for flue-cured tobacco planting areas based on a deep semantic segmentation model for UAV remote sensing images of plateau mountainous areas; 71 scene recognition images were semantically segmented using DeeplabV3+, PSPNet, SegNet and U-Net, with segmentation accuracies of 0.9436, 0.9118, 0.9392 and 0.9473, respectively. Deep learning-based methods can overcome the insufficient characterization ability of traditional machine vision methods but need a large amount of sample data for training, and models with more layers also require longer training time. In contrast, the U-Net model can obtain better recognition results with fewer training samples, needs less training time than CNN, FCN and other models and runs faster, which shortens experiments. Due to the complexity of the tobacco planting environment, it is difficult for traditional methods to extract high-precision tobacco plant information from UAV images. Thus, it is necessary to find a new method that decomposes complex scenes into multiple homogeneous scenes and then performs scene-by-scene identification to improve the overall accuracy.

In summary, this study used the U-Net model as a binary semantic segmentation method for UAV visible light images of tobacco plants at the root extension stage in complex habitats. According to the tobacco planting environment in the study area, the complex habitat was divided into eight tobacco plant recognition habitats by considering four main factors (i.e., plot fragmentation, plant size, presence of weeds and shadow masking). The accuracy of each scenario was evaluated to analyze the factors influencing the recognition accuracy. This can provide data and methodological support for promoting the application of UAV remote sensing in agriculture and accelerate agricultural dataset standardization and management refinement in the karst mountainous areas.

2. Materials and Methods

2.1. Study Area

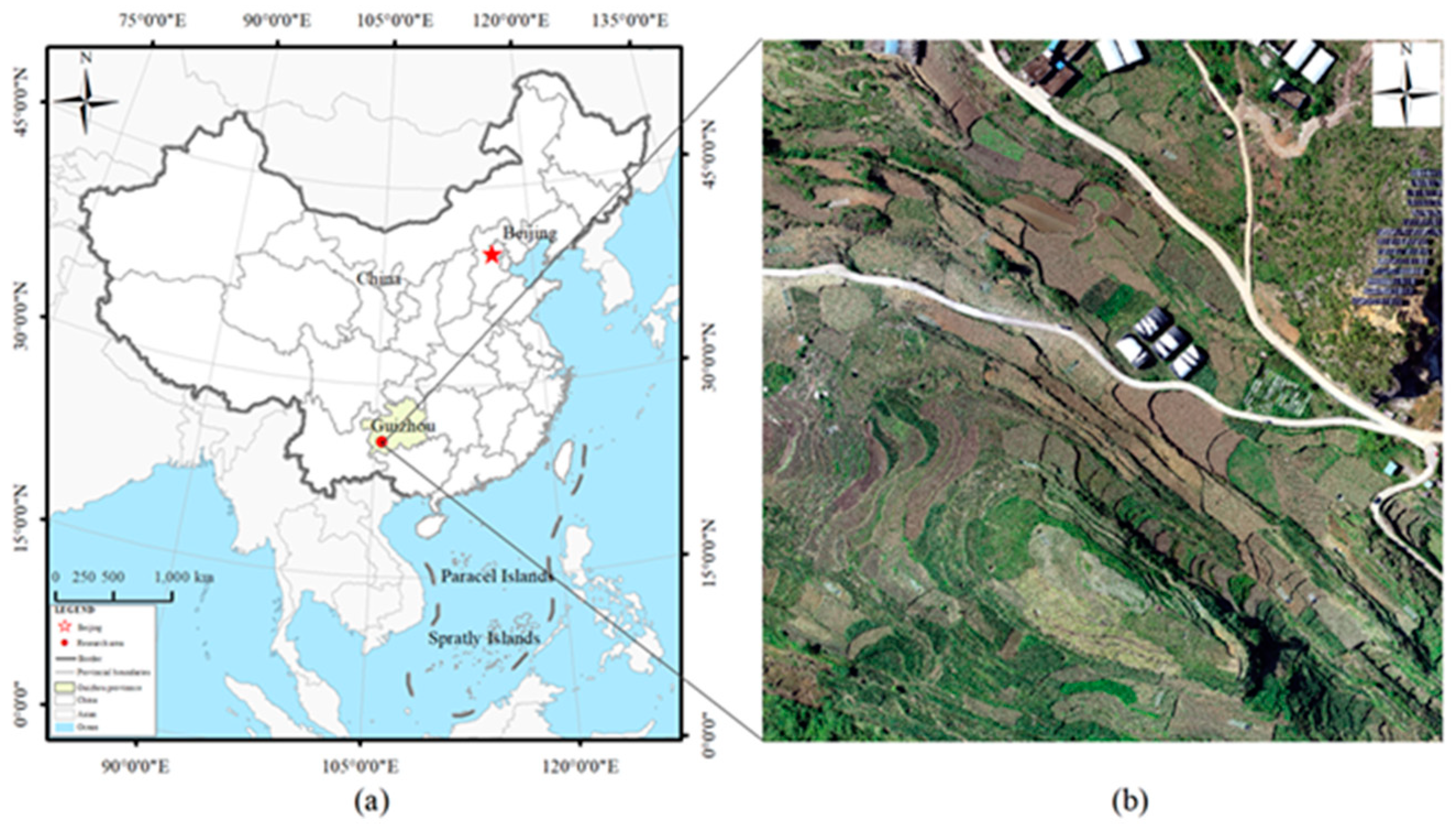

The study area (Figure 1) is located in Beipanjiang Town, Zhenfeng County, Qianxinan Prefecture, Guizhou Province (105°35'53''E, 25°36'08''N). Beipanjiang Town is a karst geomorphological area with a rugged and fragmented surface. The terrain is high in the south and low in the north, hilly in the northeast and smooth in the center, with complicated topography and a relative altitude of 1,475 m. The slopes of the Beipanjiang River Valley are deeply cut. The area has a humid subtropical monsoon climate with four distinct seasons. The annual average frost-free period, sunshine hours, number of precipitation days and precipitation are 300 days, 1549.2 h, 180 days and 1100 mm, respectively. With mild summers and winters and concurrent rain and heat, the area is well suited to tobacco planting. However, the study area has mountainous characteristics such as fragmented cropland, coexistence of regular and narrow croplands, coexistence of clear-contour and fuzzy-boundary croplands, cropland patches with high fragmentation and diverse farming methods. In 2023, more than 6,900 acres of tobacco were planted in Beipanjiang Town, which is expected to achieve an output value of more than 25 million Chinese yuan.

2.2. Data Acquisition and Preprocessing

To address the difficulty of acquiring satellite optical remote sensing data in karst mountainous areas due to rainy and cloudy weather and rugged and fragmented terrain, this study used the DJI Mavic 2 Pro v2.0 UAV as the image data acquisition platform. The platform carries a Hasselblad camera with a 1-inch CMOS sensor (20 megapixels, 5472 × 3684 pixel images) and has a maximum wind resistance of level 5. It is small, low-cost, mobile and flexible and does not require a wide, level site for take-off and landing. Thus, it is suitable for collecting data in mountainous environments with steep terrain, fragmented land and difficulties in obtaining high-precision satellite image data. The images were acquired between 15:00 and 16:00 on June 4, 2021, in clear weather with a wind force of 2.5, meeting the requirements for safe UAV operation. To ensure the accuracy and quality of the remote sensing images, the UAV captured images in the waypoint flight mode, with a heading overlap rate of 80%, a side overlap rate of 75% and a flight altitude of 120 m. These settings help produce clear, good-quality images.

The UAV photos were processed using Pix4Dmapper 4.0 software for initialization, feature point matching, image stitching, correction (deformation, distortion, blurring and noise due to UAV shaking), image enhancement, color smoothing, cropping and reconstruction to generate a high-resolution digital orthophoto map (DOM). Finally, orthophotos with a spatial resolution of 6.4 cm were obtained.

2.3. Network Modeling and Model Parameter Selection

2.3.1. U-Net Model

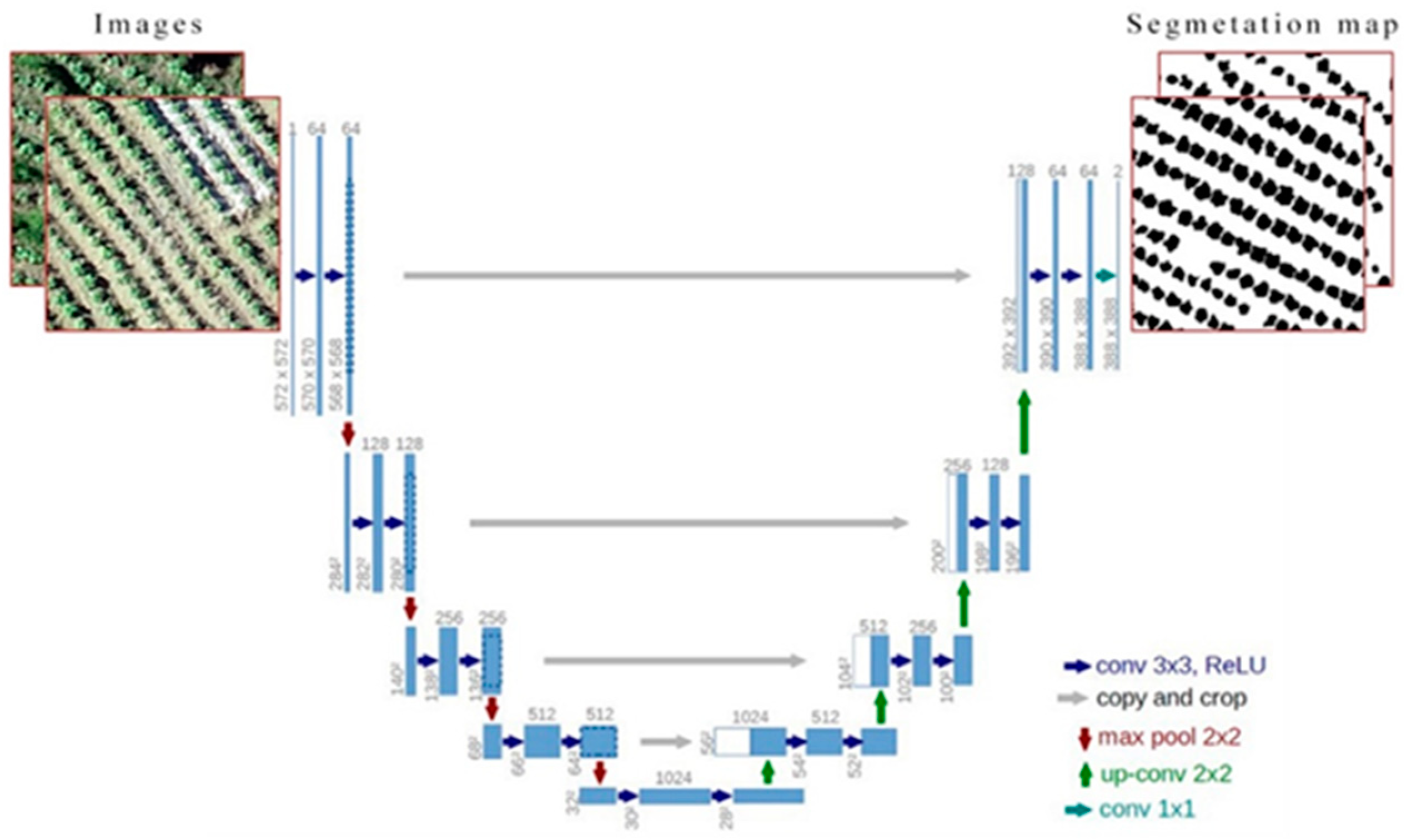

The U-Net model is a network structure based on convolutional neural networks (CNNs) proposed by Ronneberger et al. [34] in 2015. This model was initially applied to the semantic segmentation of medical images and achieved good performance in different biomedical segmentation applications [35]. The U-Net model was later applied to agriculture, and in recent years it has made great progress in agricultural remote sensing crop recognition [36,37,38,39,40]. Its structure is shown in Figure 2 and consists of the contracting path in the left half and the expansive path in the right half. The core idea of the model is the introduction of skip connections, which greatly improve the accuracy of image segmentation. In contrast to conventional CNNs, U-Net uses feature splicing (concatenation) to achieve feature fusion [41]. Owing to data augmentation by elastic deformation [42], U-Net requires fewer training samples and less training time and can achieve higher accuracy. The U-Net model has the advantage of "obtaining more accurate classification results with fewer training samples" and can extract local features while retaining global information. Therefore, the U-Net model is suitable for rapid crop classification.
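How skip (jump) connections and feature splicing line up in a U-shaped network can be illustrated by tracing feature-map shapes. The following is a minimal sketch and not the network trained in this study: the depth of four pooling steps, the 64 base channels, 'same' padding and the function name `unet_shapes` are all assumptions for illustration, while the 224 × 224 input follows the sample size used in this study.

```python
def unet_shapes(size=224, base_channels=64, depth=4):
    """Trace (height, width, channels) through a U-shaped encoder-decoder.

    Assumes 'same' padding, so only pooling and up-sampling change the size.
    """
    encoder = []
    channels = base_channels
    for _ in range(depth):
        encoder.append((size, size, channels))  # kept for the skip connection
        size //= 2        # 2 x 2 max pooling halves height and width
        channels *= 2     # channel count doubles at each level
    bottleneck = (size, size, channels)

    decoder = []
    for skip in reversed(encoder):
        size *= 2         # up-sampling doubles height and width
        channels //= 2    # up-convolution halves the channel count
        if (size, size) != skip[:2]:
            raise ValueError("skip and up-sampled feature maps do not align")
        spliced = channels + skip[2]  # feature splicing along the channel axis
        decoder.append((size, size, spliced, channels))
    return encoder, bottleneck, decoder


encoder, bottleneck, decoder = unet_shapes()
print(bottleneck)   # (14, 14, 1024)
print(decoder[-1])  # (224, 224, 128, 64)
```

Under these assumptions, a 224 × 224 input reaches a 14 × 14 bottleneck, and every up-sampled map has the same height and width as the encoder map it is concatenated with.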

2.3.2. Experimental Environment

The experiments were conducted on a professional imaging workstation running Windows 10 (ACPI x64). The computer was equipped with an NVIDIA GeForce RTX 2080 Ti GPU and an Intel(R) Core(TM) i9-10980XE CPU to accelerate the relevant operations. The study was based on the TensorFlow-GPU 2.0.0 deep learning framework and used the Adam optimizer. Keras 2.4.3, a high-level wrapper API around TensorFlow, was used to simplify model building. The initial learning rate was set to 0.0001 for model training, and the total number of iterations was 50. The model was repeatedly tuned to obtain optimal parameters and improve the recognition accuracy of the U-Net model.

2.3.3. Model Parameter Selection

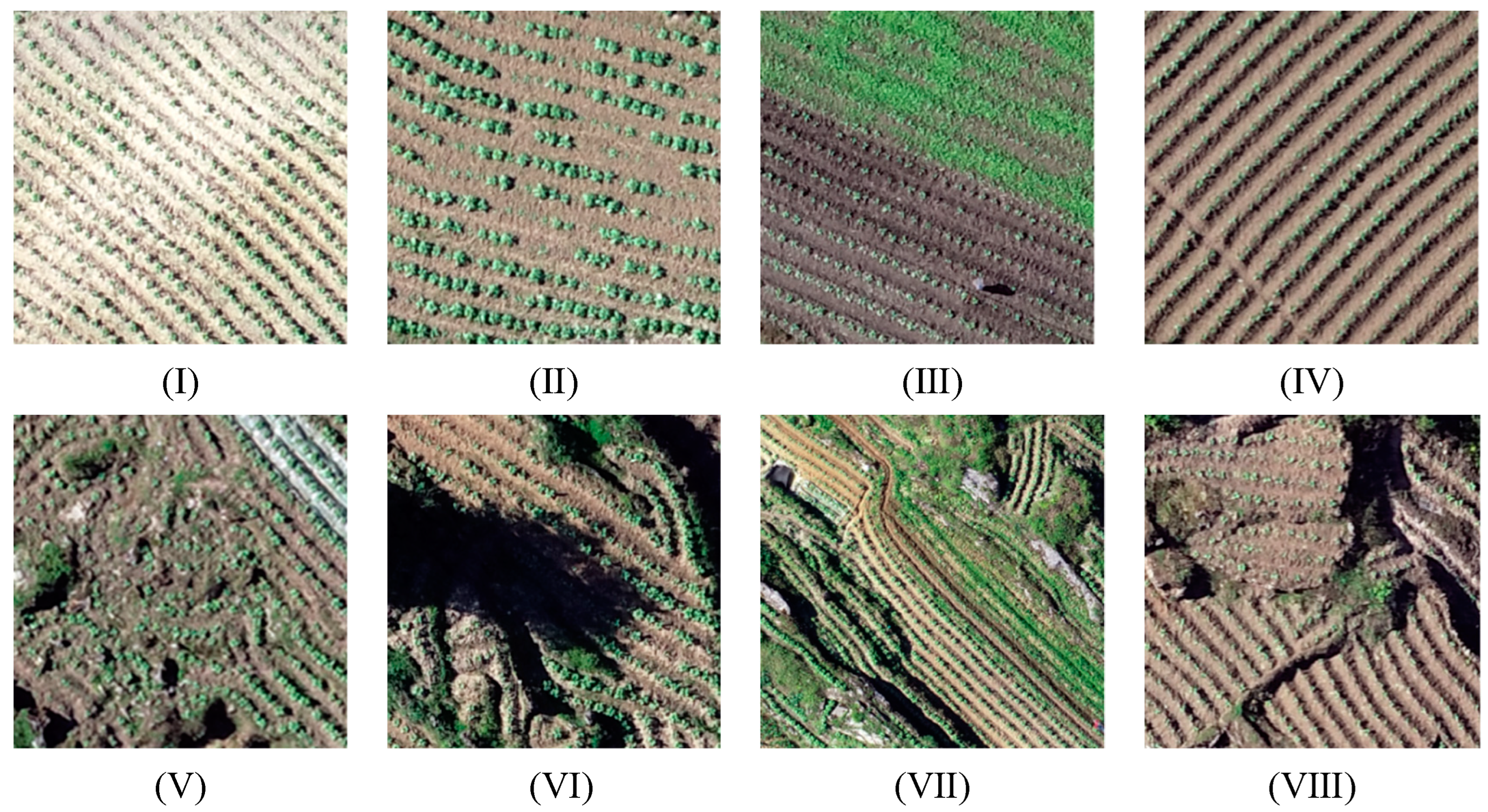

To study how the complex habitats of tobacco in the karst mountainous area influence tobacco identification and extraction with the U-Net model, two groups of training samples were preset for model training. In the first experiment, the model was trained with all the training samples and labels; in the second, it was trained with the samples of the eight habitats: smooth tectonics and weed-free (Ⅰ); smooth tectonics and unevenly growing (Ⅱ); smooth tectonics and weed-infested (Ⅲ); smooth tectonics and planted with smaller seedlings (Ⅳ); subsurface fragmented and weed-free (Ⅴ); surface fragmented and shadow-masked (Ⅵ); subsurface fragmented and weed-infested (Ⅶ); surface fragmented and planted with smaller seedlings (Ⅷ).

To obtain the optimal parameters for the tobacco identification model, the model was trained with several parameter settings and the training results were compared. Figure 3 presents the accuracy and loss curves of the model trained with different parameters. The parameters varied were the learning rate and the number of iterations, and the trends of the loss and accuracy curves differed between settings. To explore more suitable model parameters for flue-cured tobacco identification, all the samples and labels of the eight habitats were used together for model training, with a ratio of 8:2 between the training set and the test set. First, the number of iterations was set to 50, and the learning rate was set to 0.0001 (Figure 3a,b) and 0.001 (Figure 3c,d). The experiments show that a learning rate of 0.0001 was more suitable for the flue-cured tobacco identification model. Second, the learning rate was set to 0.0001, and the number of iterations was set to 100 and 50. With 50 iterations, the loss and accuracy curves in Figure 3e,f fitted better. The loss and accuracy curves under 100 iterations are shown in Figure 3g,h. After many rounds of model training, the comparative analysis shows that the model was more robust when the learning rate and the number of iterations were set to 0.0001 and 50, respectively. Therefore, a learning rate of 0.0001 and 50 iterations were used throughout model training.
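The 8:2 division into training and test sets described above can be sketched as follows. This is an illustrative sketch rather than the procedure actually used: the function name `split_samples`, the fixed random seed and the use of integer sample IDs are assumptions, and the count of 2300 is taken from the initial tobacco dataset described in Section 2.4.2.

```python
import random


def split_samples(sample_ids, train_fraction=0.8, seed=42):
    """Shuffle sample IDs and split them into training and test subsets."""
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)  # seeded so the split is reproducible
    n_train = round(len(ids) * train_fraction)
    return ids[:n_train], ids[n_train:]


train_ids, test_ids = split_samples(range(2300))
print(len(train_ids), len(test_ids))  # 1840 460
```

Fixing the seed keeps the same samples in the test set across runs, which makes comparisons between learning rates and iteration counts easier to interpret.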

2.4. Dataset Construction

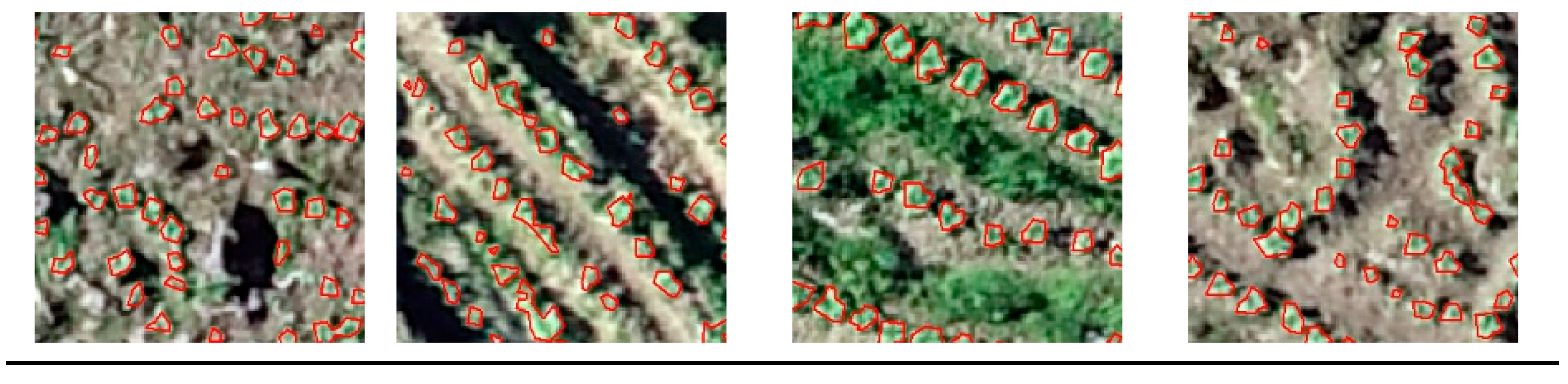

2.4.1. Classification of Complex Habitat for Tobacco

The dataset used for model training, also known as the training sample, is the basis of the whole model classification algorithm. The quality of the training samples directly affects the classification results. Therefore, representative and typical samples that cover the region's sample points completely should be selected. To better extract information on tobacco in complex habitats, the UAV visible light images acquired in June 2021 were used to extract tobacco plants according to the complexity of the tobacco planting habitat in the study area. The tobacco plants were at the rooting stage, with nonuniform growth and size. To better analyze the model recognition accuracy under complex crop growth habitats and explore the suitability of different habitats, four main factors were considered according to the tobacco planting habitat in the study area: plot fragmentation, plant size, the presence of weeds and shadow masking. Eight habitats were then classified, as shown in Figure 4: smooth tectonics and weed-free (Ⅰ); smooth tectonics and unevenly growing (Ⅱ); smooth tectonics and weed-infested (Ⅲ); smooth tectonics and planted with smaller seedlings (Ⅳ); subsurface fragmented and weed-free (Ⅴ); surface fragmented and shadow-masked (Ⅵ); subsurface fragmented and weed-infested (Ⅶ); surface fragmented and planted with smaller seedlings (Ⅷ). Training samples were constructed from the UAV visible light images under this scene classification system.

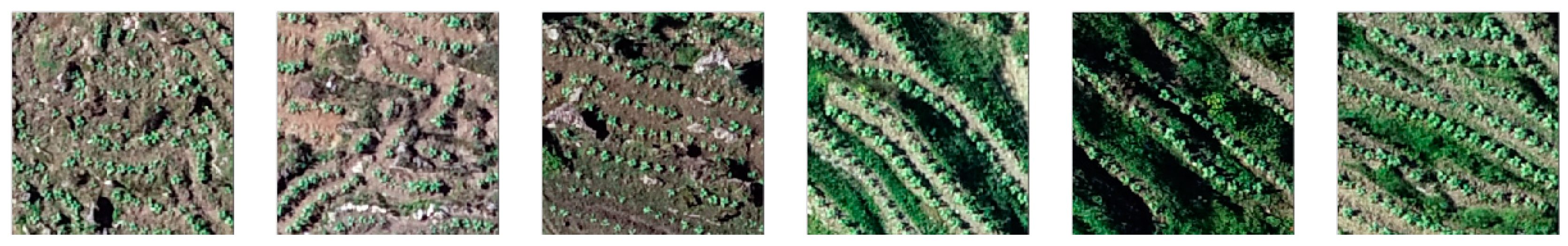

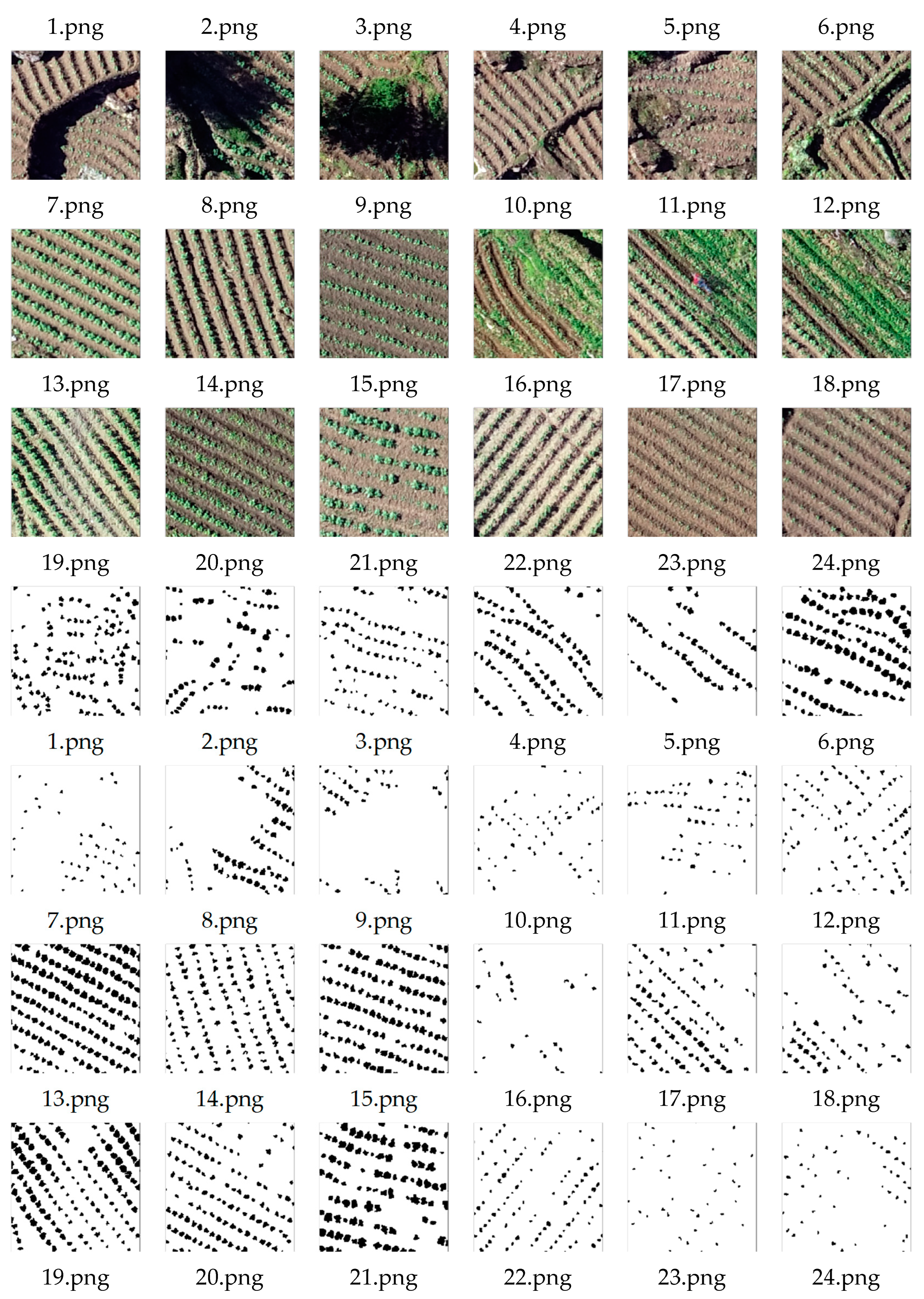

2.4.2. Construction of Sample Datasets

The ROI tool of ENVI 5.3 was used to manually annotate the outlines of the tobacco plants and generate .xml files, which were then converted to .shp files. Using the ArcMap tool, the pixels of tobacco plants were labeled as black (pixel value 0), and the pixels of non-tobacco areas were labeled as white (pixel value 255). The resulting binary label maps were used to evaluate the segmentation and information extraction of the tobacco. A total of 6,617 plants were labeled. If the whole UAV image were used directly as a sample, the data volume would be too large and the demands on computer performance too high, which is not conducive to model training. Thus, the images and the corresponding labels were randomly cut into samples with a size of 224 × 224 pixels. The randomly cut samples overlap one another by random amounts, which produces different samples, enhances the randomness of the samples and makes full use of the information in the UAV visible light images. Finally, 2300 samples were obtained to constitute the tobacco dataset. The tobacco dataset included a sample image folder and a manually labeled label folder. The sample image folder contained the sample images, which were named and arranged in numerical order; the label folder contained the manually labeled data corresponding to the sample images. Some tobacco plant images and their corresponding labels are shown in Figure 5.
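The random cutting of aligned image and label samples described above can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the tooling used in this study: the helper names `random_windows` and `crop`, the seed, and the small synthetic rasters are hypothetical, and a real orthophoto would normally be handled as an array rather than as nested lists.

```python
import random


def random_windows(height, width, size=224, count=5, seed=0):
    """Return top-left (row, col) offsets of randomly placed size x size windows."""
    if height < size or width < size:
        raise ValueError("image is smaller than the crop size")
    rng = random.Random(seed)
    return [
        (rng.randint(0, height - size), rng.randint(0, width - size))
        for _ in range(count)
    ]


def crop(grid, row, col, size=224):
    """Cut a size x size window out of a 2-D list of pixel rows."""
    return [line[col:col + size] for line in grid[row:row + size]]


# Toy stand-ins for one orthophoto band and its label raster (synthetic data).
# Label convention from the text: 0 = tobacco, 255 = non-tobacco.
height, width = 300, 400
image = [[(r + c) % 256 for c in range(width)] for r in range(height)]
label = [
    [0 if (r // 50 + c // 50) % 2 == 0 else 255 for c in range(width)]
    for r in range(height)
]

windows = random_windows(height, width)
# The same window is applied to image and label so each pair stays aligned.
pairs = [(crop(image, r, c), crop(label, r, c)) for r, c in windows]
```

Because the window offsets are drawn independently, neighbouring samples may overlap by arbitrary amounts, which mirrors the overlapping random cuts described in the text.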

2.5. Evaluation Index

Multiple metrics are usually used in image segmentation to evaluate algorithm precision. In this study, four quantitative metrics, namely, Precision, Recall, F1-score and Intersection-over-Union (IOU), were used to quantitatively evaluate the model recognition results and the segmentation precision of the tobacco plants in each scene of the UAV remote sensing images.

Precision indicates the probability that a sample identified as positive is actually positive:

$$\text{Precision} = \frac{TP}{TP + FP}$$

Recall indicates how many of the samples that are actually positive are identified as positive:

$$\text{Recall} = \frac{TP}{TP + FN}$$

The F1-score is a common measure for classification problems and is the harmonic mean of precision and recall, ranging from 0 to 1. The closer the F1-score is to 1, the more robust the model is:

$$\text{F1} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

IOU is a commonly used evaluation method in semantic segmentation and measures the degree of overlap between the detected frame and the true frame. Thus, IOU can be used as a criterion to determine whether a detection is a positive sample by comparing it with a threshold. Generally, when the IOU between the identified frame and the real frame is at least 0.5, the detection is considered a positive sample:

$$\text{IOU} = \frac{TP}{TP + FP + FN}$$

where TP indicates that a tobacco sample is correctly identified as tobacco; FN indicates that a tobacco sample is incorrectly identified as non-tobacco; and FP indicates that a non-tobacco sample is incorrectly identified as tobacco.
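The four metrics can be computed from the TP, FP and FN counts defined above. The sketch below is a minimal pixel-wise illustration, assuming the label convention of Section 2.4.2 (pixel value 0 for tobacco, 255 for non-tobacco); the function names and the six-pixel toy example are hypothetical and are not taken from the evaluation code of this study.

```python
def confusion_counts(predicted, reference, positive=0):
    """Count TP, FP and FN over two equally long sequences of pixel values."""
    tp = fp = fn = 0
    for p, r in zip(predicted, reference):
        if p == positive and r == positive:
            tp += 1          # tobacco correctly identified as tobacco
        elif p == positive:
            fp += 1          # non-tobacco identified as tobacco
        elif r == positive:
            fn += 1          # tobacco identified as non-tobacco
    return tp, fp, fn


def segmentation_metrics(tp, fp, fn):
    """Return (precision, recall, f1, iou); empty denominators give 0.0."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return precision, recall, f1, iou


# Toy example: six pixels, 0 = tobacco, 255 = non-tobacco.
predicted = [0, 0, 0, 255, 255, 0]
reference = [0, 0, 255, 0, 255, 0]
tp, fp, fn = confusion_counts(predicted, reference)
print(tp, fp, fn)                         # 3 1 1
print(segmentation_metrics(tp, fp, fn))   # (0.75, 0.75, 0.75, 0.6)
```

True negatives do not enter any of the four metrics, which is why large non-tobacco backgrounds do not inflate the scores.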

3. Results

3.1. Quantitative Analysis of Plant Extraction Precision

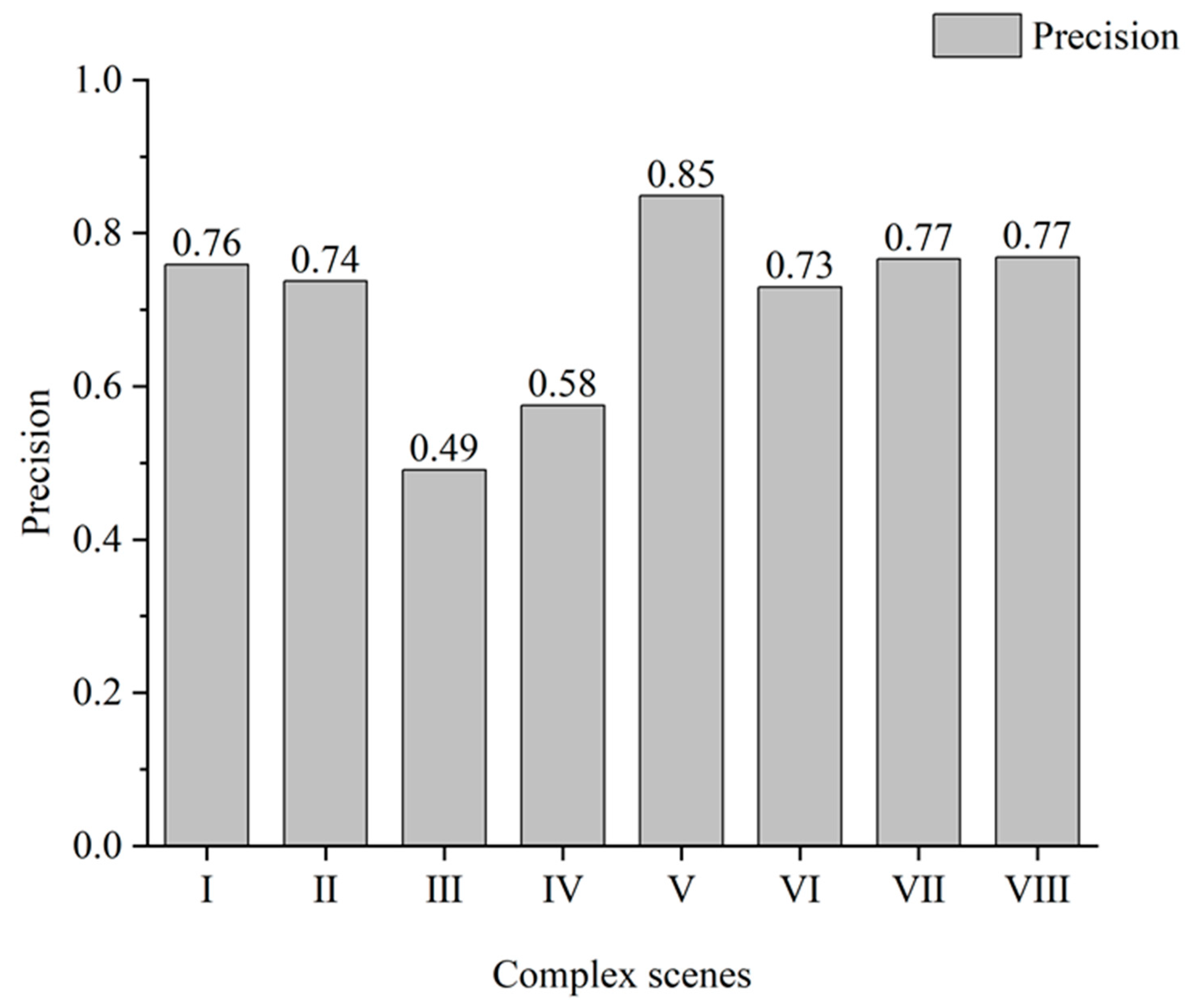

The accuracy of the segmentation results obtained by using the U-Net model to identify flue-cured tobacco in the UAV visible light images is shown in Table 1.

Table 1 shows that the recognition accuracies of the eight habitats were in the following order: subsurface fragmented and weed-free (Ⅴ) > surface fragmented and planted with smaller seedlings (Ⅷ) > subsurface fragmented and weed-infested (Ⅶ) > smooth tectonics and weed-free (Ⅰ) > smooth tectonics and unevenly growing (Ⅱ) > surface fragmented and shadow-masked (Ⅵ) > smooth tectonics and planted with smaller seedlings (Ⅳ) > smooth tectonics and weed-infested (Ⅲ). Compared with the whole image, Scenes Ⅲ and Ⅳ showed lower accuracy, whereas the other scenes were more accurate than the whole image of the study area, which had a Precision of 0.68, a Recall of 0.85, an F1-score of 0.75 and an IOU of 0.60. Maize, which is a green crop like tobacco, was incorrectly identified as tobacco by the U-Net model because of the fragmented surface, the complex planting structure, the mixed cultivation of tobacco and maize plots and the many bushes and weeds along the cultivated soil canals. Thus, the accuracy of the whole image was low.

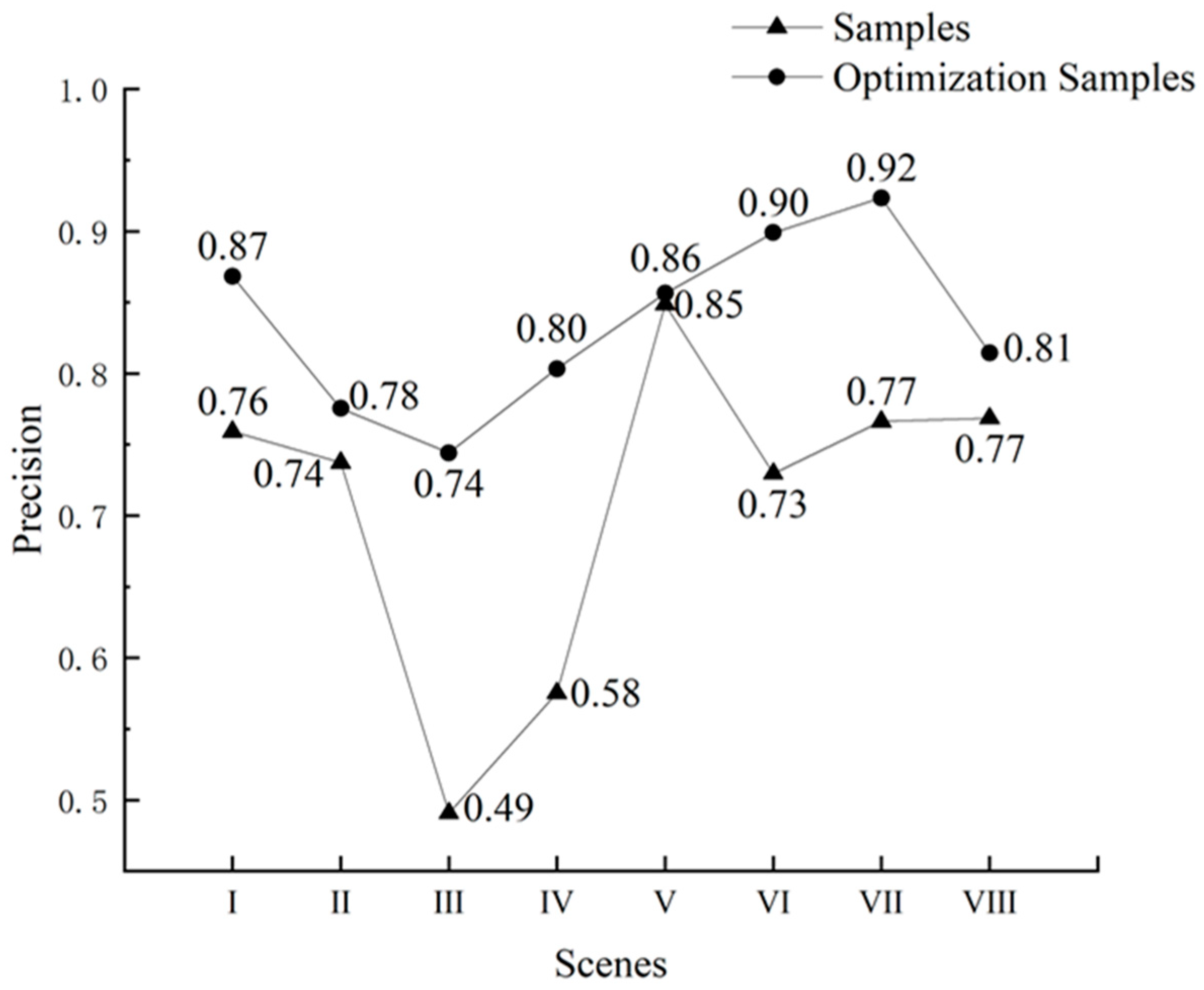

The recognition accuracy of each scene is shown in Figure 6, with some differences among scenes. The factors affecting the recognition accuracy in each scene were also different. The scene of “subsurface fragmented and weed-free (Ⅴ)” had the highest accuracy (Precision = 0.85), followed by “surface fragmented and planted with smaller seedlings (Ⅷ)” (Precision = 0.77). The scene of “smooth tectonics and weed-infested (Ⅲ)” had the lowest recognition accuracy (Precision = 0.49).

Based on Figure 6 and Table 1, the significant decrease in the accuracy of Scenes Ⅲ and Ⅳ may have two causes: (1) weeds and tobacco are both green vegetation with similar shape, spectral and texture features, so the U-Net model may incorrectly identify weeds as tobacco; (2) the tobacco plants in the training samples are small and blurred, with unclear contours, so the model omits smaller tobacco plants, leading to a lower accuracy in recognizing Scene Ⅳ.

Comparative analysis reveals that the recognition accuracy of Scene Ⅷ was better than that of Scene Ⅳ. This is mainly attributed to the relatively homogeneous planting habitat in Scene Ⅷ, with more bare rock on the fragmented surface, fewer weeds and other green vegetation and significant differences between the tobacco plants and the background texture features in the images. Thus, the U-Net model was less affected in tobacco identification, resulting in higher identification accuracy of tobacco plants.

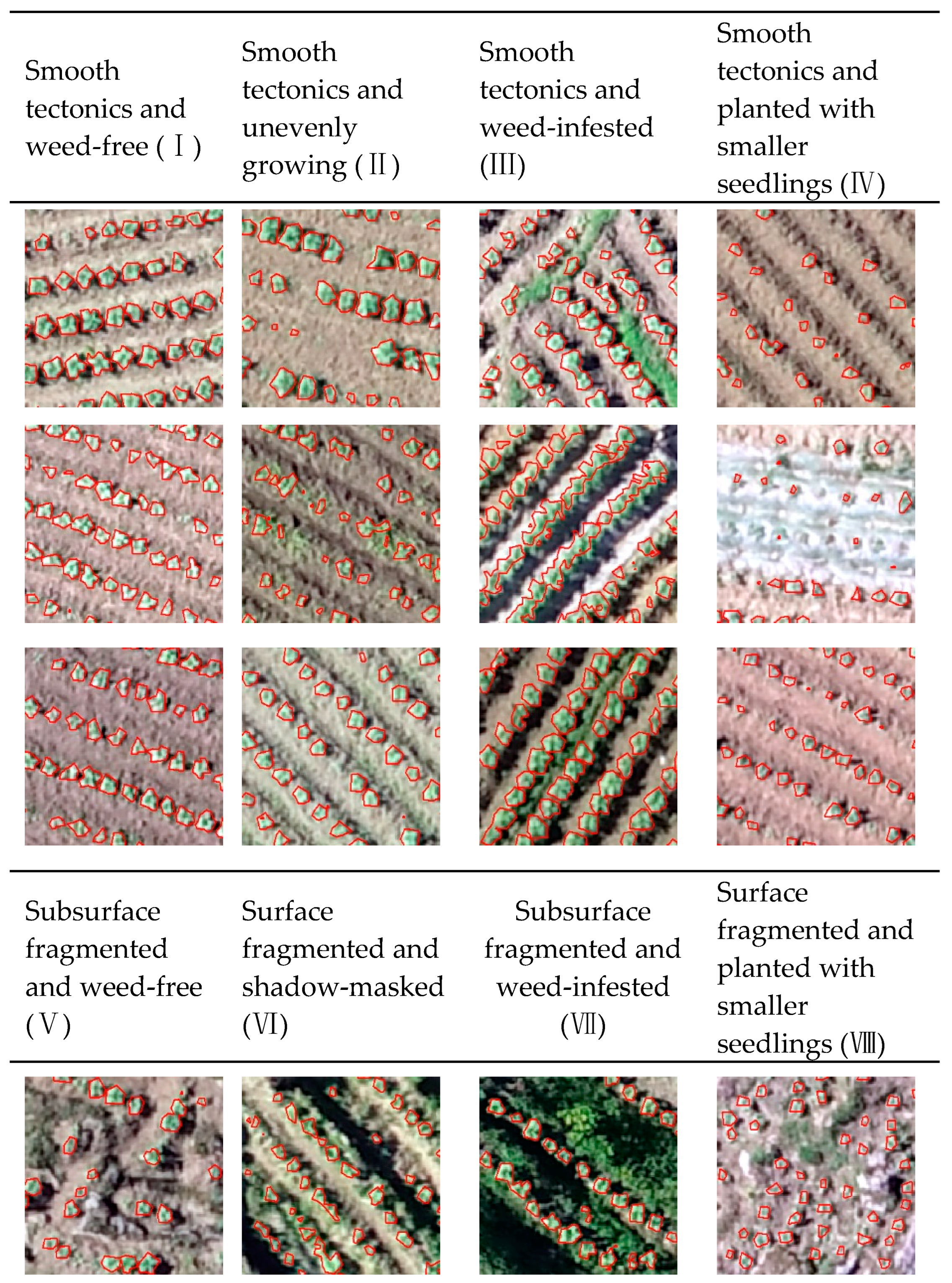

3.2. Visual Analysis of Tobacco Plant Extraction

Figure 7 shows the recognition results of the model for the complex habitat datasets; the red contours represent the identified tobacco plants. Scenes Ⅰ and Ⅴ were taken as the controls. Scene Ⅰ had regular tobacco planting and connected tobacco leaves, with even recognition accuracy overall. Scene Ⅴ had irregular tobacco planting, and the tobacco plants were smaller due to the water and fertilizer conditions. Its single-plant recognition accuracy was high.

Scene Ⅲ had regular tobacco planting and good plant growth. The model identified non-tobacco parts as tobacco plants when identifying continuous plants. Weeds and tobacco plants are both green vegetation and have similar spectral and texture features. These were the main reasons for the low recognition accuracy of Scene Ⅲ. Scene Ⅶ had irregular tobacco planting, with weed confusion to some extent. Compared with Scene Ⅲ, Scene Ⅶ had less continuous tobacco planting. Thus, the recognition accuracy of Scene Ⅶ was 0.28 higher than that of Scene Ⅲ.

Scenes Ⅳ and Ⅷ were taken as the control. Tobacco plants in Scene Ⅳ were affected by the transplanting time sequence, and the tobacco plant seedlings were smaller than those in Scene Ⅷ. Thus, the tobacco feature information of the training samples was insignificant. The U-Net model may omit smaller tobacco plants during training, resulting in a lower identification accuracy of Scene Ⅳ than that of Scene Ⅷ by 0.19.

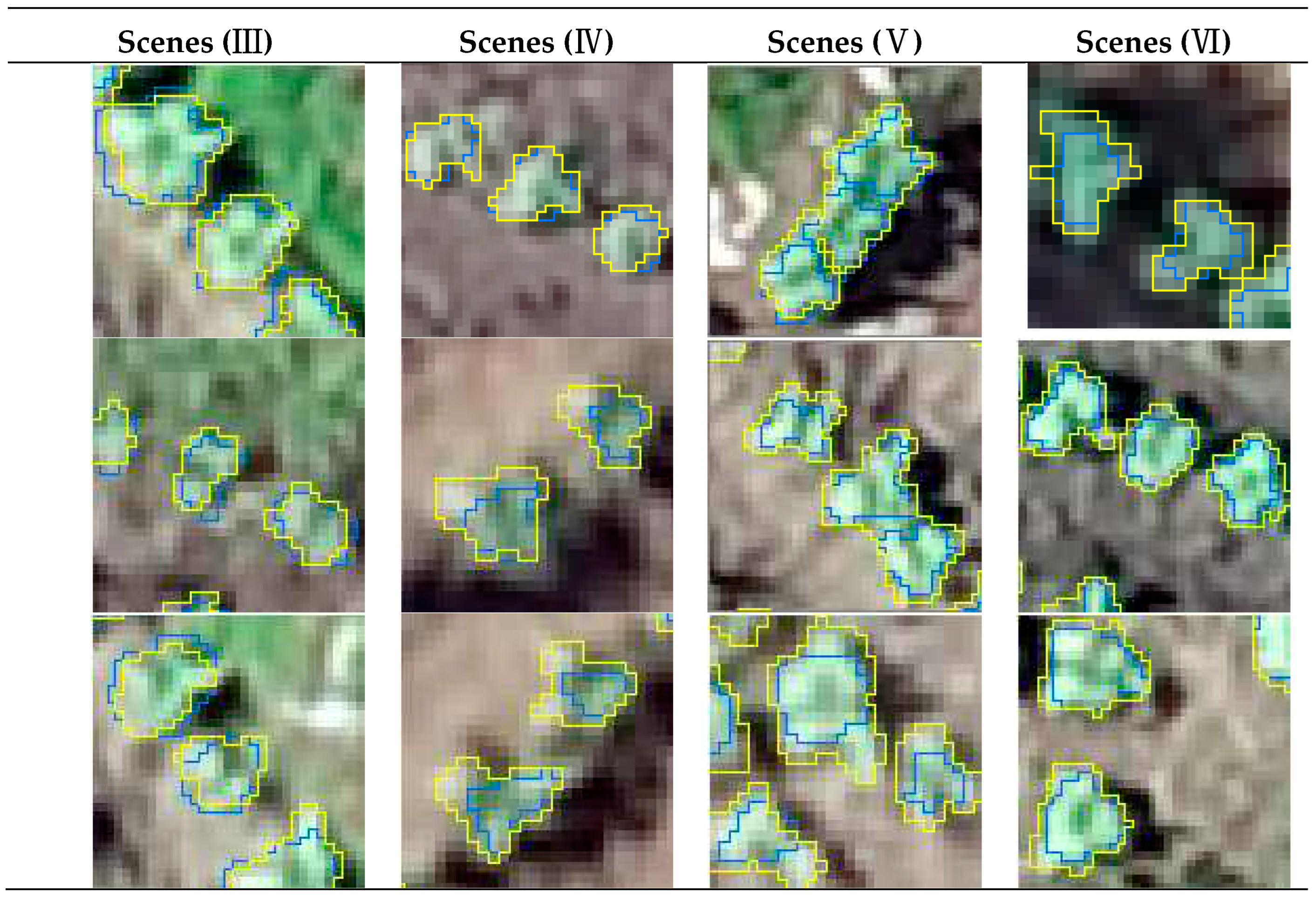

3.3. Optimization Sample

To improve the generalization ability and segmentation accuracy of the U-Net model, the samples in the datasets were optimized. The optimized datasets provide samples that are closer to real tobacco plants (for example, in geometric and morphological features) for model training. In particular, some samples were affected by environmental factors such as light, shadow and ground reflection. To account for this interference, the effects of sample quantity and quality on model accuracy were explored. ArcGIS software was used to optimize the sample dataset and enhance the tobacco plants. The following four aspects were mainly addressed: (1) boundary accuracy for samples with smaller plants; (2) difficulty in distinguishing tobacco plants accompanied by weeds; (3) weak ground reflection and shadow interference, so that shadows incorrectly labeled as tobacco could be excluded; and (4) strong ground reflection and indistinct tobacco plant features, which caused tobacco plant samples to be missed. Some original and optimized samples are shown in Figure 8, where blue indicates original samples and yellow indicates optimized samples. In addition, since the samples were randomly split, the number of training samples was increased to 9500 to enrich the content and diversity of the samples.

The U-Net model was trained using the optimized sample dataset, and the recognition accuracy of the eight complex scenes generally improved, as shown in Figure 9. Scene Ⅶ (subsurface fragmented and weed-infested) had the highest recognition accuracy (0.92), followed by Scene Ⅵ (surface fragmented and shadow-masked) (0.90). The sample optimization targeted the fragmented-surface scene with weeds, so that the boundaries between tobacco and weeds in the training samples became clearer and tobacco plants on the fragmented surface were distinguished from bare rocks and other objects. The tobacco features were therefore more distinct, and the model generalized well with high recognition accuracy. For Scene Ⅵ, areas without tobacco that were blocked by trees were treated as non-tobacco areas, and the photos taken by the UAV were combined with analysis of the synthetic images to improve the recognition accuracy. Scene Ⅲ (smooth tectonics and weed-infested) had the lowest accuracy (0.74), followed by Scene Ⅱ (smooth tectonics and unevenly growing) (0.78). The ground in Scene Ⅲ is relatively flat, and because the UAV acquired the images in the afternoon, the shadows of weeds and tobacco plants had a greater impact and reduced the model's recognition accuracy for tobacco.

4. Discussion

To address the problems of a fragile natural environment, fragmented plots and complex planting structures in the karst mountainous areas of southern China, we constructed eight sample datasets of tobacco plants in complex scenes to train the U-Net model and accurately extracted tobacco information from UAV remote sensing imagery in complex scenes. The factors affecting the recognition accuracy of the U-Net model in different complex scenes are discussed in two aspects: tobacco plant omission and wrong extraction.

4.1. Analysis of Omitted Factors

To investigate the factors affecting the U-Net model in segmenting tobacco plants in different complex habitats, we analyzed the segmentation results of the eight habitats. The segmentation accuracy of Scene Ⅲ was the lowest, with a Precision of 0.49 and a Recall of 0.69. A total of 410 plants were omitted, including 95 whole plants (23%) and 315 incomplete plants (77%). The omission of whole plants had two main causes, namely, weed cover and small tobacco seedlings. For incomplete tobacco plants, the omission mainly resulted from small tobacco plants being covered by weeds. Weeds and tobacco plants had similar texture and spectral features, and the soil background had low reflectance. These factors affected the recognition accuracy of the scene with smooth tectonics and weed cover. Overall, the omission of tobacco plants in this scene was mainly attributed to weed cover.

For tobacco images in Scene Ⅳ, 420 plants were missed, including 187 whole plants (45%) and 233 incomplete tobacco plants (55%). Whole tobacco plants were omitted mainly because the tobacco seedlings were small and the high UAV flight altitude reduced the image resolution, resulting in low identification accuracy of the tobacco. In the low-altitude remote sensing multi-scale recognition of complex habitats in karst mountainous areas, Li [4] found that the accuracy of tobacco plant recognition in UAV images decreased with increasing height. In this study, the UAV flight altitude was 120 m, and the tobacco plants at the rooting stage were small. Thus, the image resolution was low, and some tobacco plant features were lost. This resulted in the low accuracy of the U-Net model in segmenting this tobacco plant scene.
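The omission percentages quoted for Scenes Ⅲ and Ⅳ follow directly from the plant counts. The short check below reproduces them; the function name `omission_shares` is hypothetical, and rounding to whole percentages is an assumption based on how the figures are reported in the text.

```python
def omission_shares(whole, incomplete):
    """Return total omitted plants and the rounded percentage of each type."""
    total = whole + incomplete
    return total, round(100 * whole / total), round(100 * incomplete / total)


scene_3 = omission_shares(whole=95, incomplete=315)
scene_4 = omission_shares(whole=187, incomplete=233)
print(scene_3)  # (410, 23, 77)
print(scene_4)  # (420, 45, 55)
```

The comparison shows that whole-plant omissions form a much larger share in Scene Ⅳ than in Scene Ⅲ, consistent with small seedlings being the dominant cause there.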

4.2. Analysis of Erroneous Factors

Further detailed analysis was conducted to reveal the factors that caused the model to incorrectly identify non-target features as target features (tobacco), referred to here as misidentification. Six main factors that caused misidentification were identified using overlay analysis of the experimental results (Table 2): the edges of tobacco plants, maize plants, bushes, white mulch, bare rocks and weeds. In particular, the edges of the tobacco plants were mislabeled most often, accounting for 67.21% of the mislabeling in the whole image. This is mainly because the U-Net model segments at the pixel level. In addition, the data were collected between 15:00 and 16:00, when the sun altitude angle varied. The leaves of the tobacco plants cast shadows, and the model incorrectly identified these shadows as real tobacco leaves. Thus, there was a discrepancy between the predicted contours and the manually drawn validation labels of the tobacco plants.

The second source of misidentification was confusion with maize plants and weeds: maize accounted for 24.50% of the misidentification in the whole image, and weeds accounted for 7.40%. The karst mountainous area had fragmented surfaces, scattered cultivated plots and complex planting structures. The tobacco plots were adjacent to the maize plots and covered with weeds, and all three were green vegetation with similar shape, texture and spectral features. Thus, the model incorrectly identified maize and weeds as tobacco, leading to more misidentification by the U-Net model and lower recognition accuracy.

4.3. Analysis of the Impact of Optimized Samples on the Accuracy of the Model in Identifying Tobacco Plants

Figure 10 and Table 3 indicate that the sample quality had a certain impact on the recognition accuracy of the U-Net model. In this experiment, the recognition accuracy of the model trained on the optimized samples was higher than that of the model trained on the original samples, and the recognized tobacco plants were closer to the real tobacco plants. The recognition accuracy of Scene Ⅲ increased by 25.31%. To better evaluate the impact of the samples on the model, the results for the scenes with smooth tectonics were used as the evaluation indicator for the models trained on the two sample datasets, and the calculated results were analyzed to reveal their differences. Positive values indicate that the accuracy improved, and negative values indicate that it decreased. Regarding precision, Scene Ⅲ showed the highest increase (25.31%), followed by Scene Ⅳ (22.81%). Scene Ⅴ showed the lowest increase (0.78%), followed by Scene Ⅱ (3.82%).
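As a minimal sketch of how such a comparison can be computed, the example below derives precision, recall, F1-score and IoU from binary masks and reports the change between two models. It assumes that the differences are expressed in percentage points, which the text does not state explicitly; the function names and the toy masks are hypothetical.

```python
import numpy as np

def segmentation_metrics(pred, truth):
    """Pixel-level precision, recall, F1-score and IoU for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)

    tp = int(np.sum(pred & truth))    # tobacco predicted as tobacco
    fp = int(np.sum(pred & ~truth))   # background predicted as tobacco
    fn = int(np.sum(~pred & truth))   # tobacco predicted as background

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}

def metric_differences(original, optimized):
    """Optimized minus original, in percentage points (an assumption)."""
    return {k: 100.0 * (optimized[k] - original[k]) for k in original}

# Toy masks (made up): the optimized model removes two false positives.
truth = [1, 1, 1, 1, 0, 0, 0, 0]
pred_original = [1, 1, 1, 0, 1, 1, 0, 0]
pred_optimized = [1, 1, 1, 0, 0, 0, 0, 0]

m_original = segmentation_metrics(pred_original, truth)
m_optimized = segmentation_metrics(pred_optimized, truth)
diff = metric_differences(m_original, m_optimized)
print(diff)
```

In the toy case, precision rises while recall is unchanged. A precision gain can also come with a recall loss, which is the pattern seen in several rows of Table 3.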

The samples were optimized to address the indistinct boundary between tobacco and weeds in the original samples for the scenes with fragmented surfaces and weeds. As a result, the boundary information of tobacco and weeds in the training samples became more distinct, which can enhance the generalization ability of the model and thus improve its recognition accuracy. Tobacco is planted in a time sequence, so late-planted tobacco plants are smaller. At the same flight altitude, the feature information of smaller tobacco plants is not prominent, which affects the recognition accuracy. Therefore, during sample optimization, the morphological features of the smaller tobacco plants in the training samples were refined so that the labeled plants were closer to the real tobacco plants, improving the model recognition accuracy.

5. Conclusions

The experimental results showed that training the U-Net model on sample datasets built from UAV visible images was effective and applicable, to a certain degree, for identifying tobacco plants in UAV visible images under different planting environments. Scene segmentation reduced the interference of plot complexity, planting structure and other factors on the identification accuracy of tobacco plants. This is a new attempt to improve crop recognition in complex habitats in karst mountainous areas (particularly habitats with fragmented surfaces).

The findings also reveal that the U-Net model showed different abilities in identifying features in different habitats due to the influence of several main factors, such as plot fragmentation, plant size, presence of weeds and shadow masking. Thus, to improve the accuracy of the model in classifying complex scenes, it is necessary to construct the datasets by scene, increase the number of samples and eliminate interference in a targeted manner according to the complexity of each scene and the main factors affecting the model.

The tobacco plant contour was found to be the most significant factor influencing the U-Net model in identifying tobacco plants in complex habitats in karst mountainous areas. This is related to the sample preparation error. It was followed by the accompanying interference of maize and weeds. Maize, weeds and tobacco are all green vegetation with similar shapes and similar spectral and texture features, which led to misidentification by the U-Net model. To address this problem, the next step of the study is to increase the image bands and spectral information of the ground and use "shape-spectrum" joint features to separate the different spectra, or to improve the identification accuracy by removing noise such as weeds through morphological erosion and dilation operations.
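The proposed morphological post-processing can be sketched as an opening (erosion followed by dilation) on a binary prediction mask. This is an illustration under stated assumptions, not the authors' implementation: the 3 × 3 structuring element, the zero padding at the image border and the helper names are all hypothetical choices.

```python
import numpy as np

def _neighbourhood_stack(mask):
    """Stack the nine 3 x 3-shifted copies of a boolean mask (zero padded)."""
    padded = np.pad(mask, 1, constant_values=False)
    h, w = mask.shape
    return np.stack([padded[i:i + h, j:j + w]
                     for i in range(3) for j in range(3)])

def erode(mask):
    """Keep a pixel only if its whole 3 x 3 neighbourhood is foreground."""
    return _neighbourhood_stack(np.asarray(mask, dtype=bool)).all(axis=0)

def dilate(mask):
    """Set a pixel if any pixel in its 3 x 3 neighbourhood is foreground."""
    return _neighbourhood_stack(np.asarray(mask, dtype=bool)).any(axis=0)

def opening(mask):
    """Erosion followed by dilation: removes blobs smaller than the element."""
    return dilate(erode(mask))

# Toy mask: one 3 x 3 "plant" and one isolated noise pixel.
mask = np.zeros((7, 7), dtype=bool)
mask[1:4, 1:4] = True   # plant-sized blob
mask[5, 5] = True       # single-pixel noise (e.g. a small weed response)

opened = opening(mask)
print(opened.astype(int))
```

The plant-sized blob survives the opening and the isolated pixel is removed. The same operation would also remove genuine tobacco seedlings smaller than the structuring element, so the element size would need to be chosen against the image resolution.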

The generalization ability and robustness of the U-Net model were strongly influenced by the quality and quantity of samples. Training the model with the optimized sample dataset, which improved the sample contours, quality and quantity, yielded a higher recognition accuracy in each scene than training with the original samples. Therefore, future research can further improve the quantity and quality of samples to improve the model performance.

Author Contributions

All authors contributed to the manuscript. Conceptualization, Y.H. and L.Y.; methodology, Q.L.; validation, D.H. and Y.H.; formal analysis, Q.L.; data curation, Y.H. and D.H.; writing (original draft preparation), L.Y.; writing (review and editing), Y.H., Z.F., D.H., X.M., L.C., and Q.L.; visualization, Y.H. and L.Y.; project administration, Z.F.; funding acquisition, Z.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Guizhou Provincial Basic Research Program (Natural Science) ([2021] General 194) and the Guizhou Provincial Key Technology R&D Program ([2023] General 211 and [2023] General 218).

Data Availability Statement

All the data used in this study are mentioned in Section 2 “Materials and Methods”.

Acknowledgments

The authors gratefully acknowledge the financial support of Guizhou Normal University. We would also like to thank the editors and anonymous reviewers for their helpful and productive comments on the manuscript.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Vilma Cokkinides, P.P.B., MS; Elizabeth Ward, PhD; Ahmedin Jemal, DVM, PhD; Michael Thun, MD, MS. Progress and Opportunities in Tobacco Control. CA Cancer J Clin 2006, 56, 135–142.

- Bose, P.; Kasabov, N.K.; Bruzzone, L.; Hartono, R.N. Spiking Neural Networks for Crop Yield Estimation Based on Spatiotemporal Analysis of Image Time Series. IEEE Transactions on Geoscience and Remote Sensing 2016, 1–11.

- Xiangpeng Fan, J.Z., Yan Xu. Research advances of monitoring agricultural information using UAV low-altitude remote sensing. Philosophy, Humanities Social Sciences 2021, 38, 623–631.

- Li, Q. Study on Multi-scale identification of tobacco in complex habitat in karst mountainous areas using low altitude remote sensing. Guizhou Normal University, 2023.

- Zhijun Tong, D.F., Xuejun Chen, Jianmin Zeng, Fangchan Jiao. Genetic analysis of six important yield-related traits in tobacco (Nicotiana tabacum L). Acta Tabacaria Sinica 2020, 26, 72–81.

- Liao, X.; Xiao, Q.; Zhang, H. UAV remote sensing: Popularization and expand application development trend. National Remote Sensing Bulletin 2019, 23, 1046–1052.

- Huang, D.H.Z.Z.R.P.M.Z.L.Y.Y.Z.D.X.Q.L.L.H.Y. Challenges and main research advances of low-altitude remote sensing for crops in southwest plateau mountains. Journal of Guizhou Normal University (Natural Sciences) 2021, 39, 51–59.

- Feng Quanlong, N.B., Zhu Dehai, Chen Boan, Zhang Chao, Yang Jianyu. Review for deep learning in land use and land cover remote sensing classification. Nongye Jixie Xuebao/Transactions of the Chinese Society of Agricultural Machinery 2022, 53, 1–17.

- Huang, L.Y.Z.Z.S.L.D. Research on vegetation extraction and fractional vegetation cover of karst area based on visible light image of UAV. Acta Agrestia Sinica 2020, 28, 1664–1672.

- Doraiswamy, R.C.; Hatfield, J.L.; Jackson, T.J.; Akhmedov, B.; Prueger, J.; Stern, A. Crop condition and yield simulations using Landsat and MODIS. Remote Sensing of Environment: An Interdisciplinary Journal 2004, 92.

- Berni, J.A.J.; Zarco-Tejada, P.J.; Suarez, L.; Fereres, E. Thermal and Narrowband Multispectral Remote Sensing for Vegetation Monitoring From an Unmanned Aerial Vehicle. IEEE Transactions on Geoscience & Remote Sensing 2009, 47, 722–738.

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Networks 2015, 61, 85–117.

- Uto, K.; Seki, H.; Saito, G.; Kosugi, Y. Characterization of Rice Paddies by a UAV-Mounted Miniature Hyperspectral Sensor System. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 2013, 6, 851–860.

- Tian, H.X.L. Development of a low-cost agricultural remote sensing system based on an autonomous unmanned aerial vehicle (UAV). Biosystems Engineering 2011, 108, 174–190.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Communications of the ACM 2017, 60, 84–90.

- Konstantinos, L.; Patrizia, B.; Dimitrios, M.; Simon, P.; Dionysis, B. Machine Learning in Agriculture: A Review. Sensors 2018, 18, 2674.

- Pound, M.P.; Atkinson, J.A.; Townsend, A.J.; Wilson, M.H.; Marcus, G.; Jackson, A.S.; Adrian, B.; Georgios, T.; Wells, D.M.; Murchie, E.H. Deep Machine Learning provides state-of-the-art performance in image-based plant phenotyping. Gigascience 2018, 1–10.

- Tian, Y.; Li, T.; Li, C.; Piao, Z.; Wang, B. Method for recognition of grape disease based on support vector machine. Transactions of the Chinese Society of Agricultural Engineering 2007, 23, 175–180.

- Baldevbhai, P.J. Color Image Segmentation for Medical Images using L*a*b* Color Space. IOSR Journal of Electronics and Communication Engineering 2012, 32, 11.

- Chang, C.-C.; Lin, C.-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, Article 27.

- Tatsumi; Yamashiki; Torres; MAC; Taipe; CLR. Crop classification of upland fields using Random forest of time-series Landsat 7 ETM+ data. Comput. Electron. Agric. 2015, 115, 171–179.

- Masci, J.; Giusti, A.; Ciresan, D.; Fricout, G.; Schmidhuber, J. A fast learning algorithm for image segmentation with max-pooling convolutional networks. In Proc. IEEE Int. Conf. Image Process., 2013.

- Jeon, H.Y.; Tian, L.F.; Zhu, H. Robust Crop and Weed Segmentation under Uncontrolled Outdoor Illumination. Sensors 2011, 11, 6270–6283.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC Superpixels Compared to State-of-the-Art Superpixel Methods. IEEE Transactions on Pattern Analysis and Machine Intelligence 2012, 34, 2274–2282.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Convolutional Neural Networks for Large-Scale Remote-Sensing Image Classification. IEEE Transactions on Geoscience and Remote Sensing 2017, 55, 645–657.

- Chen, Y.; Lee, W.S.; Gan, H.; Peres, N.; Fraisse, C.; Zhang, Y.; He, Y. Strawberry Yield Prediction Based on a Deep Neural Network Using High-Resolution Aerial Orthoimages. Remote Sensing 2019, 11.

- Oh, S.; Chang, A.; Ashapure, A.; Jung, J.; Dube, N.; Maeda, M.; Gonzalez, D.; Landivar, J. Plant Counting of Cotton from UAS Imagery Using Deep Learning-Based Object Detection Framework. Remote Sensing 2020, 12, 2981.

- Wu, J.; Yang, G.; Yang, H.; Zhu, Y.; Li, Z.; Lei, L.; Zhao, C. Extracting apple tree crown information from remote imagery using deep learning. Computers and Electronics in Agriculture 2020, 174.

- Freudenberg, M.; Nölke, N.; Agostini, A.; Urban, K.; Wörgötter, F.; Kleinn, C. Large Scale Palm Tree Detection In High Resolution Satellite Images Using U-Net. Remote Sensing 2019, 11.

- Yang, M.-D.; Tseng, H.-H.; Hsu, Y.-C.; Tsai, H.P. Semantic Segmentation Using Deep Learning with Vegetation Indices for Rice Lodging Identification in Multi-date UAV Visible Images. Remote Sensing 2020, 12.

- Liu, X.R.L.Z.C.Y.S.L.X.L.S. Counting cigar tobacco plants from UAV multispectral images via key points detection approach. Journal of Agricultural Machinery 2023, 54, 266–273.

- Li, Q.; Yan, L.; Huang, D.; Zhou, Z.; Zhang, Y.; Xiao, D. Construction of a small sample dataset and identification of Pitaya trees (Selenicereus) based on UAV image on close-range acquisition. Journal of Applied Remote Sensing 2022, 16.

- Huang, L.; Wu, X.; Peng, Q.; Yu, X.; Camara, J.S. Depth Semantic Segmentation of Tobacco Planting Areas from Unmanned Aerial Vehicle Remote Sensing Images in Plateau Mountains. Journal of Spectroscopy 2021, 2021, 1–14.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. 2015, 9351, 234–241.

- Liskowski, P.; Krawiec, K. Segmenting Retinal Blood Vessels With Deep Neural Networks. IEEE Transactions on Medical Imaging 2016, 35, 2369–2380.

- Ubbens, J.R.; Stavness, I. Deep Plant Phenomics: A Deep Learning Platform for Complex Plant Phenotyping Tasks. Frontiers in Plant Science 2017, 8.

- Wei, S.; Zhang, H.; Wang, C.; Wang, Y.; Xu, L. Multi-Temporal SAR Data Large-Scale Crop Mapping Based on U-Net Model. Remote Sensing 2019, 11, 68.

- Lucas Prado Osco, M.d.S.d.A., José Marcato Junior. A convolutional neural network approach for counting and geolocating citrus-trees in UAV multispectral imagery. ISPRS Journal of Photogrammetry and Remote Sensing 2020, 160, 97–106.

- Zou, K.; Chen, X.; Zhang, F.; Zhou, H.; Zhang, C. A Field Weed Density Evaluation Method Based on UAV Imaging and Modified U-Net. Remote Sensing 2021, 13, 310.

- Zhou, Z.; Peng, R.; Li, R.; Li, Y.; Huang, D.; Zhu, M. Remote Sensing Identification and Rapid Yield Estimation of Pitaya Plants in Different Karst Mountainous Complex Habitats. Agriculture 2023, 13, 1742.

- Khan, A.; Ilyas, T.; Umraiz, M.; Mannan, Z.I.; Kim, H. CED-Net: Crops and Weeds Segmentation for Smart Farming Using a Small Cascaded Encoder-Decoder Architecture. Electronics 2020, 9.

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. Journal of Big Data 2019, 6, 48.

Figure 1. Location maps of the study area: (a) Map of China; (b) UAV image of the study area.

Figure 2. U-Net modeling figure.

Figure 3. U-Net modeling accuracy and loss curves.

Figure 4. Study area habitat delineation map.

Figure 5. Images of tobacco with manual annotation labels: subsurface fragmented and weed-infested (4.png, 5.png, 6.png); surface fragmented and shadow-masked (7.png, 8.png, 9.png); surface fragmented and planted with smaller seedlings (10.png, 11.png, 12.png); smooth tectonics and weed-free (13.png, 14.png, 15.png); smooth tectonics and weed-infested (16.png, 17.png, 18.png); smooth tectonics and unevenly growing (19.png, 20.png, 21.png); smooth tectonics and planted with smaller seedlings (22.png, 23.png, 24.png).

Figure 6. Complex identification precision histogram.

Figure 7. Identification results of the U-Net model.

Figure 8. Comparison between partial samples and optimized samples.

Figure 9. Recognition precision for the original sample and optimized sample datasets.

Figure 10. Recognition results of training the U-Net model with original and optimized samples (Note: blue color indicates original sample training results and yellow color indicates optimized sample training results).

Table 1. Identification results for different scenes.

| Scenes | Precision | Recall | F1-score | IoU |
| --- | --- | --- | --- | --- |
| Smooth tectonics and weed-free (Ⅰ) | 0.76 | 0.86 | 0.81 | 0.67 |
| Smooth tectonics and unevenly growing (Ⅱ) | 0.74 | 0.89 | 0.81 | 0.67 |
| Smooth tectonics and weed-infested (Ⅲ) | 0.49 | 0.69 | 0.57 | 0.40 |
| Smooth tectonics and planted with smaller seedlings (Ⅳ) | 0.58 | 0.79 | 0.67 | 0.50 |
| Subsurface fragmented and weed-free (Ⅴ) | 0.85 | 0.84 | 0.84 | 0.73 |
| Surface fragmented and shadow-masked (Ⅵ) | 0.73 | 0.87 | 0.79 | 0.66 |
| Subsurface fragmented and weed-infested (Ⅶ) | 0.77 | 0.88 | 0.82 | 0.69 |
| Surface fragmented and planted with smaller seedlings (Ⅷ) | 0.77 | 0.79 | 0.78 | 0.64 |
| The whole image | 0.68 | 0.85 | 0.75 | 0.60 |

Table 2. Statistics of factors influencing misidentification.

Table 3. Differences between the evaluation indexes of the original and optimized samples for training the U-Net model.

| Scenes | Precision | Recall | F1-score | IoU |
| --- | --- | --- | --- | --- |
| Smooth tectonics and weed-free (Ⅰ) | 10.93% | 0.77% | 6.16% | 9.10% |
| Smooth tectonics and unevenly growing (Ⅱ) | 3.82% | -9.35% | -2.08% | -2.87% |
| Smooth tectonics and weed-infested (Ⅲ) | 25.31% | 7.68% | 18.10% | 20.34% |
| Smooth tectonics and planted with smaller seedlings (Ⅳ) | 22.81% | -7.81% | 8.92% | 10.74% |
| Subsurface fragmented and weed-free (Ⅴ) | 0.78% | -4.17% | -1.80% | -2.65% |
| Surface fragmented and shadow-masked (Ⅵ) | 16.92% | -8.65% | 4.29% | 6.10% |
| Subsurface fragmented and weed-infested (Ⅶ) | 15.72% | -6.97% | 4.34% | 6.44% |
| Surface fragmented and planted with smaller seedlings (Ⅷ) | 4.59% | -25.21% | -13.18% | -15.93% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).