1. Introduction

High-rise building machines (HBMs) are innovative self-elevating steel-structure machines designed for constructing super high-rise buildings [1,2,3,4]. They offer several advantages: high automation, enhanced safety, simplified construction organization, assured structural quality, and standardized processes [5,6,7]. These features help accelerate construction progress.

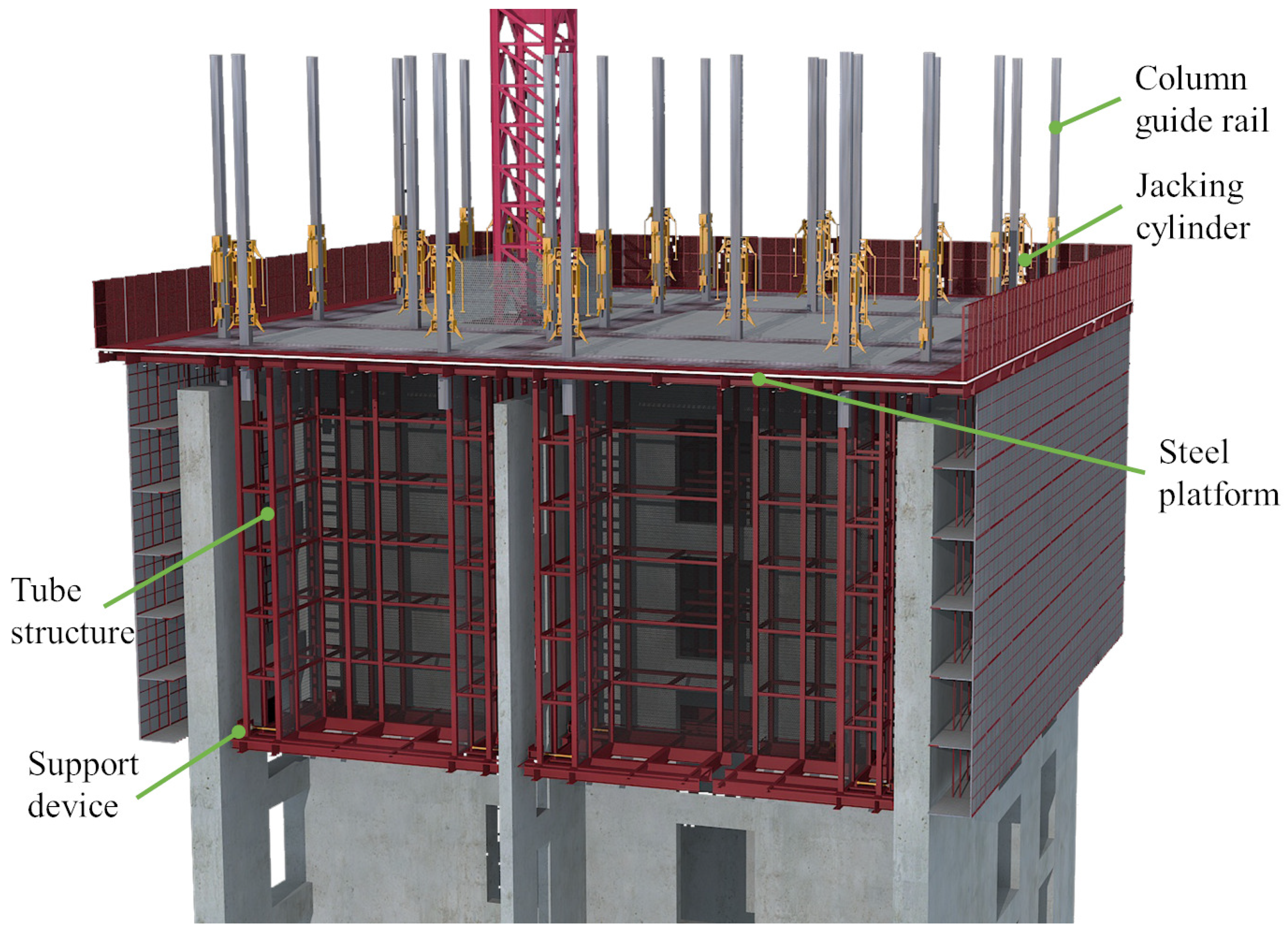

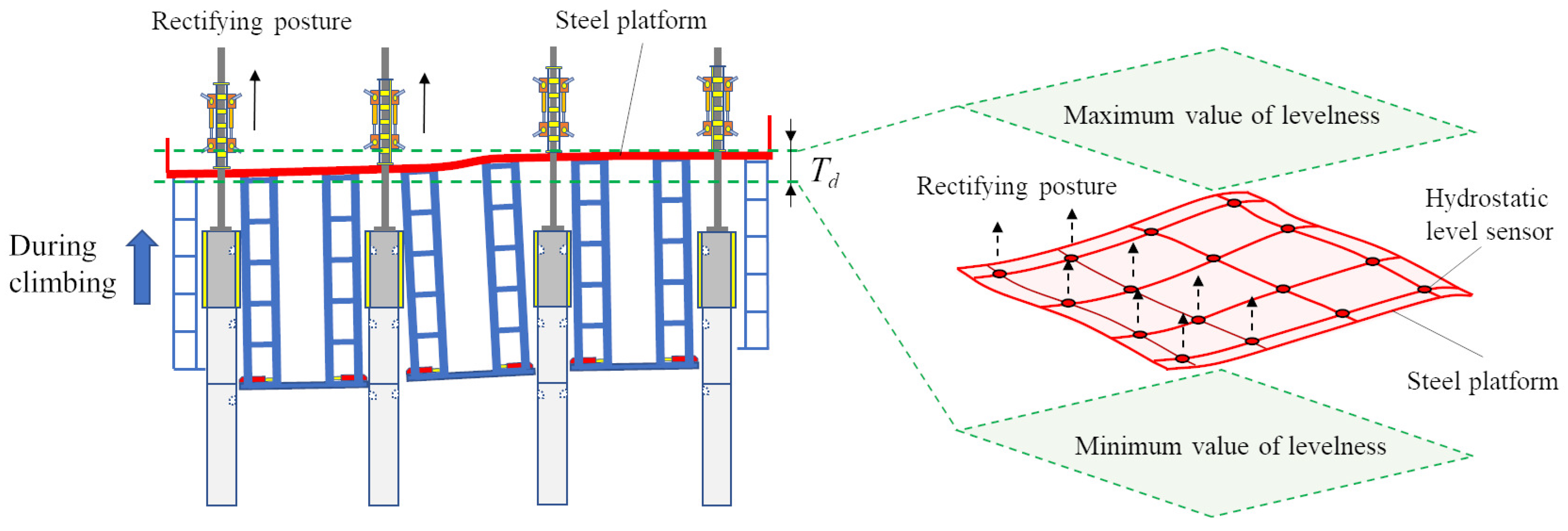

Figure 1 illustrates the three-dimensional structural details of the HBM [8,9,10]. The levelness of the steel platform (SP) reflects changes in the HBM’s posture [11,12,13]. During climbing, the telescopic support devices retract from the reinforced concrete shear wall of the structure, and the climbing system lifts the SP along the column guide rails. Once the SP reaches the target position, the telescopic support devices are extended into the reserved holes in the shear wall. However, uneven stacking loads on the SP can cause its levelness to fluctuate. If an excessive levelness deviation is not corrected promptly, the HBM may undergo severe shape distortion, collide with obstacles, and be forced to stop climbing [14]. In the early stages of HBM use, workers measured the HBM’s verticality with the plumb-line (hanging pendant) method and plumbing instruments, and used jacks to lift the low parts of the SP, applying corrective forces that gradually restored its levelness while climbing continued [15,16]. Later, climbing control operators monitored the HBM’s posture directly through signal and image displays on the central console. Although this approach improved efficiency and reduced measurement errors, it incurred significant costs. The detection equipment enhanced production efficiency, minimized operational errors, ensured repeatable detection, and generated extensive monitoring data [17,18,19,20]. However, these measures still could not prevent levelness deviations during climbing, did not reduce the complexity of correcting such deviations, and did not eliminate subjective errors in worker operations.

We found that the change in the HBM’s posture is a multivariate time-series process. Neural networks (NNs) are a valuable tool for capturing the intricate patterns and dependencies in time-series data [21,22,23,24,25,26]. Their ability to fit both linear and nonlinear relationships makes them well suited to such data, and NN techniques have shown promising results in prediction tasks [27,28,29,30]. However, such general-purpose neural networks perform less well on tasks involving multivariate time-series information and strong temporal (back-and-forth) correlations. Several articles published between 2015 and 2020 discuss recurrent neural networks (RNNs) [31,32,33,34,35], a class of time-series neural networks whose memory cells and feedback connections let information flow within the model and handle strong temporal dependencies well. Through their recursive structure, RNNs retain contextual information from past observations and apply it to current or future predictions. However, RNNs struggle with long time series because of vanishing or exploding gradients, so traditional RNNs are best suited to short-term dependencies. Advanced architectures such as the Long Short-Term Memory (LSTM) [36,37,38] and the Gated Recurrent Unit (GRU) [39,40,41,42] were developed to address this. LSTM uses memory cells and gating mechanisms to filter out noise and capture long-term dependencies more efficiently. GRU simplifies the LSTM structure by passing information only through the hidden state, which reduces the number of parameters and the computational complexity while maintaining comparable performance in some situations. Besides RNNs, temporal convolutional networks (TCNs) [43,44,45,46] are also used to process time-series data. Whereas conventional image-processing networks use two-dimensional convolutional kernels, TCNs use one-dimensional kernels to extract local patterns and features along the time axis. By stacking multiple dilated causal convolutional layers, TCNs obtain a receptive field large enough to detect patterns and regularities across a range of time scales.

Previous studies have demonstrated the capability of neural networks in forecasting time-series data. The rest of this study is organized as follows. Section 2 describes how the HBM is characterized through sensor-based monitoring during its climb and the preprocessing techniques used to handle these data. Section 3 presents three multivariate time-series prediction models, namely the LSTM, GRU, and TCN models, together with the technical approach adopted to predict the HBM’s posture. Section 4 compares the predictions of the three models with the actual values and analyzes the potential sources of model error. Finally, Sections 5 and 6 present the discussion and conclusions, showing that the proposed intelligent prediction system outperforms conventional methods of operating the HBM and reduces the lag in correcting the HBM’s posture.

2. Posture of the HBM

2.1. Data Sources

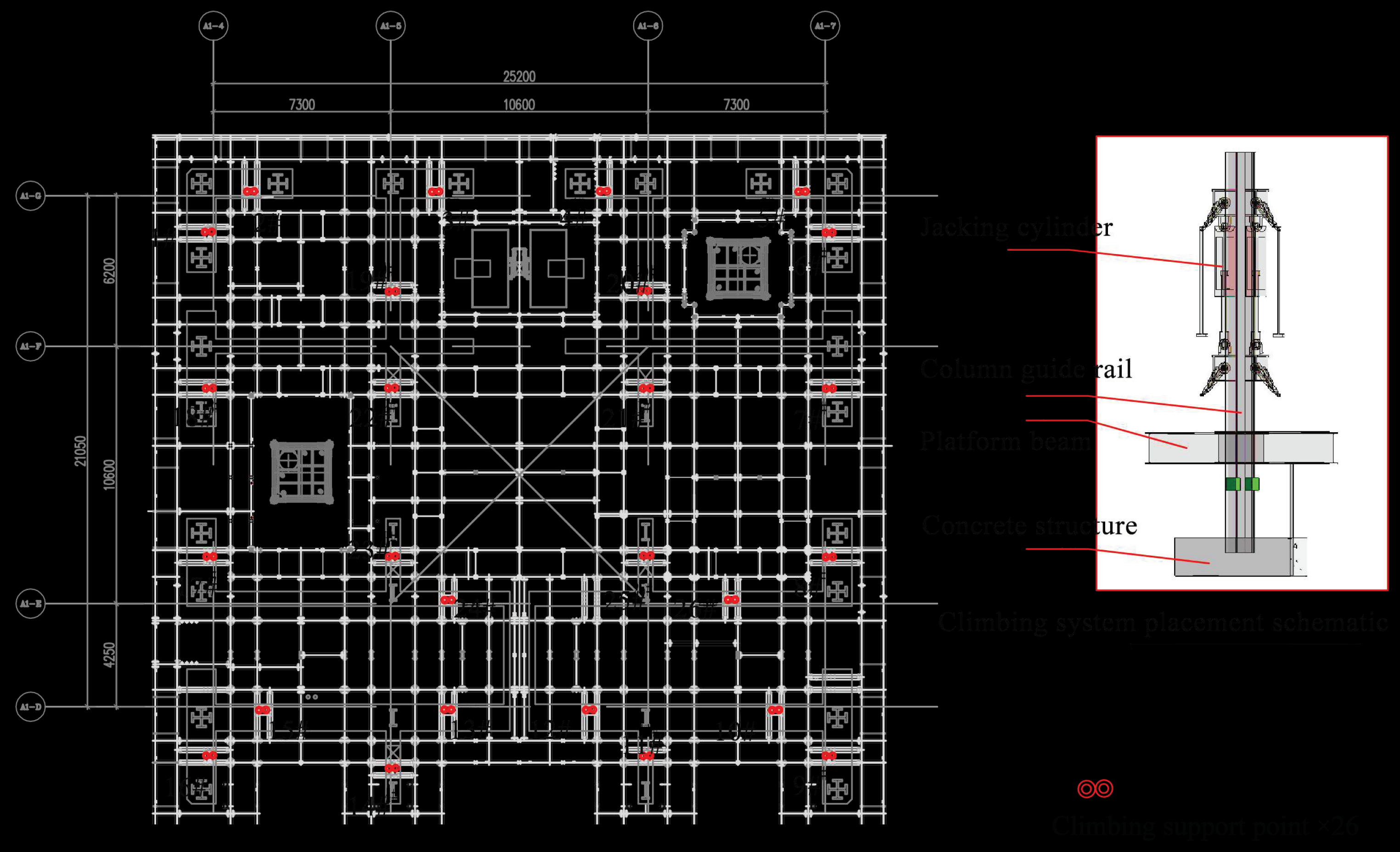

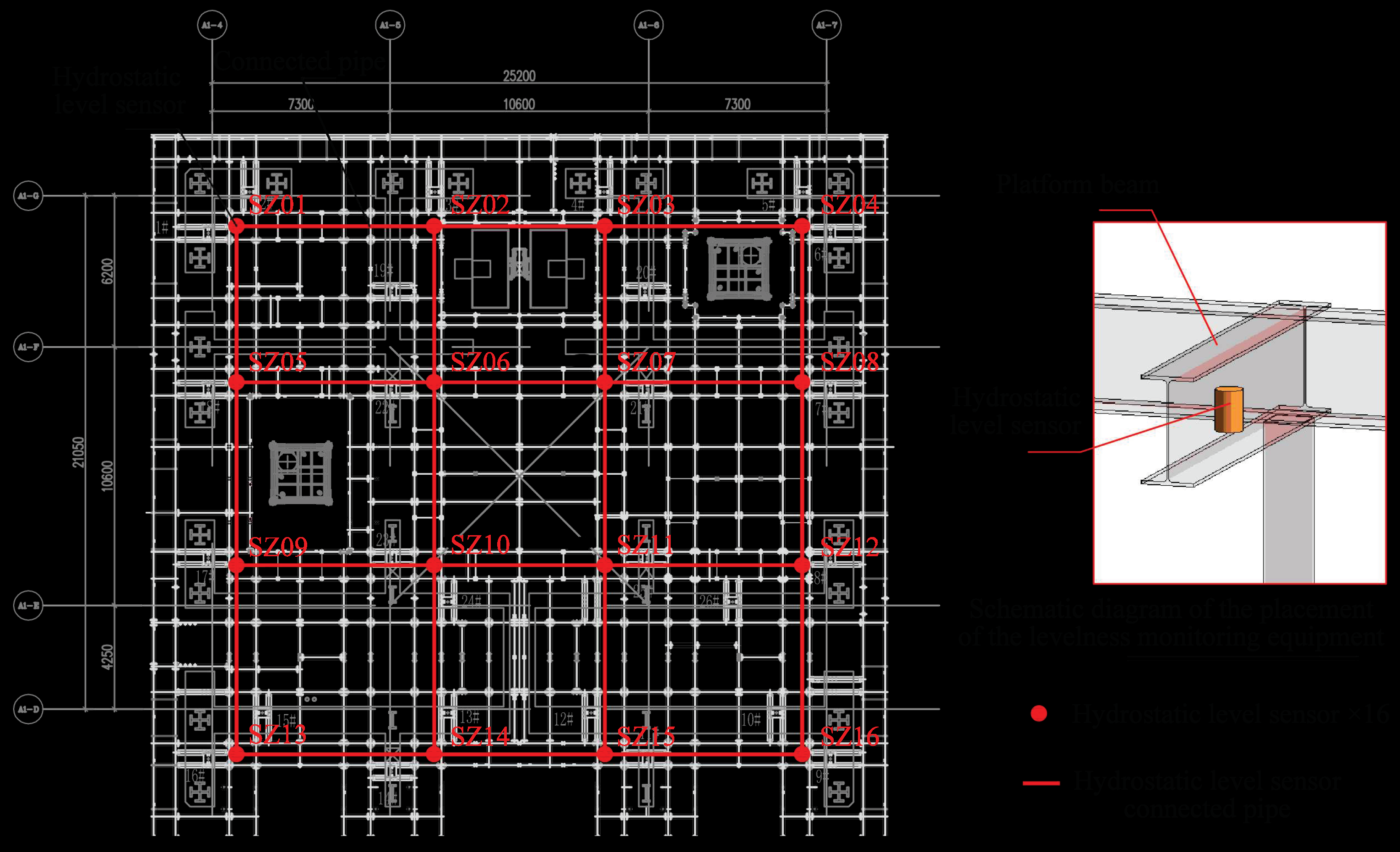

In this groundbreaking research endeavor, we meticulously observed and analyzed the HBM climbing process at the West Tower of the Shenzhen Xinghe Yabao Building project (

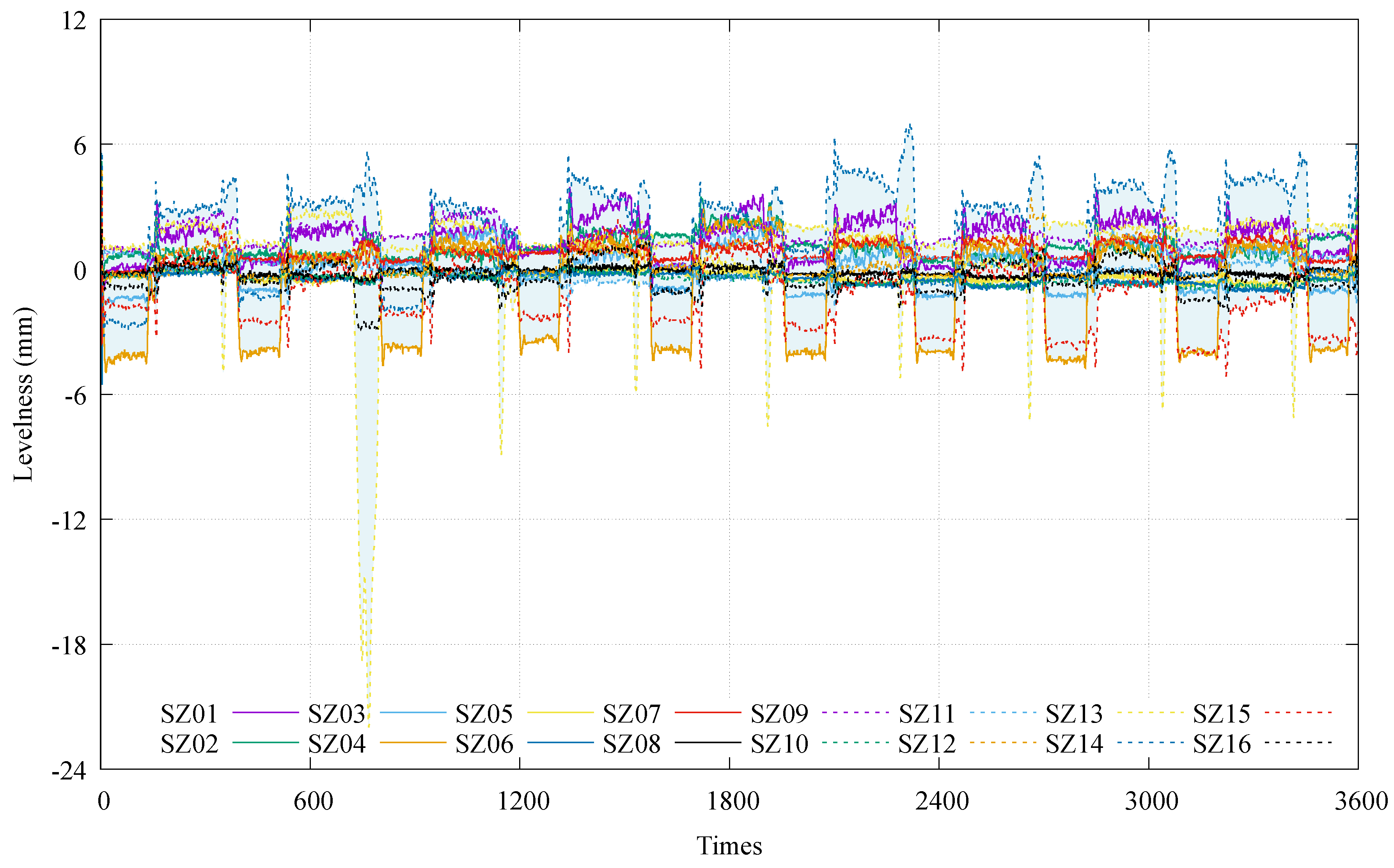

) in China. With an average climbing frequency of once every five days, we embarked on an exploration utilizing an extensive dataset comprising 30 sets/days of monitoring data. Each dataset, meticulously sampled at an impressive rate of 1 sample per second, encompassed 168,048 monitoring data points. These data points comprised 68 sample characterizations, forming a comprehensive foundation for our investigation. As showcased in the captivating

Figure 2, our monitoring efforts focused on meticulously observing and recording the jacking stroke and pressure of 26 climbing jacking cylinders throughout the climbing process. We also closely monitored the levelness of 16 crucial monitoring points on the steel platform beam, as eloquently illustrated in the captivating

Figure 3. To provide further insight,

Table 1 offers a simple breakdown of the essential characteristics exhibited by our meticulously gathered monitoring data, serving as a valuable reference throughout our study.

2.2. Pre-processing of Monitoring Data

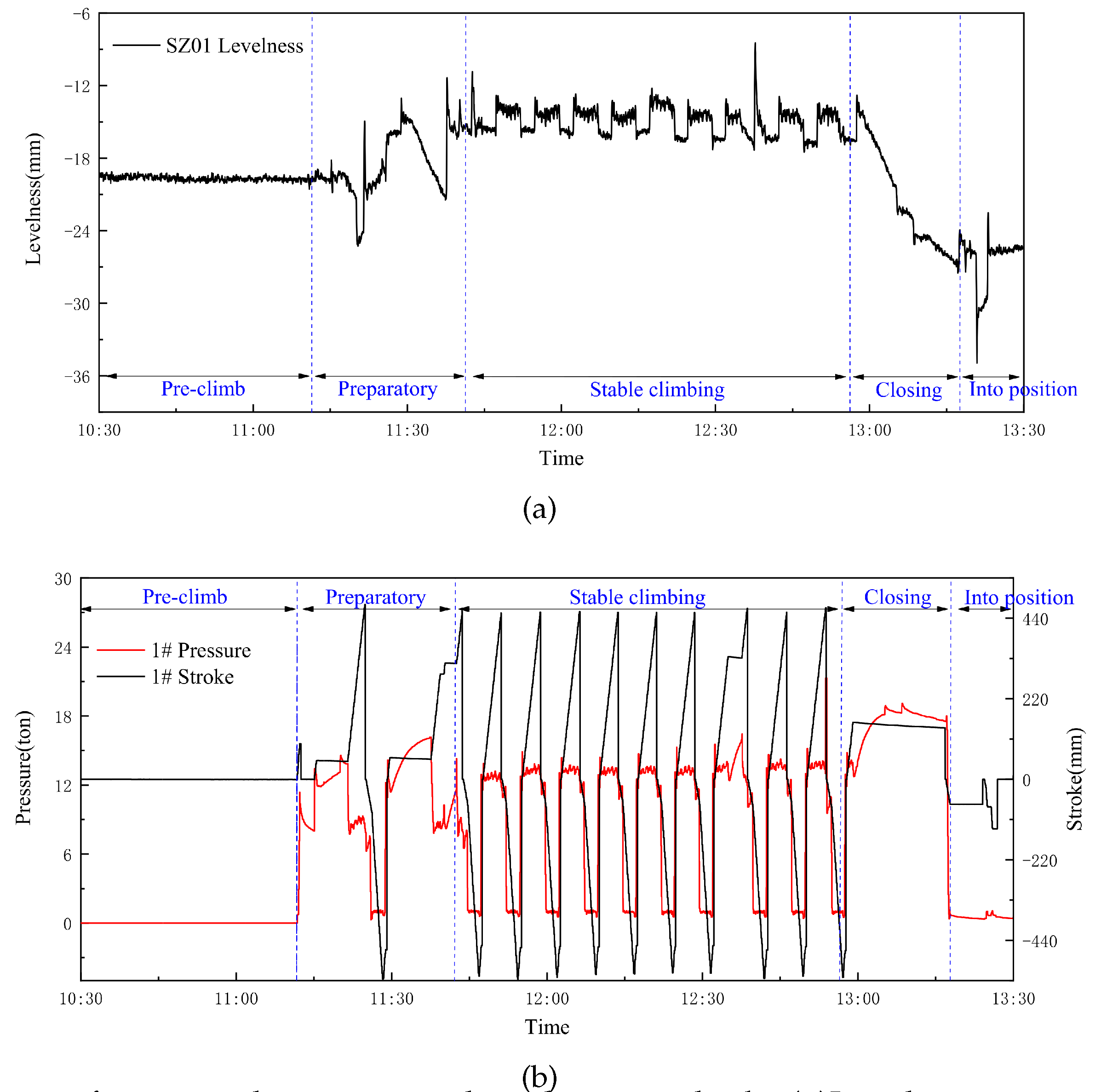

Figure 4 shows the entire climbing process of the HBM, plotting the levelness of the SP, the jacking stroke, and the jacking cylinder pressure over time. The climb can be divided into five phases: the pre-climb stage, the preparation stage, the stable climbing stage, the closing of the climbing stage, and the climbing-into-position stage. The posture of the HBM changed very little during the pre-climb and climbing-into-position stages. The preparation stage and the closing of the climbing stage, by contrast, are affected by equipment operation errors arising from the installation of the jacking cylinders, such as support position errors and cylinder tilt. Once the climb enters the stable climbing stage, the levelness deviation of the SP is gradually eliminated. We therefore restrict our analysis to data from the stable climbing stage, where the SP’s levelness is relatively stable and reliable. Note that the initial levelness deviation observed at the start of the stable climbing stage is not caused by the climbing process itself. To avoid data jumps between successive stable climbs, we reset (initialized) each SP levelness reading at the start of every stable climb, ensuring the accuracy and consistency of the analysis.
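The per-climb initialization of the levelness data can be sketched as follows. This is a minimal pure-Python illustration, not the authors’ code: it assumes the stable-climbing samples have already been extracted and concatenated in time order, and that the row index at which each stable climb starts (the hypothetical `climb_starts` argument) is known. Each levelness channel is re-referenced to its first reading in that climb, so every stable climb starts at zero and no jump appears at the boundaries.

```python
from typing import List, Sequence


def zero_levelness_per_climb(
    levelness: Sequence[Sequence[float]],
    climb_starts: Sequence[int],
) -> List[List[float]]:
    """Re-reference each levelness channel to 0 at the start of every stable climb.

    levelness:    rows are time steps, columns are monitoring points (e.g. SZ01..SZ16).
    climb_starts: row index where each stable climb begins (hypothetical input,
                  assumed to come from the stage segmentation). Rows before the
                  first start are dropped.
    """
    starts = sorted(climb_starts)
    out: List[List[float]] = []
    for k, start in enumerate(starts):
        end = starts[k + 1] if k + 1 < len(starts) else len(levelness)
        baseline = levelness[start]  # first reading of this stable climb
        for row in levelness[start:end]:
            out.append([value - base for value, base in zip(row, baseline)])
    return out


if __name__ == "__main__":
    # Two stable climbs of two samples each, two monitoring points.
    data = [[1.0, 2.0], [1.5, 2.5], [3.0, 0.0], [3.5, 0.25]]
    print(zero_levelness_per_climb(data, [0, 2]))
    # [[0.0, 0.0], [0.5, 0.5], [0.0, 0.0], [0.5, 0.25]]
```

Subtracting a per-climb baseline keeps the within-climb changes intact while removing the offset that each climb inherits from the previous one.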

3. Methodology

Selecting an appropriate machine learning algorithm is crucial to extracting valuable insights from the sensor monitoring data collected during the HBM climbing process. The algorithm should uncover hidden patterns in the data and establish a mapping between the HBM climbing parameters and postures. To predict HBM postures accurately, the chosen model must handle multivariate time-series features effectively and capture the nonlinear relationships in the data automatically. This study employs three multivariate time-series neural networks, namely LSTM, GRU, and TCN, to predict the postures during HBM climbing.

3.1. Multivariate Time-series Prediction Model

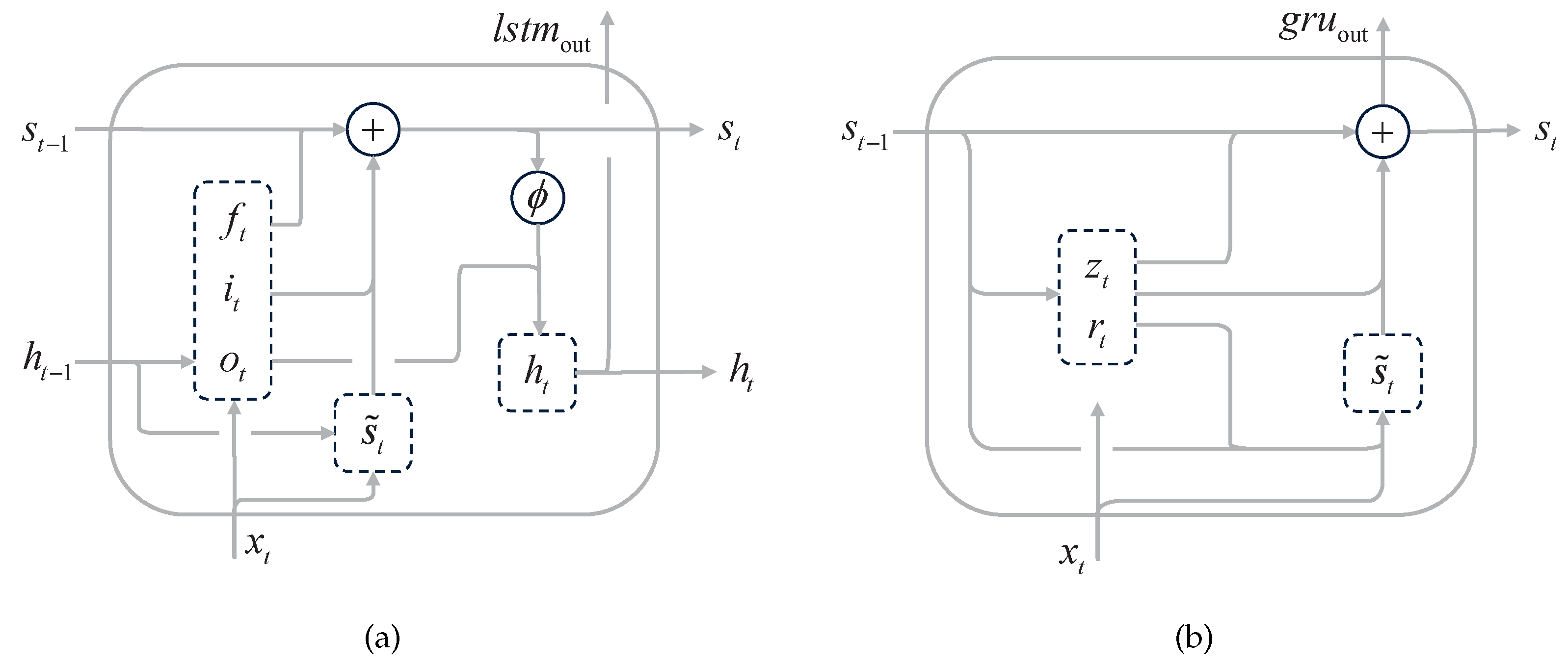

3.1.1. LSTM Model

The LSTM model, introduced by Hochreiter and Schmidhuber (1997) [47], addresses the limitations of traditional RNNs through explicit memory management. Because information is explicitly added to and removed from the cell state (see Equation 1), the cell state can carry information across many time steps largely unchanged. Together, the gates and the carefully designed memory cell allow the model to preserve long-term memory while keeping the most recent state relevant. This design prevents the information distortion, vanishing, and sensitivity explosion that can occur in other models such as Neural Turing Machines.

A typical LSTM cell, illustrated in Figure 5(a), has three gates: the forget gate, the input gate, and the output gate. These gates manage the memory of the network by passing weighted sums of the form $W \cdot [h_{t-1}, x_t] + b$ through their activation functions. As given in Equation 2 [47], the forget gate $f_t$ decides which information is retained or discarded. It applies the sigmoid function ($\sigma$) to the previous hidden state $h_{t-1}$ and the current input $x_t$, which rescales each dimension of its output to the range $[0, 1]$; each value therefore indicates how much of the corresponding component of the previous cell state $C_{t-1}$ contributes to the new cell state $C_t$. The input gate $i_t$ determines which new information is stored in the cell state; its activation is also computed with $\sigma$ from $h_{t-1}$ and $x_t$. The candidate cell state $\tilde{C}_t$ is computed with the hyperbolic tangent function ($\tanh$), which yields values between -1 and +1. The outputs $i_t$ and $\tilde{C}_t$ are combined with the retained part of $C_{t-1}$ to update the internal cell state $C_t$. Finally, the output gate $o_t$, computed by applying $\sigma$ to $h_{t-1}$ and $x_t$, determines the cell output, which is also passed on as the next hidden state $h_t = o_t \odot \tanh(C_t)$, that is, the information the hidden layer carries forward.

$$
\begin{aligned}
f_t &= \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right) \\
\tilde{C}_t &= \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \\
o_t &= \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \odot \tanh(C_t)
\end{aligned}
$$

Here, $\odot$ denotes element-wise multiplication.
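To make the gate interactions of the LSTM cell concrete, the following pure-Python sketch computes a single step of the standard LSTM cell (forget, input, and output gates plus the tanh candidate state). It is illustrative only: the source does not give the authors’ implementation, and the weight layout used here (one matrix per gate acting on the concatenation $[h_{t-1}, x_t]$) and all function names are assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]
Params = Dict[str, Tuple[Matrix, Vector]]  # gate name -> (W, b)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, b: Sequence[float], v: Sequence[float]) -> Vector:
    """Return W @ v + b for a row-major weight matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(x_t: Sequence[float], h_prev: Sequence[float],
              c_prev: Sequence[float], params: Params) -> Tuple[Vector, Vector]:
    """One LSTM time step; each gate's W acts on the concatenation [h_prev, x_t]."""
    hx = list(h_prev) + list(x_t)
    f = [sigmoid(z) for z in affine(*params["f"], hx)]        # forget gate f_t
    i = [sigmoid(z) for z in affine(*params["i"], hx)]        # input gate i_t
    c_hat = [math.tanh(z) for z in affine(*params["c"], hx)]  # candidate state
    o = [sigmoid(z) for z in affine(*params["o"], hx)]        # output gate o_t
    # Additive cell update: keep part of C_{t-1}, add part of the candidate.
    c = [ft * cp + it * ch for ft, cp, it, ch in zip(f, c_prev, i, c_hat)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]          # hidden state h_t
    return h, c


def zero_params(hidden: int, inputs: int) -> Params:
    """All-zero weights and biases, useful only for checking the wiring."""
    W = [[0.0] * (hidden + inputs) for _ in range(hidden)]
    b = [0.0] * hidden
    return {gate: (W, b) for gate in ("f", "i", "c", "o")}
```

With all-zero weights every sigmoid gate outputs 0.5 and the candidate state is tanh(0) = 0, so one step simply halves the previous cell state: `lstm_step([0.2, 0.4, 0.6], [0.0, 0.0], [1.0, -1.0], zero_params(2, 3))` returns the cell state `[0.5, -0.5]`. This isolates the additive update path through the forget gate.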

3.1.2. GRU Model

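The GRU model (Cho et al., 2014 [39]) is a simplified gated variant of the RNN architecture. Unlike the LSTM model, the GRU has only two gates: the reset gate and the update gate. Figure 5(b) illustrates the structure of the GRU model. As shown in Equation 3 [39], the GRU, in contrast to the LSTM, does not keep a separate cell state: information is carried only by the hidden state $h_t$. It also couples the LSTM’s forget and input gates, replacing the input gate $i_t$ with $1 - z_t$, where $z_t$ is the update gate. The reset gate $r_t$ determines how the previous hidden state $h_{t-1}$ is combined with the input $x_t$ when forming the candidate state $\tilde{h}_t$. The update gate $z_t$ controls how much of $h_{t-1}$ is retained. The new hidden state $h_t$ is then obtained by combining all of this information:

$$
\begin{aligned}
r_t &= \sigma\left(W_r \cdot [h_{t-1}, x_t] + b_r\right) \\
z_t &= \sigma\left(W_z \cdot [h_{t-1}, x_t] + b_z\right) \\
\tilde{h}_t &= \tanh\left(W_h \cdot [r_t \odot h_{t-1}, x_t] + b_h\right) \\
h_t &= z_t \odot h_{t-1} + (1 - z_t) \odot \tilde{h}_t
\end{aligned}
$$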
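A matching pure-Python sketch of one GRU step follows. It uses the convention of Cho et al. [39], in which the update gate $z_t$ weights the previous hidden state and $1 - z_t$ weights the candidate state; the weight layout, the single bias per gate, and the function names are assumptions for illustration, and deep-learning frameworks differ in bias handling and in which of $z_t$ and $1 - z_t$ multiplies the previous state. A small helper also counts gate parameters, to illustrate why a GRU layer is lighter than an LSTM layer.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]
Params = Dict[str, Tuple[Matrix, Vector]]  # "r", "z", "h" -> (W, b)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, b: Sequence[float], v: Sequence[float]) -> Vector:
    """Return W @ v + b for a row-major weight matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def gru_step(x_t: Sequence[float], h_prev: Sequence[float], params: Params) -> Vector:
    """One GRU time step (Cho et al. convention: z_t keeps the old state)."""
    hx = list(h_prev) + list(x_t)
    r = [sigmoid(v) for v in affine(*params["r"], hx)]  # reset gate r_t
    z = [sigmoid(v) for v in affine(*params["z"], hx)]  # update gate z_t
    # The candidate state sees the previous state only through the reset gate.
    rhx = [ri * hi for ri, hi in zip(r, h_prev)] + list(x_t)
    h_hat = [math.tanh(v) for v in affine(*params["h"], rhx)]
    return [zi * hp + (1.0 - zi) * hc for zi, hp, hc in zip(z, h_prev, h_hat)]


def n_gate_params(hidden: int, inputs: int, gates: int) -> int:
    """Weights plus one bias vector per gate block (textbook form, no framework extras)."""
    return gates * (hidden * (hidden + inputs) + hidden)
```

With all-zero weights both gates output 0.5 and the candidate is 0, so a previous state of `[1.0, -2.0]` becomes `[0.5, -1.0]`. For 52 inputs (26 strokes and 26 pressures) and a hypothetical hidden size of 64, `n_gate_params(64, 52, 3)` gives 22,464 parameters for a GRU layer versus `n_gate_params(64, 52, 4)` = 29,952 for an LSTM layer.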

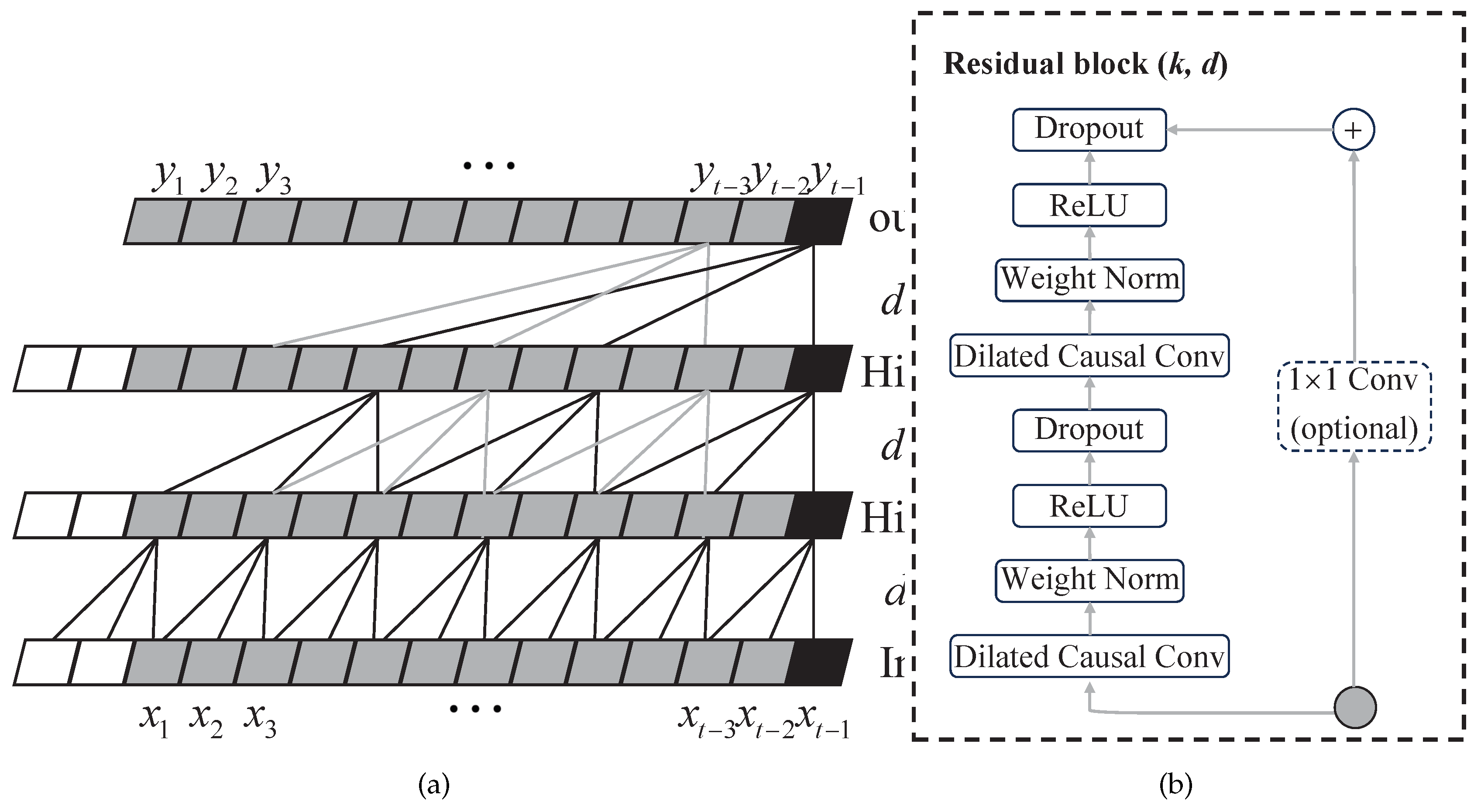

3.1.3. TCN Model

The TCN model (Bai et al., 2018 [43]) is a convolutional neural network (CNN) for time-series problems built from one-dimensional dilated causal convolutions. It combines the architecture of CNNs with the concept of dilated causal convolutions. Figure 6 illustrates the main components of the TCN model, which uses one-dimensional dilated causal convolutions with a filter size of 3 and a residual network structure. Combining causal and dilated convolutions lets each convolutional layer expand its receptive field while strictly respecting temporal order, so that an output never depends on future inputs. This larger receptive field enables the model to learn and extract historical information from multivariate time-series data. The dilated convolution operation $F$ is applied to element $s$ of a one-dimensional time-series input $x$, where $f$ is the filter. The operation is defined as [43]:

$$
F(s) = (x \ast_d f)(s) = \sum_{j=0}^{k-1} f(j) \cdot x_{s - d \cdot j}
$$

Here, $s$ denotes an element (time step) of the input sequence, $\ast$ is the convolution operator, $k$ is the filter size, and $d = 2^{i}$ is the dilation factor, where $i$ is the level of the network; $s - d \cdot j$ indicates the position of the specific historical sample being used.
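The dilated causal convolution of Bai et al. [43], $F(s) = \sum_{j=0}^{k-1} f(j)\, x_{s - d j}$, can be sketched in a few lines of pure Python for a single channel. The sketch assumes zero padding on the left, which keeps the output causal and the same length as the input; the function name is hypothetical, and a real TCN applies many such filters across channels with learned weights.

```python
from typing import List, Sequence


def dilated_causal_conv1d(x: Sequence[float], f: Sequence[float], d: int) -> List[float]:
    """F(s) = sum_{j=0}^{k-1} f[j] * x[s - d*j], using zeros for s - d*j < 0.

    x: one input channel, f: filter of size k, d: dilation factor (e.g. 2**i at level i).
    """
    k = len(f)
    out: List[float] = []
    for s in range(len(x)):
        acc = 0.0
        for j in range(k):
            idx = s - d * j  # only the current and earlier samples are read
            if idx >= 0:     # implicit left zero padding
                acc += f[j] * x[idx]
        out.append(acc)
    return out
```

With filter size 3 and dilation 2, output $s$ combines samples $s$, $s-2$, and $s-4$: for `x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]` and `f = [1.0, 1.0, 1.0]`, output 4 is 5 + 3 + 1 = 9. Changing a later sample never changes an earlier output, which is the causality constraint.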

Additionally, the TCN model incorporates a residual network structure, which helps address gradient vanishing or explosion and model degradation when training deep neural networks. In the TCN model, a residual module typically contains two layers, each comprising a dilated causal convolution, weight normalization, a ReLU activation function ($\mathrm{ReLU}(x) = \max(0, x)$, where $x$ is the input), and dropout. The output of one layer serves as the input to the dilated causal convolution of the next layer. Because the input $x$ and the output $o$ of a module must have the same dimensions to be added, an additional $1 \times 1$ convolution is applied to the shortcut to match the number of channels when they differ:

$$
o = \mathrm{Activation}\left(x + \mathcal{F}(x)\right)
$$

where $\mathcal{F}(\cdot)$ denotes the series of transformations within the residual module, and $o$ is passed on as the input to the next residual module.
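How far back a TCN can look depends on the filter size and the dilation schedule. The sketch below computes the receptive field of a stack of residual modules under two assumptions that the text does not state explicitly: the dilation doubles at each level ($d = 2^i$, $i = 0, 1, \dots$), and each residual module contains two dilated causal convolutions, as in Bai et al. [43]. Each convolution with filter size $k$ and dilation $d$ extends the receptive field by $(k-1)d$ time steps.

```python
def tcn_receptive_field(kernel_size: int, num_blocks: int, convs_per_block: int = 2) -> int:
    """Receptive field (in time steps) of stacked residual modules with d = 2**i."""
    rf = 1  # a single time step sees only itself
    for i in range(num_blocks):
        dilation = 2 ** i
        rf += convs_per_block * (kernel_size - 1) * dilation
    return rf
```

If each of the three TCN hidden layers is one residual module with filter size 3, `tcn_receptive_field(3, 3)` is 29 time steps, that is, about 29 s if the 1 Hz samples are fed without resampling, whereas `tcn_receptive_field(5, 3)` is 57. The receptive field grows linearly with the kernel size and exponentially with depth, which bears on the later suggestion of introducing larger convolution kernels to capture long-term trends.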

Figure 6.

Schematic diagram of TCN model: (a) Dilated causal convolution, (b) Residual block network structure.


3.2. The Architecture for the Posture Prediction Model of HBM

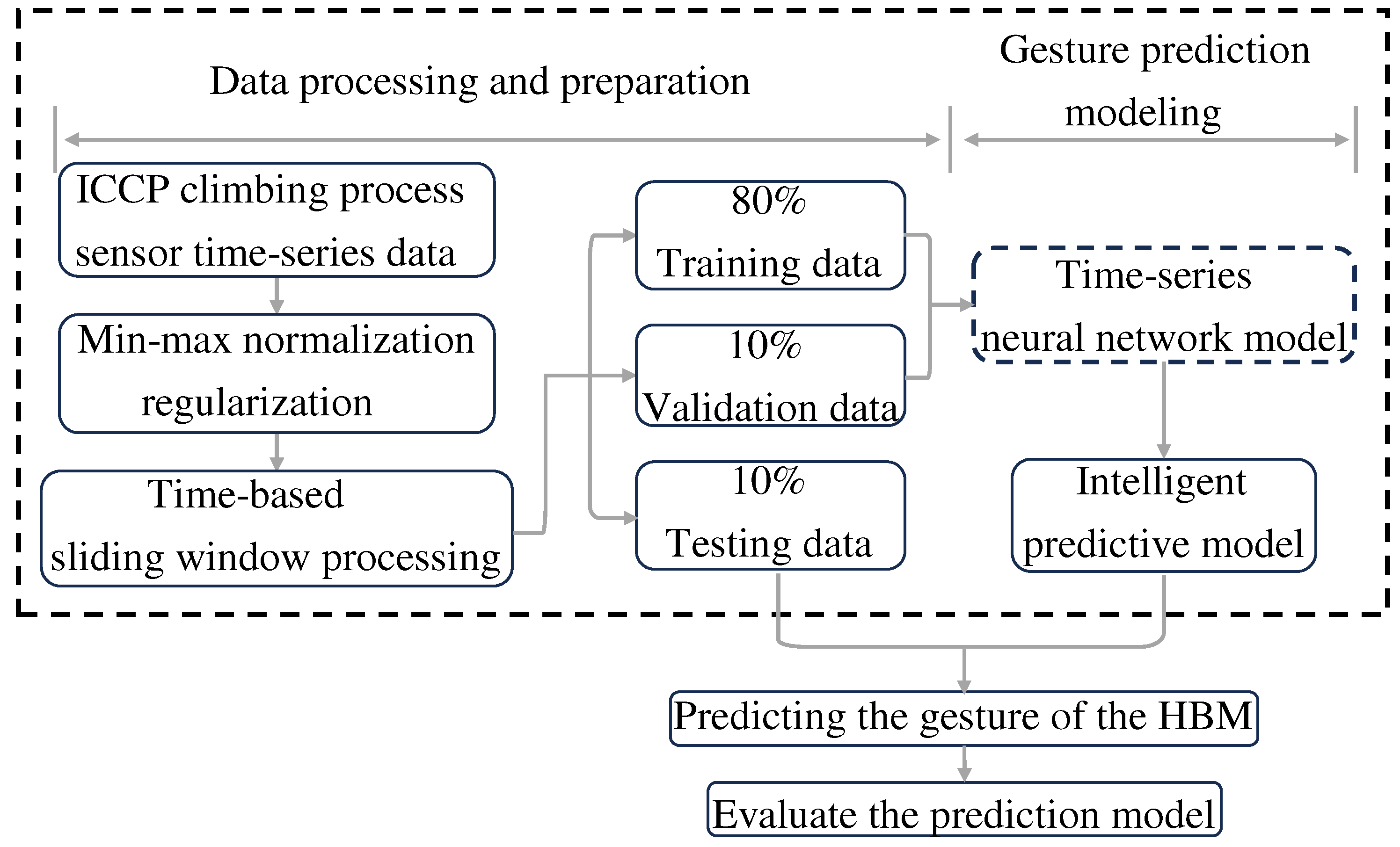

3.2.1. Data Standardization and Set Partitioning

Data from different sensors often span different value ranges, so the features in the dataset have different scales. To prevent the model from being biased toward certain features because of these magnitude differences during training, we normalize the raw data. In this experiment, we apply min-max normalization to each feature of the sensor data samples, mapping the values to the range [0, 1]. The normalized values of the sensor data samples $X$ or $Y$ (where $X$ represents the active input dataset and $Y$ represents the passive output dataset) are:

$$
X' = \frac{X - X_{\min}}{X_{\max} - X_{\min}}, \qquad Y' = \frac{Y - Y_{\min}}{Y_{\max} - Y_{\min}}
$$

Here, $X_{\max}$ ($Y_{\max}$) denotes the maximum value of the sample dataset, and $X_{\min}$ ($Y_{\min}$) denotes the minimum value of the sample dataset.
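A minimal sketch of the min-max normalization, applied column by column (one column per sensor feature), follows. The text does not state which samples the minima and maxima are computed from; computing them on the training set only and reusing them for the validation and test sets, as assumed here, avoids leaking test information into the scaling. The inverse transform maps predicted levelness back to the original units for reporting. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Stats = List[Tuple[float, float]]  # (min, max) per feature column


def fit_min_max(rows: Sequence[Sequence[float]]) -> Stats:
    """Per-column minimum and maximum, e.g. computed on the training rows only."""
    return [(min(col), max(col)) for col in zip(*rows)]


def transform_min_max(rows: Sequence[Sequence[float]], stats: Stats) -> List[List[float]]:
    """x' = (x - x_min) / (x_max - x_min); constant columns map to 0.0 (assumption)."""
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, (lo, hi) in zip(row, stats)]
        for row in rows
    ]


def inverse_min_max(rows: Sequence[Sequence[float]], stats: Stats) -> List[List[float]]:
    """Undo the scaling: x = x' * (x_max - x_min) + x_min."""
    return [[v * (hi - lo) + lo for v, (lo, hi) in zip(row, stats)] for row in rows]
```

Values in the validation or test set that lie outside the training range map slightly outside [0, 1], which is expected with this approach.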

The dataset is partitioned into a training set (80%), a validation set (10%), and a test set (10%). The training set is used to train the neural network model. The validation set is used to evaluate the model during training, which helps detect overfitting and underfitting. Finally, the test set is used to evaluate the predictive performance of the selected model.
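The text gives the split proportions but not how samples are assigned to each subset. For 1 Hz sensor data, neighboring samples are almost identical, so a random shuffle would place near-duplicates of test samples in the training set; the sketch below therefore assumes a chronological 80/10/10 split, with the earliest data used for training. This is an illustrative assumption, not necessarily the authors’ procedure, and the function name is hypothetical.

```python
from typing import Tuple


def chronological_split(n: int, train: float = 0.8, val: float = 0.1) -> Tuple[range, range, range]:
    """Index ranges for time-ordered train/validation/test subsets (no shuffling)."""
    n_train = int(n * train)
    n_val = int(n * val)
    return (
        range(0, n_train),
        range(n_train, n_train + n_val),
        range(n_train + n_val, n),  # the remainder goes to the test set
    )
```

Applied to 168,048 rows, this yields 134,438 training, 16,804 validation, and 16,806 test rows.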

3.2.2. Evaluation of Predictive Performance

In this study, the mean absolute error (MAE) and the coefficient of determination (R²) are used as evaluation metrics for the prediction model:

$$
\mathrm{MAE} = \frac{1}{m} \sum_{i=1}^{m} \left| y_i - \hat{y}_i \right|
$$

$$
R^2 = 1 - \frac{\sum_{i=1}^{m} \left( y_i - \hat{y}_i \right)^2}{\sum_{i=1}^{m} \left( y_i - \bar{y} \right)^2}
$$

Here, $m$ is the total number of time steps, $y_i$ is the actual value at time step $i$, $\hat{y}_i$ is the value predicted by the model at time step $i$, and $\bar{y}$ is the mean of all actual values.

The MAE directly reflects the magnitude of the prediction errors; a smaller MAE indicates better prediction. R² is at most 1, and a value closer to 1 indicates a better fit of the model to the data and thus a stronger predictive capability; on test data it can fall below 0 when the model predicts worse than the mean of the actual values.
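Both metrics can be computed per monitoring point (for example, separately for each of SZ01 to SZ16) with the short sketch below, which follows the standard definitions of MAE and of R² as one minus the ratio of the residual sum of squares to the total sum of squares. The example values are made up to show that R² reaches 1 for a perfect prediction, 0 for a prediction equal to the mean, and becomes negative when a prediction is worse than the mean.

```python
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error over the m time steps."""
    return sum(abs(a - p) for a, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((a - p) ** 2 for a, p in zip(y_true, y_pred))
    ss_tot = sum((a - mean) ** 2 for a in y_true)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    y = [1.0, 2.0, 3.0, 4.0]
    print(mae(y, [2.0, 2.0, 3.0, 5.0]))   # 0.5
    print(r2(y, y))                       # 1.0
    print(r2(y, [2.5, 2.5, 2.5, 2.5]))    # 0.0
    print(r2(y, [4.0, 3.0, 2.0, 1.0]))    # -3.0
```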

3.2.3. Multivariate Time-series Prediction Architecture

The flowchart in Figure 7 illustrates the technical route used in this study to predict the HBM postures. First, the sensor monitoring data of the HBM are preprocessed. A multivariate time-series neural network model is then trained on the training set, while the validation set is used for model selection and fine-tuning. The root mean square error (RMSE) is used as the loss function:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{m} \sum_{i=1}^{m} \left( y_i - \hat{y}_i \right)^2}
$$

The Adam optimizer was used to update the network parameters during training.
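The sketch below computes the RMSE loss and contrasts it with the MAE on two made-up error patterns that have the same MAE. Because errors are squared before averaging, RMSE penalizes a single large error more heavily than several small ones, which makes the training objective sensitive to levelness peaks. Since the square root is monotonic, minimizing RMSE and minimizing the mean squared error lead to the same optimum.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error over the m time steps."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error, for comparison."""
    return sum(abs(a - p) for a, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    actual = [0.0, 0.0, 0.0, 0.0]
    spread = [1.0, 1.0, 1.0, 1.0]  # four small errors
    spike = [0.0, 0.0, 0.0, 4.0]   # one large error
    print(mae(actual, spread), rmse(actual, spread))  # 1.0 1.0
    print(mae(actual, spike), rmse(actual, spike))    # 1.0 2.0
```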

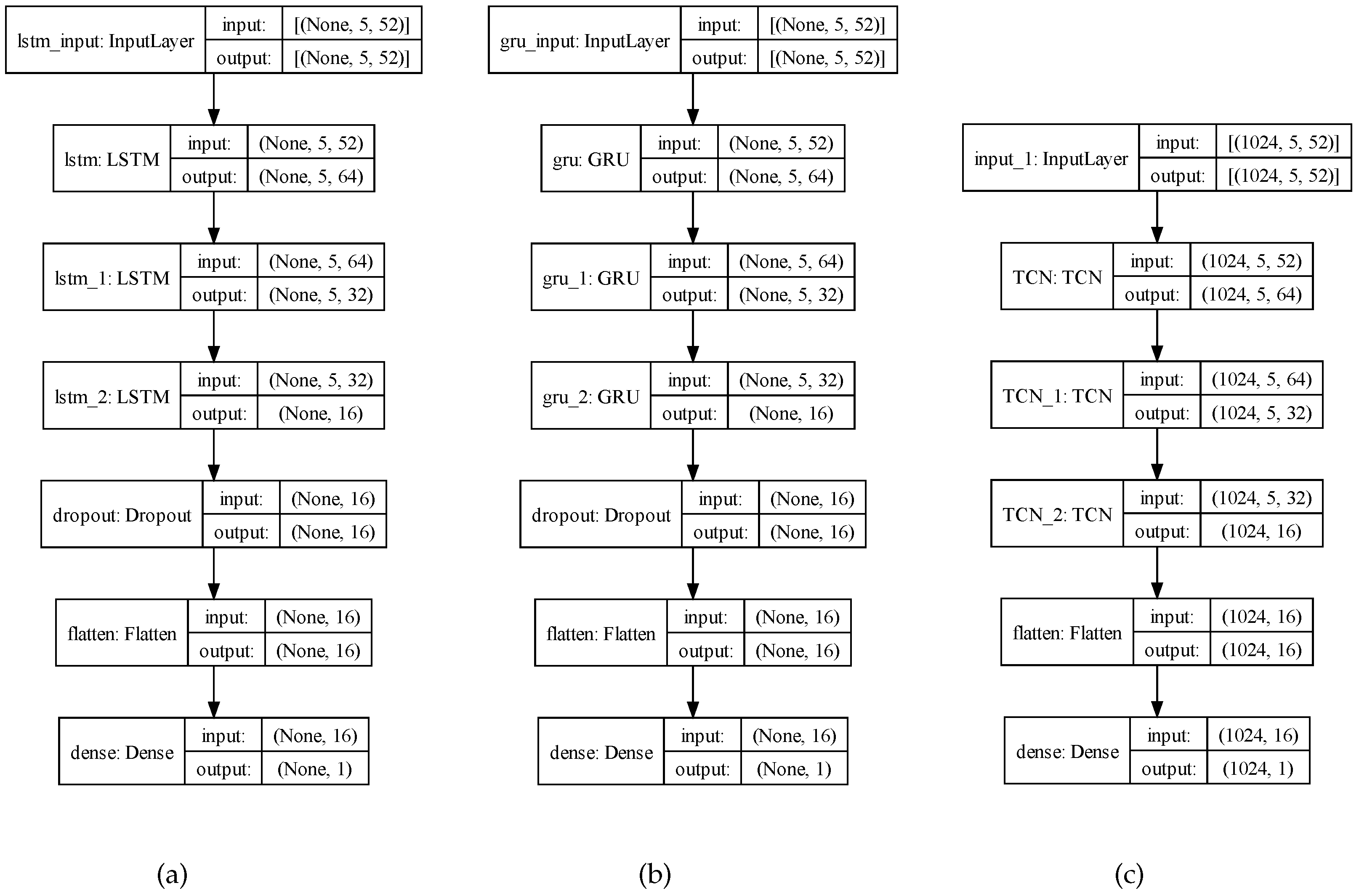

Figure 8 illustrates the three multivariate time-series neural network architectures used in this study. The LSTM and GRU architectures are similar. LSTM, GRU, and TCN layers, respectively, form the hidden layers of these architectures, and all three models use 3 hidden layers so that their prediction results are comparable. To prevent overfitting, dropout is used as a regularization technique: the LSTM and GRU models apply dropout after the hidden layers, whereas the TCN model applies it within each TCN layer. Finally, a fully connected layer reduces the output to the required dimensionality.

4. Results

4.1. Sensitivity Analysis

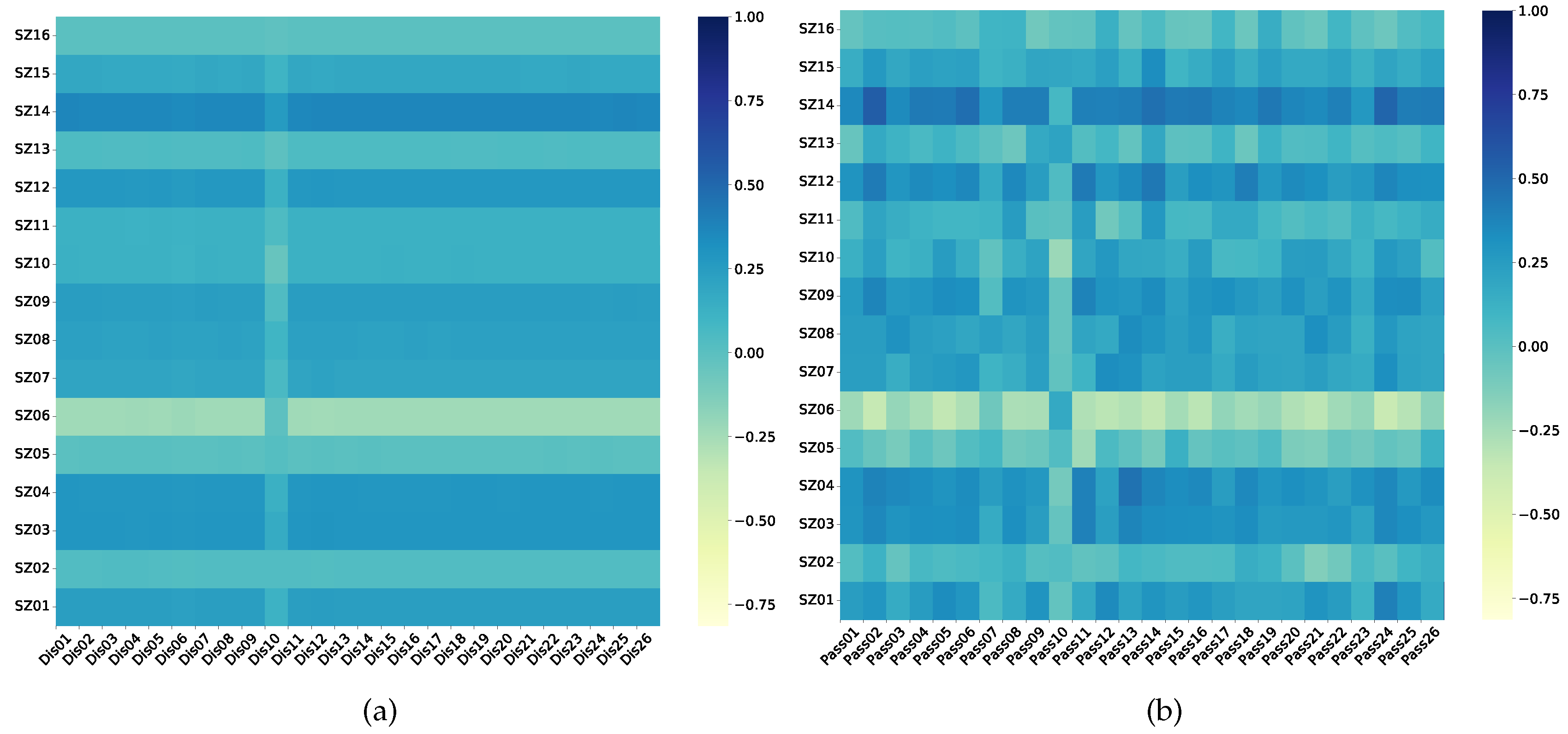

This study selected 26 jacking cylinder strokes and 26 jacking cylinder pressures as the active inputs X for multivariate prediction of the HBM climbing posture. The levelness at the 16 monitoring points on the SP during the climb was chosen as the passive output Y.

Figure 9(a) and Figure 9(b) show the Pearson correlation coefficients $r$ (see Equation 9) between the jacking cylinder stroke and the SP levelness, and between the jacking cylinder pressure and the SP levelness, respectively. These coefficients reveal how the stroke and pressure of each jacking cylinder relate to the levelness at each position on the SP. Notably, the displacements of the SP at SZ01, SZ03, SZ04, SZ07, SZ08, SZ09, SZ12, and SZ14 respond more strongly to jacking cylinder activity.

$$
r_{XY} = \frac{\mathrm{cov}(X, Y)}{\sigma_X \sigma_Y}
$$

where $\mathrm{cov}(X, Y)$ is the covariance and $\sigma_X$ and $\sigma_Y$ are the standard deviations.
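The sensitivity analysis, and the cylinder selection used later in the Discussion, can be sketched as follows: compute Pearson’s $r$ between each input channel (a jacking cylinder stroke or pressure series) and one levelness series, then pick the channel with the largest absolute correlation. Ranking by $|r|$ is an assumption; the sign of $r$ is returned so that positively and negatively correlated cylinders can be told apart. Channel names and series in the example are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """r = cov(X, Y) / (sigma_X * sigma_Y); the 1/n factors cancel."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0.0 or sy == 0.0:
        return 0.0  # r is undefined for a constant series; treated as 0 here
    return cov / (sx * sy)


def strongest_input(inputs: Dict[str, Sequence[float]],
                    target: Sequence[float]) -> Tuple[str, float]:
    """Name and r of the input channel most correlated (by |r|) with the target."""
    scores = {name: pearson(series, target) for name, series in inputs.items()}
    best = max(scores, key=lambda name: abs(scores[name]))
    return best, scores[best]
```

For a levelness series `[2.0, 4.0, 6.0, 8.0]` and two hypothetical pressure channels `{"P01": [1.0, 2.0, 3.0, 4.0], "P02": [4.0, 1.0, 3.0, 2.0]}`, `strongest_input` selects `"P01"` with $r \approx 1$, while `"P02"` has $r = -0.4$.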

4.2. Model Comparison and Evaluation

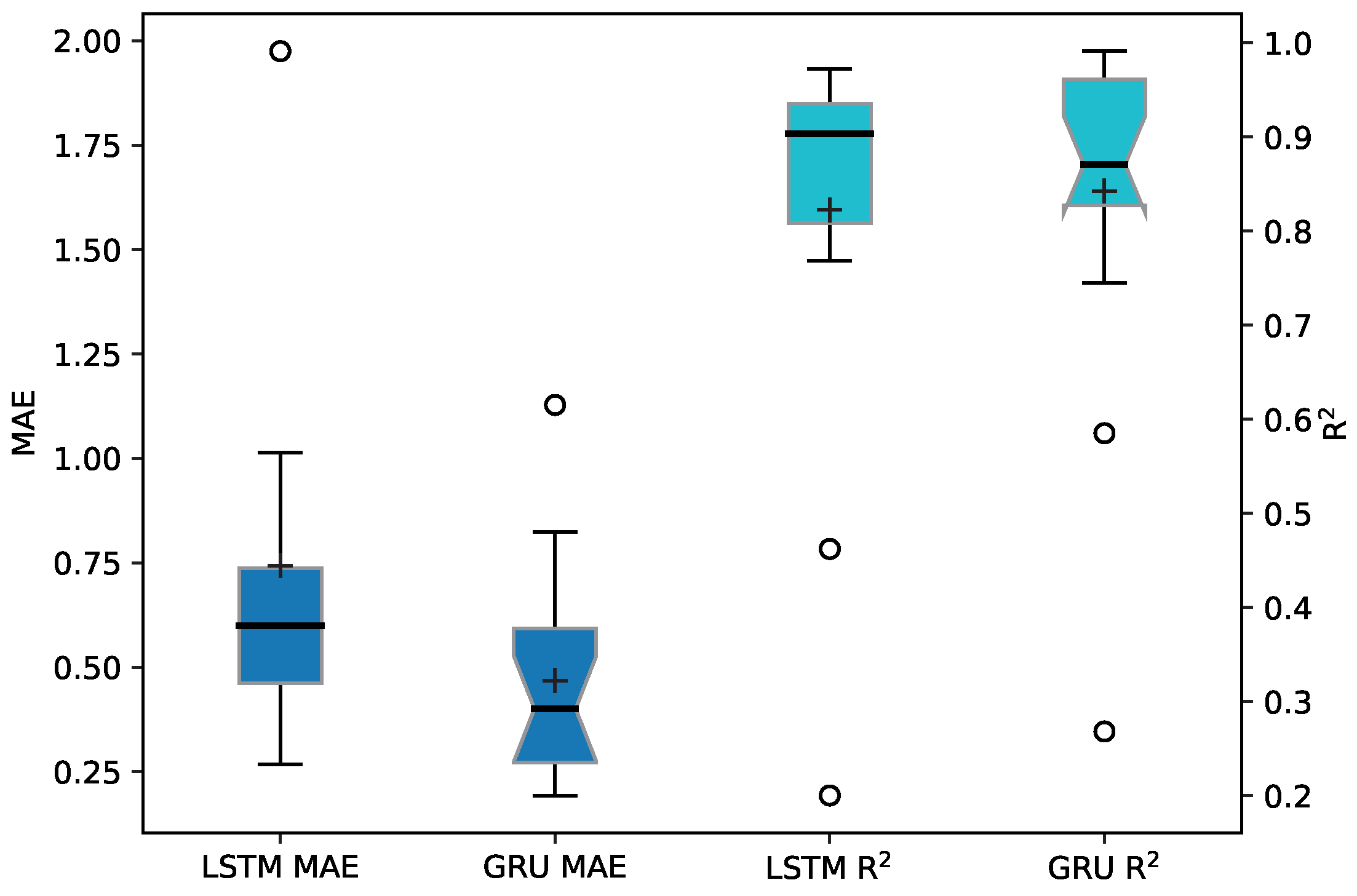

In this study, a posture prediction model for the HBM is constructed using LSTM, GRU, and TCN neural networks. The models were trained and validated on separate datasets. After training for 200 epochs with a batch size of 1024, each network yielded an intelligent prediction model for the levelness at the 16 points of the SP.

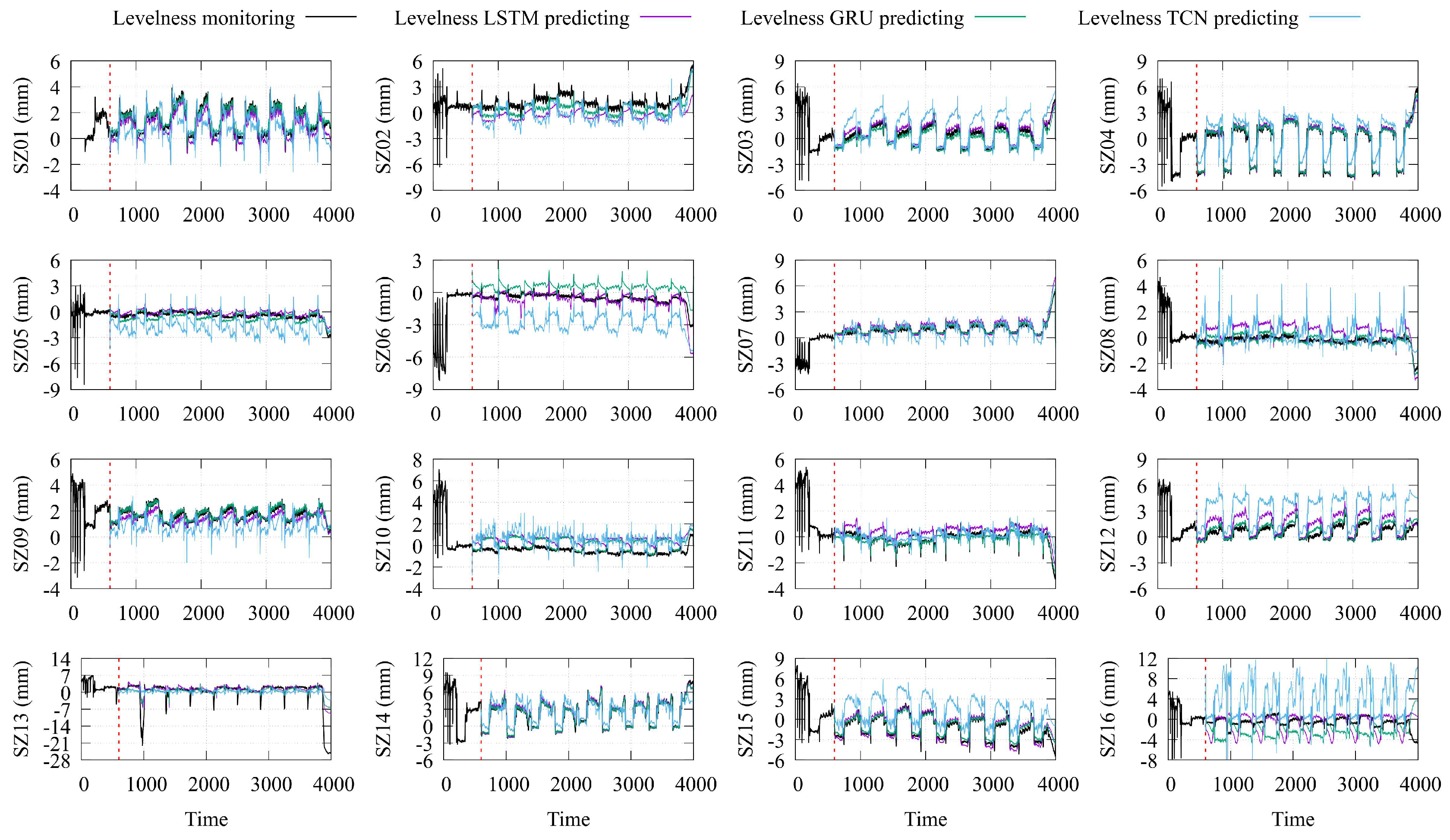

The intelligent prediction model takes real-time time series of the stroke and pressure of the jacking cylinders as input. The final HBM posture prediction model was validated on the test dataset. The predictions of the LSTM, GRU, and TCN models were compared with the actual levelness at the 16 monitoring points on the construction platform, as shown in Figure 10.

Judging by the fluctuation patterns and peaks of the overall levelness, the LSTM and GRU models predicted the HBM postures with a certain level of reliability, whereas the TCN model showed considerably larger prediction errors. To evaluate the accuracy and predictive ability of the three models in depth, the coefficient of determination (R²) and the mean absolute error (MAE) (see Equation 7) were used as performance indicators, as detailed in Table 2. The MAE values of the TCN model generally exceeded 1.0, indicating a substantial deviation between the predicted and monitored values, and its R² values were predominantly below 0.6, indicating poor agreement. For the LSTM model, MAE values above 1.0 were observed at monitoring points SZ13, SZ15, and SZ16, with a large error of 7.305 at SZ13, and R² values below 0.8 at SZ06, SZ10, SZ13, and SZ16 indicate a poor fit at these points. The GRU model exceeded an MAE of 1.0 only at monitoring point SZ13, where it reached 1.128, and its R² values at SZ05, SZ06, and SZ16 fell below 0.8, indicating a weaker fit at these points. Notably, the R² value at SZ16 was only 0.268, suggesting almost no fitting ability at this point.

Although not all values predicted by the LSTM and GRU models exactly matched the measured levelness, the predictions for the steel platform at points SZ01, SZ03, SZ04, SZ07, SZ08, SZ09, SZ12, SZ14, and SZ15 were better, with similar fluctuation patterns and peaks in the predicted and monitored values. This further supports the sensitivity analysis between the multiple explanatory variables and multiple observed variables in Section 4.1.

Figure 11 also shows that the GRU model consistently had smaller MAE values, indicating higher accuracy in predicting the HBM posture, whereas the LSTM model had R² values closer to 1 across all instances, indicating a better fit to the HBM posture.

However, monitoring points SZ10, SZ13, and SZ16 showed poor predictive performance in all three models, indicating that these points are insensitive to the jacking cylinder data and susceptible to other influencing factors. The unsatisfactory predictions of the TCN model suggest that it fails to capture important long-term features; further enhancements, such as introducing larger convolution kernels, may be needed to capture the long-term trends of HBM postures.

Figure 11.

MAE and R² under LSTM and GRU model prediction.


5. Discussion

In this research, we used LSTM, GRU, and TCN neural network models to predict changes in the HBM’s posture from historical data on the SP levelness and the stroke and pressure of the climbing jacking cylinders. We adopted a multivariate time-series modeling approach to address the multiple-output prediction problem, and compared and evaluated the predictions of these time-series neural networks. We selected the optimal solution based on the mean square error, which effectively reduced model randomness and improved the correlation of levelness at each monitoring point of the HBM. By training the multivariate time-series neural networks, we obtained a model capable of predicting the HBM’s posture. Using the data monitored at the start of the climbing process, the model predicts the subsequent data accurately, which facilitates the adjustments to each climbing jacking cylinder needed to restore the HBM’s levelness, even when the load distribution on the SP is unknown.

During the climbing of the HBM, variations in the SP’s posture must be minimized. The preceding analysis shows that the GRU model predicts the HBM’s posture most accurately. The HBM operator can therefore use the GRU-based intelligent prediction model to anticipate future changes in the HBM’s posture during climbing and to adjust the posture continuously in response to the predictions.

Figure 12 illustrates the temporal data obtained from 16 levelness sensors during the climbing of the HBM. As the HBM climbs, the readings from the 16 sensors consistently demonstrate changes in the measured levelness. Furthermore, the levelness deviations across the 16 positions on the SP undergo dynamic changes in conjunction with the climbing process.

During climbing, the jacking stroke directly drives the HBM’s posture and strongly affects its overall posture dynamics, so relying on stroke adjustments to correct the posture is clearly suboptimal. To use the proposed model to guide the HBM’s climbing state, we instead dynamically adjust the jacking pressure of the cylinders at the various positions. This approach rectifies the HBM’s posture dynamically and minimizes the levelness deviations among different positions on the SP.

Figure 9(b) shows the results of the Pearson correlation analysis between the SP levelness and the jacking cylinder pressure. For each position on the SP, the levelness fluctuation correlates most strongly with the pressure of one particular jacking cylinder. Consequently, when the levelness at a particular position is poor, we adjust the pressure of the corresponding jacking cylinder, which improves the levelness there, reduces the levelness deviation across the SP, and rectifies the HBM’s posture.

Figure 12.

Use of hydrostatic levelness sensors to monitor the time course of the levelness curves at different positions of the steel platform during the climbing process.


In summary, when significant deviations in the HBM’s posture occur, we can reduce the levelness deviations on the SP by increasing the pressure of the jacking cylinder that is positively correlated with the low-levelness position on the SP. This rectifies the HBM’s posture and attenuates the levelness deviations. Because the proposed model anticipates forthcoming changes in the HBM’s posture, integrating it into the HBM’s intelligent system allows the HBM to adjust its climbing parameters autonomously, ensuring smooth and safe operation.

Figure 13 illustrates the process of adjusting the HBM’s posture during climbing. During actual climbing, the levelness deviations among the monitoring positions on the SP must be minimized, and the maximum and minimum levelness deviations must be kept within a threshold to reduce the risk of the tube structure colliding with the reinforced concrete main structure of the building. Such collisions can destabilize the climbing process and endanger the HBM. The proposed model not only predicts the climbing posture of the HBM accurately but also provides specific adjustment recommendations to ensure safe operation and mitigate construction risks.
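The correction rule described above can be expressed as a small decision function: if the predicted levelness deviations across the SP exceed the threshold, find the lowest monitoring point and recommend increasing the pressure of the jacking cylinder correlated with it. This is a hypothetical sketch under stated assumptions: the threshold is interpreted as a bound on the spread between the highest and lowest predicted levelness, the point-to-cylinder mapping (for example, from a correlation analysis) is given as input, cylinder names are invented, and a cylinder that is not positively correlated with the low point is flagged for manual review rather than adjusted automatically.

```python
from typing import Dict, Optional, Tuple


def recommend_adjustment(
    predicted: Dict[str, float],
    threshold: float,
    best_cylinder: Dict[str, Tuple[str, float]],
) -> Optional[Tuple[str, str, str]]:
    """Return (low point, cylinder, action), or None if the spread is acceptable.

    predicted:     predicted levelness per monitoring point, e.g. {"SZ01": 1.2, ...}
    threshold:     allowed max - min spread of levelness (hypothetical value)
    best_cylinder: monitoring point -> (cylinder name, Pearson r with its pressure)
    """
    spread = max(predicted.values()) - min(predicted.values())
    if spread <= threshold:
        return None
    low_point = min(predicted, key=predicted.get)  # lowest position on the SP
    cylinder, r = best_cylinder[low_point]
    if r > 0:
        return low_point, cylinder, "increase pressure"
    return low_point, cylinder, "manual review"  # no positive correlation to exploit
```

For predicted levelness `{"SZ01": 2.0, "SZ02": -3.0, "SZ03": 0.5}` and a hypothetical threshold of 4.0, the spread is 5.0, so the function points at SZ02 and its mapped cylinder; with a threshold of 6.0 it returns `None`.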

6. Conclusions

In this paper, we propose a multivariate time-series neural network prediction system for controlling the HBM and maintaining a smooth posture during construction. The system uses three multivariate time-series neural network models: LSTM, GRU, and TCN. The pressure and stroke of the jacking cylinders serve as inputs, and the levelness measured by the sensors at 16 positions on the SP serves as the output. The main objective is to address the unstable posture of the HBM caused by large levelness deviations resulting from uneven stacking loads on the SP during climbing. By predicting future posture changes from the climbing data of the HBM’s initial working stage, we aim to proactively keep the SP’s levelness deviations within a threshold and prevent posture instability during climbing. The main findings are as follows:

With the same neural network architecture and dataset size, the prediction system based on the GRU model predicts the HBM’s posture changes during climbing more accurately. Among the levelness sensors installed on the HBM, only a subset correlates strongly with the jacking parameters of the jacking mechanism. Adjusting the pressure of the jacking cylinders provides a convenient way to correct the HBM’s posture. We therefore propose using the GRU-based prediction system to anticipate the HBM’s posture changes and adjusting the jacking cylinder pressure to keep the levelness within the threshold.

Validation against measured data shows that the proposed prediction models can accurately determine the levelness deviations and posture of the HBM’s steel platform using only the working data of the jacking cylinders. This enables real-time warnings, indicating that these networks can contribute significantly to the safe and efficient operation of the HBM. The method can also be extended to monitor the operational status of other construction equipment, such as hydraulic climbing formwork, slipforms, and integral lifting scaffolds. Widespread adoption of this method could raise the overall standard of high-rise building construction.

However, the model was trained and tested only on data from a single HBM, and its applicability to other HBMs or construction platforms has yet to be verified. In addition, the model considers mainly the pressure and stroke of the jacking cylinders as inputs and does not account for the potential influence of other environmental factors, such as weather conditions. Finally, although the GRU provided the best predictions in this study, it should not be assumed to be the optimal choice in all scenarios. Future studies should therefore improve data preprocessing and cleaning, validate the generalization ability of these models, and consider more characteristic factors across a wider range of equipment, conditions, and types of HBM operational data. This will lead to more comprehensive, higher-performance predictive models.

The developed models offer real-time predictions to site managers and operators, allowing them to understand the HBM’s status during the climbing process promptly. This timely understanding enables them to make the necessary adjustments in accordance with HBM management requirements and standard specification terms, thus ensuring a safer and more efficient climbing process.

Author Contributions

Conceptualization, X.P. and J.H.; methodology, J.H.; formal analysis, J.H.; resources, X.P. and L.Z.; data curation, L.Z.; writing—original draft preparation, X.P. and J.H.; writing—review and editing, Y.Z. and Z.Z.; visualization, L.Z.; supervision, Z.Z.; design of the experimental procedure, J.H.; project administration, J.H.; funding acquisition, X.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China (Grant No. 2022YFC3802200) and the Shanghai Enterprise Innovation Development and Capacity Enhancement Project (Grant No. 2022007).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author. The data are not publicly available because they would reveal operational details of the integral climbing construction platform.

Acknowledgments

The authors thank the editors and reviewers for the time they devoted to reviewing this study. Their suggestions for improvement have played an important role in refining this paper.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| HBM | High-rise building machine |
| SP | Steel platform |
| NNs | Neural networks |
| RNN | Recurrent neural network |
| LSTM | Long short-term memory |
| GRU | Gated recurrent unit |
| TCN | Temporal convolutional neural network |
| MTS | Multivariate time series |
| MAE | Mean absolute error |
| RMSE | Root mean square error |
| R² | R-squared coefficient |

References

- Wakisaka, T.; Furuya, N.; Inoue, Y.; Shiokawa, T. Automated construction system for high-rise reinforced concrete buildings. Automation in Construction 2000, 9, 229–250.

- Ikeda, Y.; Harada, T. Application of the automated building construction system using the conventional construction method together. In Proceedings of the 23rd International Symposium on Automation and Robotics in Construction; 2006; pp. 722–727.

- Bock, T.; Linner, T. Site Automation: Automated/Robotic On-Site Factories, 1st ed.; Cambridge University Press: USA, 2016.

- Zayed, T.; Mohamed, E. A case productivity model for automatic climbing system. Engineering 2014, 21.

- Liu, X.; Hu, Y.; Chen, D.; Wang, L. Safety Control of Hydraulic Self-climbing Formwork in South Tower Construction of Taizhou Bridge. Procedia Engineering 2012, 45, 248–252.

- M, R. Constructability Assessment of Climbing Formwork Systems Using Building Information Modeling. Procedia Engineering 2013, 64, 1129–1138.

- Gong, J.; Fang, T.; Zuo, J. A Review of Key Technologies Development of Super High-Rise Building Construction in China. Advances in Civil Engineering 2022, 2022, 1–13.

- Pan, X.; Zuo, Z.; Zhang, L.; Zhao, T. Research on Dynamic Monitoring and Early Warning of the High-Rise Building Machine during the Climbing Stage. Advances in Civil Engineering 2023, 2023, 1–12.

- Pan, X.; Zhao, T.; Li, X.; Zuo, Z.; Zong, G.; Zhang, L. Automatic Identification of the Working State of High-Rise Building Machine Based on Machine Learning. Applied Sciences 2023, 13, 11411.

- Zuo, Z.; Huang, Y.; Pan, X.; Zhan, Y.; Zhang, L.; Li, X.; Zhu, M.; Zhang, L.; De Corte, W. Experimental research on remote real-time monitoring of concrete strength for highrise building machine during construction. Measurement 2021, 178, 109430.

- Golafshani, E.M.; Talatahari, S. Predicting the climbing rate of slip formwork systems using linear biogeography-based programming. Applied Soft Computing 2018, 70, 263–278.

- Li, S.; Yu, Z.; Meng, Z.; Han, G.; Huang, F.; Zhang, D.; Zhang, Y.; Zhu, W.; Wei, D. Study on construction technology of hydraulic climbing formwork for super high-rise building under aluminum formwork system. IOP Conference Series: Earth and Environmental Science 2021, 769, 032062.

- Kim, M.J.; Kim, T.; Lim, H.; Cho, H.; Kang, K.I. Automated Layout Planning of Climbing Formwork System Using Genetic Algorithm. In Proceedings of the 33rd International Symposium on Automation and Robotics in Construction (ISARC); Sattineni, A., Azhar, S., Castro, D., Eds.; Auburn, USA, July 2016; pp. 770–777.

- Yao, G.; Guo, H.; Yang, Y.; Xiang, C.; Soltys, R. Dynamic Characteristics and Time-History Analysis of Hydraulic Climbing Formwork for Seismic Motions. Advances in Civil Engineering 2021, 2021, 1–17.

- Dong, J.; Liu, H.; Lei, M.; Fang, Z.; Guo, L. Safety and stability analysis of variable cross-section disc-buckle type steel pipe high support system. International Journal of Pressure Vessels and Piping 2022, 200, 104831.

- Chandrangsu, T.; Rasmussen, K. Investigation of geometric imperfections and joint stiffness of support scaffold systems. Journal of Constructional Steel Research 2011, 67, 576–584.

- Shen, Y.; Xu, M.; Lin, Y.; Cui, C.; Shi, X.; Liu, Y. Safety Risk Management of Prefabricated Building Construction Based on Ontology Technology in the BIM Environment. Buildings 2022, 12.

- Pham, C.; Nguyen, P.T.; Thanh Phan, P.; T. N, Q.; Le, L.; Duong, M. Risk Factors Affecting Equipment Management in Construction Firms 2020. 07, 347–356.

- He, Z.; Gao, M.; Liang, T.; Lu, Y.; Lai, X.; Pan, F. Tornado-affected safety assessment of tower cranes outer-attached to super high-rise buildings in construction. Journal of Building Engineering 2022, 51, 104320.

- Jiang, L.; Zhao, T.; Zhang, W.; Hu, J. System Hazard Analysis of Tower Crane in Different Phases on Construction Site. Advances in Civil Engineering 2021, 2021, 1–16.

- Hasan, M.M.; Islam, M.U.; Sadeq, M.J.; Fung, W.K.; Uddin, J. Review on the Evaluation and Development of Artificial Intelligence for COVID-19 Containment. Sensors 2023, 23.

- Li, X.; Wu, Y.; Shan, X.; Zhang, H.; Chen, Y. Estimation of Airflow Parameters for Tail-Sitter UAV through a 5-Hole Probe Based on an ANN. Sensors 2023, 23.

- Tang, X.; Shi, L.; Wang, B.; Cheng, A. Weight Adaptive Path Tracking Control for Autonomous Vehicles Based on PSO-BP Neural Network. Sensors 2023, 23.

- Sresakoolchai, J.; Kaewunruen, S. Track Geometry Prediction Using Three-Dimensional Recurrent Neural Network-Based Models Cross-Functionally Co-Simulated with BIM. Sensors 2023, 23.

- Shevgunov, T.; Efimov, E.; Guschina, O. Estimation of a Spectral Correlation Function Using a Time-Smoothing Cyclic Periodogram and FFT Interpolation–2N-FFT Algorithm. Sensors 2023, 23.

- Zhu, Y.; Wang, M.; Yin, X.; Zhang, J.; Meijering, E.; Hu, J. Deep Learning in Diverse Intelligent Sensor Based Systems. Sensors 2023, 23.

- Hasan, F.; Huang, H. MALS-Net: A Multi-Head Attention-Based LSTM Sequence-to-Sequence Network for Socio-Temporal Interaction Modelling and Trajectory Prediction. Sensors 2023, 23.

- Wu, D.; Yu, Z.; Adili, A.; Zhao, F. A Self-Collision Detection Algorithm of a Dual-Manipulator System Based on GJK and Deep Learning. Sensors 2023, 23.

- Kapoor, B.; Nagpal, B.; Jain, P.K.; Abraham, A.; Gabralla, L.A. Epileptic Seizure Prediction Based on Hybrid Seek Optimization Tuned Ensemble Classifier Using EEG Signals. Sensors 2023, 23.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Advances in Neural Information Processing Systems 2017, 30.

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Networks 2015, 61, 85–117.

- Liu, W.; Wang, Z.; Liu, X.; Zeng, N.; Liu, Y.; Alsaadi, F. A survey of deep neural network architectures and their applications. Neurocomputing 2016, 234.

- Wu, Y.c.; Feng, J.w. Development and Application of Artificial Neural Network. Wireless Personal Communications 2018, 102.

- Zignoli, A. Machine Learning Models for the Automatic Detection of Exercise Thresholds in Cardiopulmonary Exercising Tests: From Regression to Generation to Explanation. Sensors 2023, 23.

- Rollo, F.; Bachechi, C.; Po, L. Anomaly Detection and Repairing for Improving Air Quality Monitoring. Sensors 2023, 23.

- Greff, K.; Srivastava, R.; Koutník, J.; Steunebrink, B.; Schmidhuber, J. LSTM: A search space odyssey. IEEE Transactions on Neural Networks and Learning Systems 2015, 28.

- Alexandru, T.G.; Alexandru, A.; Popescu, F.D.; Andraș, A. The Development of an Energy Efficient Temperature Controller for Residential Use and Its Generalization Based on LSTM. Sensors 2023, 23.

- Zeng, H.; Guo, J.; Zhang, H.; Ren, B.; Wu, J. Research on Aviation Safety Prediction Based on Variable Selection and LSTM. Sensors 2023, 23.

- Cho, K.; van Merriënboer, B.; Bahdanau, D.; Bengio, Y. On the Properties of Neural Machine Translation: Encoder-Decoder Approaches. 2014.

- Henry, A.; Gautam, S.; Khanna, S.; Rabie, K.; Shongwe, T.; Bhattacharya, P.; Sharma, B.; Chowdhury, S. Composition of Hybrid Deep Learning Model and Feature Optimization for Intrusion Detection System. Sensors 2023, 23.

- Chang, W.; Sun, D.; Du, Q. Intelligent Sensors for POI Recommendation Model Using Deep Learning in Location-Based Social Network Big Data. Sensors 2023, 23.

- Wei, L.; Wang, Z.; Xu, J.; Shi, Y.; Wang, Q.; Shi, L.; Tao, Y.; Gao, Y. A Lightweight Sentiment Analysis Framework for a Micro-Intelligent Terminal. Sensors 2023, 23.

- Bai, S.; Kolter, J.Z.; Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv 2018, arXiv:1803.01271.

- Bao, S.; Liu, J.; Wang, L.; Konečný, M.; Che, X.; Xu, S.; Li, P. Landslide Susceptibility Mapping by Fusing Convolutional Neural Networks and Vision Transformer. Sensors 2023, 23.

- Song, Y.; Chen, J.; Zhang, R. Heart Rate Estimation from Incomplete Electrocardiography Signals. Sensors 2023, 23.

- Ali, S.; Alam, F.; Arif, K.M.; Potgieter, J. Low-Cost CO Sensor Calibration Using One Dimensional Convolutional Neural Network. Sensors 2023, 23.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Computation 1997, 9, 1735–1780.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).