1. Introduction

The objective of this experimental protocol is to determine the relationship between physiological measurements and emotional state in children and adults with cerebral palsy. Our goal is to collect objective information on the state of the users in order to achieve greater effectiveness in rehabilitation therapies and in the promotion of physical activity. Knowledge of the user's emotional state is important for conducting the activities appropriate for their rehabilitation.

The experimentation will be conducted with a group of users belonging to the Association of People with Cerebral Palsy of Seville (ASPACE) and the Director Mercedes Sanromá Special Education School of Seville.

The methodology will be applied to groups of users of different ages and with different capacities. For this reason, it is a flexible methodology that proposes different tasks according to the context. Some of the tasks involve physical activity, which must be taken into account because of the disturbance it can introduce in the measurements.

Having an emotional characterization of users based on their physiological measurements will allow us to evaluate their feelings in real time while they perform the tasks. In this way, the activity can be better conducted by adapting the necessary parameters so that it is carried out as efficiently as possible and leads to better results.

The challenge we propose to address is that the subjects have difficulties in expressing and recognizing emotional states, which rules out the use of self-assessment tests as a reference against which to compare the measurements taken. We must therefore resort to their caregivers or relatives or, alternatively or additionally, take measurements in contexts or situations of daily life where the emotional state induced in the subject is known.

This experimentation is part of a subproject called AAI (Augmentative Affective Interface), which belongs to the coordinated project AIR4DP (Artificial Intelligence and Robotic Assistive Technology devices for Disabled People), funded by the Ministry of Science, Innovation and Universities (I+D+i Projects 2019 - Society Challenges). The main expected result of the AIR4DP project is the implementation of assistive technology that incorporates the latest advances in artificial intelligence (AI) to improve the quality of life of people with disabilities. The AAI subproject seeks to improve user interaction with systems through knowledge of the emotional state, based on physiological measurements and images. If this knowledge is injected into the robotic platform or into the software used in rehabilitation therapies, greater immersion and motivation of the subject in the proposed activities will be achieved.

2. Review of the state of the art

In order to have a knowledge base, three searches have been carried out with the following keywords:

Emotion & detection & physical & activity. These keywords have been used with the aim of exploring methodologies for detecting the emotional state during physical activities, or for proposing physical activity according to a specific emotional state.

Emotion & detection & cerebral & palsy. With this search, we intended to find studies related to the determination of the emotional state in people with cerebral palsy. The special characteristics of this population mean that the usual methodologies are not fully applicable, so it is of interest to study cases where this type of measurement has been made.

Emotion & elicitation & music. Music provokes emotions in subjects. The qualities of sound (frequency, timbre, duration and intensity) influence the induced emotions, hence its use in therapies. It can be a way of bringing the subject to the desired emotional state in order to correlate the parameters measured in that state, or a form of motivation to carry out activities.

In [1] a health assistant system for subjects with depression is proposed. The system has three facets: the first reminds the subject to take their medication, the second measures the emotional state of the subject, focusing mainly on electroencephalography (EEG), and the third proposes exercises based on the emotional state previously determined. In [2] the purpose is to detect the state of relaxation based on physiological signals, specifically the galvanic skin response (GSR), also called electrodermal activity (EDA). In [3] an experiment with 16 subjects is carried out to measure stress in real life. A smartwatch measures temperature, pulse, GSR and acceleration data. Contextual weather information is also measured. Incorporating the environment measurements increases the precision of stress detection. In addition, these measures are based on responses to stress self-assessment questionnaires. In [4] a system is proposed to motivate and monitor the physical activity of the elderly; the system uses the emotional state to propose activities. It relies on the database called AMIGOS [5]. The questionnaires about the experience of using the system, for both users and caregivers, are shown: the questionnaire for users in Table 1 and that for caregivers in Table 2.

In [6] a robotic platform is proposed to encourage physical activity in older people; their emotional state is measured through images. In [7] cognitive fatigue is measured using a mobile application in an experiment with 18 participants. The measure is based on the responses of the subjects to mobile games, a questionnaire and facial recognition with affective. In [8] different methods of classification and processing of the EEG signal are evaluated to detect emotional states caused by a game.

There are few works dedicated to the evaluation of emotional states of people with cerebral palsy (CP). This topic was the second of the searches carried out to determine the state of the art. In [9] visual evoked potentials elicited with IAPS images are used to analyze evoked emotions in children with CP versus typically developing children. The study is supported by questionnaires in the family environment (KIDSCREEN-52) and by the Self-Assessment Manikin, administered to participants chosen for having the cognitive level needed to complete this test. The test is done with 15 children with CP and 14 typically developing children. It is observed that images with affective content induce lower amplitudes in the brain responses of children with CP than in those of typically developing children, which seems to indicate a lower ability to detect emotions. These emotion elicitation techniques will not always be valid; this should be studied with the help of the professionals who attend to the subjects.

In [10], a study is done with a 10-year-old girl with a GMFCS (Gross Motor Function Classification System) level of III. Virtual reality is used, and her emotions are analyzed based on facial gestures. In [11], a case study is presented of a girl with cerebral palsy who has great difficulty communicating with her caregiver; an image recognition application written in Python detects patterns that inform the caregiver of her status.

Some works in which music is used to induce emotions in the subjects are described below; they analyze how the emotions aroused depend on the musical properties of the piece. In [12] emotions are detected while the subject plays a racing video game; the EEG signal is used, and the classifier is developed from the signals of a specific database of emotions. The idea is to insert musical segments into the game according to the emotions detected in the player. In [13] an experiment is carried out in which 13 bars in A major and A minor are used as stimuli; different self-assessment scales are used to assess the musical pieces, and the ECG (electrocardiogram) signal and respiration are measured. It is observed that the mode significantly influences the physiological signals. In [26] an experiment is carried out to see how music affects brain activity. Two types of stimuli are used: generated music and classical music. Generated music is used so that familiarity with the piece does not affect the emotional state, since the participant cannot know it beforehand. Classical music is used in search of strong affective responses. EEG and FMRI (Functional Magnetic Resonance Imaging) measurements are taken, and it is observed that the musical stimulus affects the asymmetry in the responses of the two cerebral hemispheres. In addition to physiological measurements, users must also respond to tests on their emotional state. In [15] the aim is to elicit awe in users by combining different music stimuli with Virtual Reality (VR); the results are obtained through various self-assessment tests. Physiological measurements are not performed. In addition, as the elicitation of awe also depends on the personality of the subjects, two additional scales are used: the first measures the predisposition to experience positive emotions and the second measures musical preferences. In [17] an experiment is carried out with 12 people in order to find out the relationship between the EEG signal and emotions. The asymmetry in the value of the EEG signal and its relationship with emotions are studied. To elicit emotions by imitation, images from the FACS (Facial Action Coding System) are used, accompanied by music. In [18] the objective is to elicit emotions in the subjects using movie clips; the clips are chosen to elicit emotional states of happiness, sadness, fear and relaxation. To modify the emotion induced by the scene, music and the color of the ambient light are used for two minutes.

3. Materials and Methods

3.1. Design of the study

The first part of the study will be descriptive and observational, and will seek to choose those parameters (dependent variables) that adequately characterize the emotional state of the subjects. The second part of the study will be analytical, and will determine the influence of music on changes in the selected dependent variables. In this second part, a single-case study of type AB will be conducted for each subject: in condition A the rehabilitation exercise is carried out in silence, while in condition B it is accompanied by motivating music.

3.2. Participants

The participants, as already indicated, belong to the ASPACE association (adults) and the Director Mercedes Sanromá Special Education School (children). The study will involve 40 subjects: 20 adults and 20 children. The following criteria are proposed for this group of participants.

3.2.1. Inclusion criteria

People with a recognized disability, caused by a disease or permanent health situation.

Be between 2 and 65 years of age.

Have a moderate-to-low degree of functional ability in the mobility domain, measured through the items related to motor function of the International Classification of Functioning, Disability and Health (ICF) [16] in the case of adults, and through the Gross Motor Function Classification System (GMFCS) [19] and the Manual Ability Classification System (MACS) [20] in the case of children. In [21] a study of this population was conducted and the two scales were homogenized to measure adults and children in the same way.

People with motivation to use technologies and/or who can use wearable devices during the intervention time.

People who come weekly to the collaborating centers.

People without hearing impairments.

3.2.2. Exclusion criteria

Presenting a health situation that is incompatible with the use of technology (e.g., use of a respirator, pacemaker, sensitive skin...).

Have a very limited cognitive capacity, which prevents the participant from following the instructions for the proper use of assistive technology (measured through the related items of the ICF scale in adults and the Communication Function Classification System (CFCS) [22] in children).

Not having adequate human support.

People with hearing impairments.

3.2.3. Recruitment of participants

All people who meet the inclusion criteria will be invited to participate in the study. The recruitment of participants will be carried out by the TAIS (Technology for Assistance Integration and Health) research group of the University of Seville, through contact with the collaborating centers. In all cases, a cover letter will be delivered to the managers or directors of the centers with all the information about the project. The participants and/or their representatives will sign the informed consent form.

3.3. Instruments of measurement

3.3.1. Tests and questionnaires

The instruments comprise questionnaires on the baseline state of the subjects and tests to be used before and after the intervention. It is important to know the quality of life and emotional situation of the subjects to be measured, with the aim of subsequently contrasting them with the physiological measurements taken. There are two populations, children and adults. In addition, the cognitive status of the subjects may vary. All of these factors must be taken into account when applying the measurement instruments. Sometimes the questionnaires will have to be completed by the family or by the professionals of the centers.

3.3.2. Devices for recording physiological data

During the sessions, data provided by the wearable devices developed in the context of this project will be recorded.

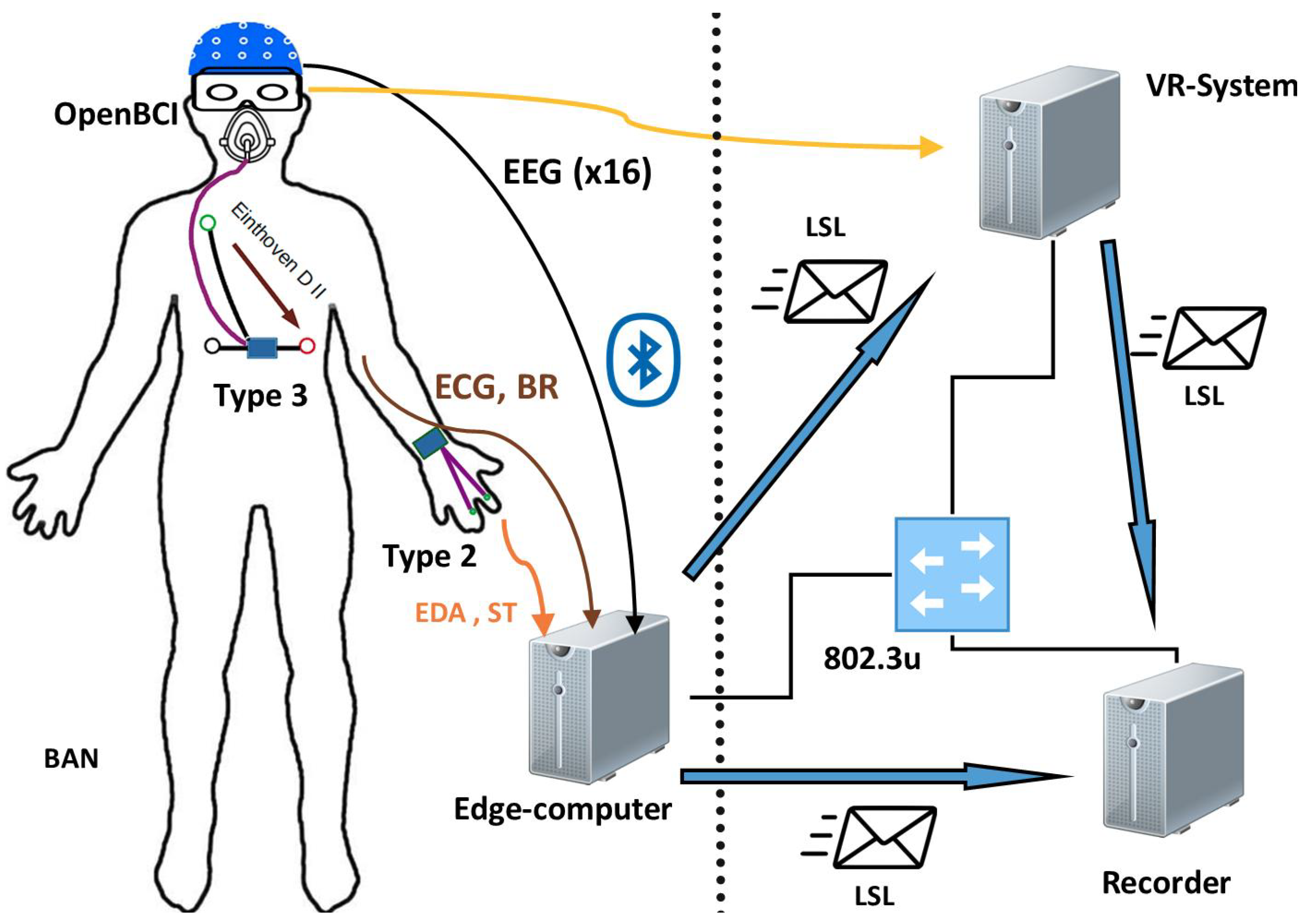

Figure 1 shows a diagram of the sensors used.

These are 4 devices that distribute sensors throughout the body: 4 inertial units on the wrist, ankle, chest and head; a wrist temperature sensor; a sensor of electrical activity of the skin (EDA) in the phalanges (it is measured by dry electrodes placed on the hearten and hypothenar eminences of the dominant hand); an electrocardiography sensor on the chest and 8 channels of electroencephalography (EEG). In addition, the ambient temperature can be recorded.

One of the wearables is the OpenBCI device

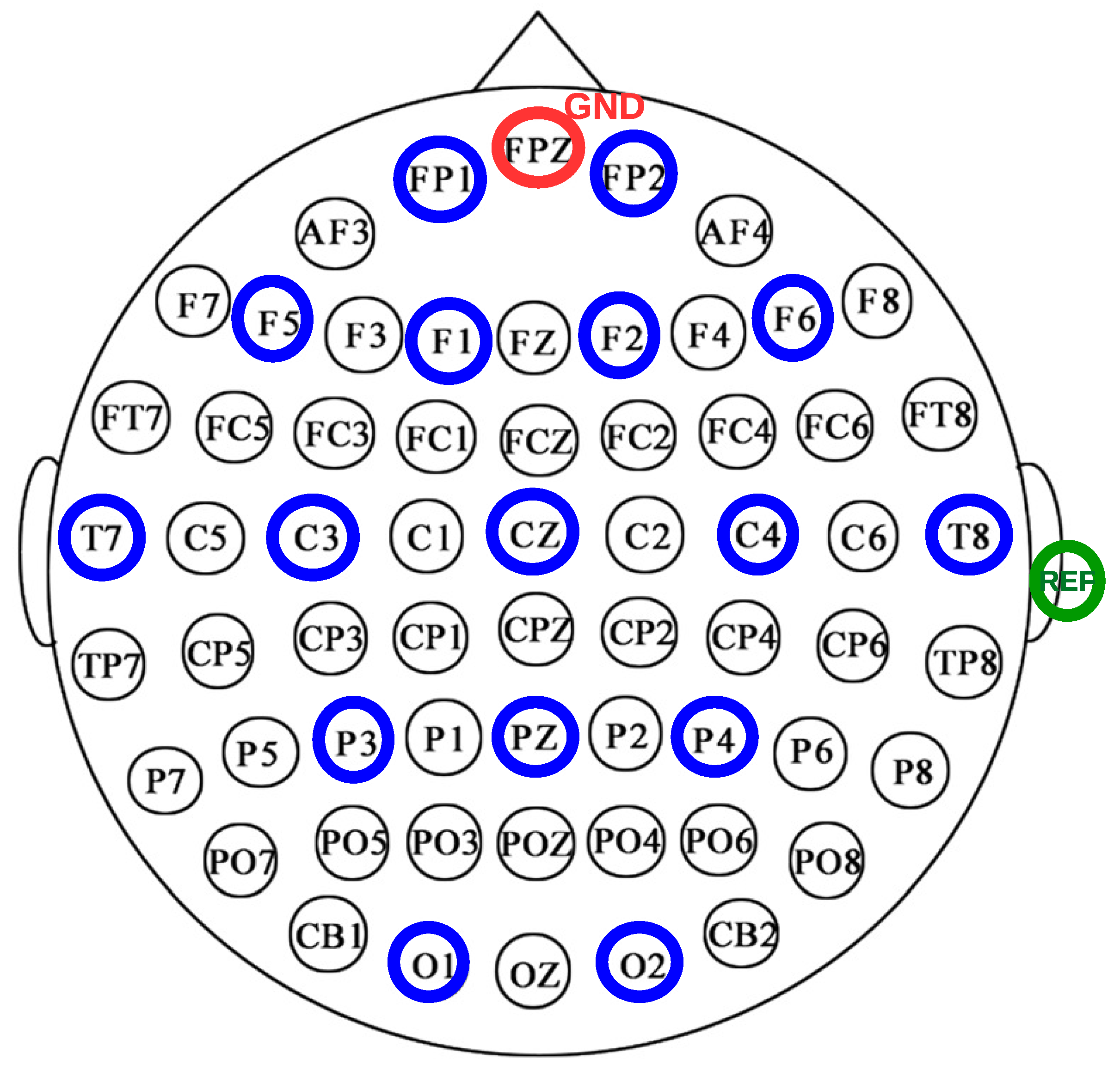

2. It is an 16-channel bioamplifier for EEG measurement, it measures at a maximum rate of 250 Hz and includes an inertial unit that measures at 25 Hz. In

Figure 2 electrode position is indicated.

The following dependent variables will be analyzed:

Average kinetic energy measurements (in joules) using inertial sensors. They provide information about the energy expenditure that the activities entail.

Instantaneous Heart Rate (HR), derived from the RR interval in seconds. A wearable placed on the chest with Ag/AgCl electrodes for ECG is used. The position of each R wave is determined using an appropriate algorithm, and the time difference between two consecutive R waves is then used to calculate HR.

The ratio between the low frequency (LF) and high frequency (HF) components (LF/HF) of HRV (Heart Rate Variability) measured from the ECG. The ratio shows the balance between the SNS (Sympathetic Nervous System) and the PNS (Parasympathetic Nervous System).

Temporal parameters of HRV. HRV can be measured using temporal parameters such as: SDNN, the standard deviation of NN intervals; RMSSD, the root mean square of successive differences between normal heartbeats; and pNN50, the percentage of successive RR intervals that differ by more than 50 ms.

Tonic Skin Conductance Level (SCL). This signal is the slowly varying tonic background of the EDA.

Parameters of the Phasic Skin Conductance Response (SCR). This signal consists of the rapid phasic components of the EDA.

Fractal dimension of EEG. EEG signals are highly complex and dynamic in nature. Fractal dimension (FD) is emerging as a novel feature for quantifying their complexity. We will use Higuchi’s algorithm.

Spectral Entropy (SE) of EEG. SE can be used for computing EEG complexity. To do that, the power spectral density (PSD) must be obtained as a first step. After normalizing the PSD by its total power, which can be viewed as a conversion to a probability density function, the classical Shannon entropy for information systems is calculated.

EEG coherence. The interactions between neural systems operating in each frequency band are estimated by means of the EEG coherence. While neural synchronization influences EEG amplitude, the coherence between the signals captured by a pair of electrodes refers to the consistency and stability of the signal amplitude and its phase. Two connected brain areas should show a signal delay in the time domain that is measured as a phase shift in the frequency domain.
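Two of the parameters listed above can be sketched in a few lines. The following is a minimal illustration, not the project's processing pipeline: it assumes that the RR intervals have already been extracted and cleaned (in milliseconds) and that a PSD estimate is already available as a list of non-negative bin powers. The function names `hrv_time_features` and `spectral_entropy` are hypothetical, and dividing the entropy by the logarithm of the number of bins is an optional convention assumed here to bound the result between 0 and 1.

```python
import math


def hrv_time_features(rr_ms):
    """Time-domain HRV features from consecutive RR (NN) intervals in ms."""
    if len(rr_ms) < 3:
        raise ValueError("at least three RR intervals are needed")
    n = len(rr_ms)
    mean_rr = sum(rr_ms) / n
    # SDNN: sample standard deviation of the intervals.
    sdnn = math.sqrt(sum((x - mean_rr) ** 2 for x in rr_ms) / (n - 1))
    # Successive differences between adjacent intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    pnn50 = 100.0 * sum(abs(d) > 50 for d in diffs) / len(diffs)
    return {
        "hr_from_mean_rr_bpm": 60000.0 / mean_rr,  # 60 000 ms per minute
        "sdnn_ms": sdnn,
        "rmssd_ms": rmssd,
        "pnn50_pct": pnn50,
    }


def spectral_entropy(psd, normalize=True):
    """Shannon entropy (bits) of a PSD treated as a probability distribution."""
    total = sum(psd)
    if total <= 0:
        raise ValueError("the PSD must contain positive power")
    # Dividing by the total power makes the bins sum to one.
    probs = [p / total for p in psd if p > 0]
    entropy = -sum(p * math.log2(p) for p in probs)
    if normalize and len(psd) > 1:
        entropy /= math.log2(len(psd))  # assumed convention: scale to [0, 1]
    return entropy


if __name__ == "__main__":
    print(hrv_time_features([800, 810, 790, 860, 800]))
    print(spectral_entropy([1.0, 1.0, 1.0, 1.0]))
```

A flat spectrum gives the maximum entropy and a single dominant bin gives zero, which is why SE is read as a measure of signal complexity.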

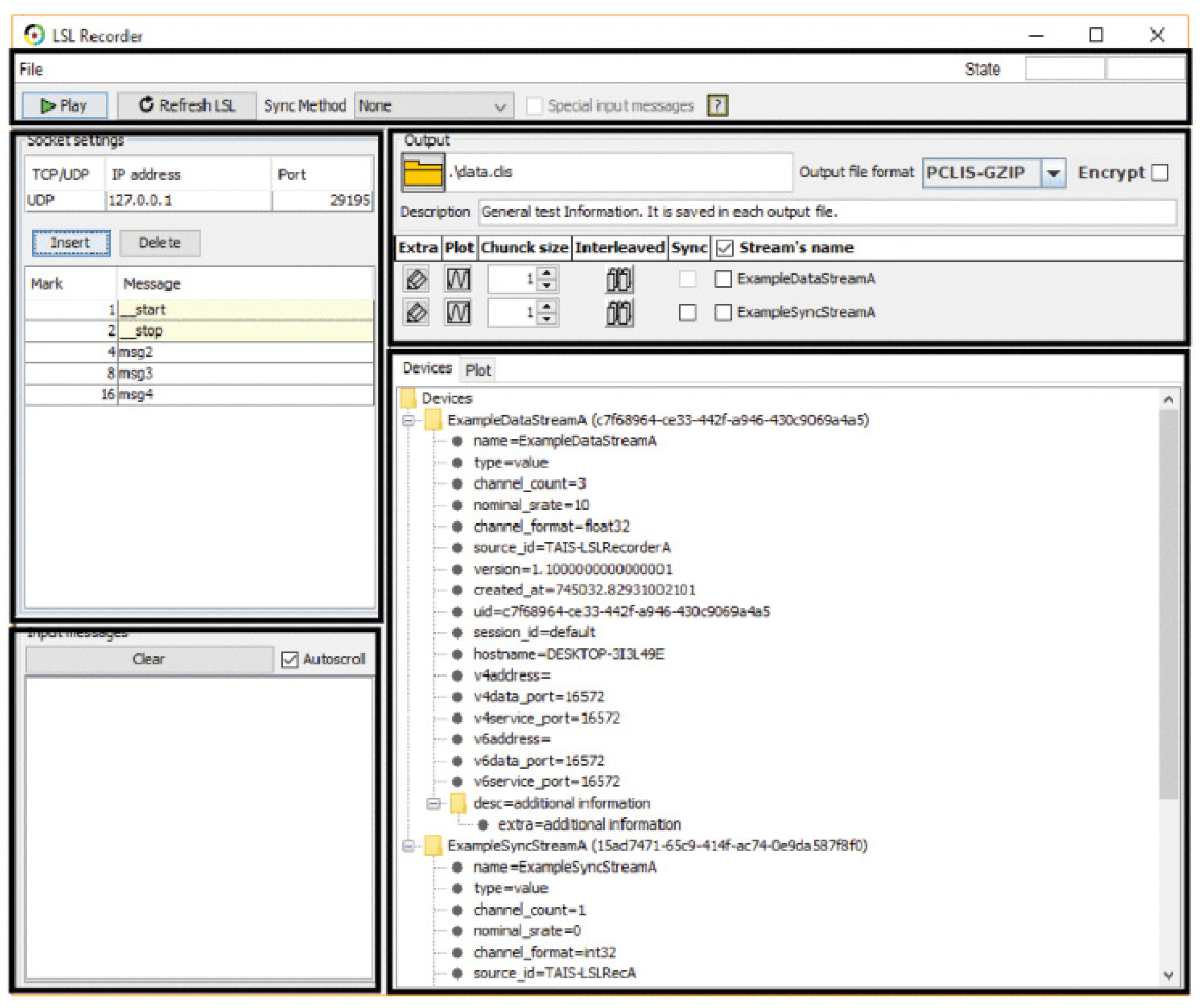

The data are recorded in a synchronized manner thanks to a software application (Figure 3) designed for this purpose [23].

3.3.3. Contexts and measurement frequencies

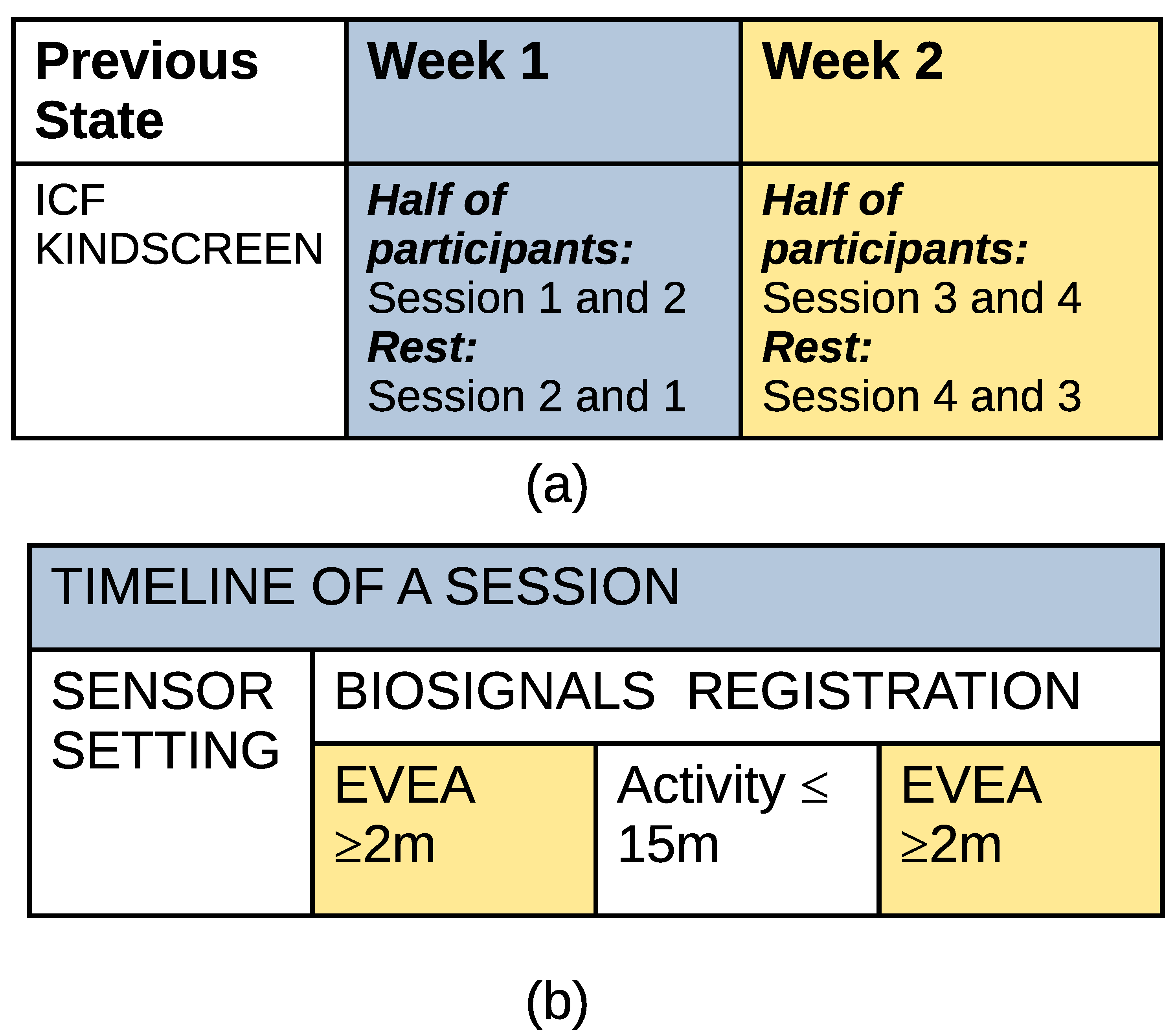

Four sessions will be held, divided into two parts:

Selection of dependent variables. The aim of the first two sessions is to obtain a reference level for the physiological variables in activities that provoke pleasant and unpleasant emotions, so that they can be used as a reference in Part 2. The purpose is to avoid dependence on the EVEA test, since the subjects will not always be able to express their emotions. The EVEA test is used as a reinforcer for a possible automatic classifier.

Session 1: measurement of parameters when the subject is engaged in a pleasurable activity of daily life in the center.

Session 2: measurement of parameters when the subject is engaged in an uncomfortable activity of daily life in the center.

The activities for these sessions will be determined in conversation with the caregiver, since they are particular to each subject.

Half of the participants will start with session 2 and then do session 1, while the rest will follow the reverse order.
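The counterbalancing described above can be illustrated with a short sketch. It assumes a random split of the participants into two halves; the protocol does not state how the halves are chosen, so the shuffling, the seed and the function name `assign_session_order` are illustrative assumptions.

```python
import random


def assign_session_order(participant_ids, seed=0):
    """Map each participant to the order of sessions 1 and 2.

    Half of the participants receive (1, 2) and the rest (2, 1).
    The random split and fixed seed are assumptions for reproducibility.
    """
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)
    half = len(ids) // 2
    return {
        pid: (1, 2) if index < half else (2, 1)
        for index, pid in enumerate(ids)
    }


if __name__ == "__main__":
    orders = assign_session_order(range(1, 41))
    print(sum(order == (2, 1) for order in orders.values()))
```

With an odd number of participants the extra person falls in the group that starts with session 2.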

Effect of music on the dependent variables during the performance of rehabilitation exercises

Session 3: measurement of the subject's parameters during the performance of rehabilitation activities in the center.

Session 4: measurement of the subject's parameters during rehabilitation activities in the center. The session will be accompanied by music chosen according to the preferences of the subject.

The pleasant motivational music to be played during session 4 will be selected by each user according to their musical preferences or, failing that, by their caregiver. The rehabilitation activity should be a light exercise for the user, such as pedaling, limb extension, or any other that is measurable through inertial units. The specific activity that each user will have to perform will be determined by the medical staff and/or physiotherapist of each center, as it will be limited by the movement capacity of each participant.

Although each session has a different theme, the structure of the sessions is similar. First, the sensors are placed on the volunteer. Once it has been verified that the data are being collected adequately, data recording begins while the user answers the EVEA test. This first part of the recording, which should last at least two minutes, will be used as the baseline for the session. After that, the activity will start; it will not last more than 15 minutes. To finish, a new EVEA test will be filled in, with identical restrictions to the first test. With these initial and final baselines, the change in the measurements over each session can be detected, in addition to analyzing the evolution of the subject during the activity. For each user, the protocol should be completed in two weeks: sessions 1 and 2 during the first week, and sessions 3 and 4 during the second week.

Figure 4 shows a diagram of the described protocol.

4. Statistical methodology

4.1. Sample size

The population of the centers is approximately 200 individuals (100 from each center, adults and children). According to Cochran’s formula for estimating the sample size, assuming the worst-case scenario of p = q = 0.5 (maximum variance), a confidence level of 95% and a margin of error of 15%, the sample size would be 36 individuals. Therefore, a sample with 18 adults and 18 children seems adequate.
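The figure of 36 can be reproduced with a short calculation. The sketch below assumes that Cochran's formula is applied together with the usual finite population correction for N = 200, which the text does not state explicitly but which is consistent with the reported result; the function name `cochran_sample_size` is hypothetical.

```python
import math
from statistics import NormalDist


def cochran_sample_size(population, margin, confidence=0.95, p=0.5):
    """Cochran sample size with finite population correction (assumed)."""
    # Two-sided critical value, about 1.96 for a 95% confidence level.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Sample size for an infinite population.
    n0 = z ** 2 * p * (1 - p) / margin ** 2
    # Correction for a finite population of the given size.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


if __name__ == "__main__":
    print(cochran_sample_size(population=200, margin=0.15))  # 36
```

Without the correction the uncorrected value is about 42.7, so the finite population term is what brings the requirement down to 36.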

4.2. Data analysis

In general, numerical variables will be expressed as mean (M) and standard deviation (SD), including range, minimum and maximum.

For the first part of the study, the dependent variables obtained in sessions 1 and 2 will be compared for each subject using the permutation test of the difference in means between different data windows. Those dependent physiological variables that show significant differences for most of the subjects will be chosen. Changes in EVEA test responses between sessions 1 and 2 will serve as reinforcement for detecting the level of emotional change.
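A permutation test of the difference in means can be sketched as follows. This is an illustrative two-sided Monte Carlo version, assuming that each session has been reduced to a list of per-window feature values and that the windows can be treated as exchangeable under the null hypothesis; the function name, the number of permutations and the seed are assumptions.

```python
import random


def permutation_test_mean_diff(x, y, n_permutations=10000, seed=0):
    """Two-sided Monte Carlo permutation test for a difference in means."""
    rng = random.Random(seed)
    n_x = len(x)
    pooled = list(x) + list(y)
    n_y = len(pooled) - n_x
    observed = abs(sum(x) / n_x - sum(y) / n_y)
    hits = 0
    for _ in range(n_permutations):
        # Relabel the windows at random and recompute the statistic.
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_x]) / n_x - sum(pooled[n_x:]) / n_y)
        if diff >= observed - 1e-12:  # tolerance for floating-point ties
            hits += 1
    # The +1 terms count the observed labelling as one permutation.
    return (hits + 1) / (n_permutations + 1)


if __name__ == "__main__":
    pleasant = [0.1 * i for i in range(10)]
    unpleasant = [5 + 0.1 * i for i in range(10)]
    print(permutation_test_mean_diff(pleasant, unpleasant))
```

Overlapping or strongly autocorrelated windows weaken the exchangeability assumption, so window length and spacing would need to be chosen with that in mind.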

The results of the EVEA test will also be correlated with the base state questionnaires, to determine the existence of some type of dependency between the base level of EVEA and its variation with respect to the base state. This dependence will be explored with the Spearman or Pearson correlation test.

For the second part, the variation in EVEA for sessions 3 and 4 will first be analyzed to find out whether there is a significant dependence on the independent variable (music) between stages A and B for all the participants. We will use the Kruskal-Wallis test, which does not require any assumption regarding the normality and homoscedasticity of the samples. These variations will also be correlated with the quality of life scales (ICF or GMFCS, MACS, CFCS, KIDSCREEN), as was done with the data from the first part.

For the physiological variables and the average kinetic energy, it will be determined if their variations are significant between A and B by means of the Kruskal-Wallis test applied to the group of subjects, but also at the individual level by means of the permutation test.
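For the group-level comparison between conditions A and B, the Kruskal-Wallis statistic can be sketched for the two-group case as below. This is a minimal illustration with a tie correction and the chi-square approximation with one degree of freedom, which is only approximate for small samples; the function names are hypothetical, and an established statistics library would normally be used in practice.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def kruskal_wallis_two_groups(a, b):
    """Return (H, p) for two groups using the chi-square approximation."""
    pooled = list(a) + list(b)
    n = len(pooled)
    ranks = average_ranks(pooled)
    rank_sum_a = sum(ranks[:len(a)])
    rank_sum_b = sum(ranks[len(a):])
    h = 12 / (n * (n + 1)) * (
        rank_sum_a ** 2 / len(a) + rank_sum_b ** 2 / len(b)
    ) - 3 * (n + 1)
    # Tie correction factor.
    counts = {}
    for value in pooled:
        counts[value] = counts.get(value, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    if correction == 0:  # all values identical
        return 0.0, 1.0
    h = max(h / correction, 0.0)
    # Survival function of a chi-square variable with 1 degree of freedom.
    p = math.erfc(math.sqrt(h / 2))
    return h, p


if __name__ == "__main__":
    silence = [1, 2, 3, 4, 5]
    music = [6, 7, 8, 9, 10]
    print(kruskal_wallis_two_groups(silence, music))
```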

The significance of the tests will be set at three levels, indicated with * (p<0.05), ** (p<0.01) and *** (p<0.001).

5. Conclusions

People with CP have particular difficulties in recognizing both their mood and fatigue. This means that in rehabilitation routines or in the performance of physical activity, it is sometimes difficult to properly program the exercise so that they find it motivating and get the most out of the time devoted to such activity.

There are questionnaires that allow the measurement of both the emotional state [24] and the fatigue state [25], but a user with CP does not always have the cognitive and communication skills needed to rely on these questionnaires.

It is necessary to resort to more objective methods, and the measurement of biosignals is a good alternative. But in order to rely on biosignals, it is necessary to study whether the changes generated in them are really significant. To do this, we must put the subjects in contexts where we can be sure what their mood is going to be. These contexts are activities that are part of their daily routines and for which their response is known from experience. The caregivers and the family environment should support the choice of the routines in which the biosignals will be recorded. Whenever possible, these measures can be reinforced with tests of emotions and physical state, completed by the subjects or by their caregivers when the subject does not have the necessary physical or cognitive abilities. In addition, signal processing should be performed to extract parameters, which should then be studied statistically to assess their robustness in determining a state. It is also important to assess how the fact that the person is in motion affects the recording. Appropriate techniques should be studied to eliminate the noise produced by movement so that it does not affect the extraction of information about the subject's state.

In addition, we want to evaluate how music can be a motivational factor that improves the quality and time dedicated to physical exercise. Therefore, in the proposed experimental protocol, an analytical study of type AB is introduced in the second part. In the third part of the study of the state of the art, works are presented whose common denominator is that musical parameters can induce emotions and the measurement of the change in them is mainly done with the EEG signal. The proposed protocol will take into account these factors for the choice of music and will introduce the measurement of other types of additional physiological signals.

Author Contributions

All authors have assessed the study design, participated in revising the manuscript, read the final manuscript, and accepted it before submission.

Funding

This research was funded by the Spanish Ministry of Science and Innovation, State Plan 2017-2020: Challenges - R&D&I Projects, with grant code PID2019-104323RB-C32.

Institutional Review Board Statement

This research was approved by the Ethics Committee A Coruña-Ferrol with ID 2020/597 and by the boards of the participating centers after they had been duly informed of its objectives.

Informed Consent Statement

Informed consent will be obtained from all subjects involved in the study.

Data Availability Statement

The data obtained in this research will be hosted in the repository of the University of Seville (https://idus.us.es/).


Conflicts of Interest

The authors declare that they have no competing interests.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| AAI | Augmentative Affective Interface |
| AI | Artificial Intelligence |
| AIR4DP | Artificial Intelligence and Robotic Assistive Technology devices for Disabled People |
| AMIGOS | A dataset for Multimodal research of affect, personality traits and mood on Individuals and GrOupS |
| ASPACE | Association of People with Cerebral Palsy of Seville |
| CFCS | Communication Function Classification System |
| CP | Cerebral Palsy |
| ECG | Electrocardiogram |
| EDA | Electrodermal Activity |
| EEG | Electroencephalography |
| EVEA | Scale for Mood Assessment |
| FACS | Facial Action Coding System |
| FD | Fractal Dimension |
| FMRI | Functional Magnetic Resonance Imaging |
| GMFCS | Gross Motor Function Classification System |
| GSR | Galvanic Skin Response |
| HF | High Frequency |
| HR | Heart Rate |
| HRV | Heart Rate Variability |
| ICF | International Classification of Functioning, Disability and Health |
| LF | Low Frequency |
| M | Mean |
| MACS | Manual Ability Classification System |
| pNN50 | Percentage of successive RR intervals that differ by more than 50 ms |
| PNS | Parasympathetic Nervous System |
| PSD | Power Spectral Density |
| RMSSD | Root mean square of successive differences between normal heartbeats |
| SCL | Skin Conductance Level |
| SCR | Skin Conductance Response |
| SD | Standard Deviation |
| SDNN | Standard deviation of NN intervals |
| SE | Spectral Entropy |
| SNS | Sympathetic Nervous System |
| TAIS | Technology for Assistance Integration and Health |
| VR | Virtual Reality |

References

- L. Luan; W. Xiao; K. Hwang; MS Hossain; G. Muhammad; A. Ghoneim. MEMO Box: Health Assistant for Depression With Medicine Carrier and Exercise Adjustment Driven by Edge Computing. IEEE Access, 2020.

- R. Martinez; A. Salazar-Ramirez; A. Arruti; E. Irigoyen; JI Martin; J. Muguerza. A Self-Paced Relaxation Response Detection System Based on Galvanic Skin Response Analysis. IEEE Access, 2019.

- Can YS, Chalabianloo N., Ekiz D., Fernandez-Alvarez J., Repetto C., Riva G., Iles-Smith H., Ersoy C. Real-Life Stress Level Monitoring Using Smart Bands in the Light of Contextual Information. IEEE Sensors Journal. 2020.

- Rincon JA, Costa A., Novais P., Julian V., Carrascosa C. ME3CA: A cognitive assistant for physical exercises that monitors emotions and the environment. Sensors, 2020.

- Correa, JAM; Abadi, MK; Sebe, N.; Patras, I. Amigos: a dataset for affect, personality and mood research on individuals and groups. IEEE Trans. Affect. Comput. 2018.

- Rincon JA, Costa A., Novais P., Julian V., Carrascosa C. An affective personal trainer for elderly people. 3rd Workshop on Affective Computing and Context Awareness in Ambient Intelligence, AfCAI 2019; Universidad Politecnica de Cartagena; Cartagena; Spain; 11 November 2019 through 12 November 2019. 2019.

- Price, E. Moore G., Galway L., Linden M. Towards mobile cognitive fatigue assessment as indicated by physical, social, environmental, and emotional factors. IEEE Access. 2019.

- Qureshi, S. Hagelbäck J., Iqbal SMZ, Javaid H., Lindley CA Evaluation of classifiers for emotion detection while performing physical and visual tasks: Tower of Hanoi and IAPS. Intelligent Systems Conference 2018.

- Belmonte, S. Montoya P.; González-Roldán AM, Riquelme I. Reduced brain processing of affective pictures in children with cerebral palsy. Research in Developmental Disabilities. 2019.

- Albiol-Pérez, S. Cano S., Da Silva MG, Gutierrez EG, Collazos CA, Lombano JL, Estellés E., Ruiz MA A novel approach in virtual rehabilitation for children with cerebral palsy: Evaluation of an emotion detection system. Advances in Intelligent Systems and Computing. 2018.

- C. Rosales; L. Jácome; J. Carrión; C. Jaramillo; M. Palma. Computer vision for detection of body expressions of children with cerebral palsy. 2017 IEEE Second Ecuador Technical Chapters Meeting (ETCM).

- Kalansooriya, P. Ganepola GAD, Thalagala TS. Affective gaming in real-time emotion detection and Smart Computing music emotion recognition: Implementation approach with electroencephalogram. Proceedings - International Research Conference on Smart Computing and Systems Engineering, SCSE 2020.

- Labbé, C. Trost W., Grandjean D. Affective experiences to chords are modulated by mode, meter, tempo, and subjective entrainment. Psychology of Music. 2020.

- Daly, I. Williams D., Hwang F., Kirke A., Miranda ER, Nasuto SJ. Electroencephalography reflects the activity of sub-cortical brain regions during approach-withdrawal behaviour while listening to music. Scientific Reports, Volume 9, Issue 1, 1 December 2019, Article number 9415.

- Chirico, A. Gaggioli A. Virtual-reality music-based elicitation of awe: When silence is better than thousands sounds. Lecture Notes of the Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering, LNICST. Vol. 288. 2019.

- WHO. International Classification of Functioning, Disability and Health: ICF; World Health Organization: Geneva, Switzerland, 2001; pp. 1–315.

- Aldulaimi, M.A. A real time emotional interaction between EEG brain signals and robot. Proceedings - 2017 IEEE 5th International Symposium on Robotics and Intelligent Sensors, IRIS 2017.

- Tasmania del Pino-Sedeño, Wenceslao Peñate y Juan Manuel Bethencourt. La escala de valoración del estado de ánimo (EVEA): análisis de la estructura factorial y de la capacidad para detectar cambios en estados de ánimo. Análisis y Modificación de Conducta 2010, Vol. 36, No 153-154, 19-32.

- Palisano, R.; Rosenbaum, P.; Walter, S.; Russell, D.; Wood, E.; Galuppi, B. Development and reliability of a system to classify gross motor function in children with cerebral palsy. Dev. Med. Child Neurol. 1997, 39, 214–223.

- Jeevanantham, D.; Dyszuk, E.; Bartlett, D. The Manual Ability Classification System. Pediatr. Phys. Ther. 2015, 27, 236–241.

- Molina Cantero, Alberto Jesus, Merino Monge, Manuel, Castro García, Juan Antonio, Pousada Garcia, Thais, Valenzuela Muñoz, David, et al. A Study on Physical Exercise and General Mobility in People with Cerebral Palsy: Health through Costless Routines. In: International Journal of Environmental Research and Public Health. 2021. Vol. 18. pp. 1-22.

- Hidecker, M.J.C.; Paneth, N.; Rosenbaum, P.L.; Kent, R.D.; Lillie, J.; Eulenberg, J.B.; Chester, K.; Johnson, B.; Michalsen, L.; Evatt, M.; et al. Developing and validating the Communication Function Classification System for individuals with cerebral palsy. Dev. Med. Child Neurol. 2011, 53, 704–710.

- Merino Monge, Manuel, Molina Cantero, Alberto Jesus, Castro García, Juan Antonio, Gómez González, Isabel María. An Easy-to-use Multi-source Recording And Synchronization Software for Experimental Trials. In: IEEE Access. 2020. pp. 1-17.

- Bradley, M.M., Lang, P.J. Measuring emotion: the Self-Assessment Manikin and the Semantic Differential. J. Behav. Ther. Exp. Psychiatry 25(1), 49–59 (1994).

- E. Borg, G. Borg, K. Larsson, M. Letzter, B.-M. Sundblad. An index for breathlessness and leg fatigue. Scandinavian Journal of Medicine and Science in Sports 20 (4). 543-707 (2010).

- Daly I., Williams D., Hwang F., Kirke A., Miranda ER, Nasuto SJ. Electroencephalography reflects the activity of sub-cortical brain regions during approach-withdrawal behaviour while listening to music. Scientific Reports, Volume 9, Issue 1, 1 December 2019, Article number 941.


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).