Introduction

Although the term Artificial Intelligence (AI) was formally coined at Dartmouth College in 1956, at a conference of researchers from the fields of Mathematics, Electronics and Psychology convened to study the possibility of using computers to simulate human intelligence [7], the concept had already appeared in 1950, in a paper by Alan Turing (1912-1954), in which the famous English mathematician posed the question: "Can machines think?".

Background

The important dates in the history of Artificial Intelligence are the following:

1943-56 The birth of Artificial Intelligence

1943 McCulloch and Pitts propose a model of artificial neurons capable of learning and computing any computable function.

1950 Alan Turing, considered the father of Artificial Intelligence, devises the imitation test (Turing test) to identify intelligent machines.

1951 Minsky and Edmonds implement the first neural network, SNARC (Stochastic Neural Analog Reinforcement Calculator), which has 40 neurons and uses 3000 vacuum tubes.

1956-70 First Phase of Development of Artificial Intelligence.

1956 Meeting at Dartmouth College of researchers from the fields of Mathematics, Electronics and Psychology (McCarthy, Allen Newell, Herbert Simon, Marvin Minsky) with the common goal of studying the possibilities of using computers to simulate human intelligence.

1958 Creation of the Lisp language by McCarthy.

1966 After researching language understanding and machine perception, Weizenbaum creates ELIZA.

1970-80 Maturation of symbolic and computational Artificial Intelligence.

1971-77 Creation of the first expert systems: DENDRAL (1971), MYCIN (1975), Prospector (1977).

1972 a. Colmerauer and Roussel from the University of Marseille, in collaboration with R. Kowalski from the University of Edinburgh, complete the creation of the logic programming language PROLOG. b. Winograd delves into natural language understanding.

1975 & 1977 M. Minsky publishes book chapters on knowledge representation.

1976 Newell & Simon put forward the hypothesis that a physical symbol system possesses the necessary and sufficient means for intelligent action.

1970 - Development of evolutionary algorithms. Books with studies are published:

- 1973 by Rechenberg on the optimization of technical systems and the principles of biological evolution.

- 1975 by Holland on adaptability in natural and artificial systems.

- 1992 by Koza on Genetic Programming.

- 1995 by Fogel on Evolutionary Computation.

1980-90 Renaissance of Artificial Neural Networks.

1986 Rumelhart and McClelland describe the creation of computer simulations of perception.

1987 IEEE (Institute of Electrical and Electronics Engineers) 1st International Conference on Neural Networks.

1960 - Dealing with ambiguity in knowledge.

1965 & 1968 Zadeh is the first to introduce the terms "Fuzzy Sets" (1965) and "Fuzzy Algorithms" (1968).

1983 Sugeno formulates "Fuzzy Theory".

1992 1st IEEE Conference on Fuzzy Sets.

1990 - Creation, on the one hand, of computer systems and machines that are based on principles of Artificial Intelligence and tend to adapt to their environment (e.g. robots) and, on the other hand, of applications that tend to "learn" from their experience: intelligent agents, online search engines, pervasive intelligence.

Anticipated Outcomes

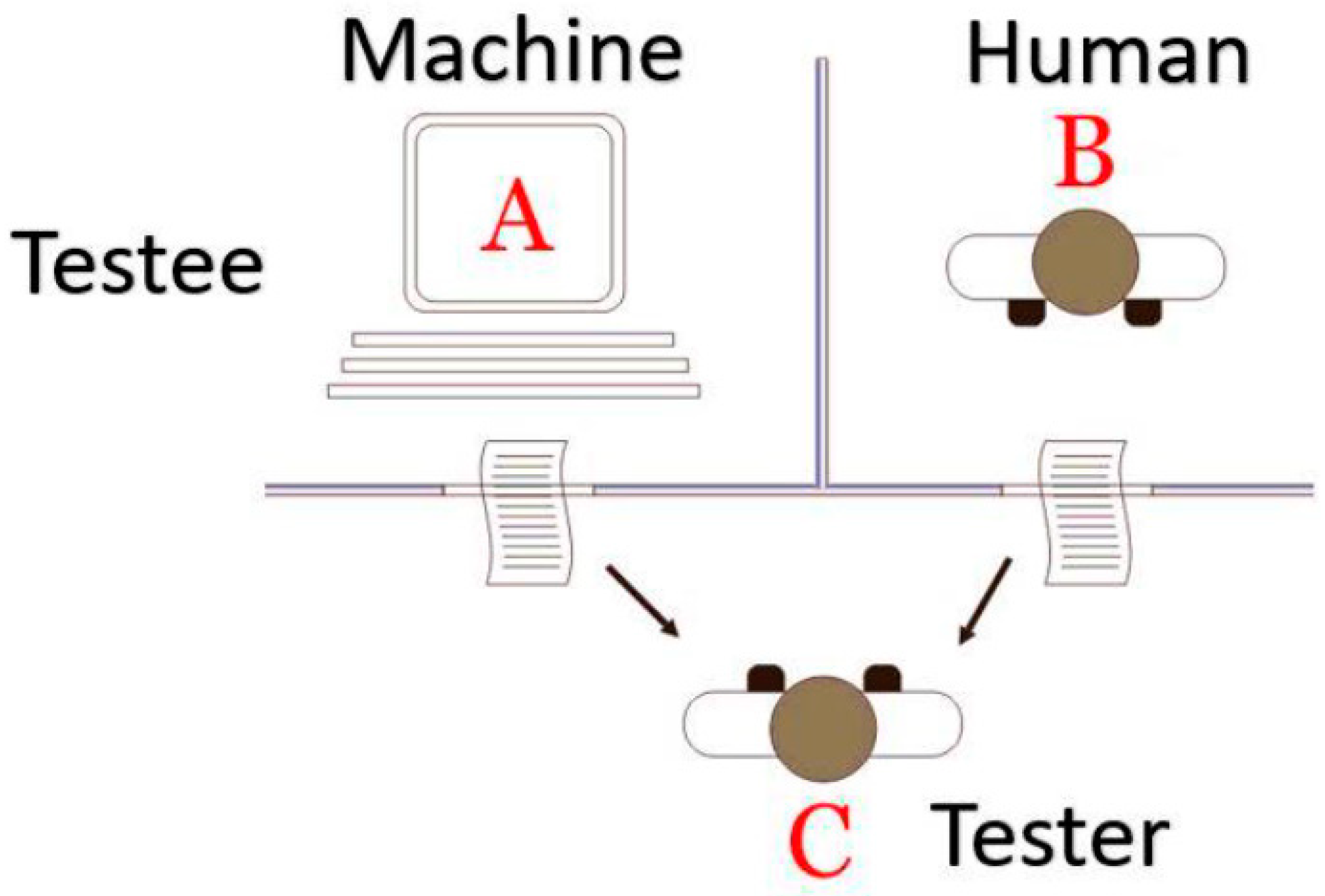

Turing proposed an imitation game that went down in the history of Computer Science as the Turing test (see Figure 1). Three parties take part in this game: an "interrogator", a human and a computing machine. The interrogator sits in a separate room from the human and the machine, asks them a series of questions, and receives the answers in such a way that it is impossible to perceive which of the two is answering each time.

From the way in which each answer is given, the interrogator must correctly infer whether it came from the human or from the machine. In 1950, in his work Computing Machinery and Intelligence [1], Turing wrote that by the year 2000 there would be machines so intelligent that the probability of the interrogator making a mistake, i.e. believing that an answer comes from a human when it actually comes from a machine, would be greater than 30%.
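Turing's prediction amounts to a threshold on the interrogator's error rate, which can be checked with simple arithmetic. The Python sketch below is only illustrative: the function names and the example counts are assumptions introduced here, not part of Turing's paper, and only the 30% threshold is taken from the text above.

```python
def misidentification_rate(fooled: int, total: int) -> float:
    """Fraction of judgements in which the machine was taken for a human."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= fooled <= total:
        raise ValueError("fooled must be between 0 and total")
    return fooled / total


def meets_turing_prediction(fooled: int, total: int, threshold: float = 0.30) -> bool:
    """True when the error rate is strictly greater than the threshold."""
    return misidentification_rate(fooled, total) > threshold


# Hypothetical counts, chosen only to illustrate the threshold.
print(meets_turing_prediction(10, 30))  # 10 of 30 judgements wrong (about 33%)
print(meets_turing_prediction(9, 30))   # 9 of 30 judgements wrong (exactly 30%)
```

The comparison is strict because the prediction speaks of a probability "greater than" 30%, so an error rate of exactly 30% does not satisfy it.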

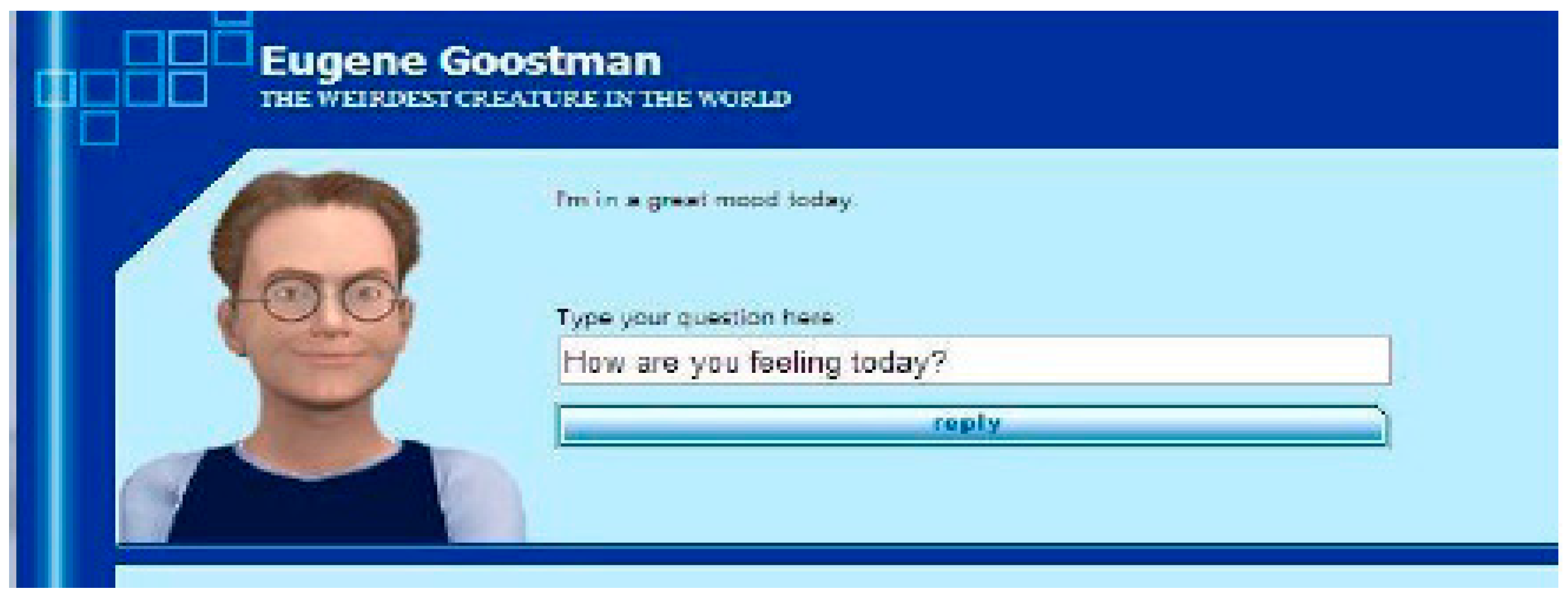

In June 2014, a computer program, Eugene Goostman, which had previously entered several similar competitions, was reported to be the first to pass the Turing test, at the 2014 event held at the Royal Society in London, after convincing 33% of the judges that it was human [6] (see also Figure 2).

Turing's prediction may not have been borne out by the date he mentioned, but many researchers in the field of Artificial Intelligence hold that the goal is attainable, given the progress of Computer Science.

In this direction, competitions based on the Turing test have been created, the best known being the Loebner Prize in Artificial Intelligence [2].

A similar definition is given by Dr. Elaine Rich, a noted computer scientist and author of several books on Artificial Intelligence dating back to the 1980s [3]:

“AI is the study of how to make computers do things which, at the moment, people do better”.

Since the definition is based on the current capabilities of computing machines, Artificial Intelligence will change subtly from year to year and dramatically from decade to decade. Its scientific goals are to determine which of the existing ideas about the representation and use of knowledge can provide answers to the eternal philosophical question:

"What is intelligence and how is it expressed?"

The models used by Artificial Intelligence, under either of the above two definitions, rely on complex electronic systems as their means of implementation. This is explained by the fundamental tradition of Western philosophy, according to which human mental capacity (thinking) is essentially a logical use of mental symbols, that is, ideas. The electronic computer, unlike other mechanical devices (e.g. a watch), can handle symbols in the form of "characters" once it has been properly programmed. According to symbol manipulation theory, intelligence depends only on the organization of a system and its function as a symbol manipulator, not on the material from which the symbols are made or on their exact form. It follows that modern computer technology is currently the appropriate basis for models with the potential to demonstrate some form of artificial intelligence. However, nothing precludes some other technology from proving more suitable for this purpose in the future. This consideration leads to the definition of so-called symbolic Artificial Intelligence:

"Symbolic Artificial Intelligence is the science that studies the nature of human intelligence and then how to reproduce it in computers using symbols."

The object of research in the field of symbolic Artificial Intelligence is the study of human thought processes (intelligence, experience) and the ways of representing them in machines (computers, robots, etc.). However, computer science methodologies alone are not enough. The analysis and investigation of human intelligent behavior, for example in problem solving and in the use and understanding of natural language, make critical contributions, and Psychology, Linguistics and Philosophy in particular can contribute important knowledge, as they are closely related to the abstract principles of mental organization.

The sciences mentioned above now constitute the unified branch of Cognitive Science, whose field of study is the cognitive capacity of intelligence and intellect, i.e. the handling of symbols.

Based on the above, a more complete definition of Artificial Intelligence is the following:

“Artificial intelligence makes machines capable of 'understanding' their environment, solving problems and acting towards a specific goal. The computer receives data (either already available or collected through sensors, e.g. a camera), processes it and responds based on it.”
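The receive, process and respond cycle in this definition can be sketched in a few lines of Python. The example is a deliberately simple assumption made for illustration: the thermostat goal, the function names `run_agent` and `thermostat_policy`, and the temperature values are all hypothetical and do not come from the source.

```python
from typing import Callable, Iterable, List


def run_agent(
    readings: Iterable[float],
    decide: Callable[[float], str],
) -> List[str]:
    """Receive each datum, process it, and respond with an action."""
    actions = []
    for reading in readings:             # receive data (stored or from a sensor)
        actions.append(decide(reading))  # process it and respond
    return actions


def thermostat_policy(temperature_c: float, target_c: float = 21.0) -> str:
    """Hypothetical goal: keep the temperature within 0.5 degrees of target_c."""
    if temperature_c < target_c - 0.5:
        return "heat"
    if temperature_c > target_c + 0.5:
        return "cool"
    return "idle"


# Pre-recorded readings stand in for a sensor here.
print(run_agent([18.0, 21.2, 24.5], thermostat_policy))
```

Separating the loop from the decision function keeps the data source (stored readings or a live sensor) independent of the goal the machine acts towards.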

Computational Intelligence

Computational Intelligence is the scientific field that offers techniques for solving difficult problems in which the machine simply imitates biological processes, without necessarily demonstrating general intelligence. The term Computational Intelligence was first used by J. Bezdek [4] in the International Journal of Approximate Reasoning. In that paper on artificial neural networks, Bezdek introduced the term ABCs to clarify the following:

A = Artificial: Non-Biological (Man-Made)

B = Biological: Physical + Chemical + (??) = Organic

C = Computational: Mathematics + Man-Made Machines

Marks (1993) [5] stated the following about the relationship between Computational Intelligence and Artificial Intelligence:

"Although they pursue similar goals, Computational Intelligence has emerged as an independent discipline, whose research field is somewhat different from that of Artificial Intelligence."

Computational intelligence is mainly characterized by properties that are typical of systems using machine learning techniques, such as:

adaptation

self-organization

learning-evolution
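As an illustration of adaptation and evolution, the following Python sketch implements a minimal (1+1) evolution strategy, one simple member of the family of evolutionary algorithms. It is a sketch under stated assumptions rather than a method described in this article: the toy objective `sphere`, the function name `one_plus_one_es`, and the step-size factors 1.5 and 0.9 are choices made here for demonstration.

```python
import random
from typing import Callable, List, Tuple


def sphere(x: List[float]) -> float:
    """Toy objective to minimise: sum of squares, with its optimum at the origin."""
    return sum(v * v for v in x)


def one_plus_one_es(
    objective: Callable[[List[float]], float],
    start: List[float],
    generations: int = 200,
    step: float = 0.5,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimal (1+1) evolution strategy with a simple adaptive step size."""
    rng = random.Random(seed)  # fixed seed so that runs are repeatable
    parent, parent_cost = list(start), objective(start)
    for _ in range(generations):
        # Mutation: perturb every coordinate with Gaussian noise.
        child = [v + rng.gauss(0.0, step) for v in parent]
        child_cost = objective(child)
        if child_cost <= parent_cost:
            # Selection: the child replaces the parent only if it is no worse.
            parent, parent_cost = child, child_cost
            step *= 1.5  # adaptation: widen the search after a success
        else:
            step *= 0.9  # adaptation: narrow the search after a failure
    return parent, parent_cost


best, cost = one_plus_one_es(sphere, [3.0, -4.0])
print(best, cost)
```

Because a child is accepted only when it is at least as good as its parent, the returned cost can never exceed the cost of the starting point. The step size changes in response to success or failure, which is the adaptive element of the sketch.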

Computational intelligence was used in pattern recognition applications. Today, it typically characterizes applications such as:

Research in the field of Artificial Intelligence

Although more than fifty years have passed since Artificial Intelligence was established as a research field and the world has changed technologically, research challenges remain, with the central goal of creating ever more intelligent machines.

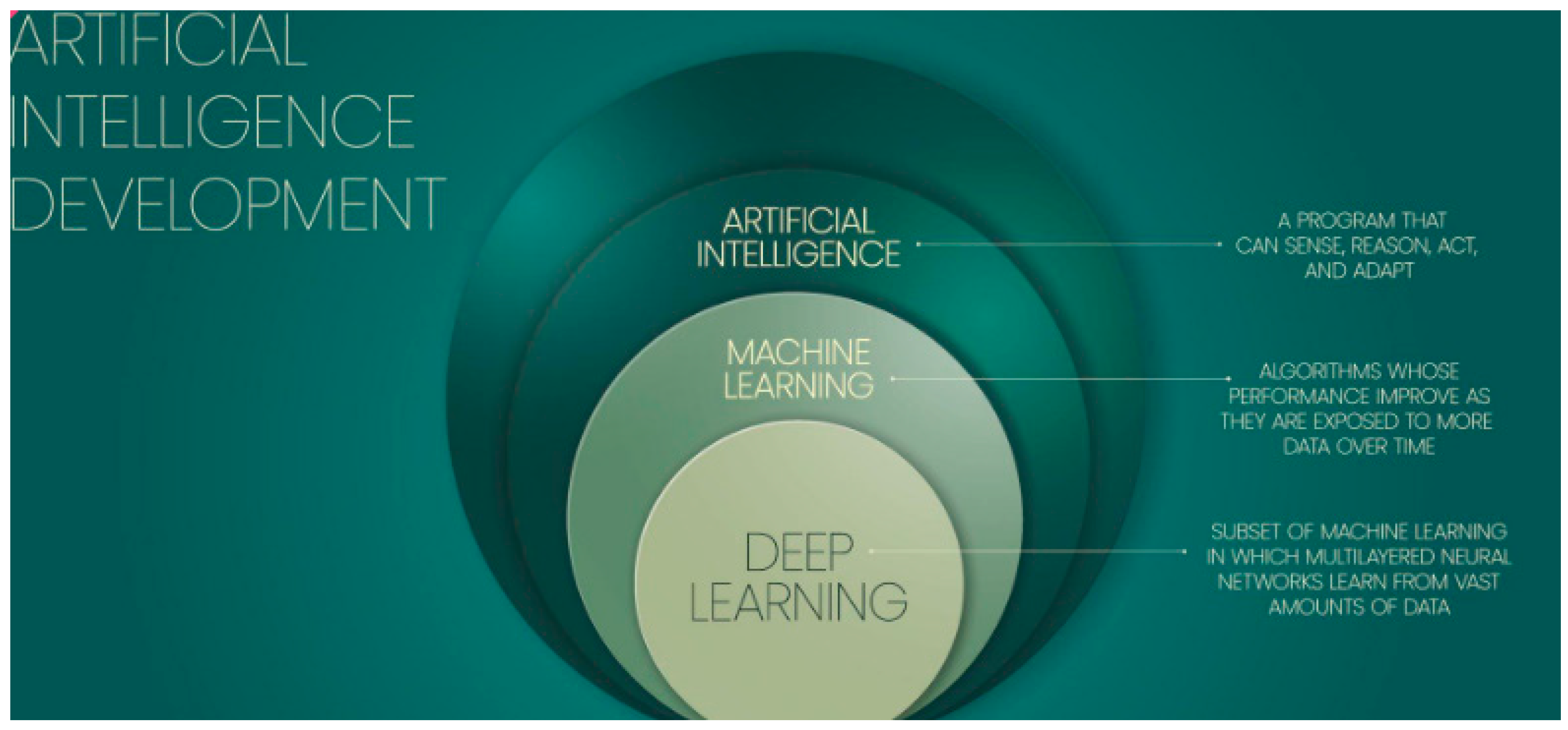

Figure 3 shows the three layers into which the field of basic research related to Artificial Intelligence could be divided.

References

- Turing, A. (1950). Computing Machinery and Intelligence, Mind, 59, 433-460. Available online: http://loebner.net/Prizef/TuringArticle.html (accessed on 20 January 2024).

- Loebner Prize in Artificial Intelligence. Available online: http://www.loebner.net/Prizef/loebner-prize.html (accessed on 20 January 2024).

- Rich, E. (2007). Automata, Computability and Complexity: Theory and Applications. Prentice Hall.

- Bezdek, J.C. (1998). Computational Intelligence Defined - By Everyone!. In: Kaynak, O., Zadeh, L.A., Türkşen, B., Rudas, I.J. (eds) Computational Intelligence: Soft Computing and Fuzzy-Neuro Integration with Applications. NATO ASI Series, vol 162. Springer, Berlin, Heidelberg.

- Marks, R. (1993). Intelligence: Computational versus Artificial. IEEE Transactions on Neural Networks, 4(5), 737-739. McCarthy, J. (1960). Recursive Functions of Symbolic Expressions and Their Computation by Machine (Part I). Communications of the ACM, 3(4), 184-195. Available online: http://wwwformal.stanford.edu/jmc/recursive.pdf.

- Schofield, J. (2014). Computer chatbot 'Eugene Goostman' passes the Turing test. Available online: http://www.zdnet.com/article/computer-chatbot-eugene-goostman-passes-the-turing-test/ (accessed on 20 January 2024).

- Minsky, M. (1975). A framework for representing knowledge. In P. H. Winston (Ed.), The Psychology of Computer Vision (pp. 211-277). New York: McGraw-Hill. Available online: http://courses.media.mit.edu/2004spring/mas966/Minsky1974Frameworkforknowledge.pdf (accessed on 21 January 2024).

- Triantafyllou, S.A. (2023). A Detailed Study on the 8 Queens Problem Based on Algorithmic Approaches Implemented in PASCAL Programming Language. In: Silhavy, R., Silhavy, P. (eds) Software Engineering Research in System Science. CSOC 2023. Lecture Notes in Networks and Systems, vol 722. Springer, Cham.

- Triantafyllou, S., & Georgiadis, C. K. (2022). Gamification of MOOCs and security awareness in corporate training.

- Triantafyllou, S. A., & Georgiadis, C. K. (2022). Gamification Design Patterns for user engagement. Informatics in Education, 21(4), 655-674.

- Triantafyllou, S.A. (2022). “WORK IN PROGRESS: Educational technology and knowledge tracing models,” 2022 IEEE World Engineering Education Conference (EDUNINE), pp. 1.

- Triantafyllou, S. (2018). Investigating the power of Web 2.0 Technologies in Greek Businesses. Available online: https://www.morebooks.de/shop-ui/shop/product/978-613-7-38230-1.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).