1. Introduction

Roads are crucial transportation infrastructure, and they deteriorate over time due to factors such as heavy vehicles, changing weather conditions, human activity, and the use of inferior materials. This deterioration affects economic development, travel safety, and social activities [1]. It is therefore important to assess road condition periodically to ensure longevity and safety, and to identify road damage, especially cracks, accurately and promptly so that repairs can be made before deterioration progresses.

Currently, pavement condition inspection technologies mainly include traditional manual measurements and automatic distress inspections, such as vehicle-mounted inspection [2]. Manual inspection relies primarily on visual discrimination, requiring personnel to travel along roads to identify damage points. This approach is slow, laborious, subjective, and less accurate [3]. Therefore, the development of automatic inspection technologies is crucial for quickly and accurately detecting and identifying cracks on the road. In recent years, intelligent crack inspection systems have gained increasing attention and application. Vehicle-mounted inspection and its intelligent systems [4], as in Guo et al. [5], utilize core components such as on-board high-definition image sensors, laser sensors, and infrared sensors. These components enable the acquisition of high-precision road crack data in real time. However, a vehicle-mounted system is expensive to configure and limited in scope, which makes it challenging to apply widely [2].

Notably, automatic pavement distress inspection has traditionally relied on image processing techniques such as Gabor filtering [6], edge detection, intensity thresholding [7], and texture analysis. Cracks are identified by analyzing changes in edge gradients and intensity differences relative to the background, and are then extracted through threshold segmentation [2]. However, these methods are strongly influenced by environmental factors, including lighting conditions, which can reduce their accuracy. Moreover, they are not effective when camera configurations vary, which makes their widespread use impractical [1,8]. Given the limitations of these traditional approaches, it is important to develop a cost-effective, fast, and independent method for the accurate detection of road cracks.

In recent years, significant advances in machine learning and deep learning have led to the emergence of automatic deep learning methods as accurate alternatives to traditional object recognition methods. These methods have shown great potential in visual applications and image analysis, particularly in road distress inspection [1,8]. Krizhevsky et al. [9] proposed a deep convolutional neural network (CNN) architecture for image classification, especially in the detection of distresses in asphalt pavements. Cao et al. [3] presented an attention-based crack network (ACNet) for automatic pavement crack detection. Extensive experiments on the CRACK500 dataset demonstrated that ACNet achieved higher detection accuracy than eight other methods. Tran et al. [10] used a supervised machine learning network called RetinaNet to detect and classify various types of cracks in asphalt pavements, including lane markers. The validation results showed that the trained network model achieved an overall detection and classification accuracy of 84.9%, considering both the crack type and severity level. Xiao et al. [11] proposed an improved model called C-Mask RCNN, which enhances the quality of crack region proposal generation through cascading multi-threshold detectors. Experimental results indicated that the mean average precision of the detection component of the C-Mask RCNN model was 95.4%, surpassing the conventional model by 9.7%. Xu K et al. [12] also proposed a crack detection method based on an improved Faster-RCNN for small cracks in asphalt pavements, even under complex backgrounds. The experiments demonstrated that the improved Faster-RCNN model achieved a detection accuracy of 85.64%. Xu X et al. [13] conducted experiments to evaluate the effectiveness of Faster R-CNN and Mask R-CNN and compared their performance in different scenarios. The results showed that Faster R-CNN exhibited superior crack detection accuracy compared to Mask R-CNN, while both models completed the detection task efficiently with a small training dataset. That study focuses on comparing Faster R-CNN and Mask R-CNN and does not compare them with other existing crack detection methods. In general, the methods mentioned above not only detect the category of an object but also determine its location in the image [14]. The use of deep learning methods can reduce labor costs and improve work efficiency and intelligence in recognizing road cracks [1].

Meanwhile, unmanned aerial vehicles (UAVs) have demonstrated their versatility in a wide range of applications, including urban road inspections. This is attributed to their exceptional maneuverability, extensive coverage, and cost-effectiveness [2]. Equipped with high-resolution cameras and various sensors, these vehicles can capture images of the road surface from multiple angles and heights, providing a comprehensive assessment of its condition. Several researchers have used UAV imagery to study deep learning methods for road crack object detection and have reported a wide range of accuracies. Yokoyama et al. [15] proposed an automatic crack detection technique using artificial neural networks. The study focused on classifying cracks and non-cracks, and the algorithm achieved a success rate of 79.9%. Zhu et al. [2] used images collected by UAV to conduct experimental comparisons of three deep learning target detection methods (Faster R-CNN, YOLOv3, YOLOv4) based on convolutional neural networks (CNNs). The study found that the YOLOv3 algorithm performed best, with an mAP of 56.6%. In another study, Jiang et al. [16] proposed an RDD-YOLOv5 algorithm with self-attention for UAV road crack detection, which significantly improved the accuracy, with an mAP of 91.48%. Furthermore, Zhang et al. [17] proposed an improved YOLOv3 algorithm for road damage detection from UAV imagery, incorporating a multi-layer attention mechanism. This enhancement resulted in an improved detection accuracy, with an mAP of 68.75%. Samadzadegan et al. [1] used the YOLOv4 deep learning network and evaluated its performance using metrics such as F1-score, precision, recall, mAP, and IoU. The results show that the proposed model has acceptable performance in road crack recognition. Additionally, Zhou et al. [18] introduced a UAV visual inspection method based on deep learning and image segmentation for detecting cracks on crane surfaces. Moreover, Xiang et al. [19] presented a lightweight UAV road crack detection algorithm, called GC-YOLOv5s, which achieved a validation mAP of 74.3%, outperforming the original YOLOv5 by 8.2%. Wang et al. [20] introduced BL-YOLOv8, an improved road defect detection model that enhances the accuracy of detecting road defects compared to the original YOLOv8 model. BL-YOLOv8 surpasses other mainstream object detection models, such as Faster R-CNN, SSD, YOLOv3-tiny, YOLOv5s, YOLOv6s, and YOLOv7-tiny, by achieving detection accuracy improvements of 17.5%, 18%, 14.6%, 5.5%, 5.2%, 2.4%, and 3.3%, respectively. Furthermore, Omoebamije et al. [21] proposed an improved CNN method based on UAV imagery, reporting an accuracy of 99.04% on a customized test dataset. Lastly, Zhao et al. [22] proposed a highway crack detection and CrackNet classification method using UAV remote sensing images, achieving 85% and 78% accuracy for transverse and longitudinal crack detection, respectively. These studies primarily aim to improve deep learning algorithms applied to UAV images. Such improvements raise the accuracy of road crack detection and also establish the methodological foundation for the crack target recognition algorithm discussed in this paper.

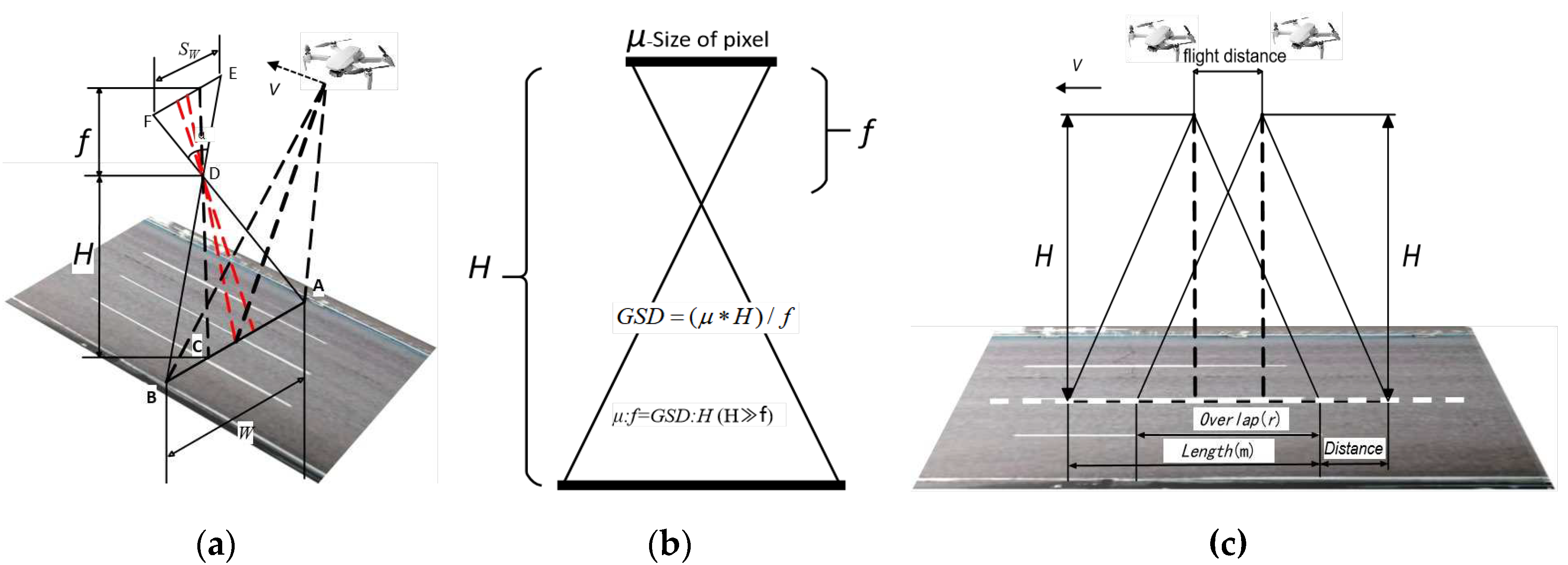

However, most of the studies mentioned above focus on UAV detection algorithms and neglect UAV data acquisition and the integration of high-quality imagery into detection methods. For instance, the flight settings required for capturing high-quality images have not been thoroughly studied [2]. Flying too high or too fast may result in poor-quality images [22]. Zhu et al. [2] and Jiang et al. [16] both introduced flight setups and experimental techniques for efficient UAV inspection. Liu K.C. et al. [23] proposed a systematic solution to automatic crack detection for UAV inspection. These studies remain incomplete because they lack detailed data acquisition procedures and pavement distress assessment. Additionally, there is a lack of quantitative measurement methods for cracks, which limits the data available to support road distress evaluation. Furthermore, inconsistent flight altitude and the absence of real ground-scale information for the cracks adversely affect their subsequent quantitative assessment.

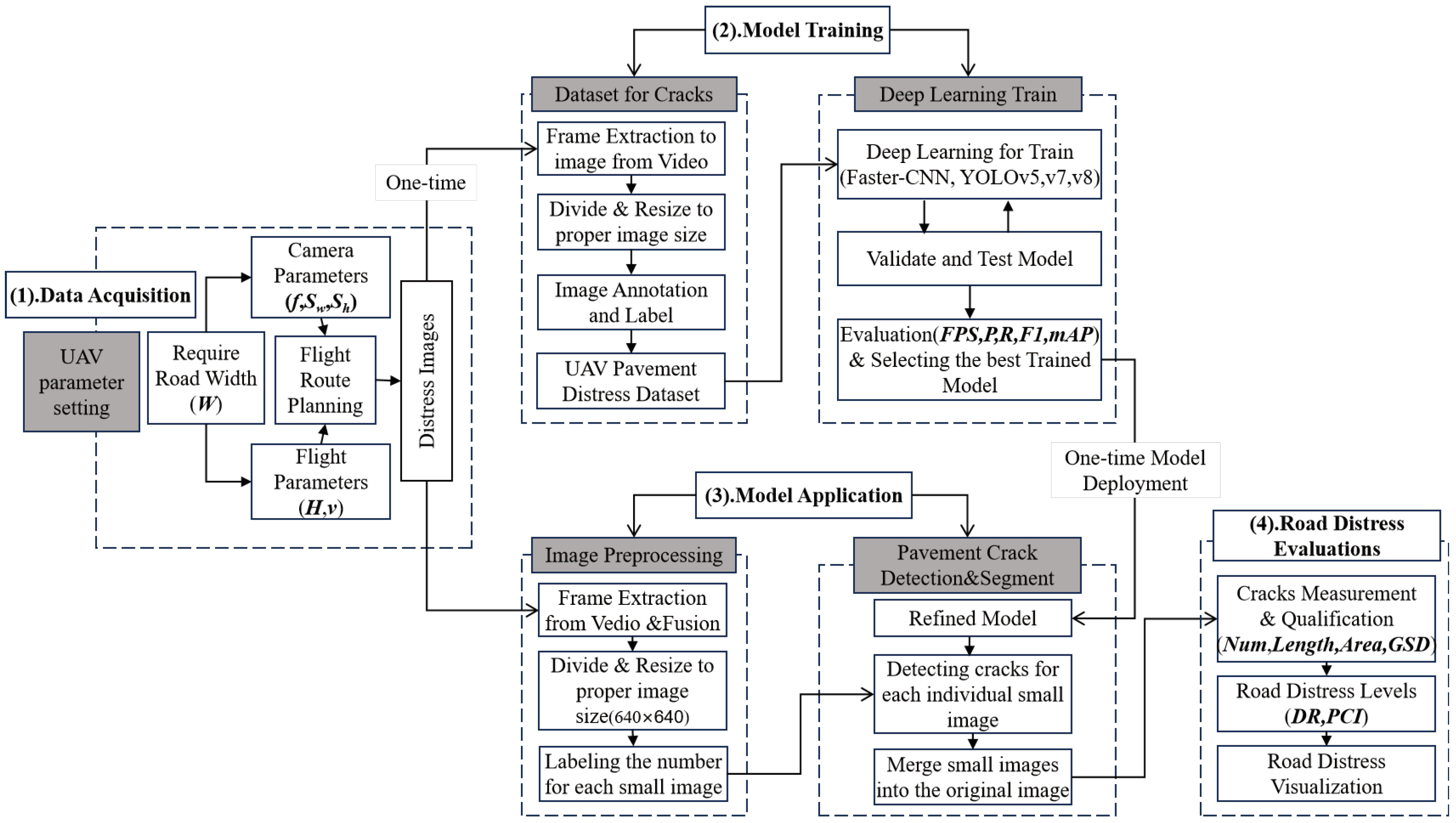

Existing studies frequently lack a systematic solution or integrated framework for UAV inspection technology, which hinders its widespread application in pavement distress detection. Therefore, this study aims to propose a formal and systematic framework for automatic crack detection and pavement distress evaluation in UAV inspection systems, with the goal of making it widely applicable.

Our proposed framework of a UAV inspection system for automatic road crack detection offers several advantages: (1) It is a more systematic solution. The framework integrates data acquisition, crack identification, and road damage assessment in ordered and closely linked steps, making it a comprehensive system. (2) It is more robust. By adhering to the flight control strategy and model deployment scheme, the drone collects high-quality data, while state-of-the-art automatic detection algorithms based on deep learning models provide accurate crack identification. (3) It is more practical. The system uses the cost-effective DJI Mini 2 drone for imagery acquisition and DL-based model deployment, making it an economically viable solution with significant potential for widespread implementation.

The rest of this paper is organized as follows:

Section 2 presents the framework for UAV inspection system designed specifically for pavement distress analysis. In

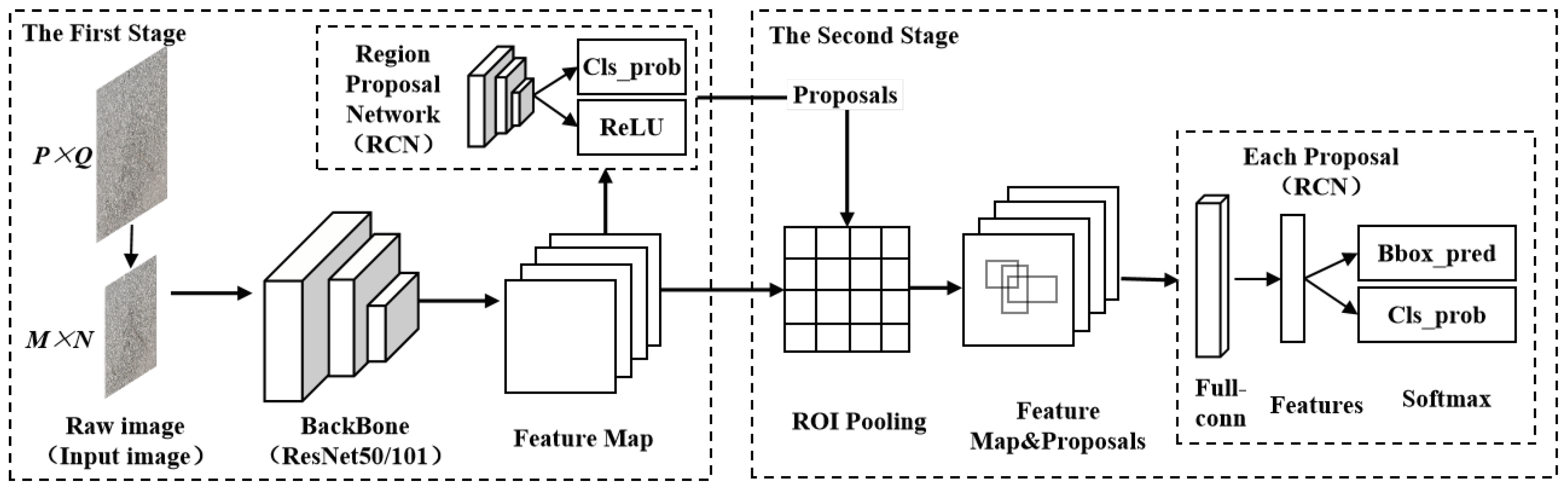

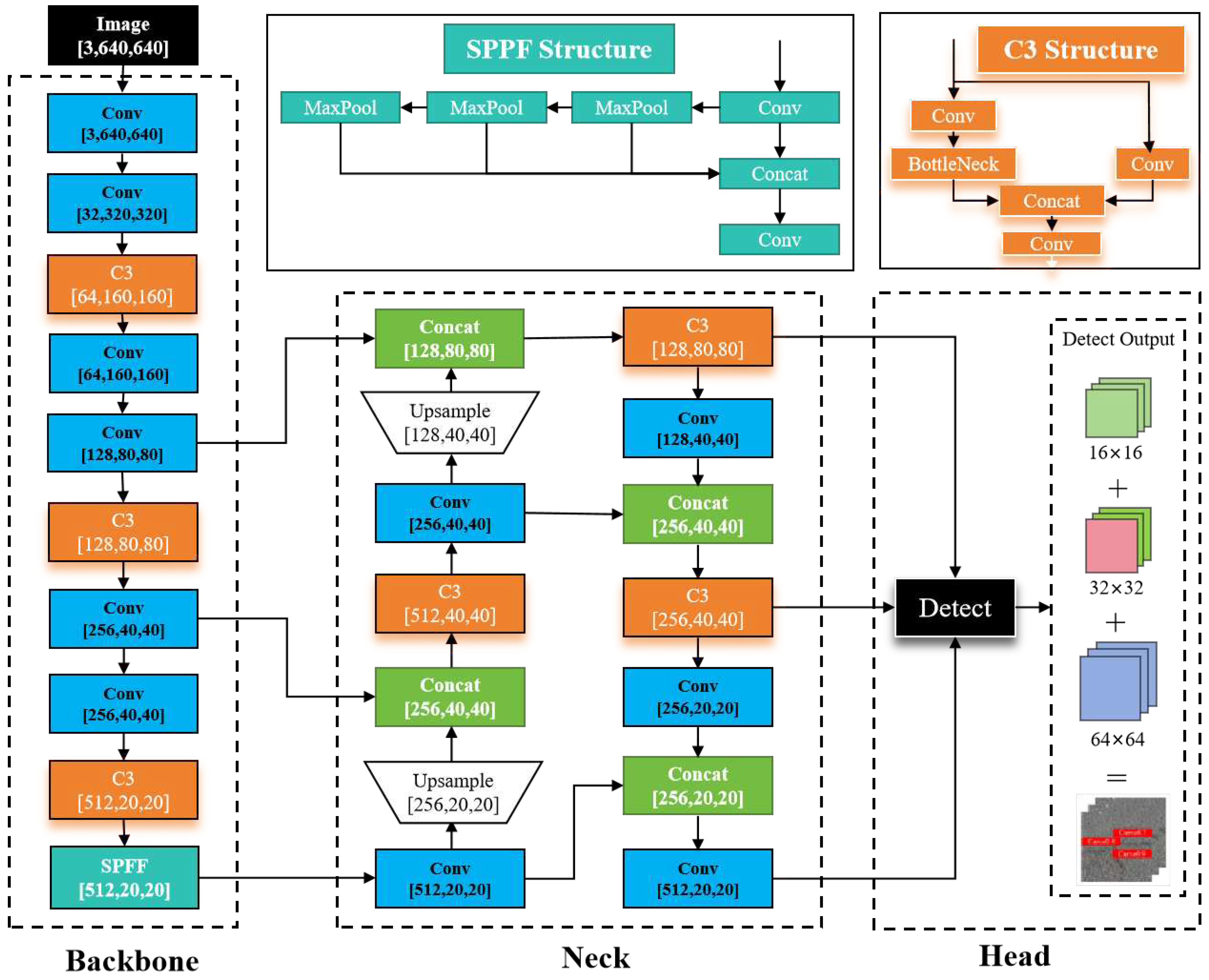

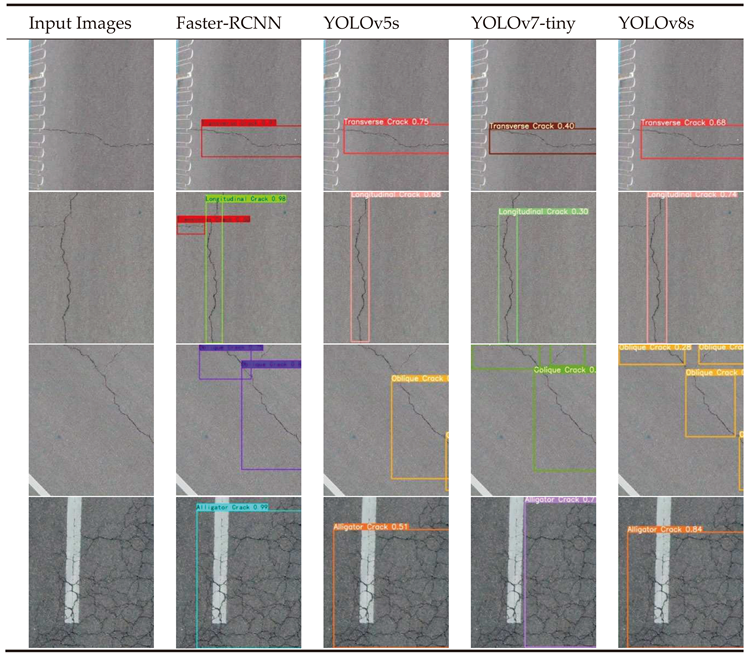

Section 3, we provide a comprehensive overview of four prominent deep learning-based crack detection algorithms, namely Faster-RCNN, YOLOv5s, YOLOv7-tiny, and YOLOv8s, along with their distinctive characteristics.

Section 4 elaborates on the well-defined procedures employed for UAV data acquisition and subsequent data reprocessing. The experimental setup and comparative results are presented in

Section 5. In

Section 6, we propose quantitative methods to evaluate road cracks and assess pavement distress levels. Finally, in

Section 7, we summarize our research while discussing its future work.

6. Road Crack Measurements and Pavement Distress Evaluations

The primary goal of road crack recognition is to evaluate pavement damage on roads. This will help extend the application of these models and provide factual evidence for maintenance decisions made by road authorities. A comparative study of the modeling algorithms showed that the model trained with Faster-RCNN outperformed the YOLO-series models, so it is used as the refined model for this experiment.

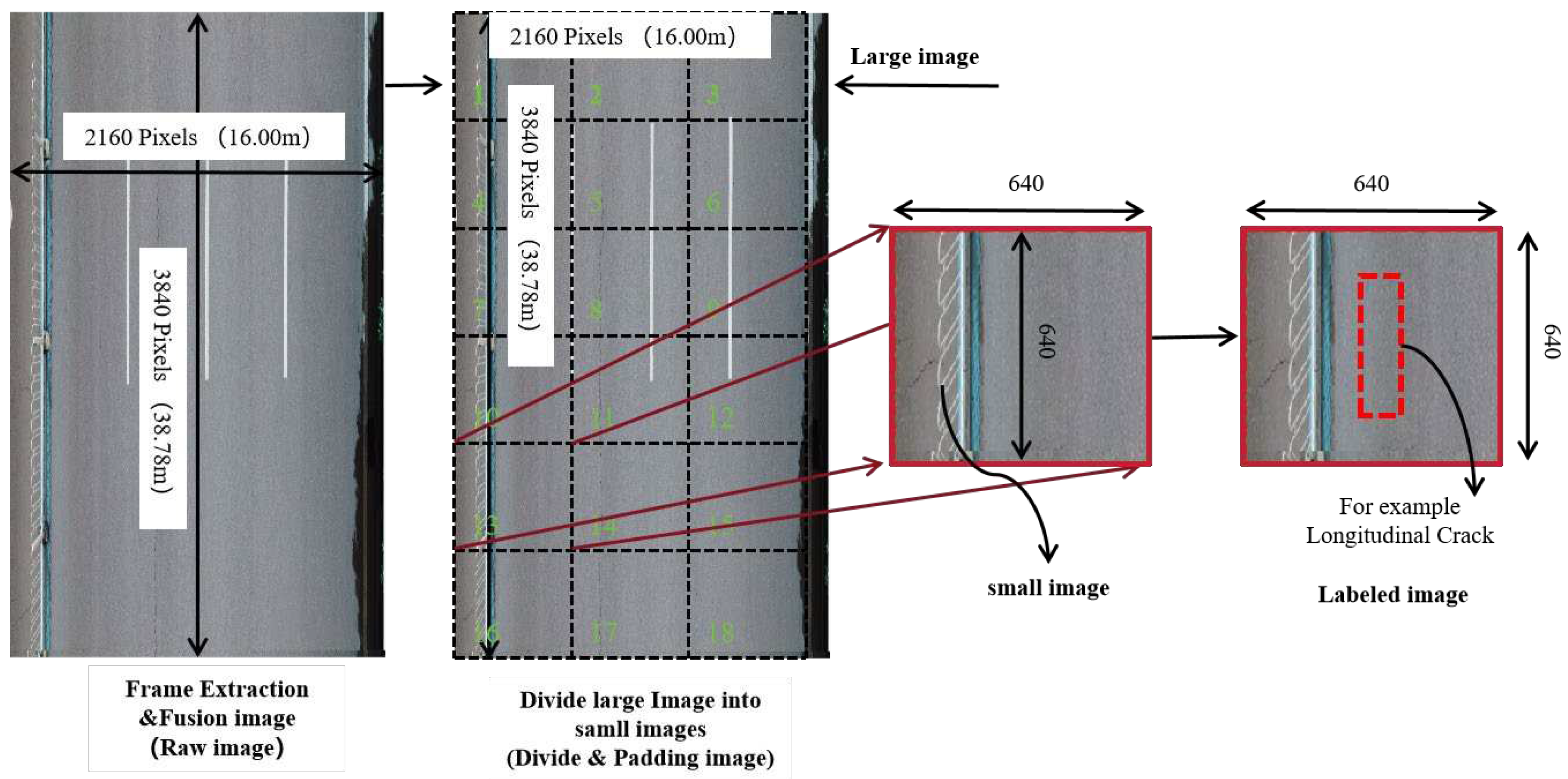

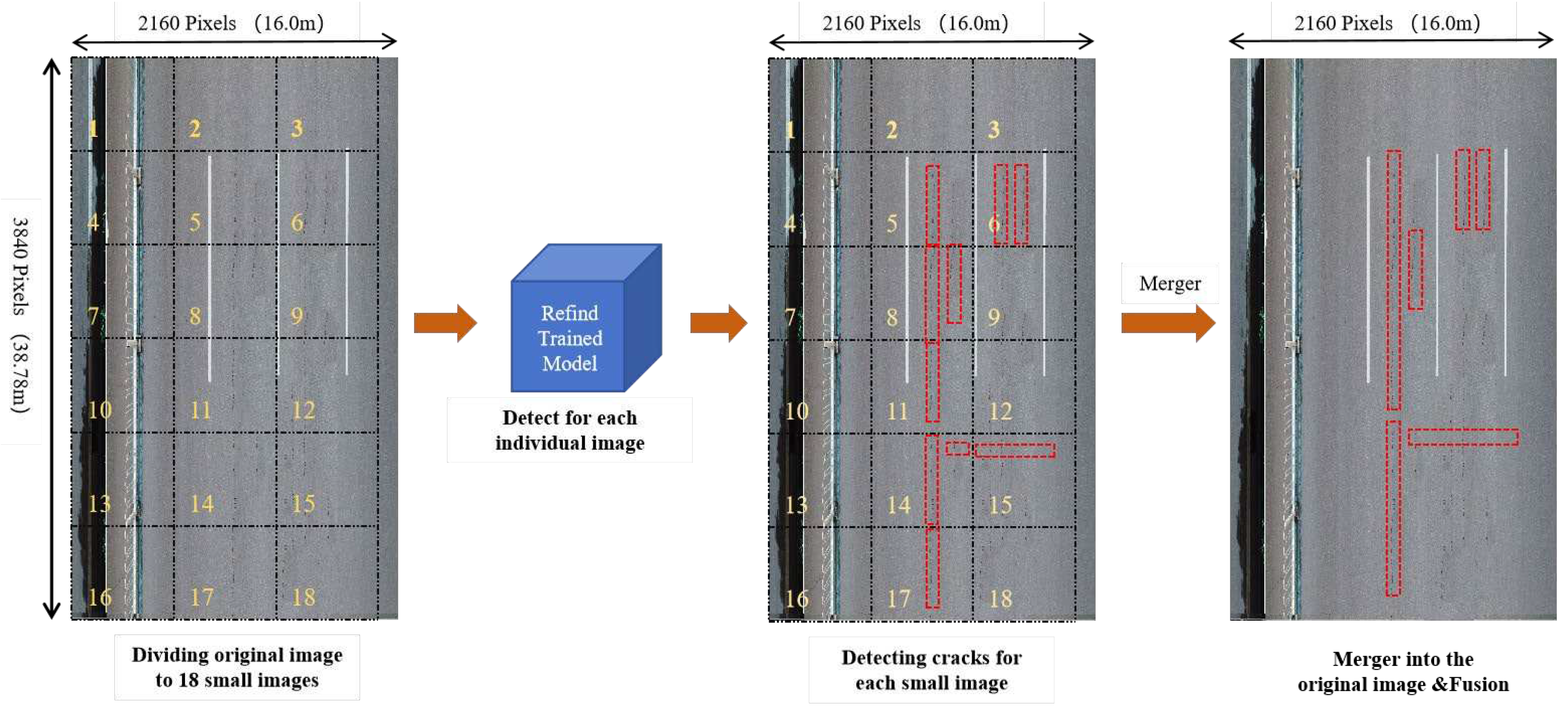

Because the acquired images are large, using them directly for road crack recognition is inefficient: recognition is slow and requires significant processing resources. To address this issue and keep the UAV recognition model small and fast, a 'Divide and Merge' strategy is applied to the large UAV images. This strategy follows a 'Divide-Recognition-Merge-Fusion' procedure during crack detection, as illustrated in Figure 10. The original frame image (3840px×2160px) is divided into 18 smaller images of consistent size (640px×640px), each assigned a unique number. Using the optimal model trained with Faster-RCNN in this experiment, cracks are identified within each cropped image. Finally, the processed images are stitched together, and overlapping crack confidence boxes are merged with their neighbors.
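The 'Divide' and 'Merge' steps can be illustrated with a minimal Python sketch. It is not the implementation used in this study: the function names, the centred 6×3 grid (which leaves 120 px unused at the top and bottom of a 2160 px frame), and the greedy box-union rule with a `gap` tolerance are all assumptions made for illustration.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def tile_origins(frame_w: int = 3840, frame_h: int = 2160,
                 tile: int = 640) -> List[Tuple[int, int, int]]:
    """Return (tile_number, x0, y0) for a grid of non-overlapping square tiles.

    Assumption: the grid is centred, so pixels that do not fill a whole
    tile are split evenly between the two opposite borders.
    """
    cols, rows = frame_w // tile, frame_h // tile
    x_off = (frame_w - cols * tile) // 2
    y_off = (frame_h - rows * tile) // 2
    origins = []
    for r in range(rows):
        for c in range(cols):
            origins.append((r * cols + c + 1, x_off + c * tile, y_off + r * tile))
    return origins


def to_frame_coords(box: Box, origin: Tuple[int, int]) -> Box:
    """Shift a box detected inside a tile back into full-frame pixel coordinates."""
    x0, y0 = origin
    return (box[0] + x0, box[1] + y0, box[2] + x0, box[3] + y0)


def merge_touching(boxes: List[Box], gap: float = 2.0) -> List[Box]:
    """Greedily union boxes that overlap or lie within `gap` pixels of each other.

    Assumption: a simple bounding-box union stands in for the fusion step.
    """
    merged: List[Box] = []
    pending = list(boxes)
    while pending:
        cur = pending.pop()
        changed = True
        while changed:
            changed = False
            for other in pending[:]:
                if (cur[0] - gap <= other[2] and other[0] - gap <= cur[2]
                        and cur[1] - gap <= other[3] and other[1] - gap <= cur[3]):
                    cur = (min(cur[0], other[0]), min(cur[1], other[1]),
                           max(cur[2], other[2]), max(cur[3], other[3]))
                    pending.remove(other)
                    changed = True
        merged.append(cur)
    return merged
```

With the default arguments, `tile_origins()` yields 18 numbered tiles, which matches the tile count stated above; a crack that crosses a tile border is reported as two adjacent boxes and is joined by `merge_touching` after both have been shifted with `to_frame_coords`.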

6.1. Measurement Methods of Pavement Cracks

The measurement methods for crack analysis play a crucial role in statistically analyzing the quantity of cracks. These methods consider various factors such as crack location, crack type, crack length, crack width, crack depth, and crack area. In order to improve the practicality of these methods in road damage maintenance, the quantity of cracks can be roughly estimated, temporarily excluding small cracks.

(i) Pavement Crack Location: The pixel position of the detected crack in the original UAV imagery can be determined based on the corresponding image number; meanwhile, the actual ground position can be inferred through GSD calculation.

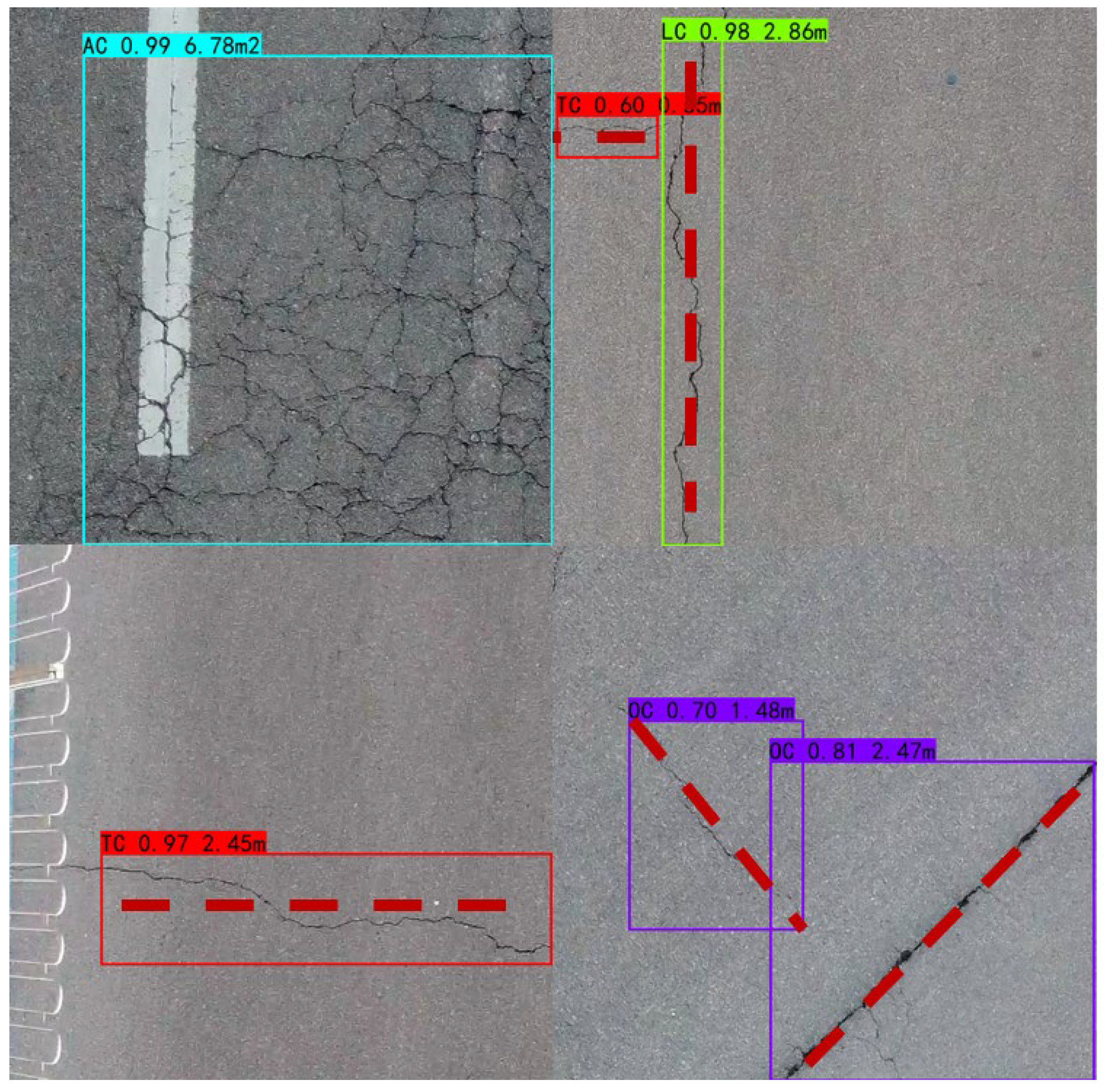

(ii) Pavement Crack Length: It can be determined based on the pixel size of the confidence frame model, as illustrated in Figure 11. Horizontal cracks are measured by their horizontal border pixel lengths; vertical cracks by their vertical border pixel lengths; diagonal cracks by estimated border diagonal distance pixels; and mesh cracks primarily by measuring border pixel areas.

(iii) Pavement Crack Width: The maximum width of a crack can be determined by identifying the region with the highest concentration of extracted crack pixels.

(iv) Pavement Crack Area: This measurement mainly applies to alligator cracks (AC). The area is calculated from the pixels of the AC region based on the confidence frame model.
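The length and area rules in items (ii) and (iv) can be sketched as follows. This is an illustrative reading of the text rather than the authors' code: the function name and the type labels `"horizontal"`, `"vertical"`, `"diagonal"`, and `"alligator"` are hypothetical, and the box is assumed to be given as `(x_min, y_min, x_max, y_max)` in pixels.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def crack_pixel_measure(crack_type: str, box: Box) -> Tuple[str, float]:
    """Return ("length_px", value) or ("area_px", value) for one detected box.

    Assumed rules, following the text: horizontal cracks use the box width,
    vertical cracks the box height, diagonal cracks the box diagonal, and
    alligator (mesh) cracks the box area.
    """
    x_min, y_min, x_max, y_max = box
    width, height = x_max - x_min, y_max - y_min
    if crack_type == "horizontal":
        return ("length_px", width)
    if crack_type == "vertical":
        return ("length_px", height)
    if crack_type == "diagonal":
        return ("length_px", math.hypot(width, height))
    if crack_type == "alligator":
        return ("area_px", width * height)
    raise ValueError(f"unknown crack type: {crack_type}")
```

For example, a diagonal crack whose box is 300 px wide and 400 px high is assigned a length of 500 px under this rule.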

Finally, to obtain the ground-truth location, length (L), and area (A) of a road crack, the pixel measurements are multiplied by the ground sampling distance (GSD, unit: cm/pixel). The actual length or width of a crack in meters is calculated as $L = m \times \mathrm{GSD}/100$, where $m$ is the length in pixels, and the actual area of the block affected by the crack in square meters is calculated as $A = m^2 \times \mathrm{GSD}^2/100^2$, where $m^2$ denotes the area in pixels.
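As a small worked sketch of this conversion, the two helpers below apply the GSD scaling to a pixel length and a pixel area. The function names and the GSD value of 0.8 cm/pixel used in the example are assumptions for illustration only, not values reported in this study.

```python
def pixels_to_metres(length_px: float, gsd_cm_per_px: float) -> float:
    """Convert a pixel length to metres: length_px * GSD / 100."""
    return length_px * gsd_cm_per_px / 100.0


def pixel_area_to_square_metres(area_px: float, gsd_cm_per_px: float) -> float:
    """Convert a pixel area to square metres: area_px * GSD^2 / 100^2."""
    return area_px * gsd_cm_per_px ** 2 / 100.0 ** 2


# Hypothetical example: a 500 px crack and a 120000 px^2 alligator-crack box
# imaged at an assumed GSD of 0.8 cm/pixel.
length_m = pixels_to_metres(500, 0.8)
area_m2 = pixel_area_to_square_metres(120000, 0.8)
```

Under the assumed GSD, the 500 px crack corresponds to about 4 m on the ground and the 120000 px² box to about 7.68 m².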

6.2. Evaluation Methods of Pavement Distress

The evaluation of pavement damage can be based on the internationally recognized pavement damage index (PCI), which is also adopted in China. The PCI is a key indicator of pavement integrity. In addition, the pavement damage rate (DR) is the most direct reflection of the physical condition of the pavement. In this study, we refer to the Chinese specifications 'Technical Code of Maintenance for Urban Road (CJJ36-2016)' [33] and 'Highway Performance Assessment Standards (DB11/T 1614-2019)' [34], whose calculation formulas are as follows:

$$DR = 100 \times \frac{\sum_{i=1}^{N} w_i A_i}{A}$$

$$PCI = 100 - a_0 \, DR^{a_1}$$

where $A_i$ is the damage area of the pavement for the $i$th crack type (m²); $N$ is the total number of damage types, taken here as 4; $A$ is the pavement area of the investigated road section (the investigated road length multiplied by the effective pavement width, m²); and $w_i$ is the damage weight of the pavement for the $i$th crack type, directly set to 1. According to the 'Highway Performance Assessment Standards (DB11/T 1614-2019)' [34], $a_0$ and $a_1$ are the material coefficients of the pavement: for asphalt pavement, $a_0 = 10$ and $a_1 = 0.4$; for concrete pavement, $a_0 = 9$ and $a_1 = 0.42$. A higher DR leads to a lower PCI value, indicating poorer pavement integrity.
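A short numerical sketch may help make the two indices concrete. It assumes the commonly used forms DR = 100 × Σ wᵢAᵢ / A and PCI = 100 − a₀ × DR^a₁; with the asphalt coefficients given above, this form reproduces the PCI of 61.28 reported in Section 6.3 for DR = 29.5%. The function names and the per-type damage areas in the example are hypothetical.

```python
from typing import Optional, Sequence


def damage_rate(damage_areas_m2: Sequence[float], pavement_area_m2: float,
                weights: Optional[Sequence[float]] = None) -> float:
    """Pavement damage rate DR in percent: 100 * sum(w_i * A_i) / A."""
    if weights is None:
        weights = [1.0] * len(damage_areas_m2)  # w_i = 1, as set in the text
    weighted = sum(w * a for w, a in zip(weights, damage_areas_m2))
    return 100.0 * weighted / pavement_area_m2


def pci(dr_percent: float, a0: float = 10.0, a1: float = 0.4) -> float:
    """PCI = 100 - a0 * DR^a1 (defaults: asphalt coefficients from the text)."""
    return 100.0 - a0 * dr_percent ** a1


# Hypothetical damage areas (m^2) for four crack types on a 100 m^2 section.
dr = damage_rate([10.0, 5.0, 4.5, 10.0], 100.0)
score = pci(dr)
```

In this example the four areas sum to 29.5 m² on a 100 m² section, so DR is 29.5% and the resulting PCI is approximately 61.28.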

6.3. Visualization Results of Pavement Distress

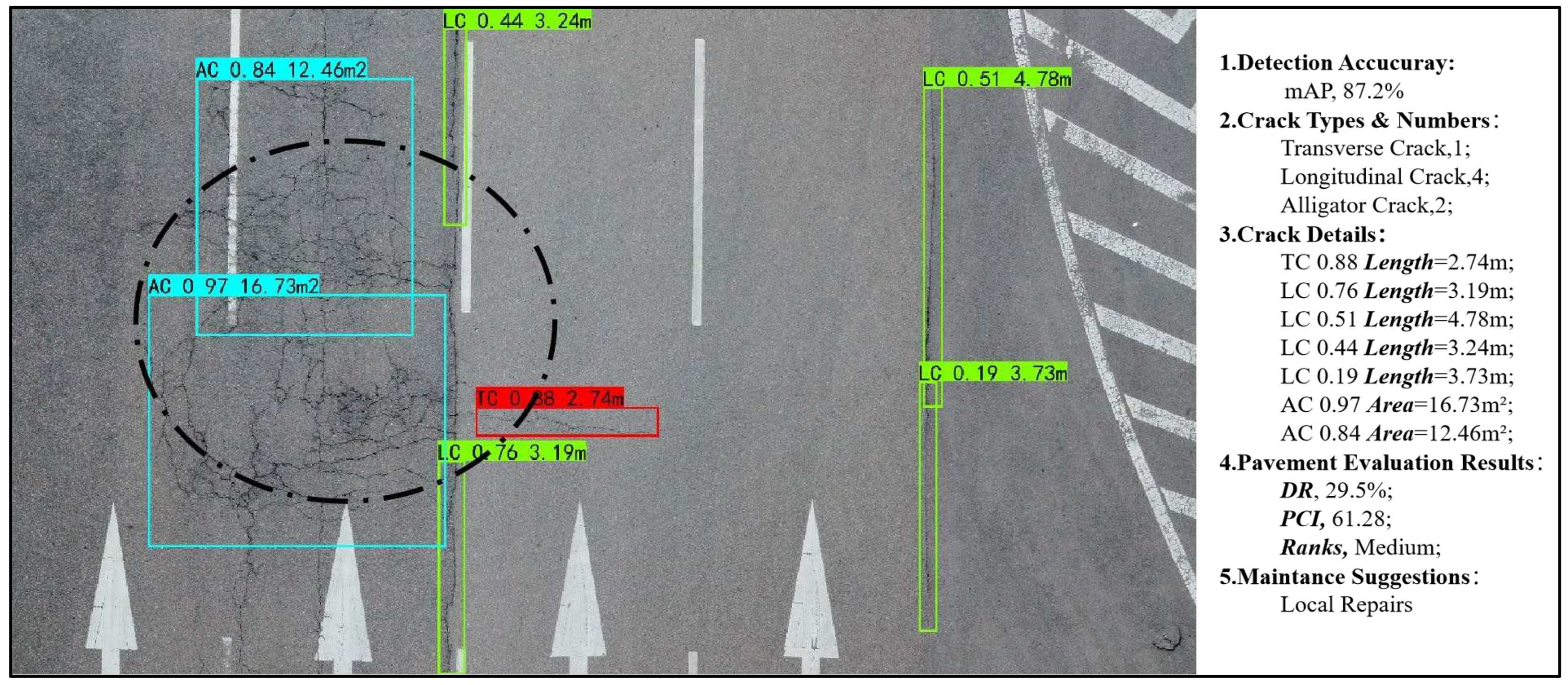

The original frame image is used for crack detection in this experiment, as visualized in Figure 12. On the right side, the statistical results of the four types of crack measurement are presented. This study employed the preferred Faster-RCNN trained model, with a detection accuracy of 87.2% (mAP). By conducting crack measurement and statistics on a regional road section, a damage rate (DR) of 29.5% and a pavement damage index (PCI) of 61.28 are calculated, indicating a medium rating for road section integrity in this region.

7. Discussions

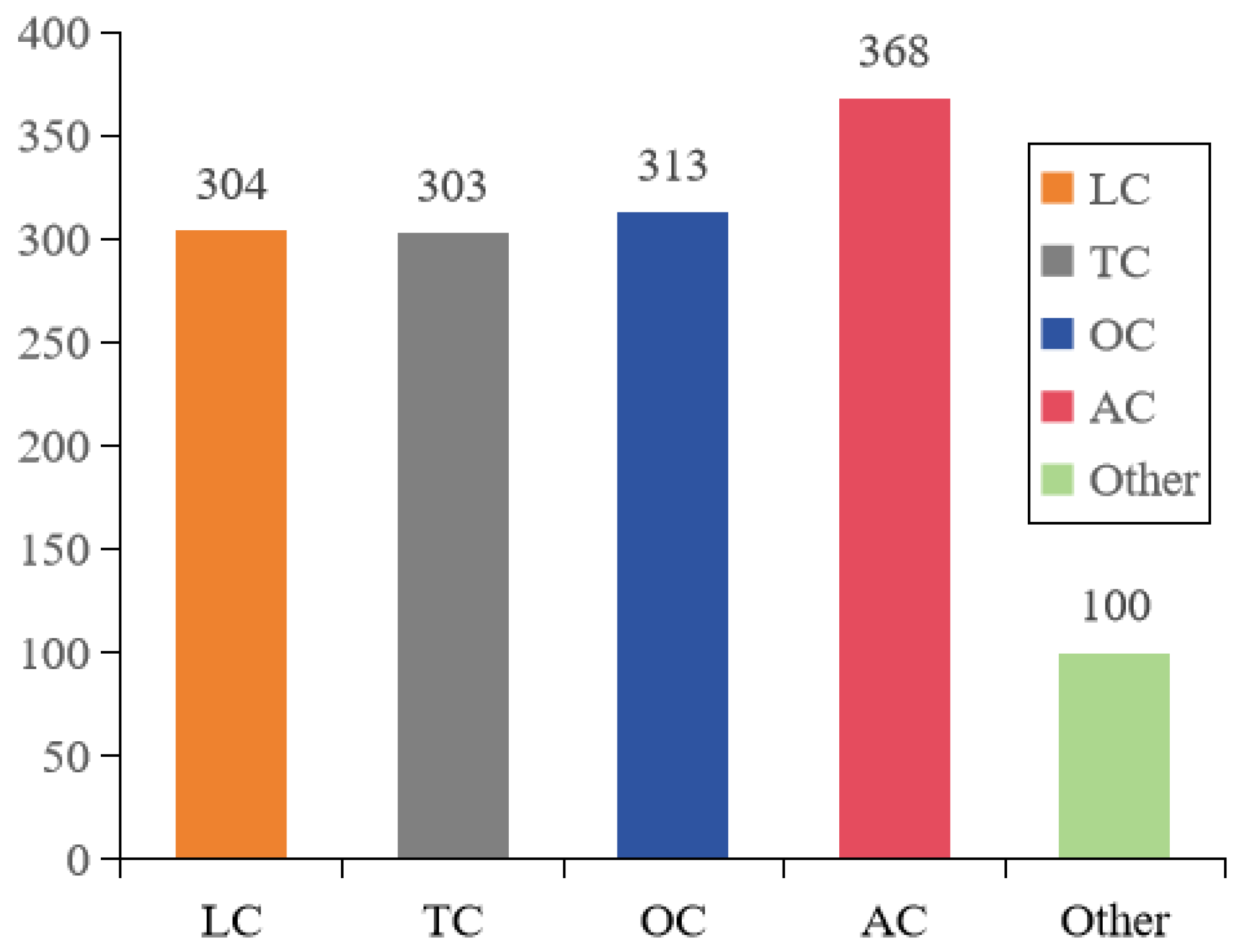

This study proposes a comprehensive and systematic framework and method for automatic crack detection and pavement distress evaluation in a UAV inspection system. The framework begins by establishing flight parameter settings and experimental techniques for acquiring high-quality imagery with the DJI Mini 2 drone in real-world scenarios. Additionally, a benchmark dataset has been created and made available to the community. The dataset includes important information such as GSD, which is essential for evaluating pavement distress. In this experiment, our self-made crack dataset compares favorably with existing datasets used with similar algorithms, achieving the highest accuracy in crack recognition and algorithmic efficiency. The experimental results (refer to Table 6) reveal the significance of data acquisition quality for the accuracy of crack target recognition: high-quality UAV image data effectively improve recognition accuracy.

In this experiment, the detection capability for road cracks in the UAV inspection system is enhanced through a range of strategies. Firstly, adhering to a drone flight control strategy ensures consistent height and stable speed during data acquisition on urban roads. This guarantees the collection of clear, high-quality drone images with attached real spatial scale information for distress assessment. Secondly, the 'divide and conquer' sampling strategy for model training and target detection involves several key steps: 'frame extraction from video and image cropping for large images', 'model learning and crack detection for small images', and 'fusion and splicing from small images'. This approach effectively improves the accuracy of identifying cracks in large-scale images while enhancing the operational efficiency of the models. Thirdly, deploying drone detection algorithms with both 'online-offline' and 'online-online' strategies provides flexibility across scenarios. The 'one-stage' algorithms run quickly but have lower detection accuracy, whereas the 'two-stage' algorithms run more slowly but have higher detection accuracy. These deep learning models can be deployed accordingly, depending on the specific application scenario. For instance, in sudden situations requiring fast real-time detection, lightweight deployment of a 'one-stage' algorithm such as the YOLO-series models can be employed.

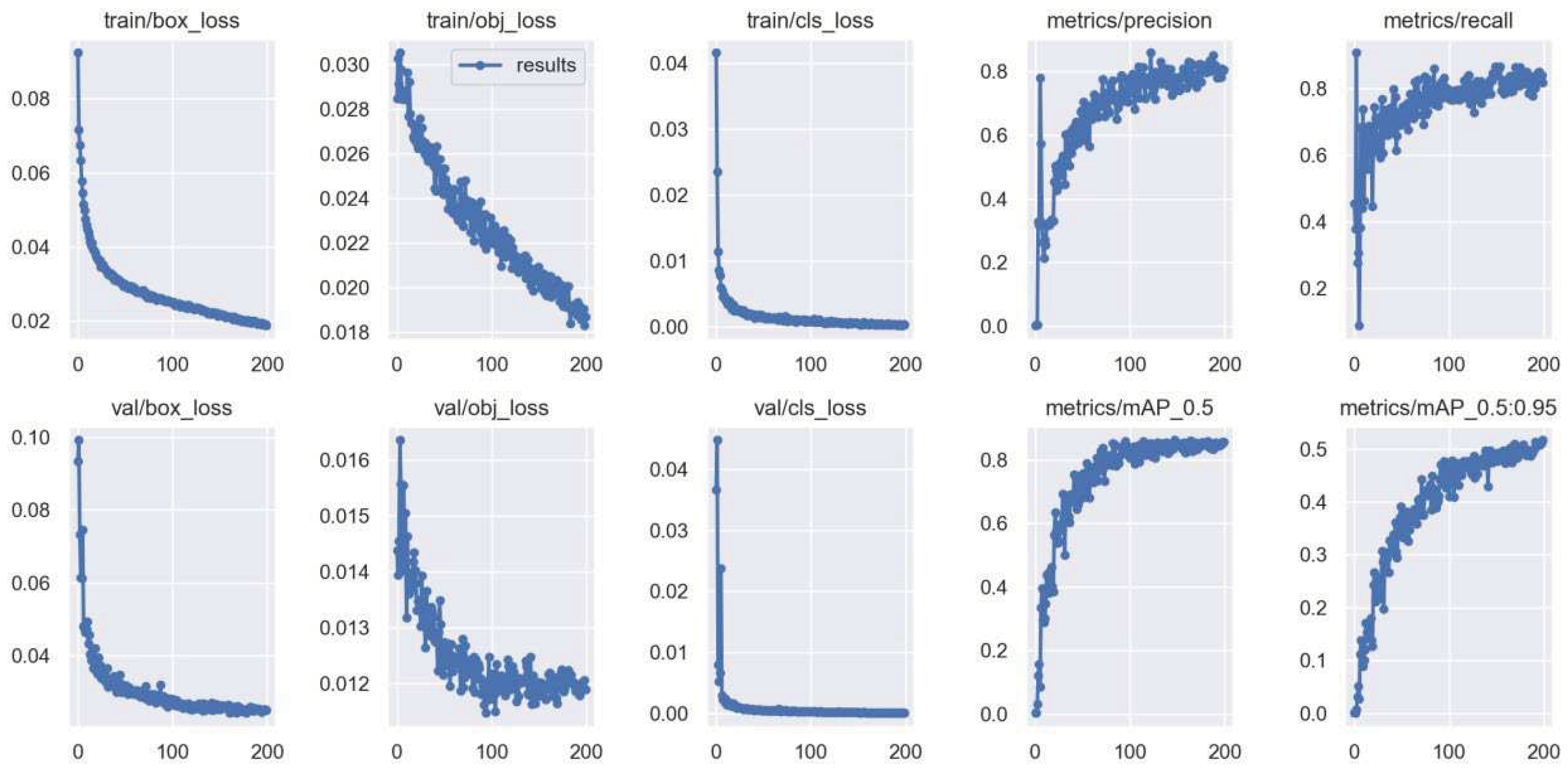

To propose a suitable deployment scheme for the UAV inspection system, this study uses prominent deep learning algorithms, namely Faster-RCNN, YOLOv5s, YOLOv7-tiny, and YOLOv8s, for pavement crack object detection and comparative analysis. The results reveal that Faster-RCNN demonstrates the best overall performance, with a precision (P) of 75.6%, a recall (R) of 76.4%, an F1 score of 75.3%, and a mean Average Precision (mAP) of 79.3%. Moreover, the mAP of Faster-RCNN surpasses that of YOLOv5s, YOLOv7-tiny, and YOLOv8s by 4.7%, 10%, and 4%, respectively. This indicates that Faster-RCNN achieves the highest detection accuracy but requires a more demanding computing environment, making it suitable for online data collection by UAV followed by offline inspection at workstations. On the other hand, the YOLO-series models, while slightly less accurate, are the fastest algorithms and are suitable for lightweight deployment on UAVs with online collection and real-time inspection. Many studies have also proposed refined YOLO-based algorithms for crack detection with drones, mainly because of their lightweight deployment in UAV systems. For instance, the BL-YOLOv8 model [20] reduces both the number of parameters and the computational complexity compared to the original YOLOv8 model and other YOLO-series models. This offers the potential to deploy the YOLO-series models directly on cost-effective embedded or mobile devices.

Finally, road crack measurement methods are presented to assess road damage, which will extend the application of the UAV inspection system and provide factual evidence for maintenance decisions made by road authorities. Notably, cracking is a significant indicator for evaluating road distress. In this study, the evaluation results are primarily obtained through a comprehensive assessment of the crack area, the degree of damage, and their proportions. However, relying solely on cracks to determine road distress is limited, and the results should be considered only as a reference for the relevant road authorities. A comprehensive evaluation should therefore take multiple factors into account, such as rutting and potholes.

8. Conclusion

Traditional manual inspection of road cracks is inefficient, time-consuming, and labor-intensive, and multifunctional road inspection vehicles can be expensive. UAVs equipped with high-resolution vision sensors offer an alternative. They can remotely capture and display images of the pavement from high altitudes, allowing local damage such as cracks to be identified. The UAV inspection system, which is based on the commercial DJI Mini 2 drone, offers several advantages: it is cost-effective, non-contact, highly precise, and enables remote visualization. As a result, it is particularly well suited to remote pavement detection. In addition, automatic crack detection technology based on deep learning models brings significant additional value to road maintenance and safety. It can be integrated into the commercial UAV system, thereby reducing the workload of maintenance personnel.

The contributions of this study are summarized as follows: (1) A pavement crack detection and evaluation framework for a UAV inspection system based on deep learning has been proposed, which can serve as a technical guideline for road authorities. (2) To enhance the automatic crack detection capability and design a suitable scheme for implementing deep learning-based models in the UAV inspection system, we conducted a validation and comparative study of prevalent deep learning algorithms for detecting pavement cracks in urban road scenarios. The study demonstrates the robustness of these algorithms in terms of performance and accuracy, as well as their effectiveness on our customized crack image dataset and other popular crack datasets. Furthermore, this research provides recommendations for deploying these algorithms on UAVs. (3) Quantitative methods for measuring road cracks are proposed, and pavement distress evaluations are carried out in our experiment. The final evaluation results are scaled to real ground dimensions by means of the GSD. (4) A pavement crack image dataset integrated with GSD has been established and made publicly available to the research community, serving as a valuable supplement to existing crack databases.

In summary, the UAV inspection system guided by our proposed framework has proved to be feasible and yields satisfactory results. However, drone inspection has the inherent limitation of limited battery life, which makes long-distance continuous road inspection difficult. Drones are better suited to short-distance inspections in complex urban scenarios [16]. With advances in drone and computer vision technology, drones equipped with lightweight sensors and lightweight crack detection algorithms are expected to gain popularity for road distress inspection. In future work, we aim to incorporate improved YOLO algorithms into the UAV inspection system to enhance road crack recognition accuracy. Furthermore, in order to establish a comprehensive UAV inspection system for road distress, we plan to continue researching multi-category defect detection that covers cracks together with other road issues such as rutting and potholes. Additionally, efforts will be made to enhance UAV flight autonomy for stable, high-speed aerial photography, further improving the quality of aerial images and meeting the requirements of various complex road scenarios.