Submitted: 01 February 2024

Posted: 02 February 2024


Abstract

Keywords:

1. INTRODUCTION

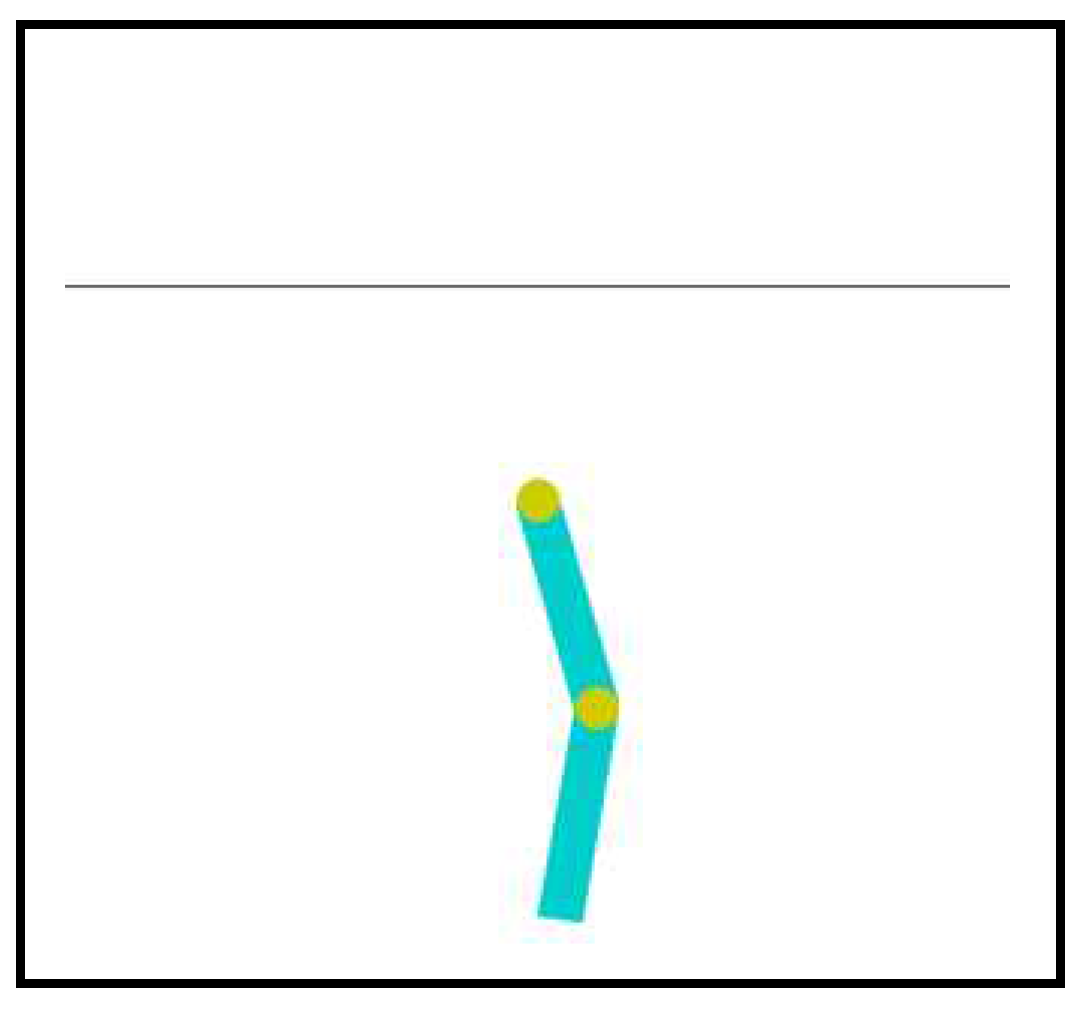

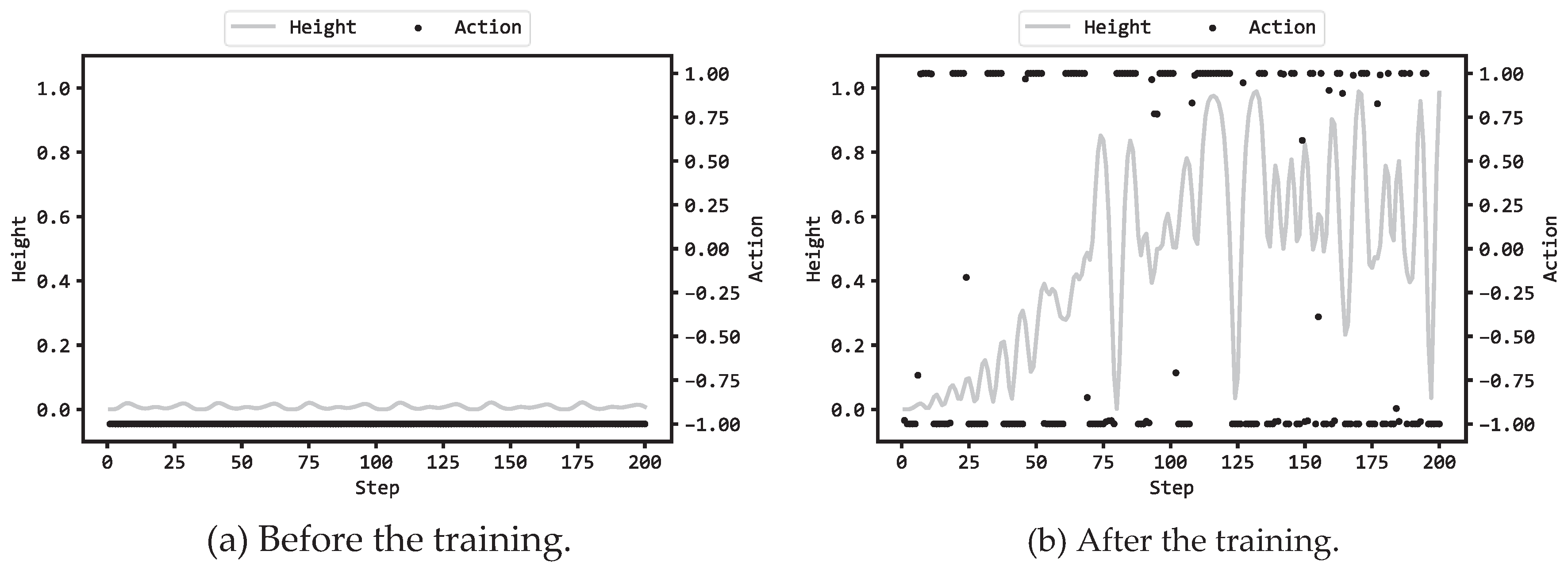

2. ACROBOT CONTROL TASK

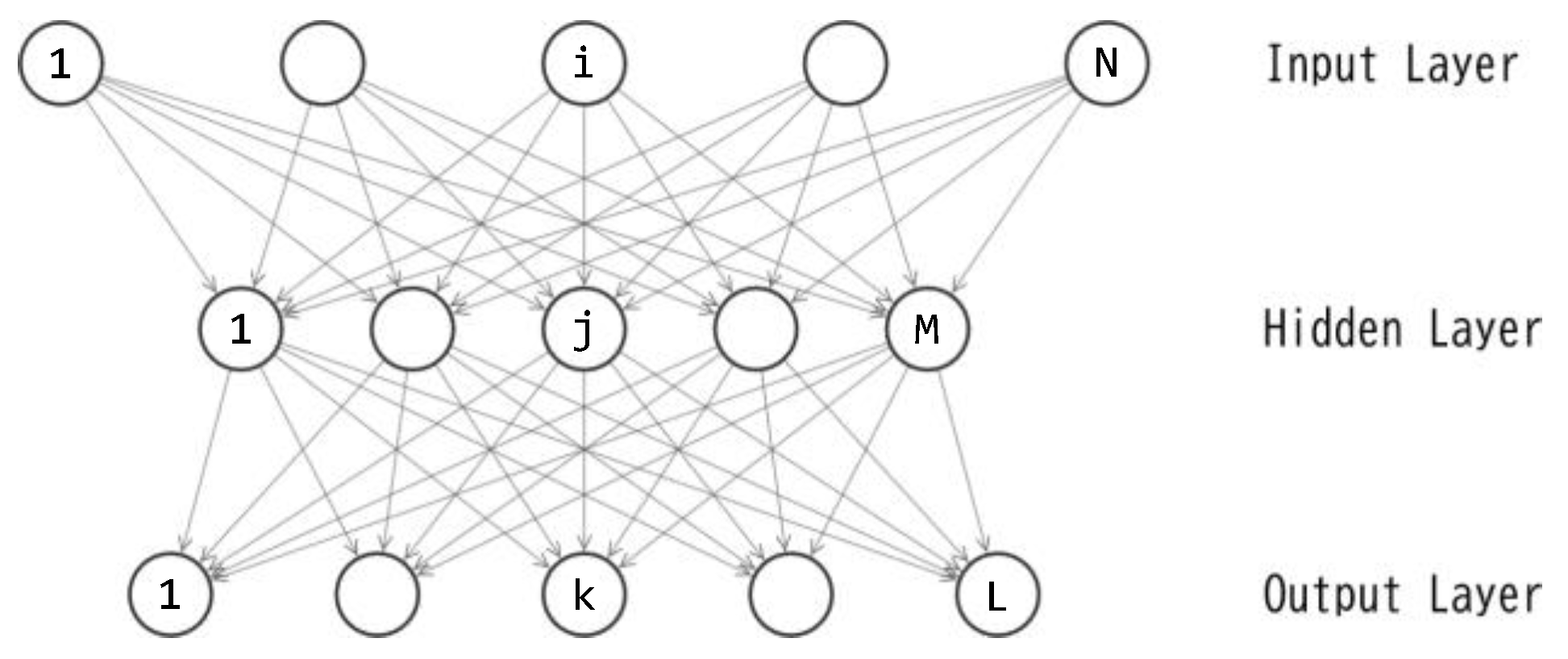

3. NEURAL NETWORKS

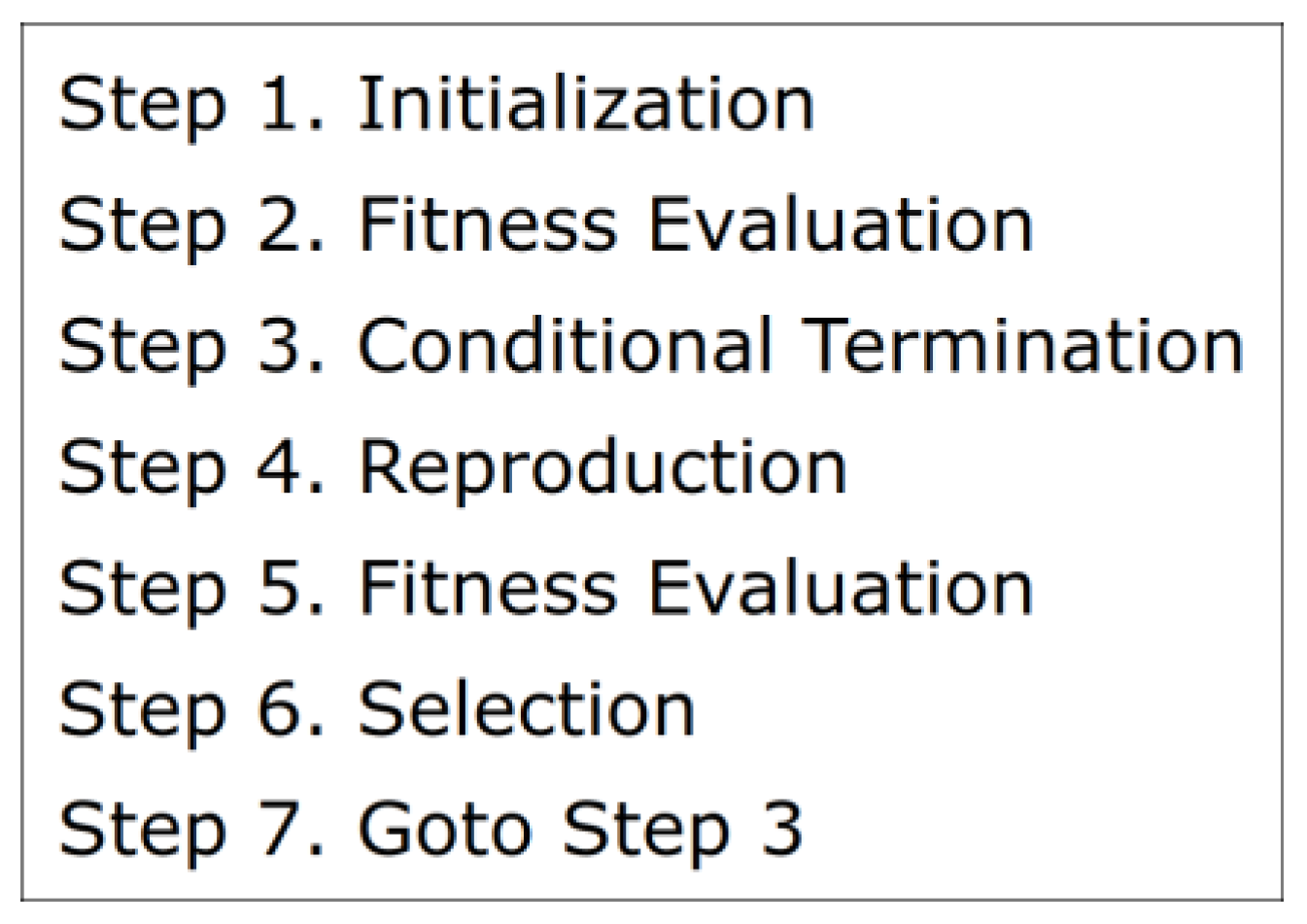

4. TRAINING OF NEURAL NETWORKS BY DIFFERENTIAL EVOLUTION

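- Among the parents, three parents $\mathbf{x}_a$, $\mathbf{x}_b$, and $\mathbf{x}_c$ are randomly selected, where $a$, $b$, and $c$ are mutually distinct.

- $v_d = x_{a,d} + F\,(x_{b,d} - x_{c,d})$, $d = 1, 2, \ldots, D$, where $F$ is a preset scaling factor.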

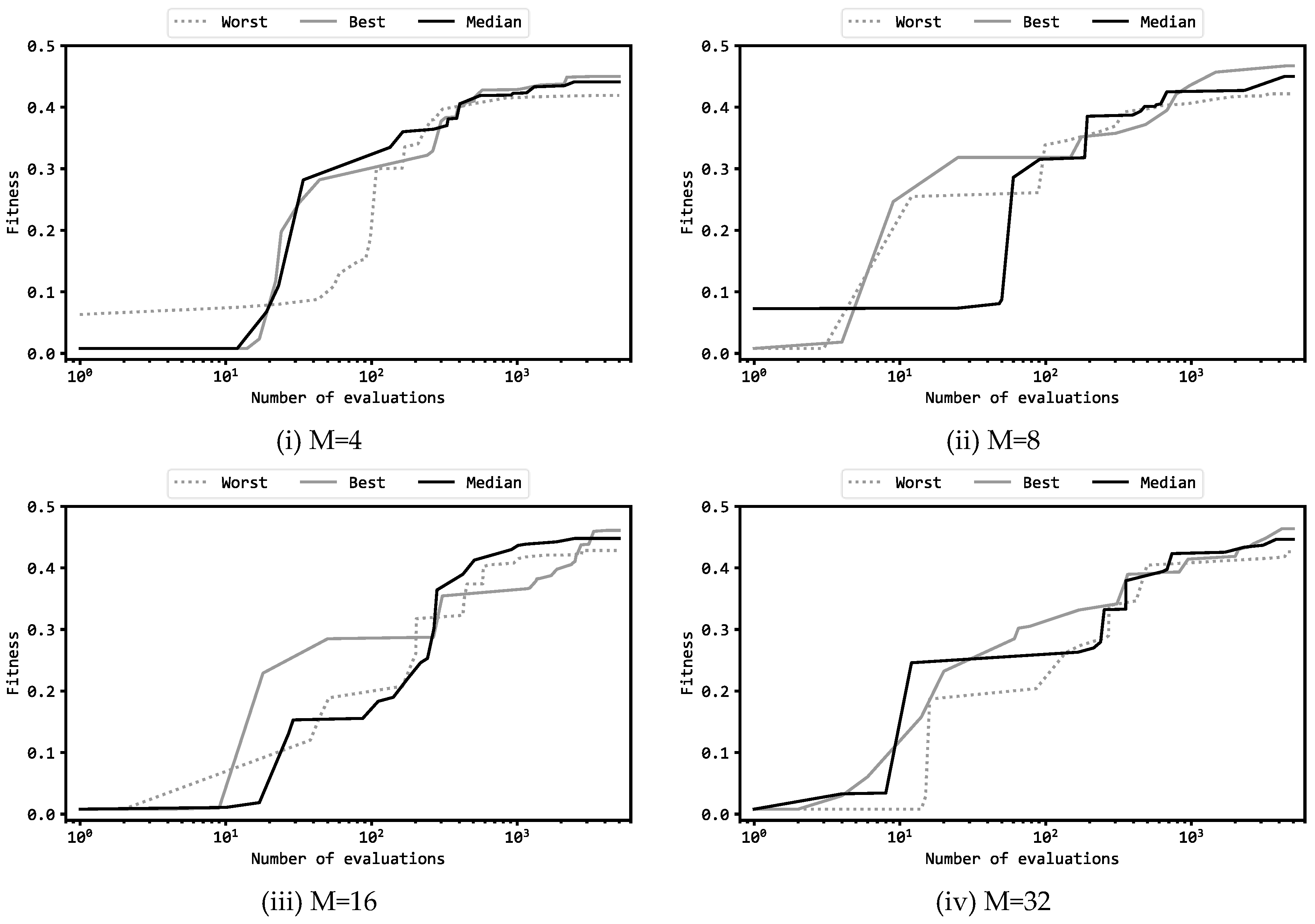
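The mutation step listed in this section matches the classic DE/rand/1 scheme of Storn and Price. The following is a minimal sketch of one generation, not the author's implementation. It assumes binomial crossover with rate `CR`, greedy one-to-one replacement, a fitness that is maximized, and donors that differ from the target vector. The function name `de_generation` and all variable names are hypothetical.

```python
import random


def de_generation(pop, scores, fitness, F=0.5, CR=0.9, rng=random):
    """Run one DE/rand/1/bin generation (sketch; higher fitness is better).

    pop     : list of real-valued vectors (lists of floats), all of length D
    scores  : fitness of each vector in pop, same order
    fitness : callable mapping a vector to a float
    Returns the next population and its scores.
    """
    n, D = len(pop), len(pop[0])
    if n < 4:
        raise ValueError("DE/rand/1 needs at least 4 individuals")

    new_pop, new_scores = [], []
    for i in range(n):
        # Three mutually distinct donors, none equal to the target (assumption).
        a, b, c = rng.sample([j for j in range(n) if j != i], 3)

        # Mutation: v_d = x_a,d + F * (x_b,d - x_c,d) for every dimension d.
        mutant = [pop[a][d] + F * (pop[b][d] - pop[c][d]) for d in range(D)]

        # Binomial crossover: position j_rand is always taken from the mutant.
        j_rand = rng.randrange(D)
        trial = [
            mutant[d] if (d == j_rand or rng.random() < CR) else pop[i][d]
            for d in range(D)
        ]

        # Greedy selection: the trial replaces the target only if it is no worse.
        s = fitness(trial)
        if s >= scores[i]:
            new_pop.append(trial)
            new_scores.append(s)
        else:
            new_pop.append(pop[i])
            new_scores.append(scores[i])
    return new_pop, new_scores


if __name__ == "__main__":
    rng = random.Random(0)
    sphere = lambda x: -sum(v * v for v in x)  # toy objective, not the Acrobot task
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(5)] for _ in range(10)]
    scores = [sphere(x) for x in pop]
    for _ in range(50):
        pop, scores = de_generation(pop, scores, sphere, rng=rng)
    print(max(scores))
```

In this sketch each generation costs one fitness evaluation per individual, so the evaluation budget is the population size times the number of generations, plus the initial evaluation of the starting population.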

5. EXPERIMENT

6. STATISTICAL TEST TO COMPARE DE WITH GA AND ES

7. CONCLUSION

- (1) The results of the present experiment using DE were compared with the corresponding results of previous experiments using GA and ES. Statistical tests revealed that DE significantly outperformed both GA and ES (p < .01).

- (2) As in the previous experiments using GA and ES, the present experiment using DE employed two configurations under a fixed budget of 5,000 fitness evaluations: (a) a greater number of offspring per generation, suited to early-stage global exploration, and (b) a greater number of generations, suited to late-stage local exploitation. A comparison of the results revealed no statistically significant difference between configurations (a) and (b) for DE. In the GA experiment, configuration (b) was significantly superior to (a), whereas in the ES experiment, configuration (a) was significantly superior to (b). These results suggest that DE balances global exploration and local exploitation better than ES and GA and is robust to this choice of configuration.

- (3) Four hidden-layer sizes of the multilayer perceptron were compared: 4, 8, 16, and 32 units. The experimental results indicated that 8 units was the best choice in terms of the trade-off between performance and model size. This finding aligns with the previous studies using GA and ES [15,16].
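To make the model-size side of this trade-off concrete, the sketch below counts the weights and biases of a fully connected input-hidden-output perceptron for each hidden-layer size. The input size of 6 matches the Acrobot observation vector in the Gym documentation. The single output unit and the bias terms are assumptions made for illustration, so the absolute counts may differ from the author's networks.

```python
def mlp_param_count(n_in, n_hidden, n_out):
    """Weights plus biases of a fully connected input-hidden-output network."""
    hidden_params = (n_in + 1) * n_hidden      # +1 for each hidden unit's bias
    output_params = (n_hidden + 1) * n_out     # +1 for each output unit's bias
    return hidden_params + output_params


N_IN = 6    # Acrobot observation size (Gym documentation)
N_OUT = 1   # assumed single output unit (hypothetical)

for m in (4, 8, 16, 32):
    print(m, mlp_param_count(N_IN, m, N_OUT))
```

Under these assumptions the count is 8M + 1 for M hidden units (33, 65, 129, and 257), so each doubling of the hidden layer roughly doubles the length of the vector that DE has to optimize.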

Acknowledgments

1. https://www.gymlibrary.dev/environments/classic_control/acrobot/

2. http://youtu.be/MsNRDARXWQw

3. http://youtu.be/sxmUI8C1IBY

References

1. Bäck, T., & Schwefel, H. P. (1993). An overview of evolutionary algorithms for parameter optimization. Evolutionary computation, 1(1), 1-23.

2. Fogel, D. B. (1994). An introduction to simulated evolutionary optimization. IEEE transactions on neural networks, 5(1), 3-14.

3. Bäck, T. (1996). Evolutionary algorithms in theory and practice: evolution strategies, evolutionary programming, genetic algorithms. Oxford university press.

4. Eiben, Á. E., Hinterding, R., & Michalewicz, Z. (1999). Parameter control in evolutionary algorithms. IEEE transactions on evolutionary computation, 3(2), 124-141.

5. Eiben, Á. E., & Smith, J. E. (2015). Introduction to evolutionary computing. Springer-Verlag Berlin Heidelberg.

6. Schwefel, H. P. (1984). Evolution strategies: A family of non-linear optimization techniques based on imitating some principles of organic evolution. Annals of operations research, 1(2), 165-167.

7. Beyer, H. G., & Schwefel, H. P. (2002). Evolution strategies – a comprehensive introduction. Natural computing, 1, 3-52.

8. Goldberg, D. E., & Holland, J. H. (1988). Genetic algorithms and machine learning. Machine learning, 3, 95-99.

9. Holland, J. H. (1992). Genetic algorithms. Scientific American, 267(1), 66-73.

10. Mitchell, M. (1998). An introduction to genetic algorithms. MIT press.

11. Sastry, K., Goldberg, D., & Kendall, G. (2005). Genetic algorithms. Search methodologies: introductory tutorials in optimization and decision support techniques, 97-125.

12. Storn, R., & Price, K. (1997). Differential evolution – a simple and efficient heuristic for global optimization over continuous spaces. Journal of global optimization, 11, 341-359.

13. Price, K., Storn, R. M., & Lampinen, J. A. (2006). Differential evolution: a practical approach to global optimization. Springer Science & Business Media.

14. Das, S., & Suganthan, P. N. (2010). Differential evolution: a survey of the state-of-the-art. IEEE transactions on evolutionary computation, 15(1), 4-31.

15. Okada, H. (2023). Evolutionary reinforcement learning of neural network controller for Acrobot task – Part2: genetic algorithm. Preprints.org.

16. Okada, H. (2023). Evolutionary reinforcement learning of neural network controller for Acrobot task – Part1: evolution strategy. Preprints.org.

17. Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning internal representations by error propagation. Parallel distributed processing: explorations in the microstructure of cognition, vol. 1: foundations. MIT Press, 318-362.

18. Collobert, R., & Bengio, S. (2004). Links between perceptrons, MLPs and SVMs. In Proceedings of the twenty-first international conference on machine learning (ICML 04).

19. Okada, H. (2023). A comparative study of DE, GA and ES for evolutionary reinforcement learning of neural networks in pendulum task. 25th International conference on artificial intelligence (ICAI ’23), held jointly in 2023 world congress in computer science, computer engineering, & applied computing (CSCE ’23).

| Hyperparameters | (a) | (b) |
|---|---|---|
| Number of offspring | 10 | 50 |
| Generations | 500 | 100 |
| Fitness evaluations | 10 × 500 = 5,000 | 50 × 100 = 5,000 |
| Scaling factor (F) | 0.5 | 0.1 |
| Crossover rate (CR) | 0.9 | 0.9 |
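The two configurations in the table can be written as a small data structure that also checks the shared evaluation budget. This is a sketch with hypothetical names (`DEConfig`, `evaluations`). It assumes the budget is the number of offspring per generation times the number of generations, as the arithmetic in the table indicates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DEConfig:
    offspring: int     # offspring per generation
    generations: int   # number of generations
    F: float           # scaling factor
    CR: float          # crossover rate

    @property
    def evaluations(self) -> int:
        """Total fitness evaluations, assuming one evaluation per offspring."""
        return self.offspring * self.generations


# Values copied from the hyperparameter table.
CONFIG_A = DEConfig(offspring=10, generations=500, F=0.5, CR=0.9)
CONFIG_B = DEConfig(offspring=50, generations=100, F=0.1, CR=0.9)

for name, cfg in (("a", CONFIG_A), ("b", CONFIG_B)):
    print(name, cfg.evaluations)
```

Keeping the budget as a derived property rather than a stored field means a configuration cannot silently drift away from the 5,000-evaluation limit when one of its factors is edited.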

| Configuration | M | Best | Worst | Average | Median |
|---|---|---|---|---|---|
| (a) | 4 | 0.450 | 0.419 | 0.439 | 0.441 |
| (a) | 8 | 0.467 | 0.422 | 0.448 | 0.450 |
| (a) | 16 | 0.461 | 0.428 | 0.446 | 0.448 |
| (a) | 32 | 0.464 | 0.426 | 0.445 | 0.446 |
| (b) | 4 | 0.464 | 0.433 | 0.445 | 0.443 |
| (b) | 8 | 0.471 | 0.423 | 0.443 | 0.442 |
| (b) | 16 | 0.460 | 0.427 | 0.445 | 0.444 |
| (b) | 32 | 0.450 | 0.423 | 0.438 | 0.440 |
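As a quick check of the summary statistics above, the sketch below stores the table as a dictionary and picks, for each configuration, the value of M with the highest score in a chosen column. The numbers are copied from the table. The names `RESULTS` and `best_m_by` are illustrative.

```python
# (Best, Worst, Average, Median) for each configuration and each M, from the table.
RESULTS = {
    "a": {
        4: (0.450, 0.419, 0.439, 0.441),
        8: (0.467, 0.422, 0.448, 0.450),
        16: (0.461, 0.428, 0.446, 0.448),
        32: (0.464, 0.426, 0.445, 0.446),
    },
    "b": {
        4: (0.464, 0.433, 0.445, 0.443),
        8: (0.471, 0.423, 0.443, 0.442),
        16: (0.460, 0.427, 0.445, 0.444),
        32: (0.450, 0.423, 0.438, 0.440),
    },
}
BEST, WORST, AVERAGE, MEDIAN = range(4)


def best_m_by(stat_index, rows):
    """Return the M whose chosen statistic is highest (first one wins on ties)."""
    return max(rows, key=lambda m: rows[m][stat_index])


for config, rows in RESULTS.items():
    print(config, "median:", best_m_by(MEDIAN, rows), "best:", best_m_by(BEST, rows))
```

By median, M = 8 leads in configuration (a) and M = 16 in configuration (b), with gaps of only a few thousandths. By best score, M = 8 leads in both. These rankings are descriptive only and say nothing about statistical significance.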

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).