1. Introduction

Mango (Mangifera indica L.) is the most traded tropical fruit in the world [1]. It is produced in more than 90 countries and ranks fifth worldwide, after bananas, apples, grapes and citrus fruits, and its international trade has grown steadily over the last decade [2,3,4]. Consumers worldwide value it for its attractive color, unique flavor and juicy pulp. It is also rich in vitamins and fiber and contains several functional compounds that may strengthen the body's defenses, counter cellular oxidation and promote physical health [5,6,7,8].

In Latin America, mango stands out as one of the main export fruits, with Mexico, Brazil and Peru as the largest producers, supplying high-quality fruit to meet world demand [9]. However, mangoes are sensitive to post-harvest handling, and their shelf life is greatly reduced by two groups of factors. The first are physical factors such as impacts, compression and sunburn, which do not immediately destroy the integrity of the fruit but start a progressive deterioration of its organoleptic properties [10,11]. The second are ripening factors: as a climacteric fruit, mango reaches its respiration peak a few days after harvest, rapidly triggering biochemical reactions that cause changes in acidity, weight loss, rotting, pulp softening and cell wall degradation, among others [12,6]. These processes directly affect fruit quality during storage and transportation before it reaches the target market. Color tone plays a crucial role in selecting fruit for commercialization, a task that requires special care because manual selection is affected by the subjectivity and fatigue of workers [13,14]. The sales value of mango varies with its maturity stage. At harvest maturity, the flesh may be slightly yellow, a color unrelated to the green-yellow or reddish-green skin; at consumption maturity, the flesh turns yellow-orange and the skin yellow-reddish. These changes in internal (flesh) and external (skin) color, as well as in flavor, are highly variable and depend on the mango variety [15,16,17].

Figure 1 shows workers during the mango harvesting season in Peru.

Because extending the shelf life of fresh mangoes through the cold chain is costly, precise methods are needed to identify fruit at the right maturity and to select mangoes of suitable quality for trade as a market articulation strategy. Color is an important quality attribute, since it can be used to estimate the maturity of fresh fruit before marketing [18,19]. Non-invasive technologies such as machine vision combined with deep learning can therefore help improve quality control, productivity and flexibility of business management in agriculture and the food industry [20,21,22,23]. Machine vision approaches have been studied to detect various post-harvest problems when sorting fruit, including techniques that identify patterns by size using the circular Hough transform [24,25], by color using different thresholds [14,26], and by textural characteristics of the fruit surface [27,28]. Classification approaches commonly rely on structure matching, support vector machines [29] and, more recently, convolutional neural networks (CNNs) [30,18,31,32], the last of which have shown the most promising results.

A CNN is composed of successive layers whose numerical parameters (weights) are adjusted during training so that they respond to characteristic features of the input [33,34,35]. When learning is supervised, the network's predictions are compared with labeled examples and the weights are corrected iteratively, by trial and error, to achieve higher accuracy [36,37]. In image acquisition, recognition and interpretation, CNNs have performed efficiently, even outperforming other machine learning techniques, in extracting and combining the features that define maturity categories in fruits and vegetables [38,39,40,41].
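To make concrete what the layer parameters are, the following minimal sketch (pure Python, no deep learning library) slides a 3 × 3 kernel over a small grayscale patch. The kernel values, the patch and the function name `conv2d_valid` are hypothetical illustrations, not the authors' trained filters; in a CNN these weights are learned rather than set by hand. Like most deep learning libraries, the sketch computes a cross-correlation (the kernel is not flipped), which is what CNN layers call convolution.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the map of
    weighted sums; in a CNN, the kernel weights are what training adjusts."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Hypothetical 4 x 4 grayscale patch: dark on the left, bright in the last column.
image = [
    [0, 0, 0, 9],
    [0, 0, 0, 9],
    [0, 0, 0, 9],
    [0, 0, 0, 9],
]
# Hypothetical 3 x 3 vertical-edge kernel.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
print(conv2d_valid(image, kernel))  # [[0, 27], [0, 27]]
```

The response is 0 where the window covers a flat region and large where it straddles the dark-to-bright boundary, which is the kind of feature a trained filter learns to detect.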

2. Materials and Methods

2.1. Acquisition architecture

Image acquisition was performed in a semi-controlled environment. Near-neutral natural light (5500 K) was used because it adds the fewest tonal components to the images [42], and direct sunlight on the fruit was avoided because it hinders image processing [43]. The camera had a tone depth of 8 bits per channel, giving 24 bits per pixel across the 3 RGB channels, and captured JPEG images of 1200 × 800 pixels with a spatial resolution of approximately 0.02 mm/pixel. The images were evaluated on a laptop computer (Legion, Intel® Core™ i7-10750H, Lenovo, China).
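The following worked arithmetic shows what the stated tone depth implies. It is a sketch that uses only the figures given above (8 bits per channel, 3 channels, 1200 × 800 pixels); the variable names are illustrative.

```python
# Acquisition settings stated in the text.
BITS_PER_CHANNEL = 8
CHANNELS = 3
WIDTH, HEIGHT = 1200, 800

bits_per_pixel = BITS_PER_CHANNEL * CHANNELS        # 24 bits per pixel
levels_per_channel = 2 ** BITS_PER_CHANNEL          # 256 intensity levels (0-255)
distinct_colors = 2 ** bits_per_pixel               # representable RGB colors
raw_bytes = WIDTH * HEIGHT * bits_per_pixel // 8    # size before JPEG compression

print(bits_per_pixel, levels_per_channel, distinct_colors, raw_bytes)
# 24 256 16777216 2880000
```

Each uncompressed image therefore holds about 2.88 MB of pixel data; the JPEG files are smaller because of compression.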

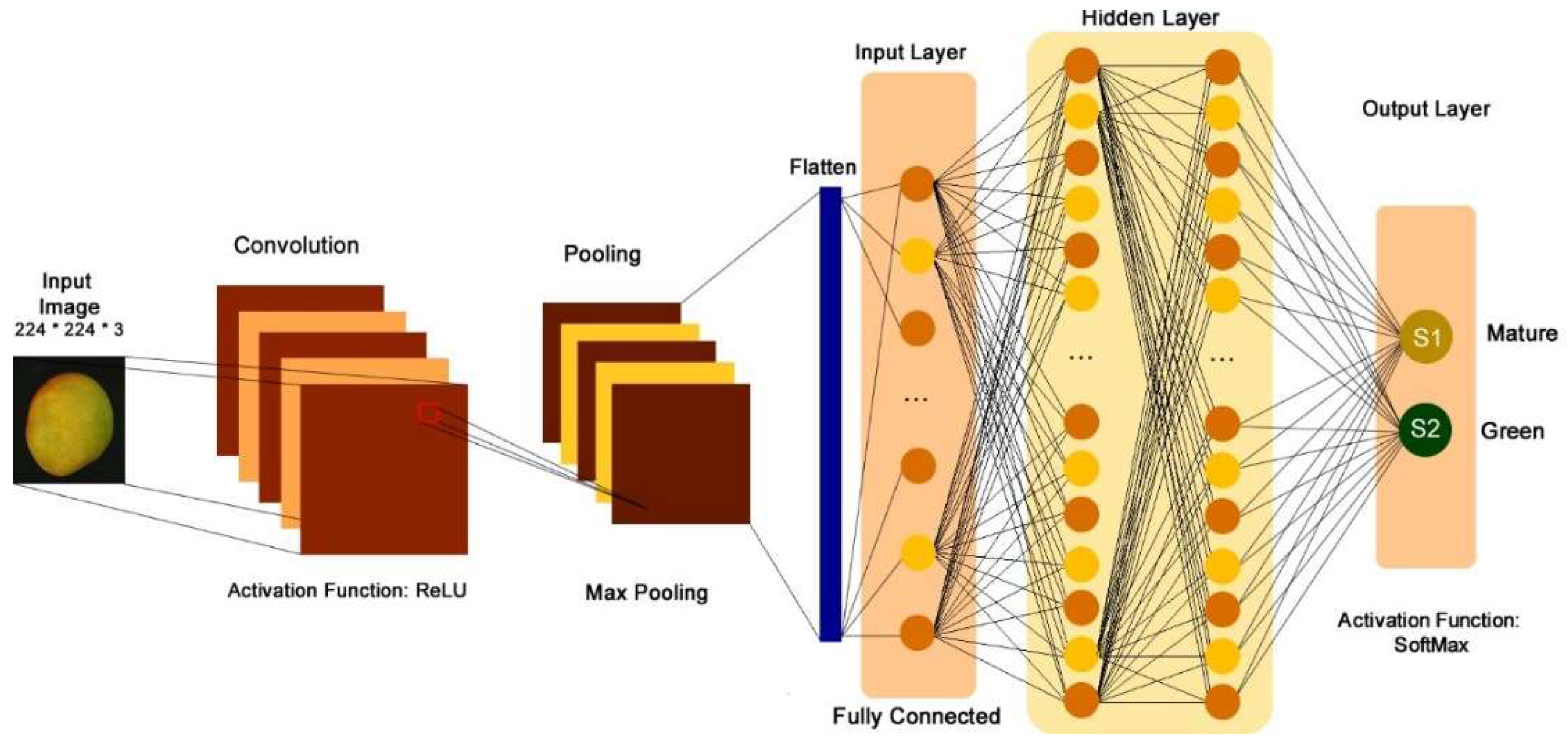

2.2. CNN architecture

The computational model was based on a standard convolutional neural network architecture, which was parameterized and then implemented in the Python programming language using the cloud-based Google Colab environment. Convolutional Layer 1 receives the input image and applies 32 filters with a 3 × 3 pixel mask, using "same" padding (which, at a stride of 1, keeps the output the same size as the input) and shifting the mask 3 pixels at a time (stride 3). It is followed by a reduction layer that receives the filter outputs of the previous layer and downsamples them with a max pooling function, using a 2 × 2 pixel window and a stride of 2 pixels, so that each output value is the maximum of one 2 × 2 window:

$$f(I_{11}, I_{12}, I_{21}, I_{22}) = \max(I_{11}, I_{12}, I_{21}, I_{22})$$
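The pooling function above, and the effect of the stated strides on feature-map size, can be checked with a short pure-Python sketch. `max_pool_2x2` applies the 2 × 2, stride-2 rule window by window. The size helpers assume the Keras/TensorFlow convention, in which "same" padding gives an output side of ceil(n / stride) and unpadded pooling gives floor((n - 2) / 2) + 1; the helper names and the toy feature map are hypothetical. The shape trace uses a 32 × 32 input, the resolution selected later in Section 3.4.

```python
import math

def max_pool_2x2(feature_map):
    """2 x 2 max pooling with stride 2 on a list-of-lists feature map.

    Each output value is max(I11, I12, I21, I22) over one non-overlapping
    2 x 2 window; a trailing odd row or column is dropped ("valid" pooling).
    """
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]

def conv_same_output(size, stride):
    """Output side length of a "same"-padded convolution (Keras/TensorFlow convention)."""
    return math.ceil(size / stride)

def pool_valid_output(size, pool=2, stride=2):
    """Output side length of an unpadded pooling window."""
    return (size - pool) // stride + 1

fm = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [3, 2, 4, 6],
]
print(max_pool_2x2(fm))  # [[4, 5], [3, 7]]

# Shape trace for a 32 x 32 input and 32 filters, at stride 1 and at stride 3.
for stride in (1, 3):
    conv = conv_same_output(32, stride)
    pooled = pool_valid_output(conv)
    print(f"stride {stride}: conv {conv}x{conv}x32 -> pool {pooled}x{pooled}x32")
# stride 1: conv 32x32x32 -> pool 16x16x32
# stride 3: conv 11x11x32 -> pool 5x5x32
```

Under this convention, "same" padding preserves the input size only at stride 1; with a stride of 3, a 32 × 32 input shrinks to 11 × 11 before pooling.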

The flattening layer receives the filter outputs of the last reduction layer and converts the multidimensional data into a 1D vector, which is propagated to the hidden layers. These use the ReLU activation function, which sets negative values to zero and passes positive values unchanged, consistent with image intensities, which are non-negative.
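A minimal sketch of flattening and ReLU, in pure Python with a hypothetical toy feature map. In a real dense layer, ReLU is applied to each neuron's weighted sum of the flattened inputs; here it is applied element-wise to the flattened vector only to show its effect on negative and positive values.

```python
def relu(x: float) -> float:
    """Rectified linear unit: negative inputs become 0, positive inputs pass through."""
    return max(0.0, x)

def flatten(tensor):
    """Flatten a nested height x width x channels list into a 1D list (row-major order)."""
    return [value for row in tensor for pixel in row for value in pixel]

# Hypothetical 2 x 2 x 2 feature map (height x width x channels) with negative activations.
feature_map = [
    [[-1.0, 2.0], [0.5, -3.0]],
    [[4.0, -0.5], [-2.0, 1.5]],
]
vector = flatten(feature_map)
activated = [relu(v) for v in vector]
print(vector)     # [-1.0, 2.0, 0.5, -3.0, 4.0, -0.5, -2.0, 1.5]
print(activated)  # [0.0, 2.0, 0.5, 0.0, 4.0, 0.0, 0.0, 1.5]
```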

The hidden layers are fully connected, and a dropout rate of 0.5 is applied to regularize the network and reduce overfitting, preventing bias toward, or memorization of, the training data [44]. Finally, the output layer consists of 2 neurons, corresponding to the 2 classes to be predicted (mature and green), with a softmax activation function (Figure 2).
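The dropout and softmax steps can be sketched as follows. This is an illustration, not the authors' code: it assumes the common "inverted" dropout formulation (the one Keras uses, which rescales surviving units during training), and the order of the two output neurons ("mature", "green") and the example values are hypothetical.

```python
import math
import random

def softmax(logits):
    """Convert raw output-layer scores into class probabilities that sum to 1."""
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def dropout(values, rate=0.5, training=True, rng=random):
    """Inverted dropout: zero each unit with probability `rate` during training and
    rescale the survivors by 1 / (1 - rate); identity at inference time."""
    if not training:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

hidden = [0.8, 1.2, 0.0, 2.4]
print(dropout(hidden, rate=0.5, rng=random.Random(0)))  # random mask, survivors doubled

# Two output neurons, assumed order: ("mature", "green").
probs = softmax([2.0, 0.5])
print([round(p, 3) for p in probs])  # [0.818, 0.182]
```

Rescaling during training keeps the expected activation unchanged, so no adjustment is needed when dropout is switched off for prediction.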

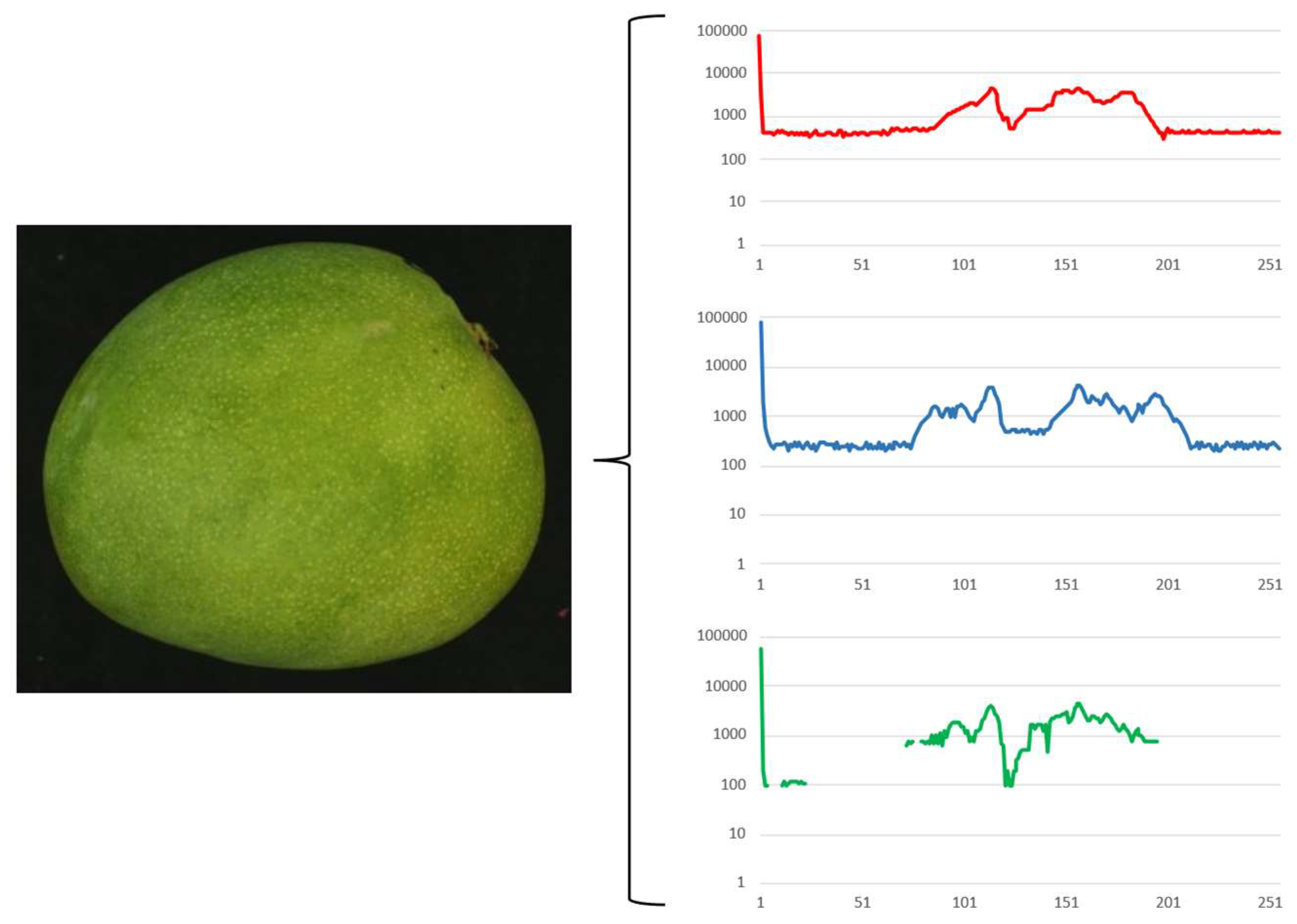

2.3. Analysis and validation of datasets

The dataset consists of 201 images, of which 50 % are used for training and 50 % for validation, as described in Table 1.
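Because 201 is odd, an exact 50/50 split is not possible; Table 1 reports the counts used. The sketch below shows one way to make a reproducible random split, assuming a plain (non-stratified) shuffle with a fixed seed and hypothetical file names; the source does not state how images were assigned to each subset.

```python
import random

def split_dataset(items, train_fraction=0.5, seed=42):
    """Shuffle a list of samples and split it into training and validation subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_fraction)  # rounds down: 201 * 0.5 -> 100
    return shuffled[:n_train], shuffled[n_train:]

# Hypothetical file names standing in for the 201 labeled mango images.
images = [f"mango_{i:03d}.jpg" for i in range(201)]
train, val = split_dataset(images)
print(len(train), len(val))  # 100 101
```

With only two classes, a stratified split (shuffling "mature" and "green" images separately) would additionally keep the class proportions equal in both subsets.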

The images of the training and test datasets are normalized to values between 0 and 1. To improve training and model results, data augmentation is applied, which enlarges the dataset by introducing transformations of the original images: rotation, horizontal and vertical shifts, zoom and horizontal flipping. To analyze the uniformity of image acquisition, the environment was evaluated using histograms, which describe the distribution of RGB intensities (Figure 3).
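The preprocessing steps above can be sketched on a tiny hypothetical image in pure Python: scaling 8-bit values to [0, 1] by dividing by 255, one of the listed augmentations (horizontal flip), and per-channel RGB histograms of the kind used to check acquisition uniformity. The function names are illustrative; rotation, shift and zoom are omitted because they require interpolation and would normally be delegated to an image library.

```python
def normalize(pixels):
    """Scale 8-bit RGB values (0-255) to the [0, 1] range."""
    return [[tuple(c / 255.0 for c in px) for px in row] for row in pixels]

def horizontal_flip(pixels):
    """Mirror an image left to right."""
    return [list(reversed(row)) for row in pixels]

def channel_histograms(pixels, bins=256):
    """Count how many pixels take each 8-bit intensity, separately for R, G and B."""
    hist = {ch: [0] * bins for ch in "RGB"}
    for row in pixels:
        for r, g, b in row:
            hist["R"][r] += 1
            hist["G"][g] += 1
            hist["B"][b] += 1
    return hist

# Hypothetical 2 x 2 RGB image (rows of (R, G, B) tuples).
img = [
    [(255, 200, 0), (128, 128, 128)],
    [(0, 64, 255), (255, 200, 0)],
]
print(normalize(img)[0][0])
print(horizontal_flip(img)[0])            # [(128, 128, 128), (255, 200, 0)]
print(channel_histograms(img)["R"][255])  # 2
```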

At the end of the acquisition stage, a dataset of 201 images was obtained, each labeled according to physiological maturity as "mature" or "green" based on export regulations [45].

3. Results and Discussion

In the present work, a computational model based on a standard CNN architecture was designed and trained on a dataset of 201 images to classify mangoes. To find the best classification performance, several configurations were evaluated, a process referred to here as parameterization. Five models were parameterized with different combinations of layers (convolution and reduction), each trained for 15 epochs with a mini-batch size of 15 images, and accuracy was calculated on the training and test datasets.
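The training settings imply the following number of weight updates. This worked arithmetic assumes about 100 training images (half of the 201-image dataset) and that each epoch passes once over the original images; with on-the-fly data augmentation, the number of steps per epoch depends on how the data generator is configured.

```python
import math

EPOCHS = 15
BATCH_SIZE = 15
TRAIN_IMAGES = 100  # assumption: roughly half of the 201-image dataset

steps_per_epoch = math.ceil(TRAIN_IMAGES / BATCH_SIZE)      # the last batch is partial
last_batch = TRAIN_IMAGES - (steps_per_epoch - 1) * BATCH_SIZE
total_updates = steps_per_epoch * EPOCHS

print(steps_per_epoch, last_batch, total_updates)  # 7 10 105
```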

3.1. Number of Convolution and Reduction Layers

Following [46], which suggests that a convolution layer should always be paired with a reduction layer, the number of convolution and reduction layers was varied as shown in Table 2.

Figure 4 shows the 4 layer configurations evaluated, each combining convolution and reduction. The best performance was obtained with type 1, consisting of 1 convolution and reduction block, with an accuracy of 94.94 %.

[47] studied an 8-layer CNN for fruit classification and obtained an accuracy of 95.67 %; in comparison, the CNN in the present research achieved 94.94 % with only 1 convolution layer and 1 reduction layer, demonstrating the potential of CNNs for objective and fast automatic classification, although there is still room for improvement. In another case, [48] evaluated mango price forecasting using a combined computational model based on back-propagation learning and a long short-term memory (LSTM) network, reporting a mean square error, mean absolute percentage error and coefficient of determination R² of 0.02, 0.14 % and 0.99, respectively, which demonstrates the functionality of this technique and the flexibility of such designs.
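For reference, the three metrics reported by [48] have standard definitions, sketched below in pure Python; [48] may use slight variants (for example, MAPE expressed as a fraction rather than a percentage). The price series is hypothetical and only exercises the formulas.

```python
def mse(y_true, y_pred):
    """Mean square error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (undefined if any true value is 0)."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)

def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Hypothetical prices and forecasts.
actual = [10.0, 12.0, 14.0, 16.0]
forecast = [10.5, 11.5, 14.0, 16.5]
print(mse(actual, forecast), mape(actual, forecast), r2(actual, forecast))
```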

3.2. Spatial resolution of the input images

The dataset consisted of images acquired with a spatial resolution of 1280 × 800 pixels in 3 channels (RGB).

As shown in Figure 5, 4 input resolutions were evaluated by rescaling the input images. The best result was obtained at 32 × 32 pixels, followed closely by 64 × 64 pixels and 150 × 150 pixels, with differences of only 1.03 % and 1.18 %, respectively; accuracy decreased as resolution increased. According to [49,50,51], small input dimensions are adequate for a convolutional neural network as long as the original images are also small; for this reason, the comparisons in [52] consider dimensions as small as 28 × 28 pixels for a dataset of 256 × 256 pixel images. Similarly, [46] used a CNN to classify sour lemon images and obtained an accuracy of 99.8 % with 16 × 16 pixel images and 100 % with 32 × 32 and 64 × 64 pixel images. Therefore, 32 × 32 pixels was the best resolution for this research (Figure 5), reaching an accuracy of 96.12 %, a result attributed to the complexity of the fruit.
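Rescaling each photograph to the candidate input sizes can be sketched with nearest-neighbour sampling. The source does not state which interpolation method was used; in practice an image library such as Pillow (`Image.resize`) would typically do this with smoother interpolation. The sketch uses a small hypothetical 120 × 80 image so it runs quickly, and, like a plain resize, it does not preserve the 3:2 aspect ratio.

```python
def resize_nearest(pixels, new_w, new_h):
    """Resize a row-major image (list of rows) with nearest-neighbour sampling."""
    old_h, old_w = len(pixels), len(pixels[0])
    return [
        [pixels[y * old_h // new_h][x * old_w // new_w] for x in range(new_w)]
        for y in range(new_h)
    ]

# Hypothetical 120 x 80 image whose "pixel" records its own (row, column).
src = [[(r, c) for c in range(120)] for r in range(80)]

for side in (32, 64, 150):  # candidate input resolutions from Figure 5
    out = resize_nearest(src, side, side)
    print(side, len(out), len(out[0]))

small = resize_nearest(src, 32, 32)
print(small[1][1])  # (2, 3): source row 1*80//32, column 1*120//32
```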

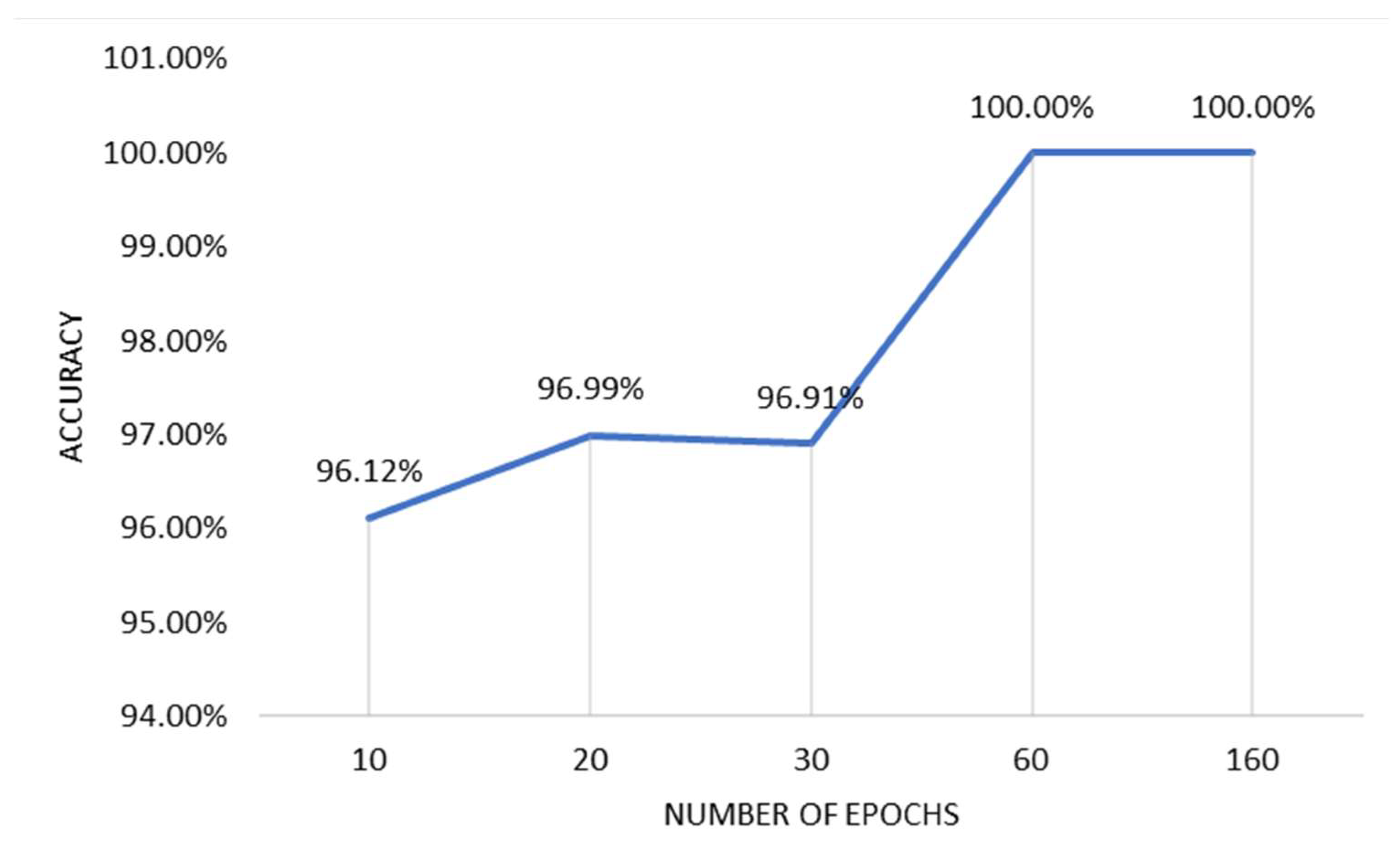

3.3. Number of epochs used in training and validation

Training and validating a CNN requires repeated passes over the data, called epochs.

As shown in Figure 6, epochs were evaluated starting from 10 cycles, which gave an accuracy of 96.12 %. Accuracy improved at 20 and 30 epochs and reached 100 % at 60 and 160 epochs; however, the internal behavior of the CNN at these values suggests overfitting, which is undesirable in any case study, so they were not considered. [46] found a high error rate in epochs 1 to 9 and a minimal error rate (close to 0) in epochs 9 to 40. As Figure 6 shows, the best number of epochs lies in the range of 10 to 60, so an epoch count within that range should be chosen to obtain a minimal error (between 3.88 % and 0 %).
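The epoch range above was chosen by inspecting Figure 6. A common automated alternative, not described in the source, is early stopping: keep the epoch with the lowest validation loss and stop once it has not improved for a set number of epochs (Keras provides this as the `EarlyStopping` callback with a `patience` argument). The sketch below implements the idea in pure Python on a hypothetical loss curve, and also reproduces the error rate at 10 epochs as 100 % minus the accuracy.

```python
def best_epoch(val_losses, patience=3):
    """Return (epoch, loss) for the lowest validation loss (1-based epochs), stopping
    once the loss has failed to improve for `patience` consecutive epochs."""
    best_loss = float("inf")
    best = 0
    waited = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best, best_loss

# Hypothetical validation-loss curve: improves, then rises as the model overfits.
losses = [0.69, 0.52, 0.41, 0.35, 0.33, 0.34, 0.36, 0.40, 0.45]
print(best_epoch(losses))  # (5, 0.33)

accuracy = 96.12  # accuracy at 10 epochs, from the text
print(round(100 - accuracy, 2))  # 3.88
```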

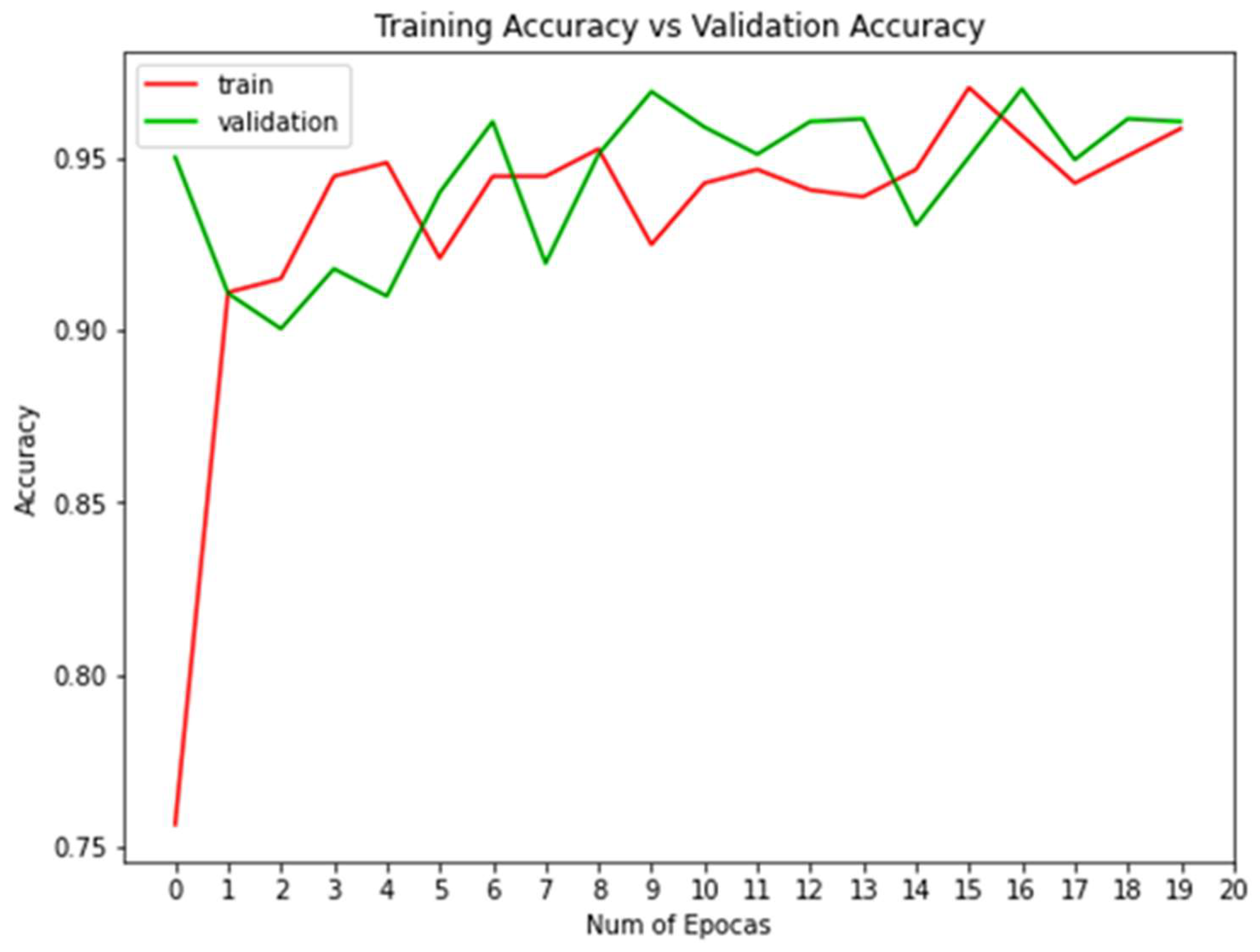

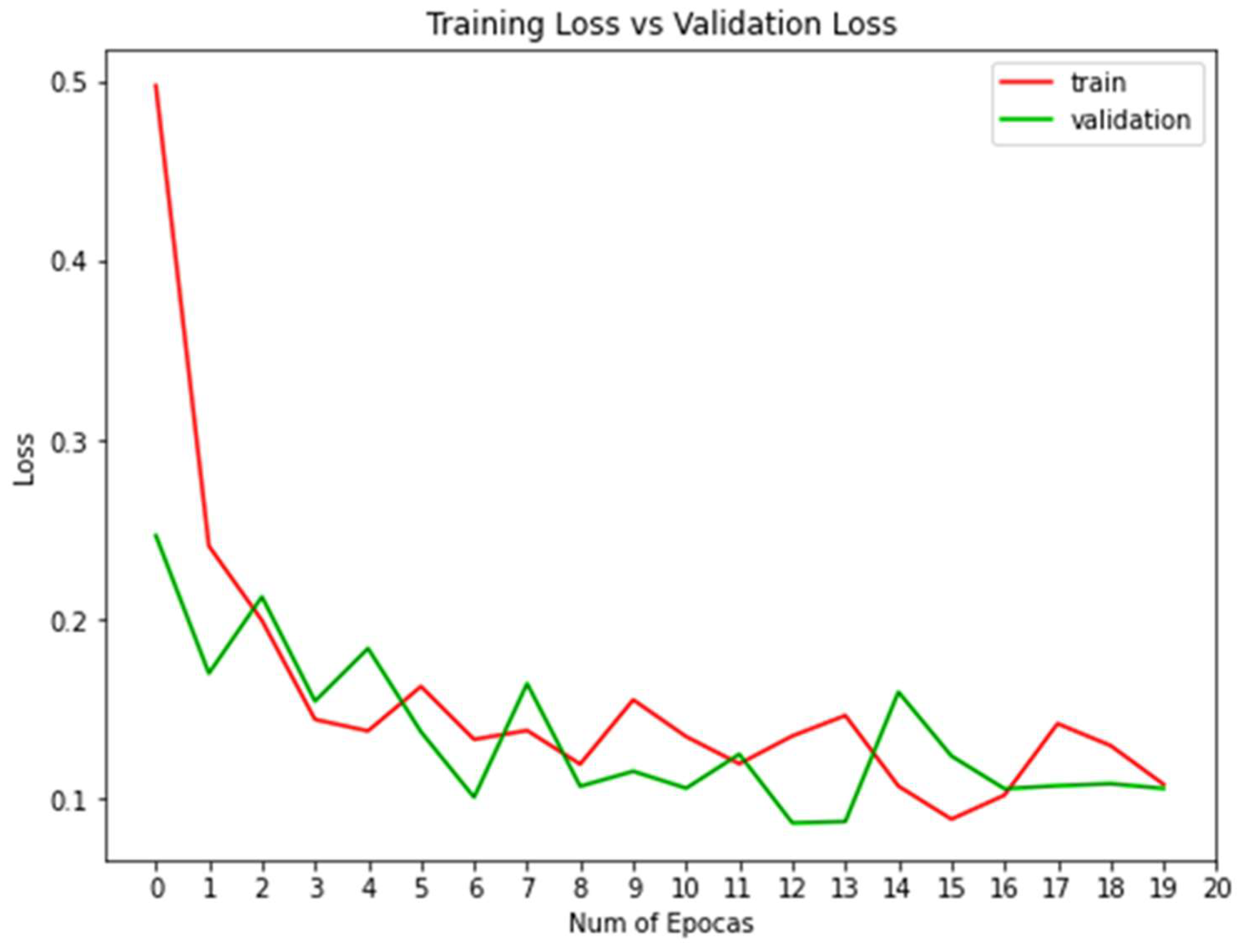

3.4. Parameterization of the CNN model

The previous evaluations provided the information needed to design a parameterized CNN that improves accuracy in the mango selection process. The proposed parameters are: 1 convolution and reduction layer, an input spatial resolution of 32 × 32 pixels and 20 epochs. The results of the designed CNN are shown in Figures 7 and 8.
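The size of the selected network can be estimated with the usual parameter-count formulas, sketched below. The convolution count follows from the stated settings (3 × 3 mask, RGB input, 32 filters); the hidden-layer width of 128 units is hypothetical because the source does not report it. The flattened length depends on the stride: for a 32 × 32 input, 2 × 2 pooling leaves a 16 × 16 × 32 map at stride 1, or 5 × 5 × 32 at stride 3 under Keras-style "same" padding.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Trainable weights of a 2D convolution: one kernel per filter plus one bias each."""
    return (kernel_h * kernel_w * in_channels + 1) * filters

def dense_params(inputs, units):
    """Trainable weights of a fully connected layer: weight matrix plus biases."""
    return (inputs + 1) * units

HIDDEN_UNITS = 128  # hypothetical: the hidden-layer width is not reported

conv = conv2d_params(3, 3, 3, 32)        # 3 x 3 mask, RGB input, 32 filters
output = dense_params(HIDDEN_UNITS, 2)   # two-neuron softmax output layer
print(conv, output)  # 896 258

for side in (16, 5):  # pooled side length at stride 1 and at stride 3
    flat = side * side * 32
    print(side, flat, dense_params(flat, HIDDEN_UNITS))
# 16 8192 1048704
# 5 800 102528
```

Under these assumptions, the first fully connected layer holds most of the weights, which is where the 0.5 dropout acts.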

The optimized accuracy of the designed CNN-based computational model is 96.04 %, possibly as a consequence of training by back-propagation, since CNNs have a structure that adapts well to digital image processing thanks to their convolution and reduction layers. In similar recent works [52,54], the models achieved classification accuracy and quality grading results of 96.7 % and 95.11 %, probably because they did not use a single model to evaluate their factors, their datasets were prepared specifically for image processing studies, and the fruits had very distinguishable characteristics such as color and texture. In the present investigation, the images were acquired without prior preparation. This is an important aspect for a CNN, which improves with use because it can learn not only color characteristics but also shape and texture, approximating real-time conditions [27]. It also reduces processing time, since the traditional computer vision pipeline decomposes the task into stages [55] that demand more computational resources [56,57]. The work of [58] classified 25 categories of fruits and reached 100 % accuracy, higher than in the present work; however, its dataset was prepared for image processing studies and its fruits have very distinguishable color, texture and size, whereas in the present research the images were acquired without prior preparation, approaching a more realistic context.

4. Conclusions

A CNN-based computational model was designed and improved by evaluating different hyperparameters and analyzing which values gave the best accuracy for the proposed maturity states. The most adequate configuration was 1 convolution and reduction layer, an input resolution of 32 × 32 pixels and 20 epochs, which provided the best accuracy, 96.04 %. The developed system is therefore an efficient and reliable alternative for mango companies, which can implement it according to their own particular conditions.

Author Contributions

Conceptualization, O.S. and J.S.; methodology, J.S. and J.M.; software, O.S. and J.P.; validation, O.S. and J.P.; formal analysis, J.S. and M.R.; investigation, M.R. and J.M.; resources, O.S.; data curation, O.S.; writing—original draft preparation, J.S. and M.R.; writing—review and editing, O.S.; visualization, J.P.; supervision, O.S.; project administration, J.M.; funding acquisition, O.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are available from the authors upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ebrahimi, F., Rastegar, S. Preservation of mango fruit with guar-based edible coatings enriched with Spirulina platensis and Aloe vera extract during storage at ambient temperature. Scientia Horticulturae 2020, 265, 109258. [CrossRef]

- Evans, E., Ballen, F., Siddiq, M. Mango production, global trade, consumption trends, and postharvest processing and nutrition. Handbook of mango fruit: production, postharvest science, processing technology and nutrition 2017, 1-16. [CrossRef]

- Eshetu, A., Ibrahim, A., Forsido, S., Kuyu, C. Effect of beeswax and chitosan treatments on quality and shelf life of selected mango (Mangifera indica L.) cultivars. Heliyon 2019, 5(1). [CrossRef]

- FAO. 2020. Principales frutas tropicales - Compendio estadístico 2018. Roma. Available online: https://www.fao.org/3/ca5688es/CA5688ES.pdf.

- Masibo, M., He, Q. Major mango polyphenols and their potential significance to human health. Comprehensive Reviews in Food Science and Food Safety 2008, 7(4), 309-319. [CrossRef]

- Lebaka, V., Wee, J., Ye, W., Korivi, M. Nutritional composition and bioactive compounds in three different parts of mango fruit. International Journal of Environmental Research and Public Health 2021, 18(2), 741. [CrossRef]

- Mirza, B., Croley, C., Ahmad, M., Pumarol, J., Das, N., Sethi, G., Bishayee, A. Mango (Mangifera indica L.): a magnificent plant with cancer preventive and anticancer therapeutic potential. Critical Reviews in Food Science and Nutrition 2021, 61(13), 2125-2151. [CrossRef]

- Ramírez, J., Cabrera, C., Prieto, F. A computer vision system for early detection of anthracnose in sugar mango (Mangifera indica) based on UV-A illumination. Information Processing in Agriculture 2023, 10(2), 204-215. [CrossRef]

- Galán, V. Current situation and future prospects of worldwide mango production and market. In Global Conference on Augmenting Production and Utilization Of Mango: Biotic and Abiotic Stresses 2011, 1066, pp. 69-84. [CrossRef]

- Sivakumar, D., Jiang, Y., Yahia, E. Maintaining mango (Mangifera indica L.) fruit quality during the export chain. Food Research International 2011, 44(5), 1254-1263. [CrossRef]

- Datir, S., Regan, S. Advances in physiological, transcriptomic, proteomic, metabolomic, and molecular genetic approaches for enhancing Mango fruit quality. Journal of Agricultural and Food Chemistry 2022, 71(1), 20-34. [CrossRef]

- Maldonado-Celis, M., Yahia, E., Bedoya, R., Landázuri, P., Loango, N., Aguillón, J., Restrepo, B., Guerrero, C. Chemical composition of mango (Mangifera indica L.) fruit: Nutritional and phytochemical compounds. Frontiers in Plant Science 2019, 10, 1073. [CrossRef]

- Shaikh, T., Rasool, T., Lone, F. Towards leveraging the role of machine learning and artificial intelligence in precision agriculture and smart farming. Computers and Electronics in Agriculture 2022, 198, 107119. [CrossRef]

- Bhargava, A., Bansal, A. Fruits and vegetables quality evaluation using computer vision: A review. Journal of King Saud University-Computer and Information Sciences 2021, 33(3), 243-257. [CrossRef]

- Ntsoane, M., Zude-Sasse, M., Mahajan, P., Sivakumar, D. Quality assesment and postharvest technology of mango: A review of its current status and future perspectives. Scientia Horticulturae 2019, 249, 77-85. [CrossRef]

- Wongkaew, M., Kittiwachana, S., Phuangsaijai, N., Tinpovong, B., Tiyayon, C., Pusadee, T., Sringarm, K., Bhat, F., Sommano, S., Cheewangkoon, R. Fruit characteristics, peel nutritional compositions, and their relationships with mango peel pectin quality. Plants 2021, 10(6), 1148. [CrossRef]

- INIA - Instituto Nacional de Innovación Agraria del Perú. 2022. Estudio de tendencias de mercado Mango. Available online: https://repositorio.inia.gob.pe/handle/20.500.12955/2025.

- Ratprakhon, K., Neubauer, W., Riehn, K., Fritsche, J., Rohn, S. Developing an automatic color determination procedure for the quality assessment of mangos (Mangifera indica) using a CCD camera and color standards. Foods 2020, 9(11), 1709. [CrossRef]

- Gupta, A., Pathak, U., Tongbram, T., Medhi, M., Terdwongworakul, A., Magwaza, L., Mditshwa, A., Chen, T., Mishra, P. Emerging approaches to determine maturity of citrus fruit. Critical Reviews in Food Science and Nutrition 2022, 62(19), 5245-5266. [CrossRef]

- Yang, J., Wang, C., Jiang, B., Song, H., Meng, Q. Visual perception enabled industry intelligence: state of the art, challenges and prospects. IEEE Transactions on Industrial Informatics 2020, 17(3), 2204-2219. [CrossRef]

- Vasconez, J., Kantor, G., Cheein, A. Human–robot interaction in agriculture: A survey and current challenges. Biosystems Engineering 2019, 179, 35-48. [CrossRef]

- Worasawate, D., Sakunasinha, P., Chiangga, S. Automatic classification of the ripeness stage of mango fruit using a machine learning approach. AgriEngineering 2022, 4(1), 32-47. [CrossRef]

- Selvakumar, A., Balasundaram, A. Automated Mango Leaf Infection Classification using Weighted and Deep Features with Optimized Recurrent Neural Network Concept. The Imaging Science Journal, 2023, 1-19. [CrossRef]

- Lin, G., Tang, Y., Zou, X., Cheng, J., Xiong, J. Fruit detection in natural environment using partial shape matching and probabilistic Hough transform. Precision Agriculture 2020, 21, 160-177. [CrossRef]

- Bargoti, S., Underwood, J. Image segmentation for fruit detection and yield estimation in apple orchards. Journal of Field Robotics 2017, 34(6), 1039-1060. [CrossRef]

- Wan, P., Toudeshki, A., Tan, H., Ehsani, R. A methodology for fresh tomato maturity detection using computer vision. Computers and Electronics in Agriculture 2018, 146, 43-50. [CrossRef]

- Bhargava, A., Bansal, A. Fruits and vegetables quality evaluation using computer vision: A review. Journal of King Saud University-Computer and Information Sciences 2021, 33(3), 243-257. [CrossRef]

- Sengupta, S., Lee, W. Identification and determination of the number of immature green citrus fruit in a canopy under different ambient light conditions. Biosystems Engineering 2014, 117, 51-61. [CrossRef]

- Zhang, Y., Wu, L. Classification of fruits using computer vision and a multiclass support vector machine. Sensors 2012, 12(9), 12489-12505. [CrossRef]

- Jiang, Y., Li, C. Convolutional neural networks for image-based high-throughput plant phenotyping: a review. Plant Phenomics 2020. [CrossRef]

- Ismail, N., Malik, O. Real-time visual inspection system for grading fruits using computer vision and deep learning techniques. Information Processing in Agriculture 2022, 9(1), 24-37. [CrossRef]

- Xu, W., Zhao, L., Li, J., Shang, S., Ding, X., Wang, T. Detection and classification of tea buds based on deep learning. Computers and Electronics in Agriculture 2022, 192, 106547. [CrossRef]

- Zeiler, M., Fergus, R. Visualizing and understanding convolutional networks. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part I 13 (pp. 818-833). Springer International Publishing. [CrossRef]

- Zhang, Y., Dong, Z., Chen, X., Jia, W., Du, S., Muhammad, K., Wang, S. Image based fruit category classification by 13-layer deep convolutional neural network and data augmentation. Multimedia Tools and Applications 2019, 78, 3613-3632. [CrossRef]

- Naranjo-Torres, J., Mora, M., Hernández-García, R., Barrientos, R., Fredes, C., Valenzuela, A. A review of convolutional neural network applied to fruit image processing. Applied Sciences 2020, 10(10), 3443. [CrossRef]

- LeCun, Y., Bengio, Y., Hinton, G. Deep learning. Nature, 2015, 521(7553), 436-444. [CrossRef]

- Zhang, Y., Kang, B., Hooi, B., Yan, S., Feng, J. Deep long-tailed learning: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2023. [CrossRef]

- Krizhevsky, A., Sutskever, I., Hinton, G. ImageNet classification with deep convolutional neural networks. Communications of the ACM 2017, 60(6), 84-90. [CrossRef]

- Liu, Y., Pu, H., Sun, D. Efficient extraction of deep image features using convolutional neural network (CNN) for applications in detecting and analysing complex food matrices. Trends in Food Science & Technology 2021, 113, 193-204. [CrossRef]

- Gill, H., Khalaf, O., Alotaibi, Y., Alghamdi, S., Alassery, F. Multi-Model CNN-RNN-LSTM Based Fruit Recognition and Classification. Intelligent Automation & Soft Computing 2022, 33(1). [CrossRef]

- Xiao, Z., Wang, J., Han, L., Guo, S., Cui, Q. Application of machine vision system in food detection. Frontiers in Nutrition 2022, 9, 888245. [CrossRef]

- Bures, S., Urrestarazu, M., Kotiranta, S. La luz LED es la invención más revolucionaria en la luminotecnia hortícola. 2018, 46 pp. ISBN 978-84-16909-09-4. Available online: http://publicaciones.poscosecha.com/es/cultivo/395-iluminacion-artificial-en-agricultura.html.

- Payne, A., Walsh, K., Subedi, P., Jarvis, D. Estimation of mango crop yield using image analysis–segmentation method. Computers and Electronics in Agriculture 2013, 91, 57-64. [CrossRef]

- Guo, C., Pleiss, G., Sun, Y., Weinberger, K. On calibration of modern neural networks. In Proceedings of the International Conference on Machine Learning 2017, pp. 1321-1330. PMLR.

- INACAL. 2020. Norma Técnica Peruana 011.010:2020 MANGO. Mango fresco. Requisitos. 2nd edition. Available online: https://acortar.link/MViZwk.

- Jahanbakhshi, A., Momeny, M., Mahmoudi, M., Zhang, Y. Classification of sour lemons based on apparent defects using stochastic pooling mechanism in deep convolutional neural networks. Scientia Horticulturae, 2020, 263, 109133. [CrossRef]

- Wang, S., Chen, Y. Fruit category classification via an eight-layer convolutional neural network with parametric rectified linear unit and dropout technique. Multimedia Tools and Applications, 2020, 79, 15117-15133. [CrossRef]

- Ma, X., Tong, J., Huang, W., Lin, H. Characteristic mango price forecasting using combined deep-learning optimization model. PLoS ONE 2023, 18(4), e0283584. [CrossRef]

- Su, J., Vargas, D., Sakurai, K. One pixel attack for fooling deep neural networks. IEEE Transactions on Evolutionary Computation, 2019, 23(5), 828-841. [CrossRef]

- Dong, C., Loy, C., Tang, X. Accelerating the super-resolution convolutional neural network. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part II 14 (pp. 391-407). Springer International Publishing. [CrossRef]

- Wu, B., Iandola, F., Jin, P., Keutzer, K. Squeezedet: Unified, small, low power fully convolutional neural networks for real-time object detection for autonomous driving. In Proceedings of the IEEE conference on computer vision and pattern recognition workshops, 2017, pp. 129-137. [CrossRef]

- Jiang, P., Chen, Y., Liu, B., He, D., Liang, C. Real-time detection of apple leaf diseases using deep learning approach based on improved convolutional neural networks. IEEE Access, 2019, 7, 59069-59080. [CrossRef]

- Rizwan, H., Hakim, A. Classification and grading of harvested mangoes using convolutional neural network. International Journal of Fruit Science 2022, 22(1), 95-109. [CrossRef]

- Gururaj, N., Vinod, V., Vijayakumar, K. Deep grading of mangoes using Convolutional Neural Network and Computer Vision. Multimedia Tools and Applications, 2019, 1-26. [CrossRef]

- Salazar-Campos, O., Salazar-Campos, J., Menacho, D., Morales, D., Aredo, V. Improvement of the classification of green asparagus using a Computer Vision System. Brazilian Journal of Food Technology, 2019, 22. [CrossRef]

- Zoph, B., Vasudevan, V., Shlens, J., Le, Q. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition 2018, pp. 8697-8710. [CrossRef]

- Bhatt, D., Patel, C., Talsania, H., Patel, J., Vaghela, R., Pandya, S., Modi, K., Ghayvat, H. CNN variants for computer vision: History, architecture, application, challenges and future scope. Electronics, 2021, 10(20), 2470. [CrossRef]

- Sakib, S., Ashrafi, Z., Siddique, M. Implementation of fruits recognition classifier using convolutional neural network algorithm for observation of accuracies for various hidden layers. arXiv preprint arXiv:1904.00783, 2019. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).