1.1. Information Geometry

Information geometry (IG) has been widely applied in research fields such as statistical inference, stochastic control, and neural networks. IG applies the techniques of differential geometry (DG) to statistics: its main idea is to bring the methods of non-Euclidean geometry to bear on stochastic processes and probability theory. In this view, a family of probability distributions is treated as a statistical manifold (SM). IG has accordingly been adopted for the study of SMs, where geometric metrics give a new description of the probability density function, whose parameters play an important role in the SM and can be regarded as its coordinate system.

A manifold is a topological finite dimensional Cartesian space,

, where one has an infinite-dimensional manifold.

could be described merely as topological space

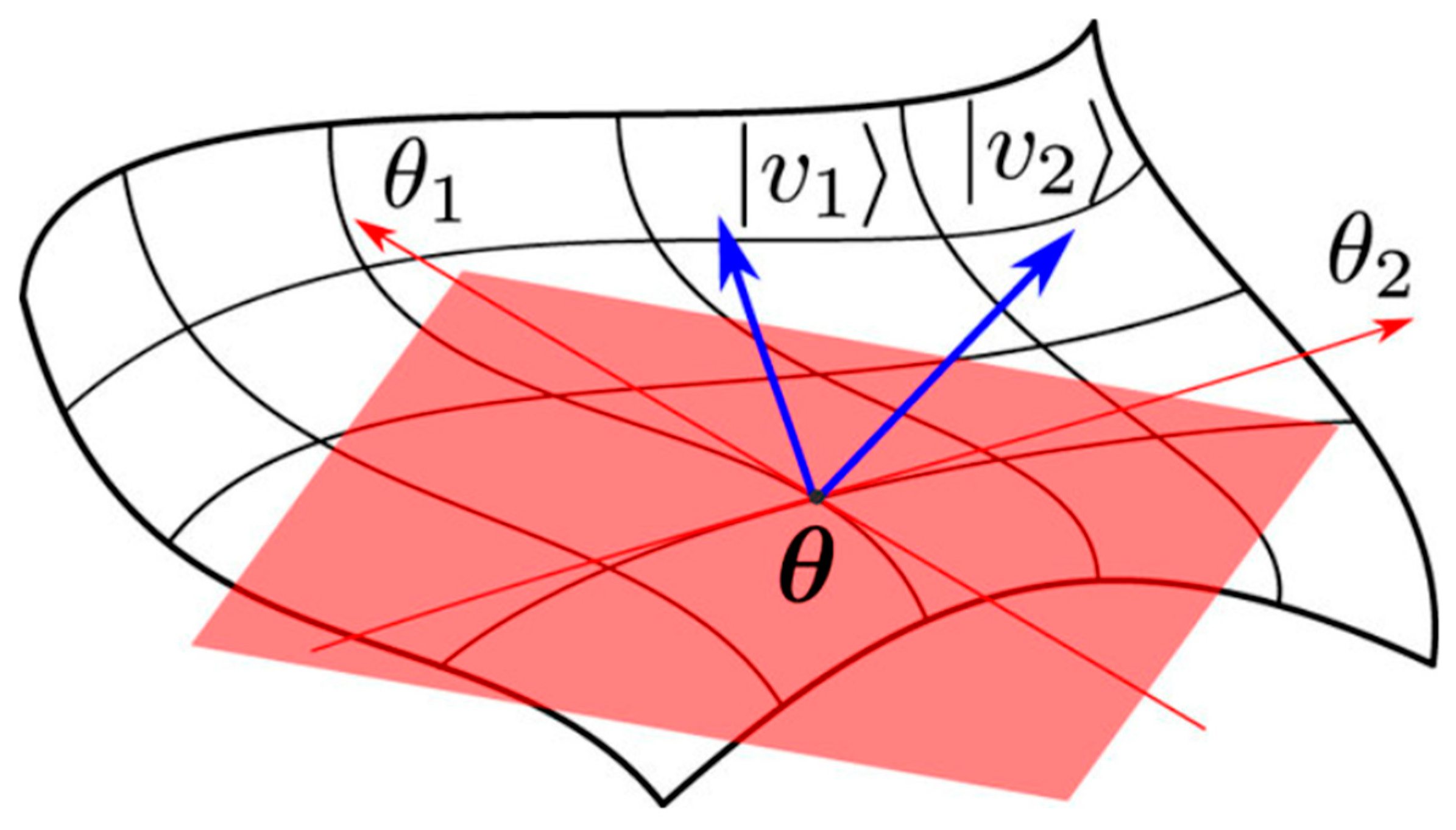

(may be defined as a set of points, along with a set of neighbourhoods for each point, satisfying a set of axioms relating points and neighbourhoods). In addition, IG supports reasoning intuitively the description of SMs. Note that although figures can be visualised (i.e., plotted in coordinate charts), they should be thought of as purely abstract figures, namely, geometric figures. One may have a higher level of appreciation of the significant importance of IG. In

Figure 1, the parameter inference

of a model from data can be interpreted as a decision-making problem: One has to decide which parameter of a family of models

suits “best’’ the data, where

is the set of parameters {

of the probability density function of the distributionof the geometric manifold. IG provides a differential-geometric manifold structure

that is useful for developing decision rules.

In this paper, a study is undertaken of the geometric structure of the Generalized Brownian Motion (GBM) manifold, and its information matrix exponential (IME) is found. The IME is a matrix function on square matrices analogous to the ordinary exponential function. It is used to solve systems of linear differential equations, and the matrix exponential also plays a crucial role in the theory of Lie groups. Furthermore, the Lorenzian dynamics for GBM is devised.

To our knowledge, this paper is the first to devise an info-geometric analysis of GBM, extending classic Brownian Motion Theory (BMT).
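As a minimal sketch of how a matrix exponential solves a linear system $x'(t) = A x(t)$ via $x(t) = e^{tA} x(0)$, the following Python snippet uses a truncated Taylor series. The matrix `A`, the initial state `x0`, and the helper names `mat_mul` and `mat_exp` are illustrative assumptions and are not taken from the paper; the snippet concerns the ordinary matrix exponential only, not the IME of the GBM manifold.

```python
import math

def mat_mul(a, b):
    """Multiply two matrices given as lists of rows."""
    inner, cols = len(b), len(b[0])
    return [[sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for row in a]

def mat_exp(a, terms=30):
    """Truncated Taylor series exp(A) = sum_{k < terms} A^k / k!."""
    n = len(a)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]  # holds A^k / k!, starting at k = 0
    for k in range(1, terms):
        term = [[x / k for x in row] for row in mat_mul(term, a)]
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return result

# Hypothetical example system: x1' = x2, x2' = -x1, with x(0) = (1, 0).
A = [[0.0, 1.0], [-1.0, 0.0]]
x0 = [1.0, 0.0]
t = 1.0

E = mat_exp([[t * x for x in row] for row in A])
x_t = [sum(E[i][j] * x0[j] for j in range(2)) for i in range(2)]

# For this A the exact solution is x(t) = (cos t, -sin t).
print(x_t, [math.cos(t), -math.sin(t)])
```

The Taylor series is adequate here because the example matrix has small norm; for general matrices a scaling-and-squaring routine from a numerical library would be the more robust choice.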

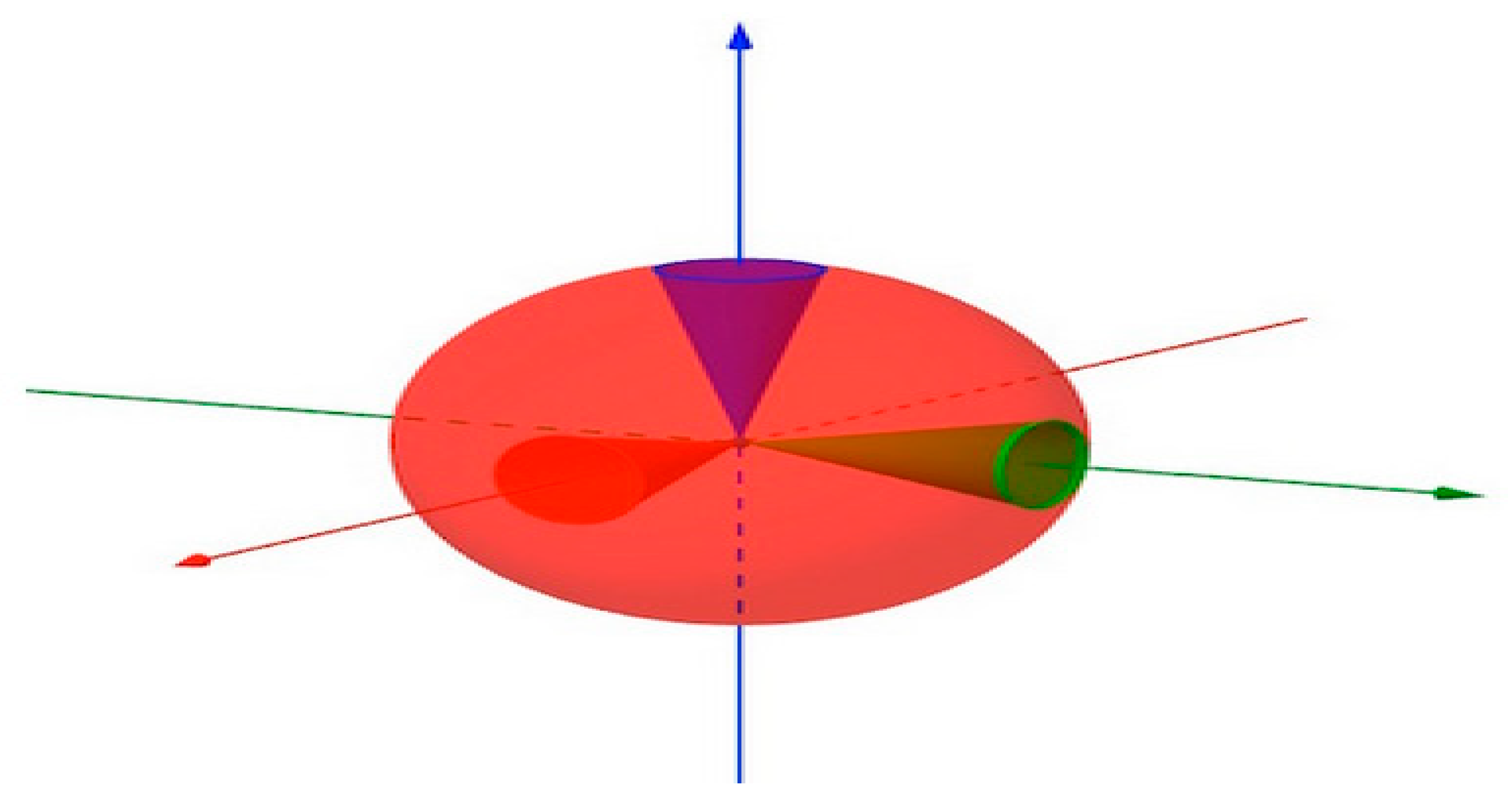

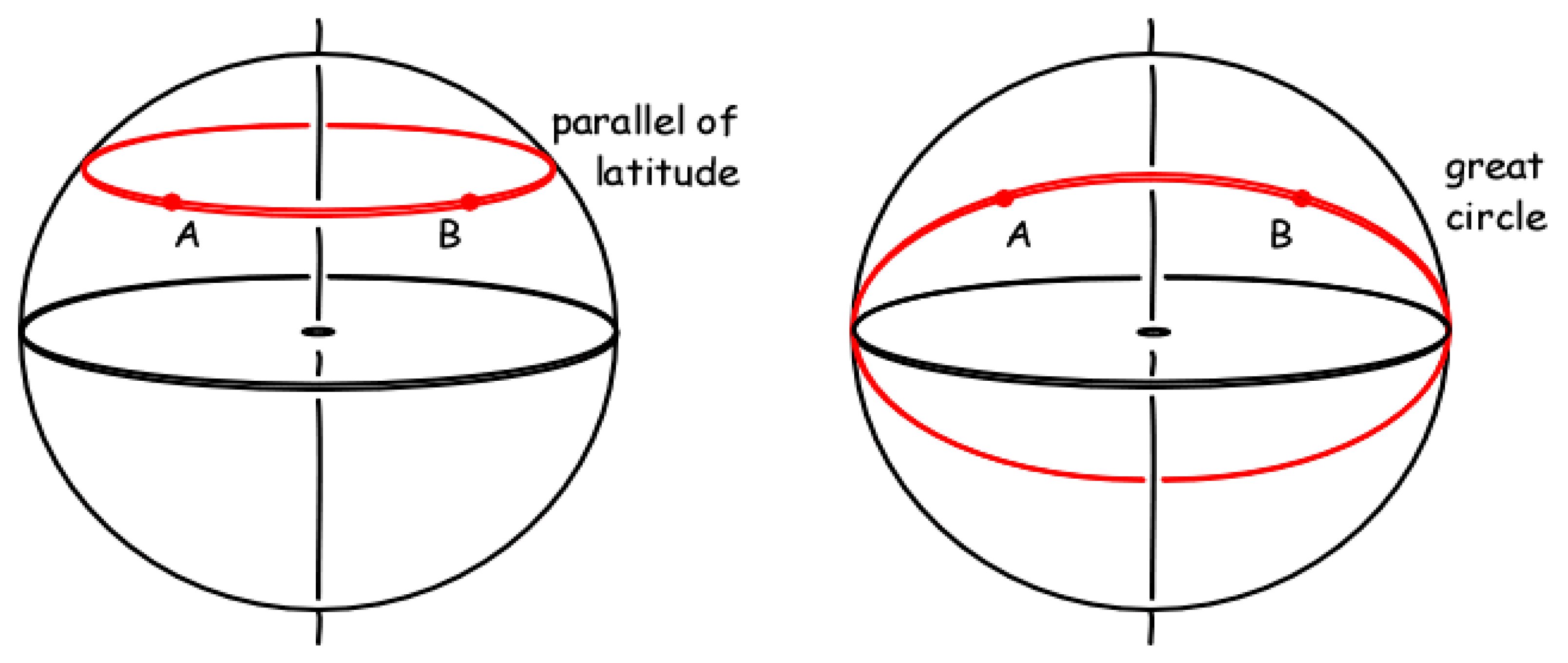

In this context, by analogy to information theory (IT), the geometric approach adopted in this paper enables the study of invariance and equivariance of figures in a coordinate-free manner (n.b., equivariance means that a group acts on a pair of spaces and there is a map from functions on one space to functions on the other that is compatible with the group action). In the context of this paper, Ricci curvature measures the deviation of the Riemannian metric (RM) from the standard Euclidean metric (EM), and scalar curvature measures the deviation of the volume of a geodesic ball from the volume of a Euclidean ball of the same radius (cf. Figure 2).

Geodesics are the analogue of straight lines in Euclidean space and possess many of the same properties: a geodesic is a “straight line on a curved surface” that locally minimizes the distance between two points. In Einstein’s theory of General Relativity (GR), objects travel on geodesics in curved space-time, which extremise the proper time between two points. Hence, the same mathematics describes both the geometry of curved spaces and the geometry of space-time. In IG, the Fisher information metric (FIM) is a particular Riemannian metric (RM) that can be defined on a smooth statistical manifold (i.e., a smooth manifold whose points are probability measures defined on a common probability space). It can be used to calculate the informational difference between measurements. The FIM measures the closeness in shape of two distribution functions; it is also proportional to the amount of information that the distribution function contains about the parameter of the probability density function of the SM.
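To make the FIM concrete, the sketch below approximates it for a univariate Gaussian family with coordinates $(\mu, \sigma)$, using $g_{ij} = E[\partial_i \log f \, \partial_j \log f]$ and a trapezoidal rule. The Gaussian family is chosen only as a familiar illustration (it is not the GBM manifold studied in this paper), and the function names and integration settings are assumptions made for the example. The known closed form for this family is $\mathrm{diag}(1/\sigma^2, 2/\sigma^2)$.

```python
import math

def gaussian_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2)."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def score(x, mu, sigma):
    """Gradient of the log-density with respect to (mu, sigma)."""
    z = (x - mu) / sigma
    return (z / sigma, (z * z - 1.0) / sigma)

def fisher_information(mu, sigma, half_width=12.0, n=20001):
    """Approximate g_ij = E[score_i * score_j] with the trapezoidal rule."""
    lo = mu - half_width * sigma
    hi = mu + half_width * sigma
    h = (hi - lo) / (n - 1)
    g = [[0.0, 0.0], [0.0, 0.0]]
    for k in range(n):
        x = lo + k * h
        weight = h * (0.5 if k in (0, n - 1) else 1.0)
        p = gaussian_pdf(x, mu, sigma)
        s = score(x, mu, sigma)
        for i in range(2):
            for j in range(2):
                g[i][j] += weight * p * s[i] * s[j]
    return g

g = fisher_information(mu=0.0, sigma=2.0)
print(g)  # compare with the closed form diag(1/sigma^2, 2/sigma^2)
```

The off-diagonal entries vanish for this family, which means the $\mu$ and $\sigma$ coordinate directions are orthogonal with respect to the FIM.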

1.2. Generalized Brownian Motion (GBM)

Einstein first gave a rigorous and accurate description of diffusion in simple physical systems. This description can be understood in the Eulerian framework as satisfying the diffusion equation with a constant diffusion coefficient, and in the Lagrangian framework as Brownian motion: a continuous stochastic process with stationary, independent, Gaussian increments whose variance is proportional to the elapsed time. In recent years, several diffusive phenomena that do not fit neatly into Einstein’s framework have been discovered, and these sorts of diffusion have been collectively described as anomalous. Examples of these phenomena include diffusion in cytoplasm and confined nanofilms, the motion of albatrosses and sharks, diffusion of polymers, and dispersion in the geophysical subsurface, among many others. One of the hallmarks of classical diffusion (i.e., diffusion which is described by Brownian motion and the diffusion equation with constant diffusion coefficient) is that the mean square displacement grows linearly in time.
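The linear growth of the mean square displacement can be checked with a short simulation. The sketch below assumes the common one-dimensional convention in which an increment over a time step `dt` is Gaussian with variance `2 * diffusion * dt`, so that the mean square displacement at time $t$ is $2Dt$; this convention, the parameter values, and the function name `simulate_msd` are assumptions for illustration.

```python
import random

def simulate_msd(diffusion=0.5, dt=0.01, n_steps=100, n_paths=4000, seed=0):
    """Estimate the mean square displacement of 1-D Brownian paths at each step."""
    rng = random.Random(seed)
    step_std = (2.0 * diffusion * dt) ** 0.5  # assumed convention: Var = 2 * D * dt
    sum_sq = [0.0] * (n_steps + 1)
    for _ in range(n_paths):
        x = 0.0
        for k in range(1, n_steps + 1):
            x += rng.gauss(0.0, step_std)
            sum_sq[k] += x * x
    return [s / n_paths for s in sum_sq]

msd = simulate_msd()

# With D = 0.5 the expected value is 2 * D * t = t, so the two printed
# estimates should be close to 0.5 and 1.0 respectively.
print(msd[50], msd[100])
```

Doubling the elapsed time roughly doubles the estimate, which is the signature of classical diffusion that anomalous processes often violate.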

Anomalous diffusion processes frequently do not exhibit this behaviour; a power-law mean square displacement often appears instead. We should point out, however, that many diffusive processes with linear mean square displacement are still anomalous. A diverse set of models has been constructed to describe the behaviour of anomalous diffusion phenomena. These models include continuous time random walks, Lévy motion, fractional Brownian motion, and many others. They frequently have power-law mean square displacements or heavy tails. In the Lagrangian framework, they can be understood to differ from Brownian motion by having interdependent, non-stationary, or non-Gaussian increments (or some combination of the three).

The Boltzmann-Gibbs entropy can be derived by assuming that the four Shannon-Khinchin axioms hold.

For a set of discrete states, the Tsallis entropy is:

$$S_q = \frac{1}{q-1}\left(1 - \sum_i p_i^q\right), \tag{1.1}$$

where $p_i$ is the probability of being in the $i$-th state. In the limit $q \to 1$, the Tsallis entropy reduces to the Boltzmann-Gibbs entropy. Assuming that the Tsallis entropy is the appropriate entropy for the system under examination, the value of $q$ that produces an extensive entropy can be determined by examining the volume of phase space (the space of all possible system states) as a function of the system size. For example, in a classical statistical mechanical setting, the system size is determined by the number of particles, and the phase space volume is given by the set of all possible position and momentum coordinates of the particles, with the positions confined to the box that contains the particles.
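The limit $q \to 1$ can be checked numerically. The sketch below uses the standard discrete form $S_q = (1 - \sum_i p_i^q)/(q-1)$ and compares it with the Boltzmann-Gibbs (Shannon) form $-\sum_i p_i \ln p_i$; the probability vector and the function name `tsallis_entropy` are illustrative assumptions.

```python
import math

def tsallis_entropy(probs, q):
    """Discrete Tsallis entropy; falls back to Boltzmann-Gibbs at q = 1."""
    if abs(q - 1.0) < 1e-12:
        return -sum(p * math.log(p) for p in probs if p > 0.0)
    return (1.0 - sum(p ** q for p in probs if p > 0.0)) / (q - 1.0)

probs = [0.5, 0.25, 0.125, 0.125]  # hypothetical distribution over four states

boltzmann_gibbs = tsallis_entropy(probs, 1.0)
near_limit = tsallis_entropy(probs, 1.0 + 1e-6)
no_randomness = tsallis_entropy([1.0, 0.0, 0.0], 1.5)

print(boltzmann_gibbs, near_limit, no_randomness)
```

The last value illustrates why the constant 1 appears in the formula: a system with no randomness (one state with probability 1) has zero entropy for any $q$.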

For a continuous random variable $X$, the Tsallis entropy of $X$ is:

$$S_q(X) = \frac{1}{q-1}\left(1 - \int [f(x)]^q \, dx\right), \tag{1.2}$$

where $f(x)$ is the probability density function of $X$. (In a dimensional system, an issue of dimensional consistency arises, since the 1 and the integral in equation (1.2) have different units. However, this issue is not essential, because the 1 is a carry-over from the discrete entropy, chosen so that a system without any randomness (one of the $p_i = 1$) has zero entropy. Shifting $S_q$ by a constant has no impact on the employed maximum entropy.)

Random variables following a $q$-Gaussian distribution are maximum Tsallis entropy distributions subject to holding various statistics constant (e.g., the second moment or the second $q$-moment). Note, however, that for fixed second moment, a $q$-Gaussian random variable maximizes $S_{2-q}$ rather than $S_q$. The maximum entropy properties make the $q$-Gaussian distribution in the context of the Tsallis entropy the analogue of the Gaussian distribution in the context of the Boltzmann-Gibbs entropy. The probability density function for a $q$-Gaussian is given by

$$f(x) = \frac{\sqrt{\beta}}{C_q}\, e_q(-\beta x^2), \tag{1.3}$$

where

$$e_q(x) = \left[1 + (1-q)x\right]_+^{1/(1-q)} \tag{1.4}$$

is called the $q$-exponential, $C_q$ is a normalization constant, and $\beta$ is a scale parameter. In the range of extensive values of the information theoretic parameter $q$, $1 < q < 3$, the $q$-Gaussian distribution is a rescaled version of the Student’s $t$-distribution with $\nu = (3-q)/(q-1)$ degrees of freedom. The scaling is such that the distributions are the same if $\beta = 1/(3-q)$. Notably, the extensive values assigned to the information theoretic parameter $q$ justify the physical interpretation of Brownian motion.

Focusing on this range, we will utilize a representation of the Student’s $t$-distribution for a key part of the analysis below.
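The correspondence between the $q$-Gaussian and the Student’s $t$-distribution can be verified pointwise. The sketch below assumes the standard forms $f(x) = (\sqrt{\beta}/C_q)\, e_q(-\beta x^2)$ and $e_q(x) = [1 + (1-q)x]_+^{1/(1-q)}$, together with the standard closed-form normalization $C_q = \sqrt{\pi}\,\Gamma\big(\tfrac{3-q}{2(q-1)}\big) / \big(\sqrt{q-1}\,\Gamma\big(\tfrac{1}{q-1}\big)\big)$ for $1 < q < 3$. The value $q = 1.5$ and all function names are choices made for the example.

```python
import math

def q_exponential(x, q):
    """q-exponential e_q(x) = [1 + (1 - q) x]_+^(1 / (1 - q))."""
    if abs(q - 1.0) < 1e-12:
        return math.exp(x)
    base = 1.0 + (1.0 - q) * x
    return base ** (1.0 / (1.0 - q)) if base > 0.0 else 0.0

def q_gaussian_pdf(x, q, beta):
    """q-Gaussian density for 1 < q < 3 (closed-form normalization assumed)."""
    log_c = (0.5 * math.log(math.pi)
             + math.lgamma((3.0 - q) / (2.0 * (q - 1.0)))
             - 0.5 * math.log(q - 1.0)
             - math.lgamma(1.0 / (q - 1.0)))
    return math.sqrt(beta) * math.exp(-log_c) * q_exponential(-beta * x * x, q)

def student_t_pdf(x, nu):
    """Student's t density with nu degrees of freedom."""
    log_norm = (math.lgamma((nu + 1.0) / 2.0) - math.lgamma(nu / 2.0)
                - 0.5 * math.log(nu * math.pi))
    return math.exp(log_norm - (nu + 1.0) / 2.0 * math.log1p(x * x / nu))

q = 1.5
nu = (3.0 - q) / (q - 1.0)   # degrees of freedom, here 3
beta = 1.0 / (3.0 - q)       # scale at which the two densities coincide

max_gap = max(abs(q_gaussian_pdf(x, q, beta) - student_t_pdf(x, nu))
              for x in [-4.0, -1.0, 0.0, 0.5, 2.0, 10.0])
print(nu, beta, max_gap)
```

For other values of $\beta$ the $q$-Gaussian is the same $t$-density with its argument rescaled, which is the sense in which the two families agree up to scale.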

1.3. Random Diffusivity

Consider the stochastic differential equation:

$$dX_t = \sqrt{2D}\, dB_t, \tag{1.5}$$

where $B_t$ is a Brownian motion, and $D$ is a random variable that is independent of $B_t$. Here the stochastic differential equation is regarded as being conditioned on $D$. If the probability density function, $f_D(x)$, of $D$ is given by

$$f_D(x) = \delta(x - D_0), \tag{1.6}$$

then $D$ is a constant, and the distribution of the displacement due to diffusion, $X_t$, is a Gaussian (note that the Gaussian distribution maximizes the Boltzmann-Gibbs entropy). This naturally leads to the question of whether there are distributions of $D$ that would make the distribution of $X_t$ maximize the Tsallis entropy. We will answer this question in the affirmative and explicitly construct the appropriate distribution for $D$.

Suppose that:

$$D \sim \frac{D_0\,\nu}{\chi^2_\nu}, \tag{1.7}$$

where $\chi^2_\nu$ is a chi-squared distribution with $\nu$ degrees of freedom and $\sim$ denotes that two random variables have the same distribution. Then the distribution of $X_t$ takes the form

$$X_t \sim \sqrt{2 D_0 t}\,\frac{Z}{\sqrt{\chi^2_\nu/\nu}}, \tag{1.9}$$

where $Z$ is a standard normal random variable. At this point, we note that a Student’s $t$-distribution takes the form

$$T_\nu \sim \frac{Z}{\sqrt{\chi^2_\nu/\nu}},$$

where $Z$ is a standard normal random variable and $\chi^2_\nu$ is an independent chi-squared random variable with $\nu$ degrees of freedom. Therefore, the right-hand side of equation (1.9) is a rescaled (by a factor $\sqrt{2 D_0 t}$) Student’s $t$-distribution, or, in other words, a $q$-Gaussian. Hence, the distribution of $X_t$ maximizes the Tsallis entropy.
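The construction can be illustrated by simulation. The sketch below assumes that, conditioned on $D$, the displacement is $X_t = \sqrt{2Dt}\,Z$, and that $D = D_0\,\nu/\chi^2_\nu$; both forms are assumptions chosen to be consistent with the rescaling argument above, and the parameter values are arbitrary. Under these assumptions $X_t/\sqrt{2 D_0 t}$ follows a Student’s $t$-distribution with $\nu$ degrees of freedom, whose variance is $\nu/(\nu-2)$ for $\nu > 2$.

```python
import math
import random

def sample_displacements(d0=0.5, nu=10.0, t=1.0, n=100_000, seed=1):
    """Draw displacements X_t with a random diffusivity D = d0 * nu / chi2_nu."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        # Gamma(shape=nu/2, scale=2) is a chi-squared variable with nu dof.
        chi2 = rng.gammavariate(nu / 2.0, 2.0)
        diffusivity = d0 * nu / chi2
        samples.append(math.sqrt(2.0 * diffusivity * t) * rng.gauss(0.0, 1.0))
    return samples

xs = sample_displacements()

# With d0 = 0.5 and t = 1 the scale factor sqrt(2 * d0 * t) equals 1,
# so xs should behave like Student's t samples with 10 degrees of freedom.
sample_var = sum(x * x for x in xs) / len(xs)
expected_var = 10.0 / (10.0 - 2.0)

# 2.228 is the two-sided 5% critical value of the t-distribution with 10 dof;
# a standard normal would put only about 2.6% of its mass beyond it.
tail_fraction = sum(abs(x) > 2.228 for x in xs) / len(xs)

print(sample_var, expected_var, tail_fraction)
```

The heavier tail relative to a Gaussian with the same scale comes entirely from the variability of $D$ across realizations.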

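By changing variables in equation (1.7), we obtain the probability density function for $D$:

$$f_D(x) = \frac{(D_0\nu/2)^{\nu/2}}{\Gamma(\nu/2)}\, x^{-(\nu/2+1)} \exp\!\left(-\frac{\nu D_0}{2x}\right), \quad x > 0,$$

where $D_0$ is the constant diffusion coefficient appearing in equation (1.6) and $\nu$ is the number of degrees of freedom. This equation can be recast in terms of $q$ by recalling that $\nu = (3-q)/(q-1)$.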
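As a sanity check on a density of this kind, the sketch below numerically integrates the density of $D_0\,\nu/\chi^2_\nu$ obtained by a change of variables from the chi-squared density, namely $f_D(x) = \frac{(D_0\nu/2)^{\nu/2}}{\Gamma(\nu/2)}\, x^{-(\nu/2+1)} e^{-\nu D_0/(2x)}$. This closed form is an assumption derived under the stated representation of $D$, and the parameter values, integration bounds, and function names are illustrative. The density should integrate to 1 and have mean $D_0\,\nu/(\nu-2)$ for $\nu > 2$.

```python
import math

def diffusivity_pdf(x, d0, nu):
    """Density of d0 * nu / chi2_nu (assumed form), evaluated in log space."""
    if x <= 0.0:
        return 0.0
    half = nu / 2.0
    log_f = (half * math.log(d0 * half) - math.lgamma(half)
             - (half + 1.0) * math.log(x) - d0 * half / x)
    return math.exp(log_f)

def integrate(func, lo, hi, n):
    """Composite midpoint rule on [lo, hi] with n sub-intervals."""
    h = (hi - lo) / n
    return h * sum(func(lo + (k + 0.5) * h) for k in range(n))

d0, nu = 1.0, 10.0
total = integrate(lambda x: diffusivity_pdf(x, d0, nu), 0.0, 50.0, 200_000)
mean = integrate(lambda x: x * diffusivity_pdf(x, d0, nu), 0.0, 50.0, 200_000)

print(total, mean, d0 * nu / (nu - 2.0))
```

As $\nu$ grows, the mean $D_0\,\nu/(\nu-2)$ approaches $D_0$ and the density concentrates around it, in line with the constant-diffusivity limit.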

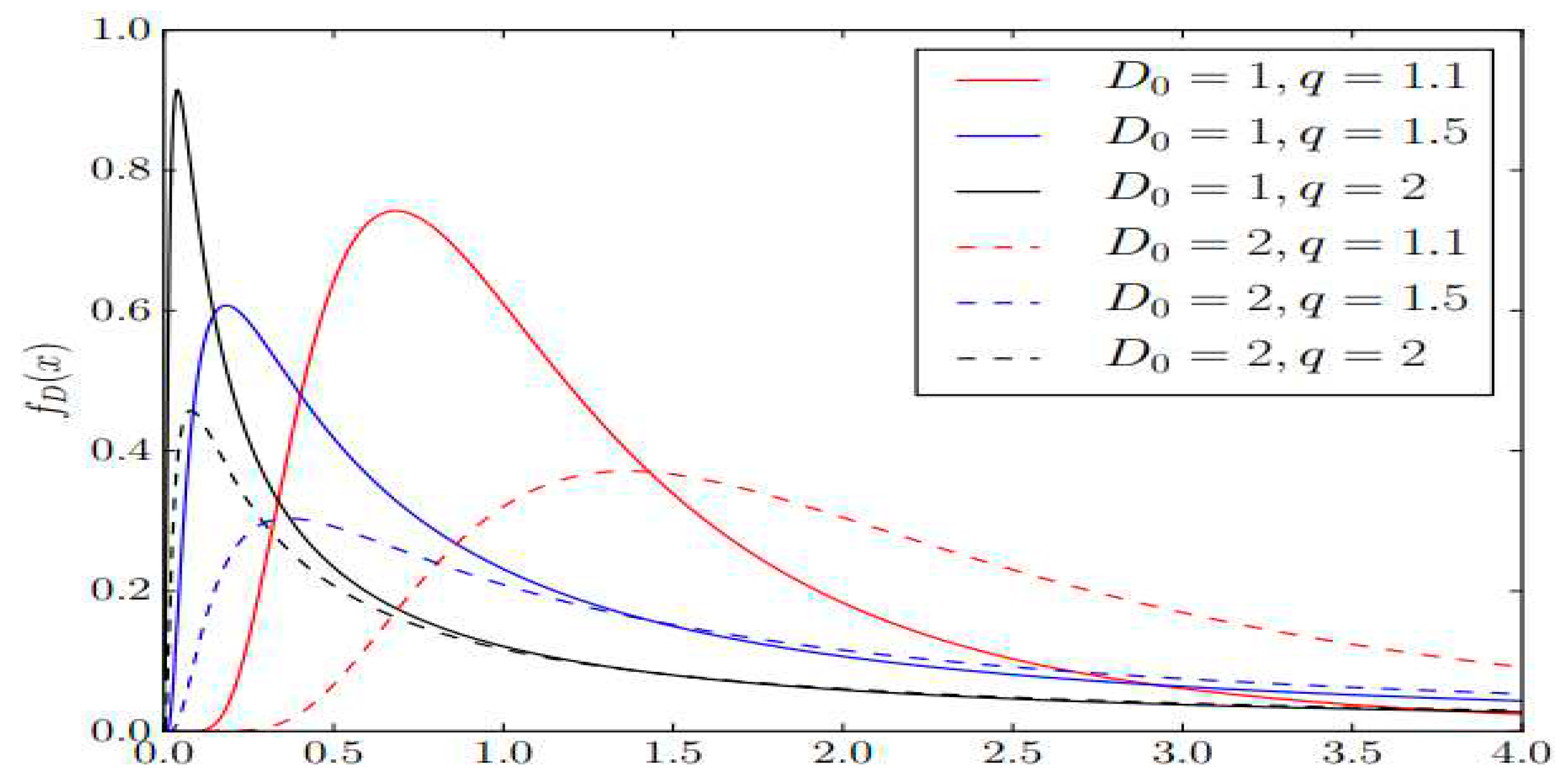

Figure 3 shows several plots of $f_D(x)$. Note that in the limit as $q \to 1$ (or equivalently, $\nu \to \infty$), $f_D(x) \to \delta(x - D_0)$, so that equation (1.6) is satisfied in the limit. Therefore, classical advection-dispersion is recovered in the limit as $q \to 1$ for equation (1.5). This is to be expected, since $q = 1$ corresponds to the classical Boltzmann-Gibbs entropy, and classical advection-dispersion maximizes the Boltzmann-Gibbs entropy.

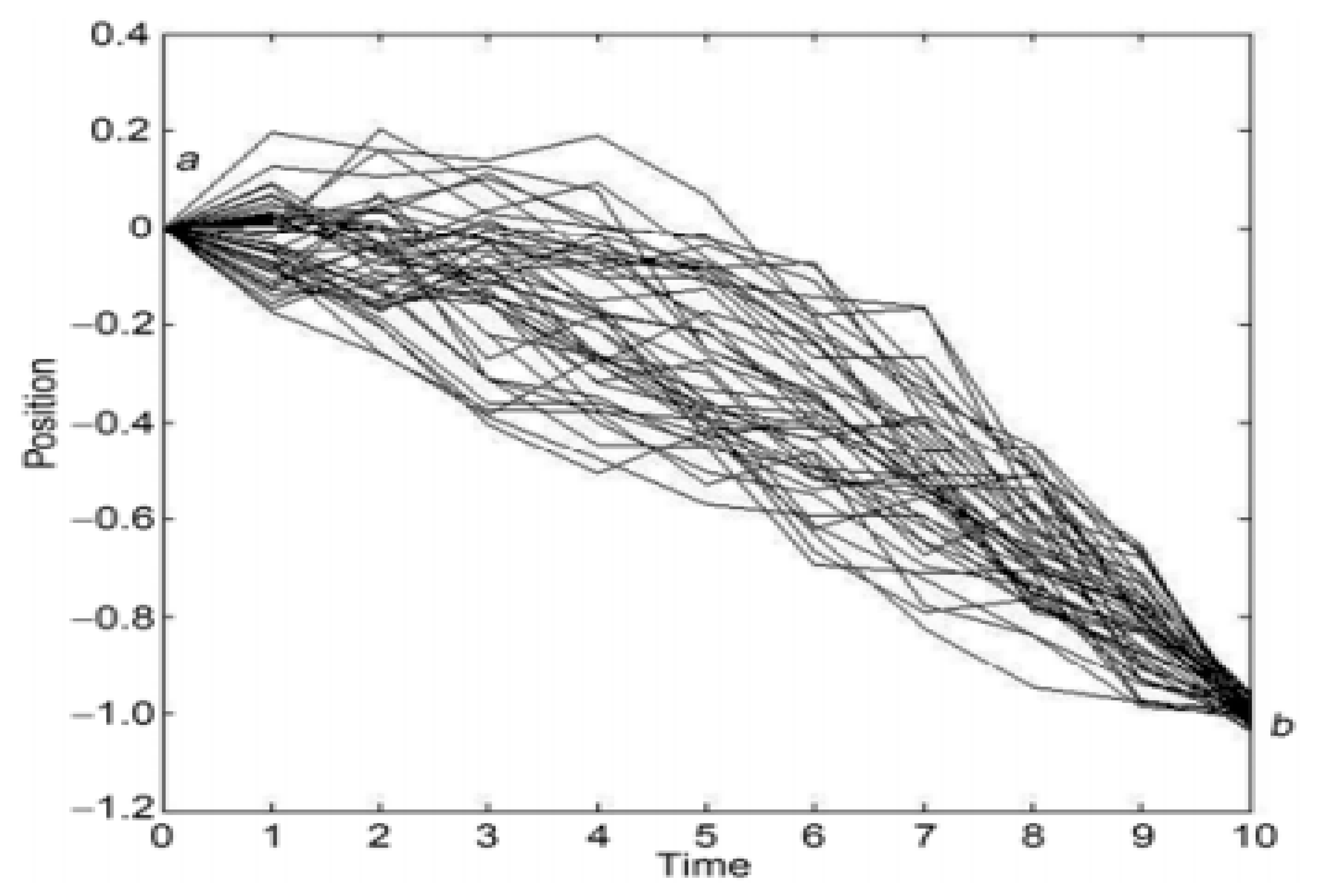

Brownian motion is an idealized approximation to actual random dynamics that has been extensively investigated over a long period of time, but is possibly still not thoroughly understood. Recently, Brownian and random motion have been extended into the fields of fractional Brownian motion, stochastic noise, and quantum random walks.

Figure 4 shows a numerical simulation of a bundle of paths from point a to point b for particles in a constant force field such as weight. The motion lasts a fixed number of steps, with the same unit time increment for each step, and each path is a sequence of positions.

The main original contributions of this paper are described below.

Derivation of the Ricci tensor of the GBM manifold.

Derivation of the Ricci scalar of the GBM manifold.

Derivation of the Einstein and stress-energy tensors, which unify GBM with both general and special relativity.

The rest of this paper is organised as follows:

Section 1 gives a brief introduction to information geometry (IG) and Generalized Brownian Motion (GBM).

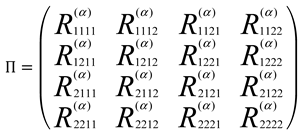

Section 2 presents preliminary definitions associated with (IG). In section 3, Ricci scalar,

and the

Tensor,

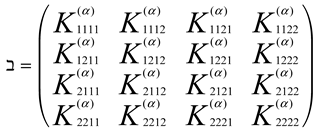

are calculated. In section 4, the Ricci Tensor,

the are calculated. Concluded remarks and future work are given in section 5.