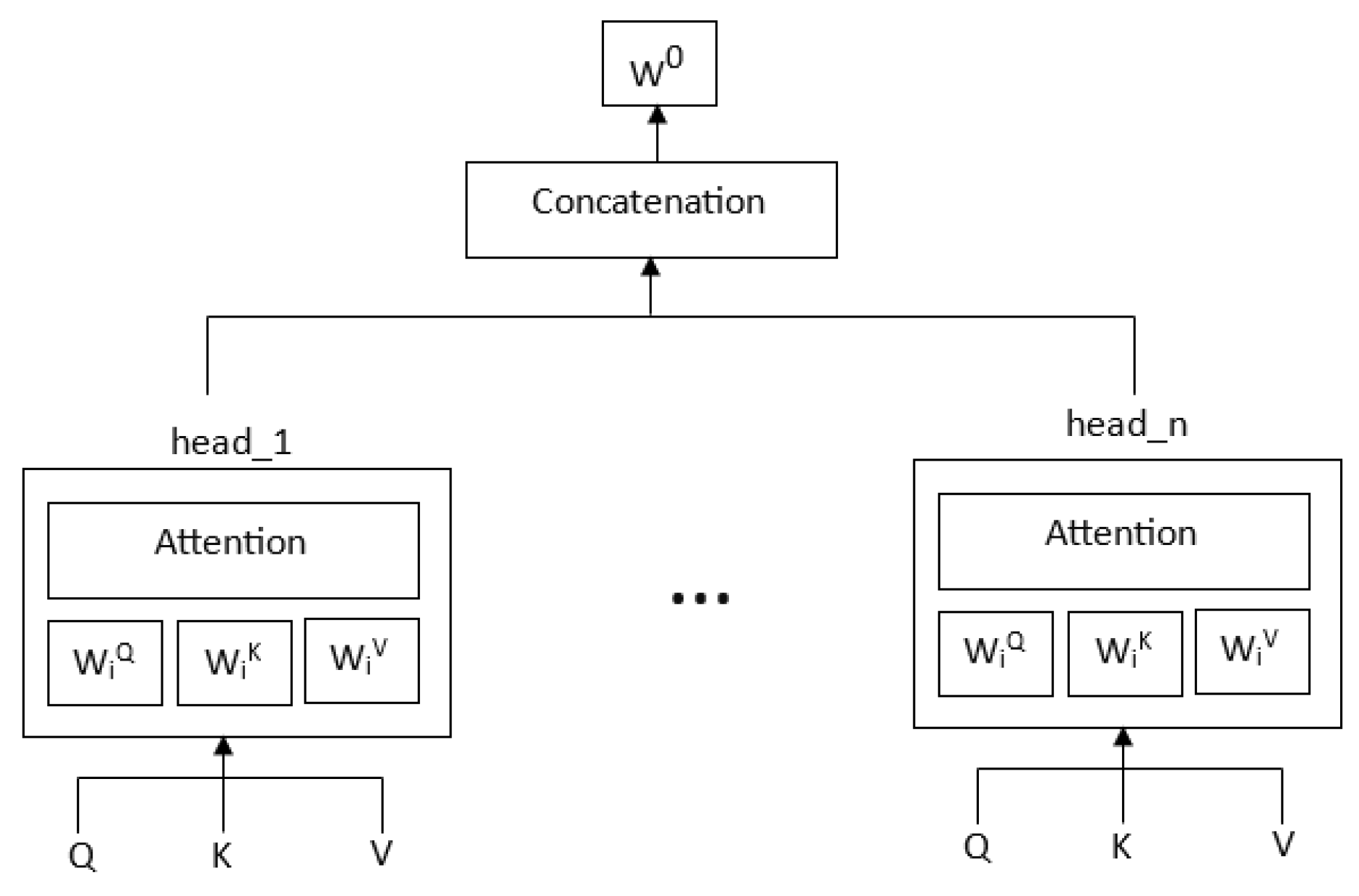

3. Transformer

After its inception, the Transformer soon became the de facto standard for natural language tasks. Below, we discuss several variants of the original Transformer-based model that were proposed to deal with NLU and NLG tasks.

3.1. Encoder-Decoder based Model

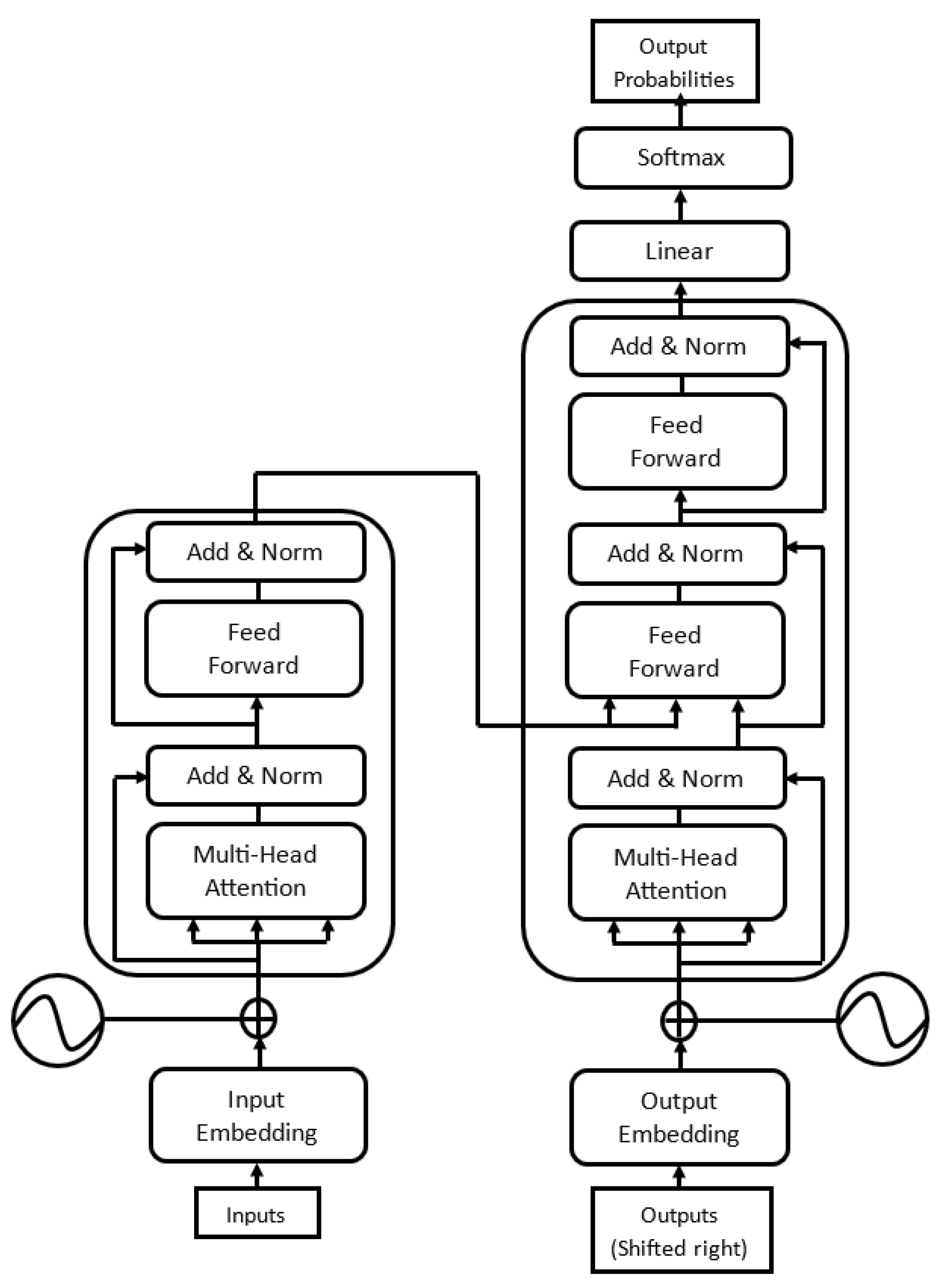

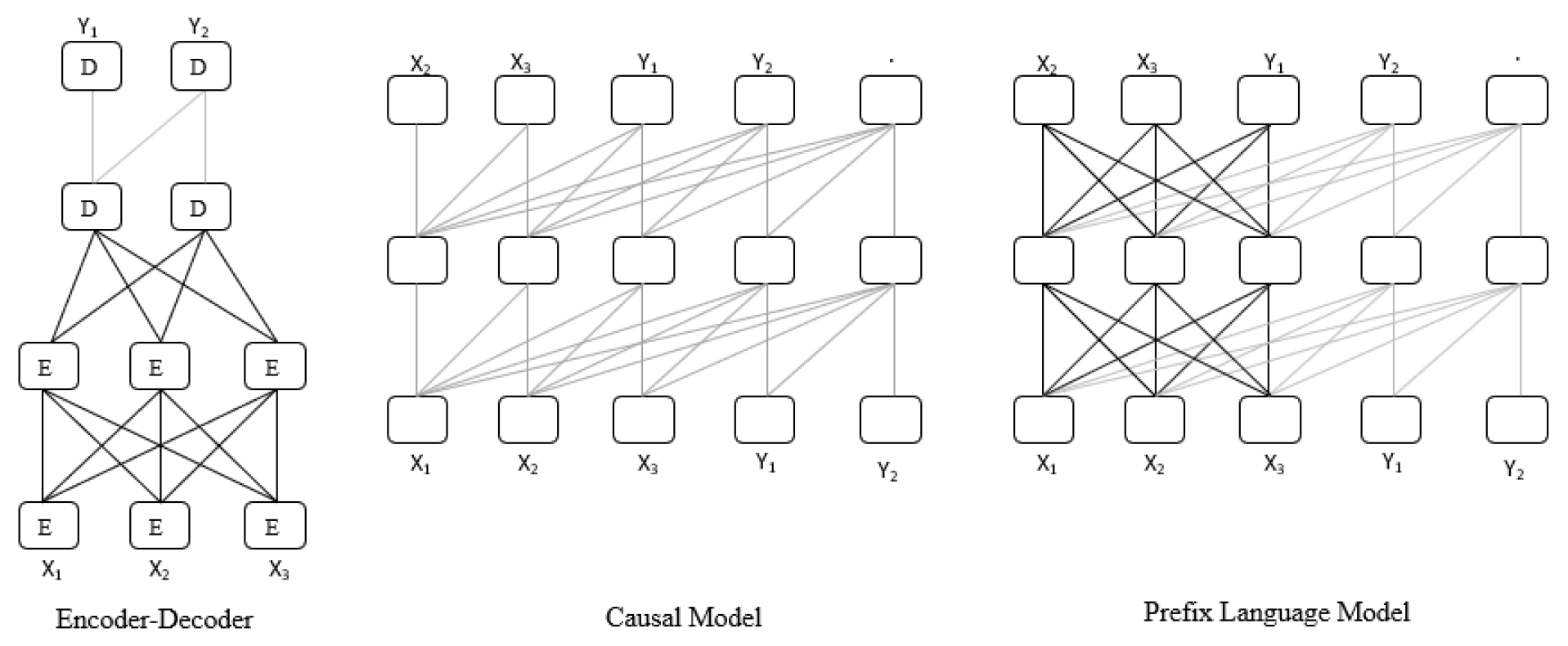

The canonical example of the encoder-decoder architecture is the Transformer model proposed in [23]. Its encoder and decoder blocks are each stacked with multiple layers. As shown in Figure 3, a Transformer encoder layer consists of two consecutive sub-layers, namely a self-attention layer followed by a position-wise feed-forward layer. The decoder is similar to the encoder, except that it adds a third, cross-attention layer, which attends over the encoder output.

Encoder-decoder models adopt bidirectional attention for the encoder, unidirectional attention for the decoder, and a cross-attention mechanism between them. Cross-attention in the decoder has access only to the fully processed encoder output and is responsible for connecting input tokens to target tokens. Encoder-decoder models are pre-trained for seq2seq tasks. They can also be pre-trained on conditional generation tasks, where the output is generated conditioned on the given input, for example in summarization, question answering, and translation. T5 [26] uses the encoder-decoder architecture. As stated in T5, the encoder-decoder structure helped achieve good performance on classification as well as generative tasks.

Although encoder-decoder models end up having twice as many parameters as their decoder-only or encoder-only counterparts, they still have a similar computational cost. Compared to PrefixLM models, where the parameters are shared, here the input and target are processed independently and use separate sets of parameters. Unlike decoder-only language models, which are trained to generate the input, encoder-decoder models output target tokens.
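The parameter-versus-compute claim above can be checked with a back-of-the-envelope count. The sketch below rests on a simplified cost model that is an assumption, not something stated in the text: every layer has the same number of parameters, the compute per token per layer is proportional to that number, and cross-attention and the quadratic attention term are ignored. All function names and numbers are illustrative.

```python
def stack_cost(num_layers, params_per_layer, num_tokens):
    """Return (parameters, relative compute) for one stack of layers.

    Assumed cost model: every token passes through every layer, and the
    compute per token per layer is proportional to params_per_layer.
    """
    params = num_layers * params_per_layer
    compute = params * num_tokens
    return params, compute


def encoder_decoder_cost(num_layers, params_per_layer, n_input, n_target):
    # The encoder sees only input tokens; the decoder sees only target tokens.
    enc_params, enc_compute = stack_cost(num_layers, params_per_layer, n_input)
    dec_params, dec_compute = stack_cost(num_layers, params_per_layer, n_target)
    return enc_params + dec_params, enc_compute + dec_compute


def decoder_only_cost(num_layers, params_per_layer, n_input, n_target):
    # A single stack processes the concatenated input and target tokens.
    return stack_cost(num_layers, params_per_layer, n_input + n_target)
```

Under these assumptions, an encoder-decoder with two stacks of L layers has twice the parameters of an L-layer decoder-only model, yet both spend the same compute on an input of n tokens and a target of m tokens, because each token still passes through only L layers.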

The original Transformer consisted of encoder-decoder blocks and was initially used for sequence-to-sequence tasks, such as NMT. However, it was discovered that, with a change in how the input is fed to the model, a single stack (decoder or encoder) could also perform sequence-to-sequence tasks. As a result, subsequent models started using either an encoder-only or a decoder-only architecture. Below, we discuss these architectural variants of the original Transformer model.

3.2. Encoder-only based Model

Encoder-only models use bidirectional attention, where the target token can attend to both the previous and the next tokens. Encoder-only models, for instance BERT [25], produce a single prediction for a given input sequence. As a result, they are better suited for classification and understanding tasks than for NLG tasks such as translation and summarization.

3.3. Decoder-only (Causal) based Model

In decoder-only models, the goal is to predict the next token in the sequence; therefore, such models are auto-regressive. These models are trained solely for next-step prediction, so decoder-only models are well-suited for NLG tasks. In decoder-only models, the input and target tokens are concatenated before processing. As a result, the representations of inputs and targets are built simultaneously, layer by layer, as they propagate concurrently through the network. In the encoder-decoder model, by contrast, the input and target tokens are processed separately and rely on cross-attention components to connect them. GPT [24] was one of the first models that relied solely on a decoder-based architecture. However, as decoder-only models use a unidirectional attention mechanism, their performance might be hindered on tasks involving longer sequences, such as summarization.
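To make the concatenate-then-predict setup concrete, the following minimal sketch builds one training example for next-token prediction. The function name and the loss mask are illustrative assumptions; restricting the loss to target positions is one common choice, not a detail specified above.

```python
def make_causal_lm_example(input_ids, target_ids):
    """Concatenate input and target, then shift by one for next-token prediction.

    Returns (model_inputs, labels, loss_mask), where loss_mask marks the
    positions whose label is a target token.
    """
    sequence = list(input_ids) + list(target_ids)
    model_inputs = sequence[:-1]  # what the model reads at each step
    labels = sequence[1:]         # the token it should predict next
    # Label i comes from sequence position i + 1, which belongs to the
    # target when it is at or beyond len(input_ids).
    loss_mask = [1 if i + 1 >= len(input_ids) else 0 for i in range(len(labels))]
    return model_inputs, labels, loss_mask
```

For example, with input ids `[10, 11, 12]` and target ids `[20, 21]`, the model reads `[10, 11, 12, 20]`, the labels are `[11, 12, 20, 21]`, and the loss mask is `[0, 0, 1, 1]`.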

3.4. Prefix (Non-Causal) Language Model

Prefix language models are also decoder-only models but differ in the masking mechanism. Instead of a causal mask throughout, a fully visible mask is used for the prefix part of the input sequence, and a causal mask is used for the target sequence.

For example, to translate the English sentence "I am doing well" to French, the model would apply a fully visible mask to the prefix "translate English to French: I am doing well. Target: ", followed by causal masking while predicting the target "je vais bien". Also, unlike Causal language models, where the targets-only paradigm is used, the Prefix language model uses the input-to-target paradigm. Both Causal and Prefix model architectures are autoregressive, as the objective is to predict the next token. However, the Causal model uses a unidirectional attention mask, while the Prefix model modifies the masking mechanism to employ bidirectional attention over prefix tokens.

Figure 4 demonstrates the mechanism of the above architectures. The lines represent the attention visibility. Dark lines represent the fully visible masking (bidirectional attention), and light grey lines represent causal masking (unidirectional attention).

As shown in Figure 4, in the encoder-decoder architecture, fully visible masking is used in the encoder, and a causal mask is used in the decoder. In a decoder-only model, the input and target are concatenated, and then a causal mask is used throughout. A decoder-only model with a prefix allows fully visible masking over part of the input tokens (the prefix), followed by causal masking on the rest of the sequence. In general, autoencoding models learn bidirectional contextualized representations suited for NLU tasks, whereas autoregressive models learn to generate the next token and hence are suited for NLG tasks.

Table 1 details architectural information of prominent LLMs, such as their parameter size, hardware used, number of Encoder (E) and Decoder (D) layers, and number of attention heads.

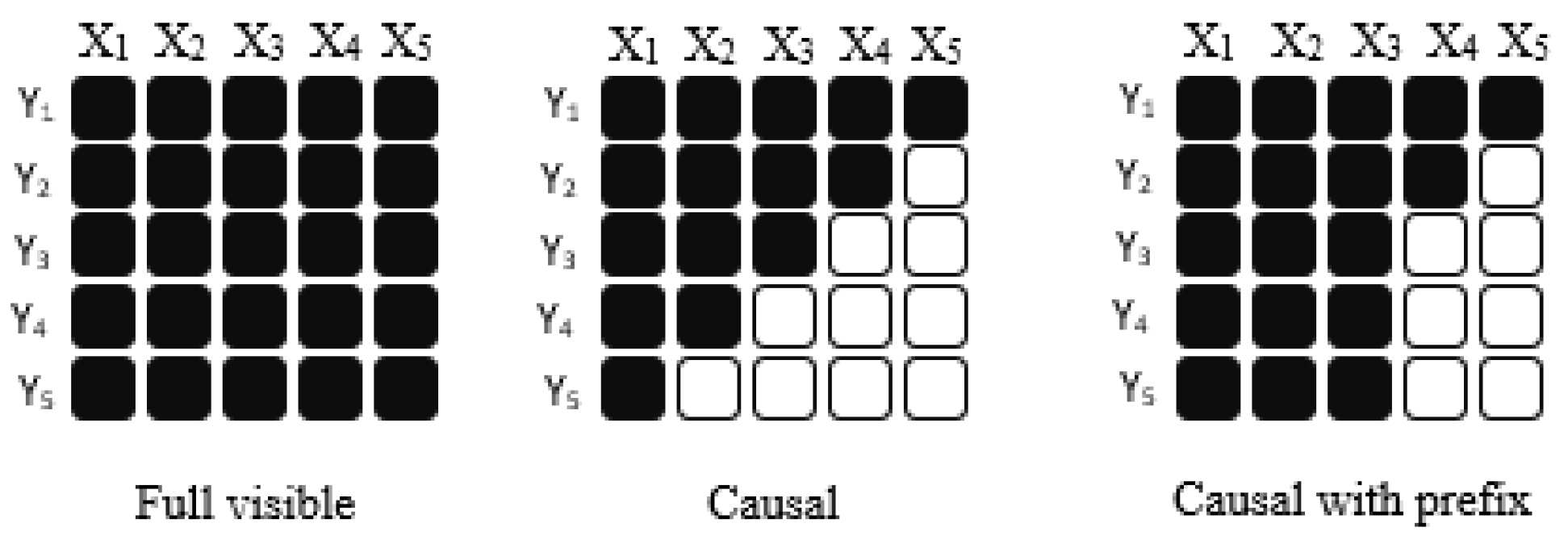

3.5. Mask Types

Self-attention is a variant of the attention mechanism proposed in [22]. It generates an output sequence with the same length as the input sequence, replacing each element with a weighted average of the rest of the sequence. Below, we look at different masking techniques that are used to zero out certain weights. By zeroing out the weights, the mask decides which entries the attention mechanism can attend to at a given output timestep. As highlighted in Figure 5, with a fully visible mask, the attention mechanism can attend to the entire input sequence when producing each entry of its output.

With a causal mask, the attention mechanism can attend only to the previous tokens and is prohibited from attending to input tokens from the future. That is, while producing the i-th entry of the output, the causal mask prevents the attention mechanism from attending to any entry occurring after position i, so that the model cannot see into the future. The prefix-causal mask is a combination of these two approaches, allowing the attention mechanism to use a fully visible mask on a portion of the input sequence (called the prefix) and a causal mask on the rest of the sequence.
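The three mask types can be written down directly as boolean matrices. The NumPy sketch below is illustrative: the function names are hypothetical, and the convention that `mask[i, j]` is true when output position `i` may attend to input position `j` is an assumption made for this example.

```python
import numpy as np


def fully_visible_mask(n):
    # Every output position may attend to every input position.
    return np.ones((n, n), dtype=bool)


def causal_mask(n):
    # Position i may attend only to positions j <= i (lower triangle).
    return np.tril(np.ones((n, n), dtype=bool))


def prefix_causal_mask(n, prefix_len):
    # Start from a causal mask, then make the prefix columns fully visible.
    mask = causal_mask(n)
    mask[:, :prefix_len] = True
    return mask


def masked_attention_weights(scores, mask):
    # Disallowed entries get -inf so that softmax assigns them zero weight.
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)
```

With `prefix_causal_mask(4, 2)`, the first two positions see each other in both directions, while the last two positions see the whole prefix plus only the earlier part of the remaining sequence.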

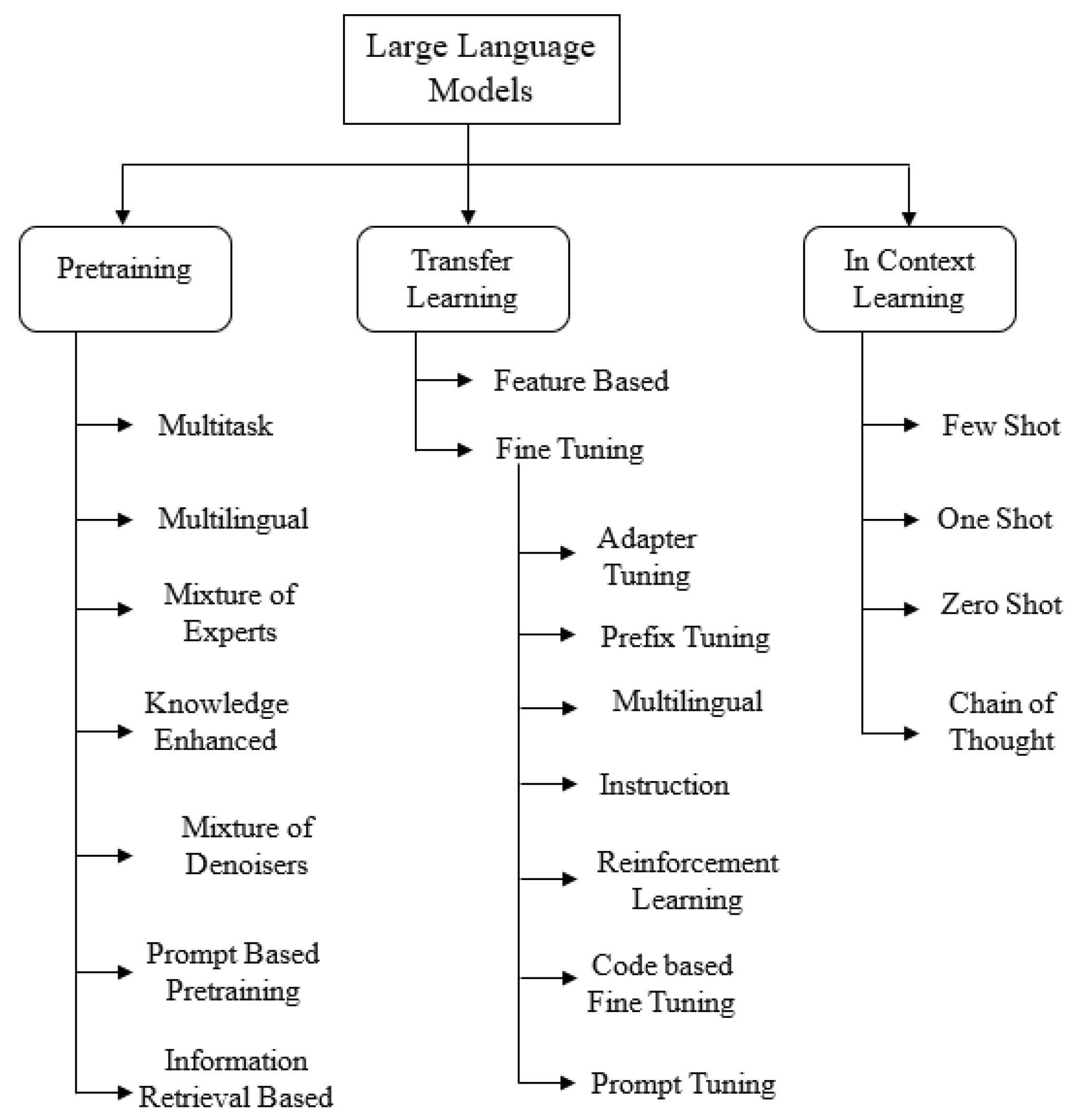

5. Transfer Learning Strategies

Discriminatively trained models perform well when labeled data is abundant, but they do not perform adequately on tasks with scarce data, which limits their learning abilities. To address this issue, LLMs were first pre-trained on large unlabeled datasets using a self-supervised approach, and the learning was then transferred discriminatively to specific tasks. As a result, transfer learning helps leverage the capabilities of pre-trained models and is advantageous, especially in data-scarce settings. For example, GPT [24] used a generative language modeling objective for pretraining, followed by discriminative fine-tuning. Compared to pretraining, the transfer learning process is inexpensive, and it converges faster than training the model from scratch. Additionally, pretraining uses an unlabeled dataset and follows a self-supervised approach, whereas transfer learning follows a supervised technique using a labeled dataset particular to the downstream task. The pretraining dataset comes from a generic domain, whereas during transfer learning, data comes from specific distributions (supervised datasets specific to the desired task).

5.1. Fine Tuning

Transfer learning started with feature-based techniques, where pre-trained embeddings such as Word2Vec were used in custom downstream models. Once learned, the embeddings are not refined for the downstream task, so they remain task-independent. In finetuning, the weights of the pre-trained network are copied and then adjusted to adapt to the peculiarities of the target task. Because the parameters learned during pretraining are adjusted to a specific downstream task, finetuning outperforms the feature-based transfer technique. Such finetuning enables the model to learn task-specific features and improves downstream task performance. As a result, the fine-tuned embeddings adapt not only to the context but also to the downstream task under consideration. So, unlike feature- or representation-based transfer, finetuning does not require a task-specific model architecture. Although the finetuning strategy yields strong performance on many benchmarks, it has some limitations, such as the need for a large amount of task-specific data, poor generalization to out-of-distribution data, and the possibility of exploiting spurious features. To avoid updating all the parameters during finetuning, adapter layers and gradual unfreezing were proposed, which update only a subset of the parameters.

5.2. Adapter Tuning

Feature-based and vanilla finetuning techniques are not parameter-efficient, since they require new network weights for every downstream task. In other words, these techniques require an entirely new model for every downstream task. To address this issue, [92] proposed transfer with adapter modules, in which a module is added between the layers of a pre-trained network. In each block of the Transformer, these adapter layers, which are dense-ReLU-dense blocks, are added after the feed-forward networks. Since their output dimensionality matches their input, no structural or parameter changes are required to insert adapter layers. During finetuning, most of the original model is kept fixed, and only the parameters of the adapter layers are updated. In adapter tuning, task-specific layers are inserted, with only a few trainable parameters added per task. Also, a high degree of parameter sharing occurs, as the original network is kept fixed.

Unlike the feature-based technique, which reads the inner layer parameters to form the embeddings, adapters write to the inner layers instead, enabling them to reconfigure network features. The main hyperparameter of this approach is the feed-forward network’s inner dimensionality ‘d’, since it determines the number of new parameters that are added to the model. This approach proved promising in the experiments conducted in [26]. Adapter tuning attains performance comparable to finetuning on NLU and NLG benchmarks while using only 2-4% task-specific parameters. Experiments from [92] demonstrated that BERT with adapters added only a few (3.6%) parameters per task to attain near-SOTA performance on the GLUE benchmark.
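A dense-ReLU-dense adapter and the role of the inner dimensionality d can be sketched in a few lines of NumPy. The class below is a minimal illustration, not the implementation from [92]: the residual connection, the zero-initialised up-projection, and all names are assumptions made for this example.

```python
import numpy as np


class Adapter:
    """Bottleneck adapter: dense (d_model -> d), ReLU, dense (d -> d_model)."""

    def __init__(self, d_model, d, seed=0):
        rng = np.random.default_rng(seed)
        self.w_down = rng.normal(0.0, 0.02, size=(d_model, d))
        self.b_down = np.zeros(d)
        # Assumed initialisation: with a zero up-projection and a residual
        # connection, the freshly inserted adapter is an identity map.
        self.w_up = np.zeros((d, d_model))
        self.b_up = np.zeros(d_model)

    def __call__(self, h):
        z = np.maximum(h @ self.w_down + self.b_down, 0.0)  # dense + ReLU
        return h + z @ self.w_up + self.b_up                # dense + residual

    def num_parameters(self):
        params = (self.w_down, self.b_down, self.w_up, self.b_up)
        return sum(p.size for p in params)
```

The parameter count is 2 * d_model * d + d + d_model, so it grows linearly in d. For instance, with an assumed d_model of 768 and d of 64, one adapter adds 99,136 parameters, and the output shape equals the input shape, which is why no structural change to the surrounding network is needed.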

5.3. Gradual Unfreezing

In gradual unfreezing, more and more of the model’s parameters are finetuned over time. In this approach, at the start of fine-tuning, only the parameters of the final layer are updated. Next, the parameters of the second-to-last layer are included in the fine-tuning. This process continues until the parameters of all network layers are fine-tuned (updated). It is normally recommended to include an additional layer in fine-tuning after each epoch of training. This approach was used in [26], where gradual unfreezing caused a minor degradation in performance across all tasks. In [26], it was found that the basic approach of updating all of a pre-trained model’s parameters during fine-tuning outperformed methods that are designed to update fewer parameters, although updating all parameters is the most expensive option.
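The unfreezing schedule described above can be expressed as a small helper. This is a sketch under the one-layer-per-epoch assumption mentioned in the text; the function name and the 0-based layer indexing are illustrative.

```python
def trainable_layers(num_layers, epoch):
    """Indices of the layers that are updated during `epoch` (0-based).

    Epoch 0 trains only the final layer; each later epoch unfreezes one
    more layer, working backwards, until every layer is trainable.
    """
    num_unfrozen = min(epoch + 1, num_layers)
    return list(range(num_layers - num_unfrozen, num_layers))
```

For a four-layer network, epoch 0 updates layer `[3]`, epoch 1 updates layers `[2, 3]`, and from epoch 3 onwards all four layers are updated.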

5.4. Prefix Tuning

Fine-tuning, although it leverages the knowledge from pre-trained models to perform downstream tasks, requires a separate copy of the entire model for each task as it modifies all the network parameters. To address this issue, Prefix Tuning [94] keeps the pre-trained parameters frozen and optimizes only the task-specific vectors. These continuous task-specific vectors, called prefixes, are prepended to the input sequence so the subsequent tokens can attend to these vectors. Prefix Tuning uses a small trainable module to train and optimize these small task-specific vectors associated with the prefix. The errors are backpropagated to prefix activations prepended to each layer during tuning. In prefix tuning for each task, only the prefix parameters get stored, making it a lightweight, modular, and space-efficient alternative. Despite learning 1000x fewer parameters than fine-tuning, Prefix Tuning [94] outperformed fine-tuning in low data settings and maintained comparable performance in full data settings. It also extrapolated better to the examples having topics that were unseen during training by learning only 0.1% of the parameters.

5.5. Prompt Tuning

Although fine-tuning pre-trained language models has successfully improved results on downstream tasks, one of its shortcomings is that there can be a significant gap between the objectives used in pretraining and those required by downstream tasks. For instance, downstream tasks require objective forms such as labeling (part-of-speech tagging) or classification, whereas pretraining is usually formalized as a next-token prediction task. One of the reasons behind the prompt-tuning approach was to bridge this gap between pretraining and fine-tuning objectives and to help better adapt knowledge from pre-trained models to downstream tasks. In prompt tuning, prompts are used to interact with LLMs, where a prompt is a user-provided input to which the model responds. Prompting means prepending extra information for the model to condition on during the generation of output. This extra information typically includes questions, instructions, and a few examples, added as tokens to the task input.
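As a concrete illustration of prepending instructions and a few examples to the task input, the sketch below assembles a text prompt. The `Input:`/`Output:` layout and the function name are hypothetical choices for this example, not a format prescribed by any particular model.

```python
def build_prompt(instruction, examples, query):
    """Prepend an instruction and a few worked examples to the task input."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")  # left open for the model to complete
    return "\n".join(lines)
```

Calling `build_prompt("Classify the sentiment.", [("Great film", "positive")], "Dull plot")` yields a prompt that ends with the unanswered query, so the model's next-token prediction serves as the task output.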

5.5.1. Prompt Engineering

The motivation behind “prompt engineering” is that not all prompts lead to the same accuracy. Thus, one should tune the prompt’s format and examples to achieve the best possible performance. Prompt engineering is the process of carefully designing prompts to obtain the best results. Prompts need to be constructed to best elicit knowledge and maximize the prediction performance of the language model. The prompt-based approach is a promising alternative to fine-tuning since, as the scale of LLMs grows, learning via prompts becomes efficient and cost-effective. Additionally, unlike fine-tuning, where a separate model is required for each downstream task, a single model serves multiple downstream tasks in prompt tuning. Multitask prompts also help the model generalize better to held-out tasks and across tasks.

As per [31], fine-tuning on downstream tasks for trillion-scale models results in poor transferability. Also, these models need to be bigger to memorize the samples in fine-tuning quickly. To overcome these issues, the Prompt-tuning or P-tuning approach [112] is used, which is a parameter-efficient tuning technique. For example, GPT3 [29] (which was not designed for fine-tuning) relied heavily on handcrafted prompts to steer the model for downstream applications. Prompt tuning came into play to scale this manual prompt engineering technique. Prompt tuning can be categorized into discrete and continuous approaches.

Unlike fine-tuning, where a separate model is required for each downstream task, in prompt tuning a single model serves multiple downstream tasks. In discrete prompt tuning, the process is time-consuming and fallible because human effort is involved in crafting the prompts. It can also be non-intuitive for many tasks (e.g., textual entailment). Also, models are sensitive to this context, so improperly constructed contexts cause artificially low performance. To overcome these issues, a continuous or tunable prompt tuning technique was proposed.

5.5.2. Continuous Prompt Tuning

In continuous prompt tuning, k additional tunable tokens are used per downstream task and are prepended to the input text. These prompts are learned through backpropagation and are tunable or adjustable to incorporate signals from any number of labeled examples. Unlike fine-tuning, only the parameters of these inserted prompt tokens are updated in prompt tuning. Hence, they are also called soft prompts. [96] demonstrated that their approach outperformed GPT-3’s few-shot learning based on discrete text prompts by a large margin. They also demonstrated that prompt tuning becomes more competitive with scale, where it matches the performance of fine-tuned models. For example, prompt tuning of T5 matched the model’s fine-tuning quality as size increased while enabling the reuse of a single frozen model for all tasks.
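The prepending step can be illustrated at the level of embeddings. The sketch below is a simplified NumPy illustration with hypothetical names: the soft prompt is a (k, d_model) array that would be the only trainable tensor, while the token embeddings come from the frozen model.

```python
import numpy as np


def prepend_soft_prompt(soft_prompt, token_embeddings):
    """Prepend k tunable prompt vectors to the embedded input text.

    soft_prompt:      (k, d_model) array, the only trainable parameters
    token_embeddings: (seq_len, d_model) array from the frozen embeddings
    """
    if soft_prompt.shape[1] != token_embeddings.shape[1]:
        raise ValueError("prompt and token embeddings must share d_model")
    return np.concatenate([soft_prompt, token_embeddings], axis=0)
```

The frozen model then processes a sequence of length k + seq_len, and during training the gradient would be applied only to `soft_prompt`.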

P-tuning uses a small trainable model that encodes the text prompt and generates task-specific tokens. These tokens are then appended to the prompt and passed to the LLM during fine-tuning. When the tuning process is complete, these tokens are stored in a lookup table and used during inference, replacing the smaller model. In this approach, the time required to tune a smaller model is much less. [31] utilized a P-tuning technique to automatically search prompts in the continuous space, which enabled the GPT-style model to perform better on NLU tasks. Unlike the discrete-prompt approach, in continuous-prompt, as there are trainable embedding tensors, the prompt encoder can be optimized in a differentiable way. P-tuning helped augment the pre-trained model’s NLU ability by automatically searching for better prompts in the continuous space. As demonstrated in [31], the P-tuning method improves GPTs and BERTs in both few-shot and fully-supervised settings.

Additionally, as the parameters of only prompt tokens are stored, which are less than 0.01% of the total model parameters, the prompt tuning approach saves a significant amount of storage space. For example, CPM-2 [34] used only 100 prompt tokens, where only 409.6K trainable parameters were to be updated compared to the 11B parameters of fine-tuning. As demonstrated in CPM-2, except for the Sogou-Log task, CPM-2 with prompt-tuning achieved comparable performance to the fine-tuning approach. The total size required for gradient tensors and optimizer state tensors also significantly decreases since, in prompt tuning, the number of parameters needed to be optimized is also much smaller. As a result, Prompt tuning can save at most 50% GPU memory as compared to fine-tuning. However, prompt tuning takes many more steps to converge, hence more time.
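The storage figures above can be reproduced with simple arithmetic. In the sketch below, the hidden size of 4096 is an assumption: it is not stated in the text, and it is chosen because it is the value for which 100 prompt tokens give exactly 409.6K parameters.

```python
def prompt_parameter_count(num_prompt_tokens, hidden_size):
    # Each prompt token is one trainable vector of length hidden_size.
    return num_prompt_tokens * hidden_size


ASSUMED_HIDDEN_SIZE = 4096       # assumption, not given in the text
TOTAL_MODEL_PARAMETERS = 11e9    # the 11B parameters quoted for fine-tuning

prompt_params = prompt_parameter_count(100, ASSUMED_HIDDEN_SIZE)
fraction_of_model = prompt_params / TOTAL_MODEL_PARAMETERS

print(prompt_params)                      # 409600
print(f"{fraction_of_model:.4%}")         # well below 0.01%
```

Under this assumption, the prompt accounts for roughly 0.004% of the model's parameters, consistent with the "less than 0.01%" figure.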

[36] demonstrated how p-tuning with only 4K examples provided comparable results to RoBERTa, which was fine-tuned on 150K data. P-tuning was able to significantly enhance the robustness of HyperCLOVA as well as the accuracy. Bloom [55] used Multitask prompted fine-tuning where it was fine-tuned on a training mixture composed of a large set of different tasks specified through natural language prompts. T0 and Bloom demonstrated how language models fine-tuned on a multitask mixture of prompted datasets have strong zero-shot task generalization abilities. MemPrompt [72] is a memory-enhanced GPT-3 that allows users to interact and improve the model without retraining. It pairs GPT-3 with a growing memory of recorded cases where the model misunderstood the user’s intents, along with user feedback for clarification. Such a memory allows the system to produce enhanced prompts for any new query based on the user feedback for error correction in similar cases in the past.

PTR [95] proposed prompt tuning with rules for many-class text classification, which apply logic rules to construct (task-specific) prompts with several sub-prompts. This enables PTR to encode prior knowledge about tasks and classes into prompt tuning. This introduction of sub-prompts can further alleviate the difficulty of designing templates and sets of label words. AutoPrompt [93] creates a prompt by combining the original task inputs with a collection of trigger tokens according to a template. The same set of trigger tokens is used for all inputs and is learned using a variant of the gradient-based search. AutoPrompt searches for a sequence of discrete trigger words and concatenates it with each input to elicit sentiment or factual knowledge from a masked LM. AutoPrompt elicited more accurate factual knowledge from MLMs than the manually created prompts on the LAMA benchmark. These results demonstrate that automatically generated prompts are a viable parameter-free alternative to existing probing methods since prompting does not introduce large amounts of additional parameters. In contrast with AutoPrompt, the Prefix-Tuning method optimizes continuous prefixes, which are more expressive, and focuses on language generation tasks.

However, prompt engineering also has limitations. Only a small number of examples can be used, which limits the level of control. Also, as the examples are part of the prompt, they consume part of the token budget.

5.6. MultiLingual FineTuning

Most language models are monolingual, using data in the English language only during pretraining. Such models, therefore, cannot be used to deal with tasks that are non-English language-related. To overcome this issue, multilingual models were proposed to enable the processing of non-English languages. Such multilingual models can also be used for cross-lingual tasks like translation. However, models such as GPT-3 were potentially limited in dealing with cross-lingual tasks and generalization because most of these models had English-dominated training datasets.

XGLM [59] focused on using a multilingual dataset (comprising a diverse set of languages) for fine-tuning. As a result, XGLM achieved solid cross-lingual transfer, demonstrating SOTA few-shot learning performance on the FLORES-101 machine translation benchmark between many language pairs. When BloomZ [58] was fine-tuned with xP3, a multilingual task dataset of 46 languages, the model achieved better zero-shot task generalization (than the P3-trained baseline) on English and non-English tasks. Furthermore, when xP3mt, a machine-translated multilingual version of xP3, was used to fine-tune BloomZ on non-English prompts, the performance on held-out tasks with non-English human-written prompts significantly improved. In other words, models were capable of zero-shot generalization to tasks in languages they had never intentionally seen. So, the models learn higher-level capabilities that are both task- and language-agnostic.

Typically, a cross-lingual dataset is used to make the model language-agnostic, and a multitask dataset is required to make it task-agnostic. Also, for large multilingual models, zero-shot performance tends to be significantly lower than fine-tuned performance. So, to improve the multilingual model’s zero-shot task generalization, BloomZ [58] focused on cross-lingual and multitask fine-tuning. This made the model usable for low-resource language tasks without further fine-tuning.

5.7. Reinforcement Learning from Human Feedback (RLHF) Fine Tuning

Although LMs can be prompted to generate responses to a range of NLP tasks, these models sometimes exhibit unintended behavior by generating toxic responses or results that are not aligned with the user instructions. This happens because the objectives used to pre-train LLMs focus on predicting the next token, which might differ from or be misaligned with human intention (the objective of the user’s query or instruction). To address this misalignment issue, [38] proposed Reinforcement Learning (RL) from human feedback to fine-tune GPT-3. In the RL-based approach, human labels are used to train a reward model, and the language model is then optimized against that reward model. Using human feedback, the approach tries to align the model with the user’s intention, which encompasses both explicit and implicit intentions (such as being truthful and not being toxic, harmful, or biased).

RLHF aims to make the model honest, helpful, and harmless. The RLHF approach uses human preferences as a reward signal to fine-tune the model. It was demonstrated that, despite having 100x fewer parameters, outputs from the 1.3B-parameter InstructGPT model were preferred over those from the 175B-parameter GPT-3. The RLHF process consists mainly of three steps:

In the first step, supervised fine-tuning is used, where a dataset consisting of prompts along with their desired output behavior is given as input.

In the second step, another dataset of comparisons between model outputs is collected, where for a given input, labelers indicate which output they prefer. This comparison data is then used to train a Reward Model to predict the human-preferred output.

In the third step, the policy generates an output, for which the reward model produces a reward. This reward is then used to update the policy with the Proximal Policy Optimization (PPO) algorithm so as to maximize the reward.
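The reward-model training step can be illustrated with a pairwise comparison loss. The formulation below, the negative log-sigmoid of the reward difference between the preferred and the rejected output, is a common choice for learning from comparisons; it is an assumption here rather than a detail given in the text, and the function name is hypothetical.

```python
import math


def pairwise_reward_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_preferred - r_rejected)) for one labelled comparison."""
    diff = reward_preferred - reward_rejected
    # -log(sigmoid(diff)) equals log(1 + exp(-diff)); the two branches
    # avoid overflow in exp() for large positive or negative differences.
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))
```

The loss is small when the reward model scores the labeler-preferred output higher than the rejected one, and large when it ranks them the wrong way round, so minimizing it pushes the reward model towards the human preference ordering.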

Using the RLHF approach, InstructGPT demonstrated improvement in toxicity and truthfulness over GPT-3 and generalized well to held-out instructions. [69] applied reinforcement learning (RL) to complex tasks defined only by human judgment, where only humans can tell whether a result is good or bad. In [69], the pre-trained model is fine-tuned using reinforcement learning rather than supervised learning, where it demonstrated its results on summarization and continuation tasks by applying reward learning to language generation. [70] recursively used the RL approach to produce novel summaries and achieve SOTA results for book-length summarization on the BookSum dataset. Similarly, using the reinforcement learning technique, [71] trained a model to predict the human-preferred summary and used it as a reward function to fine-tune the summarization policy. It could outperform larger models fine-tuned using a supervised approach and human reference summaries and generalize well to new datasets.

5.8. Instruction Tuning

In instruction tuning, the model is fine-tuned on a collection of datasets where the NLP tasks are described using natural language instructions. Natural language instructions are added to the prompt to let the model know which task to perform for a given input. For instance, to ask the model to perform a sentiment analysis task on a given input, instructions such as ‘Classify this review either as negative, positive, or neutral’ can be provided in the prompt. Various factors determine the effectiveness of instruction tuning on LLMs, such as the prompt format used, the objectives used during fine-tuning, the diversity of tuning tasks, and the distribution of datasets. Additionally, LLMs generalize poorly across tasks in the zero-shot setting. To address this, multitask fine-tuning (MTF) has emerged as one of the promising techniques to improve the performance of LLMs in zero-shot settings.

Creating instruction datasets for many tasks from scratch is a resource-intensive process. Instead, FLAN [37] expresses 62 existing NLP datasets in an instructional format. This transformed dataset with instructions is then used to fine-tune the model. For each dataset, 10 unique templates were created to describe the task in instructional format. Based on the task type, the datasets were grouped into clusters, and then, to evaluate the performance on each task, the specific task cluster was held out while the remaining clusters were used during instruction tuning.
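The idea of re-expressing an existing dataset through several instruction templates can be sketched as follows. The templates, field names, and function name are invented for illustration and are not the templates used by FLAN.

```python
# Hypothetical templates for a natural language inference dataset.
TEMPLATES = [
    "Premise: {premise}\nHypothesis: {hypothesis}\n"
    "Does the premise entail the hypothesis?",
    "Read the premise: {premise}\nCan we conclude that \"{hypothesis}\"?",
]


def to_instruction_examples(record, templates, answer_key="label"):
    """Render one dataset record once per template."""
    fields = {k: v for k, v in record.items() if k != answer_key}
    return [
        {"prompt": template.format(**fields), "target": record[answer_key]}
        for template in templates
    ]
```

Each record thus produces one training example per template, which varies the wording of the task description while keeping the target unchanged.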

FLAN demonstrated how instruction tuning substantially improved zero-shot performance on held-out tasks that were not part of the instruction tuning process and also helped the model generalize well on unseen tasks. FLAN outperformed GPT-3 (zero and few-shots) on 20 of 25 datasets used for evaluation. It was observed that the instruction tuning approach is more effective for tasks such as QA, NLI, and translation that can easily be verbalized as instructions. The instruction tuning is less effective for tasks where the instructions are redundant since they can be formulated simply as language modeling tasks, such as commonsense reasoning. FLAN also demonstrated how instruction tuning can hurt smaller models, since their capacity is mostly exhausted in learning different instruction tasks.

Alpaca fine-tunes Meta’s LLaMA model on 52K instruction-following demonstrations in a supervised manner. These demonstrations were generated using GPT-3.5 (text-davinci-003), with 175 human-written instruction-output pairs from Self-Instruct used as a seed to generate more instructions. Tk-INSTRUCT [42] proposed a benchmark with instructions for 1616 NLP tasks; such a benchmark dataset can be beneficial in studying multitask learning and cross-task generalization. This dataset, called ‘SUPER-NATURAL-INSTRUCTIONS (SUP-NATINST)’, is made publicly available. It covers instructions in 55 different languages, and the 1616 NLP tasks can be categorized under 76 broad task types. For each task, it provides an instruction comprising a definition that maps input text to task output, along with several examples with the desired output. When evaluated on 119 unseen tasks (English and multilingual variants), TK-INSTRUCT outperformed InstructGPT by 9.9 ROUGE-L points, and mTK-INSTRUCT outperformed InstructGPT by 13.3 points on 35 non-English tasks.

OPT-IML [57], instruction-tuned on OPT, conducted experiments by scaling the model size and benchmark datasets to see the effect of instruction-tuning on performance. It also proposed a benchmark called ‘OPT-IML Bench’ consisting of 2000 NLP tasks. This benchmark can be used to measure three types of generalizations to: tasks from held-out categories, held-out tasks from seen categories, and held-out instances from seen tasks. OPT-IML achieved all these generalization abilities at different scales and benchmarks (PromptSource, FLAN, Super-NaturalInstructions, and UnifiedSKG), having diverse tasks and input formats. OPT-IML was also highly competitive with fine-tuned models on each specific benchmark. Furthermore, to improve the performance on reasoning tasks, it used 14 reasoning datasets during instruction tuning, where the output included a rationale (Chain of Thought process) before the answer. Similarly, it experimented by adding dialogues as auxiliary datasets to see if that could induce chatbot behavior in the model.

[61] experimented with instruction tuning across model size, number of tasks, and chain-of-thought datasets. It was observed that instruction fine-tuning scales well, and model performance substantially improved with increased model size and number of fine-tuning tasks. Additionally, when nine CoT datasets were added to the instruction tuning dataset mixture, the model performed better on held-out reasoning tasks. This contradicts other work where instruction fine-tuning instead degraded CoT task performance. So [61] demonstrated that CoT data improves performance on reasoning tasks when jointly fine-tuned with an instruction dataset. After instruction tuning model classes such as T5, PaLM, and U-PaLM, [61] observed a significant boost in performance across different prompting setups (zero-shot, few-shot, CoT) and benchmarks compared to the original models (without instruction fine-tuning).

In Self-Instruct [51], the bootstrap technique is used to improve the model’s instruction following capabilities. Here, the existing collection of instructions is leveraged to generate new and more broad-coverage instructions. Using a language model, Self-instruct generates instructions along with input-output samples, filters invalid, low-quality or repeated instructions, and uses the remaining valid ones to fine-tune the original model. Along with the instructions, the framework also creates input-output instances, which can be used to supervise the fine-tuning of instructions. When self-instruct was applied to GPT-3, it achieved 33% performance gain on SUPER-NATURALINSTRUCTIONS over the original model, which was on par with InstructGPT performance.
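The filtering stage of such a bootstrap loop can be sketched as below. The similarity measure (Jaccard overlap of word sets), the thresholds, and the function names are stand-ins chosen for illustration; the text does not specify how invalid, low-quality, or repeated instructions are detected.

```python
def token_overlap(a, b):
    """Jaccard overlap between the word sets of two instructions."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a or not set_b:
        return 0.0
    return len(set_a & set_b) / len(set_a | set_b)


def filter_instructions(pool, candidates, max_overlap=0.7, min_words=3):
    """Keep candidates that are long enough and not near-duplicates."""
    kept = list(pool)
    for candidate in candidates:
        text = candidate.strip()
        if len(text.split()) < min_words:
            continue  # treated here as invalid or low quality
        if any(token_overlap(text, seen) > max_overlap for seen in kept):
            continue  # treated here as a repeat of an existing instruction
        kept.append(text)
    return kept[len(pool):]  # only the newly accepted instructions
```

In a bootstrap setting, the accepted instructions would be added to the pool before the next round of generation, so each round is filtered against everything collected so far.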

5.9. Code based Fine Tuning

The code generation problem can be viewed as a sequence-to-sequence translation task: given a problem description X in natural language, produce a corresponding solution Y in a programming language. Recent LLMs have demonstrated an impressive ability to generate code and can now complete simple programming tasks. Codex [49] is a GPT model fine-tuned on publicly available code from GitHub. The study focused on Python code-writing capabilities, specifically generating standalone Python functions from docstrings, and evaluated the functional correctness of the generated code samples. Codex solved 28.8% of the HumanEval problems, while GPT-3 solved 0% and GPT-J solved 11.4%. It struggles with docstrings that describe long chains of operations and with binding operations to variables.
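The text above does not say how the solve rate is computed. A metric commonly reported on HumanEval is pass@k: the probability that at least one of k sampled solutions passes the unit tests. The sketch below is one way to estimate it, assuming n samples were generated per problem and c of them passed; the function name is ours.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n generated samples, of which c are correct.

    Computes 1 - C(n - c, k) / C(n, k): one minus the probability that
    a random subset of k samples contains no correct solution.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k incorrect samples: every subset of size k has a correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)
```

The benchmark score is then the average of this value over all problems.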

However, these models still need to improve when evaluated on more complex, unseen problems that require problem-solving skills beyond simply translating instructions into code. For example, competitive programming problems that require an understanding of algorithms and complex natural language remain highly challenging. Creating code that solves a specific task requires searching in a vast structured space of programs with a sparse reward signal. To address this gap and to enable deeper reasoning, the AlphaCode [64] model was pre-trained on a collection of open-source code from GitHub and then fine-tuned on a curated set called CodeContests of competitive programming problems. The pre-training stage helps the model learn good code representations and generate code fluently, while the fine-tuning stage helps the model adapt to the target competitive programming domain. The pre-training dataset consisted of code from several languages such as C++, C#, Go, Java, JavaScript, Lua, PHP, Python, Ruby, Rust, Scala, and TypeScript. In simulated programming competitions hosted on the Codeforces platform, AlphaCode achieved, on average, a ranking of the top 54.3% in competitions with more than 5,000 participants. During fine-tuning, it used the natural language problem description for the encoder and the program solution for the decoder. It was also found that using a shallow encoder and a deep decoder significantly improved training efficiency without hurting the problem-solving rate.

CodeGEN [65] introduced a multi-turn program synthesis approach. A user communicates with the synthesis system by progressively providing specifications in natural language and receiving synthesized subprograms in response, such that the user and the system complete the program together in multiple steps. Such multi-step specification makes the intent easier for the model to understand, leading to enhanced program synthesis. CodeGEN demonstrated that the same intent provided in a multi-turn fashion significantly improves program synthesis over providing it in a single turn. It also proposed an open benchmark called the Multi-Turn Programming Benchmark (MTPB), comprising 115 diverse problem sets that are factorized into multi-turn prompts. MTPB measures a model’s capacity for multi-turn program synthesis: to solve a problem in this benchmark, a model needs to synthesize a program in multiple steps with a user who specifies the intent turn by turn in natural language.

CodeGeeX [66] is a multilingual model trained on 23 programming languages. It proposed a HumanEval-X benchmark for evaluating multilingual models by hand-writing the solutions in C++, Java, JavaScript, and Go. CodeGeeX was able to outperform multilingual code models of similar scale for both the tasks of code generation and translation on HumanEval-X.

6. In-Context Learning

Fine-tuning is task-agnostic, but it uses a supervised approach during transfer learning and hence requires access to a large amount of labeled data for every downstream task. Furthermore, fine-tuning on such a task-specific dataset trains the model on a very narrow distribution, which can yield poor generalization to out-of-distribution data. The model might also become overly specific to the training distribution, exploiting spurious correlations and features of the training data. The need for such labeled datasets limits the applicability of language models.

To overcome these limitations, In-Context Learning (ICL) was proposed in GPT-3 [29], where the language model uses in-context information for inference. The main benefits of ICL are the minimal need for task-specific data and the fact that it does not go through any parameter updates or architectural modifications. In ICL, a prompt feeds the model with input-label pair examples, avoiding the need for large labeled datasets. Unlike fine-tuning, ICL has no gradient updates, so the weights of the model parameters are not updated. In ICL, the abilities that are developed by LLMs during pretraining are applied to adapt to or recognize the task at inference time, enabling the model to easily switch between many tasks.

In the GPT-3 experiments, the largest model with 175B parameters outperformed the smaller models by using in-context information more efficiently. Based on these experiments, ICL has shown initial promise and improved out-of-domain generalization. However, the results are still far inferior to those of fine-tuning. ICL helps analyze whether the model rapidly adapts to tasks that are unlikely to be directly contained in the training set. In ICL, the model is conditioned on a task instruction and a few task demonstrations as context and is expected to complete the target instance of the task. As Transformer-based models are conditioned on a bounded-length context (e.g., 2048 tokens in GPT-3), ICL cannot fully exploit data longer than the context window. Based on the number of demonstrations provided in the context window at inference, ICL can be categorized into few-shot, one-shot, and zero-shot learning. We describe each of them below.
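As a rough illustration of how such a prompt is assembled, the sketch below concatenates an instruction, k demonstrations, and the target instance, dropping demonstrations that would exceed the context budget. The `Input:`/`Output:` template and the whitespace-based token count are assumptions made for the example; a real system would use the model's own tokenizer and prompt format.

```python
def build_prompt(instruction, demonstrations, query, max_tokens=2048):
    """Assemble an ICL prompt from an instruction, demonstrations, and a query.

    len(demonstrations) == 0 gives a zero-shot prompt, 1 a one-shot prompt,
    and more than 1 a few-shot prompt.
    """
    def n_tokens(text):
        # Crude whitespace count standing in for a real tokenizer (assumption).
        return len(text.split())

    query_block = f"Input: {query}\nOutput:"
    budget = max_tokens - n_tokens(instruction) - n_tokens(query_block)

    blocks = []
    for x, y in demonstrations:
        block = f"Input: {x}\nOutput: {y}"
        cost = n_tokens(block)
        if cost > budget:
            break  # remaining demonstrations do not fit in the context window
        blocks.append(block)
        budget -= cost

    return "\n\n".join([instruction, *blocks, query_block])
```

No parameters are updated at any point: switching tasks only means changing the instruction and the demonstrations.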

6.1. Few-Shot learning

In few-shot learning, a few examples are provided in the prompt, which helps the model understand how to solve the given task. In a few-shot setting, the number of demonstrations provided in the prompt typically ranges from 10 to 100, or as many examples as can fit into the model’s context window. Compared to fine-tuning, the number of task-specific examples required is drastically reduced, making few-shot learning a viable alternative for tasks with small datasets. If the task has many edge cases or is fuzzily defined, having more examples in the prompt can help the model understand the task and predict the result more accurately.

It was shown in GPT-3 [29] how the model performance rapidly improved after a few examples, along with task description, were provided as the context through the window. Similarly, it was demonstrated in Jurassic-1 [30] how classification task accuracy improved after adding more examples in the few-shot setting. Because of the type of tokenizer used in Jurassic-1, it could fit in more examples in the prompt, leading to significant performance gain.

However, some papers, such as [81], demonstrated that the choice of few-shot examples, the order in which they are presented, and the format of the prompt directly affect accuracy. [81] showed that this instability in few-shot learning stems from the language model’s bias toward predicting specific answers. For example, the model can be biased towards answers placed near the end of the prompt, answers that appear frequently in the prompt, or answers that are common in the pre-training data. To address this instability, [81] first estimated the model’s bias towards each answer. It then fitted calibration parameters that made the prediction for a content-free input uniform across answers. This calibration procedure improved GPT-3’s and GPT-2’s average accuracy by up to 30.0% on a diverse set of tasks and also reduced variance across different prompt choices.
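The calibration idea can be sketched in a few lines. The version below is a simplification under stated assumptions: the bias is estimated from the label probabilities the model assigns to a content-free input, each test probability is divided by the corresponding bias estimate, and the result is renormalized by its sum. The function name and the plain-list interface are ours, not those of [81].

```python
def calibrate(p_content_free, p_test):
    """Rescale label probabilities so a content-free input maps to uniform.

    p_content_free: label probabilities for a content-free input
                    (the estimated bias towards each answer).
    p_test:         label probabilities for the actual test input.
    """
    scaled = [p / bias for p, bias in zip(p_test, p_content_free)]
    total = sum(scaled)
    return [s / total for s in scaled]


# A model that prefers the first label even without content ...
bias = [0.7, 0.3]
# ... predicts the first label here, but only weakly relative to its bias.
calibrated = calibrate(bias, [0.6, 0.4])
predicted_label = max(range(len(calibrated)), key=calibrated.__getitem__)
```

After calibration the second label wins, because 0.4 is high relative to the 0.3 the model assigns to that label by default.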

Instead of randomly sampling few-shot examples, [99] investigated strategies for selecting in-context examples judiciously so as to better leverage the model’s capabilities in a few-shot setting. It proposed "KATE", a non-parametric selection approach that retrieves in-context examples that are semantically similar to the test sample. This strategy gives more relevant and informative inputs to a model such as GPT-3 and helps it draw on the knowledge needed to solve the problem. GPT-3’s performance with KATE improved by a significant margin over random sampling on several NLU and NLG tasks. In [97], the study compared how the model generalizes under few-shot fine-tuning and under in-context learning, while controlling for model size, number of examples, and number of parameters. The results demonstrated that the fine-tuned models generalized to out-of-domain data similarly to the ICL models and that performance improved as models became larger.
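Similarity-based selection of in-context examples, as in KATE, can be sketched as a nearest-neighbor lookup. The example assumes that every training example and the test sample have already been embedded as vectors by some sentence encoder; the encoder, the cosine measure, and the function names are assumptions for illustration.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def select_demonstrations(test_vec, train_vecs, k=2):
    """Return indices of the k training examples most similar to the test sample."""
    ranked = sorted(
        range(len(train_vecs)),
        key=lambda i: cosine(test_vec, train_vecs[i]),
        reverse=True,
    )
    return ranked[:k]
```

The selected examples then replace randomly sampled demonstrations in the few-shot prompt.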

6.2. One-Shot learning

This approach is similar to few-shot learning, except that only one example is given as context in addition to the task description. The pre-trained model sees only one demonstration before making the prediction.

6.3. Zero-shot learning

In zero-shot learning, the model is prompted without any example. As there are no demonstrations, only the task instruction is fed as input to the model. Zero-shot learning is helpful when little or no task-specific data is available. GPT [24] and GPT-2 demonstrated that the practical linguistic knowledge required for downstream tasks, acquired during pre-training, can be applied in a zero-shot setting. In GPT-3, performance in the zero-shot setting on tasks such as reading comprehension, NLI, and QA was worse than few-shot performance. One possible explanation is that, without examples, the model finds it challenging to predict correct results from prompts that do not resemble the format of the pre-training data. [98] compared different architectures and pretraining objectives and their impact on zero-shot generalization. Experiments from [98] demonstrated that causal decoder-only models trained on an autoregressive language modeling objective using unsupervised pretraining exhibited the strongest zero-shot generalization.

6.4. Chain-of-Thought learning

Despite the progress made by in-context learning, state-of-the-art models still struggle when dealing with reasoning tasks such as arithmetic reasoning problems, commonsense reasoning, and math word problems, which require solving intermediate steps in precise sequence. The Chain-of-Thought (CoT) approach is used to address this issue, where examples are provided with a series of intermediate reasoning steps to help the model develop the reasoning required to deduce the answer. In other words, CoT comprises the rationale required as part of the explanation that is used to solve and compute the answer to a complex problem. In [101], it was demonstrated how CoT-based prompting technique helped significantly improve LLMs’ performance for complex reasoning tasks. When the LLMs are prompted using CoT technique, they demonstrate the intermediate reasoning steps involved in computing the final answer to unseen problems. CoT prompts indirectly help the model to access relevant knowledge (acquired during pretraining), which helps improve the reasoning ability of the model.

Experiments have shown that CoT-based prompting improves performance on reasoning-oriented tasks, such as symbolic, commonsense, and arithmetic tasks. For example, when PaLM-540B was prompted using 8 CoT examples, it surpassed fine-tuned GPT-3 to achieve SOTA performance on the GSM8K benchmark of math word problems. Similarly, Minerva [52] took the PaLM model and further fine-tuned it on a technical and mathematical dataset. When Minerva was prompted with CoT examples that included step-by-step solutions, it generated a chain-of-thought answer and demarcated a final answer. Of two hundred undergraduate-level problems from mathematics, science, and engineering that require quantitative reasoning, Minerva answered nearly a third. PaLM [54] analyzed the effect of CoT prompting with model scaling and demonstrated that CoT-based few-shot prompting matched or outperformed state-of-the-art fine-tuned models on various reasoning tasks.

In zero-shot chain-of-thought, where no examples are given, CoT reasoning can be activated explicitly with trigger phrases such as “Let’s think step-by-step” or “Let’s think about this logically” that prompt the model to generate explanations. OPT-IML [57] used 15 reasoning datasets and studied the effects of different proportions of reasoning data on different held-out task clusters. The default decoding mechanism used in CoT is greedy decoding, where a single reasoning path is generated. [102] proposed self-consistency decoding as an alternative: instead of taking only the greedy path, it samples several different reasoning paths, on the intuition that a complex reasoning problem admits multiple ways of thinking that lead to its unique correct answer, and then selects the most consistent final answer. [102] demonstrated that adopting the self-consistency approach with CoT prompts improved performance on benchmarks of commonsense and arithmetic reasoning tasks across four large language models of varying scales. However, this alternative does incur more computational cost.
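The aggregation step of self-consistency can be sketched as a majority vote over the final answers of several sampled reasoning paths. The sketch assumes that the sampled outputs are already available as strings and that each ends its reasoning with a fixed marker phrase; both the marker and the function name are illustrative assumptions.

```python
from collections import Counter


def self_consistent_answer(sampled_outputs, marker="The answer is"):
    """Pick the most frequent final answer among sampled reasoning paths."""
    answers = []
    for text in sampled_outputs:
        if marker in text:
            # Keep only what follows the last occurrence of the marker.
            answer = text.rsplit(marker, 1)[1].strip().rstrip(".")
            answers.append(answer)
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]
```

The extra cost mentioned above comes from generating every sampled path; the vote itself is negligible.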

As discussed in Galactica [63], the CoT process has some limitations. It needs few-shot examples to convey the step-by-step reasoning process, and these take up context space. Also, as internet data is used for pretraining, such data may contain only some of the necessary intermediate steps. Since humans compute and memorize trivial, easy, and well-practiced steps internally, they tend to write down only the principal steps, as writing out everything would lead to long and tedious answers. This leads to missing data wherever internally computed steps are not written down. As a result, more effort is required to review the datasets and explicitly inject the missing steps.

Table 3 lists the fine-tuning methods used in prominent LLMs, along with additional details such as Pretraining (PT) and Fine-Tuning (FT) batch sizes and epochs.

7. Scalability

In recent years, the capacity of transformer-based language models has increased rapidly, from a few million parameters to a trillion parameters. Each increase has improved the model’s language learning abilities and downstream task performance. Recent research has demonstrated that the loss decreases as the model size increases, following a smooth trend of improvement with scale, and that scaling up LLMs improves their abilities across various tasks, including task-agnostic few-shot performance. Recent work has also shown that scaling up produces better performance than more carefully engineered methods. If LLMs are sufficiently pre-trained on a large corpus, this can lead to significant performance improvements on diverse tasks. Over time, it has become evident through experiments that the performance of LLMs can be steadily improved by scaling the model size and training data and by training the model longer (increasing the training steps).

As stated in [83], new behaviors that arise from scaling language models have been increasingly referred to as emergent abilities: abilities that are not present in smaller models but are present in larger models. In other words, quantitative changes in a system result in qualitative changes in behavior. Large language models with over 100 billion parameters have exhibited attractive scaling laws in which emergent zero-shot and few-shot capabilities suddenly arose [83]. As stated in [44], many of the most exciting capabilities of LLMs only emerge above a certain number of parameters, and they have many properties that cannot be studied in smaller models.

For instance, GPT-3 with 175B parameters performed better in a few-shot setting (32 labeled examples) than the fully supervised BERT-Large model on various benchmarks. Additionally, with the increase in size, the GPT model has been effective even in zero-shot and few-shot settings, sometimes matching fine-tuning performance. The experiments in [29] demonstrated that, with the increase in model size, model performance improved steadily for zero-shot and rapidly for few-shot. As their size increases, models tend to become more proficient and efficient at in-context learning. As highlighted in [29], the gap between zero-, one-, and few-shot performance often grows with model capacity, suggesting that larger models are more proficient meta-learners. As demonstrated in [33], the perplexity decreases with the increase in model capacity, training data, and computational resources. As shown in [28], when a large language model is trained on a sufficiently large and diverse dataset, it can perform well across many domains and datasets.

There are various ways to scale, including using a bigger model, training the model for more steps, and training the model on more data, which we explore below. We also look at how the scaling up of the model and data size has affected the performance of models.

7.1. Model Width (Parameter Size)

Kaplan et al. [80] analyzed how the performance of language models depends on the size of the neural models, the computing power used to train them, and the data available for training. They observed precise power-law scalings for performance as a function of training time, context length, dataset size, model size, and compute budget. The key finding from [80] was that LM performance improves smoothly and predictably as model size, data, and compute are scaled up appropriately. Additionally, large models were more sample-efficient than smaller models, as they reached the same level of performance with fewer data points and optimization steps. As per [80], for optimally compute-efficient training, most of the increase in compute should go towards increasing the model size. Only a relatively small increase in data is needed to avoid reuse, and larger batch sizes can help increase parallelism. Additionally, larger batches and training for more steps become possible as more compute becomes available.
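To make the notion of a power-law scaling concrete, the sketch below fits a curve of the form loss = a * size^(-alpha) by linear regression in log-log space. The data points are synthetic and the constants 5.0 and 0.08 are arbitrary values chosen for illustration; they are not results from [80].

```python
import math


def fit_power_law(sizes, losses):
    """Fit loss = a * size**(-alpha) by least squares on log-transformed data."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(l) for l in losses]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, alpha)


# Synthetic (model size, loss) pairs generated from a known power law.
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [5.0 * n ** -0.08 for n in sizes]
a, alpha = fit_power_law(sizes, losses)
```

On a log-log plot such a relationship appears as a straight line with slope -alpha, which is what makes the trend easy to extrapolate to larger models.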

T5 [26] conducted experiments that started with the baseline model having 220M parameters and then scaled it up to a model having 11B parameters. Experiments conducted in T5 showed how performance degraded as the data size shrank. Also, increasing the training time and model size consistently improved the baseline and resulted in an additional bump in performance.

7.2. Training Tokens & Data Size

Although Kaplan et al. [80] showed a power-law relationship between the number of model parameters and performance, they gave the number of pretraining tokens (corpus size) a comparatively small role. Hoffmann et al. [79] also found such a relationship, but recommended that large models be trained on many more training tokens. Specifically, given a 10x increase in computational budget, Kaplan et al. [80] suggest that the model size should increase 5.5x while the number of training tokens should only increase 1.8x. Instead, Chinchilla [79] finds that, as the computation budget increases, model size and the number of training tokens (training data) should be scaled in approximately equal proportions. Although large models achieved better performance, especially in few-shot settings, these efforts were based on the assumption that more parameters would lead to better performance. However, recent work from [79] shows that, for a given compute budget, the best performance is achieved not by the largest models but by smaller models trained on more data. For instance, LLaMA with 13B parameters outperformed GPT-3 with 175B parameters on most benchmarks despite being 10x smaller. Chinchilla helps answer how, given a fixed computational budget, one should trade off model size and the number of training tokens: for every doubling of the model size, the number of training tokens should also be doubled.
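The two allocation rules can be compared numerically. The sketch below assumes that both rules are pure power laws in the compute budget; the Kaplan exponents are back-derived from the 10x figures quoted above (5.5x and 1.8x), and the Chinchilla exponents of 0.5 encode the "equal proportions" rule. The names are ours.

```python
import math

# Exponents (model size, training tokens) as powers of the compute multiplier.
KAPLAN = (math.log10(5.5), math.log10(1.8))  # derived from the 10x example above
CHINCHILLA = (0.5, 0.5)                      # scale both in equal proportion


def scale_factors(compute_multiplier, exponents):
    """Return (model-size factor, token-count factor) for a compute increase."""
    size_exp, token_exp = exponents
    return compute_multiplier ** size_exp, compute_multiplier ** token_exp


kaplan_size, kaplan_tokens = scale_factors(10, KAPLAN)
chinchilla_size, chinchilla_tokens = scale_factors(10, CHINCHILLA)
```

For a 10x budget the equal-proportion rule grows both the model and the token count by about 3.2x each, so it ends up with a smaller model trained on more data than the first rule.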

With a quarter of the parameters and 4x more data than Gopher, Chinchilla significantly outperformed Gopher on a large range of downstream evaluation tasks. Not only does Chinchilla outperform its much larger counterpart, but because of its smaller model size, it also uses less compute for fine-tuning and inference, which reduces fine-tuning and inference costs considerably and greatly facilitates downstream usage on smaller hardware. Although [79] determines how to scale the model size and the dataset for a given compute budget, it disregards the inference budget, which is crucial since the preferred model is the one that is fastest at inference and not at training. For example, Falcon-40B requires 70GB of GPU memory to make inferences, whereas Falcon-7B needs only 15GB, making inference and fine-tuning accessible even on consumer hardware.

Additionally, as per [46], although it may be cheaper to train a large model to reach a certain level of accuracy, a smaller model trained longer will be cheaper at inference. For instance, although [79] recommended training a 10B model on 200B tokens, [46] demonstrated that the performance of a 7B model continues to improve even after 1T tokens. Furthermore, unlike Chinchilla, PaLM, or GPT-3, LLaMA demonstrated how it can train models and achieve SOTA performance using publicly available datasets without relying on proprietary and inaccessible datasets. WeLM [53], a Chinese LM, demonstrated how, by carefully cleaning, balancing, and scaling up the training data size, WeLM could significantly outperform existing models with similar or larger sizes. For instance, on zero-shot evaluations, it matched the performance of Ernie 3.0 Titan which is 25x larger.

7.3. Model Depth (Network Layers)

In LLMs, network width is captured by the parameter size (hidden representation dimension), whereas network depth is the number of self-attention layers. Previous studies have suggested that increasing the network depth reliably increases the network’s representational power. However, recent studies, such as [111], show that this does not always hold. For instance, deepening is not favorable over widening for smaller network sizes: when the width of a deeper network is not large enough, it cannot use its excess layers efficiently. The transition into depth efficiency was clearly demonstrated once the network width was increased. It was shown in [111] that the network width at which the transition between the depth-inefficiency and depth-efficiency regimes occurs depends exponentially on the network’s depth. From a certain network width onwards, increasing the network depth does help improve efficiency; that is, depth leads to efficiency only when it is increased together with the network width. So, first, the width of the network must be chosen appropriately to leverage the full power brought by the depth of the network. For a given parameter budget, there is an optimal depth, so a deeper network performs better only up to that point.

As per the theory proposed in [111], the optimal depth for GPT-3’s 175B parameters would have been 80 layers instead of 96. Following [111], Jurassic-1 [30] used 76 layers for its 178B-parameter model and found a significant gain in runtime performance. Using the same hardware configuration as GPT-3, Jurassic-1 had a 1.5% speedup per iteration and gains of 7% and 23% in batch inference and text generation, respectively. Also, by shifting compute resources from depth to width, more operations can be performed in parallel (width) rather than sequentially (depth).
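The depth-versus-width trade-off at a fixed parameter budget can be illustrated with a common back-of-the-envelope estimate of roughly 12 * layers * width^2 parameters for a Transformer stack (attention plus a feed-forward block with 4x expansion, ignoring embeddings and biases). The hidden sizes used below, 12288 and 13824, are not given in this text and should be read as assumptions for illustration.

```python
def approx_transformer_params(n_layers: int, d_model: int) -> int:
    """Rough parameter count of a Transformer stack, excluding embeddings.

    Per layer: about 4 * d_model**2 for the attention projections plus
    about 8 * d_model**2 for a feed-forward block with 4x expansion.
    """
    return 12 * n_layers * d_model ** 2


# Two shapes with nearly the same budget: deeper and narrower vs. shallower and wider.
deep_narrow = approx_transformer_params(n_layers=96, d_model=12288)
shallow_wide = approx_transformer_params(n_layers=76, d_model=13824)
```

Both shapes land near 174B parameters, which shows how about twenty layers can be traded for a modest increase in width without changing the overall budget.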

Additionally, [80] presented a comprehensive study of the scaling behavior of Transformer language models, but it mainly focused on the upstream (pretraining) loss, whereas [82] found that scaling laws differ between upstream and downstream setups. [82] focuses on transfer learning and shows that, aside from model size, model shape matters for downstream fine-tuning and that scaling protocols operate differently at different compute regions. [82] demonstrated that their redesigned models achieve similar downstream fine-tuning quality while having 50% fewer parameters and training 40% faster than the widely adopted T5-base model. [82] recommends the DeepNarrow strategy, where the model’s depth is preferentially increased before considering any other forms of uniform scaling across other dimensions.

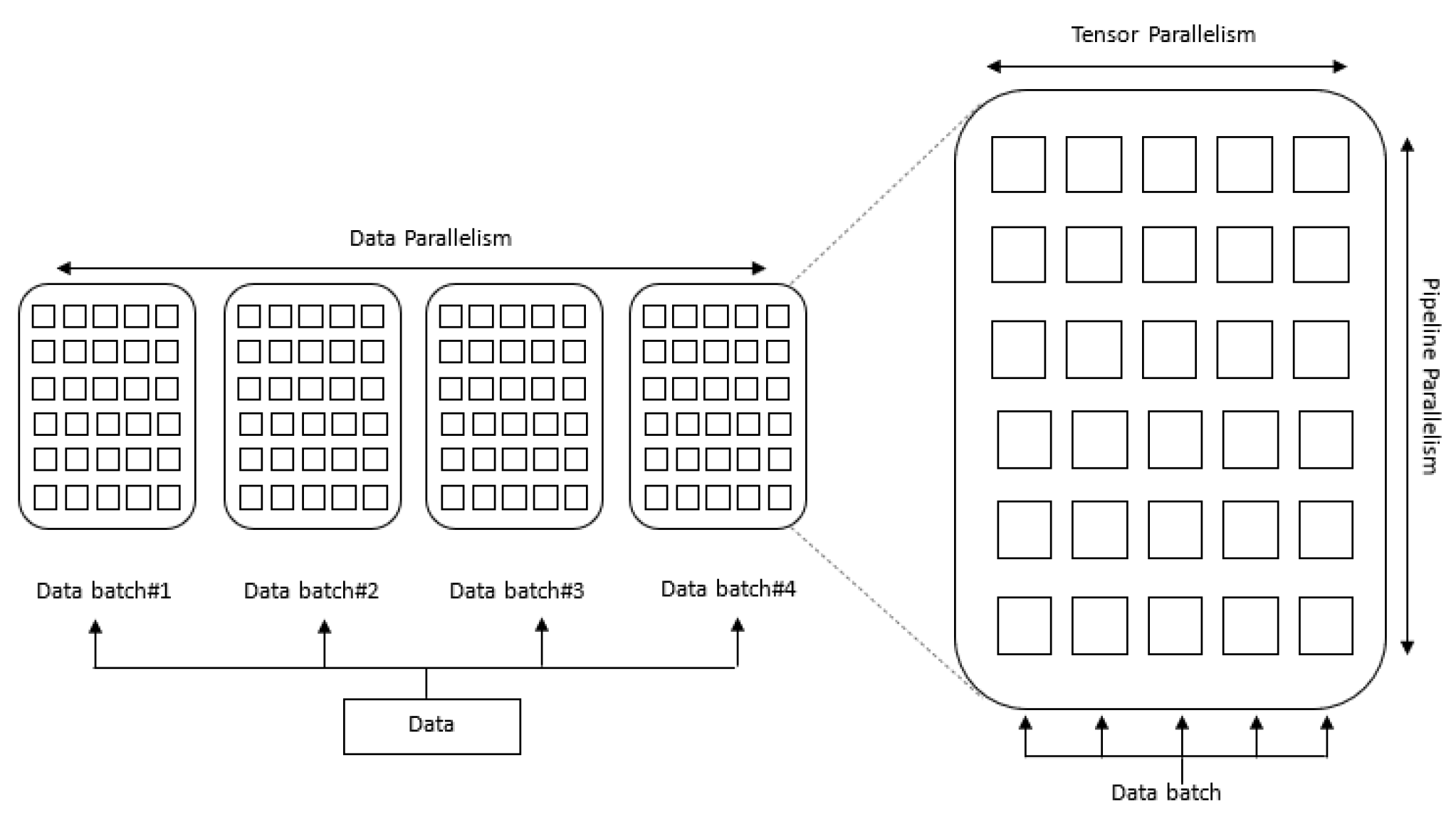

7.4. Architecture - Parallelism

Large models require a lot of memory just to store their parameters. For instance, storing 178B parameters requires more than 350GB of memory in half precision. As stated in [33], as the model size grows beyond 10B parameters, it becomes difficult to train the model. Storing the 200B-parameter model of [33] requires 750GB of space. Training such a model demands several times more memory than just storing the parameters, since gradients and optimizer states are also needed for updating the parameters.
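The arithmetic behind these figures can be sketched as follows. The 2 bytes per parameter corresponds to half precision; the 16 bytes per parameter for training is an assumption that holds for one common setup (mixed-precision training with Adam: half-precision weights and gradients plus single-precision master weights and two optimizer moments) and excludes activations.

```python
GB = 1e9  # decimal gigabytes


def parameter_memory_gb(n_params, bytes_per_param=2):
    """Memory needed just to hold the weights (default: half precision)."""
    return n_params * bytes_per_param / GB


def training_state_memory_gb(n_params, bytes_per_param=16):
    """Memory for weights, gradients, and optimizer state.

    Assumed breakdown per parameter: 2 (fp16 weight) + 2 (fp16 gradient)
    + 4 (fp32 master weight) + 4 + 4 (two Adam moments) = 16 bytes.
    """
    return n_params * bytes_per_param / GB


weights_gb = parameter_memory_gb(178e9)
training_gb = training_state_memory_gb(178e9)
```

Under these assumptions the training state is eight times larger than the half-precision weights alone, before any activation memory is counted.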

Even the largest GPUs available today have around 80GB of memory, and additional space is required to store the optimizer’s state and the intermediate calculations used during backpropagation, so such a model cannot be trained on a single device. As a result, training must be distributed across hundreds of nodes, each with multiple GPUs, which might result in a communication bottleneck. In order to use the nodes efficiently, different parallelization strategies, such as data, model, and pipeline parallelism, are used to achieve high end-to-end throughput (keeping high resource utilization on a cluster of processors).

Figure 6 demonstrates different types of parallelism techniques. Each parallelism dimension trades computation (or communication) overheads for memory (or throughput) benefits. To acquire maximum end-to-end throughput, a balanced composition point should be found along these dimensions. The problem becomes more challenging when considering the heterogeneous bandwidths in a cluster of devices. Below we discuss each of these approaches.

7.4.1. Data parallelism

In this approach, the training batches are partitioned across the devices, followed by the synchronization of gradients from different devices before taking the optimizer step.
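A toy simulation of this scheme is sketched below for a one-parameter linear model. Each "device" computes the gradient on its own shard of the batch, the gradients are averaged (standing in for an all-reduce), and a single optimizer step is taken. The model, the loss, and the equal-shard requirement are simplifying assumptions; no real devices or communication library are involved.

```python
def gradient(w, batch):
    """Gradient of the mean squared error of y ~= w * x over one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_step(w, batch, n_devices, lr=0.01):
    """One SGD step with the batch partitioned across n_devices."""
    if len(batch) % n_devices != 0:
        raise ValueError("batch size must be divisible by n_devices")
    shard_size = len(batch) // n_devices
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(n_devices)]

    # Each device holds a full copy of w and sees only its shard.
    local_grads = [gradient(w, shard) for shard in shards]

    # Synchronization: average the gradients across devices.
    avg_grad = sum(local_grads) / n_devices

    # Every device applies the same update, so the copies stay identical.
    return w - lr * avg_grad
```

With equal-sized shards the averaged gradient equals the full-batch gradient, so the result matches single-device training on the whole batch.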

7.4.2. Tensor parallelism (op-level model parallelism)

Each layer is partitioned across devices within a node. This approach reduces memory consumption by slicing the parameters and activation memory. However, it introduces communication overheads to keep the distributed tensor layouts consistent between successive operators.
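One way to partition a single layer is to split its weight matrix by columns. The sketch below simulates this for a plain matrix multiplication: every "device" holds one column slice of the weights, computes its slice of the output, and the slices are concatenated (standing in for an all-gather). Column-wise splitting is just one possible layout, and the helper names are ours.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def split_columns(w, n_devices):
    """Slice the weight matrix into n_devices equal column blocks."""
    n_cols = len(w[0])
    if n_cols % n_devices != 0:
        raise ValueError("number of columns must be divisible by n_devices")
    step = n_cols // n_devices
    return [[row[i * step:(i + 1) * step] for row in w] for i in range(n_devices)]


def tensor_parallel_matmul(x, w, n_devices):
    shards = split_columns(w, n_devices)             # one weight slice per device
    partials = [matmul(x, shard) for shard in shards]  # local compute
    # Communication step: concatenate the output slices along the column axis.
    return [sum((p[r] for p in partials), []) for r in range(len(x))]
```

Each device stores only a fraction of the weights, at the price of the concatenation step after every partitioned operator.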

7.4.3. Pipeline parallelism

Pipeline parallelism splits the layers of an LM among multiple nodes. The total number of layers is partitioned into stages, and these stages are placed on different devices. Each node is one stage in the pipeline, which receives input from the previous stage and sends results to the next stage. This approach is beneficial from a memory perspective since each device holds only a subset of the total layers of the model, and communication happens only at the boundaries of stages.
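The partitioning into stages can be sketched as below. Layers are represented as plain Python callables and the stages are run one after another, so the sketch shows only how layers are grouped and where activations cross stage boundaries; a real pipeline would overlap micro-batches across devices. All names are illustrative.

```python
def partition_layers(layers, n_stages):
    """Split layers into n_stages contiguous stages of near-equal size."""
    base, extra = divmod(len(layers), n_stages)
    stages, start = [], 0
    for s in range(n_stages):
        size = base + (1 if s < extra else 0)
        stages.append(layers[start:start + size])
        start += size
    return stages


def run_pipeline(stages, micro_batches):
    """Push each micro-batch through the stages in order (no overlap simulated)."""
    outputs = []
    for activation in micro_batches:
        for stage in stages:        # each stage would live on its own device
            for layer in stage:
                activation = layer(activation)
            # Stage boundary: the activation would be sent to the next device here.
        outputs.append(activation)
    return outputs
```

Only the activations at stage boundaries need to be communicated, which is why this scheme keeps inter-node traffic low.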

7.5. Miscellaneous

7.5.1. Training Steps

Over time, it has become evident through experiments that the performance of LLMs can be steadily improved by training the model longer (increasing the training steps). In [26], it was observed that training a smaller model on more data was often outperformed by training a larger model for fewer steps. Increasing the size of T5 from Base to 11B yields consistent improvement but comes at a significant computational cost. In contrast, with the help of its ‘textual knowledge retriever’, REALM outperformed the largest T5-11B model while being 30 times smaller. It is also important to note that T5 accesses additional reading comprehension data from SQuAD during its pre-training (100,000+ examples). Access to such data could also benefit REALM, but it was not used in the REALM experiments. Primer [50] proposed a new architecture that has a smaller training cost than other transformer variants. Its improvements were attributed mainly to squaring ReLU activations and adding a depthwise convolution layer after each Q, K, and V projection in self-attention. As a result, Primer needs much less compute to reach a target one-shot performance. For instance, Primer uses 1/3 of the training compute to achieve the same one-shot performance as the Transformer.

7.5.2. Checkpoints

Parameter checkpoints are saved while pretraining the model so that the expensive pre-training does not have to be repeated. These checkpoints allow researchers to quickly attain strong performance on many tasks without needing to perform the pretraining themselves. For example, the checkpoints released by [26] were used to achieve SOTA results on many benchmarks.

7.5.3. Ensembling

It is common practice to train and evaluate an ensemble of models as a way of putting additional computation to use. [26] demonstrated that an ensemble of models provides substantially better results than a single model, offering an orthogonal means of leveraging additional computation. It was observed in [26] that ensembling models fine-tuned from the same base pre-trained model performed worse than pre-training and fine-tuning all models completely separately, though fine-tune-only ensembling still substantially outperformed a single model.
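A minimal sketch of ensembling is given below. It averages the class probabilities predicted by several models and returns the most likely class; averaging probabilities is one common combination rule and is an assumption here, not necessarily the rule used in [26].

```python
def ensemble_predict(prob_lists):
    """Average per-model class probabilities and return (label, averaged probs).

    prob_lists: one probability vector per model, all over the same classes.
    """
    n_models = len(prob_lists)
    n_classes = len(prob_lists[0])
    avg = [sum(p[c] for p in prob_lists) / n_models for c in range(n_classes)]
    label = max(range(n_classes), key=avg.__getitem__)
    return label, avg
```

Each additional ensemble member adds a full forward pass, so the gain in quality is paid for with inference compute.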

8. LLM Challenges

Language models can generate biased outputs and misinformation and can be used maliciously. Large language models carry potential risks such as outputting offensive language, propagating social biases, and leaking private information. They reproduce and might amplify existing biases in the training data, generating toxic or offensive content. During training, as a language model absorbs the biases and toxicity expressed in the text, it becomes prone to replicating them. This is partly due to the significant presence of unmoderated social media discussions in the pre-training dataset, although the same data can provide additional inductive bias that aids tasks which classify such content. A growing body of work aims to build benchmarks to concretely evaluate these harms, particularly around stereotypes, social bias, and toxicity. Making progress on these issues is challenging since well-intended interventions might have side effects on LM behavior. Below, we discuss the prominent benchmarks that have been proposed to address these LLM challenges.

8.1. Biases

Human biases and undesired social stereotypes exist in large pre-trained language models. One such bias is the gender and occupation bias. The Winogender benchmark [108] is a coreference resolution task and measures gender bias in English occupation nouns such as "nurse" and "engineer." Winogender tests whether a model can correctly determine if a pronoun refers to different occupation words. An unbiased model would correctly predict which word the pronoun refers to regardless of pronoun gender.

Winogender is made up of Winograd schemas, and biases are evaluated by determining whether the gender of the pronoun impacts a model’s coreference resolution performance. More precisely, each sentence has three mentions: an "occupation", a "participant", and a "pronoun", where the pronoun corefers with either the occupation or the participant. The model is prompted to determine the coreference relation, and the evaluation measures whether it does so correctly according to the context of the sentence. The goal is to reveal whether the model has captured societal biases associated with occupations. For example, ‘doctor’ is more likely to be associated with the pronoun ‘he’, while ‘nurse’ is more likely to be associated with the pronoun ‘she’. GLaM was able to close the performance gap between stereotypical and anti-stereotypical examples on the Winogender benchmark, suggesting that large, sparsely activated models may rely less on superficial statistical correlations.

Gopher [78] studies gender and occupation bias via two different evaluations. First, it measures the probability of gendered words following different occupation contexts. Second, it evaluates on the Winogender coreference resolution dataset, where similar coreference accuracy across different pronouns indicates less gender bias. To measure "Gender Word Probability," that is, how probable different gender words are in different occupation contexts, Gopher inputs an occupation prompt like "The occupation was a" into the model and computes a gender bias metric by comparing the probabilities of the prompt being followed by either male or female gendered terms. In PaLM, accuracy on the Winogender coreference task was observed to improve with model scale, and PaLM 540B set a new state-of-the-art result in 1-shot and few-shot settings. In addition, co-occurrence analysis performed on race/religion/gender prompt continuations demonstrates the model’s potential to falsely affirm stereotypes.
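To make the idea of comparing gendered continuations concrete, the sketch below computes a log-ratio between the total probability assigned to male and to female terms after an occupation prompt. This is only one possible way to express such a comparison and is not claimed to be the exact metric used in Gopher; the function name, the term lists, and the probabilities are hypothetical.

```python
import math


def gender_bias_score(term_probs, male_terms, female_terms):
    """Log-ratio of male to female continuation probability.

    term_probs: dict mapping a continuation term to the model's probability
    of that term following the prompt (assumed to be computed elsewhere).
    A positive score means male terms are more probable, a negative score
    means female terms are more probable, and 0.0 means they are balanced.
    """
    p_male = sum(term_probs.get(t, 0.0) for t in male_terms)
    p_female = sum(term_probs.get(t, 0.0) for t in female_terms)
    if p_male <= 0.0 or p_female <= 0.0:
        raise ValueError("both term groups need non-zero probability mass")
    return math.log(p_male / p_female)


# Made-up probabilities for a prompt such as "The nurse was a".
toy_probs = {"man": 0.06, "woman": 0.24}
print(gender_bias_score(toy_probs, ["man"], ["woman"]))
```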

Datasets such as ETHOS help measure LLMs’ ability to identify whether certain English statements are racist, sexist, or neither. Furthermore, CrowSPairs [109] is a crowdsourced benchmark that measures intrasentence-level biases in 9 categories: gender, religion, race/color, sexual orientation, age, nationality, disability, physical appearance, and socioeconomic status. Each example consists of a pair of sentences, one expressing a stereotype and the other an anti-stereotype regarding a particular group, and is used to measure a model’s preference for stereotypical expressions. Higher scores indicate higher bias exhibited by a model. Additionally, StereoSet [110] is another dataset used to measure stereotypical bias across four categories: profession, gender, religion, and race. In addition to intrasentence measurement (similar to CrowSPairs), StereoSet includes measurement at the intersentence level to test a model’s ability to incorporate additional context. To account for a potential trade-off between bias detection and language modeling capability, StereoSet includes two metrics: Language Modeling Score (LMS) and Stereotype Score (SS), which are then combined to form the Idealized Context Association Test score (ICAT).
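As an illustration of how LMS and SS can be combined, the sketch below assumes the ICAT definition given in the StereoSet paper [110], in which both scores are percentages and an SS of 50 is ideal. The function name `icat_score` is our own, and the example values are made up.

```python
def icat_score(lms, ss):
    """Combine Language Modeling Score and Stereotype Score into ICAT.

    Assumed form: icat = lms * min(ss, 100 - ss) / 50, with lms and ss
    given as percentages. A model with lms = 100 and ss = 50 scores 100;
    a fully stereotyped model (ss = 0 or ss = 100) scores 0.
    """
    if not (0 <= lms <= 100 and 0 <= ss <= 100):
        raise ValueError("lms and ss are expected to be percentages in [0, 100]")
    return lms * min(ss, 100 - ss) / 50


print(icat_score(90, 60))
```

The `min(ss, 100 - ss)` term penalizes a preference in either direction, so a model that always picks the anti-stereotype is treated the same as one that always picks the stereotype.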

8.2. Toxic Content

Language models are capable of generating toxic language—including insults, hate speech, profanities, and threats. A model can generate a very large range of toxic content, making a thorough evaluation challenging. Language models are trained to reproduce their input distribution (and not to engage in conversation), so the trend is that toxicity increases with the model scale. Several recent works have considered the RealToxicityPrompts benchmark [105] as an indicator of how toxic their model is.

The RealToxicityPrompts dataset [105] is used to evaluate the tendency of LLMs to respond with toxic language. It consists of about 100k prompts that the model must complete; a toxicity score is then computed automatically for each completion. In LLaMA, toxicity increased with the size of the model. RealToxicityPrompts is quite a straightforward stress test: the user utters a toxic statement to see how the system responds. Some LLMs, for example OPT, have a high propensity to generate toxic language and reinforce harmful stereotypes, even when provided with a relatively innocuous prompt, and adversarial prompts are trivial to find.
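A rough sketch of how per-completion toxicity scores might be aggregated is given below. It assumes that an external classifier has already assigned each completion a score in [0, 1] and that several completions are sampled per prompt. The two aggregates, the 0.5 threshold, the function names, and the toy scores are illustrative assumptions rather than details taken from this section.

```python
def expected_max_toxicity(scores_per_prompt):
    """Mean over prompts of the highest toxicity score among its completions."""
    return sum(max(scores) for scores in scores_per_prompt) / len(scores_per_prompt)


def toxicity_probability(scores_per_prompt, threshold=0.5):
    """Fraction of prompts with at least one completion at or above threshold."""
    hits = sum(1 for scores in scores_per_prompt if max(scores) >= threshold)
    return hits / len(scores_per_prompt)


# Made-up scores: four prompts, three sampled completions each.
toy_scores = [
    [0.1, 0.7, 0.2],
    [0.05, 0.1, 0.3],
    [0.9, 0.4, 0.6],
    [0.2, 0.2, 0.1],
]
print(expected_max_toxicity(toy_scores))
print(toxicity_probability(toy_scores))
```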

SaferDialogues [106] and Safety Bench [107] are two benchmarks used for dialogue safety evaluation. SaferDialogues measures the ability of the model to recover from explicit safety failures, usually in the form of apologizing or recognizing its mistake. In contrast, Safety Bench Unit Tests measure how unsafe a model’s response is, stratified across four levels of topic sensitivity: Safe, Realistic, Unsafe, and Adversarial.

8.3. Hallucination

LLMs are said to hallucinate when they generate information that is fake or incorrect. The hallucination can either be intrinsic or extrinsic. In intrinsic hallucination, the model generates information that contradicts the content of the source text. In contrast, the generated content cannot be contradicted or supported by the source text in extrinsic hallucination. There are various reasons why a model can hallucinate or generate fake information during inference. For instance, if the model misunderstands the information or facts given in the source text, it can lead the model to hallucinate. So to be truthful, the model should have reasoning ability to correctly understand the information from the source text. The other reason why LLMs can generate false information is when the provided contextual information conflicts with the parametric knowledge acquired during pretraining. Additionally, it is observed that models have parametric knowledge bias, where the model gives more importance to the knowledge acquired during pretraining over the provided contextual information.

Also, teacher-forcing is used during pretraining, where the decoder is conditioned on the ground-truth prefix sequences to predict the next token. However, no ground-truth prefix is available during inference, where the model conditions on its own previously generated tokens, and this discrepancy can also make a model hallucinate. Several techniques have been proposed to detect hallucinations in LLMs, such as:

sampling multiple outputs rather than one and checking the consistency of information between them to determine which statements are factual and which are hallucinated;

validating the correctness of the model output against an external knowledge source;

checking whether the generated named entities or <subject, relation, object> tuples appear in the ground-truth knowledge source.
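The first technique above can be sketched as follows. The snippet uses a crude token-overlap test as a stand-in for a real consistency check (for example, an entailment model), so it illustrates only the overall shape of the approach. The function names, the 0.8 overlap threshold, and the toy texts are all hypothetical.

```python
import re


def tokens(text):
    """Lowercased set of alphanumeric tokens in text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(statement, samples, min_overlap=0.8):
    """Fraction of sampled outputs that contain most of the statement's tokens.

    A low score means the statement is not reproduced across samples and is
    therefore a candidate hallucination under this simplified criterion.
    """
    stmt = tokens(statement)
    if not stmt or not samples:
        return 0.0
    supported = 0
    for sample in samples:
        overlap = len(stmt & tokens(sample)) / len(stmt)
        if overlap >= min_overlap:
            supported += 1
    return supported / len(samples)


# Toy outputs sampled for the same prompt (made up for illustration).
toy_samples = [
    "The capital of France is Paris.",
    "Paris is the capital city of France.",
    "Paris is the capital of France, on the Seine.",
]
print(support_score("Paris is the capital of France", toy_samples))
print(support_score("Paris was founded in 1820", toy_samples))
```

Token overlap cannot detect paraphrases or negations, so a practical system would replace the overlap test with a stronger semantic comparison.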

Benchmarks such as TruthfulQA [103] have been developed to measure the truthfulness of language models. This benchmark evaluates the risk of a model generating misinformation or false claims that mimic popular misconceptions and false beliefs. It was observed in [103] that the largest models were generally the least truthful, so scaling up the model size, while it increased performance on other tasks, was less promising for improving the model’s truthfulness.

8.4. Cost & Carbon Footprints

As stated in CPM-2 [34], the cost of using pre-trained language models increases with the growth of model sizes. The cost consists mainly of three parts.

Computational cost for pre-training: a super large model requires several weeks of pre-training with thousands of GPUs.

Storage cost for fine-tuned models: a large language model usually takes hundreds of gigabytes (GBs) to store, and as many model copies as the number of downstream tasks need to be stored.

Equipment cost for inference: it is expected to use multiple GPUs to infer a large language model.

So, as model size increases, these models become hard to use with limited computational resources and unaffordable for most researchers and institutions.

Furthermore, the pre-training phase of large language models consumes massive amounts of energy, which is responsible for carbon dioxide emissions. The formulas used in LLaMA to estimate the Watt-hours (Wh) and carbon emissions are listed in equations 6 and 7, where the constant factor in equation 7 is the US national average carbon intensity factor and PUE represents Power Usage Effectiveness.
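As a rough sketch of the two estimates described above, the snippet below assumes the LLaMA-style form in which energy is GPU-hours times per-GPU power times PUE, and emissions are energy times the grid’s carbon intensity. The function names are our own, and the example inputs (1,000 GPU-hours, 400 W per GPU, a PUE of 1.1, and 0.385 kg CO2eq per kWh) are illustrative assumptions that should be checked against [46] before being relied on.

```python
def energy_wh(gpu_hours, gpu_power_watts, pue):
    """Estimated energy in Watt-hours: GPU-hours x GPU power x PUE."""
    return gpu_hours * gpu_power_watts * pue


def emissions_tco2eq(energy_in_wh, kg_co2eq_per_kwh):
    """Estimated emissions in tonnes of CO2 equivalent.

    Converts Wh to kWh, multiplies by the carbon intensity of the grid
    (kg CO2eq per kWh), and converts kg to tonnes.
    """
    kwh = energy_in_wh / 1_000
    return kwh * kg_co2eq_per_kwh / 1_000


# Illustrative inputs only; none of these values come from this section.
wh = energy_wh(gpu_hours=1_000, gpu_power_watts=400, pue=1.1)
print(wh, emissions_tco2eq(wh, kg_co2eq_per_kwh=0.385))
```

Keeping the carbon intensity as an explicit parameter makes it easy to compare the same training run across data center locations.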

As stated in LLaMA [46], carbon emission also depends on the location of the data center used during pre-training of the network. For instance, BLOOM and OPT were trained on grids with different carbon intensities, leading to different emission totals. As stated in [104], several factors are involved in computing the electricity required to run an NLP model, such as the algorithm, the program, the number of processors running the program, the speed and power of those processors, a data center’s efficiency in delivering power and cooling the processors, and the energy supply mix (renewable, gas, coal). Cloud data centers can also be more energy efficient than typical data centers. A more detailed and granular formula, stated in equation 8, was presented in [104] to capture the carbon footprint of an NLP model.

To decrease the footprint of training, an ML researcher should pick the DNN model, the processor, and the datacenter carefully. Equations 6 and 7 above can be restated in terms of energy consumption and CO2 emission as equations 9 and 10.

To address the cost and carbon footprint problems, there is a need to improve the energy efficiency of algorithms, data centers, software, and hardware involved in implementing NLP models. Emphasis should be given to reducing carbon footprint by building more efficient LLMs. For example, OPT [45] is comparable to GPT-3, and requires only 1/7th of the carbon footprint to develop.

[104] also makes three suggestions that could eventually help reduce the CO2e footprint:

report energy consumed and CO2e explicitly;

reward improvements in efficiency, as well as traditional metrics, at ML conferences; and

include the time and number of processors used for training to help everyone understand its cost.

As highlighted in [104], large but sparsely activated DNNs can consume substantially less energy than large, dense DNNs without sacrificing accuracy, despite using as many or even more parameters.

8.5. Open source & Low Resource

The costs of training LLMs are only affordable for well-resourced organizations. Furthermore, until recently, most LLMs were private and not publicly released. As a result, most of the research community has yet to be included in developing LLMs. Language models for languages other than English are limited in availability: very few non-English LMs, such as [33] and [36], are available. Meanwhile, a lot of untapped non-English resources are available on the internet and need to be explored. More work is required to accommodate low-resource and non-English languages in LLMs. Furthermore, the impact of increasing the proportion of multilingual data on multilingual and cross-lingual tasks needs to be explored.

One strategy for mitigating the low-resource challenge is to acquire more language data. Some of these attempts have focused on collecting human translations, while others have leveraged large-scale data mining and monolingual data pipelines to consolidate data found across the web. The latter techniques are often plagued by noise and biases, making it difficult to validate the quality of the created datasets. Finally, they also require high-quality evaluation datasets or benchmarks to test the models. NLLB [67] seeks to understand the low-resource translation problem from the perspective of native speakers and studies how to automatically create training data that moves low-resource languages towards high-resource ones. It proposed the Flores-200 many-to-many benchmark dataset, which doubled the language coverage of a previous effort known as Flores-101. Flores-200 was created by professional human translators who translate the FLORES source dataset into the target language, while a separate group of reviewers performs quality assessments and provides translation feedback to the translators.

9. Future Directions & Development Trends

LLMs have set the stage for a paradigm shift in developing future software applications. LLMs also have the potential to disrupt many well-established businesses. To help realize LLMs’ full potential, in this section we describe their future directions, possible development trends, and unrealized utility. Though we enumerate these directions and trends under different facets to facilitate elucidation, the facets are strongly interconnected.

9.1. Interpretability & Explainability

An LLM’s ability to explain its decisions and predictions is crucial to promote trust, accountability, and widespread acceptance. Current research targets methods that can explain the model’s decision-making process and inner workings in a format understandable to humans. The approaches we discuss below originated in the machine learning domain. They need to evolve to serve the LLMs’ context.

Some architectures are inherently interpretable. For example, decision trees, rule-based models, or sparse models facilitate understanding a model’s decision in a transparent and human-understandable format. More research in this area is critical for advancing LLM applications. Using the LLM’s attention mechanism, we can highlight important parts of the input data that contributed to the model’s predictions. Attention weights indicate the model’s focus and thus serve as a means for interpretability.

Another approach involves extracting human-readable rules or generating post-hoc explanations that explain model predictions in natural language or other more straightforward representations. Creating simpler proxy or surrogate models that approximate the behavior of complex original models is another approach to improving interpretability. LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) are approaches for computing feature importance and attribution, which help to attribute the model’s predictions to specific input features. Salience maps and heatmaps (i.e., gradient-based visualization) also help to highlight essential regions in the input data that influence predictions. Finally, investigating methods that provide certified or verified explanations would help guarantee the reliability of explanations.
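The idea of attributing a prediction to input features can be illustrated with a leave-one-out (occlusion) sketch. Note that this is neither LIME nor SHAP; it is a simpler perturbation-based variant of the same idea, shown with a toy scoring function standing in for a real model. The function names and the word weights are hypothetical.

```python
def occlusion_attribution(tokens, score_fn):
    """Attribute a score to each token by removing it and re-scoring.

    Returns a list of (token, attribution) pairs, where attribution is the
    drop in score observed when that token is left out of the input.
    """
    base = score_fn(tokens)
    attributions = []
    for i, tok in enumerate(tokens):
        reduced = tokens[:i] + tokens[i + 1:]
        attributions.append((tok, base - score_fn(reduced)))
    return attributions


def toy_score(tokens):
    """Toy stand-in for a model: sums hand-picked sentiment weights."""
    weights = {"great": 2.0, "terrible": -2.0, "not": -0.5}
    return sum(weights.get(t, 0.0) for t in tokens)


print(occlusion_attribution(["the", "movie", "was", "great"], toy_score))
```

Leave-one-out attribution ignores interactions between features, which is one of the limitations that Shapley-value methods such as SHAP are designed to address.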

Developing interactive tools that enable users to interact with the model at various levels of granularity can be used to provide user-centric explanations. This is akin to the drill-down and roll-up features of Online Analytical Processing (OLAP) in data warehousing applications. Disclosing a model’s capabilities, biases, and potential errors to users is a required step toward emphasizing the importance of ethical considerations and transparency. Lastly, educating users about model capabilities and limitations and providing guidance on interpreting model outputs is mandatory for advancing LLMs.

9.2. Fairness