1. Introduction

Urban trees play a vital role in the sustainable development of cities. They improve the quality of the living environment, supply oxygen, and purify urban air [1]. Urban trees typically grow in isolation among various urban facilities, so identifying individual tree species is a fundamental aspect of urban management [2]. Traditional tree species classification relies heavily on on-site observation and the visual recognition of inspectors, which is subjective and prone to errors. This approach is also time-consuming and costly [3]. The rapid advancement of Unmanned Aerial Vehicle (UAV) remote sensing technology now provides high-resolution, multi-source data in a timely, effective, and cost-efficient manner. Developing a suitable algorithm to exploit these data is essential for delivering accurate and timely individual tree classification [4].

Recently, numerous advances have been made in urban tree species classification, using data sources such as hyperspectral images, LiDAR data, and high-resolution UAV images, and two primary classification approaches: 1) Random Forest (RF) and Support Vector Machine (SVM); and 2) Artificial Neural Networks (ANN) and their derivatives, including Convolutional Neural Networks (CNN) and Hierarchical Convolutional Neural Networks (H-CNN) [5,6,7,8,9].

Liu et al. [10] performed supervised classification by extracting eight texture features, including mean and variance, and applying Maximum Likelihood Classification (MLC) and RF. Their findings indicated that MLC required significantly less time than RF. Slavík et al. [11] proposed a novel approach for tree species classification using Generalized Linear Models (GLM) and RF on UAV laser scanning 3D point clouds. Using a method based on the Clark-Evans spatial aggregation index (CE), they demonstrated that RF achieved significantly higher classification accuracy than GLM. Rochdi et al. [12] used RF and SVM to assess the classification performance of LiDAR and RapidEye data, individually and in combination. Their results showed that combining LiDAR and RapidEye data significantly improved classification accuracy and that the RF classifier outperformed SVM in tree species classification. Adelabu et al. [13] applied RF and SVM, implemented in EnMAP-Box, to tree species classification of 5-band RapidEye satellite data. Their study revealed that SVM outperformed RF when training samples were limited. Freeman et al. [14] investigated the performance of RF in the presence of species imbalances across strata in Nevada and proposed strategies to mitigate imbalances in sampling intensity and target species within the training data. However, traditional machine learning methods still rely on manually acquiring relevant information and hand-crafting features such as leaf shape, tree crown, vegetation indices, and texture features.

In 1998, LeCun et al. [15] used CNNs to handle the variability of two-dimensional shapes, highlighting the significant advantages of CNNs in automatically extracting features, capturing various visual structures, and facilitating classification. In 2017, Krizhevsky et al. [16] applied a deeper CNN architecture, AlexNet, to the ImageNet dataset; trained extensively on GPUs, this model achieved breakthrough results on the dataset. Li et al. [26] used a dual-task Gabor CNN on the Caffe platform for rapid tree species identification, presenting intuitive results in an application interface. Lei et al. [18] used a Hierarchical Convolutional Neural Network (H-CNN) for multi-temporal image classification, leveraging rich individual tree phenological features to improve classification outcomes. With the rapid development of UAVs, high-resolution aerial images with greater detail and texture than satellite images have become more accessible. Qin et al. [19] developed a system in 2018 that uses deep neural networks to classify high-resolution images, significantly reducing manual costs. Egli [20] used a shallow CNN for tree species classification based on high-resolution drone images, obtaining classification accuracies consistently above 92% regardless of external conditions. Lee et al. [21] compared the performance of two deep learning algorithms and showed that incorporating mixed tree species improves model classification performance. Li et al. [22] applied three CNN models to classify single-tree images in high-resolution imagery and found a substantial advantage over RF and SVM. Liang et al. [23] used a Spectral-Spatial Parallel Convolutional Neural Network (SSPCNN) for feature classification in hyperspectral images, with highly competitive results. Nezami et al. [24] investigated tree species classification using a 3D Convolutional Neural Network (3D-CNN); their best 3D-CNN classifier achieved approximately 5% higher accuracy than a Multilayer Perceptron (MLP) at all levels. Shi et al. [25] used an adjusted asymmetric convolution transfer learning model for hyperspectral image classification, significantly improving local tree species classification accuracy. Li et al. [26] enhanced various CNN models for identifying complex forest tree species, improving accuracy by introducing an edge loss and accelerating model convergence. Furthermore, Chen et al. [27] integrated deep learning algorithms with UAV LiDAR data to segment individual tree crowns, offering a comprehensive framework for accurate crown segmentation in forest conditions. However, the intertwined growth of trees and variations in canopy images can affect the final classification results. Hardware limitations of UAV imaging equipment and natural factors during drone flights, such as weather and sunlight, can also degrade the data by weakening and blurring crown boundaries.

A review of these studies shows that CNNs are highly effective in individual tree classification. Nevertheless, the varied outcomes of different CNN variants and the inherent limitations of two-dimensional image data for tree species classification prompted us to explore alternative approaches. We report a successful approach to these challenges: a classification methodology based on a Pseudo Tree Crown (PTC) perspective, using a CNN-based model implemented in PyTorch, a widely used deep learning framework.

The exploration of PTC traces back to earlier efforts by Fourier et al. [28] and Zhang and Hu [29], who identified a promising avenue by leveraging the longitudinal profile of tree crowns, marking an initial attempt to correlate physical crown attributes with their optical nadir views. Their results showed a strong correlation. However, because of computational constraints and its reliance on parameterized equations, this approach lacked flexibility across contexts and did not gain widespread adoption.

In 2017, Balkenhol and Zhang [30] further explored extending this correlation to three dimensions, between the physical tree crown and a three-dimensional grayscale view of the crown. Limited by the classification methods of the time, the study primarily showcased individual physical crown attributes alongside their 3D grayscale representations, laying the groundwork for the PTC concept. Another prototype was reported by Miao et al. [31].

Using PyTorch, we obtained a classification accuracy of 85% with the original nadir view images and 97% with the PTC approach.

3. Methodology

Our study comprises two primary components, which represent the main contributions of this research. First, we introduce the Pseudo Tree Crown (PTC) as a new input image in place of standard nadir view images, marking its first use in the classification literature. Second, we compare three prominent AI-based classification approaches (PyTorch, TensorFlow 2.0, and YOLOv5) with the conventional Random Forest classifier.

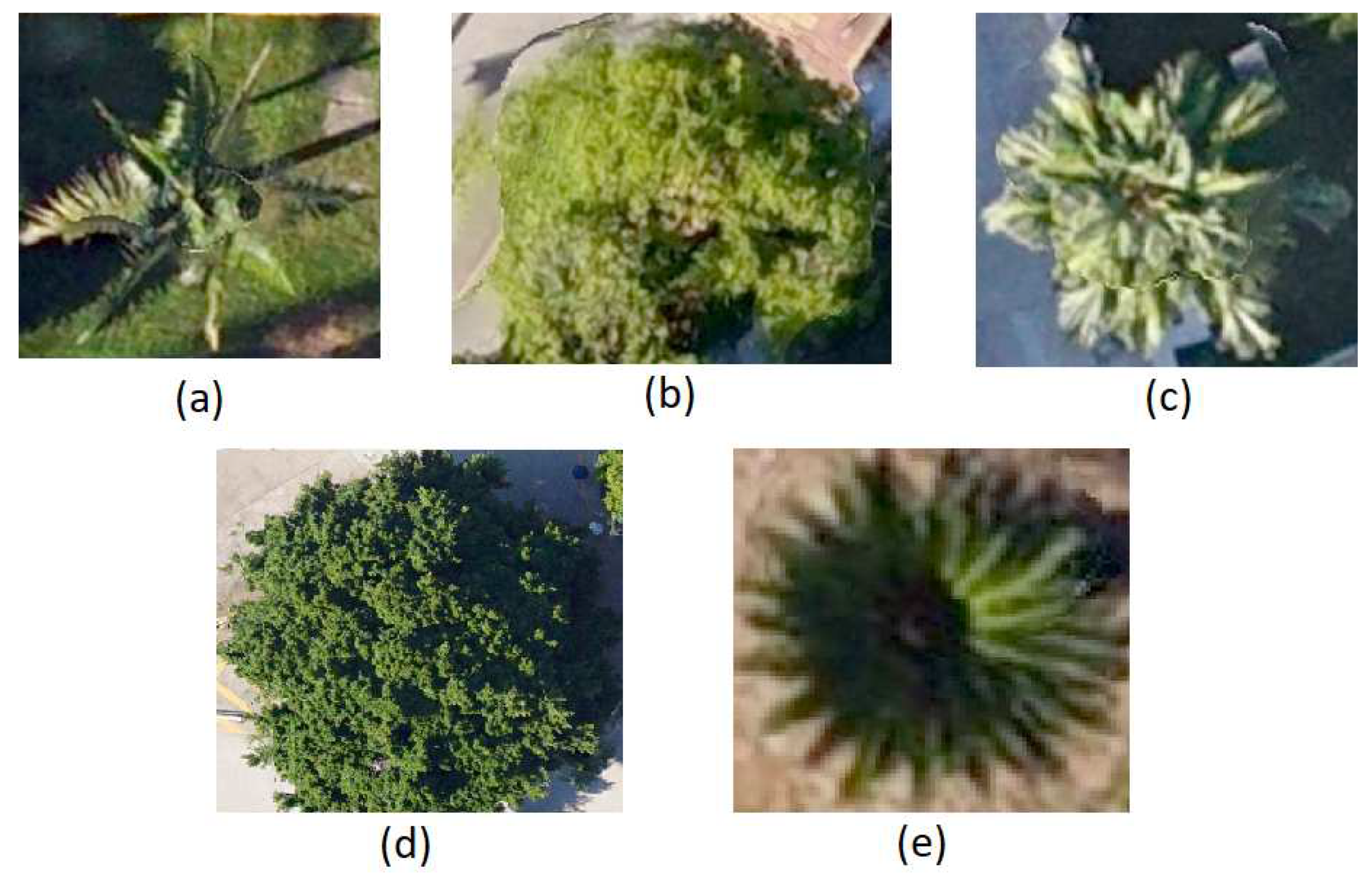

3.1. Pseudo Tree Crown (PTC)

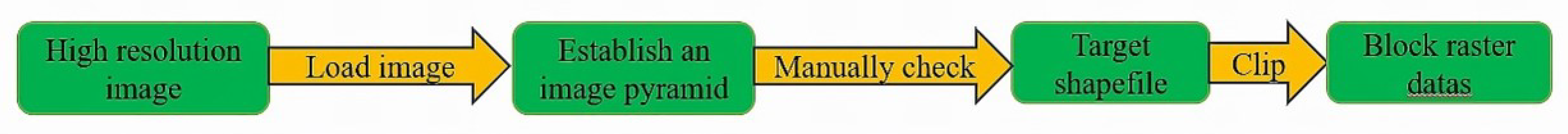

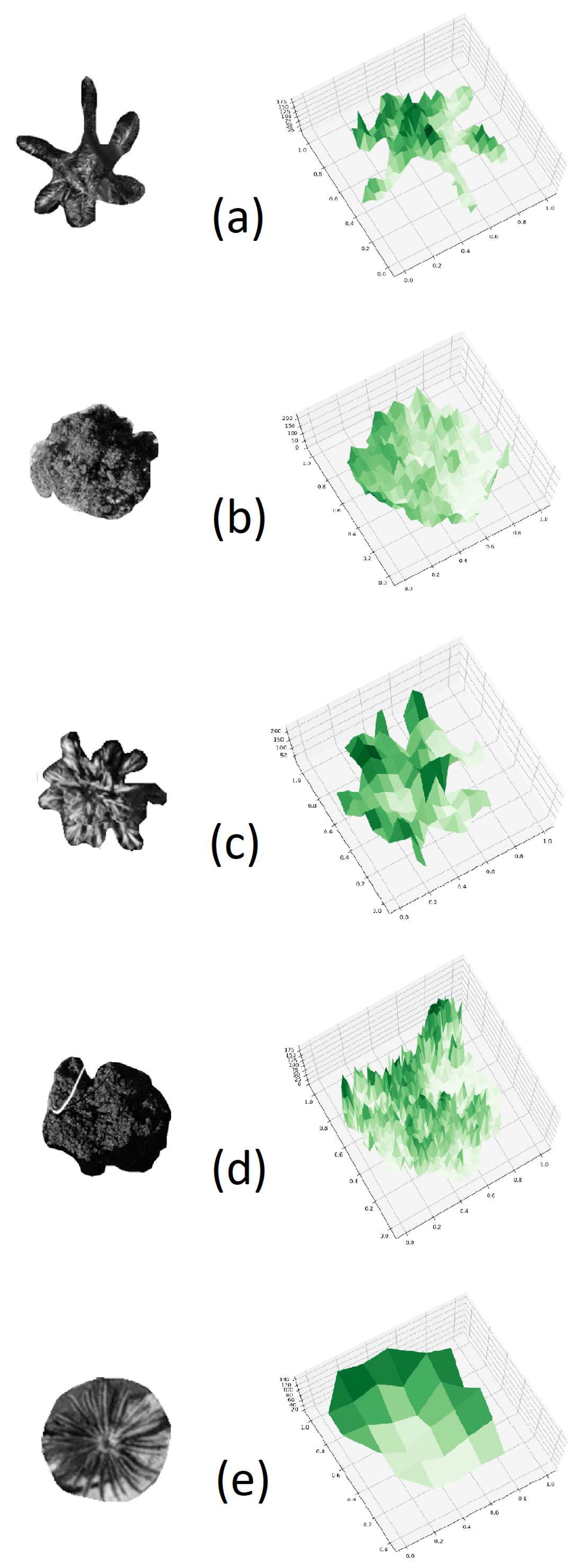

The first step is to generate the PTC from the nadir view tree crown images, as shown in Figure 4. All collected samples were parsed to extract the number of rows, columns, and bands of each sample. An affine matrix was then formulated, and the image's projection details were extracted to retrieve the pixel data of the green band. These data were converted into an array and organized into a grid. Any pixel values exceeding 255 were reset to nadir values, and the subplot's projection mode was configured for three-dimensional representation.

To create the PTC, we used an azimuth angle of -120° and an elevation angle of 75°. These values were initially adopted as a conventional setting for Python 3D plotting. To verify their suitability, we examined the effect of varying the azimuth and elevation angles, as described in Section 4.2. We also compared PTCs at different spatial resolutions, and the original nadir view images in place of PTC, as detailed in Sections 4.3 and 4.4, respectively.
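The PTC generation steps above can be sketched with Matplotlib's 3D plotting as follows. This is a minimal sketch under stated assumptions, not the authors' code: reading the GeoTIFF and its affine transform (for example with GDAL) is omitted, the function name `make_ptc` is hypothetical, and pixels above 255 are set to 0 as a placeholder for the reset step described above.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen; no display is needed
import matplotlib.pyplot as plt
import numpy as np


def make_ptc(green, azim=-120.0, elev=75.0, size_px=224, dpi=100):
    """Render a single-tree green-band patch as a 3D grey-value surface.

    Assumptions (hypothetical, not the authors' exact procedure):
    - `green` is a 2D array of green-band digital numbers for one crown;
    - pixels above 255 are treated as invalid and set to 0 (background).
    Returns an (H, W, 3) uint8 RGB image of the rendered pseudo tree crown.
    """
    z = np.asarray(green, dtype=float).copy()
    z[z > 255] = 0.0  # hypothetical handling of out-of-range pixels
    rows, cols = z.shape
    # Grid of column (x) and row (y) indices; the grey value acts as height.
    x, y = np.meshgrid(np.arange(cols), np.arange(rows))

    fig = plt.figure(figsize=(size_px / dpi, size_px / dpi), dpi=dpi)
    ax = fig.add_subplot(projection="3d")  # 3D projection for the subplot
    ax.plot_surface(x, y, z, cmap="gray")
    ax.view_init(elev=elev, azim=azim)  # viewing angles from Section 3.1
    ax.set_axis_off()

    fig.canvas.draw()
    rgb = np.asarray(fig.canvas.buffer_rgba())[..., :3].copy()
    plt.close(fig)
    return rgb
```

For example, `make_ptc(patch, azim=90.0)` renders the same crown from one of the alternative angles examined in Section 4.2.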

3.2. Convolutional Neural Networks (CNN)

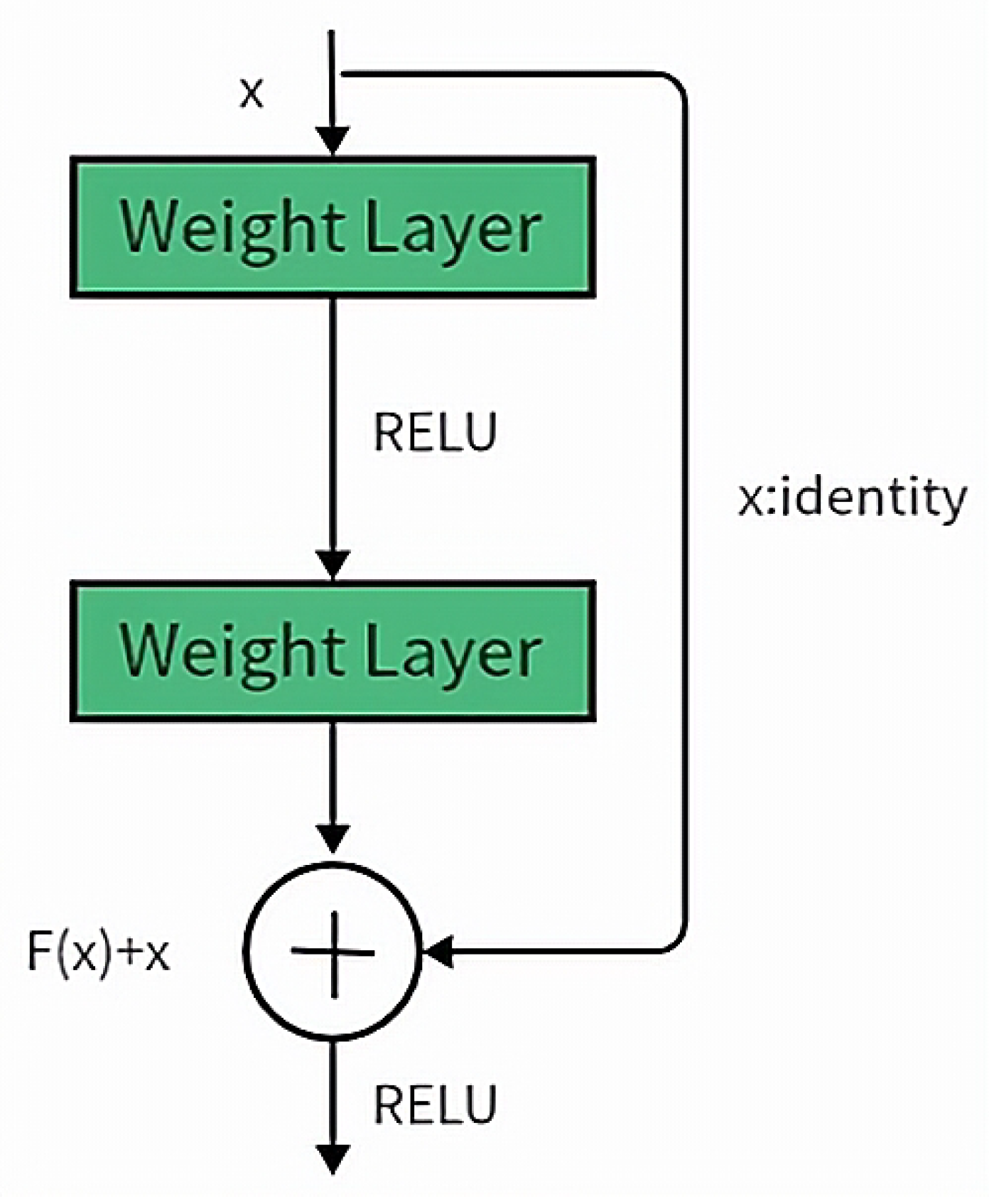

Increasing the depth of a CNN allows it to extract richer features and can improve classification results. However, as layers are added, traditional CNNs suffer from network degradation and from vanishing and exploding gradients, so deeper networks can perform worse than shallower ones. ResNet, short for Residual Network, addresses this by introducing residual blocks, as shown in Figure 5. ResNet is mainly used for image classification and is also widely applied in areas such as image segmentation and object detection.

ResNet addresses vanishing gradients and accuracy degradation in deep networks by incorporating residual learning into the traditional CNN. This allows the network to become deeper while maintaining accuracy and controlling computational cost, effectively solving the degradation problem in deep networks. ResNet's main design elements are the use of Batch Normalization (BN) layers after convolutions, in place of Dropout, to mitigate vanishing and exploding gradients, and residual learning to address network degradation.
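The residual learning idea referred to above can be written in the standard form of the original ResNet formulation: a block with input $\mathbf{x}$ learns a residual mapping $\mathcal{F}$ with weights $\{W_i\}$ and adds it to an identity shortcut. When a block changes the number of channels or the spatial resolution, a linear projection $W_s$ (a 1 × 1 convolution in practice) is applied on the shortcut instead.

$$
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}, \qquad
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + W_s\,\mathbf{x}
$$

Because the identity shortcut passes $\mathbf{x}$ through unchanged, the gradient of the loss with respect to $\mathbf{x}$ contains a direct term that bypasses $\mathcal{F}$, which is one common intuition for why very deep residual networks remain trainable.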

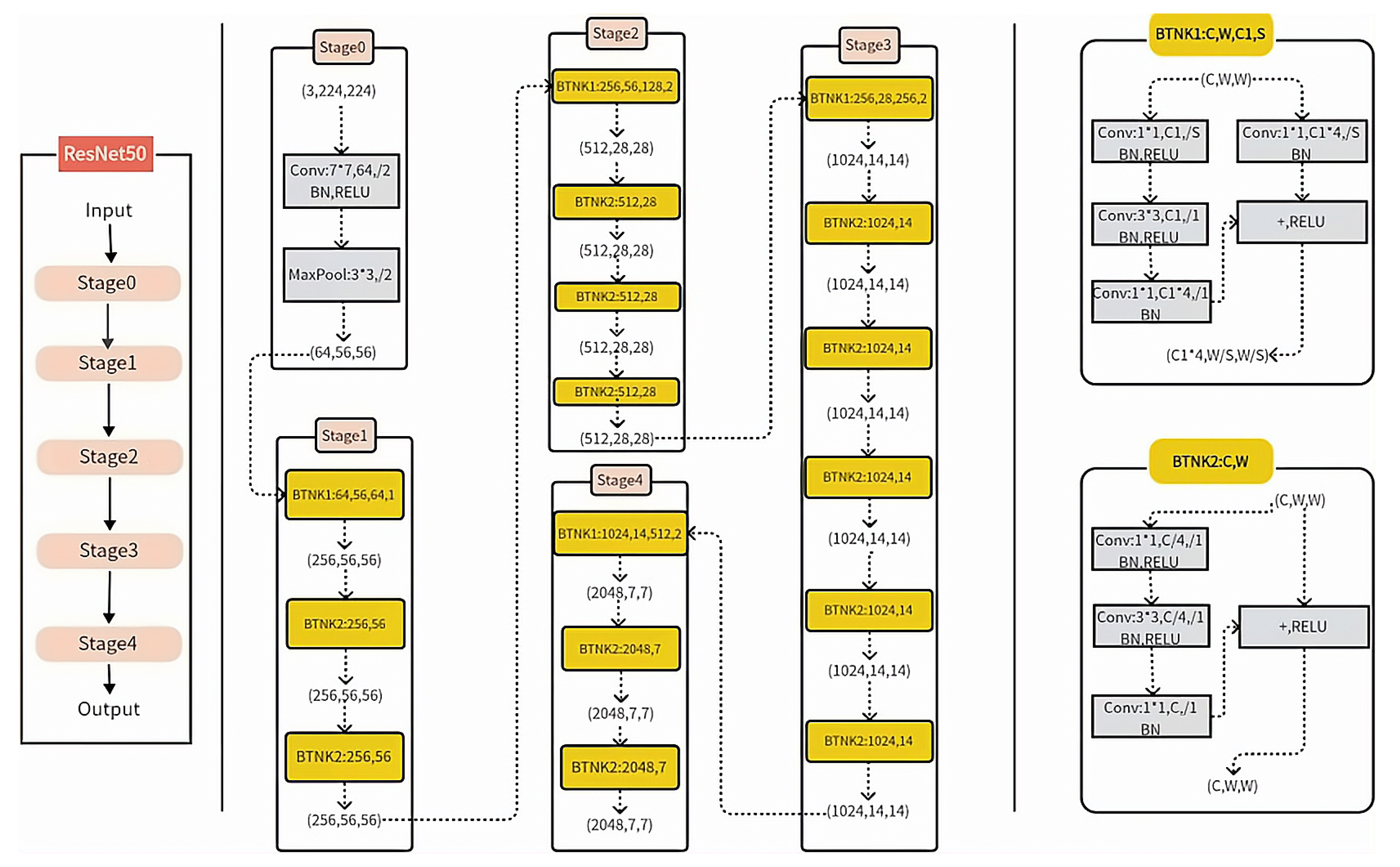

Among the various deep residual networks, we chose ResNet-50 to classify the PTC images in the PyTorch and TensorFlow 2.0 frameworks. In our experiments, ResNet-50 offered a good balance between model depth and performance. As the name suggests, ResNet-50 consists of 50 layers, as shown in Figure 6. Data flows through the network as input → stage0 → stage1 → stage2 → stage3 → stage4 → output, where stages 1 to 4 consist of 3, 4, 6, and 3 bottleneck blocks, respectively.

In stage0, the input (3, 224, 224) undergoes a convolution operation with 64 filters of size (7, 7) and a stride of 2. Batch Normalization (BN) is applied, followed by the Rectified Linear Unit (ReLU) activation function. Finally, max-pooling with a kernel size of 3×3 and a stride of 2 is performed, resulting in an output size of 56. After this stage, the image shape changes from (3, 224, 224) to (64, 56, 56).
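The spatial sizes quoted for stage0 follow from the usual output-size formula ⌊(n + 2p − k)/s⌋ + 1 for input size n, kernel size k, stride s, and padding p. The short Python check below assumes the padding values of the standard ResNet-50 definition (3 for the 7 × 7 convolution and 1 for the 3 × 3 max-pooling), which the text above does not state.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


# Stage0 of ResNet-50 with a 224 x 224 input; padding values follow the
# standard ResNet-50 definition (assumption, not stated in the text).
after_conv = conv_out(224, kernel=7, stride=2, padding=3)
after_pool = conv_out(after_conv, kernel=3, stride=2, padding=1)
print(after_conv, after_pool)  # 112 56
```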

In stage1, three bottleneck blocks process the output of stage0. Each bottleneck block consists of a 1×1 convolution with 64 output channels, a 3×3 convolution with 64 output channels, and a 1×1 convolution with 256 output channels. These operations transform the feature map to (256, 56, 56).
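A stage1 bottleneck block of the kind described above can be sketched in PyTorch as follows. This is an illustrative re-implementation, not the code used in this study; the class name `Bottleneck` is our own, and placing the stride on the 3 × 3 convolution follows torchvision's variant of ResNet-50.

```python
import torch
from torch import nn


class Bottleneck(nn.Module):
    """ResNet-50 style bottleneck: 1x1 reduce, 3x3, 1x1 expand, plus shortcut."""

    expansion = 4

    def __init__(self, in_ch, mid_ch, stride=1):
        super().__init__()
        out_ch = mid_ch * self.expansion
        self.body = nn.Sequential(
            nn.Conv2d(in_ch, mid_ch, 1, bias=False),
            nn.BatchNorm2d(mid_ch),
            nn.ReLU(inplace=True),
            nn.Conv2d(mid_ch, mid_ch, 3, stride=stride, padding=1, bias=False),
            nn.BatchNorm2d(mid_ch),
            nn.ReLU(inplace=True),
            nn.Conv2d(mid_ch, out_ch, 1, bias=False),
            nn.BatchNorm2d(out_ch),
        )
        # Projection shortcut (1x1 conv) when the shape changes, identity otherwise.
        if stride != 1 or in_ch != out_ch:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_ch, out_ch, 1, stride=stride, bias=False),
                nn.BatchNorm2d(out_ch),
            )
        else:
            self.shortcut = nn.Identity()
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        # Residual learning: output = ReLU(F(x) + shortcut(x))
        return self.relu(self.body(x) + self.shortcut(x))


# Stage1: three blocks turning (64, 56, 56) into (256, 56, 56).
stage1 = nn.Sequential(Bottleneck(64, 64), Bottleneck(256, 64), Bottleneck(256, 64))
out = stage1(torch.randn(1, 64, 56, 56))
print(tuple(out.shape))  # (1, 256, 56, 56)
```

The first block needs a 1 × 1 projection shortcut because its input has 64 channels and its output 256; the next two blocks use identity shortcuts.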

Similar operations are performed in stages 2, 3, and 4, gradually reducing the spatial dimensions and increasing the number of channels. Finally, global average pooling is applied to the (2048, 7, 7) feature map, resulting in an output of (2048, 1, 1). This is flattened into a one-dimensional vector and passed through fully connected layers for classification.
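The shape changes through stages 1 to 4 can be traced with plain arithmetic. The sketch below assumes, as in the standard ResNet-50, that only the first block of stages 2 to 4 uses stride 2 and that each bottleneck expands its inner width by a factor of 4; `stage_shapes` is a hypothetical helper.

```python
def stage_shapes(channels_in=64, spatial=56):
    """Trace (channels, H, W) through ResNet-50 stages 1-4 and global pooling.

    The first bottleneck of each stage sets the stride (1 for stage1, 2 for
    stages 2-4); the bottleneck output width is 4x its inner 3x3 width.
    """
    shapes = [("stage0", (channels_in, spatial, spatial))]
    for name, inner, stride in [("stage1", 64, 1), ("stage2", 128, 2),
                                ("stage3", 256, 2), ("stage4", 512, 2)]:
        spatial = (spatial + 2 * 1 - 3) // stride + 1  # 3x3 conv, padding 1
        shapes.append((name, (inner * 4, spatial, spatial)))
    channels = shapes[-1][1][0]
    shapes.append(("global_avg_pool", (channels, 1, 1)))
    shapes.append(("flatten", (channels,)))
    return shapes


for name, shape in stage_shapes():
    print(name, shape)
```

With the 64 × 64 inputs listed in Section 3.3.4, stage0 produces a 16 × 16 map, and `stage_shapes(spatial=16)` ends at (2048, 2, 2) before pooling.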

Because this experiment involves five tree species, the network outputs five classes, and accuracy values are reported for each category. The detailed architecture specifications of ResNet-50 are shown in Table 1.
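The final classification step for the five species can be sketched as a PyTorch head on the stage4 feature map. This is a minimal illustration with random input, assuming a single fully connected layer as in the standard ResNet-50; in torchvision, `torchvision.models.resnet50(num_classes=5)` builds the full network with such a five-way output layer.

```python
import torch
from torch import nn

NUM_SPECIES = 5  # Aa, Mi, Lc, Fm, Sp

# Head applied to the stage4 feature map: global average pooling,
# flattening to a 2048-d vector, and a linear layer with one logit per species.
head = nn.Sequential(
    nn.AdaptiveAvgPool2d(1),  # (N, 2048, 7, 7) -> (N, 2048, 1, 1)
    nn.Flatten(),             # -> (N, 2048)
    nn.Linear(2048, NUM_SPECIES),
)

features = torch.randn(2, 2048, 7, 7)  # random stand-in for stage4 output
logits = head(features)
probs = logits.softmax(dim=1)          # per-species probabilities
print(tuple(logits.shape))             # (2, 5)
```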

3.3. Deep Learning Framework

3.3.1. PyTorch

Most deep learning frameworks focus on either usability or speed, making it challenging to balance the two. PyTorch is a machine learning library showing that these two goals can be largely compatible. It provides an imperative, Pythonic programming style that treats code as a model, makes debugging easy, and remains compatible with other popular scientific computing libraries, while staying efficient and supporting GPU acceleration. Earlier efforts recognized the value of dynamic eager execution in deep learning, and some recent frameworks have implemented this define-by-run approach. However, they either sacrifice performance or rely on faster but less expressive languages, limiting their applicability. Through careful implementation and design choices, PyTorch achieves dynamic, eager execution without sacrificing performance: it supports dynamic tensor computations with automatic differentiation and GPU acceleration while maintaining performance comparable to the fastest deep learning libraries. This combination has made it popular in the research community. In short, PyTorch provides a GPU-accelerated, array-based programming model with automatic differentiation integrated into the Python ecosystem.
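As a minimal illustration of the eager, automatically differentiated execution described above (a generic PyTorch example, unrelated to the study's data):

```python
import torch

# Operations run immediately (eager execution) and are recorded for autograd.
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
y = (x ** 2).sum()  # ordinary Python control flow could also be used here
y.backward()        # reverse-mode automatic differentiation
print(x.grad)       # dy/dx = 2x -> tensor([2., 4., 6.])

# The same code runs on a GPU when one is available.
device = "cuda" if torch.cuda.is_available() else "cpu"
z = torch.ones(3, device=device) * 2
```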

One of PyTorch's highlights is its simple and efficient interoperability, which makes it possible to use the rich Python library ecosystem as part of user programs. PyTorch allows bidirectional data exchange with external libraries: the torch.from_numpy() function and the .numpy() tensor method convert between NumPy arrays and PyTorch tensors. These exchanges occur without copying data, making them convenient and efficient regardless of the size of the converted arrays.
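The zero-copy exchange can be checked directly. The sketch below assumes CPU tensors: `Tensor.numpy()` is unavailable for tensors that require gradients (call `.detach()` first), and moving a GPU tensor to the CPU with `.cpu()` does copy the data.

```python
import numpy as np
import torch

arr = np.zeros(3, dtype=np.float32)
t = torch.from_numpy(arr)  # tensor shares memory with the NumPy array
t[0] = 5.0                 # writing through the tensor...
print(arr)                 # ...is visible in the array: [5. 0. 0.]

back = t.numpy()           # CPU tensor -> NumPy view, again without copying
back[1] = 7.0
print(t)                   # tensor([5., 7., 0.])
```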

Another significant advantage of PyTorch is that users can replace any component that does not meet their project requirements or performance needs. These components are designed to be fully interchangeable, so users can adapt them to their specific needs.

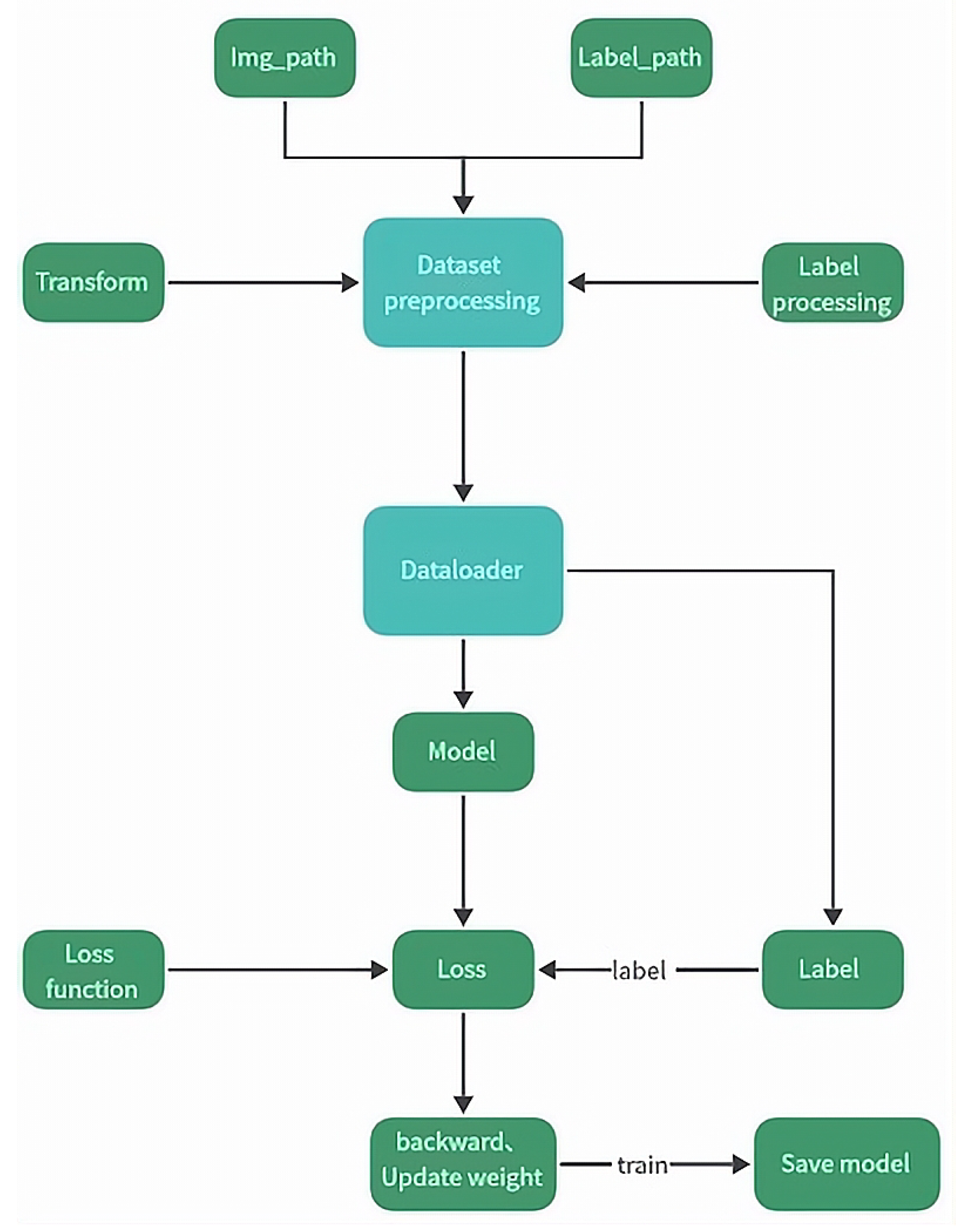

Efficiently running deep learning algorithms from the Python interpreter is one of the biggest challenges in this field. PyTorch addresses it by carefully optimizing various aspects of deep learning execution while letting users easily apply additional optimization strategies. The PyTorch classification flowchart is illustrated in Figure 7.

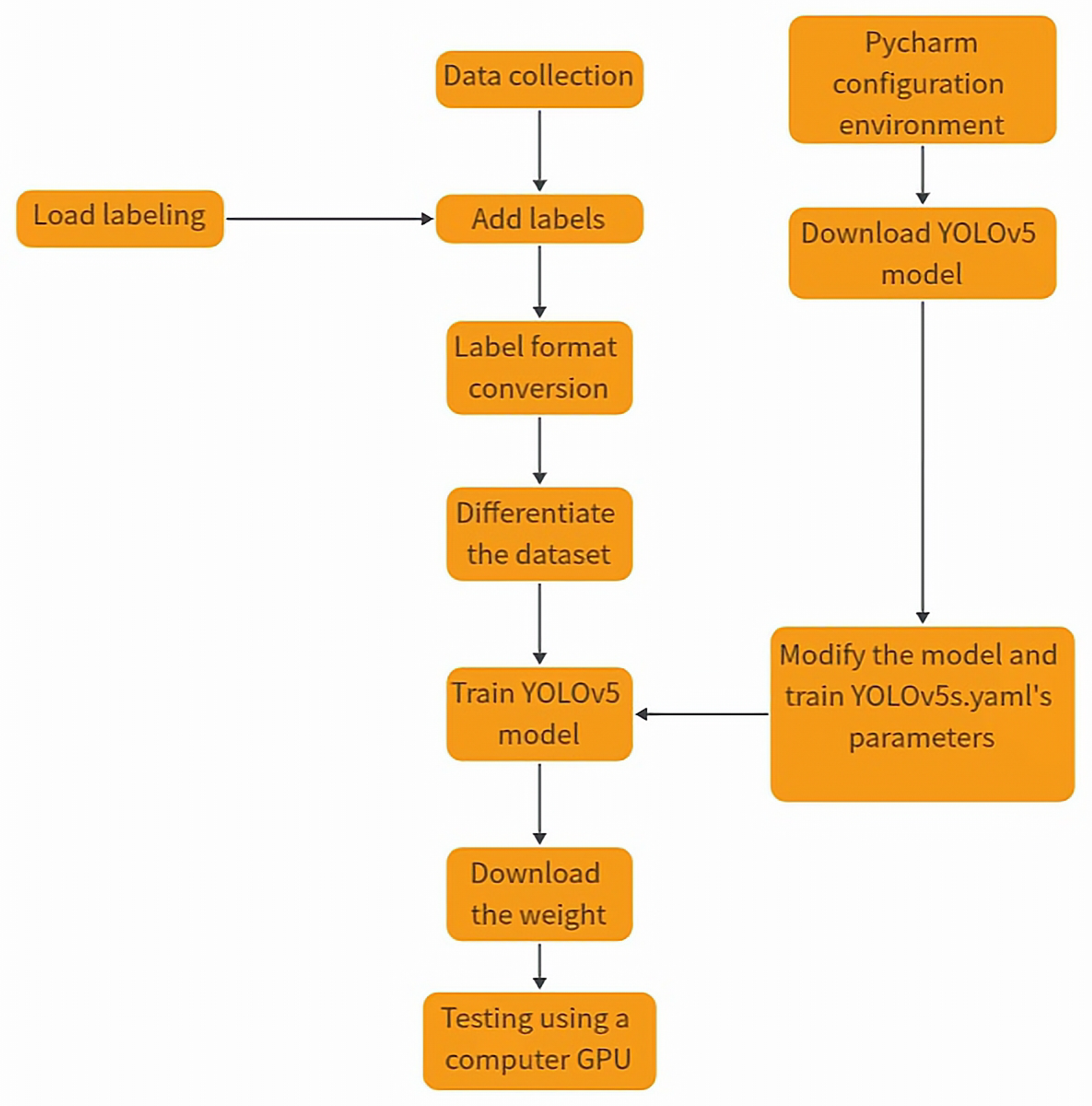

3.3.2. YOLOv5

YOLOv5 offers significant improvements in both detection accuracy and inference speed. Its weight file is small, approximately 90% smaller than that of YOLOv4, making it suitable for real-time detection on embedded devices. Compared with its predecessors, YOLOv5 is characterized by high detection accuracy, a lightweight design, fast detection, and relatively mature technology. YOLOv5 includes four models, YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x, each with its own weight file. These architectures differ in their feature extraction modules and the convolutional kernels of the network, as well as in model size and number of parameters. We chose yolov5s.pt as the weight file for training; the YOLOv5 training flowchart is depicted in Figure 8.

3.3.3. TensorFlow 2.0

TensorFlow is a numerical computing library based on data flow graphs that provides interfaces and a computational framework for implementing machine learning and deep learning algorithms. It combines flexibility and scalability and supports various commonly used programming languages. TensorFlow achieves efficiency and stability by using CUDA, among other technologies, and its computations can be deployed on different hardware and operating systems, such as Windows, Linux, Android, and iOS, and even on large-scale GPU clusters. This significantly reduces development effort. TensorFlow also provides large-scale distributed training, enabling users to update model parameters across different devices and thus saving development costs. This flexibility allows users to design models and train them quickly on massive datasets.

In recent years, TensorFlow has been widely applied in machine learning, deep learning, and other computational fields, including speech recognition, natural language processing, computer vision, robot control, and information extraction. In September 2019, Google released TensorFlow 2.0, one of whose major changes was the official integration of, and comprehensive support for, Keras. Keras is a high-level neural network API known for being highly modular, minimalist, and extensible. It provides clear and actionable error messages and supports Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN). In TensorFlow 2.0, models are built, compiled, and trained with the tf.keras Sequential class and its compile and fit methods. The TensorFlow 2.0 training flowchart is illustrated in Figure 9.
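The Sequential, compile, and fit workflow mentioned above looks roughly as follows in `tf.keras`. This is a toy sketch with random data and a deliberately small placeholder network, not the ResNet-50 model used in this study; the optimizer settings mirror Section 3.3.4, and the three-channel 64 × 64 input shape is an assumption.

```python
import numpy as np
import tensorflow as tf

NUM_SPECIES = 5

# Tiny placeholder CNN (hypothetical); the study itself used ResNet-50.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(64, 64, 3)),  # assumed 64 x 64 RGB PTC images
    tf.keras.layers.Conv2D(8, 3, activation="relu"),
    tf.keras.layers.GlobalAveragePooling2D(),
    tf.keras.layers.Dense(NUM_SPECIES, activation="softmax"),
])
model.compile(
    optimizer=tf.keras.optimizers.SGD(learning_rate=1e-4, momentum=1e-3),
    loss="sparse_categorical_crossentropy",  # integer labels 0..4
    metrics=["accuracy"],
)

# Random stand-in data: 8 images with integer species labels.
x = np.random.rand(8, 64, 64, 3).astype("float32")
y = np.random.randint(0, NUM_SPECIES, size=8)
history = model.fit(x, y, batch_size=4, epochs=1, shuffle=True, verbose=0)
```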

3.3.4. Parameter Settings

We set the input image size to 64 × 64 pixels for training the classification models in all three deep learning frameworks. During training, we used a batch size of 4 and the SGD optimizer, and shuffled the training data to reduce overfitting and help the model capture more accurate features. Training ran for a total of 50 epochs, with an initial learning rate of 1 × 10⁻⁴ and a momentum of 1 × 10⁻³. All parameters of the final trained model were recorded in TensorBoard, allowing us to monitor the model's training trends in real time.
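The settings above translate into a PyTorch training setup along these lines. The tiny network and random tensors are placeholders (the study used ResNet-50 on PTC images), and the epoch count is reduced for brevity.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in data: 16 RGB images of 64 x 64 pixels, 5 species.
images = torch.randn(16, 3, 64, 64)
labels = torch.randint(0, 5, (16,))
loader = DataLoader(TensorDataset(images, labels), batch_size=4, shuffle=True)

# Tiny placeholder network; the study used ResNet-50.
model = nn.Sequential(nn.Conv2d(3, 8, 3, padding=1), nn.ReLU(),
                      nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(8, 5))
optimizer = torch.optim.SGD(model.parameters(), lr=1e-4, momentum=1e-3)
criterion = nn.CrossEntropyLoss()

EPOCHS = 2  # the study trained for 50 epochs
for epoch in range(EPOCHS):
    model.train()
    for x, y in loader:  # batches are reshuffled every epoch
        optimizer.zero_grad()
        loss = criterion(model(x), y)
        loss.backward()
        optimizer.step()
```

Per-epoch metrics could be logged with `torch.utils.tensorboard.SummaryWriter.add_scalar`, which requires the `tensorboard` package to be installed.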

3.4. Random Forest

Random Forest is a supervised learning algorithm that uses an ensemble of numerous decision trees to produce a consensus output, representing the best answer to a given problem. It can be used for classification or regression and is a popular ensemble learning algorithm. It implements the Bagging (Bootstrap Aggregation) method, serving as a homogeneous estimator composed of many decision trees; bootstrap sampling and random feature selection keep the individual trees only weakly correlated. When a Random Forest performs classification, every decision tree in the forest classifies each sample, and the final result is the majority result (mode) among all decision trees.
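The majority-vote rule described above can be written in a few lines of NumPy. The function `majority_vote` is hypothetical and operates on per-tree predictions that are assumed to be given.

```python
import numpy as np


def majority_vote(tree_predictions):
    """Combine per-tree class labels by majority (mode) for each sample.

    tree_predictions: int array of shape (n_trees, n_samples).
    Ties go to the smallest class index (np.argmax returns the first maximum).
    """
    preds = np.asarray(tree_predictions)
    n_classes = preds.max() + 1
    # Count votes per class for every sample: shape (n_classes, n_samples).
    votes = np.stack([(preds == c).sum(axis=0) for c in range(n_classes)])
    return votes.argmax(axis=0)


# Three trees voting on four samples.
trees = np.array([[0, 1, 2, 4],
                  [0, 2, 2, 3],
                  [1, 2, 2, 4]])
print(majority_vote(trees))  # [0 2 2 4]
```

Note that scikit-learn's `RandomForestClassifier` combines its trees by averaging their predicted class probabilities (soft voting) rather than by this hard majority vote, so the two can occasionally disagree.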

4. Results and Discussion

4.0.1. Framework Comparison

The primary objective of this research is to assess how different models affect tree species classification using PTC. Three prominent frameworks, PyTorch, TensorFlow 2.0, and YOLOv5, were selected for analysis. Their average training time and average classification accuracy are summarized in Table 2. We found PyTorch more flexible and user-friendly than the other two methods: it provides an intuitive Python API that is convenient to use. Although TensorFlow 2.0 has an improved API design compared with version 1.0, it can still feel relatively complex in certain situations. YOLOv5 specializes in object detection, so its model and structure are fixed toward detection objectives, and it may be less flexible than PyTorch for image classification. PyTorch has strong community support and a wealth of third-party libraries, offering various pre-trained models and tools for rapidly developing image classification applications. TensorFlow 2.0 also has robust community support, but its ecosystem is relatively complex compared with PyTorch. YOLOv5, on the other hand, offers fewer model weights and options than the other two. To achieve the same goal, PyTorch often requires less effort, while TensorFlow 2.0 may require more work. Because YOLOv5 is less widely used for image classification and has a relatively smaller user base, its support and issue resolution may be more limited than for PyTorch.

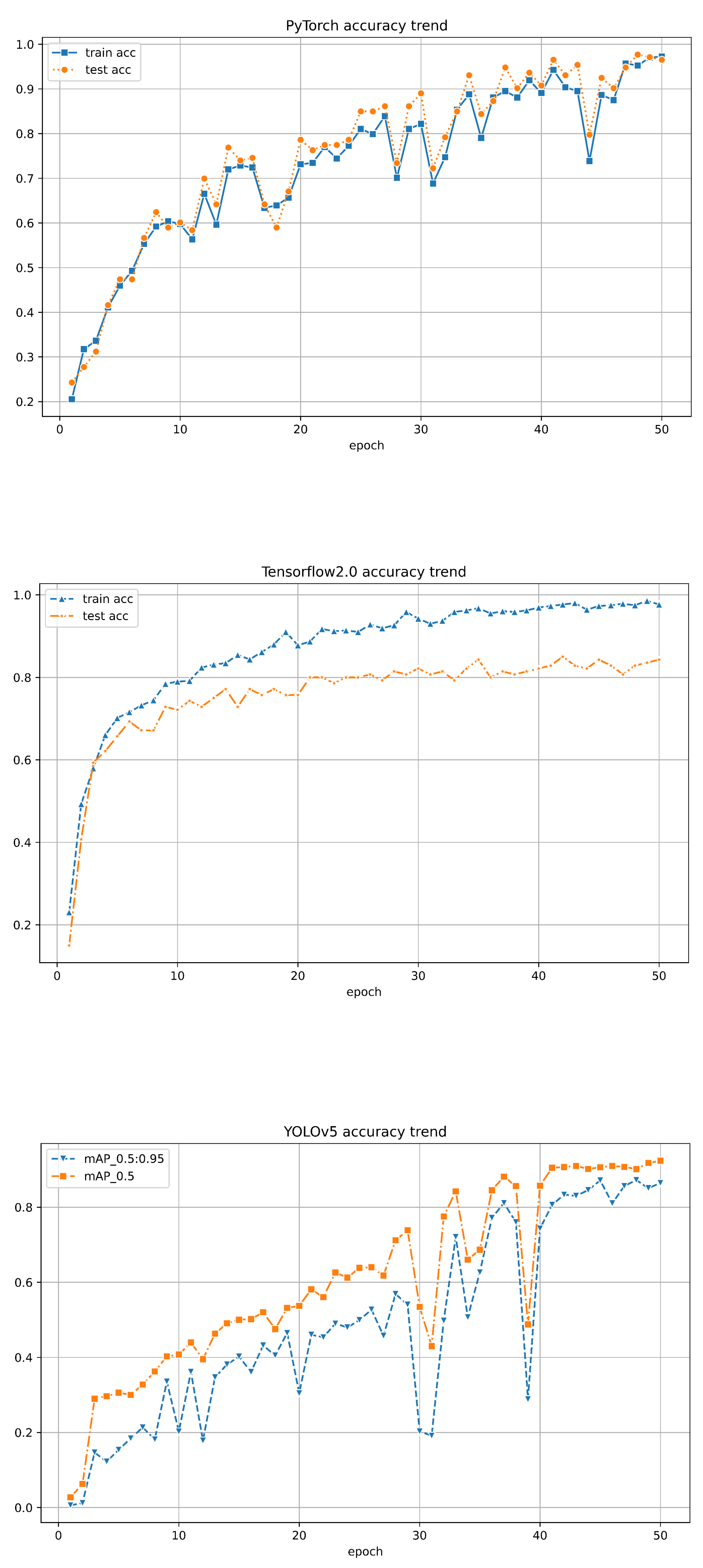

Over 50 epochs, YOLOv5 showed the most volatile performance but converged well in the end, whereas TensorFlow 2.0 was consistently stable but less accurate. The results are illustrated in Figures 10 and 11. The accuracy of all models exceeded 90% at around 40 epochs, with models trained in the PyTorch framework exceeding 95%. Although both PyTorch and TensorFlow 2.0 reached training set accuracies above 95%, the test set accuracy of TensorFlow 2.0 was only around 80%, whereas PyTorch maintained a test accuracy above 95%. For YOLOv5, the training accuracy with the threshold set to 0.5 was generally higher than with thresholds ranging from 0.5 to 0.95; however, its highest classification accuracy reached only 92%, never exceeding 95%. Moreover, for 50 epochs, training took 45 minutes for PyTorch, compared with 76 minutes for TensorFlow 2.0 and 102 minutes for YOLOv5 (Table 2). Overall, PyTorch demonstrated greater stability, higher accuracy, and better efficiency in tree species classification.

Figure 10.

The accuracy of training and validation on the dataset for PyTorch, TensorFlow 2.0, and YOLOv5 is described in (a)-(c) respectively


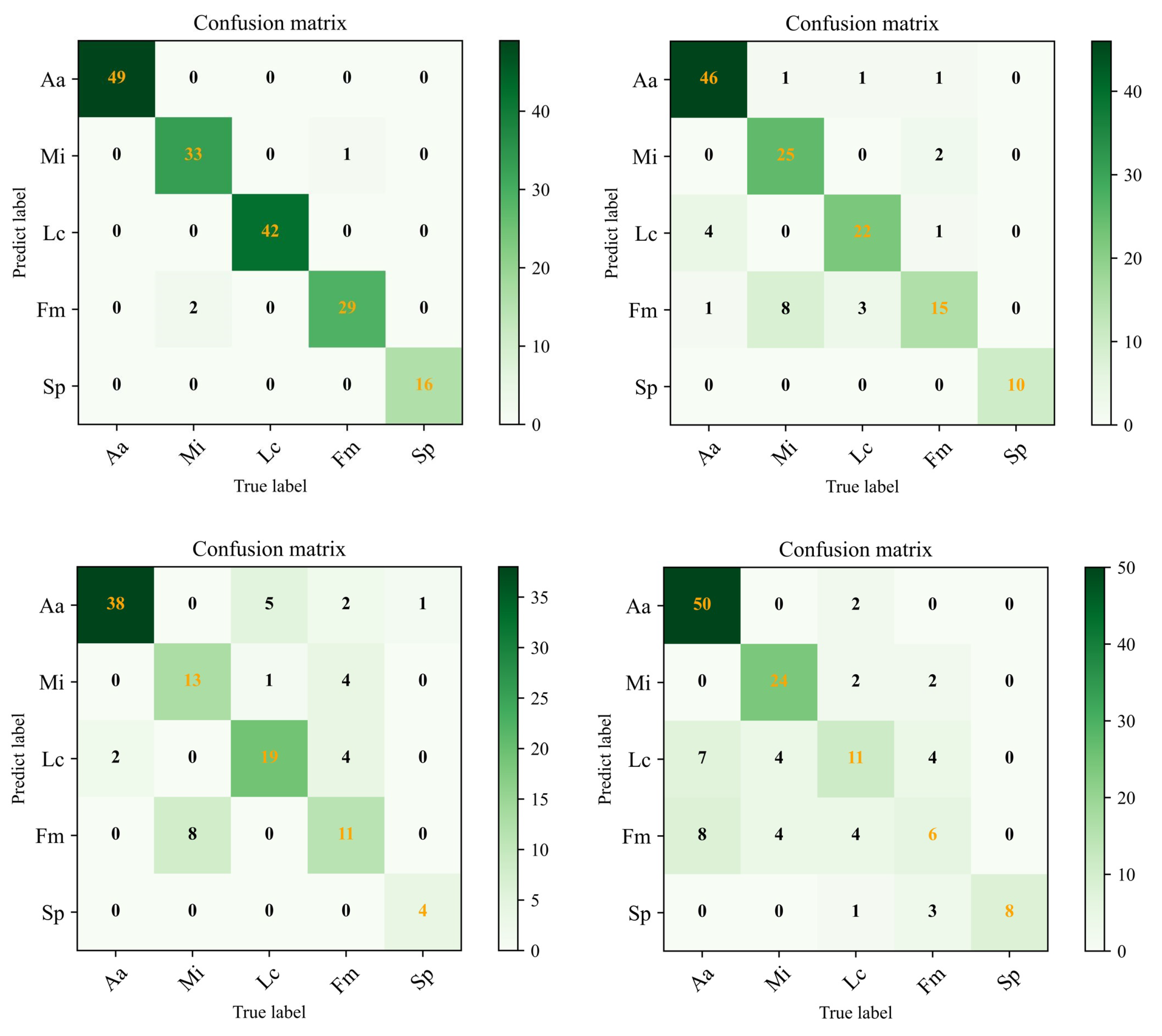

Figure 11.

The confusion matrices for training on the dataset in PyTorch, TensorFlow 2.0, YOLOv5, and RF are presented in (a)-(d) respectively.


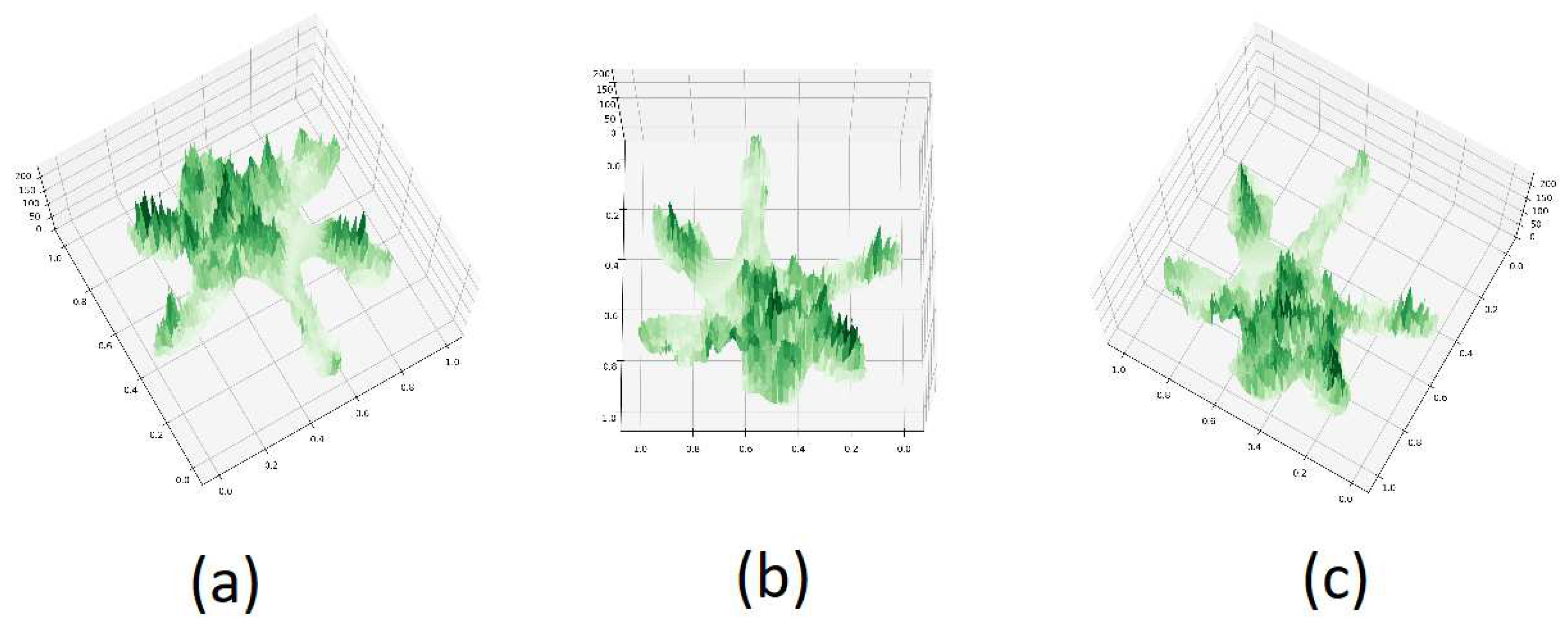

Figure 12.

PTC of Aa with different azimuth and elevation angles: (a) -120°, 75°; (b) 90°, 75°; (c) 120°, 75°


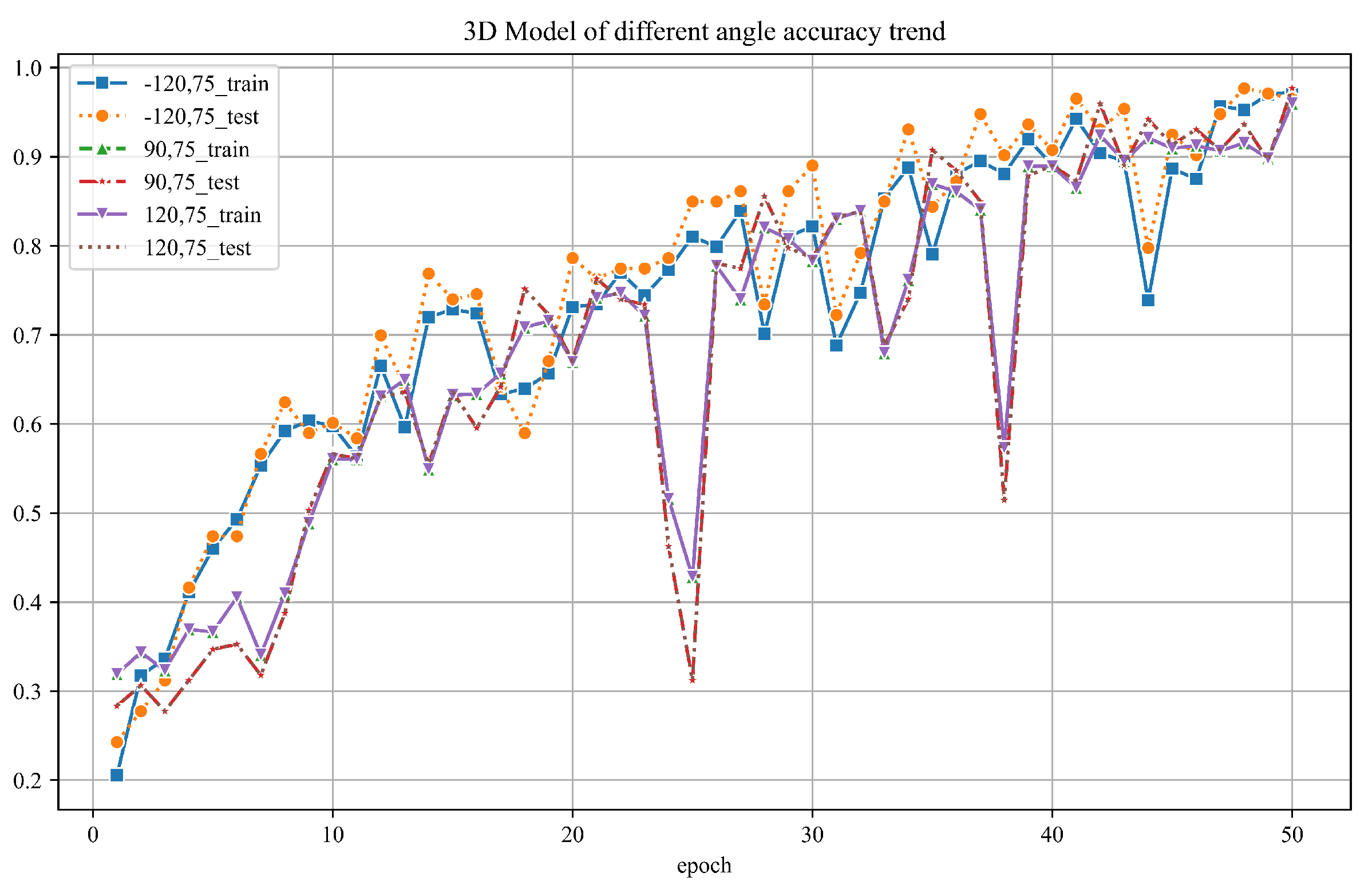

Figure 13.

The PTC classification accuracy by different azimuth and elevation angles


4.1. Classification Accuracy Assessment

To further corroborate our results, we evaluated each model's overall classification accuracy and confusion matrices, as shown in Figure 11. Precision, recall, and specificity for each tree class are detailed in Tables 3 to 5, providing quantitative metrics of the models' performance in tree species classification. Together, these results give a clearer picture of how each model performs.
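For reference, the per-class metrics in Tables 3 to 5 are usually derived from a confusion matrix in a one-vs-rest fashion: precision = TP/(TP+FP), recall = TP/(TP+FN), and specificity = TN/(TN+FP). The NumPy sketch below assumes rows are true classes and columns are predicted classes; the example matrix is invented for illustration and is not data from this study.

```python
import numpy as np


def per_class_metrics(cm):
    """Per-class precision, recall, specificity, and overall accuracy.

    cm[i, j] = number of samples of true class i predicted as class j.
    A class that is never predicted would give a 0/0 precision (NaN).
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as the class, actually another class
    fn = cm.sum(axis=1) - tp  # samples of the class predicted as another class
    tn = cm.sum() - tp - fp - fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = tp.sum() / cm.sum()
    return precision, recall, specificity, accuracy


# Hypothetical 3-class confusion matrix.
cm = [[8, 2, 0],
      [1, 9, 0],
      [0, 0, 5]]
p, r, s, acc = per_class_metrics(cm)
```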

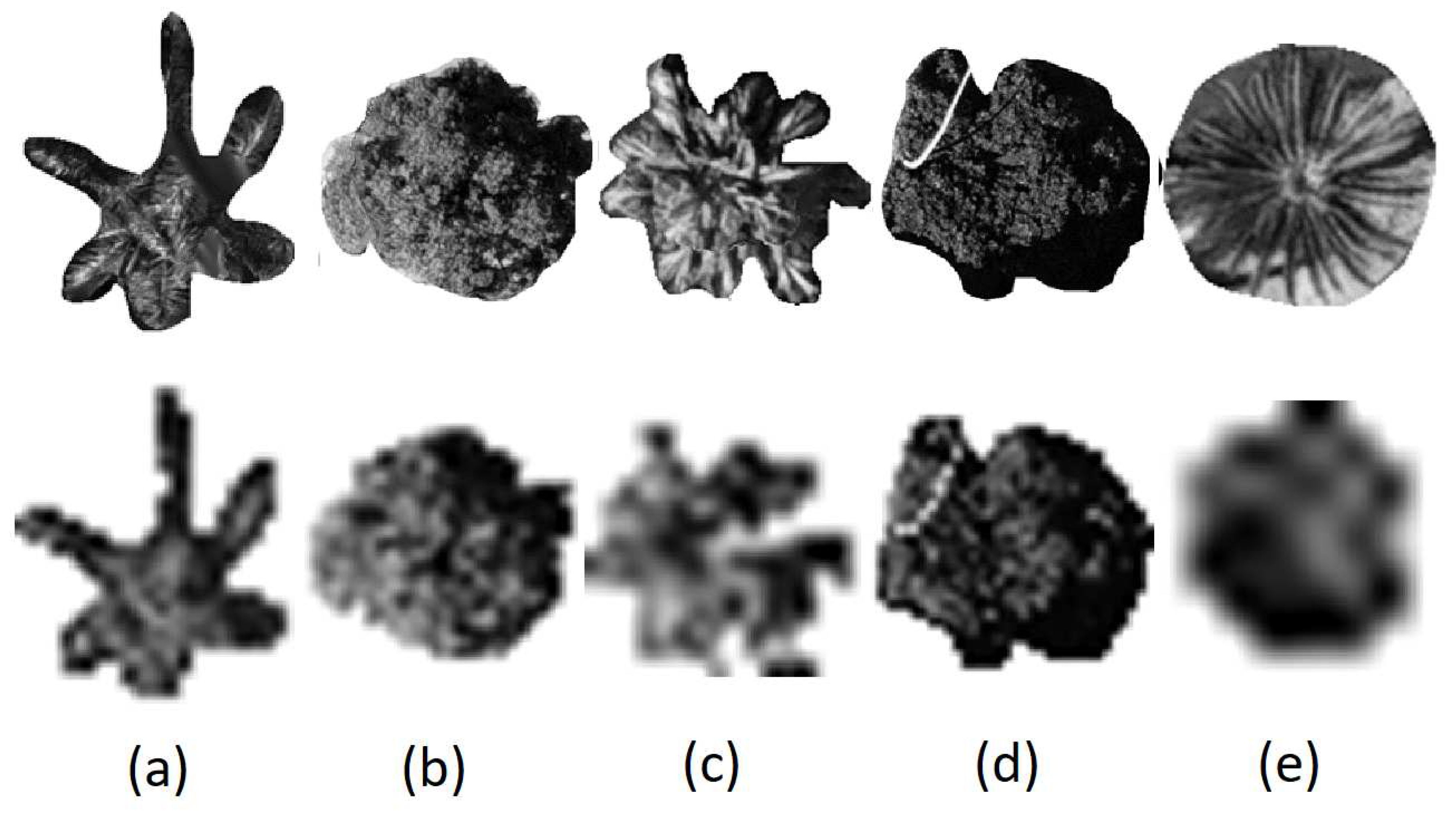

Figure 14.

Resampled images of (a) Aa, (b) Mi, (c) Lc, (d) Fm, and (e) Sp


Table 3. Precision (PyTorch, TF2.0, YOLOv5, and RF)

| Species | PyTorch | TF2.0 | YOLOv5 | RF |
|---|---|---|---|---|
| Archontophoenix alexandrae (Aa) | 1.000 | 0.902 | 0.950 | 0.769 |
| Mangifera indica (Mi) | 0.971 | 0.735 | 0.620 | 0.750 |
| Livistona chinensis (Lc) | 1.000 | 0.846 | 0.760 | 0.579 |
| Ficus microcarpa (Fm) | 0.935 | 0.790 | 0.524 | 0.400 |
| Sago palm (Sp) | 1.000 | 1.000 | 0.800 | 1.000 |

Table 4. Recall (PyTorch, TF2.0, YOLOv5, and RF)

| Species | PyTorch | TF2.0 | YOLOv5 | RF |
|---|---|---|---|---|
| Archontophoenix alexandrae (Aa) | 1.000 | 0.938 | 0.826 | 0.962 |
| Mangifera indica (Mi) | 0.943 | 0.926 | 0.722 | 0.857 |
| Livistona chinensis (Lc) | 1.000 | 0.815 | 0.760 | 0.423 |
| Ficus microcarpa (Fm) | 0.967 | 0.556 | 0.579 | 0.273 |
| Sago palm (Sp) | 1.000 | 1.000 | 1.000 | 0.727 |

Table 5. Specificity (PyTorch, TF2.0, YOLOv5, and RF)

| Species | PyTorch | TF2.0 | YOLOv5 | RF |
|---|---|---|---|---|
| Archontophoenix alexandrae (Aa) | 1.000 | 0.945 | 0.970 | 0.828 |
| Mangifera indica (Mi) | 0.993 | 0.920 | 0.915 | 0.928 |
| Livistona chinensis (Lc) | 1.000 | 0.965 | 0.931 | 0.929 |
| Ficus microcarpa (Fm) | 0.986 | 0.965 | 0.893 | 0.923 |
| Sago palm (Sp) | 1.000 | 1.000 | 0.991 | 1.000 |

PyTorch achieved the highest overall classification accuracy at 98.26%, followed by TensorFlow 2.0 at 84.29%, YOLOv5 at 75.89%, and the traditional Random Forest (RF) at 70.71%. As Tables 3 to 5 show, in PyTorch the precision, recall, and specificity for Aa, Lc, and Sp were all 1.0, while all three metrics for Mi remained above 0.94. The precision for Fm was slightly lower at 0.935, but its recall and specificity remained above 0.96. In TensorFlow 2.0, Sp performed excellently, achieving 1.0 for all three metrics, and Aa remained above 0.9 for all three. Mi had a lower precision of 0.735, with recall and specificity of at least 0.92. The precision and recall for Lc ranged between 0.8 and 0.85, but its specificity reached 0.965. For Fm in TensorFlow 2.0, the precision was 0.79 and the specificity 0.965, but the recall was only 0.556. YOLOv5 and RF produced less favourable classification results than PyTorch and TensorFlow 2.0. Despite its generally lower performance, YOLOv5 achieved a recall of 1.0 for Sp, and RF attained a precision and specificity of 1.0 for Sp, indicating that Sp was identified best in our dataset regardless of the classification method used.

In summary, our results suggest that, given factors such as blurred edges and interwoven crowns in canopy images and poor texture in the original data, PyTorch's classification performance is superior to that of TensorFlow 2.0, YOLOv5, and RF. This indicates that PyTorch has significant potential for multi-class classification tasks using PTC derived from high-resolution remote sensing images. However, evaluating the efficiency of a classification model involves many other metrics, as well as the characteristics of the dataset and the specific requirements of the classification task. Further research is needed to refine these models and evaluate their performance on different datasets in various environments.

4.2. PTC Azimuth and Elevation Angle Impact Study

To explore how azimuth and elevation angles influence tree species classification with PTC, we selected three angle pairs (azimuth, elevation): (-120°, 75°), (90°, 75°), and (120°, 75°), as illustrated in Figure 12. PTCs generated with these angles were input into our PyTorch-based model for classification, and the resulting classification outcomes are shown in Figure 13.

The graph shows that, across all angle configurations, the final accuracy on both the training and test datasets consistently exceeds 95%, suggesting that changes in azimuth and elevation angles have minimal impact on overall classification accuracy. This resilience is plausible because PyTorch-based image classifiers are commonly trained on everyday images that are not tied to specific viewing angles.

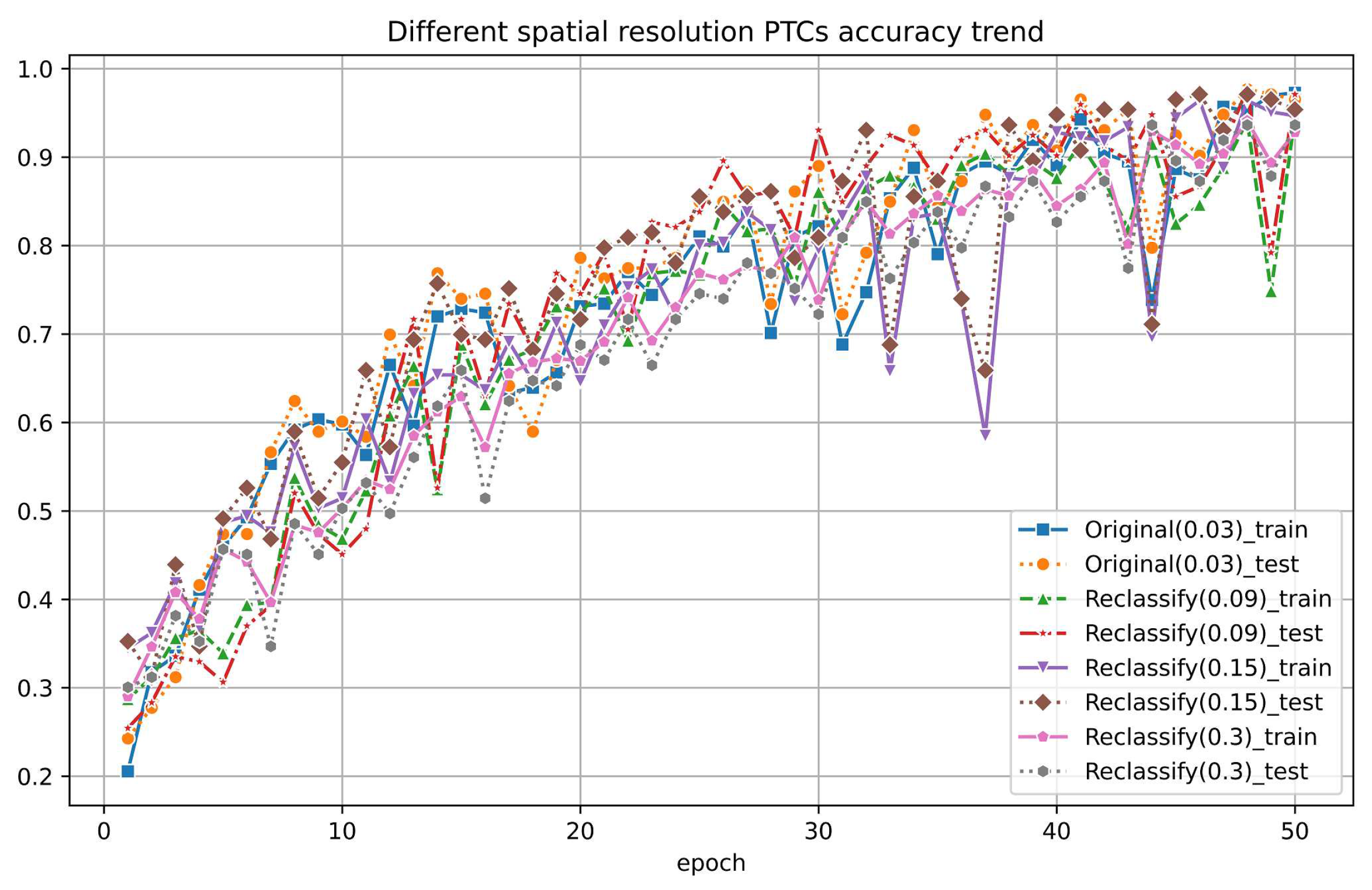

4.3. Different Spatial Resolution PTC Impact Analysis

We also examined the influence of spatial resolution on PTC. Because PTC derives height information from grayscale values and, owing to the viewing angle, partially obscures tree crown details, it inherently masks many spatial details, suggesting a natural resilience to changes in spatial resolution. However, we wanted to determine the scale at which classification accuracy would be affected.

To investigate this, we downsampled the original RGB images to lower-resolution versions, coarsening the pixel size by factors of 3, 5, and 10. We again extracted the green band, cropped patches of individual trees for each species, and built a resampled dataset, illustrated in Figure 14 (reduced by a factor of 10, from 0.03 m to 0.3 m). PTCs at the different spatial resolutions were then created. All datasets maintained an accuracy above 90%, and the highest resolution, 0.03 m, achieved a classification accuracy of over 95%. Accuracy decreased only slightly, even at the tenfold reduction. This suggests that as the resolution of the original data decreases, the features displayed by PTC become, to some extent, less familiar to the classifier in our PyTorch framework, leading to a slight decrease in accuracy.
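The resampling method is not specified above; one simple option is block averaging, sketched below as an assumption. With a factor of 10, each 10 × 10 block of 0.03 m pixels becomes a single 0.3 m pixel. The helper `block_downsample` is hypothetical.

```python
import numpy as np


def block_downsample(band, factor):
    """Coarsen a 2D band by averaging non-overlapping factor x factor blocks.

    Edge rows/columns that do not fill a whole block are dropped.
    """
    band = np.asarray(band, dtype=float)
    h = band.shape[0] // factor * factor
    w = band.shape[1] // factor * factor
    blocks = band[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))


green = np.arange(100, dtype=float).reshape(10, 10)  # stand-in green band
for factor in (3, 5, 10):
    coarse = block_downsample(green, factor)
    print(factor, coarse.shape, 0.03 * factor)  # new pixel size in metres
```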

Figure 15.

The classification results by different spatial resolution PTCs


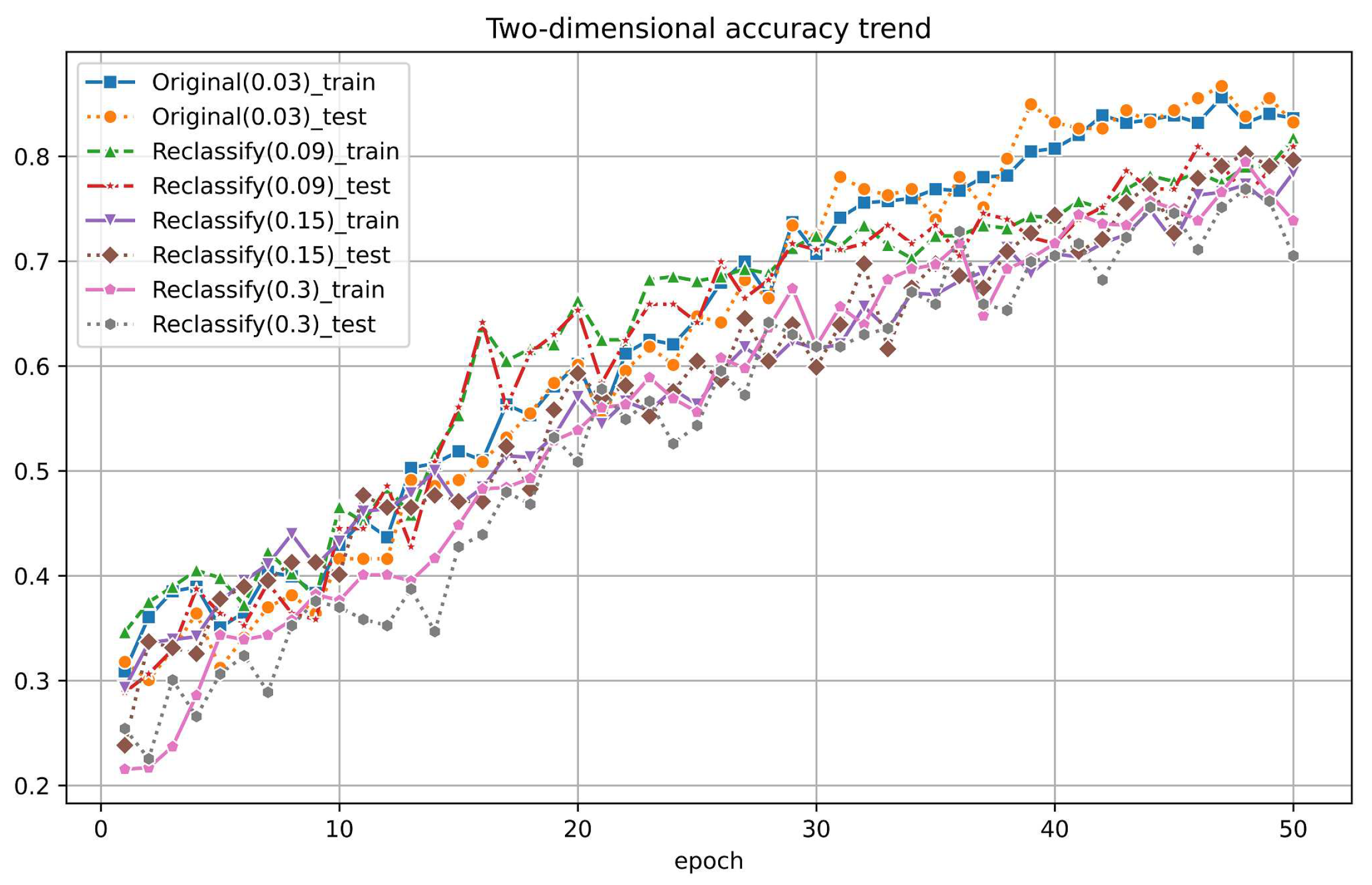

4.4. Comparison with the Original RGB Images

We also compared PTC with the original nadir view images using PyTorch classification. To keep the comparison consistent between the PTC and the original images, we likewise extracted the green band of the original 2D nadir view images as the input dataset. The accuracy on the original nadir view dataset reached 80% to 85% for both the training and test sets, noticeably lower than the 97% obtained with PTC using PyTorch.

We further examined how robust the original images are to resolution changes by applying the same resampling to the original nadir images. All of these datasets were then input into PyTorch for training, and the accuracy trends on the training and test sets are shown in Figure 16.

At reduced resolution, the accuracy on the resampled original images dropped to between 70% and 80% for both the training and test sets. This decline is much larger than for PTC, whose accuracy remains approximately 90% even at a factor of 10.

We therefore conclude that PTC is much more resistant to reductions in spatial resolution, which implies broader applicability and more robust classification results.

4.5. Other Findings

Notably, the accuracy for Aa is the highest in each classification method. This suggests that the classification model recognizes the PTC more easily for species with more pronounced spatial features; for Aa, this could be attributed to its robust trunk and sparse, broad leaves, which make its pixels more distinguishable than those of other tree species. In contrast, the accuracy for Fm is relatively low, possibly because of its large crown, complex crown structure, and the intertwined growth of neighbouring trees. These lead to unclear crown boundaries, making it more difficult for the model to recognize its features accurately and thus affecting the classification of this banyan species. In the future, we aim to further improve our classification algorithm to achieve higher accuracy across all species.

Eva Lindberg et al. used dense airborne laser scanning (ALS) data for crown delineation, extracting the spatial distribution of the ALS data with an elliptical crown model and then performing classification. Although their method improved crown recognition, its cross-validated classification accuracy for tree species such as pine and spruce was only 71%. In contrast, in this paper, by simply extracting the green band of RGB images and creating PTCs, we achieved a classification accuracy of over 95% with simpler data processing and less workload. This further highlights the ability of deep learning classification algorithms to recognize three-dimensional representations of tree crowns.

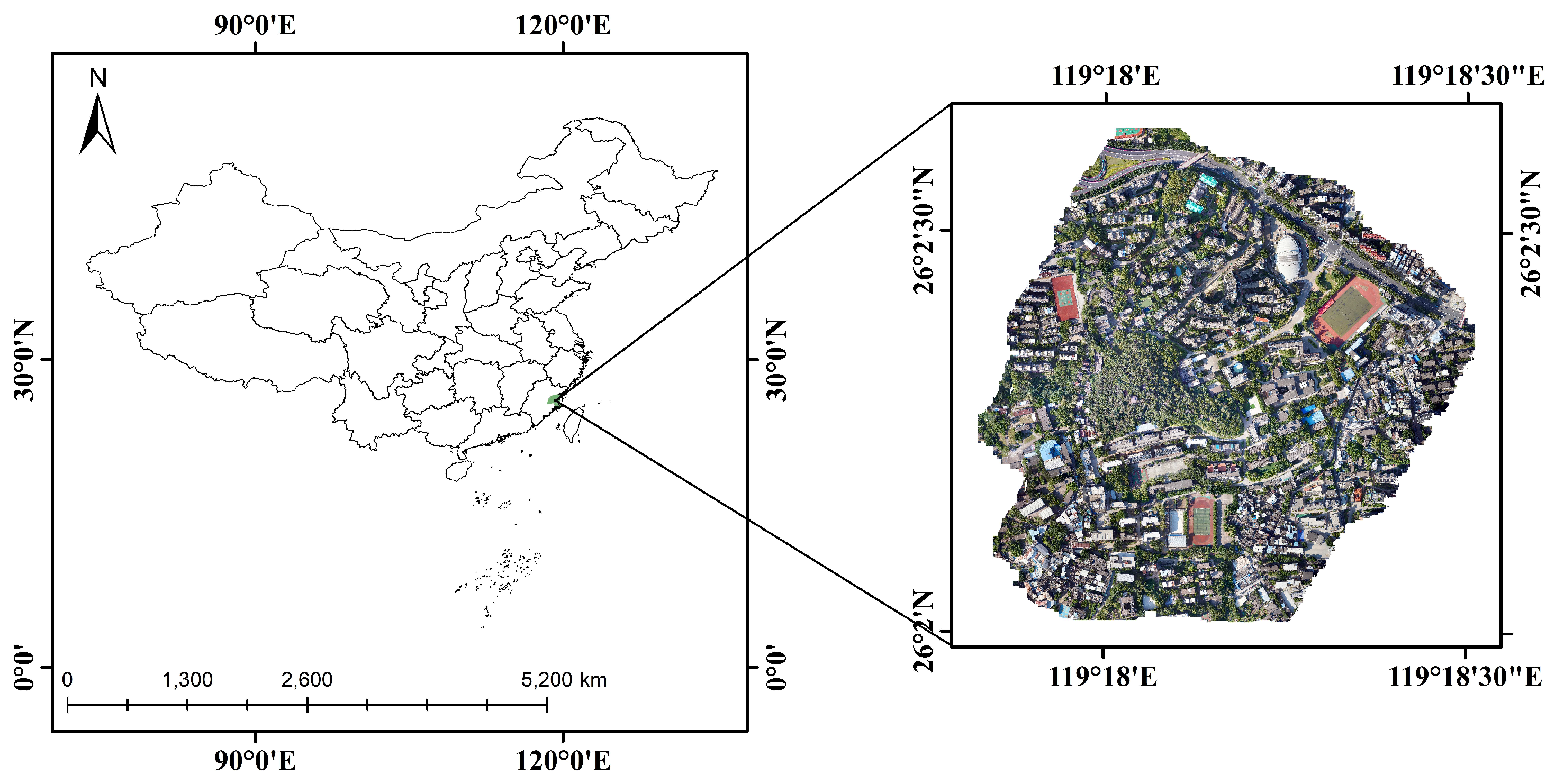

5. Conclusions

In this study, we used a deep learning-based methodology to evaluate tree species classification on the Cangshan campus of Fujian Normal University, using high-resolution aerial imagery acquired through low-altitude UAV flights. Using individual-tree PTCs derived from these high spatial resolution aerial images, we obtained promising results: integrating PTC images into the PyTorch classification framework yielded classification accuracies consistently above 95%.

We present the first application of PTC in image classification: with PyTorch-based classification, PTC achieves 95% accuracy, compared with 87% when nadir view images are used directly. Furthermore, among the AI-based classification approaches tested, PyTorch demonstrated better robustness, accuracy, and efficiency than TF2.0 and YOLOv5.

Future research will explore the correlation between physical tree crowns and PTCs by incorporating LiDAR data, and preliminary findings are encouraging. If successful, this work will directly link 2D nadir images to 3D tree structures, enabling additional parameters, such as diameter at breast height (dbh), to be integrated into classification models, which has not been possible with 2D image data alone.