Submitted: 19 February 2024

Posted: 20 February 2024


Abstract

Keywords:

1. Introduction

2. State of the art

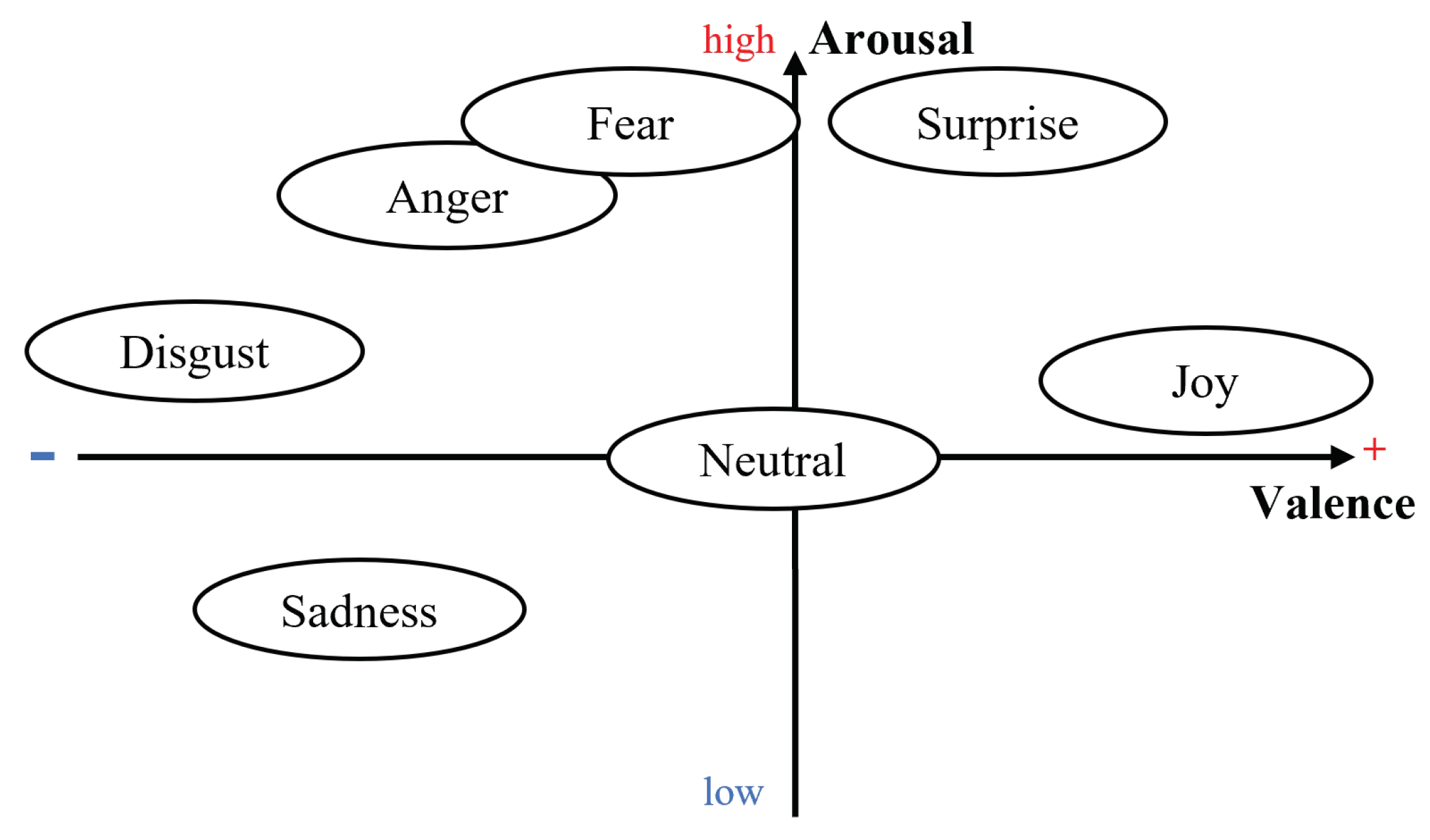

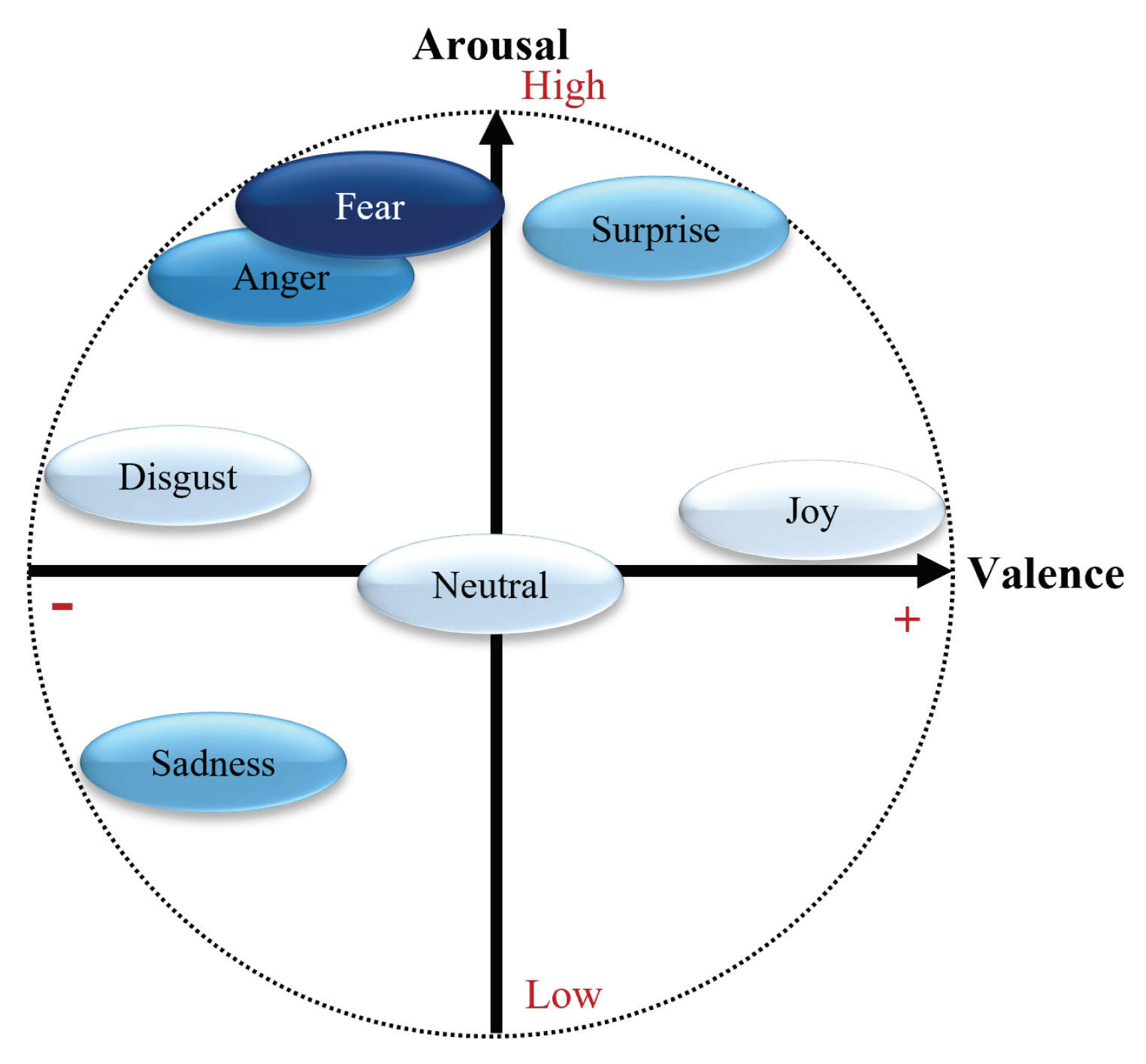

2.1. Emotion recognition

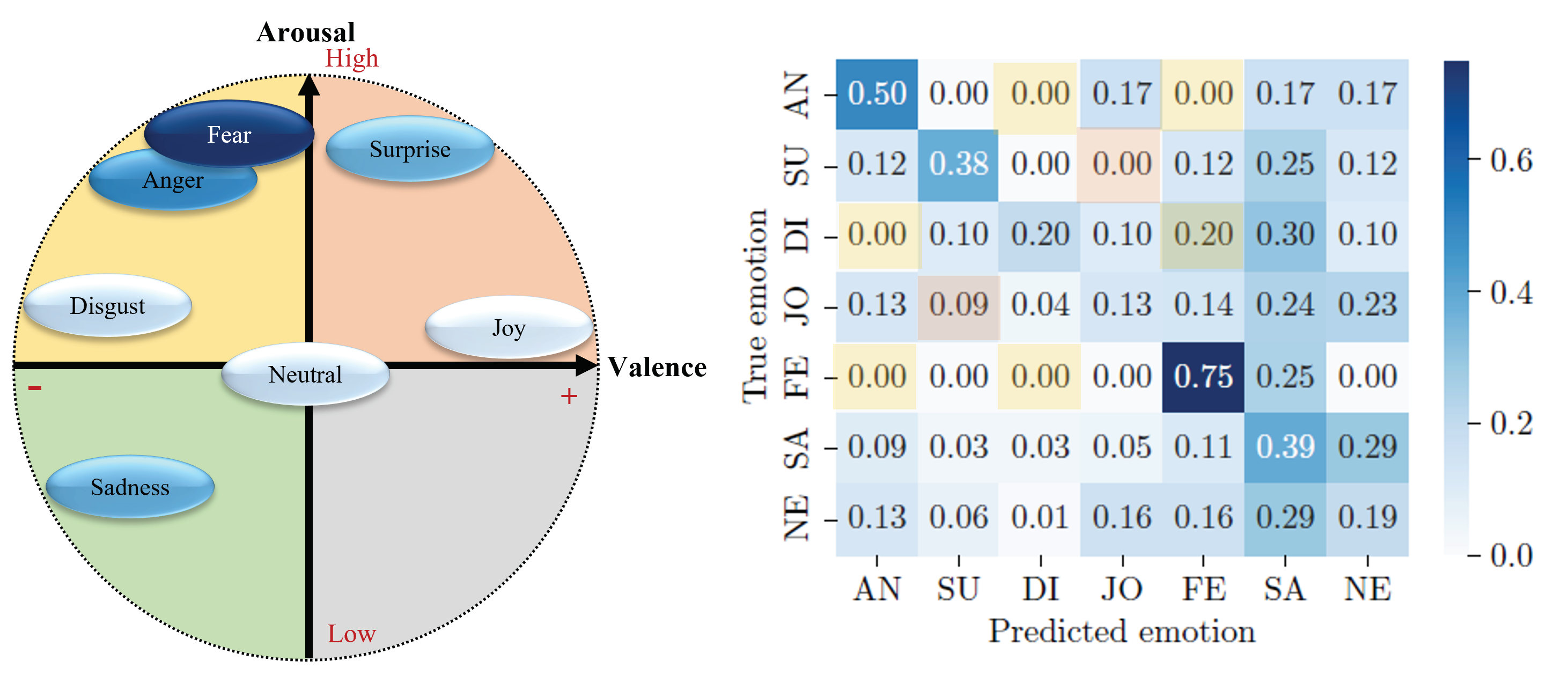

2.2. Using plants as sensors

3. Method

3.1. Experimental setup

1. First, the participant's consent was obtained, and the observer running the session answered any open questions.

2. The observer then briefly described the task to the participant: to watch a video sequence designed to elicit strong emotional responses. The video sequence was based on previous work by Gloor et al. [31] and is described in Table 1.

3. The participant then sat in the experimental room, and the sensors (plant and camera) were activated. Figure 2 is a photograph of the experimental set-up. A screen displays the videos that elicit the participants' emotional reactions (see Table 1). These reactions are filmed by a wide-angle camera placed just below the screen and set up to capture a zoomed image of the participant's face. Finally, a basil plant (*Ocimum basilicum*) equipped with a SpikerBox sensor [29] is positioned in front of the participant.

4. The observer then started the video sequence and left the room while the participant watched the video.

5. Once the video sequence had finished, all sensors were deactivated, and the data collected by the plant sensor and the camera were saved.

3.2. Analysis

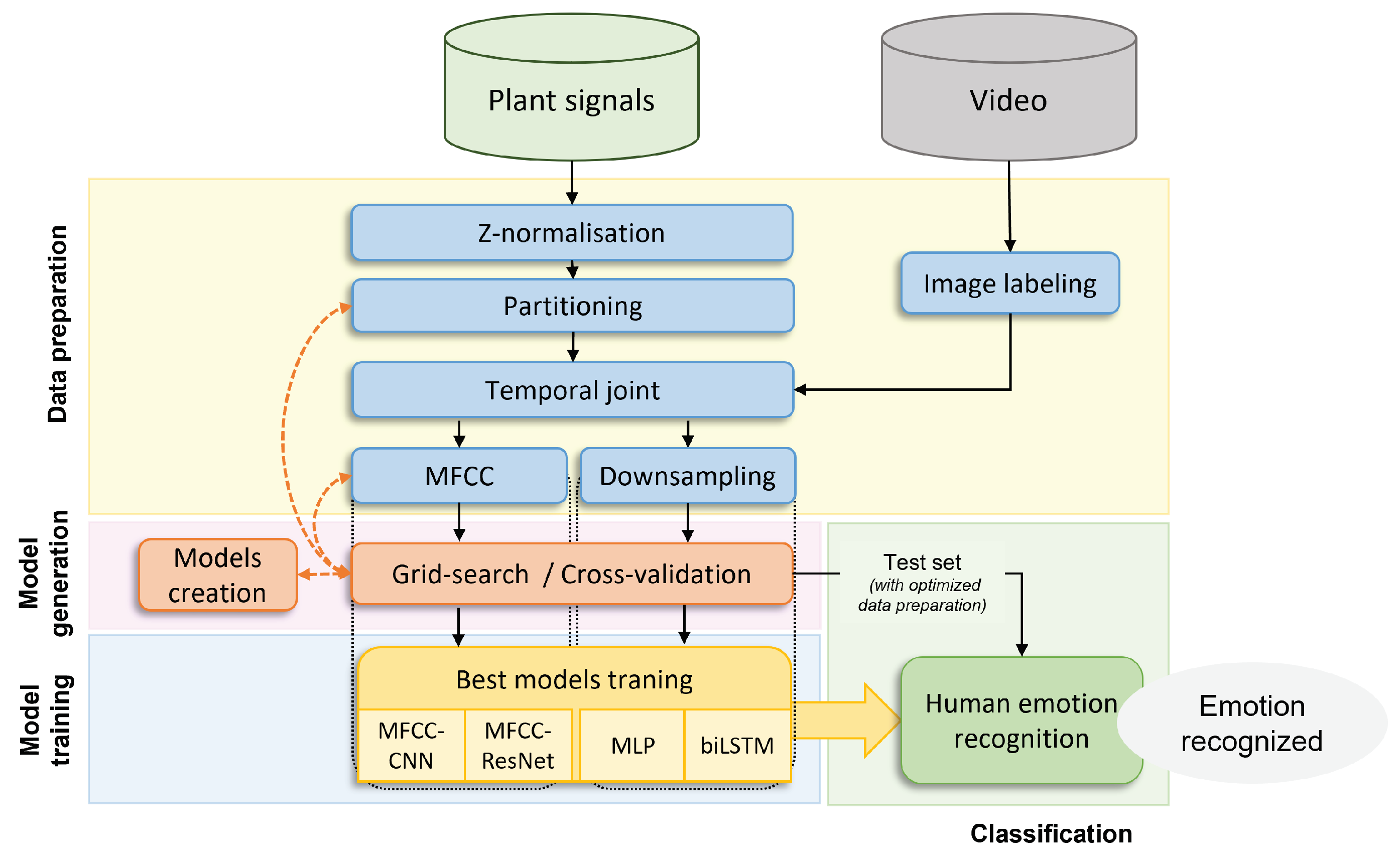

3.3. Data preparation

3.3.1. Initial data preparation approach - MFCC extraction with windowing

- The first dataset consists of the downsampled short plant-signal windows. Because the plant sensor has a high sampling rate (10 kHz), the signals must be downsampled before they are fed into the different training models. Downsampling reduces the complexity of the signal while retaining the relevant information [11]. The downsampling rate is a hyperparameter whose value was determined by trial and error.

- The second dataset consists of MFCC features computed from each window. The result is a 2D matrix of shape [time steps, number of MFCCs] that can be processed by deep learning models such as LSTMs or CNNs.
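The two preparation steps above (downsampling, then cutting the signal into windows and computing one MFCC matrix per window) can be sketched in plain NumPy. This is a minimal illustration under stated assumptions, not the authors' pipeline: the function names are hypothetical, block averaging stands in for a proper anti-aliasing decimator such as `scipy.signal.decimate`, and the downsampling factor (10), FFT size (512), frame hop (256) and number of mel filters (64) are illustrative values not reported in the text. Libraries such as librosa provide tested MFCC implementations.

```python
import numpy as np


def downsample(signal: np.ndarray, factor: int) -> np.ndarray:
    """Lower the sampling rate by an integer factor via block averaging.

    Averaging each block of `factor` samples acts as a crude anti-aliasing
    low-pass filter; scipy.signal.decimate is the more rigorous alternative.
    """
    n_blocks = len(signal) // factor
    trimmed = signal[: n_blocks * factor]  # drop an incomplete final block
    return trimmed.reshape(n_blocks, factor).mean(axis=1)


def frame(signal: np.ndarray, fs: float, window_s: float, hop_s: float) -> np.ndarray:
    """Cut a 1D signal into overlapping windows: shape [n_windows, window_len]."""
    win = int(round(window_s * fs))
    hop = int(round(hop_s * fs))
    if len(signal) < win:
        return np.empty((0, win))
    n_windows = 1 + (len(signal) - win) // hop
    # Row i holds the sample indices [i * hop, i * hop + win)
    idx = hop * np.arange(n_windows)[:, None] + np.arange(win)[None, :]
    return signal[idx]


def mel_filterbank(n_mels: int, n_fft: int, fs: float) -> np.ndarray:
    """Triangular filters spaced evenly on the mel scale: [n_mels, n_fft // 2 + 1]."""
    mel = lambda f: 2595.0 * np.log10(1.0 + f / 700.0)
    inv_mel = lambda m: 700.0 * (10.0 ** (m / 2595.0) - 1.0)
    edges_hz = inv_mel(np.linspace(mel(0.0), mel(fs / 2), n_mels + 2))
    bins = np.floor((n_fft + 1) * edges_hz / fs).astype(int)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, centre, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, centre):  # rising edge of the triangle
            fb[m - 1, k] = (k - left) / (centre - left)
        for k in range(centre, right):  # falling edge of the triangle
            fb[m - 1, k] = (right - k) / (right - centre)
    return fb


def dct2_ortho(x: np.ndarray, n_out: int) -> np.ndarray:
    """Orthonormal DCT-II along the last axis, keeping the first n_out coefficients."""
    n = x.shape[-1]
    k = np.arange(n_out)[:, None]
    basis = np.cos(np.pi * k * (2 * np.arange(n) + 1) / (2 * n))  # [n_out, n]
    scale = np.full(n_out, np.sqrt(2.0 / n))
    scale[0] = np.sqrt(1.0 / n)
    return (x @ basis.T) * scale


def mfcc(window: np.ndarray, fs: float, n_mfcc: int = 20,
         n_fft: int = 512, hop: int = 256, n_mels: int = 64) -> np.ndarray:
    """MFCC matrix of shape [time steps, n_mfcc] for one analysis window."""
    frames = frame(window, fs, n_fft / fs, hop / fs)           # short-time frames
    power = np.abs(np.fft.rfft(frames * np.hanning(n_fft), axis=1)) ** 2
    mel_energy = power @ mel_filterbank(n_mels, n_fft, fs).T   # [time, n_mels]
    return dct2_ortho(np.log(mel_energy + 1e-10), n_mfcc)


# Usage on synthetic noise standing in for a 60-s plant recording at 10 kHz
rng = np.random.default_rng(0)
plant = downsample(rng.standard_normal(60 * 10_000), 10)  # now 1 kHz
windows = frame(plant, fs=1_000, window_s=20, hop_s=10)   # shape (5, 20000)
features = [mfcc(w, fs=1_000) for w in windows]            # each of shape (77, 20)
```

Each element of `features` is the [time steps, number of MFCCs] matrix described above; the window and hop values used here (20 s and 10 s) correspond to one of the settings in the hyperparameter grid.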

3.3.2. Alternative data preparation approach - raw electrical signal analysis

3.4. Model generation

3.5. Model training

3.6. Classification

4. Results

4.1. Data collection

4.2. Analysis

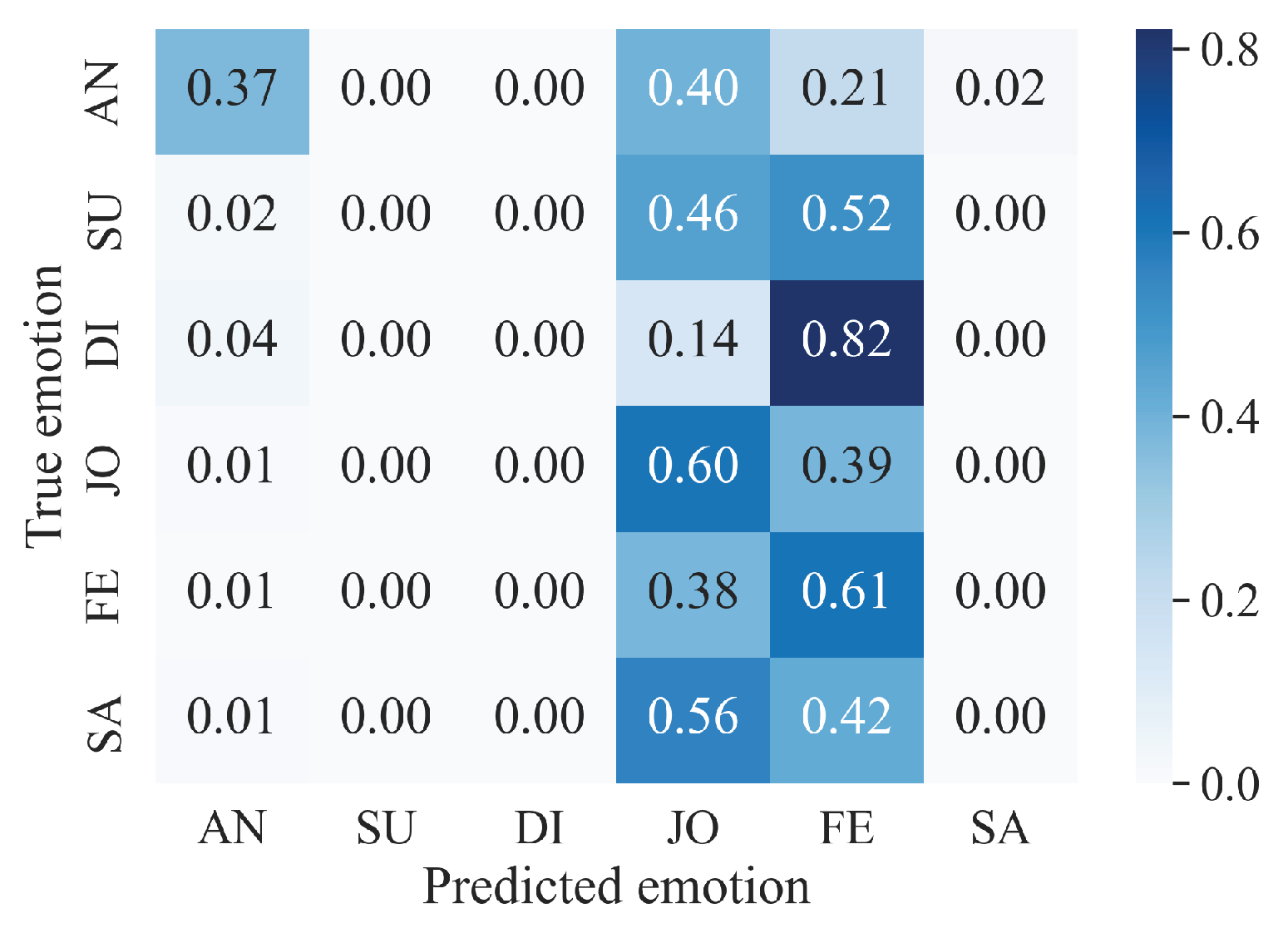

4.3. Evaluation

5. Discussion

5.1. Limitations and Further Research

6. Conclusions

Ethical Statement

References

1. Lerner, J.S.; Li, Y.; Valdesolo, P.; Kassam, K.S. Emotion and Decision Making. Annual Review of Psychology 2015, 66, 799–823.

2. Ekman, P.; Friesen, W. Constants across cultures in the face and emotion. Journal of Personality and Social Psychology 1971, 17, 124.

3. Ko, B.C. A Brief Review of Facial Emotion Recognition Based on Visual Information. Sensors 2018, 18.

4. Li, I.H. Technical Report for Valence-Arousal Estimation on Affwild2 Dataset. 2021, arXiv:cs.CV/2105.01502.

5. Verma, G.; Tiwary, U. Affect representation and recognition in 3D continuous valence–arousal–dominance space. Multimed Tools Appl 2017, 76, 2159–2183.

6. El Ayadi, M.; Kamel, M.S.; Karray, F. Survey on speech emotion recognition: Features, classification schemes, and databases. Pattern Recognition 2011, 44, 572–587.

7. Khalil, R.A.; Jones, E.; Babar, M.I.; Jan, T.; Zafar, M.H.; Alhussain, T. Speech Emotion Recognition Using Deep Learning Techniques: A Review. IEEE Access 2019, 7, 117327–117345.

8. Bi, J. Stock Market Prediction Based on Financial News Text Mining and Investor Sentiment Recognition. Mathematical Problems in Engineering 2022, 2427389.

9. Kusal, S.; Patil, S.; Choudrie, J.; Kotecha, K.; Vora, D.; Pappas, I. A Review on Text-Based Emotion Detection – Techniques, Applications, Datasets, and Future Directions. 2022, arXiv:cs.CL/2205.03235.

10. Alswaidan, N.; Menai, M. A survey of state-of-the-art approaches for emotion recognition in text. Knowl Inf Syst 2020, 62, 2937–2987.

11. Oezkaya, B.; Gloor, P.A. Recognizing Individuals and Their Emotions Using Plants as Bio-Sensors through Electro-static Discharge. 2020, arXiv:eess.SP/2005.04591.

12. Relf, P.D. People-plant relationship. In Horticulture as Therapy: Principles and Practice; Simson, S.P., Straus, M.C., Eds.; Haworth Press, 1998; pp. 21–42.

13. Peter, P.K. Do Plants sense music? An evaluation of the sensorial abilities of the Codariocalyx Motorius. PhD thesis, Universität zu Köln, 2021.

14. Paul Ekman Group LLC. Universal Emotions, 2022. Last accessed 26 May 2023.

15. Ekman, P. Emotions Revealed: Recognizing Faces and Feelings to Improve Communication and Emotional Life; Henry Holt and Company: New York, 2003.

16. Izard, C.E. Human Emotions; Springer Science & Business Media: New York, NY, 2013.

17. Thanapattheerakul, T.; Mao, K.; Amoranto, J.; Chan, J.H. Emotion in a Century: A Review of Emotion Recognition. New York, NY, USA, 2018.

18. Darwin, C. The Expression of the Emotions in Man and Animals; John Murray: United Kingdom, 1872.

19. Ekman, P. Expression and the nature of emotion. In Approaches to Emotion; 1984.

20. Russell, J. A circumplex model of affect. Journal of Personality and Social Psychology 1980, 39, 1161–1178.

21. Ekman, P.; Friesen, W. Facial Action Coding System (FACS). APA PsycTests 1978.

22. Mühler, V. justadudewhohacks/face-api.js: v0.22.2, 2022. Last accessed 24 May 2023.

23. Shu, L.; Xie, J.; Yang, M.; Li, Z.; Li, Z.; Liao, D.; Xu, X.; Yang, X. A Review of Emotion Recognition Using Physiological Signals. Sensors (Basel, Switzerland) 2018, 18, 2074.

24. Volkov, A.G.; Ranatunga, D.R.A. Plants as Environmental Biosensors. Plant Signaling & Behavior 2006, 1, 105–115.

25. Volkov, A.G.; Courtney, L.B. Electrochemistry of plant life. In Plant Electrophysiology; Volkov, A.G., Ed.; Springer Berlin Heidelberg, 2006; pp. 437–457.

26. Volkov, A.G., Ed. Plant Electrophysiology; Springer Berlin, Heidelberg, 2006.

27. Chatterjee, S. An approach towards plant electrical signal based external stimuli monitoring system. PhD thesis, University of Southampton, 2017.

28. Chatterjee, S.K.; Das, S.; Maharatna, K.; Masi, E.; Santopolo, L.; Mancuso, S.; Vitaletti, A. Exploring strategies for classification of external stimuli using statistical features of the plant electrical response. Journal of The Royal Society Interface 2015, 12, 20141225.

29. Backyard Brains, Inc. The Plant SpikerBox, 2022. Last accessed 24 May 2023.

30. Kruse, J. Comparing Unimodal and Multimodal Emotion Classification Systems on Cohesive Data. Master's thesis, Technical University Munich, 2022.

31. Gloor, P.A.; Fronzetti Colladon, A.; Altuntas, E.; Cetinkaya, C.; Kaiser, M.F.; Ripperger, L.; Schaefer, T. Your Face Mirrors Your Deepest Beliefs: Predicting Personality and Morals through Facial Emotion Recognition. Future Internet 2022, 14.

32. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. 2015, arXiv:cs.CV/1512.03385.

33. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition; 2009; pp. 248–255.

34. Qin, Z.; Kim, D.; Gedeon, T. Rethinking Softmax with Cross-Entropy: Neural Network Classifier as Mutual Information Estimator. 2020, arXiv:cs.LG/1911.10688.

35. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. 2017, arXiv:cs.LG/1412.6980.

36. Chollet, F.; et al. Keras, 2015.

37. Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nature Methods 2020, 17, 261–272.

38. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. Journal of Machine Learning Research 2011, 12, 2825–2830.

39. Harris, C.R.; Millman, K.J.; van der Walt, S.J.; Gommers, R.; Virtanen, P.; Cournapeau, D.; Wieser, E.; Taylor, J.; Berg, S.; Smith, N.J.; et al. Array programming with NumPy. Nature 2020, 585, 357–362.

40. Reuther, A.; Kepner, J.; Byun, C.; Samsi, S.; Arcand, W.; Bestor, D.; Bergeron, B.; Gadepally, V.; Houle, M.; Hubbell, M.; et al. Interactive supercomputing on 40,000 cores for machine learning and data analysis. In Proceedings of the 2018 IEEE High Performance Extreme Computing Conference (HPEC); IEEE, 2018; pp. 1–6.

41. Rooney, B.; Benson, C.; Hennessy, E. The apparent reality of movies and emotional arousal: A study using physiological and self-report measures. Poetics 2012, 40, 405–422.

42. Shirai, M.; Suzuki, N. Is Sadness Only One Emotion? Psychological and Physiological Responses to Sadness Induced by Two Different Situations: "Loss of Someone" and "Failure to Achieve a Goal". Frontiers in Psychology 2017, 8, 288.

43. Yu, D.; Sun, S. A Systematic Exploration of Deep Neural Networks for EDA-Based Emotion Recognition. Information 2020, 11, 212.

| Video ID | Name | Short description | Expected emotion | Duration (s) |
|---|---|---|---|---|
| 1 | Puppies | Cute puppies running | Happiness | 13 |
| 2 | Avocado | A toddler holding an avocado | Happiness | 8 |
| 3 | Runner | Competitive runners supporting a girl from another team over the finish line | Happiness | 24 |
| 4 | Maggot | A man eating a maggot | Disgust | 37 |
| 5 | Raccoon | A man beating a raccoon to death | Anger | 16 |
| 6 | Trump | Donald Trump talking about foreigners | Anger | 52 |
| 7 | Mountain bike | A mountain biker riding down a rock bridge | Surprise | 29 |
| 8 | Roof run | A runner almost falling off a skyscraper | Surprise | 18 |
| 9 | Abandoned | A social worker feeding a starved toddler | Sadness | 64 |
| 10 | Waste | Residents collecting electronic waste in the slums of Accra | Sadness | 31 |
| 11 | Dog | A sad dog on the gravestone of its master | Sadness | 11 |
| 12 | Roof bike | A person biking on top of a skyscraper | Fear | 28 |
| 13 | Monster | A man discovering a monster through his camera | Fear | 156 |
| 14 | Condom ad | A child throwing a tantrum in a supermarket | Multiple | 38 |
| 15 | Soldier | Soldiers in battle | Multiple | 35 |

| Model name | Utility | Input | Architecture |
|---|---|---|---|
| MLP | Baseline | Downsampled plant signal | Alternation of ReLU-activated densely connected layers with dropout layers to limit overfitting. The last layer is a SoftMax-activated dense layer with one neuron per emotion class. |
| biLSTM | Considers the temporal dependencies of the plant signal | Downsampled plant signal | Two-block model: (1) LSTM layers embedded in a bidirectional wrapper; (2) an alternation of two ReLU-activated dense layers (1024 and 512 neurons, respectively) with dropout layers. The last layer is a SoftMax-activated dense layer with one neuron per emotion class. |
| MFCC-CNN | Specialized for 2D or 3D inputs such as multi-feature time series | MFCC features | Two-block model: (1) an alternation of convolutional layers with max-pooling operations; (2) an alternation of ReLU-activated dense layers with dropout layers. The last layer is a SoftMax-activated dense layer with one neuron per emotion class. |
| MFCC-ResNet | Pretrained deep CNN, emphasizing the importance of network depth | MFCC features | ResNet architecture slightly modified for the emotion detection task: the top dense classification layers are replaced by a dense layer of 1024 neurons followed by a dropout layer. The last layer is a SoftMax-activated dense layer with one neuron per emotion class. |
| Random Forest, not windowed | Effective for diverse datasets; good overall robustness | Raw plant signal, normalized, not windowed | Ensemble of decision trees. Parameters include the number of trees (300), the maximum depth of each tree (20), and a further parameter set to None. This configuration is intended to handle complex classification tasks while balancing bias and variance. |
| 1D CNN, not windowed | Suitable for time-series analysis | Raw plant signal, normalized, not windowed | Sequential model with a 1D convolutional layer (64 filters, kernel size 3, `swish` activation, input shape (10000, 1)), followed by a max-pooling layer (pool size 2), a Flatten layer, a dense layer (100 neurons, `swish` activation), and an output dense layer (one neuron per unique class in `y`, `softmax` activation). Compiled with the Adam optimizer, the `sparse_categorical_crossentropy` loss, and the accuracy metric. |
| biLSTM, not windowed | Considers the temporal dependencies of the plant signal | Raw plant signal, normalized, not windowed | Sequential model with a bidirectional LSTM layer (1024 units, returning sequences, input shape based on the reshaped training data), followed by a second bidirectional LSTM layer (1024 units), a dense layer (100 neurons, `swish` activation), and an output dense layer (one neuron per unique class in `y`, `softmax` activation). Optimized with Adam (learning rate 0.0003), tracking loss and accuracy. |
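To make the 1D CNN description concrete, the layer output shapes and parameter counts can be worked out by hand. The sketch below assumes Keras defaults that the text does not state explicitly (`padding="valid"` and stride 1 for `Conv1D`; `MaxPooling1D` strides equal to the pool size) and assumes six output classes; the helper names are hypothetical.

```python
def valid_length(length: int, size: int, stride: int = 1) -> int:
    """Output length of a 1D convolution or pooling layer with 'valid' (no) padding."""
    return (length - size) // stride + 1


def cnn1d_summary(input_len=10_000, channels=1, filters=64, kernel=3,
                  pool=2, dense_units=100, n_classes=6):
    """Shapes and parameter counts of Conv1D -> MaxPool -> Flatten -> Dense -> Dense.

    n_classes=6 is an assumed value; set it to the number of emotion labels used.
    """
    conv_len = valid_length(input_len, kernel)            # stride 1
    pool_len = valid_length(conv_len, pool, stride=pool)  # stride equals pool size
    flat = pool_len * filters
    params = {
        "conv": kernel * channels * filters + filters,    # weights + biases
        "dense": flat * dense_units + dense_units,
        "output": dense_units * n_classes + n_classes,
    }
    return {"conv_out": (conv_len, filters), "pool_out": (pool_len, filters),
            "flatten": flat, "params": params, "total": sum(params.values())}


summary = cnn1d_summary()
print(summary["flatten"], summary["params"]["dense"], summary["total"])
# 319936 31993700 31994562
```

Under these assumptions, almost all of the roughly 32 million trainable parameters sit in the connection between the Flatten layer and the 100-neuron dense layer, because the flattened feature vector still has about 320,000 entries for a 10,000-sample input.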

| Model name | Parameter | Values | Number of configurations |
|---|---|---|---|
| MLP | Dense units | 1024, 4096 | 288 |
| | Dense layers | 2, 4 | |
| | Dropout rate | 0, 0.2 | |
| | Learning rate | 3e-4, 1e-3 | |
| | Balancing | Balance, Weights, None | |
| | Window (s) | 5, 10, 20 | |
| | Hop (s) | 5, 10 | |
| biLSTM | LSTM units | 64, 256, 1024 | 648 |
| | LSTM layers | 1, 2, 3 | |
| | Dropout rate | 0, 0.2 | |
| | Learning rate | 3e-4, 1e-3 | |
| | Balancing | Balance, Weights, None | |
| | Window (s) | 5, 10, 20 | |
| | Hop (s) | 5, 10 | |
| MFCC-CNN | Conv filters | 64, 128 | 864 |
| | Conv layers | 2, 3 | |
| | Conv kernel size | 3, 5, 7 | |
| | Dropout rate | 0, 0.2 | |
| | Learning rate | 3e-4, 1e-3 | |
| | Balancing | Balance, Weights, None | |
| | Window (s) | 5, 10, 20 | |
| | Hop (s) | 5, 10 | |
| MFCC-ResNet | Pretrained | Yes, No | 432 |
| | Number of MFCCs | 20, 40, 60 | |
| | Dropout rate | 0, 0.2 | |
| | Learning rate | 3e-4, 1e-3 | |
| | Balancing | Balance, Weights, None | |
| | Window (s) | 5, 10, 20 | |
| | Hop (s) | 5, 10 | |
| RF no windowing | Number of estimators | 100, 200, 300, 500, 700 | 60 |
| | Max depth | None, 10, 20, 30 | |
| | Balancing | Balance, Weights, None | |
| 1D CNN no windowing | Conv filters | 64, 128 | 144 |
| | Conv layers | 2, 3 | |
| | Conv kernel size | 3, 5, 7 | |
| | Dropout rate | 0, 0.2 | |
| | Learning rate | 3e-4, 1e-3 | |
| | Balancing | Balance, Weights, None | |
| biLSTM no windowing | LSTM units | 64, 256, 1024 | 108 |
| | LSTM layers | 1, 2, 3 | |
| | Dropout rate | 0, 0.2 | |
| | Learning rate | 3e-4, 1e-3 | |
| | Balancing | Balance, Weights, None | |
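The configuration counts in the hyperparameter table are exactly the product of the number of candidate values per parameter, which is consistent with an exhaustive grid search. The sketch below encodes the search spaces as listed (the dictionary keys are hypothetical identifiers) and enumerates them with `itertools.product`; how the authors actually iterated over the grid is not stated.

```python
from itertools import product
from math import prod

BALANCING = ["Balance", "Weights", "None"]
LR = [3e-4, 1e-3]
DROPOUT = [0, 0.2]
WINDOWING = {"window_s": [5, 10, 20], "hop_s": [5, 10]}  # windowed models only

SEARCH_SPACES = {
    "MLP": {"dense_units": [1024, 4096], "dense_layers": [2, 4],
            "dropout": DROPOUT, "lr": LR, "balancing": BALANCING, **WINDOWING},
    "biLSTM": {"lstm_units": [64, 256, 1024], "lstm_layers": [1, 2, 3],
               "dropout": DROPOUT, "lr": LR, "balancing": BALANCING, **WINDOWING},
    "MFCC-CNN": {"conv_filters": [64, 128], "conv_layers": [2, 3],
                 "kernel_size": [3, 5, 7], "dropout": DROPOUT, "lr": LR,
                 "balancing": BALANCING, **WINDOWING},
    "MFCC-ResNet": {"pretrained": [True, False], "n_mfcc": [20, 40, 60],
                    "dropout": DROPOUT, "lr": LR, "balancing": BALANCING, **WINDOWING},
    "RF no windowing": {"n_estimators": [100, 200, 300, 500, 700],
                        "max_depth": [None, 10, 20, 30], "balancing": BALANCING},
    "1D CNN no windowing": {"conv_filters": [64, 128], "conv_layers": [2, 3],
                            "kernel_size": [3, 5, 7], "dropout": DROPOUT,
                            "lr": LR, "balancing": BALANCING},
    "biLSTM no windowing": {"lstm_units": [64, 256, 1024], "lstm_layers": [1, 2, 3],
                            "dropout": DROPOUT, "lr": LR, "balancing": BALANCING},
}


def grid_size(space: dict) -> int:
    """Number of configurations in a full Cartesian grid."""
    return prod(len(values) for values in space.values())


def configurations(space: dict):
    """Yield every configuration of the grid as a {parameter: value} dict."""
    keys = list(space)
    for combo in product(*space.values()):
        yield dict(zip(keys, combo))


for model, space in SEARCH_SPACES.items():
    print(model, grid_size(space))
```

Summed over all seven models, the grid contains 2544 configurations.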

| Model name | Parameter | Value |
|---|---|---|
| MLP | Dense units | 4096 |
| | Dense layers | 2 |
| | Dropout rate | 0.2 |
| | Learning rate | 0.001 |
| | Balancing | Balanced |
| | Window | 20 s |
| | Hop | 10 s |
| biLSTM | LSTM units | 1024 |
| | LSTM layers | 2 |
| | Dropout rate | 0 |
| | Learning rate | 0.0003 |
| | Balancing | Balanced |
| | Window | 20 s |
| | Hop | 10 s |
| MFCC-CNN | Conv filters | 96 |
| | Conv layers | 2 |
| | Conv kernel size | 7 |
| | Dropout rate | 0.2 |
| | Learning rate | 0.0003 |
| | Balancing | Balanced |
| | Window | 20 s |
| | Hop | 10 s |
| MFCC-ResNet | Pretrained | No |
| | Number of MFCCs | 60 |
| | Dropout rate | 0.2 |
| | Learning rate | 0.001 |
| | Balancing | Balanced |
| | Window | 20 s |
| | Hop | 10 s |
| RF no windowing | Number of estimators | 300 |
| | Max depth | 20 |
| | Balancing | None |
| 1D CNN no windowing | Conv filters | 96 |
| | Conv layers | 2 |
| | Conv kernel size | 7 |
| | Dropout rate | 0.2 |
| | Learning rate | 0.0003 |
| | Balancing | None |
| biLSTM no windowing | LSTM units | 1024 |
| | LSTM layers | 2 |
| | Dropout rate | 0 |
| | Learning rate | 0.0003 |
| | Balancing | None |

| Model | Test set accuracy | Test set recall |
|---|---|---|
| MLP | 0.399 | 0.220 |
| biLSTM | 0.260 | 0.351 |
| MFCC-CNN | 0.377 | 0.275 |
| MFCC-ResNet | 0.318 | 0.324 |
| RF no windowing | 0.552 | 0.552 |
| 1D CNN no windowing | 0.461 | 0.514 |
| biLSTM no windowing | 0.448 | 0.380 |
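The results table reports accuracy and recall separately. For single-label multi-class classification, micro-averaged recall and support-weighted recall both reduce to accuracy, so the differing values suggest that recall is averaged per class (macro recall, also called balanced accuracy); this is an inference, as the averaging scheme is not stated. The sketch below computes the three quantities from scratch on a hypothetical, deliberately imbalanced toy label set (not data from this study) to show why macro recall penalizes a classifier that ignores minority classes.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_recall(y_true, y_pred):
    """Recall (true positives / support) for every class present in y_true."""
    support = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {c: hits[c] / support[c] for c in support}


def macro_recall(y_true, y_pred):
    """Unweighted mean of per-class recalls: every class counts equally."""
    recalls = per_class_recall(y_true, y_pred)
    return sum(recalls.values()) / len(recalls)


def weighted_recall(y_true, y_pred):
    """Support-weighted mean of per-class recalls; equals accuracy here."""
    support = Counter(y_true)
    n = len(y_true)
    recalls = per_class_recall(y_true, y_pred)
    return sum(support[c] / n * recalls[c] for c in support)


# Hypothetical toy labels: a classifier that always predicts the majority class
y_true = ["neutral"] * 8 + ["happy"] * 2
y_pred = ["neutral"] * 10
print(accuracy(y_true, y_pred), macro_recall(y_true, y_pred))  # 0.8 0.5
```

A model that always predicts the majority class keeps a high accuracy but drops to chance-level macro recall, which is why reporting both metrics is informative when the emotion classes are imbalanced.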


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).