Submitted: 20 February 2024

Posted: 20 February 2024

Abstract

Keywords:

1. Introduction

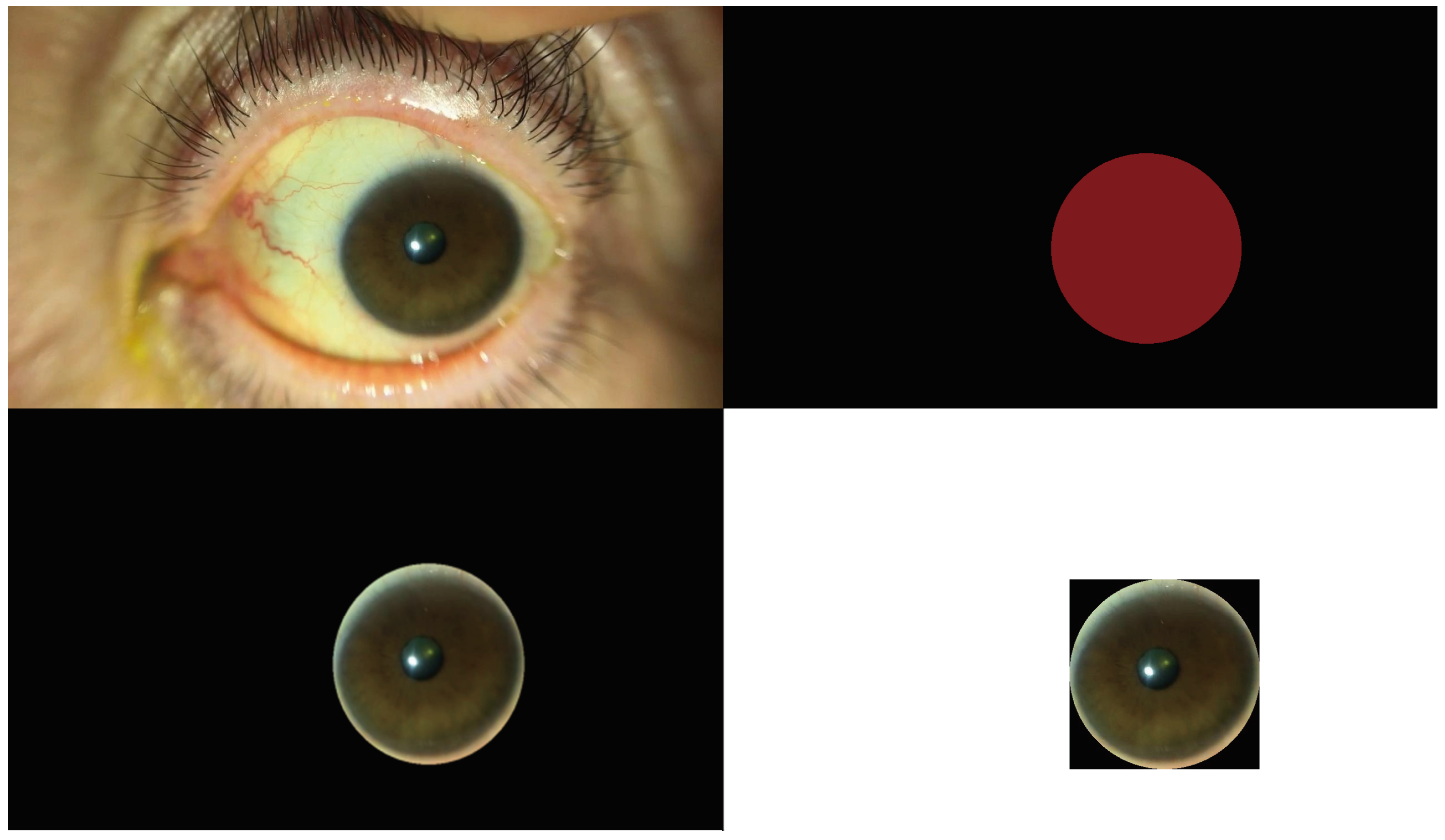

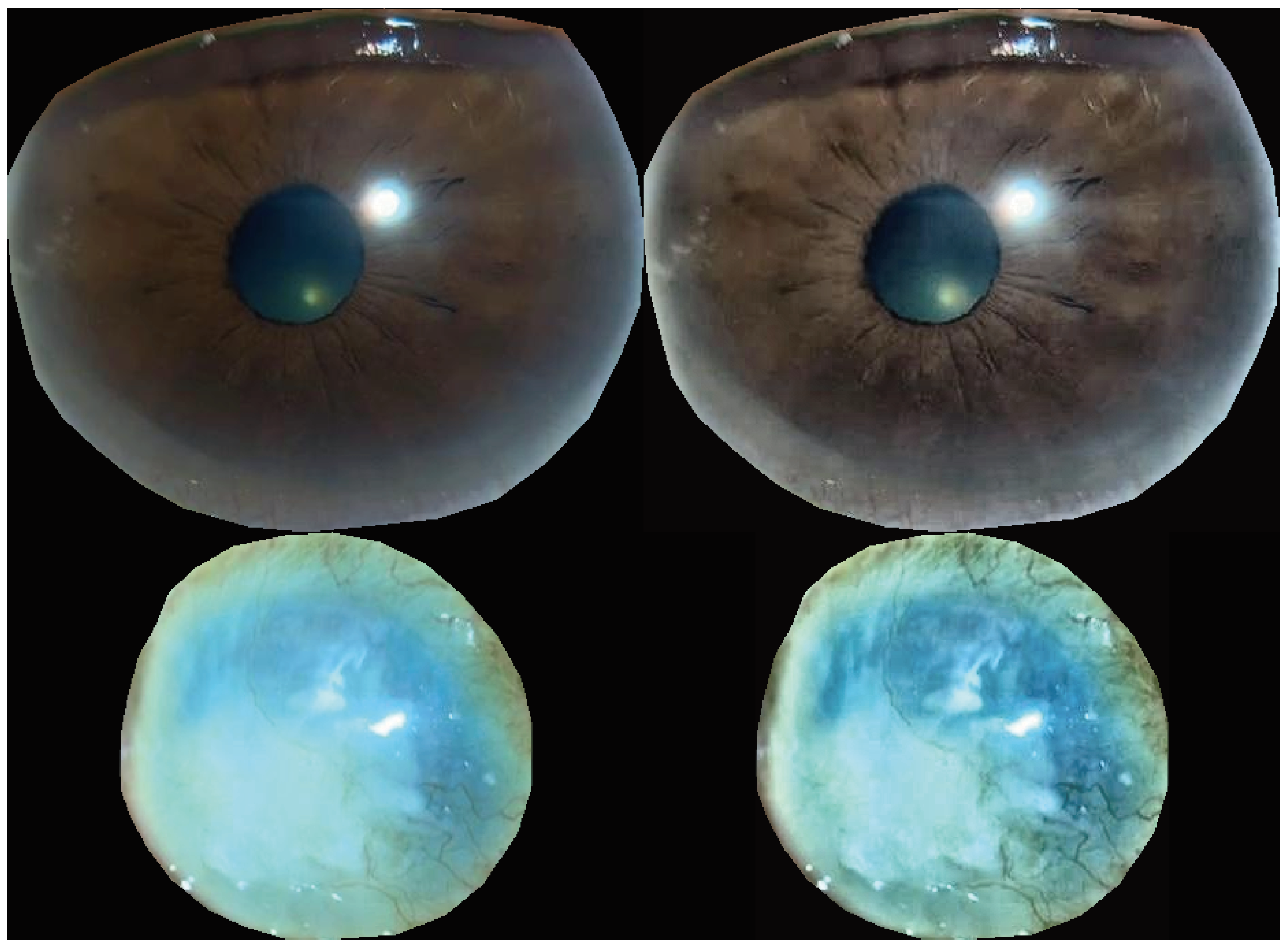

2. Materials and Methods

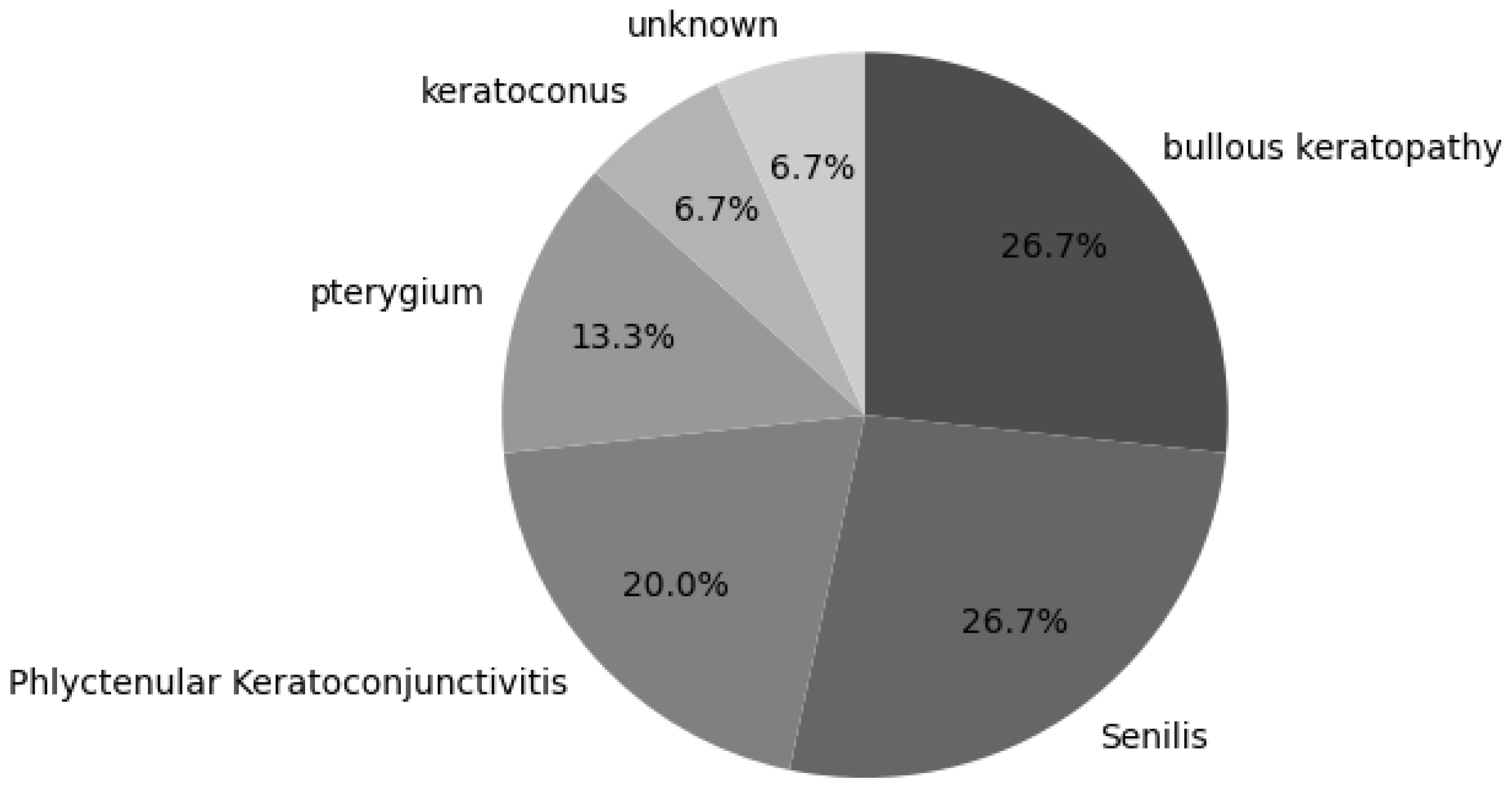

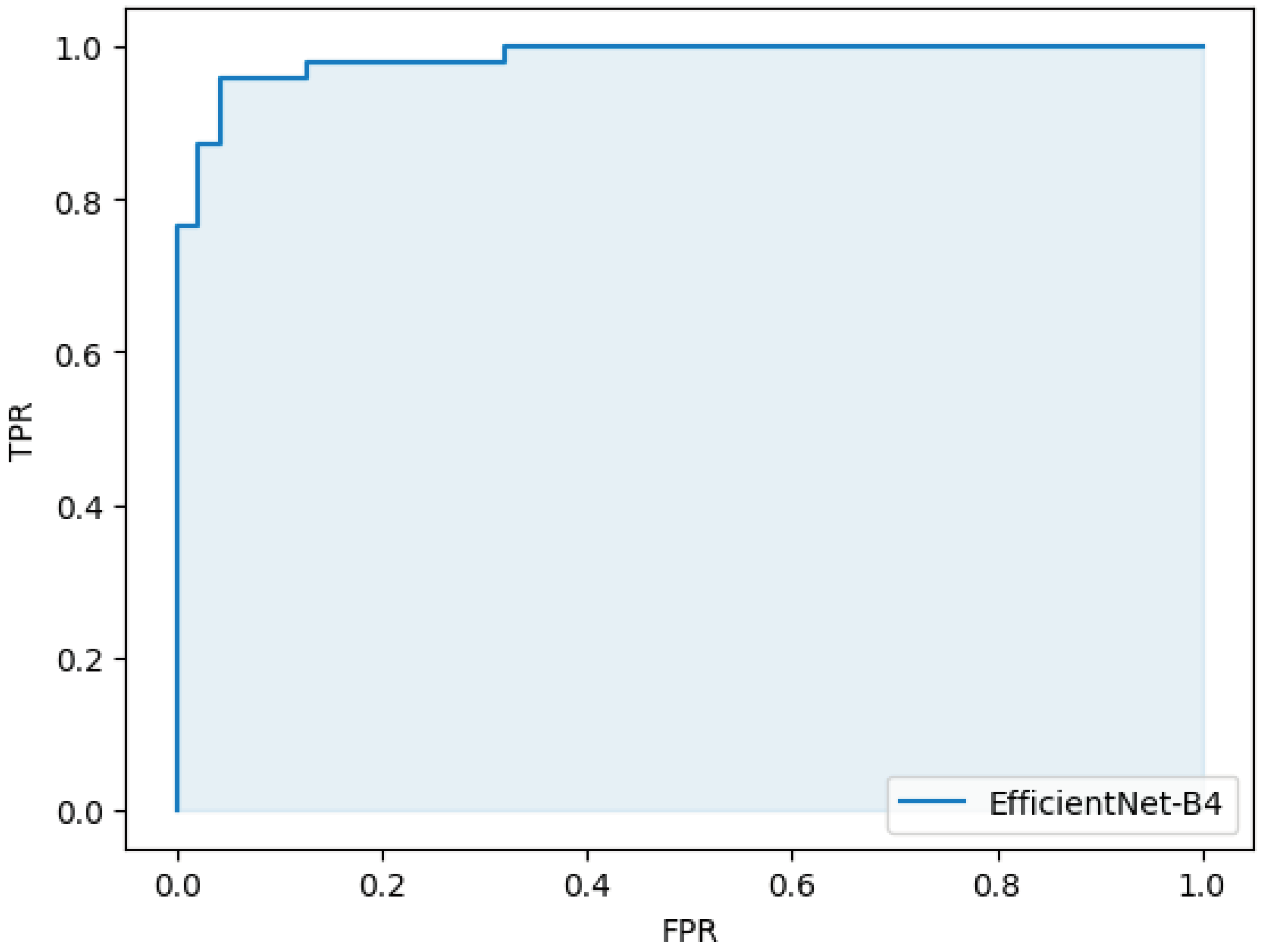

3. Results

4. Discussion

5. Conclusions

References

- Resnikoff S, Felch W, Gauthier T, et al. The number of ophthalmologists in practice and training worldwide: a growing gap despite more than 200,000 practitioners. Br J Ophthalmol. 2012;96:783-787. [CrossRef]

- Schwab L, Whitfield R Jr. Appropriate ophthalmic surgical technology in developing nations. Ophthalmic Surg Lasers Imaging Retina. 2013;13(12):991-993. [CrossRef]

- Singh J, Kabbara S, Conway M, Peyman G, Ross RD. Innovative Diagnostic Tools for Ophthalmology in Low-Income Countries. In: Novel Diagnostic Methods in Ophthalmology. IntechOpen; 2019 Sep 4. [CrossRef]

- Chirambo MC. The role of Western ophthalmologists in dealing with cataract blindness in developing countries. Doc Ophthalmol. 1992;81:349-350. [CrossRef]

- Smart Eye Camera: Ophthalmic Exams Via Your Smartphone Anywhere, Anytime. Available online: https://ouiinc.jp (accessed on 10 January 2024).

- Handayani AT, Valentina C, Suryaningrum IGAR, Megasafitri PD, Juliari IGAM, Pramita IAA, Nakayama S, Shimizu E, Triningrat AAMP. Interobserver Reliability of Tear Break-Up Time Examination Using “Smart Eye Camera” in Indonesian Remote Area. Clin Ophthalmol. 2023 Jul 24;17:2097-2107. [CrossRef]

- Andhare P, Ramasamy K, Ramesh R, Shimizu E, Nakayama S, Gandhi P. A study establishing sensitivity and accuracy of smartphone photography in ophthalmologic community outreach programs: Review of a smart eye camera. Indian J Ophthalmol. 2023 Jun;71(6):2416-2420. [CrossRef]

- Yazu H, Shimizu E, Okuyama S, Katahira T, Aketa N, Yokoiwa R, Sato Y, Ogawa Y, Fujishima H. Evaluation of Nuclear Cataract with Smartphone-Attachable Slit-Lamp Device. Diagnostics (Basel). 2020 Aug 9;10(8):576. [CrossRef]

- Shimizu E, Yazu H, Aketa N, Yokoiwa R, Sato S, Yajima J, Katayama T, Sato R, Tanji M, Sato Y, Ogawa Y, Tsubota K. A Study Validating the Estimation of Anterior Chamber Depth and Iridocorneal Angle with Portable and Non-Portable Slit-Lamp Microscopy. Sensors (Basel). 2021 Feb 19;21(4):1436. [CrossRef]

- Yazu H, Shimizu E, Sato S, Aketa N, Katayama T, Yokoiwa R, Sato Y, Fukagawa K, Ogawa Y, Tsubota K, Fujishima H. Clinical Observation of Allergic Conjunctival Diseases with Portable and Recordable Slit-Lamp Device. Diagnostics (Basel). 2021 Mar 17;11(3):535. [CrossRef]

- Shimizu E, Yazu H, Aketa N, Yokoiwa R, Sato S, Katayama T, Hanyuda A, Sato Y, Ogawa Y, Tsubota K. Smart Eye Camera: A Validation Study for Evaluating the Tear Film Breakup Time in Human Subjects. Transl Vis Sci Technol. 2021 Apr 1;10(4):28. [CrossRef]

- Shimizu E, Ogawa Y, Yazu H, Aketa N, Yang F, Yamane M, Sato Y, Kawakami Y, Tsubota K. “Smart Eye Camera”: An innovative technique to evaluate tear film breakup time in a murine dry eye disease model. PLoS One. 2019 May 9;14(5):e0215130. [CrossRef]

- Sengupta S, Singh A, Leopold H, Gulati T, Lakshminarayanan V. Ophthalmic diagnosis using deep learning with fundus images - A critical review. Artif Intell Med. 2020;102:101758. [CrossRef]

- Xu B, Chiang M, Chaudhary S, Kulkarni S, Pardeshi A, Varma R. Deep Learning Classifiers for Automated Detection of Gonioscopic Angle Closure Based on Anterior Segment OCT Images. Am J Ophthalmol. 2019. [CrossRef]

- Christopher M, Bowd C, Belghith A, Goldbaum M, Weinreb R, Fazio M, Girkin C, Liebmann J, Zangwill L. Deep Learning Approaches Predict Glaucomatous Visual Field Damage from OCT Optic Nerve Head En Face Images and Retinal Nerve Fiber Layer Thickness Maps. Ophthalmology. 2019. [CrossRef]

- Wanichwecharungruang B, Kaothanthong N, Pattanapongpaiboon W, Chantangphol P, Seresirikachorn K, Srisuwanporn C, Parivisutt N, Grzybowski A, Theeramunkong T, Ruamviboonsuk P. Deep Learning for Anterior Segment Optical Coherence Tomography to Predict the Presence of Plateau Iris. Transl Vis Sci Technol. 2021;10. [CrossRef]

- Zheng C, Xie X, Wang Z, Li W, Chen J, Qiao T, Qian Z, Liu H, Liang J, Chen X. Development and validation of deep learning algorithms for automated eye laterality detection with anterior segment photography. Sci Rep. 2021;11. [CrossRef]

- Chase C, Elsawy A, Eleiwa T, Ozcan E, Tolba M, Shousha M. Comparison of Autonomous AS-OCT Deep Learning Algorithm and Clinical Dry Eye Tests in Diagnosis of Dry Eye Disease. Clin Ophthalmol. 2021;15:4281-4289. [CrossRef]

- Wainberg M, Merico D, Delong A, Frey B. Deep learning in biomedicine. Nat Biotechnol. 2018;36:829-838. [CrossRef]

- Liu X, Faes L, Kale A, Wagner S, Fu D, Bruynseels A, Mahendiran T, Moraes G, Shamdas M, Kern C, Ledsam J, Schmid M, Balaskas K, Topol E, Bachmann L, Keane P, Denniston A. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: a systematic review and meta-analysis. Lancet Digit Health. 2019;1(6):e271-e297. [CrossRef]

- Rahimy E. Deep learning applications in ophthalmology. Curr Opin Ophthalmol. 2018;29:254-260. [CrossRef]

- Thakur A, Goldbaum M, Yousefi S. Predicting Glaucoma before Onset Using Deep Learning. Ophthalmol Glaucoma. 2020;3(4):262-268. [CrossRef]

- Iao W, Zhang W, Wang X, Wu Y, Lin D, Lin H. Deep Learning Algorithms for Screening and Diagnosis of Systemic Diseases Based on Ophthalmic Manifestations: A Systematic Review. Diagnostics (Basel). 2023;13. [CrossRef]

- M M, Prasad D, Kulkarni M, K S, S V. A Systematic Study of Deep Learning Architectures for Analysis of Glaucoma and Hypertensive Retinopathy. International Journal of Artificial Intelligence & Applications. 2022. [CrossRef]

- Tan M, Le QV. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv preprint arXiv:1905.11946. 2019. [CrossRef]

- Zuiderveld K. Contrast limited adaptive histogram equalization. In: Graphics Gems IV. Graphics Gems Series. Academic Press; 1994. pp. 474-485. [CrossRef]

- Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In: Navab N, Hornegger J, Wells W, Frangi A, editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Lecture Notes in Computer Science, vol 9351. Cham: Springer; 2015. [CrossRef]

- Ueno Y, Oda M, Yamaguchi T, Fukuoka H, Nejima R, Kitaguchi Y, Miyake M, Akiyama M, Miyata K, Kashiwagi K, Maeda N, Shimazaki J, Noma H, Mori K, Oshika T. Deep learning model for extensive smartphone-based diagnosis and triage of cataracts and multiple corneal diseases. Br J Ophthalmol. 2024 Jan 19:bjo-2023-324488. [CrossRef]

- Wu X, Huang Y, Liu Z, Lai W, Long E, Zhang K, Jiang J, Lin D, Chen K, Yu T, Wu D, Li C, Chen Y, Zou M, Chen C, Zhu Y, Guo C, Zhang X, Wang R, Yang Y, Xiang Y, Chen L, Liu C, Xiong J, Ge Z, Wang D, Xu G, Du S, Xiao C, Wu J, Zhu K, Nie D, Xu F, Lv J, Chen W, Liu Y, Lin H. Universal artificial intelligence platform for collaborative management of cataracts. Br J Ophthalmol. 2019 Nov;103(11):1553-1560. [CrossRef]

- Shimizu E, Tanji M, Nakayama S, Ishikawa T, Agata N, Yokoiwa R, Nishimura H, Khemlani RJ, Sato S, Hanyuda A, Sato Y. AI-based diagnosis of nuclear cataract from slit-lamp videos. Sci Rep. 2023 Dec 12;13(1):22046. [CrossRef]

- Shimizu E, Ishikawa T, Tanji M, Agata N, Nakayama S, Nakahara Y, Yokoiwa R, Sato S, Hanyuda A, Ogawa Y, Hirayama M, Tsubota K, Sato Y, Shimazaki J, Negishi K. Artificial intelligence to estimate the tear film breakup time and diagnose dry eye disease. Sci Rep. 2023 Apr 10;13(1):5822. [CrossRef]

- Li Z, Jiang J, Chen K, Chen Q, Zheng Q, Liu X, Weng H, Wu S, Chen W. Preventing corneal blindness caused by keratitis using artificial intelligence. Nat Commun. 2021 Jun 18;12(1):3738. [CrossRef]

- Hu S, et al. Unified Diagnosis Framework for Automated Nuclear Cataract Grading Based on Smartphone Slit-Lamp Images. IEEE Access. 2020;8:174169-174178. [CrossRef]

- Son KY, et al. Deep Learning-Based Cataract Detection and Grading from Slit-Lamp and Retro-Illumination Photographs: Model Development and Validation Study. Ophthalmol Sci. 2022 Mar 18;2(2):100147. [CrossRef]

| | Negative | Positive | Total |
|---|---|---|---|
| Train/val | 188 | 188 | 376 |
| Test | 47 | 47 | 94 |
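The split in the table above is balanced and stratified: each class contributes 235 images, of which 47 (20%) are held out for testing and 188 (80%) are used for training and validation. The paper does not say how images were assigned to the two sets, so the following is only a sketch of one common way to draw such a split; the `stratified_split` helper and the fixed random seed are hypothetical.

```python
import random


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Split sample indices into train/val and test sets, preserving class balance.

    Hypothetical helper for illustration; the paper's actual procedure is not stated.
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    train_val, test = [], []
    for label, indices in sorted(by_class.items()):
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)  # 20% of each class
        test.extend(indices[:n_test])
        train_val.extend(indices[n_test:])
    return train_val, test


# 235 images per class, as implied by the table (188 + 47).
labels = ["negative"] * 235 + ["positive"] * 235
train_val, test = stratified_split(labels)
print(len(train_val), len(test))  # 376 94
```

Splitting within each class, rather than over the pooled images, guarantees the 47/47 balance in the test set instead of leaving it to chance.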

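| | Predicted positive | Predicted negative |
|---|---|---|
| Actual positive | True positive (45) | False negative (2) |
| Actual negative | False positive (2) | True negative (45) |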
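The sensitivity, specificity and accuracy in the metrics table can be recomputed from this confusion matrix: sensitivity = TP/(TP+FN) = 45/47, specificity = TN/(TN+FP) = 45/47, and accuracy = (TP+TN)/94 = 90/94, each about 0.96. The text does not state how the 95% confidence intervals were obtained; the sketch below assumes a Wilson score interval, so its bounds are illustrative only. The helper names `wilson_interval` and `classification_metrics` are hypothetical.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95%)."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


def classification_metrics(tp, fn, fp, tn):
    """Point estimates and assumed Wilson 95% intervals from a 2x2 confusion matrix."""
    counts = {
        "sensitivity": (tp, tp + fn),               # TP / (TP + FN)
        "specificity": (tn, tn + fp),               # TN / (TN + FP)
        "accuracy": (tp + tn, tp + fn + fp + tn),   # (TP + TN) / all
    }
    return {
        name: (k / n, *wilson_interval(k, n))
        for name, (k, n) in counts.items()
    }


for name, (est, lo, hi) in classification_metrics(tp=45, fn=2, fp=2, tn=45).items():
    print(f"{name}: {est:.2f} (95% CI {lo:.2f}-{hi:.2f})")
```

Any valid interval, whichever method produced it, must contain its own point estimate, which is a quick sanity check when reading reported metrics.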

| Metric | Value |
|---|---|
| Sensitivity | 0.96 (95% CI 0.97-0.99) |
| Specificity | 0.96 (95% CI 0.97-0.99) |
| Accuracy | 0.96 (95% CI 0.97-0.99) |
| AUC | 0.98 (95% CI 0.98-0.99) |
| Dice | 0.94 |
| IoU | 0.94 |
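The segmentation rows report the Dice coefficient and the IoU (Jaccard index). For a single pair of masks the two are linked by Dice = 2·IoU/(1 + IoU), so Dice is never smaller than IoU and the two coincide only at 0 or 1. A minimal sketch for binary masks, assuming they are stored as flat sequences of 0/1 pixel values (the paper's mask format is not stated; `dice_and_iou` is a hypothetical helper):

```python
def dice_and_iou(pred, target):
    """Dice coefficient and IoU (Jaccard index) for two binary masks.

    Masks are equal-length sequences of 0/1 values (flattened images).
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    pred_area = sum(1 for p in pred if p)
    target_area = sum(1 for t in target if t)
    union = pred_area + target_area - intersection
    if union == 0:
        # Both masks empty: treated as perfect agreement (a common convention).
        return 1.0, 1.0
    dice = 2 * intersection / (pred_area + target_area)
    iou = intersection / union
    return dice, iou


pred = [0, 1, 1, 1, 0, 0]
target = [0, 0, 1, 1, 1, 0]
dice, iou = dice_and_iou(pred, target)
print(dice, iou)  # 0.6666666666666666 0.5 (intersection 2, union 4)
```

When scores are averaged over many images, the identity holds per image rather than exactly between the two means, but mean Dice still cannot fall below mean IoU.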

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).