1. Introduction

The emergence of language is considered one of the major evolutionary transitions [1]. It enables us to connect with others in rich ways, as individuals receive and process information from their surroundings and respond with behavioural changes. It is efficiently propagated among individuals (through its acquisition by children from adults) and is easily learnt, allowing its users to access general conceptualisation [2]. In the evolutionary context, the development of human language is intricately tied to the survival advantages it conferred on early social groups.

If anything characterizes linguistic phenomena is its astonishing ubiquity and diversity, spanning disparate research fields and scales [

2,

3,

4]. Linguistics [

5], psychology [

6], population dynamics [

7,

8], population genetics [

9], artificial intelligence [

10,

11,

12], network science [

13,

14,

15,

16,

17], statistical physics [

18,

19,

20,

21,

22,

23], theoretical and evolutionary biology [

24,

25,

26,

27] and cognitive science [

28,

29,

30,

31] provide different frameworks encompassing some aspect of language complexity. Yet, such explanations are not independent, but their relations give rise to a complex net of relations, as illustrated in

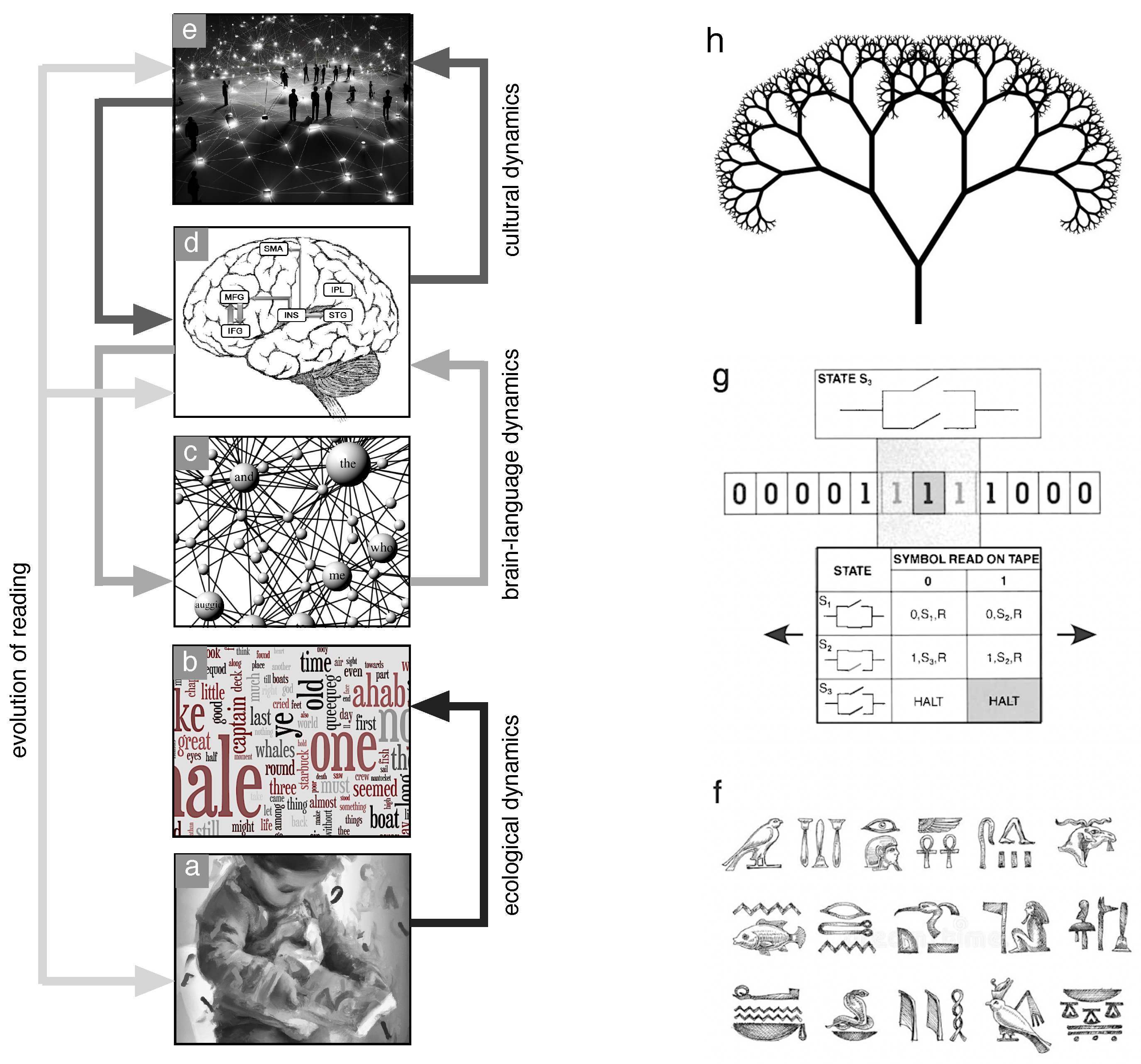

Figure 1a-e, exhibiting a hierarchy of such representations ranging from its minimal components to the socio-cultural domain. This complexity is deeply rooted in the evolutionary history of human communication and its coevolution with the brain, which reflects adaptive responses to environmental and social challenges. At the microscale, the arrangement and combination of these units adhere to syntactic and grammatical rules, providing the structural basis for constructing words and sentences. At the mesoscale, language exhibits emergent properties beyond lexicons, as words and sentences interact to convey new meaning. Sentence structures, constrained by grammatical rules, emerge from complex syntactic and semantic rules.

Each scale involves novel features: the properties observed at one scale seem to be emergent, that is, not reducible to the properties of the lower one. Within each scale, scholars have found constraints to the space of the possible: extant languages (written or spoken) seem to share several ‘optimal’ properties, which can often be seen as the result of certain evolutionary dynamics. As a matter of fact, in some respects languages behave like species in ecosystems—originating, coexisting within a great diversity, and potentially becoming extinct. In addition, the scales of description interact in causal loops: language is maintained by the cultural substrate of interacting human groups, but needs human brain architectures to develop within individuals. These features necessitate such diverse models and frameworks that a unified picture seems very challenging.

Despite the disparity of phenomena, the obvious differences among languages, and their ‘accidental’ histories, scholars have intensively explored the possibility of discovering general laws. Behind this effort lies the hypothesis that all languages share fundamental traits that reveal the presence of so-called universal patterns of organization, either at the level of grammar, writing systems or statistical patterns of their usage. This search puts language in a unique position within other scientific disciplines, as

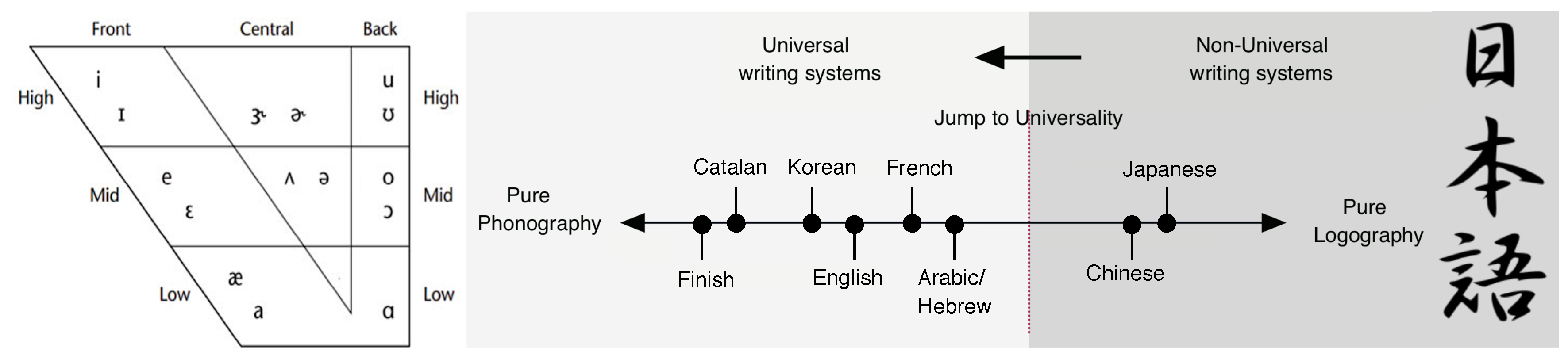

universality in language is defined, postulated or identified from many different angles, more than any other phenomena, some of them sketched in (

Figure 1f-h). As we shall discuss in this work, the evolution of written languages (

Figure 1f) provides a privileged window into the transitions towards universal patterns. The gold standard of universality is that of universal Turing machines (see e.g. [

32];

Figure 1g), for its power and beauty. Intimately connected to universality will be the concept of recursion, shown as an example in (

Figure 1h), where a tree is generated by a simple algorithm that can be represented as a grammar [

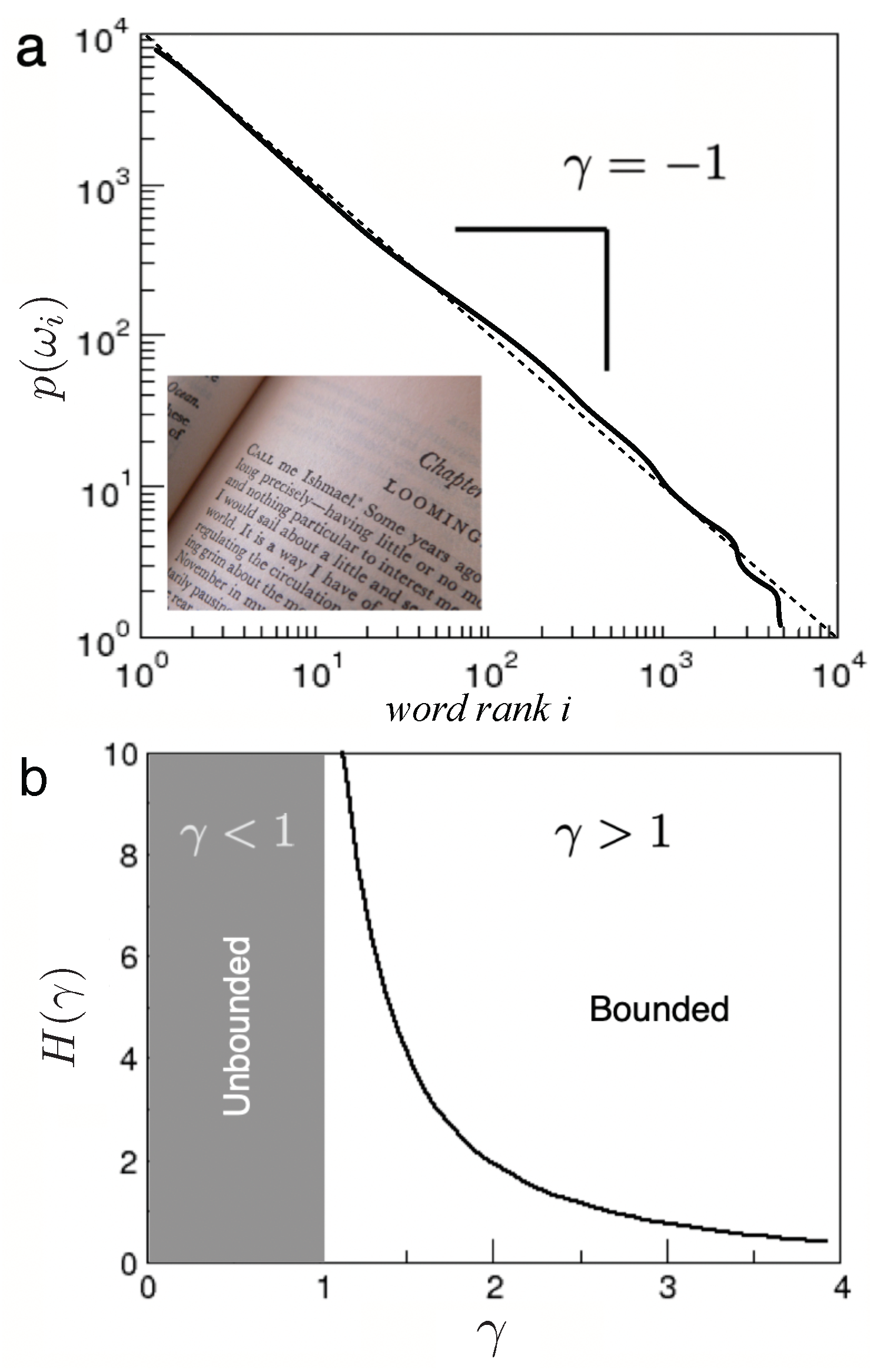

33]. Another instance of universality are scaling laws in the usage of words.

In physics, certain forms of universality play a crucial role in our understanding of complexity, particularly in relation to phase transitions: very different systems share commonalities close to critical points [34,35]. The conceptual power of this type of universality has percolated deeply into the complex systems sciences, as well as into evolutionary theory [36,37]. In particular, ‘universal’ statistical patterns have been identified (such as Zipf’s law, which we will consider in Section 5). However, most of these works have largely ignored other important studies on the structure of grammars. These include the so-called Universal Grammar, which describes an innate knowledge and stems from the rationalist tradition [38,39], and the so-called Greenberg Universals [40], which postulate, on purely empirical grounds, the existence of universal traits in the grammatical structure of any language. As a consequence of the divergent courses taken by these studies, there is a knowledge gap that calls for a theoretical bridge.

In other words: despite the central role of universality in approaches to linguistic phenomena, no definition has yet been provided that enables one to

- (i) identify and characterize the potential universals, and, more importantly,
- (ii) establish relations between them.

The goal of this paper is to bridge this gap by first establishing a minimal definition of universality, termed a ‘basic structure’. We shall then identify this skeleton in various established instances of universality, casting the different examples in this light. This groundwork will enable the exploration of potential connections between them, as well as the study of the transition from non-universal to universal (sometimes called the jump to universality [41]).

To this end, we first present the basic structure of universality, as well as that of recursion. We identify the latter as a special case of universality (where the relation between the universal object and the objects in the collection is of a special kind), and term its universal object a ‘grammar’. We then examine various notions of grammars in this light: we cast generative grammars of formal languages, the Universal Grammar and the Greenberg Universals in these structures, and compare the mathematical properties of the last two. We also define universal writing systems, and show that they are the ones that exclusively use the rebus principle. Lastly, we consider the statistics of word usage, where we encounter Zipf’s law. We explain one mechanism that accounts for its emergence, draw a connection between Zipf’s law and universal writing systems, and compare its properties with those of universal Turing machines.

As will soon become clear, instantiating the basic structure of universality entails, in a certain sense, that a plurality be considered a unity. One main conclusion of this work is that, while all notions of universality instantiate the basic structure, universality comes in two main flavours. In the first, once universality is attained, all possibilities of a complexity universe can be explored.¹ While the paradigmatic example is a universal Turing machine, we also encounter universal spin models [43], NP-complete problems, dense subsets, bases of vector spaces and others [44]. In this case, the jump to universality is the crossing of a threshold after which no more complexity need be incorporated into the universal object for it to reach any other object in the collection.

In contrast, in the second flavour of universality, the transition to universality is that by which a collection of objects comes to share an attribute. This is a form of emergence: a degree of freedom capturing that attribute becomes relevant at the scale where the transition occurs. The paradigmatic example is that of universality classes in statistical mechanics, where many Hamiltonians behave similarly close to criticality. This transition often has a more empirical nature than the first one.

If the first flavour is related to an exhaustion of possibilities, the second is linked to a massive reduction of effective degrees of freedom. The first is related to forms of unreachability via Lawvere’s fixed point theorem [44,45,46], which can be seen as limitations in provability (via Gödel’s theorem), computability (via the uncomputability of the halting problem), the definition of sets (via Russell’s paradox), or the impossibility of defining the set of all sets or an ordinal that contains all others (via Cantor’s theorem; see also [47]), to mention a few (see [45] for more). The second is related to the presumed validity of asymptotic reasoning to derive macroscopic behaviours [48], which results in limitations of predictability insofar as the appearance of new effective degrees of freedom was not foreseen from the finite.²

We shall refer to the first flavour of universality as mechanistic and to the second as emergent. Note that we do not have a rigorous definition of either, but only the properties mentioned in the preceding paragraphs. Devising a meaningful definition would be part of the solution.

Broadening the scope, our contribution can be seen as part of a larger endeavour, which seeks to understand:

What is the relation between mechanistic universality and emergent universality?

Does one imply the other? Or are they independent? These questions can only be addressed from a common framework with which to compare them. This is what we (hope to) provide in this work.

This paper is structured as follows. First we present the basic structures of universality and recursion (Section 2). Then, we cast several notions of universality in grammars in these structures (Section 3, and other infinite sets with a finite description in Appendix A). Next we turn to written representations of languages, where we define and characterize universal writing systems (Section 4). Finally, we examine emergent universals in the light of our structures (Section 5, while the details of communication conflicts leading to Zipf’s law are deferred to Appendix B). We conclude and present an outlook in Section 6.

2. The basic structures of universality and recursion

Let us start by defining the basic structures of universality and recursion.

‘Universal’ means ‘relative to the Universe’, and by extension, ‘all-encompassing’.

3 More formally (see

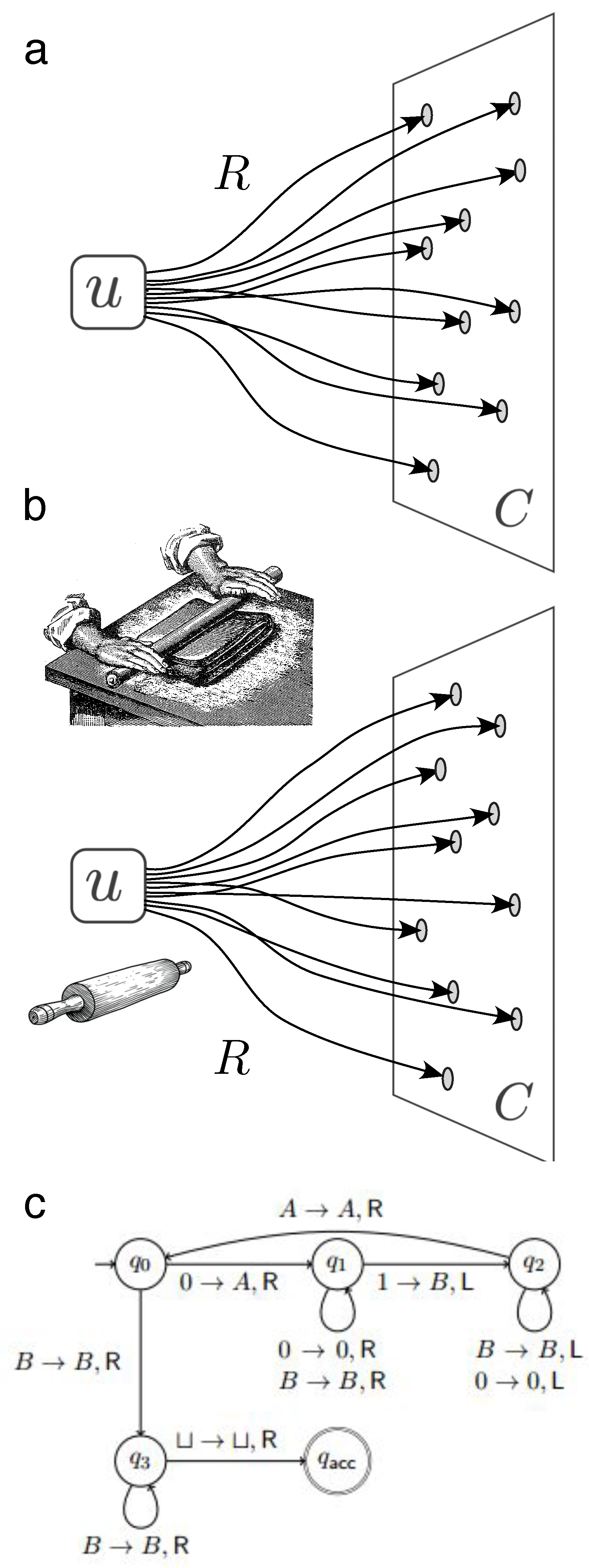

Figure 2),

Basic structure of universality

Given a set C and a relation R landing in C,

a finite u is universal if $(u, c) \in R$ for all $c \in C$.

‘All’ qualifies the set C and ‘encompass’ is captured by the relation R; in this minimal sense, this formalizes the idea that universal stands for all-encompassing. Recall that a relation R is a subset of the Cartesian product of two other sets, $R \subseteq S \times C$, i.e. R is identified with the set of pairs $(s, c)$ for which it holds. We say that R lands in C if the second factor of this product is precisely C. We leave S unspecified, as we do not want to commit to where u belongs. We also require that u have a finite description because, how could we even present an object that requires an infinite description?⁴

If C is infinite, the finiteness of u excludes trivial structures of universality in which R is the identity relation and $u = C$. However, if C is finite (e.g. the set of grammars of natural languages in Section 3.2), such trivial universality is not excluded by the finiteness of u.
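For a finite collection C, the definition can be checked mechanically. The Python sketch below is only illustrative: the function name `is_universal` and the toy relation `spells` (‘word c can be written with the letters in u’) are hypothetical choices, not part of the formal definition. The last line mimics the trivial case mentioned above, where u simply lists C.

```python
from typing import Callable, Iterable


def is_universal(u, collection: Iterable, relation: Callable[[object, object], bool]) -> bool:
    """Basic structure of universality for a finite collection C:
    u is universal if (u, c) is in R for every c in C."""
    return all(relation(u, c) for c in collection)


# Hypothetical relation R: a word c is related to a finite set u of letters
# if c can be spelled using only letters from u.
def spells(u: frozenset, word: str) -> bool:
    return set(word) <= u


C = ["ab", "ba", "aab", "bbba"]  # a toy finite collection

print(is_universal(frozenset("ab"), C, spells))  # True
print(is_universal(frozenset("a"), C, spells))   # False: "ab" needs the letter b

# Trivial case for finite C: u simply lists C, and R(u, c) holds when c is listed in u.
print(is_universal(frozenset(C), C, lambda u, c: c in u))  # True
```

The trivial construction only works because C is finite; for infinite C, listing C would violate the requirement that u be finite.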

A special kind of universality is given by recursion:

Basic structure of recursion

Let u be universal for set C with relation R. u is recursive if R reads ‘can be applied to a base case a finite but unbounded number of times’. We call such u a grammar.

In this case, R consists of applying a finite set of rules (or ‘mould’) a potentially unbounded number of times to generate the elements of a family. Note that this use of the term ‘grammar’ is broader than the usual one. Paradigmatic examples are so-called generative grammars (Section 3.1), such as $S \to ASB$ and $S \to 0$, with S the start symbol, A and B terminal symbols and 0 the empty symbol, where these two rules (the ‘mould’) generate the (context-free) language $\{A^n B^n : n \geq 0\}$ (the set C; see Figure 2c).
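The two rules can be unrolled mechanically. The following Python sketch is a minimal illustration, assuming the empty symbol 0 is represented by the empty string and that each step rewrites the single occurrence of S; the names `derive` and `in_relation` are hypothetical.

```python
def derive(max_steps: int) -> set[str]:
    """Terminal strings obtainable from S with at most max_steps rule applications,
    using the 'mould' S -> ASB and S -> 0 (0 = empty string)."""
    rules = ["ASB", ""]        # right-hand sides for the single non-terminal S
    frontier = {"S"}           # sentential forms that still contain S
    language: set[str] = set()
    for _ in range(max_steps):
        next_frontier = set()
        for form in frontier:
            i = form.index("S")  # every form in the frontier contains exactly one S
            for rhs in rules:
                new_form = form[:i] + rhs + form[i + 1:]
                if "S" in new_form:
                    next_frontier.add(new_form)
                else:
                    language.add(new_form)  # only terminals left: an element of C
        frontier = next_frontier
    return language


def in_relation(x: str) -> bool:
    """R(G, x): x is derivable from S in finitely many steps. A string of length 2n
    needs n + 1 applications, so searching up to len(x) // 2 + 1 steps suffices."""
    return x in derive(len(x) // 2 + 1)


print(sorted(derive(4), key=len))                  # ['', 'AB', 'AABB', 'AAABBB']
print(in_relation("AAABBB"), in_relation("ABAB"))  # True False
```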

Another example of a recursive system is an axiomatic formal system, where a finite number of axioms and transformation rules generate an (infinite) set. For example, in the simplest case and the most naive formulation, the natural numbers (the set C) are generated by applying the successor function $s(n) = n + 1$ an unbounded number of times to a base case (the number 0); together, $s$ and 0 define the ‘mould’. One of the appeals (if not the main one) of axiomatization is precisely that it purports to trap an infinite wealth of information in a finite, manageable stock of basic (self-evident) principles (see e.g. [50]).

3. Grammars

Let us now consider notions of universality in grammars. We will first examine generative grammars (Section 3.1), which form a paradigmatic framework for a theory of grammar [51,52]. We will then consider two concepts of universality postulated for the grammatical structure of human language(s): the Universal Grammar in Section 3.2 and the Greenberg Universals in Section 3.3, as well as their potential connections in Section 3.4.

Let us start with some general considerations. The two notions of universality originate from two distinct, almost opposed perspectives. First, the potential existence of an innate endowment provided by a Universal Grammar originates from the rationalist tradition [38,39,51]. Rationalism posits that human beings possess a priori knowledge, which exists independently of experience [53,54,55]. This universality, therefore, would be generic and part of the human brain’s capacity for processing complex computations, presumably utilized for communication purposes. Its existence is postulated to be necessary to overcome the lack of comprehensive and systematic linguistic input encountered during the process of language acquisition [56].

In contrast, the Greenberg Universals provide a different, albeit potentially related, notion, as they postulate the existence of traits that presumably hold in the grammatical structures of any language, so-called universal traits [40,57]. The conclusion is drawn from a generalization that relies solely on empirical evidence, without any regard to the epistemological issues related to language acquisition.

Throughout the text we pass no judgements on the validity of either approach in exploring the functioning of human language.

3.1. Generative grammars

Given a finite alphabet

—e.g.

for a binary alphabet—and its so-called Kleene star

where

denotes the

n-fold Cartesian product of

, a formal language

L is

, that is, a subset of the set of finite concatenations of elements of the alphabet. Let a generative grammar

G be a finite set of transformation rules which are well-formed, i.e. contain a start symbol, non-terminal and terminal symbols (see e.g. [

32] or [

58]). Additional constraints lead to the appearance of specific grammars organized in a hierarchy of escalating complexity that begins with regular grammars, progresses through context-free and context-sensitive grammars, and culminates in unrestricted grammars. This classification of grammars is known as the Chomsky hierarchy (

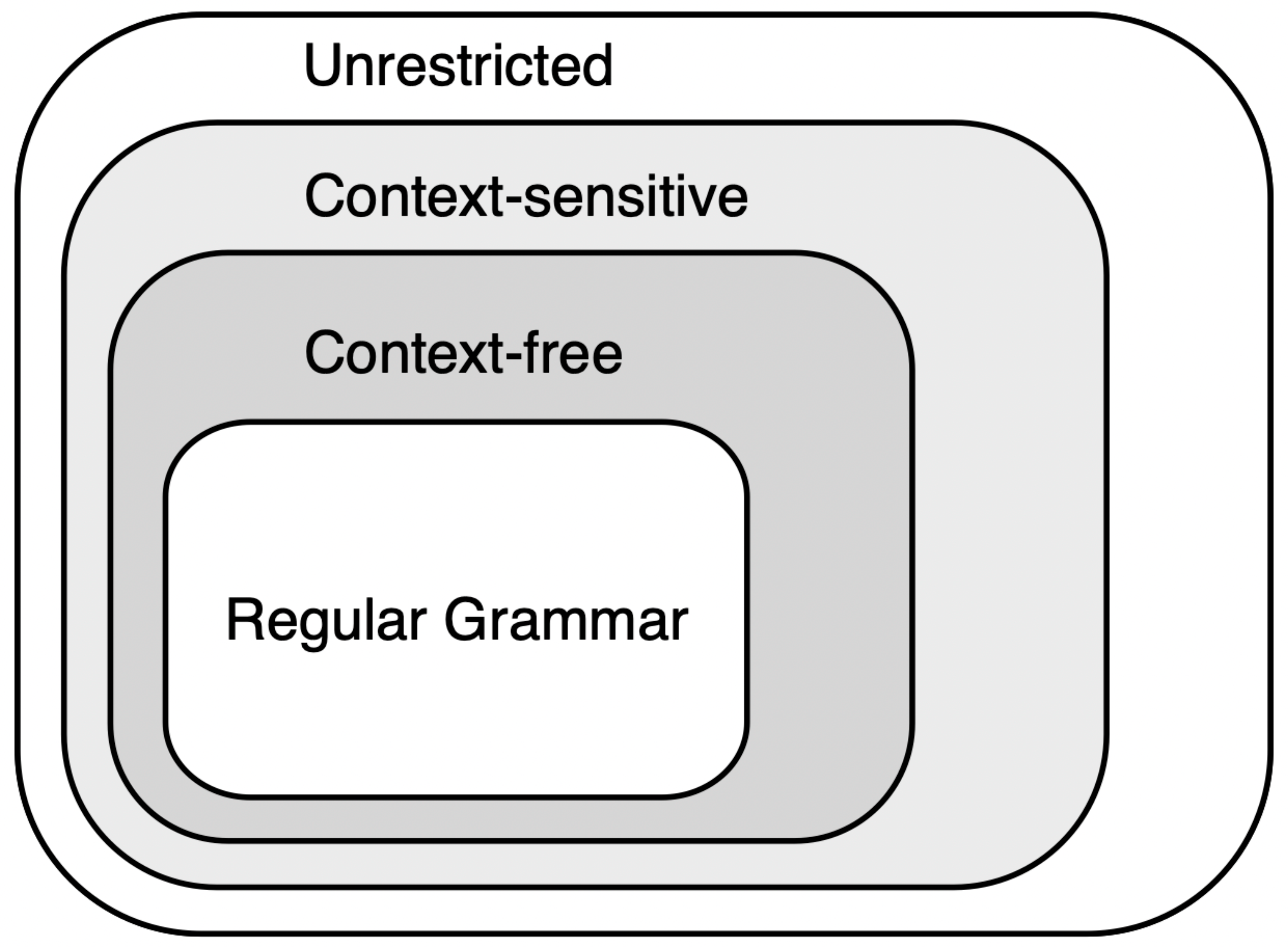

Figure 3).

A grammar G is said to generate a formal language L, written $L = L(G)$, if for every $x \in L$ there exists a finite sequence of applications of the rules in G resulting in x [58]. This instantiates the basic structure of recursion as follows:

$$u = G, \qquad C = L,$$

so that the elements $c \in C$ are precisely the strings $x \in L$. Let $S \Rightarrow_G^* x$ denote ‘there exists a finite application of the grammar rules G from the start symbol S that results in string x’. Then relation R precisely captures this condition, namely

$$(G, x) \in R \iff S \Rightarrow_G^* x.$$

If $(G, x) \in R$ for all $x \in L$, we say that ‘G generates L’ or, in this work, that ‘G is universal for L’.

Four remarks are in order. First, another recursive description of L is provided by the Turing machine (TM) T that accepts it, denoted $L = L(T)$. A Turing machine is a finite set of rules (formally, a 7-tuple specifying a finite set of states, finite input and tape alphabets, a start state, halting states and a finite set of transition rules [32]) such that, if input x is initially written on the tape, T (eventually) halts in an accepting state precisely when $x \in L$. In this case, T is said to accept L; if, in addition, T halts on every input (rejecting every $x \notin L$), T is said to decide L. What is important for our discussion is that an infinite collection of strings (namely L) is characterized by a finite mould (namely the transition rules of T). The mould can be unravelled to give rise to each finite but unbounded element, namely any $x \in L$. Depending on the complexity of the grammar, a language is accepted by a finite state automaton, a pushdown automaton, a linear bounded automaton or a Turing machine if and only if it is generated by a regular grammar, context-free grammar, context-sensitive grammar or unrestricted grammar, respectively (cf. Figure 3). In this sense, the Turing machine T provides an alternative finite description of L to its generative grammar G: that of T can be seen as a passive description, as it accepts the string, whereas that of G is an active description, as it generates the string. Either way, both are recursive and thus universal according to our definition; only the relation R differs slightly between the two cases.
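The contrast between the passive and the active description can be sketched for the language $\{A^n B^n : n \geq 0\}$ from Section 2. In this Python sketch, the recognizer `accepts` stands in for a pushdown automaton (it keeps an explicit stack), and `generate` unrolls the rules $S \to ASB$ and $S \to 0$; both function names are hypothetical. The final check confirms that the two finite descriptions agree on all strings of length at most 6.

```python
from itertools import product


def accepts(x: str) -> bool:
    """Passive description: a pushdown-style recognizer for {A^n B^n : n >= 0}."""
    stack: list[str] = []
    seen_b = False
    for ch in x:
        if ch == "A":
            if seen_b:          # an A after a B is never allowed
                return False
            stack.append("A")   # push one marker per A
        elif ch == "B":
            seen_b = True
            if not stack:       # more B's than A's so far
                return False
            stack.pop()         # match this B with an earlier A
        else:
            return False        # symbol outside the alphabet {A, B}
    return not stack            # accept iff every A has been matched


def generate(n: int) -> set[str]:
    """Active description: strings produced by at most n uses of S -> ASB, then S -> 0."""
    if n == 0:
        return {""}
    return {""} | {"A" + w + "B" for w in generate(n - 1)}


# Both descriptions single out the same strings of length <= 6.
short_strings = ["".join(p) for k in range(7) for p in product("AB", repeat=k)]
agree = all(accepts(x) == (x in generate(3)) for x in short_strings)
print(agree)  # True
```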

Second, note that the foregoing definition of universality for Turing machines differs from that of a universal Turing machine, by which a fixed Turing machine can simulate any other Turing machine on any input (see e.g. [44]). Here, that a Turing machine T be universal for a language L means that it accepts it, i.e. $L = L(T)$. A universal Turing machine U accepts the language

$$L(U) = \{\, d(T)\#x : T \text{ accepts } x \,\},$$

where d is a map from Turing machines to strings, i.e. a description, and # is a special symbol separating the parts of the string.
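The idea behind $L(U)$ can be sketched with an interpreter: a single fixed program that reads a description d(T), followed by #, followed by an input x, and simulates T on x. In the Python sketch below, the JSON encoding chosen for d, the blank symbol `_` and the step budget are hypothetical choices; a genuine universal Turing machine has no step budget and may run forever, which the sketch reports as `None`. The example machine `EVEN_ONES` (also hypothetical) accepts binary strings containing an even number of 1s.

```python
import json

BLANK = "_"


def simulate(tm: dict, x: str, max_steps: int = 10_000):
    """Run a single-tape Turing machine on x. Returns True (accept), False (reject)
    or None if the step budget runs out before the machine halts."""
    delta = {(q, a): (q2, b, move) for q, a, q2, b, move in tm["delta"]}
    tape = dict(enumerate(x))       # sparse tape; unwritten cells hold BLANK
    state, head = tm["start"], 0
    for _ in range(max_steps):
        if state == tm["accept"]:
            return True
        if state == tm["reject"]:
            return False
        symbol = tape.get(head, BLANK)
        if (state, symbol) not in delta:
            return False            # no applicable transition: halt and reject
        state, tape[head], move = delta[(state, symbol)]
        head += 1 if move == "R" else -1
    return None


def d(tm: dict) -> str:
    """Hypothetical description map d: Turing machine -> string (JSON, contains no '#')."""
    return json.dumps(tm, separators=(",", ":"))


def universal(w: str):
    """Sketch of U: split w = d(T) + '#' + x and simulate T on x."""
    description, _, x = w.partition("#")
    return simulate(json.loads(description), x)


EVEN_ONES = {
    "start": "even", "accept": "acc", "reject": "rej",
    "delta": [
        ["even", "0", "even", "0", "R"], ["even", "1", "odd", "1", "R"],
        ["odd", "0", "odd", "0", "R"], ["odd", "1", "even", "1", "R"],
        ["even", BLANK, "acc", BLANK, "R"], ["odd", BLANK, "rej", BLANK, "R"],
    ],
}

print(universal(d(EVEN_ONES) + "#1010"))  # True: two 1s
print(universal(d(EVEN_ONES) + "#111"))   # False: three 1s
```

The fixed function `universal` never changes; only the description string it receives does, which is the sense in which one machine covers all others.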

Third, the vast majority of formal languages do not admit a finite description (either by a generative grammar or by a machine): their only ‘description’ consists of listing each string x in the language, which scarcely qualifies as a description at all. These languages cannot be cast in the basic structure of universality because of the requirement that u be finite. (Without this requirement, they could trivially be cast by identifying $u = L$, where L is the language, and R with the identity.) This mismatch between languages with a finite description (or handle) and generic languages can be seen with a simple counting argument: the number of generative grammars or Turing machines is given by the cardinality of the naturals, $\aleph_0$, whereas the number of languages is given by the cardinality of the power set of $\Sigma^*$, $|2^{\Sigma^*}| = 2^{\aleph_0}$, an infinity of larger cardinality. By Cantor’s theorem, there is no surjection from the naturals onto their power set. It follows that the fact that L has a finite description is extremely informative. Note that having a finite description contributes significantly to making the language L interesting, at least for most notions of ‘interesting’ we can think of (see also the discussion in [41]). As a matter of fact, most languages we ever consider have a grammar, i.e. are recursively enumerable. This situation parallels that of other infinite sets with a finite handle (Appendix A).
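Cantor’s diagonal argument behind this count can be made concrete. Enumerate $\Sigma^*$ as $w_0, w_1, w_2, \dots$ and suppose the languages with a finite description are listed as $L_0, L_1, \dots$ (for instance, one per grammar). The diagonal language $D = \{w_i : w_i \notin L_i\}$ differs from every $L_i$ at the string $w_i$, so no list indexed by the naturals contains all languages. The Python sketch below checks this on a finite prefix of a hypothetical list; it illustrates the argument rather than proving it.

```python
from itertools import count, islice, product


def shortlex(alphabet: str):
    """Enumerate Sigma* by length, then lexicographically: '', '0', '1', '00', ..."""
    for n in count():
        for letters in product(alphabet, repeat=n):
            yield "".join(letters)


def diagonal(languages: list[set[str]], alphabet: str = "01") -> set[str]:
    """The part of D = {w_i : w_i not in L_i} fixed by the first len(languages) strings."""
    words = list(islice(shortlex(alphabet), len(languages)))
    return {w for w, lang in zip(words, languages) if w not in lang}


# A hypothetical list of (finite) languages L_0, ..., L_3 over {0, 1}.
listed = [set(), {"", "0"}, {"1"}, {"0", "1", "00"}]
D = diagonal(listed)
words = list(islice(shortlex("01"), len(listed)))
# D disagrees with each L_i about the string w_i, so D is not in the list.
print(all((w in D) != (w in lang) for w, lang in zip(words, listed)))  # True
```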

Finally, these considerations apply not only to formal languages but also to grammars of natural languages. The latter are usually classified as context-free, or mildly context-sensitive in certain exceptional cases [58].

3.2. Universal Grammar

The concept of a Universal Grammar (UG) was proposed by Chomsky in 1957 to explain how a child, exposed to only a finite set of sentences, can ‘extract’ the underlying grammar from this sample and then creatively construct new sentences that adhere to correct grammatical rules [51]. This seemingly paradoxical situation is known as the problem of the Poverty of the Stimulus [39,56]. Possessing a ‘universal grammar’ in our brain would solve this paradox: the existence of the UG would enormously restrict the search space of potential grammatical structures, thereby acting both as a guide and as a frame for the process of language acquisition. This ‘grammar’ would turn language acquisition into a process of setting the parameters for the specific grammar of the language the child is exposed to. Specifically, the UG, in the Principles & Parameters approach [59,60], can be seen as a ‘grammar’ with free parameters. As these parameters are set to different values, the UG specializes to the grammars of different natural languages [61], each presumably characterized by a particular parametrization of the UG. In this regard, the UG can be considered a meta-grammar.

We start by observing that, from a mathematical perspective, the existence of a UG in the Principles & Parameters paradigm is trivial, for the following reason. There is a finite number of natural languages (about six thousand [62]); let us assume that every natural language has its own distinctive grammar. It is a plain fact that every finite set can be parametrized. From a mathematical standpoint, this is precisely what the Universal Grammar represents: a parametrization of the set of grammars of all natural languages. More quantitatively, since k independent binary parameters distinguish $2^k$ elements, a set of about six thousand elements can be parametrized with $\lceil \log_2 6000 \rceil = 13$ independent binary parameters ($2^{12} = 4096 < 6000 \leq 8192 = 2^{13}$), which is roughly the number of parameters of the UG [60]. For example, the value of one parameter would determine whether the order of the sentence is Subject-Verb-Object (as in Catalan) or Subject-Object-Verb (as in Japanese), and another would determine whether the subject of the sentence can be omitted (as in Catalan) or not (as in German). (For further parameters, see [60].)

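Slightly more formally, let $\mathcal{P} = \{0, 1\}^{13}$ denote the space of values of 13 binary parameters, and let $\mathcal{G}_{\mathrm{NL}}$ denote the set of grammars of natural languages. We see the universal grammar $\mathrm{UG}$ as a map

$$\mathrm{UG} : \mathcal{P} \to \mathcal{G}_{\mathrm{NL}},$$

so that $\mathrm{UG}(p)$ corresponds to the grammar of Navajo, Mohawk, Welsh or Basque (to mention some) depending on $p \in \mathcal{P}$. The claim of universality corresponds to the statement that, for any $G \in \mathcal{G}_{\mathrm{NL}}$, there is a $p \in \mathcal{P}$ such that $\mathrm{UG}(p) = G$. That is, $\mathrm{UG}$ is surjective. But this holds because $\mathrm{UG}$ is a parametrization of $\mathcal{G}_{\mathrm{NL}}$.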
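The triviality of this parametrization can be checked directly. In the Python sketch below, the 6000 grammars are hypothetical placeholders (`grammar_0`, ..., `grammar_5999`), and the encoding (reading the parameter vector as a binary number and folding the $2^{13} - 6000$ unused codes back onto the set) is an arbitrary illustrative choice; any such encoding yields a surjective map.

```python
from itertools import product


def bits_needed(n: int) -> int:
    """Smallest k with 2**k >= n, i.e. ceil(log2 n), computed exactly with integers."""
    return (n - 1).bit_length() if n > 1 else 0


def make_ug(grammars: list[str]):
    """Build a surjective map UG: {0,1}^k -> grammars, with k = bits_needed(len(grammars))."""
    k = bits_needed(len(grammars))

    def ug(p: tuple[int, ...]) -> str:
        index = int("".join(map(str, p)), 2) if p else 0
        return grammars[index % len(grammars)]  # fold unused codes back onto the set

    return ug, k


grammars = [f"grammar_{i}" for i in range(6000)]  # hypothetical stand-ins for G_NL
ug, k = make_ug(grammars)
image = {ug(p) for p in product((0, 1), repeat=k)}
print(k, image == set(grammars))  # 13 True
```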

We can easily cast the Universal Grammar in the basic structure of universality by identifying $u = \mathrm{UG}$, $C = \mathcal{G}_{\mathrm{NL}}$ and R with

$$(\mathrm{UG}, G) \in R \iff \exists\, p \in \mathcal{P} : \mathrm{UG}(p) = G.$$

We would also like to mention that it is often unclear in the literature whether $\mathcal{G}_{\mathrm{NL}}$ refers to the set of grammars of existing languages, or of languages that could exist. In our view, characterising the latter would be tantamount to achieving a thorough understanding of what is possible in terms of grammars of natural languages, which would be a major achievement (see [63] and [64,65] for considerations on the actual and the possible). For this reason, we assume that $\mathcal{G}_{\mathrm{NL}}$ refers to the former, and maintain our claims above.

Yet, we highlight the conspicuous fact that the number of parameters in the UG corresponds precisely to the number required to parametrize the set of actual languages (in a binary manner). By ‘actual’ we mean a language that either exists or has existed and of which there is a record. If the aim of the UG endeavour is to characterize the set of possible human languages, then our claims above about the mathematical triviality of the instantiation of the basic structure of universality would no longer hold: such a set would presumably have a larger cardinality, so it is not trivial that it can be parametrized with 13 binary parameters. This challenge underpins some theoretical extensions, in particular the so-called Minimalist Program [66,67], which puts less emphasis on the structure of parameters but maintains the thesis that a fundamental backbone is shared by all languages.

3.3. Greenberg Universals

A somewhat orthogonal perspective is given by the identification of patterns in grammar that seem to appear throughout all known languages. In this case, the presence of so-called universal traits is the result of a generalization stemming from an empirical approach, in contrast to the rationalistic reasoning underlying the UG considered in Section 3.2. Despite this fact, these ‘Grammar Universals’, also called ‘Greenberg Universals’, aim to be the starting point of a theory of grammar with predictive capacity. In any case, so far this approach makes no statements about the epistemological problem of language acquisition.

We focus on the early proposal made by J. H. Greenberg [40], based on a list of declarative statements with various logical structures. Without aiming to be fully comprehensive, one can classify the universals by their logical structure. Given the set of all natural languages $\mathcal{L}_{\mathrm{NL}}$ and some grammatical properties P, Q, V, the statements mainly obey one of the two following logical structures:

$$\text{(1)} \quad \forall\, \ell \in \mathcal{L}_{\mathrm{NL}} : P(\ell),$$

$$\text{(2)} \quad \forall\, \ell \in \mathcal{L}_{\mathrm{NL}} : Q(\ell) \Rightarrow V(\ell).$$

Statements of the first type (supposedly) hold for all natural languages. Statements of the second type are implicational, and apply to those languages where the first condition is satisfied. The statements may contain negations, too.

An example of a statement of the first type is:

A language never has more gender categories in nonsingular numbers than in the singular.

An example of a statement of the second type—which are the vast majority—is:

If a language has the category of gender, then it has the category of number.

In standard (classical) logic, an implicational universal of the form $\forall\, \ell : Q(\ell) \Rightarrow V(\ell)$ can be written as $\forall\, \ell : \neg Q(\ell) \lor V(\ell)$. As a consequence, each implicational statement can be interpreted as an assertive statement (i.e. of the first type), namely one expressing an attribute, $\neg Q \lor V$, satisfied by all grammars of natural languages.

Let us now cast the Greenberg Universals in our basic structure (cf. Section 2). Let $\mathcal{L}_{\mathrm{NL}}$ be the set of all natural languages;⁵ we identify the set C with $\mathcal{L}_{\mathrm{NL}}$. Let $u = \{g_1, \dots, g_n\}$ be the finite set of all logical statements in the list of Greenberg Universals. We write $g_i(\ell)$ to denote that statement $g_i$ is true for language $\ell$. Relation R between u and $\ell \in \mathcal{L}_{\mathrm{NL}}$, as stated by Greenberg, can be written as

$$(u, \ell) \in R \iff g_i(\ell) \ \text{ for all } g_i \in u.$$

That is, universality holds if all (potentially infinitely many) natural languages satisfy the (finite set of) statements provided by the list of Greenberg Universals. We observe that, again, the notion of universality is very simple from a mathematical perspective; what is not simple is the identification of these common attributes and, more interestingly, the explanation of the mechanisms giving rise to them.
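The relation R for the Greenberg Universals amounts to checking a finite list of predicates against every language. The Python sketch below uses a hypothetical feature table (the languages `lang_a`, `lang_b`, `lang_c` and their feature values are invented for illustration, not typological data) and encodes the two example statements given earlier: the first as a plain assertion, and the second, implicational one, in the classical form ‘not Q or V’.

```python
def implies(q: bool, v: bool) -> bool:
    """Classical material implication: Q => V is equivalent to (not Q) or V."""
    return (not q) or v


# Hypothetical feature records (invented, not real typological data).
LANGUAGES = {
    "lang_a": {"has_gender": True, "has_number": True, "genders_sg": 3, "genders_nonsg": 2},
    "lang_b": {"has_gender": False, "has_number": True, "genders_sg": 0, "genders_nonsg": 0},
    "lang_c": {"has_gender": False, "has_number": False, "genders_sg": 0, "genders_nonsg": 0},
}

# u: a finite set of statements g_i, each a predicate on a language.
U = {
    # first type: never more gender categories in nonsingular numbers than in the singular
    "gender_nonsg_le_sg": lambda l: l["genders_nonsg"] <= l["genders_sg"],
    # second type: if a language has gender, then it has number
    "gender_implies_number": lambda l: implies(l["has_gender"], l["has_number"]),
}


def related(u: dict, language: dict) -> bool:
    """(u, l) in R iff every statement g_i in u holds for l."""
    return all(g(language) for g in u.values())


def greenberg_universal(u: dict, languages: dict) -> bool:
    return all(related(u, l) for l in languages.values())


print(greenberg_universal(U, LANGUAGES))  # True
# A hypothetical language with gender but no number falsifies the second statement.
counterexample = {"has_gender": True, "has_number": False, "genders_sg": 2, "genders_nonsg": 2}
print(related(U, counterexample))  # False
```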

3.4. On the relation between UG and GU

While the two notions of universality stem from radically different grounds, they should bear, at the very least, a consistency relation: the statements of the two approaches cannot contradict each other. Moreover, although the UG is not directly accessible, since only its particular instantiations (languages) are manifested, it is reasonable to assume that it leaves ‘footprints’ in the structure of languages, which should lead to some kind of general phenomenology within the set of actual human languages. One would like to see the Greenberg Universals as consequences, or by-products, of the instantiations of the UG. Let us make this idea more precise and, as a result, sketch the potential relation between the two notions of universality.

Let F be the state space of the truth values of the attributes satisfied by all natural languages. If there are 47 Greenberg Universals, F is isomorphic to $\{0, 1\}^{47}$, where 0 stands for false and 1 for true. The Greenberg Universals can be seen as a map

$$\mathrm{GU} : \mathcal{G}_{\mathrm{NL}} \to F,$$

where, for $G \in \mathcal{G}_{\mathrm{NL}}$, $\mathrm{GU}(G)$ is a string of length 47 specifying whether G satisfies the first attribute ($\mathrm{GU}(G)_1 = 1$), does not satisfy the second attribute ($\mathrm{GU}(G)_2 = 0$), etc.

Now, the Universal Grammar $\mathrm{UG}$ and the Greenberg Universals $\mathrm{GU}$ can be seen as maps

$$\mathcal{P} \xrightarrow{\ \mathrm{UG}\ } \mathcal{G}_{\mathrm{NL}} \xrightarrow{\ \mathrm{GU}\ } F,$$

where the universality of the Universal Grammar translates to the mathematical statement that $\mathrm{UG}$ be surjective, while the universality of the Greenberg Universals translates to the statement that the image of $\mathrm{GU}$ has a single point, namely $(1, 1, \dots, 1)$, that is, all grammars in $\mathcal{G}_{\mathrm{NL}}$ have those attributes. In conclusion, mathematically, the two universality statements are opposite: one is to the effect that a given map is a parametrization, whereas the other states that all elements in a set share a set of properties. Note also that, if the goal is to characterize $\mathcal{G}_{\mathrm{NL}}$, this set is the codomain of $\mathrm{UG}$ and the domain of $\mathrm{GU}$. We are aware that such an interpretation may be riddled with various issues arising from the different nature of the two approaches.
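The contrast between the two maps can be illustrated on a toy scale. In the Python sketch below, the parameter space has 2 bits instead of 13, the four grammars and their three attribute values are hypothetical placeholders, and `ug` and `gu` are illustrative stand-ins for UG and GU. Universality of UG is checked as surjectivity, universality of GU as the image collapsing to the single point (1, 1, 1); consequently the composite GU ∘ UG is constant.

```python
from itertools import product

P = list(product((0, 1), repeat=2))            # toy parameter space {0,1}^2
GRAMMARS = ["G_a", "G_b", "G_c", "G_d"]        # hypothetical stand-ins for G_NL
ATTRIBUTES = {g: (1, 1, 1) for g in GRAMMARS}  # hypothetical truth values in F = {0,1}^3


def ug(p: tuple[int, int]) -> str:
    """Toy UG: read the two parameter bits as an index into the grammars."""
    return GRAMMARS[2 * p[0] + p[1]]


def gu(g: str) -> tuple[int, ...]:
    """Toy GU: the string of truth values of the attributes satisfied by g."""
    return ATTRIBUTES[g]


ug_is_surjective = {ug(p) for p in P} == set(GRAMMARS)
gu_is_universal = {gu(g) for g in GRAMMARS} == {(1, 1, 1)}
composite_image = {gu(ug(p)) for p in P}

print(ug_is_surjective, gu_is_universal, composite_image)  # True True {(1, 1, 1)}
```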

Finally, we note that it is unclear whether the Universal Grammar or the Greenberg Universals exhibit universality of the mechanistic or emergent type.

4. Writing of languages

The writing of languages was a major technological innovation, which arose independently in various cultures and became a cornerstone of all emerging civilizations. As pointed out by Stanislas Dehaene, writing could be understood as a virus: from its original creation in Mesopotamia, it massively spread to all neighbouring cultures [68]. Writing and reading enabled the storage and transmission of information in time and space.

The emergence of writing required a fortunate combination of already-present brain circuits; similarly, the transition to a reading brain involved a blend of contingency and inevitability. While reading and writing only entered the cognitive scene a few thousand years ago, once this cultural innovation emerged it became widespread [69]. The cultural transmission of these capabilities was affected not only by the still-evolving writing systems, but also by the anatomical and perceptual constraints of human brains. For example, written symbols were spatially localized and well-contrasted, exploiting the feature of the human eye by which a reduced area of the retina (the fovea) can capture an item in a single eye fixation. In this process, a revolutionary turn occurred: the transition to alphabetic writing, in which a small inventory of basic symbols representing sounds can be combined to form syllables and words. As we discuss below, this transition can be seen as a jump to universality.

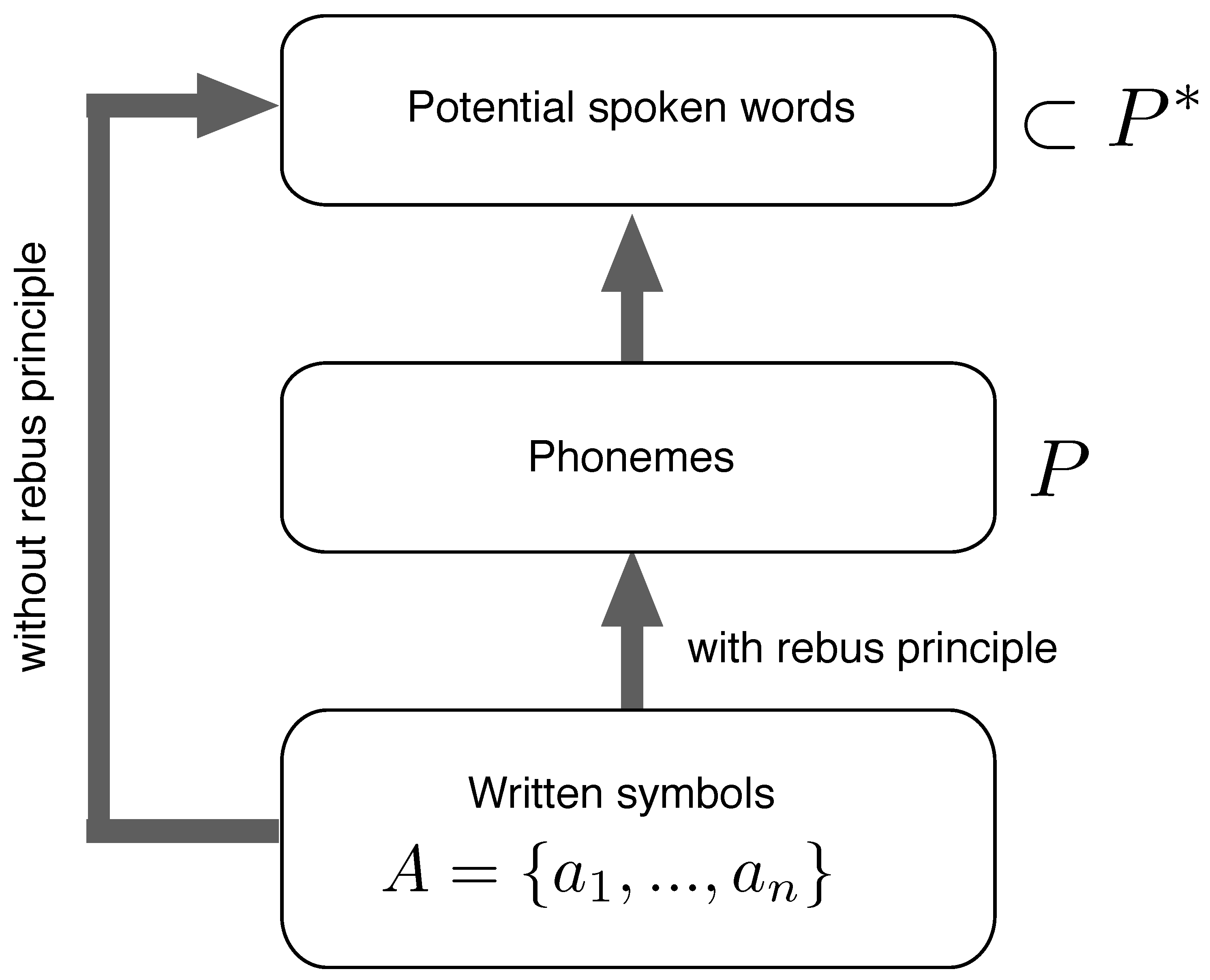

So let us consider notions of universality in the written representation of natural languages. In some writing systems, symbols represent objects or concepts. Prominent examples are pictograms and the ideographic writing system Blissymbolics. These writing systems do not obey the so-called rebus principle, by which symbols represent phonemes instead of spoken words [70]. Since the phonemes of a language constitute a finite alphabet P, and spoken words can be identified with finite strings of phonemes (i.e. elements of $P^*$), it follows that in writing systems using the rebus principle, a finite number of symbols suffices to represent an unbounded number of words. This is in contrast to writing systems that do not (exclusively) use the rebus principle, where a new lexical item⁶ generally leads to the introduction of a new symbol.

This brings us to our definition of universal writing systems:

A writing system for a natural language is universal if it can represent any possible lexical item with a finite number of symbols.

The basic structure of universality is instantiated by letting

C be the set of possible lexical items,

R the relation of written representation, and

u the finite repertoire of symbols (we shall be more specific below). Note that ‘possible’ is an important qualification in this definition, as every natural language has a finite number of lexical items and hence a finite representation, but not necessarily a finite representation of any

possible lexical item. While we cannot characterize (or even grasp) the set of possible lexical items, we posit that it be unbounded, and that suffices for our argument.

More formally, we can model the generation of words as a map. Let $A$ denote the alphabet, that is, the finite repertoire of symbols, and let $A^*$ denote the set of finite, ordered sequences of symbols of $A$. Let $S$ denote the set of lexical items of a spoken language. As mentioned above, if $S$ denotes the set of actual spoken lexical items, then $S$ is finite; if it is the set of possible spoken lexical items, then we contend that it is unbounded. The following argument holds in either case. Writing such lexical items can be seen as a map $r$ (for ‘representation’),

$$r\colon S \to A^* .$$

The basic structure of universality (cf. Section 2) is reproduced by letting

$$C = S, \qquad u = A, \qquad A \mathrel{R} s \iff r(s) \in A^* .$$

That is, the alphabet $A$ and a spoken word $s$ are in relation $R$ if $s$ can be represented as a string of elements of $A$. If relation $R$ holds for all $s \in S$, the writing system given by $A$ and $r$ is universal.
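The relation $R$ and the universality criterion can be sketched in a few lines of code. The sketch assumes that spoken words are given as tuples of phonemes and that $r$ works symbol by symbol through a lookup table; the names `r`, `related` and `covers_phonemes`, as well as the toy inventory, are hypothetical. Because the set of possible lexical items is unbounded, no finite test can establish universality directly; for a system based on the rebus principle, however, it suffices that every phoneme has a symbol in $A$, since $r$ then maps every element of $P^*$ into $A^*$.

```python
# Hypothetical model: spoken words are tuples of phonemes (elements of P*),
# and the writing system is an alphabet A plus a phoneme-to-symbol table
# `symbol_of` that plays the role of the map r.

def r(spoken, symbol_of):
    """Write a spoken word as a tuple of symbols; None if some phoneme has no symbol."""
    if any(ph not in symbol_of for ph in spoken):
        return None
    return tuple(symbol_of[ph] for ph in spoken)

def related(alphabet, spoken, symbol_of):
    """A R s: the word s is written as a finite string over the alphabet A."""
    written = r(spoken, symbol_of)
    return written is not None and set(written) <= set(alphabet)

def covers_phonemes(phonemes, alphabet, symbol_of):
    """Sufficient condition for a rebus-based system: if every phoneme in P has
    a symbol in A, then A R s holds for every s in P* (r works letter by letter)."""
    return all(ph in symbol_of and symbol_of[ph] in alphabet for ph in phonemes)

# Usage with a toy inventory (illustrative, not a real orthography).
P = {"k", "a", "t", "sh"}
A = {"k", "a", "t", "ʃ"}
symbol_of = {"k": "k", "a": "a", "t": "t", "sh": "ʃ"}
print(related(A, ("sh", "a", "k", "a"), symbol_of))  # True
print(covers_phonemes(P, A, symbol_of))             # True
print(related(A, ("o",), symbol_of))                 # False: no symbol for /o/
```

A finite sample can only refute universality (a single unwritable word suffices), which is why the phoneme-coverage check is the more informative test for rebus-based systems.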

Writing systems using exclusively the rebus principle use a finite set of symbols, and are thus universal according to our definition (Figure 4). They exploit the fact that a finite number of phonemes gives rise to an unbounded number of spoken words; ultimately, they exploit the finiteness of the phonetic code itself. Examples are the Latin, Cyrillic, Greek and Korean alphabets. The transition from writing systems without the rebus principle to those with it can be seen as a jump to universality (see Figure 5). Note that this universality is of the mechanistic type (cf. Section 1).

We remark that the rebus principle requires that there be a map from sounds to symbols, yet the degree to which this map is one-to-one depends on the orthography of the language. In some languages (e.g. Finnish) this map is quite faithful, i.e. close to a bijection, whereas in others it is far from it (e.g. Arabic and Hebrew generally do not write short vowels, and English is well known for its intricate and sometimes inconsistent spelling rules). By contrast, the writing systems of Chinese and Japanese employ the rebus principle only partially in the creation of characters, and therefore generally require an unbounded number of characters to represent an unbounded number of words (Figure 5). In this context, some have suggested that the rebus principle triggered the transition from proto-writing to full writing (see [70, p. 89]).
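How far a sound-to-symbol map is from a bijection can be probed by looking for distinct spoken words that receive the same written form. The sketch below compares two hypothetical spellings of romanized words: one that keeps every sound, and a toy consonant-only spelling loosely modelled on the fact that Arabic kataba (‘he wrote’), kutiba (‘it was written’) and kutub (‘books’) share the same unvocalized spelling. It is an illustration of non-injectivity, not a model of Arabic orthography.

```python
from collections import defaultdict

VOWELS = set("aeiou")

def full_spelling(word):
    """Hypothetical phonemic spelling: one letter per sound, vowels included."""
    return word

def consonant_spelling(word):
    """Toy abjad-like spelling that omits vowels."""
    return "".join(ch for ch in word if ch not in VOWELS)

def collisions(words, spell):
    """Return written forms shared by two or more distinct spoken words."""
    by_form = defaultdict(set)
    for w in words:
        by_form[spell(w)].add(w)
    return {form: sorted(ws) for form, ws in by_form.items() if len(ws) > 1}

# Simplified romanizations: kataba 'he wrote', kutiba 'it was written', kutub 'books'.
words = ["kataba", "kutiba", "kutub"]
print(collisions(words, full_spelling))       # {}
print(collisions(words, consonant_spelling))  # {'ktb': ['kataba', 'kutiba', 'kutub']}
```

A non-empty result shows that the spelling map is not injective on the sample, so readers must recover the intended word from context.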

Finally, we note that precisely because pictograms are detached from phonetics, they can be ‘understood’ by speakers of different languages, provided there is a shared cultural background. This is why they are used in public spaces like airports, on clothing labels, and to indicate facilities such as bathrooms or pharmacies. In fact, this widespread comprehensibility is often the very reason for their introduction. In this sense, pictographic writing systems are more universal than writing systems with the rebus principle, precisely because the former decouple representation from phonetics. This somewhat paradoxical situation can be phrased as a tension between the universality of a pictographic code, accessible to speakers of different languages, and that of a code with the rebus principle, which systematically encodes an unbounded number of lexical items.

Ultimately, what is at stake in this discussion is Leibniz’ dream of a Characteristica Universalis, an idea borrowed from Ramon Llull [71]. The Characteristica Universalis is supposed to be an artificial formal language that establishes a clear and unambiguous correspondence between symbols and concepts [72, Chapter 2]. Leibniz envisioned the program as consisting of three key steps: (i) to systematically identify simple concepts, (ii) to choose signs or characters to designate these concepts, constituting a kind of universal alphabet for the artificial language, and (iii) to develop a combinatorial method governing the combination of these concepts. Such an artificial formal language should make it possible to resolve any dispute through calculation.⁷ It remains uncertain whether the number of simple concepts in (i) should be finite. Even more uncertain, and of greater significance, is whether a Characteristica Universalis can exist at all. Addressing this question requires confronting the challenging problem of the extent to which to think is to compute.

6. Summary and Outlook

In summary, we have attempted to establish a common ground for studying and comparing universalities in different aspects of language. In particular, we have defined the basic structures of universality and recursion, and have identified them in generative grammars, Universal Grammar, Greenberg’s universals, writing systems, and Zipf’s law. On some occasions, we have examined the boundary of universality, that is, the transition from non-universal to universal. We have also posited that there are two ‘flavours’ of universality, termed mechanistic and emergent, which we have identified and compared whenever possible.

Let us now turn to the outlook of this work. On the question of grammars, recent works have viewed spin models as formal languages, and consequently physical interactions as grammars [86, 87]. It would be interesting to examine spin models in the light of the notion of universality developed here for natural languages, and to explore its implications.

Regarding writing systems, our main conclusion was that their universality is enabled by the universality of the very code of language, that is, by the finiteness of the phonetic code. We note that this is consistent with Deutsch’s suggestion [41] that a discrete code is a necessary condition for the jump to universality, as only discrete codes allow for error correction.
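The error-correction argument can be illustrated with a toy relay in which a message is copied many times with small random perturbations. This is only a sketch under assumed conditions (four signal levels, uniform noise smaller than half the spacing between levels, a fixed random seed), not Deutsch’s own formulation: when each copy is snapped back to the nearest valid symbol, the message survives unchanged, whereas uncorrected continuous values drift away from the original.

```python
import random

# Hypothetical discrete code with four symbols, encoded as signal levels.
LEVELS = (0.0, 1.0, 2.0, 3.0)

def snap(x):
    """Error correction for the discrete code: return the nearest valid level."""
    return min(LEVELS, key=lambda level: abs(level - x))

def relay(message, hops, noise, discrete, seed=0):
    """Pass a message through `hops` noisy copies (uniform noise in [-noise, noise]).
    If `discrete` is True, every copy is snapped back onto the code."""
    rng = random.Random(seed)
    msg = [float(x) for x in message]
    for _ in range(hops):
        msg = [x + rng.uniform(-noise, noise) for x in msg]
        if discrete:
            msg = [snap(x) for x in msg]
    return msg

original = [0, 3, 1, 2, 2]
print(relay(original, hops=1000, noise=0.2, discrete=True))   # [0.0, 3.0, 1.0, 2.0, 2.0]
print(relay(original, hops=1000, noise=0.2, discrete=False))  # drifts away from the original
```

The correction works only because the noise per copy stays below half the gap between levels; a continuous code has no gaps, and hence nothing to snap back to.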

Still on the topic of language representations, an interesting question concerns sign languages, since a priori they need not draw any connection to phonetics. How would an analogue of the rebus principle be defined for sign languages? In fact, we need not address this question, as sign languages do correlate with phonetics, and have a finite repertoire of signs by virtue of the finiteness of phonetic traits. The same is true for tactile writing systems such as Braille.

Analogously to the representation of language, the representation of music is also discrete and finite, as mentioned in [41]. Although frequency is continuous, musical notation uses a discrete number of notes (C, C♯, D, D♯, E, F, F♯, G, G♯, A, A♯, B), because the code is finite.¹² The same is true for volume: despite its continuity in the physical world, the dynamic markings in musical scores are discrete (ppp, pp, p, mp, mf, f, ff, fff). In this sense, notes play a role analogous to that of phonemes in language (they are abstract and discrete), and scores represent music as books represent language.
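The discreteness of pitch notation can be made concrete by mapping a continuous frequency to the nearest named note. The sketch assumes twelve-tone equal temperament with A4 tuned to 440 Hz and uses the MIDI numbering convention (A4 = note 69); neither assumption comes from the text, and other tunings would shift the boundaries between notes. The function name `nearest_note` is hypothetical.

```python
import math

# The twelve pitch classes listed in the text.
NOTE_NAMES = ["C", "C♯", "D", "D♯", "E", "F", "F♯", "G", "G♯", "A", "A♯", "B"]

def nearest_note(freq_hz, a4_hz=440.0):
    """Map a continuous frequency to the nearest note name and octave.
    Assumes twelve-tone equal temperament with A4 = a4_hz."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    midi = round(69 + 12 * math.log2(freq_hz / a4_hz))  # MIDI numbering: A4 = 69
    return NOTE_NAMES[midi % 12] + str(midi // 12 - 1)

print(nearest_note(440.0))   # A4
print(nearest_note(445.0))   # A4
print(nearest_note(261.63))  # C4
```

Under these assumptions, 440 Hz and 445 Hz are both written A4: a continuum of physical frequencies collapses onto one symbol of a finite code.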

Returning to language, we may wonder: in what other way could a representation of language be finite? Without stepping onto the muddy terrain of the relation between language and thought (see, e.g., [88]), this question brings us back to Leibniz’ quest for an alphabet of thought, the Characteristica Universalis (see, e.g., [72, Chapter 2] or [50]), and the many fascinating implications derived from it.

Acknowledgements.— GDLC’s research was funded by the Austrian Science Fund (FWF) [START Prize Y-1261-N]. For open access purposes, the author has applied a CC BY public copyright license to any author accepted manuscript version arising from this submission. B.C.-M. acknowledges the support of the field of excellence ‘Complexity of life in basic research and innovation’ of the University of Graz. RS thanks Luis F. Seoane for so many discussions about language complexity and the Santa Fe Institute, where many of these ideas were explored.
